Abstract

The rapid evolution of 5G and emerging 6G networks has increased system complexity, data volume, and security risks, making anomaly detection vital for ensuring reliability and resilience. However, existing machine learning (ML)-based approaches still face challenges related to poor generalization, weak temporal modeling, and degraded accuracy under heterogeneous and imbalanced real-world conditions. To overcome these limitations, a hybrid time series transformer–deep belief network (HTST-DBN) is introduced, integrating the sequential modeling strength of the time series transformer (TST) with the hierarchical feature representation of the deep belief network (DBN), while an improved orchard algorithm (IOA) performs adaptive hyper-parameter optimization. The framework also embodies the concepts of symmetry and asymmetry: the IOA introduces controlled symmetry-breaking between exploration and exploitation, while the TST captures symmetric temporal patterns in network traffic whose asymmetric deviations often indicate anomalies. The proposed method is evaluated across four benchmark datasets (ToN-IoT, 5G-NIDD, CICDDoS2019, and Edge-IoTset) that capture diverse network environments, including 5G core traffic, IoT telemetry, mobile edge computing, and DDoS attacks. Experimental evaluation is conducted by benchmarking HTST-DBN against several state-of-the-art models, including TST, bidirectional encoder representations from transformers (BERT), DBN, deep reinforcement learning (DRL), convolutional neural network (CNN), and random forest (RF) classifiers. The proposed HTST-DBN outperforms the compared models, reaching a peak accuracy of 99.61% alongside strong recall and area under the curve (AUC) scores. The HTST-DBN framework presents a scalable and reliable solution for anomaly detection in next-generation mobile networks.
Its hybrid architecture, reinforced by hyper-parameter optimization, enables effective learning in complex, dynamic, and heterogeneous environments, making it suitable for real-world deployment in future 5G/6G infrastructures.

1. Introduction

The rapid proliferation of the Internet of Things (IoT) has transformed diverse sectors by enabling massive interconnectivity between sensors, actuators, and intelligent devices [1]. IoT applications range from smart grids, where real-time monitoring and demand-response mechanisms improve energy efficiency [2], to healthcare systems that leverage wearable devices and remote patient monitoring for enhanced diagnosis and treatment [3]. Emerging paradigms such as batteryless IoT devices and backscatter communication have further extended the scope of ultra-low-power connectivity [4,5,6,7], supporting sustainable deployments in resource-constrained environments [8]. Meanwhile, intelligent transportation relies on vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) communication to enhance traffic safety and efficiency [9,10,11], while industrial IoT (IIoT) facilitates predictive maintenance, automation, and process optimization [12]. Collectively, these advancements highlight IoT as a cornerstone of modern digital ecosystems and a key enabler of next-generation mobile communication networks [13].

Despite its transformative potential, IoT remains highly vulnerable to security threats due to its heterogeneous architecture, constrained resources, and massive attack surface [14,15,16]. Security challenges manifest across multiple layers, ranging from hardware vulnerabilities and side-channel attacks [17,18,19] to physical-layer risks such as jamming, spoofing, and eavesdropping [20,21,22]. At the network layer, issues such as routing manipulation, denial-of-service, and replay attacks compromise availability and data integrity, while lightweight authentication remains a persistent challenge in ensuring trust among resource-limited devices [23,24,25,26]. In this context, intrusion detection systems (IDSs) have become a critical line of defense for mobile IoT environments [27]. An IDS is tasked with monitoring network traffic, detecting abnormal behaviors, and identifying potential attacks in real-time, thereby safeguarding system reliability and continuity [28]. The integration of effective IDS mechanisms into mobile IoT is particularly vital, as these networks support critical infrastructures and latency-sensitive services that cannot tolerate prolonged downtime or undetected intrusions [29].

Machine learning (ML) and deep learning (DL) have emerged as powerful tools to address the limitations of traditional security mechanisms in IoT-based mobile networks [30,31,32]. Unlike signature-based approaches, which struggle against zero-day attacks or highly dynamic traffic, ML- and DL-driven models can autonomously learn complex patterns from heterogeneous and high-dimensional data, enabling robust generalization across diverse environments [33,34,35]. DL architectures, in particular, offer advanced temporal modeling and hierarchical feature abstraction, making them well-suited for capturing subtle anomalies hidden within noisy or imbalanced datasets. Their ability to adapt in real time and scale with massive traffic flows positions them as indispensable for next-generation IDS solutions in IoT, where resilience, accuracy, and efficiency are essential [35,36,37].

1.1. Related Works

In [30], an attention-based convolutional neural network (ABCNN) was introduced for intrusion detection in IoT networks. The approach integrated an attention mechanism to address minority classes and applied mutual information for feature selection. Evaluation on multiple IoT datasets, including Edge-IoTset, IoTID20, ToN-IoT, and CIC-IDS2017, against several ML/DL baselines demonstrated superior performance, with an accuracy of 99.81%, precision of 98.02%, recall of 98.18%, and F1-score of 98.08%. In [31], an IDS for IoT networks was developed using ML-based feature selection combined with a stacked ensemble of classifiers. By applying the K-Best algorithm to select the 15 most relevant features, the method achieved an accuracy of up to 99.92%, significantly outperforming individual models.

The work [32] proposed a DL-based IDS for DDoS detection in IoT, focusing on extensive preprocessing (duplicate removal, feature elimination, normalization) on the CIC-DDoS2019 dataset. Their model achieved 99.99% accuracy for binary classification and 99.30% accuracy for multiclass classification. They also reported favorable inference times relative to baseline models, with fewer trainable parameters. The work [33] proposed a DL-based IDS for DDoS detection using the sensors dataset. They evaluated models including bidirectional long short-term memory (Bi-LSTM) and several ML baselines on CICDDoS2019, among others. The study reported that Bi-LSTM outperformed all other tested models, achieving a precision of 99.885% and an accuracy of nearly 99.90%, with very low false alarm rates.

The work [34] investigated a network intrusion detection model that extended a radial basis function neural network (RBFNN) by embedding it as the policy network in an offline reinforcement learning (RL) framework. They learned all RBF parameters and weights end-to-end by gradient descent and explored the impact of using denser hidden layers and varying the number of RBF kernels. Evaluation was performed on five datasets (NSL-KDD, UNSW-NB15, AWID, CICIDS2017, CICDDOS2019) under noisy and imbalanced conditions. The results showed that this extended RBFNN outperformed state-of-the-art models, with particularly strong accuracy and robustness to dataset imbalance. The work [35] compared feature selection and feature extraction techniques within ML-based intrusion detection systems for IoT networks. It applied methods such as principal component analysis (PCA) and mutual information on datasets like ToN-IoT, Edge-IoTset, and CIC-DDoS2019. The study found that feature selection yielded higher accuracy, reaching about 99.90%, while also reducing model complexity and improving processing time.

The approach [36] developed DeepTransIDS, a transformer-based DL model for detecting DDoS attacks on the 5G-NIDD dataset. It utilized transformer encoder layers with self-attention to capture long-range dependencies in 5G traffic. The model was tested exclusively on the 5G-NIDD dataset and compared against CNN, LSTM, and ML baselines. It achieved high accuracy (≈99.94%) and recall (≈99.91%), outperforming other published models on the same dataset. The work [37] investigated DTL-5G, a deep transfer learning-based approach for DDoS attack detection in 5G and beyond networks. It leveraged pretrained DL models (such as CNN, BiLSTM, ResNet, and Inception) trained on a source domain, then fine-tuned them on the 5G-NIDD target dataset. They evaluated the approach under domain shift (limited labeled traffic in 5G-NIDD) and showed that transfer learning significantly improved detection compared to training from scratch. The reported performance included accuracy around 98.74%, demonstrating that DTL-5G was able to maintain high detection rates even in challenging settings.

The approach [38] evaluated several ML and hybrid models for DDoS detection in IoT-driven 6G energy hub networks. They compared algorithms such as random forest (RF), gradient boosting (GB), support vector machine (SVM), decision tree (DT), and K-nearest neighbors (KNN), plus hybrids (RF + KNN, GB + KNN, GB + DT), on the CICDDoS2019, KDD-CUP, and UNSW-NB datasets with different training-size splits. They found that RF + KNN achieved the highest accuracy (~99.44%) and that GB + DT had superior precision on some datasets, while GB + KNN attained the best recall under certain conditions. The work [39] investigated enhanced intrusion detection with advanced deep features and ensemble classifier techniques, where deep feature extraction via CNN, recurrent neural network (RNN), and autoencoder (AE) was combined with ensemble classifiers (RF + SVM) using soft voting. They evaluated the approach on the NSL-KDD and CICDDoS2019 datasets. The ensemble model with CNN-based features achieved near-perfect accuracy (99.99% on NSL-KDD and 99.91% on CICDDoS2019), while the RNN- and autoencoder-based variants delivered ~99.30% accuracy on CICDDoS2019.

The work [40] investigated the weighted SVM kernel combined with adolescent identity search plus RF ensemble (wSVMAS-RF) for DDoS detection and categorization. It processed datasets including CICDDoS2019 and CICDoS2017 with a preprocessing pipeline using interval-reduced kernel PCA (IRKPCA) for dimensionality reduction. The method achieved an accuracy of about 99.74% on CICDDoS2019 and ~99.00% on CICDoS2017, along with competitive precision, recall, and low false positive rates. The work [41] investigated a one-dimensional CNN (OS-CNN) model for network intrusion detection. They implemented four temporal modeling approaches, including the time series transformer (TST), and compared them on multiple intrusion detection datasets, including CICDDoS2019 and UNSW-NB15. They demonstrated that TST and OS-CNN achieved the highest accuracy, both exceeding 98.5%, and showed superior area under the curve (AUC) performance compared with baseline CNN and RNN models.

The work [42] investigated a stacking ensemble approach for DDoS attack detection in software-defined cyber-physical systems (SD-CPS) using DL base models. They evaluated their method on an SDN-specific dataset and on CICDDoS2019, covering both binary and multiclass scenarios. The method achieved accuracy above 99% in both binary and multiclass classification, maintaining robustness even under unknown traffic distributions. The work [43] proposed 5G-SIID, a hybrid DDoS intrusion detection system for 5G IoT networks combining a deep neural network (DNN) with classical ML classifiers (RF, DT, SVM, KNN). It used PCA for dimensionality reduction, SMOTE for imbalance correction, and standard scaling, and was evaluated on Edge-IIoTset, among others. The DNN achieved 100% accuracy for binary classification, ~96.15% for 6-class classification, and ~94.68% for 15-class classification.

The study [44] investigated a complete pipeline integrating exploratory data analysis (EDA) with DL for intrusion detection in softwarized 5G networks. It applied preprocessing steps such as feature correlation, scaling, and class balancing, followed by DL classifiers including CNN and LSTM. The approach was validated on the 5G-NIDD dataset and achieved accuracy exceeding 99%, with strong precision and recall, demonstrating the effectiveness of coupling EDA with DL for 5G intrusion detection. The work [45] developed a hybrid feature-selection approach to anomaly intrusion detection in IoT networks, combining filter and wrapper methods. It used SMOTE to address class imbalance and applied classifiers such as DT, RF, and KNN. The method was tested on the BoT-IoT, ToN-IoT, and CIC-DDoS2019 datasets. The DT classifier achieved accuracy between 99.82 and 100%, with detection times between 0.02 and 0.15 s, demonstrating both high efficiency and fast response.

1.2. Paper Motivation, Contribution, and Organization

Existing research has advanced IoT intrusion detection through various ML and DL models, ranging from attention-based CNNs [30] to ensemble classifiers [31], transformer-based models [36], and hybrid deep feature extractors [39]. While these approaches achieved high accuracy on individual datasets, they often struggled to generalize across heterogeneous environments. Many of them were evaluated under constrained conditions or relied on limited datasets, raising concerns about robustness in large-scale, multi-source mobile communication networks. Moreover, several solutions lacked the mechanisms to effectively capture both temporal dependencies in traffic and deep hierarchical representations, which are critical for detecting subtle anomalies in noisy or imbalanced IoT traffic.

In addition, most prior works have treated anomaly detection either as a pure classification problem or as a temporal sequence modeling task, without sufficiently bridging the gap between these two perspectives. This separation has often led to architectures that either excel at learning global sequential patterns but overlook fine-grained features, or capture local abstractions but fail to model long-range dependencies. Furthermore, the lack of adaptive optimization strategies has limited their scalability when deployed in dynamic environments such as 5G and beyond.

Another notable limitation is the challenge of balancing exploration and exploitation in optimization when tuning complex DL architectures. Existing methods frequently use grid search or simple heuristics, which are computationally expensive and prone to suboptimal convergence. Even works leveraging meta-heuristics [40] tend to emphasize either exploration or exploitation, limiting adaptability in dynamic and high-dimensional scenarios like 5G/6G networks. Similarly, while some approaches introduce transfer learning [37] or feature selection [35,45], they still lack integrated optimization frameworks capable of adaptively fine-tuning hyperparameters to sustain performance across diverse datasets such as CICDDoS2019, ToN-IoT, Edge-IIoTset, and 5G-NIDD. To address these gaps, this paper proposes the HTST-DBN framework, which integrates the sequence-modeling strength of the TST architecture with the deep feature abstraction of the deep belief network (DBN). The architecture is optimized using an improved orchard algorithm (IOA) enhanced by a seasonal migration operator, designed to balance exploration and exploitation more effectively than existing methods.

In the context of mobile communication networks, normal traffic flows often exhibit symmetric temporal or statistical patterns, whereas anomalies manifest as asymmetric deviations caused by attacks, failures, or dynamic environmental factors. Within the proposed HTST-DBN framework, the TST models such temporal symmetries through self-attention, learning recurring and balanced dependencies across sequences, while the DBN captures higher-level mirrored representations that enhance robustness against noise and imbalance. Together, these components enable the model to recognize when expected symmetries in network behavior are disrupted. At the optimization level, the IOA introduces deliberate asymmetry through its seasonal-migration operator. By alternating probabilistically between convergence toward the global best and divergence toward dissimilar candidates, the IOA performs controlled symmetry-breaking in the search space, maintaining equilibrium between exploration and exploitation. This mechanism parallels the balance between structural symmetry and purposeful asymmetry found in circuit and system design. In that broader engineering sense, the same principles underlying the HTST-DBN and IOA could also inform applications in electronic design automation. Hence, the framework embodies a unifying view in which detecting or exploiting asymmetry becomes a fundamental tool for achieving optimality across both communication-network intelligence and design automation. The main contributions of this paper are as follows:

- A novel anomaly detection framework (HTST-DBN) is proposed for next-generation mobile networks, addressing the challenges of noisy, imbalanced, and heterogeneous IoT traffic through adaptive modeling and optimization.

- The proposed method combines a TST for temporal dependency learning, a DBN for hierarchical feature abstraction, and an IOA for adaptive optimization. Their integration within a single pipeline allows the model to capture sequential patterns, extract deep latent features, and fine-tune hyper-parameters adaptively, resulting in better generalization and robustness compared to standalone approaches.

- A novel IOA with a seasonal migration operator is introduced, which adaptively balances exploration and exploitation. This mechanism prevents premature convergence, ensures diverse search, and refines promising regions more effectively, leading to stronger and more reliable hyper-parameter optimization.

- The proposed model is extensively benchmarked against state-of-the-art algorithms, including BERT, deep reinforcement learning (DRL), CNN, and RF, across four diverse datasets (ToN-IoT, 5G-NIDD, CICDDoS2019, and Edge-IoTset), demonstrating superior performance and stability.

The remainder of this paper is organized as follows: Section 2 presents the overall research workflow, including the benchmark datasets, data preprocessing pipeline, and the proposed HTST-DBN methodology with its core components (TST, DBN, and IOA). Section 3 reports the experimental setup and performance evaluation, providing comparative results against state-of-the-art models. Section 4 offers a detailed discussion, highlighting robustness, statistical significance, and real-world applicability. Finally, Section 5 concludes the paper with key findings and outlines promising directions for future research.

2. Materials and Proposed Methods

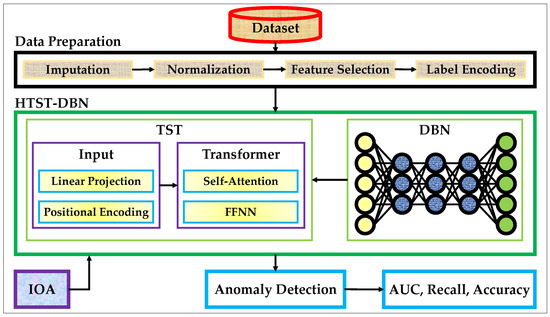

Figure 1 provides a high-level overview of the research methodology adopted in this work. Four benchmark datasets (ToN-IoT, 5G-NIDD, CICDDoS2019, and Edge-IoTset) serve as the foundation for model development and evaluation. The raw data streams undergo a preparation stage consisting of imputation, normalization, feature selection, and label encoding to ensure quality, consistency, and suitability for subsequent learning. Once preprocessed, the datasets are introduced into the proposed hybrid framework, which integrates a time series transformer (TST) with a deep belief network (DBN), further enhanced by the improved orchard algorithm (IOA) for adaptive optimization.

Figure 1.

Research methodology framework.

As shown in the figure, the proposed HTST-DBN pipeline follows a structured flow from raw data to anomaly detection outcomes. The TST captures temporal dependencies in the input, the DBN abstracts high-level features, and IOA optimizes the architecture and hyper-parameters. The outputs of the hybrid model are evaluated through standard classification metrics such as accuracy, precision, recall, and area under the curve (AUC). This end-to-end design enables effective detection of anomalies in heterogeneous and dynamic mobile communication environments, while the detailed mechanisms of each component are elaborated in the subsequent subsections.
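As a minimal sketch of how the four evaluation metrics named above could be computed, the snippet below uses scikit-learn (the library reported in Section 2.1) for a binary task. The label convention (1 = anomaly), the helper name `evaluate`, and the toy arrays are illustrative assumptions, not values from this study.

```python
from sklearn.metrics import accuracy_score, precision_score, recall_score, roc_auc_score


def evaluate(y_true, y_pred, y_score):
    """Return accuracy, precision, recall, and AUC for a binary task.

    y_true  : ground-truth labels (1 = anomaly, 0 = normal; assumed convention)
    y_pred  : hard class predictions
    y_score : continuous anomaly scores used to trace the ROC curve
    """
    return {
        "accuracy": accuracy_score(y_true, y_pred),
        "precision": precision_score(y_true, y_pred),
        "recall": recall_score(y_true, y_pred),
        "auc": roc_auc_score(y_true, y_score),
    }


# Hypothetical toy predictions
y_true = [0, 0, 1, 1, 0, 1]
y_pred = [0, 0, 1, 0, 0, 1]
y_score = [0.1, 0.2, 0.9, 0.4, 0.3, 0.8]
print(evaluate(y_true, y_pred, y_score))
```

For the multiclass experiments, `precision_score` and `recall_score` would additionally need an `average` argument (for example `average="macro"`), and `roc_auc_score` would need per-class probabilities together with `multi_class="ovr"`.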

2.1. Dataset

In this study, four benchmark datasets were employed to comprehensively evaluate the proposed HTST-DBN framework [30,37,38]. These datasets collectively represent diverse environments, including IoT telemetry, 5G core traffic, DDoS attacks, and edge-enabled IoT systems. Their heterogeneity in traffic sources, feature dimensions, and class distributions provides a rigorous testing ground for anomaly detection models. Validation across all four datasets demonstrates that the proposed method remains accurate under controlled conditions and robust to noise, imbalance, and variability inherent in real-world communication networks [37,38,39].

The ToN-IoT dataset is derived from a large-scale testbed for IoT and network systems [30,35]. It integrates telemetry from IoT devices, network traffic, and operating system logs, offering multi-source data streams. The dataset contains both benign and attack classes, including DoS, DDoS, backdoor, injection, ransomware, and password attacks. Its diversity makes it particularly valuable for validating the generalization ability of anomaly detection frameworks across heterogeneous input modalities. The 5G-NIDD dataset focuses on non-IP data delivery in 5G core networks, reflecting traffic patterns generated by IoT devices that operate without traditional IP stacks. This dataset captures signaling behavior, session establishment, and control-plane data flows in a 5G environment. The primary classes include normal device communications and various anomalous behaviors such as misconfigurations, flooding, and signaling abuse. Because it represents next-generation cellular networks, it is essential for evaluating anomaly detection models intended for 5G and beyond [36,37].

The CICDDoS2019 dataset is a well-established benchmark for DDoS detection. It was generated using a realistic testbed by simulating a wide range of DDoS attack vectors, including UDP floods, TCP SYN floods, HTTP floods, and amplification attacks, alongside normal traffic [38,39,40]. The dataset includes flow-based features such as packet counts, byte counts, and inter-arrival times. Its fine-grained labeling of different DDoS categories allows anomaly detection models to be tested not only on binary classification but also on multiclass detection of attack types. The Edge-IoTset dataset is designed for anomaly detection in edge-enabled IoT scenarios. It contains traffic traces from IoT devices connected through edge gateways, reflecting realistic deployments where computational resources and network conditions vary. The dataset includes both benign and malicious traffic, with attack types such as botnet, brute force, and reconnaissance. Its features cover packet-level and session-level attributes, making it highly representative of edge-enabled IoT deployments [30,35].

To ensure the quality and consistency of the datasets used in this study, a systematic data preparation process was applied before feeding them into the HTST-DBN framework. Each dataset contains heterogeneous features and varying levels of noise, imbalance, and missing entries. Without careful preprocessing, these irregularities could severely hinder the performance of the model. Accordingly, a four-step preprocessing pipeline was designed, consisting of imputation, normalization, feature selection, and label encoding, to transform the raw data into a standardized and learning-ready format. All operations were implemented in Python 3.10 using Scikit-learn 1.2 and Imbalanced-learn 0.10 libraries, ensuring methodological transparency and cross-dataset consistency.

Incomplete or missing entries, especially in the ToN-IoT and Edge-IoTset datasets and caused by packet loss or irregular telemetry sampling, were corrected using the KNN imputation algorithm with $k = 5$. For each missing value, the five most similar samples were identified in Euclidean space, and their mean value was used for replacement. This method preserves the non-linear relationships among correlated features and maintains the local distribution structure of network traffic, avoiding the statistical bias often introduced by mean or median imputation. The resulting datasets retained full record integrity while minimizing variance distortion across continuous attributes.

Because the selected benchmarks contain features with highly diverse numeric scales (from packet counts in the hundreds to byte rates exceeding one million), all numerical attributes were normalized using Min–Max scaling, $x' = (x - x_{\min}) / (x_{\max} - x_{\min})$, into the interval $[0, 1]$. This scaling stabilizes gradient-based optimization, prevents large-magnitude variables from dominating, and accelerates convergence of both the Transformer and DBN components. The Min–Max approach was favored over Z-score normalization because it preserves sparsity and boundedness, which are critical for the stability of time series modeling on traffic data.
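The imputation and scaling steps can be sketched with scikit-learn's `KNNImputer` and `MinMaxScaler`. This is a minimal illustration, not the authors' code: the helper `impute_and_scale`, the toy arrays, and the choice to fit both transformers on the training split only (to avoid leakage, mirroring how SMOTE is restricted to training data) are assumptions. Note that `KNNImputer` measures similarity with a NaN-aware Euclidean distance computed over the coordinates both rows observe; the toy example uses `k=2` only because it has three candidate donor rows.

```python
import numpy as np
from sklearn.impute import KNNImputer
from sklearn.preprocessing import MinMaxScaler


def impute_and_scale(X_train, X_test, k=5):
    """KNN imputation (mean of the k nearest rows) followed by Min-Max scaling.

    Both transformers are fitted on the training split only (an assumption of
    this sketch) and then applied unchanged to the test split.
    """
    imputer = KNNImputer(n_neighbors=k)          # default weights="uniform": plain mean
    scaler = MinMaxScaler(feature_range=(0, 1))  # x' = (x - min) / (max - min)
    X_train = scaler.fit_transform(imputer.fit_transform(X_train))
    X_test = scaler.transform(imputer.transform(X_test))
    return X_train, X_test


# Hypothetical toy data: one missing byte-count value in the training split
X_tr = np.array([[1.0, 100.0], [2.0, np.nan], [3.0, 300.0], [4.0, 400.0]])
X_te = np.array([[2.5, 250.0]])
X_tr_s, X_te_s = impute_and_scale(X_tr, X_te, k=2)
print(X_tr_s[1], X_te_s)
```

Because the scaler is fitted on training data, test values outside the training range map slightly outside $[0, 1]$; clipping is one option if strict boundedness is required.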

To reduce dimensionality and improve generalization, a two-stage feature-selection process was employed. First, features with near-zero variance were removed to eliminate non-informative attributes. Second, mutual information (MI) analysis was performed to quantify the dependency between each remaining feature and the class label. Features were ranked by MI score, and the top-$k$ attributes were retained, with $k$ set according to the dimensionality of each dataset. This hybrid filter approach discarded uninformative variables while preserving those most informative about the target classes. The dimensional statistics before and after selection for each dataset are summarized in Table 1. The reduction percentage, $(1 - d_{\text{after}} / d_{\text{before}}) \times 100\%$, quantifies the extent to which redundant or low-variance features were eliminated, demonstrating the efficiency of the mutual-information-based selection process. Across all datasets, the feature space was reduced by roughly half, indicating a favorable balance between dimensional compression and information retention. This reduction suggests that the preprocessing pipeline minimized noise while preserving the most discriminative attributes for anomaly detection.
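A minimal sketch of the two-stage filter, assuming scikit-learn's `VarianceThreshold`, `SelectKBest`, and `mutual_info_classif`. The variance threshold (`1e-4`), the random seed, the helper name `select_features`, and the synthetic data are hypothetical placeholders; the study's actual threshold and per-dataset $k$ are not reproduced here. The helper also reports which original columns survive, and the usage lines compute the reduction percentage.

```python
from functools import partial

import numpy as np
from sklearn.feature_selection import SelectKBest, VarianceThreshold, mutual_info_classif


def select_features(X, y, k, var_threshold=1e-4, seed=0):
    """Stage 1: drop near-zero-variance columns. Stage 2: keep the top-k by MI.

    Returns the reduced matrix and the indices of the retained original columns.
    """
    vt = VarianceThreshold(threshold=var_threshold)
    X_vt = vt.fit_transform(X)
    kept = np.flatnonzero(vt.get_support())            # original indices after stage 1
    mi = partial(mutual_info_classif, random_state=seed)  # fixed seed for repeatability
    skb = SelectKBest(score_func=mi, k=min(k, X_vt.shape[1]))
    X_sel = skb.fit_transform(X_vt, y)
    return X_sel, kept[skb.get_support()]


rng = np.random.default_rng(0)
y = rng.integers(0, 2, 200)
X = np.column_stack([
    np.zeros(200),                    # constant column: removed in stage 1
    y + 0.01 * rng.normal(size=200),  # strongly label-dependent column
    rng.normal(size=200),             # pure noise
    rng.normal(size=200),             # pure noise
])
X_sel, cols = select_features(X, y, k=1)
reduction = 100 * (1 - X_sel.shape[1] / X.shape[1])  # percentage of features removed
print(cols, reduction)
```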

Table 1.

Feature statistics before and after selection.

Severe class imbalance (particularly evident in ToN-IoT and Edge-IoTset) was mitigated using the synthetic minority over-sampling technique (SMOTE). SMOTE generates synthetic minority examples by interpolating between neighboring samples in feature space, equalizing class distributions and preventing bias toward majority categories. This oversampling was applied solely to the training subset to avoid information leakage. Finally, all categorical attack labels (e.g., normal, DoS, DDoS, botnet, brute-force) were numerically encoded via LabelEncoder to establish a consistent label space for supervised learning and evaluation. Through this structured preprocessing pipeline, all datasets were standardized, balanced, and dimensionally optimized, forming a stable and reproducible foundation for subsequent modeling with the proposed HTST-DBN architecture.
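The oversampling and encoding steps are sketched below. For transparency, the SMOTE interpolation rule is written out in NumPy rather than calling imbalanced-learn; in practice `imblearn.over_sampling.SMOTE(k_neighbors=5).fit_resample(X, y)` performs this kind of interpolation for every minority class. The helper `smote_oversample`, the class names, and the toy data are hypothetical, and the oversampling is applied to a training split only, as described above.

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder


def smote_oversample(X_min, n_new, k=5, seed=0):
    """SMOTE-style oversampling of one minority class (illustrative sketch).

    Each synthetic point is x + u * (x_nn - x), where x is a random minority
    sample, x_nn is one of its k nearest minority neighbours, and u ~ U(0, 1).
    """
    rng = np.random.default_rng(seed)
    k = min(k, len(X_min) - 1)
    # Pairwise Euclidean distances within the minority class
    d = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)            # a sample is not its own neighbour
    nn = np.argsort(d, axis=1)[:, :k]      # indices of the k nearest neighbours
    base = rng.integers(0, len(X_min), n_new)
    neigh = nn[base, rng.integers(0, k, n_new)]
    u = rng.random((n_new, 1))
    return X_min[base] + u * (X_min[neigh] - X_min[base])


# Hypothetical training split: 6 "normal" rows vs 3 "botnet" rows
labels = np.array(["normal"] * 6 + ["botnet"] * 3)
X_train = np.arange(18, dtype=float).reshape(9, 2)
X_syn = smote_oversample(X_train[labels == "botnet"], n_new=3, k=2)
X_bal = np.vstack([X_train, X_syn])
y_bal = np.concatenate([labels, ["botnet"] * 3])

enc = LabelEncoder()
y_enc = enc.fit_transform(y_bal)  # codes follow sorted class names
print(enc.classes_, np.bincount(y_enc))
```

`LabelEncoder` assigns integer codes in sorted order of the class names, so the fitted mapping (`classes_`) should be stored and reused at evaluation time.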

2.2. TST

The TST is a specialized adaptation of the Transformer architecture designed to address the unique characteristics of sequential temporal data. Although standard Transformers have been widely adopted in natural language processing and, through variants such as the vision Transformer, in computer vision, they are not inherently optimized for time-dependent structures [46]. TST introduces a tailored processing pipeline consisting of linear projection, positional encoding, and multi-head self-attention to capture long-range temporal dependencies in sequential inputs. Unlike recurrent neural networks (RNNs) and convolutional neural networks (CNNs), which often struggle with vanishing gradients and limited receptive fields, respectively, TST provides a non-recursive mechanism capable of modeling complex temporal correlations over extended horizons. For anomaly detection in mobile communication networks, where traffic traces and telemetry streams naturally follow sequential patterns, the strengths of TST become particularly valuable [47]. Conventional ML or even general-purpose Transformer models often fail to capture the noisy, sparse, and highly dynamic temporal structures found across heterogeneous environments such as 5G cores, IoT telemetry, and mobile edge systems. TST addresses these limitations by (i) effectively learning long-term temporal dependencies, (ii) providing robustness against irregular and imbalanced data, and (iii) offering better generalization across multi-source and edge-enabled scenarios. These advantages make TST a crucial component of our hybrid framework, enabling reliable representation learning prior to deep feature abstraction with the DBN [48].

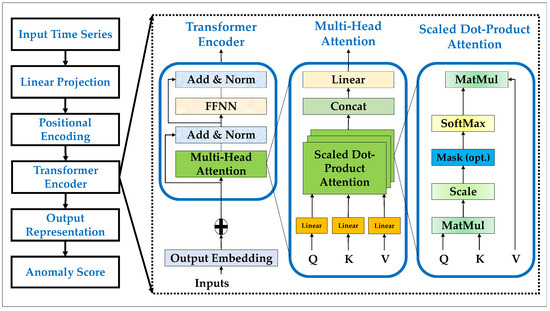

The overall structure of TST is illustrated in Figure 2, which depicts the transformation pipeline from raw input series to anomaly scores. The input time series is first mapped into a fixed-dimensional space through linear projection, after which positional encoding enriches the embeddings with temporal order information. These encoded sequences are then processed by the Transformer encoder, where the multi-head self-attention mechanism evaluates contextual relationships among elements by computing query–key–value ($Q$, $K$, $V$) interactions through scaled dot-product attention. The outputs are normalized, passed through feed-forward layers, and aggregated into final embeddings that form the output representation. From these embeddings, an anomaly score is derived, reflecting the model’s ability to highlight irregular or malicious behaviors in network traffic. This modular architecture underpins the strength of TST in modeling sequential patterns critical for anomaly detection [46,47,48].

Figure 2.

The basic TST architecture.

Equation (1) represents the mapping of a raw time series $X \in \mathbb{R}^{T \times d}$ of length $T$ and input dimension $d$ into a latent embedding space of dimension $d_{\text{model}}$:

$$Z = X W_E + b_E \tag{1}$$

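Here, $W_E \in \mathbb{R}^{d \times d_{\text{model}}}$ is the projection matrix; $b_E \in \mathbb{R}^{d_{\text{model}}}$ is the bias vector, added at every time step; and $Z \in \mathbb{R}^{T \times d_{\text{model}}}$ denotes the projected embeddings. Since the attention mechanism itself is permutation-equivariant, that is, it has no built-in notion of order, sequential order is injected using positional encoding, as shown in Equation (2) [46]:

$$H^{(0)} = Z + P \tag{2}$$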

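where $P \in \mathbb{R}^{T \times d_{\text{model}}}$ is the positional encoding matrix constructed from sinusoidal functions of varying frequencies, $P_{t,2i} = \sin\!\left(t / 10000^{2i/d_{\text{model}}}\right)$ and $P_{t,2i+1} = \cos\!\left(t / 10000^{2i/d_{\text{model}}}\right)$. Once the sequence is embedded, queries, keys, and values are obtained via linear transformations, as defined in Equation (3):

$$Q = H^{(0)} W_Q, \quad K = H^{(0)} W_K, \quad V = H^{(0)} W_V \tag{3}$$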

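Here, $W_Q, W_K, W_V \in \mathbb{R}^{d_{\text{model}} \times d_k}$ are the trainable weight matrices, and $d_k$ is the dimension of the projected query–key space. These components feed into the scaled dot-product attention, which is at the core of the self-attention mechanism. As shown in Equation (4), attention is computed by normalizing the similarity scores between queries and keys and then weighting the values accordingly [47]:

$$\operatorname{Attention}(Q, K, V) = \operatorname{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V \tag{4}$$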
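Equations (1) through (4) can be traced in a few lines of NumPy. This is a single-sequence, single-head sketch with randomly initialized weights; the sizes (`T`, `d`, `d_model`, `d_k`), the 0.1 weight scale, and the helper names are arbitrary illustrative choices, not the configuration used in this study.

```python
import numpy as np


def sinusoidal_pe(T, d_model):
    """Eq. (2): P[t, 2i] = sin(t / 10000^(2i/d_model)), P[t, 2i+1] = cos(same)."""
    pos = np.arange(T)[:, None]
    two_i = np.arange(0, d_model, 2)[None, :]
    angles = pos / np.power(10000.0, two_i / d_model)
    P = np.zeros((T, d_model))
    P[:, 0::2] = np.sin(angles)
    P[:, 1::2] = np.cos(angles[:, : d_model // 2])  # handles odd d_model too
    return P


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(Q, K, V):
    """Eq. (4): softmax(Q K^T / sqrt(d_k)) V; also returns the attention weights."""
    d_k = Q.shape[-1]
    A = softmax(Q @ K.T / np.sqrt(d_k), axis=-1)
    return A @ V, A


rng = np.random.default_rng(0)
T, d, d_model, d_k = 8, 4, 16, 8
X = rng.normal(size=(T, d))                            # toy multivariate window
W_E, b_E = rng.normal(size=(d, d_model)) * 0.1, np.zeros(d_model)
H0 = X @ W_E + b_E + sinusoidal_pe(T, d_model)         # Eqs. (1)-(2)
W_Q, W_K, W_V = (rng.normal(size=(d_model, d_k)) * 0.1 for _ in range(3))
out, A = scaled_dot_product_attention(H0 @ W_Q, H0 @ W_K, H0 @ W_V)  # Eqs. (3)-(4)
print(out.shape, A.sum(axis=1))
```

Each row of `A` is a probability distribution over the $T$ time steps, which is what lets every position weigh the whole window when forming its output.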

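In practice, multiple attention heads are used to capture diverse temporal dependencies. Equation (5) defines multi-head attention (MHA) with $h$ heads:

$$\operatorname{MHA}(H) = \operatorname{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W_O, \quad \mathrm{head}_i = \operatorname{Attention}\!\left(H W_Q^{(i)}, H W_K^{(i)}, H W_V^{(i)}\right) \tag{5}$$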

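Here, $\mathrm{head}_i$ is an independent attention output computed with its own projections $W_Q^{(i)}, W_K^{(i)}, W_V^{(i)}$ applied to the layer input $H$, and $W_O \in \mathbb{R}^{h d_k \times d_{\text{model}}}$ projects the concatenated outputs back into the model dimension. The result is passed through a position-wise feed-forward network (FFN), as defined in Equation (6):

$$\operatorname{FFN}(x) = \max(0,\, x W_1 + b_1)\, W_2 + b_2 \tag{6}$$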

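where $W_1 \in \mathbb{R}^{d_{\text{model}} \times d_{ff}}$ and $W_2 \in \mathbb{R}^{d_{ff} \times d_{\text{model}}}$ are weight matrices, $b_1$ and $b_2$ are bias vectors, and $x$ is the representation at a single time step. This non-linear transformation further enriches the learned representation. To stabilize training and preserve gradient flow, residual connections combined with layer normalization are applied after each sublayer, as captured in Equation (7):

$$H' = \operatorname{LayerNorm}\big(H + \operatorname{Sublayer}(H)\big) \tag{7}$$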

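where $\operatorname{Sublayer}(\cdot)$ denotes either the multi-head attention block or the FFN. By stacking $L$ such encoder layers, the model produces the final sequence representation, as described in Equation (8):

$$H^{(l)} = \operatorname{EncoderLayer}\big(H^{(l-1)}\big), \quad l = 1, \ldots, L \tag{8}$$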

Here, $H^{(L)} \in \mathbb{R}^{T \times d_{\text{model}}}$ captures the contextual embeddings of the time series after all $L$ layers.
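A minimal NumPy sketch of Equations (5) through (8) follows. Assumptions: post-norm residual blocks as in Equation (7), ReLU in the FFN, LayerNorm without learnable gain and bias, no dropout, and random weights; the helper names (`init_layer`, `encode`, and so on) are hypothetical. Because these layers carry no positional information of their own, reversing the input rows simply reverses the output rows, which is why Equation (2) adds $P$ before the first layer.

```python
import numpy as np


def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


def layer_norm(x, eps=1e-5):
    # Per-position normalisation; learnable gain/bias omitted for brevity
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def init_layer(rng, d_model, n_heads, d_ff, scale=0.1):
    d_k = d_model // n_heads
    return {
        "W_Q": rng.normal(size=(n_heads, d_model, d_k)) * scale,
        "W_K": rng.normal(size=(n_heads, d_model, d_k)) * scale,
        "W_V": rng.normal(size=(n_heads, d_model, d_k)) * scale,
        "W_O": rng.normal(size=(n_heads * d_k, d_model)) * scale,
        "W_1": rng.normal(size=(d_model, d_ff)) * scale, "b_1": np.zeros(d_ff),
        "W_2": rng.normal(size=(d_ff, d_model)) * scale, "b_2": np.zeros(d_model),
    }


def mha(H, p):
    """Eq. (5): per-head scaled dot-product attention, concatenated, projected by W_O."""
    heads = []
    for Wq, Wk, Wv in zip(p["W_Q"], p["W_K"], p["W_V"]):
        Q, K, V = H @ Wq, H @ Wk, H @ Wv
        heads.append(softmax(Q @ K.T / np.sqrt(Q.shape[-1])) @ V)
    return np.concatenate(heads, axis=-1) @ p["W_O"]


def ffn(H, p):
    """Eq. (6): position-wise two-layer network with ReLU."""
    return np.maximum(0.0, H @ p["W_1"] + p["b_1"]) @ p["W_2"] + p["b_2"]


def encoder_layer(H, p):
    """Eq. (7) applied after both sublayers (post-norm residual blocks)."""
    H = layer_norm(H + mha(H, p))
    return layer_norm(H + ffn(H, p))


def encode(H0, layers):
    """Eq. (8): H^(l) = EncoderLayer(H^(l-1)) for l = 1..L."""
    H = H0
    for p in layers:
        H = encoder_layer(H, p)
    return H


rng = np.random.default_rng(1)
T, d_model, n_heads, d_ff, L = 8, 16, 4, 32, 2
layers = [init_layer(rng, d_model, n_heads, d_ff) for _ in range(L)]
H0 = rng.normal(size=(T, d_model))  # stands in for the embedded input of Eq. (2)
H_L = encode(H0, layers)
print(H_L.shape)
```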

Finally, anomaly detection requires mapping the final embeddings $H^{(L)}$ into a task-specific score $s$, as formulated in Equation (9) [48]:

$$s = g\big(H^{(L)}\big) \tag{9}$$

where $g(\cdot)$ may represent a classification head or a reconstruction error metric, depending on whether the task is supervised or unsupervised.
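The two readings of the scoring function $g(\cdot)$ can be illustrated with a short sketch. Both variants are hypothetical illustrations rather than the paper’s head: the supervised one assumes mean pooling over time followed by a logistic output, the unsupervised one assumes that some decoder has already produced a reconstruction `X_hat`, and the decision threshold is left to the user.

```python
import numpy as np

def classification_score(H, w, b):
    """Supervised g: mean-pool over time, then a logistic head giving P(anomaly)."""
    h_bar = H.mean(axis=0)                               # (T, d_model) -> (d_model,)
    return float(1.0 / (1.0 + np.exp(-(h_bar @ w + b))))

def reconstruction_score(X, X_hat):
    """Unsupervised g: mean squared error between a window and its reconstruction."""
    return float(np.mean((np.asarray(X) - np.asarray(X_hat)) ** 2))

def flag(scores, threshold):
    """Mark windows whose score exceeds the chosen threshold as anomalous."""
    return np.asarray(scores) > threshold

print(classification_score(np.zeros((16, 32)), np.ones(32), 0.0))  # 0.5
print(flag([0.2, 0.9], threshold=0.5))
```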

Recent research has further advanced attention-based frameworks by integrating physical constraints and structural optimization to enhance interpretability, robustness, and computational efficiency. These studies demonstrate that attention mechanisms can effectively generalize across diverse time series and perception tasks, confirming their suitability for real-world IoT and intelligent monitoring applications [49,50]. Such findings further justify the adoption of the TST architecture in the proposed hybrid framework.

2.3. DBN

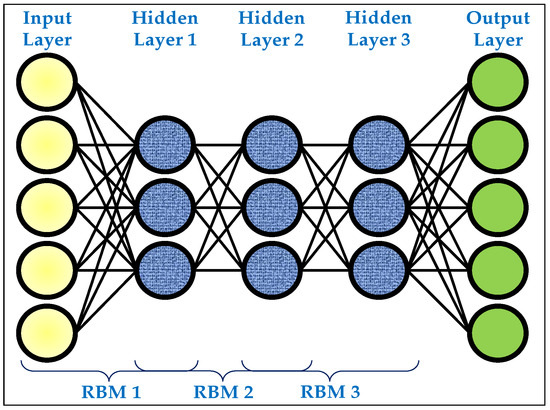

The DBN was first introduced by Hinton and colleagues as a generative probabilistic model composed of multiple layers of stochastic latent variables. A DBN is built by stacking restricted Boltzmann machines (RBMs), where each RBM learns one level of a hierarchical representation of the input data in an unsupervised manner [51]. Through greedy layer-wise pretraining followed by supervised fine-tuning, DBNs can capture increasingly abstract features from raw data. This hierarchical structure enables DBNs to address challenges such as non-linearity, high dimensionality, and limited labeled data, which are common in many real-world scenarios. By combining generative learning with discriminative fine-tuning, DBNs have been successfully applied in diverse domains such as speech recognition, image classification, and network traffic modeling. The primary advantages of DBNs lie in their ability to model complex probability distributions and to extract deep feature representations without requiring large-scale labeled datasets. Compared with shallow models or traditional machine learning techniques, DBNs have been reported to offer stronger generalization, robustness to noise, and improved performance on imbalanced or heterogeneous datasets. These properties make them particularly suitable for network anomaly detection, where traffic traces are often high-dimensional, noisy, and partially labeled [52].

Figure 3 illustrates the structure of a DBN, where the network is formed by sequentially stacking RBMs. The architecture begins with an input layer that feeds raw data into the first RBM. Each RBM consists of a visible layer and a hidden layer, where the hidden activations from one RBM serve as the visible inputs for the next, progressively forming deeper feature abstractions. The figure shows three hidden layers, highlighting how low-level features extracted in the early layers are transformed into increasingly complex and abstract representations at deeper layers. Finally, the output layer is connected to the last hidden layer to support supervised tasks such as classification. This hierarchical organization allows DBNs to capture both local patterns and high-level dependencies in the data. Through unsupervised pretraining of each RBM, the model learns robust feature representations even in the absence of abundant labeled data. When fine-tuned with supervised learning, the DBN becomes capable of leveraging both its generative foundations and discriminative power, making it especially effective for anomaly detection tasks in mobile communication networks where data is often noisy, high-dimensional, and only partially labeled [52,53,54].

Figure 3.

The generic structure of a DBN.

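The foundation of a DBN lies in the RBM, which defines the relationship between visible and hidden variables. Equation (10) presents the energy function of an RBM, which assigns a scalar energy to each joint configuration of visible and hidden units:

$$E(\mathbf{v}, \mathbf{h}) = -\mathbf{b}^{\top}\mathbf{v} - \mathbf{c}^{\top}\mathbf{h} - \mathbf{v}^{\top} W \mathbf{h} \tag{10}$$

Here, $\mathbf{v}$ denotes the visible vector; $\mathbf{h}$ the hidden vector; $W$ the weight matrix connecting visible and hidden units; and $\mathbf{b}$ and $\mathbf{c}$ the bias vectors for the visible and hidden layers, respectively. Building upon the energy function, the joint probability distribution of visible and hidden units is defined by the Boltzmann distribution. Equations (11) and (12) formalize this distribution [53]:

$$P(\mathbf{v}, \mathbf{h}) = \frac{1}{Z}\, e^{-E(\mathbf{v}, \mathbf{h})} \tag{11}$$

$$Z = \sum_{\mathbf{v}, \mathbf{h}} e^{-E(\mathbf{v}, \mathbf{h})} \tag{12}$$

In this expression, $Z$ is the partition function, which ensures that the probabilities are properly normalized. From the joint distribution, the marginal probability of a visible vector can be derived. Equation (13) shows this marginal likelihood:

$$P(\mathbf{v}) = \frac{1}{Z} \sum_{\mathbf{h}} e^{-E(\mathbf{v}, \mathbf{h})} \tag{13}$$

This expression quantifies how likely an observed input is under the RBM by summing over all possible hidden configurations. To enable efficient training, DBNs exploit the fact that, in an RBM, the hidden units are conditionally independent given the visible layer, and the visible units are conditionally independent given the hidden layer. Equation (14) expresses the conditional probability of a hidden unit given the visible vector [54]:

$$P(h_j = 1 \mid \mathbf{v}) = \sigma\Big(c_j + \sum_i v_i W_{ij}\Big) \tag{14}$$

where $\sigma(x) = 1/(1 + e^{-x})$ is the logistic sigmoid function. Similarly, the reconstruction of visible units from hidden states is captured in Equation (15):

$$P(v_i = 1 \mid \mathbf{h}) = \sigma\Big(b_i + \sum_j W_{ij} h_j\Big) \tag{15}$$

These conditional probabilities provide the basis for the contrastive divergence learning algorithm, which approximates the gradient of the log-likelihood. Finally, by stacking multiple RBMs, a DBN is formed in which the hidden activations of one layer serve as the visible inputs of the next. Equation (16) summarizes the layer-wise generative structure:

$$P(\mathbf{v}, \mathbf{h}^1, \ldots, \mathbf{h}^l) = P(\mathbf{v} \mid \mathbf{h}^1) \left(\prod_{k=1}^{l-2} P(\mathbf{h}^k \mid \mathbf{h}^{k+1})\right) P(\mathbf{h}^{l-1}, \mathbf{h}^l) \tag{16}$$

In this formulation, $\mathbf{h}^1, \ldots, \mathbf{h}^l$ represent the hidden layers of the DBN, with higher layers modeling increasingly abstract features [52].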
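The conditional probabilities in Equations (14) and (15) are all that one-step contrastive divergence (CD-1) needs, and greedy stacking then follows directly. The NumPy sketch below assumes binary (Bernoulli) units, full-batch updates, and mean-field hidden probabilities as the input to the next layer; it covers unsupervised pretraining only, not supervised fine-tuning, and the names `RBM` and `pretrain_dbn` are hypothetical. Real-valued traffic features would first need scaling to [0, 1] for Bernoulli visible units.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class RBM:
    """Bernoulli-Bernoulli RBM; b is the visible bias, c the hidden bias (Eq. (10))."""
    def __init__(self, n_visible, n_hidden, rng):
        self.rng = rng
        self.W = rng.normal(0, 0.01, (n_visible, n_hidden))
        self.b = np.zeros(n_visible)
        self.c = np.zeros(n_hidden)

    def energy(self, v, h):                  # Eq. (10), one value per row
        return -(v @ self.b) - (h @ self.c) - np.sum((v @ self.W) * h, axis=1)

    def p_h_given_v(self, v):                # Eq. (14)
        return sigmoid(v @ self.W + self.c)

    def p_v_given_h(self, h):                # Eq. (15)
        return sigmoid(h @ self.W.T + self.b)

    def cd1_step(self, v0, lr=0.1):
        """One CD-1 update on a batch v0 of shape (n, n_visible)."""
        ph0 = self.p_h_given_v(v0)
        h0 = (self.rng.random(ph0.shape) < ph0).astype(float)   # sample hidden states
        pv1 = self.p_v_given_h(h0)                              # one Gibbs step back
        ph1 = self.p_h_given_v(pv1)
        n = v0.shape[0]
        # positive-phase minus negative-phase statistics approximate the gradient
        self.W += lr * (v0.T @ ph0 - pv1.T @ ph1) / n
        self.b += lr * (v0 - pv1).mean(axis=0)
        self.c += lr * (ph0 - ph1).mean(axis=0)
        return float(np.mean((v0 - pv1) ** 2))                 # reconstruction error

def pretrain_dbn(data, layer_sizes, epochs=50, lr=0.1, seed=0):
    """Greedy layer-wise pretraining: each RBM's hidden probabilities feed the next RBM."""
    rng = np.random.default_rng(seed)
    rbms, x = [], data
    for n_hidden in layer_sizes:
        rbm = RBM(x.shape[1], n_hidden, rng)
        for _ in range(epochs):
            rbm.cd1_step(x, lr)
        rbms.append(rbm)
        x = rbm.p_h_given_v(x)
    return rbms, x

rng = np.random.default_rng(1)
data = (rng.random((200, 12)) > 0.5).astype(float)            # toy binary inputs
rbms, top = pretrain_dbn(data, layer_sizes=[8, 4], epochs=20)
print(top.shape)  # (200, 4)
```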

2.4. IOA

The orchard algorithm (OA) is a relatively recent population-based meta-heuristic optimization method, first introduced by Kaveh et al. in 2023 [55]. Inspired by the natural process of cultivating and managing orchards, it treats candidate solutions as trees that undergo different stages of growth and renewal. OA was designed to address complex optimization problems across engineering, computer science, and data-driven applications. Its main reported advantages include a balance between exploration and exploitation, the ability to escape local optima, and adaptability to both continuous and discrete search spaces. By mimicking realistic agricultural operations, OA introduces search dynamics that differ from those of classical algorithms such as particle swarm optimization (PSO) or the genetic algorithm (GA), which has been reported to yield more diverse candidate solutions and faster convergence [55].

The algorithm proceeds through several operators in each iteration. The growth operator enables each solution to evolve toward stronger candidates by introducing controlled random variations. The screening operator evaluates and filters weaker trees to maintain population quality. The grafting operator combines genetic material from strong and medium-quality candidates to generate promising offspring. The pruning operator randomly replaces underperforming solutions with new candidates to increase diversity. Finally, the elitism mechanism ensures that the best-performing solutions are preserved for the next generation. A single iteration therefore involves generating new solutions through growth and grafting, maintaining diversity via pruning, filtering candidates through screening, and securing the global best through elitism. This cycle is repeated until convergence, effectively imitating the management of a thriving orchard. The mathematical formulation of the OA begins with the growth operator. Equation (17) models the annual growth of a tree, where each candidate solution is updated by adding a growth factor and a random component that introduces variability in the growth direction. This mechanism ensures that solutions are not static but continuously adapt toward better regions of the search space [55].

In Equation (17), the new solution is obtained from the current position of the tree, the growth factor, and a random variable that introduces variability in the direction of growth.

The overall evaluation of solutions is governed by the fitness function. Equations (18)–(21) define the total fitness of each candidate by combining two complementary measures: the normalized fitness value and the growth rate over multiple years. Unlike many meta-heuristics that rely solely on fitness magnitude, OA introduces a distinctive objective that simultaneously considers both the quality of the current solution and its growth trajectory across generations. This dual emphasis enables a more balanced exploration of the search space and provides resilience against premature convergence [55].

In Equations (18)–(21), the total fitness of the j-th candidate is obtained by combining its normalized fitness function value with its normalized growth rate through two weighting factors (α and β). The growth rate of each solution is accumulated over the growth years, with a weight assigned to each year, up to the total number of growth years considered.
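One way to realize such a quality-plus-growth objective is sketched below. The specific choices are assumptions for illustration and may differ from Equations (18)–(21) and [55]: min-max normalization, the growth rate taken as the year-to-year improvement weighted linearly toward recent years, a weighted sum of the two terms, and hypothetical defaults α = 0.6 and β = 0.4 (not the values tuned in this study). Fitness is treated as a quantity to minimize.

```python
import numpy as np

def minmax(x):
    x = np.asarray(x, dtype=float)
    span = x.max() - x.min()
    return np.zeros_like(x) if span == 0 else (x - x.min()) / span

def total_fitness(history, alpha=0.6, beta=0.4):
    """history: (Y+1, N) fitness values (lower is better) of N trees over Y growth years.
    Returns one score per tree; higher is better."""
    history = np.asarray(history, dtype=float)
    improvements = history[:-1] - history[1:]        # > 0 when a tree's fitness improved
    Y = improvements.shape[0]
    year_w = np.arange(1, Y + 1) / np.arange(1, Y + 1).sum()   # recent years weigh more
    growth = year_w @ improvements                   # weighted growth rate per tree
    f_norm = 1.0 - minmax(history[-1])               # best current fitness -> 1
    g_norm = minmax(growth)                          # fastest-growing tree -> 1
    return alpha * f_norm + beta * g_norm

print(total_fitness([[5, 5, 5], [4, 5, 6], [2, 5, 6]]))
```

In this toy history the first tree is both the best and the fastest improver, so it receives the top score, while a tree whose fitness worsened is ranked last even though it started level with the others.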

Beyond growth and fitness evaluation, additional operators further refine the population. Equation (22) formulates the grafting process, which blends the characteristics of a strong candidate with those of a medium-quality one to generate new offspring. This controlled recombination promotes the inheritance of favorable traits while maintaining diversity. Equation (23) represents the pruning step, where weak or stagnant candidates are replaced by randomly generated solutions within the search boundaries, ensuring the population does not lose exploratory capability [55].

In Equations (22) and (23), the grafting solution is formed from the positions of the medium-quality candidate and the stronger candidate, with a blending coefficient determining the contribution of each parent, while the pruning step generates a new random solution within the lower and upper bounds of the search space.
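A minimal sketch of the two operators follows, assuming that grafting is a convex blend of the two parents and that pruning draws a point uniformly inside the search box. These are common choices for such operators, not necessarily the exact expressions of [55], and the function names are hypothetical.

```python
import numpy as np

def graft(x_strong, x_medium, lam):
    """Blend two parents; lam in [0, 1] is the share inherited from the strong parent."""
    return lam * np.asarray(x_strong, float) + (1.0 - lam) * np.asarray(x_medium, float)

def prune(lb, ub, rng):
    """Re-plant a weak tree as a uniform random point inside the box [lb, ub]."""
    lb, ub = np.asarray(lb, float), np.asarray(ub, float)
    return lb + rng.random(lb.shape) * (ub - lb)

rng = np.random.default_rng(0)
print(graft([1.0, 1.0], [0.0, 0.0], lam=0.25))   # [0.25 0.25]
print(prune([-1.0, 0.0], [1.0, 10.0], rng))
```

Because the blend is convex, a grafted tree always lies on the segment between its parents, so grafting intensifies the search while pruning is the only operator here that can jump anywhere in the box.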

Through these mathematical formulations, the OA completes a cycle of growth, evaluation, grafting, and pruning, while elitism preserves the best-performing solutions for the next iteration. This structured process balances intensification and diversification, driving the search toward global optima. Although the OA offers a fresh perspective on population-based optimization, it still faces challenges in fully balancing exploration and exploitation. In some cases, the growth and grafting processes may bias the search toward intensification around promising solutions, reducing the diversity needed to escape local optima. Conversely, the pruning and random replacement steps, while useful for exploration, can occasionally disrupt convergence by introducing excessive randomness. Because the OA is a newly developed algorithm, its mechanisms for maintaining this balance are not yet as mature or well validated as those of more established meta-heuristics, leaving considerable room for refinement and extension to improve its robustness across complex optimization landscapes.

To address this limitation, a novel extension of the OA is introduced by incorporating a seasonal migration operator inspired by the natural cycles of seasonal change in orchards. This operator is designed to reinforce both exploration and exploitation in a controlled and adaptive manner, thereby counteracting the tendency of the original algorithm to either converge prematurely or drift randomly in the search space.

The seasonal migration operator functions by alternately guiding candidate solutions toward either the global best solution (exploitation) or distant, less similar solutions (exploration). By probabilistically switching between these two modes, the operator maintains population diversity while simultaneously intensifying the search around high-quality regions. When the probability favors exploitation, the search is refined around the global best, accelerating convergence. Conversely, when the probability favors exploration, the operator directs the search toward distant candidates, effectively expanding the search horizon and reducing the risk of stagnation. This adaptive dual behavior ensures that both key components of meta-heuristic optimization are continuously addressed throughout the iterative process. Formally, the seasonal migration operator is defined in Equations (24) and (25):

In Equations (24) and (25), the position of each solution at a given iteration is moved either toward the current global best solution or toward a candidate selected from the most dissimilar individuals in the population. A learning-rate parameter controls the migration step size, and the probability of exploitation versus exploration evolves over the total number of iterations according to a transition whose curvature is controlled by a shape parameter. Algorithm 1 presents the pseudo-code of the IOA.
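The operator can be sketched as follows under explicitly hypothetical choices: the exploitation probability follows a power-law schedule $p(t) = p_{\min} + (p_{\max} - p_{\min})(t/T)^k$ with illustrative values $p_{\min} = 0.2$, $p_{\max} = 0.9$, $k = 2$; each step moves a tree toward its target by the learning rate `eta` times a uniform random factor; and “most dissimilar” is read as the `m` trees farthest in Euclidean distance. None of these values comes from the study.

```python
import numpy as np

def exploit_probability(t, T, p_min=0.2, p_max=0.9, k=2.0):
    """Hypothetical schedule: exploitation becomes more likely as t approaches T."""
    return p_min + (p_max - p_min) * (t / T) ** k

def seasonal_migration(pop, fitness, t, T, eta=0.5, m=3, rng=None):
    """pop: (N, D) positions; fitness: (N,), lower is better. Returns moved copies."""
    rng = np.random.default_rng() if rng is None else rng
    g_best = pop[np.argmin(fitness)]
    p = exploit_probability(t, T)
    new_pop = pop.copy()
    for i, x in enumerate(pop):
        if rng.random() < p:
            target = g_best                                    # exploitation branch
        else:
            dist = np.linalg.norm(pop - x, axis=1)
            far = np.argsort(dist)[-m:]                        # m most dissimilar trees
            target = pop[rng.choice(far)]                      # exploration branch
        new_pop[i] = x + eta * rng.random(x.shape) * (target - x)
    return new_pop

rng = np.random.default_rng(0)
pop = rng.uniform(-5, 5, (20, 2))
fit = np.sum(pop ** 2, axis=1)
early = seasonal_migration(pop, fit, t=10, T=300, rng=rng)    # mostly exploratory moves
late = seasonal_migration(pop, fit, t=290, T=300, rng=rng)    # mostly exploitative moves
```

With this schedule, early iterations mostly pull trees toward distant individuals (the symmetry-breaking branch), while late iterations mostly pull them toward the global best; `k` sets how abruptly the switch happens.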

| Algorithm 1. Pseudo-code of the improved OA |
|---|
| Begin IOA |
| %% Setting |
| Initialize the number of strong/medium/weak trees, α, β, population size, and maximum iteration |
| %% Initial population |
| for n = 1 to population size do |
| Create population (trees) |
| Calculate the fitness function |
| end |
| %% Main loop |
| for i = 1 to Max iteration do |
| %% Elitism |
| Sort population |
| Select elites |
| %% Growth |
| for j = 1 to population size do |
| Grow tree j based on Equation (17) |
| Calculate the fitness function |
| end |
| %% Screening |
| Save previous populations for the growth rate |
| for j = 1 to population size do |
| Calculate the normalized fitness function based on Equation (19) |
| Calculate the normalized growth rate based on Equation (21) |
| Calculate the total fitness function based on Equation (18) |
| Screening: assign tree j to the strong, medium, or weak group |
| end |
| %% Grafting |
| for j = 1 to population size do |
| Apply grafting based on Equation (22) |
| Calculate the fitness function |
| end |
| %% Pruning |
| for p = 1 to population size do |
| Apply pruning based on Equation (23) |
| Calculate the fitness function |
| end |
| %% Seasonal migration |
| for j = 1 to population size do |
| Apply seasonal migration based on Equations (24) and (25) |
| Calculate the fitness function |
| end |
| Sort the new population |
| Show the best solution |
| end |
| End IOA |
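The control flow of Algorithm 1 can be condensed into a runnable Python sketch. It is a simplified, hypothetical rendering, not the authors’ implementation: trees are split into three equal groups, the growth rate uses only the last two fitness snapshots, growth is assumed to be a bounded random step kept only if it improves the tree, and grafting, pruning, and seasonal migration use the same assumed forms as the sketches above. A cheap toy objective (the sphere function) stands in for the real fitness; when tuning HTST-DBN, `f` would instead train and validate a model for each candidate, which is far more expensive.

```python
import numpy as np

def ioa_minimize(f, lb, ub, n_trees=30, iters=100, alpha=0.7, beta=0.3,
                 growth=0.1, eta=0.5, seed=0):
    """Hypothetical, compact IOA loop minimizing f over the box [lb, ub]."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(lb, float), np.asarray(ub, float)
    best = {"x": None, "f": np.inf}

    def evaluate(P):
        vals = np.apply_along_axis(f, 1, P)
        k = int(np.argmin(vals))
        if vals[k] < best["f"]:                      # track the best solution ever seen
            best["x"], best["f"] = P[k].copy(), float(vals[k])
        return vals

    def norm(v):
        span = v.max() - v.min()
        return np.zeros_like(v) if span == 0 else (v - v.min()) / span

    X = lb + rng.random((n_trees, lb.size)) * (ub - lb)
    fit = evaluate(X)
    third = n_trees // 3
    for t in range(1, iters + 1):
        prev = fit.copy()
        # Growth (assumed form): bounded random step, kept only if it improves the tree
        cand = np.clip(X + growth * rng.uniform(-1, 1, X.shape) * (ub - lb), lb, ub)
        cand_fit = evaluate(cand)
        better = cand_fit < fit
        X[better], fit[better] = cand[better], cand_fit[better]
        # Screening: weighted sum of normalized quality and normalized growth
        score = alpha * (1 - norm(fit)) + beta * norm(prev - fit)
        order = np.argsort(-score)
        strong, medium, weak = order[:third], order[third:2 * third], order[2 * third:]
        # Grafting: blend each medium tree with a randomly chosen strong tree
        for i in medium:
            lam = rng.random()
            X[i] = lam * X[rng.choice(strong)] + (1 - lam) * X[i]
        # Pruning: re-plant weak trees uniformly inside the bounds
        X[weak] = lb + rng.random((weak.size, lb.size)) * (ub - lb)
        fit = evaluate(X)
        # Seasonal migration (assumed schedule): exploitation becomes likelier over time
        p = 0.2 + 0.7 * (t / iters) ** 2
        g_best = X[np.argmin(fit)].copy()
        for i in range(n_trees):
            if rng.random() < p:
                target = g_best                          # exploit: toward the global best
            else:
                far = np.argsort(np.linalg.norm(X - X[i], axis=1))[-3:]
                target = X[rng.choice(far)]              # explore: toward a dissimilar tree
            X[i] = X[i] + eta * rng.random(lb.size) * (target - X[i])
        fit = evaluate(X)
        # Elitism: the best solution found so far replaces the current worst tree
        worst = int(np.argmax(fit))
        X[worst], fit[worst] = best["x"], best["f"]
    return best["x"], best["f"]

x_best, f_best = ioa_minimize(lambda x: float(np.sum(x ** 2)), lb=[-5, -5], ub=[5, 5])
print(x_best, f_best)
```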

By incorporating this operator into the OA, the improved framework adaptively alternates between convergence and diversification, leading to a more reliable and effective optimization process. From an optimization-theoretic standpoint, the seasonal-migration operator serves as a controlled symmetry-breaking mechanism within the evolutionary population. During early iterations, when the candidate solutions tend to cluster around symmetric basins of attraction (regions representing repetitive or equivalent states in the search space), the exploratory branch introduces deliberate asymmetry by guiding individuals toward distant, non-similar solutions. This mechanism disrupts excessive regularity and injects diversity, preventing the optimizer from being confined to repetitive trajectories or shallow local minima. In later iterations, when convergence becomes desirable, the exploitative branch restores useful symmetries by aligning the population around the best-performing configurations. Hence, the operator dynamically alternates between symmetry preservation and symmetry breaking, enabling the IOA to maintain a self-regulated balance between exploration and exploitation.

This adaptive interplay between symmetric and asymmetric behaviors has broader implications beyond the current anomaly detection task. In complex engineered systems, such as electronic design automation (EDA) processes, optimization problems often involve symmetric structures (e.g., mirrored circuit topologies, balanced transistor pairs, or repeated layout cells) that ensure predictable and stable performance. However, controlled asymmetry is equally valuable, as it allows the design process to escape performance plateaus or to compensate for process variations and parasitic mismatches. The same principle realized in the IOA’s seasonal-migration operator can thus be leveraged to guide symmetry-aware optimization in circuit or system design, where breaking non-beneficial regularities while preserving functional balance leads to superior efficiency, robustness, and adaptability.

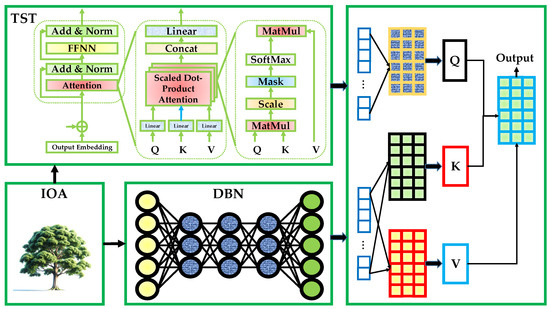

2.5. Proposed HTST-DBN

Figure 4 depicts the end-to-end HTST-DBN pipeline. The TST encoder converts preprocessed sequences into context-aware embeddings via multi-head self-attention (Q/K/V projections, scaled dot-product attention, feed-forward blocks, and residual normalization). The IOA operates outside the forward path to tune hyper-parameters and architectural knobs. The DBN consumes the TST embeddings and learns hierarchical, generative abstractions through stacked RBMs. The right-hand panel highlights the Q/K/V flow culminating in an anomaly score (or class) at the output. In the proposed HTST-DBN, the TST is responsible for modeling temporal dependencies and contextual interactions in network traffic/telemetry. It projects each sequence into a fixed-dimensional space, injects temporal order via positional encodings, and aggregates information across time using multi-head attention, enabling the model to capture both short-term bursts (e.g., DDoS spikes) and long-range trends (e.g., slow drift in IoT sensors). This is essential for heterogeneous, noisy streams typical of mobile networks, where dependencies span multiple time scales and sources.

Figure 4.

The proposed HTST-DBN architecture.

The DBN serves as a deep feature abstraction module on top of the TST embeddings. By stacking RBMs and leveraging generative pretraining followed by fine-tuning, the DBN uncovers latent structure and denoises the representation, yielding features that are robust to sparsity, missing values, and class imbalance. In practice, the DBN sharpens the separation between normal and anomalous regimes. IOA performs hyper-parameter/architecture search for the hybrid stack, optimizing (for example) TST depth/width, attention heads, learning rates, DBN layer sizes, and regularization strength. Unlike conventional optimizers, IOA evaluates candidates using a composite objective that accounts not only for the current validation fitness but also for the growth rate of that fitness across recent “years” (iterations), as formalized in Equations (18)–(21). To further strengthen search dynamics, a Seasonal Migration operator is incorporated, probabilistically alternating movements toward the global best (exploitation) and toward distant, dissimilar candidates (exploration). Together with growth, grafting, pruning, screening, and elitism, this yields a stable yet diverse search over the TST–DBN design space.

The hybrid design exploits complementary strengths: TST excels at sequence-level context aggregation; DBN excels at generative abstraction and denoising; IOA adaptively discovers configurations that generalize across heterogeneous data. Empirically, this synergy improves detection accuracy, stability under noise/imbalance, and transferability across datasets, yielding a practical, scalable solution for anomaly detection in next-generation mobile networks.

To integrate the temporal representation of the TST with the hierarchical abstraction capability of the DBN, an intermediate projection mechanism was applied. The final encoder output of the TST, $H^{(L)} \in \mathbb{R}^{T \times d_{\text{model}}}$, was first reduced to a one-dimensional feature vector $\bar{\mathbf{h}} \in \mathbb{R}^{d_{\text{model}}}$ through global average pooling across the temporal axis. When the temporal length exceeded 50, a dense projection layer with a learnable weight matrix was employed to map the transformer embedding space into the input dimension of the first RBM in the DBN, so that the transformer embedding dimension and the RBM input dimension match, ensuring smooth dimensional continuity between the two submodules. This projection not only compresses the temporal embeddings into a compact feature representation but also preserves high-order dependencies learned by the TST attention heads before hierarchical feature abstraction in the DBN model.
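The pooling-and-projection bridge can be sketched in a few lines of NumPy. The sketch follows the length rule stated in the text literally (projection only when the window is longer than 50 steps) and otherwise assumes the pooled vector already matches the RBM input size; the function name `tst_to_dbn`, the weights, and the 16-unit RBM input are hypothetical placeholders.

```python
import numpy as np

def tst_to_dbn(H, W_p, b_p, max_len=50):
    """Pool TST output over time; project to the first RBM's input size when T > max_len."""
    h_bar = H.mean(axis=0)                     # global average pooling: (T, d_model) -> (d_model,)
    if H.shape[0] > max_len:                   # length condition as stated in the text
        return h_bar @ W_p + b_p               # dense projection: (d_model,) -> (n_visible,)
    return h_bar

rng = np.random.default_rng(0)
H = rng.normal(size=(64, 32))                  # hypothetical TST output, T = 64, d_model = 32
W_p, b_p = rng.normal(0, 0.1, (32, 16)), np.zeros(16)   # hypothetical 16-unit RBM input
print(tst_to_dbn(H, W_p, b_p).shape)  # (16,)
```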

To maintain gradient stability during joint training and to prevent vanishing gradients across the deep hierarchical layers, several optimization strategies were integrated into the HTST-DBN training process. First, residual normalization was preserved in each TST encoder block, which allows stable gradient propagation through the self-attention layers. Second, the DBN parameters were pre-trained layer by layer using one-step contrastive divergence (CD-1) before end-to-end fine-tuning, so that each RBM layer starts from weights that already assign low energy to the training data rather than from a random initialization. During joint optimization, dropout (rate = 0.3) was used to limit overfitting, and adaptive learning-rate scheduling was employed to mitigate gradient explosion and ensure smooth convergence. Additionally, the IOA dynamically tuned the learning rates and weight decay parameters of both sub-networks, allowing synchronized updates between the Transformer and the DBN during fine-tuning. Together, these mechanisms stabilized the backward pass, accelerated convergence, and mitigated gradient vanishing even under deep model configurations.

3. Results

All experiments were implemented in Python 3.10, using a combination of well-established libraries tailored to deep learning and optimization. The hybrid framework was built with TensorFlow 2.11 and Keras 2.11 for implementing the Time Series Transformer and DBN components, while NumPy 1.23, Pandas 1.5, and Scikit-learn 1.2 were employed for data preprocessing, normalization, and evaluation. For optimization and metaheuristic routines, including the IOA, custom modules were developed and integrated with SciPy 1.10. Visualization and performance analysis were carried out using Matplotlib 3.7 and Seaborn 0.12, ensuring reproducibility of figures and statistical plots. The experiments were executed on a workstation equipped with an Intel Core i9-12900K CPU @ 3.2 GHz, 64 GB RAM, and an NVIDIA RTX 3090 GPU with 24 GB memory. This hardware configuration provided sufficient computational capacity to train the hybrid HTST-DBN model on large-scale datasets such as CICDDoS2019 and ToN-IoT, while also supporting extensive hyper-parameter tuning through IOA.

To comprehensively evaluate the effectiveness of the proposed HTST-DBN framework, its performance was benchmarked against a diverse set of state-of-the-art algorithms, including TST, BERT, DBN, DRL, CNN, and RF. These baselines were chosen to represent different families of anomaly detection approaches: transformer-based architectures (TST, BERT), deep generative networks (DBN), reinforcement learning (DRL), convolutional networks (CNN), and classical ML classifiers (RF). Comparing against this broad spectrum allows us to validate the generalization ability and robustness of the proposed hybrid design. In particular, TST and DBN were also evaluated as standalone models in order to assess their individual strengths and weaknesses, and to demonstrate the incremental benefits of combining temporal modeling with deep feature abstraction.

Each baseline contributes unique capabilities that make the comparison both fair and insightful. TST captures long-range temporal dependencies in sequential data, while BERT offers powerful contextual representation learning adapted from NLP to sequential network traffic. DBN is effective in generative feature learning, while DRL provides adaptive decision-making under dynamic environments, reflecting realistic mobile and IoT network scenarios. CNN excels at extracting spatially local patterns and has proven effective in intrusion detection, while RF serves as a strong classical benchmark due to its ability to handle noisy and heterogeneous features. Beyond these baselines, the hybrid model was also trained using alternative meta-heuristic algorithms for hyper-parameter optimization, such as GA and PSO, to explicitly demonstrate the advantages of the proposed IOA. This comparative design ensures that improvements observed with HTST-DBN are not simply due to architectural complexity but stem from the synergy between TST, DBN, and IOA, making the evaluation rigorous and comprehensive.

For the evaluation of all algorithms, a comprehensive set of performance metrics was employed, including accuracy, recall, area under the curve (AUC), root mean square error (RMSE), execution time, variance, and the t-test. Together, these metrics allow us to assess not only classification effectiveness but also the stability, computational efficiency, and statistical reliability of the proposed HTST-DBN compared to the baselines. Equation (26) defines accuracy, which measures the overall proportion of correctly classified instances, combining both true positives and true negatives over the total population. A higher accuracy indicates that more instances of both the normal and anomalous classes are classified correctly, although on imbalanced data it should be read together with recall and AUC.

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{26}$$

where $TP$, $TN$, $FP$, and $FN$ denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.

Equation (27) describes recall, which measures the ability of the model to identify actual anomalies from all anomalous samples present in the dataset. High recall indicates robustness against missed detections, which is particularly important in security applications.

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{27}$$

Equation (28) introduces AUC, which corresponds to the area under the receiver operating characteristic (ROC) curve and provides a threshold-independent evaluation of classification performance. A higher AUC value reflects better discrimination between normal and attack classes across all thresholds.

$$\mathrm{AUC} = \int_{0}^{1} \mathrm{ROC}(t)\, dt \tag{28}$$

where $\mathrm{ROC}(t)$ is the true positive rate attained at a false positive rate of $t$ as the decision threshold is varied.

Equation (29) defines RMSE, which is employed to track the training process by quantifying the square root of the mean squared deviation between predicted and observed labels. Lower RMSE values indicate a closer fit, and the rate at which RMSE decreases during training reflects convergence speed and stability, making it an important measure for evaluating optimization quality in the training phase.

$$\mathrm{RMSE} = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left(y_i - \hat{y}_i\right)^2} \tag{29}$$

where $y_i$ is the observed value, $\hat{y}_i$ is the calculated value, and $N$ is the number of samples.
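The four formula-based metrics can be computed directly with NumPy, as in the sketch below (helper names are hypothetical). For AUC it uses the equivalent Mann–Whitney form, the probability that a randomly chosen anomaly is scored above a randomly chosen normal sample with ties counted as one half, rather than integrating the ROC curve numerically; the pairwise comparison is O(n²) and meant for small illustrations. In practice, library routines such as scikit-learn’s `roc_auc_score` would normally be used.

```python
import numpy as np

def accuracy(y_true, y_pred):                       # Eq. (26)
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    return float(np.mean(y_true == y_pred))

def recall(y_true, y_pred):                         # Eq. (27)
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = np.sum((y_true == 1) & (y_pred == 1))
    fn = np.sum((y_true == 1) & (y_pred == 0))
    return float(tp / (tp + fn))

def auc(y_true, scores):                            # Eq. (28), Mann-Whitney form
    y_true, scores = np.asarray(y_true), np.asarray(scores, float)
    pos, neg = scores[y_true == 1], scores[y_true == 0]
    diff = pos[:, None] - neg[None, :]              # every (anomaly, normal) pair
    return float(np.mean((diff > 0) + 0.5 * (diff == 0)))

def rmse(y_obs, y_calc):                            # Eq. (29)
    y_obs, y_calc = np.asarray(y_obs, float), np.asarray(y_calc, float)
    return float(np.sqrt(np.mean((y_obs - y_calc) ** 2)))

y = [0, 0, 1, 1]                                    # toy labels (1 = anomaly)
s = [0.1, 0.4, 0.35, 0.8]                           # toy anomaly scores
print(auc(y, s))  # 0.75
```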

Beyond these formula-based metrics, execution time is measured to assess the computational cost of each algorithm, reflecting efficiency in large-scale deployment. Variance captures the stability of results across multiple runs, where lower variance denotes consistency and reliability in the model’s predictions. Finally, a t-test is conducted as a statistical significance test to verify that the improvements achieved by the model over the baselines are unlikely to be due to random variation. Before presenting the quantitative results, it is essential to emphasize the role of parameter tuning, since the effectiveness of deep models depends strongly on properly chosen hyper-parameters. The HTST-DBN benefits from the IOA, which adaptively optimizes parameters such as the learning rate and the number of attention heads. In contrast, the baseline models, including TST, BERT, DBN, DRL, CNN, and RF, were tuned using the conventional grid search method. This distinction highlights the advantage of IOA in dynamically balancing exploration and exploitation, yielding parameter settings that are more tailored to the data and the optimization landscape.
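The variance and t-test checks described above can be sketched with the Python standard library. The sketch assumes a paired design, in which both models are run on the same splits or seeds so that per-run differences can be tested; the text does not state which t-test variant was used, and the accuracy values below are hypothetical illustrations, not results from this study. The resulting statistic would be compared with the Student’s t critical value (about 2.776 for 4 degrees of freedom at the two-sided 5% level); `scipy.stats.ttest_rel` returns both the statistic and the p-value directly.

```python
import math
import statistics

def paired_t_statistic(a, b):
    """t statistic and degrees of freedom for paired per-run scores (a: proposed, b: baseline)."""
    d = [x - y for x, y in zip(a, b)]
    se = statistics.stdev(d) / math.sqrt(len(d))     # standard error of the mean difference
    return statistics.mean(d) / se, len(d) - 1

# Hypothetical per-run accuracies for illustration only (not results from this study)
a = [0.95, 0.96, 0.94, 0.97, 0.95]
b = [0.90, 0.92, 0.89, 0.93, 0.91]
print("variance of proposed runs:", statistics.variance(a))
t, df = paired_t_statistic(a, b)
print(f"t = {t:.2f} with {df} degrees of freedom")
```

Note that `statistics.stdev` needs at least two runs, and identical differences across all runs would give a zero standard error, so in practice several independent runs per model are required.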

As shown in Table 2, the configurations of all models are reported separately to ensure a fair and reproducible comparison. For the proposed HTST-DBN, hyper-parameter calibration was conducted through the IOA, which adaptively searched within predefined ranges for each parameter. The learning rate was searched within a predefined interval and optimized to 0.004, while the batch size was tuned over a predefined set of candidate values, converging to 32 as the most stable setting. The TST component was configured with 8 encoder layers and 8 attention heads, selected from search bounds of 4–10 layers and 4–16 heads, respectively. The DBN component comprised 4 hidden layers, each containing 32 neurons, optimized within 2–5 layers and 16–64 neurons per layer, using Tanh and Sigmoid activations to balance non-linear abstraction and stability. Dropout was set to 0.2, selected from a search range of 0.1–0.4, to limit overfitting without compromising generalization. For the IOA itself, the population size was optimized to 110 (search range 80–140) and the maximum number of iterations was set to 300 (range 200–400). The weighting coefficients α and β were dynamically adjusted within predefined ranges and settled at the values that yielded the most balanced trade-off between exploration and exploitation.
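To make the search space concrete, the sketch below maps a candidate position in the unit hypercube onto the architectural bounds quoted above. The linear mapping, rounding to integers, and the name `decode` are assumptions for illustration; the learning rate and batch size are left out because their search ranges are not given here.

```python
# Search bounds quoted in the text; learning rate and batch size are omitted here
SEARCH_SPACE = {
    "tst_layers": (4, 10, int),
    "tst_heads": (4, 16, int),
    "dbn_layers": (2, 5, int),
    "dbn_units": (16, 64, int),
    "dropout": (0.1, 0.4, float),
}

def decode(position):
    """Map a hypothetical IOA position in [0, 1]^5 to concrete hyper-parameters."""
    params = {}
    for u, (name, (lo, hi, kind)) in zip(position, SEARCH_SPACE.items()):
        u = min(max(float(u), 0.0), 1.0)          # keep the coordinate inside [0, 1]
        value = lo + u * (hi - lo)                # linear map onto the parameter range
        params[name] = int(round(value)) if kind is int else value
    return params

print(decode([2 / 3, 1 / 3, 2 / 3, 1 / 3, 1 / 3]))
```

The position in the usage line decodes to 8 encoder layers, 8 heads, 4 DBN layers of 32 units, and a dropout of about 0.2, i.e., the configuration reported above; the optimizer itself only ever manipulates the continuous coordinates.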

Table 2.

Hyper-parameter settings of the proposed HTST-DBN and baseline models.

BERT used a learning rate of 0.002, batch size 32, dropout 0.1, 6 self-attention heads, and 6 transformer encoder layers, with an input sequence window of 64 and the GELU activation function trained with SGD. DRL was configured with a learning rate of 0.002, discount factor of 0.95, ϵ-greedy value of 0.44, and batch size 64. CNN employed 8 convolution layers with kernel size 5 × 5, max pooling of 2 × 2, and 6 hidden layers with 64 neurons each. Finally, RF was set with 300 estimators, a minimum of 6 samples per split, and a maximum depth of 10, using Gini as the impurity criterion. These parameter values underline the structural and algorithmic diversity across the baselines, ensuring that the comparative results are based on competitive configurations for each model.

Table 3 shows the comparative performance of the proposed HTST-DBN model and the baseline algorithms across the four benchmark datasets, each evaluated using accuracy, recall, and AUC to provide a multi-perspective assessment of detection capability. HTST-DBN outperforms all baseline methods on every dataset. On the CICDDoS2019 dataset, HTST-DBN achieves 99.61% accuracy, 99.85% recall, and 99.93% AUC, exceeding the strongest baseline (TST, with 90.28% accuracy and 92.20% AUC) by 9.33 percentage points in accuracy and 7.73 percentage points in AUC. This margin is attributed to the hybrid design, in which TST captures the bursty sequential patterns of DDoS flows while DBN enhances robustness by abstracting noisy features. In contrast, CNN and RF lag substantially, with accuracies of 84.86% and 81.08%, highlighting the limitations of shallow or static architectures when facing high-volume attack traffic.

Table 3.

Comparative performance of the proposed HTST-DBN and baseline models.

For Edge-IoTset and 5G-NIDD, which are more complex due to heterogeneous IoT telemetry and 5G control-plane anomalies, HTST-DBN maintains outstanding performance, with accuracies of 99.58% and 99.52%, recalls above 99.7%, and AUC values close to 99.8%. Baselines such as BERT and TST show relatively strong results (around 88–90% accuracy), yet they fall short of the hybrid approach. This consistent improvement suggests that integrating the DBN with TST embeddings enables the model to generalize effectively in dynamic, edge-enabled, and cellular environments, while the IOA helps tune architecture depth, attention heads, and learning parameters to maximize detection capability across diverse data modalities.

The ToN-IoT dataset, the most challenging of the four due to its multi-source, noisy, and imbalanced nature, still shows the dominance of HTST-DBN, with 99.48% accuracy, 99.60% recall, and 99.86% AUC. In contrast, the best-performing baseline (TST) achieves only 87.42% accuracy and 89.33% AUC, while RF and CNN remain below 81% accuracy. This highlights how the hybrid model mitigates the difficulties of multi-source telemetry by leveraging both temporal dependencies and probabilistic abstractions.

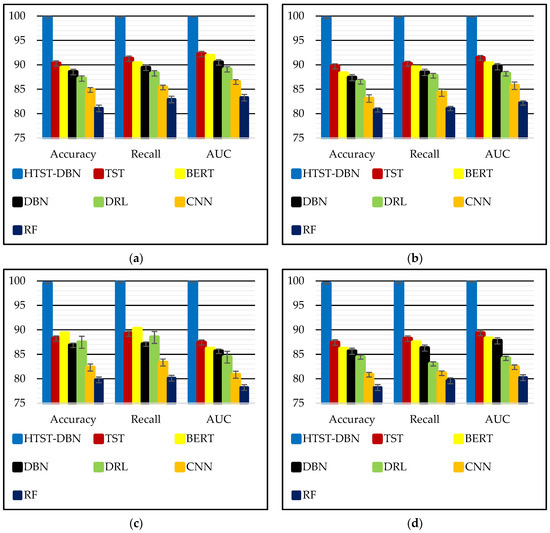

Figure 5 presents the results of Table 3 as bar charts. It shows that the proposed HTST-DBN consistently achieves the highest accuracy, recall, and AUC across all four datasets compared with the baseline models TST, BERT, DBN, DRL, CNN, and RF, and the visual format makes the margins of improvement in detection effectiveness and robustness easier to see. The results are consistent with the complementary roles of the three components: TST alone captures sequential dependencies but misses deeper abstraction; DBN alone captures latent features but lacks temporal sensitivity; and IOA jointly tunes depth, attention, and regularization. Their combination detects anomalies with near-perfect reliability, even in the noisy and heterogeneous ToN-IoT dataset, supporting the effectiveness of the proposed model for anomaly detection across multiple real-world scenarios.

Figure 5.

Bar chart visualization of accuracy, recall, and AUC across four datasets: (a) CICDDoS2019; (b) Edge-IoTset; (c) 5G-NIDD; (d) ToN-IoT.

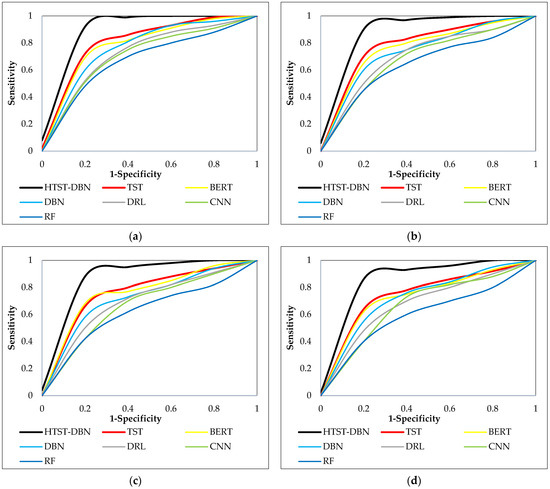

Figure 6 shows the ROC curves of the proposed HTST-DBN and the baseline models, plotting sensitivity (true positive rate) against 1 − specificity (false positive rate) as the decision threshold varies. In all four subplots, the HTST-DBN curve rises most steeply and lies closest to the top-left corner of the plot, indicating the strongest discriminative capability. In other words, HTST-DBN maintains a high detection rate while keeping false alarms low, a critical requirement for anomaly detection in communication networks.

Figure 6.

ROC curve comparisons across four benchmark datasets: (a) CICDDoS2019; (b) Edge-IoTset; (c) 5G-NIDD; (d) ToN-IoT.
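The ROC curves in Figure 6 are obtained by sweeping a decision threshold over each model's anomaly scores. A minimal sketch of that sweep follows, assuming each model outputs a continuous anomaly score where higher means more anomalous and that a sample is flagged when its score is at or above the threshold; the function name and toy scores are hypothetical. The quadratic loop favors clarity over speed.

```python
from typing import List, Sequence, Tuple


def roc_points(y_true: Sequence[int], scores: Sequence[float]) -> List[Tuple[float, float]]:
    """Return (FPR, TPR) pairs obtained by sweeping the threshold over every distinct score.

    Assumed convention: label 1 = anomaly (positive), 0 = normal (negative);
    a sample is flagged as anomalous when score >= threshold.
    """
    pos = sum(1 for y in y_true if y == 1)
    neg = len(y_true) - pos
    if pos == 0 or neg == 0:
        raise ValueError("ROC needs at least one positive and one negative sample")
    points = [(0.0, 0.0)]  # threshold above every score: nothing is flagged
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= thr and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= thr and y == 0)
        points.append((fp / neg, tp / pos))  # (1 - specificity, sensitivity)
    return points


y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.3, 0.1]
for fpr, tpr in roc_points(y_true, scores):
    print(f"FPR={fpr:.2f}  TPR={tpr:.2f}")
```

A curve that reaches a high TPR while the FPR is still near zero corresponds to the steep rise toward the top-left corner described for HTST-DBN.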

From a technical perspective, the shape of the HTST-DBN ROC curve is consistent with the combined effect of temporal encoding, probabilistic abstraction, and IOA-driven hyper-parameter optimization, which together yield a more robust decision boundary. In contrast, baselines such as RF and CNN exhibit flatter curves, reflecting weaker sensitivity–specificity trade-offs and greater susceptibility to misclassification as the threshold varies. Even strong models such as TST and BERT produce curves that bow less toward the top-left corner than that of HTST-DBN, underlining the latter’s advantage in threshold-independent evaluation. These results indicate that the proposed architecture improves not only point metrics such as accuracy and recall but also classification robustness across the full range of decision thresholds.
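The threshold-independent view can be made concrete: the area under the ROC curve equals the probability that a randomly chosen anomaly receives a higher score than a randomly chosen normal sample, with ties counted as one half. The sketch below computes AUC directly from that pairwise definition, assuming labels 1 = anomaly and 0 = normal and that higher scores mean more anomalous; the function name and data are hypothetical, and this is an illustration rather than the evaluation code behind the reported figures.

```python
from typing import Sequence


def auc_pairwise(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as P(score of a random anomaly > score of a random normal sample), ties count 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.3, 0.1]
print(auc_pairwise(y_true, scores))                        # 8 of 9 pairs ordered correctly
print(auc_pairwise(y_true, [10 * s + 5 for s in scores]))  # same value: only the ranking matters
```

Because only the ranking of scores matters, AUC summarizes performance over every possible threshold at once, which is why it complements point metrics such as accuracy and recall.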

Table 4 presents the comparative analysis of the proposed HTST-DBN framework against multiple architectural and optimization configurations, combining TST and DBN components with different meta-heuristic algorithms, including IOA, OA, PSO, GA, and chimp optimization algorithm (ChOA). This design enables a systematic evaluation of both architectural contributions and optimizer effects. By contrasting the IOA-based model with traditional optimization techniques, the table provides a holistic perspective on how search strategies influence convergence quality and classification performance across datasets.

Table 4.

Comparative analysis of different architectural combinations and optimization strategies.

Quantitatively, IOA delivers the most consistent results, with accuracies above 99.4% and AUC values of roughly 99.8–99.9% on all datasets. For instance, on CICDDoS2019, IOA achieves 99.61% accuracy, 99.85% recall, and 99.93% AUC, outperforming PSO (96.08% accuracy), GA (95.36%), OA (96.75%), and ChOA (95.86%). These results are consistent with IOA’s improved exploration–exploitation balance, which stems from its adaptive migration probability and seasonal learning operator; these mechanisms accelerate convergence and help prevent premature stagnation. By comparison, the standard OA lacks dynamic parameter adaptation and therefore converges more slowly, while ChOA improves exploration through multi-group communication but exploits less consistently than IOA. Classical PSO and GA converge at moderate speed but struggle to maintain solution diversity, which limits their ability to escape local minima.
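IOA's actual update rules (its adaptive migration probability and seasonal learning operator) are defined in the methodology and are not reproduced here. Purely to illustrate the general idea of an adaptive exploration–exploitation schedule, the sketch below uses a generic population search in which the probability of a random relocation (exploration) decays linearly over iterations, while the remaining moves pull candidates toward the best solution found so far (exploitation). All names, the linear schedule, and the p_max/p_min values are hypothetical; this is neither IOA nor the original OA.

```python
import random
from typing import Callable, List, Sequence, Tuple


def migration_probability(t: int, t_max: int, p_max: float = 0.5, p_min: float = 0.05) -> float:
    """Hypothetical schedule: chance of an exploratory move decays linearly from p_max to p_min."""
    return p_max - (p_max - p_min) * t / t_max


def toy_adaptive_search(
    f: Callable[[Sequence[float]], float],
    dim: int,
    bounds: Tuple[float, float],
    pop_size: int = 20,
    t_max: int = 200,
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Minimize f with a generic population search (illustrative only, not IOA)."""
    rng = random.Random(seed)
    lo, hi = bounds
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(pop_size)]
    best = min(pop, key=f)
    for t in range(t_max):
        p_mig = migration_probability(t, t_max)
        new_pop = []
        for x in pop:
            if rng.random() < p_mig:
                # Exploration: relocate to a uniformly random point in the search space.
                cand = [rng.uniform(lo, hi) for _ in range(dim)]
            else:
                # Exploitation: move part of the way toward the best solution, plus small noise.
                cand = [
                    xi + rng.random() * (bi - xi) + rng.gauss(0.0, 0.01 * (hi - lo))
                    for xi, bi in zip(x, best)
                ]
                cand = [min(max(c, lo), hi) for c in cand]  # clip to the bounds
            new_pop.append(cand if f(cand) < f(x) else x)  # greedy replacement
        pop = new_pop
        best = min(pop + [best], key=f)
    return best, f(best)


def sphere(x: Sequence[float]) -> float:
    return sum(v * v for v in x)


best, value = toy_adaptive_search(sphere, dim=3, bounds=(-5.0, 5.0))
print(round(value, 4))
```

Early on, about half of the moves are random relocations that keep the population diverse; by the final iterations about 95% of moves refine the current best, a simple stand-in for the controlled shift from exploration to exploitation attributed to IOA above.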

Furthermore, partial configurations such as TST-DBN and IOA-DBN perform better than single-component models but remain inferior to the full HTST-DBN configuration. For instance, TST-DBN attains 92.19% accuracy on CICDDoS2019 and 89.15% on ToN-IoT, while IOA-DBN slightly improves on these figures with 93.20% and 90.04%, respectively. These findings indicate that both the architectural integration and the optimization mechanism are essential. The combination of TST’s temporal feature extraction, DBN’s deep abstraction, and IOA’s adaptive optimization enables the proposed HTST-DBN to achieve robust and scalable anomaly detection performance across diverse network scenarios.

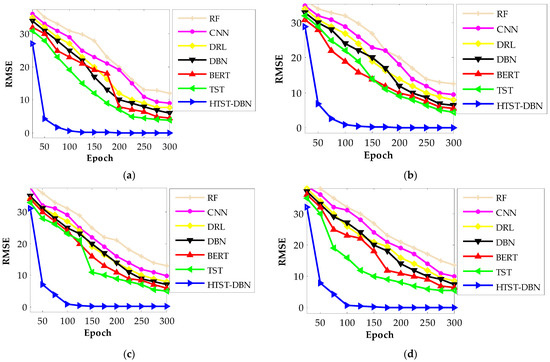

Figure 7 shows the evolution of RMSE over training epochs, illustrating how quickly and effectively each model converges. HTST-DBN converges fastest on every dataset: its RMSE drops sharply within the first 40–60 epochs and approaches zero well before epoch 100. In contrast, other deep models such as TST, BERT, and DBN require over 150 epochs to stabilize and settle at higher RMSE values, while CNN and RF converge much more slowly and remain at substantially higher error levels. This demonstrates that the hybrid framework learns robust patterns without prolonged training cycles.

The IOA-driven optimization also appears to reduce oscillations in the learning curves. Whereas models such as DRL and CNN exhibit fluctuating RMSE values during early epochs, HTST-DBN follows a smooth downward trajectory, reflecting more stable gradient updates. This stability is particularly important for large-scale datasets such as CICDDoS2019 and ToN-IoT, where noisy and imbalanced data can otherwise destabilize training.

Figure 7.

Training convergence curves of RMSE across four datasets: (a) CICDDoS2019; (b) Edge-IoTset; (c) 5G-NIDD; (d) ToN-IoT.

A closer look across datasets reveals consistent trends. On CICDDoS2019 and Edge-IoTset, HTST-DBN fully converges by approximately epoch 70, whereas baselines such as TST and DBN flatten only around epoch 200. On 5G-NIDD and ToN-IoT, which are inherently more complex and noisy, HTST-DBN still converges by epoch 90, while the baselines need nearly the entire 300-epoch span to stabilize. This accelerated convergence highlights the effectiveness of IOA-driven parameter tuning combined with the complementary strengths of TST and DBN, allowing the model to reach minimal training error in far fewer epochs.
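Statements such as "converges by epoch 70" depend on how convergence is read off an RMSE curve, and the criterion is not specified in this section. One plausible criterion, offered here as an assumption, is the first epoch after which RMSE never again deviates from its final value by more than a tolerance `tol`. The sketch below implements RMSE and this hypothetical rule; the function names, the tolerance, and the toy curve are illustrative only.

```python
import math
from typing import Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root-mean-square error between targets and predictions."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def convergence_epoch(curve: Sequence[float], tol: float) -> int:
    """Hypothetical rule: first epoch (1-based) from which every later RMSE
    stays within `tol` of the final RMSE value."""
    if not curve:
        raise ValueError("curve must contain at least one epoch")
    final = curve[-1]
    epoch = len(curve)
    # Walk backwards until the curve leaves the tolerance band around its final value.
    for i in range(len(curve) - 1, -1, -1):
        if abs(curve[i] - final) > tol:
            break
        epoch = i + 1
    return epoch


print(rmse([0, 0, 1, 1], [0, 1, 1, 0]))  # sqrt(0.5), about 0.7071
print(convergence_epoch([1.0, 0.5, 0.2, 0.11, 0.1, 0.1], tol=0.02))  # 4
```

A different tolerance, or a rule based on a moving average, would shift the reported epoch, so any such criterion should be fixed before comparing models.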

Table 5 compares the proposed HTST-DBN framework with several recent architectures drawn from the studies discussed in the related-work section. For fairness and consistency, these models (ABCNN [30], DRL-RBFNN [34], OS-CNN [41], DeepTransIDS [36], DNN [43], and RF-KNN [38]) were re-implemented and trained on the same benchmark datasets (CICDDoS2019, Edge-IoTset, 5G-NIDD, and ToN-IoT) under identical preprocessing and experimental conditions. The reported metrics are therefore results from our own experimental framework rather than those published in the original studies, providing a direct, like-for-like assessment of each model’s generalization capability.

Across all four datasets, HTST-DBN achieves the best accuracy, recall, and AUC, confirming its superior detection capability and robustness. On the challenging CICDDoS2019 dataset, it reaches 99.61% accuracy and 99.93% AUC, exceeding the next-best models, ABCNN and DRL-RBFNN, by more than 6 percentage points. A similar trend holds for Edge-IoTset, where HTST-DBN attains 99.58% accuracy and 99.82% AUC, outperforming DeepTransIDS by roughly 9 percentage points, which highlights its resilience to heterogeneous IoT telemetry. On 5G-NIDD, the proposed model attains 99.52% accuracy and 99.78% AUC, showing effective adaptation to control-plane traffic dominated by short signaling sequences. Even on the highly imbalanced ToN-IoT dataset, HTST-DBN achieves 99.48% accuracy and 99.86% AUC, clearly ahead of all baselines.

Table 5.

Comparative performance of the proposed HTST-DBN and recent models implemented based on related works.

4. Discussion

In the Results section, the superiority of the proposed HTST-DBN framework was demonstrated through the analysis of core evaluation metrics, including accuracy, recall, AUC, and training convergence behavior. The model consistently outperformed baseline approaches across all benchmark datasets, showing not only higher detection rates but also faster and more stable convergence. These findings underline the strength of combining temporal feature extraction through TST, deep generative abstraction with DBN, and IOA-driven hyper-parameter optimization. Together, these elements enabled the model to achieve robust classification performance across heterogeneous and noisy environments, validating its effectiveness as an anomaly detection solution for next-generation networks.

While these metrics highlight the predictive power of the proposed architecture, real-world applicability also depends on additional aspects: variance across runs, statistical reliability assessed through t-tests, and execution time. Examining variance across multiple training sessions assesses the stability and reproducibility of the model and checks that the results are not overly sensitive to initialization. The t-test provides statistical confirmation that improvements over the baselines are significant rather than random fluctuations. Moreover, execution time is a practical consideration for deployment in time-sensitive environments such as mobile edge or IoT networks. Analyzing these complementary dimensions shows whether HTST-DBN is not only accurate but also efficient, stable, and scalable for real-world applications.

Table 6 reports the robustness of HTST-DBN and the baseline models, measured as the variance of results over 30 independent runs. HTST-DBN achieves remarkably low variance on all datasets, ranging from 0.00035 on CICDDoS2019 to 0.00072 on ToN-IoT, several orders of magnitude smaller than that of competing methods. In contrast, traditional baselines such as RF and CNN show much higher variance, exceeding 9.41 and 6.02, respectively, on CICDDoS2019 and surpassing 10.79 and 7.84 on ToN-IoT. Even stronger baselines such as TST and BERT record variances above 2.1 and 2.8, indicating that although they reach reasonable accuracy, their training outcomes remain unstable. The consistency of HTST-DBN can be attributed to the synergy between the TST and DBN architectures and to IOA-driven hyper-parameter optimization, which together reduce the sensitivity of training to random initialization. Such stability is crucial for real-world applications, particularly in IoT and 5G/6G scenarios where reproducibility and reliability are as important as accuracy. The extremely low variance values indicate that HTST-DBN is not only more accurate but also more dependable, making it a strong candidate for deployment in future intelligent network monitoring systems and mission-critical anomaly detection tasks.

Table 6.

Variance of different models across 30 independent runs.
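The variance figures in Table 6 summarize the spread of a metric over repeated runs. A minimal sketch of that summary follows, assuming the metric is per-run accuracy in percent and that sample variance (n − 1 denominator) is used; neither the metric nor the estimator is specified in this section, and the five toy values are illustrative rather than taken from the experiments.

```python
import statistics
from typing import Dict, Sequence


def run_spread(scores: Sequence[float]) -> Dict[str, float]:
    """Summarize per-run scores: mean, sample variance (n - 1), and standard deviation."""
    if len(scores) < 2:
        raise ValueError("need at least two runs to estimate variance")
    var = statistics.variance(scores)  # sample variance
    return {"mean": statistics.mean(scores), "variance": var, "std": var ** 0.5}


toy_accuracies = [99.60, 99.62, 99.61, 99.59, 99.63]  # illustrative values only
print(run_spread(toy_accuracies))
```

Because variance is in squared units, if the metric is accuracy in percent, a variance of 9.41 corresponds to a standard deviation of about 3.07 percentage points, whereas 0.00035 corresponds to about 0.019.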

Table 7 shows the results of statistical t-tests between HTST-DBN and each baseline model across all datasets. Every p-value is well below the 0.01 significance level, so each observed performance difference is statistically significant, and the “Results” column accordingly reports “Significant” throughout. This indicates that the improvements achieved by HTST-DBN are not due to random variation and that the superior accuracy, recall, and AUC reported in earlier tables are statistically reliable across multiple datasets. Even on challenging datasets such as ToN-IoT and 5G-NIDD, the p-values are 0.0002 or smaller, indicating high confidence in HTST-DBN’s advantage over the baselines. By passing significance testing at the 0.01 level on all four datasets, HTST-DBN is shown to be consistently better than existing models in a statistically meaningful sense, further supporting its potential for anomaly detection in next-generation communication networks.

Table 7.

Statistical t-test results comparing HTST-DBN with others at a 0.01 significance level.
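The section does not state which t-test variant was applied. As one common option, offered as an assumption, the sketch below computes Welch's two-sample t statistic and its Welch–Satterthwaite degrees of freedom from the per-run scores of two models; the function name and toy data are hypothetical. A p-value can then be obtained from the t distribution, for example with SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)`.

```python
import math
import statistics
from typing import Sequence, Tuple


def welch_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two samples."""
    na, nb = len(a), len(b)
    if na < 2 or nb < 2:
        raise ValueError("each sample needs at least two observations")
    va, vb = statistics.variance(a) / na, statistics.variance(b) / nb  # squared standard errors
    se2 = va + vb
    if se2 == 0:
        raise ValueError("both samples have zero variance; t is undefined")
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(se2)
    df = se2 ** 2 / (va ** 2 / (na - 1) + vb ** 2 / (nb - 1))
    return t, df


t, df = welch_t([1, 2, 3, 4, 5], [2, 3, 4, 5, 6])
print(t, df)  # -1.0 8.0
```

Welch's variant does not assume equal variances, which matters here because the baselines' run-to-run variances are orders of magnitude larger than those of HTST-DBN.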

Table 8 shows the execution time each model requires to reach successively stricter RMSE-based training termination thresholds on CICDDoS2019. This experiment measures computational efficiency by recording how quickly each model attains increasingly strict error levels during training, which gives practical insight into scalability in real-world deployments where both accuracy and computational cost are critical. HTST-DBN shows a clear efficiency advantage, requiring only 42 s to reach RMSE < 15 and 309 s to reach RMSE < 3, a threshold that no baseline model reached. By contrast, TST and BERT needed 849 s and 962 s, respectively, just to reach RMSE < 5; CNN and DBN converged much more slowly; and RF failed to meet the stricter thresholds. These outcomes show that the hybrid integration of TST and DBN, coupled with IOA optimization, not only improves accuracy and stability but also speeds up convergence, substantially reducing computational overhead relative to the other deep learning and classical methods.

Table 8.

Comparison of runtime for CICDDoS2019 under different RMSE-based stopping criteria.
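The stopping-criterion experiment can be reproduced from a training log that records elapsed wall-clock time and RMSE at each checkpoint. The sketch below, assuming such a chronological log of (seconds, RMSE) pairs, returns the first time each threshold is crossed, or None when it is never reached (as with the stricter thresholds for several baselines). The log format, function name, and values are hypothetical.

```python
from typing import Dict, Optional, Sequence, Tuple


def time_to_thresholds(
    log: Sequence[Tuple[float, float]], thresholds: Sequence[float]
) -> Dict[float, Optional[float]]:
    """Map each RMSE threshold to the first logged elapsed time with RMSE < threshold.

    `log` is assumed to be (elapsed_seconds, rmse) pairs in chronological order;
    None means the threshold was never reached during training.
    """
    return {thr: next((t for t, r in log if r < thr), None) for thr in thresholds}


toy_log = [(5.0, 20.0), (30.0, 12.0), (90.0, 6.0), (250.0, 2.0)]  # illustrative values only
print(time_to_thresholds(toy_log, [15, 10, 5, 3, 1]))
```

Checkpoint granularity bounds the precision of the reported times: a threshold crossed between two checkpoints is attributed to the later one.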

Table 9 presents the inference latency of HTST-DBN and the baseline models, measured in milliseconds per sample. Each value is the average time a fully trained model takes to infer a single test instance on a standard CPU setup that emulates edge-level computational resources. Lower latency means faster real-time inference and therefore higher suitability for on-device deployment in IoT or edge networks. Across all datasets, HTST-DBN maintains an average latency between 6.5 ms and 8.7 ms, below the 10 ms threshold typically considered acceptable for real-time IoT detection. Its latency is slightly higher than that of lighter models such as TST and RF but remains lower than that of heavier architectures such as BERT and DRL, which require between 7.1 ms and 9.6 ms on average. These results indicate that the hybrid integration of TST and DBN offers a favorable balance between computational complexity and inference efficiency, delivering high detection accuracy without excessive runtime cost.

More broadly, the latency differences across datasets reflect the varying input dimensionality and sequence lengths of the respective benchmarks. For instance, CICDDoS2019 and ToN-IoT, which both contain longer traffic flows, yield slightly longer inference times, whereas 5G-NIDD yields the lowest latency owing to its compact signaling structure.

Table 9.

Average inference latency (in milliseconds) of all benchmarked models across four datasets.
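Per-sample latency of the kind reported in Table 9 can be measured by timing single-instance predictions after a short warm-up. The sketch below assumes the trained model is exposed as a plain Python callable that takes one sample; the names, warm-up count, and dummy model are hypothetical, and a faithful measurement would ideally also fix the CPU configuration and repeat the trial several times.

```python
import time
from typing import Callable, Sequence, TypeVar

S = TypeVar("S")


def mean_latency_ms(predict: Callable[[S], object], samples: Sequence[S], warmup: int = 10) -> float:
    """Average wall-clock time in milliseconds to run `predict` on one sample at a time."""
    if not samples:
        raise ValueError("need at least one sample to time")
    for s in samples[:warmup]:  # warm-up calls are excluded from the measurement
        predict(s)
    start = time.perf_counter()
    for s in samples:
        predict(s)
    elapsed = time.perf_counter() - start
    return elapsed * 1000.0 / len(samples)


def dummy_model(x: Sequence[float]) -> int:
    """Stand-in for a trained detector: flags a sample whose feature sum exceeds 1."""
    return int(sum(x) > 1.0)


print(f"{mean_latency_ms(dummy_model, [[0.2, 0.5, 0.9]] * 1000):.4f} ms per sample")
```

Timing one sample per call, rather than a batch, matches the per-instance definition used for Table 9, and `time.perf_counter` is used because it is a monotonic, high-resolution clock.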