Prototype-Enhanced Few-Shot Relation Extraction Method Based on Cluster Loss Optimization

Abstract

1. Introduction

- Introducing relation information to assist model learning. For instance, TD-proto [11] combines entity and relation description information to help the model extract key information from sentences more accurately; CTEG [12] uses an entity-focused attention module and a confusion-aware learning approach to strengthen the model’s ability to identify true relations while avoiding confusion.

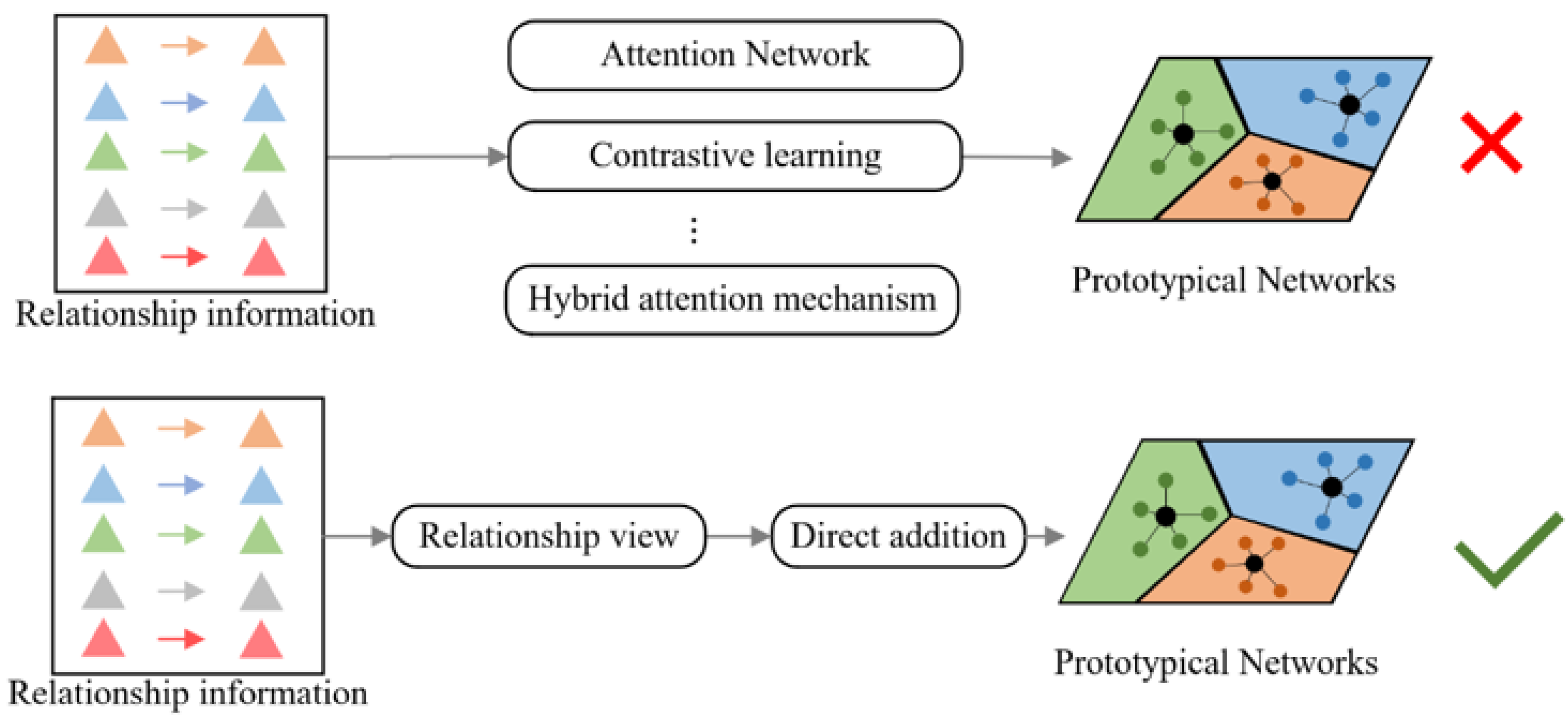

- Optimizing the representation of category prototypes, with the aim of reducing intra-class distances and enlarging inter-class differences to improve classification accuracy. For instance, Han et al. [13] proposed the Hybrid Contrastive Relation Prototype (HCRP) method, which uses relation description information as anchors to pull similar prototypes closer and push different prototypes farther apart in the representation space; Dong et al. [14] designed MapRE, a semantic mapping framework based on label-aware and label-agnostic semantic information, which improves the generalization performance of the model by combining sample-to-sample and sample-to-label similarities; Sun et al. [15] proposed a hierarchical attention prototype network that combines multi-channel convolutional feature extraction with adversarial sample generation to further optimize the model’s feature representation.

- High computational cost [16]: Traditional approaches typically rely on complex network architectures that introduce a substantial number of additional parameters. This increases computational overhead and may also compromise the overall effectiveness of the model.

- Deficiencies in hard sample mining methods [19]: Existing hard sample mining methods primarily focus on the relative differences between positive and negative samples, lacking the capability to effectively address anomalous instances or semantically complex samples.

- (1)

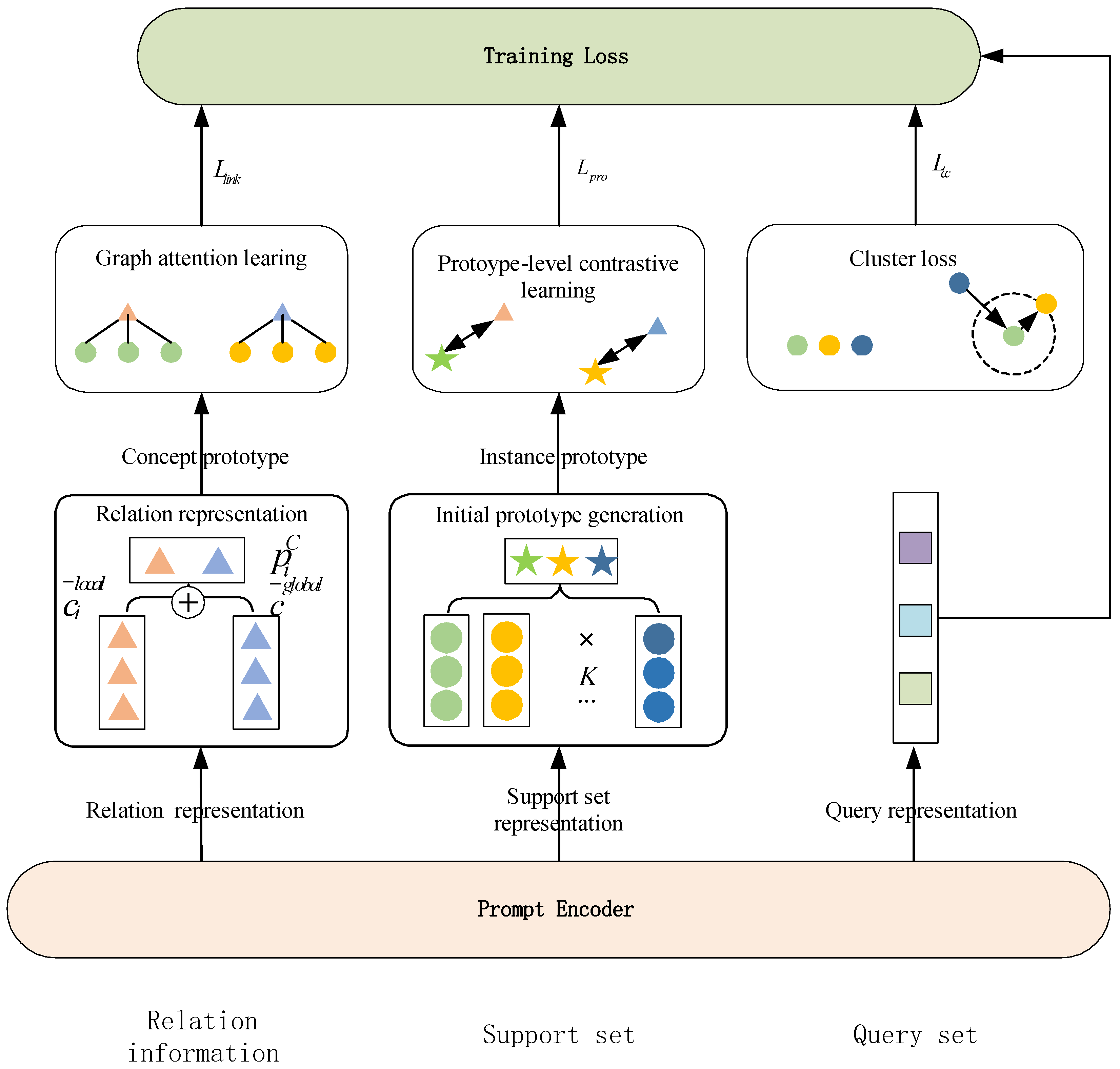

- We design a prompt encoder that can structurally encode different prompt templates while maintaining processing symmetry for instance and relationship information. Meanwhile, these encoded prompts are input into a Large Language Model (LLM), which utilizes its powerful semantic understanding capabilities to obtain high-quality representations of instances, instance prototypes, and conceptual prototypes;

- (2) We adopt a graph attention mechanism to model the association between conceptual prototypes and isomorphic instances, and use the proposed bidirectionally symmetric prototype-level contrastive learning strategy to fuse the interpretable features of conceptual prototypes with the intra-class common features of instance prototypes, forming an enhanced relation representation;

- (3)

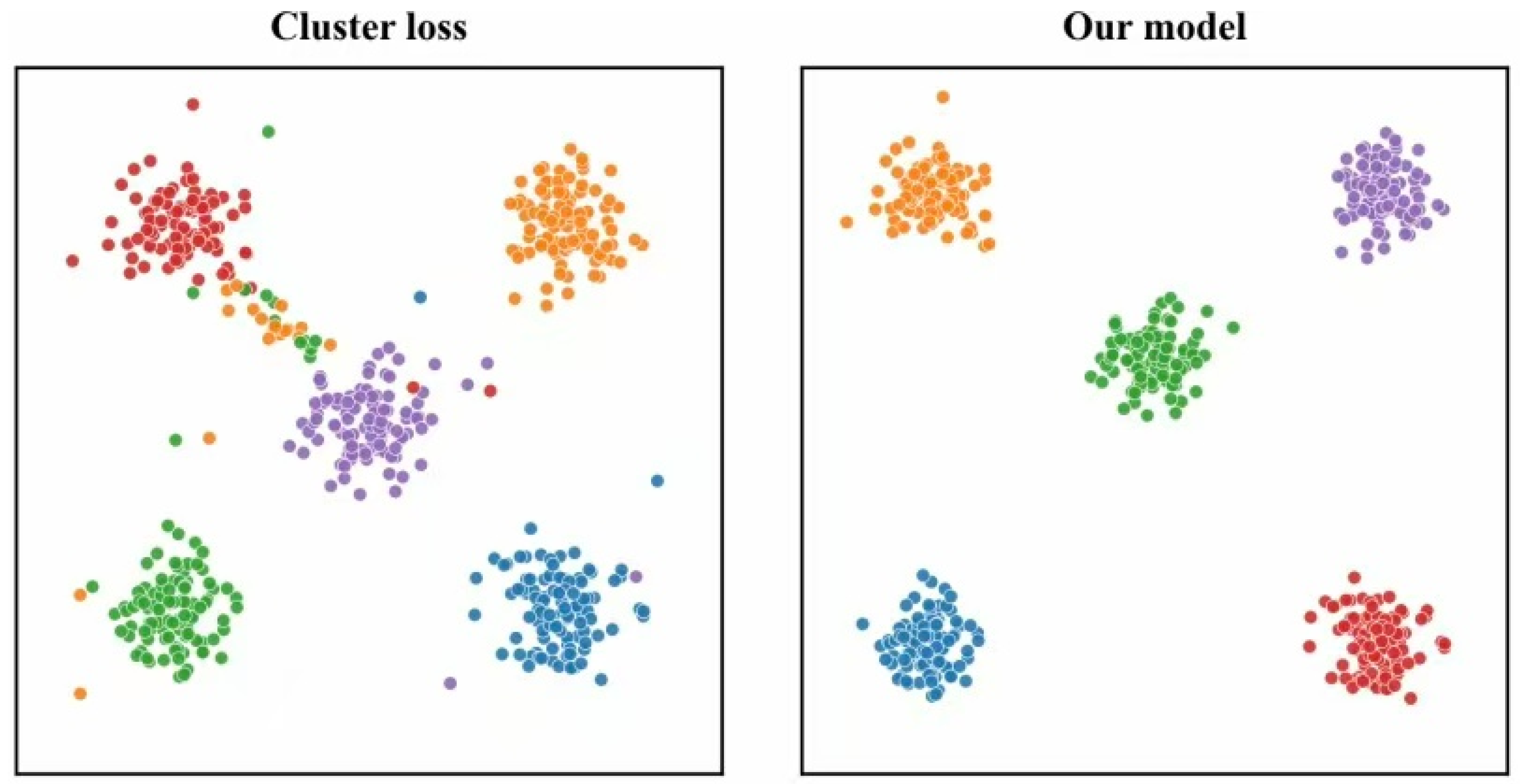

- We designed a clustering loss function to enable the model to learn a distinguishable metric space with improved class symmetry, ensuring that samples of the same class are highly aggregated and samples of different classes are significantly separated.

- (4) Experimental results on the FewRel1.0 and FewRel2.0 datasets show that the proposed model outperforms existing advanced models on the few-shot relation extraction task.
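To make the goal of contribution (3) concrete, the following is a minimal sketch of a loss that rewards intra-class compactness and inter-class separation. It is an illustrative formulation under stated assumptions, not the loss defined in this paper: the function names, the squared-distance compactness term, the hinge on centroid distance, and the `margin` parameter are all hypothetical choices.

```python
import math
from collections import defaultdict


def centroid(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cluster_loss(embeddings, labels, margin=1.0):
    """Hypothetical cluster loss: compactness term plus separation term.

    Assumption: this is NOT the paper's formula, only a generic example
    of the intra-class / inter-class objective described in the text.
    """
    by_class = defaultdict(list)
    for vec, lab in zip(embeddings, labels):
        by_class[lab].append(vec)
    centers = {lab: centroid(vecs) for lab, vecs in by_class.items()}

    # Compactness: mean squared distance of each sample to its class center.
    intra = sum(sq_dist(v, centers[l]) for v, l in zip(embeddings, labels))
    intra /= len(embeddings)

    # Separation: squared hinge penalty when two centers are closer than margin.
    labs = sorted(centers)
    pairs = [(a, b) for i, a in enumerate(labs) for b in labs[i + 1:]]
    inter = 0.0
    for a, b in pairs:
        d = math.sqrt(sq_dist(centers[a], centers[b]))
        inter += max(0.0, margin - d) ** 2
    if pairs:
        inter /= len(pairs)
    return intra + inter
```

With two well-separated toy classes the loss is small, while interleaving the labels so that both class centers coincide makes both terms grow, which is the behaviour the clustering objective is meant to penalize.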

2. Materials and Methods

2.1. Relation Extraction

2.2. Few-Shot Relation Extraction

2.3. Cluster Loss Function

3. Methodology

3.1. Problem Formulation

3.2. Model Overview

3.3. Prompt Encoder

3.4. Graph Attention Learning Based on Relation Graph

3.5. Prototype-Level Contrastive Learning

3.6. Cluster Loss Based on Support Set

3.7. Training

4. Experiments

4.1. Datasets

4.2. Parameter Settings

4.3. Baseline Methods

5. Results and Discussion

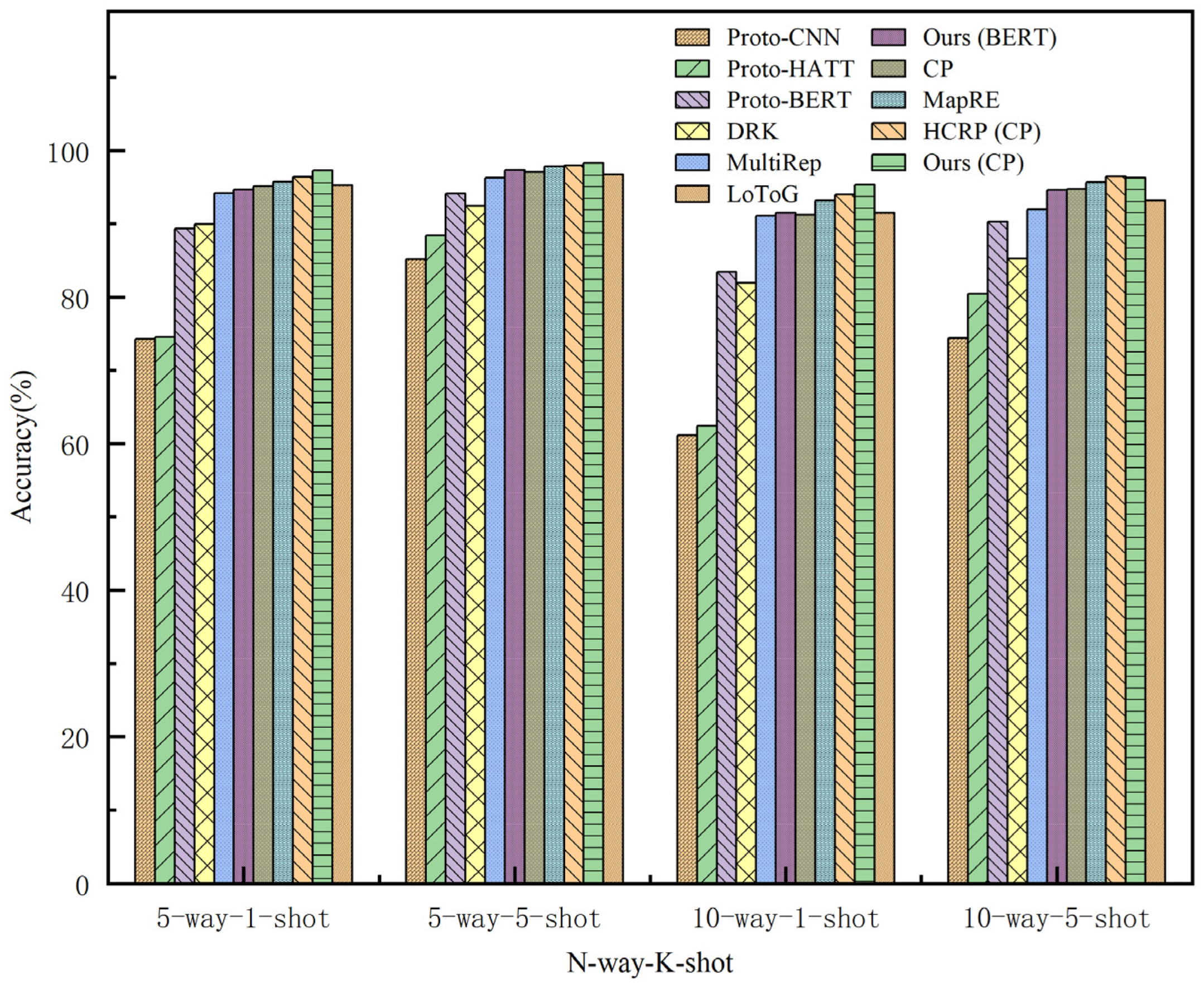

5.1. Comparison with Other Baseline Models

- (1) With a BERT encoder, the proposed model outperforms the CNN-based encoder models, demonstrating stronger competitiveness. The BERT encoder captures and expresses complex semantic information more effectively in few-shot learning tasks, which significantly improves the model’s generalization capability and overall performance.

- (2) With BERT as the pre-trained model, the proposed approach, Ours (BERT), surpasses existing mainstream methods. As shown in Table 3, it improves on the second-best model (MultiRep) by 0.45%, 1.06%, 0.39%, and 2.60% under the four few-shot settings. While existing approaches often rely on more complex network architectures and implementations, Ours (BERT) achieves these gains while maintaining a relatively concise model structure, which further supports the effectiveness and efficiency of our approach.

- (3) With CP as the pre-trained model, the proposed model outperforms the state-of-the-art HCRP (CP) model in the two 1-shot settings (5-way-1-shot and 10-way-1-shot), with improvements of 0.83% and 1.38%, respectively.
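The margins quoted in this list can be recomputed directly from the test accuracies in the FewRel1.0 results table. The short script below is only a bookkeeping aid; the dictionary keys and the helper name `margins` are illustrative.

```python
# Test accuracies (%) copied from the FewRel1.0 results table.
SETTINGS = ["5-way-1-shot", "5-way-5-shot", "10-way-1-shot", "10-way-5-shot"]
TEST_ACC = {
    "MultiRep": [94.18, 96.29, 91.07, 91.98],
    "Ours (BERT)": [94.63, 97.35, 91.46, 94.58],
    "HCRP (CP)": [96.42, 97.96, 93.97, 96.46],
    "Ours (CP)": [97.25, 98.26, 95.35, 96.27],
}


def margins(model, baseline):
    """Per-setting accuracy difference (model minus baseline), in points."""
    return {
        s: round(a - b, 2)
        for s, a, b in zip(SETTINGS, TEST_ACC[model], TEST_ACC[baseline])
    }


bert_gain = margins("Ours (BERT)", "MultiRep")
cp_gain = margins("Ours (CP)", "HCRP (CP)")

for setting in SETTINGS:
    print(f"{setting}: BERT {bert_gain[setting]:+.2f}, CP {cp_gain[setting]:+.2f}")
```

The BERT margins reproduce the four figures given in item (2), and the two 1-shot CP margins reproduce the 0.83 and 1.38 points given in item (3).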

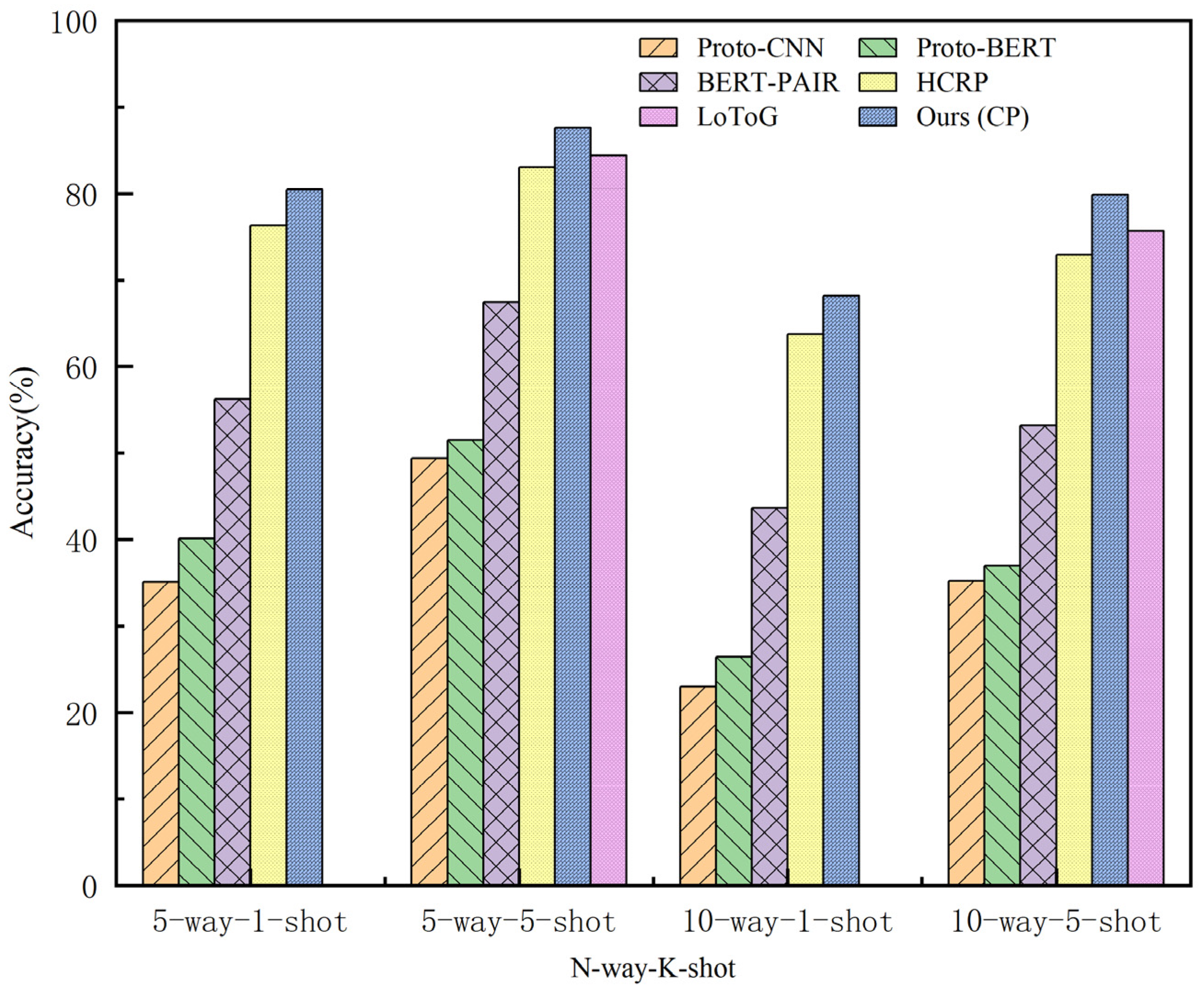

5.2. Result Analysis of Domain Adaptation

- (1) Our model significantly outperforms standard methods such as Proto-BERT and BERT-PAIR. Under the various N-way-K-shot settings, it achieves an accuracy improvement of at least 11%, with an average improvement of 13% across all settings. This demonstrates that introducing external information effectively enhances few-shot learning performance in domain adaptation scenarios.

- (2) Our model surpasses typical external-information methods (HCRP, LoToG). Specifically, it outperforms HCRP by at least 4% in accuracy across the N-way-K-shot settings, demonstrating that integrating relation information in the feature space is more effective than direct incorporation. It also exceeds LoToG by at least 3%, highlighting the importance of enhancing instance and prototype representations through the complementarity of relation-specific features and intra-class common features.
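The N-way-K-shot settings referred to above describe how each evaluation episode is built: N relation classes are drawn, K labelled support instances are provided per class, and further instances of the same classes serve as queries. A minimal sketch of such an episode sampler follows; the function name, the `n_query` parameter, and the toy data layout are assumptions for illustration, not the evaluation code used in the paper.

```python
import random


def sample_episode(data, n_way, k_shot, n_query, rng):
    """Draw one N-way-K-shot episode.

    data: dict mapping relation name -> list of instances (hypothetical layout).
    Returns (support, query), each a list of (instance, relation) pairs.
    """
    relations = rng.sample(sorted(data), n_way)
    support, query = [], []
    for rel in relations:
        # Sample without replacement so support and query never overlap.
        picked = rng.sample(data[rel], k_shot + n_query)
        support += [(x, rel) for x in picked[:k_shot]]
        query += [(x, rel) for x in picked[k_shot:]]
    return support, query


# Toy corpus: 8 relations with 10 placeholder sentences each.
toy = {f"rel{i}": [f"rel{i}-sent{j}" for j in range(10)] for i in range(8)}
support, query = sample_episode(toy, n_way=5, k_shot=1, n_query=2, rng=random.Random(0))
print(len(support), len(query))
```

Accuracy under a given setting is then the fraction of query instances assigned to the correct one of the N sampled relations, averaged over many episodes.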

5.3. Ablation Experiment

- (1) “w/o relation information” denotes the variant that removes the direct relation information used to enhance the model’s representation capability and relies only on the basic prototype network structure;

- (2) “w/o ” denotes the variant that removes the link prediction loss, which quantifies the internal tightness of each relation-specific type (that is, the graph attention learning part is removed);

- (3) “w/o ” denotes the variant that removes the prototype-level contrastive loss, which enhances the distinguishability between the prototypes of different relation classes (that is, the prototype-level contrastive learning part is removed);

- (4) “w/o ” denotes the variant that removes the clustering loss, which imposes clear and strict constraints on intra-class compactness and inter-class separability (that is, the cluster learning part is removed).
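One way to picture these variants is as switches on the terms of a combined training objective. The sketch below is a hypothetical configuration, not the authors’ training code: the class `LossConfig`, the variant labels for the three loss ablations, and the unweighted sum in `total_loss` are all assumptions made for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class LossConfig:
    """Switches for the components named in the ablation list (hypothetical)."""
    use_relation_info: bool = True   # affects the encoder input, not the loss sum
    link_prediction: bool = True     # graph attention learning
    proto_contrastive: bool = True   # prototype-level contrastive learning
    cluster: bool = True             # cluster learning


FULL = LossConfig()
VARIANTS = {
    "full model": FULL,
    "w/o relation information": replace(FULL, use_relation_info=False),
    "w/o link prediction loss": replace(FULL, link_prediction=False),
    "w/o prototype-level contrastive loss": replace(FULL, proto_contrastive=False),
    "w/o cluster loss": replace(FULL, cluster=False),
}


def total_loss(cfg, ce, link, contrastive, cluster):
    """Classification loss plus the enabled auxiliary terms.

    Assumption: terms are simply added with equal weight.
    """
    loss = ce
    if cfg.link_prediction:
        loss += link
    if cfg.proto_contrastive:
        loss += contrastive
    if cfg.cluster:
        loss += cluster
    return loss


for name, cfg in VARIANTS.items():
    print(name, total_loss(cfg, ce=1.0, link=0.5, contrastive=0.25, cluster=0.125))
```

Each ablation run then differs from the full model in exactly one switch, which is what allows the accuracy change to be attributed to that component.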

- (1) Integrating relation information is critical for enhancing prototype representations. When it is excluded (that is, “w/o relation information”), model accuracy drops by 1.78% and 1.55% under the two few-shot learning task configurations. This shows that relation information plays a key role in the representation ability of the model; without it, the model cannot effectively capture the dependencies between global and local features, which limits its performance.

- (2) The proposed constraints, particularly those emphasizing intra-class compactness and inter-class separability, allow the model to obtain better results than the conventional cross-entropy loss approach. Specifically, under the two few-shot learning task configurations, accuracy decreases by 1.43% and 2.20% when both the prototype-layer contrastive loss and cluster loss are excluded (that is, “w/o ”), by 1.81% and 2.71% when both the link prediction loss and cluster loss are excluded (that is, “w/o ”), and by 1.15% and 2.38% when both the link prediction loss and prototype-layer contrastive loss are excluded (that is, “w/o ”), which indicates the importance of each loss term.
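The accuracy drops quoted above, together with the training time each variant saves, can be tabulated from the ablation table. In the sketch below the three loss-ablation rows are labelled only by their position in that table, since their symbols are not legible here; the helper name `summarize` and the dictionary keys are illustrative.

```python
# (variant, 5-way-1-shot acc %, hours, 10-way-1-shot acc %, hours), from the ablation table.
FULL = ("full model", 94.63, 4.0, 91.46, 9.0)
ABLATIONS = [
    ("w/o relation information", 92.85, 3.5, 89.91, 8.0),
    ("w/o (loss row 1)", 93.20, 2.5, 89.26, 6.0),
    ("w/o (loss row 2)", 92.82, 2.0, 88.75, 5.0),
    ("w/o (loss row 3)", 93.48, 2.0, 89.08, 5.0),
]


def summarize(row, full=FULL):
    """Accuracy drop (points) and training hours saved relative to the full model."""
    name, acc5, h5, acc10, h10 = row
    return {
        "variant": name,
        "drop_5w1s": round(full[1] - acc5, 2),
        "drop_10w1s": round(full[3] - acc10, 2),
        "hours_saved_5w1s": full[2] - h5,
        "hours_saved_10w1s": full[4] - h10,
    }


report = [summarize(r) for r in ABLATIONS]
for line in report:
    print(line)
```

Read this way, every component costs additional training time but recovers between roughly one and three points of accuracy, which is the trade-off the ablation is meant to expose.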

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Zhao, X.; Deng, Y.; Yang, M.; Wang, L.; Zhang, R.; Cheng, H.; Lam, W.; Shen, Y.; Xu, R. A comprehensive survey on relation extraction: Recent advances and new frontiers. ACM Comput. Surv. 2024, 56, 1–39.
2. Wang, W.; Wei, X.; Wang, B.; Li, Y.; Xin, G.; Wei, Y. Hyperplane projection network for few-shot relation classification. Expert Syst. Appl. 2024, 238, 121971.
3. Chen, X.; Wu, H.; Shi, X. Consistent prototype learning for few-shot continual relation extraction. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 7409–7422.
4. Detroja, K.; Bhensdadia, C.K.; Bhatt, B.S. A survey on relation extraction. Intell. Syst. Appl. 2023, 19, 200244.
5. Zhang, Y.; Zhang, Y.; Wang, Z.; Peng, H.; Yang, Y.; Li, Y. Multi-information interaction graph neural network for joint entity and relation extraction. Expert Syst. Appl. 2024, 235, 121211.
6. Zhao, X.; Yang, M.; Qu, Q.; Xu, R. Few-Shot Relation Extraction with Automatically Generated Prompts. IEEE Trans. Neural Netw. Learn. Syst. 2025, 36, 4971–4983.
7. Liu, Y.; Hu, J.; Wan, X.; Chang, T.H. Learn from relation information: Towards prototype representation rectification for few-shot relation extraction. In Proceedings of the Findings of the Association for Computational Linguistics: NAACL 2022, Seattle, WA, USA, 10–15 July 2022; pp. 1822–1831.
8. Wen, W.; Liu, Y.; Ouyang, C.; Lin, Q.; Chung, T. Enhanced prototypical network for few-shot relation extraction. Inf. Process. Manag. 2021, 58, 102596.
9. Li, R.; Zhong, J.; Hu, W.; Dai, Q.; Wang, C.; Wang, W.; Li, X. Adaptive class augmented prototype network for few-shot relation extraction. Neural Netw. 2024, 169, 134–142.
10. Ding, N.; Wang, X.; Fu, Y.; Xu, G.; Wang, R.; Xie, P.; Shen, Y.; Huang, F.; Zheng, H.T.; Zhang, R. Prototypical representation learning for relation extraction. arXiv 2021, arXiv:2103.11647.
11. Yang, K.; Zheng, N.; Dai, X.; He, L.; Huang, S.; Chen, J. Enhance prototypical network with text descriptions for few-shot relation classification. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management, Virtual, 19–23 October 2020; pp. 2273–2276.
12. Yang, K.; Zheng, N.; Dai, X.; He, L.; Huang, S.; Chen, J. Learning to decouple relations: Few-shot relation classification with entity-guided attention and confusion-aware training. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 5799–5809.
13. Han, J.; Cheng, B.; Lu, W. Exploring task difficulty for few-shot relation extraction. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Punta Cana, Dominican Republic, 7–11 November 2021; pp. 2605–2616.
14. Dong, M.; Pan, C.; Luo, Z. MapRE: An Effective Semantic Mapping Approach for Low-resource Relation Extraction. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Punta Cana, Dominican Republic, 7–11 November 2021; pp. 2694–2704.
15. Sun, S.; Sun, Q.; Zhou, K.; Lv, T. Hierarchical attention prototypical networks for few-shot text classification. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 476–485.
16. Li, J.; Feng, S.; Chiu, B. Few-shot relation extraction with dual graph neural network interaction. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 14396–14408.
17. Wen, M.; Xia, T.; Liao, B.; Tian, Y. Few-shot relation classification using clustering-based prototype modification. Knowl.-Based Syst. 2023, 268, 110477.
18. Wu, H.; He, Y.; Chen, Y.; Bai, Y.; Shi, X. Improving few-shot relation extraction through semantics-guided learning. Neural Netw. 2024, 169, 453–461.
19. He, K.; Huang, Y.; Mao, R.; Gong, T.; Li, C.; Cambria, E. Virtual prompt pre-training for prototype-based few-shot relation extraction. Expert Syst. Appl. 2023, 213, 118927.
20. Gong, J.; Eldardiry, H. Few-Shot Relation Extraction with Hybrid Visual Evidence. In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), Torino, Italy, 20–25 May 2024; pp. 7232–7247.
21. Luo, D.; Gan, Y.; Hou, R.; Lin, R.; Liu, Q.; Cai, Y.; Gao, W. Synergistic anchored contrastive pre-training for few-shot relation extraction. Proc. AAAI Conf. Artif. Intell. 2024, 38, 18742–18750.
22. Chen, Y.; Yang, W.; Wang, K.; Qin, Y.; Huang, R.; Zheng, Q. A neuralized feature engineering method for entity relation extraction. Neural Netw. 2021, 141, 249–260.
23. Cabot, P.L.H.; Navigli, R. REBEL: Relation extraction by end-to-end language generation. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2021, Virtual, 16–20 November 2021; pp. 2370–2381.
24. Guo, Q.; Zhang, J.; Wang, S.; Tian, L.; Kang, Z.; Yan, B.; Xiao, W. Bridging Generative and Discriminative Learning: Few-Shot Relation Extraction via Two-Stage Knowledge-Guided Pre-training. arXiv 2025, arXiv:2505.12236.
25. Ying, X.; Xie, X.; Xu, T.; Zhao, Y.; Meng, Z.; Zhao, M. WMRE: Enhancing Distant Supervised Relation Extraction with Word-level Multi-instance Learning and Multi-hierarchical Feature. In Proceedings of the ICASSP 2025–2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 1–5.
26. Liu, W.; Yin, M.; Zhang, J.; Cui, L. A Joint Entity Relation Extraction Model Based on Relation Semantic Template Automatically Constructed. Comput. Mater. Contin. 2024, 78, 975.
27. Sun, X.; Guo, Q.; Ge, S.Q. GFN: A novel joint entity and relation extraction model with redundancy and denoising strategies. Knowl.-Based Syst. 2024, 300, 112137.
28. Hu, B.; Lin, A.; Brinson, L.C. Tackling Structured Knowledge Extraction from Polymer Nanocomposite Literature as an NER/RE Task with seq2seq. Integr. Mater. Manuf. Innov. 2024, 13, 656–668.
29. Dagdelen, J.; Dunn, A.; Lee, S.; Walker, N.; Rosen, A.S.; Ceder, G.; Persson, K.A.; Jain, A. Structured information extraction from scientific text with large language models. Nat. Commun. 2024, 15, 1418.
30. Lv, J.; Zhang, Z.; Jin, L.; Li, S.; Li, X.; Xu, G.; Sun, X. HGEED: Hierarchical graph enhanced event detection. Neurocomputing 2021, 453, 141–150.
31. Sun, B.; Gong, K.; Li, W.; Song, X. MetaR: Few-Shot Named Entity Recognition with Meta-Learning and Relation Network. IEEE Trans. Audio Speech Lang. Process. 2025, 33, 974–986.
32. Bai, G.; Lu, C.; Guo, D.; Li, S.; Liu, Y.; Zhang, Z.; Dong, G.; Liu, R.; Yong, S. Clear Up Confusion: Advancing Cross-Domain Few-Shot Relation Extraction through Relation-Aware Prompt Learning. In Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 2: Short Papers), Mexico City, Mexico, 29 April–4 May 2024; pp. 70–78.
33. Wang, Z.; Yang, L.; Yang, J.; Li, T.; He, L.; Li, Z. A Triple Relation Network for Joint Entity and Relation Extraction. Electronics 2022, 11, 1535.
34. Fan, M.; Bai, Y.; Sun, M.; Li, P. Large margin prototypical network for few-shot relation classification with fine-grained features. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019; pp. 2353–2356.
35. Xiao, Y.; Jin, Y.; Hao, K. Adaptive prototypical networks with label words and joint representation learning for few-shot relation classification. IEEE Trans. Neural Netw. Learn. Syst. 2021, 34, 1406–1417.
36. Ren, L.; Chen, C.; Wang, L.; Li, P. Towards improved proxy-based deep metric learning via data-augmented domain adaptation. Proc. AAAI Conf. Artif. Intell. 2024, 38, 14811–14819.
37. Han, X.; Zhu, H.; Yu, P.; Wang, Z.; Yao, Y.; Liu, Z.; Sun, M. FewRel: A Large-Scale Supervised Few-Shot Relation Classification Dataset with State-of-the-Art Evaluation. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 30 November 2018; pp. 4803–4809.
38. Han, Y.; Qiao, L.; Zheng, J.; Kan, Z.; Feng, L.; Gao, Y.; Tang, Y.; Zhai, Q.; Li, D.; Liao, X. Multi-view interaction learning for few-shot relation classification. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, Gold Coast, Australia, 1–5 November 2021; pp. 649–658.
39. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.
40. Gao, T.; Fisch, A.; Chen, D. Making Pre-trained Language Models Better Few-shot Learners. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Virtual, 1–6 August 2021; pp. 3816–3830.
41. Schick, T.; Schütze, H. Exploiting Cloze-Questions for Few-Shot Text Classification and Natural Language Inference. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, Online, 19–23 April 2021; pp. 255–269.
42. Chen, X.; Zhang, N.; Xie, X.; Deng, S.; Yao, Y.; Tan, C.; Huang, F.; Si, L.; Chen, H. Knowprompt: Knowledge-aware prompt-tuning with synergistic optimization for relation extraction. In Proceedings of the ACM Web Conference 2022, Lyon, France, 25–29 April 2022; pp. 2778–2788.
43. Yang, Y.; Li, Y.; Quan, X. Ubar: Towards fully end-to-end task-oriented dialog system with gpt-2. Proc. AAAI Conf. Artif. Intell. 2021, 35, 14230–14238.
44. Jing, L.; Fan, X.; Feng, D.; Lu, C.; Jiang, S. A patent text-based product conceptual design decision-making approach considering the fusion of incomplete evaluation semantic and scheme beliefs. Appl. Soft Comput. 2024, 157, 111492.
45. Duan, X.; Liu, Y.; You, Z.; Li, Z. Agricultural Text Classification Method Based on ERNIE 2.0 and Multi-Feature Dynamic Fusion. IEEE Access 2025, 13, 52959–52971.
46. Zheng, W.; Lu, S.; Cai, Z.; Wang, R.; Wang, L.; Yin, L. PAL-BERT: An Improved Question Answering Model. CMES-Comput. Model. Eng. Sci. 2024, 139, 2729–2745.
47. Nie, W.; Chen, R.; Wang, W.; Lepri, B.; Sebe, N. T2TD: Text-3D Generation Model Based on Prior Knowledge Guidance. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 172–189.
48. Gardazi, N.M.; Daud, A.; Malik, M.K.; Bukhari, A.; Alsahfi, T.; Alshemaimri, B. BERT applications in natural language processing: A review. Artif. Intell. Rev. 2025, 58, 166.
49. Vrahatis, A.G.; Lazaros, K.; Kotsiantis, S. Graph attention networks: A comprehensive review of methods and applications. Future Internet 2024, 16, 318.
50. Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 13–18 July 2020; pp. 1597–1607.
51. Gao, T.; Han, X.; Zhu, H.; Liu, Z.; Li, P.; Sun, M.; Zhou, J. FewRel 2.0: Towards More Challenging Few-Shot Relation Classification. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 6249–6254.
52. Snell, J.; Swersky, K.; Zemel, R. Prototypical networks for few-shot learning. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30, pp. 4080–4090.
53. Gao, T.; Han, X.; Liu, Z.; Sun, M. Hybrid attention-based prototypical networks for noisy few-shot relation classification. Proc. AAAI Conf. Artif. Intell. 2019, 33, 6407–6414.
54. Ye, Z.; Ling, Z. Multi-Level Matching and Aggregation Network for Few-Shot Relation Classification. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 2872–2881.
55. Yang, S.; Zhang, Y.; Niu, G.; Zhao, Q.; Pu, S. Entity Concept-enhanced Few-shot Relation Extraction. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, Online, 1–6 August 2021; pp. 987–991.
56. Zhou, K.; Zhang, B.; Zhao, X.; Wen, J. Debiased Contrastive Learning of Unsupervised Sentence Representations. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, Dublin, Ireland, 22–27 May 2022; pp. 6120–6130.
57. Wang, M.; Zheng, J.; Cai, F.; Shao, T.; Chen, H. DRK: Discriminative rule-based knowledge for relieving prediction confusions in few-shot relation extraction. In Proceedings of the 29th International Conference on Computational Linguistics, Gyeongju, Republic of Korea, 12–17 October 2022; pp. 2129–2140.
58. Borchert, P.; De Weerdt, J.; Moens, M.-F. Efficient Information Extraction in Few-Shot Relation Classification through Contrastive Representation Learning. In Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Mexico City, Mexico, 16–21 June 2024; pp. 638–646.
59. Peng, H.; Gao, T.; Han, X.; Lin, Y.; Li, P.; Liu, Z.; Sun, M.; Zhou, J. Learning from Context or Names? An Empirical Study on Neural Relation Extraction. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 3661–3672.
60. Sun, H.; Chen, R. Enhancing the Prototype Network with Local-to-Global Optimization for Few-Shot Relation Extraction. In Proceedings of the Findings of the Association for Computational Linguistics: NAACL 2025, Albuquerque, NM, USA, 4 May 2025; pp. 2668–2677.

| Dataset | FewRel1.0 Relations | FewRel1.0 Instances | FewRel2.0 Relations | FewRel2.0 Instances |
|---|---|---|---|---|
| Train | 64 | 44,800 | 64 | 44,800 |
| Validation | 16 | 11,200 | 10 | 1000 |
| Test | 20 | 14,000 | 15 | 1500 |

| Argument | Value |
|---|---|
| Encoder | BERT encoder |
| Back-end model | BERT/CP |
| Learning rate | $1 \times 10^{-5}$ / $5 \times 10^{-6}$ |
| Maximum length | 256 |
| Hidden layer size | 768 |
| Batch size | 32 |
| Optimizer | AdamW |
| Verification procedure | 1000 |
| Maximum number of training iterations | 30,000 |

| Model | 5-Way-1-Shot (Val/Test) | 5-Way-5-Shot (Val/Test) | 10-Way-1-Shot (Val/Test) | 10-Way-5-Shot (Val/Test) |
|---|---|---|---|---|
| Proto-CNN [52] | —/74.29 | —/85.18 | —/61.15 | —/74.41 |
| Proto-HATT [53] | 72.65/74.52 | 86.15/88.40 | 60.13/62.38 | 76.20/80.45 |
| MLMAN [54] | 75.01/— | 87.09/90.12 | 62.48/— | 77.50/83.05 |
| BERT-Pair [51] | 85.66/88.32 | 89.48/93.22 | 76.84/80.63 | 81.76/87.02 |
| Proto-BERT [52] | 84.77/89.33 | 89.57/94.13 | 76.85/83.41 | 83.42/90.25 |
| TD-proto [11] | —/84.76 | —/92.38 | —/74.32 | —/85.92 |
| ConceptFERE [55] | —/89.21 | —/90.34 | —/75.72 | —/81.82 |
| DAPL [56] | —/85.94 | —/94.28 | —/77.59 | —/89.26 |
| HCRP [13] | 90.90/93.76 | 93.22/95.66 | 84.11/89.95 | 87.79/92.10 |
| DRK [57] | —/89.94 | —/92.42 | —/81.94 | —/85.23 |
| MultiRep [58] | 92.73/94.18 | 93.79/96.29 | 86.12/91.07 | 88.80/91.98 |
| LoToG [60] | 92.38/95.28 | 94.26/96.71 | 86.23/91.48 | 91.11/93.14 |
| Ours (BERT) | 92.95/94.63 | 94.36/97.35 | 87.25/91.46 | 89.85/94.58 |
| CP [59] | —/95.10 | —/97.10 | —/91.20 | —/94.70 |
| MapRE [14] | —/95.73 | —/97.84 | —/93.18 | —/95.64 |
| HCRP (CP) [13] | 94.10/96.42 | 96.05/97.96 | 89.13/93.97 | 93.10/96.46 |
| CBPM [16] | —/90.89 | —/94.68 | —/82.54 | —/89.67 |
| Ours (CP) | 96.57/97.25 | 97.99/98.26 | 94.56/95.35 | 95.81/96.27 |

| Model | 5-Way-1-Shot | 5-Way-5-Shot | 10-Way-1-Shot | 10-Way-5-Shot |
|---|---|---|---|---|
| Proto-CNN [52] | 35.09 | 49.37 | 22.98 | 35.22 |
| Proto-BERT [52] | 40.12 | 51.50 | 26.45 | 36.93 |
| BERT-PAIR [51] | 67.41 | 78.57 | 54.89 | 66.85 |
| HCRP [13] | 76.34 | 83.03 | 63.77 | 72.94 |
| LoToG [60] | — | 84.38 | — | 75.69 |
| Ours (CP) | 80.45 | 87.63 | 68.15 | 79.89 |

| Model | 5-Way-1-Shot Accuracy (%) | 5-Way-1-Shot Training Time (Hours) | 10-Way-1-Shot Accuracy (%) | 10-Way-1-Shot Training Time (Hours) |
|---|---|---|---|---|
| Ours (CP) | 94.63 | 4 | 91.46 | 9 |
| w/o relation information | 92.85 | 3.5 | 89.91 | 8 |
| w/o | 93.20 | 2.5 | 89.26 | 6 |
| w/o | 92.82 | 2 | 88.75 | 5 |
| w/o | 93.48 | 2 | 89.08 | 5 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qian, S.; Fu, B.; Liu, C.; Jin, S.; Sun, T.; Chen, Z.; Li, D.; Sun, Y.; Chen, Y.; Li, Y. Prototype-Enhanced Few-Shot Relation Extraction Method Based on Cluster Loss Optimization. Symmetry 2025, 17, 1673. https://doi.org/10.3390/sym17101673
