1. Introduction

Thangka, as a traditional Tibetan scroll painting, holds profound cultural and artistic significance. Distinguished by its intricate iconography, rich color schemes, and detailed narrative compositions, Thangka is not only a sacred art form, but also a valuable heritage asset requiring careful preservation. With the advent of digital technologies, how to efficiently analyze, segment, and preserve Thangka images has become a pressing research topic in cultural heritage computing. Among these tasks, image segmentation serves as the foundation for downstream applications such as recognition, restoration, and style transfer.

However, the unique characteristics of Thangka images, namely dense components, fine-grained textures, and a lack of annotated datasets, pose significant challenges to conventional segmentation methods. Existing annotated resources are generally limited in both scale and stylistic diversity, which restricts their ability to cover the wide range of variations in color, layout, and symbolic representation found in Thangka. Furthermore, some datasets suffer from imbalanced class distributions and incomplete annotations, particularly for rare or degraded elements, making it difficult to train models that generalize robustly across different artistic styles. Similar issues are encountered in the segmentation of ancient murals and art paintings, where intricate patterns, rich color variation, and ambiguous boundaries often defeat standard approaches [1,2,3]. As a result, researchers have developed a series of specialized algorithms for Thangka and related artistic images. These include spatial-prior-enhanced models [4], line drawing augmentation, multi-scale attention mechanisms [3], and end-to-end deep networks tailored for capturing both regular and blurred structures [5,6]. The integration of edge information, region proposals, and spatial priors has further improved segmentation accuracy for critical semantic elements, such as headdresses and figures, in Thangka and mural art [1,7,8].

Early image segmentation approaches predominantly relied on convolutional neural networks (CNNs). The seminal work of U-Net [9] established a widely adopted encoder–decoder architecture, achieving remarkable success in biomedical imaging. Subsequent advances, such as Context Prior [10] and CondNet [11], enhanced contextual modeling and feature discrimination, but their effectiveness was often limited by the local receptive fields of convolutional operations. To address these shortcomings in the context of Thangka and mural images, researchers introduced hybrid models that combine multi-scale convolutions, atrous convolutions, and attention modules, boosting feature extraction and edge detail preservation in complex scenes [3,5,6].

The advent of Vision Transformers (ViTs) revolutionized the field, as architectures like Swin Transformer [12] introduced hierarchical attention mechanisms that balance computational efficiency with global context modeling. Further developments, such as SegFormer [13], Segmenter [14], K-Net [15], MaskFormer [16], and Mask2Former [17], unified various segmentation tasks within scalable frameworks. In the specific case of Thangka images, multi-scale attention and coordinate-aware modules have been shown to effectively capture both global semantic context and intricate details, helping to overcome over-segmentation and edge confusion [3].

Meanwhile, open-vocabulary and vision–language models have broadened the scope of segmentation. GroupViT [18], FC-CLIP [19], and ClipSeg [20] have enabled zero-shot and prompt-based segmentation by leveraging text supervision and large-scale pretrained backbones. The Segment Anything Model (SAM) [21] and GenSAM [22] further expanded segmentation capabilities through promptable and cross-modal frameworks. In the domain of art, domain adaptation and training-free methods have also gained traction, addressing the scarcity of annotated data for paintings by using style transfer and generative techniques to synthesize training samples or perform segmentation directly [2,23].

Efforts to enhance universality and efficiency have led to transformer-based frameworks such as Mask DINO [24] and OneFormer [25], and to methods like ReMaX [26], SegNeXt [27], and PlainSeg [28]. Recent works have also explored dense decoding and decoder design improvements, offering practical benefits for high-resolution and complex images [29,30]. In heritage imaging, multi-attribute feature fusion and knowledge-driven detection have enabled more nuanced retrieval and recognition of objects in Thangka, leveraging both appearance and domain-specific cues [8,31,32].

Beyond single-modal methods, multi-modal and multi-task approaches have become increasingly prominent. DPLNet [33] introduced dual-prompt learning for efficient RGB-D and RGB-T segmentation, while GeminiFusion [34] and ODIN [35] unified 2D and 3D segmentation tasks. Other frameworks such as Delivering Arbitrary-Modal Segmentation [36] and SwinMTL [37] scaled segmentation to handle multi-modal and multi-task scenarios. In Thangka and mural segmentation, integrating spatial, chromatic, and contextual cues within multi-branch or fusion networks has further improved semantic understanding and detail recovery [1,3,38]. Additionally, superpixel-based and clustering approaches have been explored for high-precision segmentation of murals with complex color appearances [38].

Robustness and data efficiency remain active research frontiers. Self-ensemble strategies [39] and representation separation techniques [40] have addressed issues around over-smoothing and model robustness. At the same time, weakly supervised and box-level annotation methods have been proposed to reduce the high cost of pixel-level labeling, showing strong performance in element segmentation for Thangka and portrait images [7,41]. In parallel, recent works, such as Li et al. [42], explored attention-based augmentation and multi-view learning for few-shot surface defect detection, providing insights into how tailored architectures can enhance feature representation under limited-data scenarios.

More recently, generative and diffusion models have emerged as promising alternatives to discriminative approaches. Bui et al. [43] introduced a diffusion-based framework for RGB-D semantic segmentation, demonstrating superior performance in handling noisy data and capturing intricate spatial structures. The iterative denoising nature of diffusion models enables enhanced robustness and generalization, which aligns well with the challenges posed by Thangka and related artistic images. In addition, approaches such as PaintSeg [23] offer training-free, adversarial segmentation pipelines that can generalize across diverse artistic styles.

Thangka images present unique challenges for segmentation due to their symbolic complexity, densely packed visual elements, and strong symmetrical layouts. Many regions, such as lotus petals and backlight halos, share similar textures and colors, making it difficult for traditional models to distinguish them. In addition, the main figures are often embedded within symmetrical compositions, which require structural awareness to segment accurately. Meanwhile, the textual descriptions associated with Thangka images contain rich symbolic meaning that is often missing from the visual channel, limiting the model’s ability to fully understand semantic context.

To address these issues, we propose SPIRIT, a structure-aware and prompt-guided diffusion segmentation framework. Our model integrates information from image, text, and support samples to guide the generation of accurate masks. It uses a diffusion-based architecture with support-query encoding, semantic-guided attention for multi-modal fusion, and a dedicated symmetry-aware module for structural consistency. This design enhances the model’s capacity to handle fine-grained categories, artistic variations, and visually ambiguous regions in Thangka paintings.

Contributions: The main contributions of this work are as follows:

We construct a high-quality Thangka segmentation dataset with pixel-level expert annotations, covering diverse artistic schools and materials.

We propose SPIRIT, a diffusion-based segmentation framework that incorporates cultural symmetry priors to better capture structural regularities in Thangka compositions.

We design novel modules including the Symbolic-Guided Attention Field (SGAF), Symmetry-aware Affinity Module (SyAM), and Text-guided Augmentation and Refinement (TAR), each of which is validated using ablation studies.

Extensive experiments on the Thangka and ArtBench datasets demonstrate that our method consistently outperforms strong baselines, with significant gains in challenging categories such as halo and lotus.

Organization: The remainder of this paper is organized as follows:

Section 2 reviews related work on segmentation, diffusion models, and cultural heritage imaging.

Section 3 introduces the proposed SPIRIT framework and its key components.

Section 4 presents the experimental setup, results, and ablation studies.

Finally, Section 5 concludes the paper and discusses future directions.

3. Methods

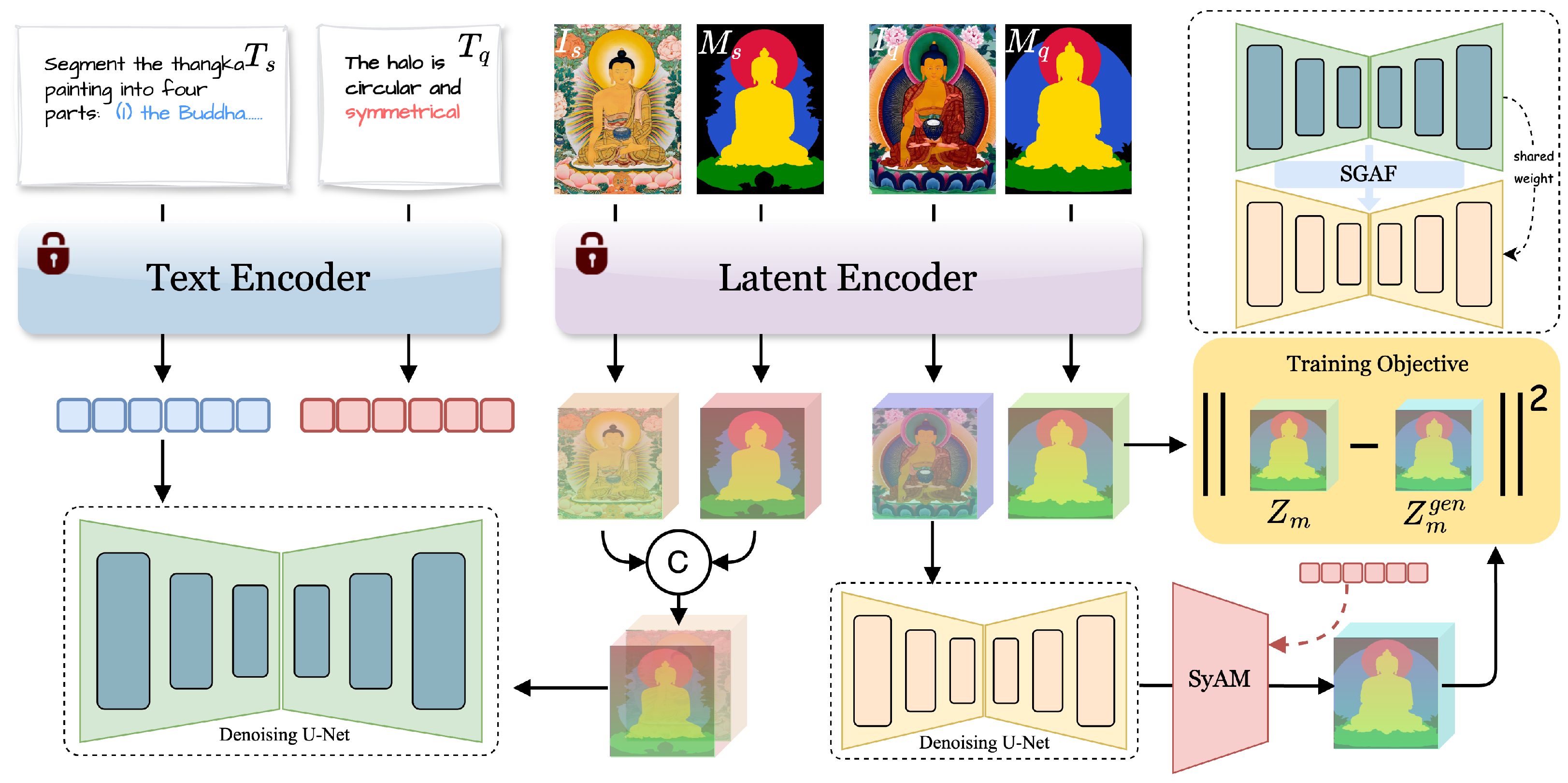

We introduce SPIRIT, a support-guided and symmetry-aware diffusion framework for Thangka image segmentation. The overall architecture is shown in Figure 1. The core idea of SPIRIT is to combine cross-modal support guidance with the symmetry priors that are inherent in Thangka art.

The support image and its mask are first encoded into latent features. These features are combined with the support text features and sent into a diffusion U-Net to generate enriched support context features. SPIRIT then uses these features to guide the query branch. The query image is processed by another diffusion U-Net, where a fusion module injects the support context to help generate more accurate mask features. A symmetry affinity module further improves the query features by enforcing structural consistency, while the query text features provide semantic guidance. In this way, the predicted masks match the target regions and also follow the symmetrical design of Thangka paintings. The refined mask features are then compared with the original query mask features for training.

Through these designs, SPIRIT effectively unifies support-based cross-modal guidance and symmetry-aware refinement, resulting in more precise and reliable segmentation of complex Thangka artworks.

3.1. Preprocessing with Text-Guided Attribute Augmentation and Latent Visual Encoding

To enable semantically guided Thangka segmentation, we introduce a dual-stream preprocessing pipeline that processes both visual and textual inputs into structured embeddings. This step ensures that the downstream diffusion model can access the aligned representations enriched with symbolic guidance.

On the visual side, we adopt a shared latent encoder $\mathcal{E}$ based on the Variational Autoencoder (VAE) architecture from Stable Diffusion. It compresses both the input Thangka image $I$ and the corresponding segmentation mask $M$ into their respective latent embeddings:

$$z_I = \mathcal{E}(I), \qquad z_M = \mathcal{E}(M),$$

where $z_I$ and $z_M$ denote the latent visual and mask features, respectively. By operating in the same latent space, the model benefits from a consistent representation that supports learning in the denoising process while reducing computational complexity.

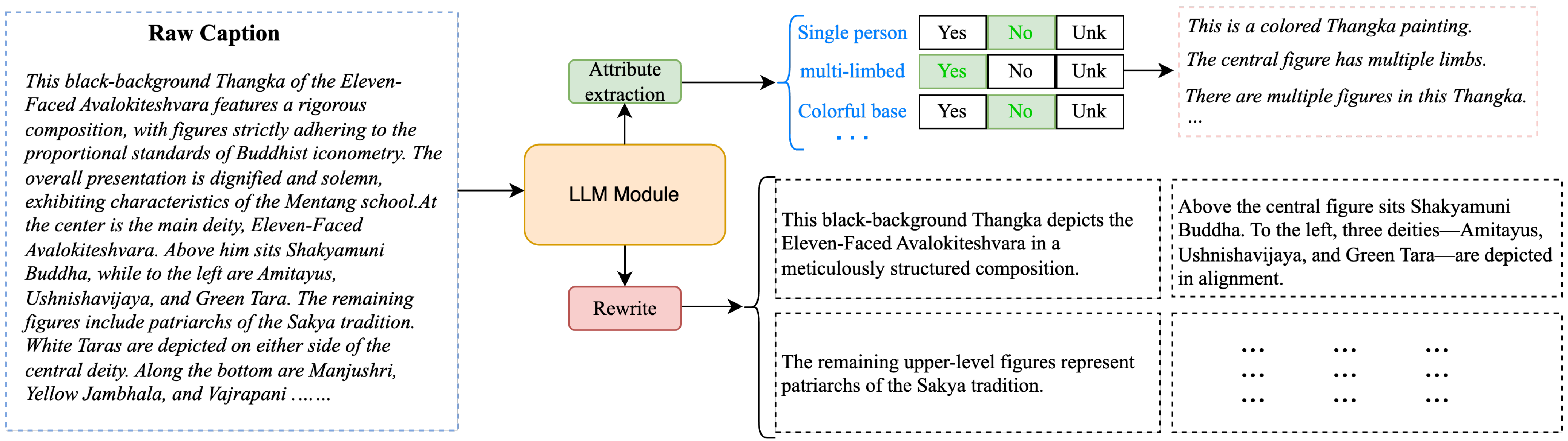

On the textual side, raw Thangka captions are often verbose and narrative, making them difficult to directly align with visual features. To extract structured symbolic knowledge and improve semantic clarity, we introduce a large language model (LLM)-based augmentation pipeline, as illustrated in Figure 2. Given a raw caption $c$, the LLM performs two parallel operations to produce an enriched textual representation.

One branch focuses on attribute extraction and template generation. The LLM identifies a set of symbolic attributes from the caption, such as “single person,” “multi-limbed,” or “colorful base,” with each attribute associated with categorical labels (Yes, No, or Unknown). These attributes are then converted into natural language phrases using predefined templates, resulting in structured symbolic statements that highlight the key semantic aspects of the image.

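The other branch involves direct rewriting of the original caption. The LLM refines $c$ into a concise and semantically enhanced version $\tilde{c}$ guided by the extracted attribute context $A$:

$$\tilde{c} = \mathrm{LLM}(c \mid A).$$

The final input $\hat{c}$ is formed by concatenating the rewritten caption $\tilde{c}$ with the template-generated symbolic phrases $P(A)$:

$$\hat{c} = \mathrm{Concat}\big(\tilde{c},\, P(A)\big),$$

where $\mathrm{Concat}(\cdot,\cdot)$ denotes sentence-level concatenation.

This enriched caption $\hat{c}$ is then encoded using the CLIP text encoder $\mathcal{E}_{\text{text}}$ to obtain the final text embedding:

$$t = \mathcal{E}_{\text{text}}(\hat{c}).$$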

By combining narrative clarity and symbolic abstraction, this attribute-guided textual augmentation enhances cross-modal alignment, which is especially important for Thangka segmentation tasks characterized by intricate iconographic structures and layered semantic cues.
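The template and concatenation steps of this pipeline can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the attribute names come from the examples in the text, but the template sentences, the function names, and the choice to drop attributes labeled "Unknown" are hypothetical and not taken from the paper. The LLM calls themselves are omitted; the rewritten caption is passed in as a plain string.

```python
# Hypothetical templates: the paper does not list its actual template sentences.
TEMPLATES = {
    "single person": {
        "Yes": "The painting depicts a single figure.",
        "No": "The painting depicts multiple figures.",
    },
    "multi-limbed": {
        "Yes": "The main figure has multiple limbs.",
        "No": "The main figure has an ordinary number of limbs.",
    },
    "colorful base": {
        "Yes": "The figure rests on a colorful base.",
        "No": "The figure does not rest on a colorful base.",
    },
}


def attributes_to_phrases(attributes):
    """Turn {attribute: "Yes" | "No" | "Unknown"} into template sentences.

    Assumption: attributes labeled "Unknown" (or lacking a template) are
    skipped, so the text only states what the caption supports.
    """
    phrases = []
    for name, label in attributes.items():
        sentence = TEMPLATES.get(name, {}).get(label)
        if sentence is not None:
            phrases.append(sentence)
    return phrases


def build_enriched_caption(rewritten_caption, attributes):
    """Sentence-level concatenation of the rewritten caption and the phrases."""
    parts = [rewritten_caption.strip()] + attributes_to_phrases(attributes)
    return " ".join(parts)
```

The resulting string is what would be handed to the text encoder; keeping the symbolic phrases as separate sentences makes it easy to inspect which attributes reached the model.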

3.2. Cross-Modal Semantic Fusion in Diffusion Models

Diffusion models have recently emerged as powerful tools for dense prediction tasks, offering a progressive denoising mechanism to recover structured outputs from noisy latent variables. Formally, given a noisy representation $z_t$ at time step $t$, the model learns to estimate the added noise $\epsilon$ and predict the clean latent variable $\hat{z}_0$:

$$\hat{z}_0 = \frac{z_t - \sqrt{1 - \bar{\alpha}_t}\,\epsilon_\theta(z_t, t)}{\sqrt{\bar{\alpha}_t}},$$

where $\bar{\alpha}_t$ denotes the noise schedule. Through iterative refinement over multiple steps, this formulation enables the model to capture both local structure and global semantics in complex visual data.
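To make the relation between the noisy latent, the estimated noise, and the recovered clean latent concrete, the following sketch noises a toy latent vector and then inverts the step. It assumes the standard DDPM parameterization with a linear beta schedule (the schedule and its endpoints are assumptions, not values from the paper), and it substitutes the true noise for the U-Net prediction, so the recovery is exact by construction.

```python
import math
import random


def linear_alpha_bar(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear beta schedule (assumed)."""
    alpha_bars, running = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / max(num_steps - 1, 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(z0, noise, alpha_bar_t):
    """Forward process: z_t = sqrt(a) * z0 + sqrt(1 - a) * noise."""
    a, b = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    return [a * z + b * e for z, e in zip(z0, noise)]


def predict_clean(z_t, predicted_noise, alpha_bar_t):
    """Invert the forward process using a noise estimate."""
    a, b = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    return [(z - b * e) / a for z, e in zip(z_t, predicted_noise)]


rng = random.Random(0)
z0 = [0.5, -1.0, 2.0, 0.0]                    # toy latent vector
noise = [rng.gauss(0.0, 1.0) for _ in z0]
alpha_bars = linear_alpha_bar(1000)
z_t = add_noise(z0, noise, alpha_bars[500])
# The true noise stands in for the denoising network's prediction here.
z0_hat = predict_clean(z_t, noise, alpha_bars[500])
```

In training, the network's noise estimate replaces the oracle noise, and the quality of the recovered latent depends entirely on how accurate that estimate is.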

To fully exploit this potential in our Thangka segmentation setting, we propose a dual-pathway denoising architecture in which both the query image and its associated segmentation mask are encoded into latent spaces and processed in parallel diffusion branches. Although both branches follow the same denoising pipeline, their inputs serve complementary purposes: the image path captures visual semantics, while the mask path focuses on structural supervision.

Rather than treating these branches independently, we enforce shared weights between their diffusion modules. This design allows the model to jointly learn from both modalities while maintaining consistent feature representations across image and mask domains. The shared denoising U-Net not only reduces parameter overhead, but also encourages semantic alignment throughout the denoising trajectory.

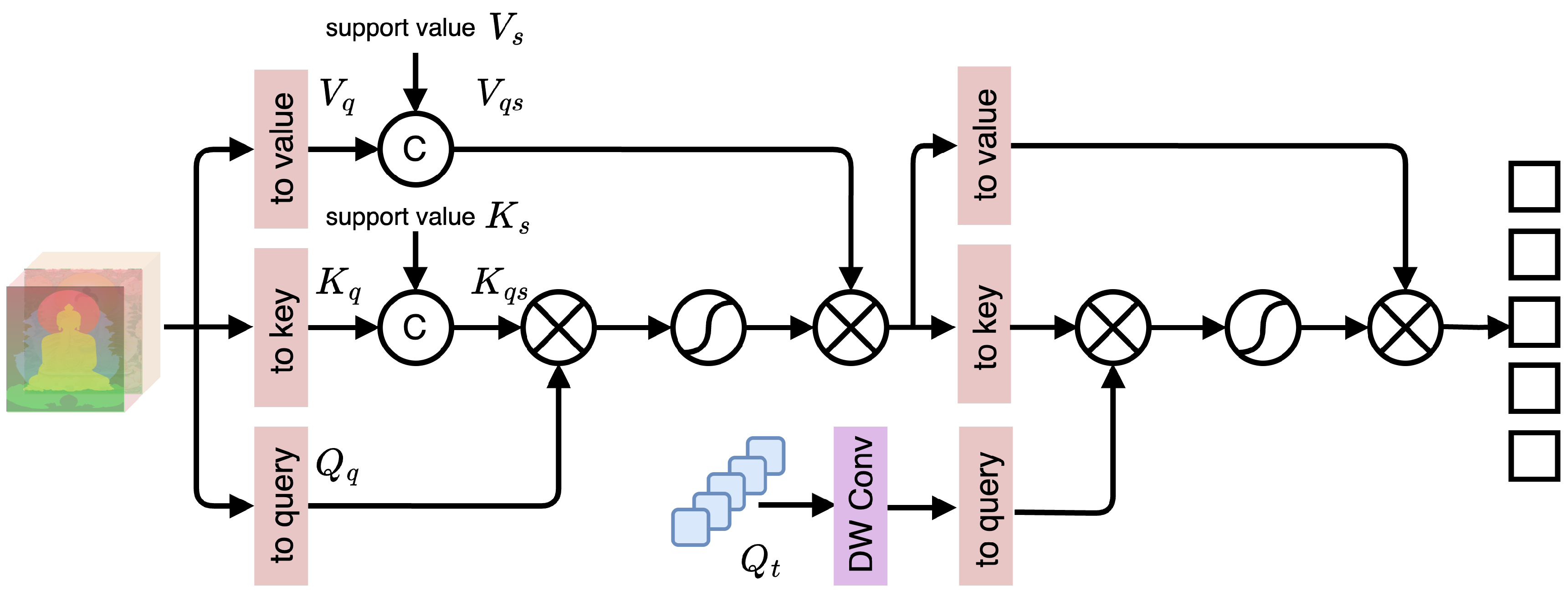

In Thangka segmentation, relying solely on visual or structural signals may be insufficient, as symbolic attributes (e.g., “lotus posture,” “multiple arms”) often govern region delineation. These high-level semantics are crucial for disambiguating visually similar patterns or culturally encoded structures. To inject both visual context and symbolic knowledge into the denoising process, we design a two-stage attention mechanism within the Semantic-Guided Attention Fusion (SGAF) module, as illustrated in Figure 3. This module first performs visual self-attention enhanced by support image features, and then applies cross-attention guided by symbolic textual embeddings.

In the first stage, we perform a self-attention operation over the query image features, but enrich the key and value branches by incorporating support image information. Specifically, given a query image, we extract its query, key, and value embeddings $Q_q$, $K_q$, and $V_q$, and similarly extract $K_s$ and $V_s$ from the corresponding support image. We concatenate these representations along the key and value branches:

$$K = K_q \,\Vert\, K_s, \qquad V = V_q \,\Vert\, V_s,$$

where ‖ denotes feature concatenation followed by a linear projection. The enhanced visual representation is obtained by

$$X = \mathrm{softmax}\!\left(\frac{Q_q K^{\top}}{\sqrt{d_k}}\right) V,$$

where $d_k$ is the key dimension. This representation captures both intra-image context and inter-image priors from the support sample.

In the second stage, we aim to inject symbolic semantics derived from textual features. To preserve spatial sensitivity in the semantic representation, we first apply a depth-wise convolution to the textual query feature $Q_t$:

$$\tilde{Q}_t = \mathrm{DWConv}(Q_t),$$

producing a locally-aware symbolic embedding. We then perform a cross-attention operation where the updated textual queries $\tilde{Q}_t$ attend over the fused visual representation $X$, obtained from the previous stage, which serves as both the key and value:

$$F = \mathrm{softmax}\!\left(\frac{\tilde{Q}_t X^{\top}}{\sqrt{d_k}}\right) X.$$

This second-stage attention allows symbolic text-derived queries to directly modulate visual features, aligning visual patterns with high-level semantics. The resulting representation $F$ reflects stronger cross-modal consistency and enhanced awareness of symbolic cues, which is particularly beneficial for segmenting the intricate iconographic structures of Thangka paintings.
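The two attention stages can be illustrated with a small dependency-free sketch. It is a simplified reading of the description above, not the authors' implementation: features are plain lists of token vectors, the "concatenate then project" step uses a caller-supplied projection matrix, the depth-wise convolution is a 3-tap 1D convolution along the token axis, and all function names are hypothetical.

```python
import math


def matmul(a, b):
    """Matrix product of two row-major nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Scaled dot-product attention: softmax(q k^T / sqrt(d)) v."""
    d = len(q[0])
    scores = matmul(q, [list(col) for col in zip(*k)])
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, v)


def fuse(query_feat, support_feat, proj):
    """Concatenate per-token features along channels, then project 2d -> d."""
    concat = [qr + sr for qr, sr in zip(query_feat, support_feat)]
    return matmul(concat, proj)


def depthwise_conv1d(tokens, kernels):
    """Per-channel 3-tap convolution along the token axis with zero padding."""
    n, d = len(tokens), len(tokens[0])
    out = [[0.0] * d for _ in range(n)]
    for i in range(n):
        for c in range(d):
            for offset, w in zip((-1, 0, 1), kernels[c]):
                j = i + offset
                if 0 <= j < n:
                    out[i][c] += w * tokens[j][c]
    return out


def sgaf(q_q, k_q, v_q, k_s, v_s, q_t, proj_k, proj_v, kernels):
    k = fuse(k_q, k_s, proj_k)            # stage 1: support-enriched keys
    v = fuse(v_q, v_s, proj_v)            # stage 1: support-enriched values
    x = attention(q_q, k, v)              # enhanced visual representation
    q_t_local = depthwise_conv1d(q_t, kernels)
    return attention(q_t_local, x, x)     # stage 2: text queries attend over x
```

Because both stages are softmax-weighted averages, every output channel stays within the range of the fused value features, which is a convenient sanity check when wiring up a real implementation.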

3.3. Symmetry-Aware Mask Refinement

To better exploit the inherent bilateral or rotational symmetry of Thangka images and strengthen cross-modal alignment, we design a Symmetry-aware Affinity Module (SyAM). The motivation behind this module is that visual features often exhibit structured symmetries, which, if explicitly modeled, can provide strong regularization and enhance the semantic consistency between vision and text. The overall framework is illustrated in Figure 4, and we detail the mathematical formulation step by step below.

First, let the visual feature map be denoted as $F \in \mathbb{R}^{C \times H \times W}$, where $C$ is the channel dimension, and $H \times W$ represents the spatial resolution. A flipped counterpart of the feature map is obtained through a symmetry transformation $\mathcal{T}(\cdot)$. Both the original and flipped features are processed by Global Average Pooling (GAP) followed by normalization:

$$f = \mathrm{Norm}\big(\mathrm{GAP}(F)\big), \qquad \tilde{f} = \mathrm{Norm}\big(\mathrm{GAP}(\mathcal{T}(F))\big).$$

Here, $f$ and $\tilde{f}$ are compact global descriptors of the original and flipped features.

Next, a similarity function $\phi(\cdot,\cdot)$ is applied to $f$ and $\tilde{f}$ to produce the symmetry affinity map:

$$S = \phi(f, \tilde{f}).$$

The output $S$ measures the structural correlation between the original and flipped feature representations.

Meanwhile, the text embedding is denoted as $t \in \mathbb{R}^{d}$, where $d$ is the textual feature dimension. It is first normalized by Layer Normalization (LN), then projected into a latent space by a Multi-Layer Perceptron (MLP). The result is passed through a sigmoid activation $\sigma$ to generate the modulation vector:

$$g = \sigma\big(\mathrm{MLP}(\mathrm{LN}(t))\big).$$

Here, $g$ acts as a text-induced gating vector that modulates the affinity map.

This modulation is achieved by element-wise multiplication (Hadamard product) between $S$ and $g$, resulting in the modulated symmetry map:

$$\tilde{S} = S \odot g,$$

where ⊙ denotes element-wise product.

To enhance stability, a residual refinement process is introduced. The modulated map $\tilde{S}$ is compressed via GAP and then passed through an MLP with sigmoid activation to generate a correction signal. This signal is combined with $g$ by residual addition (⊕):

$$\hat{g} = g \oplus \sigma\big(\mathrm{MLP}(\mathrm{GAP}(\tilde{S}))\big).$$

Subsequently, the corrected modulation is applied to the original symmetry map:

$$\hat{S} = S \odot \hat{g}.$$

Here, $\hat{S}$ represents the refined symmetry response.

Finally, the refined response $\hat{S}$ is fused back with the original visual feature map $F$ through a residual connection:

$$M = F \oplus \hat{S}.$$

The resulting representation M serves as the symmetry-enhanced feature, which incorporates both visual structural symmetry and text-guided modulation for improved cross-modal alignment.

3.4. Loss Function

To effectively train our semantic-aware Thangka segmentation framework, we design a composite loss function that integrates multiple objectives from different modules in the architecture. Each loss term supervises a specific component or interaction, ensuring that both the visual structure and symbolic semantics are properly captured and aligned throughout the denoising and refinement stages.

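For the dual-branch diffusion model, we supervise both the image and mask diffusion branches using standard noise prediction loss. Given a clean latent $z_0$ and its noisy counterpart $z_t$ at timestep $t$, the model predicts the added noise $\epsilon_\theta(z_t, t)$ and is optimized via a simple $\ell_2$ reconstruction loss:

$$\mathcal{L}_{\text{diff}} = \mathbb{E}_{z_0,\,\epsilon,\,t}\Big[\big\lVert \epsilon - \epsilon_\theta(z_t, t) \big\rVert_2^2\Big].$$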

This loss is applied to both the image and mask diffusion branches with shared denoising parameters, promoting consistent noise estimation and generation across modalities.

For the cross-modal semantic fusion module, we supervise the output of the denoised latent mask $\hat{z}_M$ by comparing it with the ground truth latent segmentation $z_M$ using a latent-level mean squared error:

$$\mathcal{L}_{\text{mask}} = \big\lVert \hat{z}_M - z_M \big\rVert_2^2.$$

This term ensures that the denoised result remains semantically and structurally aligned with the annotated segmentation layout.

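We incorporate a text-image contrastive loss $\mathcal{L}_{\text{con}}$ to encourage better alignment between the symbolic text embedding $t$ and the visual latent features $z_I$. Inspired by CLIP-style contrastive learning, we minimize the cosine distance between matched image–text pairs while maximizing it for unmatched pairs:

$$\mathcal{L}_{\text{con}} = -\frac{1}{N}\sum_{i=1}^{N} \log \frac{\exp\big(\mathrm{sim}(z_I^{(i)}, t^{(i)})/\tau\big)}{\sum_{j=1}^{N} \exp\big(\mathrm{sim}(z_I^{(i)}, t^{(j)})/\tau\big)},$$

where $N$ is the number of image–text pairs in a batch, $\mathrm{sim}(\cdot,\cdot)$ denotes cosine similarity, and $\tau$ is a learnable temperature parameter.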
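A CLIP-style contrastive objective of this kind can be sketched as follows. The sketch assumes the image-to-text InfoNCE form over a batch of matched pairs with a fixed temperature of 0.07; the paper's exact variant (for example, whether it is symmetric, or how the temperature is initialized) is not specified, so the function name and defaults are illustrative only.

```python
import math


def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def contrastive_loss(image_feats, text_feats, temperature=0.07):
    """Image-to-text InfoNCE; entry i of each list forms a matched pair."""
    n = len(image_feats)
    total = 0.0
    for i in range(n):
        logits = [cosine(image_feats[i], t) / temperature for t in text_feats]
        m = max(logits)
        # Numerically stable log-sum-exp over all candidate texts.
        log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
        total += log_denominator - logits[i]
    return total / n
```

The loss approaches zero when each image feature is most similar to its own text embedding, and grows when a mismatched text is closer than the matched one.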

For the symmetry-aware mask refinement module, we enforce structural consistency via a symmetry consistency loss $\mathcal{L}_{\text{sym}}$, which penalizes the discrepancy between the predicted mask and its symmetric counterpart:

$$\mathcal{L}_{\text{sym}} = \big\lVert m - \mathcal{T}(m) \big\rVert_1,$$

where $m$ is the generated mask and $\mathcal{T}(\cdot)$ denotes a symmetry transformation (e.g., horizontal flip). This loss encourages the model to respect underlying symmetrical patterns in Thangka layouts.
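As a minimal illustration of this term, the sketch below compares a mask with its horizontal mirror. It assumes a single-channel mask stored as a list of rows and uses the mean absolute difference as the discrepancy measure; the norm actually used in the paper is not recoverable from the text, so that choice is an assumption.

```python
def horizontal_flip(mask):
    """Mirror a 2D mask left to right."""
    return [list(reversed(row)) for row in mask]


def symmetry_consistency_loss(mask):
    """Mean absolute difference between a mask and its horizontal mirror."""
    flipped = horizontal_flip(mask)
    diffs = [
        abs(a - b)
        for row, flipped_row in zip(mask, flipped)
        for a, b in zip(row, flipped_row)
    ]
    return sum(diffs) / len(diffs)
```

A perfectly mirror-symmetric mask gives a loss of zero, while a mask with content on only one side is penalized in proportion to the asymmetric area.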

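Finally, the total loss is a weighted combination of all the above components:

$$\mathcal{L}_{\text{total}} = \lambda_1 \mathcal{L}_{\text{diff}} + \lambda_2 \mathcal{L}_{\text{mask}} + \lambda_3 \mathcal{L}_{\text{con}} + \lambda_4 \mathcal{L}_{\text{sym}}, \tag{21}$$

where $\mathcal{L}_{\text{diff}}$, $\mathcal{L}_{\text{mask}}$, $\mathcal{L}_{\text{con}}$, and $\mathcal{L}_{\text{sym}}$ are the noise prediction, latent mask, contrastive, and symmetry consistency losses, and $\lambda_1$–$\lambda_4$ are scalar weights that balance the contributions of each loss term. In practice, these values are selected via validation performance to ensure stable convergence and optimal segmentation accuracy.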

This multi-level loss design enables our model to jointly optimize for denoising fidelity, semantic–symbolic alignment, contrastive guidance, and structural regularity, which is crucial for tackling the rich, intricate compositions found in Thangka art.

4. Experiments

4.1. Evaluation Metrics

We evaluate segmentation performance using four standard metrics: mean Intersection over Union (mIoU), Dice coefficient, mean Accuracy (mAcc), and Pixel Accuracy (PixAcc). These metrics capture complementary aspects of segmentation quality.

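The mIoU measures the overlap between predictions and ground truth. For class $c$,

$$\mathrm{IoU}_c = \frac{TP_c}{TP_c + FP_c + FN_c}, \qquad \mathrm{mIoU} = \frac{1}{K}\sum_{c=1}^{K} \mathrm{IoU}_c,$$

where $TP_c$, $FP_c$, and $FN_c$ are the numbers of true-positive, false-positive, and false-negative pixels for class $c$, and $K$ is the number of classes.

The Dice coefficient, equivalent to the F1 score, also measures overlap but counts true positives twice:

$$\mathrm{Dice}_c = \frac{2\,TP_c}{2\,TP_c + FP_c + FN_c}.$$

The mAcc evaluates per-class accuracy:

$$\mathrm{mAcc} = \frac{1}{K}\sum_{c=1}^{K} \frac{TP_c}{TP_c + FN_c}.$$

Finally, PixAcc measures the overall correctness:

$$\mathrm{PixAcc} = \frac{\sum_{c=1}^{K} TP_c}{\sum_{c=1}^{K} \left(TP_c + FN_c\right)}.$$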
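All four metrics can be derived from a single confusion matrix, as the following sketch shows. It uses the standard definitions based on per-class true positives, false positives, and false negatives; the function names are illustrative, and the handling of classes that never occur (they are skipped) is an assumption rather than something stated in the paper.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """cm[t][p] counts pixels of true class t predicted as class p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def segmentation_metrics(cm):
    k = len(cm)
    total = sum(sum(row) for row in cm)
    ious, dices, accs = [], [], []
    for c in range(k):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp
        fp = sum(cm[r][c] for r in range(k)) - tp
        if tp + fp + fn == 0:
            continue  # class absent from both prediction and ground truth
        ious.append(tp / (tp + fp + fn))
        dices.append(2 * tp / (2 * tp + fp + fn))
        if tp + fn > 0:  # per-class accuracy needs ground-truth pixels
            accs.append(tp / (tp + fn))
    return {
        "mIoU": sum(ious) / len(ious),
        "Dice": sum(dices) / len(dices),
        "mAcc": sum(accs) / len(accs),
        "PixAcc": sum(cm[c][c] for c in range(k)) / total,
    }
```

Here `y_true` and `y_pred` are flattened label sequences; for a full dataset the confusion matrix would be accumulated over all images before the metrics are computed.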

4.2. Experimental Setup

We implement all models in PyTorch (version 1.12.1) and train them on a single NVIDIA RTX 3080 Ti GPU. Input Thangka images are resized to a fixed resolution and normalized before training. The model is optimized using the RAdam optimizer, which rectifies the variance of Adam's adaptive learning rate for more stable convergence in the early stages. We set the initial learning rate to 0.001 and adopt a cosine annealing schedule with linear warm-up over the first ten epochs. The model is trained for 300 epochs with a batch size of 32. Weight decay is applied to prevent overfitting.
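The learning-rate schedule described here can be written as a small function of the epoch index. The base rate (0.001), warm-up length (10 epochs), and total length (300 epochs) come from the text; the per-epoch granularity, the warm-up starting at one tenth of the base rate, and a minimum rate of zero are assumptions made for this sketch.

```python
import math


def learning_rate(epoch, base_lr=1e-3, warmup_epochs=10, total_epochs=300, min_lr=0.0):
    """Learning rate for a 0-indexed epoch: linear warm-up, then cosine decay."""
    if epoch < warmup_epochs:
        # Ramp linearly so the last warm-up epoch reaches base_lr.
        return base_lr * (epoch + 1) / warmup_epochs
    progress = (epoch - warmup_epochs) / max(total_epochs - warmup_epochs, 1)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


schedule = [learning_rate(e) for e in range(300)]
```

In a PyTorch training loop the same shape could be obtained by chaining built-in schedulers, but the closed form above makes the warm-up and decay phases easy to inspect.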

Each loss component is weighted to balance the training objectives, with separate weights for the segmentation objective, the auxiliary classification loss, the cross-modal alignment loss, and the symmetry consistency loss. All values are selected via grid search on the validation set to ensure stable convergence and optimal segmentation accuracy.

4.3. Dataset and Augmentation

We construct a Thangka image segmentation dataset consisting of 2210 high-resolution digital images collected from public repositories and archival resources. The dataset covers a wide range of artistic schools, including Karma Gadri, Menri, Rebgong, Mensar, Chintse, Gyiwugang, and Nipali, as well as multiple materials such as silk, canvas, and paper. Each image is manually annotated at the pixel level by domain experts and checked through a two-stage verification process, with five semantic categories defined: figure, halo, backlight, background, and lotus. These categories capture the structural and symbolic elements commonly found in Thangka compositions. We deliberately include rare styles and historically degraded samples (e.g., pigment loss, cracks, fading) to better reflect real-world cultural heritage scenarios and to evaluate robustness. Most images are also accompanied by textual descriptions, which offer rich semantic context and support multi-modal modeling. The segmentation task is formulated as a standard supervised learning problem. With its scale and diversity, the dataset provides a strong foundation for training and evaluating models under complex, real-world conditions in cultural heritage image analysis.

To further assess the generalization ability of our method in broader artistic domains, we also incorporate the ArtBench dataset as a supplementary benchmark. ArtBench is a publicly available dataset for semantic segmentation in artistic paintings, featuring a variety of art styles and compositions. It serves as a valuable reference for evaluating segmentation performance beyond the Thangka domain.

To improve robustness and generalization, we design a data augmentation pipeline that combines general-purpose and domain-specific strategies. The general augmentations include random grayscale conversion, color jittering, Gaussian blur, random occlusion, horizontal flipping, scaling, and rotation. These operations simulate common variations such as lighting changes, sensor noise, and viewpoint diversity. In addition, we introduce augmentations tailored to the unique characteristics of Thangka images. Since many historical works suffer from degradation (e.g., pigment loss, stains, and cracks), we simulate such patterns during training. Moreover, to account for domain shifts in background tones across different artistic schools, we employ CycleGAN-based style transfer to convert complex colorful backgrounds into simplified variants such as pure red, black, or blue. Finally, we apply a multi-scale cropping and pasting strategy, where resized patches are reinserted into the original image to enrich spatial hierarchies and enhance foreground–background separation. These tailored augmentations significantly improve the model’s ability to handle the visual complexity and degradation present in Thangka art.
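The multi-scale cropping and pasting step can be sketched as below. This is a simplified, dependency-free illustration rather than the authors' pipeline: images are single-channel grids stored as lists of rows, resizing uses nearest-neighbor sampling, and the crop size and scale factors are hypothetical. The key point it demonstrates is that the image and its label mask must receive exactly the same operation so the pixel-level annotation stays aligned.

```python
import random


def resize_nearest(grid, new_h, new_w):
    """Nearest-neighbor resize of a 2D grid."""
    h, w = len(grid), len(grid[0])
    return [[grid[r * h // new_h][c * w // new_w] for c in range(new_w)]
            for r in range(new_h)]


def crop_resize_paste(image, mask, rng, crop=4, scales=(0.5, 1.0, 1.5)):
    """Cut a square patch, rescale it, and paste it back at a random location.

    The same crop, scale, and paste position are used for the image and the
    mask. Requires crop <= min(height, width).
    """
    h, w = len(image), len(image[0])
    top, left = rng.randrange(h - crop + 1), rng.randrange(w - crop + 1)
    size = max(1, min(h, w, round(crop * rng.choice(scales))))
    out_img = [row[:] for row in image]   # copies, so inputs stay untouched
    out_msk = [row[:] for row in mask]
    patch_img = resize_nearest([r[left:left + crop] for r in image[top:top + crop]], size, size)
    patch_msk = resize_nearest([r[left:left + crop] for r in mask[top:top + crop]], size, size)
    y, x = rng.randrange(h - size + 1), rng.randrange(w - size + 1)
    for i in range(size):
        out_img[y + i][x:x + size] = patch_img[i]
        out_msk[y + i][x:x + size] = patch_msk[i]
    return out_img, out_msk
```

Passing an explicit `random.Random` instance keeps the augmentation reproducible, which helps when comparing ablation runs.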

4.4. Comparative Experiments

To comprehensively validate the effectiveness and generalization capability of our method, we compare it with several representative segmentation approaches, including diffusion-based baselines (SegDiff [56] and MedSegDiffv2 [46]), as well as support-guided or prompt-based paradigms such as Painter [57], SegGPT [58], Matcher [59], and VLP-SAM [60]. The evaluation is conducted on two representative datasets: our domain-specific Thangka dataset and the public art-oriented benchmark ArtBench.

We adopt standard segmentation evaluation metrics, mIoU, Dice coefficient, and mAcc, to provide a holistic assessment of each model’s performance. As shown in Table 1, our method consistently achieves the best results across all metrics and datasets. On the Thangka dataset, our model achieves 88.3% mIoU, 94.2% Dice, and 90.0% mAcc, outperforming the strongest baseline (VLP-SAM) by 6.1, 4.9, and 4.7 percentage points, respectively. On the ArtBench dataset, our model achieves 86.1% mIoU, 92.4% Dice, and 87.4% mAcc, again surpassing all comparison methods by a clear margin. These results validate the robustness and generalizability of our segmentation framework, especially in the challenging context of complex artistic images.

To further investigate model behavior, we report per-class IoU and macro-AUC scores on the Thangka dataset in Table 2. This finer-grained evaluation provides two key insights. First, our method achieves the most pronounced gains in challenging categories such as halo and lotus (improvements of +8.1 and +8.9 IoU over the strongest baseline, respectively), confirming that SPIRIT is particularly effective in handling fine-grained and structurally complex regions. Background segmentation remains comparable to the baseline, which demonstrates that the improvements mainly arise from addressing difficult symbolic components rather than easier classes. Second, the additional AUC metric, computed from pixel-wise probability maps, further validates the effectiveness of our approach. Our method achieves the highest macro-AUC (0.962), indicating that it not only produces accurate masks after thresholding, but also maintains strong discriminative capability across varying decision thresholds. Together, these results reinforce the robustness of SPIRIT both in overall segmentation performance and in fine-grained, classification-oriented evaluation.
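For readers unfamiliar with the macro-AUC metric, the following sketch computes it from per-pixel class probabilities. It assumes a one-vs-rest formulation averaged over classes, with each binary AUC obtained from the rank-sum (Mann-Whitney) statistic; the paper does not state which averaging convention it uses, so this is one plausible reading rather than the authors' evaluation code.

```python
def binary_auc(scores, labels):
    """AUC via the rank-sum statistic, using average ranks for tied scores."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1            # average 1-based rank of the tie group
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    rank_sum = sum(r for r, y in zip(ranks, labels) if y == 1)
    return (rank_sum - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


def macro_auc(prob_maps, target, num_classes):
    """Mean one-vs-rest AUC over classes that have both positives and negatives.

    prob_maps: one probability vector per pixel; target: one label per pixel.
    """
    aucs = []
    for c in range(num_classes):
        labels = [1 if t == c else 0 for t in target]
        if 0 < sum(labels) < len(labels):
            aucs.append(binary_auc([p[c] for p in prob_maps], labels))
    return sum(aucs) / len(aucs)
```

Because the AUC depends only on the ranking of scores, it is unaffected by the threshold used to binarize the probability maps, which is why it complements the mask-based metrics.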

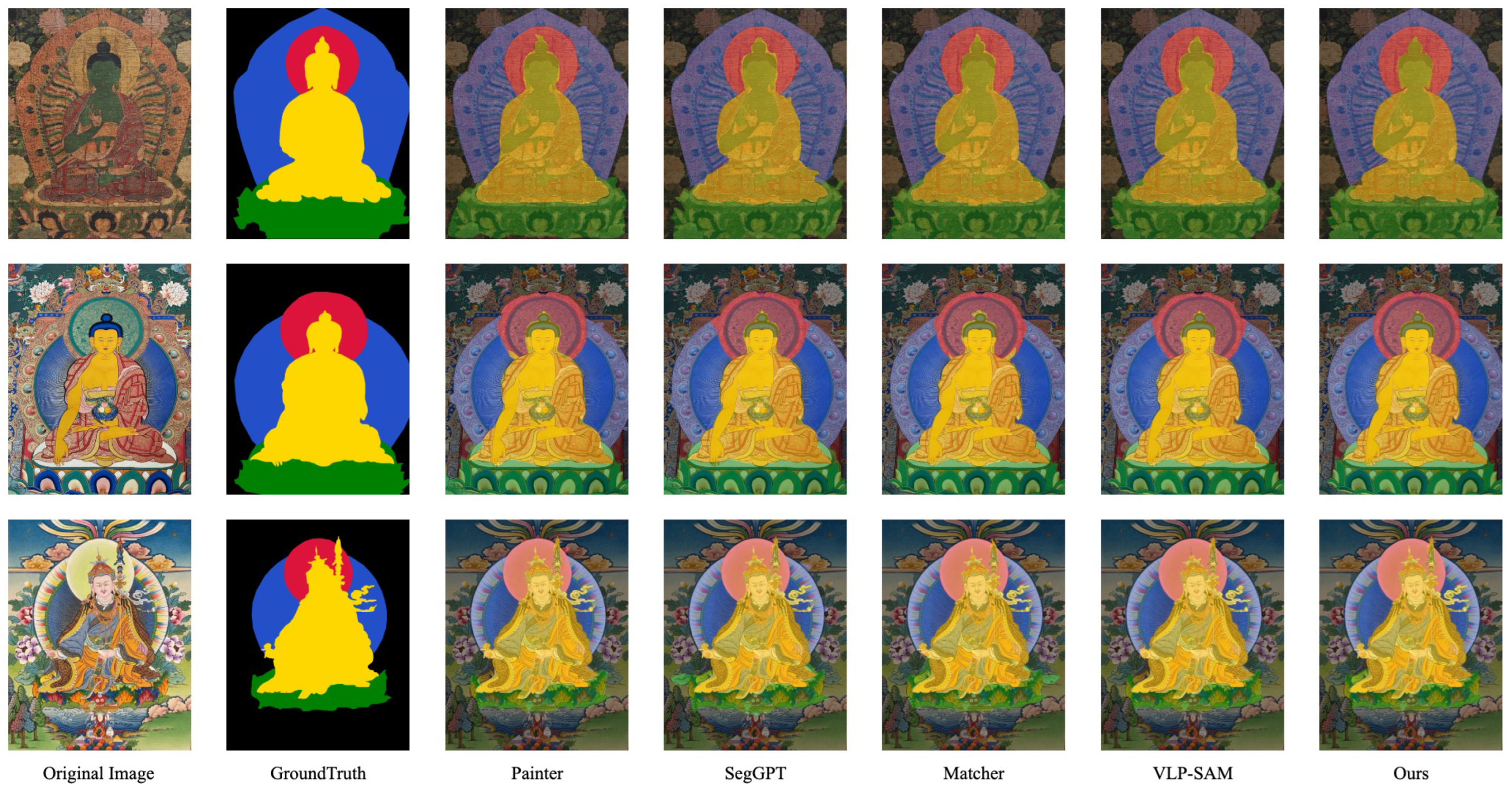

In addition to quantitative results, we provide qualitative comparisons of segmentation performance across different models, as shown in Figure 5. The examples are selected from the Thangka dataset, representing typical compositional and stylistic characteristics of Tibetan Buddhist art. Painter and SegGPT tend to produce coarse masks that fail to tightly fit object boundaries, often including background artifacts or missing fine details, especially in regions with color similarities or intricate ornaments. Matcher offers relatively sharper outlines, but still struggles with semantic confusion in complex areas. VLP-SAM improves visual alignment, but still exhibits occasional over-segmentation, particularly in regions with overlapping symbolic elements. In contrast, our method generates precise and smooth masks that are better aligned with the underlying visual structures. It preserves the integrity of the figure contours and background separation, handles ambiguous boundaries more accurately, and shows consistent semantic parsing across different compositions. Notably, our method maintains mask coherence in challenging zones such as halos, hands, and base platforms, which are often overlooked by other models.

These visual results complement the quantitative findings, demonstrating the advantage of our framework in capturing fine-grained details and structure-aware semantics in art-domain segmentation.

4.5. Ablation Study

To assess the effectiveness of our proposed modules, we conduct a series of ablation experiments, focusing on three key components: the Symbolic-Guided Attention Field (SGAF), the Symmetry-aware Affinity Module (SyAM), and the Text-guided Augmentation and Refinement module (TAR). The quantitative results are summarized in Table 3.

Starting from the baseline, which yields 77.8% mIoU and 89.3% Dice, we first analyze the impact of each module individually. Incorporating SGAF leads to a noticeable improvement of +3.2 mIoU and +2.3 Dice, confirming that integrating symbolic priors into the denoising process helps to recover fine-grained object boundaries and improves spatial awareness. When applying SyAM alone, the model gains +2.4 mIoU, showing that enforcing symmetry consistency—especially for inherently symmetric Thangka structures—enhances the overall mask coherence and reduces deformation artifacts. Adding TAR independently contributes a +3.1 mIoU gain, suggesting that leveraging external textual semantics for augmentation and refinement can enrich the representation of rare or complex patterns that are otherwise difficult to learn from visual features alone.

We then explore the joint effects of combining these modules. The integration of SGAF and SyAM achieves 82.8% mIoU, reflecting that symbolic attention and symmetry constraints are complementary in guiding the generation process. The combination of SGAF and TAR further improves performance to 83.2%, indicating that symbolic priors can be effectively reinforced by textual cues during denoising. SyAM and TAR together result in 82.5% mIoU, suggesting that structural and semantic regularities jointly provide a strong inductive bias when appearance cues are insufficient. Finally, integrating all three modules yields the best performance across all metrics: 88.3% mIoU, 94.2% Dice, 90.0% mean accuracy, and 92.6% pixel accuracy. Compared to the baseline, this configuration brings an overall improvement of +10.5 mIoU and +4.9 Dice, validating that our proposed components are mutually reinforcing and contribute to enhanced segmentation precision from semantic, structural, and generative perspectives.
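The figures quoted in this ablation discussion can be cross-checked with a few lines of arithmetic. The snippet below uses only numbers stated in the text (baseline and full-model scores, single-module gains, and pairwise mIoU values); the single-module mIoU values it prints are derived from the reported gains, not read from Table 3.

```python
baseline_miou, baseline_dice = 77.8, 89.3
full_miou, full_dice = 88.3, 94.2

# mIoU gains (in points) reported for each module added alone.
single_gain = {"SGAF": 3.2, "SyAM": 2.4, "TAR": 3.1}
single_miou = {name: round(baseline_miou + g, 1) for name, g in single_gain.items()}

# mIoU reported for each pair of modules.
pair_miou = {"SGAF+SyAM": 82.8, "SGAF+TAR": 83.2, "SyAM+TAR": 82.5}

full_gain_miou = round(full_miou - baseline_miou, 1)
full_gain_dice = round(full_dice - baseline_dice, 1)

# Every pair beats the best single module, and the full model beats every pair.
pairs_beat_singles = min(pair_miou.values()) > max(single_miou.values())
full_beats_pairs = full_miou > max(pair_miou.values())
```

The derived totals match the stated overall improvement of +10.5 mIoU and +4.9 Dice, and the ordering (single module, then pairs, then all three) is consistent with the claim that the components are complementary.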

To evaluate the contribution of our LLM-based caption rewriting and attribute extraction strategy, we conducted a dedicated ablation on the Text-guided Augmentation and Refinement (TAR) module. As reported in Table 4, both sub-components provide measurable gains over the baseline model: caption rewriting improves mIoU from 82.8% to 84.5%, while attribute extraction further increases performance to 85.1%. When both are jointly applied, the model achieves the best performance (88.3% mIoU and 94.2% Dice), confirming that rewriting enhances textual diversity whereas attribute extraction provides explicit structural cues, and their combination yields complementary benefits. This analysis verifies the individual and synergistic contributions of TAR to segmentation accuracy.

We also analyzed the robustness of the Symmetry-aware Affinity Module (SyAM) under different prior strengths, controlled by the prior weight in Equation (21). As shown in Table 4, a weak prior and a strong prior yield suboptimal results (86.0% and 86.5% mIoU, respectively) compared to the medium setting (88.3% mIoU). This trend indicates that insufficient prior weighting fails to enforce structural regularity, whereas overly strong constraints reduce flexibility in non-symmetric regions. The medium prior provides the best balance between structural guidance and adaptive fitting, confirming the robustness of SyAM in handling symmetrical yet diverse Thangka compositions.

4.6. Visual Error Analysis

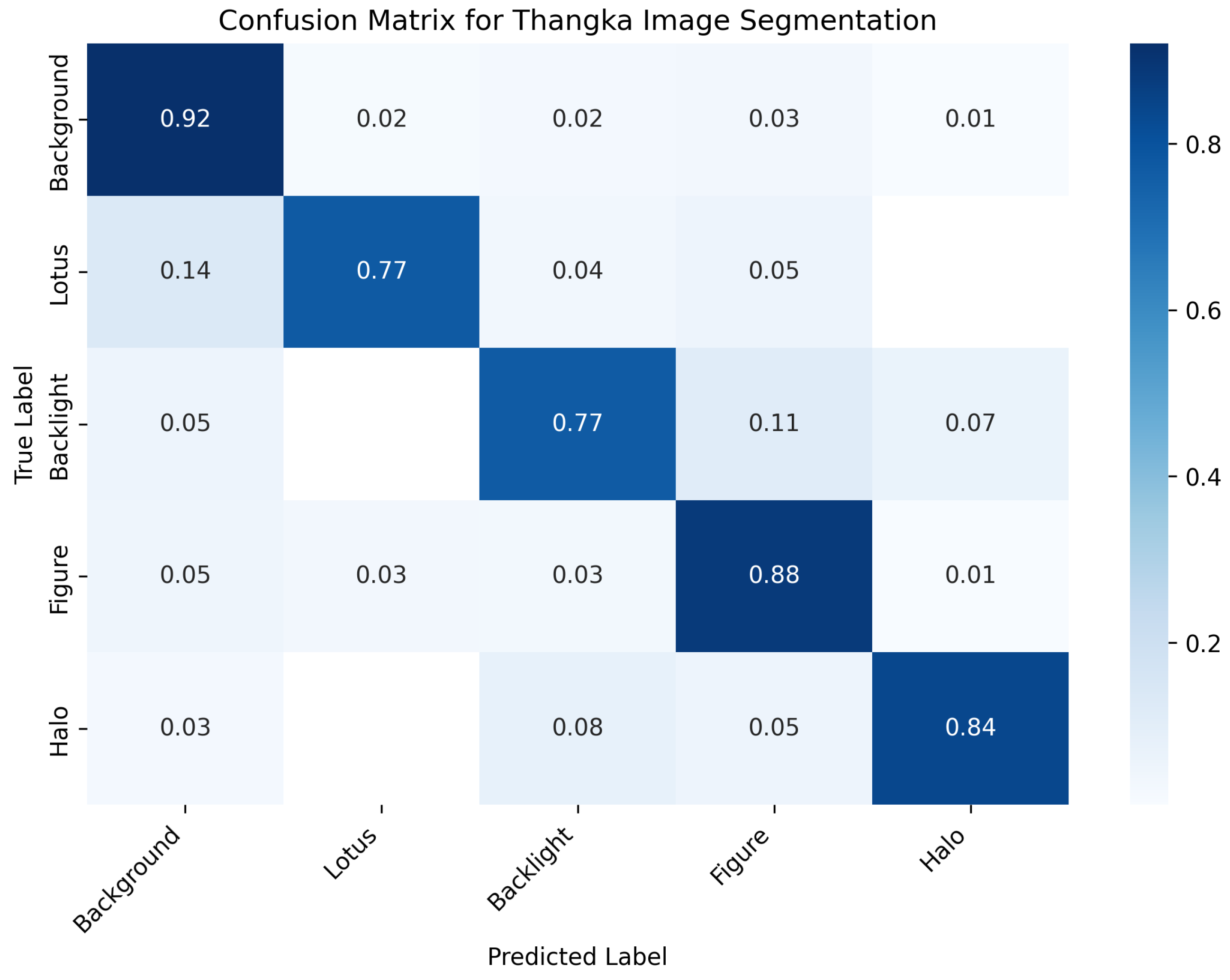

To further evaluate the performance of our segmentation model, we visualize the confusion matrix across the five semantic categories. This provides a more detailed understanding of how different classes are distinguished and where errors predominantly occur. The confusion matrix is shown in Figure 6.

From the visualization, it can be observed that the model achieves relatively high accuracy on dominant categories such as Background and Figure, as indicated by strong diagonal responses. However, confusions occur between visually similar classes, for example, Lotus and Backlight, which often share overlapping color distributions and fine-grained textures. Another common confusion appears between Halo and Backlight due to their co-occurrence in similar spatial regions around the central figure.

These misclassifications highlight the challenges in segmenting fine-grained symbolic elements in Thangka paintings. While the backbone model provides robust general recognition, improvements may be achieved by incorporating additional structural priors or relation-aware modeling to better distinguish semantically close categories.
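As a minimal sketch of how such a confusion matrix and the per-class IoU derived from it can be computed, the example below works on flattened pixel labels. The class ordering, the toy label arrays, and the function names are assumptions made for illustration; they are not the paper's data or evaluation code.

```python
# Assumed class order for illustration; the indices are hypothetical.
CLASSES = ["Background", "Figure", "Halo", "Backlight", "Lotus"]


def confusion_matrix(y_true, y_pred, num_classes):
    """cm[t][p] counts pixels of true class t predicted as class p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def per_class_iou(cm):
    """IoU per class: TP / (TP + FP + FN), read off the matrix."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                        # rest of row c
        fp = sum(cm[r][c] for r in range(n)) - tp   # rest of column c
        denom = tp + fp + fn
        ious.append(tp / denom if denom else float("nan"))
    return ious


def off_diagonal_errors(cm):
    """(true, predicted, count) for every non-zero confusion, largest first."""
    n = len(cm)
    errors = [(CLASSES[t], CLASSES[p], cm[t][p])
              for t in range(n) for p in range(n)
              if t != p and cm[t][p] > 0]
    return sorted(errors, key=lambda e: -e[2])


# Toy flattened label maps (made-up values, not experimental data).
y_true = [0, 0, 0, 0, 1, 1, 1, 2, 2, 3, 3, 3, 4, 4]
y_pred = [0, 0, 0, 0, 1, 1, 1, 2, 3, 3, 3, 4, 3, 4]

cm = confusion_matrix(y_true, y_pred, len(CLASSES))
ious = per_class_iou(cm)
errors = off_diagonal_errors(cm)

for name, value in zip(CLASSES, ious):
    print(f"{name:<10} IoU = {value:.3f}")
print("confusions:", errors)
```

In this toy case the dominant classes score an IoU of 1.0, while Halo, Backlight, and Lotus lose accuracy to one another, mirroring the kind of off-diagonal pattern described above.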

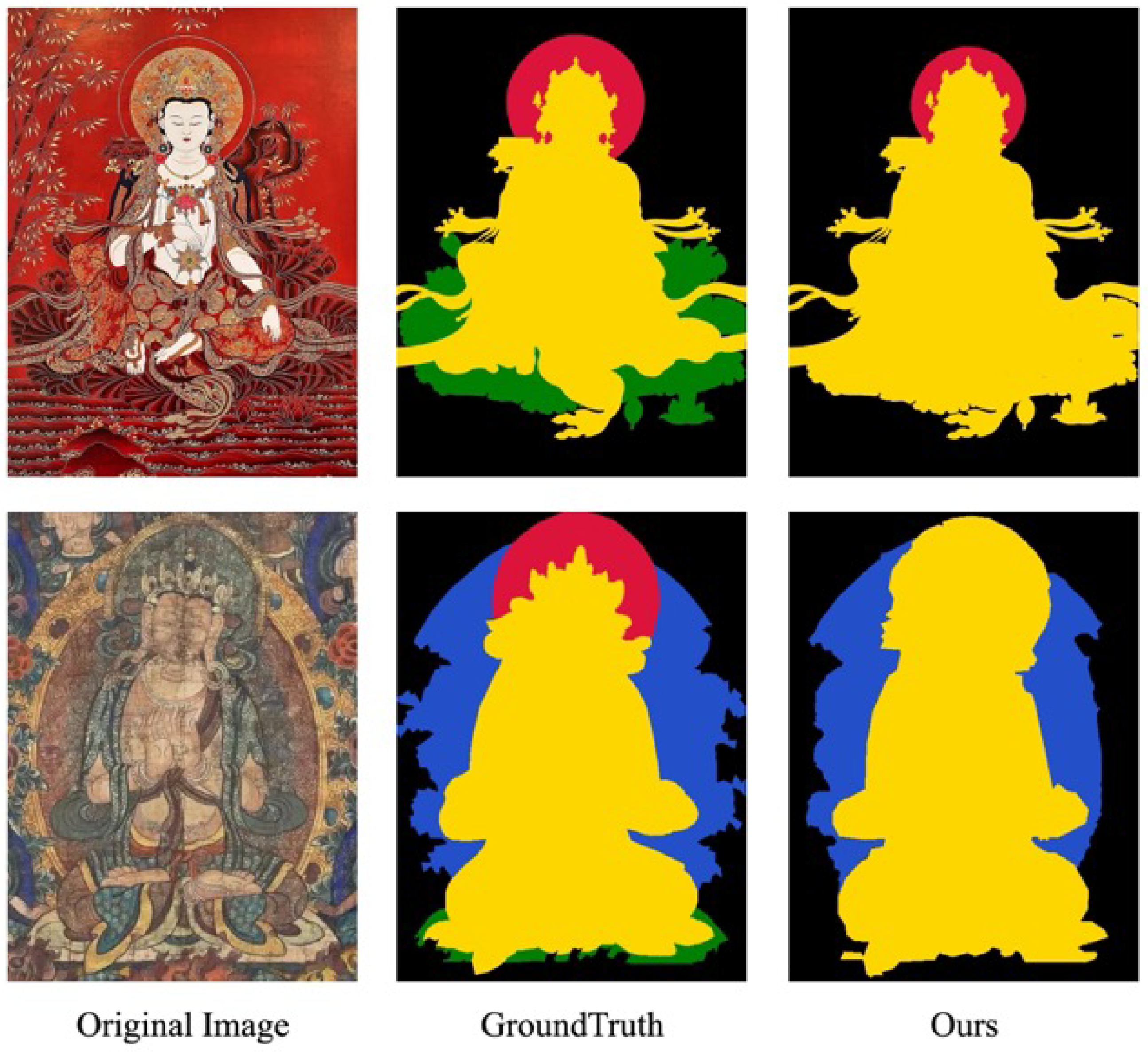

In addition to the confusion matrix analysis, we further examine specific failure cases where the model struggles with unusual or challenging Thangka styles, as illustrated in Figure 7. The upper example depicts a modern-style Thangka with highly saturated flat colors and minimal texture. Although the visual boundaries appear clear to the human eye, the lack of shading and texture cues significantly hinders the model’s ability to differentiate between adjacent semantic regions. In particular, large homogeneous areas are often misclassified as the “figure” class, especially when the color similarity between the figure and surrounding elements such as the seat or background is high. Moreover, the uniform color palette causes the model to confuse visually adjacent elements like the halo and the backlight, leading to inaccurate segmentation around the figure’s silhouette.

In contrast, the lower case shows an ancient, severely degraded Thangka image with notable pigment loss, faded details, and low contrast. In this scenario, the model faces two major challenges: first, the conventional low-level features—such as color, edges, and contrast—that typical segmentation models rely on are severely diminished; second, the artistic inconsistencies and deterioration introduce irregular deformations, occlusions, and noise. These conditions make it difficult for the model to locate precise boundaries, often resulting in misclassification between semantically close regions such as “backlight” and “figure” or between “halo” and “background”.

These two examples represent edge cases of over-simplified and extremely degraded inputs, respectively, and they highlight the limitations of the current model’s generalization ability. The first case reveals the model’s dependence on texture cues for disambiguation, while the second underscores its vulnerability to quality degradation and style variance. Addressing these issues may require incorporating style-aware augmentation strategies, degradation modeling tailored to historical art, or symmetry-based priors that can better support segmentation under such challenging conditions.

5. Conclusions

Our proposed diffusion-based segmentation framework demonstrates strong performance on both Thangka and ArtBench datasets, effectively identifying symbolic regions such as figures, halos, lotus bases, and backlights. We attribute this performance to the iterative refinement process inherent in diffusion models, which preserves structure and detail better than traditional CNN- or Transformer-based methods. By integrating minimal priors such as prompt guidance and symmetry refinement, the model achieves high accuracy without reliance on large-scale annotations. Despite these strengths, certain limitations remain.

As shown in the visual error analysis, the model performs less reliably on flat-colored modern Thangka due to a lack of internal texture cues, and on degraded historical Thangka where pigment loss and noise obscure semantic boundaries. These issues expose sensitivity to style shifts and visual degradation. Although trained with limited supervision, the model still depends on well-selected examples, which may restrict its performance in diverse real-world scenarios. Potential solutions include domain adaptation or multi-style augmentation. Furthermore, while evaluation metrics such as mAcc and mIoU provide useful indicators, they may not fully reflect perceptual correctness or cultural relevance. Minor boundary shifts can reduce scores, even when the segmentation remains visually and semantically acceptable.
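The sensitivity of overlap metrics to small boundary shifts can be illustrated with a toy calculation. The sketch below compares a square ground-truth region with the same region shifted by one pixel; the mask sizes and helper names are assumptions chosen for illustration, not values from the experiments.

```python
def square_mask(top, left, size):
    """Set of (row, col) pixels covered by a size x size square."""
    return {(r, c)
            for r in range(top, top + size)
            for c in range(left, left + size)}


def iou(a, b):
    """Intersection over union of two pixel sets."""
    union = len(a | b)
    return len(a & b) / union if union else 1.0


def dice(a, b):
    """Dice coefficient of two pixel sets."""
    total = len(a) + len(b)
    return 2 * len(a & b) / total if total else 1.0


# Large region: 20 x 20 pixels, prediction shifted right by one pixel.
large_gt = square_mask(10, 10, 20)
large_pred = square_mask(10, 11, 20)

# Small region: 5 x 5 pixels, same one-pixel shift.
small_gt = square_mask(10, 10, 5)
small_pred = square_mask(10, 11, 5)

print(f"large: IoU={iou(large_gt, large_pred):.3f}, Dice={dice(large_gt, large_pred):.3f}")
print(f"small: IoU={iou(small_gt, small_pred):.3f}, Dice={dice(small_gt, small_pred):.3f}")
```

The same one-pixel offset costs the large region roughly 10 IoU points and the small region about 33, so fine-grained elements are penalized far more heavily than their visual quality might suggest.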

Future work may consider incorporating expert-informed metrics to better capture the nuanced requirements of heritage segmentation tasks. In addition, we plan to investigate texture-invariant feature extractors and degradation-robust priors, which can reduce the dependence on fine-grained surface textures and improve segmentation under style variations or deterioration. Incorporating multi-scale frequency-domain cues such as wavelet or Fourier representations may further strengthen robustness against pigment loss and noise. Overall, our results confirm the potential of diffusion models in this domain while pointing toward directions for enhancing robustness, generalization, and interpretability.