Abstract

This paper addresses the NP-hard rank minimization problem in Robust Principal Component Analysis (RPCA) by proposing a nonconvex fractional regularization approximation of the rank. Compared with existing convex regularization (which often yields suboptimal solutions) and nonconvex regularization (which typically requires parameter selection), the proposed model avoids parameter selection while preserving scale invariance. By introducing an auxiliary variable, we transform the problem into a nonconvex optimization problem with a separable structure. We solve it with a more flexible Symmetric Alternating Direction Method of Multipliers (SADMM) and provide a rigorous convergence proof. In numerical experiments on synthetic data, image recovery, and foreground–background separation for surveillance video, the proposed fractional regularization model achieves high computational accuracy, and its performance is comparable to that of many state-of-the-art algorithms.

MSC: 49M37; 65K05; 90C26

1. Introduction

Over the past few decades, Robust Principal Component Analysis (RPCA) has become a crucial analytical tool and has been widely applied in various fields (see references [1,2,3,4,5,6,7]) to reconstruct a low-rank matrix X and a sparse matrix Y from an observed data matrix M. In practical applications, M typically represents a high-dimensional dataset such as surveillance videos, recognized images, or text documents; X denotes the primary structural components of the data (e.g., static backgrounds in surveillance footage, texture features in images, or common vocabulary across documents); and Y represents anomalous components (such as moving objects in videos, image noise, or keywords distinguishing different documents). Given the low-rank and sparse nature of the underlying data, RPCA is mathematically formulated as the following model:

$$\min_{X,Y}\ \operatorname{rank}(X) + \lambda \|Y\|_0 \quad \text{s.t.} \quad X + Y = M, \tag{1}$$

where $\operatorname{rank}(\cdot)$ (resp., $\|\cdot\|_0$) denotes the rank (resp., the number of non-zero entries) of a matrix, and $\lambda > 0$ is a trade-off parameter. However, because the rank and $\|\cdot\|_0$ functions are discontinuous, problem (1) is NP-hard.

1.1. Related Work

In recent years, theoretical analysis and algorithm design for problem (1) have attracted considerable research interest. Most studies focus on convex and nonconvex relaxation strategies for RPCA. Wright et al. [8] conducted a systematic and comprehensive analysis of the convex relaxation of problem (1) under relatively mild assumptions. The relaxed model reads as follows:

$$\min_{X,Y}\ \|X\|_* + \lambda \|Y\|_1 \quad \text{s.t.} \quad X + Y = M, \tag{2}$$

where $\|\cdot\|_*$ represents the nuclear norm (the sum of all singular values of a matrix), which promotes the low-rank component of M, and $\|\cdot\|_1$ denotes the $\ell_1$ norm (the sum of the absolute values of all matrix entries), which encourages the sparse component of M.
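As a small numerical illustration of the two terms in the convex relaxation, the following NumPy sketch evaluates the nuclear norm as the sum of singular values and the entrywise $\ell_1$ norm that is commonly used as the sparsity term in RPCA. The helper names `nuclear_norm` and `l1_norm` and the test matrices are illustrative assumptions, not part of the paper.

```python
import numpy as np

def nuclear_norm(X):
    """Sum of the singular values of X."""
    return np.linalg.svd(X, compute_uv=False).sum()

def l1_norm(Y):
    """Entrywise l1 norm: sum of the absolute values of all entries."""
    return np.abs(Y).sum()

# Rank-1 matrix whose only non-zero singular value is ||u|| * ||v|| = 3 * 5.
X = np.outer([1.0, 2.0, 2.0], [3.0, 4.0])
# Sparse matrix with two non-zero entries.
Y = np.array([[0.0, -2.0], [0.0, 0.0], [1.5, 0.0]])

print(nuclear_norm(X))
print(l1_norm(Y))
```

A low-rank matrix has few non-zero singular values and a sparse matrix has few non-zero entries, so both norms act as convex surrogates for the counting functions in problem (1).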

For practical applications, and in view of the incomplete observation characteristics of M, Tao and Yuan [9] further extended model (2), and the specific mathematical form is as follows:

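where $\Omega$ is a subset of the index set $\{1,\dots,m\}\times\{1,\dots,n\}$ representing the observable entries, and $P_\Omega$ is the orthogonal projection onto the span of matrices vanishing outside of $\Omega$, so that the $(i,j)$-th entry of $P_\Omega(X)$ is $X_{ij}$ if $(i,j)\in\Omega$ and zero otherwise.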
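The projection onto the observed entries can be sketched with a Boolean mask. This is a minimal illustration that assumes the index set is stored as a Boolean array of the same shape as the matrix; the function name `project_omega` is hypothetical.

```python
import numpy as np

def project_omega(X, mask):
    """Keep the entries of X where mask is True and set the rest to zero."""
    return np.where(mask, X, 0.0)

rng = np.random.default_rng(0)
X = rng.standard_normal((4, 5))
mask = rng.random((4, 5)) < 0.6   # True marks an observed entry

PX = project_omega(X, mask)
print(PX)
```

Applying the projection twice changes nothing, which is the defining property of an orthogonal projection and is convenient when the operator appears inside an iterative solver.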

Models (2) and (3) have a simple structure and can be solved conveniently [9]. Under the incoherence assumption, the convex model recovers the low-rank and sparse components with very high probability [1]. However, the model suffers from two limitations that restrict its applicability. First, in real-world applications, the underlying matrices may lack the necessary incoherence guarantees [1] and the data may be severely corrupted. In this scenario, the global optimal solution of the model may deviate significantly from the true value. Second, the convex model shrinks all singular values by the same amount. The nuclear norm is essentially the $\ell_1$ norm of the singular values, and the $\ell_1$ norm has a shrinkage property that leads to biased estimates [10,11]. This means that the nuclear norm over-penalizes larger singular values and may yield a solution far from the true one. To address this, a variety of nonconvex relaxations of the rank function have been proposed. For convenience, we provide the general expression for nonconvex regularization as follows (for specific details, please refer to Table 1):

where $\sigma_i(X)$ denotes the i-th singular value of matrix X.
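The shrinkage bias of the nuclear norm mentioned above can be seen in a short sketch of singular value thresholding, the proximal map of the nuclear norm used in [37]. The matrix and the threshold `tau` below are illustrative assumptions; the point is only that every singular value is reduced by the same amount, regardless of its size.

```python
import numpy as np

def svt(M, tau):
    """Singular value thresholding: shrink each singular value of M by tau."""
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    s_shrunk = np.maximum(s - tau, 0.0)
    return (U * s_shrunk) @ Vt, s, s_shrunk

M = np.diag([10.0, 5.0, 0.5])
X_hat, s, s_shrunk = svt(M, tau=1.0)
print(s)         # singular values of M
print(s_shrunk)  # each one reduced by tau, values below tau set to zero
```

The large singular values, which carry most of the structure, lose as much as the small ones. Nonconvex surrogates aim to penalize large singular values less.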

Table 1.

Nonconvex regularized functions.

Numerous experimental results reveal that, with proper parameter settings, model (4) can often achieve superior performance compared to the nuclear norm. However, tuning the parameters of nonconvex approximations is computationally time-consuming, and, on the theoretical side, a uniform criterion for optimal parameter selection is lacking. A natural question therefore arises: is it possible to find a nonconvex model that avoids parameter estimation?

Fortunately, the fractional model (Nuclear/Frobenius, N/F) can fill this research gap. On the one hand, it avoids the time spent on parameter selection during computation. On the other hand, the fractional structure is inherently scale-invariant, which also distinguishes it from other nonconvex models. Moreover, Gao et al. [20] demonstrated the significant advantage of the fractional model in approximating the rank function through a concrete example. It should be emphasized that, when applied to vectors, the fractional model reduces to the $\ell_1/\ell_2$ model, whose applications have been explored in several references [21,22,23,24,25,26,27] and are not discussed in detail in this paper.
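The scale invariance of the Nuclear/Frobenius ratio, and its link to the rank, can be checked numerically. The sketch below relies on the standard inequality $1 \le \|X\|_*/\|X\|_F \le \sqrt{\operatorname{rank}(X)}$, which follows from the Cauchy–Schwarz inequality applied to the singular values. The function name `nf_ratio` and the test matrix are assumptions made for illustration.

```python
import numpy as np

def nf_ratio(X):
    """Nuclear norm divided by Frobenius norm (X must be non-zero)."""
    s = np.linalg.svd(X, compute_uv=False)
    return s.sum() / np.sqrt((s ** 2).sum())

rng = np.random.default_rng(1)
r = 3
X = rng.standard_normal((20, r)) @ rng.standard_normal((r, 15))  # rank 3

ratio = nf_ratio(X)
print(ratio, nf_ratio(7.5 * X))   # the two values agree up to rounding
print(ratio ** 2 <= r)            # the squared ratio never exceeds the rank
```

Because rescaling X leaves the ratio unchanged, the model needs no parameter to compensate for the magnitude of the data, in contrast to penalties that act on the singular values directly.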

1.2. Problem Description

Inspired by the application of the fractional model to the low-rank matrix recovery problem [20], we constructed the following more general optimization model under the premise of incomplete data observation:

where $\|\cdot\|_F$ is the Frobenius norm (calculated as the square root of the sum of the squares of all matrix entries), are the balancing parameters of X and Y, is a linear operator, and is an observed vector/matrix. By penalizing the constraint into the objective function, we obtain the following penalized form of (5):

Compared with existing nonconvex models, the proposed model avoids the suboptimal solutions caused by improper parameter selection. In addition, the model approximates the rank of the matrix more closely and retains the property of scale invariance.

By introducing an auxiliary variable $Z$, we reformulate (6) as the following optimization problem with a separable structure:

Because the Alternating Direction Method of Multipliers (ADMM) is a very effective algorithmic framework for solving optimization problems with a separable structure, and inspired by [28], we adopt a more flexible Symmetric ADMM (SADMM) to solve problem (7). The iterative scheme of the algorithm is described below.

where is the Lagrange multiplier, , is the penalty parameter, and is the augmented Lagrangian function of (7) and is given as

In contrast to the classical ADMM, the SADMM used in this work not only adds an intermediate update of the multiplier but also introduces a relaxation factor. This relaxation factor improves the numerical performance of the algorithm, as validated in the subsequent numerical experiments. When the relaxation factor equals 0, the method reduces to the classical ADMM, so the SADMM is an extension of the ADMM.
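To illustrate the difference between the two schemes, the following sketch runs a symmetric ADMM on a deliberately simple convex toy problem, $\min \tfrac12\|x-a\|^2 + \mu\|z\|_1$ subject to $x - z = 0$, whose solution is the soft-thresholding of $a$. It is not the scheme (8a)–(8e) of this paper: the toy problem, the sign convention of the augmented Lagrangian, and the names `r` (intermediate multiplier factor), `s` (final multiplier factor), and `beta` (penalty parameter) are all assumptions made for illustration.

```python
import numpy as np

def soft(v, t):
    """Soft-thresholding, the proximal map of t times the l1 norm."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def symmetric_admm(a, mu, beta=1.0, r=0.5, s=1.0, iters=100):
    """Toy problem: min 0.5*||x - a||^2 + mu*||z||_1  s.t.  x - z = 0."""
    x = np.zeros_like(a)
    z = np.zeros_like(a)
    lam = np.zeros_like(a)
    for _ in range(iters):
        # x-step: minimize the augmented Lagrangian in x (closed form).
        x = (a + lam + beta * z) / (1.0 + beta)
        # Intermediate multiplier step, scaled by r (r = 0 skips it).
        lam = lam - r * beta * (x - z)
        # z-step: proximal map of the l1 term.
        z = soft(x - lam / beta, mu / beta)
        # Final multiplier step, scaled by s.
        lam = lam - s * beta * (x - z)
    return x, z

a = np.array([3.0, 0.5, -2.0])
x_sym, _ = symmetric_admm(a, mu=1.0, r=0.5)   # symmetric variant
x_cls, _ = symmetric_admm(a, mu=1.0, r=0.0)   # classical ADMM
print(x_sym, x_cls, soft(a, 1.0))
```

With `r = 0` the intermediate multiplier update disappears and the loop is the classical two-block ADMM, which mirrors the reduction described in the text. Both variants reach the same solution on this toy problem; any speed difference depends on the problem and the parameters.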

1.3. Our Contribution

The main contributions of this work are summarized as follows.

- We propose a new rank approximation model for the RPCA. Compared with the existing nonconvex models, this model not only circumvents the parameter restriction, but also exhibits superior performance in terms of approximating the rank.

- We employ the SADMM to solve the proposed model, and prove the convergence of the algorithm under mild conditions.

- We conduct experiments using both synthetic and real-world data. The experimental results demonstrate the superiority of the proposed algorithm and model.

The remainder of this paper is organized as follows. Section 2 describes the algorithmic framework and provides a detailed description of the solution to each subproblem. Section 3 introduces the preliminaries necessary for analyzing the algorithm and rigorously analyzes the convergence. Section 4 presents the numerical results of the proposed algorithm on synthetic and real data. Finally, the main conclusions are summarized in Section 5.

2. Algorithm

This section describes how to solve problem (7) with the SADMM. We detail the solution methods for the X-subproblem, Y-subproblem, and Z-subproblem in turn.

- (1) Solving the X-subproblem: With the other variables fixed at their current values, we introduce an auxiliary variable N to reformulate the X-subproblem, which is described as follows:

- (2) Solving the Y-subproblem: With the other variables fixed at their current values, the Y-subproblem is solved as follows:

- (3) Solving the Z-subproblem: With the other variables fixed at their current values, the Z-subproblem is solved as follows:

The specific algorithm implementation process is summarized in Algorithm 1.

Algorithm 1. SADMM for solving (7)

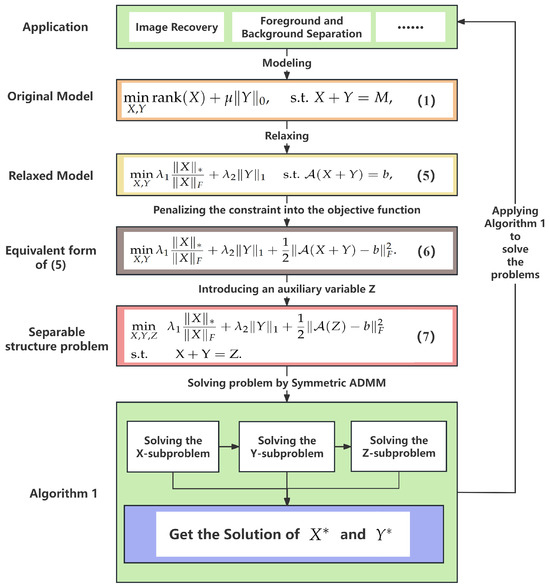

To help readers follow the overall process, the summarized algorithm block diagram is given in Figure 1.

Figure 1.

Summarized algorithm block diagram.

3. Convergence

This section mainly analyzes the convergence of the proposed algorithm. First, we provide the necessary definitions and concepts.

3.1. Preliminaries

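For an extended-real-valued function $g:\mathbb{R}^n\to(-\infty,+\infty]$, the domain of g is defined as

$$\operatorname{dom} g := \{x\in\mathbb{R}^n : g(x) < +\infty\}.$$

A function g is closed if it is lower semicontinuous and is proper if $\operatorname{dom} g \neq \emptyset$ and $g(x) > -\infty$ for any $x\in\mathbb{R}^n$. For any point $x\in\mathbb{R}^n$ and subset $S\subseteq\mathbb{R}^n$, the Euclidean distance from $x$ to S is defined by

$$\operatorname{dist}(x,S) := \inf\{\|y-x\| : y\in S\},$$

where $\|\cdot\|$ is the $\ell_2$ norm (calculated as the square root of the sum of squares of all vector entries). For a proper and closed function $g$, a vector $v$ is a subgradient of g at $x\in\operatorname{dom} g$ if $v\in\partial g(x)$, where $\partial g(x)$ denotes the subdifferential of g [29] defined by

$$\partial g(x) := \left\{v : \exists\, x^k\to x,\ g(x^k)\to g(x),\ v^k\in\hat{\partial} g(x^k),\ v^k\to v\right\},$$

with $\hat{\partial} g(x)$ being the set of regular subgradients of g at $x$:

$$\hat{\partial} g(x) := \left\{v : \liminf_{y\to x,\ y\neq x} \frac{g(y)-g(x)-\langle v, y-x\rangle}{\|y-x\|} \ge 0\right\}.$$

Note that if $f$ is continuously differentiable and $g$ is proper and lower semicontinuous, it follows from [29] that $\partial(f+g)(x) = \nabla f(x) + \partial g(x)$. A point $x^*$ is called a (limiting-) critical point or stationary point of F if it satisfies $0\in\partial F(x^*)$, and the set of critical points of F is denoted by $\operatorname{crit} F$.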
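A standard one-dimensional example, not taken from the paper, may help to separate the two notions of subgradient. Writing $\hat{\partial}$ for the set of regular subgradients and $\partial$ for the limiting subdifferential (notation assumed here), compare the convex function $g(x) = |x|$ with the nonconvex function $h(x) = -|x|$ at the origin:

```latex
% Convex case, g(x) = |x|: both sets coincide with the usual convex subdifferential.
\hat{\partial} g(0) = \partial g(0) = [-1, 1].

% Nonconvex case, h(x) = -|x|: no regular subgradient exists at 0, since
% -1 - v\,\mathrm{sign}(y) \ge 0 would have to hold for both signs of y,
% while the limits of the gradients h'(x) = \mp 1 as x \to 0^{\pm} give
\hat{\partial} h(0) = \emptyset, \qquad \partial h(0) = \{-1, 1\}.
```

Since $0\in\partial g(0)$, the origin is a critical point of $g$, whereas $0\notin\partial h(0)$ shows that the origin, a local maximizer of $h$, is not a limiting critical point of $h$.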

Definition 1.

We say that is a critical point of the augmented Lagrangian function if it satisfies

3.2. Convergence

We now analyze the convergence. From the iteration scheme (8a)–(8e), we first derive the following first-order optimality conditions for the subproblems in Algorithm 1.

Lemma 1.

Let be the sequence generated by Algorithm 1. Then, we have

Proof.

Adding (8c) and (8e), we get

and thus

Based on the optimality condition of (8a),

Similarly,

Therefore,

By the optimality condition of (8b),

Substituting (16) into the above inclusion, we get

By the optimality condition of (8d), we also obtain the following equality:

And then from (8e),

In addition,

Therefore, (15) holds. This completes the proof. □

Lemma 2.

Let be the sequence generated by Algorithm 1. For any and , we have the sequence , which is decreasing, and

where .

Proof.

From (8a),

Note that is strongly convex and, with a modulus of at least ,

where the fourth equality follows from (16), and the last inequality follows from Lemma 1 of [30]. Next, by using the definition of and (8c),

From (16),

Lemma 3.

Let be the sequence generated by Algorithm 1. If is bounded, then for any and , the whole sequence is bounded.

Proof.

From Lemma 2,

where the second equality follows from (17) and the last inequality follows from Lemma 1 of [30]. Since , we can easily see that . By using the boundedness of and nonnegativity of the last inequality of (24), we find that , , and are bounded. According to the triangle inequality , we find that is also bounded. In addition, from the boundedness of , we can derive that the linear operator is bounded. So, we have

which implies that is also bounded. Hence, is bounded. This completes the proof. □

Remark 1.

The boundedness assumption on X is required throughout the subsequent analysis. In image processing, the elements of X represent pixel values, which lie in a bounded range, so the assumption is reasonable. Some studies [20,26,27] even directly constrain X to a specific box in place of the boundedness assumption.

Lemma 4.

Let be the sequence generated by Algorithm 1. If is bounded, then for any and , there exists a positive constant m such that

Proof.

Lemma 5.

Let be the sequence generated by Algorithm 1. If is bounded, then for any and , we have

Proof.

By utilizing the Kurdyka–Łojasiewicz (KL) property [30,31] and the preceding lemmas, we can ensure that the generated sequence converges to a critical point. We summarize these results in the following theorems, whose proofs are similar to those in references [28,30,31,32,33,34,35]. Owing to limited space, the detailed proofs are omitted here.

Theorem 1.

Let be the sequence generated by Algorithm 1 and , . If is bounded, then any cluster point of the sequence is a critical point of (7).

Theorem 2.

Let be the sequence generated by Algorithm 1. If , , , and is bounded, then has finite length, i.e.,

and hence the whole sequence converges globally to a critical point of (7).

4. Numerical Results

We apply the proposed SADMM to principal component analysis experiments on synthetic data, image recovery, and background and foreground separations of surveillance videos to validate its effectiveness. For these experiments, we focus on addressing the following RPCA problem:

All code was written and run in MATLAB 2021a on a Windows 10 laptop equipped with an Intel(R) Core(TM) i7-1165G7 processor (2.80 GHz) and 16 GB of RAM. All numerical results are averages over 10 random trials.

4.1. Synthetic Data

The purpose of this subsection is to compare the numerical performance of the classical ADMM (the SADMM with the relaxation factor set to 0) and the proposed SADMM under the same experimental conditions. We mainly test the effect of different values of the relaxation factor on the numerical results.

Let be the observed matrix, where X and Y represent the low-rank and sparse components to be recovered, respectively. The dimension of M is . We generate the rank-r matrix X via , where and are randomly generated in MATLAB 2021a. The rank of X is controlled by (i.e., ), the sparsity of Y is controlled by (i.e., ), and is the ratio of sampled (observed) entries (i.e., , where is the cardinality of ). Based on , we randomly selected non-zero positions and set the elements at them to random numbers within the range to construct the sparse matrix Y. We started the process with the initial iteration values . We set the experimental parameters as , , , , and , , and the maximum iteration numbers and for the outer and inner iterations, respectively, as 500 and 5. The stopping criterion is set to in this subsection.
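The data-generation procedure described above can be sketched in NumPy as follows. The paper's experiments were run in MATLAB, and the specific sizes, rank, sparsity level, sample ratio, and amplitude range used here are hypothetical placeholders rather than the paper's settings. Gaussian factors for the low-rank part are likewise an assumption, and the function name `make_rpca_data` is illustrative.

```python
import numpy as np

def make_rpca_data(m, n, rank, sparsity, sample_ratio, amp, seed=0):
    """Return a low-rank X, a sparse Y, an observation mask, and the observed M."""
    rng = np.random.default_rng(seed)
    # Low-rank part as a product of two thin Gaussian factors.
    U = rng.standard_normal((m, rank))
    V = rng.standard_normal((n, rank))
    X = U @ V.T
    # Sparse part: a fixed number of non-zeros at random positions.
    Y = np.zeros(m * n)
    nnz = int(round(sparsity * m * n))
    support = rng.choice(m * n, size=nnz, replace=False)
    Y[support] = rng.uniform(-amp, amp, size=nnz)
    Y = Y.reshape(m, n)
    # Observation mask with a fixed number of sampled entries.
    mask = np.zeros(m * n, dtype=bool)
    n_obs = int(round(sample_ratio * m * n))
    mask[rng.choice(m * n, size=n_obs, replace=False)] = True
    mask = mask.reshape(m, n)
    # Only the sampled entries of X + Y are observed.
    M = np.where(mask, X + Y, 0.0)
    return X, Y, mask, M

X, Y, mask, M = make_rpca_data(60, 50, rank=3, sparsity=0.05,
                               sample_ratio=0.8, amp=10.0)
print(np.linalg.matrix_rank(X), np.count_nonzero(Y), int(mask.sum()))
```

Fixing the number of non-zeros and of sampled entries, rather than drawing each entry independently, keeps the sparsity and sample ratio exact across repeated trials.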

Table 2 presents the CPU time (Time), the iteration number (Iter.), and the relative error (RE:=, where is the recovered matrix) for different values of the relaxation factor. The numerical results reveal that delivers the optimal performance while maintaining a relatively stable relative error.

Table 2.

The iteration time and number of iterations corresponding to different values.

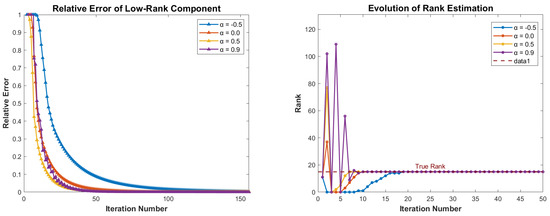

We further investigated the convergence properties of the SADMM. Figure 2 shows the evolution of the relative error and the rank of low-rank matrix X as the number of iterations increases, for parameters , respectively. Observing the left side of Figure 2, it is evident that when , the SADMM exhibits a faster convergence rate compared to the traditional ADMM. From the right side of Figure 2, we can conclude that, during the initial few iterations, the rank changes caused by are more pronounced than ; however, can more quickly reach the true low-rank state, and the low-rank characteristics remain stable throughout the iteration process.

Figure 2.

Evolutions of the relative error of low-rank (left) and rank estimation (right) for , , , and .

Based on the above results, it can be observed that the SADMM achieves its best performance when . To further evaluate the advantage of the proposed model, we solve it with the SADMM with the parameter set to (the optimal parameter) and apply it to the subsequent image processing and video surveillance experiments.

4.2. Image Recovery

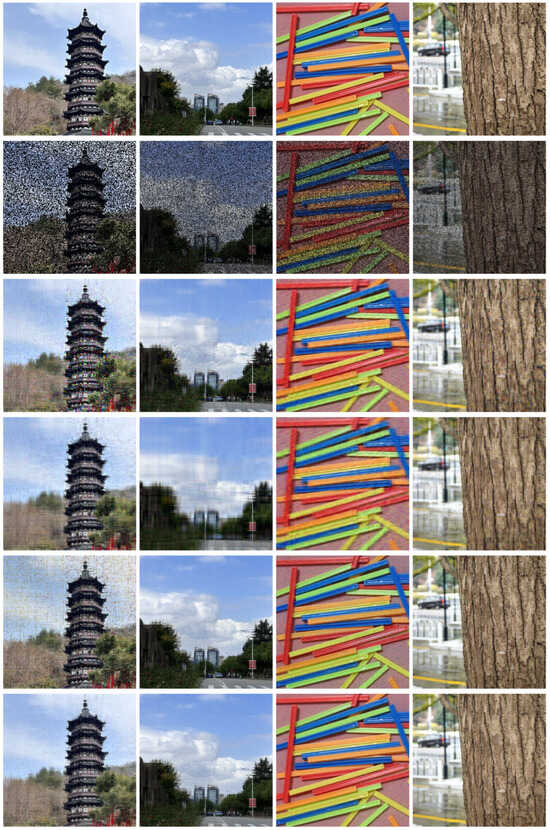

In this subsection, we evaluate the effectiveness of the SADMM on the recovery of real images. In practice, an original image is usually not exactly low-rank, but its essential information is dominated by its largest singular values, so it can be treated as approximately low-rank. The observed image is often damaged or obscured, so some of its entries are lost. That is, the observed matrix M can be approximately regarded as the sum of a low-rank matrix X and a sparse noise matrix Y. The purpose of this subsection is therefore to recover the low-rank part from the partially damaged image, which is a matrix completion problem. We compare the SADMM with three other classical algorithms used for matrix completion problems: the Accelerated Iteratively Reweighted Nuclear Norm algorithm (AIRNN) [36], the Singular Value Thresholding algorithm (SVT) [37], and the Schatten Capped p norm minimization method (SCp) [16]. In this experiment, the parameters in the SADMM were set as , , , , and , . The parameters in AIRNN, SVT, and SCp were all set to the optimal parameters used in the corresponding literature, and .

Figure 3 presents four color images captured by our team: “Tower”, “Sky”, “Spillikins”, and “Texture”. We simulated partial image damage by randomly removing 50% of the real data (for color images, each channel was processed separately and then averaged). Figure 3 also shows the performance of different algorithms in recovering damaged images.

Figure 3.

Image restoration results under 50% random pixel loss using different methods. Scene contains Tower, Sky, Spillikins, and Texture. From top to bottom: Original, Damaged, AIRNN, SVT, SCp, SADMM.

To further evaluate the performance of the SADMM and the proposed model, Table 3 lists the numerical results of the four algorithms, AIRNN, SVT, SCp, and SADMM, including the Signal-to-Noise Ratio (SNR) and the relative error (RE) between the original image X and the recovered image. The SNR was calculated with the following formula:

where is the mean of the original data.
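The two quality measures can be computed as in the sketch below. The exact formulas are not recoverable from the text here, so this block assumes two commonly used definitions: the relative error $\|\hat{X}-X\|_F/\|X\|_F$ and the SNR $10\log_{10}\big(\|X-\bar{X}\|_F^2/\|\hat{X}-X\|_F^2\big)$ in decibels, where $\bar{X}$ is the mean of the original data. The function names are illustrative.

```python
import numpy as np

def relative_error(X_hat, X):
    """Frobenius-norm relative error of the recovered matrix (assumed definition)."""
    return np.linalg.norm(X_hat - X) / np.linalg.norm(X)

def snr_db(X_hat, X):
    """SNR in decibels, measured against the mean-centered original (assumed definition)."""
    signal = np.linalg.norm(X - X.mean()) ** 2
    noise = np.linalg.norm(X_hat - X) ** 2
    return 10.0 * np.log10(signal / noise)

X = np.array([[1.0, 2.0], [3.0, 4.0]])
X_hat = X + 0.1   # a recovery with a constant error of 0.1 per entry
print(relative_error(X_hat, X), snr_db(X_hat, X))
```

A smaller relative error and a larger SNR both indicate a better recovery, so the two measures should move in opposite directions when methods are compared.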

Table 3.

Numerical results of different algorithms on various images.

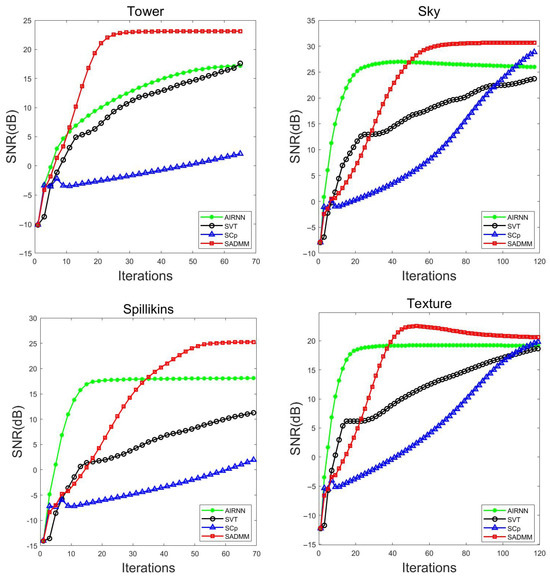

The numerical results in Table 3 show that the SADMM demonstrated better recovery performance when solving the proposed model. Figure 4 further shows the evolution tendency of the SNR values of the four methods as the number of iterations increased. From Figure 4, it can be observed that, under the same number of iterations, the proposed SADMM achieved better image recovery results than the other three algorithms.

Figure 4.

Evolution of SNR values as the number of iterations increases.

4.3. Foreground and Background Separation in Surveillance Video

In this subsection, we apply the proposed algorithm to the problem of separating the foreground and background in surveillance video, which is a typical RPCA problem. We test the algorithm using two surveillance video datasets: restaurant and shopping mall surveillance videos. They can be obtained from https://hqcai.org/datasets/restaurant.mat (accessed on 13 July 2025) and https://hqcai.org/datasets/shoppingmall.mat (accessed on 13 July 2025) of [38]. The details of these videos, including the resolution and the number of frames, are shown in Table 4, where each column of the matrix corresponds to a frame of the video. The index set of missing information is randomly determined based on the specified sample ratio . In the following experiment, we set , which means that of the information in the test video was randomly removed.
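The arrangement in which each column of the data matrix holds one video frame can be sketched as follows. The frame size and frame count are small hypothetical values chosen for illustration, and the helper names are not from the paper. The example also shows why a static background gives a low-rank matrix: identical frames produce identical columns.

```python
import numpy as np

def frames_to_matrix(frames):
    """Stack (num_frames, height, width) frames as columns of a (height*width, num_frames) matrix."""
    num_frames = frames.shape[0]
    return frames.reshape(num_frames, -1).T

def matrix_to_frames(M, height, width):
    """Inverse of frames_to_matrix: turn each column back into a frame."""
    return M.T.reshape(-1, height, width)

rng = np.random.default_rng(0)
background = rng.random((4, 6))
frames = np.repeat(background[None, :, :], 10, axis=0)  # static scene, 10 frames
M = frames_to_matrix(frames)
print(M.shape, np.linalg.matrix_rank(M))
```

Moving objects change only a small number of pixels per frame, which is why they are modeled by the sparse component while the repeated background is captured by the low-rank component.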

Table 4.

Dataset description. The video name, resolution, and the number of frames.

To further evaluate the performance of the algorithm and the accuracy of the model, we compare the SADMM with the parallel splitting augmented Lagrangian method from [39] and the Optimal Proximal Augmented Lagrangian Method (OPALM) from [40]. For clarity, the dynamic step size and constant step size strategies used in [39] are labeled Alg1d and Alg1c, respectively. The parameter settings of the SADMM are , , , , , and , respectively. The relevant parameter settings of Alg1d, Alg1c, and OPALM are the same as those in the corresponding literature, with the stopping criteria .

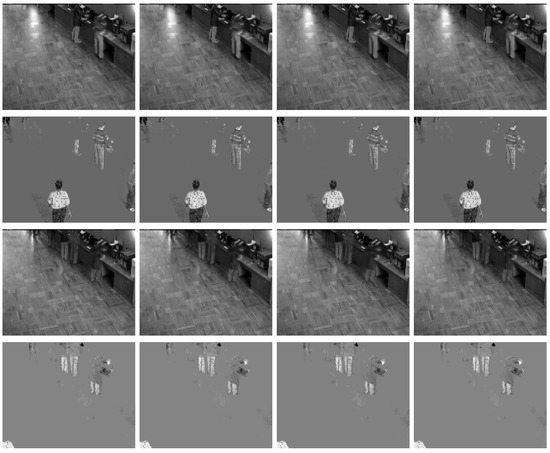

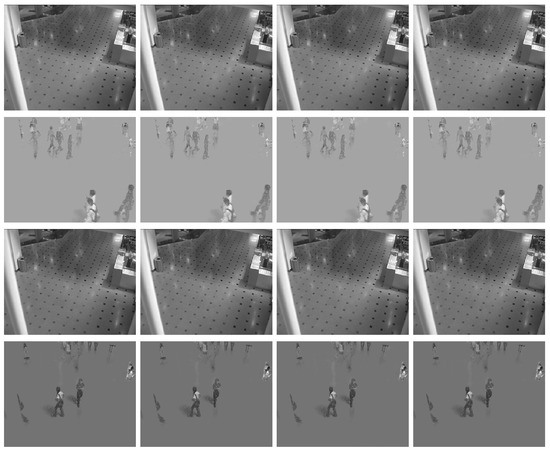

Figure 5 shows the original frames and the corrupted frames of the 150th and 200th frames in the restaurant surveillance video and the 350th and 400th frames in the shopping mall surveillance video.

Figure 5.

The 150th and 200th frames in the restaurant surveillance video and the 350th and 400th frames in the shopping mall surveillance video, showing the original frames (top row) and the corrupted frames (bottom row).

Figure 6 shows the results of different algorithms separating the foreground and background images from a video sequence. The images displayed in Figure 6 are selected from the 150th and 200th frames of the restaurant video and the 350th and 400th frames of the shopping mall video. Table 5 provides a detailed list of the rank of the low-rank matrix (denoted as rank()), the sparsity of the sparse matrix (denoted as ), and the relative error (denoted as ) for the reconstructed matrices using different methods. According to the data presented in Table 5, it can be observed that, at the end of the iteration process, the ranks of the low-rank matrices obtained by each algorithm remain consistent, and the differences in the sparsity of sparse matrices are minimal. In addition, the SADMM demonstrated smaller relative errors across the two datasets, further indicating that the fractional regularization model proposed in this paper achieves higher accuracy.

Figure 6.

Foreground and background separation in surveillance videos separated by the tested algorithms. Columns 1–4 correspond to the results of the Alg1d, Alg1c, OPALM, and SADMM, respectively. From top to bottom: the 150th and 200th frames of the restaurant video and the 350th and 400th frames of the shopping mall video.

Table 5.

Numerical results for background extraction on surveillance videos.

5. Conclusions

This paper proposes a nonconvex fractional regularization model to address the rank approximation problem in robust principal component analysis. A more general symmetric alternating direction method of multipliers is employed to solve it, and its convergence is proved. The numerical results also validate the effectiveness of the algorithm. However, the iterative solution of the X-subproblem limits the scalability of the algorithm. Future work will explore explicit solutions for the X-subproblem and test the model on larger-scale real-world datasets.

Author Contributions

Formal analysis and writing—original draft preparation, Z.G.; validation, X.Z. and S.Z.; writing—review and editing, S.Z. and Y.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (12471290, 120081), Suqian Sci&Tech Program (M202206), Open Fund of the Key Laboratory of NSLSCS, Ministry of Education and Qing Lan Project.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Candès, E.J.; Li, X.D.; Ma, Y.; Wright, J. Robust principal component analysis? J. ACM 2011, 58, 11.

- Huang, Y.; Wang, Z.; Chen, Q.; Chen, W. Robust principal component analysis via truncated L1−2 minimization. In Proceedings of the 2023 International Joint Conference on Neural Networks, Gold Coast, Australia, 18–23 June 2023; pp. 1–9.

- Bian, J.T.; Zhao, D.D.; Nie, F.P.; Wang, R.; Li, X.L. Robust and sparse principal component analysis with adaptive loss minimization for feature selection. IEEE T. Neur. Net. Lear. 2022, 35, 3601–3614.

- Wang, Q.S.; Han, D.R.; Zhang, W.X. A customized inertial proximal alternating minimization for SVD-free robust principal component analysis. Optimization 2023, 73, 2387–2412.

- Shen, Y.; Xu, H.Y.; Liu, X. An alternating minimization method for robust principal component analysis. Optim. Method Softw. 2018, 34, 1251–1276.

- Zhuang, S.T.; Wang, Q.W.; Chen, J.F. Dual graph Laplacian RPCA method for face recognition based on anchor points. Symmetry 2025, 17, 691.

- Zhang, W.T.; Chen, X.H. Robust discriminative non-negative and symmetric low-rank projection learning for feature extraction. Symmetry 2025, 17, 307.

- Wright, J.; Peng, Y.G.; Ma, Y.; Ganesh, A.; Rao, S. Robust principal component analysis: Exact recovery of corrupted low-rank matrices via convex optimization. In Proceedings of the 23rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 7–10 December 2009; pp. 2080–2088. Available online: https://dl.acm.org/doi/abs/10.5555/2984093.2984326 (accessed on 13 July 2025).

- Tao, M.; Yuan, X.M. Recovering low-rank and sparse components of matrices from incomplete and noisy observations. SIAM J. Optim. 2011, 21, 57–81.

- Fan, J.Q.; Li, R.Z. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 2001, 96, 1348–1360.

- Zhang, C.H. Nearly unbiased variable selection under minimax concave penalty. Ann. Stat. 2010, 38, 894–942.

- Zhang, T. Analysis of multi-stage convex relaxation for sparse regularization. J. Mach. Learn. Res. 2010, 11, 1081–1107. Available online: https://dl.acm.org/doi/10.5555/1756006.1756041 (accessed on 13 July 2025).

- Candès, E.J.; Wakin, M.B.; Boyd, S.P. Enhancing sparsity by reweighted ℓ1 minimization. J. Fourier Anal. Appl. 2008, 14, 877–905.

- Friedman, J.H. Fast sparse regression and classification. Int. J. Forecasting 2012, 28, 722–738.

- Nie, F.P.; Huang, H.; Ding, C. Low-rank matrix recovery via efficient schatten p-norm minimization. In Proceedings of the 26-th AAAI Conference on Artificial Intelligence, Toronto, ON, Canada, 22–26 July 2012; pp. 655–661. Available online: https://dl.acm.org/doi/10.5555/2900728.2900822 (accessed on 13 July 2025).

- Li, X.L.; Zhang, H.Y.; Zhang, R. Matrix completion via non-convex relaxation and adaptive correlation learning. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 1981–1991.

- Gao, C.X.; Wang, N.Y.; Yu, Q.; Zhang, Z.H. A feasible nonconvex relaxation approach to feature selection. In Proceedings of the 25th AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 7–11 August 2011; pp. 356–361.

- Geman, D.; Yang, C.D. Nonlinear image recovery with half-quadratic regularization. IEEE T. Image Process. 1995, 4, 932–946.

- Trzasko, J.; Manduca, A. Highly undersampled magnetic resonance image reconstruction via homotopy ℓ0-minimization. IEEE T. Med. Imaging 2009, 28, 106–121.

- Gao, K.X.; Huang, Z.H.; Guo, L.L. Low-rank matrix recovery problem minimizing a new ratio of two norms approximating the rank function then using an ADMM-type solver with applications. J. Comput. Appl. Math. 2024, 438, 115564.

- Rahimi, Y.; Wang, C.; Dong, H.B.; Lou, Y.F. A scale-invariant approach for sparse signal recovery. SIAM J. Sci. Comput. 2019, 41, A3649–A3672.

- Wang, C.; Yan, M.; Rahimi, Y.; Lou, Y.F. Accelerated schemes for the L1/L2 minimization. IEEE Trans. Signal Process. 2020, 68, 2660–2669.

- Tao, M. Minimization of L1 over L2 for sparse signal recovery with convergence guarantee. SIAM J. Sci. Comput. 2022, 44, A770–A797.

- Hurley, N.; Rickard, S. Comparing measures of sparsity. IEEE T. Inform. Theory 2009, 55, 4723–4741.

- Yin, P.H.; Esser, E.; Xin, J. Ratio and difference of l1 and l2 norms and sparse representation with coherent dictionaries. Commun. Inf. Syst. 2014, 14, 87–109.

- Wang, C.; Tao, M.; Nagy, J.G.; Lou, Y.F. Limited-angle CT reconstruction via the L1/L2 minimization. SIAM J. Imaging Sci. 2021, 14, 749–777.

- Wang, C.; Tao, M.; Chuah, C.N.; Nagy, J.G.; Lou, Y.F. Minimizing L1 over L2 norms on the gradient. Inverse Probl. 2022, 38, 065011.

- Wu, Z.M.; Li, M.; Wang, D.Z.W.; Han, D.R. A symmetric alternating direction method of multipliers for separable nonconvex minimization problems. Asia. Pac. J. Oper. Res. 2017, 34, 1750030.

- Rockafellar, R.T.; Wets, R.J.B. Variational Analysis; Springer: Berlin/Heidelberg, Germany, 2009.

- Bolte, J.; Sabach, S.; Teboulle, M. Proximal alternating linearized minimization for nonconvex and nonsmooth problems. Math. Program. 2014, 146, 459–494.

- Attouch, H.; Bolte, J.; Svaiter, B.F. Convergence of descent methods for semi-algebraic and tame problems: Proximal algorithms, forward−backward splitting, and regularized Gauss−Seidel methods. Math. Program. 2013, 137, 91–129.

- Guo, K.; Han, D.R.; Wu, T.T. Convergence of alternating direction method for minimizing sum of two nonconvex functions with linear constraints. Int. J. Comput. Math. 2016, 94, 1653–1669.

- Ge, Z.L.; Zhang, X.; Wu, Z.M. A fast proximal iteratively reweighted nuclear norm algorithm for nonconvex low-rank matrix minimization problems. Appl. Numer. Math. 2022, 179, 66–86.

- Ge, Z.L.; Wu, Z.M.; Zhang, X.; Ni, Q. An extrapolated proximal iteratively reweighted method for nonconvex composite optimization problems. J. Glob. Optim. 2023, 86, 821–844.

- Ge, Z.L.; Zhang, S.Y.; Zhang, X.; Cui, Y. A new proximal iteratively reweighted nuclear norm method for nonconvex nonsmooth optimization problems. Mathematics 2025, 13, 2630.

- Phan, D.N.; Nguyen, T.N. An accelerated IRNN-Iteratively Reweighted Nuclear Norm algorithm for nonconvex nonsmooth low-rank minimization problems. J. Comput. Appl. Math. 2021, 396, 113602.

- Cai, J.F.; Candès, E.J.; Shen, Z.W. A singular value thresholding algorithm for matrix completion. SIAM J. Optim. 2010, 20, 1956–1982.

- Giampouras, P.; Cai, H.Q.; Vidal, R. Guarantees of a preconditioned subgradient algorithm for overparameterized asymmetric low-rank matrix recovery. arXiv 2025, arXiv:2410.16826.

- Jiang, F.; Lu, B.Y.; Wu, Z.M. Revisiting parallel splitting augmented Lagrangian method: Tight convergence and ergodic convergence rate. CSIAM T. Appl. Math. 2024, 5, 884–913.

- He, B.S.; Ma, F.; Yuan, X.M. Optimal proximal augmented Lagrangian method and its application to full Jacobian splitting for multi-block separable convex minimization problems. IMA J. Numer. Anal. 2020, 40, 1188–1216.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).