Abstract

The pathfinder algorithm (PFA) starts from a randomly generated initial population and divides the search into only two phases, a pathfinder phase and a follower phase. This design often leads to poor solution accuracy, slow convergence, and susceptibility to local optima. To address these issues, this paper proposes a symmetry-enhanced, improved pathfinder algorithm based on multi-strategy fusion (IPFA) for function optimization and engineering optimization problems. First, an elite opposition-based learning mechanism is incorporated to improve population diversity and quality, and thus the solution accuracy of the algorithm; second, to accelerate convergence, the escape energy factor is embedded into the prey-hunting phase of the grey wolf optimizer (GWO), which replaces the follower phase of the PFA and improves both the diversity and the search efficiency of the algorithm; lastly, to reduce the risk of falling into a local optimum, the position of the optimal individual is perturbed using a dimension-by-dimension mutation based on the t-distribution, which helps the individual leave the local optimum quickly and move toward other regions. The IPFA is tested on 16 classical benchmark test functions and 29 complex CEC2017 functions. In the pressure vessel design problem, the final optimization results of the PFA and IPFA are 5984.8222 and 5948.3597, respectively; in the tension spring design problem, they are 0.012719 and 0.012699, respectively. A comparison with the original algorithm and other algorithms shows that the IPFA is significantly improved in terms of solution accuracy, and the lower engineering cost further verifies the robustness of the IPFA.

1. Introduction

In recent years, the application of intelligent optimization algorithms in engineering, health, and economics has been growing, specifically in problems such as image segmentation [1], intelligent transport systems [2], optimal scheduling [3], and path planning [4], among others [5,6,7,8,9]. Intelligent optimization algorithms are largely derived from the behavioral and hunting patterns of organisms in the natural world. By studying the collective behavior of organisms or physical phenomena, scholars have proposed algorithms such as particle swarm optimization (PSO) [10], differential evolution (DE) [11], the whale optimization algorithm (WOA) [12], the multi-verse optimizer (MVO) [13], and the sine cosine algorithm (SCA) [14]. In contrast to conventional optimization algorithms, these algorithms have fewer parameters, simpler principles, and better robustness, and they have been applied successfully across diverse scientific research domains.

The pathfinder algorithm (PFA) [15], a swarm intelligence optimization algorithm, was introduced by Yapici et al. in 2019. To search for the best solutions, the algorithm simulates the processes of natural selection and biological evolution. The algorithm stands out for its adaptive parameter settings, diverse exploration strategies, robustness, and high efficiency. It has found widespread applications in various domains, including machine learning, signal processing, and image processing. However, the PFA is not without its challenges, including slow convergence, suboptimal solution accuracy, and susceptibility to local optima.

Sonali Priyadarshani et al. [16] employed the PFA to determine optimal parameters for the FOTID controller. This application aimed to enhance the generation control performance of multi-source power systems by integrating PFA with the AGC system and FOTID controller. Varaprasad Janamala [17] utilized the PFA to simulate the path search process for optimizing the configuration scheme of a solar PV system. The objective was to enhance the resilience and recovery of the system. Eid A. Gouda et al. [18] conducted an analysis and evaluation of the performance of fuel cells under dynamic loads and varying environments. They achieved this by applying the PFA to a fuel cell system. The study demonstrated how the fuel cell system adapts to diverse operating conditions and load demands, and further proposed improvement and optimization strategies. Zhi Yuan et al. [19] optimized the speed trajectory of a fuel cell-based locomotive by employing an enhanced PFA to achieve optimal hydrogen consumption.

To address the issue of low solution accuracy in the PFA, Tang [20] introduced a wizard mechanism to enhance the algorithm’s performance. Pathfinder individuals equipped with the wizard mechanism collect valuable information from their surroundings and share this information with followers. Additionally, a novel variation probability, pcR, is defined to improve the algorithm’s capability to escape local optima. Another study [21] integrated the teaching-learning algorithm with the PFA and introduced an exponential step operator to optimize individual followers during the follower phase. This enhancement notably increased the overall optimization accuracy and convergence speed of the algorithm. However, it did not completely address the overly restricted search range that results from the random distribution of the initial population. Because the pathfinder is a pivotal entity in the PFA, Hu Rong [22] introduced a distance-based selection mechanism to broaden the search range of the pathfinder. Additionally, a self-learning search strategy was devised to perform a multi-neighborhood search on the updated pathfinder individuals, thereby enhancing the algorithm’s capability for local exploration. However, this approach does not consider the impact on the follower group when pathfinder individuals encounter local optima. Lu Miao [23] enhanced the optimization performance of the grey wolf optimization algorithm by leveraging the distinctive updating pattern of the pathfinder and followers within the PFA. This fusion resulted in an optimization algorithm with high convergence accuracy. However, the inherent low optimization accuracy of the PFA itself remained unresolved. In the work by Sun Zhezhong [24], a dynamic opposition-based learning strategy was employed to enhance the quality of the initial population.
A novel leapfrog archive was introduced for preserving and generating new optimal individuals, guiding individuals trapped in local optima to escape their current positions. Furthermore, a two-jump model was proposed to harmonize the algorithm’s global search and local exploitation capability. However, this approach did not address the challenge of the PFA’s inefficiency in searching for the optimum on complex benchmark functions.

The pathfinder algorithm comprises only two stages: the pathfinder stage and the follower stage. When the pathfinder is trapped in a local region, the followers still update according to the pathfinder’s position, resulting in slow convergence and a propensity to fall into local optima. Moreover, random initialization often yields lower-quality initial solutions, thereby diminishing the algorithm’s solution accuracy. To address these challenges, this paper proposes the symmetry-enhanced, improved pathfinder algorithm based on multi-strategy fusion for engineering optimization problems (IPFA), which integrates multiple strategies. First, an elite opposition-based learning mechanism injects learned superior individuals into the initial stage of the pathfinder algorithm. This enhances both the diversity and quality of individuals within the entire population. By selecting individuals with superior performance in the search space and introducing them into the initial population, the algorithm is prompted to explore the solution space more comprehensively, thereby improving accuracy. Next, the escape energy factor is integrated into the prey-hunting phase of the grey wolf algorithm, which replaces the follower phase in the pathfinder algorithm. This addition enables individuals to opt for escape during the search process, aiming to enhance algorithm diversity, search efficiency, and robustness, and ultimately to improve convergence speed. Finally, a dimension-by-dimension mutation perturbs the position of the optimal individual so that it can leave local optimal solutions quickly and move toward alternative regions. This strategy disrupts the local structure of the current solution through small random perturbations applied to each dimension of the optimal individual.
Consequently, it guides the search process toward novel and potentially more optimal directions, thereby reducing the likelihood of the algorithm becoming trapped in local optimality. In summary, the amalgamation of these mechanisms into the pathfinder algorithm yields a comprehensive and robust optimization framework. This framework addresses aspects such as global optimality search, diversity maintenance, escape mechanisms, and perturbation strategies, thereby enhancing the algorithm’s overall robustness and performance.

This paper’s main contributions can be summed up as follows:

- To improve the quality of the initial solutions of the PFA and enhance the accuracy of the algorithm, a population initialized by elite opposition-based learning is introduced instead of a random initial population;

- To improve the convergence speed of the PFA, the escape energy factor is embedded into the prey-hunting phase of the grey wolf algorithm, which replaces the follower phase in the pathfinder algorithm;

- To mitigate the problem of the PFA falling into a local optimum, the optimal individual position is perturbed using a t-distribution dimension-by-dimension mutation method.

The paper’s following sections are organized as follows: the second section outlines the standard pathfinder algorithm, presenting its pseudo-code and flowchart. In the third part, an enhanced pathfinder algorithm (IPFA) is introduced, amalgamating three improvements. The fourth section details the experimental simulations and result analysis, with applications to two engineering design problems. The fifth section concludes by summarizing the key findings of the paper.

2. PFA

2.1. Inspiration

The pathfinder algorithm draws inspiration from exploratory behavior in biology, particularly the behavior of animals searching for food, water, and safe shelter in unfamiliar environments. The algorithm leverages the innate exploratory instincts observed in animals, including birds during migration and insects while foraging for food.

In these biological behaviors, explorers make decisions by integrating information about the environment and internal drivers, dynamically adjusting their paths of action to secure optimal survival strategies. Taking cues from this behavioral pattern, the pathfinder algorithm relies on the cooperative efforts of multiple explorers within the search space. In this approach, each explorer continually adapts its movement direction and speed based on individual experience and information about the surrounding environment, with the ultimate goal of uncovering the globally optimal solution.

The inspiration for the pathfinder algorithm combines biological exploratory behavior with mathematical optimization algorithms aimed at finding optimal solutions in complex search spaces. The algorithm can strike a balance between local and global searches, better avoiding slipping into local optimal solutions by mimicking the behavior of explorers in unfamiliar areas. Because of this motivation, the pathfinder algorithm has the potential to be applied to a wide range of problem domains, particularly for efficient global optimization searches in situations where there is a significant degree of uncertainty and complexity in the problem’s solution space.

2.2. Mathematical Model

The population is split into two categories in the PFA: pathfinders and followers. The pathfinder is the individual with the best fitness value (the lowest value in the minimization problems considered here), while the remaining individuals are classified as followers.

In the PFA, the population consists of N individuals, each described by a position vector of dimension d. Thus, the population forms an $N \times d$ matrix, i.e.,

$$X = \begin{bmatrix} x_{1,1} & x_{1,2} & \cdots & x_{1,d} \\ x_{2,1} & x_{2,2} & \cdots & x_{2,d} \\ \vdots & \vdots & \ddots & \vdots \\ x_{N,1} & x_{N,2} & \cdots & x_{N,d} \end{bmatrix} \tag{1}$$

where N is the population size and d is the problem dimension. In each iteration, the individual with the best fitness value is called the pathfinder, and it updates its position using Equation (2), as follows:

$$x_p^{t+1} = x_p^{t} + 2 r_3 \left( x_p^{t} - x_p^{t-1} \right) + A \tag{2}$$

$$A = u_2 \cdot e^{-2t/t_{\max}} \tag{3}$$

Here, $t$ is the current iteration number, $t_{\max}$ is the maximum iteration number of the algorithm, $x_p^{t+1}$ is the updated pathfinder position, $x_p^{t}$ is the pathfinder’s position at the t-th iteration, and $x_p^{t-1}$ is the pathfinder’s position at the (t−1)-th iteration. $r_3$ is the pathfinder’s step factor in the range [0,1], $u_2$ is a random number in the range [−1,1], and A is the pathfinder’s randomized step size, whose direction is determined by the value of $u_2$.

After the pathfinder is updated, each follower is updated according to the pathfinder position in Equation (4):

$$x_i^{t+1} = x_i^{t} + R_1 \left( x_j^{t} - x_i^{t} \right) + R_2 \left( x_p^{t} - x_i^{t} \right) + \varepsilon \tag{4}$$

$$R_1 = \alpha r_1, \qquad R_2 = \beta r_2, \qquad \varepsilon = \left( 1 - \frac{t}{t_{\max}} \right) u_1 D_{ij}, \qquad D_{ij} = \lVert x_i - x_j \rVert$$

In this context, $x_i^{t}$ and $x_j^{t}$ are the positions of the ith and jth followers at the t-th iteration, respectively, and $x_i^{t+1}$ is the updated position of the ith follower. Vectors $R_1$ and $R_2$ are random. The interaction coefficient between the pathfinder and followers is denoted by $\beta$, while the interaction coefficient among followers is represented by $\alpha$. Vectors $\alpha$ and $\beta$ have a uniform distribution within the range [1,2], and $r_1$ and $r_2$ are uniformly distributed random numbers in the interval [0,1]. $u_1$ is a random value in the interval [−1,1] that determines the direction of the follower movement, and $\varepsilon$ is a factor that adds randomness to the follower movement. The separation between the ith and jth followers is denoted by $D_{ij}$. The pseudo-code of the PFA is given in Algorithm 1.

Algorithm 1. Pseudo-code of PFA.

2.3. PFA Steps

The specific steps of the pathfinder algorithm are as follows:

Step 1: Set the initialization parameters of the algorithm, including the population size N, the upper and lower bounds ($ub$ and $lb$) of the search range, and the maximum number of iterations ($t_{\max}$);

Step 2: Initialize the population at random;

Step 3: Compute the fitness value of each individual with the fitness function and sort the population; the pathfinder is the individual with the lowest fitness value;

Step 4: Pathfinder phase. Update the pathfinder position according to Equation (2);

Step 5: Follower phase. Update the follower positions according to Equation (4);

Step 6: Update the fitness values of the individuals in the population; the individual with the lowest fitness value becomes the new pathfinder;

Step 7: If the iteration termination condition is met, output the global optimal solution; otherwise, repeat Steps 4 through 6.
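The steps above can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation: the names `sphere`, `clip`, and `pfa` are hypothetical, the step size A and the randomness term ε follow the standard PFA formulation, the random numbers are drawn as scalars rather than per-dimension vectors, and a candidate position is accepted only if it improves the fitness (an assumed greedy rule).

```python
import math
import random


def sphere(x):
    """Illustrative objective (not from the paper): sum of squares."""
    return sum(v * v for v in x)


def clip(x, lb, ub):
    """Keep every coordinate inside the search range [lb, ub]."""
    return [min(max(v, lb), ub) for v in x]


def pfa(f, dim, lb, ub, n=30, t_max=200, seed=0):
    rng = random.Random(seed)
    # Steps 1-2: parameters and random initial population.
    pop = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n)]
    # Step 3: sort by fitness; the pathfinder has the lowest value.
    pop.sort(key=f)
    prev_pf = pop[0][:]
    for t in range(1, t_max + 1):
        pf = pop[0]
        # Step 4: pathfinder update, Equation (2), with A = u2 * exp(-2t / t_max).
        r3, u2 = rng.random(), rng.uniform(-1.0, 1.0)
        a_step = u2 * math.exp(-2.0 * t / t_max)
        cand = clip([p + 2.0 * r3 * (p - q) + a_step
                     for p, q in zip(pf, prev_pf)], lb, ub)
        prev_pf = pf[:]
        if f(cand) < f(pf):  # assumed greedy acceptance
            pop[0] = cand
        pf = pop[0]
        # Step 5: follower update, Equation (4).
        for i in range(1, n):
            j = rng.choice([k for k in range(1, n) if k != i])
            xi, xj = pop[i], pop[j]
            r_1 = rng.uniform(1.0, 2.0) * rng.random()  # R1 = alpha * r1
            r_2 = rng.uniform(1.0, 2.0) * rng.random()  # R2 = beta * r2
            eps = (1.0 - t / t_max) * rng.uniform(-1.0, 1.0) * math.dist(xi, xj)
            cand = clip([a + r_1 * (b - a) + r_2 * (p - a) + eps
                         for a, b, p in zip(xi, xj, pf)], lb, ub)
            if f(cand) < f(xi):  # assumed greedy acceptance
                pop[i] = cand
        # Step 6: re-rank; the best individual becomes the new pathfinder.
        pop.sort(key=f)
    # Step 7: return the best position and its fitness.
    return pop[0], f(pop[0])
```

For example, `pfa(sphere, 5, -10.0, 10.0, n=20, t_max=100, seed=1)` returns a position inside the bounds together with its fitness. Because of the greedy acceptance, that fitness is never worse than the best value in the initial population.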

3. IPFA Improvements

3.1. Elite Opposition-Based Learning Initialized Population

Opposition-based learning (OBL) [25,26], introduced by Tizhoosh in 2005 within the realm of intelligent computing, represents a novel concept aimed at enhancing the optimization process. Its primary objective is to incorporate high-quality individual solutions, thereby improving the algorithm’s search efficiency and convergence performance while minimizing unnecessary explorations in the search space. This strategic integration enables the algorithm to converge to optimal solutions more swiftly. In this context, the opposition point is defined as follows:

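Definition 1.

Opposite point. Given a point $x = (x_1, x_2, \ldots, x_d)$ in a d-dimensional space, where $x_j \in [lb_j, ub_j]$ with $ub_j$ and $lb_j$ representing the upper and lower bounds of the search range, for $j = 1, 2, \ldots, d$, the opposite point $\tilde{x} = (\tilde{x}_1, \tilde{x}_2, \ldots, \tilde{x}_d)$ can be defined as follows:

$$\tilde{x}_j = lb_j + ub_j - x_j$$

Definition 2.

Elite opposition solution. Let the elite individuals in the population be denoted as $x^{e} = (x^{e}_1, x^{e}_2, \ldots, x^{e}_d)$, where d represents the dimensionality. The elite opposition-based solution, denoted as $\tilde{x}^{e} = (\tilde{x}^{e}_1, \tilde{x}^{e}_2, \ldots, \tilde{x}^{e}_d)$, is defined as follows:

$$\tilde{x}^{e}_j = k \left( da_j + db_j \right) - x^{e}_j \tag{10}$$

where $k \in [0,1]$ is a random number, $x^{e}_j \in [da_j, db_j]$, $da_j = \min(x_{i,j})$, and $db_j = \max(x_{i,j})$, so that $da_j$ and $db_j$ are the dynamic lower and upper bounds of the j-th dimension. If $\tilde{x}^{e}_j$ crosses the boundary, the position is reset using the following equation:

$$\tilde{x}^{e}_j = \mathrm{rand}(da_j, db_j)$$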

Elite opposition-based learning (EOBL) [27] presents clear advantages over traditional opposition-based learning. This mechanism capitalizes on the valuable information carried by elite individuals in the population. It begins by forming an opposition population from these elite individuals and then selects the best individuals from both the opposition population and the current population to form a new population. EOBL has been widely adopted by researchers. For instance, YuXin Guo [28] employed EOBL to optimize the Harris Hawks optimization algorithm, thereby enhancing population diversity and quality. Similarly, ChengWang Xie et al. [29] integrated EOBL into the fireworks explosion algorithm to bolster its global search capability.
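A minimal Python sketch of an EOBL-style initialization is given below. It rests on several assumptions that the text does not fix: the names `eobl_init` and `elite_frac` are hypothetical, the elite group is taken as the best 20% of a random population, the dynamic bounds are the per-dimension minimum and maximum over the current population, and an opposite coordinate that leaves those bounds is redrawn uniformly inside them.

```python
import random


def sphere(x):
    """Illustrative objective (not from the paper): sum of squares."""
    return sum(v * v for v in x)


def eobl_init(f, dim, lb, ub, n=30, elite_frac=0.2, seed=0):
    rng = random.Random(seed)
    # Random population, sorted so that the elites come first.
    pop = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n)]
    pop.sort(key=f)
    elites = pop[:max(1, int(elite_frac * n))]
    # Dynamic bounds of each dimension over the current population.
    da = [min(x[j] for x in pop) for j in range(dim)]
    db = [max(x[j] for x in pop) for j in range(dim)]
    opposites = []
    for e in elites:
        k = rng.random()  # one random coefficient k in [0, 1] per elite
        opp = []
        for j in range(dim):
            v = k * (da[j] + db[j]) - e[j]
            if v < da[j] or v > db[j]:
                # Reset a coordinate that crosses the dynamic boundary.
                v = rng.uniform(da[j], db[j])
            opp.append(v)
        opposites.append(opp)
    # Keep the n best individuals from the current and opposition populations.
    return sorted(pop + opposites, key=f)[:n]
```

Because the final selection pools the random and opposition populations, the best individual returned is never worse than the best random individual, which is the property that motivates using EOBL for initialization.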

3.2. Grey Wolf Optimizer

The grey wolf optimizer (GWO) [30] is an optimization algorithm based on the behavior of grey wolf packs in nature, which simulates the collaborative and competitive relationships between leaders and followers in a grey wolf pack. Due to its simple but effective search mechanism, GWO shows strong performance in solving optimization problems. The core idea of this algorithm is to mimic the behavioral style of a grey wolf pack, which includes synergy between the leader grey wolf, the deputy leader grey wolf, and the regular grey wolves. The leader grey wolf is responsible for guiding the entire pack to move in the direction of a more optimal solution, while the follower grey wolves search as the leader guides them. By simulating this collective intelligence and collaborative behavior of the grey wolf pack, GWO can efficiently search the solution space and find the global optimal solution or a near-optimal solution. The GWO algorithm is simple to understand, easy to implement, and shows good robustness and performance in solving various types of optimization problems. As a result, numerous scholars have devised adaptive weight factors for position updates to enhance the search speed and accuracy of the grey wolf optimizer (GWO). Xinming Zhang et al. [31] proposed a hybrid algorithm (HGWOP) that integrates particle swarm optimization (PSO) and GWO. They introduced a differential perturbation strategy into GWO, resulting in SDPGWO. Additionally, a stochastic mean example learning strategy was applied to PSO, yielding MELPSO. The efficient hybrid algorithm HGWOP was then created by seamlessly integrating SDPGWO and MELPSO. Yun Ou et al. [32] designed an adaptive weight factor for position updating to improve the speed and accuracy of the GWO search. 
In this paper, the hunting phase (2) of the grey wolf optimizer is used instead of the follower phase of the PFA, which can improve the convergence speed of the algorithm and reduce the probability that the overall algorithm stagnates because the pathfinder falls into a local optimum. The mathematical model of the grey wolf optimizer is as follows:

In the grey wolf optimizer, the population X consists of N individuals, each described by a position vector of dimension d. Each individual represents a “grey wolf”, which has a place in a social hierarchy comprising $\alpha$ (the best individual), $\beta$ (the second-best individual), $\delta$ (the third-best individual), and $\omega$ (the other individuals). The hierarchy is shown in Figure 1.

Figure 1. Grey wolf pyramid levels.

- (1)

- Encircling prey.where is the current generation prey position, is the current generation grey wolf individual position, A and C are vector coefficients with formulas and , and are random numbers between the intervals [0,1], and a is the convergence factor, which decreases linearly from 2 to 0 as the number of iterations increases, i.e.,

- (2)

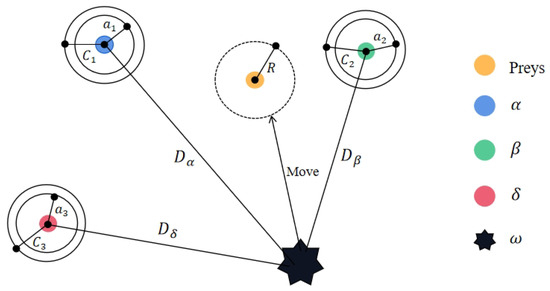

- Hunting. The positions of the other grey wolves in group X are jointly determined based on the positions of , , and :where , , and are defined the same as A and , and are defined the same as C. The way of updating the wolf position is shown in the following Figure 2.

Figure 2. Schematic diagram of grey wolf location update.

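The hunting update can be sketched in Python as follows. This is an illustrative sketch under the standard GWO formulation, in which each wolf moves to the average of three estimates guided by the α, β, and δ wolves. The names `gwo_step` and `sphere` are hypothetical, and the random coefficients are drawn independently for each dimension.

```python
import random


def sphere(x):
    """Illustrative objective (not from the paper): sum of squares."""
    return sum(v * v for v in x)


def gwo_step(wolves, f, t, t_max, rng):
    """Return the new positions of all wolves after one hunting update."""
    a = 2.0 - 2.0 * t / t_max            # convergence factor: 2 -> 0
    leaders = sorted(wolves, key=f)[:3]  # alpha, beta, delta
    new_positions = []
    for x in wolves:
        pos = []
        for j in range(len(x)):
            estimate = 0.0
            for lead in leaders:
                big_a = 2.0 * a * rng.random() - a  # A = 2a*r1 - a
                big_c = 2.0 * rng.random()          # C = 2*r2
                dist = abs(big_c * lead[j] - x[j])  # D = |C*X_lead - X|
                estimate += lead[j] - big_a * dist  # X_k = X_lead - A*D
            pos.append(estimate / 3.0)              # average of X1, X2, X3
        new_positions.append(pos)
    return new_positions
```

At the last iteration the convergence factor is 0, so A vanishes and every wolf lands on the centroid of the three leaders. Early in the run, A can reach magnitudes of up to 2, which lets wolves overshoot the leaders and explore.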

3.3. Follower Update Formulation Based on GWO and Escape Energy

In highly complex multimodal problems, the PFA may fall into local optimal solutions; this is because the PFA is mainly based on competition and selection among individuals, and may miss the global optimal solution in some cases. GWO excels in global search, leveraging population behavior and alignment mechanisms to effectively explore the search space and identify either the global optimal solution or a near-optimal one. In light of these strengths, this paper integrates the PFA with the GWO algorithm to enhance the overall performance of PFA.

To strike a balance between global and local search, and to further improve the search efficiency and optimization performance of the algorithm, the paper introduces the concept of escape energy (E) from the Harris Hawks optimization (HHO) [33]. Equation (19) captures this concept and is presented as follows:

$$E = 2 E_0 \left( 1 - \frac{t}{t_{\max}} \right) \tag{19}$$

Here, $E_0$ represents a randomly generated number within the range of [−1,1], $t$ is the current iteration number, and $t_{\max}$ is the maximum iteration number. The escape energy (E) plays a crucial role in determining the algorithm’s performance, influencing factors such as search speed, search quality, and convergence. When the magnitude of the escape energy is greater than or equal to 1 ($|E| \geq 1$), it encourages individuals to engage in global exploration more frequently. This is particularly beneficial when the entire search space has not been thoroughly explored, aiding the algorithm in swiftly identifying potential globally optimal solutions during the initial iterations. Conversely, when the magnitude of the escape energy is less than 1 ($|E| < 1$), individuals tend to prioritize local searches. They concentrate on regions near the currently identified superior solutions, which helps the algorithm refine those candidate solutions in more detail.
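The behavior of the escape energy over a run can be illustrated with a short Python sketch. It assumes the standard HHO form E = 2·E0·(1 − t/t_max), with E0 drawn uniformly from [−1,1], and the threshold |E| ≥ 1 for exploration. The function names `escape_energy` and `phase` are hypothetical.

```python
import random


def escape_energy(t, t_max, rng):
    """Escape energy at iteration t; its envelope shrinks linearly to 0."""
    e0 = rng.uniform(-1.0, 1.0)
    return 2.0 * e0 * (1.0 - t / t_max)


def phase(energy):
    """Map the escape energy to the search mode it encourages."""
    return "explore" if abs(energy) >= 1.0 else "exploit"


if __name__ == "__main__":
    rng = random.Random(0)
    t_max = 100
    for t in (0, 25, 50, 75, 100):
        draws = [phase(escape_energy(t, t_max, rng)) for _ in range(1000)]
        share = draws.count("explore") / len(draws)
        print(f"t={t:3d}  share of exploration draws: {share:.2f}")
```

Since |E| is bounded by 2(1 − t/t_max), exploration is only possible during the first half of the run. After t exceeds t_max/2, every draw falls in the exploitation regime.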

The follower update Equation (23) for the PFA, combining the GWO and the escape energy (E), is as follows:

3.4. Dimension-by-Dimension Mutation

The t-distribution [34,35], also known as the student distribution, has the following probability density (Equation (24)):

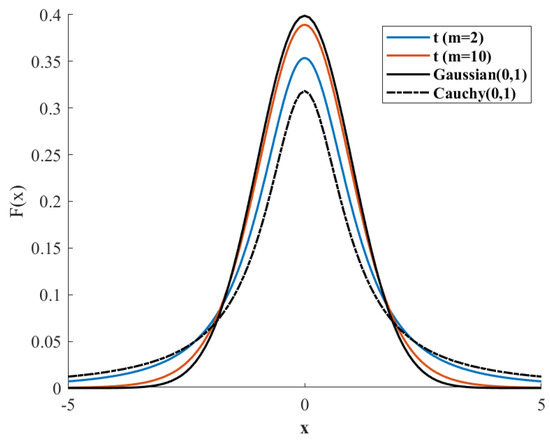

where is the Euler integral of type II. The t-distribution curve shape is related to the value of the degrees of freedom parameter m. When the parameter m = 1, the t-distribution is in the form of a Cauchy distribution, denoted as t(m = 1) = C(0,1). As the parameter “m” increases, the t-distribution gradually approaches the standard normal distribution. When the parameter “m” tends to infinity, the t-distribution gradually converges to the standard normal distribution N(0,1). Figure 3 shows the distribution of the t-distribution, Cauchy distribution, and normal distribution with parameter changes for different degrees of freedom.

Figure 3. Gaussian, t-distribution, and Cauchy distribution density functions.
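The two limiting cases can be checked numerically with a short Python sketch that uses only the standard library. The function names are hypothetical, and `math.lgamma` is used instead of `math.gamma` to avoid overflow for large degrees of freedom.

```python
import math


def t_pdf(x, m):
    """Density of Student's t-distribution with m degrees of freedom."""
    log_coef = math.lgamma((m + 1) / 2.0) - math.lgamma(m / 2.0)
    coef = math.exp(log_coef) / math.sqrt(m * math.pi)
    return coef * (1.0 + x * x / m) ** (-(m + 1) / 2.0)


def cauchy_pdf(x):
    """Density of the standard Cauchy distribution C(0,1)."""
    return 1.0 / (math.pi * (1.0 + x * x))


def normal_pdf(x):
    """Density of the standard normal distribution N(0,1)."""
    return math.exp(-x * x / 2.0) / math.sqrt(2.0 * math.pi)


if __name__ == "__main__":
    for x in (0.0, 1.0, 4.0):
        print(x, t_pdf(x, 1), cauchy_pdf(x), t_pdf(x, 1000), normal_pdf(x))
```

With m = 1 the density coincides with the Cauchy density, and with large m it is close to the normal density. In the tails, the ordering Cauchy > t > normal reflects how a small m produces larger perturbations.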

The output result of the mutation operation has uncertainty, and if the dimension-by-dimension mutation operation is performed on each individual in the population, it will inevitably lead to an increase in computational complexity and may reduce the search efficiency of the algorithm. Therefore, in this section, only the optimal individual in the current population is subjected to the dimension-by-dimension mutation operation, and the dimension-by-dimension mutation formula is as follows:

where $t(\mathrm{iter})$ is the t-distribution whose degrees of freedom parameter is the PFA’s iteration count $\mathrm{iter}$, and $x_{\mathrm{new}}$ is the new solution following mutation. At the beginning of the iteration, $\mathrm{iter}$ is smaller, representing a smaller degrees of freedom parameter; the distribution is close to the Cauchy distribution and strongly perturbs the individual $x_{\mathrm{best}}$, which effectively enhances the algorithm’s ability to search globally and helps to prevent individuals from aggregating locally. As the iteration of the algorithm proceeds, the increase in the degrees of freedom parameter causes the t-distribution to gradually converge to a Gaussian distribution, which results in a gradual weakening of the perturbation of the distribution operator on the individual $x_{\mathrm{best}}$. This evolution is particularly evident in the later stages of the algorithm (where $\mathrm{iter}$ is larger), which in turn provides the algorithm with a higher degree of local exploitation and convergence accuracy.

Since the optimal individual position after mutation cannot be guaranteed to be better than the original position, an optimization-preserving strategy, i.e., a greedy strategy, is added after the dimension-by-dimension mutation. The fitness value of the mutated optimal individual is compared with that of the original optimal individual, and the one with the better fitness value is kept as the global optimal solution for the current generation; that is, the mutated individual replaces the original individual only if it is better. The judgment formula is as follows:

$$x_{\mathrm{best}} = \begin{cases} x_{\mathrm{new}}, & f(x_{\mathrm{new}}) < f(x_{\mathrm{best}}) \\ x_{\mathrm{best}}, & \text{otherwise} \end{cases} \tag{26}$$
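The mutation and the greedy selection can be sketched together in Python. The exact mutation formula is not recoverable from the text here, so the sketch assumes a form that is common in the literature, in which each coordinate x_j of the optimal individual becomes x_j + x_j·t(iter), with an independent t-distributed draw per dimension. The names `student_t` and `mutate_best` are hypothetical, and the clipping to the search bounds is an added assumption.

```python
import math
import random


def sphere(x):
    """Illustrative objective (not from the paper): sum of squares."""
    return sum(v * v for v in x)


def student_t(rng, m):
    """Draw from a t-distribution with m degrees of freedom.

    Uses t = Z / sqrt(V / m) with Z ~ N(0,1) and V ~ chi-square(m),
    where chi-square(m) is a gamma distribution with shape m/2 and scale 2.
    """
    z = rng.gauss(0.0, 1.0)
    v = rng.gammavariate(m / 2.0, 2.0)
    return z / math.sqrt(v / m)


def mutate_best(best, f, iteration, lb, ub, rng):
    """Perturb each dimension of the best individual, then select greedily."""
    trial = []
    for xj in best:
        # Assumed mutation form: x_j + x_j * t(iter), one draw per dimension.
        v = xj + xj * student_t(rng, iteration)
        trial.append(min(max(v, lb), ub))
    f_best, f_trial = f(best), f(trial)
    # Greedy strategy: keep the mutated individual only if it is better.
    if f_trial < f_best:
        return trial, f_trial
    return best[:], f_best
```

A call such as `mutate_best([1.5, -2.0, 0.5], sphere, 10, -5.0, 5.0, random.Random(7))` returns either the mutated position or a copy of the original, so the fitness of the retained individual never gets worse.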

3.5. IPFA Steps

Based on the enhancements in this section, the specific steps of the IPFA are as follows, and its pseudo-code is given in Algorithm 2:

Step 1: Initialize the algorithm parameters, including the population size (N), the upper and lower boundaries ($ub$ and $lb$) of the search range, and the maximum number of iterations ($t_{\max}$);

Step 2: Initialize the population using the elite opposition-based learning strategy outlined in Equation (10);

Step 3: Compute the fitness value of each individual in the population with the fitness function. Sort the individuals by fitness value and select the individual with the smallest fitness value as the pathfinder;

Step 4: Pathfinder phase. Update the pathfinder position according to Equation (2);

Step 5: Follower stage. Update the follower position according to Equation (23);

Step 6: Mutation stage. The fitness values of all individuals are computed and ranked, and then the individual with the optimal fitness value is picked for dimension-by-dimension mutation based on Equation (25);

Step 7: Determine whether to update the optimal individual according to the greedy strategy Equation (26);

Step 8: Update the fitness values of the individuals in the population, and select the individual with the lowest value as the new pathfinder;

Step 9: If the termination condition for iterations is met, output the optimal individual position along with its corresponding fitness value. Otherwise, repeat Steps 4 through 9 until the iteration termination is satisfied.

Algorithm 2. Pseudo-code of IPFA.

3.6. Time Complexity Analysis

The total temporal complexity of the PFA is known to be , assuming a population size of N, spatial dimensions of d, and a maximum iteration count of . In this scenario, represents the fitness function. The time complexity of the IPFA is now analyzed.

For the IPFA, in the initialization population stage, assuming the time for initializing algorithm parameters is , and the time for sorting all individuals based on fitness values and selecting the pathfinder is , the time complexity of the initialization population stage can be expressed as follows:

Here, the time complexity for initializing the individuals in the population through elite opposition-based learning is , and the time for calculating the fitness value for each individual is .

As the iteration progresses, during the pathfinder phase, the IPFA updates the pathfinder position like the standard PFA, without incurring additional time. Consequently, the time complexity of the pathfinder phase remains consistent with that of the standard PFA, as follows:

During the follower phase, the time required for computing the coefficient vectors A and C is denoted as . Additionally, the time for calculating the distances between , , and wolves for each individual in the population is represented by . The computation of , , and consumes time , while the calculation of requires time . The processing time for the boundaries of the follower’s dimensions is denoted as , and the time for computing E is . Collectively, these components contribute to the time complexity of the follower phase:

During the mutation phase, the following time complexities are measured: is the time of sorting the population of individuals to find the optimal individual; is the time of the optimal individual performing dimension-by-dimension mutation; is the time of computing the fitness value of the optimal individual; is the time of determining whether the new optimal individual replaces the old optimal individual by using the greedy strategy; is the time of retaining the position of the optimal individual; and is the time of processing the boundaries of the optimal individual in each dimension, so the time complexity of the mutation phase is as follows:

To put it briefly, the IPFA’s total temporal complexity is . As a result, the IPFA’s time complexity and execution efficiency are comparable to those of the regular PFA.

4. Experimental Simulation and Analysis of Results

4.1. Experimental Design

To assess the performance of the proposed IPFA, it is compared with particle swarm optimization (PSO) [10], differential evolution (DE) [11], whale optimization algorithm (WOA) [12], seagull optimization algorithm (SOA) [36], sine cosine algorithm (SCA) [14], grey wolf optimizer (GWO) [30], chimp optimization algorithm (ChOA) [37], and the standard PFA [15] on 45 benchmark test functions (function information is shown in Table 1). The functions – are unimodal (UN) functions, – are multimodal (MN) functions, – are fixed-dimension multimodal (FM) functions, and F1–F29 are benchmark test functions from CEC2017. In CEC2017, F1–F9 involve functions with rotation and translation tests, F10–F19 are composite functions designed to assess the algorithm’s capability in handling high-dimensional problems, and F20–F29 are hybrid functions designed to evaluate the algorithm’s performance under various complex scenarios. To ensure the fairness of the evaluation, each algorithm underwent 30 independent runs to reduce the effects of randomness, and all algorithms shared the same general parameter settings: a population size of 30 and a maximum of 1000 iterations. All algorithms were tested on the same computer, equipped with an Intel(R) Core(TM) i5-11260H CPU @ 2.60 GHz and 16 GB of memory and running 64-bit Windows 10. The optimization experiments were conducted using MATLAB R2021a. Table 2 provides the parameter settings for each algorithm.

Table 1. Benchmark functions.

Table 2. Experimental parameters of each algorithm.

4.2. Comparative Analysis of Optimization Performance

In this section, a comprehensive comparison is conducted between the IPFA and the eight other algorithms, with summarized results presented in Table 3. Three key metrics are analyzed for each algorithm: mean, standard deviation (S.D.), and best. The “mean” is the average obtained by summing the results of 30 independent runs of a specific algorithm and dividing by the number of runs. The algorithms are also ranked according to their mean values, with rank 1 representing the best-performing algorithm, followed by rank 2, and so on; algorithms with equal performance receive the same rank, while the ranks of the other algorithms are computed in the usual order. The sum of each algorithm’s ranks over the test functions and the overall rankings are shown in the last two rows. The better an algorithm performs on the test functions, the smaller the sum of its ranks. Thus, the best algorithm has an overall ranking of 1, followed by the algorithm with an overall ranking of 2, and so on.
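The ranking procedure can be sketched in Python as below. The text does not spell out how ranks continue after a tie, so this sketch assumes competition ranking (tied algorithms share a rank and the next rank is skipped, e.g., 1, 1, 3). The function names and the toy values in the usage note are hypothetical and are not results from the paper.

```python
def rank_by_mean(means):
    """Rank algorithms by value (lower is better); ties share a rank."""
    ordered = sorted(means, key=means.get)
    ranks = {}
    for pos, name in enumerate(ordered, start=1):
        prev = ordered[pos - 2] if pos > 1 else None
        if prev is not None and means[prev] == means[name]:
            ranks[name] = ranks[prev]  # same performance, same rank
        else:
            ranks[name] = pos          # competition ranking: 1, 1, 3, ...
    return ranks


def overall_ranking(per_function_means):
    """Sum the per-function ranks, then rank algorithms by that sum."""
    totals = {}
    for means in per_function_means:
        for name, rank in rank_by_mean(means).items():
            totals[name] = totals.get(name, 0) + rank
    return totals, rank_by_mean(totals)
```

With the toy input `[{"alg_a": 0.0, "alg_b": 0.0, "alg_c": 1.5}, {"alg_a": 2.0, "alg_b": 1.0, "alg_c": 3.0}]`, the first function gives ranks 1, 1, and 3. The rank sums are 3, 2, and 6, so `alg_b` is first overall.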

Table 3. Comparison of benchmarking function optimization results.

Regarding the unimodal functions, , , , and , the IPFA ranks 1st in terms of the mean, S.D., and best, but regarding the functions, , , and , the IPFA is ranked only 2nd, 5th, and 3rd, which is less than ideal. Overall, the convergence performance of the IPFA on unimodal functions is slightly better than that of the other eight algorithms.

The IPFA secures the top ranking on multimodal functions and . However, its performance is less impressive on the other multimodal and fixed-dimension multimodal functions, where it holds the 6th, 2nd, 4th, and 3rd positions for functions , , and , respectively. It should be noted, however, that for these functions the ‘best’ value is ideal.

In the evaluation of the CEC2017 functions F1–F29, the IPFA secures the 1st rank on the rotation and translation functions F1, F2, F3, F4, and F6. This indicates that it handles rotated problems well and adapts effectively to translation of the search space, remaining robust to geometric changes within the search space. The IPFA is ranked 1st on all high-dimensional composite functions except F18. This suggests that incorporating the escape energy from Harris Hawks optimization achieves a balance between global and local search, allowing rapid convergence to the global optimal solution while preventing entrapment in local optima. It also underscores the algorithm’s proficiency in handling high-dimensional functions. The IPFA achieves the 1st rank on hybrid functions F25 and F27. These functions have highly complex shapes with pronounced nonlinearity, lack of smoothness, and irregular characteristics. The use of t-distribution-based dimension-wise mutation of multi-elite individuals helps the algorithm escape its current position and move toward better regions of these functions, giving it the adaptability needed to cope with their intricate nature. While the algorithm’s performance is suboptimal on the remaining eight functions, it still obtains the smallest best values among all algorithms on functions F20, F22, F26, F28, and F29, which underscores its optimization capability in comparison to the considered alternatives.

The IPFA secures the top overall ranking across all 45 test functions, showcasing its global and local search capabilities as well as its robustness and adaptability.

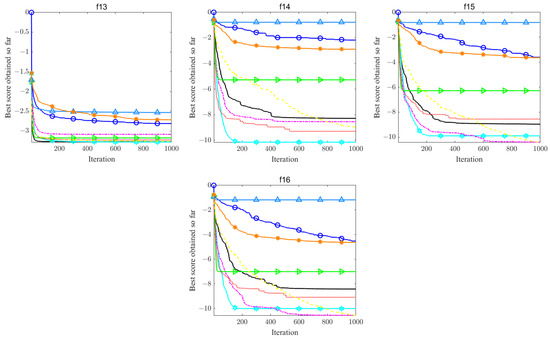

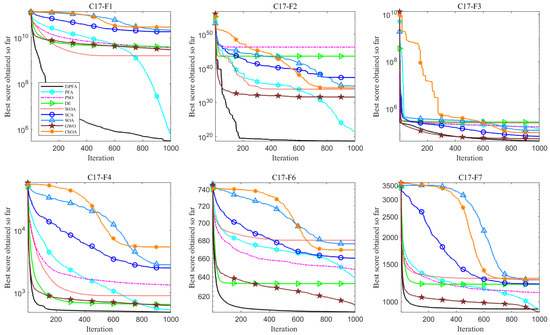

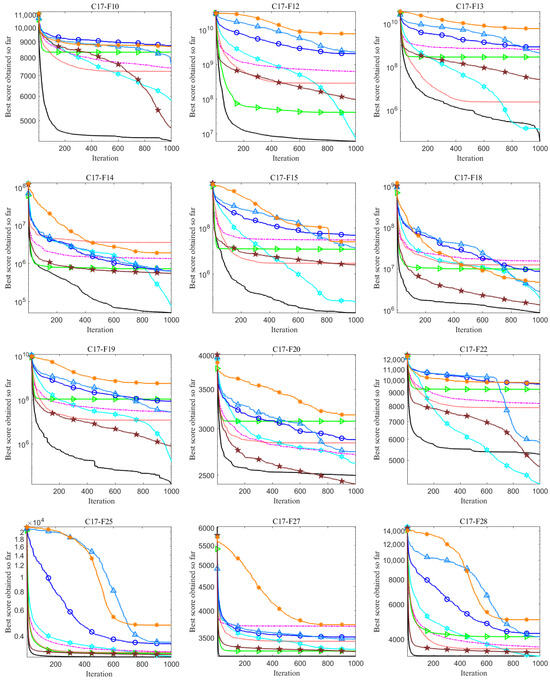

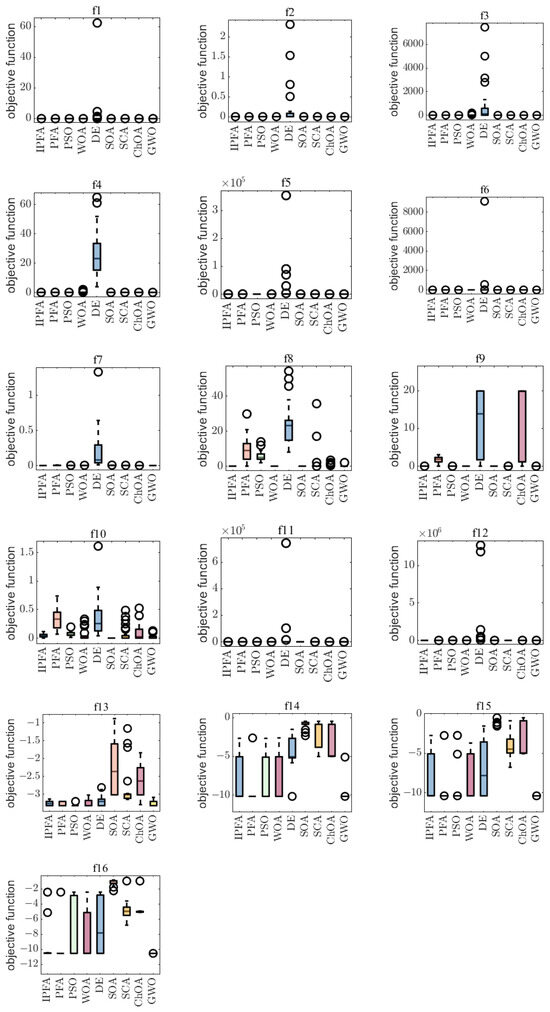

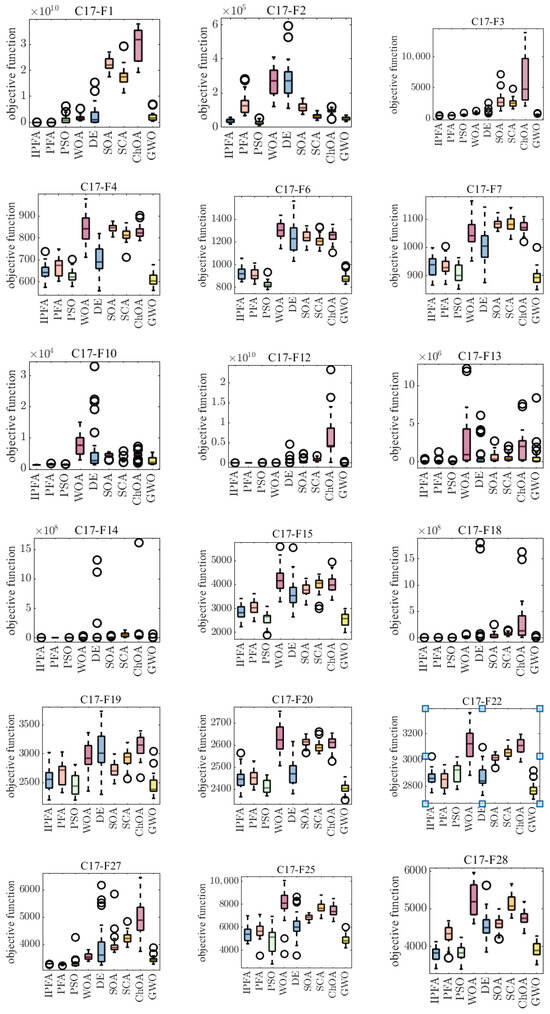

The convergence curves shown in Figure 4 and Figure 5 indicate that the IPFA outperforms the other eight algorithms in terms of global convergence and overall search accuracy. In addition, the boxplots in Figure 6 and Figure 7 illustrate the performance of these algorithms on the test functions. Regarding unimodal functions –, the IPFA only falls into local optima for functions , , and , and shows a notable advantage on the remaining functions, converging rapidly toward the global optimum. Regarding multimodal functions –, the IPFA does not reach the high convergence accuracy observed in the WOA for functions – and becomes trapped in local optima. However, the IPFA converges faster in the early stage, indicating that elite opposition-based learning provides better initial solutions, which are closer to the global optimum than randomly initialized solutions. Although all algorithms encounter local optima in functions and , the IPFA significantly outperforms the others in terms of convergence accuracy and speed. For fixed-dimension multimodal functions –, the IPFA solves such functions only moderately well. This limitation arises from the nature of fixed-dimension multimodal functions, which typically contain numerous locally optimal solutions spaced relatively far apart. The dimension-by-dimension mutation strategy employed by the IPFA concentrates solutions near certain local optima, which hinders escape and leaves the algorithm trapped in local convergence, unable to explore global optimal solutions. On the CEC2017 functions, the collaboration between the pathfinders and followers in the PFA consistently gives it superior optimization accuracy compared to other algorithms. Notably, for functions F7, F20, and F22, the PFA and GWO continue to improve throughout the run. However, the IPFA outperforms all other algorithms on the remaining functions. This underscores the algorithm’s global search capability, which enables it to navigate the search space efficiently and converge rapidly toward potential global optimal solutions.

Figure 4. Convergence curves of functions –.

Figure 5. CEC2017 functions: convergence curves (parts).

Figure 6. Boxplot of functions –.

Figure 7. Boxplot of CEC2017 functions (parts).

4.3. Wilcoxon Rank Sum Test

One nonparametric hypothesis testing technique is the Wilcoxon signed-rank sum test (WRST) [38]. Because it makes no particular assumptions about the data distribution, it is well suited to a variety of intricate comparative data analyses, and it is a valuable tool for comparing the convergence performance of multiple algorithms. The outcomes of the nonparametric tests conducted through the WRST are presented in Table 4 and Table 5.

Table 4. WRST comparison between IPFA and PFA, PSO, DE, and WOA.

Table 5. WRST comparison between IPFA and SCA, SOA, GWO, and ChOA.

Eight distinct algorithms were employed in this analysis, each compared against the IPFA. In each experiment, the differences between the observations of the two algorithms were computed, ranked by their absolute magnitudes, and assigned corresponding ranks. The ranks were then grouped by the sign of the difference, with '+' indicating the positive rank sum and '-' indicating the negative rank sum. The p-value was then used to determine whether there was a significant difference between the two algorithms: a p-value exceeding 0.05 indicates that there is no statistically significant difference. In addition, the concept of a “winner” was introduced to describe the algorithm’s performance relative to the others.

Specifically, when the p-value was less than 0.05, the magnitudes of the positive and negative rank sums were compared. If the positive rank sum is less than the negative rank sum, the IPFA shows an advantage in convergence performance over the compared algorithm at the 5% significance level, and the result is marked as '+'. Conversely, if the positive rank sum is greater than the negative rank sum, the IPFA shows weaker performance at that significance level, and the result is marked as '-'. If the p-value is larger than 0.05, the result is marked as '=', meaning that there is no significant difference between the two algorithms at the 5% significance level.
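The marking rule can be illustrated with a short sketch. It assumes the paired, signed-rank form of the Wilcoxon test that the positive and negative rank sums above describe, takes differences as IPFA minus the compared algorithm (so negative differences favor the IPFA in minimization), and uses the large-sample normal approximation without tie or continuity correction for the p-value. The function names and the sample data are hypothetical; a statistics package would normally be used for the p-value.

```python
import math


def signed_rank_sums(ipfa, other):
    """Return (R_plus, R_minus, n) for differences d = ipfa - other, zeros dropped."""
    diffs = [a - b for a, b in zip(ipfa, other) if a != b]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        # Extend the group while the absolute differences are tied.
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # 1-based average rank of the tie group
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    r_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    r_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return r_plus, r_minus, len(diffs)


def normal_approx_p(r_plus, r_minus, n):
    """Two-sided p-value from the normal approximation of the signed-rank statistic."""
    if n == 0:
        return 1.0
    w = min(r_plus, r_minus)
    mu = n * (n + 1) / 4
    sigma = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mu) / sigma
    return math.erfc(abs(z) / math.sqrt(2))


def mark(ipfa, other, alpha=0.05):
    """Return ('+', '-', or '=') and the p-value, following the rule in the text."""
    r_plus, r_minus, n = signed_rank_sums(ipfa, other)
    p = normal_approx_p(r_plus, r_minus, n)
    if p >= alpha:
        return "=", p
    return ("+" if r_plus < r_minus else "-"), p


# Hypothetical results of ten paired runs (smaller is better).
ipfa_runs = [float(i) for i in range(10)]
other_runs = [2.0 * i + 1.0 for i in range(10)]
print(mark(ipfa_runs, other_runs)[0])  # '+': IPFA is significantly better here
```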

In this way, the validity of the proposed IPFA is verified: nonparametric tests are performed on the means in Table 3 using the WRST, and the results, presented in Table 4 and Table 5, are used to assess the convergence performance of the compared algorithms.

Table 4 and Table 5 show that the IPFA performs better than the PFA on 27 of the 45 benchmark functions, shows no significant difference on 9, and is inferior on 9; in general, the IPFA performs slightly better than the PFA, which indicates that the improvements are effective. Compared with PSO, the IPFA is inferior only on functions , , and , and outperforms PSO on the remaining 42 functions. Compared with DE, the two algorithms are comparable on 10 functions, and the IPFA outperforms DE on the remaining 35 functions. The IPFA outperforms the SCA on all 45 functions, performs only slightly better than the GWO, and has a significant advantage over the WOA, SOA, and ChOA on most of the functions. In conclusion, the IPFA outperforms the other intelligent optimization algorithms in terms of overall performance.

4.4. Research on Engineering Design Problems of IPFA

4.4.1. Pressure Vessel Design Problem

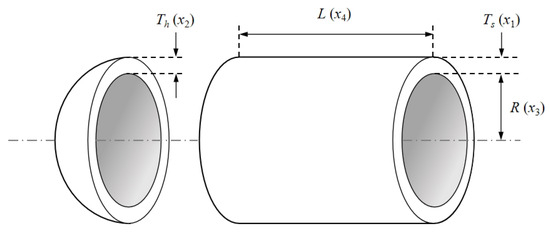

The aim of the pressure vessel design problem [39] is to minimize the total cost. The model has four decision variables, namely the thickness of the shell , the thickness of the head , the inner radius , and the length of the cylindrical section excluding the head , as well as four constraints. The model’s schematic structure is displayed in Figure 8:

Figure 8. Pressure vessel design model.

The mathematical model of the pressure vessel design problem is as follows:

To optimize this problem, the IPFA is initialized with 30 individuals, iterated for 1000 cycles, and executed for 30 independent runs. The final optimization outcome is = 5948.3597 with X = [0.9383 0.0628 54.6570 184.6838]. The results obtained by the proposed IPFA are compared with those of eight other optimization algorithms, including results reported in the literature, and are presented in Table 6 and Table 7. The tables show that the IPFA exhibits superior optimization performance compared to the other six meta-heuristic algorithms: it not only incurs a lower design cost but also has the smallest S.D. among all the algorithms. Furthermore, its mean and worst values rank second only to those of the PFA, underscoring the efficacy of the IPFA in optimizing the pressure vessel design problem.
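For readers who want to experiment with this problem, the sketch below encodes the formulation of the pressure vessel benchmark that is commonly used in the metaheuristics literature (four variables, four inequality constraints of the form g(x) <= 0). It is an assumption that this matches the authors' model; the coefficients, the variable names, the quadratic static penalty, and the penalty weight are not taken from this paper.

```python
import math


def pressure_vessel_cost(x):
    """Cost for x = [shell thickness, head thickness, inner radius, cylinder length]."""
    ts, th, r, length = x
    return (0.6224 * ts * r * length
            + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length
            + 19.84 * ts ** 2 * r)


def pressure_vessel_constraints(x):
    """Constraint values; the design is feasible when every entry is <= 0."""
    ts, th, r, length = x
    return [
        -ts + 0.0193 * r,
        -th + 0.00954 * r,
        -math.pi * r ** 2 * length - (4.0 / 3.0) * math.pi * r ** 3 + 1_296_000.0,
        length - 240.0,
    ]


def penalized_cost(x, weight=1e6):
    """Cost plus a quadratic penalty on violated constraints (hypothetical weight)."""
    violation = sum(max(0.0, g) ** 2 for g in pressure_vessel_constraints(x))
    return pressure_vessel_cost(x) + weight * violation


# A hand-picked feasible design, used only to exercise the functions.
design = [1.0, 0.5, 50.0, 200.0]
print(pressure_vessel_cost(design))
print(all(g <= 0.0 for g in pressure_vessel_constraints(design)))
```

A penalized objective of this kind lets an unconstrained optimizer such as the IPFA handle the constraints, at the price of choosing a penalty weight.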

Table 6. Optimal solutions for the pressure vessel design problem.

Table 7. Results of the pressure vessel design problem.

4.4.2. Tension Spring Design Problem

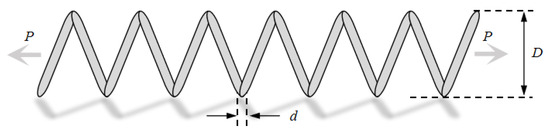

The tension spring design problem [43] has the objective of minimizing the weight (the design cost) of a tension spring under given constraints. This model has three decision variables, namely the wire diameter , the mean coil diameter , and the number of active coils , as well as four constraints. Its structure is shown schematically in Figure 9:

Figure 9. Tension spring design model.

The mathematical model of the tension spring design problem is as follows:

The algorithm settings remain consistent with those employed in the pressure vessel design experiments. The results of 30 independent runs of each algorithm are tabulated in Table 8 and Table 9. According to the tables, the mean, worst, and S.D. of the IPFA are only average compared with the other optimization algorithms. Although there is room for improvement in its performance, the IPFA and GWO attain the same minimum best value, which compares favorably with the other seven meta-heuristic algorithms and gives the lowest design cost, with = 0.012699 and X = [0.0504 0.3978 11.2764].
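As with the pressure vessel, the following sketch encodes the tension spring formulation commonly used in the literature (weight objective with four inequality constraints g(x) <= 0). Whether it matches the authors' exact model is an assumption, and the function and variable names are illustrative only.

```python
def spring_weight(x):
    """Objective for x = [wire diameter d, mean coil diameter D, active coils N]."""
    d, D, n = x
    return (n + 2.0) * D * d ** 2


def spring_constraints(x):
    """Constraint values; the design is feasible when every entry is <= 0."""
    d, D, n = x
    return [
        1.0 - (D ** 3 * n) / (71785.0 * d ** 4),          # minimum deflection
        (4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4))
        + 1.0 / (5108.0 * d ** 2) - 1.0,                  # shear stress
        1.0 - 140.45 * d / (D ** 2 * n),                  # surge frequency
        (d + D) / 1.5 - 1.0,                              # outer diameter limit
    ]


# A hand-picked feasible design, used only to exercise the functions.
spring = [0.06, 0.5, 10.0]
print(spring_weight(spring))
print(all(g <= 0.0 for g in spring_constraints(spring)))
```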

Table 8. Optimal solutions for the tension spring design problem.

Table 9. Results of the tension spring design problem.

5. Conclusions

In this study, we propose an improved pathfinder algorithm (IPFA) to address the slow convergence and low optimization accuracy of the standard pathfinder algorithm. Firstly, we introduce an elite opposition-based learning mechanism that incorporates learned exemplar individuals at the beginning of the algorithm. This improvement increases the diversity and quality of the entire population, thus improving the global optimization performance and the convergence accuracy. By selectively integrating individuals with exceptional performance into the initial population, the algorithm is better equipped to explore a diverse solution space and mitigate premature convergence to local optima.
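A minimal sketch of this idea is given below, assuming a common form of elite opposition-based learning: the elite individuals define dynamic bounds, each elite is reflected inside those bounds with a random coefficient, and the best individuals of the merged set form the initial population. The function name `elite_opposition_init`, the `elite_frac` parameter, and the sphere test function are hypothetical, and the exact variant used in the IPFA may differ.

```python
import random


def sphere(x):
    """Simple test objective: sum of squares (minimum 0 at the origin)."""
    return sum(v * v for v in x)


def elite_opposition_init(fitness, dim, lower, upper, pop_size, elite_frac=0.5, rng=None):
    """Random population refined by elite opposition-based learning (minimization)."""
    rng = rng or random.Random()
    population = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(pop_size)]
    population.sort(key=fitness)
    elites = population[:max(1, int(elite_frac * pop_size))]
    # Dynamic bounds spanned by the elite individuals in each dimension.
    lo = [min(e[j] for e in elites) for j in range(dim)]
    hi = [max(e[j] for e in elites) for j in range(dim)]
    opposites = []
    for x in elites:
        k = rng.random()  # random reflection coefficient in [0, 1)
        candidate = []
        for j in range(dim):
            value = k * (lo[j] + hi[j]) - x[j]
            if not lower <= value <= upper:
                value = rng.uniform(lo[j], hi[j])  # reset out-of-range values
            candidate.append(value)
        opposites.append(candidate)
    # Keep the best pop_size individuals from the original and opposite sets.
    return sorted(population + opposites, key=fitness)[:pop_size]


initial = elite_opposition_init(sphere, dim=3, lower=-10.0, upper=10.0,
                                pop_size=20, rng=random.Random(0))
print(len(initial), sphere(initial[0]))
```

Because the original individuals remain in the merged set, the best initial fitness can never be worse than that of plain random initialization with the same random draws.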

Secondly, integrating the escape energy into the prey-hunting phase of the grey wolf optimizer, while substituting the follower phase in the pathfinder algorithm, serves to enhance the algorithm’s diversity and elevate both the search efficiency and optimization performance. The incorporation of escape energy enables a subset of individuals to opt for escape during the search process, facilitating a more extensive exploration of the search space. This strategic approach helps prevent the algorithm from converging to a local optimal solution, thereby enhancing global search capabilities and fortifying the algorithm’s overall robustness.
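The escape energy itself can be sketched as follows, using its usual definition from Harris Hawks optimization: a random initial energy in [-1, 1) scaled by a factor that decays linearly from 2 to 0 over the run. How the IPFA couples this factor into the grey wolf position update is not shown here; the function names and the explore/exploit threshold of 1 follow the Harris Hawks convention and are assumptions in this context.

```python
import random


def escape_energy(iteration, max_iterations, rng=None):
    """Escape energy E = 2 * E0 * (1 - t / T) with E0 drawn uniformly from [-1, 1)."""
    rng = rng or random.Random()
    e0 = 2.0 * rng.random() - 1.0
    return 2.0 * e0 * (1.0 - iteration / max_iterations)


def search_mode(energy):
    """Harris Hawks convention: |E| >= 1 favors exploration, |E| < 1 exploitation."""
    return "explore" if abs(energy) >= 1.0 else "exploit"


rng = random.Random(1)
for t in (0, 250, 500, 750, 1000):
    energy = escape_energy(t, 1000, rng)
    print(t, round(energy, 3), search_mode(energy))
```

Since the envelope of |E| falls below 1 after the midpoint of the run, exploration is only possible in the first half, which is how the factor shifts the search from global to local behavior.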

Finally, the dimension-by-dimension mutation method based on the t-distribution is employed to perturb the position of the optimal individual, facilitating a swift escape from local optima and promoting movement toward other regions. By applying small random perturbations to every dimension of the optimal individual, this method breaks up the local structure of the existing solution. As a result, the search is directed along a new and potentially more advantageous route, improving the algorithm’s overall search performance.
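One way to picture this step is the sketch below. It assumes a commonly used form of the mutation, x_j + x_j * t(k), where t(k) is a Student t variate whose degrees of freedom equal the current iteration number k (at least 1), and it accepts a mutated dimension only if the fitness improves. The step rule, the greedy acceptance, the bound clipping, and all names are assumptions rather than the authors' exact procedure.

```python
import math
import random


def sphere(x):
    """Simple test objective: sum of squares (minimum 0 at the origin)."""
    return sum(v * v for v in x)


def student_t(df, rng):
    """Draw a Student t variate as Z / sqrt(V / df) with V chi-square distributed."""
    z = rng.gauss(0.0, 1.0)
    v = rng.gammavariate(df / 2.0, 2.0)  # chi-square with df degrees of freedom
    return z / math.sqrt(v / df)


def t_mutate_best(best, fitness, iteration, lower, upper, rng=None):
    """Mutate the best individual one dimension at a time, keeping improvements."""
    rng = rng or random.Random()
    current = list(best)
    current_fit = fitness(current)
    for j in range(len(current)):
        trial = list(current)
        step = current[j] * student_t(iteration, rng)
        trial[j] = min(upper, max(lower, current[j] + step))  # clip to the bounds
        trial_fit = fitness(trial)
        if trial_fit < current_fit:  # greedy acceptance
            current, current_fit = trial, trial_fit
    return current, current_fit


start = [1.0, -2.0, 0.5]
mutated, mutated_fit = t_mutate_best(start, sphere, iteration=5,
                                     lower=-10.0, upper=10.0, rng=random.Random(42))
print(mutated_fit <= sphere(start))  # True: greedy acceptance never worsens the best
```

With few degrees of freedom the t-distribution has heavy tails, giving large early jumps; as the iteration count grows it approaches a normal distribution and the perturbations become more local.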

Incorporating these mechanisms into the pathfinder algorithm considers global optimization, diversity maintenance, escape mechanisms, and perturbation strategies. This comprehensive enhancement aims to improve the robustness and performance of the algorithm. Such depth in algorithmic improvement is typically well-suited to address the distinct characteristics of various problems, consequently enhancing the algorithm’s applicability.

In addition, eight representative meta-heuristic algorithms were chosen for performance comparison, and the performance of the IPFA was confirmed through function optimization experiments and an index evaluation system. The IPFA was also applied to two engineering design problems, where it produced stable and effective optimization outcomes that support the algorithm’s applicability. Future research will explore the integration of the IPFA with deep learning, applying the optimization model to data prediction and analysis problems.

Author Contributions

Conceptualization, B.W. and X.M.; methodology, X.M.; software, B.W.; data curation, W.Y. and Y.C.; writing—original draft preparation, X.M.; visualization, B.W., W.Y. and Y.C.; funding acquisition, B.W. and X.M. All authors have read and agreed to the published version of the manuscript.

Funding

This project was funded by the National Natural Science Foundation of China (grant no. 12271036), the Scientific Research Project of Mudanjiang Normal University (grant no. GP2020003), and the School-level Projects of Mudanjiang Normal University (grant no. kjcx2023-125mdjnu, kjcx2023-126mdjnu).

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Huo, X.; Zhang, F.; Shao, K.; Tan, J.Q. Improved Meta-heuristic Optimization Algorithm and Its Application in Image Segmentation. J. Softw. 2021, 32, 3452–3467.
2. Rajamoorthy, R.; Arunachalam, G.; Kasinathan, P.; Devendiran, R.; Ahmadi, P.; Pandiyan, S.; Muthusamy, S.; Panchal, H.; Kazem, H.A.; Sharma, P. A novel intelligent transport system charging scheduling for electric vehicles using Grey Wolf Optimizer and Sail Fish Optimization algorithms. Energy Sources Part A Recover. Util. Environ. Eff. 2022, 44, 3555–3575.
3. He, Y.; Venkatesh, B.; Guan, L. Optimal scheduling for charging and discharging of electric vehicles. IEEE Trans. Smart Grid 2012, 3, 1095–1105.
4. Cai, Y.; Du, P. Path planning of unmanned ground vehicle based on balanced whale optimization algorithm. Control. Decis. 2021, 36, 2647–2655.
5. Guerrero-Ibáñez, J.; Zeadally, S.; Contreras-Castillo, J. Sensor technologies for intelligent transportation systems. Sensors 2018, 18, 1212.
6. Xia, H.; Chen, L.; Wang, D.; Lu, X. Improved Denclue outlier detection algorithm with differential privacy and attribute fuzzy priority relation ordering. IEEE Access 2023, 11, 90283–90297.
7. Xia, H.Z.; Chen, L.M.; Qi, F.; Mao, X.D.; Sun, L.Q.; Xue, F.Y. DP-Denclue: An outlier detection algorithm with differential privacy preservation. In Proceedings of the 2022 IEEE 24th IEEE International Conference on High Performance Computing and Communications (HPCC), Chengdu, China, 18–21 December 2022; pp. 2264–2269.
8. Hsu, Y.P.; Modiano, E.; Duan, L. Age of information: Design and analysis of optimal scheduling algorithms. In Proceedings of the 2017 IEEE International Symposium on Information Theory (ISIT), Aachen, Germany, 25–30 June 2017; pp. 561–565.
9. Zhang, H.Y.; Lin, W.M.; Chen, A.X. Path planning for the mobile robot: A review. Symmetry 2018, 10, 450.
10. Shi, Y. Particle swarm optimization. IEEE Connect. 2004, 2, 8–13.
11. Draa, A.; Chettah, K.; Talbi, H. A compound sinusoidal differential evolution algorithm for continuous optimization. Swarm Evol. Comput. 2019, 50, 100450.
12. Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.
13. Mirjalili, S.; Mirjalili, S.M.; Hatamlou, A. Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 2016, 27, 495–513.
14. Mirjalili, S. SCA: A sine cosine algorithm for solving optimization problems. Knowl.-Based Syst. 2016, 96, 120–133.
15. Yapici, H.; Cetinkaya, N. A new meta-heuristic optimizer: Pathfinder algorithm. Appl. Soft Comput. 2019, 78, 545–568.
16. Priyadarshani, S.; Subhashini, K.; Satapathy, J. Pathfinder algorithm optimized fractional order tilt-integral-derivative (FOTID) controller for automatic generation control of multi-source power system. Microsyst. Technol. 2021, 27, 23–35.
17. Janamala, V. A new meta-heuristic pathfinder algorithm for solving optimal allocation of solar photovoltaic system in multi-lateral distribution system for improving resilience. SN Appl. Sci. 2021, 3, 118.
18. Gouda, E.A.; Kotb, M.F.; El-Fergany, A.A. Investigating dynamic performances of fuel cells using pathfinder algorithm. Energy Convers. Manag. 2021, 237, 114099.
19. Yuan, Z.; Li, H.; Yousefi, N. Optimal hydrogen consumption of fuel cell-based locomotive using speed trajectory optimization by Improved Pathfinder algorithm. J. Clean. Prod. 2021, 278, 123430.
20. Tang, C.; Zhou, Y.; Luo, Q.; Tang, Z. An enhanced pathfinder algorithm for engineering optimization problems. Eng. Comput. 2021, 38, 1481–1503.
21. Tang, C.; Zhou, Y.; Tang, Z.; Luo, Q. Teaching-learning-based pathfinder algorithm for function and engineering optimization problems. Appl. Intell. 2021, 51, 5040–5066.
22. Hu, R.; Dong, Y.; Qian, B. Pathfinder algorithm for green pipeline scheduling with limited buffers. J. Syst. Simul. 2021, 33, 1384.
23. Lu, M. Improvement and Application of Grey Wolf Optimization Algorithm. Master’s Thesis, Guangxi Minzu University, Nanning, China, 2021.
24. Sun, Z.Z. Improved Pathfinder Algorithm and Its Application. Master’s Thesis, University of Science and Technology Liaoning, Anshan, China, 2021.
25. Mahdavi, S.; Rahnamayan, S.; Deb, K. Opposition based learning: A literature review. Swarm Evol. Comput. 2018, 39, 1–23.
26. Tizhoosh, H.R. Opposition-based learning: A new scheme for machine intelligence. In Proceedings of the International Conference on Computational Intelligence for Modelling, Control and Automation and International Conference on Intelligent Agents, Web Technologies and Internet Commerce (CIMCA-IAWTIC’06), Vienna, Austria, 28–30 November 2005; Volume 1, pp. 695–701.
27. Yildiz, B.S.; Pholdee, N.; Bureerat, S.; Yildiz, A.R.; Sait, S.M. Enhanced grasshopper optimization algorithm using elite opposition-based learning for solving real-world engineering problems. Eng. Comput. 2022, 38, 4207–4219.
28. Yuxin, G.; Sheng, L.; Wenxin, G.; Lei, Z. Elite Opposition-Based Learning Golden-Sine Harris Hawks Optimization. J. Comput. Eng. Appl. 2022, 58.
29. Xie, C.W.; Xu, L.; Zhao, H.R.; Xia, X.W.; Wei, B. Multi-objective fireworks optimization algorithm using elite opposition-based learning. Acta Electronica Sin. 2016, 44, 1180.
30. Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.
31. Zhang, X.; Lin, Q.; Mao, W.; Liu, S.; Dou, Z.; Liu, G. Hybrid Particle Swarm and Grey Wolf Optimizer and its application to clustering optimization. Appl. Soft Comput. 2021, 101, 107061.
32. Ou, Y.; Zhou, K.; Yin, P.; Liu, X. Improved grey wolf optimizer algorithm based on dual convergence factor strategy. J. Comput. Appl. 2023, 43, 2679.
33. Kamboj, V.K.; Nandi, A.; Bhadoria, A.; Sehgal, S. An intensify Harris Hawks optimizer for numerical and engineering optimization problems. Appl. Soft Comput. 2020, 89, 106018.
34. Lange, K.L.; Little, R.J.; Taylor, J.M. Robust statistical modeling using the t distribution. J. Am. Stat. Assoc. 1989, 84, 881–896.
35. Jones, M.C.; Faddy, M. A skew extension of the t-distribution, with applications. J. R. Stat. Soc. Ser. Stat. Methodol. 2003, 65, 159–174.
36. Dhiman, G.; Kumar, V. Seagull optimization algorithm: Theory and its applications for large-scale industrial engineering problems. Knowl.-Based Syst. 2019, 165, 169–196.
37. Khishe, M.; Mosavi, M.R. Chimp optimization algorithm. Expert Syst. Appl. 2020, 149, 113338.
38. Wilcoxon, F. Individual comparisons by ranking methods. In Breakthroughs in Statistics: Methodology and Distribution; Springer: Berlin/Heidelberg, Germany, 1992; pp. 196–202.
39. Moss, D.R. Pressure Vessel Design Manual; Elsevier: Amsterdam, The Netherlands, 2004.
40. Abualigah, L.; Shehab, M.; Diabat, A.; Abraham, A. Selection scheme sensitivity for a hybrid Salp Swarm Algorithm: Analysis and applications. Eng. Comput. 2022, 38, 1149–1175.
41. Cheng, Z.; Song, H.; Zheng, D.; Zhou, M.; Sun, K. Hybrid firefly algorithm with a new mechanism of gender distinguishing for global optimization. Expert Syst. Appl. 2023, 224, 120027.
42. Montemurro, M.; Vincenti, A.; Vannucci, P. The automatic dynamic penalisation method (ADP) for handling constraints with genetic algorithms. Comput. Methods Appl. Mech. Eng. 2013, 256, 70–87.
43. Arora, J.S. Introduction to Optimum Design; Elsevier: Amsterdam, The Netherlands, 2004.
44. Kaveh, A.; Bakhshpoori, T. Water evaporation optimization: A novel physically inspired optimization algorithm. Comput. Struct. 2016, 167, 69–85.
45. Feng, L.; Li, S.; Feng, S. Preparation and characterization of silicone rubber with high modulus via tension spring-type crosslinking. RSC Adv. 2017, 7, 13130–13137.
46. Kaveh, A.; Talatahari, S. A novel heuristic optimization method: Charged system search. Acta Mech. 2010, 213, 267–289.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).