Data-Driven Control Based on Information Concentration Estimator and Regularized Online Sequential Extreme Learning Machine

Abstract

1. Introduction

2. Related Knowledge

2.1. Semi-Parametric Model

2.2. Information Concentration Estimator

2.3. Regularized Online Sequential Extreme Learning Machine

2.4. Problem Formulation

3. The Design of Data-Driven Control Using the Proposed IC Estimator and ReOS-ELM

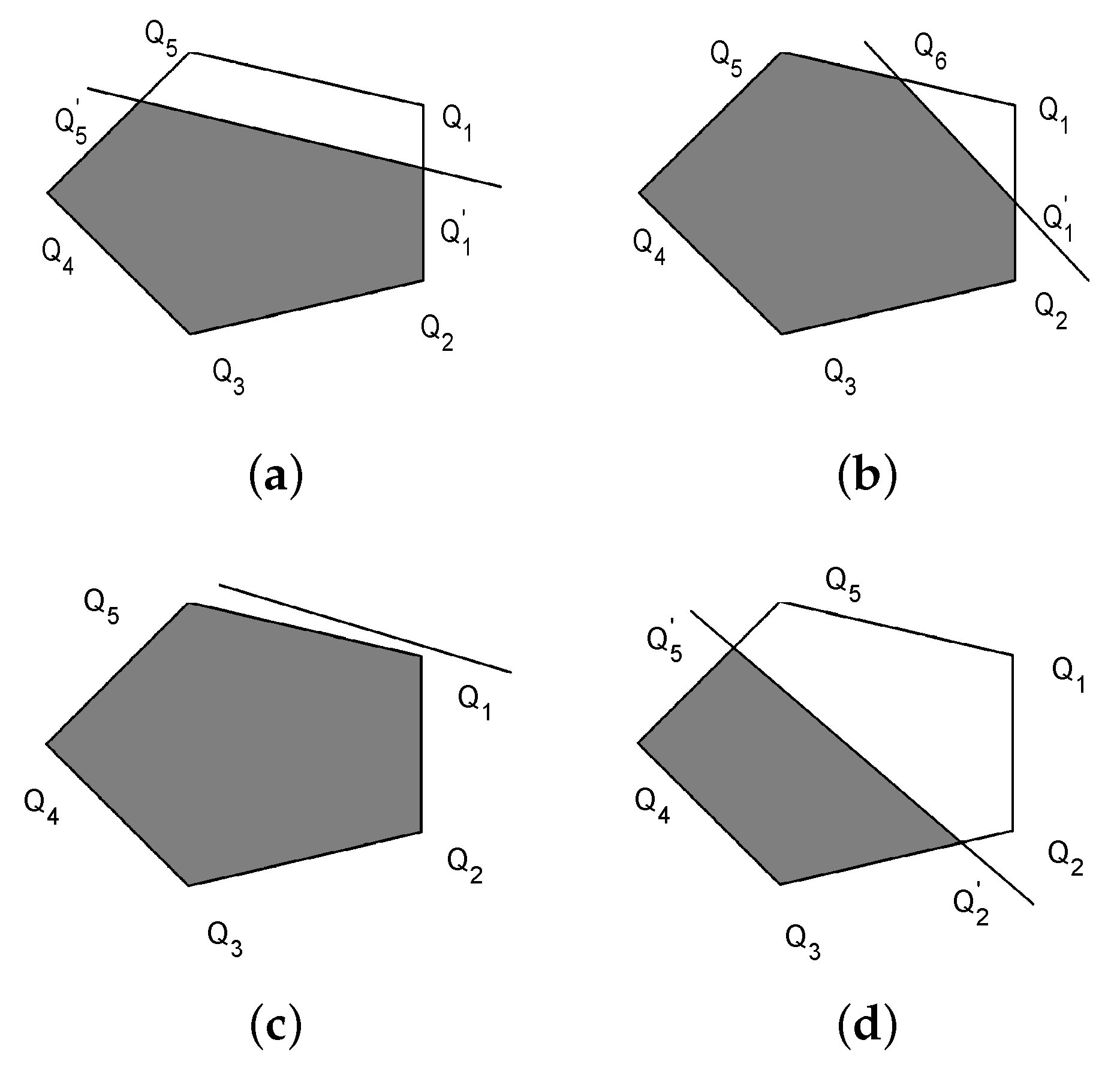

3.1. The Design for the Proposed Improved IC Estimator

| Algorithm 1: AddLinearCons2D(): Add a linear constraint to a polygon v. |

| Input: polygon v, represented by clockwise-arranged vertices |

| Output: clockwise-arranged vertices |

| 1: Denote the number of vertices in v by n |

| 2: for to n do |

| 3: Denote the jth vertex by |

| 4: Let . |

| 5: end for |

| 6: if then |

| 7: and return |

| 8: else if , then |

| 9: and return |

| 10: end if |

| 11: for to do |

| 12: if ( and are on different sides) ∧ ( and are on different sides) then |

| 14: the intersection of and |

| 15: the intersection of and |

| 16: if () then |

| 17: |

| 18: else |

| 19: |

| 20: end if |

| 21: break |

| 22: else if ( and are on different sides) ∧ ( are on the same side) ∧ ( are on different sides) then |

| 23: the intersection of and |

| 24: the intersection of and |

| 25: if then |

| 26: ={} |

| 27: else |

| 28: = |

| 29: end if |

| 30: break |

| 31: else if ( and are on different sides) ∧ ( are on the same side) ∧ ( are on the same side) then |

| 32: the intersection of and |

| 33: the intersection of and |

| 34: if then |

| 35: ={} |

| 36: else |

| 37: ={ } |

| 38: end if |

| 39: break |

| 40: end if |

| 41: end if |

| 42: end if |

| 43: |

| 44: end for |

| 46: if (size10) then |

| 47: |

| 48: else |

| 49: |

| 50: end if |

| 51: return |
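Adding a linear constraint to a polygon can be illustrated with generic half-plane clipping. The sketch below is not a transcription of Algorithm 1, whose symbols are not recoverable here. It assumes a convex polygon and a constraint of the form a·x ≤ b. The names `add_linear_constraint`, `a`, `b`, and `eps` are hypothetical and chosen for illustration only.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def add_linear_constraint(vertices: List[Point], a: Point, b: float,
                          eps: float = 1e-12) -> List[Point]:
    """Keep the part of a convex polygon satisfying a[0]*x + a[1]*y <= b.

    Assumption: `vertices` lists a convex polygon in a consistent
    (e.g. clockwise) order; the output keeps that order.
    """
    n = len(vertices)
    # Signed value per vertex: <= 0 means the vertex satisfies the constraint.
    side = [a[0] * x + a[1] * y - b for x, y in vertices]

    if all(s <= eps for s in side):
        return list(vertices)  # constraint is redundant, polygon unchanged
    if all(s > eps for s in side):
        return []              # constraint excludes the whole polygon

    clipped: List[Point] = []

    def push(pt: Point) -> None:
        # Skip a point that repeats the previously stored one.
        if not clipped or clipped[-1] != pt:
            clipped.append(pt)

    for j in range(n):
        p, q = vertices[j], vertices[(j + 1) % n]
        sp, sq = side[j], side[(j + 1) % n]
        if sp <= eps:
            push(p)  # p satisfies the constraint, so it is kept
        if (sp <= eps) != (sq <= eps):
            # Edge p-q crosses the constraint line: add the intersection.
            t = sp / (sp - sq)
            push((p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])))
    return clipped


# Usage: cut the unit square (clockwise) with the constraint x <= 0.5.
square = [(0.0, 0.0), (0.0, 1.0), (1.0, 1.0), (1.0, 0.0)]
print(add_linear_constraint(square, (1.0, 0.0), 0.5))
# -> [(0.0, 0.0), (0.0, 1.0), (0.5, 1.0), (0.5, 0.0)]
```

Each edge is visited once, so the cost is linear in the number of vertices. A redundant constraint returns the polygon unchanged, and an infeasible one returns an empty list.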

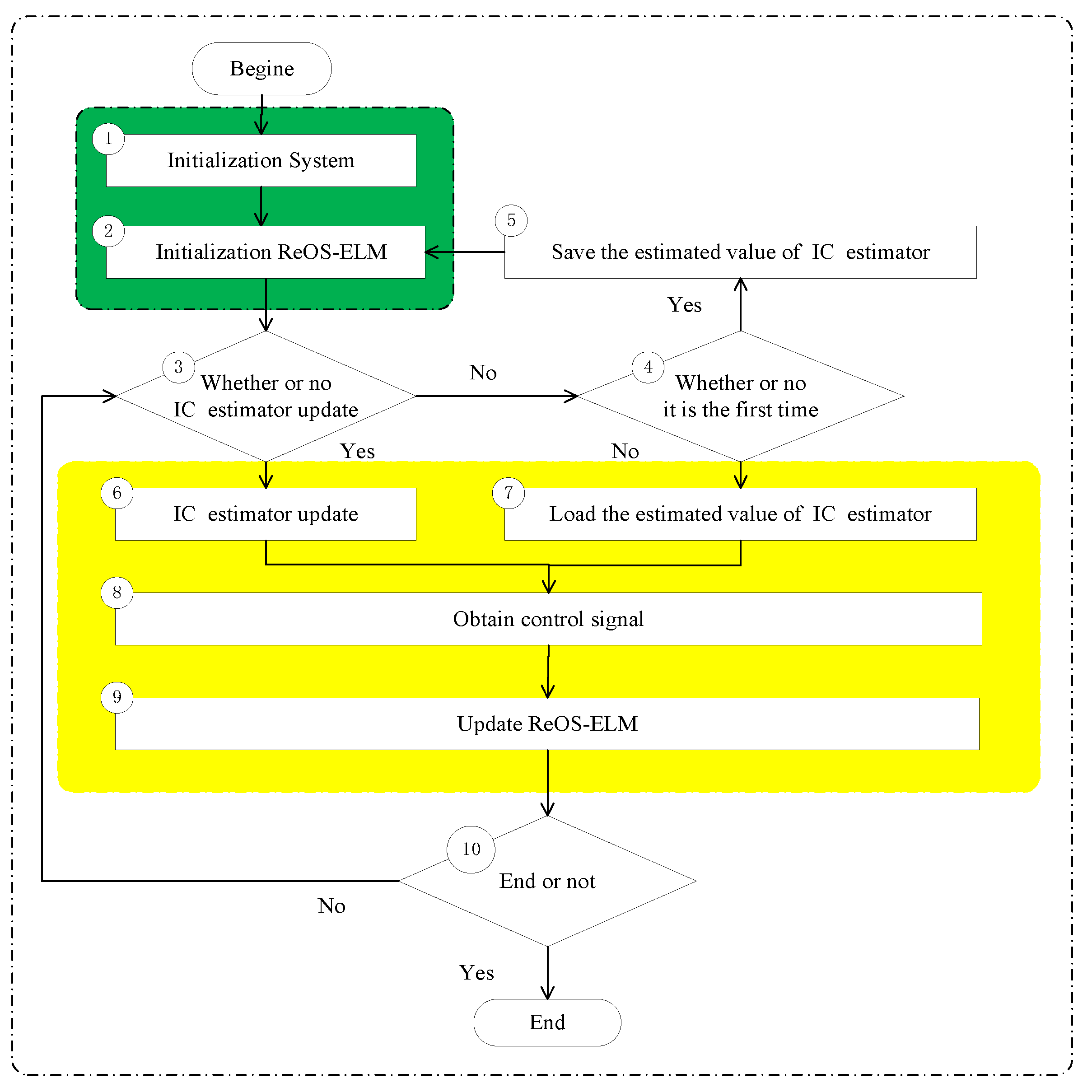

3.2. The Design of the Data-Driven Control Algorithm

- ➀ Initialization of the system

  - According to a priori knowledge on , we can obtain the initial polygon (usually a quadrangle) and the value of , and define , , set .

  - Set , and ; , are two random numbers, , and ; set , , , and .

- ➁ Initialization of ReOS-ELM

  - For ReOS-ELM, define the number of hidden nodes L and the regularization factor .

  - Assign random parameters for ReOS-ELM, where .

  - Measure the output of the plant (16).

  - The first sampling data can be obtained as and .

  - Obtain and using the following equations: and . Define , and .

  - Set , which means that the relearning process has not been activated.

- ➂ Decide whether the IC estimator updates

  - Calculate and , where and and denote the two coordinates of Q.

  - If , execute ➅; otherwise, execute ➃.

- ➃ Decide whether it is the first time

  - Calculate and . If , execute or .

  - If , then set .

  - If , execute ➄; otherwise, execute ➆.

- ➄ Initialize ReOS-ELM and save the estimated value of the IC estimator

  - Relearning: using the data , initialize ReOS-ELM and obtain and using the following equations: and

  - .

- ➅ IC estimator update

- ➆ Load the estimated value of the IC estimator

  - .

- ➇ Obtain the control signal

  - Using the kth sample data , calculate the output of the SLFN networks , and

  - Calculate the control signal

  - Measure the output of the system (16).

- ➈ Update ReOS-ELM using the binary updating algorithm

  - Obtain the kth sample data and ,

- ➉ End or not

  - If , set , and execute ➉; otherwise, the proposed algorithm ends.
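The ReOS-ELM initialization and per-sample update used in the steps above can be sketched with the standard regularized recursive least-squares recursion on which ReOS-ELM is built. This is a minimal illustration under stated assumptions, not the binary updating algorithm of this paper: it handles a scalar output and one sample per update, and it uses sigmoid hidden nodes with uniformly drawn random parameters. The class name `ReOSELM`, its method names, and the `seed` argument are hypothetical.

```python
import math
import random
from typing import List


class ReOSELM:
    """Single-output SLFN trained by regularized recursive least squares."""

    def __init__(self, n_inputs: int, n_hidden: int, reg: float,
                 seed: int = 0) -> None:
        rng = random.Random(seed)
        # Random hidden-node parameters: drawn once, never trained.
        self.w = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
                  for _ in range(n_hidden)]
        self.b = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
        # Starting from P = (reg * I)^-1 and beta = 0, the recursion below
        # reproduces the ridge-regression solution over all samples seen.
        self.P = [[1.0 / reg if i == j else 0.0 for j in range(n_hidden)]
                  for i in range(n_hidden)]
        self.beta = [0.0] * n_hidden

    def hidden(self, x: List[float]) -> List[float]:
        """Sigmoid hidden-layer output vector h(x)."""
        return [1.0 / (1.0 + math.exp(-(sum(wi * xi for wi, xi in zip(w, x)) + b)))
                for w, b in zip(self.w, self.b)]

    def predict(self, x: List[float]) -> float:
        """Network output h(x)^T beta."""
        return sum(h * bt for h, bt in zip(self.hidden(x), self.beta))

    def update(self, x: List[float], t: float) -> None:
        """Absorb one sample (x, t) with a rank-one update of P and beta."""
        h = self.hidden(x)
        n = len(h)
        Ph = [sum(p * hj for p, hj in zip(row, h)) for row in self.P]
        denom = 1.0 + sum(hi * v for hi, v in zip(h, Ph))
        # P <- P - (P h)(P h)^T / (1 + h^T P h); P stays symmetric.
        for i in range(n):
            for j in range(n):
                self.P[i][j] -= Ph[i] * Ph[j] / denom
        # beta <- beta + P_new h (t - h^T beta), where P_new h = P h / denom.
        err = t - sum(hi * bi for hi, bi in zip(h, self.beta))
        for i in range(n):
            self.beta[i] += Ph[i] / denom * err


# Usage: learn y = sin(x) online from a stream of samples.
model = ReOSELM(n_inputs=1, n_hidden=10, reg=0.01, seed=0)
for k in range(200):
    xk = [-1.0 + 2.0 * (k % 50) / 49.0]
    model.update(xk, math.sin(xk[0]))
print(model.predict([0.5]), math.sin(0.5))
```

Because the regularization term enters through the initial value of P, no separate batch of initial samples is needed, so the first sample goes through the same `update` call as every later one. Relearning, as in step ➄, would correspond in this sketch to constructing a fresh `ReOSELM` object and replaying the stored data.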

3.3. Stability Analysis

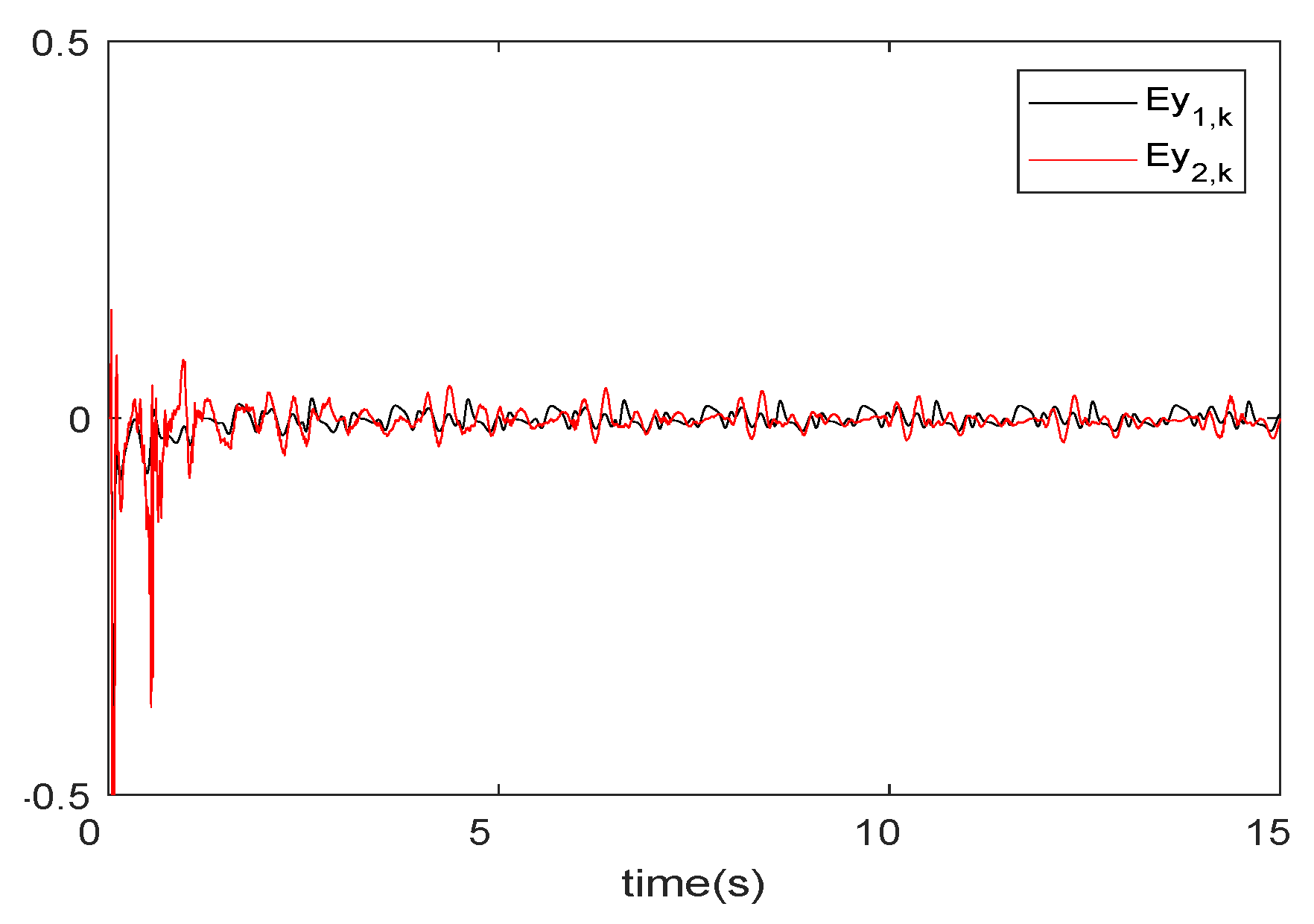

4. Analysis of Experimental Results

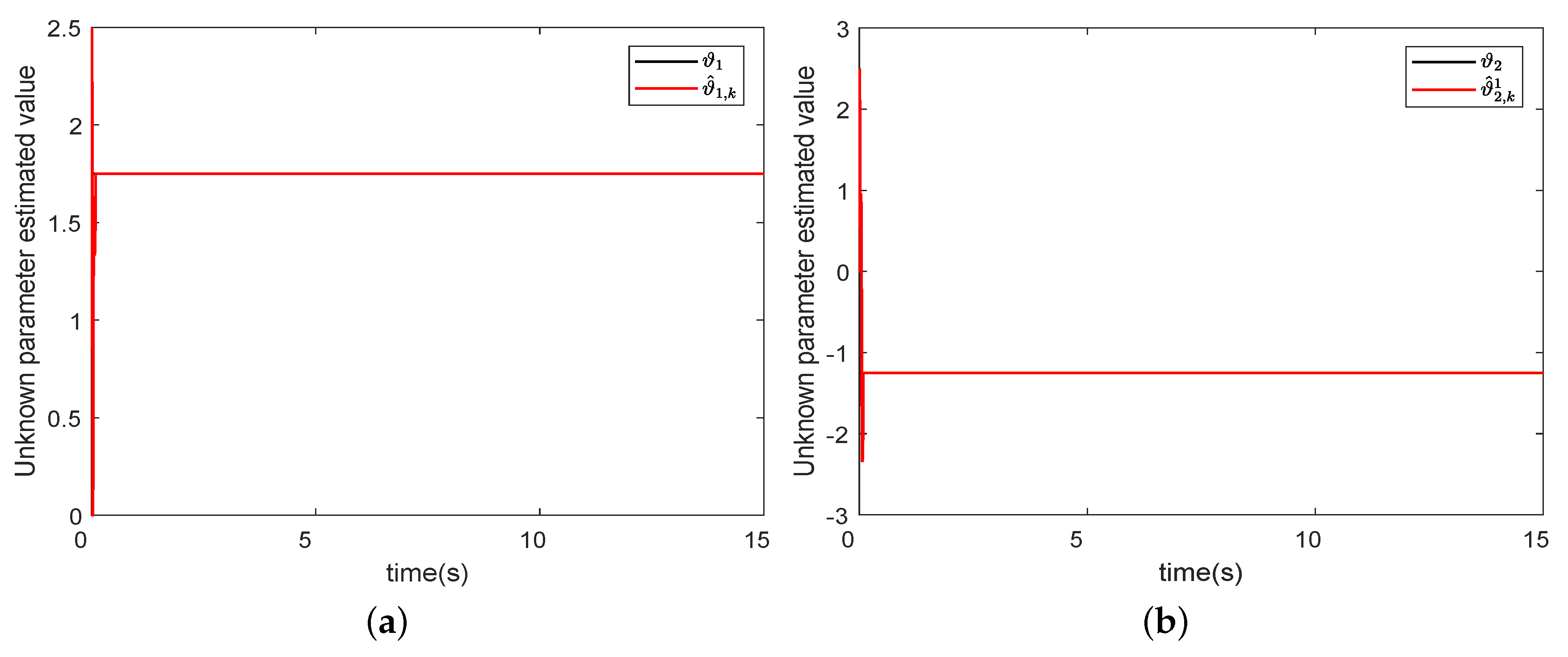

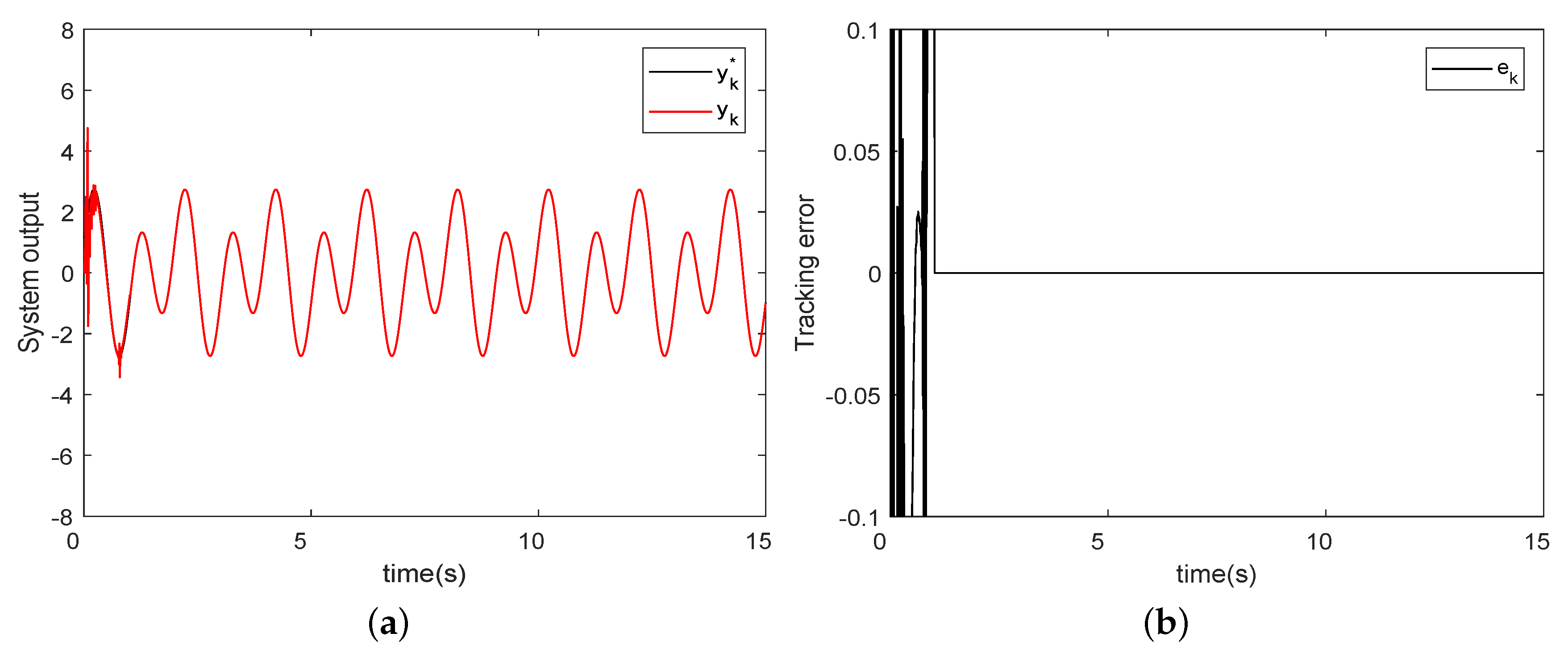

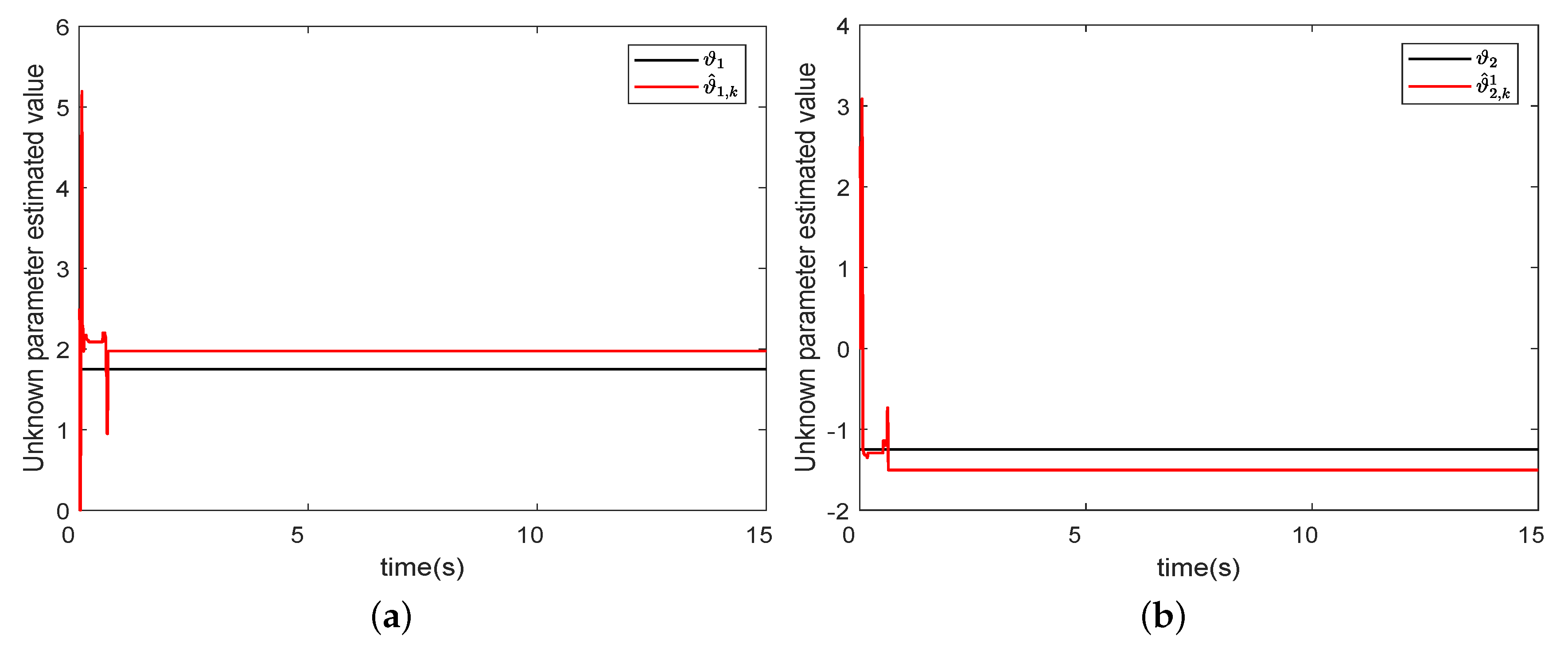

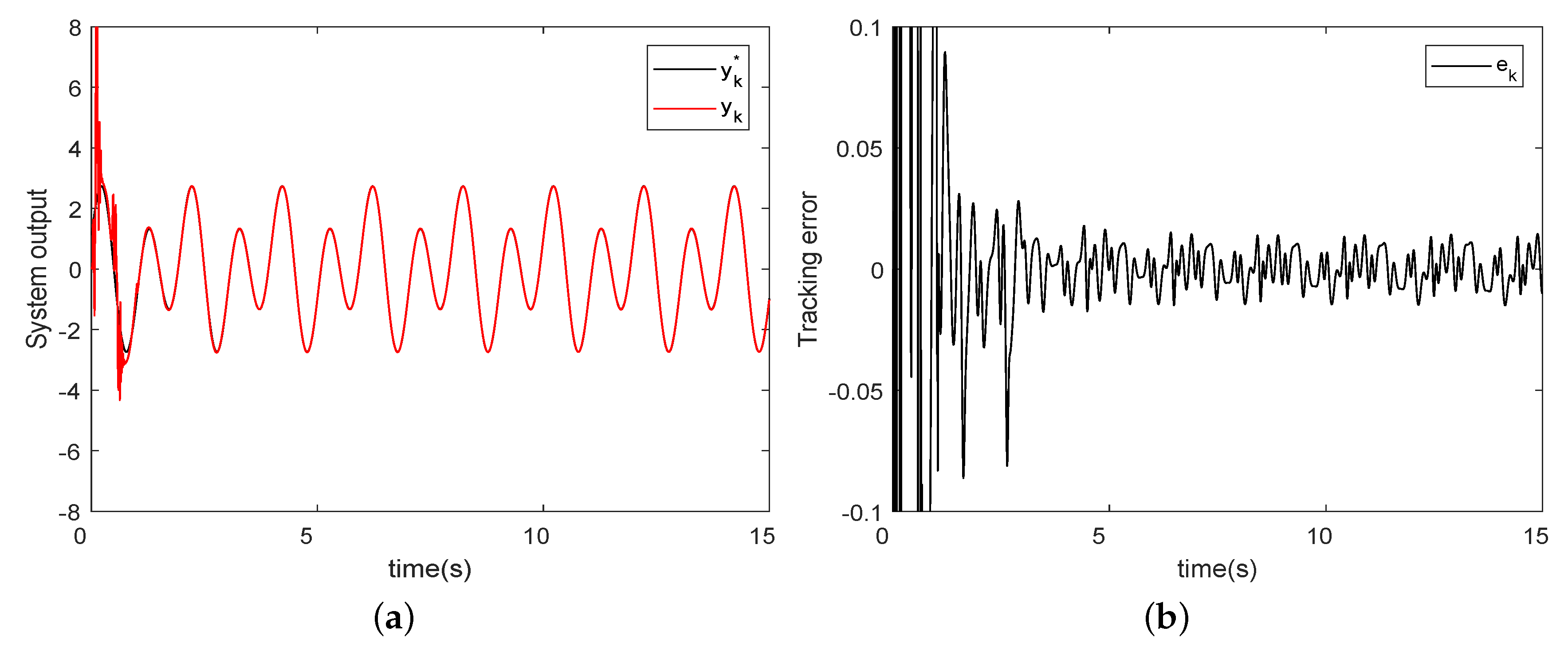

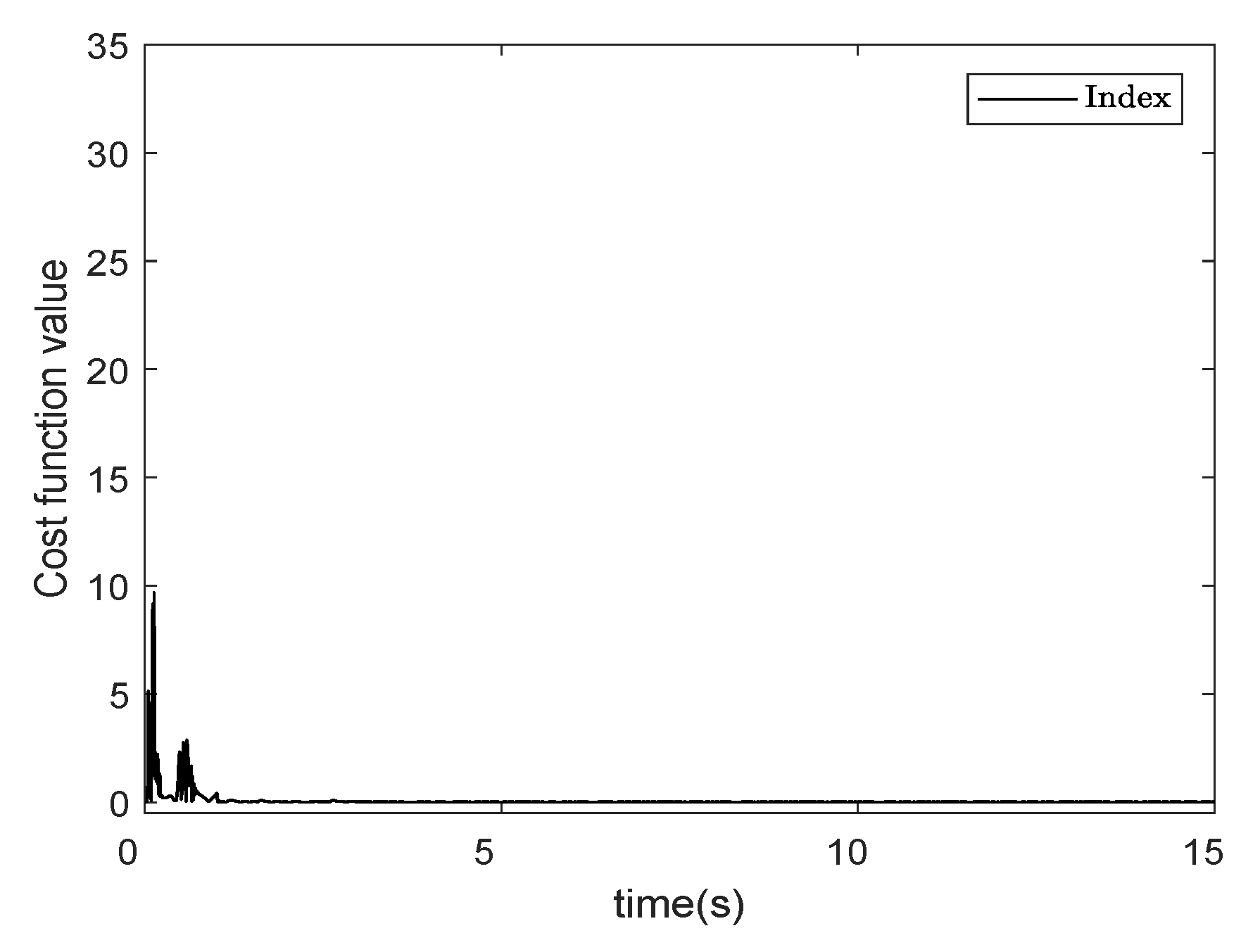

4.1. The First Simulation Example

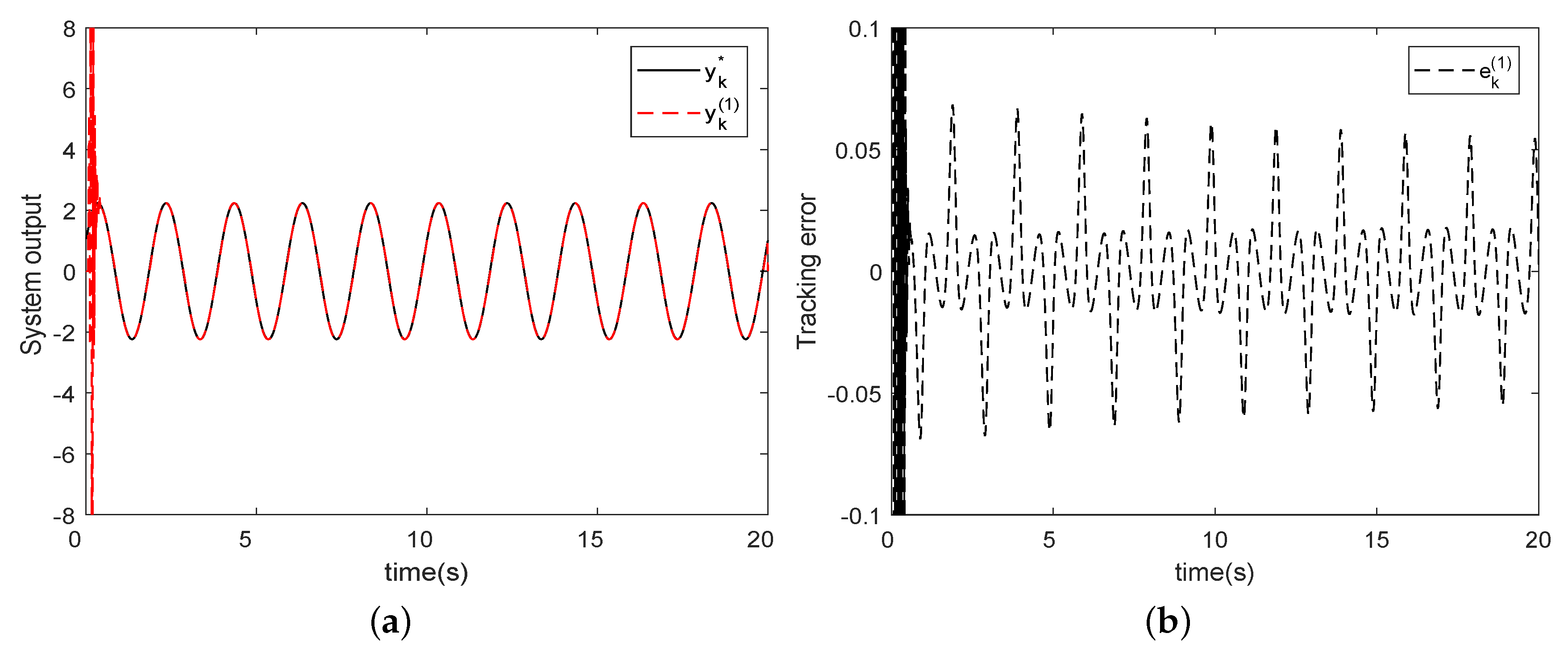

4.2. The Second Simulation Example

4.3. The Third Simulation Example

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest



© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, X.; Ma, H.; Zhang, H. Data-Driven Control Based on Information Concentration Estimator and Regularized Online Sequential Extreme Learning Machine. Symmetry 2024, 16, 88. https://doi.org/10.3390/sym16010088
