A Regularized Generalized Popov’s Method to Solve the Hierarchical Variational Inequality Problem with Generalized Lipschitzian Mappings

Abstract

1. Introduction

2. Preliminaries

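- (i) If the mapping $A$ satisfies $\langle Ax - Ay,\, x - y \rangle \ge 0$ for all $x, y$ in its domain, then $A$ is monotone.

- (ii) If, for some $\eta > 0$, the mapping $A$ satisfies $\langle Ax - Ay,\, x - y \rangle \ge \eta \|x - y\|^2$ for all $x, y$ in its domain, then $A$ is η-strongly monotone.

- (iii) If, for some $L > 0$, the mapping $A$ satisfies $\|Ax - Ay\| \le L \|x - y\|$ for all $x, y$ in its domain, then $A$ is L-Lipschitzian.

- (iv) If, for some $L > 0$, the mapping $A$ satisfies $\|Ax - Ay\| \le L (\|x - y\| + 1)$ for all $x, y$ in its domain, then $A$ is L-generalized Lipschitzian.

- (v) $A$ is hemicontinuous if, for all $x, y$ in its domain and every $z$, the function $t \mapsto \langle A(x + t(y - x)),\, z \rangle$ is continuous on $[0, 1]$.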
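As an informal illustration of definitions (i)–(iv), the sketch below checks the inequalities numerically on random pairs of real numbers. The mapping `A(x) = x + sign(x)` is a hypothetical example that does not come from the paper, and the inequalities are written in their usual textbook form, which is assumed here. The mapping has a jump at the origin, so it is not Lipschitzian, yet it satisfies the generalized Lipschitz bound with `L = 2`.

```python
import random

def sign(t):
    # Returns -1, 0 or 1.
    return (t > 0) - (t < 0)

def A(x):
    # Hypothetical example mapping on the real line (not from the paper).
    return x + sign(x)

def holds_on_samples(pred, n=10_000, seed=0):
    # Sampling can only refute an inequality, never prove it.
    rng = random.Random(seed)
    return all(pred(rng.uniform(-5, 5), rng.uniform(-5, 5)) for _ in range(n))

EPS = 1e-9  # tolerance for floating-point rounding

# (i) monotone: (Ax - Ay)(x - y) >= 0
monotone = holds_on_samples(lambda x, y: (A(x) - A(y)) * (x - y) >= -EPS)

# (ii) eta-strongly monotone with eta = 1: (Ax - Ay)(x - y) >= (x - y)^2
strongly_monotone = holds_on_samples(
    lambda x, y: (A(x) - A(y)) * (x - y) >= (x - y) ** 2 - EPS
)

# (iv) generalized Lipschitzian with L = 2: |Ax - Ay| <= 2 (|x - y| + 1)
gen_lipschitz = holds_on_samples(
    lambda x, y: abs(A(x) - A(y)) <= 2 * (abs(x - y) + 1) + EPS
)

# (iii) fails: across the jump at 0 the difference quotient is unbounded.
x, y = 1e-9, -1e-9
lipschitz_ratio = abs(A(x) - A(y)) / abs(x - y)

print(monotone, strongly_monotone, gen_lipschitz, lipschitz_ratio > 1e6)
```

The example shows why the generalized Lipschitz condition is strictly weaker than the Lipschitz condition: the additive constant absorbs bounded jumps that no Lipschitz constant could cover.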

- (i)

- ;

- (ii)

- ;

- (iii)

- ;

- (iv)

- .

3. Main Results

- ()

- A is k-Lipschitzian on and monotone on .

- ()

- F is hemicontinuous, -generalized Lipschitzian and -strongly monotone on .

- ()

- The solution set is nonempty.

- ()

- Let be a sequence of and meet , , .

- ()

- Let be a sequence and satisfy , , , where (where N is a chosen positive integer).

Algorithm 1: The multi-step inertial regularized generalized Popov’s extra-gradient method.

Initialization: Given , , , . Let , , be any three members of .

Step 1. Compute

Step 2. Given the current iterate , , and , compute as follows:

Step 3. Compute

Step 4. Set $n := n + 1$ and go to Step 1.
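For orientation, the following is a minimal sketch of the classical (non-inertial, non-regularized) Popov extra-gradient iteration on which Algorithm 1 builds. It is not the authors' Algorithm 1. The operator `A`, the box constraint, the step size `lam = 0.2`, and the helper names are all assumptions chosen for illustration. The operator is the skew-symmetric map `A(x) = (x2, -x1)`, which is monotone and 1-Lipschitzian, and the step size respects the classical bound `lam < 1/(3L)`.

```python
import math

def project_box(x, lo=-1.0, hi=1.0):
    # Metric projection onto the box [lo, hi]^n (assumed feasible set C).
    return [min(max(v, lo), hi) for v in x]

def A(x):
    # Assumed test operator: skew-symmetric, monotone, Lipschitz constant 1.
    return [x[1], -x[0]]

def popov(x0, lam=0.2, iters=2000):
    # Classical Popov scheme:
    #   x_{n+1} = P_C(x_n     - lam * A(y_n))
    #   y_{n+1} = P_C(x_{n+1} - lam * A(y_n))
    # Only one evaluation of A is needed per iteration.
    x = list(x0)
    y = list(x0)
    for _ in range(iters):
        g = A(y)
        x = project_box([xi - lam * gi for xi, gi in zip(x, g)])
        y = project_box([xi - lam * gi for xi, gi in zip(x, g)])
    return x

x_star = popov([0.5, 0.5])
residual = math.hypot(*x_star)  # distance to the unique solution (0, 0)
print(residual)
```

Reusing `A(y_n)` in both projections is what distinguishes Popov's scheme from Korpelevich's extragradient method, which evaluates the operator twice per iteration.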

- (i)

- For all , where τ is a positive constant,

- (ii)

- is bounded, and .

- (iii)

- .

- (i)

- ,

- (ii)

- .

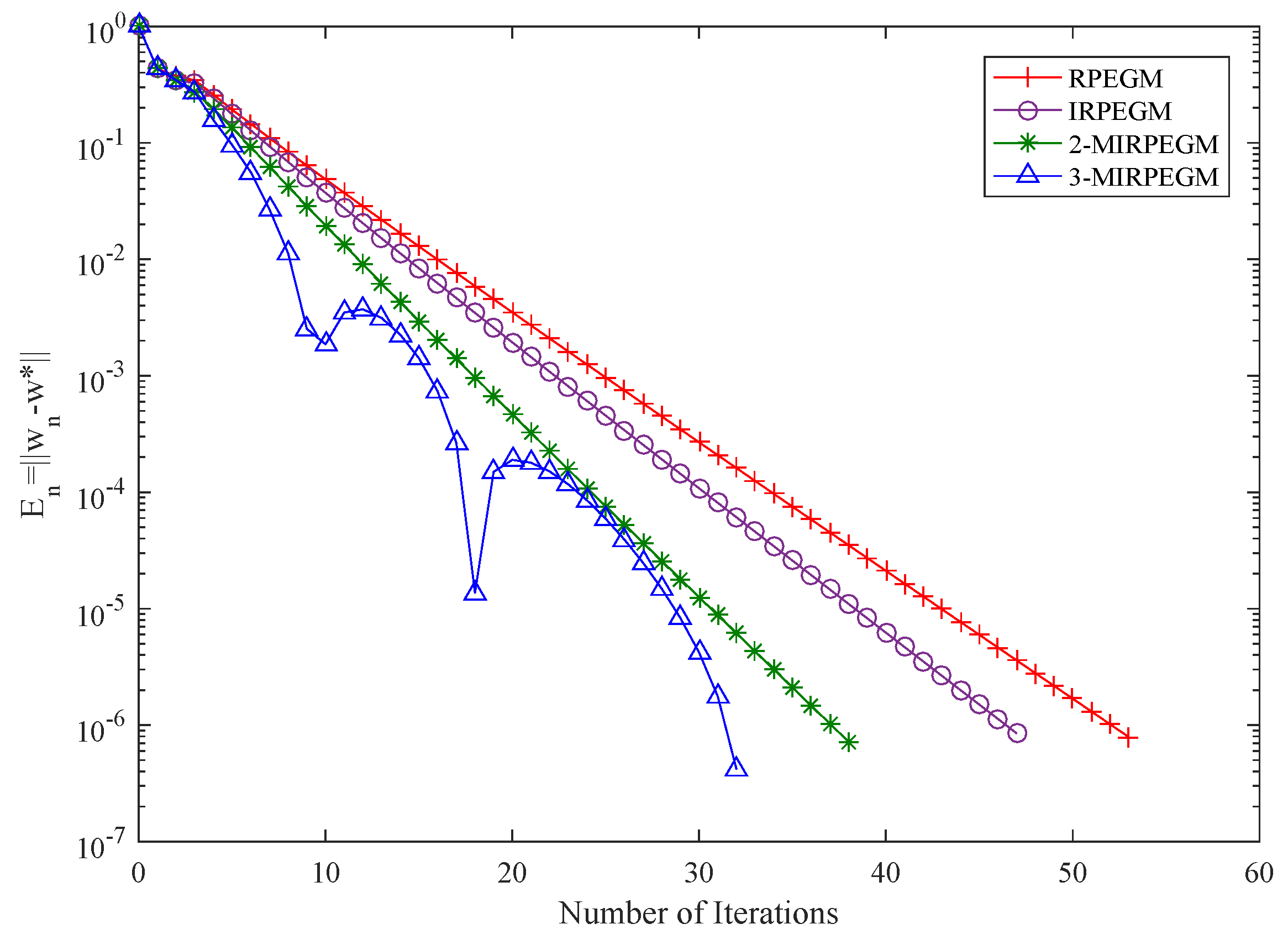

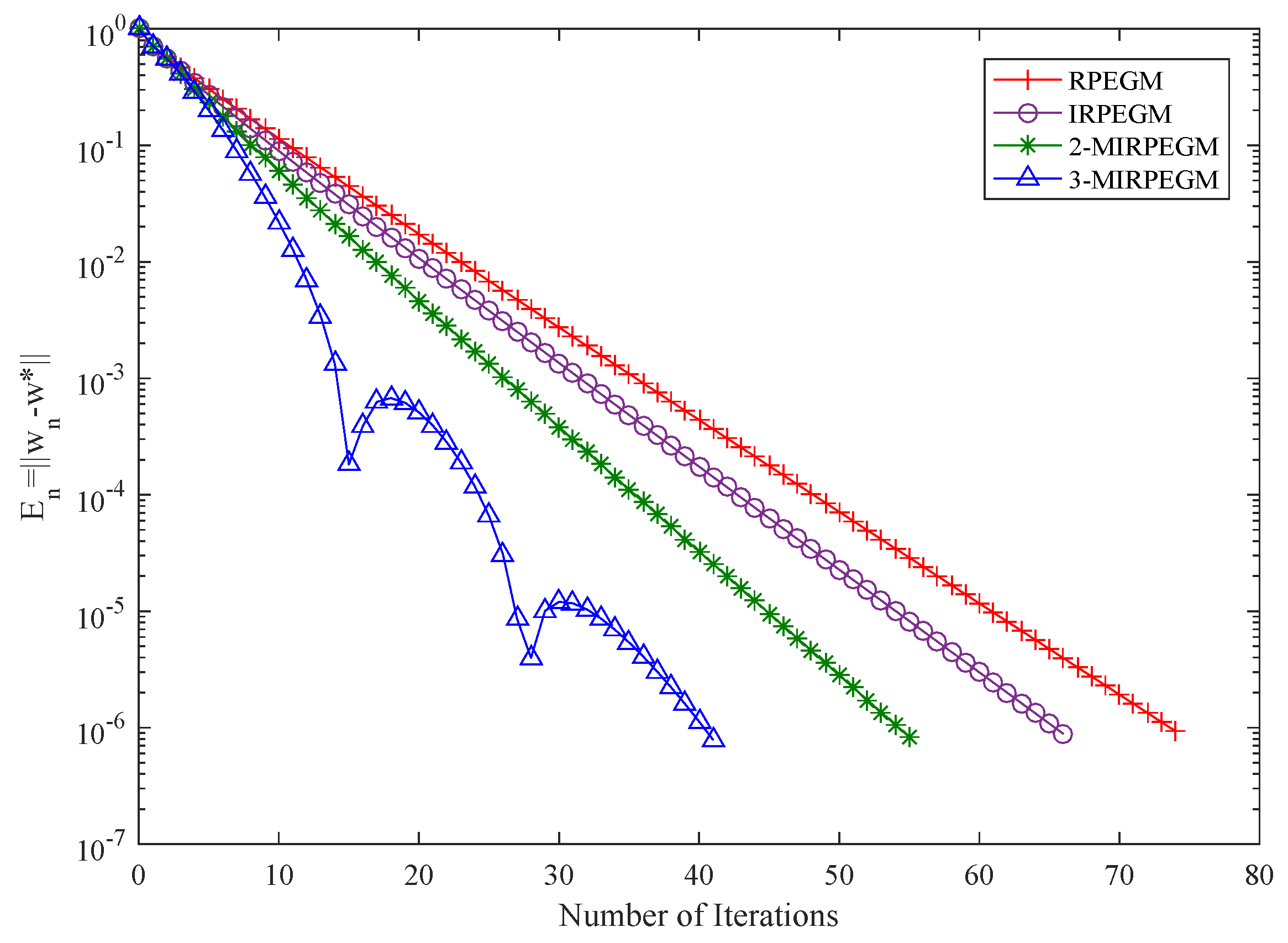

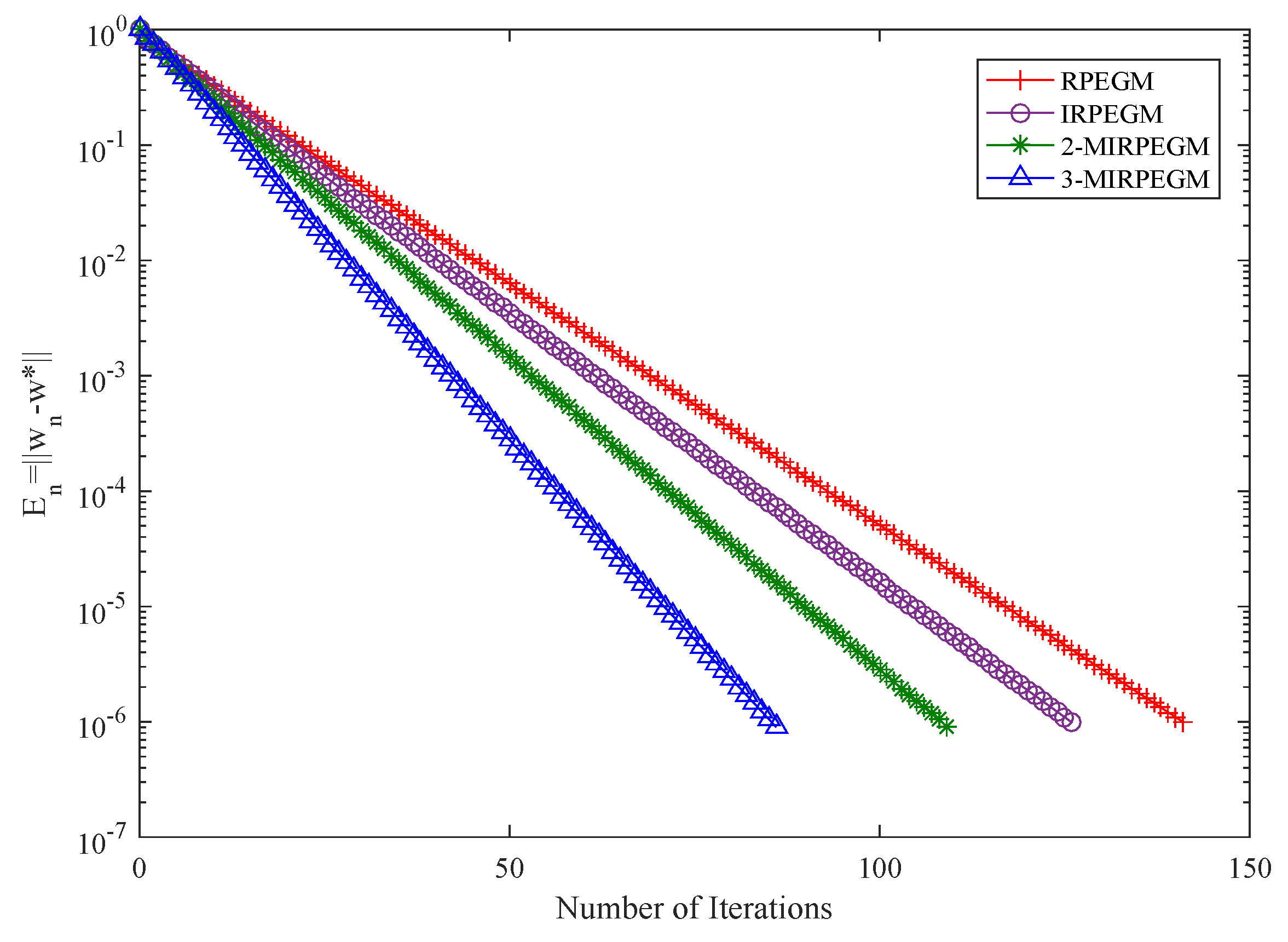

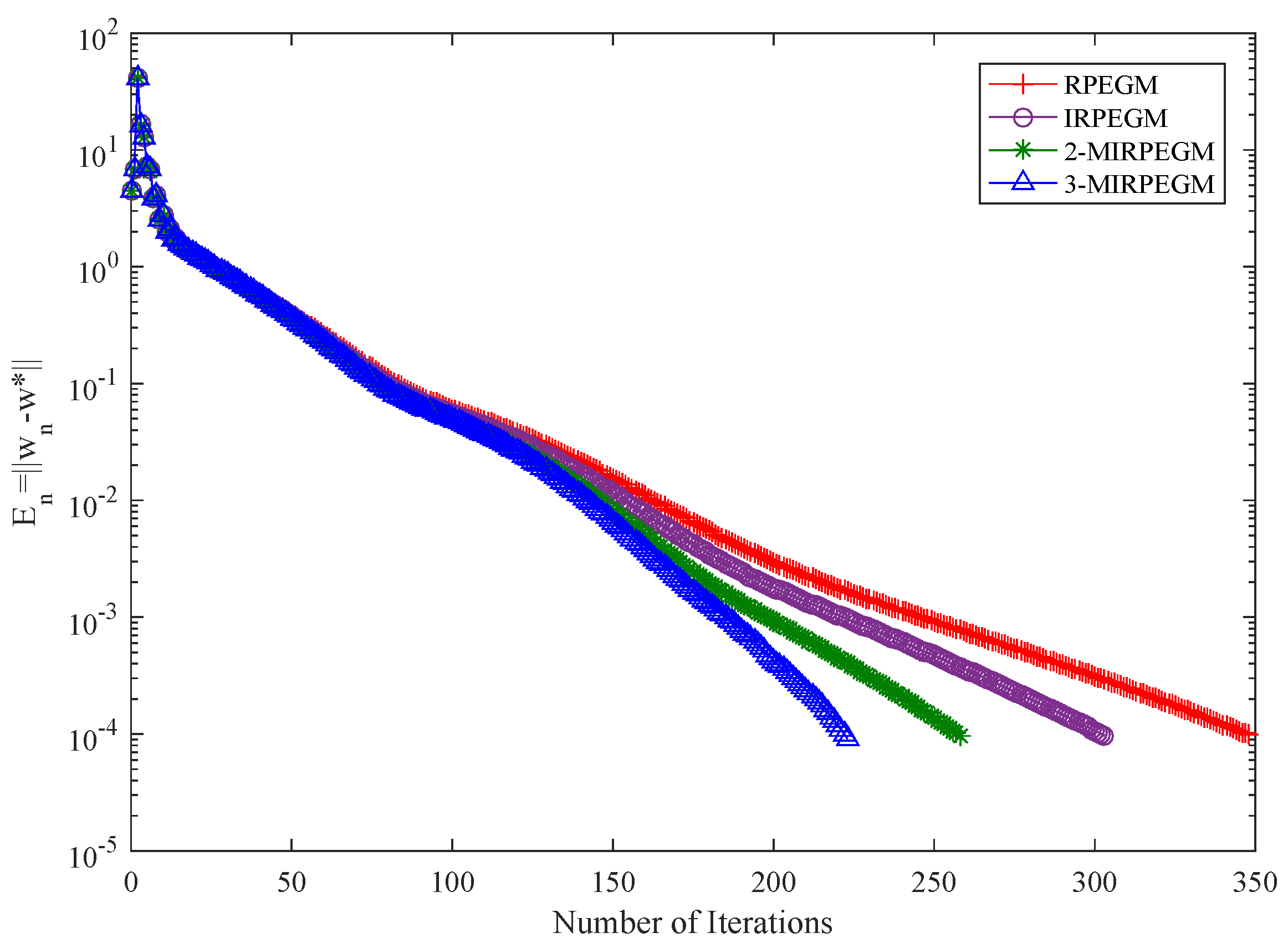

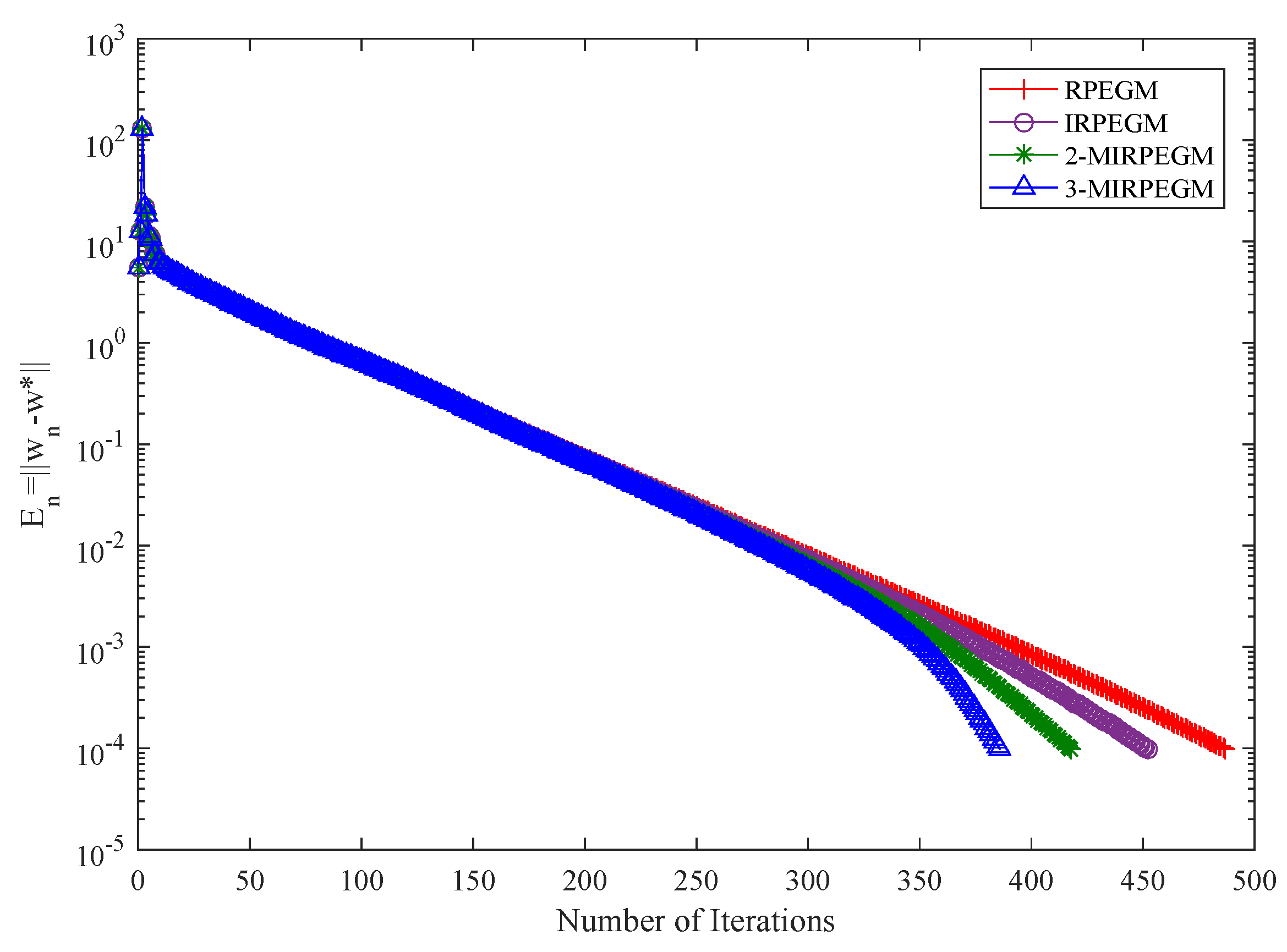

4. Numerical Examples

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


|  |  | IRPEGM Iter. | IRPEGM Time [s] | IRSEGM Iter. | IRSEGM Time [s] |
|---|---|---|---|---|---|
| 0.2 | 0.2 | 128 | 0.9596 | 167 | 1.2030 |
| 0.2 | 0.3 | 73 | 0.5893 | 99 | 0.8926 |
| 0.2 | 0.4 | 73 | 0.5891 | 167 | 1.2683 |
| 0.3 | 0.2 | 76 | 0.6052 | 188 | 1.3341 |
| 0.3 | 0.3 | 157 | 1.0814 | 184 | 1.3190 |
| 0.3 | 0.4 | 76 | 0.6191 | 184 | 1.3587 |
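The figures in this table can be summarized with a short script. The sketch below copies the six rows reported for IRPEGM and IRSEGM and computes mean iteration counts and run times. The variable names are illustrative, and the two parameter columns are omitted because their symbols did not survive in this copy.

```python
# Each row: (IRPEGM iterations, IRPEGM time [s], IRSEGM iterations, IRSEGM time [s]),
# copied from the table in the order listed.
rows = [
    (128, 0.9596, 167, 1.2030),
    (73, 0.5893, 99, 0.8926),
    (73, 0.5891, 167, 1.2683),
    (76, 0.6052, 188, 1.3341),
    (157, 1.0814, 184, 1.3190),
    (76, 0.6191, 184, 1.3587),
]

n = len(rows)
mean_iter_irpegm = sum(r[0] for r in rows) / n
mean_time_irpegm = sum(r[1] for r in rows) / n
mean_iter_irsegm = sum(r[2] for r in rows) / n
mean_time_irsegm = sum(r[3] for r in rows) / n

# True when IRPEGM needs fewer iterations and less time in every listed row.
irpegm_wins_every_row = all(r[0] < r[2] and r[1] < r[3] for r in rows)

print(f"IRPEGM: {mean_iter_irpegm:.1f} iterations, {mean_time_irpegm:.4f} s on average")
print(f"IRSEGM: {mean_iter_irsegm:.1f} iterations, {mean_time_irsegm:.4f} s on average")
print("IRPEGM faster in every row:", irpegm_wins_every_row)
```

Means over six parameter settings are only a coarse summary. The row-by-row comparison is the more reliable reading of the table.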


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Y.; Gao, Y.; Jiang, B. A Regularized Generalized Popov’s Method to Solve the Hierarchical Variational Inequality Problem with Generalized Lipschitzian Mappings. Symmetry 2022, 14, 187. https://doi.org/10.3390/sym14020187
