Speech Enhancement Model Synthesis Based on Federal Learning for Industrial CPS in Multiple Noise Conditions

Abstract

1. Introduction

2. Models and Methods

2.1. Problem Statement

2.2. Teacher Model

2.2.1. Encoder

2.2.2. TSTM

2.2.3. Masking

2.2.4. Decoder

2.2.5. Loss

2.3. Student Model with Mean Distillation

3. Experiment

3.1. Dataset

- (1) Teacher models: We built four teacher models, each trained in a different noise environment. To train them, we selected 11,572 clean utterances from VoiceBank [45]. The VoiceBank corpus consists of 30 native English speakers from different parts of the world, each reading approximately 400 sentences; we used 28 speakers for training and 2 for testing. To generate the noisy speech dataset, we used four noises common in industrial CPS environments (babble, white, destroyerops, and factory, all from the NoiseX-92 dataset [46]). Each training utterance was mixed with the corresponding noise at a random SNR of dB. The test set had an SNR of dB.

- (2) Student model: A total of 6300 clean utterances were selected from the TIMIT training dataset and mixed at dB, at random, with the four noises used in the teacher model dataset. This produced noisy speech samples. Of these, were used as the training dataset, and 1750 were used as the validation dataset. To evaluate the student model, 1344 utterances were selected from the TIMIT test dataset and mixed at dB with each of the four noises used in the two datasets above. The resulting 5376 noisy speech samples (1344 utterances × 4 noises) were used as the test dataset.

| Dataset | Type | Information | Source |
|---|---|---|---|
| Clean speech | Teacher model | 30 speakers (28 for training, 2 for testing) | VoiceBank corpus [45] |
| Clean speech | Student model | 630 speakers (462 for training, 168 for testing) | TIMIT [47] |
| Noise | White | Sampling a high-quality analog noise generator | NoiseX-92 [46] |
| Noise | Babble | 100 people speaking in a canteen | NoiseX-92 [46] |
| Noise | Factory | Plate-cutting and electrical welding equipment | NoiseX-92 [46] |
| Noise | Destroyerops | Samples from microphone on digital audio tape | NoiseX-92 [46] |
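The noisy datasets described above are built by adding a noise recording to clean speech at a target SNR. The following is a minimal sketch of that mixing step, not the authors' pipeline: the function name `mix_at_snr`, the 16 kHz sampling rate, the synthetic tone standing in for speech, the Gaussian noise standing in for a NoiseX-92 recording, and the 5 dB target are all assumptions made for illustration.

```python
import numpy as np

def mix_at_snr(clean, noise, snr_db, rng):
    """Add a random noise segment to `clean`, scaled to the requested SNR in dB."""
    # Pick a random segment as long as the clean signal
    # (assumes the noise recording is at least as long as the utterance).
    start = rng.integers(0, len(noise) - len(clean) + 1)
    segment = noise[start:start + len(clean)]
    clean_power = np.mean(clean ** 2)
    noise_power = np.mean(segment ** 2)
    # SNR_dB = 10 * log10(P_clean / (gain^2 * P_noise)), solved for gain.
    gain = np.sqrt(clean_power / (noise_power * 10 ** (snr_db / 10)))
    return clean + gain * segment

rng = np.random.default_rng(0)
# Hypothetical stand-ins: a 1 s tone for speech, white Gaussian noise for the recording.
clean = np.sin(2 * np.pi * 440 * np.arange(16000) / 16000)
noise = rng.standard_normal(48000)
noisy = mix_at_snr(clean, noise, snr_db=5.0, rng=rng)

# Check the SNR that was actually achieved.
achieved = 10 * np.log10(np.mean(clean ** 2) / np.mean((noisy - clean) ** 2))
print(f"achieved SNR: {achieved:.2f} dB")
```

Scaling the noise rather than the speech keeps the clean reference unchanged, which is convenient when the same clean utterance is later used as the training target.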

3.2. Training Detail

3.3. Evaluation Metrics

4. Discussion

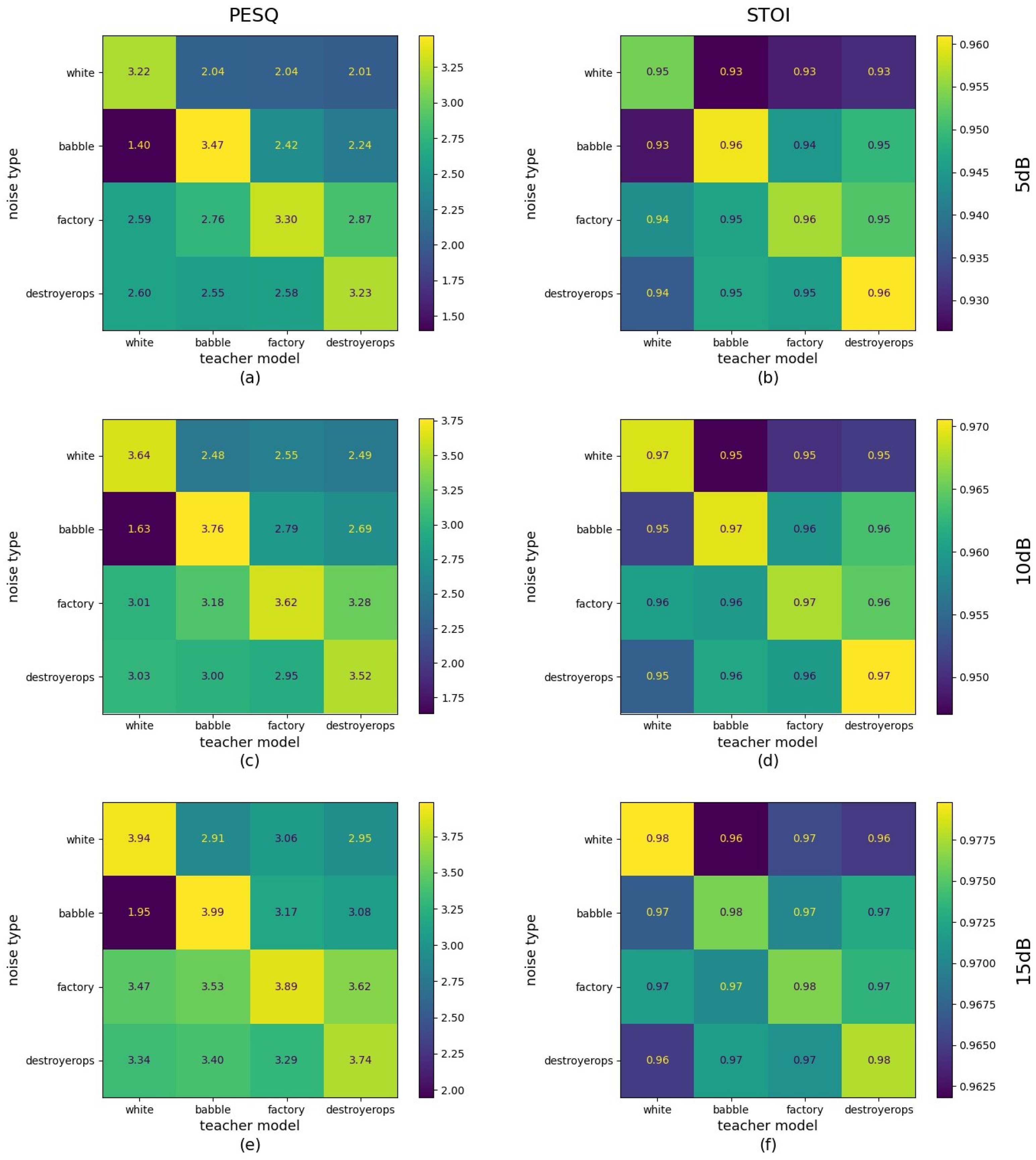

4.1. Evaluation of the Teacher Models

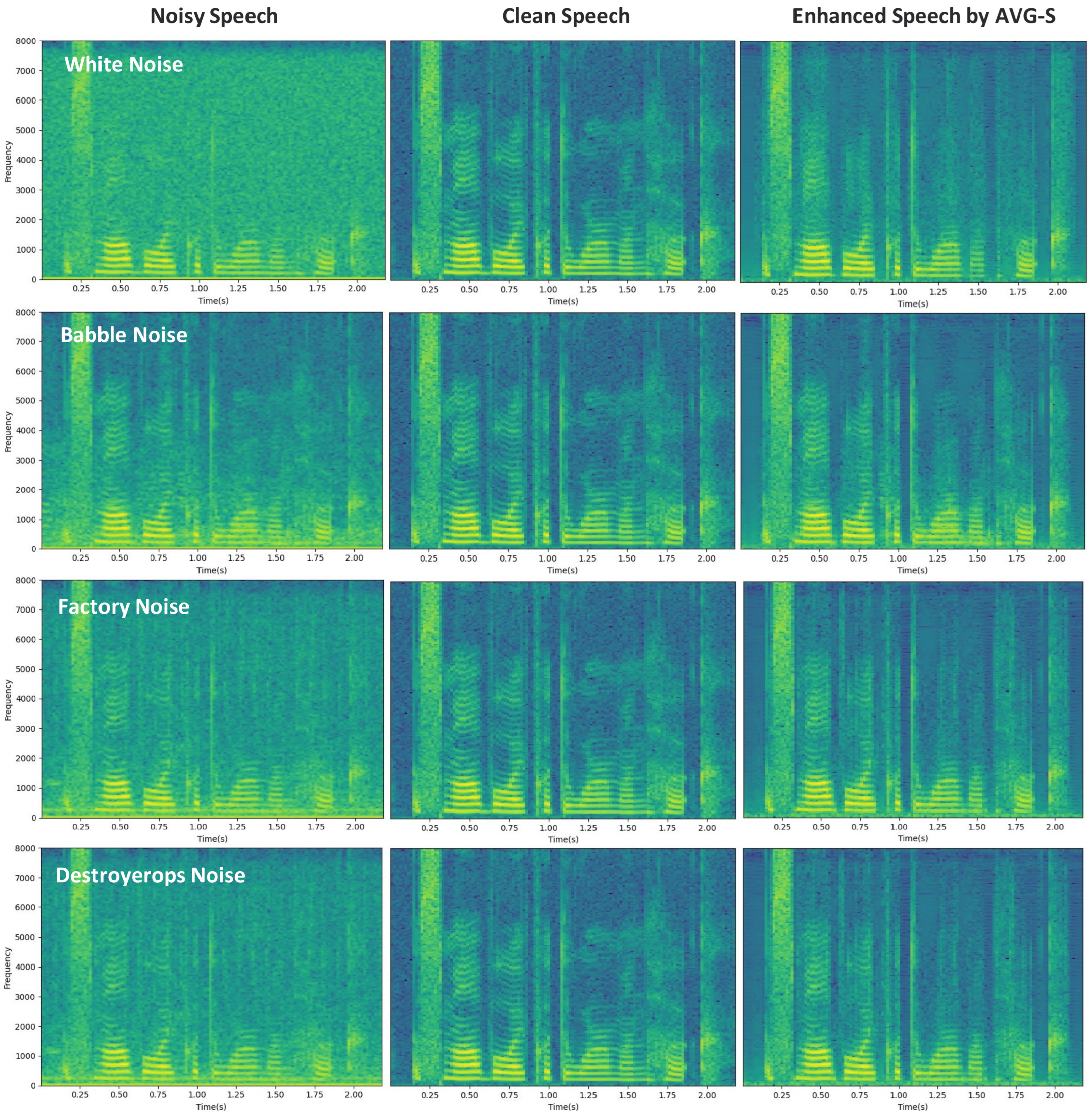

4.2. Student Models on TIMIT + NoiseX92

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Konstantinou, C.; Maniatakos, M.; Saqib, F.; Hu, S.; Plusquellic, J.; Jin, Y. Cyber-physical systems: A security perspective. In Proceedings of the 2015 20th IEEE European Test Symposium (ETS), Cluj-Napoca, Romania, 25–29 May 2015; pp. 1–8.

2. Sanislav, T.; Mois, G.; Miclea, L. An approach to model dependability of cyber-physical systems. Microprocess. Microsyst. 2016, 41, 67–76.

3. Wang, C.; Lv, Y.; Wang, Q.; Yang, D.; Zhou, G. Service-Oriented Real-Time Smart Job Shop Symmetric CPS Based on Edge Computing. Symmetry 2021, 13, 1839.

4. Loizou, P.C. Speech Enhancement: Theory and Practice; CRC Press: Boca Raton, FL, USA, 2007.

5. Boll, S. Suppression of acoustic noise in speech using spectral subtraction. IEEE Trans. Acoust. Speech Signal Process. 1979, 27, 113–120.

6. Lim, J.; Oppenheim, A. All-pole modeling of degraded speech. IEEE Trans. Acoust. Speech Signal Process. 1978, 26, 197–210.

7. Ephraim, Y.; Malah, D. Speech enhancement using a minimum mean-square error log-spectral amplitude estimator. IEEE Trans. Acoust. Speech Signal Process. 1985, 33, 443–445.

8. Ephraim, Y.; Van Trees, H.L. A signal subspace approach for speech enhancement. IEEE Trans. Speech Audio Process. 1995, 3, 251–266.

9. Purwins, H.; Li, B.; Virtanen, T.; Schlüter, J.; Chang, S.Y.; Sainath, T. Deep learning for audio signal processing. IEEE J. Sel. Top. Signal Process. 2019, 13, 206–219.

10. Zhang, Q.; Nicolson, A.; Wang, M.; Paliwal, K.K.; Wang, C. DeepMMSE: A deep learning approach to MMSE-based noise power spectral density estimation. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 1404–1415.

11. Li, A.; Yuan, M.; Zheng, C.; Li, X. Speech enhancement using progressive learning-based convolutional recurrent neural network. Appl. Acoust. 2020, 166, 107347.

12. Roman, N.; Woodruff, J. Ideal binary masking in reverberation. In Proceedings of the 2012 20th European Signal Processing Conference (EUSIPCO), Bucharest, Romania, 27–31 August 2012; pp. 629–633.

13. Li, X.; Li, J.; Yan, Y. Ideal Ratio Mask Estimation Using Deep Neural Networks for Monaural Speech Segregation in Noisy Reverberant Conditions. In Proceedings of the Interspeech, Stockholm, Sweden, 20–24 August 2017; pp. 1203–1207.

14. Tengtrairat, N.; Woo, W.L.; Dlay, S.S.; Gao, B. Online noisy single-channel source separation using adaptive spectrum amplitude estimator and masking. IEEE Trans. Signal Process. 2015, 64, 1881–1895.

15. Paliwal, K.; Wójcicki, K.; Shannon, B. The importance of phase in speech enhancement. Speech Commun. 2011, 53, 465–494.

16. Erdogan, H.; Hershey, J.R.; Watanabe, S.; Le Roux, J. Phase-sensitive and recognition-boosted speech separation using deep recurrent neural networks. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, QLD, Australia, 19–24 April 2015; pp. 708–712.

17. Williamson, D.S.; Wang, Y.; Wang, D. Complex ratio masking for monaural speech separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2015, 24, 483–492.

18. Tan, K.; Wang, D. Complex spectral mapping with a convolutional recurrent network for monaural speech enhancement. In Proceedings of the ICASSP 2019–2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 6865–6869.

19. Le, X.; Chen, H.; Chen, K.; Lu, J. DPCRN: Dual-Path Convolution Recurrent Network for Single Channel Speech Enhancement. In Proceedings of the Interspeech 2021, Brno, Czech Republic, 30 August–3 September 2021; pp. 2811–2815.

20. Luo, Y.; Mesgarani, N. Tasnet: Time-domain audio separation network for real-time, single-channel speech separation. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 696–700.

21. Luo, Y.; Mesgarani, N. Conv-tasnet: Surpassing ideal time–frequency magnitude masking for speech separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 27, 1256–1266.

22. Pascual, S.; Bonafonte, A.; Serrà, J. SEGAN: Speech Enhancement Generative Adversarial Network. In Proceedings of the Interspeech 2017, Stockholm, Sweden, 20–24 August 2017; pp. 3642–3646.

23. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

24. Oord, A.V.D.; Dieleman, S.; Zen, H.; Simonyan, K.; Vinyals, O.; Graves, A.; Kalchbrenner, N.; Senior, A.; Kavukcuoglu, K. Wavenet: A generative model for raw audio. arXiv 2016, arXiv:1609.03499.

25. Qian, K.; Zhang, Y.; Chang, S.; Yang, X.; Florêncio, D.; Hasegawa-Johnson, M. Speech Enhancement Using Bayesian Wavenet. In Proceedings of the Interspeech, Stockholm, Sweden, 20–24 August 2017; pp. 2013–2017.

26. Rethage, D.; Pons, J.; Serra, X. A wavenet for speech denoising. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5069–5073.

27. Fu, S.W.; Tsao, Y.; Lu, X.; Kawai, H. Raw waveform-based speech enhancement by fully convolutional networks. In Proceedings of the 2017 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Kuala Lumpur, Malaysia, 12–15 December 2017; pp. 6–12.

28. Fu, S.W.; Wang, T.W.; Tsao, Y.; Lu, X.; Kawai, H. End-to-end waveform utterance enhancement for direct evaluation metrics optimization by fully convolutional neural networks. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 26, 1570–1584.

29. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the Artificial Intelligence and Statistics, PMLR, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

30. Bonawitz, K.; Ivanov, V.; Kreuter, B.; Marcedone, A.; McMahan, H.B.; Patel, S.; Ramage, D.; Segal, A.; Seth, K. Practical secure aggregation for privacy-preserving machine learning. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 1175–1191.

31. Malinin, A.; Mlodozeniec, B.; Gales, M. Ensemble distribution distillation. arXiv 2019, arXiv:1905.00076.

32. Du, S.; You, S.; Li, X.; Wu, J.; Wang, F.; Qian, C.; Zhang, C. Agree to disagree: Adaptive ensemble knowledge distillation in gradient space. Adv. Neural Inf. Process. Syst. 2020, 33, 12345–12355.

33. Zhou, K.; Yang, Y.; Qiao, Y.; Xiang, T. Domain adaptive ensemble learning. IEEE Trans. Image Process. 2021, 30, 8008–8018.

34. Meng, Z.; Li, J.; Gong, Y.; Juang, B.H. Adversarial Teacher-Student Learning for Unsupervised Domain Adaptation. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5949–5953.

35. Watanabe, S.; Hori, T.; Le Roux, J.; Hershey, J.R. Student-teacher network learning with enhanced features. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 5275–5279.

36. Nakaoka, S.; Li, L.; Inoue, S.; Makino, S. Teacher-student learning for low-latency online speech enhancement using wave-u-net. In Proceedings of the ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 661–665.

37. Kim, S.; Kim, M. Test-time adaptation toward personalized speech enhancement: Zero-shot learning with knowledge distillation. In Proceedings of the 2021 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, 17–20 October 2021; pp. 176–180.

38. Hao, X.; Wen, S.; Su, X.; Liu, Y.; Gao, G.; Li, X. Sub-Band Knowledge Distillation Framework for Speech Enhancement. In Proceedings of the Interspeech 2020, Shanghai, China, 25–29 October 2020; pp. 2687–2691.

39. Hao, X.; Su, X.; Wang, Z.; Zhang, Q.; Xu, H.; Gao, G. SNR-based teachers-student technique for speech enhancement. In Proceedings of the 2020 IEEE International Conference on Multimedia and Expo (ICME), London, UK, 6–10 July 2020; pp. 1–6.

40. Wang, K.; He, B.; Zhu, W.P. TSTNN: Two-stage transformer based neural network for speech enhancement in the time domain. In Proceedings of the ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 7098–7102.

41. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

42. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, Long Beach, CA, USA, 4–9 December 2017.

43. Li, P.; Jiang, Z.; Yin, S.; Song, D.; Ouyang, P.; Liu, L.; Wei, S. Pagan: A phase-adapted generative adversarial networks for speech enhancement. In Proceedings of the ICASSP 2020–2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 6234–6238.

44. Pandey, A.; Wang, D. Densely connected neural network with dilated convolutions for real-time speech enhancement in the time domain. In Proceedings of the ICASSP 2020–2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 6629–6633.

45. Veaux, C.; Yamagishi, J.; King, S. The voice bank corpus: Design, collection and data analysis of a large regional accent speech database. In Proceedings of the 2013 International Conference Oriental COCOSDA Held Jointly with 2013 Conference on Asian Spoken Language Research and Evaluation (O-COCOSDA/CASLRE), Gurgaon, India, 25–27 November 2013; pp. 1–4.

46. Varga, A.; Steeneken, H.J. Assessment for automatic speech recognition: II. NOISEX-92: A database and an experiment to study the effect of additive noise on speech recognition systems. Speech Commun. 1993, 12, 247–251.

47. Garofolo, J.S.; Lamel, L.F.; Fisher, W.M.; Fiscus, J.G.; Pallett, D.S. DARPA TIMIT acoustic-phonetic continous speech corpus CD-ROM. NIST speech disc 1-1.1. NASA STI/Recon Tech. Rep. N 1993, 93, 27403.

48. Taal, C.H.; Hendriks, R.C.; Heusdens, R.; Jensen, J. An algorithm for intelligibility prediction of time–frequency weighted noisy speech. IEEE Trans. Audio Speech Lang. Process. 2011, 19, 2125–2136.

49. Kong, J.; Kim, J.; Bae, J. Hifi-gan: Generative adversarial networks for efficient and high fidelity speech synthesis. In Proceedings of the Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, Virtual, 6–12 December 2020; pp. 17022–17033.

| Algorithm: student model training with mean distillation |
|---|
| Input: … // initial student model weights |
| Output: … |
| 1. for … while … do |
| 2. … // available teacher models |
| 3. … (split into batches) |
| 4. for batch t in … do |
| 5. … // average distillation from the teacher models |
| 6. … |
| 8. end |
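The training loop outlined above can be illustrated with a toy example. This is a sketch under stated assumptions rather than the authors' implementation: the student and the four teachers are hypothetical linear maps instead of neural speech enhancement models, the loss is assumed to be the mean squared error between the student output and the average of the teacher outputs, and the names `mean_distillation_step`, `teachers`, and `lr` are introduced only for illustration.

```python
import numpy as np

def mean_distillation_step(w, x, teachers, lr=0.1):
    """One gradient step pulling a linear student toward the average teacher output."""
    target = np.mean([t(x) for t in teachers], axis=0)  # average of teacher outputs
    pred = x @ w                                         # student output
    loss = np.mean((pred - target) ** 2)                 # assumed distillation loss (MSE)
    grad = 2 * x.T @ (pred - target) / len(x)            # gradient of the MSE w.r.t. w
    return w - lr * grad, loss

rng = np.random.default_rng(0)
dim = 8
# Hypothetical teachers: one fixed linear map per noise condition.
teacher_weights = [rng.standard_normal(dim) for _ in range(4)]
teachers = [lambda x, tw=tw: x @ tw for tw in teacher_weights]

w = np.zeros(dim)  # initial student model weights
losses = []
for epoch in range(50):
    x_all = rng.standard_normal((256, dim))   # stand-in for one epoch of training data
    for batch in np.array_split(x_all, 8):    # split into batches
        w, loss = mean_distillation_step(w, batch, teachers)
        losses.append(loss)

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.2e}")
```

In this toy setting the student converges to the mean of the teacher weights, which is the sense in which averaging the teacher outputs blends what the individual teachers have learned into a single model.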

| Noise type, SNR (dB) | White, 5 | White, 10 | White, 15 | Babble, 5 | Babble, 10 | Babble, 15 | Factory, 5 | Factory, 10 | Factory, 15 | Destroyerops, 5 | Destroyerops, 10 | Destroyerops, 15 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Noisy | 1.3502 | 1.6011 | 1.9188 | 1.955 | 2.3697 | 2.765 | 1.7486 | 2.1791 | 2.6098 | 1.7337 | 2.1716 | 2.594 |
| White-T | 3.2178 | 3.6357 | 3.9430 | 2.0419 | 2.4767 | 2.9081 | 2.0387 | 2.5519 | 3.0627 | 2.0122 | 2.4935 | 2.9522 |
| Babble-T | 1.3991 | 1.6337 | 1.9476 | 3.4725 | 3.7636 | 3.9862 | 2.4183 | 2.7894 | 3.1658 | 2.2434 | 2.6867 | 3.0835 |
| Factory-T | 2.5898 | 3.0094 | 3.4656 | 2.7635 | 3.1792 | 3.5274 | 3.2973 | 3.6181 | 3.8932 | 2.8717 | 3.2751 | 3.6161 |
| Destroyerops-T | 2.6038 | 3.0335 | 3.3431 | 2.5517 | 2.9989 | 3.3964 | 2.5754 | 2.9510 | 3.2934 | 3.2275 | 3.5150 | 3.7427 |

| Noise type, SNR (dB) | White, 5 | White, 10 | White, 15 | Babble, 5 | Babble, 10 | Babble, 15 | Factory, 5 | Factory, 10 | Factory, 15 | Destroyerops, 5 | Destroyerops, 10 | Destroyerops, 15 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Noisy | 0.9200 | 0.9481 | 0.9668 | 0.9235 | 0.9446 | 0.96 | 0.9241 | 0.9465 | 0.9628 | 0.9264 | 0.9478 | 0.9619 |
| White-T | 0.9538 | 0.9693 | 0.9798 | 0.9265 | 0.9471 | 0.9618 | 0.9291 | 0.9504 | 0.9660 | 0.9309 | 0.9513 | 0.9648 |
| Babble-T | 0.9283 | 0.9516 | 0.9671 | 0.9602 | 0.9696 | 0.9762 | 0.9449 | 0.9595 | 0.9701 | 0.9493 | 0.9638 | 0.9728 |
| Factory-T | 0.9435 | 0.9604 | 0.9718 | 0.9466 | 0.9597 | 0.9704 | 0.9563 | 0.9677 | 0.9763 | 0.9517 | 0.9647 | 0.9735 |
| Destroyerops-T | 0.9366 | 0.9543 | 0.9650 | 0.9471 | 0.9612 | 0.9718 | 0.9458 | 0.9599 | 0.9709 | 0.9610 | 0.9706 | 0.9777 |

| Noise type, SNR (dB) | White, 5 | White, 10 | White, 15 | Babble, 5 | Babble, 10 | Babble, 15 | Factory, 5 | Factory, 10 | Factory, 15 | Destroyerops, 5 | Destroyerops, 10 | Destroyerops, 15 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Noisy | 1.3314 | 1.6784 | 2.1967 | 1.8224 | 2.321 | 2.8465 | 1.7311 | 2.2928 | 2.9016 | 1.6181 | 2.1384 | 2.7365 |
| Random-S | 2.4116 | 2.9373 | 3.3127 | 2.4754 | 2.9764 | 3.3710 | 2.3568 | 2.9448 | 3.3852 | 2.3273 | 2.9081 | 3.3567 |
| AVE-S | 2.4503 | 2.9564 | 3.3043 | 2.4846 | 3.0094 | 3.3901 | 2.3917 | 2.9798 | 3.3879 | 2.3875 | 2.9657 | 3.3784 |
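As a reading aid for the table above, the short script below compares the AVE-S and Random-S rows condition by condition. It is only an arithmetic sketch: the scores are copied from the table, and the variable names are introduced here for illustration.

```python
# Scores copied from the table above, ordered as
# white, babble, factory, destroyerops, each at 5, 10, and 15 dB.
random_s = [2.4116, 2.9373, 3.3127, 2.4754, 2.9764, 3.3710,
            2.3568, 2.9448, 3.3852, 2.3273, 2.9081, 3.3567]
ave_s = [2.4503, 2.9564, 3.3043, 2.4846, 3.0094, 3.3901,
         2.3917, 2.9798, 3.3879, 2.3875, 2.9657, 3.3784]

# Per-condition difference between the two student models.
gains = [a - r for a, r in zip(ave_s, random_s)]
mean_gain = sum(gains) / len(gains)
wins = sum(g > 0 for g in gains)

print(f"mean gain of AVE-S over Random-S: {mean_gain:.4f}")
print(f"AVE-S scores higher in {wins} of {len(gains)} conditions")
```

On these numbers AVE-S scores higher in every condition except white noise at 15 dB, and the gaps are largest at 5 dB for white, factory, and destroyerops noise.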

| Noise type, SNR (dB) | White, 5 | White, 10 | White, 15 | Babble, 5 | Babble, 10 | Babble, 15 | Factory, 5 | Factory, 10 | Factory, 15 | Destroyerops, 5 | Destroyerops, 10 | Destroyerops, 15 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Noisy | 0.9440 | 0.9767 | 0.9912 | 0.9097 | 0.9537 | 0.9782 | 0.9271 | 0.9667 | 0.9860 | 0.9022 | 0.9501 | 0.9777 |
| Random-S | 0.9475 | 0.9734 | 0.9854 | 0.9479 | 0.9702 | 0.9831 | 0.9547 | 0.9758 | 0.9867 | 0.9428 | 0.9677 | 0.9824 |
| AVE-S | 0.9505 | 0.9745 | 0.9853 | 0.9482 | 0.9721 | 0.9843 | 0.9578 | 0.9778 | 0.9874 | 0.9450 | 0.9701 | 0.9837 |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, K.; Lu, W.; Zhou, H.; Yao, J. Speech Enhancement Model Synthesis Based on Federal Learning for Industrial CPS in Multiple Noise Conditions. Symmetry 2022, 14, 2285. https://doi.org/10.3390/sym14112285