Performance Evaluation of an Independent Time Optimized Infrastructure for Big Data Analytics that Maintains Symmetry

Abstract

1. Introduction

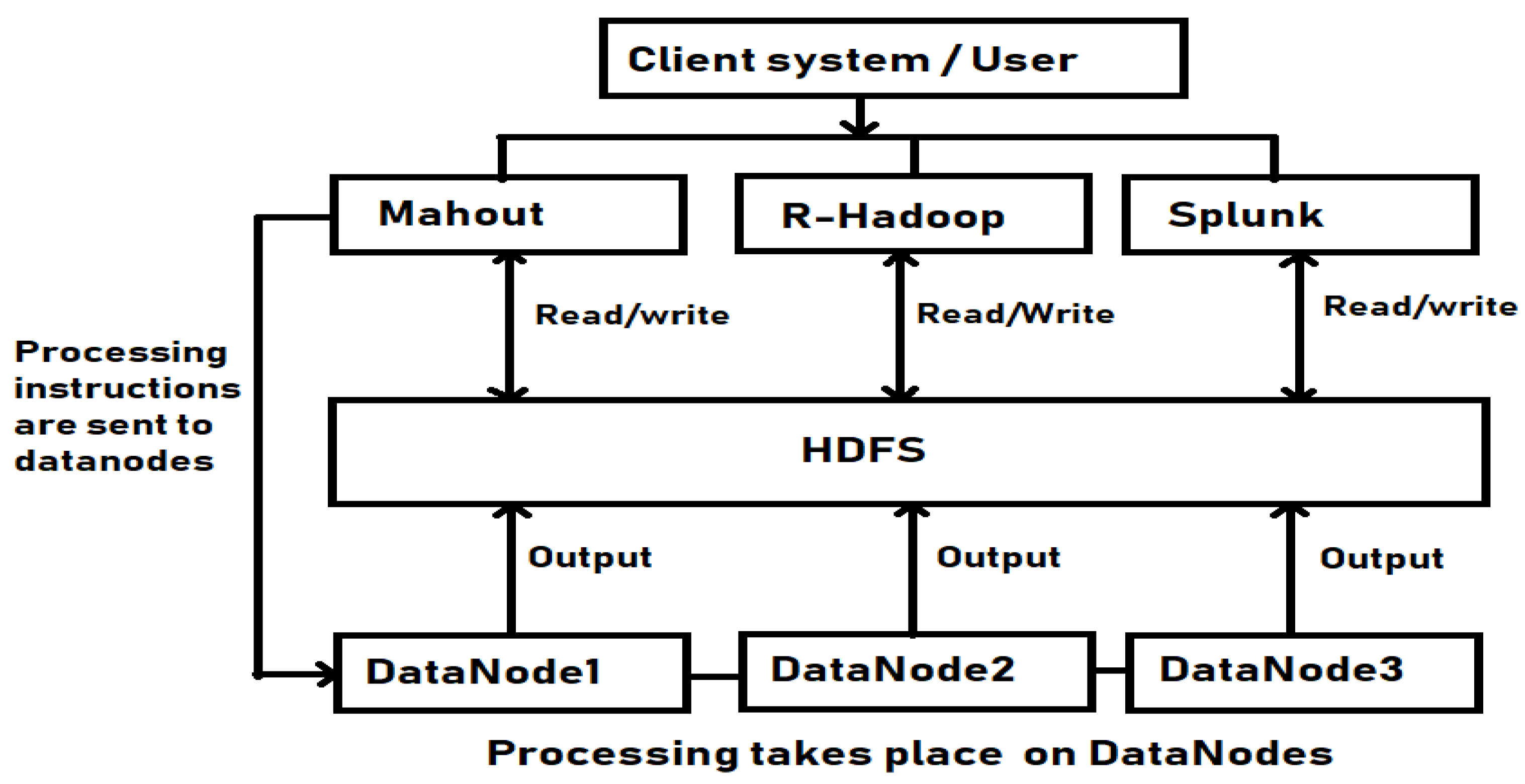

2. Proposed Hybrid Framework: A Three Master Node (Name Node) Model

| Algorithm 1. HYB_NAME_NODE for the Proposed Model |

| *//All the algorithms are implemented on DN (Data-Node), Controlled by the Name-Node (Master Node)//* |

| HYB_NAME_NODE (Ds, Ds ext, S(D), N1, N2, N3, CM, Sp, Bp, DN) |

| Ds = Data |

| Ds ext = Data extension |

| S(D) = Data Size |

| N1 = Name-Node (Master Node 1)//Shared resources |

| N2 = Name-Node (Master Node 2)//Shared resources |

| N3 = Name-Node (Master Node 3)//Shared resources |

| CM = Client-Node (Client Machine) |

| Sp = Stream Data |

| Bp = Batch Data |

| DN = Data-Node |

| Step 1- Input the Data Ds from CM |

| Step 2- If (S(D) ≤ 1 GB && (Ds ∈ Sp || Ds ∈ Bp)) |

| Process N2 |

| Else if (S(D) > 1 GB && (Ds ∈ Sp || Ds ∈ Bp)) |

| Process N1 |

| Else if (Ds ext = “.log”) |

| Process N3 |

| Exit (0); |

| Step 3- The Name-Node (N1, N2, N3) processes the job given by the CM (User/Client machine) |

| Step 4- if (job request Algo = K-Means)//All 3 algorithms are used for the application purpose// |

| { |

| Launch = K-Means () |

| Result () |

| } |

| Else if (job request Algo = Recommender) |

| { |

| Launch = Recommender () |

| Result () |

| } |

| Else if (job request Algo = Naïve Bayes) |

| { |

| Launch = Naïve Bayes () |

| Result () |

| } |

| Exit (0); |

| Step 5- If the result is final (result after reducer/completion of the algo) |

| { |

| Output is stored in HDFS |

| } |
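The routing and dispatch logic of Algorithm 1 can be sketched in a few lines of Python. This is only an illustrative sketch, not the cluster implementation: the `Dataset` class, the `select_name_node` and `run_job` functions, the placeholder job functions, and the choice of 1 GB = 2^30 bytes are all assumptions introduced here. The conditions are tested in the order given in Step 2, so a `.log` file reaches N3 only when it is neither stream nor batch data.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional

GB = 1024 ** 3  # assumption: 1 GB is taken as 2**30 bytes


@dataclass
class Dataset:
    name: str        # file name with extension (Ds, Ds ext)
    size_bytes: int  # S(D)
    kind: str        # "stream" (Sp), "batch" (Bp), or anything else


def select_name_node(ds: Dataset) -> Optional[str]:
    """Step 2: pick N1, N2 or N3, testing the conditions in the listed order."""
    is_stream_or_batch = ds.kind in ("stream", "batch")
    if ds.size_bytes <= GB and is_stream_or_batch:
        return "N2"
    if ds.size_bytes > GB and is_stream_or_batch:
        return "N1"
    if ds.name.lower().endswith(".log"):
        return "N3"
    return None  # no rule matched; the pseudocode exits at this point


# Step 4: hypothetical stand-ins for the three jobs named in the algorithm.
ALGORITHMS: Dict[str, Callable[[Dataset], str]] = {
    "K-Means": lambda ds: f"k-means result for {ds.name}",
    "Recommender": lambda ds: f"recommender result for {ds.name}",
    "Naive Bayes": lambda ds: f"naive bayes result for {ds.name}",
}


def run_job(ds: Dataset, algo: str) -> Optional[str]:
    """Steps 1-5: route the data, launch the requested job, return its result."""
    node = select_name_node(ds)
    if node is None or algo not in ALGORITHMS:
        return None
    result = ALGORITHMS[algo](ds)
    # A real cluster would store the final result in HDFS (Step 5).
    return f"{node}: {result}"
```

For example, `run_job(Dataset("ratings.csv", 10, "batch"), "K-Means")` is routed to N2, while the same call with a size above 1 GB is routed to N1.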

Contribution

- For smooth running of the system, a Maven repository for Mahout is built, so that the machine learning library can be used easily.

- The core-site.xml and yarn-site.xml settings are altered to meet the requirements of the cluster. They are tuned for the HA (High Availability) configuration.

- To make R work on Linux and Hadoop, an R-server is built.

- Making this combined cluster feasible requires multiple repositories, including an OS repository for accessing and building “YUM,” which works on Linux.

- Serialization is tuned in yarn-site.xml with respect to the Data-Node RPC (Remote Procedure Call, the heartbeat signal that provides communication between the Name-Node and the Data-Nodes and is responsible for allotting the job processing location) and the movement of data directly from HDFS in the form of input splits.

3. Legacy Model

4. Results

4.1. Data Description

- Data set 1:

- The Twenty Newsgroups data set is a collection of information gathered through a website survey of people, i.e., what kind of updates they read and what they like [47].

- Data set 2:

- The movie dataset contains numerous files, which have a customer_id that describes who watches the movie, the movie id, and the year of release. These movies are separated as per the votes and score provided by the users. The movie id is in a range from 1 to 17,770 [48].

- Data set 3:

- The Spam SMS dataset consists of one message per line, with the label and the raw text. An SMS is not always spam; it can also be a message between two individuals. This is a completely text-based dataset. It has 6000+ rows of messages and two columns. The spam messages are mined from a website with the help of a web crawler [49].

4.2. Job Execution Process in Hadoop Infrastructure

- New stage: When a user instructs the Name-Node to execute a job, the job enters the new stage.

- Submitted stage: When the Name-Node accepts the job and submits it to the Resource Manager for further execution, the job enters the submitted stage.

- Analyzing stage: The Resource Manager validates the job and checks the input path, the output path, and the data.

- Accepted stage: After the submitted and analyzing stages are complete, the job waits for the Application Master (AM) container to be launched. This stage immediately precedes the running stage.

- Running stage: Once the Application Master (AM) container is assigned, the job starts running in the cluster where the data resides, i.e., on the Data-Nodes.

- Map stage: The data is processed and converted into key-value pairs, which are then passed to the reducer.

- Reducer stage: The key-value pairs are grouped by key, e.g., all the values associated with key 1 are collected together, and so on.

- Committer stage: The outputs of the reducer processes are combined into a single output file, so that the user gets one output file instead of multiple part files.

- Finished stage: The Resource Manager marks the job as finished and the Node Manager cleans up the AM container; in simple words, the job has completed successfully.
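The map, reducer, and committer stages described above can be illustrated with a small in-memory sketch. It is not Hadoop code: the function names, the word-count task, and the byte-sum partitioning rule are assumptions chosen only to show how key-value pairs flow through the three stages as described in the list.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def map_stage(lines: Iterable[str]) -> List[Tuple[str, int]]:
    """Map stage: convert each input record into (key, value) pairs."""
    pairs = []
    for line in lines:
        for word in line.split():
            pairs.append((word.lower(), 1))
    return pairs


def reduce_stage(pairs: Iterable[Tuple[str, int]],
                 num_reducers: int = 2) -> List[Dict[str, int]]:
    """Reducer stage: group the values by key; each reducer yields one part."""
    parts = [defaultdict(int) for _ in range(num_reducers)]
    for key, value in pairs:
        # Deterministic partitioning so that one key always goes to one reducer.
        index = sum(key.encode("utf-8")) % num_reducers
        parts[index][key] += value
    return [dict(part) for part in parts]


def commit_stage(parts: Iterable[Dict[str, int]]) -> Dict[str, int]:
    """Committer stage: combine the part outputs into a single output."""
    merged: Dict[str, int] = {}
    for part in parts:
        merged.update(part)  # keys are disjoint across parts
    return dict(sorted(merged.items()))


if __name__ == "__main__":
    records = ["big data", "Big cluster"]
    print(commit_stage(reduce_stage(map_stage(records))))
```

Because every key is assigned to exactly one reducer, the parts never share a key, and merging them in the committer step cannot overwrite a count.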

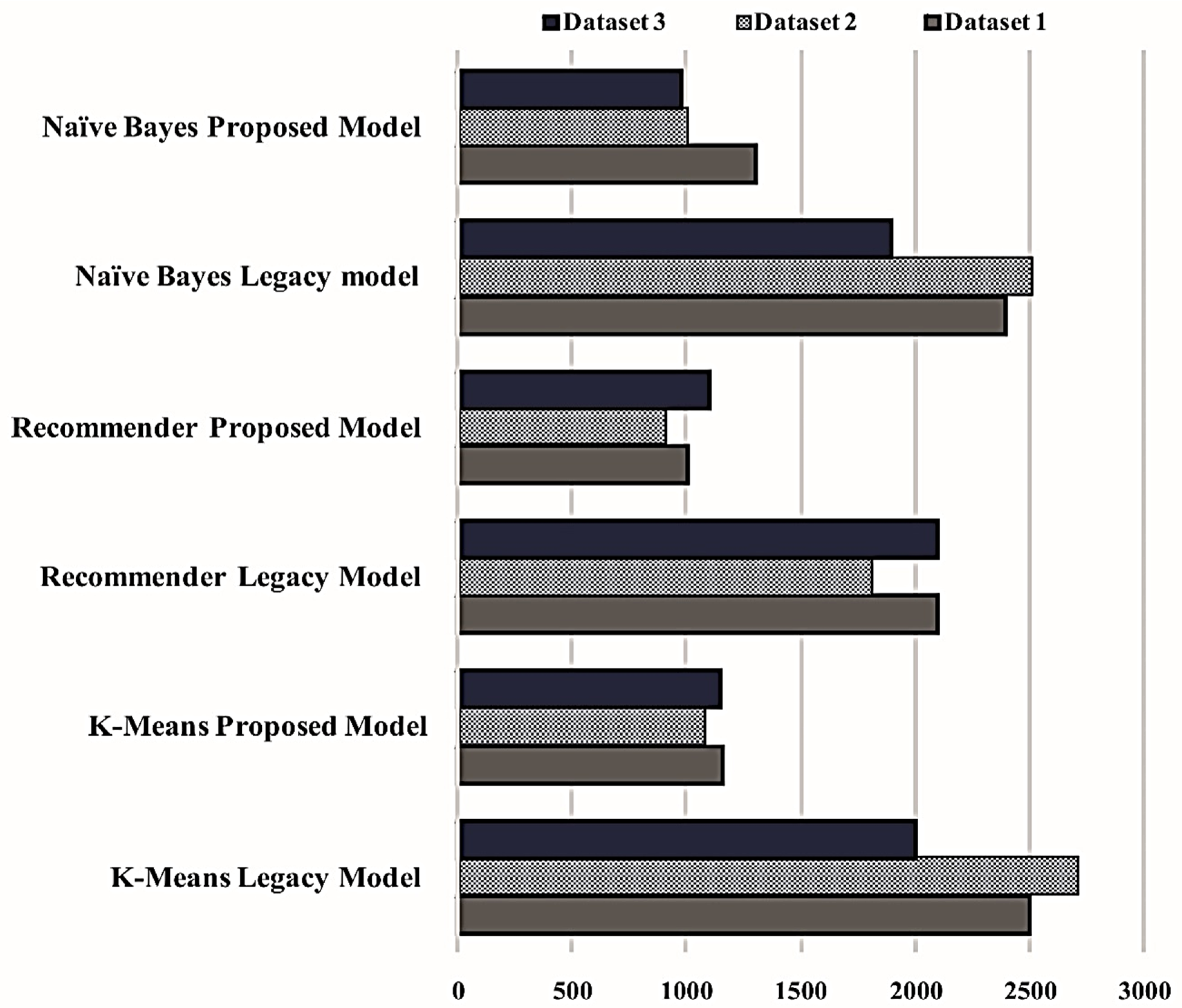

4.2.1. Response Time Comparison between Proposed and Legacy Model

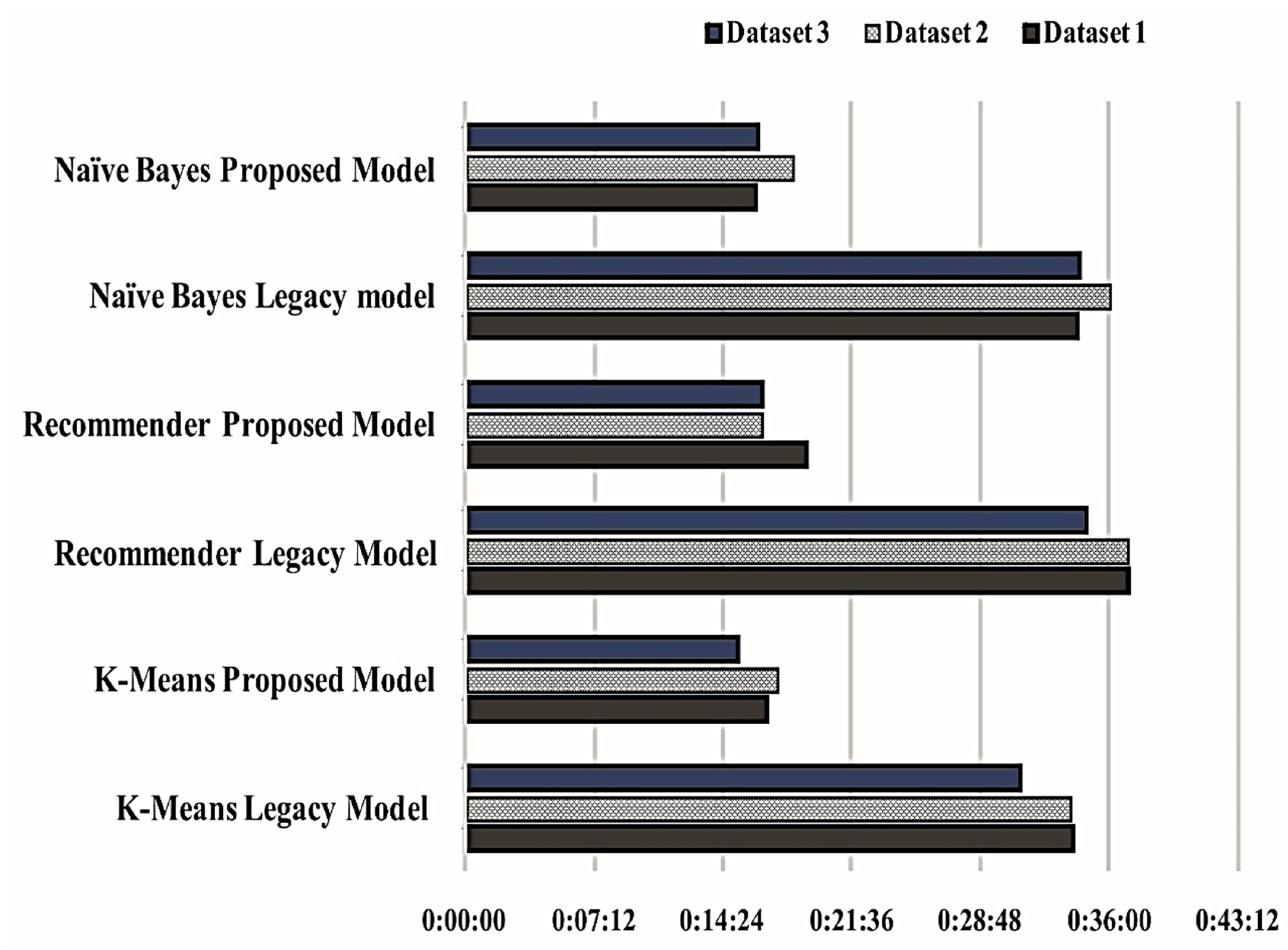

4.2.2. Time Taken (Running Time) by the MapReduce Programming Model

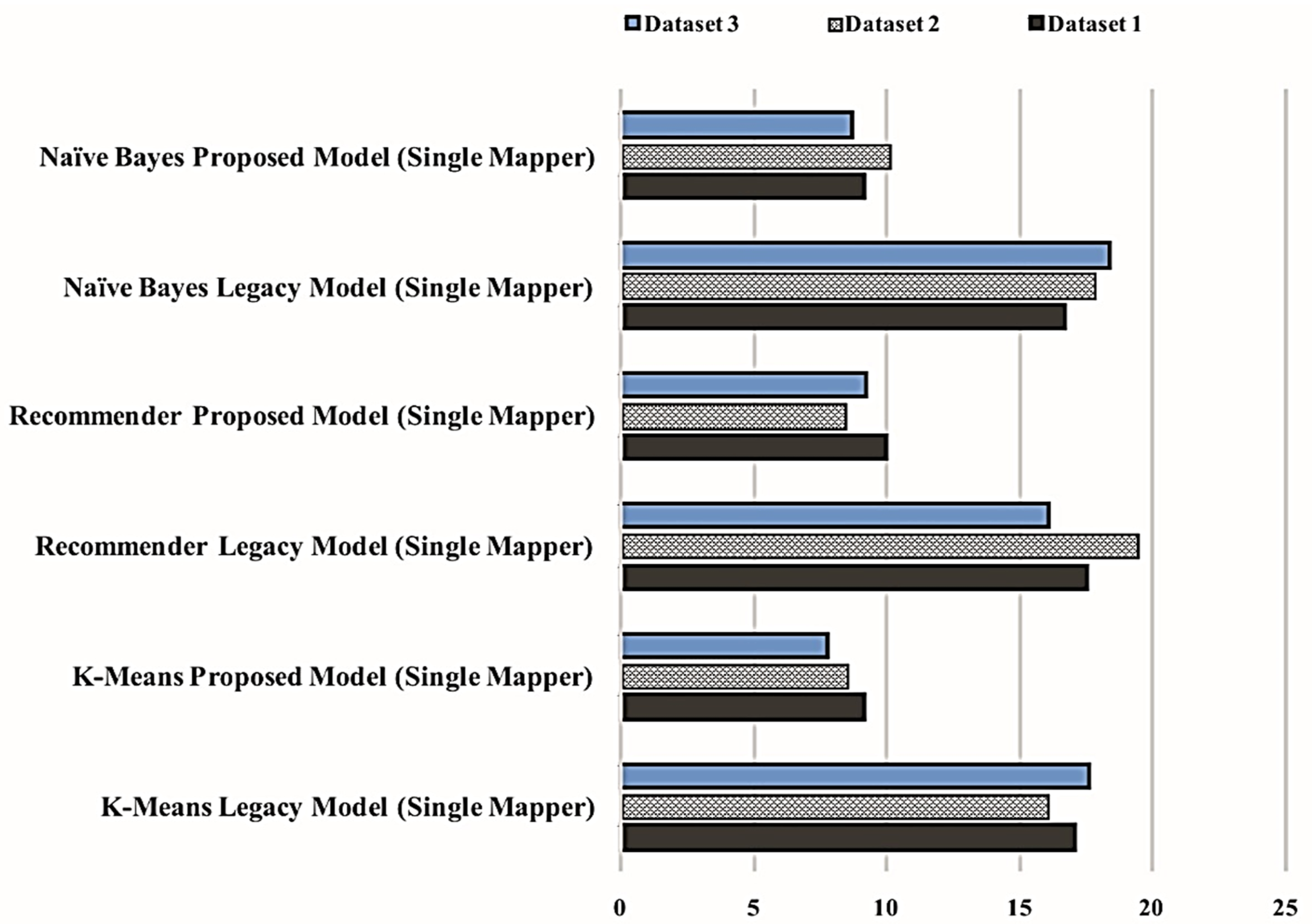

4.2.3. Throughput (Time Taken by the Single Mapper of Map Function)

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Garlasu, D.; Sandulescu, V.; Halcu, I.; Neculoiu, G.; Grigoriu, O.; Marinescu, M.; Marinescu, V. A big data implementation based on Grid computing. In Proceedings of the 2013 11th RoEduNet International Conference, Sinaia, Romania, 17–19 January 2013; pp. 1–4.

2. Bryant, R.; Katz, R.H.; Lazowska, E.D. Big-data computing: Creating revolutionary breakthroughs in commerce. Sci. Soc. 2008, 8, 1–15.

3. Gantz, J.; Reinsel, D. Extracting value from chaos. IDC Iview 2011, 1142, 1–12.

4. Chen, L.; Chen, C.P.; Lu, M. A multiple-kernel fuzzy c-means algorithm for image segmentation. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 2011, 41, 1263–1274.

5. Gandomi, A.; Haider, M. Beyond the hype: Big data concepts, methods, and analytics. Int. J. Inf. Manag. 2015, 35, 137–144.

6. Chen, C.P.; Zhang, C.-Y. Data-intensive applications, challenges, techniques and technologies: A survey on Big Data. Inf. Sci. 2014, 275, 314–347.

7. Rodríguez-Mazahua, L.; Rodríguez-Enríquez, C.-A.; Sánchez-Cervantes, J.L.; Cervantes, J.; García-Alcaraz, J.L.; Alor-Hernández, G. A general perspective of Big Data: Applications, tools, challenges and trends. J. Supercomput. 2016, 72, 3073–3113.

8. Hashem, I.A.T.; Yaqoob, I.; Anuar, N.B.; Mokhtar, S.; Gani, A.; Khan, S.U. The rise of “big data” on cloud computing: Review and open research issues. Inf. Syst. 2015, 47, 98–115.

9. Bhati, J.P.; Tomar, D.; Vats, S. Examining Big Data Management Techniques for Cloud-Based IoT Systems. In Examining Cloud Computing Technologies Through the Internet of Things; IGI Global: Hershey, PA, USA, 2018; pp. 164–191.

10. Vats, S.; Sagar, B. Data Lake: A plausible Big Data science for business intelligence. In Proceedings of the 2nd International Conference on Communication and Computing Systems (ICCCS 2018), Gurgaon, India, 1–2 December 2018; p. 442.

11. Agarwal, R.; Singh, S.; Vats, S. Review of Parallel Apriori Algorithm on MapReduce Framework for Performance Enhancement. In Big Data Analytics; Springer: Dordrecht, The Netherlands, 2018; pp. 403–411.

12. Arias, J.; Gamez, J.A.; Puerta, J.M. Learning distributed discrete Bayesian network classifiers under MapReduce with Apache spark. Knowl. Based Syst. 2017, 117, 16–26.

13. Semberecki, P.; Maciejewski, H. Distributed classification of text documents on Apache Spark platform. In Proceedings of the International Conference on Artificial Intelligence and Soft Computing, Zakopane, Poland, 12–16 June 2016; pp. 621–630.

14. Shen, P.; Wang, H.; Meng, Z.; Yang, Z.; Zhi, Z.; Jin, R.; Yang, A. An improved parallel Bayesian text classification algorithm. Rev. Comput. Eng. Stud. 2016, 3, 6–10.

15. Prabhat, A.; Khullar, V. Sentiment classification on big data using Naïve Bayes and logistic regression. In Proceedings of the 2017 International Conference on Computer Communication and Informatics (ICCCI), Coimbatore, India, 5–7 January 2017; pp. 1–5.

16. Kotwal, A.; Fulari, P.; Jadhav, D.; Kad, R. Improvement in sentiment analysis of twitter data using hadoop. In Proceedings of the International Conference on “Computing for Sustainable Global Development”, New Delhi, India, 16–18 March 2016.

17. Sheela, L.J. A review of sentiment analysis in twitter data using Hadoop. Int. J. Database Theory Appl. 2016, 9, 77–86.

18. Hou, X. An Improved K-means Clustering Algorithm Based on Hadoop Platform. In Proceedings of the International Conference on Cyber Security Intelligence and Analytics, Shenyang, China, 21–22 February 2019; pp. 1101–1109.

19. Ansari, Z.; Afzal, A.; Sardar, T.H. Data Categorization Using Hadoop MapReduce-Based Parallel K-Means Clustering. J. Inst. Eng. India Ser. B 2019, 100, 95–103.

20. Shaikh, T.A.; Shafeeque, U.B.; Ahamad, M. An Intelligent Distributed K-means Algorithm over Cloudera/Hadoop. Int. J. Educ. Manag. Eng. 2018, 8, 61.

21. Yang, M.; Mei, H.; Huang, D. An effective detection of satellite image via K-means clustering on Hadoop system. Int. J. Innov. Comput. Inf. Control 2017, 13, 1037–1046.

22. Wang, Y.; Wang, M.; Xu, W. A sentiment-enhanced hybrid recommender system for movie recommendation: A big data analytics framework. Wirel. Commun. Mob. Comput. 2018, 2018, 8263740.

23. Zhang, H.; Huang, T.; Lv, Z.; Liu, S.; Zhou, Z. MCRS: A course recommendation system for MOOCs. Multimed. Tools Appl. 2018, 77, 7051–7069.

24. McClay, W. A Magnetoencephalographic/encephalographic (MEG/EEG) brain-computer interface driver for interactive iOS mobile videogame applications utilizing the Hadoop Ecosystem, MongoDB, and Cassandra NoSQL databases. Diseases 2018, 6, 89.

25. Bharti, R.; Gupta, D. Recommending top N movies using content-based filtering and collaborative filtering with hadoop and hive framework. In Recent Developments in Machine Learning and Data Analytics; Springer: Dordrecht, The Netherlands, 2019; pp. 109–118.

26. Contratres, F.G.; Alves-Souza, S.N.; Filgueiras, L.V.L.; DeSouza, L.S. Sentiment analysis of social network data for cold-start relief in recommender systems. In Proceedings of the World Conference on Information Systems and Technologies, Galicia, Spain, 16–19 April 2018; pp. 122–132.

27. Sherman, B.T.; Lempicki, R.A. Systematic and integrative analysis of large gene lists using DAVID bioinformatics resources. Nat. Protoc. 2009, 4, 44.

28. Lavrac, N.; Keravnou, E.; Zupan, B. Intelligent data analysis in medicine. Encycl. Comput. Sci. Technol. 2000, 42, 113–157.

29. Sharma, I.; Tiwari, R.; Rana, H.S.; Anand, A. Analysis of mahout big data clustering algorithms. In Intelligent Communication, Control and Devices; Springer: Dordrecht, The Netherlands, 2018; pp. 999–1008.

30. Almeida, F.; Calistru, C. The main challenges and issues of big data management. Int. J. Res. Stud. Comput. 2013, 2, 11–20.

31. Ghemawat, S.; Gobioff, H.; Leung, S.-T. The Google file system. In Proceedings of the nineteenth ACM symposium on Operating systems principles, Bolton Landing, NY, USA, 19–22 October 2003; pp. 29–43.

32. Dean, J.; Ghemawat, S. MapReduce: Simplified data processing on large clusters. Commun. ACM 2008, 51, 107–113.

33. Apache Hadoop (2012). Available online: http://hadoop.apache.org/ (accessed on 21 June 2020).

34. Shvachko, K.; Kuang, H.; Radia, S.; Chansler, R. The hadoop distributed file system. In Proceedings of the 2010 IEEE 26th Symposium on Mass Storage Systems and Technologies (MSST), Incline Village, NV, USA, 3–7 May 2010; pp. 1–10.

35. Bhandarkar, M. MapReduce programming with apache Hadoop. In Proceedings of the 2010 IEEE International Symposium on Parallel & Distributed Processing (IPDPS), Atlanta, GA, USA, 19–23 April 2010; p. 1.

36. Apache Mahout (2019). Available online: https://mahout.apache.org/ (accessed on 21 June 2020).

37. Apache Hbase (2019). Available online: http://hbase.apache.org/ (accessed on 21 June 2020).

38. Apache Hive (2019). Available online: http://hive.apache.org/ (accessed on 21 June 2020).

39. Esteves, R.M.; Pais, R.; Rong, C. K-means clustering in the cloud—A Mahout test. In Proceedings of the 2011 IEEE Workshops of International Conference on Advanced Information Networking and Applications, Biopolis, Singapore, 22–25 March 2011; pp. 514–519.

40. Rong, C. Using Mahout for clustering Wikipedia’s latest articles: A comparison between k-means and fuzzy c-means in the cloud. In Proceedings of the 2011 IEEE Third International Conference on Cloud Computing Technology and Science, Washington, DC, USA, 5–10 July 2011; pp. 565–569.

41. Ericson, K.; Pallickara, S. On the performance of high dimensional data clustering and classification algorithms. Future Gener. Comput. Syst. 2013, 29, 1024–1034.

42. Chakraborty, T.; Jajodia, S.; Katz, J.; Picariello, A.; Sperli, G.; Subrahmanian, V. FORGE: A fake online repository generation engine for cyber deception. In IEEE Transactions on Dependable and Secure Computing; IEEE: Piscataway, NJ, USA, 2019.

43. Mercorio, F.; Mezzanzanica, M.; Moscato, V.; Picariello, A.; Sperli, G. DICO: A graph-db framework for community detection on big scholarly data. In IEEE Transactions on Emerging Topics in Computing; IEEE: Piscataway, NJ, USA, 2019.

44. Moscato, V.; Picariello, A.; Sperlí, G. Community detection based on game theory. Eng. Appl. Artif. Intell. 2019, 85, 773–782.

45. Agarwal, R.; Singh, S.; Vats, S. Implementation of an improved algorithm for frequent itemset mining using Hadoop. In Proceedings of the 2016 International Conference on Computing, Communication and Automation (ICCCA), Greater Noida, India, 29–30 April 2016; pp. 13–18.

46. Vats, S.; Sagar, B. Performance evaluation of K-means clustering on Hadoop infrastructure. J. Discret. Math. Sci. Cryptogr. 2019, 22, 1349–1363.

47. News Group. Available online: https://www.kaggle.com/crawford/20-newsgroups (accessed on 21 June 2020).

48. Netflix. Available online: https://www.kaggle.com/laowingkin/netflix-movie-recommendation/data (accessed on 21 June 2020).

49. Sms-spam-classification. Available online: https://www.kaggle.com/jeandsantos/sms-spam-classification/activity (accessed on 21 June 2020).

50. Landset, S.; Khoshgoftaar, T.M.; Richter, A.N.; Hasanin, T. A survey of open source tools for machine learning with big data in the Hadoop ecosystem. J. Big Data 2015, 2, 24.

| Characteristic | Description |
|---|---|
| Volume | Data at rest |
| Velocity | Data in motion |
| Variety | Data in many forms |
| Veracity | Data in doubt |
| Variability | Data at inconsistent speed |
| Validity | Data for accuracy |
| Volatility | Data is unstable or changeable |
| Vulnerability | Data for privacy |
| Visualization | Data for imagination |
| Value | Data for benefits |

| Algorithms with Environment | Dataset 1 | Dataset 2 | Dataset 3 |
|---|---|---|---|
| K-Means Legacy Model | * 2500 ± 2.59 | * 2700 ± 2.82 | * 2000 ± 2.72 |
| K-Means Proposed Model | * 1155 ± 3.57 | * 1075 ± 1.86 | * 1150 ± 1.41 |
| Recommender Legacy Model | * 2100 ± 1.41 | * 1800 ± 0.94 | * 2100 ± 1.41 |
| Recommender Proposed Model | * 1009 ± 1.86 | * 900 ± 2.82 | * 1100 ± 2.82 |
| Naïve Bayes Legacy Model | * 2400 ± 2.35 | * 2500 ± 1.88 | * 1900 ± 1.41 |
| Naïve Bayes Proposed Model | * 1300 ± 1.41 | * 1000 ± 0.94 | * 975 ± 1.41 |
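The gap between the two models in the table above can be expressed as a ratio of the legacy value to the proposed value. The short sketch below does this arithmetic on the mean values copied from the table (the ± terms are ignored). The names `REPORTED` and `improvement_ratios` are introduced here for illustration, and the ratio is unit-free, so no assumption about the unit of the table is needed.

```python
# Mean values copied from the table above: (legacy, proposed) for Datasets 1-3.
REPORTED = {
    "K-Means": [(2500, 1155), (2700, 1075), (2000, 1150)],
    "Recommender": [(2100, 1009), (1800, 900), (2100, 1100)],
    "Naive Bayes": [(2400, 1300), (2500, 1000), (1900, 975)],
}


def improvement_ratios(pairs):
    """Return legacy / proposed for each dataset, rounded to two decimals."""
    return [round(legacy / proposed, 2) for legacy, proposed in pairs]


if __name__ == "__main__":
    for algorithm, pairs in REPORTED.items():
        print(algorithm, improvement_ratios(pairs))
```

A ratio of 2.0 means the proposed model reports half the value of the legacy model for that dataset.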

| Algorithms with Environment | Dataset 1 | Dataset 2 | Dataset 3 |
|---|---|---|---|
| K-Means Legacy Model | * 0:33:55 ± 0.28 | * 0:33:46 ± 0.02 | * 0:31:00 ± 0.02 |
| K-Means Proposed Model | * 0:16:50 ± 0.24 | * 0:17:28 ± 0.04 | * 0:15:16 ± 0.02 |
| Recommender Legacy Model | * 0:37:00 ± 0.03 | * 0:37:01 ± 0.01 | * 0:34:46 ± 0.02 |
| Recommender Proposed Model | * 0:19:01 ± 0.01 | * 0:16:34 ± 0.02 | * 0:16:37 ± 0.03 |
| Naïve Bayes Legacy Model | * 0:34:13 ± 0.01 | * 0:36:00 ± 0.01 | * 0:34:17 ± 0.03 |
| Naïve Bayes Proposed Model | * 0:16:15 ± 0.02 | * 0:18:15 ± 0.01 | * 0:16:20 ± 0.03 |
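To compare the durations in the table above numerically, they first have to be converted to a single unit. The sketch below assumes the entries are formatted as h:mm:ss, which the table does not state explicitly; `to_seconds` and `percent_reduction` are illustrative helper names, not part of the experimental code.

```python
def to_seconds(hms: str) -> int:
    """Convert an 'h:mm:ss' string to seconds (format is an assumption)."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def percent_reduction(legacy: str, proposed: str) -> float:
    """Relative reduction of the proposed duration with respect to the legacy one."""
    legacy_s = to_seconds(legacy)
    proposed_s = to_seconds(proposed)
    return round(100 * (legacy_s - proposed_s) / legacy_s, 1)


if __name__ == "__main__":
    # K-Means, Dataset 1, values copied from the table above.
    print(to_seconds("0:33:55"), to_seconds("0:16:50"))
    print(percent_reduction("0:33:55", "0:16:50"))
```

Under that assumption, the K-Means entries for Dataset 1 correspond to 2035 s and 1010 s, i.e., a reduction of roughly half.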

| Algorithms with Environment | Dataset 1 | Dataset 2 | Dataset 3 |
|---|---|---|---|
| K-Means Legacy Model (Single Mapper) | * 17.04 ± 0.01 | * 16.02 ± 0.01 | * 17.62 ± 0.01 |
| K-Means Proposed Model (Single Mapper) | * 9.16 ± 0.01 | * 8.51 ± 0.01 | * 7.75 ± 0.02 |
| Recommender Legacy Model (Single Mapper) | * 17.5 ± 0.18 | * 19.4 ± 0.18 | * 16.06 ± 0.02 |
| Recommender Proposed Model (Single Mapper) | * 10.0 ± 0.47 | * 8.45 ± 0.02 | * 9.2 ± 0.09 |
| Naïve Bayes Legacy Model (Single Mapper) | * 16.67 ± 0.07 | * 17.78 ± 0.03 | * 18.34 ± 0.01 |
| Naïve Bayes Proposed Model (Single Mapper) | * 9.17 ± 0.03 | * 10.1 ± 0.13 | * 8.67 ± 0.03 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vats, S.; Sagar, B.B.; Singh, K.; Ahmadian, A.; Pansera, B.A. Performance Evaluation of an Independent Time Optimized Infrastructure for Big Data Analytics that Maintains Symmetry. Symmetry 2020, 12, 1274. https://doi.org/10.3390/sym12081274
