Underwater Image Enhancement Using Successive Color Correction and Superpixel Dark Channel Prior

Abstract

1. Introduction

2. Proposed Successive Color Correction

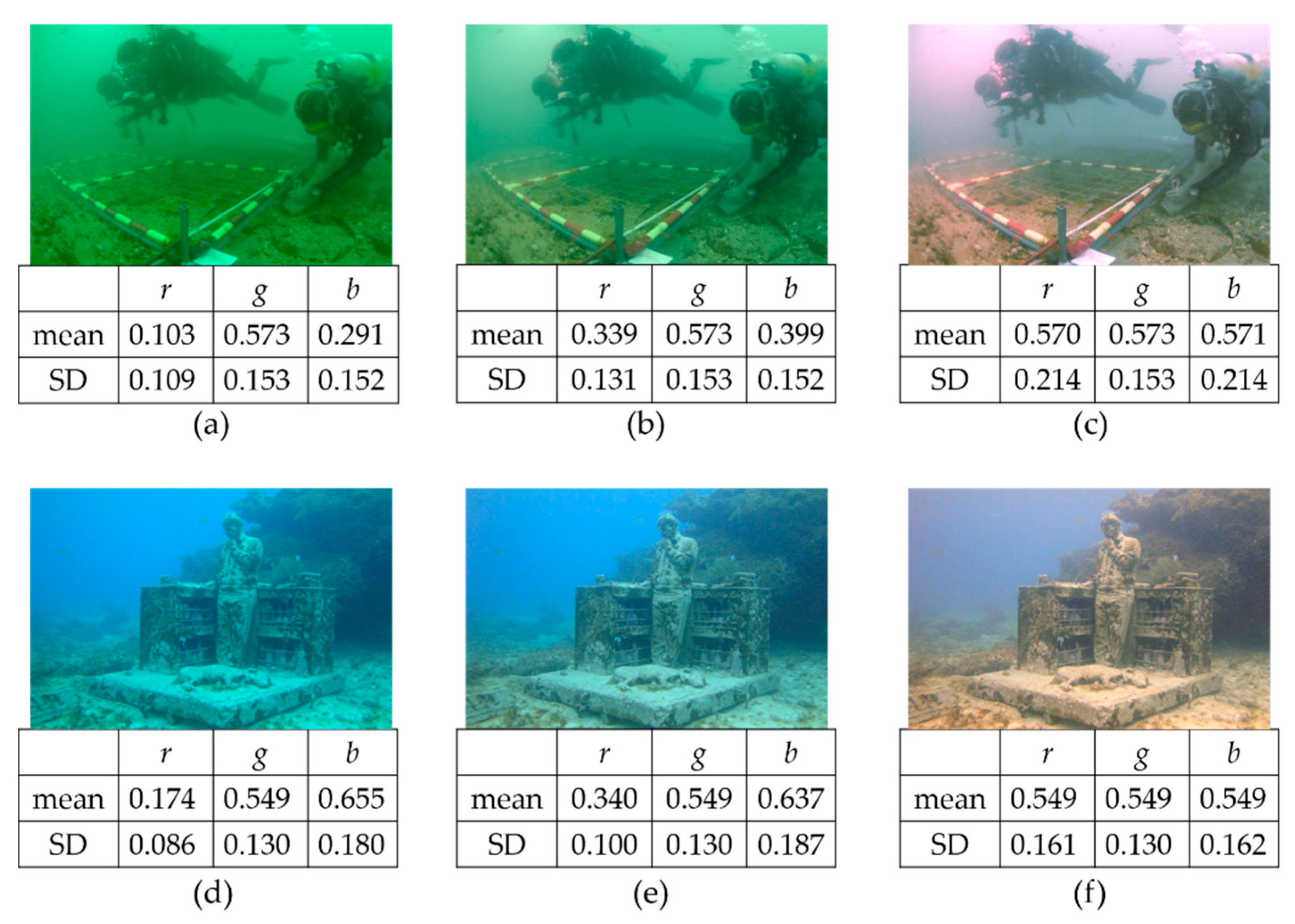

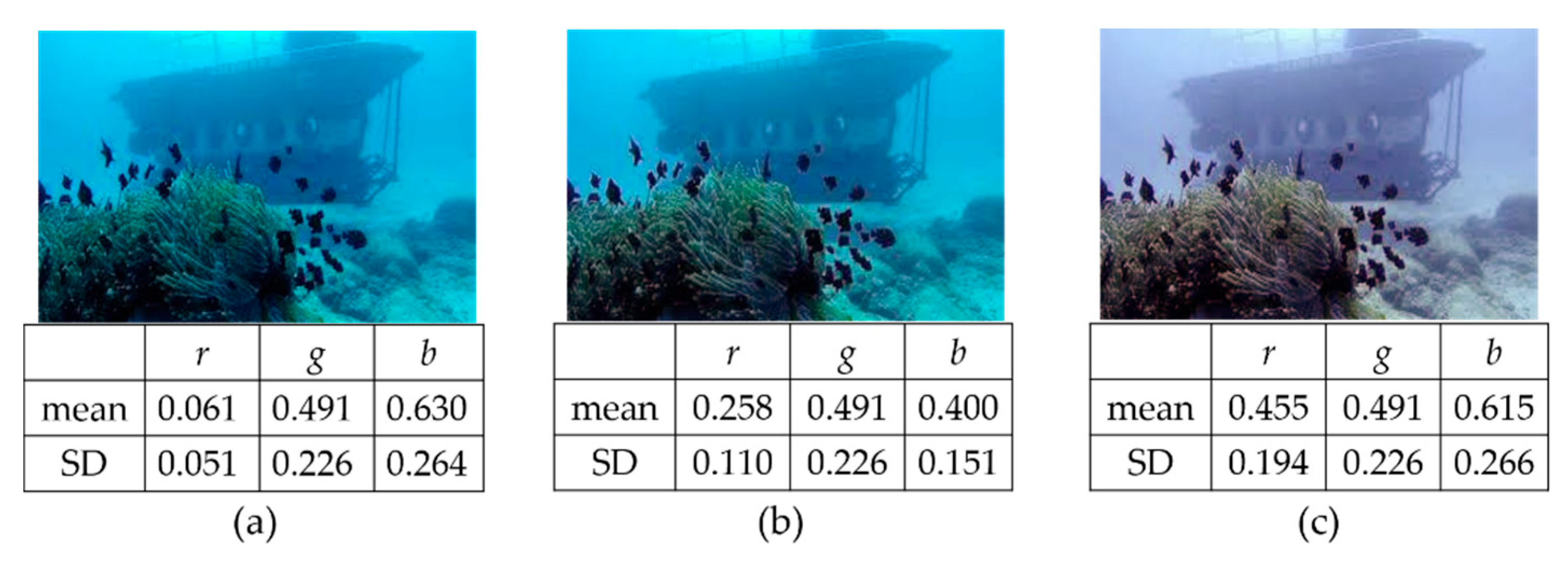

2.1. Improvement of Underwater White Balance
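
As background for the white balance step, the following is a minimal sketch of the plain Gray World Assumption (GWA) [19], which scales each color channel so that its mean matches the global gray level. It is not the improved underwater white balance proposed in this section; the function name `gray_world` and the list-of-tuples image layout are illustrative assumptions.

```python
def gray_world(image):
    """Apply plain gray-world white balance.

    image: list of rows, each row a list of (r, g, b) floats in [0, 1].
    Hypothetical helper for illustration, not the paper's improved method.
    """
    pixels = [p for row in image for p in row]
    n = len(pixels)
    # Mean intensity of each color channel.
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    # Target gray level: average of the three channel means.
    gray = sum(means) / 3.0
    # Per-channel gain; leave a channel untouched if its mean is zero.
    gains = [gray / m if m > 0 else 1.0 for m in means]
    # Scale every pixel and clip to the valid range.
    return [
        [tuple(min(1.0, p[c] * gains[c]) for c in range(3)) for p in row]
        for row in image
    ]


# A greenish two-pixel image: the green channel dominates before correction.
greenish = [[(0.2, 0.6, 0.4), (0.1, 0.5, 0.3)]]
balanced = gray_world(greenish)
```

When no value is clipped, the three channel means of `balanced` coincide, which is the defining property of the gray-world correction. In strongly attenuated underwater scenes the red mean can be very small, so the red gain becomes large; this is one reason underwater methods modify the plain assumption.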

2.2. Adaptive Image Normalization

3. Underwater Image Enhancement Using Superpixel Dark Channel Prior

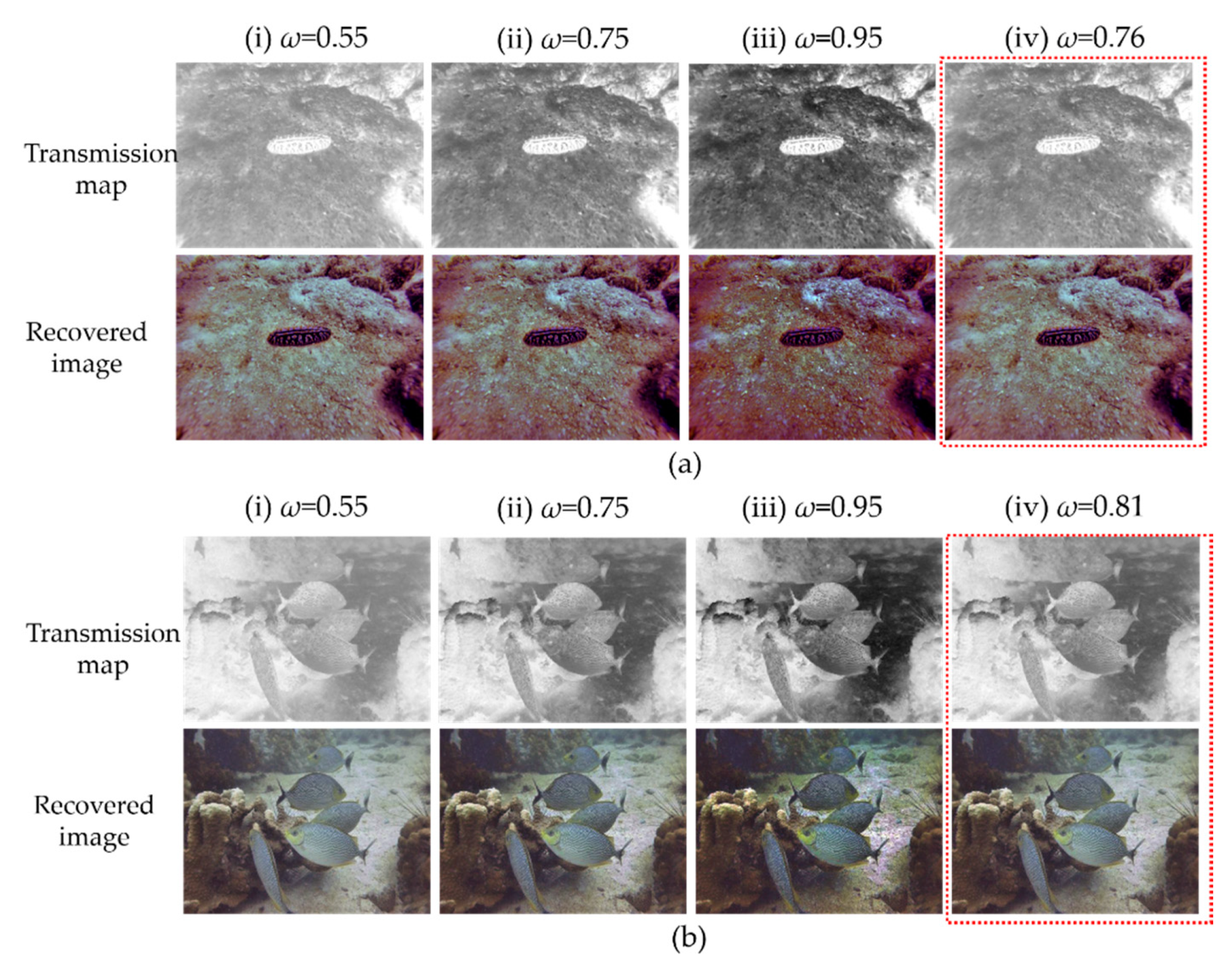

3.1. Transmission Map Estimation
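
As background for transmission estimation, the following is a minimal sketch of the classic patch-based dark channel prior (DCP) of He et al. [12], where the transmission is estimated as t(x) = 1 - ω · min over the patch and over the channels of I^c(y) / A^c. It uses a square patch, not the superpixel regions of the proposed method, and the names `dark_channel`, `transmission`, `radius`, and `omega` are illustrative assumptions.

```python
def dark_channel(image, radius):
    """Minimum over color channels and over a square patch around each pixel.

    image: list of rows, each row a list of (r, g, b) floats.
    radius: patch half-width (patch side is 2 * radius + 1).
    """
    h, w = len(image), len(image[0])
    # Per-pixel minimum across the three channels.
    min_rgb = [[min(p) for p in row] for row in image]
    dark = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ys = range(max(0, y - radius), min(h, y + radius + 1))
            xs = range(max(0, x - radius), min(w, x + radius + 1))
            # Minimum of the channel minima inside the (border-clipped) patch.
            dark[y][x] = min(min_rgb[j][i] for j in ys for i in xs)
    return dark


def transmission(image, airlight, radius=1, omega=0.95):
    """Estimate t = 1 - omega * dark_channel(I / A).

    airlight: (r, g, b) background light, assumed known and nonzero.
    omega: fraction of haze removed; 0.95 is the value used in [12].
    """
    normalized = [
        [tuple(p[c] / airlight[c] for c in range(3)) for p in row]
        for row in image
    ]
    dark = dark_channel(normalized, radius)
    return [[1.0 - omega * d for d in row] for row in dark]


# Tiny example: one bright pixel next to one darker pixel.
img = [[(0.9, 0.8, 0.7), (0.2, 0.5, 0.6)]]
t_map = transmission(img, airlight=(1.0, 1.0, 1.0), radius=1)
```

Because the square patch spans both pixels, the darker pixel determines the dark channel of the whole row. A square patch can straddle depth edges in this way, which is the usual motivation for region-adaptive supports such as superpixels.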

3.2. Adaptive Weight

3.3. Summary of Proposed Method

4. Simulation Results

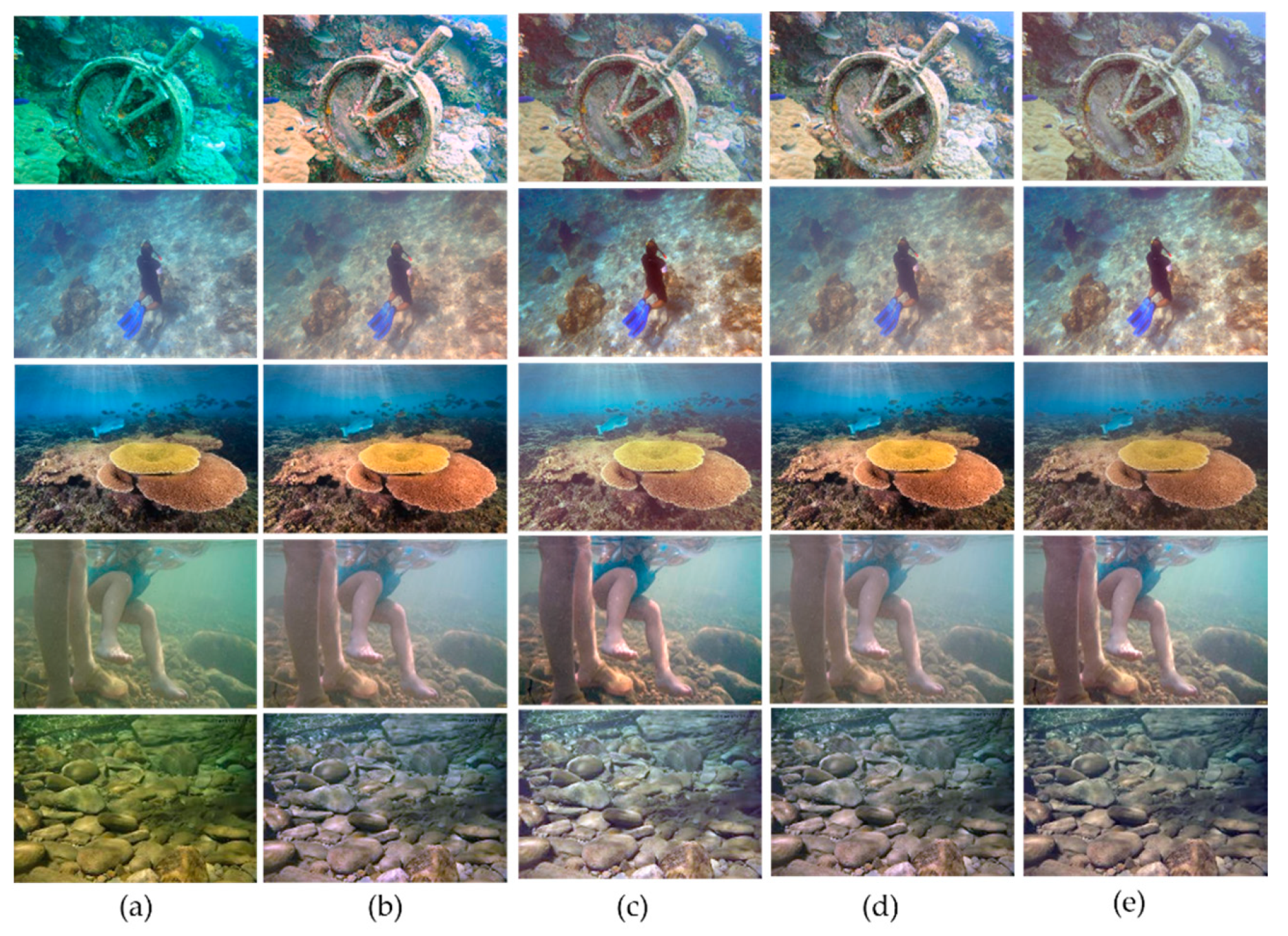

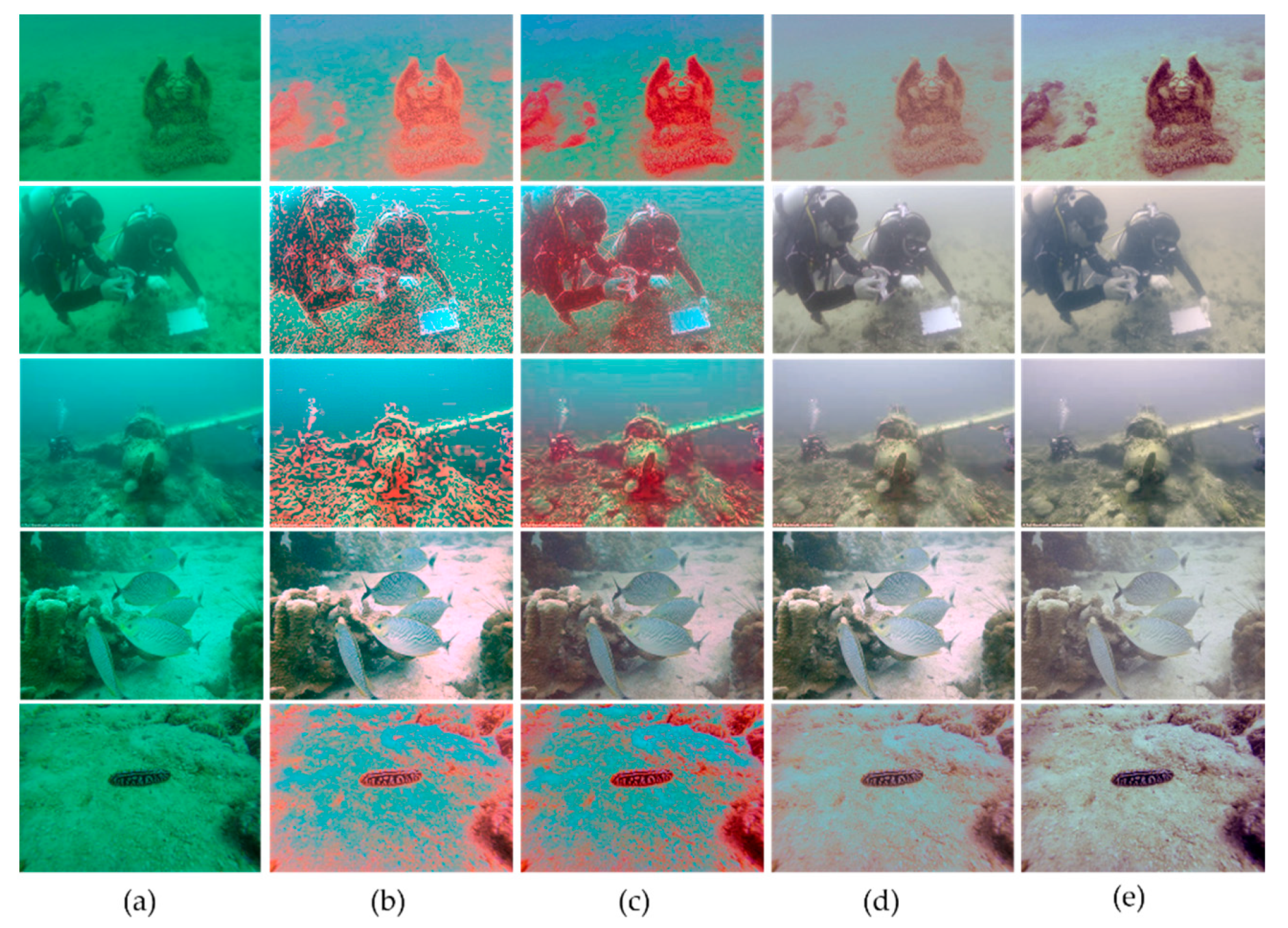

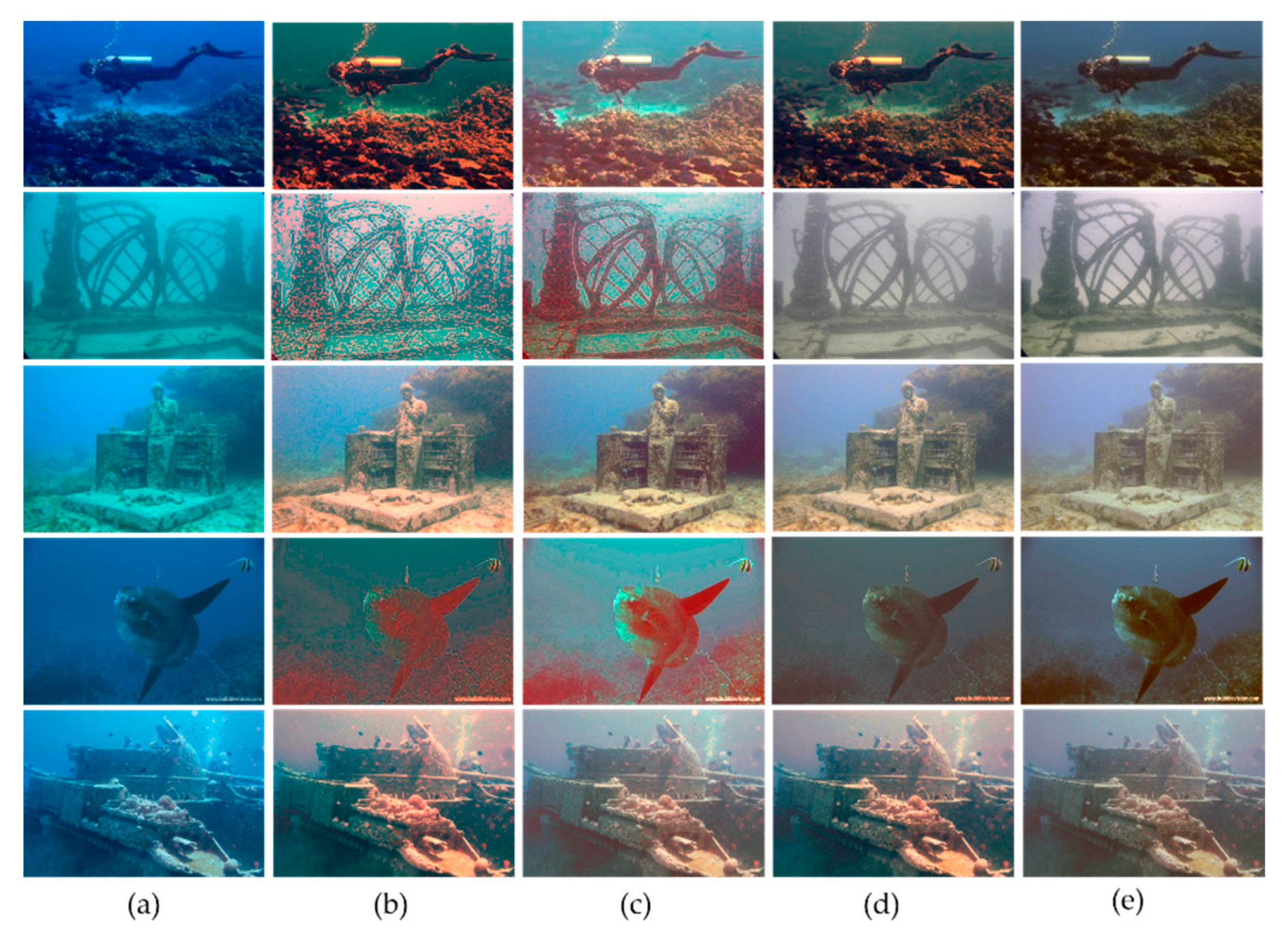

4.1. Underwater Color Correction Results

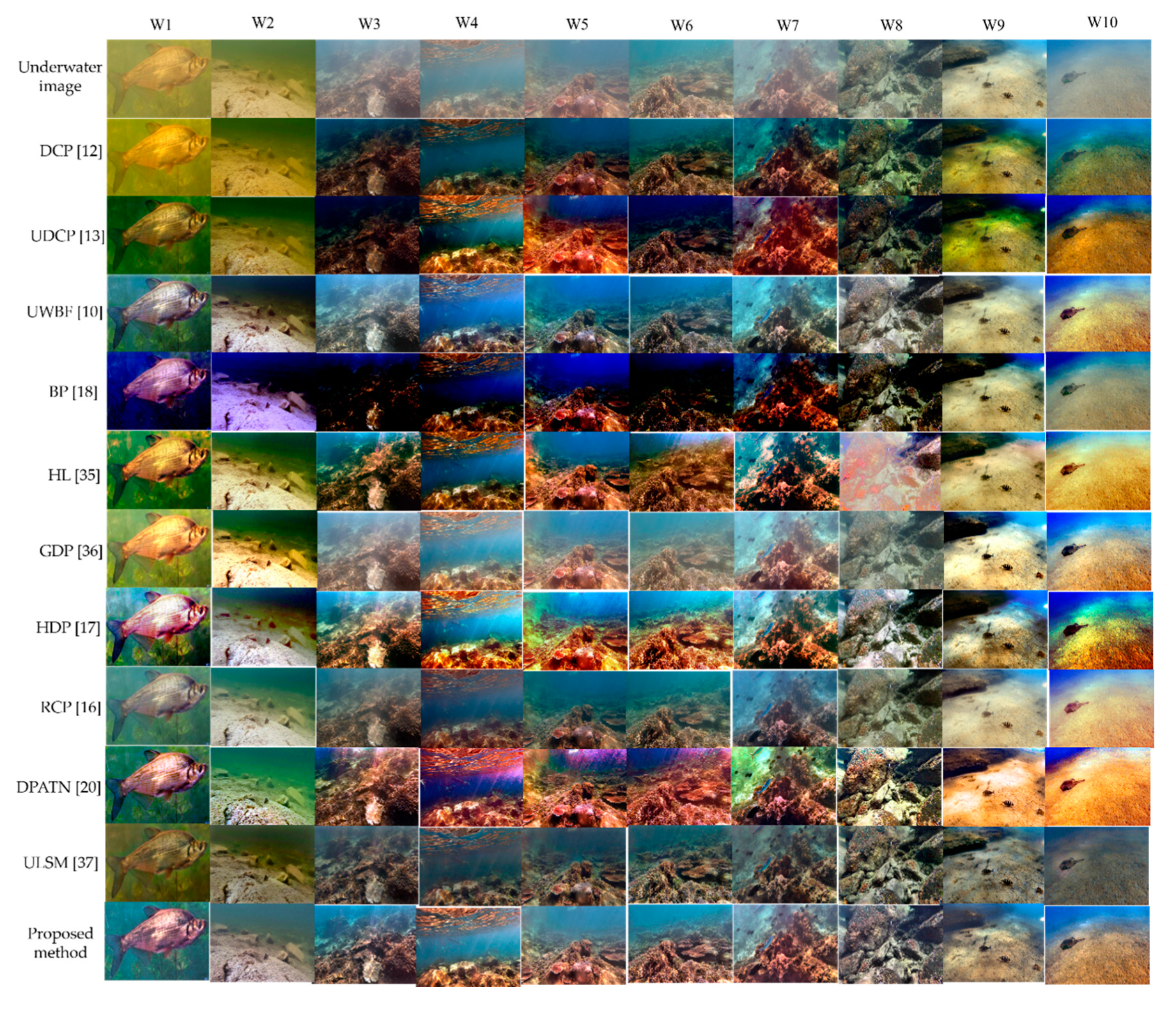

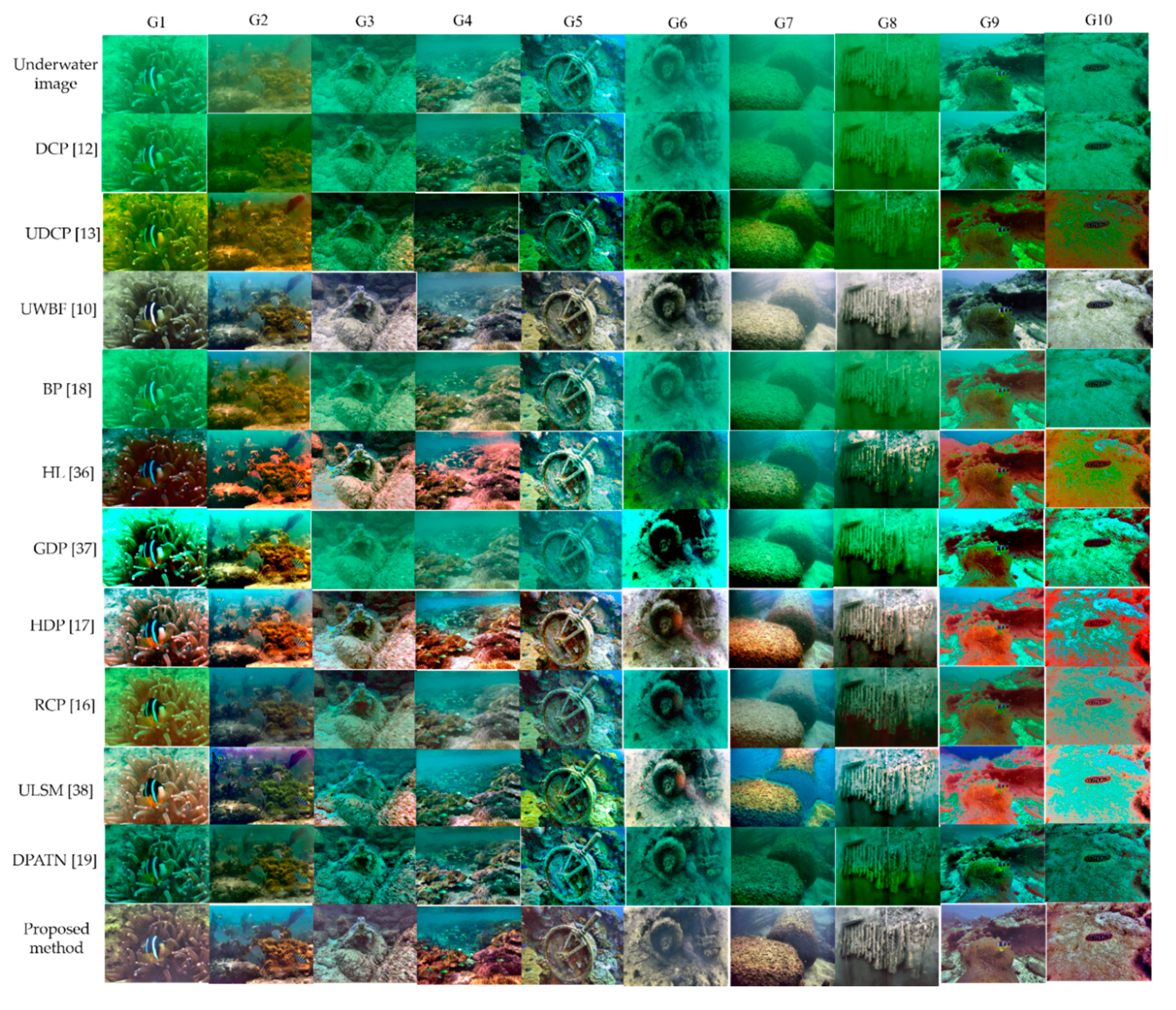

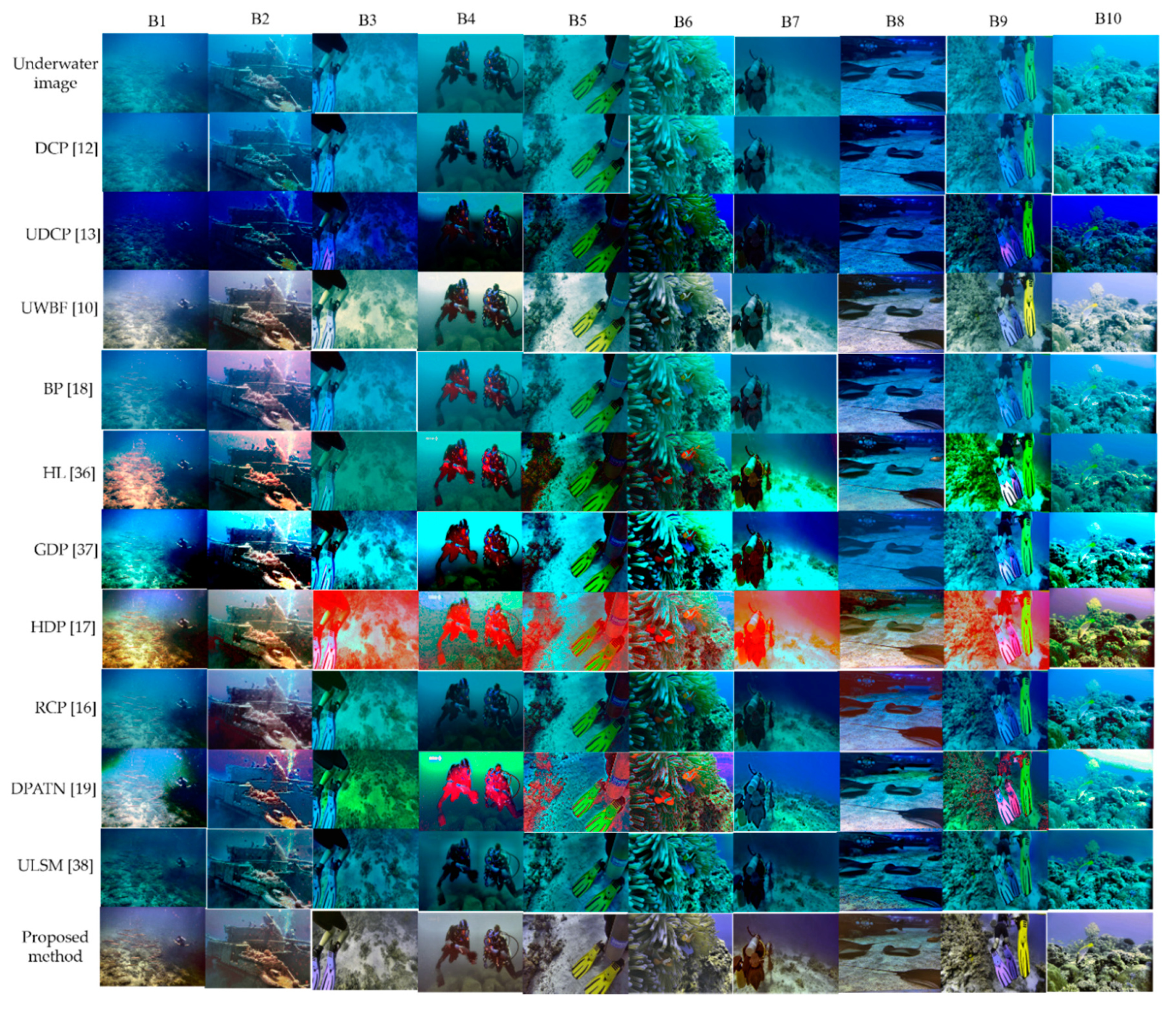

4.2. Image Enhancement Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Acronyms

| Acronym | Description |
|---|---|
| CLAHE | Contrast Limited Adaptive Histogram Equalization |
| UWB | Underwater White Balance |
| DCP | Dark Channel Prior |
| UDCP | Underwater Dark Channel Prior |
| RCP | Red Channel Prior |
| HDP | Histogram Distribution Prior |
| BP | Blurriness Prior |
| GWA | Gray World Assumption |
| DPATN | Data and Prior Aggregated Transmission Network |
| UIEBD | Underwater Image Enhancement Benchmark Dataset |
| GAN | Generative Adversarial Network |
| SLIC | Simple Linear Iterative Clustering |
| UWBF | Underwater White Balance-based Fusion Algorithm |
| HL | Haze Line |
| UIQM | Underwater Image Quality Measure |

References

1. Lu, H.; Li, Y.; Zhang, Y.; Chen, M.; Serikawa, S.; Kim, H. Underwater optical image processing: A comprehensive review. Mob. Netw. Appl. 2017, 22, 1204–1211.
2. Yang, M.; Hu, J.; Li, C.; Rohde, G.; Du, Y.; Hu, K. An in-depth survey of underwater image enhancement and restoration. IEEE Access 2019, 7, 123638–123657.
3. Wang, Y.; Song, W.; Fortino, G.; Qi, L.-Z.; Zhang, W.; Liotta, A. An experimental-based review of image enhancement and image restoration methods for underwater imaging. IEEE Access 2019, 7, 140233–140251.
4. Iqbal, K.; Salam, R.A.; Osman, A.; Talib, A.Z. Underwater image enhancement using an integrated colour model. Int. J. Compt. Sci. 2007, 34, 1–6.
5. Hitam, M.S.; Awalludin, E.A.W.; Yussof, N.J.H.W.; Bachok, Z. Mixture contrast limited adaptive histogram equalization for underwater image enhancement. In Proceedings of the 2013 International Conference on Computer Applications Technology (ICCAT), Sousse, Tunisia, 20–22 January 2013; pp. 1–5.
6. Ghani, A.S.A.; Isa, N.A.M. Underwater image quality enhancement through integrated color model with Rayleigh distribution. Appl. Soft Comput. 2015, 27, 219–230.
7. Fu, X.; Zhuang, P.; Huang, Y.; Liao, Y.; Zhang, X.-P.; Ding, X. A Retinex-based enhancing approach for single underwater image. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 4572–4576.
8. Zhang, S.T.; Wang, S.; Dong, J.; Yu, H. Underwater image enhancement via extended multi-scale Retinex. Neurocomputing 2017, 245, 1–9.
9. Li, C.J.; Guo, C.; Guo, G.; Cong, R.; Gong, J. A hybrid method for underwater image correction. Pattern Recognit. Lett. 2017, 94, 62–67.
10. Ancuti, C.O.; Ancuti, C.; De Vleeschouwer, C.; Bekaert, P. Color balance and fusion for underwater image enhancement. IEEE Trans. Image Process. 2018, 27, 379–393.
11. Ancuti, C.; Ancuti, C.O.; Haber, T.; Bekaert, P. Enhancing underwater images and videos by fusion. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 81–88.
12. He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.
13. Drews, P., Jr.; do Nascimento, E.; Moraes, F.; Botelho, S.; Campos, M. Transmission estimation in underwater single images. In Proceedings of the 2013 IEEE International Conference on Computer Vision Workshops, Sydney, NSW, Australia, 2–8 December 2013; pp. 825–830.
14. Drews, P.L.J.; Nascimento, E.R.; Botelho, S.S.C.; Campos, M.F.M. Underwater depth estimation and image restoration based on single images. IEEE Comput. Graph. Appl. 2016, 36, 24–35.
15. Li, C.; Guo, J.; Pang, Y.; Chen, S.; Wang, J. Single underwater image restoration by blue-green channels dehazing and red channel correction. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 1731–1735.
16. Galdran, A.; Pardo, D.; Picón, A.; Alvarez-Gila, A. Automatic red-channel underwater image restoration. J. Vis. Commun. Image Represent. 2015, 26, 132–145.
17. Li, C.; Guo, J.; Cong, R.; Pang, Y.; Wang, B. Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Trans. Image Process. 2016, 25, 5664–5677.
18. Peng, Y.; Cosman, P.C. Underwater image restoration based on image blurriness and light absorption. IEEE Trans. Image Process. 2017, 26, 1579–1594.
19. Buchsbaum, G. A spatial processor model for object colour perception. J. Franklin Inst. 1980, 310, 1–26.
20. Liu, R.; Fan, X.; Hou, M.; Jiang, Z.; Luo, Z.; Zhang, L. Learning aggregated transmission propagation networks for haze removal and beyond. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 2973–2986.
21. Wang, Y.; Zhang, J.; Cao, Y.; Wang, Z. A deep CNN method for underwater image enhancement. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 1382–1386.
22. Hou, M.; Liu, R.; Fan, X.; Luo, Z. Joint residual learning for underwater image enhancement. In Proceedings of the 2018 IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 4043–4047.
23. Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 2020, 29, 4376–4389.
24. Li, J.; Skinner, K.A.; Eustice, R.M.; Johnson-Roberson, M. Water-GAN: Unsupervised generative network to enable real-time color correction of monocular underwater images. IEEE Robot. Autom. Lett. 2018, 3, 387–394.
25. Fabbri, C.; Islam, M.J.; Sattar, J. Enhancing underwater imagery using generative adversarial networks. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 7159–7165.
26. Land, E.H. The Retinex theory of color vision. Sci. Am. 1977, 237, 108–129.
27. van de Weijer, J.; Gevers, T.; Gijsenij, A. Edge-based color constancy. IEEE Trans. Image Process. 2007, 16, 2207–2214.
28. Narasimhan, S.G.; Nayar, S.K. Vision and the atmosphere. Int. J. Comput. Vis. 2002, 48, 233–254.
29. Xiao, C.; Gan, J. Fast image dehazing using guided joint bilateral filter. Vis. Comput. 2012, 28, 713–721.
30. Yeh, C.H.; Kang, L.W.; Lee, M.S.; Lin, C.Y. Haze effect removal from image via haze density estimation in optical model. Opt. Express 2013, 21, 27127–27141.
31. Lin, Z.; Wang, X. Dehazing for image and video using guided filter. Appl. Sci. 2012, 2, 123–127.
32. Jiang, Y.; Sun, C.; Zhao, Y.; Yang, L. Image dehazing using adaptive bi-channel priors on superpixels. Comput. Vis. Image Und. 2017, 165, 17–32.
33. Yang, M.; Liu, J.; Li, Z. Superpixel-based single nighttime image haze removal. IEEE Trans. Multimed. 2018, 20, 3008–3018.
34. Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Susstrunk, S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.
35. Berman, D.; Treibitz, T.; Avidan, S. Diving into haze-lines: Color restoration of underwater images. In Proceedings of the British Machine Vision Conference (BMVC), London, UK, 4–7 September 2017; pp. 1–12.
36. Gong, Y.; Sbalzarini, I.F. A natural-scene gradient distribution prior and its application in light-microscopy image processing. IEEE J. Sel. Top. Signal Process. 2016, 10, 99–114.
37. Cho, Y.; Kim, A. Visibility enhancement for underwater visual SLAM based on underwater light scattering model. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 710–717.
38. Source Code for the Proposed Method. Available online: https://sites.google.com/view/ispl-pnu/ (accessed on 24 July 2020).
39. Panetta, K.; Gao, C.; Agaian, S. Human-visual-system-inspired underwater image quality measures. IEEE J. Oceanic Eng. 2016, 41, 541–551.

Input: underwater image I

Output: enhanced image J

Underwater Images (W1–W10)

| Method | W1 | W2 | W3 | W4 | W5 | W6 | W7 | W8 | W9 | W10 | Ave |
|---|---|---|---|---|---|---|---|---|---|---|---|
| DCP [12] | 0.471 | 0.417 | 0.783 | 0.688 | 0.676 | 0.796 | 0.784 | 0.825 | 0.605 | 0.591 | 0.664 |
| UDCP [13] | 0.588 | 0.454 | 0.671 | 0.673 | 0.676 | 0.632 | 0.731 | 0.742 | 0.607 | 0.595 | 0.637 |
| UWBF [10] | 0.685 | 0.478 | 0.783 | 0.732 | 0.677 | 0.773 | 0.746 | 0.818 | 0.578 | 0.635 | 0.691 |
| BP [18] | 0.417 | 0.405 | 0.106 | 0.343 | 0.306 | 0.117 | 0.326 | 0.301 | 0.559 | 0.528 | 0.341 |
| HL [35] | 0.618 | 0.461 | 0.774 | 0.785 | 0.681 | 0.842 | 0.675 | 0.721 | 0.58 | 0.59 | 0.673 |
| GDP [36] | 0.491 | 0.354 | 0.707 | 0.615 | 0.603 | 0.687 | 0.655 | 0.76 | 0.487 | 0.549 | 0.591 |
| HDP [17] | 0.569 | 0.459 | 0.761 | 0.706 | 0.731 | 0.819 | 0.729 | 0.795 | 0.579 | 0.593 | 0.674 |
| RCP [16] | 0.518 | 0.442 | 0.705 | 0.624 | 0.616 | 0.755 | 0.687 | 0.79 | 0.551 | 0.507 | 0.62 |
| DPATN [20] | 0.546 | 0.428 | 0.783 | 0.769 | 0.731 | 0.819 | 0.738 | 0.703 | 0.563 | 0.582 | 0.666 |
| ULSM [37] | 0.531 | 0.451 | 0.662 | 0.483 | 0.531 | 0.678 | 0.61 | 0.792 | 0.563 | 0.336 | 0.564 |
| Proposed | 0.653 | 0.468 | 0.468 | 0.801 | 0.695 | 0.82 | 0.81 | 0.83 | 0.646 | 0.687 | 0.724 |

Underwater Images (G1–G10)

| Method | G1 | G2 | G3 | G4 | G5 | G6 | G7 | G8 | G9 | G10 | Ave |
|---|---|---|---|---|---|---|---|---|---|---|---|
| DCP [12] | 0.376 | 0.42 | 0.644 | 0.752 | 0.72 | 0.236 | 0.413 | 0.459 | 0.476 | 0.366 | 0.486 |
| UDCP [13] | 0.436 | 0.463 | 0.666 | 0.656 | 0.682 | 0.327 | 0.506 | 0.442 | 0.509 | 0.481 | 0.517 |
| UWBF [10] | 0.493 | 0.498 | 0.789 | 0.766 | 0.841 | 0.396 | 0.537 | 0.623 | 0.5 | 0.44 | 0.588 |
| BP [18] | 0.409 | 0.45 | 0.737 | 0.766 | 0.814 | 0.248 | 0.439 | 0.506 | 0.522 | 0.381 | 0.527 |
| HL [35] | 0.404 | 0.48 | 0.696 | 0.688 | 0.703 | 0.381 | 0.491 | 0.533 | 0.547 | 0.489 | 0.541 |
| GDP [36] | 0.334 | 0.42 | 0.72 | 0.709 | 0.784 | 0.261 | 0.413 | 0.48 | 0.449 | 0.354 | 0.492 |
| HDP [17] | 0.479 | 0.497 | 0.714 | 0.796 | 0.745 | 0.365 | 0.544 | 0.548 | 0.616 | 0.543 | 0.585 |
| RCP [16] | 0.477 | 0.441 | 0.789 | 0.763 | 0.848 | 0.373 | 0.516 | 0.573 | 0.541 | 0.458 | 0.578 |
| DPATN [20] | 0.446 | 0.543 | 0.7 | 0.735 | 0.715 | 0.329 | 0.579 | 0.533 | 0.569 | 0.463 | 0.561 |
| ULSM [37] | 0.283 | 0.363 | 0.692 | 0.744 | 0.781 | 0.2 | 0.387 | 0.514 | 0.437 | 0.277 | 0.468 |
| Proposed | 0.509 | 0.55 | 0.787 | 0.863 | 0.849 | 0.412 | 0.593 | 0.625 | 0.513 | 0.502 | 0.62 |

Underwater Images (B1–B10)

| Method | B1 | B2 | B3 | B4 | B5 | B6 | B7 | B8 | B9 | B10 | Ave |
|---|---|---|---|---|---|---|---|---|---|---|---|
| DCP [12] | 0.322 | 0.374 | 0.316 | 0.19 | 0.397 | 0.366 | 0.194 | 0.568 | 0.399 | 0.306 | 0.343 |
| UDCP [13] | 0.311 | 0.357 | 0.377 | 0.248 | 0.453 | 0.424 | 0.241 | 0.513 | 0.481 | 0.391 | 0.38 |
| UWBF [10] | 0.438 | 0.455 | 0.432 | 0.224 | 0.551 | 0.461 | 0.268 | 0.716 | 0.586 | 0.412 | 0.454 |
| BP [18] | 0.36 | 0.41 | 0.325 | 0.22 | 0.418 | 0.38 | 0.209 | 0.657 | 0.402 | 0.318 | 0.37 |
| HL [35] | 0.381 | 0.366 | 0.338 | 0.267 | 0.446 | 0.527 | 0.231 | 0.73 | 0.381 | 0.363 | 0.403 |
| GDP [36] | 0.351 | 0.238 | 0.336 | 0.222 | 0.396 | 0.253 | 0.222 | 0.624 | 0.419 | 0.21 | 0.327 |
| HDP [17] | 0.451 | 0.422 | 0.382 | 0.32 | 0.508 | 0.526 | 0.268 | 0.713 | 0.479 | 0.414 | 0.448 |
| RCP [16] | 0.398 | 0.444 | 0.363 | 0.235 | 0.48 | 0.517 | 0.254 | 0.703 | 0.473 | 0.428 | 0.43 |
| DPATN [20] | 0.413 | 0.462 | 0.43 | 0.338 | 0.532 | 0.457 | 0.288 | 0.686 | 0.523 | 0.349 | 0.448 |
| ULSM [37] | 0.234 | 0.332 | 0.321 | 0.221 | 0.388 | 0.365 | 0.206 | 0.588 | 0.423 | 0.323 | 0.34 |
| Proposed | 0.454 | 0.415 | 0.497 | 0.212 | 0.583 | 0.506 | 0.317 | 0.69 | 0.641 | 0.449 | 0.476 |

| Methods | Average UIQM Scores |
|---|---|
| DCP [12] | 0.536 |
| UDCP [13] | 0.519 |
| UWBF [10] | 0.632 |
| BP [18] | 0.441 |
| HL [35] | 0.579 |
| GDP [36] | 0.519 |
| HDP [17] | 0.623 |
| RCP [16] | 0.619 |
| DPATN [20] | 0.59 |
| ULSM [37] | 0.531 |
| Proposed | 0.65 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, H.S.; Moon, S.W.; Eom, I.K. Underwater Image Enhancement Using Successive Color Correction and Superpixel Dark Channel Prior. Symmetry 2020, 12, 1220. https://doi.org/10.3390/sym12081220
