1. Introduction

With the increased use of artificial intelligence, decision support systems, forecasting, and expert systems in many enterprises, optimization problems arise more often in modern economic sectors. Such problems are widespread in computer science, engineering [1], and economics [2]. Optimization problems lie at the heart of many machine learning algorithms [3], including neural networks [4], clustering algorithms, support vector machines, and random forests. By convention, optimization denotes a minimization problem: the selection of an element $x^{*} \in X$ such that $f(x^{*}) \le f(x)$ for all $x \in X$, where $f: X \to \mathbb{R}$ is the objective function, often named the fitness function in evolutionary computation. The maximization problem, conversely, can be formulated as the selection of $x^{*} \in X$ such that $f(x^{*}) \ge f(x)$ for all $x \in X$. Evolutionary algorithms represent a subset of optimization algorithms. Such algorithms use heuristic techniques inspired by biological evolution, and they process a variety of solutions to an optimization problem in a single iteration. Therefore, such biology-inspired algorithms are also known as population-based techniques.

Recent research in evolutionary computation has introduced a number of effective heuristic population-based optimization techniques, including genetic algorithms [5], particle swarm optimization [6], ant colony optimization [7], cuckoo search [8], bee swarm optimization [9], memetic algorithms [10], differential evolution [11], fish school search [12], and others. These algorithms have been applied to many real-world problems [1,2] and continue to gain popularity among researchers. According to the No Free Lunch (NFL) theorem in optimization, no universal method exists that could be used for solving all optimization problems efficiently [13]. Consequently, recent research in optimization has introduced a variety of hybrid algorithms applied to practical problems, including big data classification using support vector machine (SVM) algorithms and modified particle swarm optimization [14] and predictive modelling of medical data using a combined approach of random forests and a genetic algorithm [15]. Hybrids of evolution-inspired and classical optimization methods have been proposed as well, applied in neural network model training [16,17] and in accurate inverse permittivity measurement [18]. In order to take advantage of several population-based optimization algorithms at once, a novel meta-heuristic approach, COBRA (Co-Operation of Biology-Related Algorithms), was proposed in [19], based on the parallel and simultaneous work of multiple evolution-inspired algorithms.

In this paper, we consider the fish school search (FSS) algorithm, proposed by Bastos Filho et al. in [12]. Inspired by the collective behavior of fish schools, this effective and computationally inexpensive optimization technique outperformed the genetic algorithm in image reconstruction of electrical impedance tomography in [20] and proved its superiority over particle swarm optimization in [21]. Moreover, FSS outperformed back propagation and the bee swarm algorithm in neural network training for mobility prediction in [22]. In addition, FSS was used in intellectual assistant systems [23] and in solving assembly line balancing problems [24]. Compared to other evolution-inspired algorithms, FSS is a relatively lightweight optimization technique. A multiobjective version of FSS exists [25], as well as modifications intended to improve the performance of the algorithm [26].

However, FSS shares the premature convergence problem inherent to many evolutionary optimization algorithms. The core idea of FSS is to make a randomly initialized population of agents move towards the positive gradient of a function without performing any computationally expensive operations other than fitness function evaluations. Consequently, the quality of the obtained solution depends heavily on the initial locations of the agents in the population and on the predefined step sizes. If the considered fitness function is multimodal and the agents do not fill the search space uniformly, the algorithm is more likely to converge to a locally optimal solution, which may differ from one test run to the next.
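The dependence on initial locations and step size can be illustrated with a minimal sketch of a greedy individual-movement step. This is a simplified illustration, not the full FSS procedure: it omits the feeding and collective movement operators, assumes minimization, and the names `individual_movement` and `sphere` are hypothetical.

```python
import random

def individual_movement(positions, fitness, step, rng=random):
    """One greedy individual-movement pass over the school (minimization assumed)."""
    new_positions = []
    for x in positions:
        # Propose a random displacement in [-step, step] for every coordinate.
        candidate = [xi + step * rng.uniform(-1.0, 1.0) for xi in x]
        # Keep the move only if it improves the fitness; only fitness
        # evaluations are needed, no gradient is computed.
        new_positions.append(candidate if fitness(candidate) < fitness(x) else x)
    return new_positions

def sphere(x):
    """Simple unimodal test function with its minimum at the origin."""
    return sum(xi * xi for xi in x)

rng = random.Random(42)
# Random initialization: the quality of this draw influences the final result.
school = [[rng.uniform(-5.0, 5.0) for _ in range(2)] for _ in range(10)]
before = min(sphere(x) for x in school)
for _ in range(100):
    school = individual_movement(school, sphere, step=0.5, rng=rng)
after = min(sphere(x) for x in school)
```

Because a move is accepted only when it improves the fitness, an agent that starts in the basin of a local optimum with a small step has little chance of leaving it, which is the premature convergence effect described above.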

Therefore, the characteristics of the pseudorandom number generator (PRNG) used to initialize the locations of agents and to perform individual and collective movements have a great impact on the accuracy of the obtained solution and on the execution time of the algorithm as well. Plenty of PRNGs have been invented [27,28,29]; the generators vary per platform and per programming language. The characteristics of PRNGs differ, depending on the considered domain. PRNGs have applications in simulations, electronic games, and cryptography, and each area implies its own limitations and requirements. In some cases, the generator has to be cryptographically secure. In other cases, the speed of a PRNG can be a crucial measure of quality.

Chaotic behavior exists in many natural systems, as well as in deterministic nonlinear dynamical systems. Chaos-based systems have applications in communications, cryptography, and noise generation. A dynamical system is denoted by a state space $S$, a set of times $T$, and a map $R: S \times T \to S$ which describes the evolution of the dynamical system. An example of a chaotic mapping is the logistic map, introduced by Robert May in 1976 [30]. The map is given by the following equation:

$$x_{n+1} = r x_n (1 - x_n) \qquad (1)$$

Here, $x_n$ represents the current state of the dynamical system, and $r$ is the control parameter. The control parameter determines whether a dynamical system stabilizes at a constant value, stabilizes at periodic values, or becomes completely chaotic. The logistic-mapping-based system becomes chaotic for most values of $r$ above approximately 3.57 and is completely chaotic at $r = 4$. In the completely chaotic state, the logistic map (1) can be used as a simple PRNG. Guyeux et al. in [31] proposed a novel chaotic PRNG based on a combination of chaotic iterations and well-known generators with applications in watermarking. Hobincu et al. proposed a cryptographic PRNG targeting secret communication [32]; the algorithm was based on the evolution of the chaotic Henon map.
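As a minimal sketch of how the logistic map can serve as a simple PRNG, the generator below iterates the standard form $x_{n+1} = r x_n (1 - x_n)$ with $r = 4$. The function name `logistic_prng`, the seed value, and the burn-in length are illustrative assumptions rather than settings taken from the experiments.

```python
from itertools import islice

def logistic_prng(seed=0.1234, r=4.0, burn_in=100):
    """Yield an endless stream of values in [0, 1] from the logistic map."""
    x = seed
    # Discard the first iterations so the output does not start near the seed.
    for _ in range(burn_in):
        x = r * x * (1.0 - x)
    while True:
        x = r * x * (1.0 - x)
        yield x

# The stream is fully deterministic: the same seed reproduces the same values.
sample_a = list(islice(logistic_prng(seed=0.1234), 5))
sample_b = list(islice(logistic_prng(seed=0.1234), 5))
```

Note that values produced this way are not uniformly distributed on $[0, 1]$: at $r = 4$ they concentrate near both ends of the interval, which matters when the stream is used to place agents in a search space.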

Zhiteng Ma et al. in [33] used a modified version of the logistic map (1) to generate the initial locations of agents in the proposed chaotic particle swarm optimization algorithm. They conducted a series of experiments that verified the effectiveness of the implemented optimization technique. A novel hybrid algorithm based on chaotic search and the artificial fish swarm algorithm was proposed in [34], where Hai Ma et al. used the logistic map (1) to perform the local search. The results of the numerical experiment proved that the proposed technique was characterized by higher convergence speed and better stability compared to the original algorithm. El-Shorbagy et al. in [35] proposed a hybrid algorithm that integrates the genetic algorithm and a chaotic local search strategy to improve the convergence speed towards the globally optimal solution. They considered several chaotic mappings, including the logistic map (1). The obtained results confirmed that integrating the genetic algorithm with chaos-based local search speeds up the convergence, even when dealing with nonsmooth and nondifferentiable functions.

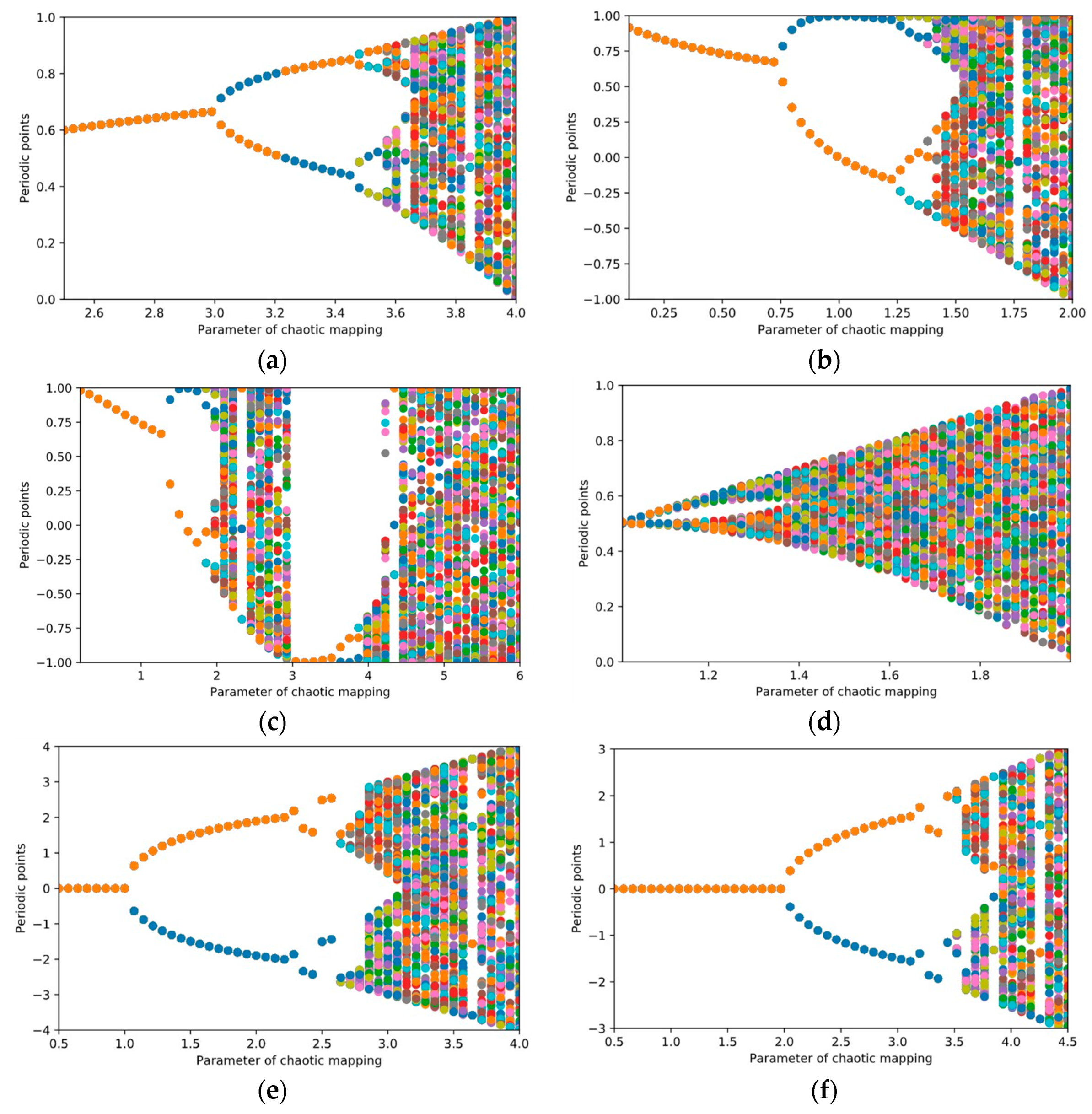

In this paper, we provide a comparative study of optimization algorithms based on FSS and different chaotic mappings, including (1). We consider the modified logistic map [36], the tent map [37], the sine map, and the circle map with zero driving phase, which are parts of the fractional Caputo standard α-family of maps, as described in [38]. Additionally, we consider the simple cosine map, mentioned in [29]. We analyze the distributions produced by chaotic PRNGs and compare the performance of the mappings with that of other commonly used PRNGs, such as Mersenne Twister [28], Philox [39], and the Permuted Congruential Generator (PCG) [40]. In addition, we incorporate exponential step decay into FSS and compare the accuracy of the modified algorithm with that of PSO and GA on both unimodal and multimodal test functions for optimization. We apply the Wilcoxon signed-rank test to determine whether significant differences exist between the considered algorithms. The results of the numerical experiments show a clear performance advantage of the tent-map-based chaotic PRNG, as well as the superiority of tent-map-based FSS with exponential step decay over PSO and GA.

4. Discussion

During the research presented in this paper, we considered a number of dynamical equations, including the logistic map, which has been of great interest among researchers since its first mention in 1976 [30]. We also considered the improved logistic map, proposed in [36], the digital tent map [37], the cosine mapping, and the sine and circle mappings, based on the Caputo standard α-family of maps [38]. According to the Lyapunov exponent metric, specific control parameter values were selected to make the iterative equations completely chaotic.
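To illustrate how the Lyapunov exponent separates chaotic from non-chaotic parameter values, the sketch below estimates it for the standard logistic map $x_{n+1} = r x_n (1 - x_n)$ as the orbit average of $\ln |r (1 - 2 x_n)|$. The function name, starting point, and iteration counts are assumptions made for illustration; a positive estimate indicates chaos, a negative one indicates a stable fixed point or cycle.

```python
import math

def lyapunov_logistic(r, x0=0.1, burn_in=1000, n=20000):
    """Estimate the Lyapunov exponent of the logistic map for parameter r."""
    x = x0
    # Let the orbit settle onto its attractor before averaging.
    for _ in range(burn_in):
        x = r * x * (1.0 - x)
    total = 0.0
    for _ in range(n):
        # |f'(x)| = |r (1 - 2x)|; the tiny floor guards against log(0).
        total += math.log(max(abs(r * (1.0 - 2.0 * x)), 1e-300))
        x = r * x * (1.0 - x)
    return total / n

periodic = lyapunov_logistic(3.2)  # period-2 regime, expected to be negative
chaotic = lyapunov_logistic(4.0)   # fully chaotic regime, theoretical value ln 2
```

Sweeping `r` over a grid and keeping the values with the largest positive estimate is one simple way to pick a control parameter for a chaotic PRNG.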

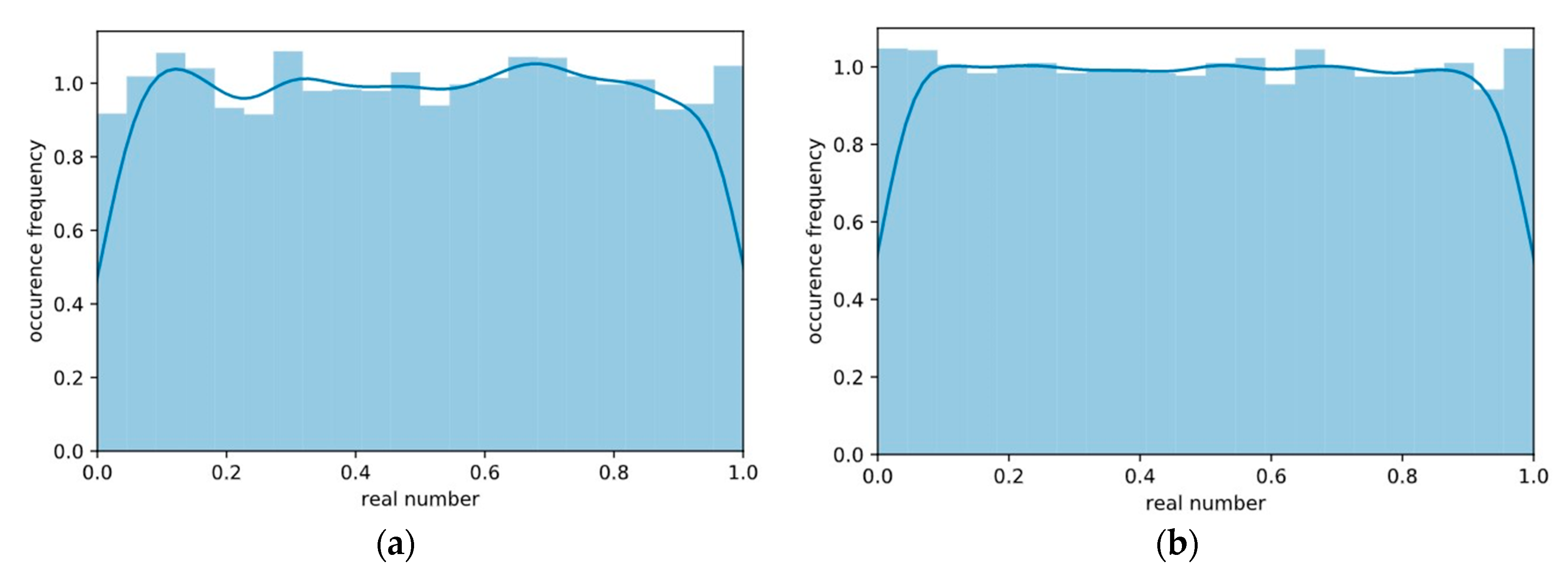

The performance of the PRNG used to generate the initial population of solutions and to perform other computations is crucial in evolutionary optimization and especially in swarm intelligence. In Section 3.1, we benchmarked the considered chaotic PRNGs and state-of-the-art PRNGs, such as Mersenne Twister, PCG, and Philox. The logistic map and the tent map showed the best performance. Analysis of the pseudorandom number distributions produced by the chaotic PRNGs showed that only the digital tent map produces uniformly distributed random numbers. We observed that the tent-map-based PRNG can produce more symmetrically and uniformly distributed real numbers than the considered nonchaotic PRNGs. As shown in [46], the digital tent-map-based pseudorandom bit generators (PRBGs) represent a suitable alternative to other traditional low-complexity PRBGs such as linear feedback shift registers. Taken together, these results suggest that the tent-map-based PRNG is well suited to evolutionary computation, especially in cases when the performance of an optimization algorithm is of great importance.
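The difference in distributions can be checked with a short histogram comparison. The sketch below uses a plain floating-point tent map with slope 1.9999, which is an assumption chosen to avoid the collapse to zero that slope 2 causes in binary floating point; it is not the digital tent map of [37], and all function names are hypothetical.

```python
def logistic_step(x, r=4.0):
    """One iteration of the logistic map."""
    return r * x * (1.0 - x)

def tent_step(x, mu=1.9999):
    """One iteration of a floating-point tent map (assumed slope mu)."""
    return mu * x if x < 0.5 else mu * (1.0 - x)

def bin_fractions(step, x0=0.1234, n=50000, bins=10, burn_in=100):
    """Iterate a map and return the fraction of values falling in each bin."""
    x = x0
    for _ in range(burn_in):
        x = step(x)
    counts = [0] * bins
    for _ in range(n):
        x = step(x)
        counts[min(int(x * bins), bins - 1)] += 1
    return [c / n for c in counts]

def max_deviation(fractions):
    """Largest absolute gap between a bin fraction and the uniform target."""
    target = 1.0 / len(fractions)
    return max(abs(f - target) for f in fractions)

logistic_dev = max_deviation(bin_fractions(logistic_step))
tent_dev = max_deviation(bin_fractions(tent_step))
```

Under these assumptions the logistic map overfills the two outermost bins, while the tent map stays close to the uniform target in every bin.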

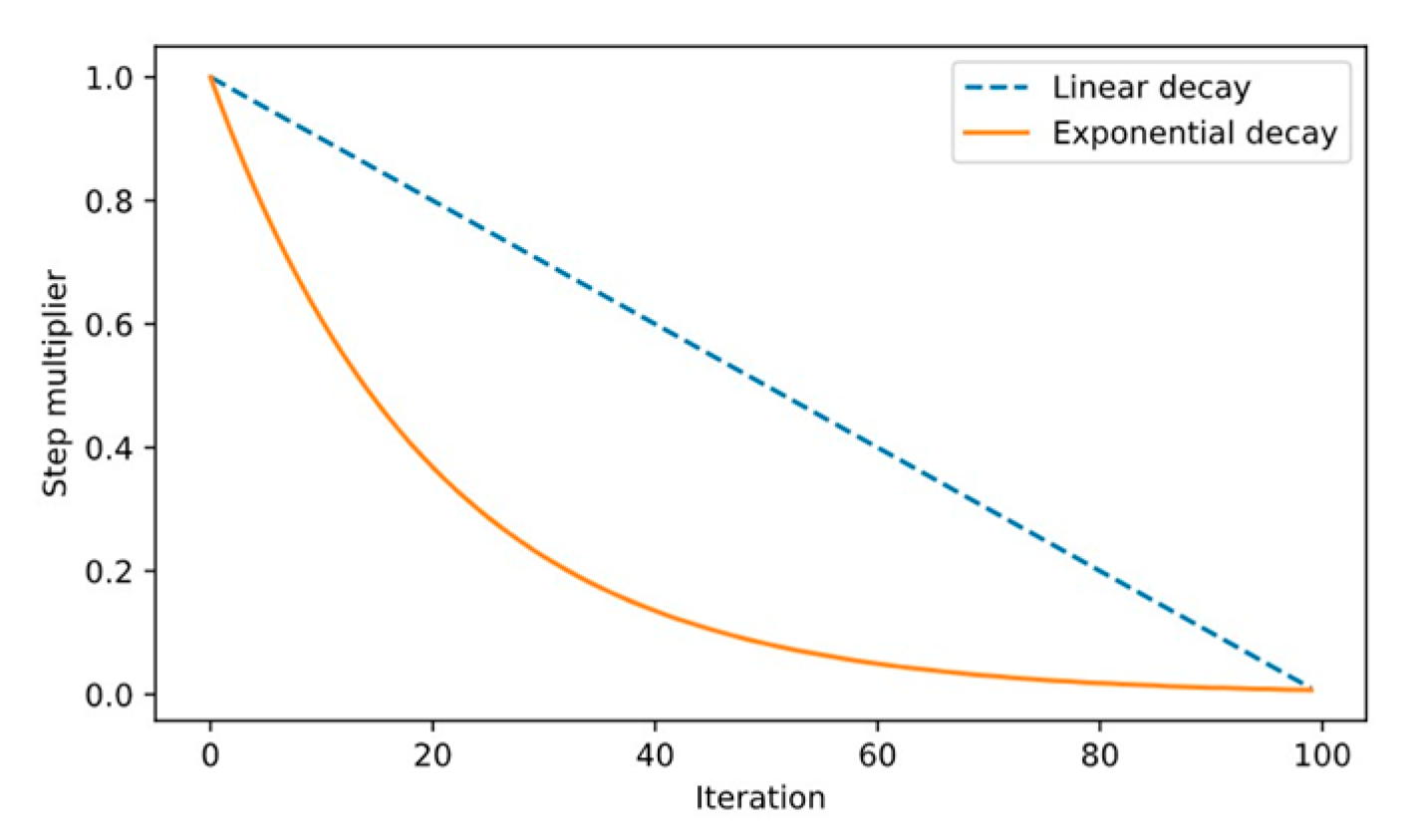

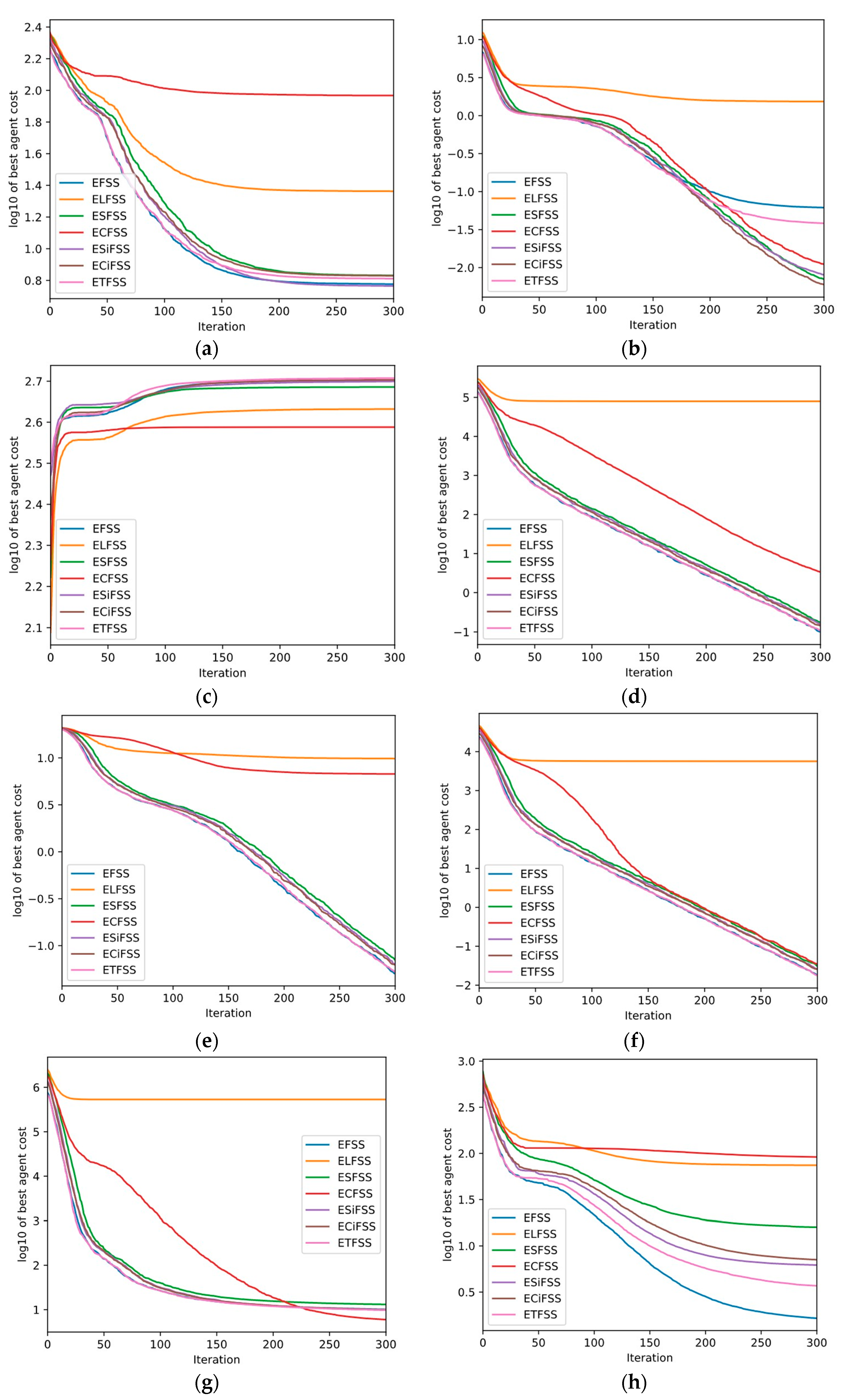

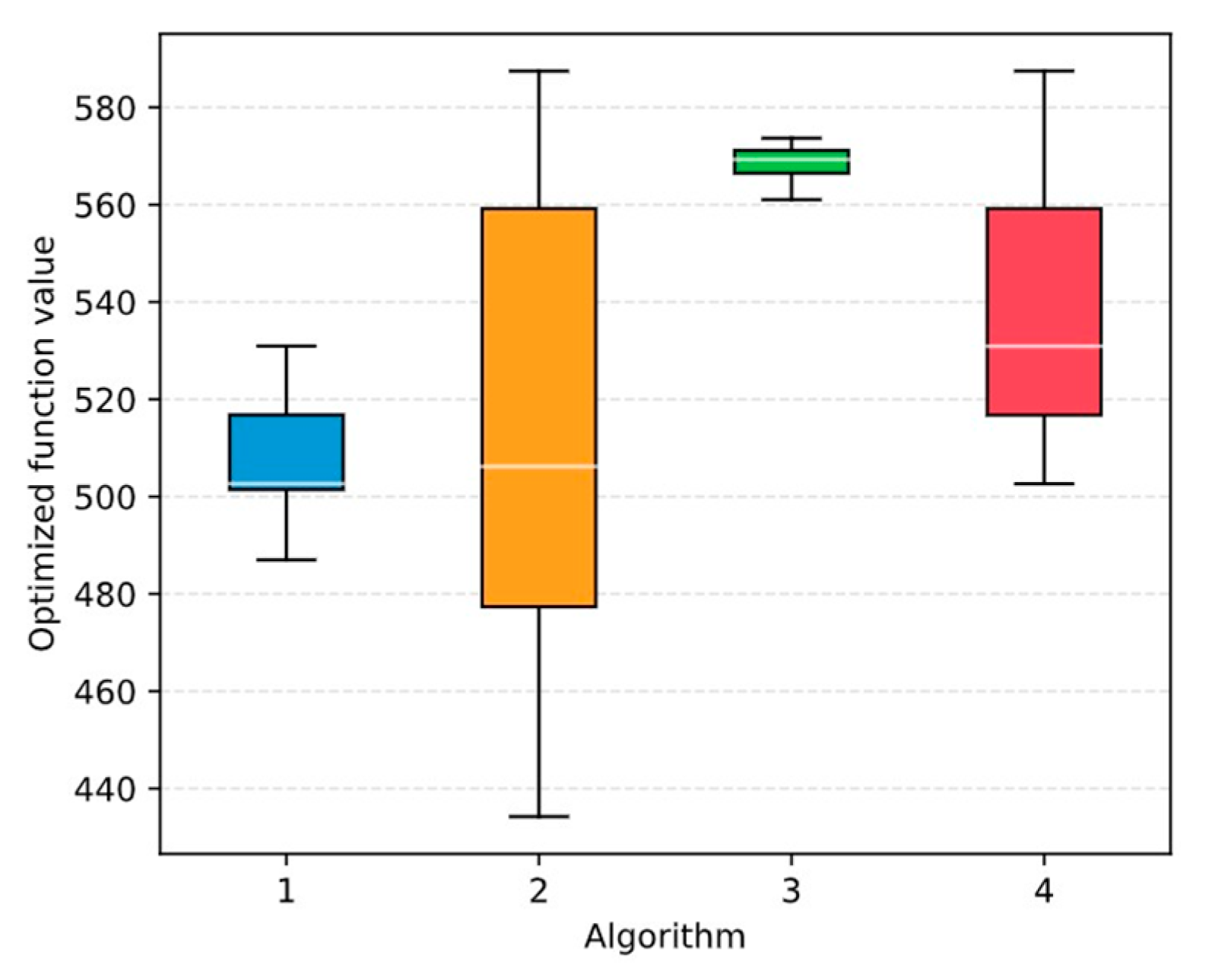

We proposed a novel modification of the FSS algorithm, which was originally invented by Bastos-Filho et al. We incorporated exponential step decay into the original FSS algorithm and used different chaotic mappings to generate initial locations of agents in the population and to generate individual and collective-volitive movement vectors. The modified algorithm with exponential step decay based on the chaotic tent map, abbreviated as ETFSS, showed the most promising results in most of the considered optimization problems. We tested the algorithms on multidimensional test functions for numerical optimization, both unimodal and multimodal, including the Rastrigin, Griewank, Sphere, Ackley, and Rosenbrock functions [43], the Schwefel 1.2 and Styblinski-Tang functions [44], and the Zakharov function [45]. We compared the proposed ETFSS algorithm with the original FSS with linear step decay and with PSO and GA with tournament selection. In order to verify the superiority of ETFSS, we applied the Wilcoxon signed-rank statistical test to compare it with the original algorithm and with the other considered popular optimization techniques. The obtained results confirmed that ETFSS generally performs better than the original FSS algorithm, PSO, or GA. The incorporation of the chaotic tent mapping reduces the time required for the considered biology-inspired heuristic to converge, and the incorporation of exponential step decay improves the accuracy of the obtained approximately optimal solution. Notably, as shown in [33,47], the incorporation of chaotic mappings into PSO and GA can lead to better exploration and exploitation by the algorithms, resulting in improved accuracy of the obtained solutions.
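The contrast between linear and exponential step decay can be sketched as follows. The exponential schedule shown here, a geometric interpolation between the initial and final step sizes, is one plausible form and is an assumption; the function names and parameter values are hypothetical and are not taken from the experiments.

```python
def linear_step(t, t_max, step_init, step_final):
    """Step size that shrinks by the same amount on every iteration."""
    return step_init - (step_init - step_final) * t / t_max

def exponential_step(t, t_max, step_init, step_final):
    """Step size that shrinks by the same factor on every iteration."""
    return step_init * (step_final / step_init) ** (t / t_max)

t_max = 100
step_init, step_final = 1.0, 0.001

# Both schedules share their endpoints but differ in between.
mid_linear = linear_step(50, t_max, step_init, step_final)
mid_exponential = exponential_step(50, t_max, step_init, step_final)
```

With these values the exponential schedule reaches small steps much earlier than the linear one, so more iterations are spent refining the solution near the current optimum, which is consistent with the accuracy gain reported above.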

Further research could cover more chaotic mappings, for example, the Chebyshev map or the Henon map. The incorporation of genetic operators or momentum into the ETFSS algorithm could potentially improve its performance as well. As shown in [48], a hybrid algorithm based on PSO and FSS achieved better performance than the original PSO and FSS algorithms used separately. The proposed ETFSS continuous optimization technique can be applied to any problem that can be solved by continuous evolutionary computation, for example, in machine learning or engineering. Finally, the tent-map-based PRNG could be used to improve the performance of other optimization algorithms that rely heavily on the performance of the incorporated PRNG, including the binary FSS algorithm [49], GA, PSO, ant colony algorithms, cuckoo search, and others.