Abstract

The Spatial–Numerical Association of Response Codes (SNARC), namely the automatic association between smaller numbers and left space and between larger numbers and right space, is often attributed to a Mental Number Line (MNL), on which magnitudes are placed from left to right. Previous studies have suggested that the MNL could be extended to emotional processing. In this study, participants carried out a parity judgment task (categorizing the digits 1, 2, 4 and 5 as even or odd) and an emotional judgment task, in which smilies depicting four emotional expressions (very sad, sad, happy, very happy) were presented. Half of the sample was asked to categorize the emotional valence (positive or negative), and the other half was asked to categorize the emotional intensity (lower or higher). The results of the parity judgment task confirmed the expected SNARC effect. In the emotional judgment task, both subgroups performed better for happy than for sad expressions. Importantly, only in the valence task, and only for happy smilies, was performance better for lower intensity stimuli categorized with the left hand and for higher intensity stimuli categorized with the right hand. The present results show that neither emotional valence nor emotional intensity alone is spatialized from left to right, suggesting that magnitudes and emotions are processed independently of one another, and that the mental representation of emotions could be more complex than the bi-dimensional left-to-right spatialization found for numbers.

1. Introduction

The Spatial–Numerical Association of Response Codes (SNARC) is a well-documented phenomenon, first described by Dehaene and colleagues [1], consisting of the automatic association between left space and smaller numbers, and between right space and larger numbers. It has been shown that, even when the task does not require a quantity judgment (for instance, the categorization of numbers as even or odd, namely the parity judgment task), participants are faster to correctly categorize smaller numbers with the left responding hand and larger numbers with the right responding hand. Moreover, the magnitude of a number is not defined a priori, but is relative to the specific range presented in a task: when participants carried out the parity judgment task with two different ranges of numbers, namely from zero to five and from four to nine, the numbers four and five were categorized more quickly with the right hand in the first range (where they were larger numbers), but more quickly with the left hand in the second range (where they were smaller numbers; [1]).

Starting from this pioneering evidence, further findings supporting this automatic association have been described (e.g., [2,3]) in a wide variety of tasks, such as time categorization [4], physical size [5] and weight [6] judgments, musical pitch [7] and non-symbolic stimulus categorization [8], among others, suggesting that the association between space and magnitude goes beyond the cognitive representation of numbers. The SNARC effect has often been ascribed to the Mental Number Line (MNL), namely a hypothetical horizontal line on which lower magnitudes are placed at the left pole and higher magnitudes at the right pole [1,9]. Several studies have highlighted that the cerebral substrate of the MNL is located in the parietal cortex, an associative cortical area in which both space and magnitude are processed (for a review, see [10]). Thus, the MNL would allow very different kinds of magnitude to be automatically placed along a left-to-right horizontal space. A still-debated point in this domain concerns the possible effect of culture on the MNL. On the one hand, some evidence supports the idea that the left-to-right MNL is universal, since it has been described in non-human species as well as in pre-verbal infants and newborns [11,12]. On the other hand, other findings support an opposite view, in which the reading–writing direction plays a crucial role in determining the direction of the MNL, with individuals using a right-to-left reading–writing system showing an absent or reversed SNARC effect, possibly attributable to a right-to-left MNL [1,13]. These findings shed light on the crucial role of culture, and thus language, in the mental representation of abstract concepts [14], and they are in line with a linguistic framework in which our thoughts are shaped by our language and vice versa [15].
Within this framework, abstract concepts such as space and time would be structured through metaphorical mapping, so that if spatiotemporal metaphors differ across cultures, people’s conceptions of such abstract concepts (e.g., space) will differ [16]. Accordingly, the reading–writing direction could affect the direction of the MNL, and thus the SNARC effect. In the present study, the focus is restricted to Western culture, with its left-to-right reading–writing system, because in this culture the left-to-right MNL appears well established and, as noted above, has been widely confirmed for different dimensions.

Different authors have also proposed that emotional stimuli could be mentally represented along a left-to-right direction, with less intense or negative emotions preferentially associated with the left space (categorized more quickly with the left responding hand), and more intense or positive emotions preferentially associated with the right space (categorized more quickly with the right responding hand). In particular, Holmes and Lourenco [17] proposed the existence of a mental magnitude line: the authors remarked on the difference between “prothetic” dimensions (characterized by quantity, thus answering the question “how much”) and “metathetic” dimensions (characterized by quality, thus answering the question “what kind”). They argued that, although the original idea of the MNL would apply only to prothetic dimensions, the mental magnitude line also applies to metathetic dimensions, including emotions (e.g., categorizing emotions as positive vs. negative should be independent of a quantity judgment). They hypothesized that, if the mental magnitude line is valid, a SNARC-like effect should be found not only for numbers or other quantitative stimuli, but also for qualitative stimuli, such as emotional faces. In their first experiment, eighteen participants were required to use the left/right hand to categorize numerical stimuli as even or odd (range: zero to nine, excluding five), and emotional faces as female or male. Facial stimuli were presented in five emotional poses: neutral, happy, very happy and extremely happy. Results confirmed the authors’ hypothesis: both numbers and emotional faces were automatically placed on a mental magnitude line, with faster left-hand responses for smaller numbers and less happy expressions, and faster right-hand responses for larger numbers and happier expressions, confirming that a left-to-right orientation extends to social metathetic stimuli.
In Experiment 2, the authors wanted to verify whether the results found were due to the fact that the intensity (prothetic) of the emotional expression was automatically evaluated by the observers (from low to high happiness); to disentangle this possibility, they repeated the same protocol as in Experiment 1, but they presented the following expressions: neutral, happy, angry, extremely happy and extremely angry. By means of this manipulation, they showed that right hand responses became faster with higher emotional intensity for both happy and angry expressions, revealing that the left-to-right orientation is attributable to emotional intensity and it is not due to the positive or negative emotional valence of the stimuli. Finally, in a third experiment, they asked participants to categorize the same stimuli used in Experiment 2 either as happy/not happy (happy task), or as angry/not angry (angry task). This latter experiment confirmed the existence of the mental magnitude line: the left-to-right orientation for emotional intensity was flexible, as previously shown by Dehaene et al. for numbers [1], being significant in terms of “happiness” in the happy task and in terms of “angriness” in the angry task (from lower intensity to higher intensity emotional stimuli).

Pitt and Casasanto [18] proposed that emotional stimuli undergo valence mapping, as opposed to the intensity mapping proposed by Holmes and Lourenco [17]. According to Pitt and Casasanto, positive emotions would be associated with the dominant hemispace (right space for right-handers) and negative emotions with the non-dominant hemispace (left space for right-handers). In their Experiment 1a, thirty-two right-handers were required to categorize four emotional words (low and high intensity, positive and negative valence words: bad, horrible, good, perfect) by using the left and right hand. In particular, half of the sample was required to categorize the stimuli as positive or negative in valence (valence judgment task), and the other half was required to categorize the same words as expressing lower or higher emotional intensity (intensity judgment task). The results confirmed the occurrence of valence mapping, with faster left-hand responses for negative words and faster right-hand responses for positive words, but no intensity mapping was found. Interestingly, in a second experiment, Pitt and Casasanto tried to reconcile their results with those described by Holmes and Lourenco [17]. To this aim, the authors measured the mouth area of the facial stimuli used by Holmes and Lourenco, and showed that the intensity mapping described in that study was explained by this physical feature. In other words, Pitt and Casasanto showed that mouth area significantly predicted the results described by Holmes and Lourenco (more intense emotional expressions corresponding to stimuli with a larger mouth area), and they explained this evidence in terms of the SNARC-like effect already described for physical size, with smaller areas preferentially placed on the left and larger areas preferentially placed on the right [5].

Nevertheless, Holmes, Alcat and Lourenco [19] provided important contrasting evidence: they replicated the original task with emotional faces [17], for which Pitt and Casasanto [18] had shown that mouth size explained the original findings, but they occluded the mouths of the faces with a white rectangle. With this elegant manipulation, the authors confirmed their initial results, revealing intensity mapping even when the mouth of the stimuli was not visible to the observers. The authors themselves, however, pointed out that participants could have inferred mouth size from other facial cues (e.g., eye expression, facial shape). To further clarify this issue, they also replicated the task used by Pitt and Casasanto with emotional words, but with a crucial difference: prior to the categorization task, they gave their participants a reference word. The authors started from the hypothesis that, in the task carried out by Pitt and Casasanto, no intensity mapping was found because participants were required to categorize each word as less or more intense without a reference against which each stimulus could be compared. Thus, they first presented a target word, and then asked participants to categorize the experimental words as less or more intense than that target word. In this case, too, the authors found faster responses for less/more intense emotional words categorized with the left/right hand, respectively.

It can be concluded that the possible left-to-right spatialization of emotions remains unclear. In order to disentangle intensity mapping [17] from valence mapping [18] for emotional expressions, the present study employed a protocol similar to that used by Pitt and Casasanto but, in agreement with the original task created by Holmes and Lourenco, emotional words were replaced with emotional expressions. Taking into account the criticisms raised concerning mouth area in facial stimuli [18], as well as the other facial cues that could allow participants to infer mouth area even when the mouth is covered (namely the eyes and facial shape [19]), positive and negative expressions with lower and higher intensity were presented by means of smilies. Emoticons and smilies are widespread in online chats and communications, and these forms of emotional expression have been well documented and studied in the last two decades (e.g., [20,21,22,23]). In smilies, facial emotions are expressed in a simplified and iconic way, with a curved line representing the mouth. In the present study, the length of the curved line representing the mouth was kept constant across all stimuli, which depicted four different emotional expressions (very negative, negative, positive, very positive). The same number of participants as in Experiment 1a of Pitt and Casasanto was tested and, as in their study, the whole sample was divided into two halves: half of the sample was administered a valence judgment task (positive/negative emotions) and the other half an intensity judgment task (lower/higher emotional intensity). Moreover, as in Holmes and Lourenco, the whole sample was also required to carry out a parity judgment task with Arabic numbers.
In contrast to previous studies, in which performance in the intensity and valence tasks was analyzed only as the difference between left-hand and right-hand response times (RTs), in the present study both RTs and accuracy were considered. Furthermore, in order to investigate the possible spatialization of emotional stimuli independently of the expected SNARC effect, the numerical task and the emotional task were analyzed separately. Holmes et al. [17,19] and Pitt and Casasanto [18], in fact, investigated emotional spatialization by regressing the difference between right-hand and left-hand RTs on stimulus magnitude, the same approach used for the numerical magnitude task, in order to obtain the unstandardized slope coefficient of the best-fitting linear regression. In the present study, the numerical and emotional tasks were analyzed separately because the possibility that the two stimulus categories (numbers and emotions) are mentally represented independently of one another cannot be ruled out. Thus, the well-established SNARC effect was expected in the numerical task, which served as a control condition to test the validity of the general procedure. The main aim of this study, however, was to assess the possible presence of emotional valence mapping and emotional intensity mapping, independent of the left-to-right MNL for magnitudes, starting from the hypothesis that both types of automatic mapping could occur, possibly depending on the specific instructions used. In particular, it was hypothesized that valence mapping in the valence task and intensity mapping in the intensity task would be associated with better performance for negative and lower intensity emotions categorized with the left hand, and with better performance for positive and higher intensity emotions categorized with the right hand.
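
For readers unfamiliar with the slope measure used in the earlier studies, the following Python sketch shows how such an unstandardized dRT slope is commonly computed for a single participant. It assumes the usual convention dRT = right-hand RT minus left-hand RT, so that a negative slope indicates a SNARC-like pattern; the function name `drt_slope` and the RT values are hypothetical and are not data from this study or from the cited ones.

```python
import numpy as np

def drt_slope(magnitudes, rt_left, rt_right):
    """Unstandardized slope of dRT regressed on stimulus magnitude (one participant).

    magnitudes: magnitude code of each stimulus (e.g. digits 1, 2, 4, 5)
    rt_left, rt_right: mean correct RTs (ms) per stimulus for each hand
    Assumed convention: dRT = RT(right hand) - RT(left hand).
    """
    x = np.asarray(magnitudes, dtype=float)
    drt = np.asarray(rt_right, dtype=float) - np.asarray(rt_left, dtype=float)
    slope, _intercept = np.polyfit(x, drt, deg=1)  # least-squares straight line
    return float(slope)

# Hypothetical mean RTs (ms) for one participant, for illustration only
digits = [1, 2, 4, 5]
left_rts = [520, 530, 560, 570]
right_rts = [560, 550, 530, 520]
print(round(drt_slope(digits, left_rts, right_rts), 2))  # → -23.0
```

In the slope-based approach, one such slope per participant is typically tested against zero across the sample; the present study instead analyzed Inverse Efficiency Scores with ANOVAs, as described in the Data Analysis section.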

2. Material and Methods

2.1. Participants

Thirty-two healthy participants (16 females) took part in the study. Participants were between 20 and 31 years old (mean age ± standard error: 24.44 ± 0.42 years) and all were right-handed, with a mean handedness score of 76.01 (± 2.25) on the Edinburgh Handedness Inventory [24], in which a score of -100 corresponds to a complete left-hand preference and a score of +100 to a complete right-hand preference. The sample was randomly divided into two subsamples: sixteen participants carried out an intensity judgment task (group “Intensity”: eight females, age: 24.5 ± 0.78 years, handedness: 77.55 ± 3.7), while the remaining sixteen participants carried out a valence judgment task (group “Valence”: eight females, age: 24.38 ± 0.41 years, handedness: 74.47 ± 2.86). The whole sample also completed a parity judgment task (for more details, see the Procedure section). Participants had normal or corrected-to-normal vision, were unaware of the purpose of the study and were tested individually.

2.2. Stimuli

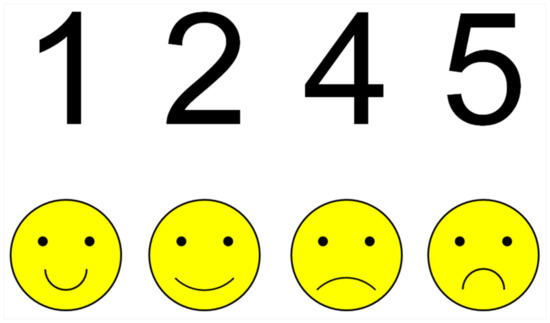

The experiment included a numerical test and an emotional test. All stimuli were images created with Microsoft PowerPoint 2007 (Microsoft Corp., Redmond, WA, USA): in the numerical test, stimuli were the Arabic numbers 1, 2, 4 and 5, presented in black Arial font with a height of 12 cm; in the emotional test, stimuli were “smilies” (see Figure 1). In particular, four different schematic face-like stimuli were used as smilies: a circle with a diameter of 12 cm was created, and two black dots, spaced 5.5 cm apart, were placed inside the circle at 4.5 cm from its uppermost point to represent the eyes. The four smilies differed from one another only in the curved line representing the mouth: two smilies expressed a negative emotion (sadness), obtained by inserting a downward curved line, and in the other two smilies the same curve was rotated by 180° to express a positive emotion (happiness). In all smilies, the curve representing the mouth measured 9.42 cm, but the two stimuli in each emotional category (sadness and happiness) differed from one another in the curvature of the “mouth”, which represented the intensity of the emotional expression. Specifically, two different arcs were calculated with the formula “diameter × 3.14 × angle/360 = arc”: one corresponding to 90° of a circle with a 12 cm diameter (a “wider” curve, representing a less intense expression: 12 cm × 3.14 × 90°/360 = 9.42 cm), and the other corresponding to 180° of a circle with a 6 cm diameter (a “less wide” curve, representing a more intense expression: 6 cm × 3.14 × 180°/360 = 9.42 cm). Thus, four smilies were obtained, showing (i) very happy, (ii) happy, (iii) sad and (iv) very sad emotional expressions, with a mouth curve of the same length. Lines were drawn in black and the “face” was colored yellow (Figure 1).
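
The arc-length arithmetic for the two mouth curves can be checked with a short Python sketch. The helper names are hypothetical; `chord_width` is an addition not described in the text, included only to show that the two equal-length arcs still span different straight-line widths between their end points.

```python
import math

def arc_length(diameter_cm, angle_deg, pi=math.pi):
    """Arc length = diameter x pi x angle / 360 (the formula given in the text)."""
    return diameter_cm * pi * angle_deg / 360

def chord_width(diameter_cm, angle_deg):
    """Straight-line distance between the two ends of the same arc (illustrative addition)."""
    radius = diameter_cm / 2
    return 2 * radius * math.sin(math.radians(angle_deg) / 2)

# Lower-intensity mouth: 90-degree arc of a 12 cm circle
# Higher-intensity mouth: 180-degree arc of a 6 cm circle
for label, diameter, angle in [("lower intensity", 12, 90), ("higher intensity", 6, 180)]:
    print(
        label,
        round(arc_length(diameter, angle, pi=3.14), 2),  # with pi = 3.14, as in the text
        round(arc_length(diameter, angle), 3),           # with full-precision pi
        round(chord_width(diameter, angle), 2),          # end-to-end width of the curve
    )
```

With π ≈ 3.14, as in the text, both arcs measure 9.42 cm; with full-precision π both are about 9.425 cm, so the equality of mouth length does not depend on rounding. The chord of the 90° arc (about 8.49 cm) is longer than that of the 180° arc (6 cm), which is why the lower-intensity curve appears “wider”.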

Figure 1.

Stimuli used: the upper panel shows the Arabic numbers used in the numerical test; the lower panel shows the smilies used in the emotional test (from left to right: very happy, happy, sad, very sad).

2.3. Procedure

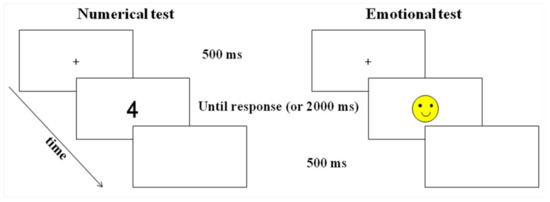

Each participant carried out both the numerical test and the emotional test, and each test was divided into two sessions, which differed in the association between responding hand (left/right) and response. The order of tests and sessions was randomized across participants, and short breaks were inserted between consecutive sessions to allow participants to rest and to focus on the new instructions. Each session consisted of four stimuli (numbers one, two, four and five for the numerical test; smilies with very sad, sad, happy and very happy expressions for the emotional test), each repeated 24 times, for a total of 96 trials per session. In each trial, a black fixation cross was presented in the center of a white screen for 500 ms, followed by the stimulus (number or smiley), presented in the center of the screen until the participant responded or for a maximum of 2000 ms (if no response was given within 2000 ms, the stimulus disappeared and no response was recorded). After the stimulus disappeared, the screen turned black for 500 ms and then the next trial started (Figure 2). The order of the stimuli was randomized within and across participants and sessions.
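
As an illustration of the trial structure, the following Python sketch builds one fully randomized session and computes the maximum duration of a single trial. It is not the E-Prime script used in the study; the constant and function names are hypothetical, unconstrained shuffling is assumed because the text does not mention any restriction on consecutive repetitions, and the 16 practice trials are not modeled.

```python
import random

FIXATION_MS = 500        # fixation cross on a white screen
MAX_STIMULUS_MS = 2000   # stimulus shown until response, at most 2000 ms
BLANK_MS = 500           # black screen before the next trial
REPETITIONS = 24         # each of the four stimuli is repeated 24 times

def build_session(stimuli, repetitions=REPETITIONS, seed=None):
    """Return a shuffled trial list in which every stimulus appears `repetitions` times."""
    rng = random.Random(seed)
    trials = [stimulus for stimulus in stimuli for _ in range(repetitions)]
    rng.shuffle(trials)
    return trials

def max_trial_duration_ms():
    """Upper bound of one trial: fixation + stimulus time-out + blank screen."""
    return FIXATION_MS + MAX_STIMULUS_MS + BLANK_MS

numbers = [1, 2, 4, 5]
smilies = ["very sad", "sad", "happy", "very happy"]
number_session = build_session(numbers, seed=1)
smiley_session = build_session(smilies, seed=2)
print(len(number_session), len(smiley_session), max_trial_duration_ms())  # 96 96 3000
```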

Figure 2.

Schematic representation of the experimental procedure and timeline used in the numerical test (left panel) and in the emotional test (right panel).

Before each session, written instructions were presented and participants were asked to respond as quickly and as accurately as possible. Sixteen practice trials preceded each session, to familiarize participants with the task and the response keys; these responses were excluded from the analyses.

In the numerical test, all participants were required to categorize each number as even or odd (parity judgment task) by pressing one of two keys with the left index finger (key “g”) or the right index finger (key “ù”). The association between the even/odd response and the left/right key was inverted in the two sessions of the test. In the emotional test, the “Intensity” subgroup was asked to judge the emotional expression depicted in each smiley as being of lower or higher intensity, independently of its positive or negative valence (intensity judgment task), whereas the “Valence” subgroup was asked to judge the emotional expression depicted in each smiley as positive or negative, independently of its lower or higher intensity (valence judgment task). For both the valence and the intensity judgment task, the association between the positive/negative or lower/higher response and the left/right key was inverted in the two sessions of the test. Thus, all participants carried out both the numerical test (parity judgment task) and the emotional test (either valence or intensity judgment task) with both possible hand-response associations.
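
The counterbalancing of hand-response associations can be sketched in Python as follows. The data structure and the function name `session_plan` are hypothetical; the sketch assumes that the two sessions of a test were run consecutively, with test order and session order shuffled per participant, since the text does not specify the randomization scheme in more detail.

```python
import random

LEFT_KEY, RIGHT_KEY = "g", "ù"  # response keys named in the text

# Two response categories for each judgment task
RESPONSES = {
    "parity": ("even", "odd"),
    "valence": ("negative", "positive"),
    "intensity": ("lower", "higher"),
}

def session_plan(emotional_task, seed=None):
    """Return (task, {key: response}) for the four sessions of one participant.

    emotional_task: "valence" or "intensity", depending on the subgroup.
    Within each test, the two sessions use opposite response-to-key mappings.
    """
    rng = random.Random(seed)
    tests = ["parity", emotional_task]
    rng.shuffle(tests)  # randomize which test comes first
    plan = []
    for task in tests:
        first, second = RESPONSES[task]
        mappings = [
            {LEFT_KEY: first, RIGHT_KEY: second},
            {LEFT_KEY: second, RIGHT_KEY: first},
        ]
        rng.shuffle(mappings)  # randomize which mapping comes first
        plan.extend((task, mapping) for mapping in mappings)
    return plan

for task, mapping in session_plan("valence", seed=3):
    print(task, mapping)
```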

The whole protocol was controlled by means of the E-Prime software (Psychology Software Tools Inc., Pittsburgh, PA), and lasted about 15 min. The experimental procedures were conducted in accordance with the guidelines of the Declaration of Helsinki.

2.4. Data Analysis

Statistical analyses were carried out using Statistica 8.0 software (StatSoft, Inc., Tulsa, OK, USA), with a significance threshold of p < 0.05. Data were analyzed by means of repeated-measures analyses of variance (ANOVAs), and post-hoc comparisons were carried out using the Duncan test. In order to consider both accuracy and response times in a single measure, Inverse Efficiency Scores (IES) were used as the dependent variable. IES were obtained by dividing the mean response time of correct responses by the proportion of correct responses in each condition [25,26,27], so that lower IES correspond to faster and more accurate responses, that is, to better performance. Response times shorter than 100 ms or longer than 1000 ms were excluded.
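
The IES computation can be sketched in Python for a single participant and condition. This is a minimal illustration, not the Statistica procedure used in the study; the function name is hypothetical, and it assumes (the text does not specify this) that trimmed trials are removed before both the mean correct RT and the proportion of correct responses are computed.

```python
import numpy as np

def inverse_efficiency(rts_ms, correct, low_ms=100, high_ms=1000):
    """Inverse Efficiency Score (ms) for one participant in one condition.

    rts_ms: response time of each trial (ms)
    correct: True where the response was correct
    Assumption: trials outside [low_ms, high_ms] are discarded first.
    """
    rts = np.asarray(rts_ms, dtype=float)
    ok = np.asarray(correct, dtype=bool)
    keep = (rts >= low_ms) & (rts <= high_ms)  # RT trimming window
    rts, ok = rts[keep], ok[keep]
    mean_correct_rt = rts[ok].mean()  # mean RT of correct responses only
    proportion_correct = ok.mean()    # proportion of correct responses
    return mean_correct_rt / proportion_correct  # lower IES = better performance

# Hypothetical trials: the 80 ms and 1200 ms responses are trimmed
rts = [500, 520, 540, 560, 80, 1200]
correct = [True, True, True, False, True, True]
print(round(inverse_efficiency(rts, correct), 2))  # 693.33
```

Because the mean correct RT is divided by a proportion, the same speed yields a higher (worse) IES when accuracy drops; a cell with no correct responses would need separate handling.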

An initial ANOVA was carried out on the performance of the whole sample in the parity judgment task, with responding hand (left, right), parity (even, odd) and magnitude (smaller, larger) as within-subject factors. Then, two separate ANOVAs were carried out on the emotional test, with responding hand (left, right), valence (sad, happy) and intensity (low, high) as within-subject factors: one ANOVA involved the responses of the “Intensity” subsample (intensity judgment task), the other the responses of the “Valence” subsample (valence judgment task). In a preliminary step, participants’ gender was included as a between-subjects factor in each of the three ANOVAs (parity, intensity and valence judgment tasks); since it was not significant and did not interact with the other factors, it was excluded from the final analyses.
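
Because every factor in these ANOVAs has only two levels, each main effect and interaction has one degree of freedom, and its repeated-measures F equals the squared one-sample t of a per-participant contrast score. The Python sketch below uses this equivalence to illustrate the 2 × 2 × 2 design of the emotional tasks; it is not the Statistica analysis used in the study, does not implement the Duncan post-hoc test, and runs on simulated IES values (the function name and data are hypothetical).

```python
import itertools
import numpy as np

def within_effect_F(cells, weights):
    """F(1, n - 1) for a 1-df effect in a fully within-subjects 2 x 2 x ... design.

    cells: array (n_participants, n_cells), one IES per participant and cell
    weights: +1/-1 contrast weights over the cells that define the effect
    """
    scores = np.asarray(cells, dtype=float) @ np.asarray(weights, dtype=float)
    n = scores.size
    t = scores.mean() / (scores.std(ddof=1) / np.sqrt(n))  # one-sample t against 0
    return float(t ** 2)

# Cell order: every combination of hand, valence, intensity coded -1/+1
codes = np.array(list(itertools.product([-1, 1], repeat=3)))
hand, valence, intensity = codes[:, 0], codes[:, 1], codes[:, 2]
effects = {
    "hand": hand,
    "valence": valence,
    "intensity": intensity,
    "hand x valence x intensity": hand * valence * intensity,
}

# Simulated IES for 16 participants x 8 cells (not the study's data)
rng = np.random.default_rng(0)
ies = rng.normal(600, 30, size=(16, 8))
for name, w in effects.items():
    print(f"{name}: F(1, {ies.shape[0] - 1}) = {within_effect_F(ies, w):.2f}")
```

As a check, for a single two-level factor (two columns of data) this F coincides with the one obtained from the usual sums-of-squares decomposition of a one-way repeated-measures ANOVA.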

3. Results

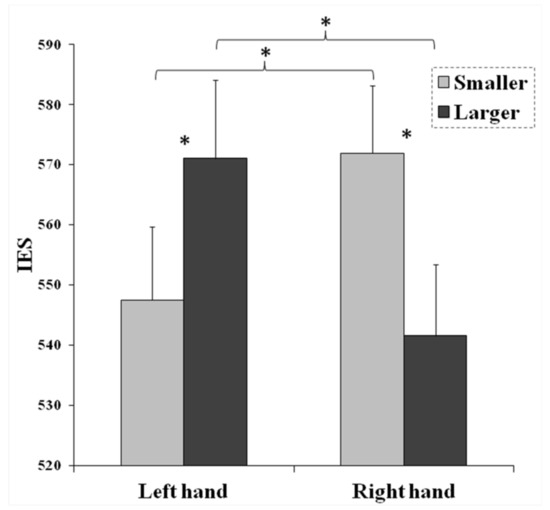

The first ANOVA carried out on the parity judgments revealed a significant main effect of parity (F(1,31) = 25.54, MSE = 1559, p < 0.001, ηp² = 0.45): performance was better (faster and more accurate) for even (IES: 545.55 ± 8.29) than for odd numbers (IES: 570.5 ± 8.78). Importantly, the interaction between responding hand and magnitude was significant (F(1,31) = 17.22, MSE = 2694, p < 0.001, ηp² = 0.36), confirming the expected SNARC effect (Figure 3). All post-hoc comparisons were significant, showing better performance with the left hand for smaller than for larger numbers (p = 0.016), and with the right hand for larger than for smaller numbers (p = 0.004). Moreover, when smaller numbers were presented, performance was better with the left hand than with the right hand (p = 0.016), whereas when larger numbers were presented, performance was better with the right hand than with the left hand (p = 0.004).

Figure 3.

Interaction between responding hand (leftmost columns: left hand, rightmost columns: right hand) and magnitude (light gray: smaller numbers, one and two; dark gray: larger numbers, four and five) in the parity judgment task (numerical test). Error bars represent standard errors and asterisks show the significant comparisons.

In the ANOVA on the intensity judgment task, only the main effect of valence was significant (F(1,15) = 5.45, MSE = 773, p = 0.034, ηp² = 0.27), showing better performance for happy (IES: 579.81 ± 28.67) than for sad expressions (IES: 591.29 ± 30.77). No other main effects or interactions were significant.

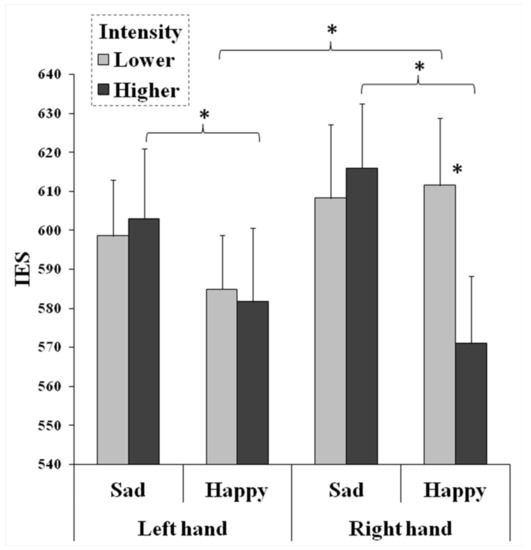

The ANOVA on the valence judgment task confirmed a significant main effect of valence (F(1,15) = 6.85, MSE = 1720, p = 0.019, ηp² = 0.31), with better performance for happy (IES: 587.28 ± 7.83) than for sad expressions (IES: 606.46 ± 8.18). Furthermore, the three-way interaction between responding hand, intensity and valence was significant (F(1,15) = 5.6, MSE = 593, p = 0.032, ηp² = 0.27; Figure 4). Post-hoc comparisons showed that, in the valence judgment task, higher intensity happy expressions were categorized better than higher intensity sad expressions, with both the left hand (p = 0.036) and the right hand (p < 0.001). Importantly, with the right hand, happy expressions were categorized better when their intensity was higher than when it was lower (p < 0.001), whereas lower intensity happy expressions were categorized better with the left hand than with the right hand (p = 0.012).
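
The effect sizes reported in this section can be cross-checked from the F values and their degrees of freedom with the standard identity ηp² = F·df_effect / (F·df_effect + df_error). The short Python sketch below applies it to the reported statistics; the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# (F, df_effect, df_error) as reported in the Results
reported = {
    "parity, parity judgment task": (25.54, 1, 31),
    "hand x magnitude, parity judgment task": (17.22, 1, 31),
    "valence, intensity judgment task": (5.45, 1, 15),
    "valence, valence judgment task": (6.85, 1, 15),
    "hand x intensity x valence, valence judgment task": (5.6, 1, 15),
}
for label, (f_value, df1, df2) in reported.items():
    print(f"{label}: {partial_eta_squared(f_value, df1, df2):.2f}")
```

The recovered values (0.45, 0.36, 0.27, 0.31 and 0.27) match the reported ηp² values to two decimals.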

Figure 4.

Interaction among responding hand (leftmost columns: left hand, rightmost columns: right hand), intensity (light gray: lower intensity, dark gray: higher intensity) and valence (sad smiley, happy smiley) in the valence judgment task (emotional test). Error bars represent standard errors and asterisks show the significant comparisons.

4. Discussion

The present study aimed to investigate whether intensity mapping and valence mapping occur during facial emotion categorization, in line with an MNL expected in a Western sample with a left-to-right reading/writing system. The experimental idea starts from two different findings. On the one hand, Holmes and Lourenco [17] found that participants were faster at categorizing less/more intense emotional expressions with the left/right hand, respectively, independent of emotional valence (namely, intensity mapping). The authors concluded that possibly all stimulus dimensions could be mentally placed in a left-to-right mental space, similar to the left-to-right placement already found for numbers (SNARC effect). On the other hand, Pitt and Casasanto [18] challenged this conclusion, showing that the intensity mapping described by Holmes and Lourenco could be explained by the mouth size of the stimuli used, and that this intensity mapping disappeared when emotional words were used as stimuli. Even though a subsequent study confirmed intensity mapping with facial stimuli whose mouths were occluded [19], it remains unclear whether, in that case, participants could infer mouth size from other facial cues. In the present study, this possible confound was bypassed by presenting emotional smilies instead of human faces. In this way, the mouth of the stimuli was represented by a single curved line, which has no area, thus avoiding the mouth-area problem. Moreover, although simplistic, smilies also allowed us to exclude other facial cues (such as facial shape and eye expression) that observers could exploit to infer the expression of the mouth.
In summary, emotional smilies allow us to control for possible confounds by ensuring that a positive or negative emotion of lower or higher intensity is conveyed only by a curved line representing the mouth, and this line can be of the same length in all emotional expressions, avoiding other physical confounds (e.g., a left/right preference for shorter/longer lines). Moreover, a classical parity judgment task was also administered to all participants in order to validate the specific protocol used. The emotional and numerical tasks, however, were analyzed separately, in order to investigate each task independently of the other and in an attempt to reconcile the contrasting results previously obtained with emotional stimuli.

The present results confirmed the SNARC effect for one-digit numbers, even though the range used was very small (only four stimuli were presented), showing better performance with the left hand when smaller numbers, and with the right hand when larger numbers, were categorized as even or odd (see also [28]). Moreover, performance was better for even than for odd numbers, confirming previous evidence of an “odd effect” [29]. Thus, all the expected findings were confirmed in the numerical task, showing that the protocol used (number of trials, response keys) was adequate to reveal the left-to-right spatialization of the stimuli presented.

In both the valence and the intensity judgment tasks, positive emotions were categorized better than negative emotions, revealing an advantage in recognizing positive over negative expressions. This result could be explained by the fact that the mouth is the most important facial region for recognizing happiness, whereas sadness and anger are mainly recognized from the eyes [30]. In this study, all smilies had the same non-emotional representation of the eyes (two black dots), with emotional valence and intensity conveyed only by the line representing the mouth. This could have led participants to focus mainly on the mouth and thus to process the positive expressions more efficiently.

Importantly, only in the valence judgment task was the interaction between responding hand, valence and intensity significant. This interaction confirmed an advantage for positive over negative expressions at higher intensity, and also revealed a pattern that seems consistent with both valence mapping and intensity mapping: with the right hand, the positive expression was categorized better, in terms of both accuracy and response times, when its intensity was higher than when it was lower, a result consistent both with valence mapping (positive emotion better categorized with the right hand) and with intensity mapping (higher intensity emotion better categorized with the right hand). In the same vein, when emotional intensity was lower, the positive expression was categorized better with the left than with the right hand. This pattern of results suggests that neither valence alone nor intensity alone is mentally placed on a left-to-right line, but that the two can interact. In other words, intensity mapping can occur (lower/higher intensity better categorized with the left/right hand, respectively), but only for the positive expression and only when a valence categorization is required. As specified above, the fact that this interaction was significant only for the positive expression could be attributed to the greater salience of the mouth in the recognition of happiness [30], and to the fact that, in the stimuli used here, only the mouth varied across stimuli, conveying the specific emotion. Based on the present results, it can be concluded that an interaction exists between space and emotions that involves both emotional valence and emotional intensity. This pattern of results can be viewed as being in line with the existence of intensity mapping, as proposed by Holmes et al. [17,19], but it also highlights the importance of directing the observers’ attention to emotional valence in order to find such evidence of mapping, in accordance with Pitt and Casasanto [18].

However, another possible explanation can be proposed, given that, in contrast to the abovementioned studies, the present results were obtained by analyzing the numerical task and the emotional task separately. Indeed, the present results cast doubt on the existence of a bi-dimensional left-to-right mental arrangement of facial emotional stimuli. As previously proposed, the MNL, which is often considered the basis of the SNARC effect, is functionally attributed to the activity of the parietal cortex, a multisensory associative area responsible for processing both space and magnitudes [9]. In support of this view, the parietal lobe is well known to be crucial for visuospatial processing, and parietal damage can cause Gerstmann syndrome, which involves dyscalculia, agraphia, finger agnosia, and left–right confusion [31]. By contrast, no single cerebral area is involved in all emotional processing; rather, different cortical and subcortical structures contribute to the detection of emotions [32,33,34,35], including temporo-parietal sites and prefrontal areas, as well as subcortical limbic structures. Moreover, the cerebral areas involved in emotion detection may differ across stimulus categories [36], so that results obtained with emotional words are hardly comparable with those obtained with emotional faces [37,38,39].
In light of previous studies, it can be hypothesized that the intensity mapping described by Holmes and colleagues [17,19] can be attributed to the mouth region of the facial stimuli used, as the authors themselves proposed, and that the valence mapping described by Pitt and Casasanto with emotional words [18] might involve cerebral circuits different from those involved in processing emotional faces (it must be noted that, with a modified protocol, Holmes et al. [19] did not find support for valence mapping with emotional words). Thus, it can be speculated that the cerebral substrates for magnitudes and for emotions (in particular, emotional faces) differ, being localized in the parietal cortex for magnitudes and more distributed for emotions. At a cerebral level, a left-to-right orientation in (imagined or real) space corresponds to a right-to-left hemispheric involvement, given that perceptual and motor pathways are organized contralaterally (i.e., left hemisphere/right hemispace and vice versa). Under such a mapping, the right hemisphere would be specialized in processing negative emotions (valence mapping) and/or lower intensity emotions (intensity mapping), and the left hemisphere in processing positive and/or higher intensity emotions. This hemispheric asymmetry has been widely investigated in the literature on emotional processing and is known as the “valence hypothesis” [40]. This theory, however, is usually contrasted with another well-documented hypothesis, which postulates a right-hemispheric superiority for all emotions, regardless of valence (the “right hemisphere hypothesis”; see [41]).
These two main theories on the cerebral substrate of emotional processing have been reconciled in a model in which an initial posterior right-hemispheric activation for all emotional stimuli is followed by frontal left/right activity specific to positive/negative emotions, respectively [42,43]. Within this framework, it could be hypothesized that the emotional pathways are activated only when a valence judgment is required. In that case, a negative emotion would activate only the right hemisphere (consistent with both the valence hypothesis and the right hemisphere hypothesis), whereas a positive emotion would also activate the left hemisphere (valence hypothesis). It can be speculated that this is why a left/right asymmetry occurs only for the positive expression: the activation of both cerebral hemispheres by the happy expression (posterior right-hemispheric activity followed by anterior left-hemispheric activity) would lead to a behavioral asymmetry only when the observers’ attention is focused on emotional valence (otherwise, the emotional pathways would not be activated), and only when a positive expression is presented (otherwise, the negative valence would involve only the right hemisphere). This speculation is also consistent with the presence of the expected SNARC effect for numbers in the present sample, suggesting that the MNL underlying this left-to-right asymmetry is independent of possible asymmetries for emotional stimuli. In light of this, it could be concluded that asymmetries exist for both magnitudes and emotions, but that they do not depend upon one another and should be considered as being based on different cerebral circuits.

The well-established left-to-right MNL for numbers was found here, confirming that the interaction between responding hand and magnitude is so strong that it can also be found with a restricted range of numbers (from one to five). However, this bi-dimensional mental arrangement, suited to simple stimuli, could be inadequate for more complex stimuli, such as emotional faces, whose processing depends on several characteristics. These characteristics interact with one another, yielding a complex pattern that involves hemispheric asymmetries, emotional intensity and valence, and possibly other variables not considered here (e.g., stimulus category). For instance, whereas the SNARC effect for magnitudes has also been described with stimuli presented in the auditory modality [44], different patterns of asymmetries have been found for visual and auditory emotional stimuli [45,46]. This is further evidence that emotional processing is a complex cognitive task, which cannot be reduced to a simple left-to-right bi-dimensional organization taking place in a specific cortical area.
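
In parity judgment data, the SNARC effect is commonly quantified with a regression approach (see, e.g., [2]): for each participant and digit, the mean response time (RT) of left-hand responses is subtracted from that of right-hand responses (dRT), dRT is regressed on digit magnitude, and the individual slopes are tested against zero, a negative slope indicating the SNARC effect. The Python sketch below only illustrates this computation; it is not necessarily the analysis used in the present study, and the data layout (a dictionary mapping each digit to lists of correct RTs per hand) and the function names snarc_slope and one_sample_t are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def snarc_slope(rts_by_digit):
    """Least-squares slope of dRT (right-hand RT minus left-hand RT) on digit magnitude.

    rts_by_digit: hypothetical layout for one participant, mapping each digit to a
    dict with "left" and "right" lists of correct-response RTs (ms).
    A negative slope means that larger digits are relatively faster with the
    right hand and smaller digits with the left hand (SNARC-like pattern).
    """
    digits = sorted(rts_by_digit)
    # dRT per digit: positive values = left hand faster, negative = right hand faster
    drt = [mean(rts_by_digit[d]["right"]) - mean(rts_by_digit[d]["left"]) for d in digits]
    mean_x, mean_y = mean(digits), mean(drt)
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(digits, drt))
    den = sum((x - mean_x) ** 2 for x in digits)
    return num / den


def one_sample_t(slopes):
    """t statistic testing the mean of per-participant slopes against zero (df = n - 1)."""
    n = len(slopes)  # statistics.stdev needs at least two values
    return mean(slopes) / (stdev(slopes) / sqrt(n))
```

Each participant’s slope would be computed from the parity-task data and the resulting list passed to one_sample_t; in practice, a statistics package such as SciPy (scipy.stats.ttest_1samp) would also return the corresponding p-value.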

A final remark concerns the fact that the present results, like most of the previous findings discussed in this study, were obtained with Western participants, for whom the left-to-right reading and writing direction coincides with the expected (and confirmed) left-to-right placement of numbers (i.e., the SNARC effect as a consequence of the MNL). As mentioned in the Introduction, however, the directionality of the MNL is much less clear in cultures with the opposite reading and writing direction [47], and future studies should take possible cross-cultural differences in this domain into consideration, in order to shed more light on the role of language (i.e., culture) in the mental representation of magnitudes, space, time, emotions, and so on.

5. Conclusions

The present results showed that neither clear valence mapping nor clear intensity mapping occurs when participants, who show the SNARC effect in a parity judgment task, are asked to categorize stimuli that resemble faces. In fact, the interactions between responding hand and valence, and between responding hand and intensity, were not significant. Nevertheless, the interaction between responding hand, valence and intensity suggests that when participants’ attention is focused on emotional valence (a qualitative or metathetic dimension), positive emotions with lower and higher intensity are better categorized with the left and right hand, respectively. Emotions are complex dimensions, and their mental representation may be more complex than a left-to-right bi-dimensional magnitude line, possibly involving different features, including valence and intensity, among others, as well as both cortical and subcortical structures.

Funding

This research received no external funding.

Acknowledgments

I would like to thank Dalila Lecci, who helped me recruit and test participants, and Luca Tommasi for his valuable suggestions.

Conflicts of Interest

The author declares that she has no conflicts of interest.

References

1. Dehaene, S.; Bossini, S.; Giraux, P. The mental representation of parity and number magnitude. J. Exp. Psychol. Gen. 1993, 122, 371–396.

2. Fias, W. The Importance of Magnitude Information in Numerical Processing: Evidence from the SNARC Effect. Math. Cogn. 1996, 2, 95–110.

3. MacNamara, A.; Loetscher, T.; Keage, H.A.D. Mapping of non-numerical domains on space: A systematic review and meta-analysis. Exp. Brain Res. 2017, 236, 335–346.

4. Ishihara, M.; Keller, P.E.; Rossetti, Y.; Prinz, W. Horizontal spatial representations of time: Evidence for the STEARC effect. Cortex 2008, 44, 454–461.

5. Ren, P.; Nicholls, M.E.R.; Ma, Y.; Chen, L. Size Matters: Non-Numerical Magnitude Affects the Spatial Coding of Response. PLoS ONE 2011, 6, e23553.

6. Holmes, K.J.; Lourenco, S.F. When Numbers Get Heavy: Is the Mental Number Line Exclusively Numerical? PLoS ONE 2013, 8, e58381.

7. Weis, T.; Estner, B.; Van Leeuwen, C.; Lachmann, T. SNARC (spatial–numerical association of response codes) meets SPARC (spatial–pitch association of response codes): Automaticity and interdependency in compatibility effects. Q. J. Exp. Psychol. 2016, 69, 1366–1383.

8. Nemeh, F.; Humberstone, J.; Yates, M.J.; Reeve, R.A. Non-symbolic magnitudes are represented spatially: Evidence from a non-symbolic SNARC task. PLoS ONE 2018, 13, e0203019.

9. Walsh, V. A theory of magnitude: Common cortical metrics of time, space and quantity. Trends Cogn. Sci. 2003, 7, 483–488.

10. Hubbard, E.M.; Piazza, M.; Pinel, P.; Dehaene, S. Interactions between number and space in parietal cortex. Nat. Rev. Neurosci. 2005, 6, 435–448.

11. Rugani, R.; De Hevia, M.D. Number-space associations without language: Evidence from preverbal human infants and non-human animal species. Psychon. Bull. Rev. 2016, 24, 352–369.

12. Di Giorgio, E.; Lunghi, M.; Rugani, R.; Regolin, L.; Barba, B.D.; Vallortigara, G.; Simion, F. A mental number line in human newborns. Dev. Sci. 2019, 22, e12801.

13. Zebian, S. Linkages between Number Concepts, Spatial Thinking, and Directionality of Writing: The SNARC Effect and the REVERSE SNARC Effect in English and Arabic Monoliterates, Biliterates, and Illiterate Arabic Speakers. J. Cogn. Cult. 2005, 5, 165–190.

14. Pitt, B.; Casasanto, D. The correlations in experience principle: How culture shapes concepts of time and number. J. Exp. Psychol. Gen. 2019.

15. Deutscher, G. Through the Language Glass: Why the World Looks Different in Other Languages, 1st ed.; Macmillan: New York, NY, USA, 2011.

16. Boroditsky, L. Metaphoric structuring: Understanding time through spatial metaphors. Cognition 2000, 75, 1–28.

17. Holmes, K.J.; Lourenco, S.F. Common spatial organization of number and emotional expression: A mental magnitude line. Brain Cogn. 2011, 77, 315–323.

18. Pitt, B.; Casasanto, D. Spatializing Emotion: No Evidence for a Domain-General Magnitude System. Cogn. Sci. 2017, 42, 2150–2180.

19. Holmes, K.J.; Alcat, C.; Lourenco, S.F. Is Emotional Magnitude Spatialized? A Further Investigation. Cogn. Sci. 2019, 43, e12727.

20. Derks, D.; Bos, A.; Von Grumbkow, J. Emoticons in Computer-Mediated Communication: Social Motives and Social Context. CyberPsychol. Behav. 2008, 11, 99–101.

21. Churches, O.; Nicholls, M.; Thiessen, M.; Kohler, M.J.; Keage, H.A.; Nicholls, M.E.R. Emoticons in mind: An event-related potential study. Soc. Neurosci. 2014, 9, 196–202.

22. Thompson, D.; MacKenzie, I.G.; Leuthold, H.; Filik, R. Emotional responses to irony and emoticons in written language: Evidence from EDA and facial EMG. Psychophysiology 2016, 53, 1054–1062.

23. Ganster, T.; Eimler, S.C.; Krämer, N.C. Same Same but Different!? The Differential Influence of Smilies and Emoticons on Person Perception. Cyberpsychol. Behav. Soc. Netw. 2012, 15, 226–230.

24. Oldfield, R. The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia 1971, 9, 97–113.

25. Prete, G.; D’Anselmo, A.; Brancucci, A.; Tommasi, L. Evidence of a Right Ear Advantage in the absence of auditory targets. Sci. Rep. 2018, 8, 15569.

26. Prete, G.; Fabri, M.; Foschi, N.; Tommasi, L. Asymmetry for Symmetry: Right-Hemispheric Superiority in Bi-Dimensional Symmetry Perception. Symmetry 2017, 9, 76.

27. Prete, G.; Malatesta, G.; Tommasi, L. Facial gender and hemispheric asymmetries: A hf-tRNS study. Brain Stimul. 2017, 10, 1145–1147.

28. Prete, G.; Tommasi, L. Exploring the interactions among SNARC effect, finger counting direction and embodied cognition. PeerJ 2020, in press.

29. Hines, T.M. An odd effect: Lengthened reaction times for judgments about odd digits. Mem. Cogn. 1990, 18, 40–46.

30. Eisenbarth, H.; Alpers, G.W. Happy mouth and sad eyes: Scanning emotional facial expressions. Emotion 2011, 11, 860–865.

31. Gerstmann, J. Syndrome of finger agnosia, disorientation for right and left, agraphia and acalculia: Local diagnostic value. Arch. Neurol. Psychiatry 1940, 44, 398.

32. Johnson, M.H. Subcortical face processing. Nat. Rev. Neurosci. 2005, 6, 766–774.

33. Prete, G.; Capotosto, P.; Zappasodi, F.; Tommasi, L. Contrasting hemispheric asymmetries for emotional processing from event-related potentials and behavioral responses. Neuropsychology 2018, 32, 317–328.

34. Wyczesany, M.; Capotosto, P.; Zappasodi, F.; Prete, G. Hemispheric asymmetries and emotions: Evidence from effective connectivity. Neuropsychologia 2018, 121, 98–105.

35. Prete, G.; Capotosto, P.; Zappasodi, F.; Laeng, B.; Tommasi, L. The cerebral correlates of subliminal emotions: An electroencephalographic study with emotional hybrid faces. Eur. J. Neurosci. 2015, 42, 2952–2962.

36. Ovaysikia, S.; Tahir, K.A.; Chan, J.L.; DeSouza, J. Word wins over face: Emotional stroop effect activates the frontal cortical network. Front. Hum. Neurosci. 2011, 4, 4.

37. Fusar-Poli, P.; Placentino, A.; Carletti, F.; Landi, P.; Allen, P.; Surguladze, S.; Benedetti, F.; Abbamonte, M.; Gasparotti, R.; Barale, F.; et al. Functional atlas of emotional faces processing: A voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. J. Psychiatry Neurosci. 2009, 34, 418–432.

38. Vuilleumier, P.; Pourtois, G. Distributed and interactive brain mechanisms during emotion face perception: Evidence from functional neuroimaging. Neuropsychologia 2007, 45, 174–194.

39. Citron, F.M. Neural correlates of written emotion word processing: A review of recent electrophysiological and hemodynamic neuroimaging studies. Brain Lang. 2012, 122, 211–226.

40. Davidson, R.J.; Mednick, D.; Moss, E.; Saron, C.; Schaffer, C.E. Ratings of emotion in faces are influenced by the visual field to which stimuli are presented. Brain Cogn. 1987, 6, 403–411.

41. Gainotti, G. Emotional Behavior and Hemispheric Side of the Lesion. Cortex 1972, 8, 41–55.

42. Killgore, W.D.; Yurgelun-Todd, D.A. The right-hemisphere and valence hypotheses: Could they both be right (and sometimes left)? Soc. Cogn. Affect. Neurosci. 2007, 2, 240–250.

43. Prete, G.; Laeng, B.; Fabri, M.; Foschi, N.; Tommasi, L. Right hemisphere or valence hypothesis, or both? The processing of hybrid faces in the intact and callosotomized brain. Neuropsychologia 2015, 68, 94–106.

44. Nuerk, H.-C.; Wood, G.; Willmes, K. The Universal SNARC Effect: The association between number magnitude and space is amodal. Exp. Psychol. 2005, 52, 187–194.

45. Erhan, H.; Borod, J.C.; Tenke, C.; Bruder, G.E. Identification of Emotion in a Dichotic Listening Task: Event-Related Brain Potential and Behavioral Findings. Brain Cogn. 1998, 37, 286–307.

46. Prete, G.; Marzoli, D.; Brancucci, A.; Fabri, M.; Foschi, N.; Tommasi, L. The processing of chimeric and dichotic emotional stimuli by connected and disconnected cerebral hemispheres. Behav. Brain Res. 2014, 271, 354–364.

47. Feldman, A.; Oscar-Strom, Y.; Tzelgov, J.; Berger, A. Spatial–numerical association of response code effect as a window to mental representation of magnitude in long-term memory among Hebrew-speaking children. J. Exp. Child Psychol. 2019, 181, 102–109.

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).