There are basically three types of forecasting techniques: numerical weather prediction, image-based prediction and statistical and machine learning (ML) methods ranging for a period of short-term, medium-term and long-term predictions. Solar irradiance data are time series data, i.e., ranging for a period of time in sequential manner, and traditionally, linear forecasting methods were widely used because they were well understood, easy to compute and provided stable forecasts. Autoregression (AR) [

8], the moving-average model (MA), autoregressive with exogeous inputs (ARX) [

9], autoregressive moving-average (ARMA) [

10], autoregressive moving-average with exogeous inputs (ARMAX) [

11], autoregressive integrated moving average (ARIMA) [

12], seasonal autoregressive integrated moving average (SARIMA) [

13], autoregressive integrated moving-average with exogeous inputs (ARIMAX) [

14], seasonal autoregressive integrated moving-average with exogeous inputs (SARIMAX) [

15] and generalized autoregressive score (GAS) [

16,

17] are the traditional forecast models. Belmahdi et al. [

18] proposed the ARMA and ARIMA models for forecasting the global solar radiation parameter. The models showed improvement in terms of forecast error; however, only the solar radiation parameter was considered. The geographical or meteorological parameters were not employed. These are mostly linear over the previous inputs or states; hence, they are not adapted to many real-world applications. One major limitation is their pre-assumed linearity form of the data that cannot capture complex nonlinear patterns. The challenges also include lower forecast accuracy and less scalability for big data. Yagli et al. [

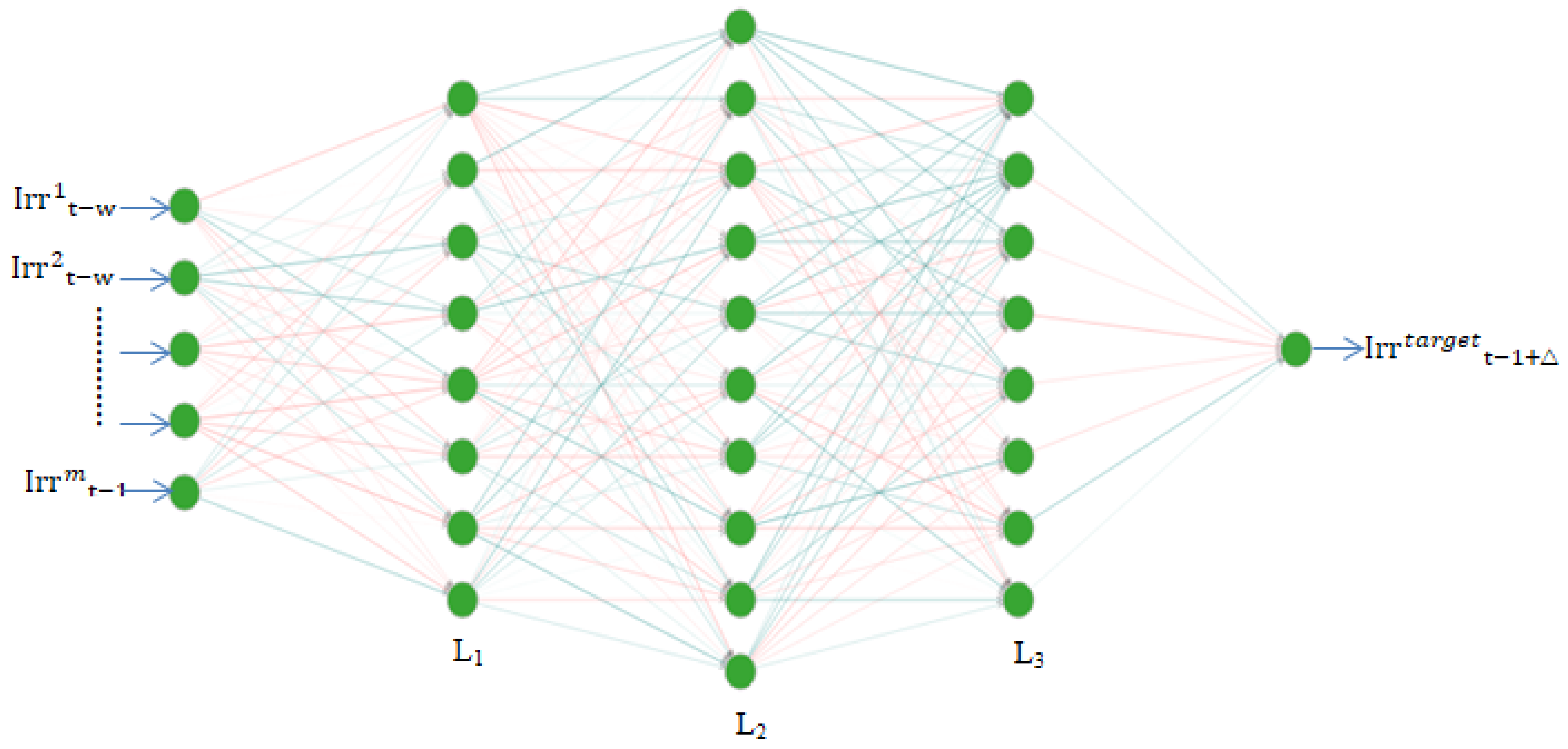

19] performed a study using satellite-derived irradiance data from multiple locations on 68 machine learning models. The research proposed that multilayer perceptron (MLP) models were one of the best performers and, for assessment of model performance, resulted in a daily or short evaluation period as advised. Following this, the neural network models used here were focused for day-ahead forecasting. The neural network models (NN) work on nonlinear transforming layers with data not required to be necessarily stationary. Neural networks are also strongly capable of determining the complex structures in data, working as an efficient tool for reconstruction of a noisy system driven by data, which is why they are suitable for complex and variable time series forecasting. These are suited for modeling problems which require capturing dependencies and are capable of preserving knowledge as they progress through the subsequent time steps in the data. The authors of [

20] proposed an approach for prediction of solar irradiance using deep recurrent neural networks with the aim of improving model complexity and enabling feature extraction of high-level features. The proposed method showed better performance than the conventional feedforward neural networks and support vector machines. The recurrent neural network (RNN) [

21] architecture is a special type of neural network accounting for data node dependencies by preserving sequential information in an inner state, which allows the persistence of knowledge accrued from subsequent time steps. However, the RNN is prone to vanishing and exploding gradients. This led to the development of RNN variants such as long short-term memory (LSTM) networks [

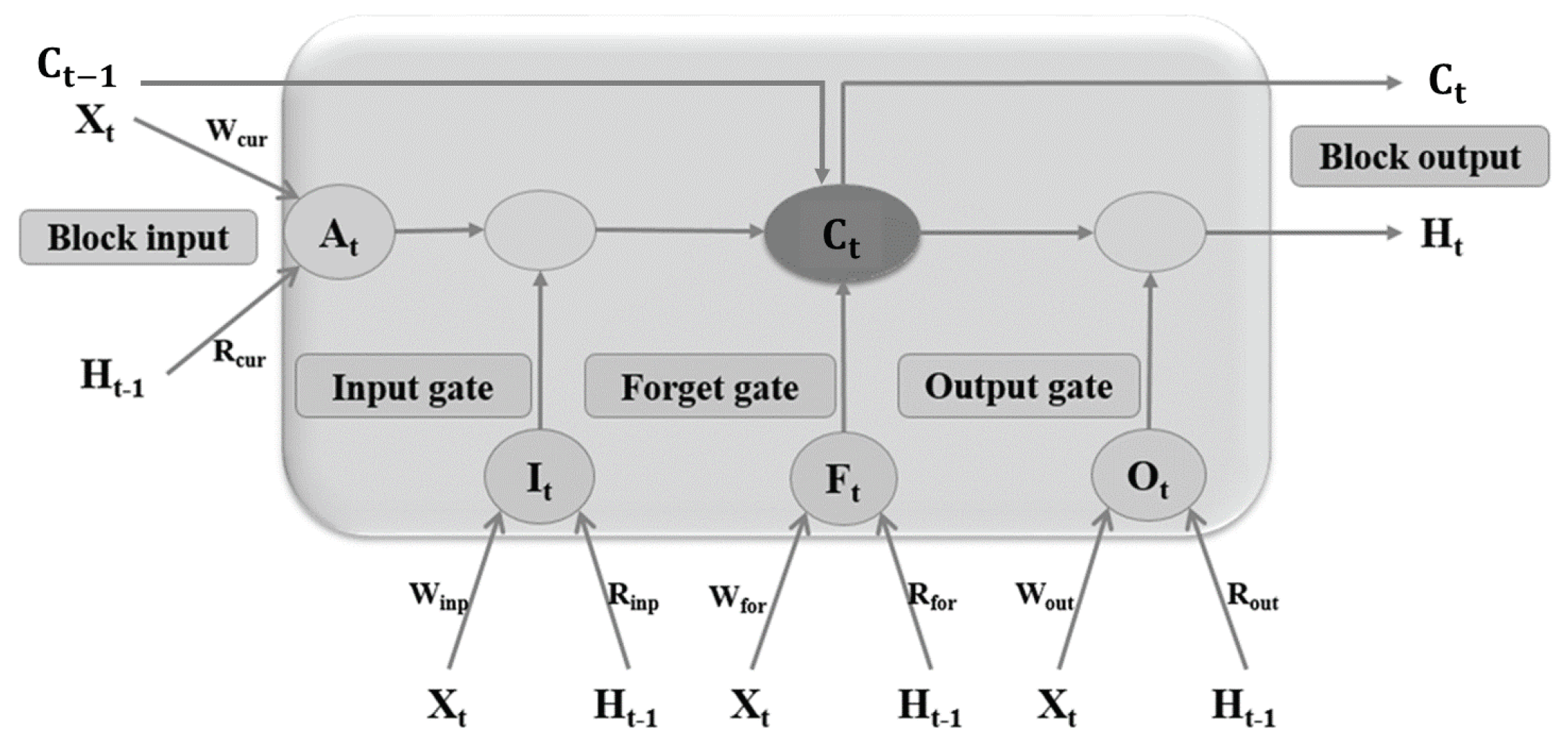

22], bidirectional LSTM and gated recurrent units (GRU) as extensions of the RNN architecture by replacing the conventional perceptron architecture with memory cell and gating mechanisms that regulate information flow across the network. These variants are widely used for the task of solar irradiance forecasting. The authors of [

23] stated that LSTM is a powerful approach for time series forecasting; they used it for day-ahead prediction of solar irradiance. The study proved the LSTM model to be robust; it outperformed other forecast mechanisms such as gradient boosting regression, feedforward neural networks and the persistence model. The authors of [

24] proposed a mechanism for hourly day-ahead prediction of solar irradiance using the weather forecasting data. The proposed model consisting of the LSTM variant was compared to the persistence algorithm, linear least square regression and multilayered feedforward neural networks using a backpropagation algorithm (BPNN) for solar irradiance prediction which resulted in LSTM performing the best among all of the methods. The authors of [

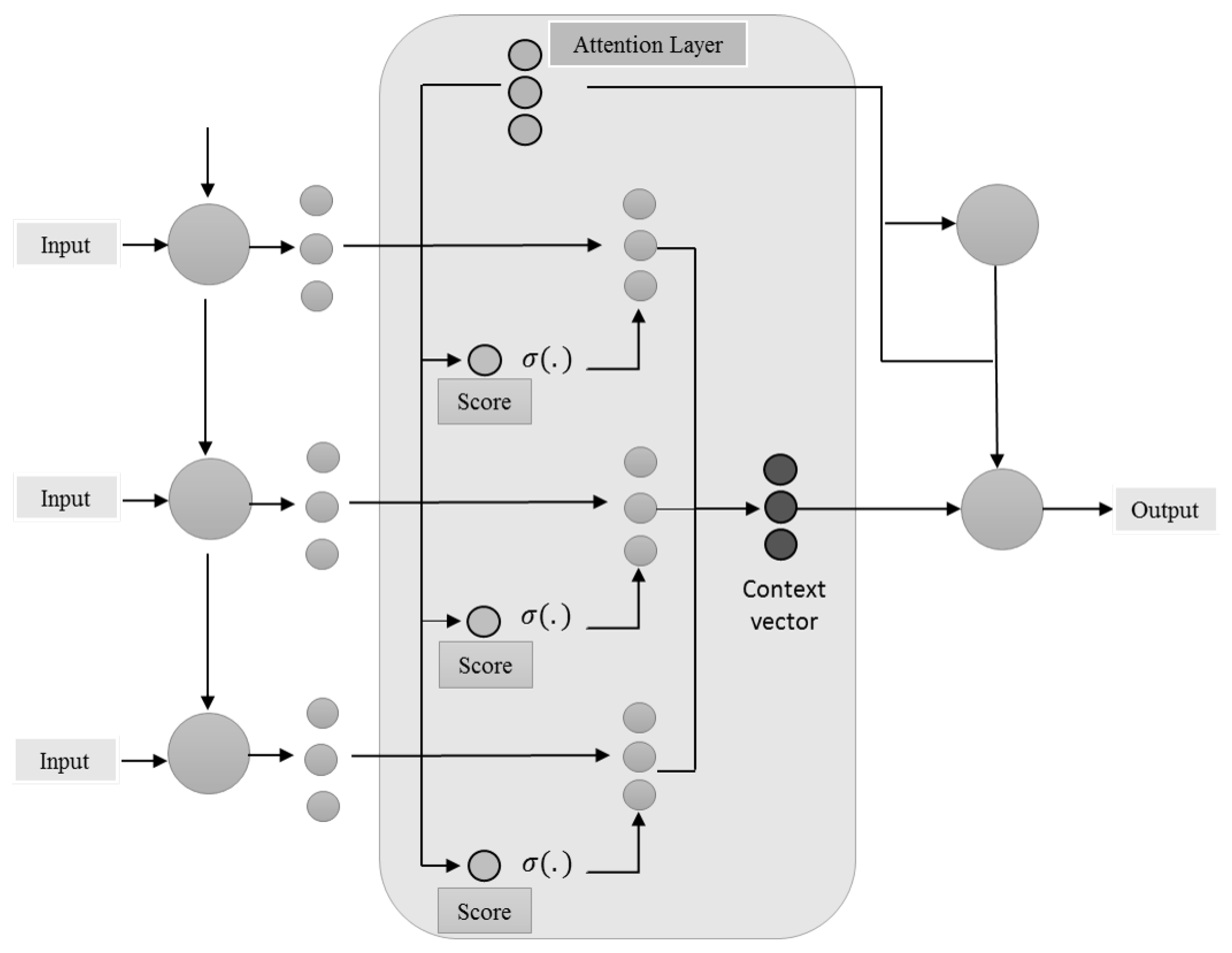

25] developed a least absolute shrinkage and selection operator (LASSO) and LSTM integrated temporal model for solar intensity forecasting which could predict short-term solar intensity with high precision. Furthermore, recurrent neural networks can be divided into two categories based on the type of mechanism they follow, one being the traditional memory-based models and the other being the attention-based ones. Some of the memory-based models are LSTM, GRU, bidirectional RNNs and so on, while some attention-based models are the attention LSTM, self-attention generative adversarial networks and multi-headed LSTM. The memory-based RNNs are the most widely used models for the task of solar irradiance forecasting in literature. Here, we also intend to introduce the attention mechanism for the task of solar irradiance forecasting. For the task of predicting an element, the attention vector is estimated on the strength of its correlation with other elements and then the sum of these values, which are weighted by the attention vector, is taken. The attention mechanism which was originally introduced and used specifically for machine translation has been recently used for time series forecasting in solar energy tasks. The authors of [

26] proposed a temporal attention mechanism for forecasting solar power production from PV plants. The authors of [

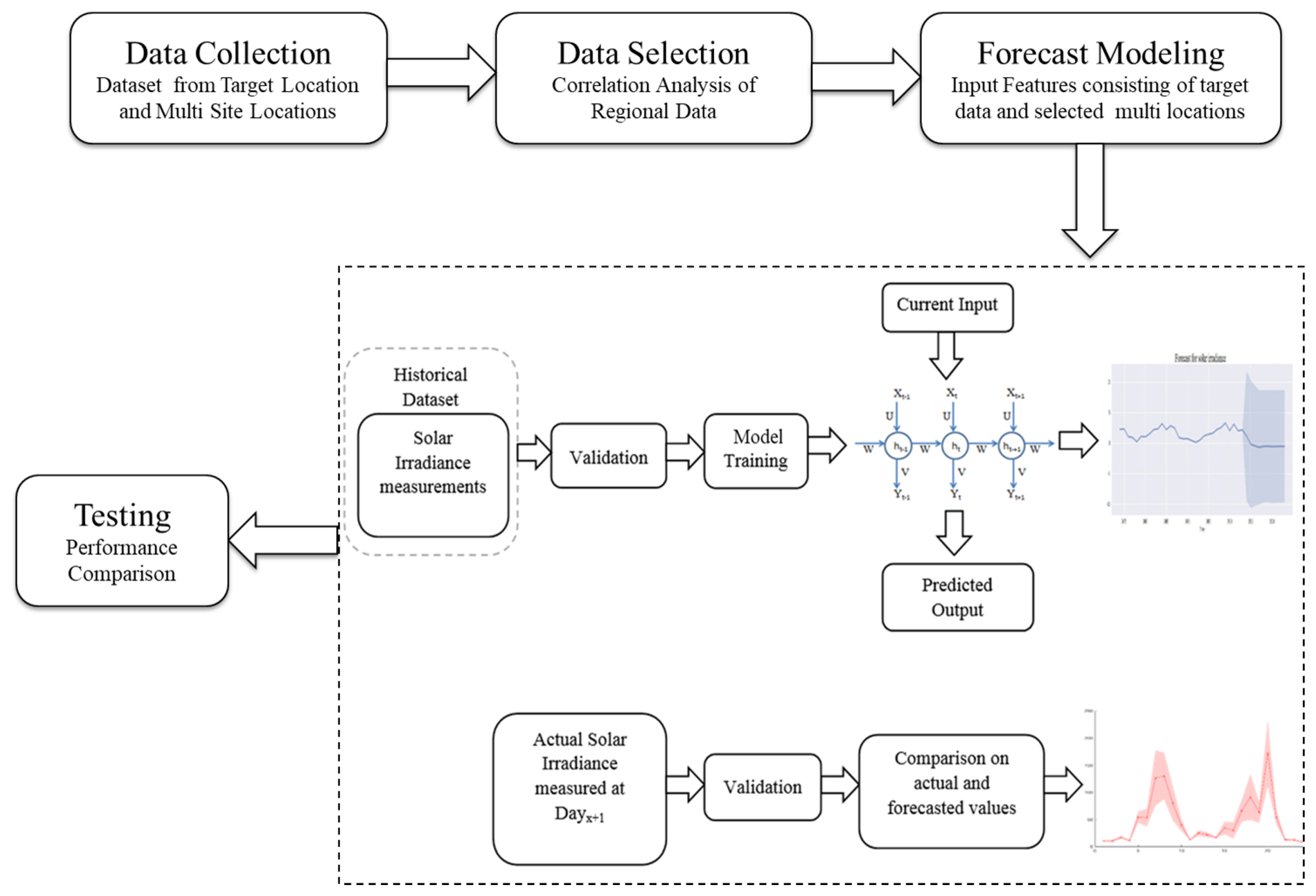

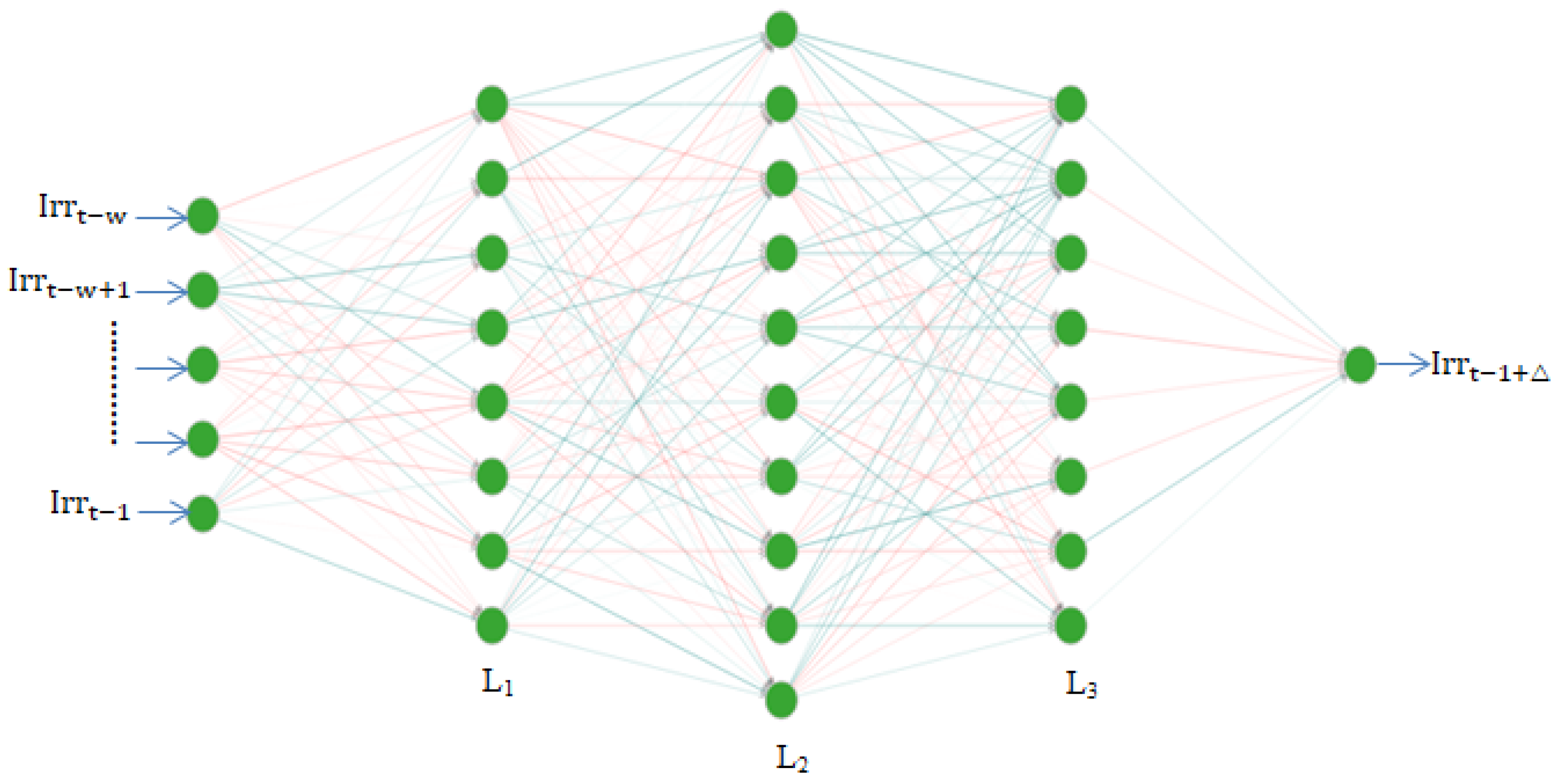

27] improved upon the attention-based architecture of transformers for forecasting solar power production. In previous works, the prediction task was performed on data from a single target location. The data from the surrounding locations were not exploited for predicting future values of target location. Here, along with the target location’s data, the regional data surrounding the target location were also utilized for building the model. This was done in order to exploit the available data of multiple locations and their contribution in forecasting the future value of a particular target location. A thorough study focusing on various memory-based and attention-based deep recurrent neural network mechanisms for solar irradiance forecasting has not been carried out yet. As such, the DNN-based time series models were built on the basis of the multiple-site concept to forecast the daily solar irradiance of two locations in India on data collected from NASA over a period of 36 years.
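To make the multiple-site concept concrete, the following minimal sketch shows one way daily irradiance series from a target location and its surrounding locations could be arranged into supervised samples for day-ahead forecasting. It is an illustration under stated assumptions, not the preprocessing actually used in this work: the function name `make_multisite_windows`, the `lookback` parameter, the site names and the toy values are all hypothetical.

```python
from typing import Dict, List, Tuple


def make_multisite_windows(
    series: Dict[str, List[float]],
    target: str,
    lookback: int,
) -> Tuple[List[List[List[float]]], List[float]]:
    """Build (window, next-day target) pairs from several aligned daily series.

    Each input window has shape lookback x n_sites and holds the past
    `lookback` days for every site; the label is the target site's value
    on the following day. All names here are illustrative assumptions.
    """
    sites = sorted(series)  # fixed, reproducible feature order
    n_days = len(series[target])
    if any(len(series[s]) != n_days for s in sites):
        raise ValueError("all site series must cover the same days")

    inputs, labels = [], []
    for t in range(lookback, n_days):
        # One row per past day, one column per site (target and neighbors).
        window = [[series[s][d] for s in sites] for d in range(t - lookback, t)]
        inputs.append(window)
        labels.append(series[target][t])  # day-ahead value at the target site
    return inputs, labels


# Toy data: made-up daily values for a target site and two neighboring sites.
toy = {
    "target": [5.0, 5.5, 6.0, 5.8, 6.2],
    "neighbor_a": [4.8, 5.4, 5.9, 5.7, 6.0],
    "neighbor_b": [5.1, 5.6, 6.1, 5.9, 6.3],
}
X, y = make_multisite_windows(toy, target="target", lookback=3)
```

Each sample in `X` could then be fed to a recurrent model as a sequence of `lookback` time steps with one feature per site, so the model sees the regional data alongside the target location's own history.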