Automatic Classification System of Arrhythmias Using 12-Lead ECGs with a Deep Neural Network Based on an Attention Mechanism †

Abstract

1. Introduction

2. Related Work

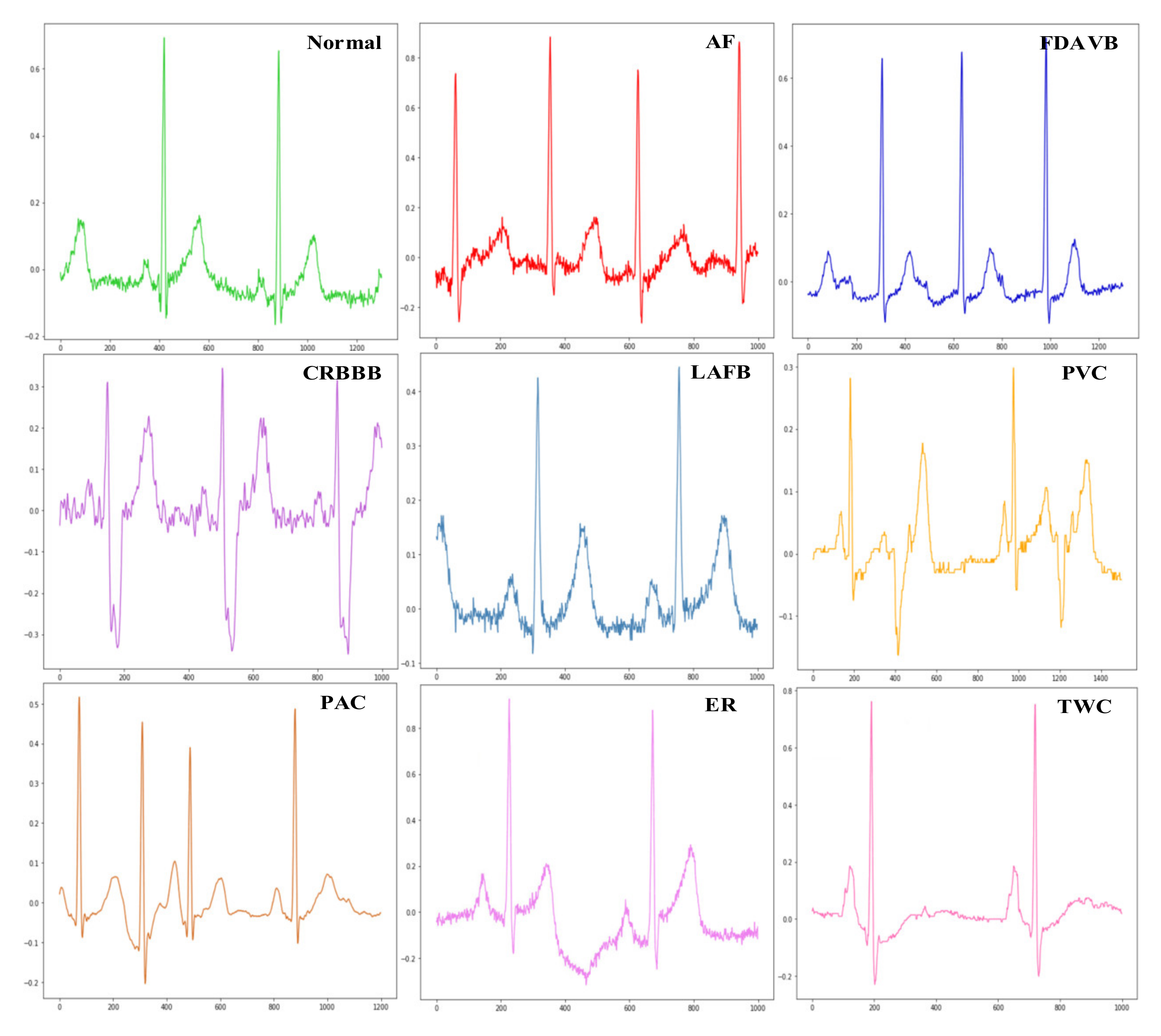

3. Data

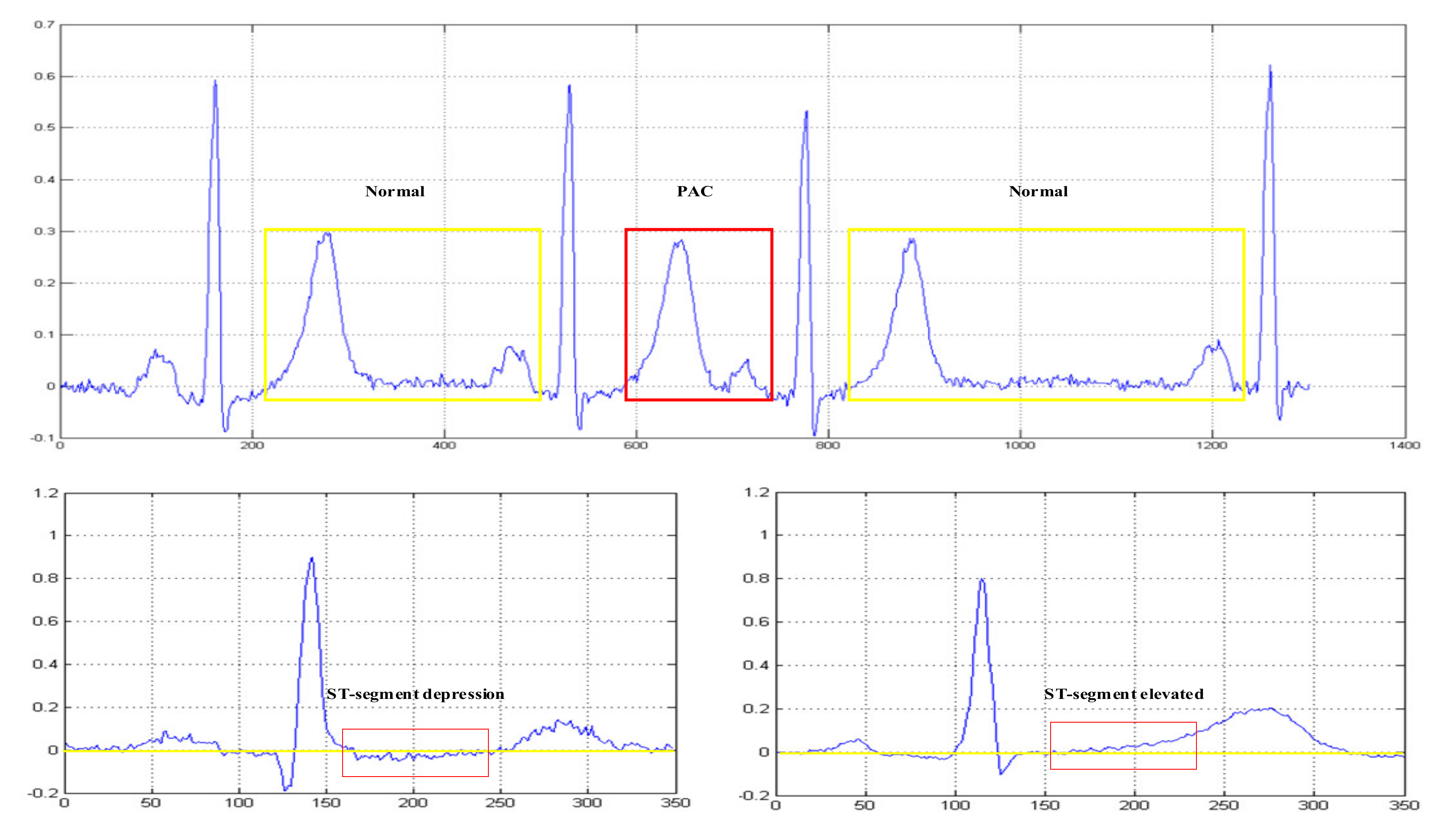

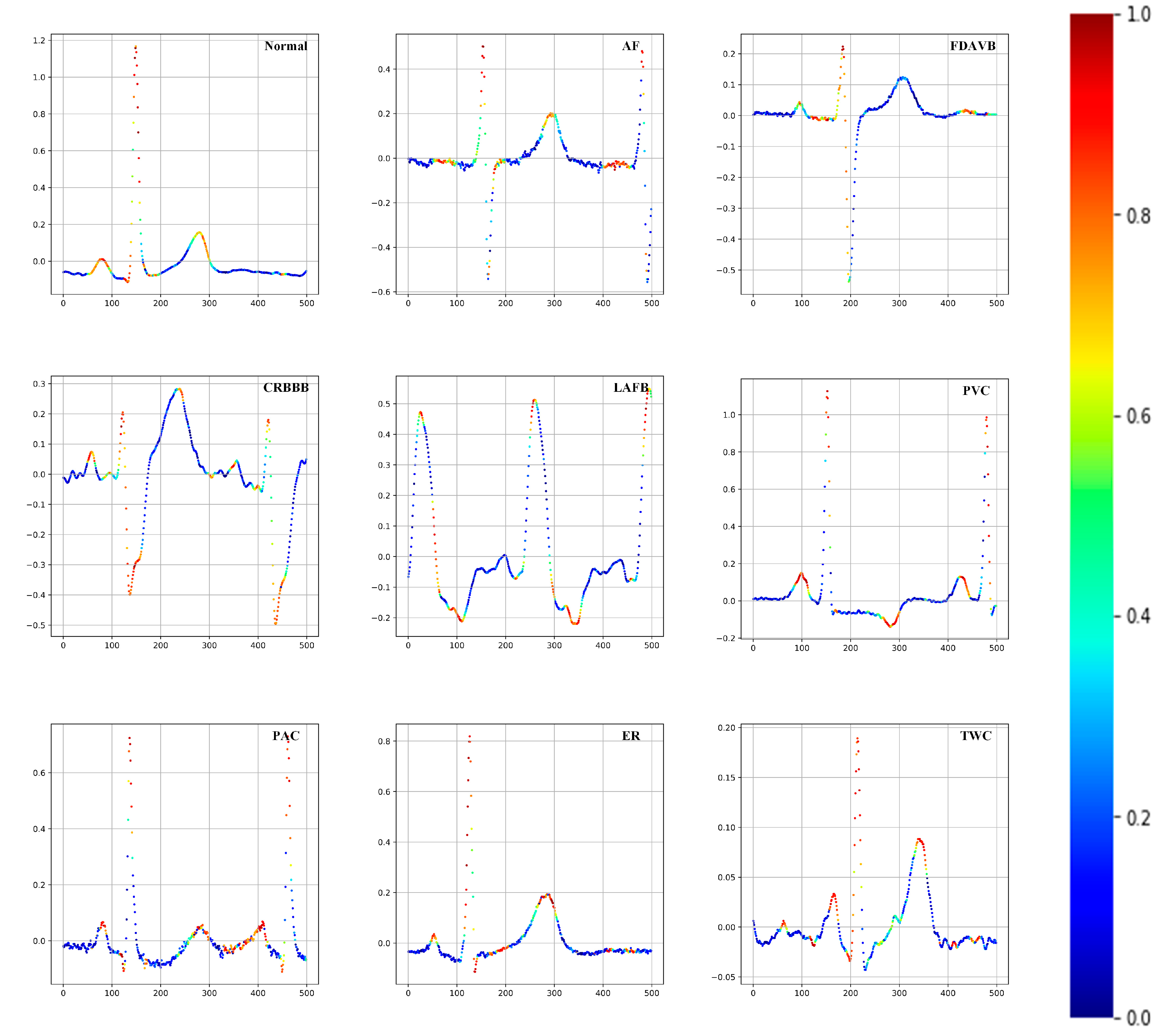

3.1. Data Description

3.2. Data Processing

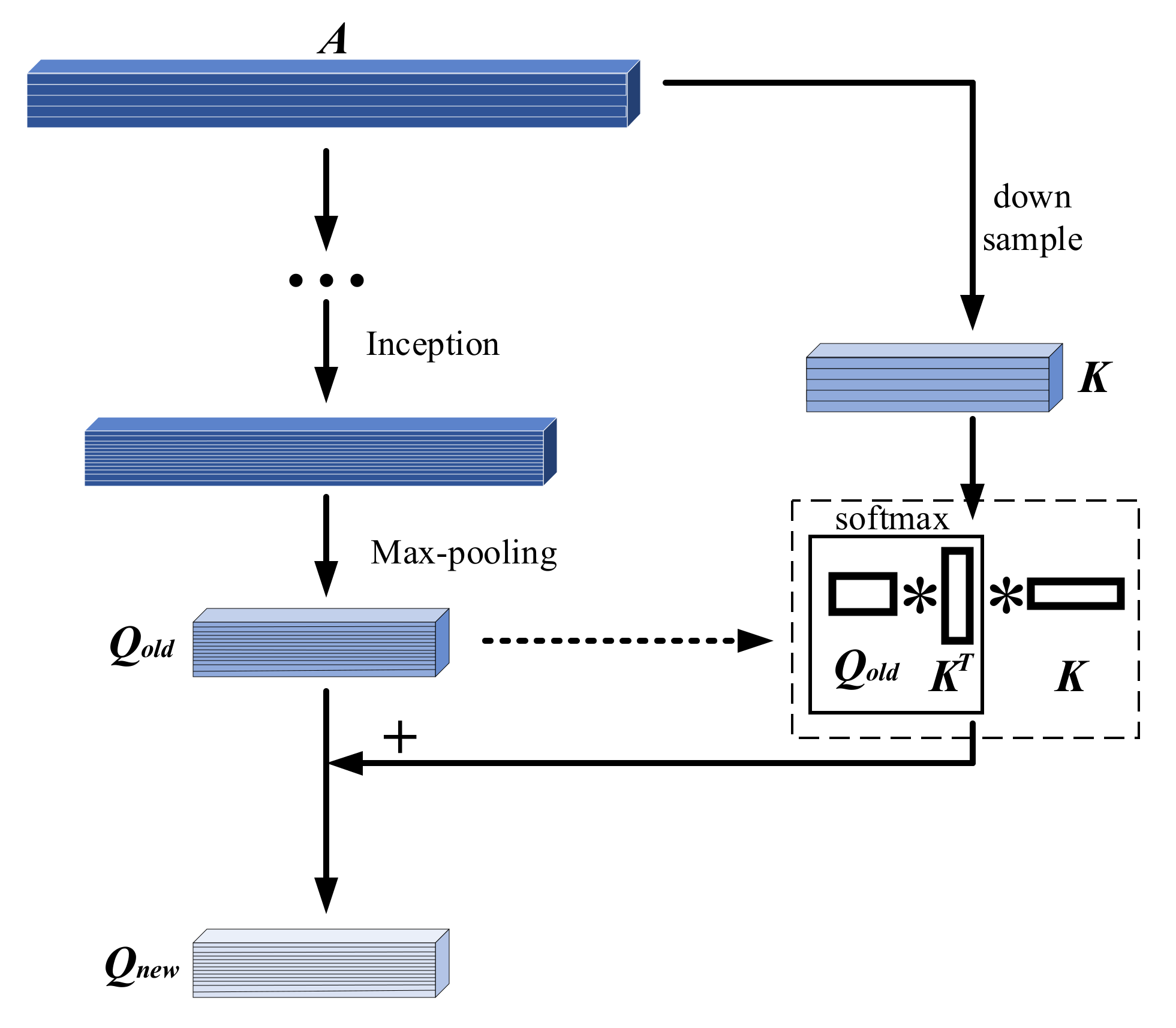

4. Material and Methods

4.1. Inception Module

4.2. Attention Module

4.3. GRU Module

4.4. Other Important Components

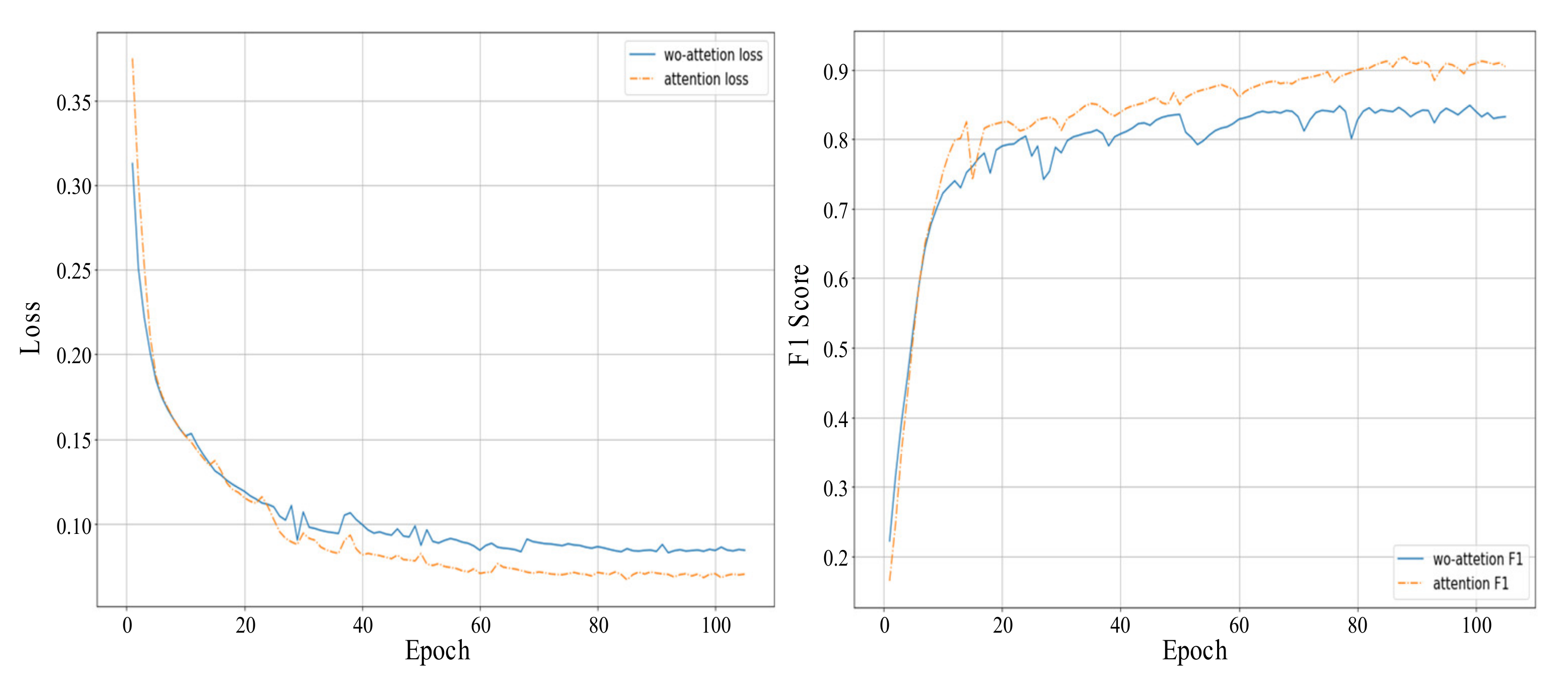

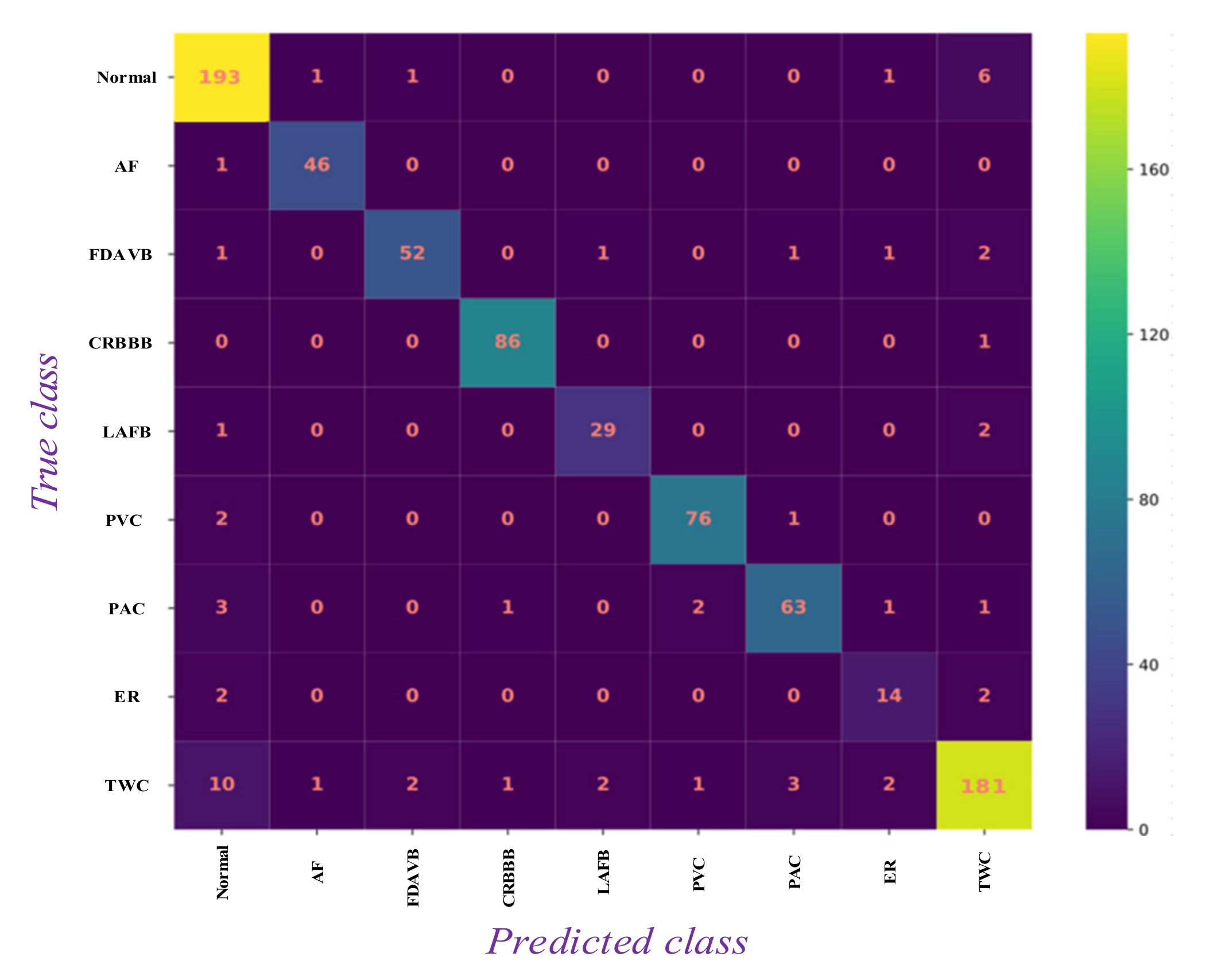

5. Results and Discussion

5.1. Results Analysis

5.2. Model Evaluation

5.3. Comparison with Other Approaches

5.4. Future Work

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Zimetbaum, P.J.; Josephson, M.E. Use of the electrocardiogram in acute myocardial infarction. N. Engl. J. Med. 2003, 348, 933–940.

2. Wübbeler, G.; Stavridis, M.; Kreiseler, D.; Bousseljot, R.-D.; Elster, C. Verification of humans using the electrocardiogram. Pattern Recognit. Lett. 2007, 28, 1172–1175.

3. Yu, S.N.; Chou, K.T. Selection of significant independent components for ECG beat classification. Expert Syst. Appl. 2009, 36, 2088–2096.

4. Slimane, Z.E.H.; Bereksi Reguig, F. New Algorithm for QRS Complex Detection. J. Mech. Med. Biol. 2005, 5, 507–515.

5. De Chazal, P.; O’Dwyer, M.; Reilly, R.B. Automatic classification of heartbeats using ECG morphology and heartbeat interval features. IEEE Trans. Biomed. Eng. 2004, 51, 1196–1206.

6. Clifford, G.D.; Azuaje, F.; McSharry, P. Advanced Methods and Tools for ECG Data Analysis; Artech House: Boston, MA, USA, 2006.

7. D’Angelo, G.; Ficco, M.; Palmieri, F. Malware detection in mobile environments based on Autoencoders and API-images. J. Parallel Distrib. Comput. 2020, 137, 26–33.

8. D’Angelo, G.; Tipaldi, M.; Glielmo, L.; Rampone, S. Spacecraft autonomy modeled via Markov decision process and associative rule-based machine learning. In Proceedings of the 2017 IEEE International Workshop on Metrology for AeroSpace (MetroAeroSpace), Padua, Italy, 21–23 June 2017; pp. 324–329.

9. Hu, J.I. Research on Key Technologies for Automatic Analysis of ECG Signals; National University of Defense Technology: Changsha, China, 2006.

10. Melgani, F.; Bazi, Y. Classification of Electrocardiogram Signals with Support Vector Machines and Particle Swarm Optimization. IEEE Trans. Inf. Technol. Biomed. 2008, 12, 667–677.

11. Kumar, R.G.; Kumaraswamy, Y.S. Investigating cardiac arrhythmia in ECG using random forest classification. Int. J. Comput. Appl. 2012, 37, 31–34.

12. Park, J.; Lee, K.; Kang, K. Arrhythmia detection from heartbeat using k-nearest neighbor classifier. In Proceedings of the 2013 IEEE International Conference on Bioinformatics and Biomedicine, Shanghai, China, 18–21 December 2013; pp. 15–22.

13. Yuzhen, C.; Zengfei, F. Feature search algorithm based on maximum divergence for heart rate classification. J. Biomed. Eng. 2008, 25, 53–56.

14. Ceylan, R.; Özbay, Y. Comparison of FCM, PCA and WT techniques for classification ECG arrhythmias using artificial neural network. Expert Syst. Appl. 2007, 33, 286–295.

15. Kiranyaz, S.; Ince, T.; Gabbouj, M. Real-time patient-specific ECG classification by 1-D convolutional neural networks. IEEE Trans. Biomed. Eng. 2015, 63, 664–675.

16. Warrick, P.; Homsi, M.N. Cardiac arrhythmia detection from ECG combining convolutional and long short-term memory networks. In Proceedings of the 2017 Computing in Cardiology (CinC), Rennes, France, 24–27 September 2017; pp. 1–4.

17. Übeyli, E.D. Recurrent neural networks with composite features for detection of electrocardiographic changes in partial epileptic patients. Comput. Biol. Med. 2008, 38, 401–410.

18. Oh, S.L.; Ng, E.-Y.-K.; Tan, R.S.; Acharya, U.R. Automated diagnosis of arrhythmia using combination of CNN and LSTM techniques with variable length heart beats. Comput. Biol. Med. 2018, 102, 278–287.

19. Ji, Y.; Zhang, S.; Xiao, W. Electrocardiogram Classification Based on Faster Regions with Convolutional Neural Network. Sensors 2019, 19, 2558.

20. Johnson, A.E.W.; Pollard, T.J.; Shen, L.; Lehman, L.H.; Feng, M.; Ghassemi, M.; Moody, B.; Szolovits, P.; Celi, L.A.; Mark, R.G. MIMIC-III, a freely accessible critical care database. Sci. Data 2016, 3, 160035.

21. Goldberger, A.L.; Amaral, L.A.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a New Research Resource for Complex Physiologic Signals. Circulation 2000, 101, e215–e220.

22. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

23. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

24. Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual attention network for image classification. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3156–3164.

25. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems 30; Curran Associates, Inc.: Red Hook, NY, USA, 2017; pp. 5998–6008.

26. Greff, K.; Srivastava, R.K.; Koutnik, J.; Steunebrink, B.R.; Schmidhuber, J. LSTM: A search space odyssey. IEEE Trans. Neural Networks Learn. Syst. 2016, 28, 2222–2232.

27. Dey, R.; Salem, F.M. Gate-variants of gated recurrent unit (GRU) neural networks. In Proceedings of the 2017 IEEE 60th International Midwest Symposium on Circuits and Systems (MWSCAS), Boston, MA, USA, 6–9 August 2017; pp. 1597–1600.

28. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

29. Zubair, M.; Kim, J.; Yoon, C. An automated ECG beat classification system using convolutional neural networks. In Proceedings of the 2016 IEEE 6th International Conference on IT Convergence and Security (ICITCS), Prague, Czech Republic, 26–26 September 2016; pp. 1–5.

30. Prasad, H.; Martis, R.J.; Acharya, U.R.; Min, L.C.; Suri, J.S. Application of higher order spectra for accurate delineation of atrial arrhythmia. In Proceedings of the 2013 IEEE 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 57–60.

31. Hannun, A.Y.; Rajpurkar, P.; Haghpanahi, M.; Tison, G.H.; Bourn, C.; Turakhia, M.P.; Ng, A.Y. Cardiologist-level arrhythmia detection and classification in ambulatory electrocardiograms using a deep neural network. Nat. Med. 2019, 25, 65.

| Type | Trainset | Valset | Testset1 | Testset2 | Testset3 |
|---|---|---|---|---|---|
| Normal | 1752 | 202 | 173 | 1814 | 231 |
| AF | 455 | 47 | 41 | 506 | 41 |
| FDAVB | 479 | 58 | 42 | 571 | 45 |
| CRBBB | 738 | 87 | 66 | 842 | 50 |
| LAFB | 147 | 32 | 30 | 201 | 30 |
| PVC | 574 | 79 | 64 | 656 | 65 |
| PAC | 600 | 71 | 53 | 688 | 72 |
| ER | 196 | 18 | 11 | 317 | 12 |
| TWC | 1937 | 203 | 171 | 2273 | 164 |
| Total | 5850 | 650 | 500 | 6500 | 500 |
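The Trainset and Valset columns hold 5,850 and 650 records, a 9:1 split of 6,500 records. The per-class rows also sum to more than the Total row (6,878 class labels across the 5,850 training records, for instance), which suggests that some recordings carry more than one label. The minimal sketch below shows one way to draw a reproducible 9:1 split of record IDs. The helper `split_train_val`, its seed, and the plain random shuffle are assumptions for illustration only; the source does not describe how its split was drawn, and a multi-label stratified split would be a natural refinement.

```python
import random


def split_train_val(record_ids, val_fraction=0.1, seed=0):
    """Shuffle record IDs with a fixed seed and hold out a fraction for validation.

    Hypothetical helper: the 10% fraction is inferred from the table
    (650 of 6,500 records); the source does not say how its split was drawn.
    This is a plain random split, not a (multi-label) stratified one.
    """
    ids = list(record_ids)
    rng = random.Random(seed)  # local generator so the split is reproducible
    rng.shuffle(ids)
    n_val = round(len(ids) * val_fraction)
    return ids[n_val:], ids[:n_val]  # (train IDs, validation IDs)


train_ids, val_ids = split_train_val(range(6500))
print(len(train_ids), len(val_ids))  # 5850 650
```

Because the generator is local and seeded, the same seed always yields the same partition without touching Python's global random state.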

| Layer | Type | Kernel Size/Stride | Output Size | Branch 1 | Branch 2 | Branch 3 | Branch 4 |
|---|---|---|---|---|---|---|---|
| 0 | Input | | 8192 × 12 | | | | |
| 1 | Convolution | 7 × 1/2 | 4096 × 64 | | | | |
| 2 | Max-pooling | 3 × 1/2 | 2048 × 64 | | | | |
| 3 | Convolution | 3 × 1/1 | 2048 × 192 | | | | |
| 4 | Max-pooling | 3 × 1/2 | 1024 × 192 | | | | |
| 5 | Inception | | 1024 × 256 | 64 | 128 | 32 | 32 |
| 6 | Inception | | 1024 × 480 | 128 | 192 | 96 | 64 |
| 7 | Max-pooling | 3 × 1/2 | 512 × 480 | | | | |
| 8 | Inception | | 512 × 512 | 192 | 208 | 48 | 64 |
| 9 | Inception | | 512 × 512 | 160 | 224 | 64 | 64 |
| 10 | Inception | | 512 × 512 | 128 | 256 | 64 | 64 |
| 11 | Inception | | 512 × 528 | 112 | 288 | 64 | 64 |
| 12 | Inception | | 512 × 832 | 256 | 320 | 128 | 128 |
| 13 | Max-pooling | 3 × 1/2 | 256 × 832 | | | | |
| 14 | Inception | | 256 × 832 | 256 | 320 | 128 | 128 |
| 15 | Inception | | 256 × 1024 | 384 | 384 | 128 | 128 |
| 16 | Avg-pooling | 3 × 1/2 | 128 × 1024 | | | | |
| 17 | GRU | | 128 × 2048 | | | | |
| 18 | GRU | | 128 × 512 | | | | |
| 19 | Linear + Dropout (40%) + Softmax | | 1 × 9 | | | | |
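The Output Size column can be checked by walking the layer table row by row. The minimal sketch below assumes "same" padding, so a layer with stride s maps a sequence length L to ceil(L / s), and assumes each Inception module concatenates its four branches along the channel axis, so its output width is the sum of the Branch 1 to Branch 4 entries (for layer 5, 64 + 128 + 32 + 32 = 256). The `LAYERS` encoding and the `trace_shapes` helper are hypothetical and cover layers 1 to 16 only; the recurrent and linear layers are left out.

```python
import math

# Hypothetical encoding of layers 1-16: (kind, stride, output channels or branch widths).
# Assumes "same" padding, so a stride-s layer maps length L to ceil(L / s).
LAYERS = [
    ("conv", 2, 64),
    ("maxpool", 2, None),
    ("conv", 1, 192),
    ("maxpool", 2, None),
    ("inception", 1, (64, 128, 32, 32)),
    ("inception", 1, (128, 192, 96, 64)),
    ("maxpool", 2, None),
    ("inception", 1, (192, 208, 48, 64)),
    ("inception", 1, (160, 224, 64, 64)),
    ("inception", 1, (128, 256, 64, 64)),
    ("inception", 1, (112, 288, 64, 64)),
    ("inception", 1, (256, 320, 128, 128)),
    ("maxpool", 2, None),
    ("inception", 1, (256, 320, 128, 128)),
    ("inception", 1, (384, 384, 128, 128)),
    ("avgpool", 2, None),
]


def trace_shapes(length, channels, layers):
    """Return the (length, channels) pair produced by each layer in turn."""
    shapes = []
    for kind, stride, spec in layers:
        length = math.ceil(length / stride)
        if kind == "conv":
            channels = spec
        elif kind == "inception":
            channels = sum(spec)  # branch outputs concatenated along channels
        # pooling layers leave the channel count unchanged
        shapes.append((length, channels))
    return shapes


shapes = trace_shapes(8192, 12, LAYERS)
print(shapes[-1])  # (128, 1024)
```

Every intermediate pair matches the Output Size column, which is a quick way to catch transcription errors when re-implementing the table.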

| Type | Length 1024 | Length 2048 | Length 4096 | Length 8192 | Length 16,384 | Length 32,768 |
|---|---|---|---|---|---|---|
| Normal | 0.842 | 0.883 | 0.895 | 0.930 | 0.875 | 0.872 |
| AF | 0.931 | 0.968 | 0.971 | 0.968 | 0.953 | 0.953 |
| FDAVB | 0.754 | 0.864 | 0.877 | 0.920 | 0.852 | 0.844 |
| CRBBB | 0.937 | 0.971 | 1.000 | 0.983 | 0.973 | 0.962 |
| LAFB | 0.732 | 0.865 | 0.922 | 0.906 | 0.884 | 0.853 |
| PVC | 0.714 | 0.884 | 0.932 | 0.962 | 0.921 | 0.906 |
| PAC | 0.665 | 0.822 | 0.868 | 0.906 | 0.863 | 0.845 |
| ER | 0.381 | 0.684 | 0.684 | 0.757 | 0.702 | 0.702 |
| TWC | 0.704 | 0.852 | 0.881 | 0.904 | 0.875 | 0.841 |
| Average | 0.740 | 0.866 | 0.892 | 0.915 | 0.878 | 0.864 |
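The Average row is the unweighted (macro) mean of the nine per-class scores in each column, so every rhythm class counts equally regardless of how many records it has. The 8192 column coincides with the Set1 F1 scores in the per-class metrics table, which suggests these are F1 scores. The sketch below recomputes each column's average from the table values and picks the best input length; the names `LENGTHS`, `SCORES`, and `macro_average` are illustrative only.

```python
# Per-class scores copied from the input-length table (one entry per length).
LENGTHS = [1024, 2048, 4096, 8192, 16384, 32768]
SCORES = {
    "Normal": [0.842, 0.883, 0.895, 0.930, 0.875, 0.872],
    "AF":     [0.931, 0.968, 0.971, 0.968, 0.953, 0.953],
    "FDAVB":  [0.754, 0.864, 0.877, 0.920, 0.852, 0.844],
    "CRBBB":  [0.937, 0.971, 1.000, 0.983, 0.973, 0.962],
    "LAFB":   [0.732, 0.865, 0.922, 0.906, 0.884, 0.853],
    "PVC":    [0.714, 0.884, 0.932, 0.962, 0.921, 0.906],
    "PAC":    [0.665, 0.822, 0.868, 0.906, 0.863, 0.845],
    "ER":     [0.381, 0.684, 0.684, 0.757, 0.702, 0.702],
    "TWC":    [0.704, 0.852, 0.881, 0.904, 0.875, 0.841],
}


def macro_average(scores_by_class, column):
    """Unweighted mean over classes for one column of the table."""
    values = [scores[column] for scores in scores_by_class.values()]
    return sum(values) / len(values)


averages = {n: round(macro_average(SCORES, i), 3) for i, n in enumerate(LENGTHS)}
best = max(averages, key=averages.get)
print(best)  # 8192
```

The recomputed averages reproduce the table's Average row, and the rare ER class (0.381 at length 1024) pulls the short-input average down the most.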

| Type | Unidirectional GRU | Bidirectional GRU | Unidirectional LSTM | Bidirectional LSTM |
|---|---|---|---|---|
| Normal | 0.930 | 0.891 | 0.891 | 0.874 |
| AF | 0.968 | 0.957 | 0.968 | 0.957 |
| FDAVB | 0.920 | 0.915 | 0.888 | 0.901 |
| CRBBB | 0.983 | 0.983 | 0.977 | 0.973 |
| LAFB | 0.906 | 0.922 | 0.906 | 0.884 |
| PVC | 0.962 | 0.949 | 0.943 | 0.943 |
| PAC | 0.906 | 0.915 | 0.901 | 0.904 |
| ER | 0.757 | 0.722 | 0.684 | 0.684 |
| TWC | 0.904 | 0.887 | 0.875 | 0.862 |
| Average | 0.915 | 0.905 | 0.893 | 0.887 |
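One practical difference between the recurrent variants compared in this table is size. A GRU layer has three weight blocks (update gate, reset gate, candidate state) while an LSTM layer has four (input, forget and output gates plus the cell candidate), so at equal input and hidden sizes a GRU carries three quarters of the parameters. The sketch below counts weights and biases for one unidirectional layer, assuming one bias vector per block and reading layer 17's sizes (1024 input features, 2048 hidden units) from the architecture table; both are assumptions, labeled in the code. This is a size comparison only and does not by itself explain the score differences above.

```python
def gated_rnn_params(input_size, hidden_size, num_blocks):
    """Weights + biases of one unidirectional gated RNN layer.

    Each block has an input matrix (hidden x input), a recurrent matrix
    (hidden x hidden) and one bias vector (hidden). Assumption: a single
    bias per block; PyTorch's nn.GRU / nn.LSTM keep two (input and
    recurrent), which adds another num_blocks * hidden_size parameters.
    """
    per_block = hidden_size * (input_size + hidden_size) + hidden_size
    return num_blocks * per_block


GRU_BLOCKS = 3   # update gate, reset gate, candidate state
LSTM_BLOCKS = 4  # input, forget, output gates, cell candidate

# Hypothetical sizes read off layer 17 of the table: 1024 -> 2048 features.
gru = gated_rnn_params(1024, 2048, GRU_BLOCKS)
lstm = gated_rnn_params(1024, 2048, LSTM_BLOCKS)
print(gru, lstm, gru / lstm)  # 18880512 25174016 0.75
```

A bidirectional layer doubles either count, since it runs two independent sets of blocks over the sequence.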

| Type | Precision (PPV) Set1 | Precision (PPV) Set2 | Precision (PPV) Set3 | Precision (PPV) Set4 | Recall (Sensitivity) Set1 | Recall (Sensitivity) Set2 | Recall (Sensitivity) Set3 | Recall (Sensitivity) Set4 | Specificity Set1 | Specificity Set2 | Specificity Set3 | Specificity Set4 | F1 Score Set1 | F1 Score Set2 | F1 Score Set3 | F1 Score Set4 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Normal | 0.906 | 0.921 | 0.861 | 0.883 | 0.955 | 0.949 | 0.914 | 0.935 | 0.955 | 0.955 | 0.942 | 0.951 | 0.930 | 0.935 | 0.887 | 0.908 |
| AF | 0.958 | 0.979 | 0.962 | 0.976 | 0.978 | 0.987 | 0.983 | 1.000 | 0.995 | 0.997 | 0.996 | 0.997 | 0.968 | 0.983 | 0.972 | 0.987 |
| FDAVB | 0.945 | 0.939 | 0.933 | 0.933 | 0.897 | 0.886 | 0.891 | 0.889 | 0.996 | 0.995 | 0.995 | 0.995 | 0.920 | 0.912 | 0.912 | 0.910 |
| CRBBB | 0.977 | 1.000 | 0.975 | 0.980 | 0.989 | 1.000 | 0.988 | 0.980 | 0.995 | 1.000 | 0.996 | 0.996 | 0.983 | 1.000 | 0.981 | 0.980 |
| LAFB | 0.906 | 0.900 | 0.872 | 0.900 | 0.906 | 0.871 | 0.838 | 0.844 | 0.995 | 0.993 | 0.987 | 0.991 | 0.906 | 0.885 | 0.852 | 0.871 |
| PVC | 0.962 | 0.974 | 0.967 | 0.969 | 0.962 | 0.974 | 0.956 | 0.969 | 0.995 | 0.996 | 0.995 | 0.995 | 0.962 | 0.974 | 0.961 | 0.969 |
| PAC | 0.926 | 0.909 | 0.914 | 0.917 | 0.887 | 0.896 | 0.879 | 0.889 | 0.991 | 0.992 | 0.990 | 0.991 | 0.906 | 0.902 | 0.896 | 0.903 |
| ER | 0.737 | 0.750 | 0.672 | 0.692 | 0.778 | 0.818 | 0.688 | 0.750 | 0.992 | 0.992 | 0.974 | 0.983 | 0.757 | 0.783 | 0.680 | 0.720 |
| TWC | 0.914 | 0.906 | 0.841 | 0.884 | 0.892 | 0.884 | 0.823 | 0.854 | 0.969 | 0.961 | 0.953 | 0.959 | 0.904 | 0.895 | 0.832 | 0.869 |
| Average | 0.914 | 0.920 | 0.889 | 0.904 | 0.916 | 0.918 | 0.884 | 0.901 | 0.987 | 0.987 | 0.981 | 0.984 | 0.915 | 0.919 | 0.886 | 0.902 |
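Per-class metrics such as these are computed one-vs-rest: for a given class, records of that class are the positives and all other classes are pooled as negatives. The sketch below derives precision (PPV), recall (sensitivity), specificity, and F1 for one class from a multi-class confusion matrix, assuming one predicted label per record, and checks that F1 = 2PR / (P + R) reproduces the Set1 Normal entry (precision 0.906 and recall 0.955 give 0.930). The function names are hypothetical.

```python
def one_vs_rest_metrics(cm, k):
    """Precision, recall (sensitivity), specificity and F1 for class k.

    cm[i][j] counts records whose true class is i and predicted class is j.
    Assumes single-label predictions; all classes other than k are negatives.
    """
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fp = sum(row[k] for row in cm) - tp  # predicted k, truly another class
    fn = sum(cm[k]) - tp                 # truly k, predicted another class
    tn = total - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1 = f1_from(precision, recall)
    return precision, recall, specificity, f1


def f1_from(precision, recall):
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Consistency check against the Normal row, Set1.
print(round(f1_from(0.906, 0.955), 3))  # 0.93
```

Specificity stays high for every class in the table largely because each class's negatives include all the other eight classes, so true negatives dominate the denominator.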

| Author | Method | Acc (%) | Sen (%) | Spe (%) |
|---|---|---|---|---|
| Proposed | Inception + GRU + Attention | 92.84 | 90.12 | 98.41 |
| Melgani et al. [10] | SVM | 85.72 | 84.29 | 89.61 |
| Kumar et al. [11] | Random Forest | 87.14 | 87.23 | 91.97 |
| Oh et al. [18] | CNN + LSTM | 92.10 | 90.50 | 97.42 |
| Ji et al. [19] | Faster R-CNN | 93.25 | 91.16 | 98.23 |
| Zubair et al. [29] | CNN | 90.40 | 87.75 | 94.42 |
| Prasad et al. [30] | K-NN | 86.45 | 85.11 | 91.36 |
| Hannun et al. [31] | Residual Block | 91.19 | 89.96 | 96.56 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, D.; Wu, H.; Zhao, J.; Tao, Y.; Fu, J. Automatic Classification System of Arrhythmias Using 12-Lead ECGs with a Deep Neural Network Based on an Attention Mechanism. Symmetry 2020, 12, 1827. https://doi.org/10.3390/sym12111827
