1. Introduction

In recent years, virtual reality (VR) has become popular in various applications as a result of the development of low-priced, high-performance VR devices (e.g., Oculus Rift and HTC Vive Pro VR headsets) and powerful software platforms (e.g., Unity). Such applications include driving simulations [1], training in mining industries [2], fire drills [3], and entertainment [4,5]. It is expected that VR-ready applications will expand rapidly in the future.

A sense of immersion in the virtual world currently relies mainly on vision, but systems should allow users to perform different types of interactions in various ways so that they can operate them with minimal effort and more enjoyment. Kinect [3,6,7], Oculus Touch [8], and Vive Tracker [9,10] are examples that have achieved this. However, these devices require a large space free of obstacles, which is not always available, for example inside vehicles.

We investigated the effectiveness of head movement as a possible interaction technique for controlling machines or systems in VR. The scenario we consider in this study is a driving simulation, as it has been widely used in VR games, but a head movement interface is not limited to this application. Head movement was captured by a head-mounted display (HMD) in our trial. Although motion capture devices that can detect head position, such as Kinect, are available, it is reasonable to use an HMD equipped with motion sensors for capturing head movement.

The idea of adopting a head movement interface comes from the observation that the user cannot help but sway whenever his/her view on an HMD changes, due to the immersive nature of VR. The technique of utilizing the user’s unconscious behavior in the interaction provides a new and entertaining opportunity. In the future, it might also serve as an assistive technology in the real world, offering satisfactory machine control and enabling the user to feel connected to and in perfect harmony with the machine. The purpose of this research is to conduct basic studies into unconscious interaction techniques, and the feasibility of applying head movement as an interaction technique in a practical context is left for future work.

Before performing the main experiment involving head movement as a means for steering the vehicle, we first analyzed the type of head movement associated with the driver’s steering behavior in the lateral direction in VR. Experimental results demonstrated that movements in the three axes of x, yaw, and roll were reasonable for the steering control. We then implemented a simulator in which the participants could control steering using head movement and examined its effectiveness in VR in terms of usability, realistic motion, and VR sickness. Although the head movement interface is usually used in conjunction with other typical interfaces (e.g., a steering wheel and a joystick) in practice, it is worth evaluating the competency of the head movement interaction technique independently.

The remainder of this paper is organized as follows. In Section 2, works related to the present study are described. The preliminary experiment and main experiment that we performed in our study are explained in Section 3 and Section 4, respectively. A discussion of the experiments is presented in Section 5. Finally, Section 6 concludes the present work.

2. Related Works

There are several previous studies on VR-related interfaces. One promising approach is the gaze interface because it can be assumed that the user turns his/her eyes on a person or item to which he/she pays attention. There is a strong possibility of identifying the target object by detecting the position and direction of the HMD the user wears. In [11], the authors explore the usability and psychological factors of VR applications with a gaze interface installed. Researchers have also tried to increase the user interaction with a VR system by incorporating hand gestures and/or controller-based interfaces. In [12], the authors analyzed the effects of hand gestures on the gaze interface in a VR system. Kharoub et al. [13] conducted an experiment on the usability and user experience of controller-based and gestural interfaces in a VR 3D desktop environment. While most of the existing trials were designed for selecting specific objects in the VR space, we are interested in interface techniques for control of the movement of a machine.

Regarding head movements, Hachaj et al. [14] studied the recognition and classification of seven head gestures, which included clockwise/counterclockwise rotation and nodding, by using machine learning techniques. In addition, previous studies have investigated controlling a robot [15] and a wheelchair by the movement of the head for people with tetraplegia [16,17,18]. Most of the studies adopting head movement as an interface technique have been undertaken in real-world situations, but we believe that head movement is applicable to VR.

Studies on VR sickness and motion sickness are important as they influence the feasibility of VR systems. Tanaka et al. [19] studied an optimal value search system that efficiently calculates the angular velocity and viewing angle that limit VR sickness without affecting the sense of presence. In addition, there have been studies on motion sickness when vehicles are travelling [20]. It is known that passengers need to reduce the tilting angle of their head towards the lateral acceleration direction to help relieve the symptoms. Models of occupants’ head movement in response to lateral acceleration have been presented [21,22,23,24]. Furthermore, [25] discussed design issues connected with (car) sickness in anticipation of the autonomous vehicle era. In addition, [26] proposed a new metric of visually induced motion sickness in the context of navigation in virtual environments.

Prior to this research, we investigated the influence of driving-related factors in VR driving simulations, focusing especially on drivers’ avoidance behavior [27]. The results confirmed that the avoidance timing and the vehicle position are affected by various setting conditions, which include vehicle speed, width, location of the steering wheel, traffic lane line, and lane change patterns.

3. Preliminary Experiment

It is known that drivers tilt their head toward the center of a curve [23,24]. This reaction helps reduce motion sickness. However, we wondered whether head movement in other directions is correlated with the vehicle’s lateral movement when changing lanes. In addition, it is worth investigating whether head movement can improve steering control. Our hypotheses associated with these questions were:

- (1) Besides roll rotation, there are other head movement options that are related to lateral movement of the vehicle, likely yaw rotation.

- (2) Head movement can be of assistance in steering control.

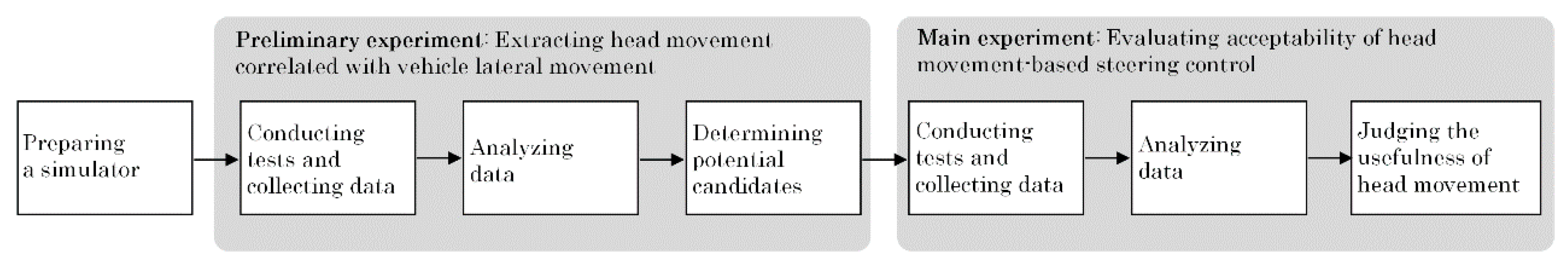

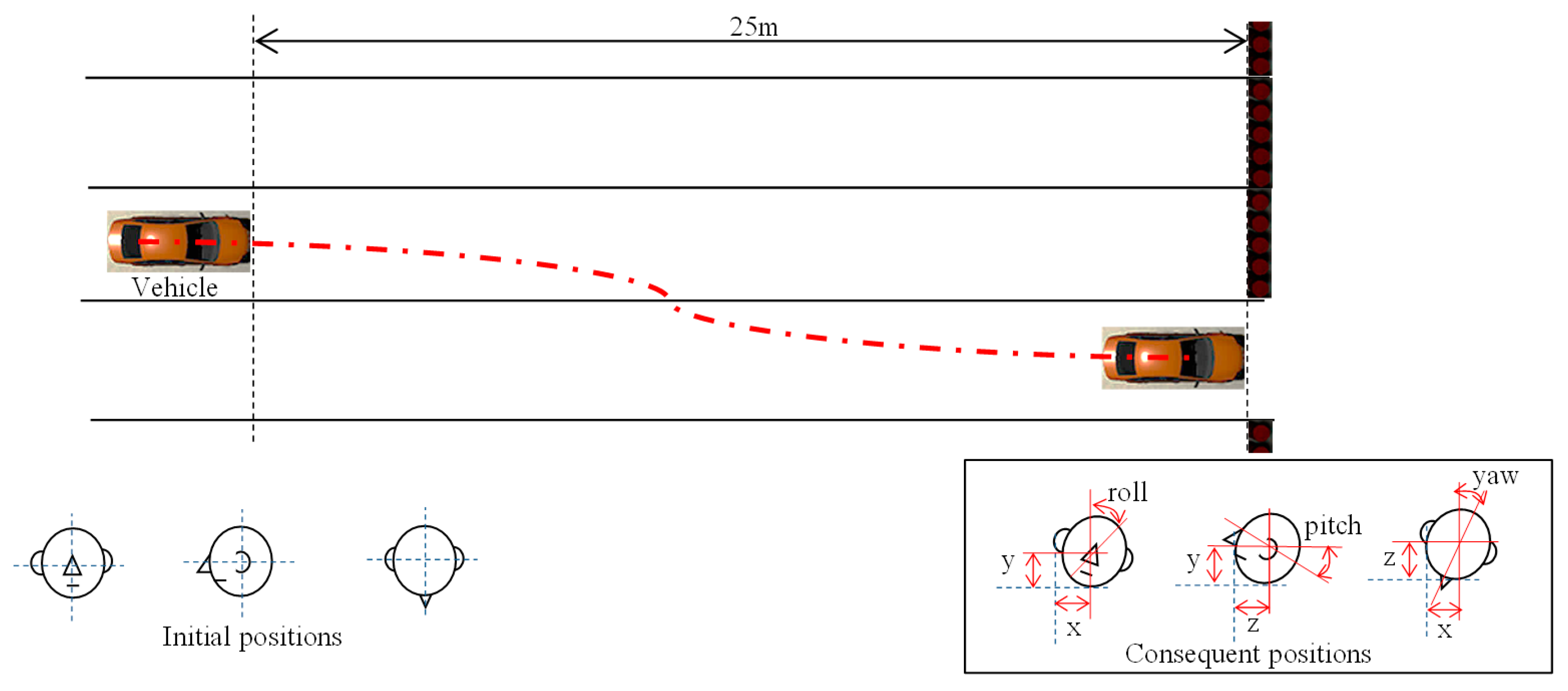

In the preliminary experiment, prior to the main experiment, we investigated the relationship between the vehicle driving behavior and head movement in VR to find potential candidates that could be used for vehicle steering (Figure 1).

3.1. Participants and Apparatus

Twenty participants (18 men and 2 women) aged 21–24 years (mean: 21.8, standard deviation: 0.8) participated in the experiment. They had normal visual sensations and physical ability. All participants gave consent after receiving a full explanation of the test procedure. All of the participants had a driver’s license except for one.

The CPU of the computer used in this experiment was the Core i7-7700 (3.60 GHz), and the computer had 16 GB memory. We used Oculus Rift (CV 1) for the visual and auditory presentation of the VR space. The field of view (FOV) of the head-mounted display (HMD) was 110 degrees, the refresh rate was 90 Hz, and the weight was 440 g. The media content for the driving simulation was created using Unity 2017.2.1.f1 (64-bit). Scene frames were updated at a rate of 50 Hz. The Logicool LPRC-15000 was used for steering a vehicle, as shown in

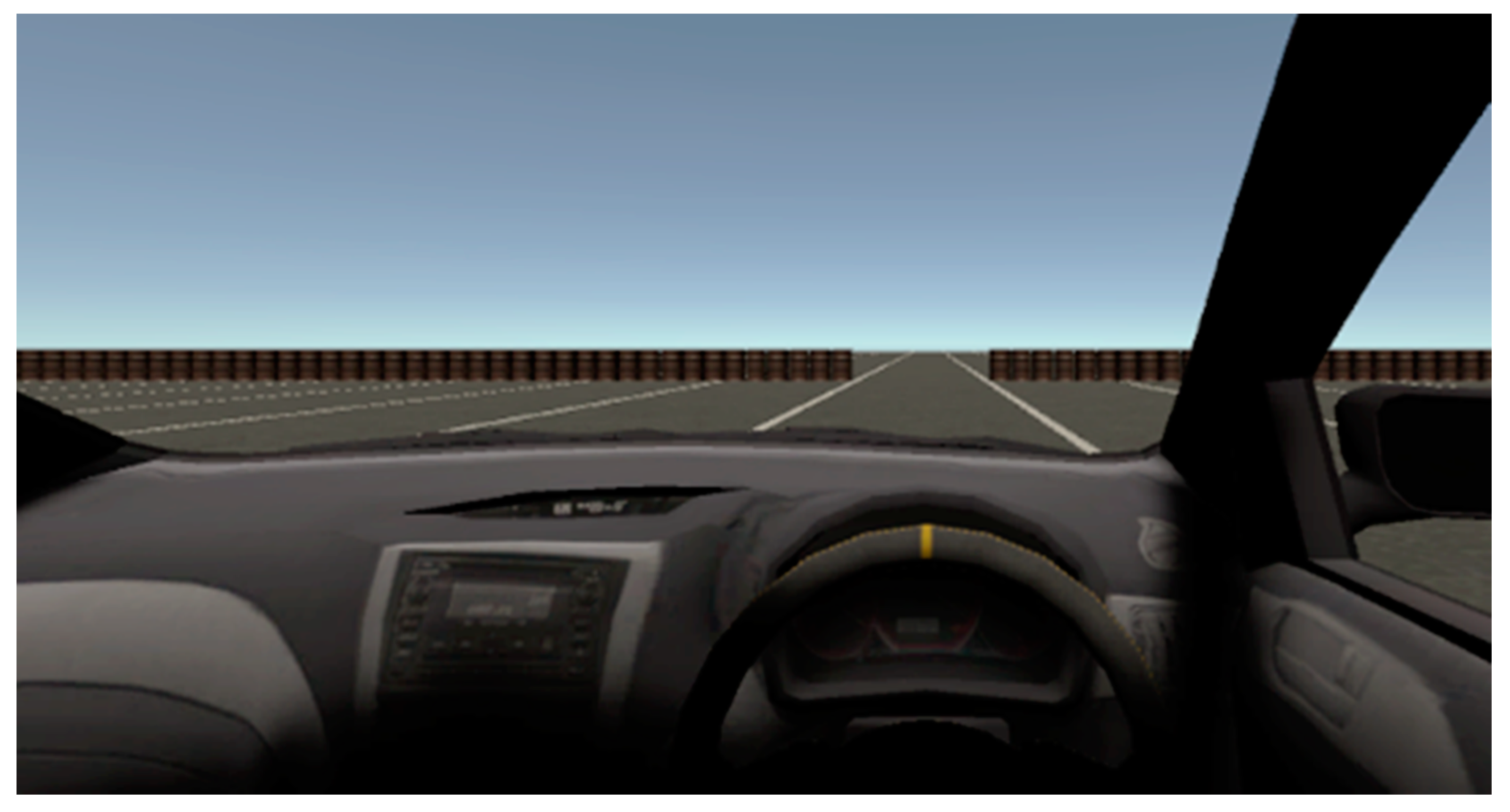

Figure 2. Participants did not need to operate the accelerator pedal or the brake pedal as the vehicle speed was controlled by the system. Additionally, for this experiment, we used a chair without a headrest to ensure the participants had unobstructed head movement.

3.2. Setup

We developed a driving simulator in which the participants drove in a virtual space while avoiding obstacles. The vehicle speed was set at 80 km/h. The vehicle length and height were 4.5 m and 1.4 m, respectively. The width was either 1.6 m (standard type) or 1.2 times wider, i.e., 1.92 m (wide type). The basis of these options was that the width of the category of small vehicles in Japan is 1.7 m or less. Vehicles beyond this size are classified as standard size vehicles. The location of the steering wheel was set for either left-hand drive vehicles or right-hand drive vehicles (Japanese standards). The viewing position of a participant was set at 0.35 m from the center of the vehicle to the left (left-hand drive) or right (right-hand drive) in the lateral direction, 1.05 m above the ground, and 2.21 m from the front of the vehicle. A sample image presented on the HMD is shown in Figure 3. The three-dimensional and stereoscopic views of the scene changed depending on the participant’s head movement. The local coordinate system of the head was independent of the global coordinate system. The local coordinate system was reset and the head position was set to zero when the participant sat on a chair and started the experiment.

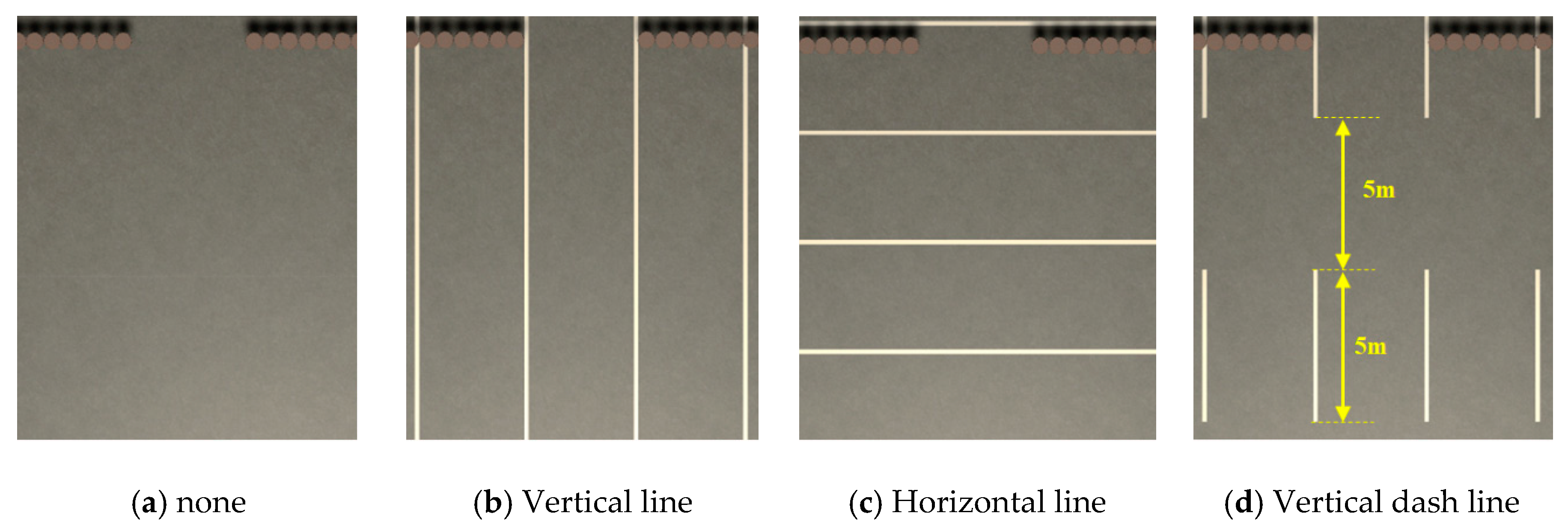

The road prepared for experiments had countless lanes and no side walls. All lanes except one were blocked by obstacles (drums). Participants were asked to drive the vehicles so as to navigate through the obstacles. Each lane was 3.5 m wide. We prepared four kinds of lane separation patterns, as shown in Figure 4, considering the possibility of applying the proposed head motion-based interaction technique to situations where people drive vehicles in different locations. Vertical and vertical dash lines are the most common, but horizontal-line and no-line patterns may appear in wilderness or fanciful VR scenes. We included this variety of line patterns so as not to overlook any variable that might matter. In the same manner, we decided to include the lane change pattern as a variable with two options, one-lane change and two-lane change, assuming that the difference in avoidance levels affects the head movement.

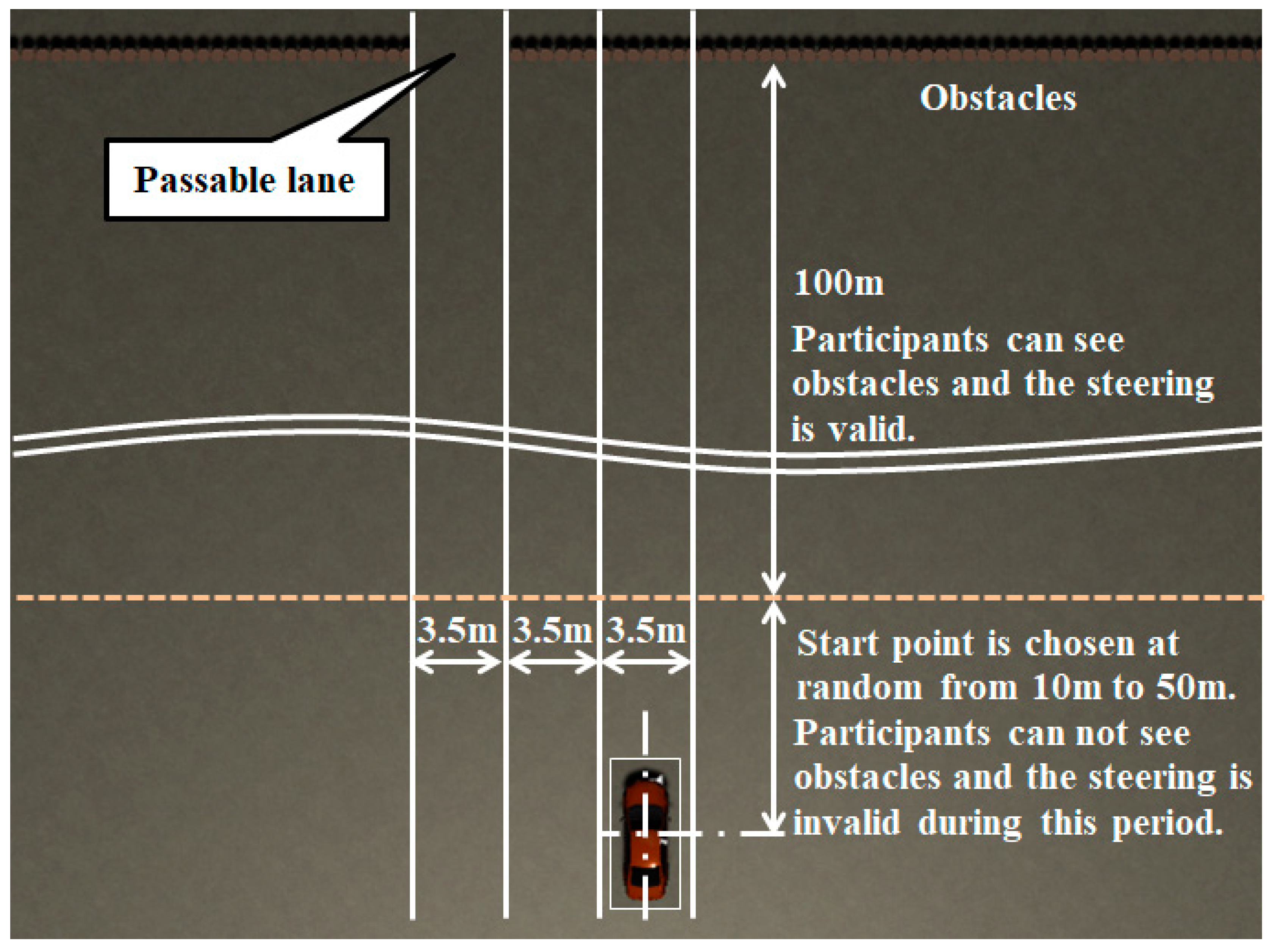

In each trial, the starting position of the vehicle was randomly assigned from 110 to 150 m ahead of the obstacles’ position. The appearance of the obstacles was controlled so that no obstacles were visible to the participants until they were 100 m ahead of them. In addition, the steering control remained disabled until the vehicle reached that point. This was done to eliminate the influence of unwanted steering operations and to discourage participants from starting the steering operation before the obstacles were visually confirmed. Figure 5 shows an experiment setting in which the participant is expected to move two lanes to the left.
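The start-position randomization and the 100 m gating described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the simulator's actual code: the function and constant names are hypothetical, and distance is assumed to be measured along the road from the vehicle to the row of obstacles.

```python
import random

# Values taken from the text; all names below are hypothetical.
REVEAL_DISTANCE_M = 100.0       # obstacles appear and steering is enabled here
START_RANGE_M = (110.0, 150.0)  # random start distance before the obstacles


def draw_start_distance(rng: random.Random) -> float:
    """Pick the vehicle's starting distance to the obstacles for one trial."""
    return rng.uniform(*START_RANGE_M)


def gate_state(distance_to_obstacles_m: float) -> dict:
    """Report whether obstacles are shown and steering input is accepted."""
    active = distance_to_obstacles_m <= REVEAL_DISTANCE_M
    return {"obstacles_visible": active, "steering_enabled": active}
```

Tying both flags to the same threshold mirrors the stated intent that steering input only counts once the obstacles can be seen.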

Specific trial patterns in the experiment were decided as follows. First, one of the combinations of the vehicle width and the steering wheel location was randomly determined, and all 16 patterns were run by repeating the process of randomly selecting one from the combinations of four line types and four lane change types—combinations of the degree of lane change (one lane shift and two lanes shift), and the direction of lane change (left shift and right shift). Next, one of the remaining combinations of the vehicle width and the steering wheel location was selected again and the pattern repeated until all 16 combinations were completed. The above series of trials were executed three times, and we collected the driving behavior data.
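One way to read the ordering above is sketched below. It assumes that a series consists of every vehicle configuration (width and steering wheel location) in random order, each followed by the 16 line and lane change patterns in random order, and that the series is repeated three times. The labels and function names are illustrative only.

```python
import itertools
import random

# Factor levels from the text; the string labels are hypothetical.
WIDTHS = ("standard", "wide")
WHEEL_SIDES = ("left", "right")
LINE_TYPES = ("none", "vertical", "vertical_dash", "horizontal")
LANE_CHANGES = tuple(itertools.product((1, 2), ("left", "right")))


def build_series(rng: random.Random) -> list:
    """One series: each vehicle configuration, then its 16 shuffled patterns."""
    vehicles = list(itertools.product(WIDTHS, WHEEL_SIDES))
    rng.shuffle(vehicles)
    trials = []
    for width, side in vehicles:
        patterns = list(itertools.product(LINE_TYPES, LANE_CHANGES))
        rng.shuffle(patterns)
        for line, (lanes, direction) in patterns:
            trials.append((width, side, line, lanes, direction))
    return trials


def build_schedule(seed: int = 0, repetitions: int = 3) -> list:
    """Repeat the series; three repetitions follow the text."""
    rng = random.Random(seed)
    return [build_series(rng) for _ in range(repetitions)]
```

Under this reading, each series contains 4 × 16 = 64 trials, and each vehicle configuration is held fixed for 16 consecutive trials.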

3.3. Procedure

Prior to the experiment, the participants were informed that there were differences in the steering wheel locations (right or left), vehicle width, and traffic lane lines. Additionally, the participants were instructed to check the lane where no obstacles were positioned and pass through the corresponding positions by controlling the steering wheel. The participants were also informed in advance that if they felt uncomfortable at any time during the experiment, they could quit the trials immediately. None of the participants quit during the experiment.

After the explanation of the procedure, the participants sat on a chair, put on the HMD, and adjusted their seat, so that they were comfortable with the seat position, provided it was within limits. Before starting the trials, the participants took part in a preparation session. Since the purpose of this session was to get used to the system, we chose four basic patterns where the participants tried both the left and right steering wheel locations on a simple road either without a line or with one vertical line. When all trials were completed, participants were asked to fill in a questionnaire and provide us with comments.

3.4. Result

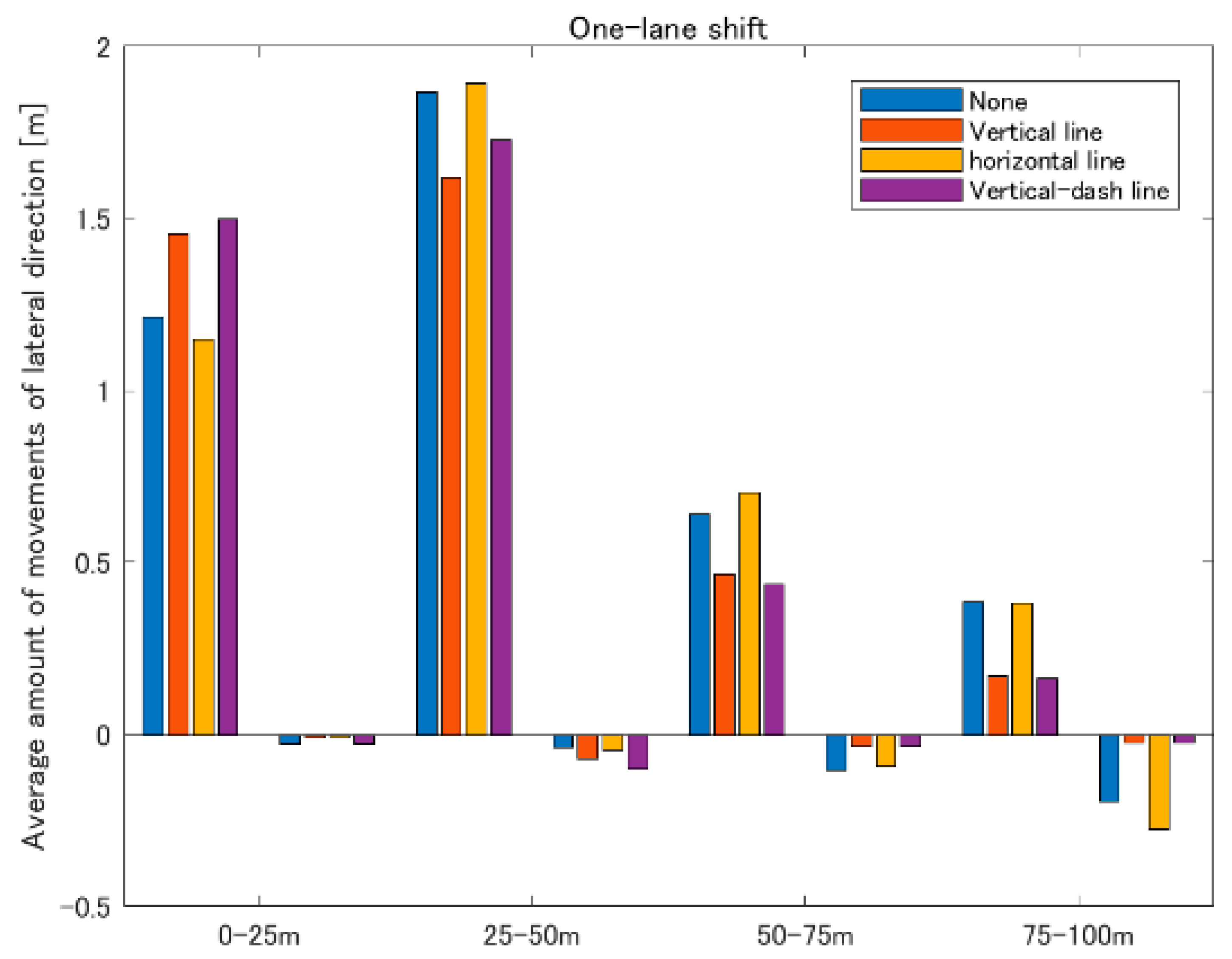

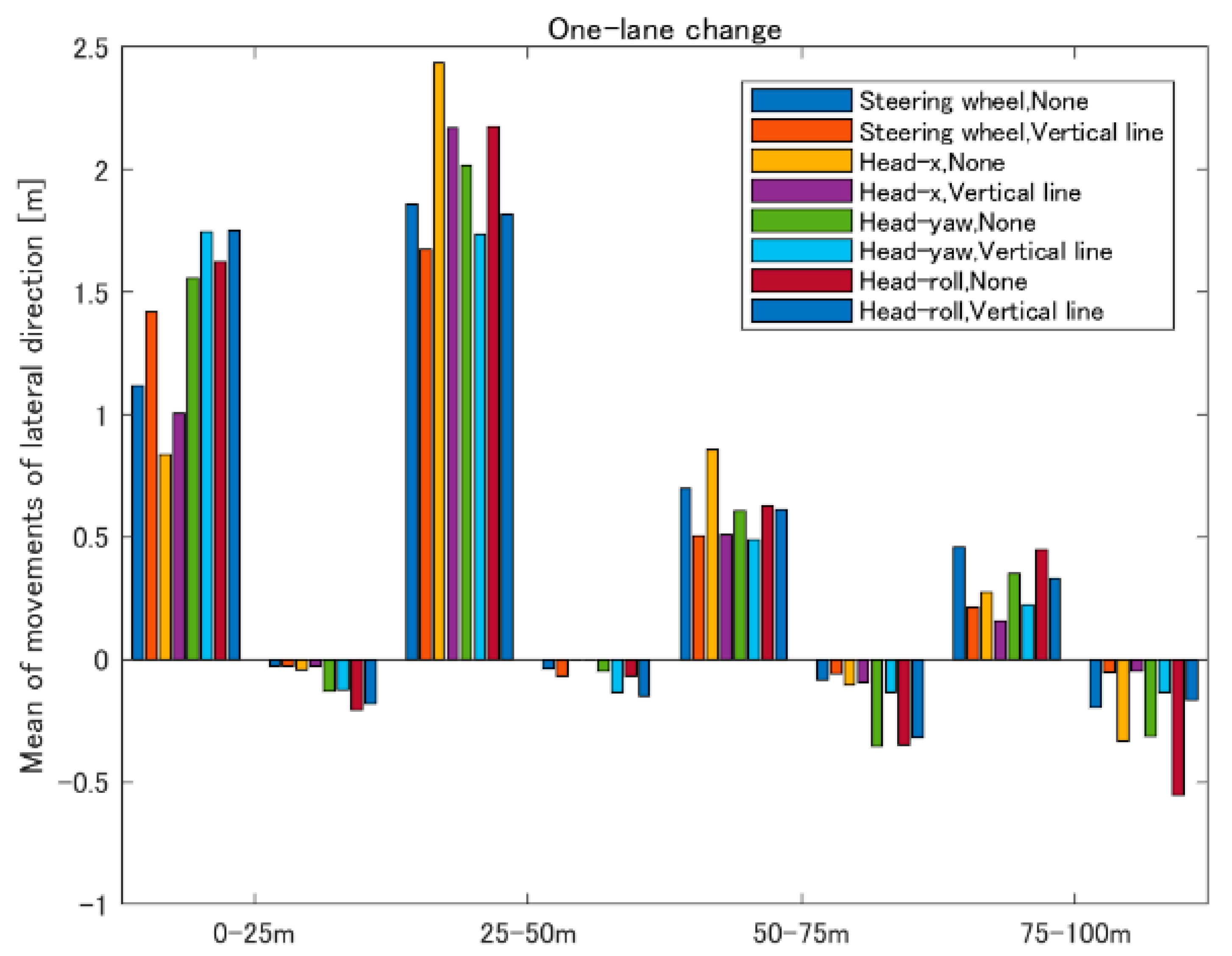

ANOVA was used to determine whether there was a significant difference in mean head movements among the lane change patterns at each of the four zones (0–25 m, 25–50 m, 50–75 m, and 75–100 m), as depicted in

Figure 6.

Table 1 summarizes the test results (

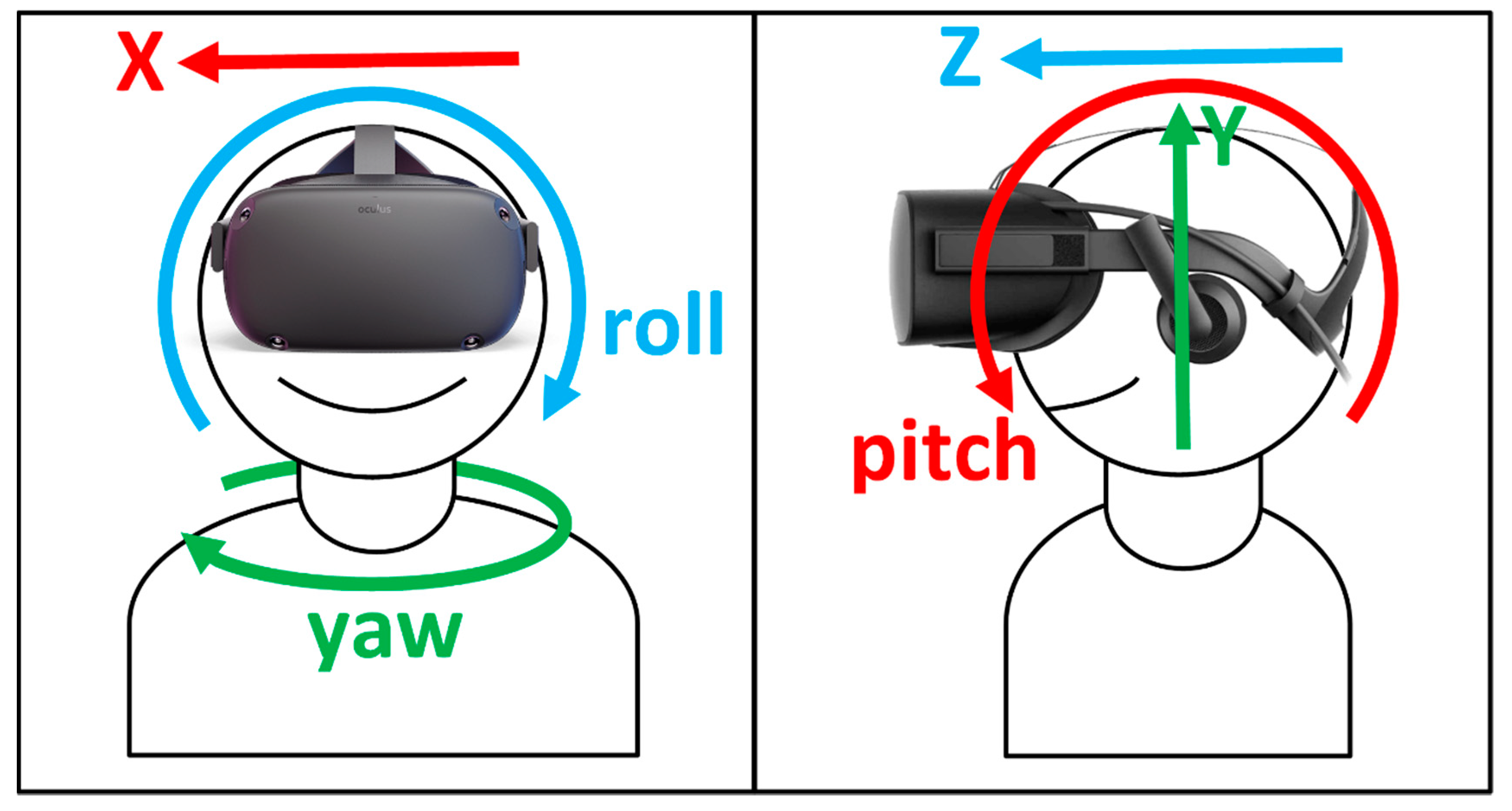

p-values). The head movements examined in the experiment are illustrated in

Figure 7. The x, y, and z axes refer to right/left, up/down, and forward/backward motions, respectively. The positive

x-axis points to the right, the positive

y-axis points upwards, and the positive

z-axis points forwards. We also examined the possible effects of steering wheel location, width, and lane line, which are all associated with driving and may cause certain changes in head movement.

As indicated in the table, differences among lane change patterns were significant for x, yaw, and roll. However, yaw was significant only in the 0–50 m zone. Similar results were observed for the steering wheel location. The width produced a significant difference, but only for y and pitch. No significant difference was observed for the lane line.
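The per-zone comparison can be illustrated with a small sketch. The text does not state the ANOVA design, so the code below assumes a plain one-way (between-groups) layout and computes only the F statistic and its degrees of freedom; obtaining a p-value would additionally need the F distribution, for example from a statistics library. Which end of the 100 m section counts as 0 m is defined by Figure 6 and is not assumed here. All function names are hypothetical.

```python
from statistics import mean


def zone_index(distance_m: float) -> int:
    """Map a distance within the 100 m section to a 25 m zone index (0..3)."""
    if not 0.0 <= distance_m <= 100.0:
        raise ValueError("distance must lie within the 100 m section")
    return min(int(distance_m // 25.0), 3)


def one_way_anova_f(groups: list) -> tuple:
    """Return (F, df_between, df_within) for a list of sample groups."""
    all_values = [v for g in groups for v in g]
    grand_mean = mean(all_values)
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of the samples around their own group mean.
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within
```

In use, each group would hold the mean head movement on one axis (for example x) within one zone for one lane change pattern, and the test would be repeated per axis and per zone.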

3.5. Towards the Main Experiment

From the results in the previous section, it was found that the head motion in x, yaw, and roll was associated with lane change control. We chose those three as the potential candidates for the interaction technique of steering the vehicle. Additionally, in the main experiment, the steering wheel location was fixed to the right-hand position to eliminate the influence of the seating position. As for the width, no significant difference was found in x, yaw, or roll. Therefore, we decided to adopt the standard width. Although there was no significant difference in the head movement between different lane lines, the timing of steering actions differed depending on the zone as well as the lane line.

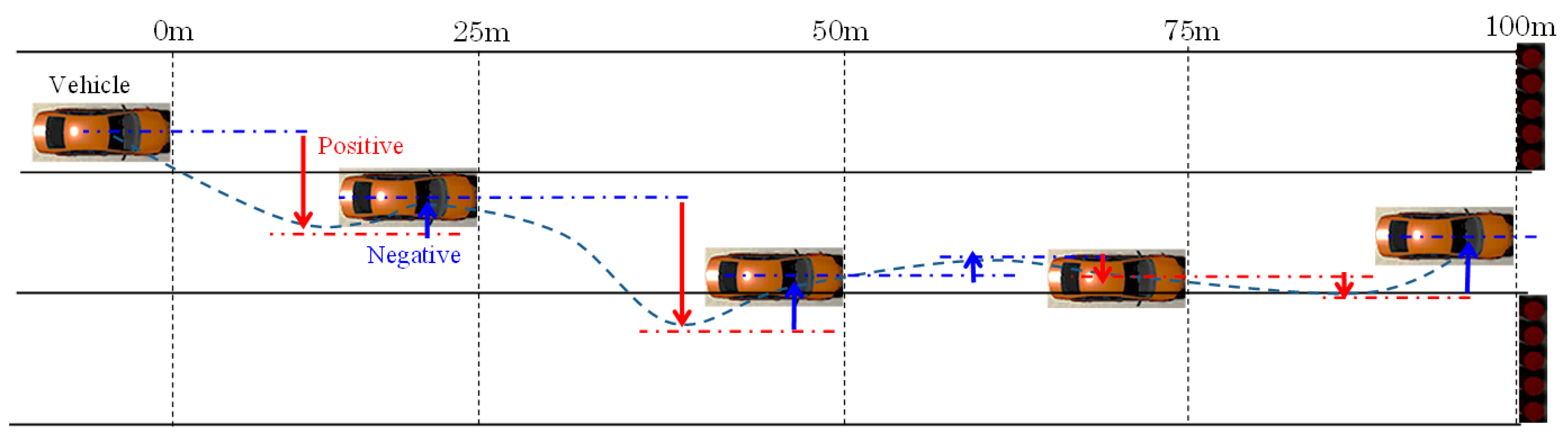

Figure 8 shows how far the vehicle had shifted in the running direction (positive), i.e., the direction toward a passable lane, and how far it had turned back (negative) in each zone (see Figure 9). The sum of the values over the four zones is ideally equal to 3.5 m, which corresponds to the width of a one-lane shift. This informed the choice of lane lines for the main experiment. The lateral movement with respect to the lane line falls into two groups: one includes no line and a horizontal line, and the other includes a vertical line and a vertical dash line. We chose the no line and the vertical line for the main experiment as representatives of the groups.
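The per-zone lateral shift can be sketched as follows, assuming the trajectory is logged as (distance, lateral position) pairs covering the 100 m section and that positions at the zone boundaries are obtained by linear interpolation. The sketch reports only the net signed shift per zone; whether Figure 8 separates forward and backward movement within a zone is not assumed. Function names are hypothetical.

```python
ZONE_EDGES_M = (0.0, 25.0, 50.0, 75.0, 100.0)


def interpolate(samples: list, distance_m: float) -> float:
    """Linearly interpolate lateral position at a given distance."""
    for (d0, y0), (d1, y1) in zip(samples, samples[1:]):
        if d0 <= distance_m <= d1:
            if d1 == d0:
                return y0
            t = (distance_m - d0) / (d1 - d0)
            return y0 + t * (y1 - y0)
    raise ValueError("distance outside the sampled range")


def lateral_shift_per_zone(samples: list, target_direction: int) -> list:
    """Signed shift per 25 m zone; positive means toward the passable lane.

    samples: (distance_m, lateral_position_m) pairs sorted by distance.
    target_direction: +1 or -1, the lateral sign of the passable lane.
    """
    edges = [interpolate(samples, d) for d in ZONE_EDGES_M]
    return [target_direction * (b - a) for a, b in zip(edges, edges[1:])]
```

For a clean one-lane change, the four returned values add up to about 3.5 m, with a negative value indicating that the vehicle turned back within that zone.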

4. Main Experiment

In the main experiment, we prepared a simulator so that participants could drive a virtual vehicle to the left and right by moving or rotating their head in the x, yaw, or roll direction from their initial position. We examined three types of interfaces independently to make the analysis simpler. The degree of vehicle movement was determined by referring to the results of the preliminary experiment: the lateral vehicle movement was 3 m/s at a change of 5 cm in x, 5 degrees in yaw, and 3 degrees in roll.

The relationship between the amount of movement of the head in the x direction (cm) and the lateral speed v (m/s) of the vehicle is expressed by Equation (1). The vehicle moves in the direction of the head movement:

The relationship between the yaw rotation (degree) of the head and the lateral speed v (m/s) of the vehicle is expressed by Equation (2). As for the roll rotation, the relationship is as in Equation (3). The vehicle moves in the direction of head rotation:
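As a rough illustration of such a mapping, the sketch below assumes that the lateral speed is proportional to the head displacement and passes through the stated reference points (3 m/s at 5 cm in x, 5 degrees in yaw, and 3 degrees in roll). The linear form, the absence of a dead zone or saturation, and the sign conventions are assumptions for illustration and are not confirmed by the text; Equations (1) to (3) may differ.

```python
# Reference points from the text: a lateral speed of 3 m/s is reached at
# these head displacements. Linear scaling is an assumption of this sketch.
REFERENCE_SPEED_M_S = 3.0
REFERENCE_DISPLACEMENT = {
    "x": 5.0,     # centimetres
    "yaw": 5.0,   # degrees
    "roll": 3.0,  # degrees
}


def lateral_speed(axis: str, displacement: float) -> float:
    """Hypothetical linear mapping from head displacement to lateral speed.

    A positive displacement yields a positive lateral speed, so the vehicle
    moves in the direction of the head movement or rotation.
    """
    if axis not in REFERENCE_DISPLACEMENT:
        raise ValueError(f"unknown axis: {axis}")
    return REFERENCE_SPEED_M_S * displacement / REFERENCE_DISPLACEMENT[axis]
```

Under this assumption, one degree of roll produces the same lateral speed as about 1.67 cm of translation in x, which makes roll the most sensitive of the three inputs.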

4.1. Participants and Apparatus

Twelve participants (10 men and 2 women) aged 20–25 years (mean: 22.8, standard deviation: 1.3) participated in the experiment. They had normal visual sensations and physical ability. All participants consented to the experiment after receiving a full explanation of the test procedure.

The equipment used in the experiment was similar to that used in the preliminary experiment. However, the steering controller was not used because the steering control was under HMD (head movement) control.

4.2. Procedure

The vehicle used in the experiment was a right-hand drive vehicle with a standard width. Three types of head movement, x, yaw, and roll, were examined in this order. Hereafter, the corresponding interface options are referred to as Head-x, Head-yaw, and Head-roll, respectively.

The participants were advised to practice with the simulator until they got used to it, and then the trials began. A sequence of trials was randomly selected from a total of eight patterns: two line types (no line and vertical line) combined with four lane change patterns (one-lane shift to right/left and two-lane shift to right/left). The participants were asked to perform the sequence three times, making 24 trials in total. One trial took about 7 s, and the total for 24 trials was less than 3 min. Since 24 trials were performed for each of the three types of interface candidates, each participant performed continuous trials for a total of around 9 min. Other settings were the same as in the preliminary experiment. None of the participants quit during the experiment as a result of feeling uncomfortable.
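The trial counts and durations above can be checked with a few lines of arithmetic. The sketch uses only the figures given in the text; the 7 s trial duration is approximate, so the totals are estimates, and the function names are hypothetical.

```python
LINE_TYPES = 2            # no line, vertical line
LANE_CHANGE_PATTERNS = 4  # one- or two-lane shift, to the left or right
REPETITIONS = 3
INTERFACES = 3            # Head-x, Head-yaw, Head-roll
SECONDS_PER_TRIAL = 7     # approximate value from the text


def trials_per_interface() -> int:
    """Number of trials a participant runs with one interface."""
    return LINE_TYPES * LANE_CHANGE_PATTERNS * REPETITIONS


def minutes_per_interface() -> float:
    """Approximate driving time with one interface, in minutes."""
    return trials_per_interface() * SECONDS_PER_TRIAL / 60.0


def total_minutes() -> float:
    """Approximate driving time over all three interfaces, in minutes."""
    return minutes_per_interface() * INTERFACES
```

This gives 24 trials and about 2.8 min per interface, and about 8.4 min overall, consistent with the stated "less than 3 min" and "around 9 min".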

After all the trials were completed, the participants were asked to fill in a questionnaire and give us their comments. The questionnaire was designed to address the usability in vehicle control, realistic motion, and VR sickness. Realistic motion is defined as how well the head movement suits the lateral movement of a vehicle. Responses to the usability and realistic motion were indicated on a five-point scale (1: very undesirable; 2: undesirable; 3: neutral; 4: desirable; 5: very desirable), and those to the VR sickness were indicated on a four-point scale (1: every time; 2: often; 3: rarely; 4: never).

4.3. Results

Table 2 summarizes the results of the questionnaire regarding the usability and realistic motion. As for the usability, Head-x was the best, followed by Head-yaw. Realistic motion exhibited a similar tendency.

Figure 10 shows the amount of lateral movement for every 25 m zone for different interaction techniques and the line types under the condition of a one-lane change. The results of the use of the steering wheel are given for reference.

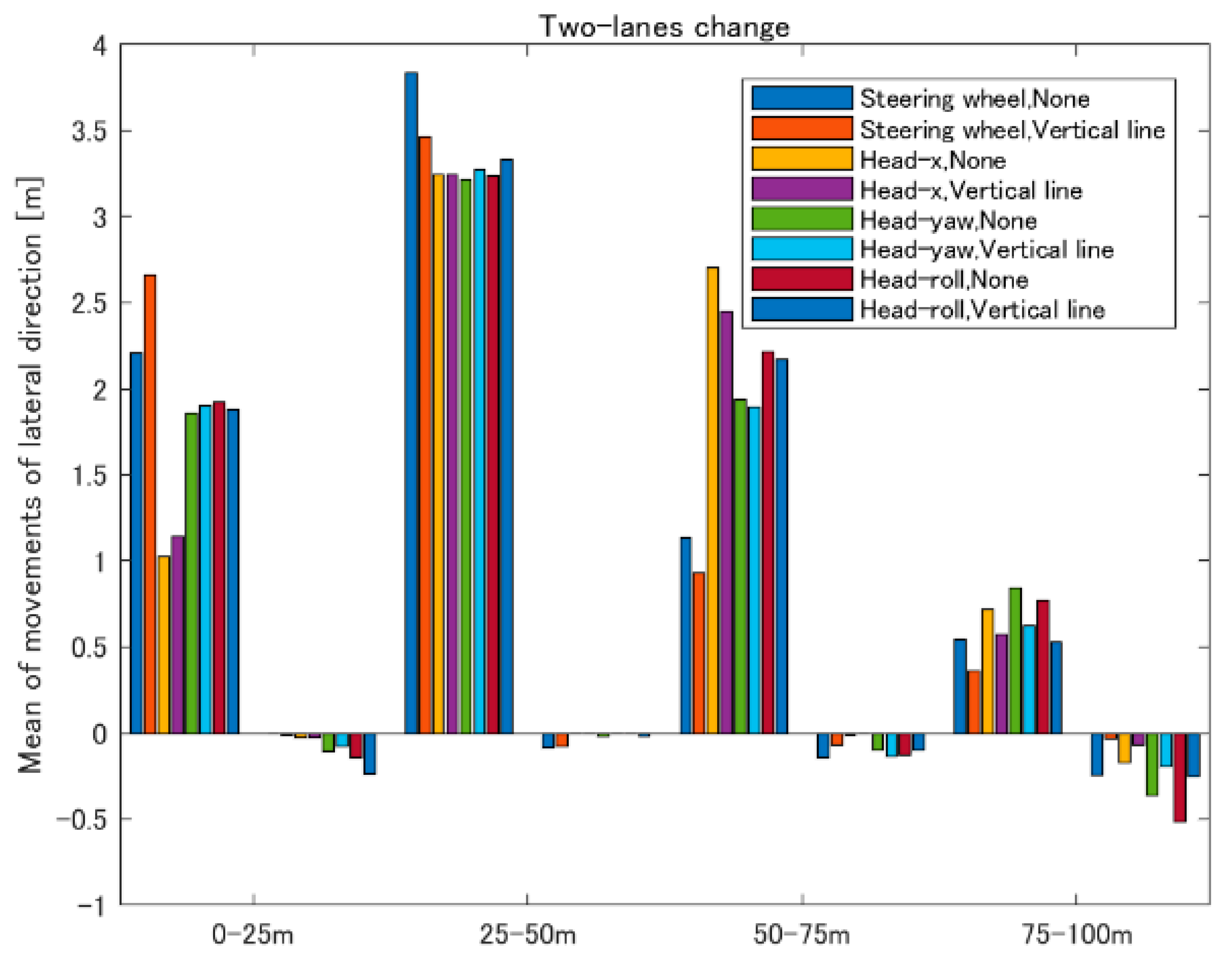

Figure 11 shows the amount of lateral movement in the case of a two-lane change. Roughly speaking, the participants’ behavior in the head movement interfaces did not differ significantly from that in the steering wheel interfaces. However, some characteristic differences are discussed in the next section.

Table 3 shows the questionnaire results for VR sickness. The participants were not sickened by Head-x and Head-yaw at all. They felt slight sickness with Head-roll, but to a lesser degree than with the steering wheel interface.

5. Discussion

Head-x was accompanied by movement of the whole body to the right or left. This behavior, or translation, is common in real life and would thus be acceptable for interaction with systems. On the other hand, Head-yaw and Head-roll were achieved by rotating the head. This might not have been comfortable for participants because such a movement changed the direction of the person’s vision and the apparent tilt of the horizon, resulting in degraded usability.

Meanwhile, in Head-x, the body moved in the left-right direction, and the view in VR space was translated accordingly. As a result, participants could overestimate their movements and restrain their extent of steering the vehicle at the 0–25 m zone. Later, they might have noticed that the distance traveled had not been enough to successfully complete the gate passing maneuver.

By comparing the behavior in the two-lane change to that in the one-lane change, it would be reasonable to imagine that the lateral movement doubles. Lateral movement in the first 25 m zone in the case of head movement interfaces was similar in both the one-lane change and the two-lane change. However, the difference in the 50–75 m zone was much greater in ratio than expected (twice). In other words, the head movement interfaces resulted in postponing the lane changing movement compared to the steering wheel interface. This may not be a favorable situation because of the increase in risk. There is an opportunity to cope with this by building a model of the human behavior, and then adding a proper compensation mechanism based on the model. Meanwhile, Head-yaw and Head-roll generated larger negative movements than Head-x. This coincides with the questionnaire results in that their usability and realistic motion were lower.

Regarding VR sickness, the results indicated that participants experienced less sickness with the head movement interfaces (especially Head-x and Head-yaw) than with the steering wheel interface. It is likely that the input from vision matched the bodily sensation, considering that the realistic motion was rated rather high. The participants might have had a feeling of being in control of the system (vehicle), and this helped in reducing VR sickness. Having said that, it is important to examine whether the time spent on the experiment in the VR environment is an influencing factor for VR sickness.

Overall, hypothesis (1) appears to be correct. Specifically, it was found that the head motion in x, yaw, and roll was associated with lane change control. As for hypothesis (2), head movement can assist with steering control, especially movement along the horizontal x-axis, which is most promising because it can reduce VR sickness while guaranteeing better usability and realistic motion. However, role sharing between the head movement interface and the steering wheel interface needs additional study.

6. Conclusions

In this study, we explored the effectiveness of head movement as an interaction technique for steering control in VR. By focusing on right or left movements, we attempted to obtain meaningful results using the abovementioned experiments. Human–machine interaction can be conceived of as symmetrical in the sense that if a machine is truly easy for humans to handle, they can get the best out of it.

We first examined head movements while driving using the steering wheel, where the x, yaw, and roll axes were found to be reasonable choices. Afterwards, a driving simulator allowing participants to steer a vehicle by means of their head movement was implemented, and its performance was examined from the viewpoint of usability, realistic motion, and VR sickness. We found that movement along the horizontal x-axis is most promising because it could reduce VR sickness while guaranteeing better usability and realistic motion. We confirmed that the interface is effective for lateral movement in the VR space by using the candidates found from the head movement during driving as the interface.

In this study, we assumed that the vehicle runs at a constant speed in the VR space. In addition, the experimental scenario we considered is simpler than real-life driving. In more realistic driving scenarios, the driver needs to move his/her head for additional purposes (for example, to check rearview mirrors and to relieve muscle strain during longer drive sessions). The suitable user interface is therefore likely to differ between situations. We shall continue to investigate head movement interfaces and other interface options in more detail. Moreover, the integration of head movement interfaces into the steering wheel interface will be studied so as to offer more satisfactory steering control in the real world.