1. Introduction

The approximation of nonlinear systems can be performed using a finite sum of the Volterra series expansion that relates the system’s inputs and outputs. This method has been studied since the 1960s [1,2,3] and has been used in various applications (e.g., [4,5,6]). In this context, bilinear forms have been used to approximate a large class of nonlinear systems, where the bilinear component is interpreted in terms of an input-output relation (meaning that it is defined with respect to the data). Consequently, the bilinear system may be regarded as one of the simplest recursive nonlinear systems.

More recently, a new approach was introduced in Reference [7], where the bilinear term is considered within the framework of a multiple-input/single-output (MISO) system and is defined with respect to the spatiotemporal model’s impulse responses. A particular case of this type of system is the Hammerstein model [8], in which a single input signal passes through a nonlinear block cascaded with a linear system. Recently, adaptive solutions were proposed for the study of such bilinear forms in the context of system identification [9,10,11]. The bilinear approach is suitable for a particular form of the decomposition, which involves only two terms (i.e., two systems). In some cases, it would be useful to exploit a higher-order decomposition, which could improve the overall performance in terms of both complexity and efficiency.

Motivated by the good performance of these methods in the study of bilinear forms, we aim to extend them to higher-order multilinear-in-parameters systems. Applications such as multichannel equalization [12], nonlinear acoustic devices for echo cancellation [13], multiple-input/multiple-output (MIMO) communication systems [14,15], and others can be addressed within the framework of multilinear systems. Because many of these applications can be formulated as system identification problems [16], it is of interest to estimate a model based on the available observed data, which are usually the input and the output of the system. A common challenge in such system identification tasks is the large length of the finite impulse response filter [17,18], which may have hundreds or even thousands of coefficients. Another possible issue is the large parameter space [19,20]. Nowadays, such scenarios are related to very important topics, for example, big data [21], machine learning [22], and source separation [23]. Usually, they are addressed based on tensor decompositions and modelling [24,25,26,27]. These techniques mainly exploit the Kronecker product decomposition [28], which allows a high-dimensional problem to be reformulated in terms of low-dimensional models that can be tensorized together.

In this paper, we propose an iterative Wiener filter tailored for third-order systems (i.e., trilinear forms), together with adaptive solutions for such problems, namely the least-mean-square (LMS) and normalized LMS (NLMS) algorithms. Following this development, the solution can then be extended to higher-order multilinear systems. The advantage of the proposed approach is that it exploits the decomposition of the global impulse response. One potential limitation is related to the particular form of the global impulse response to be identified, which is a result of separable systems. As a perspective, it would be useful to extend this approach to identify more general forms of impulse responses. Recently, we developed such ideas in References [29,30], based on the Wiener filter and the recursive least-squares (RLS) algorithm, by exploiting the nearest Kronecker product decomposition and the related low-rank approximation.

The rest of this paper is organized as follows: Section 2 provides a brief background on tensors, which is necessary for the following developments; Section 3 proposes the iterative Wiener filter tailored for the identification of trilinear forms; Section 4 contains the derivation of the LMS and NLMS adaptive algorithms for trilinear forms; simulation results are provided in Section 5, while Section 6 concludes the paper.

2. Background on Tensors

A tensor is a multidimensional array of data whose entries are referred to by using multiple indices [31,32]. A tensor, a matrix, a vector, and a scalar can be denoted by $\mathcal{A}$, $\mathbf{A}$, $\mathbf{a}$, and $a$, respectively. In this paper, we are only interested in the third-order tensor $\mathcal{A} \in \mathbb{R}^{L_1 \times L_2 \times L_3}$, meaning that its elements are real-valued and its dimension is $L_1 \times L_2 \times L_3$. For a third-order tensor, the first and second indices $l_1$ and $l_2$ correspond to the row and column, respectively (as in a matrix), while the third index $l_3$ corresponds to the tube and describes its depth. These three indices describe the three different modes. The entries of the different order tensors are denoted by $(\mathcal{A})_{l_1 l_2 l_3} = a_{l_1 l_2 l_3}$, $(\mathbf{A})_{l_1 l_2} = a_{l_1 l_2}$, and $(\mathbf{a})_{l_1} = a_{l_1}$, for $l_1 = 1, 2, \ldots, L_1$, $l_2 = 1, 2, \ldots, L_2$, and $l_3 = 1, 2, \ldots, L_3$.

The notion of vectorization, which transforms a matrix into a vector, is well known. Matricization is the analogous operation that rearranges a third-order tensor into a large matrix. Depending on which index’s elements are considered first, we have matricization along three different modes [24,25]:

where

are the frontal slices. Hence, the vectorization of a tensor is

Let

,

, and

be vectors of length

,

, and

, respectively, whose elements are

,

, and

. A rank-1 tensor (of dimension

) is defined as

where ∘ is the vector outer product and the elements of

are given by

. The frontal slices of

in Equation (1) are

. In particular, we have

, where

is the transpose operator. Therefore, the rank of a tensor

, denoted

, is defined as the minimum number of rank-1 tensors that generate

as their sum.
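As a numerical illustration of the rank-1 construction, the following NumPy sketch builds a rank-1 tensor from three vectors and checks its frontal slices and its vectorization. The variable names (`b1`, `b2`, `b3`, `B`), the example values, and the column-major vectorization convention (first index varying fastest) are assumptions made for this example.

```python
import numpy as np

# Three example vectors (hypothetical values chosen for illustration).
b1 = np.array([1.0, 2.0])
b2 = np.array([3.0, 4.0, 5.0])
b3 = np.array([6.0, 7.0])

# Rank-1 tensor as an outer product: B[i, j, k] = b1[i] * b2[j] * b3[k].
B = np.einsum("i,j,k->ijk", b1, b2, b3)

# Each frontal slice B[:, :, k] is the matrix b1 b2^T scaled by b3[k].
slices_match = all(
    np.allclose(B[:, :, k], np.outer(b1, b2) * b3[k]) for k in range(b3.size)
)

# Column-major vectorization stacks the vectorized frontal slices and,
# for a rank-1 tensor, equals the Kronecker product b3 (x) b2 (x) b1.
vec_B = B.reshape(-1, order="F")
kron_B = np.kron(b3, np.kron(b2, b1))
vec_matches_kron = np.allclose(vec_B, kron_B)
```

Under this convention, a rank-1 tensor with $L_1 L_2 L_3$ entries is fully described by only $L_1 + L_2 + L_3$ numbers, which is the property exploited later for system identification.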

The inner product between two tensors

and

of the same dimension is

It is important to be able to multiply a tensor with a matrix [26,27]. Let the tensor be

and the matrix be

. The mode-1 product between the tensor

and the matrix

gives the tensor:

whose entries are

, for

, and

. In the same way, with the matrix

, the mode-2 product between the tensor

and the matrix

gives the tensor:

whose entries are

, for

, and

. Finally, with the matrix

, the mode-3 product between the tensor

and the matrix

gives the tensor:

whose entries are

, for

, and

.
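The three mode products can be sketched in NumPy as follows. This is an illustrative sketch that assumes the standard definition, in which the rows of the matrix are contracted with the chosen mode of the tensor; the helper `mode_product` and the matrix sizes are hypothetical and not taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
L1, L2, L3 = 4, 3, 2                      # example tensor dimensions
A = rng.standard_normal((L1, L2, L3))
M1 = rng.standard_normal((5, L1))         # acts on the first mode
M2 = rng.standard_normal((6, L2))         # acts on the second mode
M3 = rng.standard_normal((7, L3))         # acts on the third mode


def mode_product(T, M, mode):
    """Mode-n product: contract axis `mode` of T with the columns of M."""
    # tensordot places the new axis first; moveaxis puts it back at `mode`.
    return np.moveaxis(np.tensordot(M, T, axes=(1, mode)), 0, mode)


U1 = mode_product(A, M1, 0)               # shape (5, L2, L3)
U2 = mode_product(A, M2, 1)               # shape (L1, 6, L3)
U3 = mode_product(A, M3, 2)               # shape (L1, L2, 7)

# Entry-wise references, e.g. U1[m, j, k] = sum_i A[i, j, k] * M1[m, i].
U1_ref = np.einsum("ijk,mi->mjk", A, M1)
U2_ref = np.einsum("ijk,mj->imk", A, M2)
U3_ref = np.einsum("ijk,mk->ijm", A, M3)
```

Each product changes only the size of the mode it acts on and leaves the other two dimensions untouched.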

The multiplication of

with the row vectors

,

, and

(see the components of

in Equation (1)) gives the scalar:

In particular, we have

and

It is easy to check that Equations (5)–(7) are trilinear (with respect to

,

, and

), bilinear (with respect to

and

), and linear (with respect to

) forms, respectively.

We can express Equation (6) as

where

represents the trace of a square matrix and ⊗ denotes the Kronecker product. Expression (5) can also be written in a more convenient way. Indeed, we have

where

is defined in Equation (1).
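The scalar forms discussed above can be checked numerically. The sketch below assumes a tensor `A` and vectors `b1`, `b2`, `b3` (names chosen for this example) and a column-major vectorization; it verifies that contracting all three modes with vectors gives the same value as an inner product with a Kronecker product, and that the matrix (bilinear) case can be written with a trace.

```python
import numpy as np

rng = np.random.default_rng(1)
L1, L2, L3 = 4, 3, 2
A = rng.standard_normal((L1, L2, L3))
b1, b2, b3 = (rng.standard_normal(n) for n in (L1, L2, L3))

# Trilinear form: contract every mode of A with a vector.
trilinear = np.einsum("ijk,i,j,k->", A, b1, b2, b3)

# Same value as the inner product of vec(A) with b3 (x) b2 (x) b1.
trilinear_kron = A.reshape(-1, order="F") @ np.kron(b3, np.kron(b2, b1))

# Bilinear (matrix) case: b1^T M b2 = tr(b2 b1^T M) = (b2 (x) b1)^T vec(M).
M = A[:, :, 0]
bilinear = b1 @ M @ b2
bilinear_trace = np.trace(np.outer(b2, b1) @ M)
bilinear_kron = np.kron(b2, b1) @ M.reshape(-1, order="F")

# Linearity in one argument while the other two are kept fixed.
doubled = np.einsum("ijk,i,j,k->", A, 2.0 * b1, b2, b3)
```

The last line illustrates what "trilinear" means in practice: scaling one vector scales the output by the same factor when the other two are fixed.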

3. Trilinear Wiener Filter

Let us consider the following signal model, which is used in system identification tasks:

where

is the desired (also known as reference) signal at time index t,

is the output signal of a MISO system and

is a zero-mean additive noise, uncorrelated with the input signals. The zero-mean input signals can be described in a tensorial form

:

and the three impulse responses

, of lengths

,

, and

, respectively, can be written as

It can be seen that

represents a trilinear form because it is a linear function of each of the vectors

, if the other two are kept fixed. The trilinear form can be regarded as an extension of the bilinear form [7].

Starting from the three impulse responses of the MISO system, a rank-1 tensor of dimension

can be constructed in the following way:

where

Consequently, the output signal becomes

where

and

(with

) are the frontal slices of

and

, respectively, while

and

denote two long vectors, each of them having

elements. Hence, the output signal can be expressed as

In this framework, the aim is to estimate the global impulse response

. For that, first we define the error signal:

where

represents the estimated signal, obtained using the impulse response

of length

.

Based on Equation (16), let us consider the mean-squared error (MSE) optimization criterion, that is, the minimization of the cost function:

where

denotes mathematical expectation. Using Equation (16) in Equation (17), together with the notation

(the variance of the reference signal),

(the cross-correlation vector between the input and the reference signals) and

(the covariance matrix of the input signal), the cost function can be developed as

By minimizing Equation (18), we obtain the well-known Wiener filter:

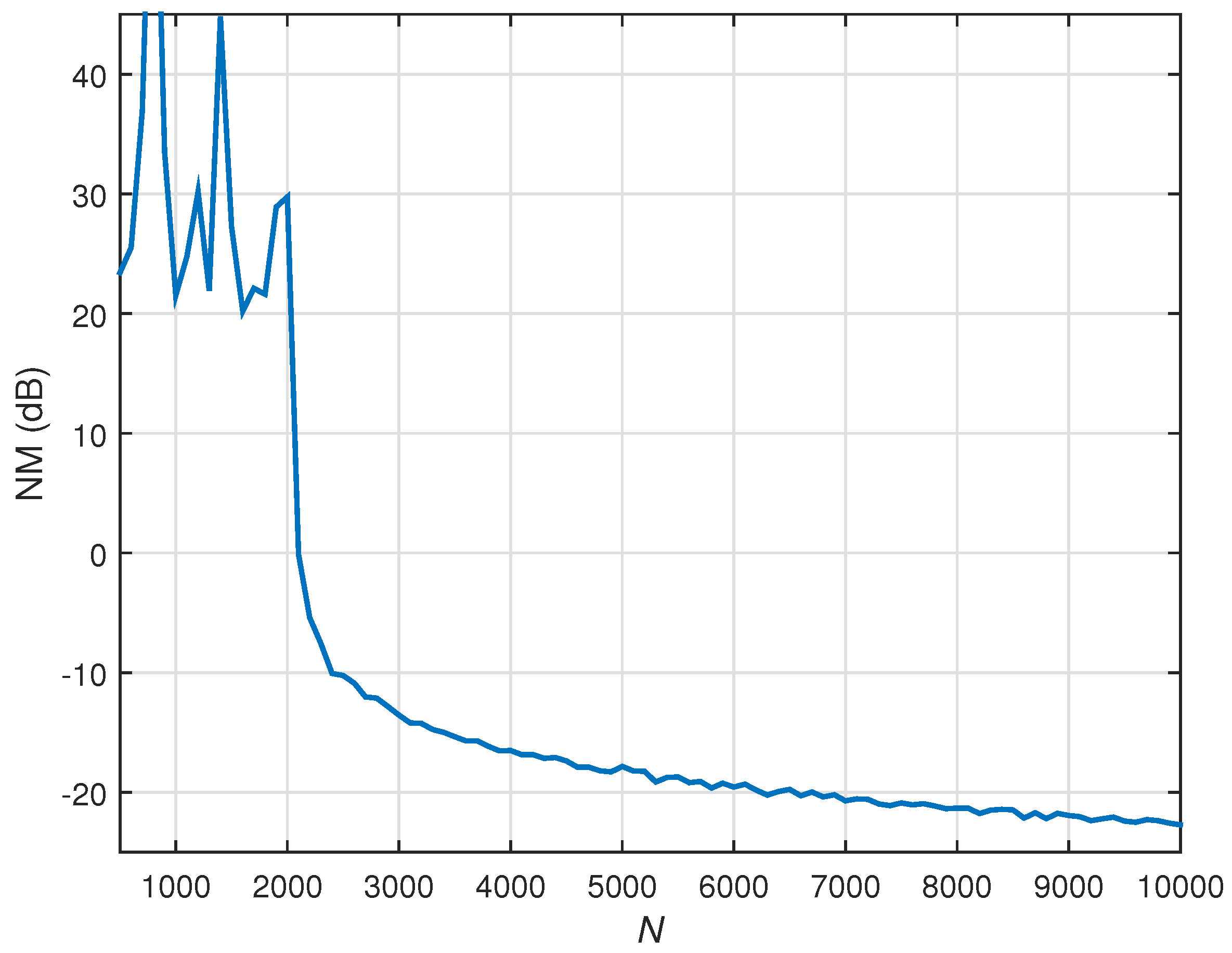

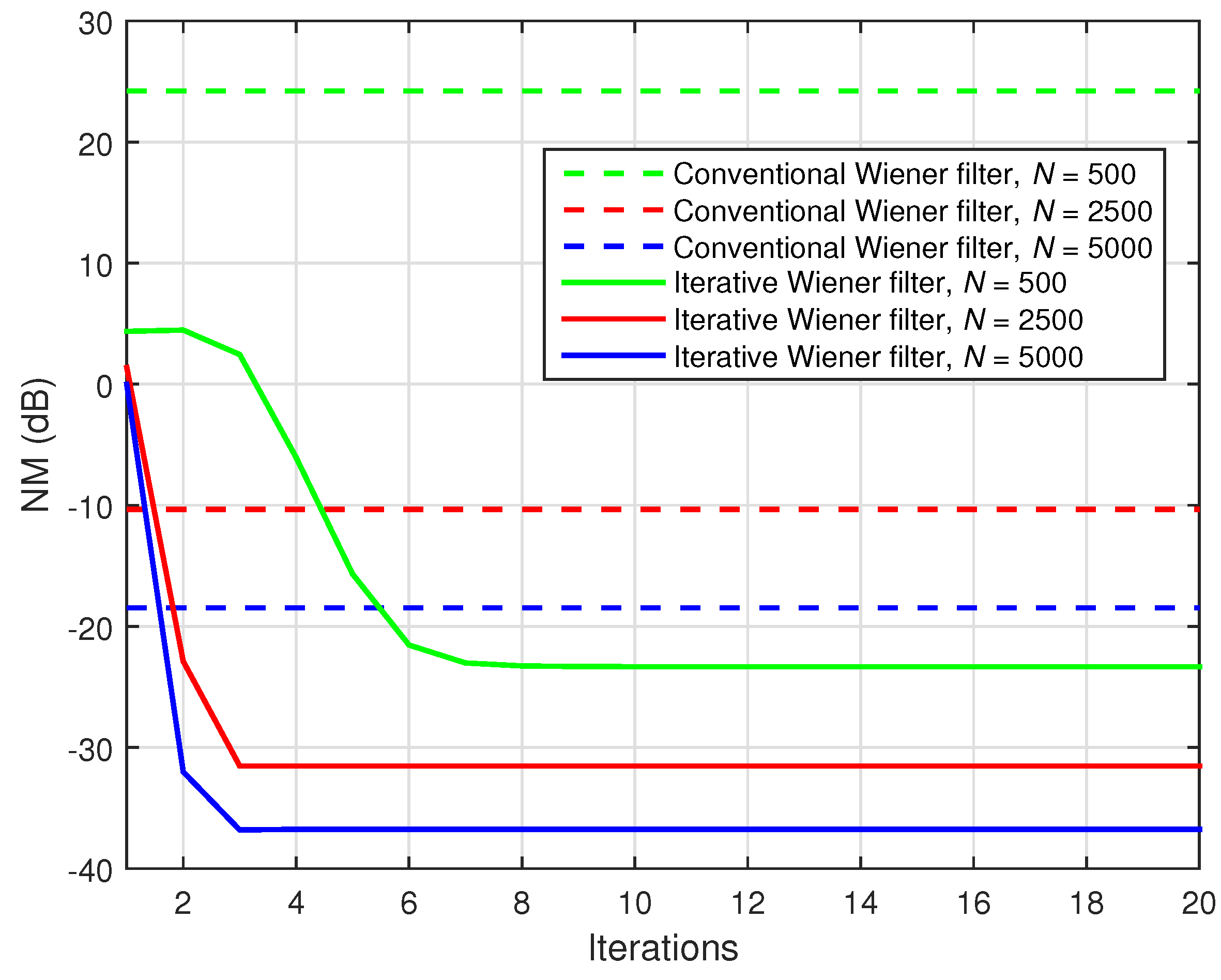

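As we can see, the covariance matrix is square, with each dimension equal to the length of the global impulse response (i.e., the product of the lengths of the three individual impulse responses), thus requiring a large amount of data (many more samples than this length) to obtain a good estimate of it. Furthermore, this matrix could be very ill-conditioned because of its huge size. As a result, the solution will be very inaccurate, to say the least, in practice.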

On the other hand, as we notice from Equation (13), the global impulse response

(with

coefficients) results based on a combination of the shorter impulse responses

, with

,

, and

coefficients, respectively. In fact, we only need

different elements to form

, even though this global impulse response is of length

. This represents the motivation behind an alternative approach to the conventional Wiener solution. Similar to Equation (13), the estimate of the global system can be decomposed as

where

are three impulse responses of lengths

,

, and

, respectively, which represent the estimates of the individual impulse responses

. Nevertheless, we should note that there is no unique solution related to the decomposition in Equation (20), since for any constants

, and

, with

, we have

. Consequently,

also represents a set of solutions for our problem. Nevertheless, the global system impulse response—

—can be identified with no scaling ambiguity.
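The scaling ambiguity can be illustrated with a short NumPy check. The sketch assumes that the global impulse response is the Kronecker product of the three individual responses (the ordering used in `global_response` is an assumption, and the property holds for any ordering); the vectors and scaling constants are hypothetical example values.

```python
import numpy as np

# Hypothetical individual impulse responses.
h1 = np.array([1.0, -0.5, 0.25])
h2 = np.array([0.8, 0.2])
h3 = np.array([1.0, 0.5])


def global_response(g1, g2, g3):
    """Global impulse response as the Kronecker product g3 (x) g2 (x) g1."""
    return np.kron(g3, np.kron(g2, g1))


# Any scaling constants whose product equals 1.
eta1, eta2 = 2.0, -4.0
eta3 = 1.0 / (eta1 * eta2)

h = global_response(h1, h2, h3)
h_scaled = global_response(eta1 * h1, eta2 * h2, eta3 * h3)

# The individual filters differ, but the global response is unchanged.
same_global = np.allclose(h, h_scaled)
individual_differs = not np.allclose(h1, eta1 * h1)
```

This is why performance is naturally measured on the global impulse response, while the individual estimates are only determined up to scaling factors.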

Next, we propose an iterative alternative to the conventional Wiener filter, following the decomposition from Equation (20). First, we can easily verify that

where

is the identity matrix of size

, for

. Based on the previous relations, the cost function from Equation (18) can be expressed in three different ways. For example, using Equation (21), we obtain

When

and

are fixed, we can rewrite Equation (24) as

where

In this case, the partial cost function from Equation (25) is a convex one and can be minimized with respect to

.

Similarly, using Equations (22) and (23), the cost function from Equation (18) becomes

Also, when

and

are fixed, Equation (28) becomes

where

while when

and

are fixed, the cost function from Equation (29) results in

where

In both cases, the partial cost functions from Equations (30) and (33) can be minimized with respect to

and

, respectively.

The previous procedure suggests an iterative approach. To start the algorithm, a set of initial values should be provided for two of the estimated impulse responses. For example, we can choose

and

. Hence, based on Equations (26) and (27), one may compute

In the first iteration, the first cost function to be minimized results from Equation (25) (using Equations (36) and (37)), that is,

which leads to the solution

. Also, since

and

are now available, we can evaluate (based on Equations (31) and (32))

so that the cost function from Equation (30) becomes

while its minimization leads to

. Finally, using the solutions

and

, we can find

in a similar manner. First, we evaluate (based on Equations (34) and (35))

Then, we minimize the cost function (which results from Equation (33)):

which leads to the solution

. Continuing the iterative procedure, at iteration number n, we obtain the estimates of the impulse responses based on the following steps:

Thus, the global impulse response at iteration n results in

The proposed iterative Wiener filter for trilinear forms represents an extension of the solution presented in Reference [7] (in the context of bilinear forms). However, when the MISO system identification problem is based on Equation (10), it is more advantageous to use the algorithm tailored for trilinear forms instead of reformulating the problem in terms of multiple bilinear forms. The trilinear approach has some similarities with that introduced in Reference [33]. However, the batch Trilinear Wiener-Hopf (TriWH) algorithm from Reference [33] is more related to an adaptive approach, since the statistics are estimated within the algorithm. On the other hand, in the case of our iterative Wiener filter, the estimates of the statistics are considered to be a priori available (see also the related discussion in the next section), which is basically in the spirit of the Wiener filter.
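The alternating procedure of this section can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the global response is taken as the Kronecker product of the three filters (third, second, first), the second and third filters are initialized with constant vectors, and the function name `iterative_wiener_trilinear` and the helper `toeplitz_cov` are hypothetical. The statistics `R` (covariance matrix) and `p` (cross-correlation vector) are assumed to be available a priori. In the demo, `R` is deliberately given a Kronecker structure only so that the result is easy to verify.

```python
import numpy as np


def col(v):
    """Reshape a 1-D array into a column matrix."""
    return v[:, None]


def iterative_wiener_trilinear(R, p, L1, L2, L3, n_iter=5):
    """Alternately minimize the MSE over each filter, the other two fixed."""
    # Assumed initialization: constant vectors for the second and third filters.
    h2 = np.ones(L2) / L2
    h3 = np.ones(L3) / L3
    I1, I2, I3 = np.eye(L1), np.eye(L2), np.eye(L3)
    for _ in range(n_iter):
        # Fix h2, h3: the global response is A1 @ h1, so solve a small system.
        A1 = np.kron(np.kron(col(h3), col(h2)), I1)
        h1 = np.linalg.solve(A1.T @ R @ A1, A1.T @ p)
        # Fix h1, h3 and solve for h2.
        A2 = np.kron(np.kron(col(h3), I2), col(h1))
        h2 = np.linalg.solve(A2.T @ R @ A2, A2.T @ p)
        # Fix h1, h2 and solve for h3.
        A3 = np.kron(np.kron(I3, col(h2)), col(h1))
        h3 = np.linalg.solve(A3.T @ R @ A3, A3.T @ p)
    return h1, h2, h3


def toeplitz_cov(L, rho=0.5):
    """Example covariance matrix with entries rho**|i - j|."""
    idx = np.arange(L)
    return rho ** np.abs(idx[:, None] - idx[None, :])


# Hypothetical true system and exact (noise-free) statistics.
h1_true = np.array([1.0, 0.5, 0.25, 0.125])
h2_true = np.array([0.5, 1.0, 0.5])
h3_true = np.array([1.0, 0.3])
h_true = np.kron(h3_true, np.kron(h2_true, h1_true))
R = np.kron(toeplitz_cov(2), np.kron(toeplitz_cov(3), toeplitz_cov(4)))
p = R @ h_true

h1, h2, h3 = iterative_wiener_trilinear(R, p, 4, 3, 2)
h_hat = np.kron(h3, np.kron(h2, h1))
```

Each step inverts only a matrix whose size is the length of one individual filter, instead of the full covariance matrix. The individual estimates may differ from the true filters by scaling factors, so the comparison is made on the global response `h_hat`.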

4. LMS and NLMS Algorithms for Trilinear Forms

It is well known that the Wiener filter has several limitations that may make it unsuitable for practical use (e.g., the matrix inversion operation, the correlation matrix estimation, etc.). For this reason, a more convenient way of treating the system identification problem is through adaptive filtering. One of the simplest adaptive algorithms is the LMS, which is presented in the following, tailored for the new trilinear form approach.

First, let us consider the three estimated impulse responses

, together with the corresponding a priori error signals:

where

It can be checked that

. In this context, the LMS updates for the three filters are the following:

where

represent the step-size parameters. Relations (49)–(51) define the LMS algorithm for trilinear forms, namely LMS-TF.

For the initialization of the estimated impulse responses, we use

In the end, we can obtain the global filter using Relation (20). Alternatively, this global impulse response may be identified directly with the regular LMS algorithm by using the following update:

where

and

is the global step-size parameter.
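The LMS-TF recursion described above can be sketched as follows. This is an illustrative sketch, not the authors' code: each filter is updated with the input "seen" by that filter when the other two are frozen, and all three updates share the same a priori error. The function name `lms_tf`, the initialization (a unit first tap for the first filter and constant vectors for the other two), the step sizes, and the simulated system are assumptions made for this example.

```python
import numpy as np


def lms_tf(X, d, mu=(0.01, 0.01, 0.01)):
    """LMS for trilinear forms (sketch). X: (N, L1, L2, L3), d: (N,)."""
    N, L1, L2, L3 = X.shape
    # Assumed initialization of the three estimated filters.
    h1 = np.zeros(L1)
    h1[0] = 1.0
    h2 = np.ones(L2) / L2
    h3 = np.ones(L3) / L3
    for t in range(N):
        Xt = X[t]
        # Input tensor contracted with the two filters that are kept fixed.
        x1 = np.einsum("ijk,j,k->i", Xt, h2, h3)
        x2 = np.einsum("ijk,i,k->j", Xt, h1, h3)
        x3 = np.einsum("ijk,i,j->k", Xt, h1, h2)
        # Common a priori error (identical for the three filters).
        e = d[t] - h1 @ x1
        h1 = h1 + mu[0] * x1 * e
        h2 = h2 + mu[1] * x2 * e
        h3 = h3 + mu[2] * x3 * e
    return h1, h2, h3


# Hypothetical system and white Gaussian input tensors.
rng = np.random.default_rng(0)
h1_true = np.array([1.0, 0.5, 0.25, 0.125])
h2_true = np.array([0.5, 1.0, 0.5])
h3_true = np.array([1.0, 0.3])
N = 10000
X = rng.standard_normal((N, 4, 3, 2))
y = np.einsum("tijk,i,j,k->t", X, h1_true, h2_true, h3_true)
d = y + 0.01 * rng.standard_normal(N)      # small additive noise

h1, h2, h3 = lms_tf(X, d)
h_true = np.kron(h3_true, np.kron(h2_true, h1_true))
h_hat = np.kron(h3, np.kron(h2, h1))
misalignment = np.linalg.norm(h_true - h_hat) / np.linalg.norm(h_true)
```

The three updates operate on vectors of 4, 3, and 2 coefficients, whereas a regular LMS filter for the same system would adapt all 24 coefficients of the global response.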

However, an observation needs to be made regarding the update in Relation (55): this involves the presence of an adaptive filter of length

, whereas the LMS-TF algorithm, which is defined by the update Relations (49)–(51), uses three shorter filters of lengths

,

, and

, respectively. Basically, a system identification problem of size

(as in the regular approach) was reformulated in terms of three shorter filters of lengths

,

, and

. Taking into account that we usually have

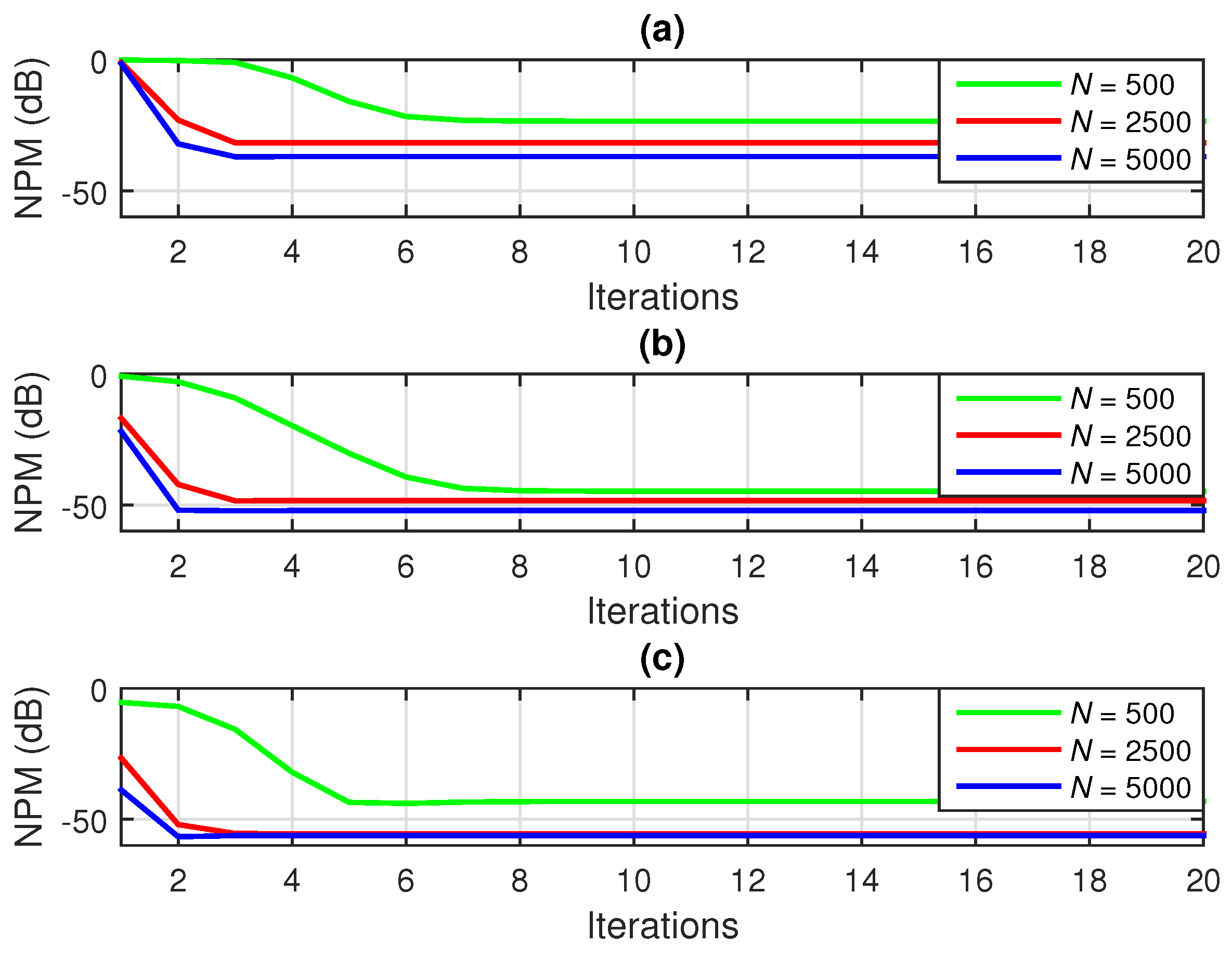

, the advantage of the trilinear approach (in terms of reducing the complexity) could be important. Therefore, the complexity of this new approach is lower and the convergence rate is expected to be faster.

The step-size parameters in Relations (49)–(51) take constant values, chosen such that they ensure the convergence of the algorithm and a good compromise between convergence speed and steady-state misadjustment. Nevertheless, when dealing with non-stationary signals, it may be more appropriate to use time-dependent step-sizes, which lead to the following update relations:

To derive the expressions of the step-size parameters, we take into consideration the stability conditions and aim to cancel the following expressions, which represent the a posteriori error signals [34]:

By replacing Relation (49) in (60), Relation (50) in (61), and Relation (51) in (62), respectively, and by imposing the conditions

,

, and

, we obtain that

Consequently, assuming that

,

, and

, the following expressions for the step-size parameters result:

In order to obtain a good balance between convergence rate and misadjustment, three positive constants,

,

, and

, are employed [35]. In addition, three regularization constants

,

, and

, usually chosen to be proportional to the variance of the input signal [36], are added to the denominators of the step-size parameters. Finally, the updates of the NLMS algorithm for trilinear forms (NLMS-TF) become

The initializations of the estimated filters may be the same as Equations (52)–(54). In a similar way as for the LMS algorithm, the global impulse response can be identified using the regular NLMS:

where

is given in Equation (56). The parameters

and

represent the normalized step-size parameter and the regularization constant for the global filter, respectively. As previously shown in Reference [9] for bilinear forms, the global misalignment can be controlled by constraining the sum of the normalized step-sizes, which should be smaller than 1. In this way, for different values of

,

,

fulfilling this condition, the misalignment of the global filter is the same. On the other hand, in the case when

, the three filters achieve the same level of the misalignment.
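A normalized variant of the trilinear recursion can be sketched as follows. This is an illustrative sketch rather than the authors' implementation: each update is divided by the regularized energy of the input seen by the corresponding filter, and the three normalized step sizes are chosen equal with a sum below 1, in line with the constraint discussed above. The function name `nlms_tf`, the initialization, the values of `alpha` and `delta`, and the simulated system are assumptions made for this example.

```python
import numpy as np


def nlms_tf(X, d, alpha=(0.2, 0.2, 0.2), delta=(0.1, 0.1, 0.1)):
    """NLMS for trilinear forms (sketch). X: (N, L1, L2, L3), d: (N,)."""
    N, L1, L2, L3 = X.shape
    # Assumed initialization of the three estimated filters.
    h1 = np.zeros(L1)
    h1[0] = 1.0
    h2 = np.ones(L2) / L2
    h3 = np.ones(L3) / L3
    for t in range(N):
        Xt = X[t]
        # Input tensor contracted with the two filters that are kept fixed.
        x1 = np.einsum("ijk,j,k->i", Xt, h2, h3)
        x2 = np.einsum("ijk,i,k->j", Xt, h1, h3)
        x3 = np.einsum("ijk,i,j->k", Xt, h1, h2)
        e = d[t] - h1 @ x1                     # common a priori error
        # Normalized updates with regularized denominators.
        h1 = h1 + alpha[0] * x1 * e / (delta[0] + x1 @ x1)
        h2 = h2 + alpha[1] * x2 * e / (delta[1] + x2 @ x2)
        h3 = h3 + alpha[2] * x3 * e / (delta[2] + x3 @ x3)
    return h1, h2, h3


# Hypothetical system and white Gaussian input tensors.
rng = np.random.default_rng(0)
h1_true = np.array([1.0, 0.5, 0.25, 0.125])
h2_true = np.array([0.5, 1.0, 0.5])
h3_true = np.array([1.0, 0.3])
N = 5000
X = rng.standard_normal((N, 4, 3, 2))
y = np.einsum("tijk,i,j,k->t", X, h1_true, h2_true, h3_true)
d = y + 0.01 * rng.standard_normal(N)          # small additive noise

h1, h2, h3 = nlms_tf(X, d)
h_true = np.kron(h3_true, np.kron(h2_true, h1_true))
h_hat = np.kron(h3, np.kron(h2, h1))
misalignment = np.linalg.norm(h_true - h_hat) / np.linalg.norm(h_true)
```

Because of the scaling ambiguity between the individual filters, the misalignment is evaluated on the global response `h_hat` rather than on `h1`, `h2`, and `h3` separately.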

Again, we notice that the global impulse response identification involves a filter whose length is the product of the three individual filter lengths. Because the trilinear approach uses three much shorter impulse responses, it is expected that this new solution will yield a faster convergence. This will be shown through simulations.

The NLMS-TF algorithm proposed here is similar to that presented in Reference [19]. However, our choice of the system impulse responses used in simulations is different from that in Reference [19]: we aim to show the performance of the algorithm in a scenario that includes a real echo path. In addition, we also study the tracking capability of the algorithm.