Abstract

Software plays a crucial role in computer systems and is used in a wide variety of environments. It is typically developed and tested in a controlled environment, whereas real-world operating environments may differ. Accordingly, the uncertainty of the operating environment must be considered. Moreover, predicting software failures is an important topic not only for software developers, but also for companies and research institutes. A software reliability model can measure and predict the number of software failures, software failure intervals, software reliability, and failure rates. In this paper, we propose a new non-homogeneous Poisson process (NHPP) software reliability model with an inflection factor in the fault detection rate function that considers the uncertainty of operating environments, and we analyze how its predicted values differ from those of other models. We compare the proposed model with several existing NHPP software reliability models on real software failure datasets using ten goodness-of-fit criteria and one predictive criterion. The results show that the proposed new model has better goodness-of-fit and predictability than the other models.

1. Introduction

The core technologies of the fourth industrial revolution, such as artificial intelligence (AI), big data, and the Internet of Things (IoT), are implemented in software, and software is essential as a mediator that creates new value by fusing these technologies across all industries. As the importance and role of software in computer systems keep growing, a fatal software error can cause significant damage. For software to operate effectively, it is imperative to reduce the possibility of software failures and to maintain a high level of reliability. Software reliability is defined as the probability that the software will run without a failure for a certain period. It is therefore vital to develop techniques and theories that improve software reliability. However, developing a software system is a difficult and complex process, so a main focus of software development is improving the reliability and stability of the system. The number of software failures and the time interval between failures have a significant influence on the reliability of software. Consequently, the prediction of software failures is a research field that is important not only for software developers, but also for companies and research institutes.

Software reliability models can be classified according to the phase of the software development cycle in which they are applied. Before the testing phase, a reliability prediction model is used, which predicts reliability from information such as past data, the language used, the development domain, complexity, and architecture. After the testing phase, a software reliability model is used, which is a mathematical model of software failures in terms of quantities such as the frequency of failures and the failure interval times. Such a model makes it easier to evaluate software reliability using the fault data collected in the test or operating environment. In addition, the model can be used to estimate and predict the number of software failures, the software failure interval, the software reliability, and the failure rate.

Although various types of software reliability models have been studied, software defects and failures generally do not occur at regular time intervals. For this reason, non-homogeneous Poisson process (NHPP) software reliability models were developed. NHPP models treat software reliability mathematically and are used extensively because of their potential for various applications. Most previous NHPP software reliability models were developed under the assumptions that faults detected in the testing phase are removed immediately with no debugging time delay, that no new faults are introduced, and that software systems used in field environments are the same as, or close to, those used in the development and testing environment. Under these assumptions, Goel and Okumoto [1] presented a stochastic model for the software failure phenomenon using an NHPP; this model describes the failure observation phenomenon by an exponential curve. Other software reliability models describe either S-shaped curves or a mixture of exponential and S-shaped curves [2,3,4]. As the Internet became popular in the mid-1990s and industrial structures and environments changed rapidly, software reliability models that account for a variety of operating environments began to be studied. In the early 2000s, researchers began to try new approaches that consider the uncertainty of the operating environment, such as the application of calibration factors [5,6,7]. Building on this work, Teng and Pham [8] generalized the software reliability model by considering the uncertainty of the environment and its effects on software failure rates. More recently, Inoue et al. [9] proposed a software reliability model with the uncertainty of testing environments. Li and Pham [10,11] proposed NHPP software reliability models considering fault removal efficiency and error generation, and the uncertainty of operating environments with imperfect debugging and testing coverage. Song et al. [12,13,14,15] studied NHPP software reliability models with various fault detection rates considering the uncertainty of operating environments. Zhu and Pham [16] proposed an NHPP software reliability model that considers software fault dependency and imperfect fault removal. However, the earlier NHPP software reliability models [1,2,3,4,17,18,19,20,21,22,23,24,25] did not take into account the uncertainty of the software operating environment, and the models in [8,9,10,11,13,14,26,27] did not consider the learning curve in the fault detection rate function.

In this paper, we propose a new model with an inflection factor in the fault detection rate function that considers the uncertainty of operating environments, and we carry out a predictive analysis. We examine the goodness-of-fit and the predictability of the new software reliability model and of existing NHPP models on several datasets. The explicit solution of the mean value function for the new software reliability model is derived in Section 2. Parameter estimation and the criteria for model comparison, prediction, and selection of the best model are discussed in Section 3. Model analysis and results from numerical examples are discussed in Section 4. Section 5 presents conclusions and remarks.

2. NHPP Software Reliability Modeling

2.1. A General NHPP Software Reliability Model

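$N(t)$ $(t \ge 0)$ represents the cumulative number of failures up to execution time $t$ when the software failures/defects follow the NHPP:

$$\Pr\{N(t) = n\} = \frac{\{m(t)\}^{n}}{n!}\, e^{-m(t)}, \quad n = 0, 1, 2, \ldots$$

Assuming that $m(t)$ is a mean value function, the relationship between the mean value function and the intensity function $\lambda(t)$ is

$$m(t) = \int_{0}^{t} \lambda(s)\, ds.$$

A general mean value function of NHPP software reliability models using the differential equation is as follows [19]:

$$\frac{dm(t)}{dt} = b(t)\left[a(t) - m(t)\right], \tag{1}$$

where $a(t)$ is the total fault content function and $b(t)$ is the fault detection rate function.

Solving Equation (1) with different functions $a(t)$ and $b(t)$ yields the following mean value function $m(t)$ [19]:

$$m(t) = e^{-B(t)}\left[m_{0} + \int_{t_{0}}^{t} a(s)\, b(s)\, e^{B(s)}\, ds\right], \tag{2}$$

where $B(t) = \int_{t_{0}}^{t} b(s)\, ds$, and $m(t_{0}) = m_{0}$ is the marginal condition of Equation (2), with $t_{0}$ representing the start time of the testing process.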
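As a quick numerical check of the relationship between the differential equation and its closed-form solution, the sketch below integrates $dm/dt = b(t)[a(t) - m(t)]$ with a classical fourth-order Runge-Kutta scheme and compares the result with the closed form for the simplest case of constant $a(t) = a$ and $b(t) = b$, for which the solution with $m(0) = 0$ is the exponential curve $a(1 - e^{-bt})$ of the Goel-Okumoto model [1]. The function name `solve_mean_value` and the parameter values are illustrative assumptions, not values from this paper.

```python
import math

def solve_mean_value(a_fn, b_fn, t_end, steps=1000, m0=0.0, t0=0.0):
    """Integrate dm/dt = b(t) * (a(t) - m(t)) from t0 to t_end with classical RK4."""
    h = (t_end - t0) / steps
    t, m = t0, m0

    def slope(time, value):
        return b_fn(time) * (a_fn(time) - value)

    for _ in range(steps):
        k1 = slope(t, m)
        k2 = slope(t + h / 2, m + h * k1 / 2)
        k3 = slope(t + h / 2, m + h * k2 / 2)
        k4 = slope(t + h, m + h * k3)
        m += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += h
    return m

# Illustrative (hypothetical) parameter values: constant fault content and detection rate.
A_TOTAL, B_RATE, T_END = 100.0, 0.1, 20.0
numeric = solve_mean_value(lambda t: A_TOTAL, lambda t: B_RATE, T_END)
closed_form = A_TOTAL * (1.0 - math.exp(-B_RATE * T_END))
print(numeric, closed_form)
```

The same routine accepts time-dependent `a_fn` and `b_fn`, which is useful when no closed form is available.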

2.2. A New NHPP Software Reliability Model

A general mean value function of NHPP software reliability models that considers the uncertainty of operating environments is given by the following differential equation [26]:

$$\frac{dm(t)}{dt} = \eta\, b(t)\left[N - m(t)\right], \tag{3}$$

where $m(t)$ is the mean value function, $b(t)$ is the fault detection rate function, $N$ is the expected number of faults that exist in the software before testing, and $\eta$ is a random variable that represents the uncertainty of the system fault detection rate in the operating environments with a probability density function $g$ [26],

$$m(t) = \int_{\eta} N\left(1 - e^{-\eta \int_{0}^{t} b(s)\, ds}\right) dg(\eta). \tag{4}$$

We find the following mean value function from the differential equation by applying the random variable $\eta$, which has a generalized probability density function $g$ with two parameters $\alpha \ge 0$ and $\beta \ge 0$, under the initial condition $m(0) = 0$:

$$m(t) = N\left(1 - \left(\frac{\beta}{\beta + \int_{0}^{t} b(s)\, ds}\right)^{\alpha}\right). \tag{5}$$
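The step from the differential equation to the closed form can be made explicit under one assumption: if the density of the random variable is taken to be a gamma density with shape $\alpha$ and rate $\beta$, then conditional on $\eta$ the solution with $m(0) = 0$ is $N(1 - e^{-\eta B(t)})$ with $B(t) = \int_0^t b(s)\,ds$, and averaging over $\eta$ uses $E[e^{-\eta B}] = (\beta / (\beta + B))^{\alpha}$, which gives $m(t) = N\left(1 - (\beta/(\beta + B(t)))^{\alpha}\right)$. The sketch below evaluates this form for an arbitrary detection rate by numerical integration. The function names and parameter values are hypothetical.

```python
def cumulative_rate(b_fn, t, steps=2000):
    """Composite Simpson approximation of the integral of b(s) over [0, t] (steps must be even)."""
    if t == 0:
        return 0.0
    h = t / steps
    total = b_fn(0.0) + b_fn(t)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * b_fn(i * h)
    return total * h / 3

def mean_value(t, n_faults, alpha, beta, b_fn):
    """m(t) = N * (1 - (beta / (beta + B(t)))**alpha), assuming gamma(alpha, beta) mixing."""
    big_b = cumulative_rate(b_fn, t)
    return n_faults * (1.0 - (beta / (beta + big_b)) ** alpha)

# Hypothetical values: constant rate b = 0.5, so B(8) = 4 and beta / (beta + B) = 0.5.
example = mean_value(8.0, n_faults=50.0, alpha=2.0, beta=4.0, b_fn=lambda s: 0.5)
print(example)
```

With a constant rate the integral is exact, so the example can be checked by hand: $50\,(1 - 0.5^2) = 37.5$.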

In this paper, we consider a fault detection rate function to be as follows:

where is the failure detection rate and represents the inflection factor.

We obtain a new mean value function of the NHPP software reliability model subject to the uncertainty of operating environments, which can be used to determine the expected number of software failures detected by time $t$, by substituting the function $b(t)$ into Equation (5):

The advantage of the proposed new model is that it takes into account both the learning curve in the fault detection rate function and the uncertainty of the operating environments.
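To illustrate what a learning curve in the detection rate looks like, the sketch below uses an assumed logistic-type rate $b(t) = b / (1 + c\,e^{-bt})$, the form familiar from inflection S-shaped models such as [3]. This is an illustrative assumption and may differ from the exact function used in this paper; the symbols `b` and `c` are hypothetical. For this form the integral has the closed form $\int_0^t b(s)\,ds = \ln\left((c + e^{bt})/(1 + c)\right)$, so the rate starts at $b/(1+c)$ and rises towards $b$ as testers learn.

```python
import math

def detection_rate(t, b, c):
    """Assumed inflection-type rate b / (1 + c * exp(-b t)); rises from b/(1+c) to b."""
    return b / (1.0 + c * math.exp(-b * t))

def cumulative_detection(t, b, c):
    """Closed-form integral of detection_rate over [0, t]."""
    return math.log((c + math.exp(b * t)) / (1.0 + c))

def mean_value_inflection(t, n_faults, alpha, beta, b, c):
    """Gamma-mixed mean value function N * (1 - (beta / (beta + B(t)))**alpha)."""
    big_b = cumulative_detection(t, b, c)
    return n_faults * (1.0 - (beta / (beta + big_b)) ** alpha)

# Hypothetical parameter values, chosen only to show the shape of the curve.
for week in (0, 5, 10, 20):
    print(week, round(detection_rate(week, 0.4, 3.0), 4),
          round(mean_value_inflection(week, 30.0, 1.5, 2.0, 0.4, 3.0), 3))
```

Setting `c = 0` removes the inflection and recovers a constant detection rate, which makes the role of the inflection factor easy to isolate.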

3. Parameter Estimation and Criteria for Model Comparisons

3.1. Parameter Estimation and Models for Comparison

Many NHPP software reliability models use least squares estimation (LSE) or maximum likelihood estimation (MLE) to estimate the parameters. However, if the expression of the mean value function of the software reliability model is too complicated, an accurate estimate may not be obtained from the MLE method. Here, we estimated the parameters of the mean value function using MATLAB and R programs based on the LSE method. Table 1 summarizes the mean value functions of the existing NHPP software reliability models and the proposed new model; among them, NHPP software reliability models 18, 19, and 20 consider the uncertainty of the environment.
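The LSE idea can be sketched on the simplest model in the comparison, the Goel-Okumoto curve $m(t) = a(1 - e^{-bt})$ [1]. This is not the MATLAB or R code used for the paper; it is a minimal standard-library Python illustration on synthetic, noise-free data generated from hypothetical parameters. For a fixed $b$ the least-squares $a$ has a closed form, so the search reduces to one dimension: a coarse grid brackets the minimum and a golden-section search refines it.

```python
import math

def go_mean(t, a, b):
    """Goel-Okumoto mean value function m(t) = a * (1 - exp(-b * t))."""
    return a * (1.0 - math.exp(-b * t))

def profile_sse(b, times, counts):
    """For a fixed b, the least-squares a is closed-form; return (SSE, a)."""
    shape = [1.0 - math.exp(-b * t) for t in times]
    a = sum(y * s for y, s in zip(counts, shape)) / sum(s * s for s in shape)
    sse = sum((a * s - y) ** 2 for y, s in zip(counts, shape))
    return sse, a

def fit_go_lse(times, counts, b_lo=1e-4, b_hi=5.0, grid_size=200, iters=100):
    # Coarse log-spaced grid over b to bracket the minimum of the profile SSE.
    grid = [b_lo * (b_hi / b_lo) ** (i / (grid_size - 1)) for i in range(grid_size)]
    best = min(range(grid_size), key=lambda i: profile_sse(grid[i], times, counts)[0])
    lo, hi = grid[max(best - 1, 0)], grid[min(best + 1, grid_size - 1)]
    # Golden-section refinement inside the bracket.
    ratio = (math.sqrt(5.0) - 1.0) / 2.0
    for _ in range(iters):
        x1 = hi - ratio * (hi - lo)
        x2 = lo + ratio * (hi - lo)
        if profile_sse(x1, times, counts)[0] < profile_sse(x2, times, counts)[0]:
            hi = x2
        else:
            lo = x1
    b = 0.5 * (lo + hi)
    sse, a = profile_sse(b, times, counts)
    return a, b, sse

# Synthetic data (not from any dataset in this paper): 21 weekly points, a = 40, b = 0.15.
times = list(range(1, 22))
counts = [go_mean(t, 40.0, 0.15) for t in times]
a_hat, b_hat, sse = fit_go_lse(times, counts)
print(a_hat, b_hat, sse)
```

Models with more parameters, such as those that account for operating-environment uncertainty, generally need a multi-dimensional optimizer and several starting points, because the sum of squared errors can have more than one local minimum.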

Table 1.

Software reliability models.

3.2. Criteria for Model Comparison

We use ten criteria to estimate the goodness-of-fit of the proposed model, and use one criterion to compare the predicted values.

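(1) Mean squared error (MSE)

The MSE measures the average of the squares of the errors, that is, the average squared difference between the estimated values and the actual data.

$$\mathrm{MSE} = \frac{\sum_{i=1}^{n} \left(\hat{m}(t_i) - y_i\right)^2}{n - m}$$

(2) Root mean square error (RMSE)

The RMSE is a frequently used measure of the differences between the values predicted by a model or an estimator and the values observed.

$$\mathrm{RMSE} = \sqrt{\frac{\sum_{i=1}^{n} \left(\hat{m}(t_i) - y_i\right)^2}{n - m}}$$

(3) Predictive ratio risk (PRR) [22]

The PRR measures the distance of the model estimates from the actual data relative to the model estimates.

$$\mathrm{PRR} = \sum_{i=1}^{n} \left(\frac{\hat{m}(t_i) - y_i}{\hat{m}(t_i)}\right)^2$$

(4) Predictive power (PP) [22]

The PP measures the distance of the model estimates from the actual data relative to the actual data.

$$\mathrm{PP} = \sum_{i=1}^{n} \left(\frac{\hat{m}(t_i) - y_i}{y_i}\right)^2$$

(5) Akaike’s information criterion (AIC) [28]

The AIC compares the capability of each model in terms of maximizing the likelihood function ($L$), while considering the degrees of freedom.

$$\mathrm{AIC} = -2 \log L + 2m$$

$L$ and $\log L$ are given as follows:

$$L = \prod_{i=1}^{n} \frac{\left(m(t_i) - m(t_{i-1})\right)^{y_i - y_{i-1}}}{(y_i - y_{i-1})!}\, e^{-\left(m(t_i) - m(t_{i-1})\right)}$$

$$\log L = \sum_{i=1}^{n} \left\{ (y_i - y_{i-1}) \log\left(m(t_i) - m(t_{i-1})\right) - \left(m(t_i) - m(t_{i-1})\right) - \log\left((y_i - y_{i-1})!\right) \right\}$$

where $t_0 = 0$ and $y_0 = 0$.

(6) R-square ($R^2$) [10]

The $R^2$ measures how successful the fit is in explaining the variation of the data.

$$R^2 = 1 - \frac{\sum_{i=1}^{n} \left(\hat{m}(t_i) - y_i\right)^2}{\sum_{i=1}^{n} \left(y_i - \bar{y}\right)^2}, \quad \bar{y} = \frac{1}{n} \sum_{i=1}^{n} y_i$$

(7) Adjusted R-square (Adj $R^2$) [10]

The Adjusted $R^2$ is a modification of $R^2$ that adjusts for the number of explanatory terms in a model relative to the number of data points.

$$\mathrm{Adj}\ R^2 = 1 - \frac{(1 - R^2)(n - 1)}{n - m - 1}$$

(8) Sum of absolute errors (SAE) [13]

The SAE measures the total absolute distance between the model estimates and the actual data.

$$\mathrm{SAE} = \sum_{i=1}^{n} \left|\hat{m}(t_i) - y_i\right|$$

(9) Mean absolute errors (MAE) [29]

The MAE measures the deviation of the model using the absolute distance, adjusted for the number of parameters.

$$\mathrm{MAE} = \frac{\sum_{i=1}^{n} \left|\hat{m}(t_i) - y_i\right|}{n - m}$$

(10) Variance [16]

The variance measures the standard deviation of the prediction bias,

$$\mathrm{Variance} = \sqrt{\frac{\sum_{i=1}^{n} \left(y_i - \hat{m}(t_i) - \mathrm{Bias}\right)^2}{n - 1}},$$

where Bias is given as:

$$\mathrm{Bias} = \frac{1}{n} \sum_{i=1}^{n} \left(\hat{m}(t_i) - y_i\right)$$

(11) Sum of squared errors for predicted value (PreSSE) [11]

We use the data points up to time $t_k$ to estimate the parameters of the mean value function $m(t)$, then measure the squared error between the estimated values and the actual data after time $t_k$, where the estimated values are obtained by substituting the estimated parameters into the mean value function.

$$\mathrm{PreSSE} = \sum_{i=k+1}^{n} \left(\hat{m}(t_i) - y_i\right)^2$$

Here, $\hat{m}(t_i)$ is the estimated cumulative number of failures at $t_i$ for $i = 1, 2, \ldots, n$; $y_i$ is the total number of failures observed at time $t_i$; $n$ is the total number of observations; and $m$ is the number of unknown parameters in the model.

The smaller the value of the nine criteria MSE, RMSE, PRR, PP, AIC, SAE, MAE, Variance, and PreSSE (closer to 0), the better the fit of the model. On the other hand, the higher the value of the two criteria $R^2$ and Adj $R^2$ (closer to 1), the better the fit of the model.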
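The goodness-of-fit criteria above can be computed directly from the fitted values and the observed cumulative counts. The following sketch assumes the usual definitions of these criteria (denominators $n - m$ for MSE and MAE, and $n - m - 1$ for Adj $R^2$) and a grouped-data Poisson likelihood for AIC; the function names and the toy numbers are hypothetical and are not taken from the datasets in this paper.

```python
import math

def fit_criteria(estimates, observed, n_params):
    """Goodness-of-fit criteria from fitted cumulative values and observed cumulative counts."""
    n = len(observed)
    errors = [e - y for e, y in zip(estimates, observed)]
    sse = sum(d * d for d in errors)
    mse = sse / (n - n_params)
    y_bar = sum(observed) / n
    r2 = 1.0 - sse / sum((y - y_bar) ** 2 for y in observed)
    sae = sum(abs(d) for d in errors)
    bias = sum(errors) / n
    spread = sum((y - e - bias) ** 2 for e, y in zip(estimates, observed))
    return {
        "MSE": mse,
        "RMSE": math.sqrt(mse),
        "PRR": sum((d / e) ** 2 for d, e in zip(errors, estimates)),
        "PP": sum((d / y) ** 2 for d, y in zip(errors, observed)),
        "R2": r2,
        "AdjR2": 1.0 - (1.0 - r2) * (n - 1) / (n - n_params - 1),
        "SAE": sae,
        "MAE": sae / (n - n_params),
        "Variance": math.sqrt(spread / (n - 1)),
    }

def aic_grouped(estimates, observed, n_params):
    """AIC = -2 log L + 2m with independent Poisson increments between observation times."""
    log_l, prev_m, prev_y = 0.0, 0.0, 0
    for m_i, y_i in zip(estimates, observed):
        dm, dy = m_i - prev_m, y_i - prev_y
        log_l += dy * math.log(dm) - dm - math.lgamma(dy + 1)
        prev_m, prev_y = m_i, y_i
    return -2.0 * log_l + 2.0 * n_params

# Toy example (made-up numbers): four observation times, one-parameter model.
toy_estimates = [2.0, 4.0, 6.0, 8.0]
toy_observed = [1, 4, 7, 8]
criteria = fit_criteria(toy_estimates, toy_observed, n_params=1)
toy_aic = aic_grouped(toy_estimates, toy_observed, n_params=1)
print(criteria, toy_aic)
```

These definitions are consistent with the reported tables if one assumes $m = 5$ parameters for the proposed model: with $n = 21$, $R^2 = 0.9947$ gives Adj $R^2 \approx 0.9929$, and SAE $= 11.3783$ gives MAE $\approx 0.7111$, matching the values quoted for Dataset #1.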

3.3. Confidence Interval

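At each time point $t_i$, it is possible to check whether the value of the mean value function is included in the confidence interval, and how well the confidence interval actually covers the values. We use the following Equation (7) to obtain the confidence interval [22] of the proposed new model and the existing NHPP software reliability models:

$$\hat{m}(t) \pm z_{\alpha/2} \sqrt{\hat{m}(t)}, \tag{7}$$

where $z_{\alpha/2}$ is the $100(1 - \alpha/2)$ percentile of the standard normal distribution; $\alpha$ here denotes the significance level, so that $z_{\alpha/2} \approx 1.96$ for a 95% confidence interval.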
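A minimal sketch of this interval, assuming the normal-approximation form $\hat{m}(t) \pm z\sqrt{\hat{m}(t)}$ with a two-sided quantile $z$; the function name `confidence_band` and the example value are hypothetical.

```python
import math
from statistics import NormalDist

def confidence_band(m_hat, level=0.95):
    """Two-sided normal-approximation interval m_hat +/- z * sqrt(m_hat)."""
    z = NormalDist().inv_cdf(1.0 - (1.0 - level) / 2.0)
    half_width = z * math.sqrt(m_hat)
    return m_hat - half_width, m_hat + half_width

# Hypothetical fitted value of the mean value function at one time point.
lower, upper = confidence_band(25.0)
print(round(lower, 3), round(upper, 3))
```

Because the half-width grows like the square root of the fitted value, the band is relatively wide early in testing, and the lower limit can fall below zero for small fitted values, in which case it is commonly truncated at zero.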

4. Numerical Examples

4.1. Data Information

Datasets #1 and #2 were reported in [22] and are based on system test data for a telecommunication system. Both automated and human-involved tests were executed on multiple test beds. The system records the cumulative number of faults each week. In Datasets #1 and #2, the week index runs from week 1 to week 21, and there are 26 and 43 cumulative failures in 21 weeks, respectively. Datasets #3, #4, and #5 were also reported in [22] and are based on an on-line communication system. Here as well, the system records the cumulative number of faults each week. In Datasets #3, #4, and #5, the week index runs from week 1 to week 12, and there are 26, 55, and 55 cumulative failures in 12 weeks, respectively. Detailed information on all five datasets can be found in [22].

4.2. Results of the Estimated Parameters

Tables 2–6 summarize the parameter estimates obtained using the LSE technique and the values of the ten criteria (MSE, RMSE, PRR, PP, AIC, $R^2$, Adj $R^2$, SAE, MAE, and Variance) for all 21 models in Table 1. For the comparison of goodness-of-fit, we obtained the parameter estimates and the criteria of all models using all data points, that is, $t = 1, 2, \ldots, 21$ for Datasets #1 and #2, and $t = 1, 2, \ldots, 12$ for Datasets #3, #4, and #5. As shown in Tables 2–6, the proposed new model gives the best results on most of the ten criteria when compared with the other models.

Table 2.

Results of Model Parameter Estimation and Criteria for Comparison from Dataset #1.

Table 3.

Results of Model Parameter Estimation and Criteria for Comparison from Dataset #2.

Table 4.

Results of Model Parameter Estimation and Criteria for Comparison from Dataset #3.

Table 5.

Results of Model Parameter Estimation and Criteria for Comparison from Dataset #4.

Table 6.

Results of Model Parameter Estimation and Criteria for Comparison from Dataset #5.

As can be seen from Table 2, the MSE, RMSE, PRR, SAE, MAE, and Variance values for the proposed new model are the lowest among all models in Table 1. The MSE value of the proposed new model is 0.5864, the RMSE value is 0.7658, the PRR value is 0.5024, the SAE value is 11.3783, the MAE value is 0.7111, and the Variance value is 0.6903, all of which are smaller than the corresponding values of the other models. The $R^2$ and Adj $R^2$ values for the proposed new model are the largest among all models: the $R^2$ value is 0.9947 and the Adj $R^2$ value is 0.9929.

From Table 3, we can see that the MSE, RMSE, PRR, PP, SAE, MAE, and Variance values for the proposed new model are the lowest among all models in Table 1. The MSE value of the proposed new model is 0.8470, the RMSE value is 0.9203, the PRR value is 0.1159, the PP value is 0.1355, the SAE value is 14.0367, the MAE value is 0.8773, and the Variance value is 0.8232, all of which are smaller than the corresponding values of the other models. The AIC value is 77.0423, which is the second lowest value. The $R^2$ and Adj $R^2$ values for the proposed new model are the largest among all models: the $R^2$ value is 0.9970 and the Adj $R^2$ value is 0.9960.

As can be seen from Table 4, the MSE, RMSE, PP, AIC, SAE, and Variance values for the proposed new model are the lowest among all models in Table 1. The MSE value of the proposed new model is 4.4412, the RMSE value is 2.1074, the PP value is 0.7376, the AIC value is 54.3482, the SAE value is 15.4691, and the Variance value is 1.7189, all of which are smaller than the corresponding values of the other models. The MAE value is 2.2099, which is the second lowest value. The $R^2$ and Adj $R^2$ values for the proposed new model are the largest among all models: the $R^2$ value is 0.9682 and the Adj $R^2$ value is 0.9416.

As seen from Table 5, the MSE, RMSE, PRR, PP, AIC, SAE, MAE, and Variance values for the proposed new model are the lowest among all models in Table 1. The MSE value of the proposed new model is 6.7120, the RMSE value is 2.5908, the PRR value is 0.1812, the PP value is 0.1363, the AIC value is 70.5195, the SAE value is 18.2230, the MAE value is 2.6033, and the Variance value is 2.0735, all of which are smaller than the corresponding values of the other models. The $R^2$ and Adj $R^2$ values for the proposed new model are the largest among all models: the $R^2$ value is 0.9877 and the Adj $R^2$ value is 0.9774.

As shown in Table 6, the MSE, RMSE, PRR, PP, SAE, MAE, and Variance values for the proposed new model are the lowest among all models in Table 1. The MSE value of the proposed new model is 2.3671, the RMSE value is 1.5385, the PRR value is 0.04121, the PP value is 0.0333, the SAE value is 11.4867, the MAE value is 1.6410, and the Variance value is 1.2284, all of which are smaller than the corresponding values of the other models. The AIC value is 58.7819, which is the second lowest value. The $R^2$ and Adj $R^2$ values for the proposed new model are the largest among all models: the $R^2$ value is 0.9940 and the Adj $R^2$ value is 0.9890.

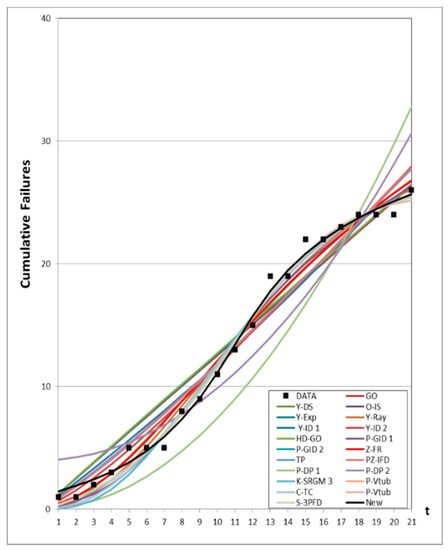

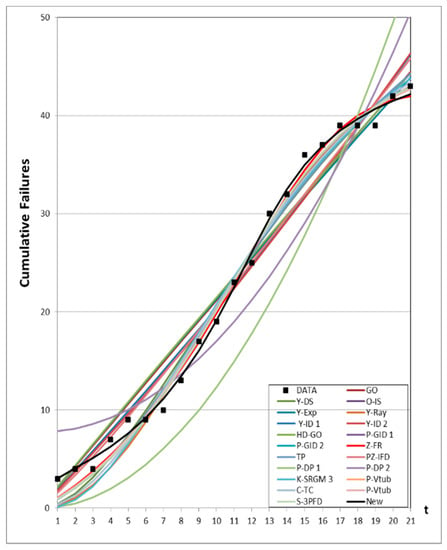

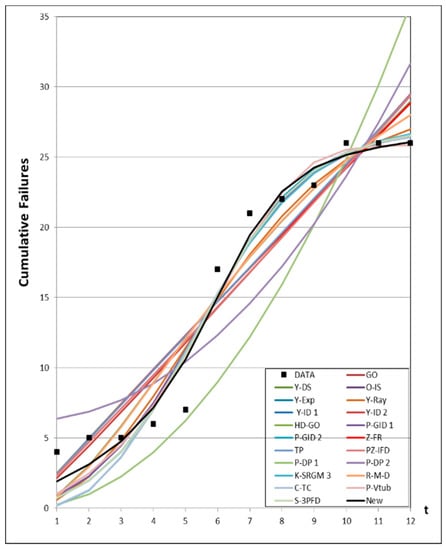

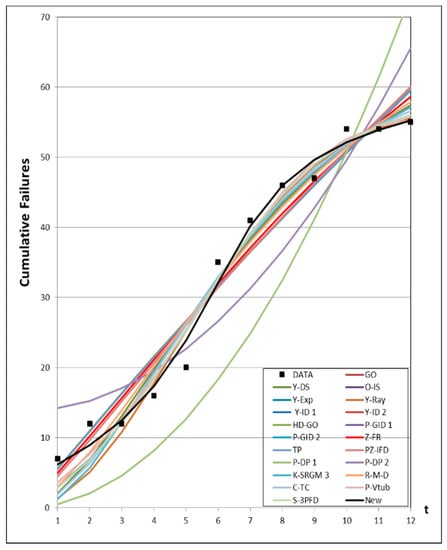

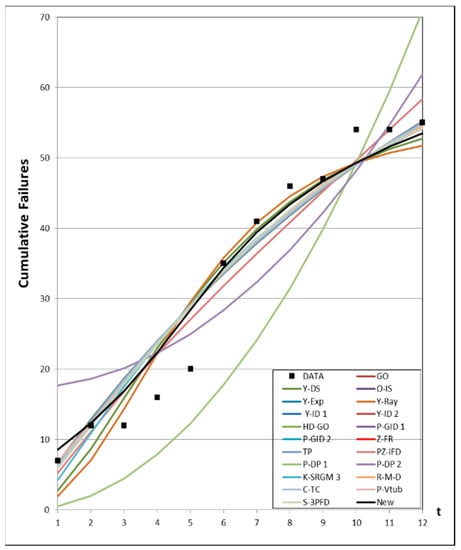

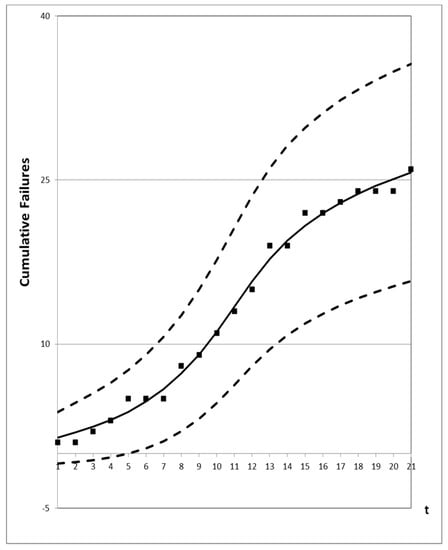

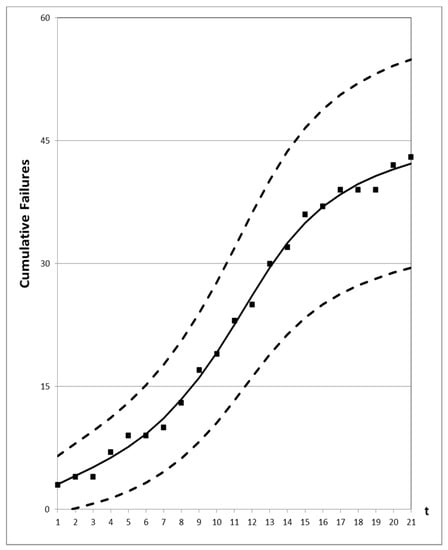

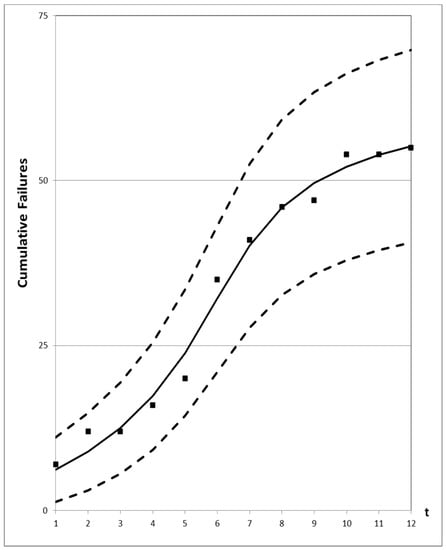

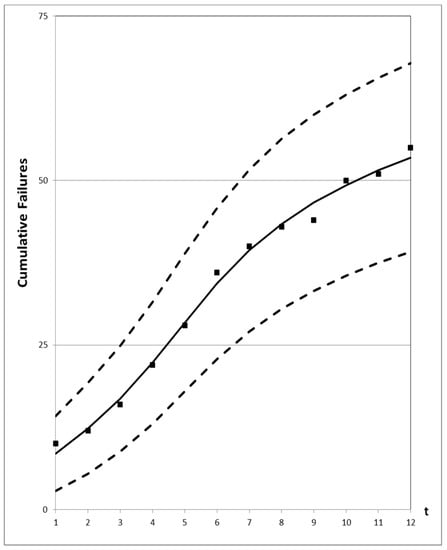

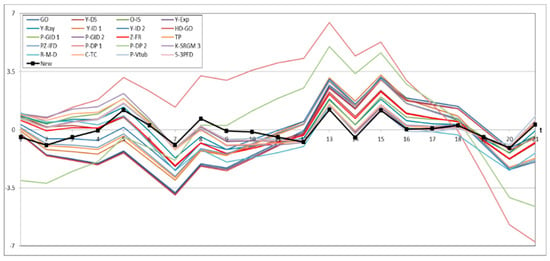

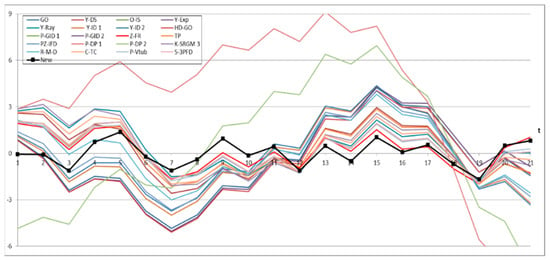

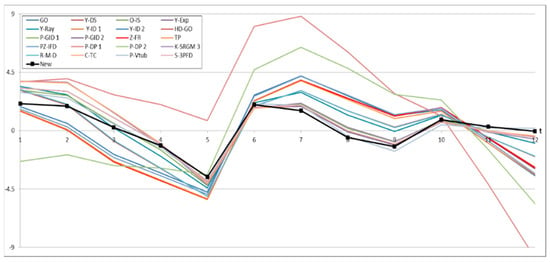

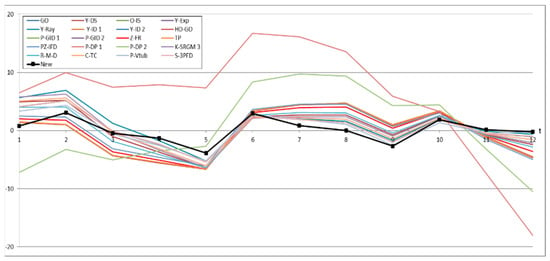

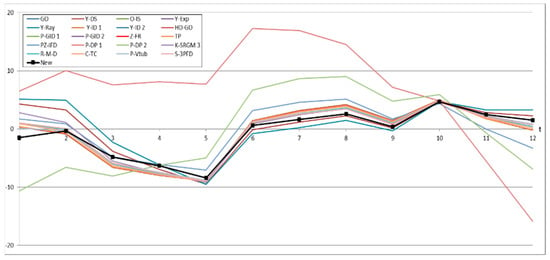

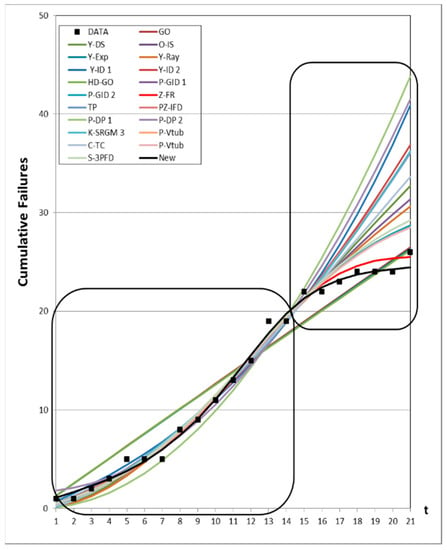

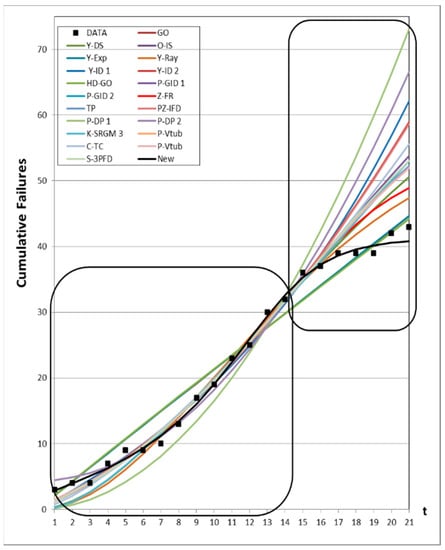

Figures 1–5 show the mean value functions of all models for Datasets #1–#5, respectively. Figures 6–10 show the 95% confidence interval of the proposed new model, which serves to confirm whether the value of the mean value function is included in the confidence interval at each time point. Figures 11–15 show the relative error values of all models, which serve to confirm the ability of the proposed model to provide better accuracy.

Figure 1.

Mean value functions of all models for Dataset #1.

Figure 2.

Mean value functions of all models for Dataset #2.

Figure 3.

Mean value functions of all models for Dataset #3.

Figure 4.

Mean value functions of all models for Dataset #4.

Figure 5.

Mean value functions of all models for Dataset #5.

Figure 6.

95% confidence interval of the proposed new model for Dataset #1.

Figure 7.

95% confidence interval of the proposed new model for Dataset #2.

Figure 8.

95% confidence interval of the proposed new model for Dataset #3.

Figure 9.

95% confidence interval of the proposed new model for Dataset #4.

Figure 10.

95% confidence interval of the proposed new model for Dataset #5.

Figure 11.

Relative error value of all models for Dataset #1.

Figure 12.

Relative error value of all models for Dataset #2.

Figure 13.

Relative error value of all models for Dataset #3.

Figure 14.

Relative error value of all models for Dataset #4.

Figure 15.

Relative error value of all models for Dataset #5.

4.3. Prediction Analysis

In this section, we use Datasets #1 and #2 to compare how the predicted values of each model differ. We compare the goodness-of-fit of all models using the first 75% of each dataset and compare the predicted values of all models using the remaining 25%. For the comparison of goodness-of-fit, we obtained the parameter estimates and the criteria (MSE, RMSE, PRR, PP, AIC, $R^2$, Adj $R^2$, SAE, MAE, and Variance) for all models when $t = 1, 2, \ldots, 16$, and, for the comparison of the predicted values, we obtained the PreSSE value of all models when $t = 17, 18, \ldots, 21$ from Datasets #1 and #2.
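The fit-then-predict procedure can be sketched as follows: estimate the parameters on the first 75% of the points, then sum the squared errors on the held-out 25%. For brevity the example uses the curve $a(1 - e^{-bt})$ with $b$ treated as known, so that the least-squares $a$ is closed-form. The data are synthetic and noise-free, and all names and values are hypothetical rather than taken from Datasets #1 or #2.

```python
import math

def pre_sse(predict, times, observed, k):
    """Sum of squared prediction errors over the points after the first k."""
    return sum((predict(t) - y) ** 2 for t, y in zip(times[k:], observed[k:]))

def fit_scale(times, observed, b):
    """Closed-form least-squares a for m(t) = a * (1 - exp(-b * t)) with b held fixed."""
    shape = [1.0 - math.exp(-b * t) for t in times]
    return sum(y * s for y, s in zip(observed, shape)) / sum(s * s for s in shape)

B_FIXED = 0.12                      # hypothetical detection rate, treated as known
times = list(range(1, 22))          # 21 weekly observation points
observed = [30.0 * (1.0 - math.exp(-B_FIXED * t)) for t in times]  # synthetic data

k = math.ceil(0.75 * len(times))    # 16 points for fitting, 5 held out for prediction
a_hat = fit_scale(times[:k], observed[:k], B_FIXED)
score = pre_sse(lambda t: a_hat * (1.0 - math.exp(-B_FIXED * t)), times, observed, k)
print(k, a_hat, score)
```

Because the held-out points play no part in the estimation, a low score on them indicates predictive ability rather than goodness-of-fit alone.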

First, for the comparison of goodness-of-fit, Table 7 and Table 8 show that the proposed new model gives the best results on most of the ten criteria when compared with the other models. As seen from Table 7, the MSE, RMSE, PRR, SAE, and Variance values for the proposed new model are the lowest among all models in Table 1. The MSE value of the proposed new model is 0.5915, the RMSE value is 0.7691, the PRR value is 0.3380, the SAE value is 8.2769, and the Variance value is 0.6591, all of which are smaller than the corresponding values of the other models. The $R^2$ and Adj $R^2$ values for the proposed new model are the largest among all models: the $R^2$ value is 0.9923 and the Adj $R^2$ value is 0.9885.

As seen from Table 8, the MSE, RMSE, PRR, PP, SAE, MAE, and Variance values for the proposed new model are the lowest among all models in Table 1. The MSE value of the proposed new model is 0.7827, the RMSE value is 0.8847, the PRR value is 0.1103, the PP value is 0.1306, the SAE value is 9.9671, the MAE value is 0.7576, and the Variance value is 0.7576, all of which are smaller than the corresponding values of the other models. The $R^2$ and Adj $R^2$ values for the proposed new model are the largest among all models: the $R^2$ value is 0.9959 and the Adj $R^2$ value is 0.9939.

Finally, for the comparison of the predicted values, Table 7 and Table 8 show that the proposed new model has the best PreSSE among all models in Table 1. From Table 7, the PreSSE value of the proposed new model is 2.6780, which is smaller than that of the other models. From Table 8, the PreSSE value of the proposed new model is 8.6532, which is also smaller than that of the other models. Figure 16 and Figure 17 show the goodness-of-fit and prediction of the mean value functions of all models for Datasets #1 and #2, respectively.

Table 7.

Results of Model parameter estimation, criteria, and prediction for comparison-Dataset #1.

Table 8.

Results of Model parameter estimation, criteria, and prediction for comparison-Dataset #2.

Figure 16.

Goodness-of-fit and prediction of mean value functions of all models for Dataset #1.

Figure 17.

Goodness-of-fit and prediction of mean value functions of all models for Dataset #2.

5. Conclusions

Software is used in a variety of environments; however, it is typically developed and tested in a controlled environment. Because the environment in which the software is operated varies, the uncertainty of the operating environment must be considered. Therefore, we consider both the uncertainty of the operating environment and the learning curve in the fault detection rate function. In this paper, we discussed a new model with an inflection factor in the fault detection rate function that considers the uncertainty of operating environments, and we analyzed how the predicted values of the proposed new model differ from those of the other models. We provided numerical evidence of goodness-of-fit, computed the predicted values for all models, and compared the proposed new model with several existing NHPP software reliability models based on eleven criteria (MSE, RMSE, PRR, PP, AIC, $R^2$, Adj $R^2$, SAE, MAE, Variance, and PreSSE). The numerical examples indicate that the proposed new model has better goodness-of-fit and predicts the values better than the other existing models. Future work will involve broader validation of this conclusion based on recent datasets. In addition, we need to apply Bayesian and big-data estimation methods to estimate the parameters, and we also need to consider multi-release points.

Author Contributions

K.Y.S. analyzed the data; K.Y.S. contributed analysis tools; K.Y.S. and I.H.C. wrote the paper; K.Y.S. and I.H.C. supported funding; H.P. suggested development of new proposed model; I.H.C. and H.P. designed the paper.

Funding

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (NRF-2018R1D1A1B07045734, NRF-2018R1A6A3A03011833).

Acknowledgments

This research was supported by the National Research Foundation of Korea.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Goel, A.L.; Okumoto, K. Time-dependent error-detection rate model for software reliability and other performance measures. IEEE Trans. Reliab. 1979, 28, 206–211.

2. Yamada, S.; Ohba, M.; Osaki, S. S-shaped reliability growth modeling for software fault detection. IEEE Trans. Reliab. 1983, 32, 475–484.

3. Ohba, M. Inflexion S-shaped software reliability growth models. In Stochastic Models in Reliability Theory; Osaki, S., Hatoyama, Y., Eds.; Springer: Berlin, Germany, 1984; pp. 144–162.

4. Yamada, S.; Ohtera, H.; Narihisa, H. Software reliability growth models with testing-effort. IEEE Trans. Reliab. 1986, 35, 19–23.

5. Yang, B.; Xie, M. A study of operational and testing reliability in software reliability analysis. Reliab. Eng. Syst. Saf. 2000, 70, 323–329.

6. Huang, C.Y.; Kuo, S.Y.; Lyu, M.R.; Lo, J.H. Quantitative software reliability modeling from testing to operation. In Proceedings of the International Symposium on Software Reliability Engineering, IEEE, Los Alamitos, CA, USA, 8–11 October 2000; pp. 72–82.

7. Zhang, X.; Jeske, D.; Pham, H. Calibrating software reliability models when the test environment does not match the user environment. Appl. Stoch. Models Bus. Ind. 2002, 18, 87–99.

8. Teng, X.; Pham, H. A new methodology for predicting software reliability in the random field environments. IEEE Trans. Reliab. 2006, 55, 458–468.

9. Inoue, S.; Ikeda, J.; Yamada, S. Bivariate change-point modeling for software reliability assessment with uncertainty of testing-environment factor. Ann. Oper. Res. 2016, 244, 209–220.

10. Li, Q.; Pham, H. A testing-coverage software reliability model considering fault removal efficiency and error generation. PLoS ONE 2017, 12, e0181524.

11. Li, Q.; Pham, H. NHPP software reliability model considering the uncertainty of operating environments with imperfect debugging and testing coverage. Appl. Math. Model. 2017, 51, 68–85.

12. Song, K.Y.; Chang, I.H.; Pham, H. A three-parameter fault-detection software reliability model with the uncertainty of operating environments. J. Syst. Sci. Syst. Eng. 2017, 26, 121–132.

13. Song, K.Y.; Chang, I.H.; Pham, H. A software reliability model with a Weibull fault detection rate function subject to operating environments. Appl. Sci. 2017, 7, 983.

14. Song, K.Y.; Chang, I.H.; Pham, H. An NHPP software reliability model with S-shaped growth curve subject to random operating environments and optimal release time. Appl. Sci. 2017, 7, 1304.

15. Song, K.Y.; Chang, I.H.; Pham, H. Optimal release time and sensitivity analysis using a new NHPP software reliability model with probability of fault removal subject to operating environments. Appl. Sci. 2018, 8, 714.

16. Zhu, M.; Pham, H. A two-phase software reliability modeling involving with software fault dependency and imperfect fault removal. Comput. Lang. Syst. Struct. 2018, 53, 27–42.

17. Yamada, S.; Tokuno, K.; Osaki, S. Imperfect debugging models with fault introduction rate for software reliability assessment. Int. J. Syst. Sci. 1992, 23, 2241–2252.

18. Hossain, S.A.; Dahiya, R.C. Estimating the parameters of a non-homogeneous Poisson-process model for software reliability. IEEE Trans. Reliab. 1993, 42, 604–612.

19. Pham, H.; Nordmann, L.; Zhang, X. A general imperfect software debugging model with S-shaped fault detection rate. IEEE Trans. Reliab. 1999, 48, 169–175.

20. Pham, H.; Zhang, X. An NHPP software reliability model and its comparison. Int. J. Reliab. Qual. Saf. Eng. 1997, 4, 269–282.

21. Zhang, X.M.; Teng, X.L.; Pham, H. Considering fault removal efficiency in software reliability assessment. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2003, 33, 114–120.

22. Pham, H. System Software Reliability; Springer: London, UK, 2006.

23. Pham, H. An imperfect-debugging fault-detection dependent-parameter software. Int. J. Automat. Comput. 2007, 4, 325–328.

24. Kapur, P.K.; Pham, H.; Anand, S.; Yadav, K. A unified approach for developing software reliability growth models in the presence of imperfect debugging and error generation. IEEE Trans. Reliab. 2011, 60, 331–340.

25. Roy, P.; Mahapatra, G.S.; Dey, K.N. An NHPP software reliability growth model with imperfect debugging and error generation. Int. J. Reliab. Qual. Saf. Eng. 2014, 21, 1–3.

26. Pham, H. A new software reliability model with Vtub-shaped fault detection rate and the uncertainty of operating environments. Optimization 2014, 63, 1481–1490.

27. Chang, I.H.; Pham, H.; Lee, S.W.; Song, K.Y. A testing-coverage software reliability model with the uncertainty of operation environments. Int. J. Syst. Sci. Oper. Logist. 2014, 1, 220–227.

28. Akaike, H. A new look at statistical model identification. IEEE Trans. Autom. Control 1974, 19, 716–719.

29. Anjum, M.; Haque, M.A.; Ahmad, N. Analysis and ranking of software reliability models based on weighted criteria value. Int. J. Inf. Technol. Comput. Sci. 2013, 2, 1–14.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).