A Method for Constructing Supervised Topic Model Based on Term Frequency-Inverse Topic Frequency

Abstract

1. Introduction

2. Background

2.1. L-LDA (Labeled Latent Dirichlet Allocation)

2.2. Dependency-LDA (Dependency Latent Dirichlet Allocation)

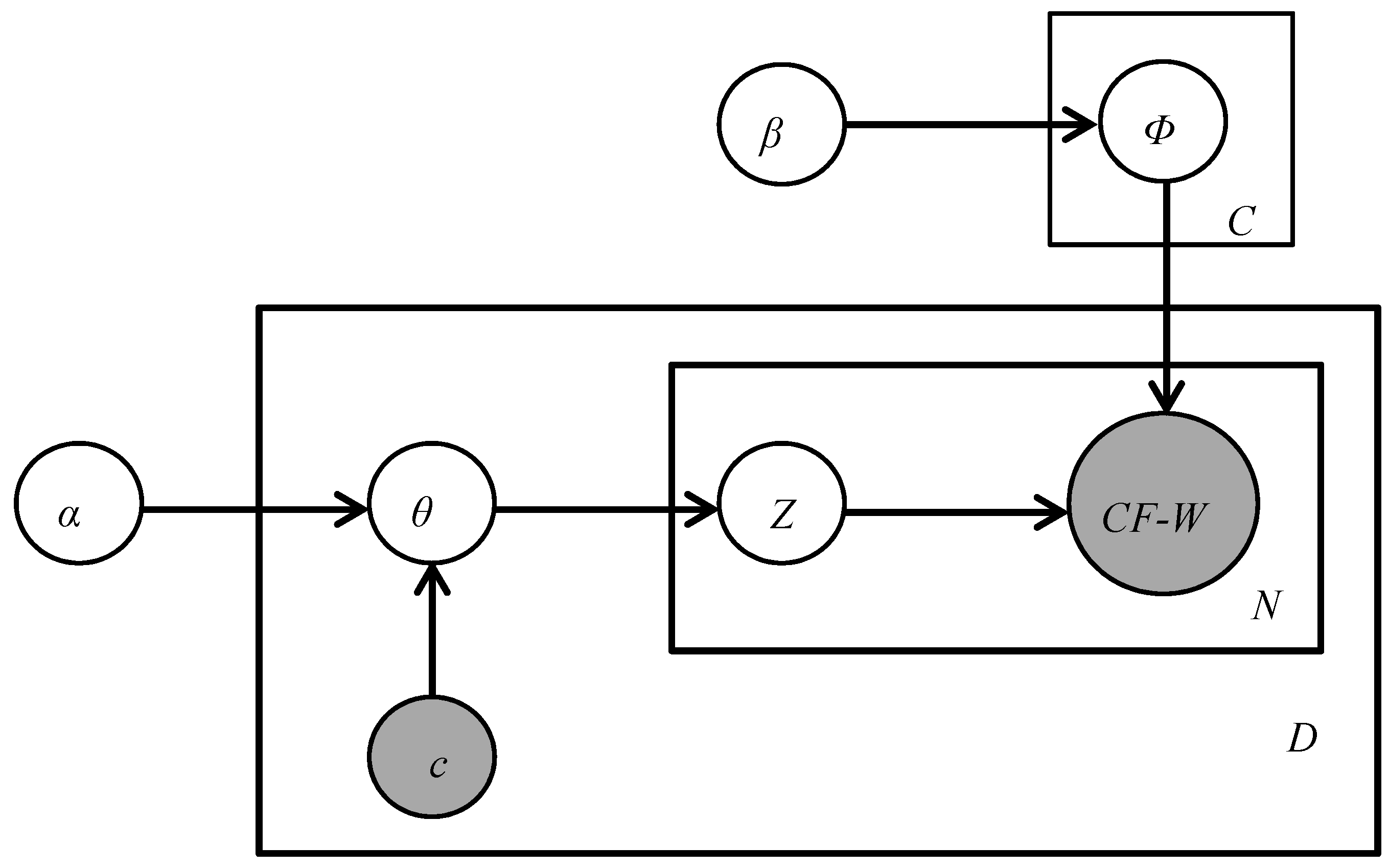

2.3. CF-Weight LDA (CF-Weight Latent Dirichlet Allocation)

- (1) The L-LDA and Dependency-LDA supervised topic models map the topics of a document to multiple labels, so a document is not restricted to a single topic. However, they ignore term weights derived from topic frequency knowledge.

- (2) The CF-weight LDA supervised topic model weights terms using topic frequency knowledge. Nevertheless, it neglects the term frequency within each topic, and its term-weight formula distinguishes topics poorly because it lacks a judgment condition such as the perplexity of the topic model.

3. Proposed Method

- (1) Term frequency: the number of times a term appears in a topic. A higher term frequency indicates that the term represents the topic better.

- (2) Inverse topic frequency: a measure of the importance of a term. The fewer topics a term occurs in, the better it distinguishes topics.
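As a rough illustration of how the two factors interact, the following minimal sketch combines them by analogy with tf-idf. The function name `tf_itf_weights`, the `counts[k][v]` layout (times term `v` appears in topic `k`) and the plain `count * log(K / topic_frequency)` form are assumptions made for this example; the weighting actually used by the method is the one defined in Equation (6), which may differ.

```python
import math


def tf_itf_weights(counts):
    """Return a topic-term weight matrix from a topic-term count matrix.

    counts[k][v] is the number of times term v appears in topic k.
    Assumed weighting (a tf-idf analogue, not necessarily Equation (6)):
        weight[k][v] = counts[k][v] * log(K / number of topics containing v)
    """
    num_topics = len(counts)
    num_terms = len(counts[0])
    # Topic frequency: in how many topics does each term occur at least once?
    topic_freq = [sum(1 for k in range(num_topics) if counts[k][v] > 0)
                  for v in range(num_terms)]
    weights = []
    for k in range(num_topics):
        row = []
        for v in range(num_terms):
            if counts[k][v] == 0:
                row.append(0.0)  # term never appears in this topic
                continue
            itf = math.log(num_topics / topic_freq[v])  # inverse topic frequency
            row.append(counts[k][v] * itf)
        weights.append(row)
    return weights


# Toy counts: term 0 occurs in every topic, terms 1 and 2 in one topic each.
counts = [
    [8, 2, 0],  # topic 0
    [1, 0, 9],  # topic 1
    [1, 0, 0],  # topic 2
]
weights = tf_itf_weights(counts)
```

In this toy input, term 0 occurs in all three topics, so its inverse topic frequency is log(1) = 0 and it receives no weight despite its high count in topic 0, whereas term 2 is both frequent in topic 1 and exclusive to it.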

- Step 1. Set appropriate values for the Dirichlet prior parameters, α and the topic-term prior, and set the number of topics according to the label set of the corpus.

- Step 2. Assign a random topic to each term in the corpus.

- Step 3. Loop over every term in the corpus and use Equation (3) to calculate the probability of the term under each topic.

- Step 4. Repeat Step 3 until the Gibbs sampling algorithm converges, that is, until the perplexity of the documents is stable. Then calculate the topic-term probability matrix using Equation (4).

- Step 5. Calculate the topic-term weight matrix using Equation (6).

- Step 6. Generate the final topic-term probability matrix using Equation (7).
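The steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the conditional used in the sampling loop is the standard collapsed Gibbs update for LDA restricted to each document's labels (an assumed form of Equation (3)), the smoothed estimate stands in for Equation (4), the weight is a smoothed TF-ITF analogue standing in for Equation (6), and the final matrix is a renormalised elementwise product standing in for Equation (7). A fixed number of sweeps replaces the perplexity-based stopping rule, and all names (`train_llda_tf_itf`, `alpha`, `beta`, `sweeps`) are hypothetical.

```python
import math
import random


def train_llda_tf_itf(docs, labels, num_topics, vocab_size,
                      alpha=0.1, beta=0.01, sweeps=50, seed=0):
    """docs: lists of term ids; labels: allowed topic ids for each document."""
    rng = random.Random(seed)
    n_kv = [[0] * vocab_size for _ in range(num_topics)]  # topic-term counts
    n_k = [0] * num_topics                                 # terms per topic
    n_dk = [[0] * num_topics for _ in docs]                # document-topic counts

    def move(d, k, v, delta):
        """Add or remove one assignment of term v in document d to topic k."""
        n_kv[k][v] += delta
        n_k[k] += delta
        n_dk[d][k] += delta

    # Step 2: random initial topic per term, restricted to the document's labels.
    z = []
    for d, doc in enumerate(docs):
        z.append([rng.choice(labels[d]) for _ in doc])
        for k, v in zip(z[d], doc):
            move(d, k, v, +1)

    # Steps 3-4: resample every term; a fixed sweep count stands in for
    # the perplexity-based convergence check.
    for _ in range(sweeps):
        for d, doc in enumerate(docs):
            for i, v in enumerate(doc):
                move(d, z[d][i], v, -1)
                probs = [(n_dk[d][t] + alpha) * (n_kv[t][v] + beta)
                         / (n_k[t] + vocab_size * beta) for t in labels[d]]
                z[d][i] = rng.choices(labels[d], weights=probs)[0]
                move(d, z[d][i], v, +1)

    # Step 4: smoothed topic-term probabilities (assumed form of Equation (4)).
    phi = [[(n_kv[k][v] + beta) / (n_k[k] + vocab_size * beta)
            for v in range(vocab_size)] for k in range(num_topics)]

    # Step 5: smoothed TF-ITF weights (assumed stand-in for Equation (6)).
    topic_freq = [max(1, sum(1 for k in range(num_topics) if n_kv[k][v] > 0))
                  for v in range(vocab_size)]
    weight = [[(1 + n_kv[k][v]) * math.log(1 + num_topics / topic_freq[v])
               for v in range(vocab_size)] for k in range(num_topics)]

    # Step 6: reweight and renormalise each topic (assumed stand-in for Equation (7)).
    final = []
    for k in range(num_topics):
        row = [phi[k][v] * weight[k][v] for v in range(vocab_size)]
        total = sum(row)
        final.append([x / total for x in row])
    return final


# Toy corpus: three documents over a four-term vocabulary and two labels.
docs = [[0, 1, 0, 2], [2, 3, 3, 1], [0, 3, 2, 2]]
labels = [[0], [1], [0, 1]]
final = train_llda_tf_itf(docs, labels, num_topics=2, vocab_size=4)
```

Restricting the sampled topics to `labels[d]` is what makes the model supervised: a term in a document can only be assigned to a topic that corresponds to one of that document's labels.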

4. Experimental Results and Analysis

4.1. Experiment Environment

4.2. Dataset

4.3. Evaluation Metrics

4.4. Baseline Methods

4.5. Comparison with Baseline Methods

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Notation | Description |
|---|---|
| | Term |
| | Topic |
| | Document |
| | Number of terms in document |
| | The th term of document |
| | The term probability in corpus |
| | The Dirichlet prior parameter |
| | The scale coefficient |
| | The smoothing factor |
| | Number of topics in corpus |
| | Number of topics for term |
| | The sampling number matrix of topic-term |
| | The probability matrix of topic-term |
| | The weight matrix of topic-term |
| | The final generated probability matrix of topic-term |

| Step | Description |
|---|---|
| 1 | For each topic: |
| 2 | Generate the multinomial topic distribution over the vocabulary |
| 3 | End for |
| 4 | For each document: |
| 5 | Generate the multinomial topic distribution over the document according to the label set of the document |
| 6 | For each term: |
| 7 | Generate topic |
| 8 | Generate term |
| 9 | End for |
| 10 | End for |
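The generative process in the table above can be sketched in a few lines. This is a minimal sketch assuming symmetric Dirichlet priors and using only the Python standard library; the names `sample_dirichlet`, `generate_corpus`, `alpha` and `beta` are hypothetical and chosen for this example only.

```python
import random


def sample_dirichlet(rng, concentration):
    """Draw from a Dirichlet distribution by normalising independent Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in concentration]
    total = sum(draws)
    return [x / total for x in draws]


def generate_corpus(label_sets, doc_lengths, num_topics, vocab_size,
                    alpha=0.5, beta=0.5, seed=0):
    """Generate (topic, term) pairs for each document.

    label_sets[d] lists the topic ids allowed in document d.
    alpha and beta are assumed symmetric Dirichlet priors.
    """
    rng = random.Random(seed)
    # Steps 1-3: one distribution over the vocabulary per topic.
    topic_term = [sample_dirichlet(rng, [beta] * vocab_size)
                  for _ in range(num_topics)]
    corpus = []
    # Steps 4-10: one pass per document.
    for allowed, length in zip(label_sets, doc_lengths):
        # Step 5: topic distribution restricted to the document's label set.
        theta = sample_dirichlet(rng, [alpha] * len(allowed))
        doc = []
        for _ in range(length):
            # Step 7: draw a topic from the restricted distribution.
            topic = rng.choices(allowed, weights=theta)[0]
            # Step 8: draw a term from that topic's distribution.
            term = rng.choices(range(vocab_size), weights=topic_term[topic])[0]
            doc.append((topic, term))
        corpus.append(doc)
    return corpus


# Toy setting: three topics, a five-term vocabulary, two labelled documents.
label_sets = [[0, 2], [1]]
corpus = generate_corpus(label_sets, doc_lengths=[6, 4],
                         num_topics=3, vocab_size=5)
```

Because the document-level distribution is drawn only over `allowed`, a document can never emit a term from a topic outside its label set, which is the constraint that distinguishes this process from unsupervised LDA.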

| Step | Description |
|---|---|
| 1 | For each topic: |
| 2 | Generate the multinomial topic distribution over labels |
| 3 | End for |
| 4 | For each label: |
| 5 | Generate the multinomial topic distribution over the vocabulary |
| 6 | End for |
| 7 | For each document: |
| 8 | Generate the multinomial topic distribution over the document |
| 9 | For each label: |
| 10 | Generate the topic |
| 11 | Generate the label according to the label set of the document |
| 12 | End for |
| 13 | Compute the asymmetric Dirichlet prior using Equation (1) |
| 14 | Generate the multinomial topic distribution over the document |
| 15 | For each term: |
| 16 | Generate topic |
| 17 | Generate term |
| 18 | End for |
| 19 | End for |

| Method | Precision |
|---|---|
| L-LDA (2009) | 48.61% |
| D-LDA (2012) | 47.49% |
| CFW-LDA (2018) | 52.46% |
| L-LDA with TF-ITF | 53.76% |
| D-LDA with TF-ITF | 47.93% |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Gou, Z.; Huo, Z.; Liu, Y.; Yang, Y. A Method for Constructing Supervised Topic Model Based on Term Frequency-Inverse Topic Frequency. Symmetry 2019, 11, 1486. https://doi.org/10.3390/sym11121486
