Improved Image Splicing Forgery Detection by Combination of Conformable Focus Measures and Focus Measure Operators Applied on Obtained Redundant Discrete Wavelet Transform Coefficients

Abstract

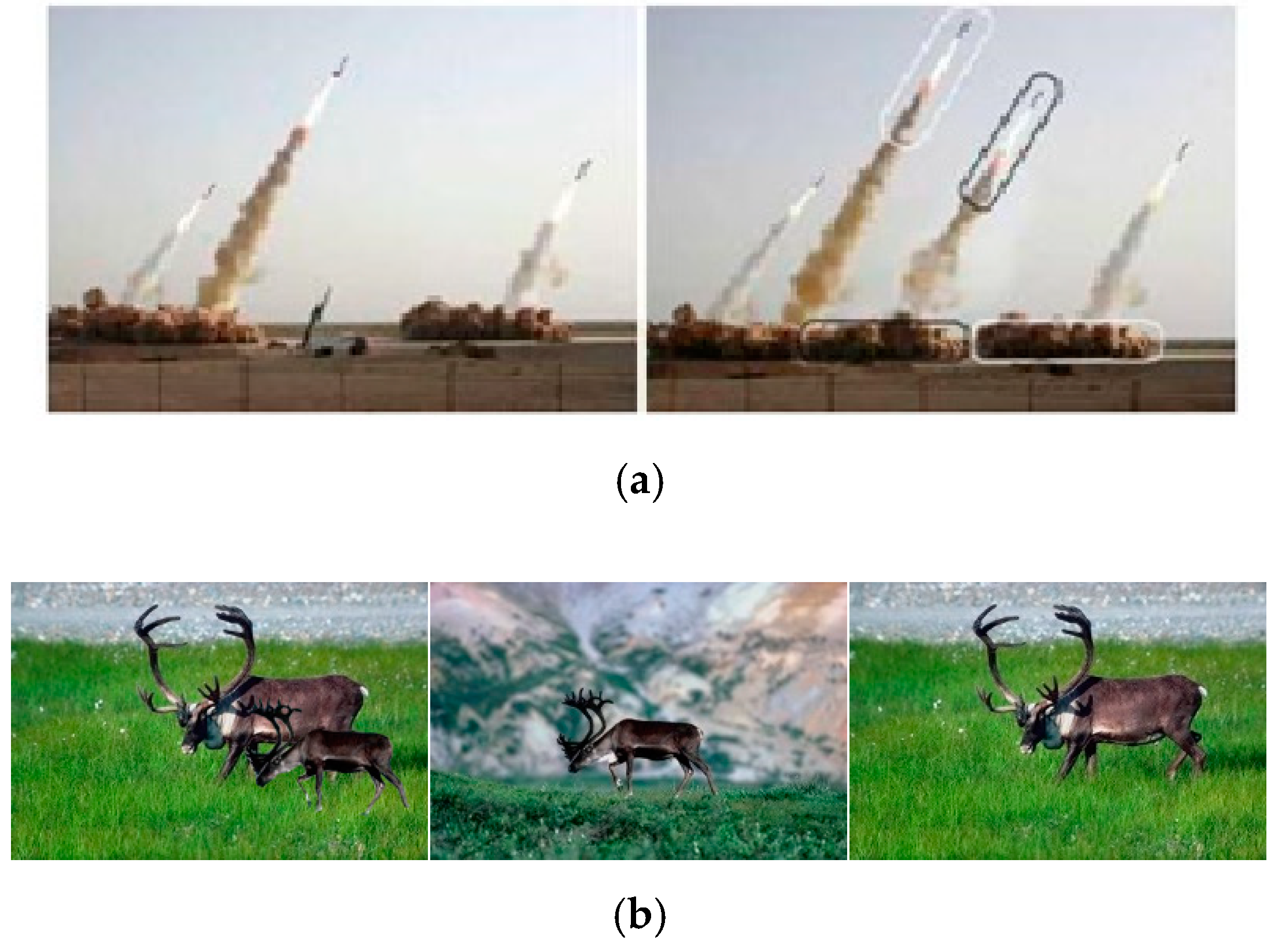

1. Introduction

Various passive methods have been proposed to counter image tampering. They can be broadly grouped into the following categories:

- Pixel-based: detects irregularities in the image at the pixel level. It is the simplest and most commonly used approach in copy-move and splicing detection [8].

- Format-based: applied to images in JPEG format. JPEG compression makes tampering detection difficult; however, some traces of tampering are left behind or distributed across the entire image, and these can be exploited in the detection process [9]. Techniques based on double JPEG compression, JPEG blocks, and JPEG quantization are used for detection in compressed images.

- Camera-based: uses the unique signature left during image acquisition and storage, in terms of lens and sensor noise [10]. The artifacts studied include color correlation, white balancing, quantization tables, filtering, and JPEG compression.

- Physical-based: uses inconsistencies in the light source across the image. It detects anomalies in the 2D light direction, the 3D light direction, and the lighting environment [11].

- Geometry-based: focuses on objects and their positions. Metric measurements and principal-point techniques are used for detection [11].

2. Related Works

3. Proposed Method

3.1. Image Pre-Processing

3.2. Feature Extraction

- Redundant Discrete Wavelet Transform (RDWT)

- Focus Measures

- Independence from image content and structure.

- Large variations with respect to the degree of blurring.

- Minimal computational complexity.

- Energy of Gradient (EOG) is the sum of the squared horizontal and vertical directional gradients. The edges of spliced regions in tampered images are more blurred than those in authentic images, which are better focused; therefore, EOG is selected to measure the degree of focus. EOG is based on first derivatives and is computed as follows [23]:

  $$EOG = \sum_{x}\sum_{y}\left(f_x^2 + f_y^2\right)$$

  where $f_x = f(x+1, y) - f(x, y)$ and $f_y = f(x, y+1) - f(x, y)$.
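
As an illustration of the EOG description above, the following is a minimal sketch in plain Python, assuming forward differences for the two directional gradients and a grayscale image stored as a list of rows. The function name `energy_of_gradient` and the two toy images are hypothetical and are not taken from the paper.

```python
def energy_of_gradient(img):
    """Return the Energy of Gradient of a 2D grayscale image.

    img is a list of equal-length rows of numbers (assumed input format).
    """
    rows, cols = len(img), len(img[0])
    total = 0.0
    for y in range(rows - 1):
        for x in range(cols - 1):
            fx = img[y][x + 1] - img[y][x]  # horizontal forward difference
            fy = img[y + 1][x] - img[y][x]  # vertical forward difference
            total += fx * fx + fy * fy
    return total


# Toy data: the same edge, once abrupt and once spread over three pixels.
sharp = [[0, 0, 255, 255]] * 4
blurred = [[0, 85, 170, 255]] * 4

print(energy_of_gradient(sharp) > energy_of_gradient(blurred))  # True
```

The blurred edge yields a lower EOG than the sharp one, which is the property the method relies on when it treats blurred splicing boundaries as less focused.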

3.2.1. Conformable Gradient of 2D-Images (CG)

3.2.2. Conformable Laplacian of 2D-Images (CL)

- Source images are converted from the RGB to the YCbCr color space, and the Y, Cb, and Cr channels are extracted separately.

- Each color channel of the source image is divided into non-overlapping blocks of n × n pixels, where n = 128, 64, 32, 16, or 8.

- A one-level RDWT decomposition is applied to each image block to obtain four sub-bands (LL, LH, HL, and HH).

- The focus measure operators (5) and (6) and the conformable focus measures (14) and (15) are calculated and combined for each detail sub-band individually: LH, which emphasizes vertical edges; HL, which emphasizes horizontal edges; and HH, which emphasizes diagonal edges. These three sub-bands are selected because they hold the high-frequency detail coefficients.

- The mean and the standard deviation of each RDWT focus-measure output are calculated to form the feature vector.

- The same process is used to extract features from authentic and spliced images.

- Authentic features are labeled 1 and spliced features are labeled 0; the two sets are then combined to create the labeled feature set for classification.

- An SVM is used to classify the images as authentic or spliced.
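
The extraction steps listed above can be sketched end to end in plain Python. This is a simplified illustration under several assumptions, not the authors' implementation: the color conversion uses the full-range BT.601 (JPEG) coefficients, the RDWT is replaced by a one-level undecimated Haar transform with periodic extension, only EOG stands in for the four focus measures, incomplete border blocks are dropped, and the mean and standard deviation are taken across blocks. All function names are hypothetical, and the SVM step is omitted.

```python
import statistics


def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 (JPEG) conversion of one 8-bit RGB pixel."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def split_blocks(channel, n):
    """Yield non-overlapping n x n blocks; incomplete border blocks are dropped."""
    rows, cols = len(channel), len(channel[0])
    for top in range(0, rows - n + 1, n):
        for left in range(0, cols - n + 1, n):
            yield [row[left:left + n] for row in channel[top:top + n]]


def haar_rdwt(block):
    """One-level undecimated Haar transform (assumed stand-in for the RDWT).

    Returns (LL, LH, HL, HH), each the same size as the input block.
    """
    h, w = len(block), len(block[0])
    # Filter along each row without downsampling (periodic extension).
    lo = [[(r[x] + r[(x + 1) % w]) / 2 for x in range(w)] for r in block]
    hi = [[(r[x] - r[(x + 1) % w]) / 2 for x in range(w)] for r in block]

    def vertical(src, sign):
        # sign=+1 is a vertical low-pass, sign=-1 a vertical high-pass.
        return [[(src[y][x] + sign * src[(y + 1) % h][x]) / 2
                 for x in range(w)] for y in range(h)]

    ll = vertical(lo, +1)
    lh = vertical(hi, +1)  # horizontal high-pass: responds to vertical edges
    hl = vertical(lo, -1)  # vertical high-pass: responds to horizontal edges
    hh = vertical(hi, -1)  # high-pass in both directions: diagonal detail
    return ll, lh, hl, hh


def energy_of_gradient(img):
    """Sum of squared forward differences in both directions."""
    total = 0.0
    for y in range(len(img) - 1):
        for x in range(len(img[0]) - 1):
            fx = img[y][x + 1] - img[y][x]
            fy = img[y + 1][x] - img[y][x]
            total += fx * fx + fy * fy
    return total


def channel_features(channel, n):
    """Mean and standard deviation of per-block EOG for LH, HL, and HH."""
    per_band = {"LH": [], "HL": [], "HH": []}
    for block in split_blocks(channel, n):
        _, lh, hl, hh = haar_rdwt(block)
        for name, band in (("LH", lh), ("HL", hl), ("HH", hh)):
            per_band[name].append(energy_of_gradient(band))
    features = []
    for name in ("LH", "HL", "HH"):
        features.append(statistics.mean(per_band[name]))
        features.append(statistics.pstdev(per_band[name]))
    return features


# Toy 8 x 8 channel split into four 4 x 4 blocks.
demo = [[(x * y) % 17 for x in range(8)] for y in range(8)]
features = channel_features(demo, 4)
print(len(features))  # 6: three sub-bands x (mean, std) for a single measure
```

With a single focus measure the sketch produces 6 values per channel; sub-band naming conventions differ between libraries, so the LH and HL labels here simply follow the edge orientations stated in the list above.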

4. The Experimental Results

- CASIA TIDE V2 is a color image dataset created by the Institute of Automation, Chinese Academy of Sciences. It consists of 7408 authentic and 5122 spliced images. The images are more realistic and challenging because they underwent post-processing, and they range in size from 240 × 160 to 900 × 600 pixels [36]. The dataset is publicly available (https://www.kaggle.com/sophatvathana/casia-dataset).

- IFS-TC phase-1 is an open color image dataset created by the IEEE Information Forensics and Security Technical Committee (IFS-TC). It includes 1006 authentic and 450 spliced images with resolutions from 1024 × 575 to 1024 × 768 [37]. The dataset is publicly available (https://signalprocessingsociety.org/newsletter/2014/01/ieee-ifs-tc-image-forensics-challenge-website-new-submissions).

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Uliyan, D.M.; Jalab, H.A.; Wahab, A.W.A.; Sadeghi, S. Image Region Duplication Forgery Detection Based on Angular Radial Partitioning and Harris Key-Points. Symmetry 2016, 8, 62.

- Uliyan, D.M.; Jalab, H.A.; Wahab, A.W.A.; Shivakumara, P.; Sadeghi, S. A novel forged blurred region detection system for image forensic applications. Expert Syst. Appl. 2016, 64, 1–10.

- Sadeghi, S.; Dadkhah, S.; Jalab, H.A.; Mazzola, G.; Uliyan, D. State of the art in passive digital image forgery detection: Copy-move image forgery. Pattern Anal. Appl. 2018, 21, 291–306.

- Sadeghi, S.; Jalab, H.A.; Wong, K.; Uliyan, D.; Dadkhah, S. Keypoint based authentication and localization of copy-move forgery in digital image. Malays. J. Comput. Sci. 2017, 30, 117–133.

- Moghaddasi, Z.; Jalab, H.A.; Noor, R.M. Image splicing forgery detection based on low-dimensional singular value decomposition of discrete cosine transform coefficients. Neural Comput. Appl. 2019, 31, 7867–7877.

- Ibrahim, R.; Moghaddasi, Z.; Jalab, H.; Noor, R. Fractional differential texture descriptors based on the Machado entropy for image splicing detection. Entropy 2015, 17, 4775–4785.

- Yahya, A.-N.; Jalab, H.A.; Wahid, A.; Noor, R.M. Robust watermarking algorithm for digital images using discrete wavelet and probabilistic neural network. J. King Saud Univ. Comput. Inf. Sci. 2015, 27, 393–401.

- Prakash, C.S.; Maheshkar, S.; Maheshkar, V. Detection of copy-move image forgery with efficient block representation and discrete cosine transform. J. Intell. Fuzzy Syst. 2018, 35, 1–13.

- Zheng, L.; Zhang, Y.; Thing, V.L. A survey on image tampering and its detection in real-world photos. J. Vis. Commun. Image Represent. 2019, 58, 380–399.

- Lin, X.; Li, J.-H.; Wang, S.-L.; Cheng, F.; Huang, X.-S. Recent advances in passive digital image security forensics: A brief review. Engineering 2018, 4, 29–39.

- Ansari, M.D.; Ghrera, S.; Tyagi, V. Pixel-based image forgery detection: A review. IETE J. Educ. 2014, 55, 40–46.

- Moghaddasi, Z.; Jalab, H.A.; Md Noor, R.; Aghabozorgi, S. Improving RLRN image splicing detection with the use of PCA and kernel PCA. Sci. World J. 2014, 1–10.

- Hakimi, F.; Hariri, M.; GharehBaghi, F. Image splicing forgery detection using local binary pattern and discrete wavelet transform. In Proceedings of the 2015 2nd International Conference on Knowledge-Based Engineering and Innovation (KBEI), Tehran, Iran, 5–6 November 2015; pp. 1074–1077.

- Kaur, M.; Gupta, S. A Passive Blind Approach for Image Splicing Detection Based on DWT and LBP Histograms. In Security in Computing and Communications, SSCC 2016; Mueller, P., Thampi, S.M., Bhuiyan, M.Z.A., Ko, R., Doss, R., Calero, J.M.A., Eds.; Springer: Berlin/Heidelberg, Germany, 2016; Volume 625, pp. 318–327.

- Alhussein, M. Image Tampering Detection Based on Local Texture Descriptor and Extreme Learning Machine. In Proceedings of the 2016 UKSim-AMSS 18th International Conference on Computer Modelling and Simulation (UKSim), Cambridge, UK, 6–8 April 2016; pp. 196–199.

- Kashyap, A.; Suresh, B.; Agrawal, M.; Gupta, H.; Joshi, S.D. Detection of splicing forgery using wavelet decomposition. In Proceedings of the 2015 International Conference on Computing, Communication & Automation (ICCCA), Greater Noida, India, 15–16 May 2015; pp. 843–848.

- Zhao, X.; Wang, S.; Li, S.; Li, J. Passive image-splicing detection by a 2-D noncausal Markov model. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 185–199.

- Isaac, M.M.; Wilscy, M. Image Forgery Detection Based on Gabor Wavelets and Local Phase Quantization. Procedia Comput. Sci. 2015, 58, 76–83.

- Zhang, Q.; Lu, W.; Weng, J. Joint image splicing detection in DCT and Contourlet transform domain. J. Vis. Commun. Image Represent. 2016, 40, 449–458.

- Agarwal, S.; Chand, S. Image forgery detection using Markov features in undecimated wavelet transform. In Proceedings of the 2016 Ninth International Conference on Contemporary Computing (IC3), Noida, India, 1–13 August 2016; pp. 1–6.

- Park, T.H.; Han, J.G.; Moon, Y.H.; Eom, I.K. Image splicing detection based on inter-scale 2D joint characteristic function moments in wavelet domain. EURASIP J. Image Video Process. 2016, 2016, 30.

- Mir, H.; Xu, P.; Van Beek, P. An extensive empirical evaluation of focus measures for digital photography. In Proceedings of the SPIE, San Francisco, CA, USA, 3–5 February 2014; Volume 9023.

- Chun, M.G.; Kong, S.G. Focusing in thermal imagery using morphological gradient operator. Pattern Recognit. Lett. 2014, 38, 20–25.

- Jalab, H.A.; Subramaniam, T.; Ibrahim, R.; Kahtan, H.; Noor, N. New Texture Descriptor Based on Modified Fractional Entropy for Digital Image Splicing Forgery Detection. Entropy 2019, 21, 371.

- Jalab, H.A.; Hasan, A.M.; Moghaddasi, Z.; Wakaf, Z. Image Splicing Detection Using Electromagnetism-Like Based Descriptor. In Proceedings of the SAI Intelligent Systems Conference, London, UK, 21–22 September 2016; pp. 59–66.

- Qureshi, M.A.; Deriche, M. A bibliography of pixel-based blind image forgery detection techniques. Signal Process. Image Commun. 2015, 39, 46–74.

- Sharma, S.; Kumar, U. Review of Transform Domain Techniques for Image Steganography. Int. J. Sci. Res. 2015, 4, 194–197.

- Subhedar, M.S.; Mankar, V.H. Image steganography using redundant discrete wavelet transform and QR factorization. Comput. Electr. Eng. 2016, 54, 406–422.

- Gaur, S.; Srivastava, V.K. A hybrid RDWT-DCT and SVD based digital image watermarking scheme using Arnold transform. In Proceedings of the 2017 4th International Conference on Signal Processing and Integrated Networks (SPIN), Noida, Delhi-NCR, India, 2–3 February 2017; pp. 399–404.

- Bajaj, A. Robust and reversible digital image watermarking technique based on RDWT-DCT-SVD. In Proceedings of the 2014 International Conference on Advances in Engineering and Technology Research (ICAETR), Singapore, 29–30 March 2014; pp. 1–5.

- Guo, L.; Liu, L.; Sun, H. Focus Measure Based on the Image Moments. In Proceedings of the 2018 IEEE International Conference on Mechatronics and Automation (ICMA), Changchun, Jilin, China, 5–8 August 2018; pp. 1151–1156.

- Faundez-Zanuy, M.; Mekyska, J.; Espinosa-Duró, V. On the focusing of thermal images. Pattern Recognit. Lett. 2011, 32, 1548–1557.

- Pertuz, S.; Puig, D.; Garcia, M.A. Analysis of focus measure operators for shape-from-focus. Pattern Recognit. 2013, 46, 1415–1432.

- Anderson, D.R.; Camrud, E.; Ulness, D.J. On the nature of the conformable derivative and its applications to physics. J. Fract. Calc. Appl. 2018, 10, 92–135.

- Huang, W.; Jing, Z. Evaluation of focus measures in multi-focus image fusion. Pattern Recognit. Lett. 2007, 28, 493–500.

- Dong, J.; Wang, W.; Tan, T. CASIA image tampering detection evaluation database. In Proceedings of the 2013 IEEE China Summit and International Conference on Signal and Information Processing, Beijing, China, 6–10 July 2013; pp. 422–426.

- IEEE IFS-TC Image Forensics Challenge: Image Corpus. Available online: http://ifc.recod.ic.unicamp.br/ (accessed on 7 October 2019).

- Image Processing Toolbox; The Mathworks, Inc.: Natick, MA, USA; Available online: http://www.mathworks (accessed on 7 October 2019).

- Armas Vega, E.A.; Sandoval Orozco, A.L.; Garcia Villalba, L.J.; Hernandez-Castro, J. Digital Images Authentication Technique Based on DWT, DCT and Local Binary Patterns. Sensors 2018, 18, 3372.

- Wang, R.; Lu, W.; Li, J.; Xiang, S.; Zhao, X.; Wang, J. Digital image splicing detection based on Markov features in QDCT and QWT domain. Int. J. Digit. Crime Forensics 2018, 10, 90–107.

- Li, C.; Ma, Q.; Xiao, L.; Li, M.; Zhang, A. Image splicing detection based on Markov features in QDCT domain. Neurocomputing 2017, 228, 29–36.

- Shen, X.; Shi, Z.; Chen, H. Splicing image forgery detection using textural features based on the grey level co-occurrence matrices. IET Image Process. 2017, 11, 44–53.

| Dataset | Authentic | Spliced | Total | Format | Size | Method of Tampering |
|---|---|---|---|---|---|---|
| CASIA V2 [36] | 7408 | 5122 | 12,530 | JPEG, TIFF, BMP | 320 × 240 to 900 × 600 | Splicing with pre-processing and post-processing |
| IFS-TC [37] | 1006 | 450 | 1456 | PNG | 1024 × 575 to 1024 × 768 | Splicing with pre-processing and post-processing |

| Channel | Dimensionality | TP (%) | TN (%) | Accuracy (%) |
|---|---|---|---|---|
| Y | 24-D | 95 | 96 | 95.50 |
| Cb | 24-D | 98 | 98 | 98.30 |
| Cr | 24-D | 98 | 98 | 98.10 |
| CbCr | 48-D | 97 | 98 | 97.30 |
| YCbCr | 72-D | 98 | 98 | 97.60 |
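
The Dimensionality column is consistent with the feature construction in Section 3.2 under one reading of it, which is an inference rather than something the table states: each channel contributes a mean and a standard deviation for each of the four measures ((5), (6), (14), and (15)) on each of the three detail sub-bands (LH, HL, HH), and multi-channel vectors concatenate the per-channel features. The symbol $D$ is introduced here only for illustration.

```latex
\begin{aligned}
D_{\text{channel}} &= 3 \ \text{sub-bands} \times 4 \ \text{measures} \times 2 \ \text{statistics} = 24 \\
D_{CbCr} &= 2 \times 24 = 48 \\
D_{YCbCr} &= 3 \times 24 = 72
\end{aligned}
```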

| Channel | Dimensionality | TP (%) | TN (%) | Accuracy (%) |
|---|---|---|---|---|
| Y | 24-D | 96 | 80 | 91.40 |
| Cb | 24-D | 99 | 99 | 98.60 |
| Cr | 24-D | 98 | 96 | 97.70 |
| CbCr | 48-D | 99 | 96 | 97.90 |
| YCbCr | 72-D | 99 | 96 | 98.10 |
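
For readers relating the TP and TN columns to the Accuracy column, the sketch below shows the standard relationship between per-class rates and overall accuracy. It is a generic illustration with made-up numbers: the function name `overall_accuracy` is hypothetical, which class counts as positive is an assumption, and because the tabulated rates are rounded (and the evaluation protocol is not given here), the function is not expected to reproduce the reported accuracies exactly.

```python
def overall_accuracy(tp_rate, tn_rate, n_positive, n_negative):
    """Overall accuracy from per-class rates (fractions) and class sizes."""
    correct = tp_rate * n_positive + tn_rate * n_negative
    return correct / (n_positive + n_negative)


# Made-up example: 90% of 100 positives and 80% of 50 negatives are correct.
print(round(overall_accuracy(0.90, 0.80, 100, 50), 4))  # 0.8667
```

When the two classes differ in size, the overall accuracy is pulled toward the rate of the larger class, so a low TN rate on a smaller negative class lowers accuracy less than the raw rate suggests.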

| Methods | Feature Reduction | Dimensionality | TP (%) | TN (%) | Accuracy (%) |
|---|---|---|---|---|---|
| DMWT + Markov + BDCT [17] | SVM-RBF | NA | NA | NA | 90.10 |
| DCT + Contourlet Transform [19] | LIBSVM + RBF | 16,524 | 98.06 | 95.31 | 96.69 |
| Markov + QDCT + QWT [40] | None | 11,664 | 96.75 | 95.13 | 95.94 |
| DCT + DWT + LBP [39] | SVM-RBF | NA | NA | NA | 96.19 |
| Proposed (Cb) | None | 24-D | 98 | 98 | 98.30 |
| Proposed (YCbCr) | None | 72-D | 98 | 97 | 97.40 |

| Method | Feature Reduction | Dimensionality | TP (%) | TN (%) | Accuracy (%) |
|---|---|---|---|---|---|
| LBP + DWT [13] | PCA | NA | NA | NA | 97.21 |
| Markov features in QDCT domain [41] | None | 972 | NA | NA | 92.38 |
| DWT and LBP Histogram [14] | None | NA | NA | NA | 94.09 |
| Co-occurrence matrices in wavelet domain [21] | PCA | 100 | NA | NA | 95.40 |
| TF-GLCM (CbCr) [42] | LIBSVM + RBF | 96 | 97.72 | 97.80 | 97.70 |
| Proposed (Cb) | None | 24-D | 99 | 99 | 98.60 |
| Proposed (YCbCr) | None | 72-D | 99 | 96 | 98.10 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Subramaniam, T.; Jalab, H.A.; Ibrahim, R.W.; Mohd Noor, N.F. Improved Image Splicing Forgery Detection by Combination of Conformable Focus Measures and Focus Measure Operators Applied on Obtained Redundant Discrete Wavelet Transform Coefficients. Symmetry 2019, 11, 1392. https://doi.org/10.3390/sym11111392
