A Sparsity-Aware Variable Kernel Width Proportionate Affine Projection Algorithm for Identifying Sparse Systems

Abstract

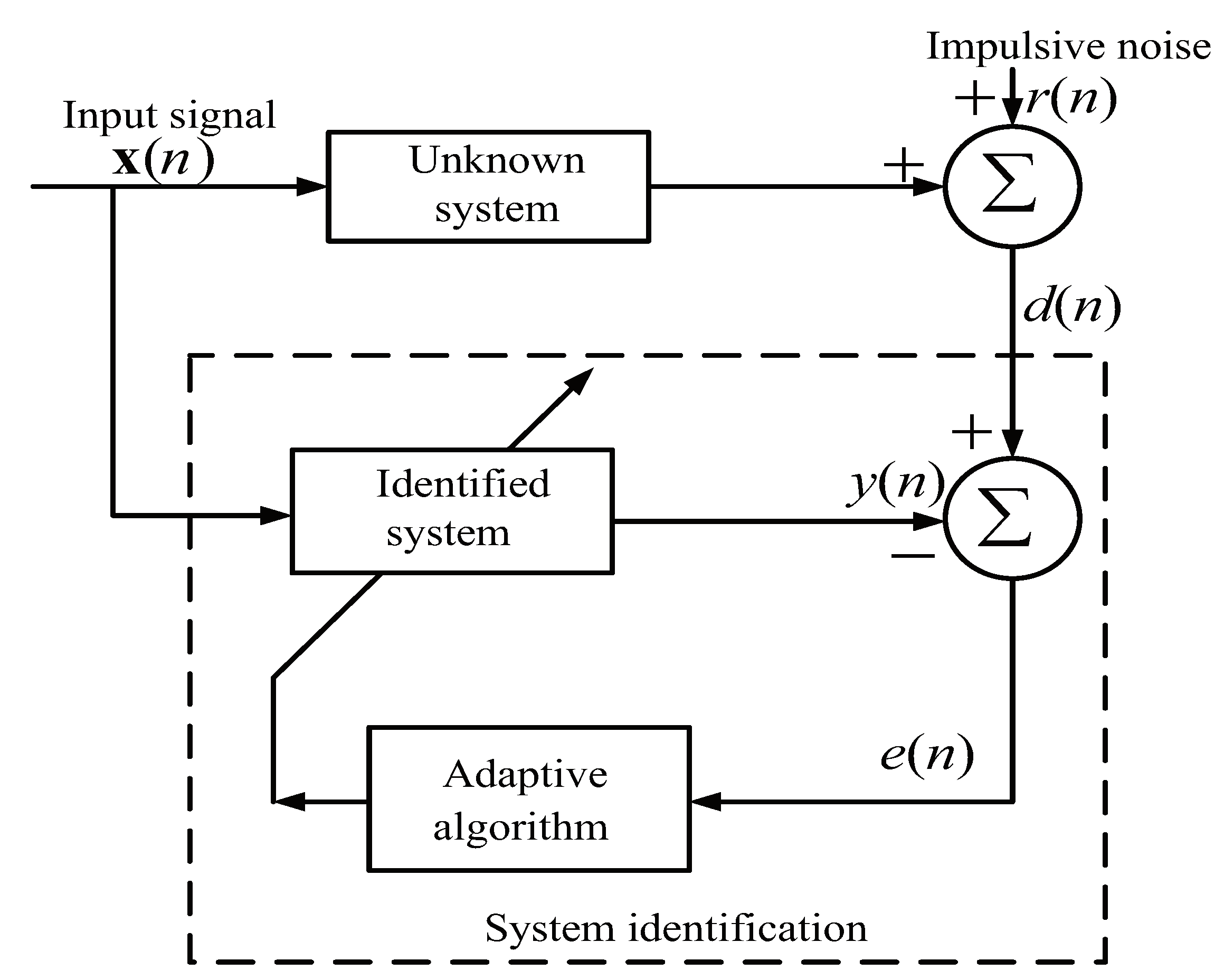

1. Introduction

2. Previous Work on the MCC and AP Algorithms

2.1. The Basic MCC Algorithm

2.2. AP Algorithm

3. The Developed LP-VPAP Algorithm

4. Performance Analysis

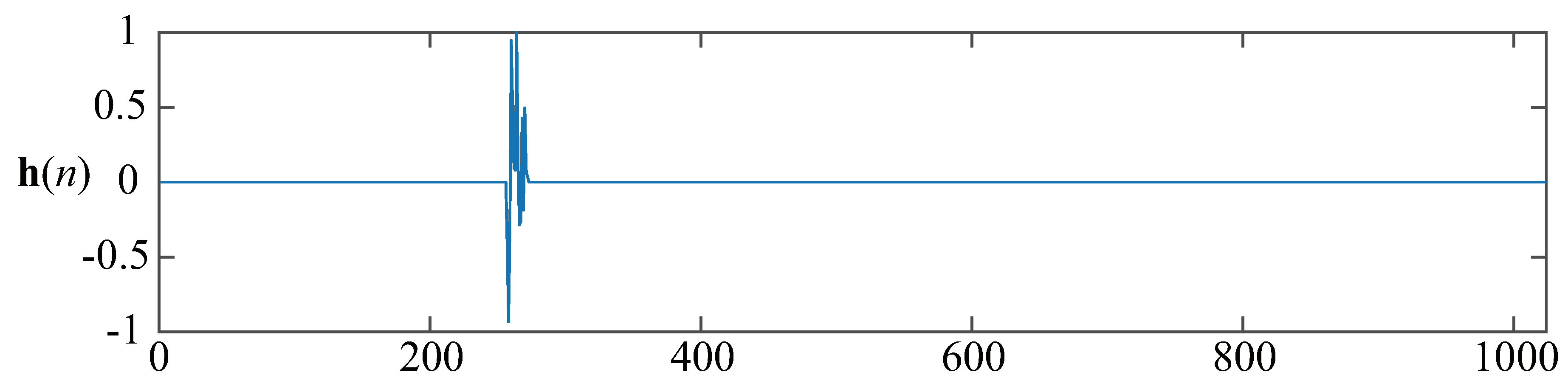

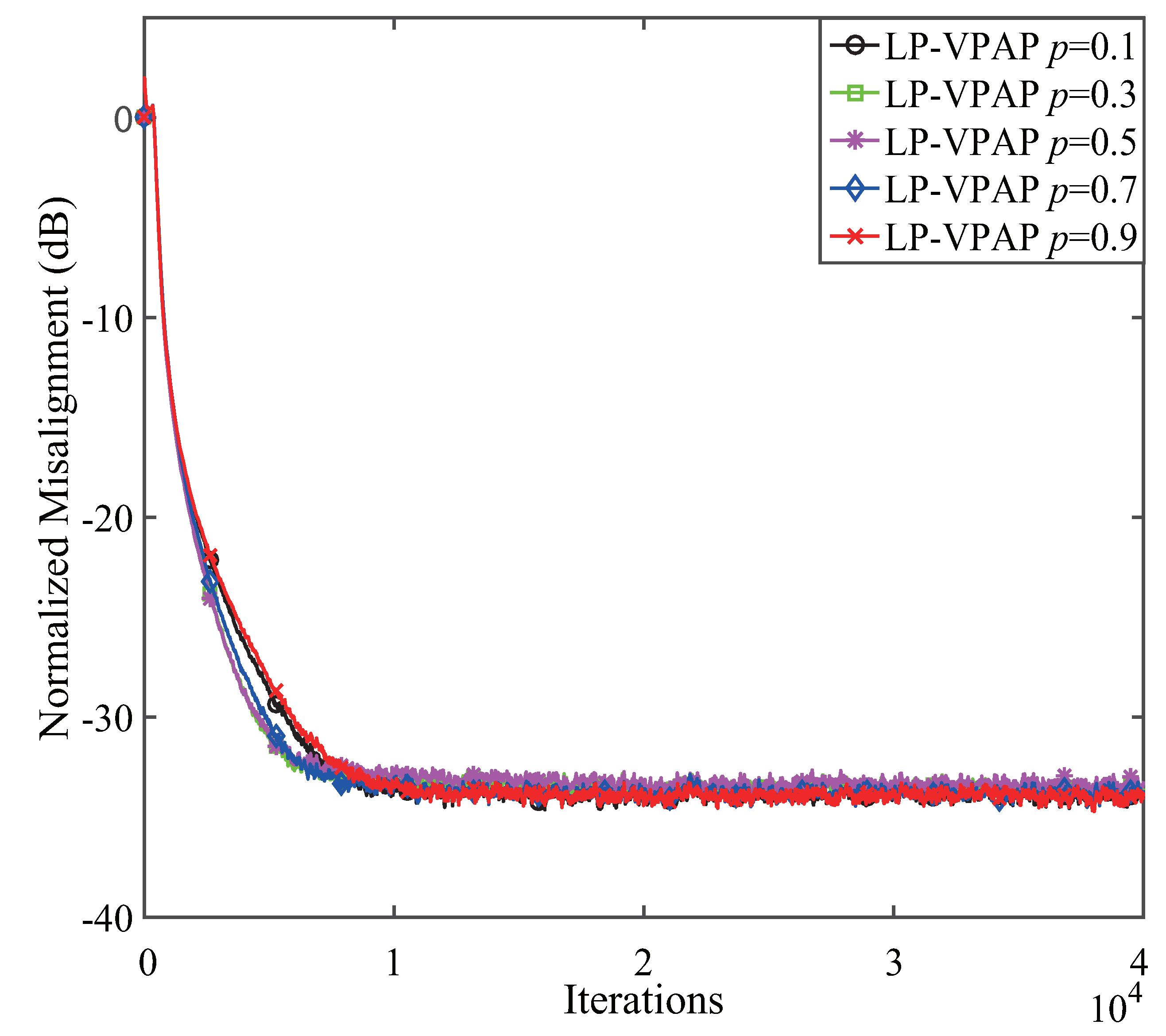

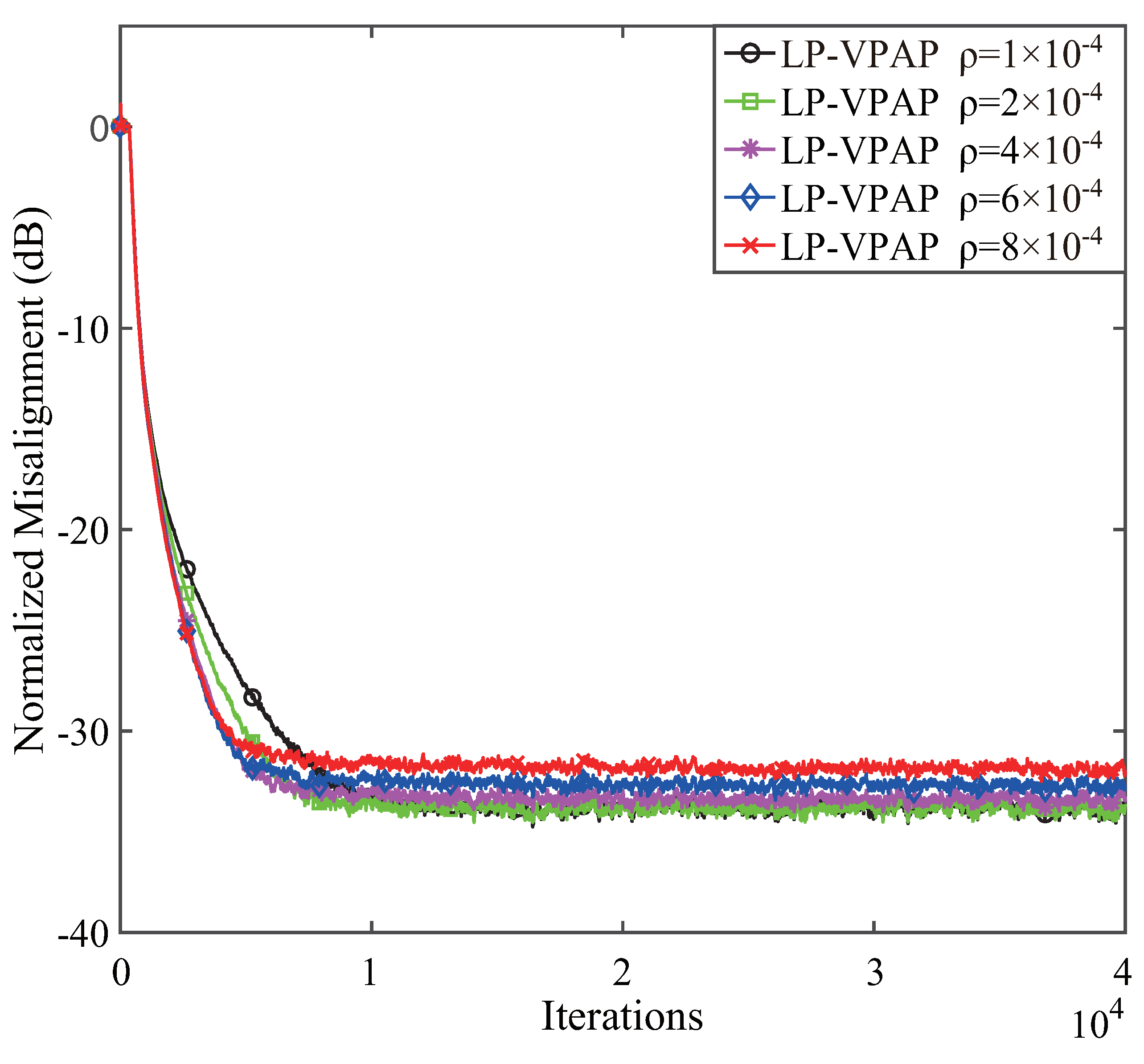

4.1. Performance of the LP-VPAP with Different p and

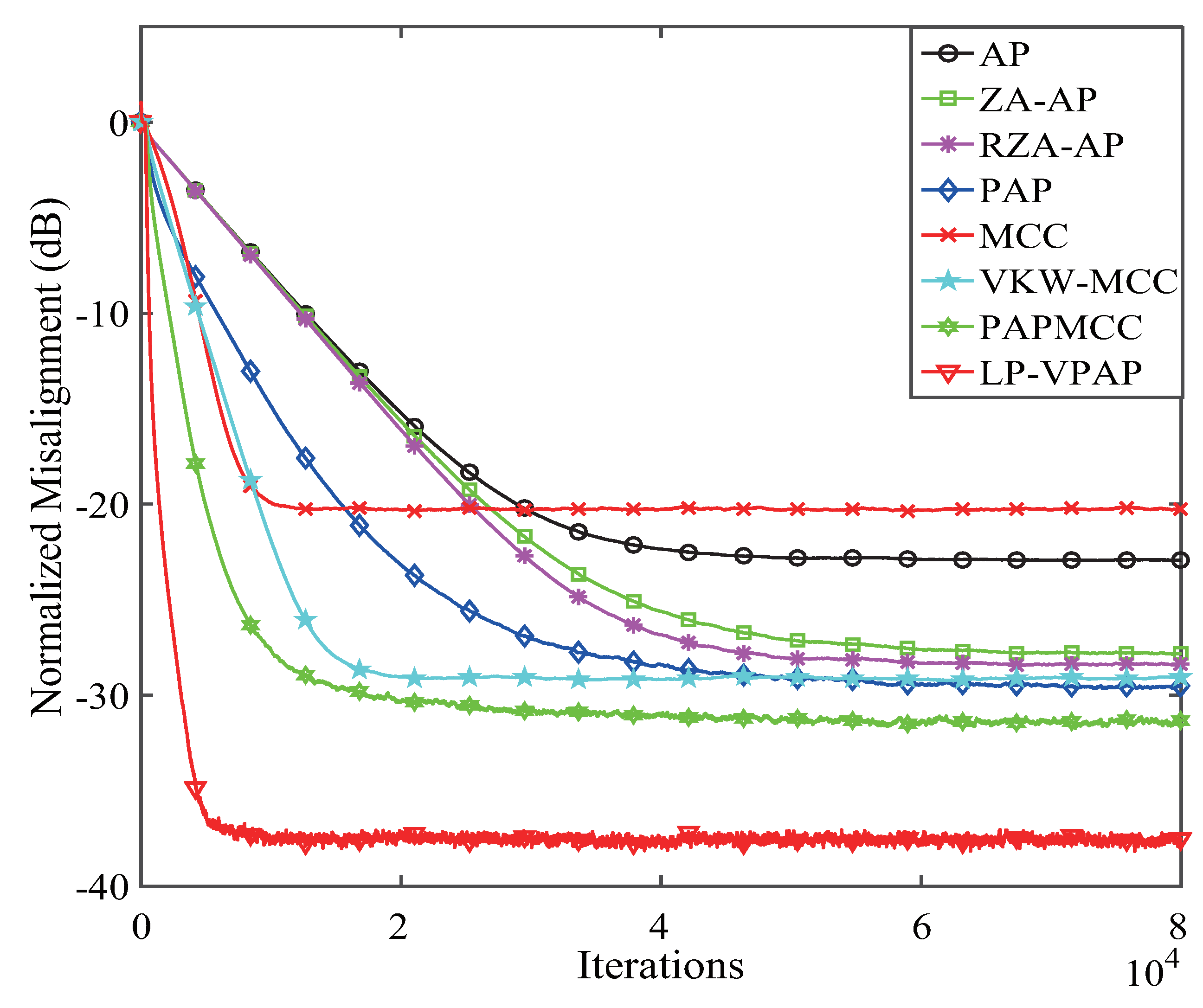

4.2. Performance Comparisons of the LP-VPAP Algorithm under Different Input Signals

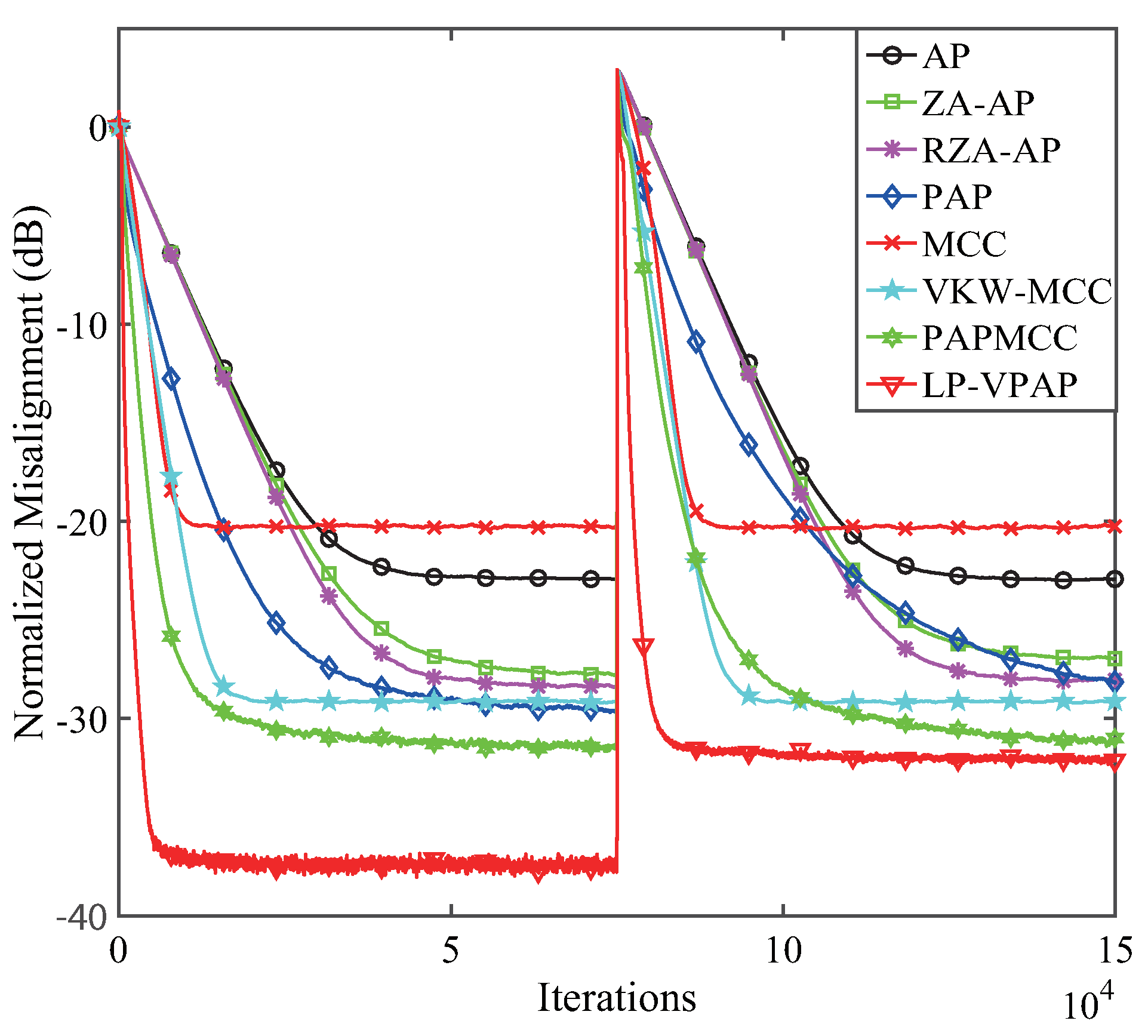

4.3. Tracking Behavior of the LP-VPAP

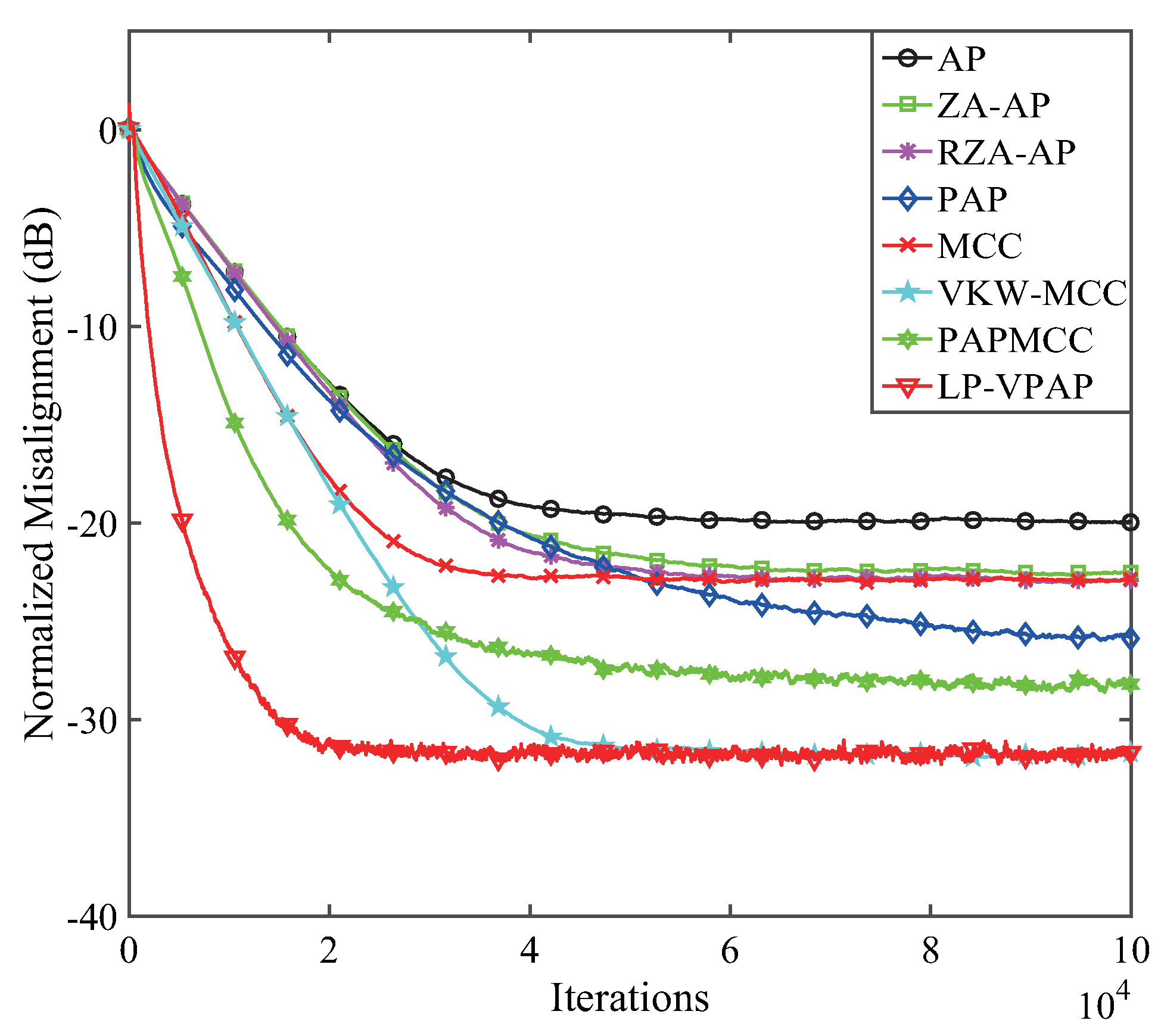

4.4. Performance Comparisons of the LP-VPAP Algorithm with a Less Sparse Echo Path

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Algorithm | Addition | Multiplication | Division |
|---|---|---|---|
| MCC | 1 | | |
| VKW-MCC | 1 | | |
| AP | 0 | | |
| ZA-AP | 0 | | |
| RZA-AP | L | | |
| PAP | L | | |
| PAPMCC | | | |
| LP-VPAP | | | |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jiang, Z.; Li, Y.; Huang, X.; Jin, Z. A Sparsity-Aware Variable Kernel Width Proportionate Affine Projection Algorithm for Identifying Sparse Systems. Symmetry 2019, 11, 1218. https://doi.org/10.3390/sym11101218
