Abstract

The automatic detection, segmentation, localization, and evaluation of the optic disc, macula, exudates, and hemorrhages are very important for diagnosing retinal diseases. One of the difficulties in detecting such regions of interest (RoIs) with computer vision is their symmetries, e.g., between the optic disc and exudates and between exudates and hemorrhages. This paper proposes an original, intelligent, and high-performing image processing system for the simultaneous detection and segmentation of retinal RoIs. The method is based on image decomposition into small boxes and local texture analysis. The processing flow contains three phases: preprocessing, learning, and operating. As a first novelty, we propose a feature selection method based on statistical analysis of confusion matrices for different feature types (co-occurrence matrix, fractal, and local binary pattern features). The selected features are chosen mainly to differentiate between similar RoIs. The second novelty is the fusion of local classifiers: the local classifiers associated with features are grouped into global classifiers corresponding to the RoIs. The local classifiers are based on minimum distances to the class representatives, and the global classifiers are based on confidence intervals, weights, and a voting scheme. To improve RoI detection performance, a deep convolutional neural network trained by supervised learning is proposed for blood vessel segmentation. Finally, experimental results on real images from different databases validate the proposed methodology and algorithms.

1. Introduction

Eye disorders can lead to partial or even total loss of vision, significantly affecting quality of life []. To ensure timely medical intervention and treatment, early detection relies on the analysis of retinal components such as the optic disc (OD), macula (MA), blood vessels (BVs), exudates (EXs), and hemorrhages (HEs). Intelligent processing of retinal images can provide quantitative measures of these specific ophthalmological zones. By combining complex image processing with dedicated neural networks and efficient descriptors and classifiers, a system for determining the RoIs in retinal images can serve as effective decision support in early disease diagnosis. The system's input is the retinal image and its output is the degree of damage of the retinal RoIs. Automatic detection based on specific imaging devices and real-time image processing can support the early-stage decisions of an ophthalmologist. Computer-aided diagnosis in an intelligent system also benefits from computational power, speed, and continuous development. Many approaches to retinal RoI identification and evaluation are found in the literature. However, accurate and simultaneous detection, localization, and evaluation of the OD, MA, BVs, EXs, and HEs is a difficult task. The general difficulty is the symmetry between RoIs, for example between the OD and EXs (color and, sometimes, segmented shapes) and between EXs and HEs (segmented shapes). Such a system therefore needs intelligent algorithms for image processing and interpretation, together with sufficient computational power. Usually, it must suppress the similarities (symmetries) and highlight the differences.

The first RoI, the OD, is the easiest to detect, due to its shape, color, size, and structure. Therefore, many papers address its detection and localization [,,,,,,,,,,,,]. For example, the maximum number of decisions from a set of detectors can be used to detect the center of the OD []. By selecting some textural features and decomposing the image into overlapping boxes, OD detection becomes simpler and more efficient []. Taking into account the density of large blood vessels in the OD region, the authors in [,] proposed fast and accurate methods for OD detection. Considering that the OD has the highest radial symmetry, a methodology based on a radial symmetry detector and the separation of vascular information is used in [] for OD localization. The OD and EXs are sometimes similar in color, intensity, and shape; in pathological images, a large EX can resemble the OD. To overcome this problem, an automatic OD detection method based on the symmetry axis of the blood vessel distribution in this region is proposed in []. Some authors remove the OD before detecting and localizing EXs. To this end, the authors in [] proposed a methodology to differentiate EXs from the OD using features computed on different color components. The optic disc is identified as a region where the majority of pixels show a large difference between the R and G channels. Next, a thresholding method is applied to extract the exudates from the obtained binary image. Features such as mean intensity, entropy, uniformity, standard deviation, and smoothness are computed to improve accuracy. These features take different values for EXs and the OD, so EXs can be identified by removing the areas whose feature values do not match. For optic disc detection, an algorithm based on a 2D Gaussian filter and blood vessel segmentation was implemented in []. Also for OD detection, Pereira et al. [] applied an algorithm based on ant colony optimization and anisotropic diffusion. More recently, the authors in [] proposed a supervised deep learning model for OD abnormality detection, applied to global retinal images from different datasets. The results show good accuracy and indicate potential applications. Automatic image analysis methods for detecting and segmenting the MA and fovea are currently based on support vector machine classification [], hierarchical image decomposition [,], statistical segmentation methods [], deep learning [], and pixel intensity characteristics []. The authors in [] presented a method that combines different OD and MA classifiers to benefit from their strengths (high accuracy and low computation time). To detect macular degeneration, Veras et al. [] proposed algorithms to localize and segment the MA. Sekhar et al. [] address blood vessel localization, segmentation, and their spatial relations in order to detect the optic disc and macula. Based on low pixel intensity, the authors in [] also proposed an MA detection technique, together with an algorithm for estimating the OD diameter. Akram et al. [] presented an artificial-intelligence-based system for evaluating macular edema to support ophthalmologists in the early detection of the disease. They used a comprehensive feature set and a Gaussian-mixture-model-based classifier for accurate detection of the MA. Chaudhry et al. [] detect the macula in images from an autofluorescence imaging device, relative to the OD position. This automatic positioning is based on a top-down approach using vascular tree segmentation and skeletonization.
In [], the authors proposed a method to detect and localize the macula center in two steps: first, context-based information is aggregated with a statistical model to obtain the approximate location of the macula; second, seeded mode tracking is used for the final localization of the macula center.

Over time, many methods and approaches have been used for the detection of EXs (e.g., thresholding methods applied to the green channel [,,]). Because illumination conditions vary between images, preprocessing algorithms for illumination correction are necessary. Kaur and Mittal [] proposed an algorithm for EX detection that first identifies and rejects healthy areas based on their characteristics and then segments the EXs from the remaining regions using region growing. Bu et al. [] presented a hierarchical procedure for EX detection in eye fundus images to address difficulties such as recognizing similarly colored objects (e.g., cotton wool spots, the optic disc, and the normal macular reflection). An automated identification of EXs based on artificial intelligence is obtained in []. After an initial preprocessing phase, the image is segmented by fuzzy clustering, yielding a dataset of regions of interest. For each region, characteristics such as size, color, edge, and texture are extracted, and a deep neural network based on these characteristics detects the regions containing EXs. Furthermore, the method in [] localizes and evaluates exudates using various image processing techniques, morphological filters, and image features.

Commonly used approaches for HE identification can also be grouped into categories such as thresholding, segmentation, mathematical morphology, classification, clustering, and neural networks. For example, Seoud et al. [] described and validated a method for identifying micro-aneurysms and HEs in retinal images, which can be used in the diagnosis and grading of diabetic retinopathy. Their contribution is a set of dynamic shape features that describe the evolution of shapes in the processed image and allow lesions to be distinguished from blood vessels. The method was tested on six image datasets, both public and private. A splat-based feature classification algorithm for detecting large, irregular HEs in retinal images was proposed in [].

Different classifiers have been used for the detection and segmentation of retinal RoIs: model-based (template matching), minimum distance between feature vectors, voting schemes, and, more recently, neural networks (NNs). Garcia et al. [] proposed an algorithm for the computer-based identification of lesions due to diabetic retinopathy. The algorithm is integrated into a system that supports the ophthalmologist's decisions in detecting and monitoring the disease. The authors analyze the performance of three classifiers in the automated detection of lesions or exudates: support vector machine, radial basis function network, and multilayer perceptron. By applying different criteria and testing on 67 images, they conclude that the multilayer perceptron evaluated with an image-based criterion gives the best results. This research was extended in [], where new criteria and classifiers (e.g., logistic regression) were tested against those of the previous paper, and the relationship between the extracted feature set and the object classes is highlighted. Again, the multilayer perceptron performed best among the selected methods, but only by a small margin over logistic regression. Moreover, a new supervised neural network approach for blood vessel detection was proposed in []. The algorithm uses an NN architecture for pixel classification and represents each pixel by a vector of gray-level and moment-invariant-based features. Its performance was tested on the STARE [] and DRIVE [] datasets and was better than that of other existing solutions. The authors in [] used CNNs (convolutional NNs) to detect hemorrhages and sought to simplify and speed up training by heuristically selecting the training samples.

The authors in [] presented a methodology and its implementation based on a voting procedure that aims to increase decision accuracy. They proposed majority voting and a probabilistic approach to evaluate the correctness of the decision. The main goal of the work was to solve geometrically constrained detection problems in image processing. For example, the algorithm was tested for OD identification on the MESSIDOR dataset [], with good accuracy. To increase classification performance, voting schemes and NN approaches were combined in []. In this case, two connected NNs were considered: one for feature selection and the other for classification, based on local detectors. Finally, a voting scheme with different weights for the local classifiers was used, taking into account the results from the associated confusion matrices.

To increase the efficiency of the classifiers, primary processing of retinal images is necessary. Nayak et al. [,] presented algorithms for identifying and monitoring glaucoma and diabetic retinopathy using primary processing (histogram equalization, filtering, and morphological operations). Specific characteristics are established for each disease, and, based on these features, an artificial NN classifier identifies the presence of a particular disease and its evolution. The authors in [] introduced an algorithm based on morphological operations for identifying micro-aneurysms and HEs in retinal images. The image is first preprocessed, and then important regions such as the OD, blood vessels, and fovea are successively removed from the retinal image, so that only the HEs and micro-aneurysms remain. The reported sensitivity and specificity are similar to those of other papers. The method for computer-aided detection of diabetic retinopathy developed in [] consists of the following phases: image enhancement by local histogram equalization, Gabor filtering, SVM (support vector machine) classification, HE detection, fovea localization, and diabetic retinopathy identification. The algorithms were implemented and tested on the free HRF database [] with acceptable sensitivity and specificity. The authors in [] presented a method and algorithms for the successive detection of important RoIs in retinal images using an artificial neural network (ANN) and k-means clustering. The ANN was simulated on a common PC, and its long computation time made a practical implementation difficult. The application was developed only for the MESSIDOR database.

As a technical novelty, this paper proposes an Intelligent System for diagnosis and evaluation of regions of interest from Retinal Images (ISRI), with four functions, all of which are addressed here: F1, OD detection; F2, MA detection; F3, EX detection and size evaluation; and F4, HE detection and size evaluation. The two main contributions are (a) feature selection based on statistical analysis of confusion matrices for different feature types, and (b) the weight-based fusion of local classifiers (associated with features) into global classifiers (corresponding to RoIs). The paper is organized as follows. Section 2 describes and implements the methodology and algorithms for the primary processing of retinal images aimed at RoI detection, segmentation, and size estimation. Section 3 reports and analyzes the experimental results obtained on images from two databases (STARE and MESSIDOR). Finally, Sections 4 and 5 present the discussion and conclusions, respectively.

2. Materials and Methods

2.1. ISRI Architecture

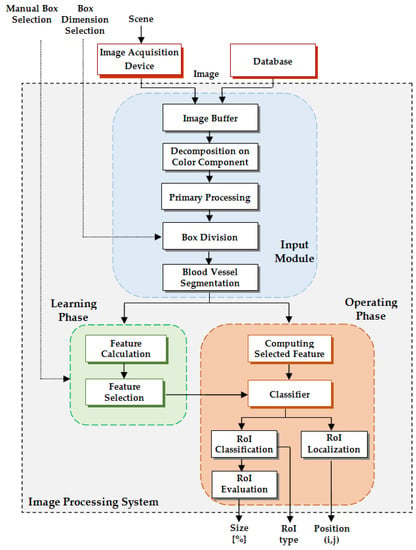

We propose an algorithm and software implementation for the identification and evaluation of four RoIs from retinal images: OD, MA, EX, and HE. It can be considered as an intelligent image processing system and diagnosis support for eye diseases. The basic methodology for determination of the mentioned RoIs is texture analysis in small patches (boxes). ISRI has three inputs (retinal image, I; box dimension setting, BDS; and manual box selection, MBS—the last two only for the learning phase) and three outputs (RoI type, RoI; RoI localization, L(i,j); and size estimation of RoI, S [%]). The ISRI concept is shown in Figure 1. As can be seen, it has two phases: a Learning Phase (LP), considered as a calibration one, and an Operating Phase (OP), considered as a measuring one. Also, ISRI has an Input Module (IM) for image preprocessing which is active in both phases.

Figure 1.

Intelligent System for diagnosis and evaluation of regions of interest from Retinal Images concept (RoI – Region of Interest).

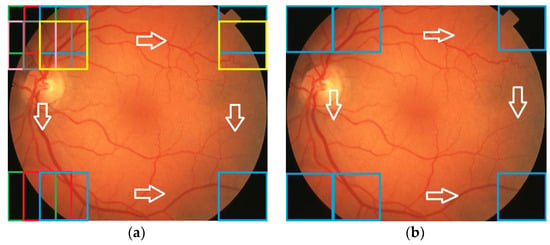

The IM contains five blocks in a pipeline structure: Image Buffer (IB), Decomposition on Color Components (DCC), Primary Processing (PP), Box Division (BD), and Blood Vessel Segmentation (BVS). The retinal image comes from an Image Acquisition Device (IAD) or from an Image Database (DB) and is stored in the IB for processing. First, the DCC block decomposes it into the color components of the RGB and HSV (Hue, Saturation, Value) spaces. The PP block then enhances the representation using the CLAHE algorithm (Contrast-Limited Adaptive Histogram Equalization []). The images to be analyzed are further divided into boxes by the BD block using one of two algorithms: the sliding box algorithm (Figure 2a) or the nonoverlapping box algorithm (Figure 2b). The box dimension is selected manually (Box Dimension Selection, BDS) depending on the image resolution, the division algorithm, and the RoI type. The last operation in this initial processing phase is blood vessel segmentation by the BVS block.

Figure 2.

Exemplification of box division of the analyzed image: (a) sliding box algorithm; (b) nonoverlapping box algorithm.

The LP contains two successive steps: Step 1, calculating the values of a set of features for the regions of interest (Feature Calculation module, FC); and Step 2, selecting effective features for class representation (Feature Selection module, FS). Manually selected boxes are used in the LP (Manual Box Selection, MBS). The OP also has two successive steps: Step 1, calculating the values of the selected features corresponding to different RoIs for the boxes extracted from the input image (Computing Selected Features module, CSF); and Step 2, assigning the class label to the RoI (Classifier module, C). The RoI_C block selects the box type (OD, MA, EX, HE) based on the decisions of the RoI classifiers. The size of the detected RoI is evaluated in the RoI_E block by segmentation and counting of the “1” pixels. RoI localization is performed by the RoI_L block, which reports the position (i,j) of the upper-left corner of the investigated box.

To calibrate and test the system, two well-known public databases were used: STARE [] and MESSIDOR []. Both contain images of healthy subjects and of pathological cases with abnormalities. The STARE database consists of 400 retinal images captured with a TopCon TRV-50 fundus camera. It also includes two sets of manual segmentations, made by two ophthalmologists, which serve as references. The MESSIDOR database contains three sets of eye fundus images (1200 color images acquired with a color video 3CCD camera on a TopCon TRC NW6 nonmydriatic retinograph with a 45° field of view), with 8 bits per color plane at 1440 × 960, 2240 × 1488, or 2304 × 1536 pixels. Each image is stored in TIFF format and has an associated medical annotation.

2.2. Input Module

The input module has four main functions: image decomposition into color channels, primary processing of each component, box division on each color channel, and blood vessel segmentation in each box. Because retinal images have zones of different brightness and contain noise in the form of various small background patterns, primary processing for image enhancement is needed. The first primary processing operation is therefore noise rejection. Because of their efficiency and edge-preserving properties, a median filter (3 × 3 kernel) and a morphological filter (erosion and dilation) were used in the PP block for noise reduction on each color component of interest (R, G, B, and H). Because eye fundus images usually have low local contrast, histogram equalization is then performed. Another primary processing operation, binarization, is used in blood vessel segmentation (IM) and in the final RoI segmentation (OP). These operations are commonly used and are not detailed in this work. Because of the noise elimination step, EXs or HEs smaller than 3 × 3 pixels cannot be detected.
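The noise-rejection step can be sketched in NumPy as below. This is a minimal illustration, not the ISRI implementation: it assumes edge-replicated borders and a 3 × 3 structuring element for the erosion and dilation (the text specifies only the 3 × 3 median kernel), and the helper names are hypothetical. The synthetic example also shows why lesions smaller than 3 × 3 pixels are lost.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def windows_3x3(img):
    """All 3 x 3 neighborhoods of img (edge-replicated border), shape (H, W, 3, 3)."""
    return sliding_window_view(np.pad(img, 1, mode="edge"), (3, 3))


def median_3x3(img):
    return np.median(windows_3x3(img), axis=(-2, -1))


def erode_3x3(img):
    return windows_3x3(img).min(axis=(-2, -1))


def dilate_3x3(img):
    return windows_3x3(img).max(axis=(-2, -1))


def reject_noise(channel):
    """Median filter, then erosion followed by dilation (a morphological opening)."""
    smoothed = median_3x3(channel.astype(np.float64))
    return dilate_3x3(erode_3x3(smoothed))


# Synthetic channel: a 2 x 2 bright spot and a 5 x 5 bright spot on a dark background.
img = np.zeros((20, 20))
img[3:5, 3:5] = 255
img[10:15, 10:15] = 255
out = reject_noise(img)
print(out[3:5, 3:5].max())  # 0.0: the 2 x 2 spot is removed
print(out[12, 12])          # 255.0: the larger spot survives
```

The median alone already removes the 2 × 2 spot, since at most 4 of the 9 pixels in any window belong to it; the opening then removes thin residues while keeping the core of larger structures.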

As mentioned above, two types of box division algorithms are used. For OD and MA localization, a sliding box algorithm is used, because a box must contain the entire RoI to be analyzed and a fine match of these RoIs is more likely with a sliding window than with nonoverlapping (fixed) windows. For EX and HE, a nonoverlapping algorithm is used because it requires less computation and is needed for the global evaluation of the disease (its spread over the entire image).

The sliding box algorithm starts with the box in the upper left corner of the image and moves it with a specified sliding step (Figure 2a). The box slides to the right until it touches the right margin of the image. It then returns to the left margin and slides down to the next row. The algorithm runs until the box reaches the lower right corner. The nonoverlapping box algorithm for EX and HE is shown in Figure 2b. In this case, the boxes are smaller than those used for OD or MA (¼ of the sliding box dimension).

For high-precision localization, an optimal box size must be chosen depending on the database, so that the box frames the RoI well (the minimum dimension that frames the RoI). This dimension is chosen experimentally (input BDS). At each step of the sliding box algorithm, a new box is considered, so that a matrix (grid) of boxes is created. Each box has a unique ID consisting of its row and column numbers in the grid of boxes. The sliding step depends on the resolution of the input images (as does the box dimension); for example, it was experimentally set to 10 pixels for the STARE database and to 15 pixels for the MESSIDOR database.
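The two box division algorithms can be sketched as a generator of grid IDs and upper-left corners. The function names and the image and box sizes in the example are hypothetical. The sketch assumes that the vertical step equals the horizontal sliding step, that positions where the box would cross the image border are skipped, and that "¼ of the sliding box dimension" refers to the side length.

```python
def sliding_boxes(height, width, box, step):
    """Yield (row_id, col_id, i, j) for each box position.

    (row_id, col_id) is the box ID in the grid of boxes and (i, j) is its
    upper-left corner. The box moves right by `step` until it reaches the
    right margin, then returns to the left margin one row (`step` pixels) lower.
    """
    for row_id, i in enumerate(range(0, height - box + 1, step)):
        for col_id, j in enumerate(range(0, width - box + 1, step)):
            yield row_id, col_id, i, j


def nonoverlapping_boxes(height, width, box):
    """Nonoverlapping division: the same scan with the step equal to the box size."""
    return sliding_boxes(height, width, box, step=box)


# Hypothetical sizes, chosen only to keep the numbers small.
H, W = 100, 120          # image rows, columns
BOX, STEP = 40, 10       # OD/MA box side and sliding step
grid = list(sliding_boxes(H, W, BOX, STEP))
print(len(grid), grid[-1])       # 63 (6, 8, 60, 80)
small = list(nonoverlapping_boxes(H, W, BOX // 4))
print(len(small))                # 120
```

Treating the nonoverlapping division as a sliding scan with step equal to the box side keeps a single grid-ID convention for both algorithms.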

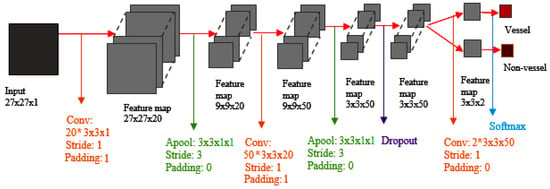

Blood vessel segmentation (BVS block) is needed only for functions F1 and F4: for more accurate OD detection in F1 (the blood vessels must be highlighted) and for more accurate HE detection in F4 (the blood vessels must be removed). In the other cases, blood vessel detection is not performed. The input to BVS is a box obtained by the box division algorithms on the green channel (which gives better blood vessel contrast). This box is divided into smaller boxes of 27 × 27 pixels using a sliding box algorithm with a step of 1 pixel. These small boxes are the input to the main module of BVS, the CNN. The CNN has three convolutional layers (one of which is fully connected), two average pooling layers, one dropout layer, and a Softmax layer. The CNN-based architecture of BVS is presented in Figure 3 []. The input data of the network (the input layer) are 27 × 27 pixel boxes on the green channel (STARE DB case). For higher-resolution images, such as those from MESSIDOR, larger boxes (55 × 55 pixels) can be used, but the CNN must then be adjusted. (Note that the learning phase of blood vessel segmentation does not have to be repeated for different input image resolutions, although retraining is possible on demand, at the cost of training time.) The boxes are passed through the entire network and classified into two classes: vessel (V) and nonvessel (NV). The second layer, with 20 neurons (local 3 × 3 filters), is convolutional, with both stride and padding equal to 1. The third layer is an average pooling layer, which subsamples the image by taking the mean in a 3 × 3 sliding box.

Figure 3.

Convolutional neural network (CNN)-based architecture of blood vessel segmentation (BVS).

The resulting image (box) has a resolution of 9 × 9 (the stride is 3). The next layer, with 50 neurons (filters), is also convolutional, with both stride and padding equal to 1, so its output is 9 × 9 pixels. The fifth layer is another pooling layer with a stride of 3 (padding 0), which reduces the box dimension to 3 × 3. It is followed by a dropout layer (rate 0.5) for network regularization. The penultimate layer is a fully connected convolutional layer with 2 neurons (for classification), stride 1, and padding 0. The final layer of the CNN is a Softmax layer, used together with a loss function, with two outputs: V and NV. Blood vessel segmentation is performed by pixelwise classification. The CNN was trained for 50 epochs on 4000 small boxes of 27 × 27 pixels (2000 with vessels and 2000 without) from the green channel. The CNN for blood vessel segmentation was implemented using MatConvNet.
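The following NumPy sketch traces one 27 × 27 green-channel box through the layer sequence described above, to make the feature-map sizes (27 → 9 → 3 → 1) explicit. It is not the MatConvNet model used in the paper: the weights are random and untrained, so the output probabilities are meaningless; the text does not list activation functions, so none are inserted; inverted dropout scaling and the output order [V, NV] are assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

rng = np.random.default_rng(0)


def conv(x, w, b, pad):
    """Stride-1 2D convolution (cross-correlation). x: (H, W, Cin), w: (k, k, Cin, Cout)."""
    k = w.shape[0]
    x = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    win = sliding_window_view(x, (k, k), axis=(0, 1))   # (H', W', Cin, k, k)
    return np.einsum("hwcab,abcd->hwd", win, w) + b


def avg_pool(x, k=3, stride=3):
    """Average pooling over k x k windows with the given stride and no padding."""
    win = sliding_window_view(x, (k, k), axis=(0, 1))[::stride, ::stride]
    return win.mean(axis=(-2, -1))


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


# Random, untrained parameters: useful only for checking shapes.
W1, b1 = rng.normal(0, 0.1, (3, 3, 1, 20)), np.zeros(20)
W2, b2 = rng.normal(0, 0.1, (3, 3, 20, 50)), np.zeros(50)
W3, b3 = rng.normal(0, 0.1, (3, 3, 50, 2)), np.zeros(2)


def forward(box, train=False):
    x = box[..., None].astype(np.float64)   # 27 x 27 x 1 green-channel box
    x = conv(x, W1, b1, pad=1)               # 27 x 27 x 20
    x = avg_pool(x)                          # 9 x 9 x 20
    x = conv(x, W2, b2, pad=1)               # 9 x 9 x 50
    x = avg_pool(x)                          # 3 x 3 x 50
    if train:                                # dropout, rate 0.5 (inverted scaling)
        x = x * (rng.random(x.shape) >= 0.5) / 0.5
    x = conv(x, W3, b3, pad=0)               # 1 x 1 x 2, the "fully connected" conv
    return softmax(x.ravel())                # assumed order: [P(V), P(NV)]


probs = forward(rng.random((27, 27)))
print(probs.shape, probs.sum())
```

A 3 × 3 × 50 filter applied without padding to a 3 × 3 × 50 map yields one value per filter, which is why a convolutional layer can play the role of the fully connected classifier here.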

2.3. Learning Phase

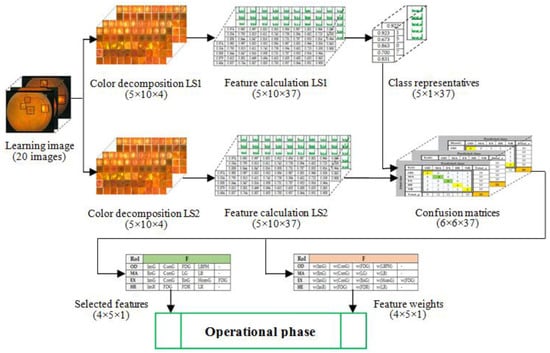

The learning phase is necessary because the system can be used with different DBs and different fundus cameras. The parameters set in the LP can be stored and need not be recomputed each time the system is used. The LP is usually needed when the retinal imaging device changes, another retinal database is used, or other parameters are required; in practice, it performs the system calibration. A supervised method was used for system training because, in this case, it performs better and requires less computational effort than an unsupervised one. To this end, a set of candidate features (CF, Table 1) was calculated for each box of a learning set (LS). Five classes are considered: OD, MA, EX, HE (as RoIs), and the class of the rest, NR (Non-RoI). The LP block diagram is presented in Figure 4. To select the features used in the segmentation process, the LP is divided into two tasks: (1) establishing the representative value of each feature for each class, and (2) selecting features and establishing the classifiers for the segmentation of each class. Correspondingly, LS is divided into two disjoint sets: LS1 (learning set for Task 1) and LS2 (learning set for Task 2).

Table 1.

Feature set (candidate features, CF) for learning phase.

Figure 4.

Block diagram for the learning phase.

Each class has 10 representative boxes in LS1 and 10 in LS2 (chosen experimentally and arbitrarily). Thus, the two learning sets have the same size:

$$|LS1| = 5 \times 10 = 50 \tag{1}$$

$$|LS2| = 5 \times 10 = 50 \tag{2}$$

Task 1. For each feature F in CF, the representative of each class Ci is established by averaging the corresponding feature values over the 10 representative boxes B of that class in LS1:

$$\bar{F}(C_i) = \frac{1}{10} \sum_{B \in LS1,\; B \in C_i} F(B)$$

An example of the representative feature calculation for the OD class is given in the next section (Table 2).

Table 2.

Exemplification of feature calculation for the OD class (marked with orange are the features selected for OD).

Task 2. This task consists of feature selection and weight assignment for the classifiers. Because the classification process takes heterogeneous features into account, it is convenient to base image classification on weighted local classifiers. To this end, another set (LS2) of 50 boxes (10 for each class) is used. Using the class representatives established in Task 1, each box of LS2 is classified, for each feature, by minimum distance to the class representatives. The distances considered are the absolute difference for scalar features and the Minkowski distance of order 1 for the Local Binary Pattern (LBP) histogram (Table 1).
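Tasks 1 and 2 for a single feature can be sketched as follows: class representatives are LS1 averages, each LS2 box is assigned to the nearest representative, and the results are accumulated in a confusion matrix. The function names and the synthetic feature values are hypothetical, and the CM orientation (rows are actual classes, columns are predicted classes) is an assumption. The toy data place EX close to OD and HE close to MA, to mimic the symmetries discussed in the Introduction.

```python
import numpy as np

CLASSES = ["OD", "MA", "EX", "HE", "NR"]


def representatives(values, labels):
    """Task 1: the representative of each class is the mean feature value over its LS1 boxes."""
    values = np.asarray(values, dtype=float)
    labels = np.asarray(labels)
    return {c: values[labels == c].mean(axis=0) for c in CLASSES}


def distance(a, b):
    """Absolute difference for scalars; Minkowski order-1 (L1) distance for histograms."""
    return float(np.abs(np.asarray(a, float) - np.asarray(b, float)).sum())


def nearest_class(value, reps):
    """Minimum-distance decision for one feature."""
    return min(reps, key=lambda c: distance(value, reps[c]))


def confusion_matrix(values, labels, reps):
    """Task 2: classify the LS2 boxes; rows = actual class, columns = predicted class."""
    cm = np.zeros((len(CLASSES), len(CLASSES)), dtype=int)
    for v, actual in zip(values, labels):
        cm[CLASSES.index(actual), CLASSES.index(nearest_class(v, reps))] += 1
    return cm


# Synthetic scalar feature (hypothetical values): EX lies close to OD, HE close to MA.
centers = {"OD": 200.0, "MA": 40.0, "EX": 180.0, "HE": 60.0, "NR": 100.0}
ls1 = [(centers[c] + d, c) for c in CLASSES for d in np.linspace(-5, 5, 10)]
ls2 = [(centers[c] + d, c) for c in CLASSES for d in np.linspace(-12, 12, 10)]
reps = representatives(*zip(*ls1))
cm = confusion_matrix(*zip(*ls2), reps)
print(cm.trace())  # 46: e.g., one OD box is predicted as EX and one EX box as OD
```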

2.3.1. Feature Evaluation for Class Representatives

To calibrate the system for different features and color channels (corresponding to RoIs), the following set of texture features is investigated [,]: first-order statistical features (Im, mean intensity), features extracted from co-occurrence matrices (Con, contrast; En, energy; Ent, entropy; Hom, homogeneity; Cor, correlation; Var, variance), fractal features (Dm, mass fractal dimension; L, lacunarity), and LBP features (LBP, Local Binary Pattern histogram) (Table 1). The mean intensity Im is a statistical characteristic, calculated for each box and color channel as the average pixel intensity, $I_m = \frac{1}{M^2}\sum_{i=1}^{M}\sum_{j=1}^{M} I(i,j)$, where M × M is the box dimension. The co-occurrence matrix Cd is computed as the average of eight co-occurrence matrices of dimension K × K, where K is the number of levels in the monochrome image, with a displacement d in the directions 0°, 45°, 90°, 135°, 180°, 225°, 270°, and 315°. For Con, Ent, En, Hom, Cor, and Var, the parameter d (distance in pixels) is written as a subscript.
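A minimal sketch of Im and of the co-occurrence features is given below. Table 1 is not reproduced here, so the formulas are the usual Haralick-style definitions and should be read as assumptions: 8-bit intensities quantized to K = `levels` gray levels, each directional matrix normalized to sum to 1 before averaging, homogeneity computed with 1/(1 + |i − j|), energy as the sum of squared entries, and base-2 entropy.

```python
import numpy as np

# Offsets (row, col) for 0, 45, 90, 135, 180, 225, 270 and 315 degrees,
# assuming 0 degrees points right and 90 degrees points up.
DIRECTIONS = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]


def mean_intensity(box):
    """Im: average pixel intensity over the M x M box."""
    return float(np.mean(box))


def cooccurrence(box, d=1, levels=8):
    """C_d: average of the 8 normalized directional co-occurrence matrices (K x K)."""
    q = (np.asarray(box, dtype=np.int64) * levels) // 256   # 8-bit -> K levels
    M, N = q.shape
    acc = np.zeros((levels, levels))
    for dr, dc in DIRECTIONS:
        dr, dc = dr * d, dc * d
        r0, r1 = max(0, -dr), M - max(0, dr)
        c0, c1 = max(0, -dc), N - max(0, dc)
        a = q[r0:r1, c0:c1]                           # reference pixels
        b = q[r0 + dr:r1 + dr, c0 + dc:c1 + dc]       # pixels displaced by (dr, dc)
        m = np.zeros((levels, levels))
        np.add.at(m, (a.ravel(), b.ravel()), 1)
        acc += m / m.sum()
    return acc / len(DIRECTIONS)


def cooccurrence_features(P):
    i, j = np.indices(P.shape)
    mu_i, mu_j = (i * P).sum(), (j * P).sum()
    var_i, var_j = ((i - mu_i) ** 2 * P).sum(), ((j - mu_j) ** 2 * P).sum()
    nz = P[P > 0]
    denom = np.sqrt(var_i * var_j)
    return {
        "Con": ((i - j) ** 2 * P).sum(),
        "En": (P ** 2).sum(),
        "Ent": -(nz * np.log2(nz)).sum(),
        "Hom": (P / (1.0 + np.abs(i - j))).sum(),
        "Cor": ((i - mu_i) * (j - mu_j) * P).sum() / denom if denom > 0 else 0.0,
        "Var": var_i,
    }


# Checkerboard box: horizontal/vertical neighbors always differ, diagonal ones never do.
box = (np.indices((8, 8)).sum(axis=0) % 2) * 255
P = cooccurrence(box, d=1)
f = cooccurrence_features(P)
print(mean_intensity(box), f["Con"])  # 127.5 24.5
```

Averaging over opposite directions (e.g., 0° and 180°) makes Cd symmetric, so the row and column variances coincide and Var can be taken from either.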

For the DFD calculation, a 3D configuration is created and covered with boxes of dimension r × r × s, where r is the division factor and s is the box height, s = r·Imax/M []. Here, Imax is the maximum intensity level and M × M is the image dimension. Each pixel (i,j) in the square Sr(u,v) of dimension r × r has an intensity value I(i,j) that belongs to a box in the stack of boxes of height s. The maximum of I(i,j) for (i,j) ∈ Sr(u,v) is denoted by p(u,v) and the minimum by q(u,v) (Table 1). The number nr(u,v), computed from the difference between p(u,v) and q(u,v), covers the 3D relief of the monochrome image created by Sr(u,v) and I(i,j), for (i,j) ∈ Sr(u,v). Therefore, $N_r = \sum_{u,v} n_r(u,v)$ covers the entire configuration created on the monochrome image I. The DFD is then computed as in the standard box-counting algorithm, i.e., from the slope of log N_r versus log(1/r). Lacunarity, which characterizes homogeneity and gaps, is a powerful tool in medical image classification and is also a fractal feature []. It can be considered a complementary feature because it can differentiate between two textures with the same fractal dimension. A high lacunarity value indicates that the texture has many holes and high heterogeneity.
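The differential box-counting procedure described above can be sketched as follows. Because Table 1 is not reproduced, the per-square count is assumed to be n_r(u,v) = ⌊p/s⌋ − ⌊q/s⌋ + 1, Imax = 255 is assumed for 8-bit images, and the set of square sizes r is hypothetical. The dimension is the least-squares slope of log N_r against log(1/r).

```python
import numpy as np


def dbc_dimension(img, sizes=(2, 4, 8, 16, 32), i_max=255):
    """Differential box-counting fractal dimension of an M x M monochrome image."""
    img = np.asarray(img, dtype=np.float64)
    M = img.shape[0]
    xs, ys = [], []
    for r in sizes:
        s = r * i_max / M                       # box height s = r * Imax / M
        g = M // r                              # number of r x r squares per side
        blocks = img[:g * r, :g * r].reshape(g, r, g, r)
        p = blocks.max(axis=(1, 3))             # p(u, v): max of I(i, j) in S_r(u, v)
        q = blocks.min(axis=(1, 3))             # q(u, v): min of I(i, j) in S_r(u, v)
        n_r = np.floor(p / s) - np.floor(q / s) + 1
        xs.append(np.log(1.0 / r))
        ys.append(np.log(n_r.sum()))            # N_r = sum over (u, v) of n_r(u, v)
    slope, _intercept = np.polyfit(xs, ys, 1)   # slope of log N_r vs log(1/r)
    return float(slope)


rng = np.random.default_rng(0)
flat = np.full((64, 64), 100.0)
noise = rng.integers(0, 256, size=(64, 64))
print(round(dbc_dimension(flat), 6))   # 2.0: a flat surface
print(dbc_dimension(noise))            # between 2 and 3 for a rough surface
```

For a flat image every square needs exactly one box, so N_r = (M/r)², which gives a dimension of 2; rougher textures need more boxes at small r and move the estimate toward 3.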

Another feature successfully used in texture classification is the LBP histogram. For each pixel, a neighborhood of dimension (2h + 1) × (2h + 1) is considered. The contour of the neighborhood contains n = 8h pixels, and the texture defined in the neighborhood of the central pixel is described as the vector

$$V_T = [v_C, v_1, v_2, \ldots, v_n] \tag{3}$$

where vC is the value of the central pixel pC and v1, v2, …, vn are the values of its neighbors p1, p2, …, pn (p1 is the pixel in the upper left corner of the neighborhood). The neighborhood pixels are visited clockwise. Based on the sign (negative or positive) of the difference between each neighbor in VT and the central pixel vC, the LBP code [] is computed as a binary sequence obtained by concatenating the result of each comparison (Equation (4)):

$$LBP = s(v_1 - v_C)\, s(v_2 - v_C) \cdots s(v_n - v_C), \qquad s(x) = \begin{cases} 1, & x \ge 0 \\ 0, & x < 0 \end{cases} \tag{4}$$

The binary LBP code is then converted to decimal format (Equation (5)):

$$LBP = \sum_{k=1}^{n} s(v_k - v_C)\, 2^{\,n-k} \tag{5}$$

The LBP histogram vector $H = [H_0, H_1, \ldots, H_{2^n-1}]$ is calculated as the histogram of the LBP codes of all box pixels.
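For h = 1 (a 3 × 3 neighborhood, n = 8), the LBP code and histogram can be sketched as follows. The text does not state these details, so the sketch assumes s(x) = 1 for x ≥ 0, p1 as the most significant bit (consistent with concatenating the comparisons in clockwise order), codes computed only for interior pixels (border pixels lack a full neighborhood), and a histogram normalized to sum to 1.

```python
import numpy as np

# Neighbors p1..p8 for h = 1, clockwise from the upper-left pixel (row, col offsets).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_codes(box):
    """Decimal LBP code of every interior pixel (p1 becomes the most significant bit)."""
    v = np.asarray(box, dtype=np.int64)
    M, N = v.shape
    center = v[1:M - 1, 1:N - 1]
    codes = np.zeros_like(center)
    for dr, dc in OFFSETS:
        neighbor = v[1 + dr:M - 1 + dr, 1 + dc:N - 1 + dc]
        bit = (neighbor - center >= 0).astype(np.int64)  # s(v_k - v_C)
        codes = (codes << 1) | bit                         # append the bit
    return codes


def lbp_histogram(box, n=8):
    """Normalized histogram H = [H_0, ..., H_(2^n - 1)] of the LBP codes."""
    counts = np.bincount(lbp_codes(box).ravel(), minlength=2 ** n)
    return counts / counts.sum()


def l1_distance(h1, h2):
    """Minkowski distance of order 1 between two histograms."""
    return float(np.abs(np.asarray(h1) - np.asarray(h2)).sum())


patch = np.array([[5, 0, 0],
                  [0, 1, 0],
                  [0, 0, 0]])
print(lbp_codes(patch))        # [[128]]: only p1 is at least as bright as the center
flat_hist = lbp_histogram(np.full((6, 6), 7))
print(flat_hist[255])          # 1.0: every comparison gives 1
print(l1_distance(flat_hist, lbp_histogram(np.full((4, 4), 3))))  # 0.0
```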

At the end of this task, the representatives of the classes are the averages of the feature values for the boxes considered in LS1 (Table 2, Table 3 and Table 4).

Table 3.

LBP on H for the set of 10 boxes for the establishment of the OD class.

Table 4.

Results for class representatives. Marked with green are the features selected for different RoIs.

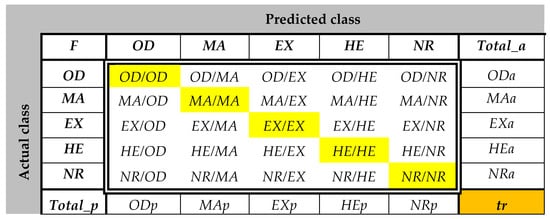

2.3.2. Feature Selection and Establishment of Classifiers

1. Feature Selection

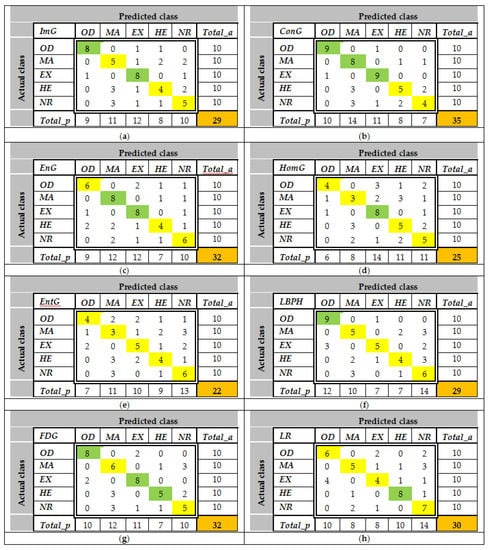

Feature selection is the most difficult problem because of the similarities (symmetries) between retinal RoIs: OD and EX (color and segmented shapes) or EX and HE (segmented shapes). It is performed in the learning phase by means of confusion matrices (CMs), calculated for each feature of the previous set and for each color component. The CMs (Figure 5) are computed using sets of 10 manually selected images (boxes) for each class: OD, MA, EX, HE, and NR. In a CM, ODa is the total number of actual boxes containing OD, MAa is the total number of actual boxes containing MA, and so on. Similarly, ODp is the total number of boxes predicted as OD, MAp is the total number of boxes predicted as MA, and so on. OD/OD is the number of boxes that contain the OD and were correctly predicted; similarly, MA/MA is the number of boxes that contain the MA and were correctly predicted, OD/MA is the number of boxes containing OD that were classified as MA, and so on. tr is the total number of correctly classified boxes, i.e., the trace of the CM. To decide whether a feature is efficient for the classification process, the trace of its CM is computed (Equation (6)):

$$tr = \mathrm{OD/OD} + \mathrm{MA/MA} + \mathrm{EX/EX} + \mathrm{HE/HE} + \mathrm{NR/NR} \tag{6}$$

Figure 5.

Confusion matrix (CM) for one feature F.
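The CM layout above can be sketched in Python. Rows are actual classes, so row sums give ODa, MAa, and so on. Columns are predicted classes, so column sums give ODp, MAp, and so on. The trace is tr, and the sum of all entries is Total. The predictions come from a minimum-distance rule to the class representatives, in line with the minimum-distance local classifiers mentioned in the Abstract. The function names and representative values are hypothetical, and the sketch handles a scalar feature only.

```python
import numpy as np

CLASSES = ["OD", "MA", "EX", "HE", "NR"]  # C1..C5


def nearest_class(value, representatives):
    """Minimum-distance rule for one scalar feature."""
    return min(representatives, key=lambda c: abs(value - representatives[c]))


def confusion_matrix(actual, predicted, classes=CLASSES):
    """Rows: actual class; columns: predicted class; entry (i, j) = Ci/Cj."""
    index = {c: i for i, c in enumerate(classes)}
    cm = np.zeros((len(classes), len(classes)), dtype=int)
    for a, p in zip(actual, predicted):
        cm[index[a], index[p]] += 1
    return cm


# Illustrative representatives for one feature and four labeled LS2 boxes.
reps = {"OD": 0.9, "MA": 0.2, "EX": 0.8, "HE": 0.4, "NR": 0.5}
values = [0.88, 0.81, 0.25, 0.79]
actual = ["OD", "OD", "MA", "EX"]
predicted = [nearest_class(v, reps) for v in values]
cm = confusion_matrix(actual, predicted)
tr, total = cm.trace(), cm.sum()  # trace and Total of the CM
```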

Using the concept of the confusion matrix (Figure 5), three selection criteria are used:

- If , where Total (Equation (7)) represents the number of boxes considered, then the tested feature is relevant and is kept; such a feature can generally be used for several RoIs. In our case, Total = 50.

- To include a feature in the set of selected features for class Ci, the corresponding value Ci/Ci from its CM must be higher than 0.75·Cia, where Cia is the total number of boxes containing Ci used in the learning phase, Task 2 (LS2); in our case, Cia = 10. These criteria act as a performance evaluation that selects the most efficient features from a given set of features.

- If the distance (Minkowski of order 1 []) between two CMs (corresponding to two features) is small, then one of the two features can be rejected as redundant.
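The three selection criteria can be sketched as below. The exact inequality of the first criterion is not reproduced in this text, so `min_fraction` is a hypothetical parameter standing in for it. The second criterion uses 0.75·Cia, with Cia taken as the row sum of the CM. The third criterion uses the Minkowski distance of order 1, i.e., the sum of absolute differences. Only C1 to C4 are tested in the second criterion, because NR gets no classifier.

```python
import numpy as np

CLASSES = ["OD", "MA", "EX", "HE", "NR"]


def passes_trace_test(cm, min_fraction):
    """Criterion 1 (assumed form): tr > min_fraction * Total."""
    return cm.trace() > min_fraction * cm.sum()


def classes_accepting_feature(cm, ratio=0.75, classes=CLASSES):
    """Criterion 2: keep Ci (i = 1..4) when Ci/Ci > ratio * Cia."""
    return [c for i, c in enumerate(classes[:4])  # C5 = NR has no classifier
            if cm[i, i] > ratio * cm[i].sum()]


def cm_distance(cm_a, cm_b):
    """Criterion 3: Minkowski distance of order 1 between two CMs."""
    return int(np.abs(cm_a - cm_b).sum())
```

With 10 boxes per class, the second criterion means that a diagonal entry must be at least 8. A small `cm_distance` between the CMs of two features flags one of them as a redundancy candidate. The cut-off for "small" is not given in the text.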

For simplicity, we considered the following notation:

C1 = OD, C2 = MA, C3 = EX, C4 = HE, C5 = NR

2. Calculation of Feature Weights and Establishment of Classifiers

In the Feature Selection task, a set of efficient features was selected for each class Ci, i = 1, 2, 3, 4 (i.e., excluding NR). Then, for each class Ci and selected feature Fj, a weight wij is calculated from the corresponding CM using Equation (9), and a local classifier Dij (Equation (11)) is associated with wij.

A local classifier Dij for the class Ci and the feature Fj is implemented in the following way. First, a confidence interval is created (Equation (10)), where min and max represent, respectively, the minimum and the maximum of the values Fj for the class Ci obtained from the LS1 set. If Fj = LBPH, then a confidence band for the histogram is considered. The classifier Dij is given in Equation (11), where B is the box to be classified.
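A sketch of the confidence interval and local classifier follows. Equations (10) and (11) are not reproduced here, so the membership rule is an assumption. In this sketch, Dij(B) = 1 if Fj(B) lies in [min, max] of the LS1 values of Ci, and 0 otherwise. For LBPH, the band is assumed to be a per-bin [min, max] range, and every bin must fall inside it. The function names are hypothetical.

```python
import numpy as np


def confidence_interval(ls1_values):
    """[min, max] of feature Fj over the LS1 boxes of class Ci."""
    v = np.asarray(ls1_values, dtype=float)
    return float(v.min()), float(v.max())


def local_classifier(feature_value, interval):
    """Dij(B) for a scalar feature: 1 inside the interval, else 0 (assumed)."""
    lo, hi = interval
    return int(lo <= feature_value <= hi)


def band_classifier(hist, ls1_hists):
    """Dij(B) for LBPH: 1 if every bin lies in the per-bin band (assumed)."""
    h = np.asarray(ls1_hists, dtype=float)
    lo, hi = h.min(axis=0), h.max(axis=0)
    return int(np.all((hist >= lo) & (hist <= hi)))
```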

Based on the local classifiers Dij of each class Ci, a global classifier Di is developed (Equation (12)). The intelligent classification network thus predicts that box B belongs to class Ci, i = 1, 2, 3, 4, if the associated weighted vote Di(B) (Equation (12)) is the maximum and is higher than , where s is the number of features associated with class Ci. Otherwise, B belongs to class C5 = NR. The threshold 0.7 was chosen experimentally. This amounts to a classification process based on a voting scheme with a threshold.

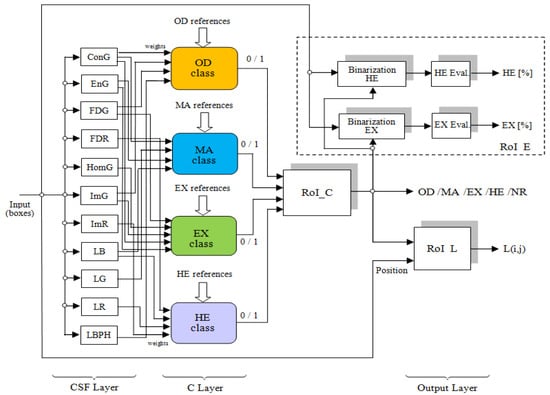
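The weighted voting rule can be sketched as follows, with Di(B) computed as the sum of wij · Dij(B) over the features of Ci. The paper's threshold expression, built from 0.7 and the feature count s, is not reproduced in this text. As a stated assumption, the sketch uses 0.7 times the total weight of the winning class. The weights are illustrative and are not the Table 5 values.

```python
def weighted_vote(local_outputs, weights):
    """Di(B): sum of wij * Dij(B) over the features of class Ci."""
    return sum(w * d for w, d in zip(weights, local_outputs))


def classify_box(local_outputs_by_class, weights_by_class, ratio=0.7):
    """Class with the largest weighted vote if it clears the threshold, else "NR".

    Assumed threshold: ratio * (sum of the winning class's weights).
    """
    votes = {c: weighted_vote(local_outputs_by_class[c], weights_by_class[c])
             for c in local_outputs_by_class}
    best = max(votes, key=votes.get)
    if votes[best] > ratio * sum(weights_by_class[best]):
        return best
    return "NR"


# Illustrative weights for two of the four classifiers.
weights = {"OD": [0.9, 0.8, 0.9, 0.85], "MA": [0.8, 0.9, 0.8, 0.8]}
```

Scaling the threshold by the class weights, rather than by a fixed number, keeps it comparable across classifiers that use different numbers of features.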

Taking the selection criteria into account, a dedicated classifier is built for each RoI in Step 2 of the learning phase: D1 for the OD class, D2 for the MA class, D3 for the EX class, and D4 for the HE class. These classifiers appear in the operating phase (Figure 6). Each classifier uses a specific set of features (Table 5): D1 uses ImG, ConG, FDG, and LBPH; D2 uses ConG, EnG, LG, and LB; D3 uses ImG, ConG, EnG, HomG, and FDG; and D4 uses ImR, FDR, LB, and LR.
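The classifier-to-feature assignment can be written as a small configuration table, using a hypothetical dictionary layout. Taking the union of the sets shows that 11 distinct features must be computed per box. This matches the feature list of the operating phase. The length of each list gives s for the corresponding classifier.

```python
# Feature sets of the four classifiers (Table 5).
CLASSIFIER_FEATURES = {
    "D1 (OD)": ["ImG", "ConG", "FDG", "LBPH"],
    "D2 (MA)": ["ConG", "EnG", "LG", "LB"],
    "D3 (EX)": ["ImG", "ConG", "EnG", "HomG", "FDG"],
    "D4 (HE)": ["ImR", "FDR", "LB", "LR"],
}

# Number of features s associated with each classifier.
FEATURE_COUNT = {d: len(fs) for d, fs in CLASSIFIER_FEATURES.items()}

# Union of the sets: each feature is computed once per box, then shared.
FEATURES_TO_COMPUTE = sorted({f for fs in CLASSIFIER_FEATURES.values() for f in fs})
```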

Figure 6.

Block diagram for the operating phase (OP) and output module (included).

Table 5.

Results of the feature selection test and weight establishment. The classes that accept the corresponding feature for their classifier, together with the associated weights, are marked in gray.

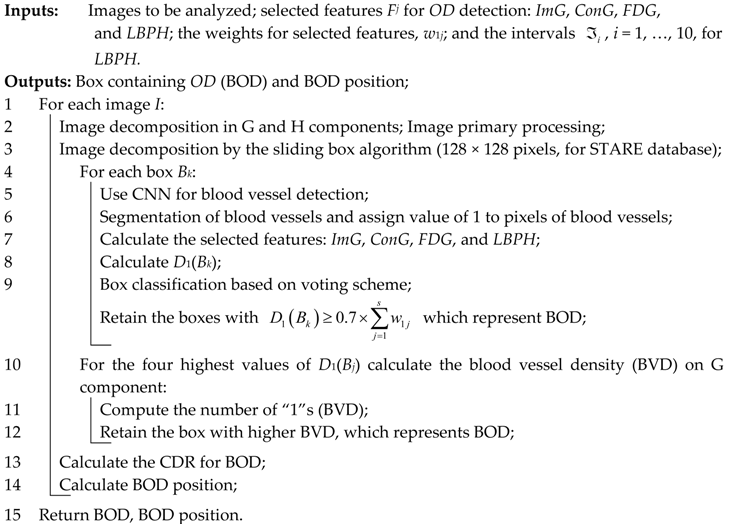

2.4. Operating Phase

The OP (Figure 6) classifies the boxes obtained by dividing the retinal image under investigation. It also determines parameters of the detected RoIs, such as position and size. The block diagram in Figure 6 shows the multilevel structure of this phase. The first level computes the features established in the learning phase. All features are computed in parallel: contrast on green (ConG), energy on green (EnG), homogeneity on green (HomG), mean intensity on green (ImG), mean intensity on red (ImR), differential fractal dimension on green (FDG), differential fractal dimension on red (FDR), lacunarity on blue (LB), lacunarity on green (LG), lacunarity on red (LR), and local binary pattern on H (LBPH). The second level is the classifier layer for OD, MA, EX, and HE. Each feature involved is assigned its weight from the learning phase. The classifiers are based on a voting scheme and use the class representatives, which were also established in the learning phase. Each classifier output is 1 if the box contains the corresponding RoI and 0 otherwise. The last level is the output layer (module) of ISRI. It establishes the RoI type (OD, MA, EX, HE, or NR, via the RoI detection module), the RoI position, and the RoI size (as a percentage of the box area). Size evaluation requires a binarization procedure. The algorithms for RoI detection and evaluation are presented below (Algorithms 1–4).
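Size evaluation in the output layer needs a binarized box. Algorithms 3 and 4 are not reproduced in this text, so the sketch below assumes a plain global threshold. Pixels above the threshold are kept for bright lesions such as EX, and pixels below it are kept for dark lesions such as HE. The function name and threshold value are hypothetical.

```python
import numpy as np


def roi_size_in_box(box, threshold, bright=True):
    """Binarize a box; return (RoI pixel count, percent of box area).

    bright=True keeps pixels above threshold (e.g. EX); bright=False keeps
    pixels below it (e.g. HE). The fixed threshold is an assumption.
    """
    mask = box > threshold if bright else box < threshold
    area = int(mask.sum())
    return area, 100.0 * area / mask.size
```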

(a) OD Detection and Localization

Algorithm 1: Operating Phase (OD)

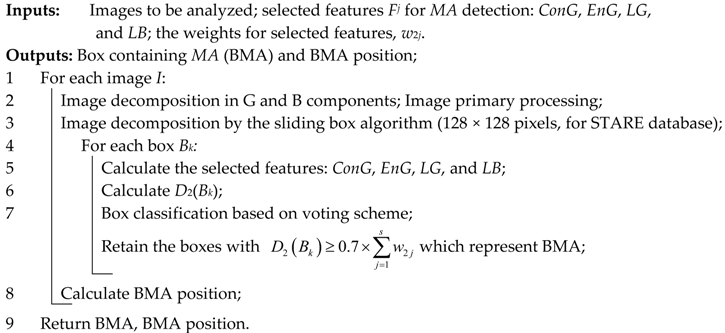

(b) MA Detection and Localization

Algorithm 2: Operating Phase (MA)

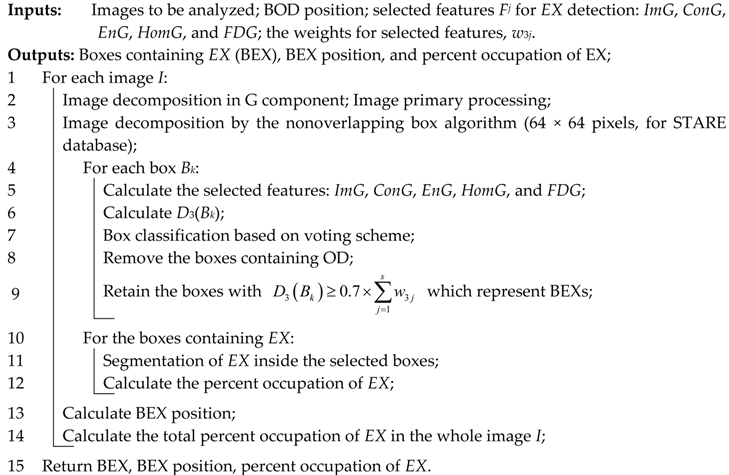

(c) EX Detection, Localization, and Evaluation

Algorithm 3: Operating Phase (EX)

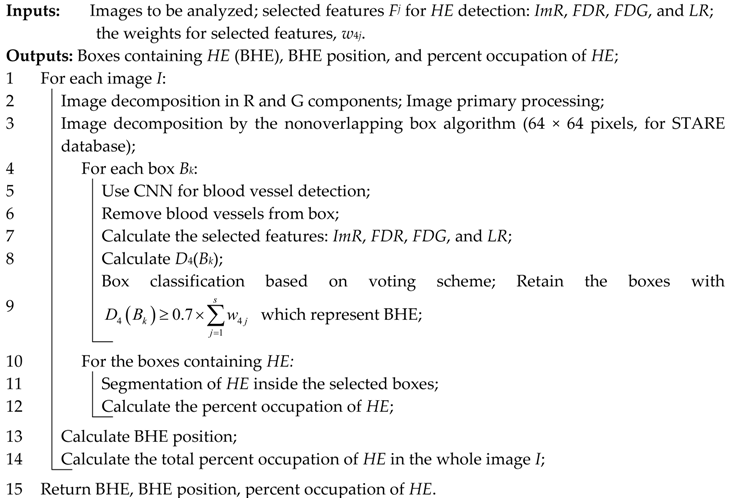

(d) HE Detection, Localization, and Evaluation

Algorithm 4: Operating Phase (HE)

3. Experimental Results

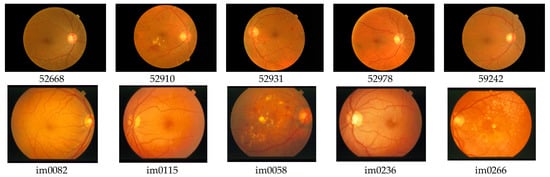

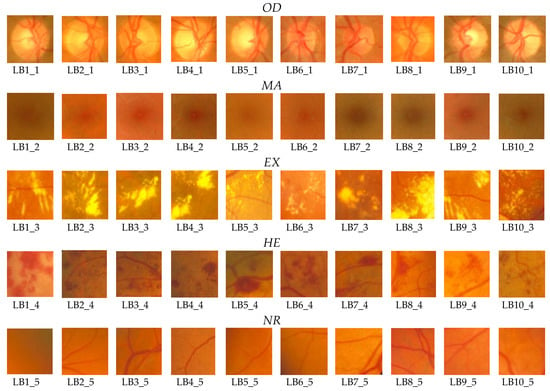

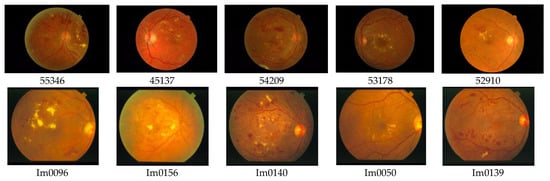

To test and validate the proposed system, we used 100 boxes from 60 images (Figure 7) in the learning phase and 200 images in the operating phase. As mentioned in Section 2, 50 boxes (10 each for OD, MA, EX, HE, and NR; Figure 8) were used for feature selection, and another 50 boxes were used for classifier implementation. A box size of 128 × 128 pixels was used for STARE and 256 × 256 pixels for MESSIDOR. The sliding step sizes (BDS) were 10 pixels for the STARE DB and 15 pixels for the MESSIDOR DB. In BVS, the sliding step size was 1 pixel for both. The same features were selected for both STARE and MESSIDOR. Examples of feature calculations for the OD class representatives are presented in Table 2 (36 features) and Table 3 (LBP feature), where the last column contains the average value of each row. The representatives of the classes OD, MA, EX, and HE are presented in Table 4. The features from CF (Table 1) were tested on the R, G, B, and H color components. The features selected in accordance with the selection criterion, together with their color channels, are marked in green in Table 4.
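Decomposition into sliding boxes can be sketched as a list of top-left corners. The function name is hypothetical. The example uses the STARE image size of 700 × 605 pixels with the 128-pixel box and 10-pixel step given above.

```python
def box_origins(width, height, box, step):
    """Top-left (x, y) corners of box x box windows slid by `step` pixels."""
    return [(x, y)
            for y in range(0, height - box + 1, step)
            for x in range(0, width - box + 1, step)]


stare_boxes = box_origins(700, 605, 128, 10)  # 58 columns x 48 rows of boxes
```

With these settings, the last box starts at x = 570 and ends at x = 698. A 2-pixel strip on the right and a 7-pixel strip at the bottom are therefore not covered. If full coverage matters, one extra row and column of boxes aligned with the image border can be added.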

Figure 7.

Examples of images from MESSIDOR (top) and STARE (bottom) databases for the learning phase.

Figure 8.

Boxes of RoIs for feature calculation.

The confusion matrices are calculated on the set LS2 for all features and all classes. Some examples of CMs are presented in Figure 9. Values of 8 or more (i.e., greater than 0.75Cia = 7.5; see Feature Selection in Section 2.3.2) are highlighted in green. For example, according to Figure 9a, ImG is selected for OD and EX detection. Similarly, Figures 9b, 9c, 9d, 9f, 9g, and 9h show which classifiers use ConG, EnG, HomG, LBPH, FDG, and LR, respectively. Figure 9e shows that EntG is not a suitable feature for any class.

Figure 9.

Examples of CM calculations and feature selection. Marked with green are the classes which accept the corresponding feature for the classifier.

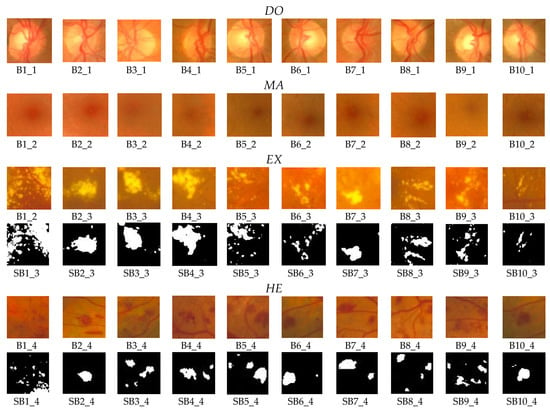

Table 5 presents the traces of all confusion matrices associated with the features, with one row per CM. The feature-class pairs that fulfill the selection criterion (from the confusion matrices), together with the associated classifier weights, are highlighted in gray. The last column indicates the classes that use the corresponding feature in their classifiers. The features and associated weights for each RoI can therefore be read from Table 5. The classifiers D1, D2, D3, and D4 from Equation (12) were implemented using the confidence intervals and the band, together with the associated weights, for the corresponding RoIs (OD, MA, EX, and HE) (Table 6). Both the MESSIDOR and STARE databases were used to test the operating phase (Figure 10). Some box classification results are given in Figure 11. The boxes classified as EX or HE then underwent segmentation (Figure 11, boxes labeled SB). The size evaluations of EXs and HEs are presented in Table 7 and Table 8, respectively. Finally, summing the partial EX (HE) areas of the boxes belonging to the same image yields a global evaluation in pixels and percent (Table 9).
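A sketch of the image-level evaluation follows. Instead of adding the partial areas directly, it merges the segmented box masks into one image-sized mask. For non-overlapping boxes this gives the same total as the summation described above. With overlapping sliding boxes it also avoids counting shared pixels twice. This is a design choice of the sketch and is not stated in the paper. The function name and mask layout are hypothetical, and the percentage is assumed to be relative to the whole image area.

```python
import numpy as np


def image_lesion_area(image_shape, box_masks):
    """Merge segmented box masks into one image-sized mask.

    box_masks: iterable of (x, y, mask), where (x, y) is the box's top-left
    corner and mask is a boolean array. Returns (pixels, percent of image).
    """
    full = np.zeros(image_shape, dtype=bool)
    for x, y, mask in box_masks:
        h, w = mask.shape
        full[y:y + h, x:x + w] |= mask  # logical OR: overlap counted once
    area = int(full.sum())
    return area, 100.0 * area / full.size
```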

Table 6.

Results for class representatives.

Figure 10.

Examples of images from MESSIDOR (top) and STARE (bottom) databases for the operating phase.

Figure 11.

Results of box classification.

Table 7.

Area and percentage of EXs for different segmented boxes.

Table 8.

Area and percentage of HEs for different segmented boxes.

Table 9.

Segmented boxes with EX from the image Im0001 (STARE DB). Partial and total size and corresponding percentage.

4. Discussion

To test and evaluate the proposed system, we used a set of 200 images from the STARE and MESSIDOR databases (S1: 50 normal images, without EX or HE; S2: 50 images with EX only; S3: 50 images with HE only; S4: 50 images with both EX and HE). OD and MA were detected in S1, S2, S3, and S4; EX was evaluated in S2 and S4; and HE was evaluated in S3 and S4. The flexibility of the system allows different box sizes for different databases. The box classification performance is presented in Table 10, where TP is true positive, TN is true negative, FP is false positive, and FN is false negative. The accuracies obtained are compared with those of other methods in Table 11. Among the compared methods, only our system detects all RoIs, and its results are similar to or better than those of the other methods.
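The box-level measures follow from TP, TN, FP, and FN. The exact set of measures reported in Table 10 is not reproduced here, so the sketch uses the standard definitions of accuracy, sensitivity, and specificity. The counts in the example are illustrative and are not the paper's results.

```python
def box_metrics(tp, tn, fp, fn):
    """Standard accuracy, sensitivity (recall), and specificity."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return accuracy, sensitivity, specificity


acc, sens, spec = box_metrics(tp=45, tn=140, fp=10, fn=5)  # illustrative counts
```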

Table 10.

The confusion matrices and the performances for box classification.

Table 11.

Performance comparison (accuracy (%)).

In this experiment, blood vessel analysis was not used for OD detection, because it was detailed in previous work by the authors []. Taking into account the pixel density of the blood vessels in the boxes that are candidates to contain the OD was experimentally shown to increase detection accuracy. Conversely, removing the blood vessels from the retinal image improved HE detection and segmentation. As a direction for future research, we propose extending the system to other parameters, such as OD diameter, cup/OD diameter ratio, and blood vessel segmentation of an entire image.

5. Conclusions

The proposed method for detecting and evaluating retinal RoIs takes into consideration information about pixel distribution (second-order statistics), color, and fractal textures. The implemented intelligent system contains three main modules: preprocessing, learning, and operating. The retinal images are decomposed into boxes (of variable size) for different RoIs, using a sliding box algorithm or a nonoverlapping box algorithm. In addition, a CNN structure is proposed for blood vessel segmentation. In the learning phase, effective feature selection is performed based on the primary statistical information provided by confusion matrices and minimum distance classifiers. Two important cues are used in the operating phase: the weights given to the selected features and the confidence intervals associated with the selected features for each class (representatives of classes). Finally, statistical analysis and comparison with similar works validated the implemented system.

Acknowledgments

This work has been funded by University POLITEHNICA of Bucharest, through the “Excellence Research Grants” Program, UPB—GEX 2017. Identifier: UPB—GEX2017, Ctr. No. 22/2017 (SET).

Author Contributions

Dan Popescu conceived the system. Dan Popescu and Loretta Ichim contributed to system implementation and wrote the paper. Loretta Ichim designed and performed the experiments for image processing.

Conflicts of Interest

The authors declare no conflict of interest. The funding sponsors had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Li, J.Q.; Welchowski, T.; Schmid, M.; Letow, J.; Wolpers, A.C.; Holz, F.G.; Finger, R.P. Retinal Diseases in Europe. Prevalence, Incidence and Healthcare Needs; EURETINA Report; EURETINA: Bonn, Germany, 2017.

- Mohamed, N.A.; Zulkifley, M.A.; Hussain, A.; Mustapha, A. Local binary patterns and modified red channel for optic disc segmentation. J. Theor. Appl. Inf. Technol. 2015, 81, 84–91.

- Mohammad, S.; Morris, D.T.; Thacker, N. Texture analysis for the segmentation of optic disc in retinal images. In Proceedings of the 2013 IEEE International Conference on Systems, Man, and Cybernetics, Manchester, UK, 13–16 October 2013; pp. 4265–4270.

- Popescu, D.; Ichim, L.; Caramihale, T. Computer-aided localization of the optic disc based on textural features. In Proceedings of the 9th International Symposium on Advanced Topics in Electrical Engineering, Bucharest, Romania, 7–9 May 2015; pp. 307–312.

- Mendonça, A.M.; Sousa, A.; Mendonça, L.; Campilho, A. Automatic localization of the optic disc by combining vascular and intensity information. Comput. Med. Imaging Graph. 2013, 37, 409–417.

- Ichim, L.; Popescu, D.; Cirneanu, S. Combining blood vessel segmentation and texture analysis to improve optic disc detection. In Proceedings of the 5th IEEE International Conference on E-Health and Bioengineering, Iaşi, Romania, 19–21 November 2015; pp. 1–4.

- Qureshi, R.J.; Kovacs, L.; Harangi, B.; Nagy, B.; Peto, T.; Hajdu, A. Combining algorithms for automatic detection of optic disc and macula in fundus images. Comput. Vis. Image Underst. 2012, 116, 138–145.

- Dehghani, A.; Moghaddam, H.A.; Moin, M.-S. Optic disc localization in retinal images using histogram matching. EURASIP J. Image Video Process. 2012, 2012, 19.

- Zhang, D.; Zhao, Y. Novel accurate and fast optic disc detection in retinal images with vessel distribution and directional characteristics. IEEE J. Biomed. Health Inform. 2016, 20, 333–342.

- Xiong, L.; Li, H. An approach to locate optic disc in retinal images with pathological changes. Comput. Med. Imaging Graph. 2016, 47, 40–50.

- Rahebi, J.; Hardalac, F. A new approach to optic disc detection in human retinal images using the firefly algorithm. Med. Biol. Eng. Comput. 2016, 54, 453–461.

- Dashtbozorg, B.; Mendonça, A.M.; Campilho, A. Optic disc segmentation using the sliding band filter. Comput. Biol. Med. 2015, 56, 1–12.

- Panda, R.; Puhan, N.B.; Panda, G. Global vessel symmetry for optic disc detection in retinal images. In Proceedings of the Fifth National Conference on Computer Vision, Pattern Recognition, Image Processing and Graphics (CVPRIPG 2015), Patna, Bihar, India, 16–19 December 2015; pp. 1–4.

- Giachetti, A.; Ballerini, L.; Trucco, E. Accurate and reliable segmentation of the optic disc in digital fundus images. J. Med. Imaging 2014, 1, 024001.

- El-Abbadi, N.K.; Al-Saadi, E.H. Automatic detection of exudates in retinal images. Int. J. Comput. Sci. Issues 2013, 10, 237–242.

- Youssif, A.A.; Ghalwash, A.Z.; Ghoneim, A.S. Optic disc detection from normalized digital fundus images by means of a vessels’ direction matched filter. IEEE Trans. Med. Imaging 2008, 27, 11–18.

- Pereira, C.; Gonçalves, L.; Ferreira, M. Optic disc detection in color fundus images using ant colony optimization. Med. Biol. Eng. Comput. 2013, 51, 295–303.

- Alghamdi, H.S.; Tang, H.L.; Waheeb, S.A.; Peto, T. Automatic optic disk abnormality detection in fundus images: a deep learning approach. In Proceedings of the Medical Image Computing and Computer-Assisted Interventions (MICCAI 2016), Athens, Greece, 17–21 October 2016; Volume LNCS 9900, pp. 1–8.

- Cheng, J.; Wong, D.W.K.; Cheng, X.; Liu, J.; Tan, N.M.; Bhargava, M.; Cheung, C.M.G.; Wong, T.Y. Early age-related macular degeneration detection by focal biologically inspired feature. In Proceedings of the 19th IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012; pp. 2805–2808.

- Hijazi, M.H.A.; Coenen, F.; Zheng, Y. Data mining techniques for the screening of age-related macular degeneration. Knowl.-Based Syst. 2012, 29, 83–92.

- Zheng, Y.; Hijazi, M.H.A.; Coenen, F. Automated disease/no disease grading of age-related macular degeneration by an image mining approach. Investig. Ophthalmol. Vis. Sci. 2012, 53, 8310–8318.

- Köse, C.; Şevik, U.; Gençalioğlu, O.; İkibaş, C.; Kayıkıçıoğlu, T. A statistical segmentation method for measuring age-related macular degeneration in retinal fundus images. J. Med. Syst. 2010, 34, 1–13.

- Burlina, P.; Freund, D.E.; Joshi, N.; Wolfson, Y.; Bressler, N.M. Detection of age-related macular degeneration via deep learning. In Proceedings of the 13th IEEE International Symposium on Biomedical Imaging (ISBI 2016), Prague, Czech Republic, 13–16 April 2016; pp. 184–188.

- Liang, Z.; Wong, D.W.K.; Liu, J.; Chan, K.L.; Wong, T.Y. Towards automatic detection of age-related macular degeneration in retinal fundus images. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; pp. 4100–4103.

- Veras, R.; Medeiros, F.; Silva, R.; Ushizima, D. Assessing the accuracy of macula detection methods in retinal images. In Proceedings of the 18th International Conference on Digital Signal Processing (DSP 2013), Santorini, Greece, 1–3 July 2013; pp. 1–6.

- Sekhar, S.; El-Samie, F.; Yu, P.; Al-Nuaimy, W.; Nandi, A. Automated localisation of retinal features. Appl. Opt. 2011, 50, 3064–3075.

- Tan, N.M.; Wong, D.W.K.; Liu, J.; Ng, W.J.; Zhang, Z.; Lim, J.H.; Tan, Z.; Tang, Y.; Li, H.; Lu, S.; et al. Automatic detection of the macula in the retinal fundus image by detecting regions with low pixel intensity. In Proceedings of the International Conference on Biomedical and Pharmaceutical Engineering (ICBPE’09), Singapore, 2–4 December 2009; pp. 1–5.

- Akram, M.U.; Tariq, A.; Khan, S.A.; Javed, M.Y. Automated detection of exudates and macula for grading of diabetic macular edema. Comput. Methods Programs Biomed. 2014, 114, 141–152.

- Chaudhry, A.R.; Bellmann, C.; Le Tien, V.; Klein, J.C.; Parra-Denis, E. Automatic macula detection in human eye fundus auto-fluorescence images: Application to eye disease localization. In Proceedings of the 10th European Congress of ISS, Milano, Italy, 26–29 June 2009; pp. 1–6.

- Wong, D.W.; Liu, J.; Tan, N.M.; Yin, F.; Cheng, X.; Cheng, C.Y.; Cheung, G.C.; Wong, T.Y. Automatic detection of the macula in retinal fundus images using seeded mode tracking approach. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC 2012), San Diego, CA, USA, 28 August–1 September 2012; pp. 4950–4953.

- Phillips, R.; Forrester, J.; Sharp, P. Automated detection and quantification of retinal exudates. Graefe’s Arch. Clin. Exp. Ophthalmol. 1993, 231, 90–94.

- Ege, B.; Hejlesen, O.; Larsen, O.; Moller, K.; Jennings, B.; Kerr, D.; Cavan, D. Screening for diabetic retinopathy using computer based image analysis and statistical classification. Comput. Methods Programs Biomed. 2000, 62, 165–175.

- Usher, D.; Dumskyj, M.; Himaga, M.; Williamson, T.H.; Nussey, S.; Boyce, J. Automated detection of diabetic retinopathy in digital retinal images: A tool for diabetic retinopathy screening. Diabet. Med. 2004, 21, 84–90.

- Kaur, J.; Mittal, D. Segmentation and measurement of exudates in fundus images of the retina for detection of retinal disease. J. Biomed. Eng. Med. Imaging 2015, 2, 27–38.

- Bu, W.; Wu, X.; Chen, X.; Dai, B.; Zheng, Y. Hierarchical detection of hard exudates in color retinal images. J. Softw. 2013, 8, 2723–2732.

- Osareh, A.; Shadgar, B.; Markham, R. A computational-intelligence-based approach for detection of exudates in diabetic retinopathy images. IEEE Trans. Inf. Technol. Biomed. 2009, 13, 535–545.

- Walter, T.; Klein, J.C.; Massin, P.; Erginay, A. A contribution of image processing to the diagnosis of diabetic retinopathy-detection of exudates in color fundus images of the human retina. IEEE Trans. Med. Imaging 2002, 21, 1236–1243.

- Seoud, L.; Hurtut, T.; Chelbi, J.; Cheriet, F.; Langlois, J.M.P. Red lesion detection using dynamic shape features for diabetic retinopathy screening. IEEE Trans. Med. Imaging 2016, 35, 1116–1126.

- Tang, L.; Niemeijer, M.; Joseph, M.; Reinhardt, J.M.; Garvin, M.K.; Abramoff, M.D. Splat feature classification with application to retinal hemorrhage detection in fundus images. IEEE Trans. Med. Imaging 2013, 32, 364–375.

- Garcia, M.; Sanchez, C.I.; Lopez, M.I.; Abasolo, D.; Hornero, R. Neural network based detection of hard exudates in retinal images. Comput. Methods Programs Biomed. 2009, 93, 9–19.

- Garcia, M.; Valverde, C.; Lopez, M.I.; Poza, J.; Hornero, R. Comparison of logistic regression and neural network classifiers in the detection of hard exudates in retinal images. In Proceedings of the 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Osaka, Japan, 3–7 July 2013; pp. 5891–5894.

- Marin, D.; Aquino, A.; Gegundez-Arias, M.E.; Bravo, J.M. A new supervised method for blood vessel segmentation in retinal images by using gray-level and moment invariants-based features. IEEE Trans. Med. Imaging 2011, 30, 146–158.

- Hoover, A.; Goldbaum, M. Locating the optic nerve in a retinal image using the fuzzy convergence of the blood vessels. IEEE Trans. Med. Imaging 2003, 22, 951–958.

- Staal, J.J.; Abramoff, M.D.; Niemeijer, M.; Viergever, M.A.; van Ginneken, B. Ridge based vessel segmentation in color images of the retina. IEEE Trans. Med. Imaging 2004, 23, 501–509.

- Van Grinsven, M.J.J.P.; van Ginneken, B.; Hoyng, C.B.; Theelen, T.; Sanchez, C.I. Fast convolutional neural network training using selective data sampling: Application to hemorrhage detection in color fundus images. IEEE Trans. Med. Imaging 2016, 35, 1273–1284.

- Hajdu, A.; Hajdu, L.; Jonas, A.; Kovacs, L.; Toman, H. Generalizing the majority voting scheme to spatially constrained voting. IEEE Trans. Image Process. 2013, 22, 4182–4194.

- Decencière, E.; Zhang, X.; Cazuguel, G.; Lay, B.; Cochener, B.; Trone, C.; Gain, P.; Ordonez, R.; Massin, P.; Erginay, A.; et al. Feedback on a publicly distributed database: The Messidor database. Image Anal. Stereol. 2014, 33, 231–234.

- Caramihale, T.; Popescu, D.; Ichim, L. Interconnected neural networks based on voting scheme and local detectors for retinal image analysis and diagnosis. In Proceedings of the Image Analysis and Processing Conference (ICIAP 2017), Catania, Italy, 11–15 September 2017; Battiato, S., Gallo, G., Schettini, R., Stanco, F., Eds.; Lecture Notes in Computer Science; Volume 10485, pp. 753–764.

- Nayak, J.; Bhat, P.S.; Acharya, U.R.; Lim, C.M.; Kagathi, M. Automated identification of diabetic retinopathy stages using digital fundus images. J. Med. Syst. 2008, 32, 107–115.

- Nayak, J.; Acharya, U.R.; Bhat, P.S.; Shetty, N.; Lim, T.C. Automated diagnosis of glaucoma using digital fundus images. J. Med. Syst. 2009, 33, 337–346.

- Junior, S.B.; Welfer, D. Automatic detection of microaneurysms and hemorrhages in color eye fundus images. Int. J. Comput. Sci. Inf. Technol. 2013, 5, 21–37.

- Raja, D.S.S.; Vasuki, S. Screening diabetic retinopathy in developing countries using retinal images. Appl. Med. Inform. 2015, 36, 13–22.

- Sopharak, A.; Uyyanonvara, B.; Barman, S. Automatic exudate detection from non-dilated diabetic retinopathy retinal images using fuzzy c-means clustering. Sensors 2009, 9, 2148–2161.

- Caramihale, T.; Popescu, D.; Ichim, L. Detection of regions of interest in retinal images using artificial neural networks and K-means clustering. In Proceedings of the International Conference on Applied Electromagnetics and Communications (ICECOM 2016), Dubrovnik, Croatia, 19–21 September 2016; pp. 1–6.

- Savu, M.; Popescu, D.; Ichim, L. Blood vessel segmentation in eye fundus images. In Proceedings of the International Conference on Smart Systems and Technologies (SST 2017), Osijek, Croatia, 18–20 October 2017; pp. 245–251.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- Sarkar, N.; Chaudhuri, B. An efficient differential box-counting approach to compute fractal dimension of image. IEEE Trans. Syst. Man Cybern. 1994, 24, 115–120.

- Barros Filho, M.N.; Sobreira, F.J.A. Accuracy of lacunarity algorithms in texture classification of high spatial resolution images from urban areas. In Proceedings of the XXI Congress of International Society of Photogrammetry and Remote Sensing (ISPRS 2008), Beijing, China, 3–11 July 2008; Part B3b. Volume XXXVII, pp. 417–422.

- Ojala, T.; Pietikainen, M. Unsupervised texture segmentation using feature distributions. Pattern Recognit. 1999, 32, 477–486.

- Deza, M.-M.; Deza, E. Dictionary of Distances; Elsevier: Amsterdam, The Netherlands, 2006.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).