Data-Driven Weather Forecasting and Climate Modeling from the Perspective of Development

Abstract

1. Introduction

2. Evolution of Weather Forecasts

3. Data-Driven Models: Methodologies and Performance Evaluation

3.1. Datasets

3.2. Model Adaptations and Training

3.2.1. Model Based on MLP

3.2.2. Models Based on CNNs

3.2.3. Model Based on ResNet

3.2.4. Models Based on GNN

3.2.5. Models Based on Transformer

3.2.6. Hybrid Model with Physical Constraints

3.3. Evaluation

3.3.1. The Speed Benefits of Data-Driven Models

3.3.2. Evaluating Forecast Quality

3.3.3. Assessing Ensemble Forecasting Capabilities

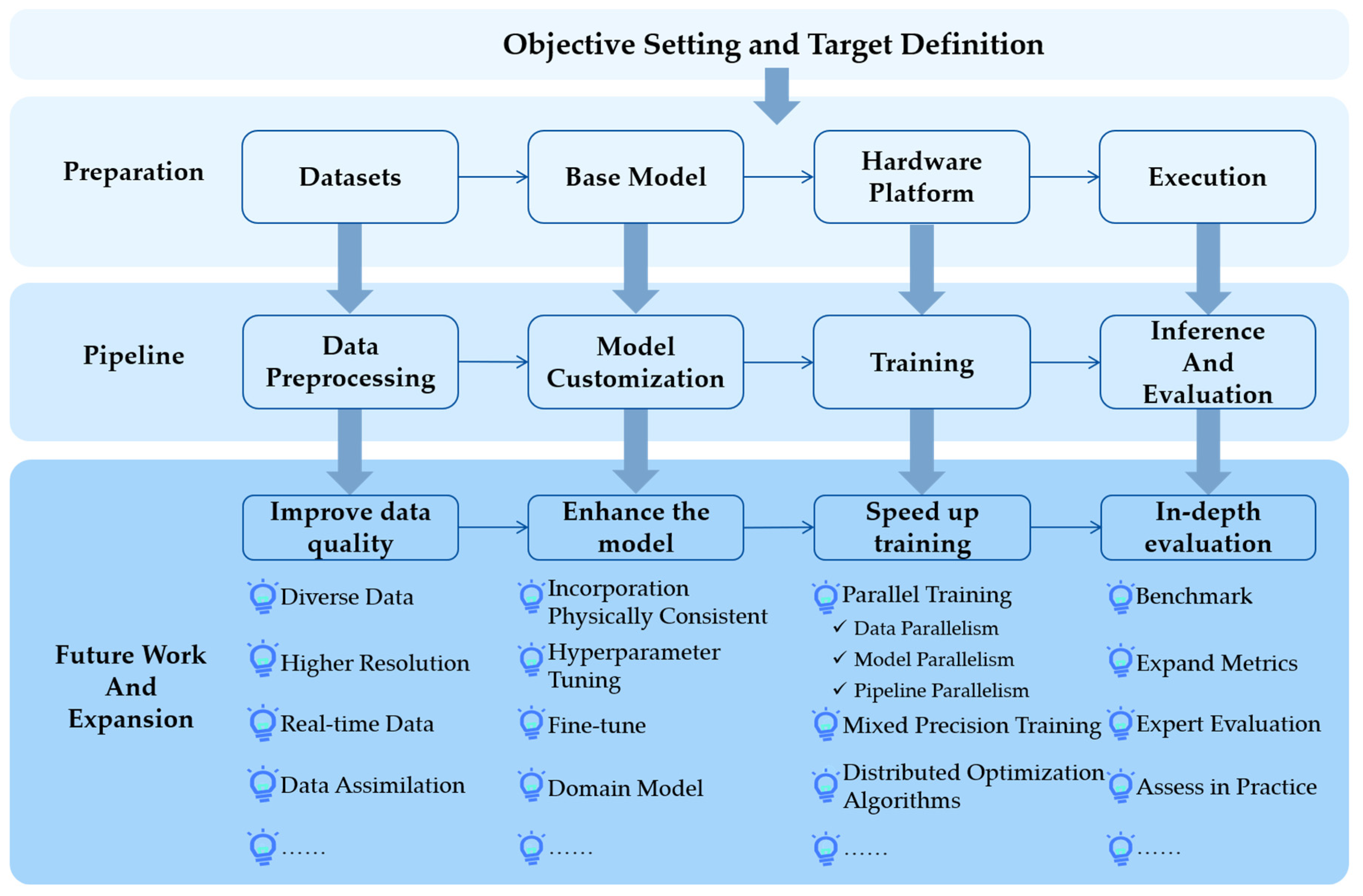

4. Opportunities and Challenges

4.1. Advantages

4.2. Limitations

4.2.1. Weak Interpretability

4.2.2. High Reliance on High-Quality Training Data

4.2.3. Uncertainty Quantification

4.2.4. Unsatisfactory Performance in Extreme Cases

4.2.5. Incomplete Evaluation

4.3. Future Research

5. Summary

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACC | Anomaly Correlation Coefficient |
| ACE | AI2 Climate Emulator |
| AFNO | Adaptive Fourier Neural Operator |
| AI | Artificial Intelligence |
| C3S | Copernicus Climate Change Service |
| CMA | China Meteorological Administration |
| CMIP6 | Coupled Model Intercomparison Project Phase 6 |
| CNNs | Convolutional Neural Networks |
| CRPS | Continuous Ranked Probability Score |
| ECMWF | European Centre for Medium-Range Weather Forecasts |
| EPD | Encode–Process–Decode |
| ERA5 | Fifth Generation of ECMWF Atmospheric Reanalysis of the Global Climate |
| FV3GFS | Atmospheric Component of the United States Weather Model |
| GFS | Global Forecast System |
| GNN | Graph Neural Network |
| GRF | Gaussian Random Fields |
| HRES | High-Resolution Configuration of IFS |
| IFS | Integrated Forecasting System |
| LLMs | Large Language Models |
| LSTM | Long Short-Term Memory |
| MLP | Multi-Layer Perceptron |
| NorESM2 | Norwegian Earth System Model, Version 2 |
| NRMSE | Normalized Root-Mean-Square Error |
| NWP | Numerical Weather Prediction |
| PDEs | Partial Differential Equations |
| ResNet | Residual Network |
| RMSE | Root-Mean-Square Error |
| SEEPS | Stable Equitable Error in Probability Space |
| SIME | Spatial Identical Mapping Extrapolate |
| T2M | 2-Meter Temperature |
| T500 | Temperature at 500 hPa |
| ViT | Vision Transformer |
| W-MSA | Window-Based Multi-Head Self-Attention |
| Z500 | Geopotential Height at 500 hPa |

References

1. Brum-Bastos, V.S.; Long, J.A.; Demšar, U. Weather Effects on Human Mobility: A Study Using Multi-Channel Sequence Analysis. Comput. Environ. Urban Syst. 2018, 71, 131–152.

2. Wang, T.; Qu, Z.; Yang, Z.; Nichol, T.; Clarke, G.; Ge, Y.-E. Climate Change Research on Transportation Systems: Climate Risks, Adaptation and Planning. Transp. Res. Part D Transp. Environ. 2020, 88, 102553.

3. Palin, E.J.; Stipanovic Oslakovic, I.; Gavin, K.; Quinn, A. Implications of Climate Change for Railway Infrastructure. WIREs Clim. Chang. 2021, 12, e728.

4. Bernard, P.; Chevance, G.; Kingsbury, C.; Baillot, A.; Romain, A.-J.; Molinier, V.; Gadais, T.; Dancause, K.N. Climate Change, Physical Activity and Sport: A Systematic Review. Sports Med. 2021, 51, 1041–1059.

5. Parolini, G. Weather, Climate, and Agriculture: Historical Contributions and Perspectives from Agricultural Meteorology. WIREs Clim. Chang. 2022, 13, e766.

6. Falloon, P.; Bebber, D.P.; Dalin, C.; Ingram, J.; Mitchell, D.; Hartley, T.N.; Johnes, P.J.; Newbold, T.; Challinor, A.J.; Finch, J.; et al. What Do Changing Weather and Climate Shocks and Stresses Mean for the UK Food System? Environ. Res. Lett. 2022, 17, 051001.

7. Kim, K.-H.; Kabir, E.; Ara Jahan, S. A Review of the Consequences of Global Climate Change on Human Health. J. Environ. Sci. Health Part C 2014, 32, 299–318.

8. Meierrieks, D. Weather Shocks, Climate Change and Human Health. World Dev. 2021, 138, 105228.

9. Campbell-Lendrum, D.; Neville, T.; Schweizer, C.; Neira, M. Climate Change and Health: Three Grand Challenges. Nat. Med. 2023, 29, 1631–1638.

10. Liu, F.; Chang-Richards, A.; Wang, K.I.-K.; Dirks, K.N. Effects of Climate Change on Health and Wellbeing: A Systematic Review. Sustain. Dev. 2023, 31, 2067–2090.

11. Carleton, T.A.; Hsiang, S.M. Social and Economic Impacts of Climate. Science 2016, 353, aad9837.

12. Lenton, T.M.; Xu, C.; Abrams, J.F.; Ghadiali, A.; Loriani, S.; Sakschewski, B.; Zimm, C.; Ebi, K.L.; Dunn, R.R.; Svenning, J.-C.; et al. Quantifying the Human Cost of Global Warming. Nat. Sustain. 2023, 6, 1237–1247.

13. Malpede, M.; Percoco, M. Climate, Desertification, and Local Human Development: Evidence from 1564 Regions around the World. Ann. Reg. Sci. 2024, 72, 377–405.

14. Handmer, J.; Honda, Y.; Kundzewicz, Z.W.; Arnell, N.; Benito, G.; Hatfield, J.; Mohamed, I.F.; Peduzzi, P.; Wu, S.; Sherstyukov, B. Changes in Impacts of Climate Extremes: Human Systems and Ecosystems. In Managing the Risks of Extreme Events and Disasters to Advance Climate Change Adaptation Special Report of the Intergovernmental Panel on Climate Change; Cambridge University Press: Cambridge, UK, 2012; pp. 231–290.

15. Zhao, C.; Yang, Y.; Fan, H.; Huang, J.; Fu, Y.; Zhang, X.; Kang, S.; Cong, Z.; Letu, H.; Menenti, M. Aerosol Characteristics and Impacts on Weather and Climate over the Tibetan Plateau. Natl. Sci. Rev. 2020, 7, 492–495.

16. Griggs, D.; Stafford-Smith, M.; Warrilow, D.; Street, R.; Vera, C.; Scobie, M.; Sokona, Y. Use of Weather and Climate Information Essential for SDG Implementation. Nat. Rev. Earth Environ. 2021, 2, 2–4.

17. Wilkens, J.; Datchoua-Tirvaudey, A.R.C. Researching Climate Justice: A Decolonial Approach to Global Climate Governance. Int. Aff. 2022, 98, 125–143.

18. Chen, Y.; Zhang, D.; Wu, F.; Ji, Q. Climate Risks and Foreign Direct Investment in Developing Countries: The Role of National Governance. Sustain. Sci. 2022, 17, 1723–1740.

19. Stott, P. How Climate Change Affects Extreme Weather Events. Science 2016, 352, 1517–1518.

20. Zittis, G.; Almazroui, M.; Alpert, P.; Ciais, P.; Cramer, W.; Dahdal, Y.; Fnais, M.; Francis, D.; Hadjinicolaou, P.; Howari, F.; et al. Climate Change and Weather Extremes in the Eastern Mediterranean and Middle East. Rev. Geophys. 2022, 60, e2021RG000762.

21. Brunet, G.; Parsons, D.B.; Ivanov, D.; Lee, B.; Bauer, P.; Bernier, N.B.; Bouchet, V.; Brown, A.; Busalacchi, A.; Flatter, G.C.; et al. Advancing Weather and Climate Forecasting for Our Changing World. Bull. Am. Meteorol. Soc. 2023, 104, E909–E927.

22. Pu, Z.; Kalnay, E. Numerical Weather Prediction Basics: Models, Numerical Methods, and Data Assimilation. In Handbook of Hydrometeorological Ensemble Forecasting; Duan, Q., Pappenberger, F., Thielen, J., Wood, A., Cloke, H.L., Schaake, J.C., Eds.; Springer: Berlin/Heidelberg, Germany, 2018; pp. 1–31. ISBN 978-3-642-40457-3.

23. Michalakes, J. HPC for Weather Forecasting. In Parallel Algorithms in Computational Science and Engineering; Grama, A., Sameh, A.H., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 297–323. ISBN 978-3-030-43736-7.

24. Bi, K.; Xie, L.; Zhang, H.; Chen, X.; Gu, X.; Tian, Q. Accurate Medium-Range Global Weather Forecasting with 3D Neural Networks. Nature 2023, 619, 533–538.

25. Tufek, A.; Aktas, M.S. A Systematic Literature Review on Numerical Weather Prediction Models and Provenance Data. In Proceedings of the Computational Science and Its Applications – ICCSA 2022 Workshops; Gervasi, O., Murgante, B., Misra, S., Rocha, A.M.A.C., Garau, C., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 616–627.

26. Brotzge, J.A.; Berchoff, D.; Carlis, D.L.; Carr, F.H.; Carr, R.H.; Gerth, J.J.; Gross, B.D.; Hamill, T.M.; Haupt, S.E.; Jacobs, N.; et al. Challenges and Opportunities in Numerical Weather Prediction. Bull. Am. Meteorol. Soc. 2023, 104, E698–E705.

27. Peng, X.; Che, Y.; Chang, J. A Novel Approach to Improve Numerical Weather Prediction Skills by Using Anomaly Integration and Historical Data. J. Geophys. Res. Atmos. 2013, 118, 8814–8826.

28. Bauer, P.; Thorpe, A.; Brunet, G. The Quiet Revolution of Numerical Weather Prediction. Nature 2015, 525, 47–55.

29. Govett, M.; Bah, B.; Bauer, P.; Berod, D.; Bouchet, V.; Corti, S.; Davis, C.; Duan, Y.; Graham, T.; Honda, Y.; et al. Exascale Computing and Data Handling: Challenges and Opportunities for Weather and Climate Prediction. Bull. Am. Meteorol. Soc. 2024; published online ahead of print.

30. Balaji, V. Climbing down Charney’s Ladder: Machine Learning and the Post-Dennard Era of Computational Climate Science. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2021, 379, 20200085.

31. Hornik, K.; Stinchcombe, M.; White, H. Multilayer Feedforward Networks Are Universal Approximators. Neural Netw. 1989, 2, 359–366.

32. Lu, L.; Jin, P.; Pang, G.; Zhang, Z.; Karniadakis, G.E. Learning Nonlinear Operators via DeepONet Based on the Universal Approximation Theorem of Operators. Nat. Mach. Intell. 2021, 3, 218–229.

33. Aminabadi, R.Y.; Rajbhandari, S.; Awan, A.A.; Li, C.; Li, D.; Zheng, E.; Ruwase, O.; Smith, S.; Zhang, M.; Rasley, J.; et al. DeepSpeed-Inference: Enabling Efficient Inference of Transformer Models at Unprecedented Scale. In Proceedings of the SC22: International Conference for High Performance Computing, Networking, Storage and Analysis, Dallas, TX, USA, 13–18 November 2022; pp. 1–15.

34. Shuvo, M.M.H.; Islam, S.K.; Cheng, J.; Morshed, B.I. Efficient Acceleration of Deep Learning Inference on Resource-Constrained Edge Devices: A Review. Proc. IEEE 2023, 111, 42–91.

35. Menghani, G. Efficient Deep Learning: A Survey on Making Deep Learning Models Smaller, Faster, and Better. ACM Comput. Surv. 2023, 55, 1–37.

36. Abbe, C. The Physical Basis of Long-Range Weather Forecasts. Mon. Wea. Rev. 1901, 29, 551–561.

37. Bjerknes, V. Das Problem Der Wettervorhersage, Betrachtet Vom Standpunkte Der Mechanik Und Der Physik. Meteor. Z. 1904, 21, 1–7.

38. Lynch, P. The Origins of Computer Weather Prediction and Climate Modeling. J. Comput. Phys. 2008, 227, 3431–3444.

39. Mass, C. The Uncoordinated Giant II: Why U.S. Operational Numerical Weather Prediction Is Still Lagging and How to Fix It. Bull. Am. Meteorol. Soc. 2023, 104, E851–E871.

40. Gomes, B.; Ashley, E.A. Artificial Intelligence in Molecular Medicine. N. Engl. J. Med. 2023, 388, 2456–2465.

41. Mullowney, M.W.; Duncan, K.R.; Elsayed, S.S.; Garg, N.; van der Hooft, J.J.J.; Martin, N.I.; Meijer, D.; Terlouw, B.R.; Biermann, F.; Blin, K.; et al. Artificial Intelligence for Natural Product Drug Discovery. Nat. Rev. Drug Discov. 2023, 22, 895–916.

42. Kortemme, T. De Novo Protein Design – From New Structures to Programmable Functions. Cell 2024, 187, 526–544.

43. Karniadakis, G.E.; Kevrekidis, I.G.; Lu, L.; Perdikaris, P.; Wang, S.; Yang, L. Physics-Informed Machine Learning. Nat. Rev. Phys. 2021, 3, 422–440.

44. Huerta, E.A.; Khan, A.; Huang, X.; Tian, M.; Levental, M.; Chard, R.; Wei, W.; Heflin, M.; Katz, D.S.; Kindratenko, V.; et al. Accelerated, Scalable and Reproducible AI-Driven Gravitational Wave Detection. Nat. Astron. 2021, 5, 1062–1068.

45. López, C. Artificial Intelligence and Advanced Materials. Adv. Mater. 2023, 35, 2208683.

46. Wang, H.; Fu, T.; Du, Y.; Gao, W.; Huang, K.; Liu, Z.; Chandak, P.; Liu, S.; Van Katwyk, P.; Deac, A.; et al. Scientific Discovery in the Age of Artificial Intelligence. Nature 2023, 620, 47–60.

47. Berry, T.; Harlim, J. Correcting Biased Observation Model Error in Data Assimilation. Mon. Wea. Rev. 2017, 145, 2833–2853.

48. Cintra, R.; de Campos Velho, H.; Cocke, S. Tracking the Model: Data Assimilation by Artificial Neural Network. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 403–410.

49. Yuval, J.; O’Gorman, P.A.; Hill, C.N. Use of Neural Networks for Stable, Accurate and Physically Consistent Parameterization of Subgrid Atmospheric Processes with Good Performance at Reduced Precision. Geophys. Res. Lett. 2021, 48, e2020GL091363.

50. McGovern, A.; Elmore, K.L.; Gagne, D.J.; Haupt, S.E.; Karstens, C.D.; Lagerquist, R.; Smith, T.; Williams, J.K. Using Artificial Intelligence to Improve Real-Time Decision-Making for High-Impact Weather. Bull. Am. Meteorol. Soc. 2017, 98, 2073–2090.

51. Chen, G.; Wang, W.-C. Short-Term Precipitation Prediction for Contiguous United States Using Deep Learning. Geophys. Res. Lett. 2022, 49, e2022GL097904.

52. Espeholt, L.; Agrawal, S.; Sønderby, C.; Kumar, M.; Heek, J.; Bromberg, C.; Gazen, C.; Carver, R.; Andrychowicz, M.; Hickey, J.; et al. Deep Learning for Twelve Hour Precipitation Forecasts. Nat. Commun. 2022, 13, 5145.

53. Chen, X.; Wang, M.; Wang, S.; Chen, Y.; Wang, R.; Zhao, C.; Hu, X. Weather Radar Nowcasting for Extreme Precipitation Prediction Based on the Temporal and Spatial Generative Adversarial Network. Atmosphere 2022, 13, 1291.

54. Chen, Y.; Huang, G.; Wang, Y.; Tao, W.; Tian, Q.; Yang, K.; Zheng, J.; He, H. Improving the Heavy Rainfall Forecasting Using a Weighted Deep Learning Model. Front. Environ. Sci. 2023, 11, 1116672.

55. Wang, J.; Wang, X.; Guan, J.; Zhang, L.; Zhang, F.; Chang, T. STPF-Net: Short-Term Precipitation Forecast Based on a Recurrent Neural Network. Remote Sens. 2024, 16, 52.

56. Meng, F.; Yang, K.; Yao, Y.; Wang, Z.; Song, T. Tropical Cyclone Intensity Probabilistic Forecasting System Based on Deep Learning. Int. J. Intell. Syst. 2023, 2023, 3569538.

57. Wu, Y.; Geng, X.; Liu, Z.; Shi, Z. Tropical Cyclone Forecast Using Multitask Deep Learning Framework. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6503505.

58. Khodayar, M.; Wang, J.; Manthouri, M. Interval Deep Generative Neural Network for Wind Speed Forecasting. IEEE Trans. Smart Grid 2019, 10, 3974–3989.

59. Jiang, S.; Fan, H.; Wang, C. Improvement of Typhoon Intensity Forecasting by Using a Novel Spatio-Temporal Deep Learning Model. Remote Sens. 2022, 14, 5205.

60. Chiranjeevi, B.S.; Shreegagana, B.; Bhavana, H.S.; Karanth, I.; Asha Rani, K.P.; Gowrishankar, S. Weather Prediction Analysis Using Classifiers and Regressors in Machine Learning. In Proceedings of the 2023 5th International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 23–25 January 2023; p. 900, ISBN 978-1-66547-467-2.

61. Scher, S. Toward Data-Driven Weather and Climate Forecasting: Approximating a Simple General Circulation Model with Deep Learning. Geophys. Res. Lett. 2018, 45, 12616–12622.

62. Dueben, P.D.; Bauer, P. Challenges and Design Choices for Global Weather and Climate Models Based on Machine Learning. Geosci. Model. Dev. 2018, 11, 3999–4009.

63. Scher, S.; Messori, G. Weather and Climate Forecasting with Neural Networks: Using General Circulation Models (GCMs) with Different Complexity as a Study Ground. Geosci. Model. Dev. 2019, 12, 2797–2809.

64. Weyn, J.A.; Durran, D.R.; Caruana, R. Can Machines Learn to Predict Weather? Using Deep Learning to Predict Gridded 500-hPa Geopotential Height from Historical Weather Data. J. Adv. Model. Earth Syst. 2019, 11, 2680–2693.

65. Lam, R.; Sanchez-Gonzalez, A.; Willson, M.; Wirnsberger, P.; Fortunato, M.; Alet, F.; Ravuri, S.; Ewalds, T.; Eaton-Rosen, Z.; Hu, W.; et al. Learning Skillful Medium-Range Global Weather Forecasting. Science 2023, 382, 1416–1421.

66. He, T.; Yu, S.; Wang, Z.; Li, J.; Chen, Z. From Data Quality to Model Quality: An Exploratory Study on Deep Learning. In Proceedings of the 11th Asia-Pacific Symposium on Internetware, Fukuoka, Japan, 28–29 October 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 1–6.

67. Whang, S.E.; Lee, J.-G. Data Collection and Quality Challenges for Deep Learning. Proc. VLDB Endow. 2020, 13, 3429–3432.

68. Hersbach, H.; Bell, B.; Berrisford, P.; Hirahara, S.; Horányi, A.; Muñoz-Sabater, J.; Nicolas, J.; Peubey, C.; Radu, R.; Schepers, D.; et al. The ERA5 Global Reanalysis. Q. J. R. Meteorol. Soc. 2020, 146, 1999–2049.

69. Garg, S.; Rasp, S.; Thuerey, N. WeatherBench Probability: A Benchmark Dataset for Probabilistic Medium-Range Weather Forecasting along with Deep Learning Baseline Models. arXiv 2022, arXiv:2205.00865.

70. Rasp, S.; Hoyer, S.; Merose, A.; Langmore, I.; Battaglia, P.; Russel, T.; Sanchez-Gonzalez, A.; Yang, V.; Carver, R.; Agrawal, S.; et al. WeatherBench 2: A Benchmark for the next Generation of Data-Driven Global Weather Models. arXiv 2023, arXiv:2308.15560.

71. Watson-Parris, D.; Rao, Y.; Olivié, D.; Seland, Ø.; Nowack, P.; Camps-Valls, G.; Stier, P.; Bouabid, S.; Dewey, M.; Fons, E.; et al. ClimateBench v1.0: A Benchmark for Data-Driven Climate Projections. J. Adv. Model. Earth Syst. 2022, 14, e2021MS002954.

72. Eyring, V.; Bony, S.; Meehl, G.A.; Senior, C.A.; Stevens, B.; Stouffer, R.J.; Taylor, K.E. Overview of the Coupled Model Intercomparison Project Phase 6 (CMIP6) Experimental Design and Organization. Geosci. Model. Dev. 2016, 9, 1937–1958.

73. Kurth, T.; Subramanian, S.; Harrington, P.; Pathak, J.; Mardani, M.; Hall, D.; Miele, A.; Kashinath, K.; Anandkumar, A. FourCastNet: Accelerating Global High-Resolution Weather Forecasting Using Adaptive Fourier Neural Operators. In Proceedings of the Platform for Advanced Scientific Computing Conference, Davos, Switzerland, 26–28 June 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 1–11.

74. Chen, K.; Han, T.; Gong, J.; Bai, L.; Ling, F.; Luo, J.-J.; Chen, X.; Ma, L.; Zhang, T.; Su, R.; et al. FengWu: Pushing the Skillful Global Medium-Range Weather Forecast beyond 10 Days Lead. arXiv 2023, arXiv:2304.02948.

75. Chen, L.; Zhong, X.; Zhang, F.; Cheng, Y.; Xu, Y.; Qi, Y.; Li, H. FuXi: A Cascade Machine Learning Forecasting System for 15-Day Global Weather Forecast. npj Clim. Atmos. Sci. 2023, 6, 190.

76. Kochkov, D.; Yuval, J.; Langmore, I.; Norgaard, P.; Smith, J.; Mooers, G.; Klöwer, M.; Lottes, J.; Rasp, S.; Düben, P.; et al. Neural General Circulation Models. arXiv 2023, arXiv:2311.07222.

77. Han, T.; Guo, S.; Ling, F.; Chen, K.; Gong, J.; Luo, J.; Gu, J.; Dai, K.; Ouyang, W.; Bai, L. FengWu-GHR: Learning the Kilometer-Scale Medium-Range Global Weather Forecasting. arXiv 2024, arXiv:2402.00059.

78. Weyn, J.A.; Durran, D.R.; Caruana, R. Improving Data-Driven Global Weather Prediction Using Deep Convolutional Neural Networks on a Cubed Sphere. J. Adv. Model. Earth Syst. 2020, 12, e2020MS002109.

79. Rasp, S.; Thuerey, N. Data-Driven Medium-Range Weather Prediction with a Resnet Pretrained on Climate Simulations: A New Model for WeatherBench. J. Adv. Model. Earth Syst. 2021, 13, e2020MS002405.

80. Keisler, R. Forecasting Global Weather with Graph Neural Networks. arXiv 2022, arXiv:2202.07575.

81. Nguyen, T.; Brandstetter, J.; Kapoor, A.; Gupta, J.K.; Grover, A. ClimaX: A Foundation Model for Weather and Climate. arXiv 2023, arXiv:2301.10343.

82. Bhardwaj, R.; Duhoon, V. Weather Forecasting Using Soft Computing Techniques. In Proceedings of the 2018 International Conference on Computing, Power and Communication Technologies (GUCON), Greater Noida, India, 28–29 September 2018; pp. 1111–1115.

83. Ling, F.; Ouyang, L.; Larbi, B.R.; Luo, J.-J.; Zhong, X.; Bai, L. Is Artificial Intelligence Providing the Second Revolution for Weather Forecasting? arXiv 2024, arXiv:2401.16669.

84. Olivetti, L.; Messori, G. Advances and Prospects of Deep Learning for Medium-Range Extreme Weather Forecasting. Geosci. Model. Dev. 2024, 17, 2347–2358.

85. Cong, S.; Zhou, Y. A Review of Convolutional Neural Network Architectures and Their Optimizations. Artif. Intell. Rev. 2023, 56, 1905–1969.

86. Zhou, T.; Ma, Z.; Wang, X.; Wen, Q.; Sun, L.; Yin, T.Y.W.; Jin, R. FiLM: Frequency Improved Legendre Memory Model for Long-Term Time Series Forecasting. Adv. Neural Inf. Process. Syst. 2022, 35, 12677–12690.

87. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2015; Volume 9351, pp. 234–241. ISBN 978-3-319-24573-7.

88. Schultz, M.G.; Betancourt, C.; Gong, B.; Kleinert, F.; Langguth, M.; Leufen, L.H.; Mozaffari, A.; Stadtler, S. Can Deep Learning Beat Numerical Weather Prediction? Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2021, 379, 20200097.

89. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

90. Scarselli, F.; Gori, M.; Tsoi, A.C.; Hagenbuchner, M.; Monfardini, G. The Graph Neural Network Model. IEEE Trans. Neural Netw. 2009, 20, 61–80.

91. Alet, F.; Jeewajee, A.K.; Villalonga, M.B.; Rodriguez, A.; Lozano-Perez, T.; Kaelbling, L. Graph Element Networks: Adaptive, Structured Computation and Memory. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; pp. 212–222.

92. Sanchez-Gonzalez, A.; Godwin, J.; Pfaff, T.; Ying, R.; Leskovec, J.; Battaglia, P. Learning to Simulate Complex Physics with Graph Networks. In Proceedings of the 37th International Conference on Machine Learning, Virtual, 13–18 July 2020; pp. 8459–8468.

93. Pfaff, T.; Fortunato, M.; Sanchez-Gonzalez, A.; Battaglia, P.W. Learning Mesh-Based Simulation with Graph Networks. arXiv 2020, arXiv:2010.03409.

94. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010.

95. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929.

96. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

97. Liu, Z.; Hu, H.; Lin, Y.; Yao, Z.; Xie, Z.; Wei, Y.; Ning, J.; Cao, Y.; Zhang, Z.; Dong, L.; et al. Swin Transformer V2: Scaling Up Capacity and Resolution. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 12009–12019.

98. Guibas, J.; Mardani, M.; Li, Z.; Tao, A.; Anandkumar, A.; Catanzaro, B. Adaptive Fourier Neural Operators: Efficient Token Mixers for Transformers. arXiv 2022, arXiv:2111.13587.

99. Watt-Meyer, O.; Dresdner, G.; McGibbon, J.; Clark, S.K.; Henn, B.; Duncan, J.; Brenowitz, N.D.; Kashinath, K.; Pritchard, M.S.; Bonev, B.; et al. ACE: A Fast, Skillful Learned Global Atmospheric Model for Climate Prediction. arXiv 2023, arXiv:2310.02074.

100. Bonev, B.; Kurth, T.; Hundt, C.; Pathak, J.; Baust, M.; Kashinath, K.; Anandkumar, A. Spherical Fourier Neural Operators: Learning Stable Dynamics on the Sphere. In Proceedings of the 40th International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023.

101. Temam, R. Navier-Stokes Equations: Theory and Numerical Analysis; American Mathematical Soc.: Washington, DC, USA, 2001; Volume 343, ISBN 0-8218-2737-5.

102. Bauer, P.; Quintino, T.; Wedi, N.; Bonanni, A.; Chrust, M.; Deconinck, W.; Diamantakis, M.; Düben, P.; English, S.; Flemming, J. The ECMWF Scalability Programme: Progress and Plans; European Centre for Medium Range Weather Forecasts: Reading, UK, 2020.

103. Lofstead, J. Weather Forecasting Limitations in the Developing World. In Proceedings of the Distributed, Ambient and Pervasive Interactions; Streitz, N.A., Konomi, S., Eds.; Springer Nature: Cham, Switzerland, 2023; pp. 86–96.

104. Xu, R.; Han, F.; Ta, Q. Deep Learning at Scale on NVIDIA V100 Accelerators. In Proceedings of the 2018 IEEE/ACM Performance Modeling, Benchmarking and Simulation of High Performance Computer Systems (PMBS), Dallas, TX, USA, 12 November 2018; pp. 23–32.

105. Jeon, W.; Ko, G.; Lee, J.; Lee, H.; Ha, D.; Ro, W.W. Chapter Six – Deep Learning with GPUs. In Advances in Computers; Kim, S., Deka, G.C., Eds.; Hardware Accelerator Systems for Artificial Intelligence and Machine Learning; Elsevier: Amsterdam, The Netherlands, 2021; Volume 122, pp. 167–215.

106. Jouppi, N.; Kurian, G.; Li, S.; Ma, P.; Nagarajan, R.; Nai, L.; Patil, N.; Subramanian, S.; Swing, A.; Towles, B.; et al. TPU v4: An Optically Reconfigurable Supercomputer for Machine Learning with Hardware Support for Embeddings. In Proceedings of the 50th Annual International Symposium on Computer Architecture, Orlando, FL, USA, 17–21 June 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 1–14.

107. Shen, B.-W.; Pielke, R.A.; Zeng, X.; Baik, J.-J.; Faghih-Naini, S.; Cui, J.; Atlas, R. Is Weather Chaotic?: Coexistence of Chaos and Order within a Generalized Lorenz Model. Bull. Am. Meteorol. Soc. 2021, 102, E148–E158.

108. McGovern, A.; Lagerquist, R.; Gagne, D.J., II; Jergensen, G.E.; Elmore, K.L.; Homeyer, C.R.; Smith, T. Making the Black Box More Transparent: Understanding the Physical Implications of Machine Learning. Bull. Am. Meteorol. Soc. 2019, 100, 2175–2199.

109. Liu, Q.; Lou, X.; Yan, Z.; Qi, Y.; Jin, Y.; Yu, S.; Yang, X.; Zhao, D.; Xia, J. Deep-Learning Post-Processing of Short-Term Station Precipitation Based on NWP Forecasts. Atmos. Res. 2023, 295, 107032.

110. Hewamalage, H.; Ackermann, K.; Bergmeir, C. Forecast Evaluation for Data Scientists: Common Pitfalls and Best Practices. Data Min. Knowl. Discov. 2023, 37, 788–832.

111. Bouallègue, Z.B.; Clare, M.C.A.; Magnusson, L.; Gascón, E.; Maier-Gerber, M.; Janoušek, M.; Rodwell, M.; Pinault, F.; Dramsch, J.S.; Lang, S.T.K.; et al. The Rise of Data-Driven Weather Forecasting: A First Statistical Assessment of Machine Learning-Based Weather Forecasts in an Operational-like Context. Bull. Am. Meteorol. Soc. 2024; published online ahead of print.

112. Saleem, H.; Salim, F.; Purcell, C. Conformer: Embedding Continuous Attention in Vision Transformer for Weather Forecasting. arXiv 2024, arXiv:2402.17966.

113. Ding, N.; Qin, Y.; Yang, G.; Wei, F.; Yang, Z.; Su, Y.; Hu, S.; Chen, Y.; Chan, C.-M.; Chen, W.; et al. Parameter-Efficient Fine-Tuning of Large-Scale Pre-Trained Language Models. Nat. Mach. Intell. 2023, 5, 220–235.

114. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models Are Few-Shot Learners. In Proceedings of the Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 1877–1901.

115. Shawki, N.; Nunez, R.R.; Obeid, I.; Picone, J. On Automating Hyperparameter Optimization for Deep Learning Applications. In Proceedings of the 2021 IEEE Signal Processing in Medicine and Biology Symposium (SPMB), Philadelphia, PA, USA, 4 December 2021; pp. 1–7.

116. Park, H.; Nam, Y.; Kim, J.-H.; Choo, J. HyperTendril: Visual Analytics for User-Driven Hyperparameter Optimization of Deep Neural Networks. IEEE Trans. Vis. Comput. Graph. 2021, 27, 1407–1416.

117. Bergstra, J.; Yamins, D.; Cox, D. Hyperopt: A Python Library for Optimizing the Hyperparameters of Machine Learning Algorithms. In Proceedings of the 12th Python in Science Conference, Austin, TX, USA, 24–29 June 2013; pp. 13–19.

118. Akiba, T.; Sano, S.; Yanase, T.; Ohta, T.; Koyama, M. Optuna: A Next-Generation Hyperparameter Optimization Framework. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 2623–2631.

119. Golovin, D.; Solnik, B.; Moitra, S.; Kochanski, G.; Karro, J.; Sculley, D. Google Vizier: A Service for Black-Box Optimization. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 1487–1495.

| Basic Model | Description | Methods |
|---|---|---|
| MLP (Multi-Layer Perceptron) | Early neural network suited to nonlinear problems | Dueben et al. [62] |
| CNNs (Convolutional Neural Networks) | Efficiently process spatial data and extract features | Scher et al. [61]; Weyn et al. [64]; Weyn et al. [78] |
| ResNet (Residual Network) | Enables deeper networks through efficient residual connections | Rasp et al. [79] |
| GNN (Graph Neural Network) | Captures spatial and temporal dynamics critical in fluid dynamics | Keisler et al. [80]; GraphCast [65] |
| Transformer | Processes input data through self-attention and feedforward layers | FourCastNet [73]; FengWu [74]; Pangu [24]; ClimaX [81]; FengWu-GHR [77]; FuXi [75] |
| EPD (Encode–Process–Decode) | A differentiable model combined with deep learning | NeuralGCM [76] |

| Hardware | Performance (TFLOPS) | Memory (GB) | Memory Bandwidth |
|---|---|---|---|
| Cloud TPU v4 | 275 | 32 | 1200 GB/s |
| NVIDIA A100 GPU | 312–1248 | 40 | 1.6 TB/s |
| NVIDIA V100 GPU | 112–125 | 32/16 | 900 GB/s |

| Method | Hardware | Training Cost | Inference Cost |
|---|---|---|---|
| Weyn et al. [78] | 1 NVIDIA V100 GPU | 2–3 days | 4-week forecast in less than 0.2 s on one GPU |
| Keisler et al. [80] | 1 NVIDIA A100 GPU | 5.5 days | 5-day forecast in about 0.8 s on one GPU |
| FourCastNet [73] | 64 NVIDIA A100 GPUs | 16 h | 1-week forecast in less than 2 s on one GPU |
| Pangu [24] | 192 NVIDIA V100 GPUs | 16 days | 5-day forecast in 1.4 s on one GPU |
| FengWu [74] | 32 NVIDIA A100 GPUs | 17 days | 10-day forecast in 0.6 s on one GPU |
| GraphCast [65] | 32 Cloud TPU v4 | about 4 weeks | 10-day forecast in less than 1 min on one TPU |
| ACE [99] | 4 NVIDIA A100 GPUs | 63 h | 1-day simulation in 1 s on one GPU |
| NeuralGCM [76] | 16–256 Cloud TPU v4 | 1 day to 3 weeks | 10-day forecast in 2.5 s to 119 s on one TPU, depending on spatial resolution |
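
The training costs in this table can be put on a rough common footing by multiplying the device count by the wall-clock training time. The Python sketch below does this for the rows that report a single device count and a single duration; the class and function names are hypothetical, and device-days is an illustrative unit chosen here, not a metric from the article. Because V100 and A100 GPUs differ in throughput, the results indicate scale only and are not a like-for-like comparison.

```python
from dataclasses import dataclass

HOURS_PER_DAY = 24.0


@dataclass(frozen=True)
class TrainingRun:
    """One row of the training-cost table (single-valued rows only)."""
    method: str
    devices: int
    device_type: str
    wall_clock_hours: float

    def device_hours(self) -> float:
        # Aggregate accelerator time: devices running in parallel x duration.
        return self.devices * self.wall_clock_hours


def device_days(run: TrainingRun) -> float:
    """Convert aggregate accelerator time from hours to days."""
    return run.device_hours() / HOURS_PER_DAY


# Values copied from the table; rows with ranges (e.g. "2-3 days",
# "about 4 weeks", "1 day to 3 weeks") are left out on purpose.
RUNS = [
    TrainingRun("Keisler et al. [80]", 1, "NVIDIA A100 GPU", 5.5 * HOURS_PER_DAY),
    TrainingRun("FourCastNet [73]", 64, "NVIDIA A100 GPU", 16.0),
    TrainingRun("Pangu [24]", 192, "NVIDIA V100 GPU", 16 * HOURS_PER_DAY),
    TrainingRun("FengWu [74]", 32, "NVIDIA A100 GPU", 17 * HOURS_PER_DAY),
    TrainingRun("ACE [99]", 4, "NVIDIA A100 GPU", 63.0),
]

if __name__ == "__main__":
    for run in sorted(RUNS, key=device_days):
        print(f"{run.method:<20} {device_days(run):>8.1f} device-days ({run.device_type})")
```

Under these assumptions the reported runs span roughly three orders of magnitude in aggregate accelerator time, which is worth keeping in mind when reading the single-device inference times in the last column.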

| Method | Forecast Time (Days) | Atmospheric Variables | Vertical Pressure Levels | Surface Variables | Total Number of Variables | Spatial Resolution (°) |
|---|---|---|---|---|---|---|
| Pangu [24] | 7 | 5 | 13 | 4 | 69 | 0.25 |
| GraphCast [65] | 10 | 6 | 37 | 5 | 227 | 0.25 |
| FengWu [74] | 11.5 | 5 | 37 | 4 | 189 | 2.25 |
| FuXi [75] | 14.5 | 5 | 13 | 5 | 70 | 0.25 |
| FengWu-GHR [77] | 10 | 5 | 13 | 4 | 69 | 0.09 |
| NeuralGCM [76] | 15 | 7 | 32 | 0 | 224 | 0.7, 1.4, 2.8 |
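
The total variable counts in this table appear to follow one rule: each atmospheric variable is defined on every vertical pressure level, and the surface variables are added once. The short Python check below is a sketch under that assumption; the relation is inferred from the table's numbers and is not stated in the text, and `ModelConfig` and `check_totals` are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelConfig:
    """Variable counts for one model, as listed in the table."""
    name: str
    atmospheric_vars: int
    pressure_levels: int
    surface_vars: int
    reported_total: int

    def computed_total(self) -> int:
        # Assumed rule: every atmospheric variable exists on every pressure
        # level, and the surface variables are counted once.
        return self.atmospheric_vars * self.pressure_levels + self.surface_vars


CONFIGS = [
    ModelConfig("Pangu [24]", 5, 13, 4, 69),
    ModelConfig("GraphCast [65]", 6, 37, 5, 227),
    ModelConfig("FengWu [74]", 5, 37, 4, 189),
    ModelConfig("FuXi [75]", 5, 13, 5, 70),
    ModelConfig("FengWu-GHR [77]", 5, 13, 4, 69),
    ModelConfig("NeuralGCM [76]", 7, 32, 0, 224),
]


def check_totals(configs):
    """Map each model name to whether the assumed rule matches the table."""
    return {c.name: c.computed_total() == c.reported_total for c in configs}


if __name__ == "__main__":
    for name, ok in check_totals(CONFIGS).items():
        print(f"{name:<18} {'consistent' if ok else 'MISMATCH'}")
```

Every row is consistent with the assumed rule, which makes the table easier to read: the total is the number of channels a model handles per grid point, so GraphCast and NeuralGCM process roughly three times as many fields per grid point as Pangu or FuXi.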

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, Y.; Xue, W. Data-Driven Weather Forecasting and Climate Modeling from the Perspective of Development. Atmosphere 2024, 15, 689. https://doi.org/10.3390/atmos15060689