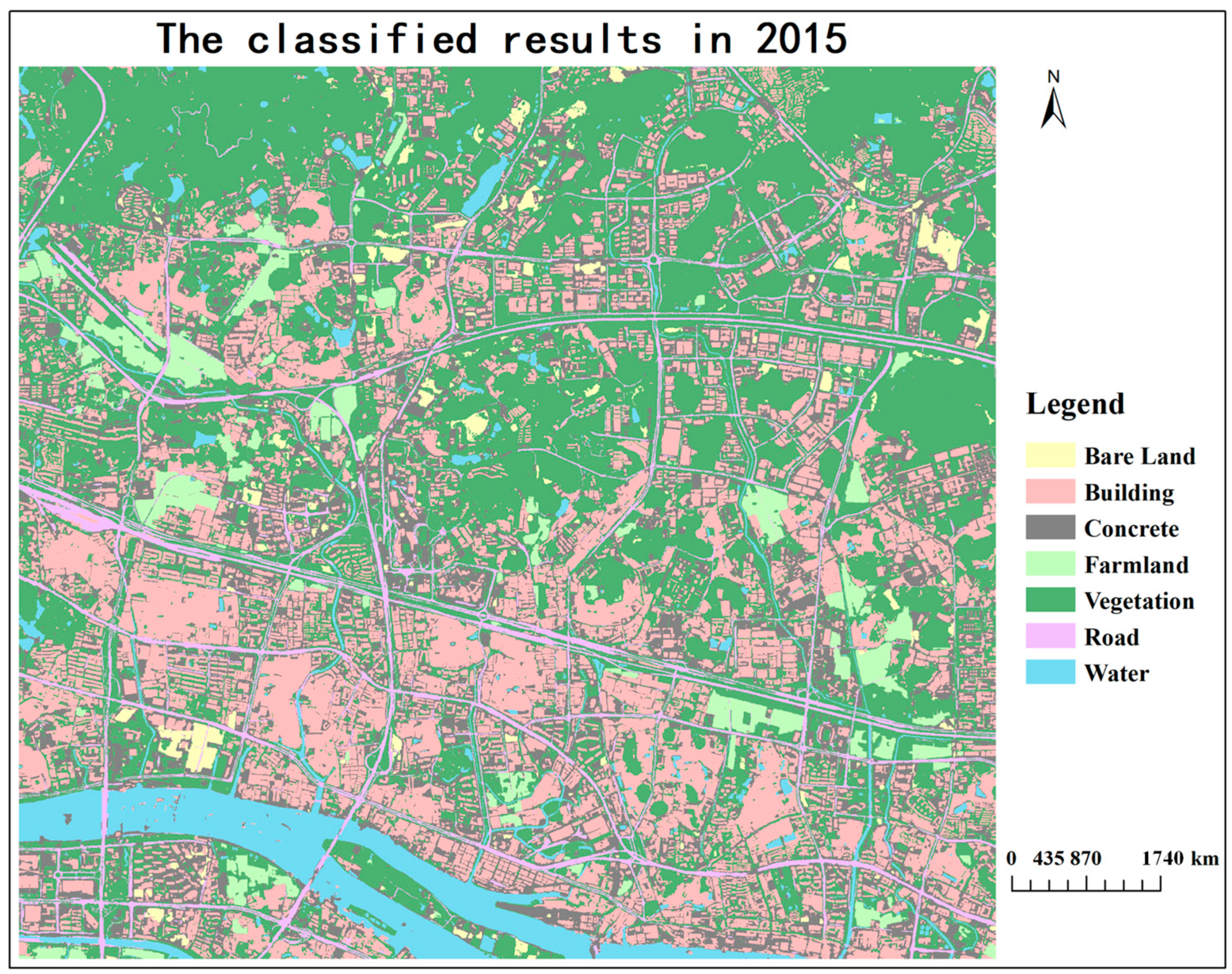

3.1. The Classification Results for 2015

Table 2 shows the accuracy of the Deeplab V3+-based classification model for the 2015 GF-2 remote sensing image on the validation set. The loss decreased from 2.3 to about 0.29, indicating that training of the classification model converged well. On the validation set, the trained model achieved a pixel accuracy of 0.89 and a mean intersection over union (MIoU) of 0.78, showing that the classification results were broadly consistent with the distribution of real ground objects. Except for the concrete and road categories, the IoU values of all land cover categories exceeded 0.8, and that of the farmland category exceeded 0.9. This indicates that the model classified these five categories well and performed best on farmland. The lower IoU values of the concrete and road categories arise because roads in downtown Guangzhou are made of materials similar to concrete and are difficult to distinguish by their spectral characteristics, so the model struggles to separate the road and concrete categories.
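For reference, pixel accuracy, per-class IoU, and MIoU of the kind reported in Table 2 are conventionally computed from a pixel-level confusion matrix. The sketch below is illustrative only and is not the authors' evaluation code: it assumes rows are reference classes and columns are predicted classes, and the function name `segmentation_metrics` and the example counts are hypothetical.

```python
from typing import List, Optional, Tuple


def segmentation_metrics(cm: List[List[int]]) -> Tuple[float, List[Optional[float]], float]:
    """Pixel accuracy, per-class IoU, and mean IoU from a square confusion matrix.

    cm[i][j] = number of pixels whose reference class is i and predicted class is j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))
    pixel_accuracy = correct / total

    ious: List[Optional[float]] = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                        # reference c, predicted as another class
        fp = sum(cm[r][c] for r in range(n)) - tp   # predicted c, reference is another class
        union = tp + fp + fn
        ious.append(tp / union if union else None)  # None: class absent from both maps

    # MIoU averages only the classes that actually occur
    present = [v for v in ious if v is not None]
    mean_iou = sum(present) / len(present)
    return pixel_accuracy, ious, mean_iou


# Hypothetical two-class example (not data from this study)
pa, ious, miou = segmentation_metrics([[8, 2], [1, 9]])
print(pa, ious, miou)
```

IoU penalizes both false positives and false negatives of a class, which is why confusion between two spectrally similar classes such as road and concrete lowers the IoU of both.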

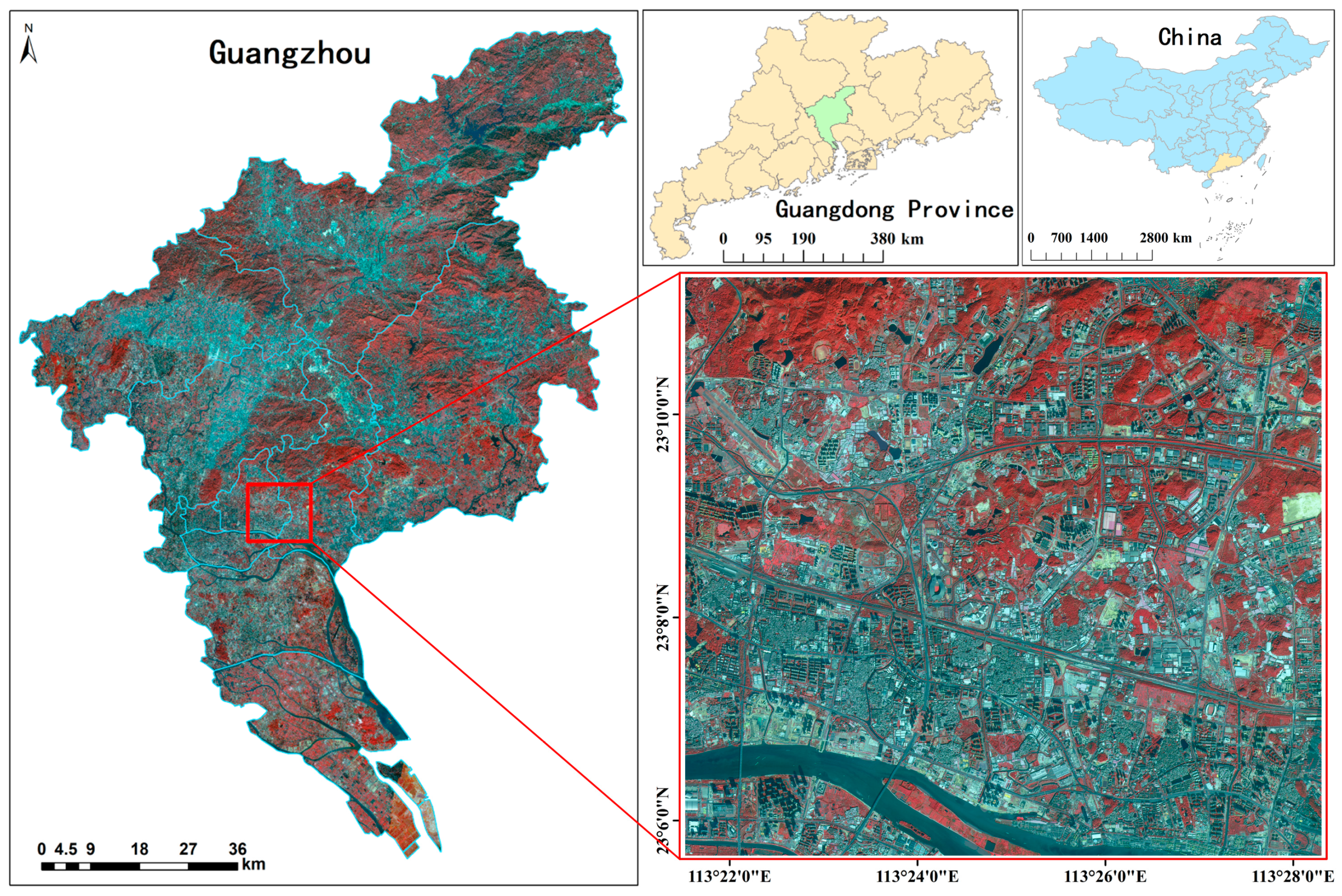

The trained classification model was applied to the 2015 high-resolution remote sensing images of the study area, and the results are shown in Figure 3. We selected 200 evenly distributed areas of interest as validation samples, computed an overall accuracy of 0.875 and a kappa coefficient of 0.8326 from the confusion matrix of the classification results, and calculated the user accuracy (UA) and producer accuracy (PA) of each land use category, as shown in Table 3. The table shows that the concrete and road categories were classified less accurately, whereas the other ground object categories achieved accuracies above 0.8, which is consistent with the model's performance on the validation dataset. In addition, the best-performing categories on the validation set were farmland and vegetation, while the confusion matrix calculated from the validation samples showed that the best-performing categories in terms of UA and PA were vegetation and building, respectively. Taken together, these results indicate that vegetation is the land use category with the best classification effect. According to the UA and PA statistics, the PA of the building category was similar to that of farmland, but its UA was much higher. This may be because buildings occupied a much larger proportion of the study area than farmland, so the validation samples contained many samples whose true class was building, and the proportion of misclassification in this category was relatively small.
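The overall accuracy, kappa coefficient, UA, and PA reported in Table 3 follow standard confusion-matrix definitions. A minimal sketch is given below, assuming rows hold the reference (validation) classes and columns the classified classes; the function name and the example matrix are hypothetical.

```python
from typing import List, Tuple


def accuracy_assessment(cm: List[List[int]]) -> Tuple[float, float, List[float], List[float]]:
    """Overall accuracy, Cohen's kappa, user's accuracy (UA), and producer's accuracy (PA).

    Rows are reference (validation) classes, columns are classified classes.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    row_sums = [sum(cm[i]) for i in range(n)]                       # reference totals
    col_sums = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # classified totals
    diag = [cm[i][i] for i in range(n)]

    oa = sum(diag) / total
    # Expected agreement by chance, from the row and column marginals
    pe = sum(r * c for r, c in zip(row_sums, col_sums)) / (total * total)
    kappa = (oa - pe) / (1 - pe)

    nan = float("nan")
    ua = [d / c if c else nan for d, c in zip(diag, col_sums)]  # 1 - commission error
    pa = [d / r if r else nan for d, r in zip(diag, row_sums)]  # 1 - omission error
    return oa, kappa, ua, pa


# Hypothetical two-class example (not data from this study)
print(accuracy_assessment([[8, 2], [1, 9]]))
```

Because UA divides by the classified total of a class and PA by its reference total, a class can have a similar PA to another class but a very different UA, which is the pattern discussed above for building and farmland.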

3.2. The Change Detection Results for 2020

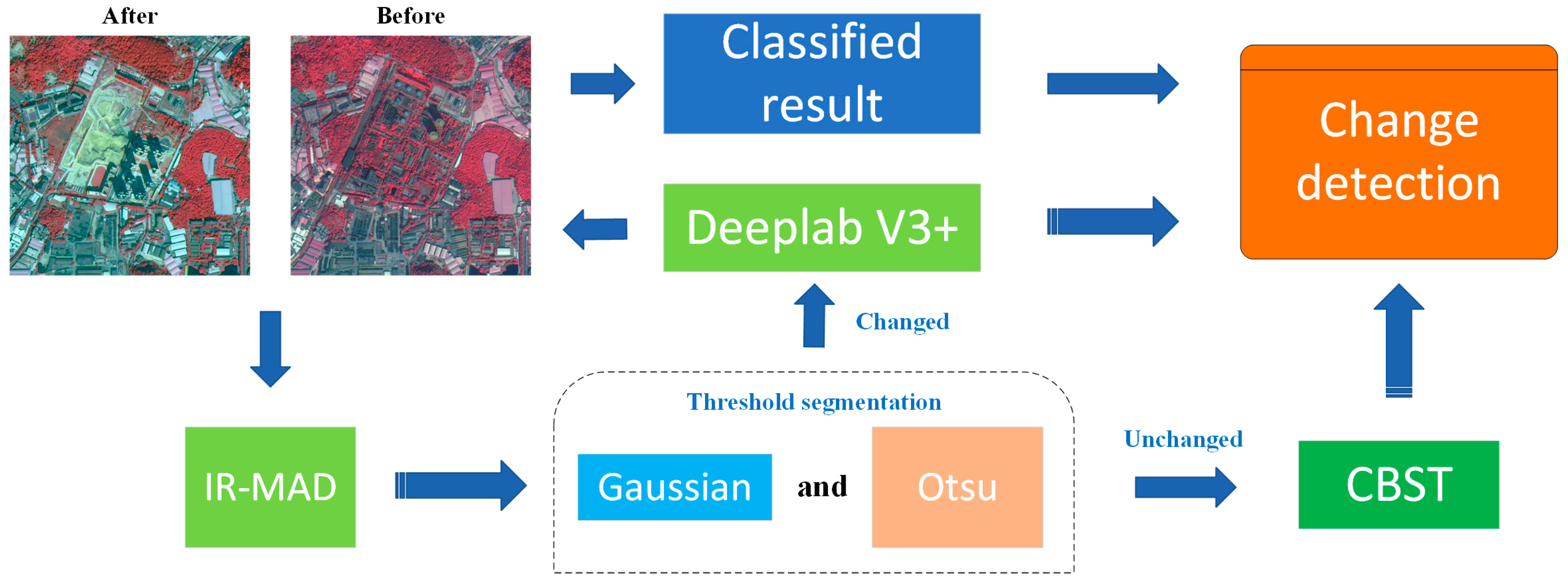

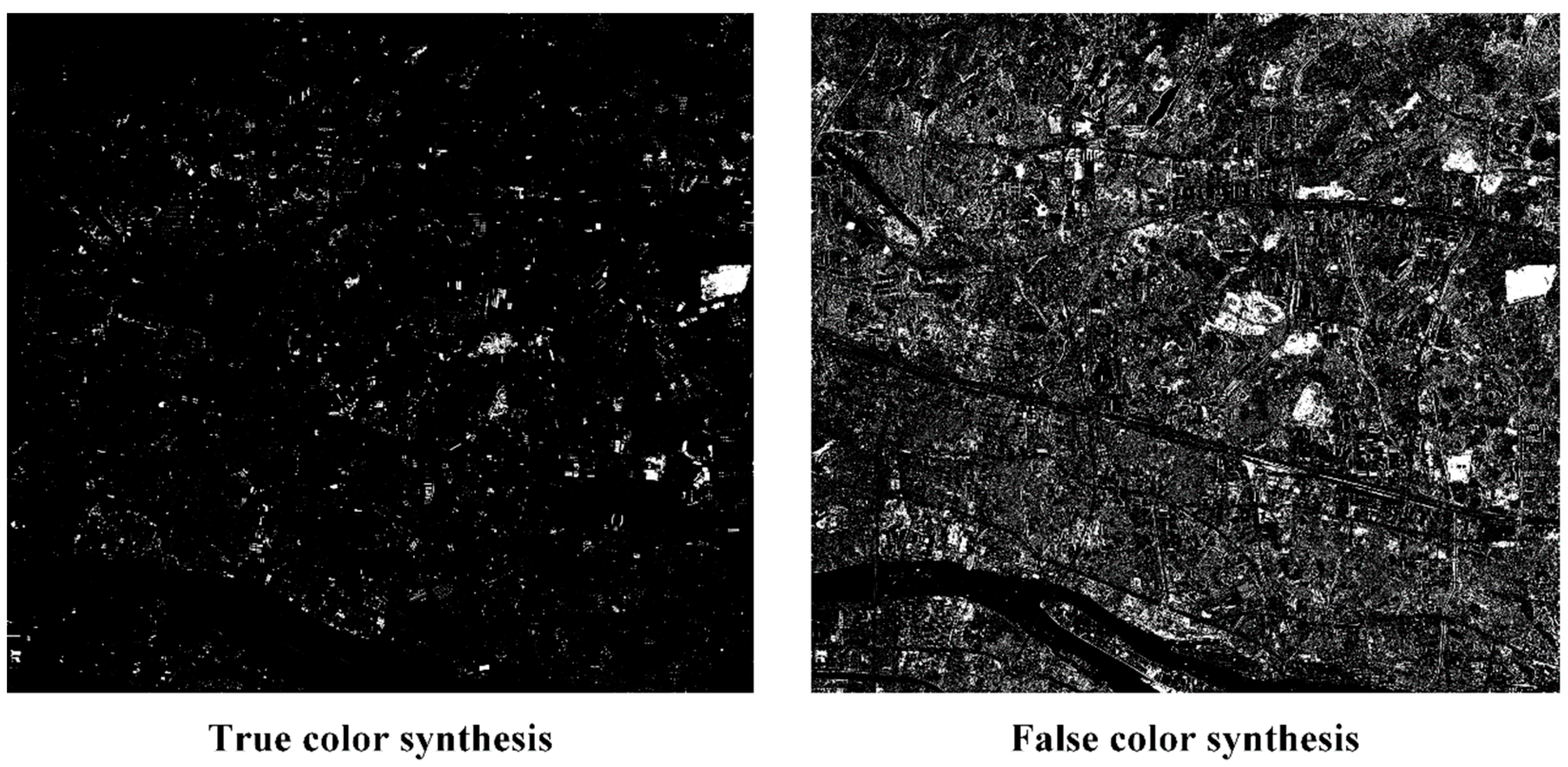

In the preliminary change detection experiments on the 2015 and 2020 high-resolution remote sensing images of the study area, based on the IR-MAD optimization scheme and OTSU threshold segmentation, the results obtained by feeding true-color and false-color composites of the GF-2 images into IR-MAD differed significantly, as shown in Figure 4. Most of the changed areas were at least partially detected in both results; however, the false-color result on the right also judged some areas that had not actually changed as partially changed.

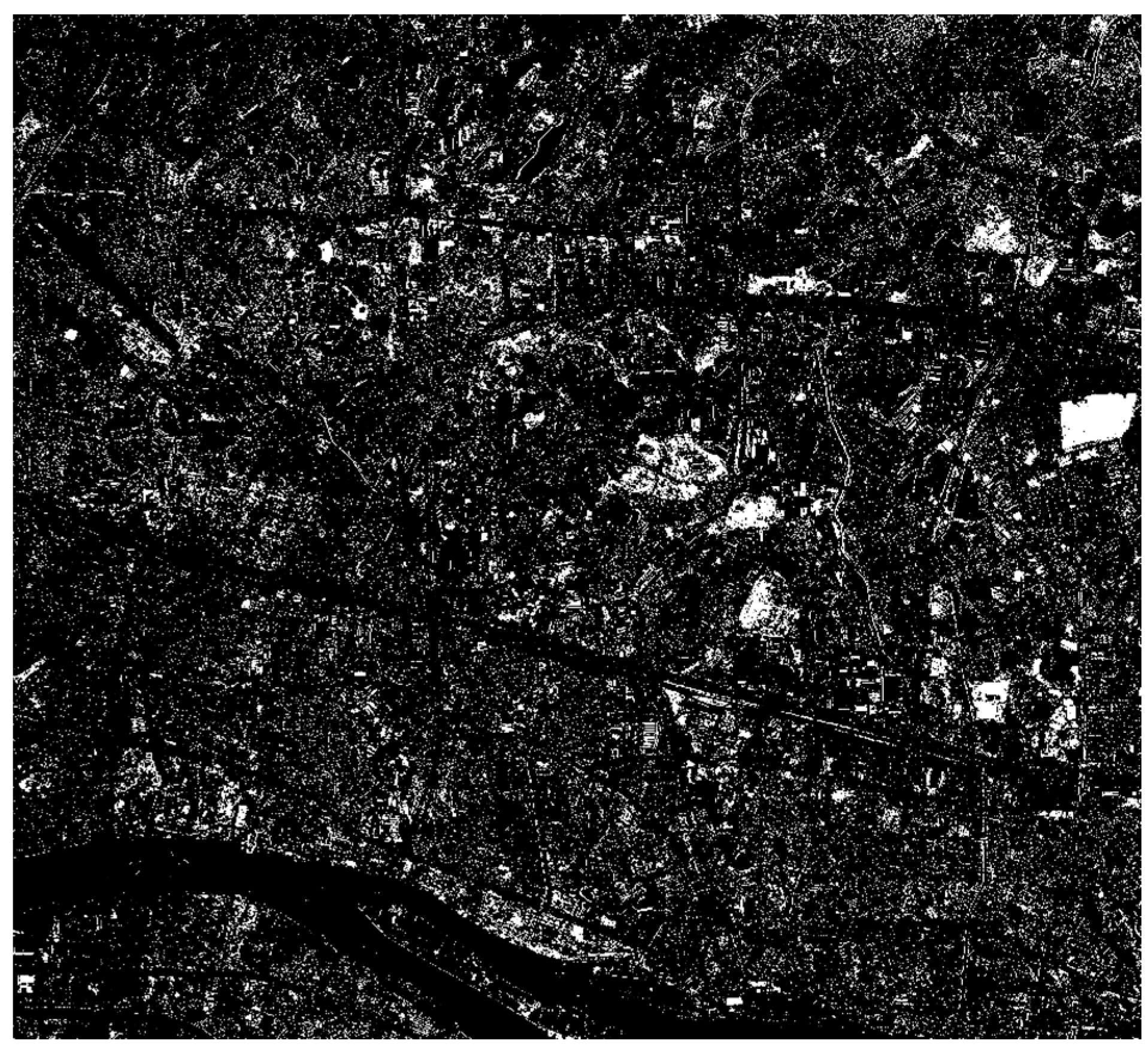

Therefore, in the experiment, the change detection difference maps obtained from the true-color and false-color composites were each smoothed with a Gaussian filter; the two filtered difference maps were then superimposed and averaged cell by cell. Finally, the averaged difference map was segmented with the OTSU threshold to obtain the preliminary change detection results shown in Figure 5.
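The smoothing, averaging, and thresholding steps above can be sketched as follows. This is a pure-Python illustration under stated assumptions, not the authors' implementation: the two difference maps (for example, IR-MAD change statistics) are assumed to be already computed, and the Gaussian `sigma`, edge replication at borders, and the 256-bin histogram are hypothetical choices. In practice, `scipy.ndimage.gaussian_filter` and `skimage.filters.threshold_otsu` provide the same operations.

```python
import math
from typing import List

Grid = List[List[float]]


def gaussian_kernel_1d(sigma: float, radius: int) -> List[float]:
    """Normalized 1-D Gaussian weights for offsets -radius..radius."""
    wts = [math.exp(-(x * x) / (2.0 * sigma * sigma)) for x in range(-radius, radius + 1)]
    s = sum(wts)
    return [v / s for v in wts]


def gaussian_filter(img: Grid, sigma: float = 1.0) -> Grid:
    """Separable Gaussian smoothing; border pixels are replicated (clamped)."""
    radius = max(1, int(3 * sigma))
    k = gaussian_kernel_1d(sigma, radius)
    h, w = len(img), len(img[0])
    offsets = range(-radius, radius + 1)

    def clamp(v: int, hi: int) -> int:
        return max(0, min(hi, v))

    # Horizontal pass, then vertical pass
    rows = [[sum(k[o + radius] * img[y][clamp(x + o, w - 1)] for o in offsets)
             for x in range(w)] for y in range(h)]
    return [[sum(k[o + radius] * rows[clamp(y + o, h - 1)][x] for o in offsets)
             for x in range(w)] for y in range(h)]


def otsu_threshold(values: List[float], bins: int = 256) -> float:
    """Threshold that maximizes the between-class variance of the histogram (Otsu)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1
    centers = [lo + (b + 0.5) * width for b in range(bins)]
    total = len(values)
    sum_all = sum(c * n for c, n in zip(centers, hist))

    w0, sum0 = 0, 0.0
    best_var, best_t = -1.0, lo
    for b in range(bins - 1):
        w0 += hist[b]
        sum0 += centers[b] * hist[b]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        between = w0 * w1 * (m0 - m1) ** 2
        if between > best_var:
            best_var, best_t = between, lo + (b + 1) * width  # upper edge of bin b
    return best_t


def preliminary_change_mask(diff_true: Grid, diff_false: Grid, sigma: float = 1.0) -> List[List[int]]:
    """Smooth both difference maps, average them cell by cell, then apply Otsu."""
    a = gaussian_filter(diff_true, sigma)
    b = gaussian_filter(diff_false, sigma)
    mean = [[(p + q) / 2.0 for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]
    t = otsu_threshold([v for row in mean for v in row])
    return [[1 if v >= t else 0 for v in row] for row in mean]  # 1 = changed
```

Averaging the two smoothed maps keeps changes that both composites agree on while damping isolated responses that appear in only one of them, such as the spurious partial changes in the false-color result.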

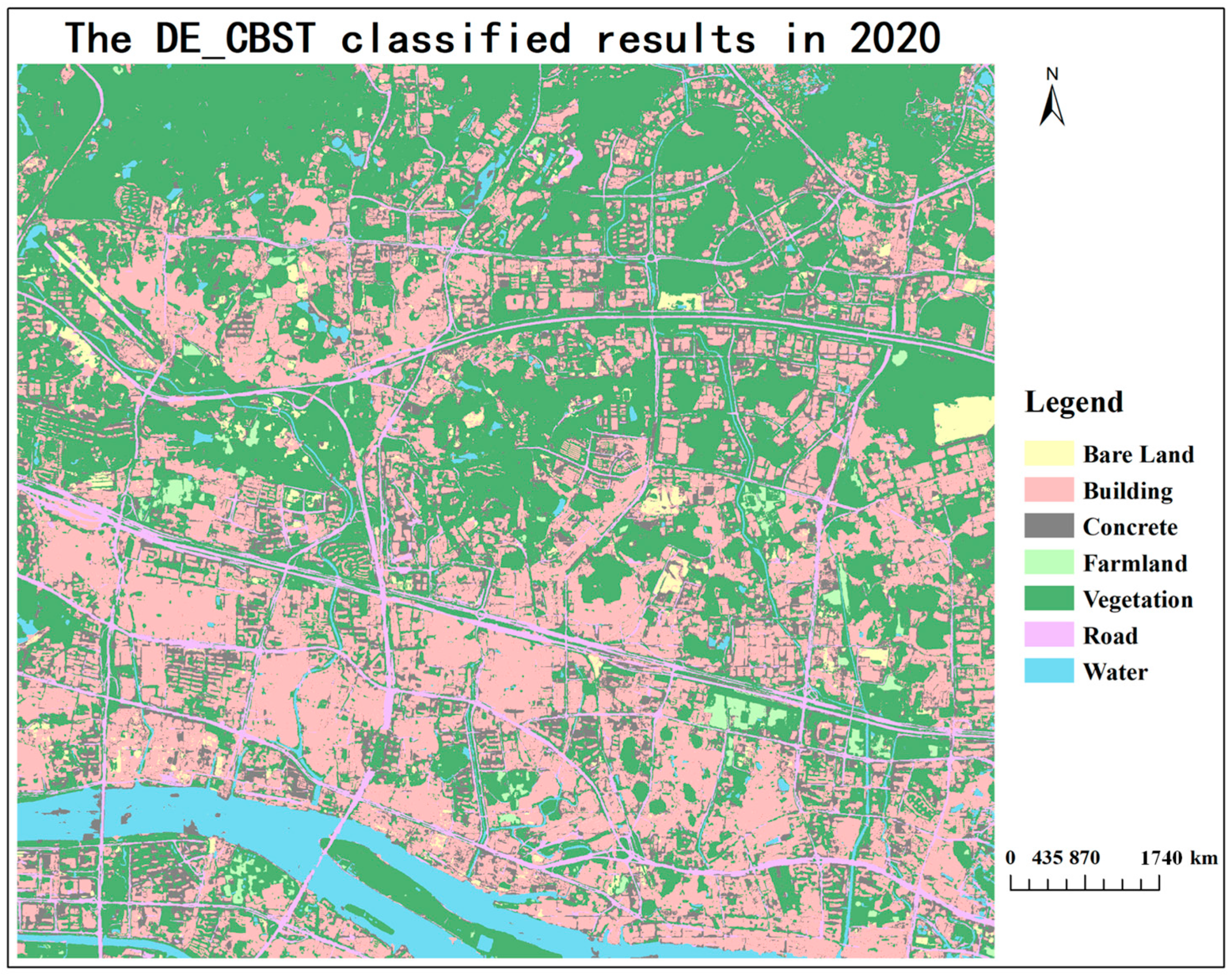

The unchanged regions identified in the IR-MAD preliminary change detection results were used in a CBST domain adaptation experiment. For the changed regions, the Deeplab V3+ land use classification model trained on the 2015 study area was directly transferred to the 2020 study area using a training sample dataset and a validation set drawn from the changed regions. The 2020 classification results for the changed and unchanged regions were then fused to obtain the complete DE_CBST classification of the 2020 GF-2 images of the study area (Figure 6). To evaluate the classification accuracy of DE_CBST and its change detection accuracy relative to the 2015 classification results, the CBST model trained in the domain adaptation experiment and the directly transferred Deeplab V3+ model were each also applied to classify the entire 2020 GF-2 image of the study area. In addition, the U-Net and SegNet models were selected as comparison models to further verify the accuracy of DE_CBST. To keep the other experimental parameters consistent, U-Net and SegNet were trained following the same direct transfer procedure as the Deeplab V3+ land use classification model trained on the 2015 study area, using the same training sample dataset, validation sample dataset, number of training iterations, batch size, and other parameters as in the Deeplab V3+ transfer learning experiment.
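The two ingredients of DE_CBST described above can be sketched in simplified form. The first function illustrates class-balanced pseudo-label selection, the idea behind CBST self-training: each class receives its own confidence threshold so that frequent classes do not crowd out rare ones. It shows only the selection step, not the full iterative retraining, and the `portion` parameter is a hypothetical setting. The second function illustrates the mask-based fusion, taking changed cells from the transferred model and unchanged cells from CBST. All names are hypothetical, and this is not the authors' code.

```python
from typing import List, Sequence

IGNORE = -1  # pixels left without a pseudo-label


def class_balanced_pseudo_labels(probs: Sequence[Sequence[float]], portion: float = 0.2) -> List[int]:
    """Simplified class-balanced pseudo-label selection in the spirit of CBST.

    For every class, only the most confident `portion` of the pixels predicted as
    that class receive a pseudo-label; all other pixels are marked IGNORE.
    """
    preds = [max(range(len(p)), key=p.__getitem__) for p in probs]
    conf = [p[c] for p, c in zip(probs, preds)]
    thresholds = {}
    for c in set(preds):
        ranked = sorted((s for s, k in zip(conf, preds) if k == c), reverse=True)
        keep = max(1, int(len(ranked) * portion))  # keep at least one pixel per class
        thresholds[c] = ranked[keep - 1]           # class-specific confidence threshold
    return [c if s >= thresholds[c] else IGNORE for c, s in zip(preds, conf)]


def fuse_classifications(change_mask: List[List[int]],
                         changed_pred: List[List[int]],
                         unchanged_pred: List[List[int]]) -> List[List[int]]:
    """DE_CBST-style fusion: changed cells from the transferred model, others from CBST."""
    return [[cp if m else up for m, cp, up in zip(mrow, crow, urow)]
            for mrow, crow, urow in zip(change_mask, changed_pred, unchanged_pred)]


# Hypothetical usage: 1 in the mask marks a cell flagged as changed by IR-MAD
print(fuse_classifications([[1, 0], [0, 1]], [[2, 2], [2, 2]], [[5, 5], [5, 5]]))
```

The fusion step is where the two models complement each other: each region is classified by the model whose training samples match it.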

In the accuracy evaluation, 200 evenly distributed areas of interest were selected as validation samples, a confusion matrix was constructed from the 2020 classification results of each experiment, and the overall accuracy and kappa coefficient were calculated (Table 4). As shown in Table 4, the DE_CBST classification results achieved the highest overall accuracy and kappa coefficient of all the models, while the SegNet results were the worst. The remaining three models ranked, from best to worst, as Deeplab V3+, U-Net, and CBST.

In addition, the UA and PA of each land use category in the 2020 classification results of the different models were calculated. The PA and UA statistics in Table 5 show that the DE_CBST classification results are better overall than those of the other models. By category, vegetation was the best-classified land use category in all models, with both PA and UA above 0.8. Apart from the PA of the building category in the SegNet model, which was below 0.7, the PA and UA of the building category exceeded 0.7 in all models, indicating that buildings were generally classified well. The concrete category was poorly classified by all models, similar to the 2015 classification results for the study area. Because the CBST domain adaptation experiment was based only on samples from the unchanged region, the PA and UA of the bare land category in the changed region were both below 0.4, indicating that the CBST model trained with bare land samples from the unchanged region could not reliably identify bare land in the 2020 study area.

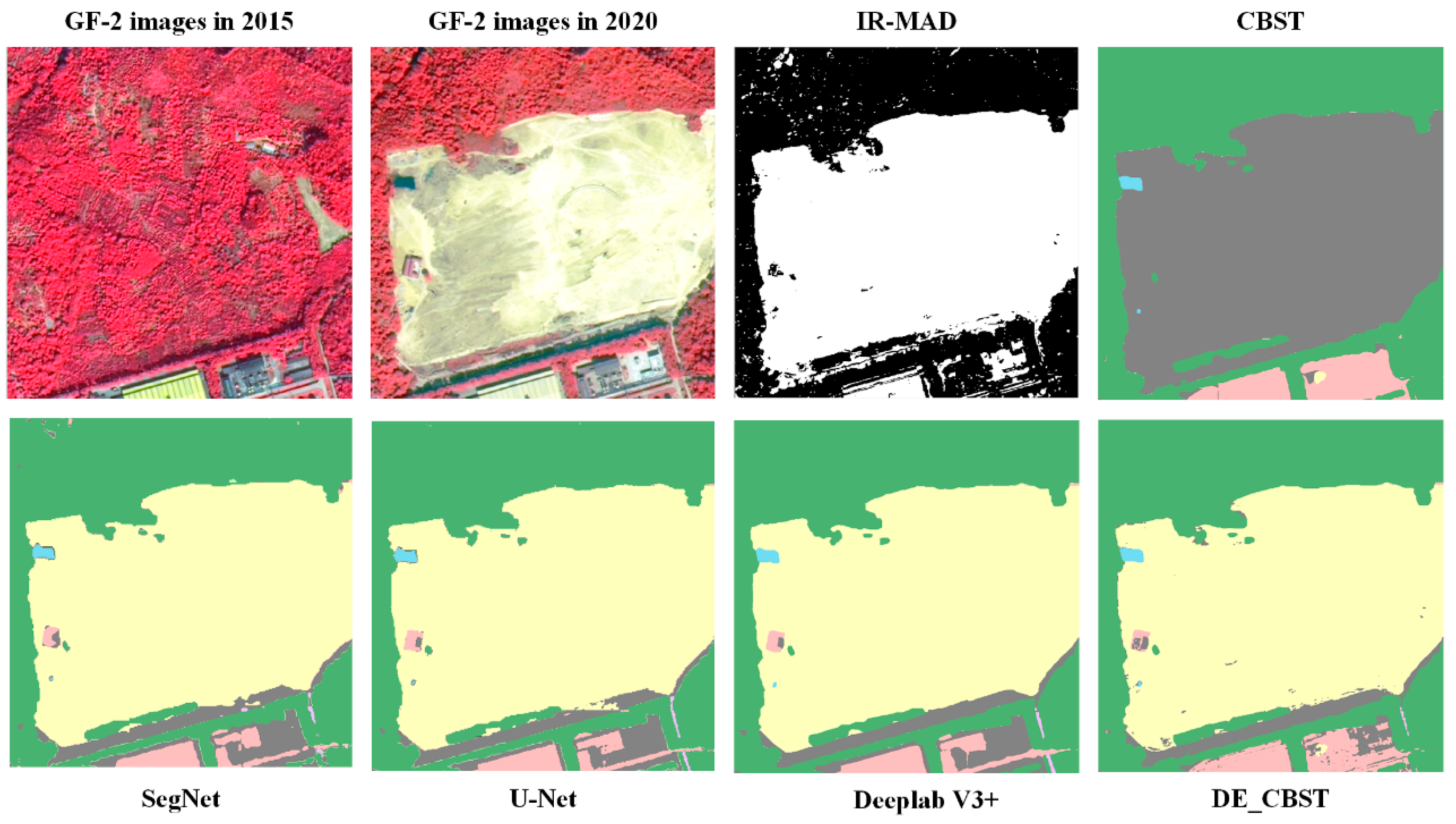

Comparing the classification results of the different classifiers for the changed area in 2020 (Figure 7) shows that, where a large area of vegetation changed into bare land, CBST identified this area as concrete. The U-Net, SegNet, and Deeplab V3+ models, which were trained on the sample dataset of the changed region, all identified the bare land accurately. Consequently, the PA and UA of the bare land category for these three models are much higher than those of the CBST model, with Deeplab V3+ performing best (PA = 0.8750, UA = 0.7). Moreover, because the IR-MAD preliminary change detection had marked this vegetation-to-bare-land area as changed, the corresponding Deeplab V3+ classification results were spliced into the CBST classification results for the unchanged areas. As a result, the bare land UA and PA of DE_CBST were significantly improved compared to CBST (PA = 0.8333, UA = 0.6667). According to Table 5, since most water regions in the study area changed little from 2015 to 2020, the water category achieved PA and UA values above 0.85 in the CBST classification results. In contrast, because water samples were lacking when training the U-Net, SegNet, and Deeplab V3+ models, the PA of the water category for these models was below 0.6.

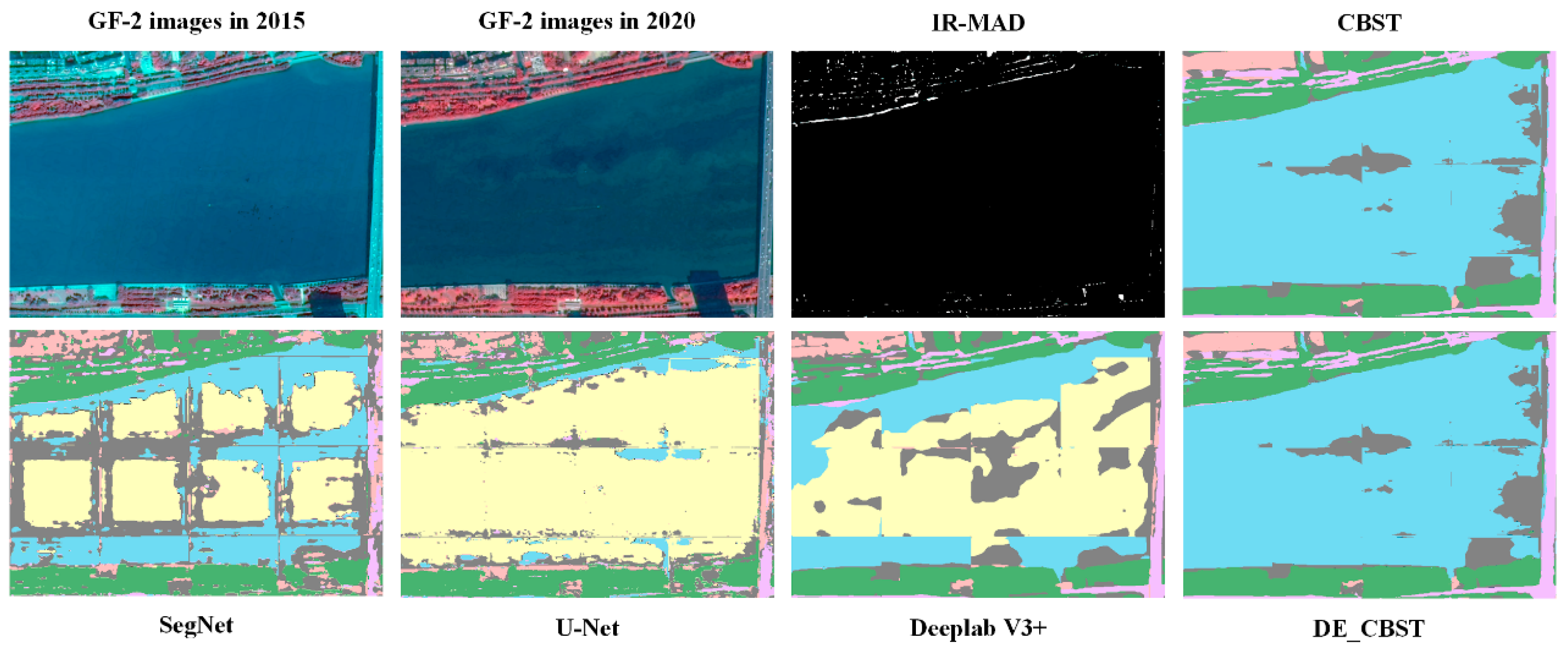

In the unchanged water region shown in Figure 8, the U-Net, SegNet, and Deeplab V3+ classifiers identified a large area of water as bare land and concrete, whereas CBST misclassified only a small part of this area as concrete and correctly identified most of it as water. Besides the lower PA of the water category, the UA of the bare land and concrete categories in the U-Net, SegNet, and Deeplab V3+ results was lower than their PA, consistent with water pixels being misclassified into these two categories. IR-MAD preliminary change detection identified the large water area in Figure 8 as unchanged, so after the CBST classification results for the unchanged area were superimposed onto the Deeplab V3+ classification results for the changed area, the water accuracy of DE_CBST improved significantly compared to that of Deeplab V3+ (PA = 0.8750, UA = 1.0).

The 2020 classification results of the five models on the GF-2 images were each superimposed onto the 2015 classification results to obtain change detection results for the study area for 2015–2020, and 200 evenly distributed areas of interest were selected as change detection validation samples. Table 6 compares the change detection accuracy obtained by superimposing each model's 2020 classification results on the 2015 classification results. The DE_CBST change detection results have the lowest commission, at 0.1415, and the only omission value below 0.1. DE_CBST also achieves the best values for the other metrics: the F1 score is 0.8792, the overall accuracy exceeds 0.85, and the kappa coefficient exceeds 0.75. This indicates that DE_CBST provides the most accurate change detection among all the models and is suitable for further analysis. Compared with Deeplab V3+, DE_CBST improved precision by 2.5%, recall by 1%, and F1 by 1.8%; compared with CBST, the corresponding improvements were 3.8%, 8.9%, and 6.3%. DE_CBST also exhibited lower commission and omission than both models: commission was 2.5% lower than that of Deeplab V3+ and 3.9% lower than that of CBST, and omission was 1% lower than that of Deeplab V3+ and 8.9% lower than that of CBST. In addition, DE_CBST achieved higher recall and F1 scores than those of the semi-supervised change detection method CDNet + IAug with 20% of the samples labeled on the public LEVIR-CD and WHU-CD datasets [15]. Furthermore, the commission and omission of DE_CBST were better than those of the semi-supervised FDCNN method evaluated on the public WV3 Site1, WV3 Site2, ZY3, and QB datasets, all of which have a spatial resolution similar to that of GF-2 [14]. Therefore, the change detection results of the DE_CBST model were selected as the final change detection results for the high-resolution remote sensing images of the study area from 2015 to 2020.
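A minimal sketch of post-classification change detection and the Table 6 style metrics is given below. It assumes the standard definitions commission = FP / (TP + FP) = 1 − precision and omission = FN / (TP + FN) = 1 − recall. Under these definitions, the 2.5% precision gain and the 2.5% commission reduction reported above describe the same improvement. Function names and example data are hypothetical.

```python
from typing import Dict, List, Sequence


def post_classification_change(cls_a: List[List[int]], cls_b: List[List[int]]) -> List[List[int]]:
    """1 where the class label differs between the two dates, else 0."""
    return [[int(a != b) for a, b in zip(ra, rb)] for ra, rb in zip(cls_a, cls_b)]


def change_detection_metrics(pred: Sequence[int], ref: Sequence[int]) -> Dict[str, float]:
    """Binary change metrics on validation samples; 1 = changed, 0 = unchanged."""
    tp = sum(1 for p, r in zip(pred, ref) if p == 1 and r == 1)
    fp = sum(1 for p, r in zip(pred, ref) if p == 1 and r == 0)
    fn = sum(1 for p, r in zip(pred, ref) if p == 0 and r == 1)
    tn = sum(1 for p, r in zip(pred, ref) if p == 0 and r == 0)
    n = tp + fp + fn + tn

    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    oa = (tp + tn) / n
    # Chance agreement from the changed/unchanged marginals
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "commission": fp / (tp + fp),  # = 1 - precision
        "omission": fn / (tp + fn),    # = 1 - recall
        "oa": oa,
        "kappa": (oa - pe) / (1 - pe),
    }


# Hypothetical validation samples (not data from this study)
print(change_detection_metrics([1, 1, 1, 0, 0, 0, 1, 0], [1, 1, 0, 0, 0, 1, 1, 0]))
```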

3.3. Land Use Change from 2015 to 2020

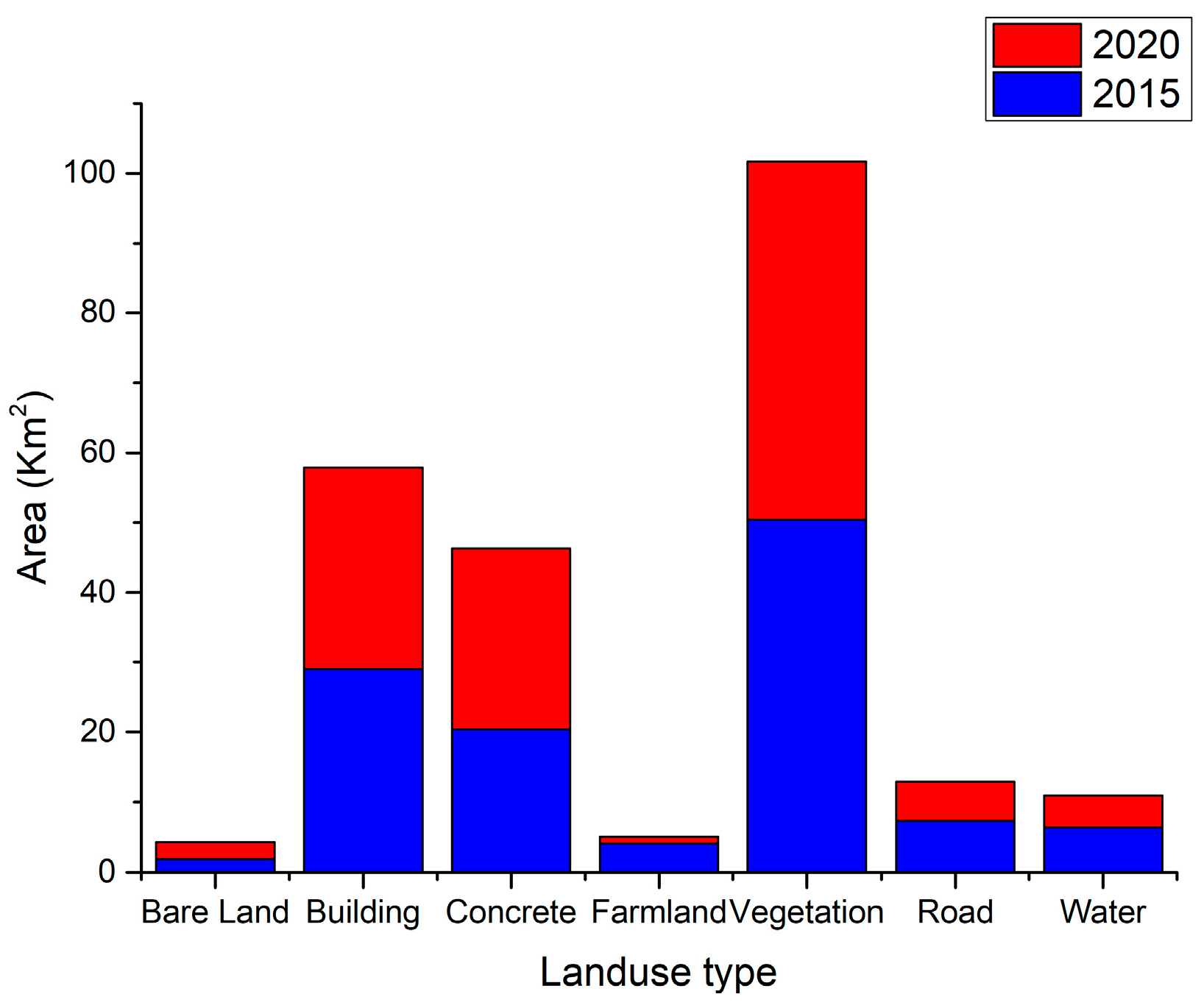

As shown in Figure 9, vegetation, building, and concrete accounted for the largest proportions of the total area in both 2015 and 2020. The total areas of vegetation and buildings changed little, at about 50 km² and 29 km², respectively. The total areas of the road and water body categories also changed by less than 1 km², with water bodies covering about 6 km² and roads about 7 km². In contrast, the total concrete area in 2020 increased by about 3.57 km² relative to the 20.42 km² measured in 2015, while the total farmland area decreased sharply, from 4.09 km² in 2015 to less than 1 km² in 2020.

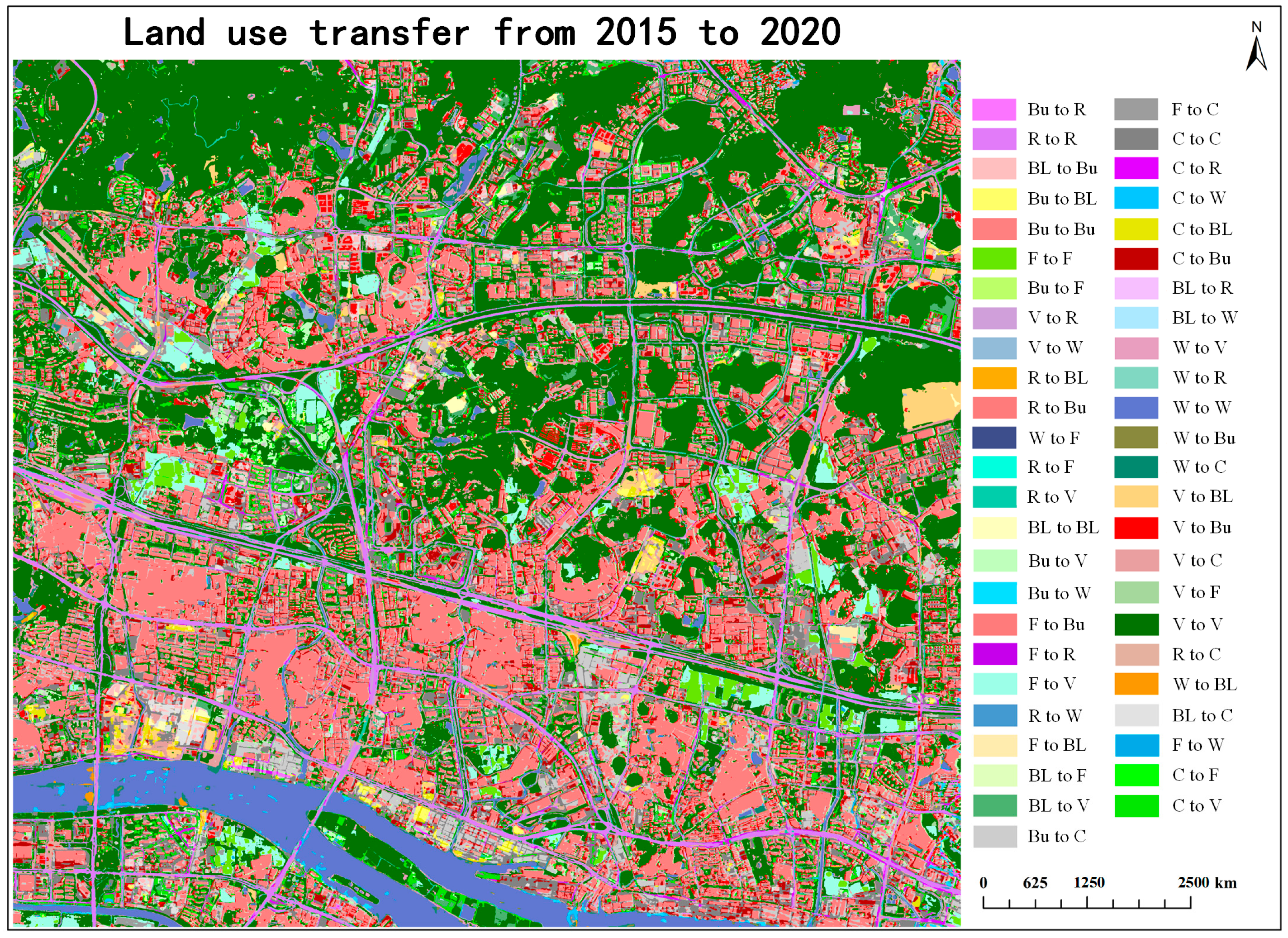

Based on the change detection results for the GF-2 images of the study area from 2015 to 2020, the land use transfer matrix and the spatial distribution map of land use change were prepared, as shown in Table 7 and Figure 10, respectively. According to Table 7, although the total area of bare land in 2015 was only 1.84 km² (Figure 9), its change rate exceeded 0.9, with bare land mainly transformed into concrete and vegetation. The change rate of farmland also reached 0.7893, and about 2.3 km² of farmland was transformed into vegetation, resulting in a sharp decrease in the total farmland area. This indicates that urban renewal in the study area between 2015 and 2020 resulted in an increase in bare land and a substantial reduction in farmland. Water, vegetation, and building were the three land use types with the lowest change rates. The low change rate of water reflects its relatively stable land use structure in the study area from 2015 to 2020, whereas the low change rates of building and vegetation stem from the large total areas of these two types in 2015, which made the changed proportion relatively small; in absolute terms, the changed areas of the building and vegetation categories amounted to more than 5 km². Most of the area involved in land use transfer among the building, vegetation, and concrete categories from 2015 to 2020 consisted of interconversion among these three types. About 5.65 km² and 2.89 km² of building area were converted to concrete and vegetation, respectively, and about 4.6 km² of concrete area was converted to building and vegetation. Of the vegetation present in 2015, less than 1 km² was converted to water and farmland, whereas more than 1 km² was converted to each of the other four land use types: about 3.39 km² and 5.82 km² to building and concrete, respectively, and about 1.06 km² and 1.56 km² to bare land and road, respectively. In general, during the land use transfer in the study area from 2015 to 2020, the overall land use pattern of the water, building, and concrete categories changed little, while the varying increases and decreases in bare land and farmland areas, together with the conversion of vegetation into different land use types, mainly reflected the change in the spatial pattern of land use during urbanization in this region.
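A land use transfer matrix such as Table 7 can be built by cross-tabulating the two classification maps and converting pixel counts to area. The sketch below assumes that the change rate of a class is the share of its first-date area that became another class, which is one common definition. The per-pixel area is a parameter because the effective GF-2 pixel size used for the maps is not restated here. Function names and example data are hypothetical.

```python
import math
from typing import Dict, Hashable, List, Sequence

Matrix = Dict[Hashable, Dict[Hashable, float]]


def transfer_matrix(cls_from: List[List[Hashable]], cls_to: List[List[Hashable]],
                    classes: Sequence[Hashable], pixel_area_km2: float) -> Matrix:
    """m[a][b] = area (km²) that was class a at the first date and class b at the second."""
    counts = {a: {b: 0 for b in classes} for a in classes}
    for ra, rb in zip(cls_from, cls_to):
        for a, b in zip(ra, rb):
            counts[a][b] += 1
    return {a: {b: n * pixel_area_km2 for b, n in row.items()} for a, row in counts.items()}


def change_rate(m: Matrix, cls: Hashable) -> float:
    """Share of the first-date area of `cls` that became another class."""
    total = sum(m[cls].values())
    return (total - m[cls][cls]) / total if total else math.nan


# Hypothetical 2 x 2 maps: F = farmland, V = vegetation, B = building
m = transfer_matrix([["F", "F"], ["V", "V"]], [["V", "F"], ["V", "B"]], ["F", "V", "B"], 1.0)
print(m["F"], change_rate(m, "F"))
```

Row sums of the matrix give first-date areas and column sums give second-date areas, so the same table yields both the per-class totals (as in Figure 9) and the conversions between classes.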

3.4. The Relationship between Feature Types and LST

Landsat 8 OLI/TIRS data of the study area were used for LST retrieval with the PSC method, yielding LST at a spatial resolution of 30 m. Because cloud cover and other factors limited image availability, the 2015 image was acquired on 18 October, in late autumn, with a mean LST of about 31.2 °C, while the 2020 image was acquired on 2 December, in early winter, with a mean LST of about 22.4 °C. Thus, even in autumn and winter, the land surface temperature in downtown Guangzhou exceeded 20 °C. The spatial distribution of LST in 2015 and 2020 (Figure 11) shows that, in 2015, except for the water in the southwest corner and the large vegetation areas in the north and northeast, almost the entire study area had an LST above 30 °C, and some grid cells even exceeded 40 °C. In the early-winter 2020 image, however, the LST in most regions dropped to about 22 °C, with only scattered high-temperature regions above 30 °C.
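The PSC retrieval itself is not reproduced here. For orientation, the sketch below shows the generic first steps of a single-channel LST chain for Landsat 8 TIRS Band 10: quantized value to radiance, radiance to brightness temperature, and a simple emissivity correction. This is a simplified stand-in, not the PSC algorithm, and it omits atmospheric correction. The constants are values commonly found in Landsat 8 MTL metadata and should be read from each scene's own metadata file; the 10.895 µm effective wavelength is an assumption.

```python
import math

# Landsat 8 TIRS Band 10 constants as commonly found in scene MTL metadata;
# read the actual values from each scene's MTL file.
K1_B10 = 774.8853   # K1_CONSTANT_BAND_10, W/(m^2 sr um)
K2_B10 = 1321.0789  # K2_CONSTANT_BAND_10, kelvin
ML_B10 = 3.342e-4   # RADIANCE_MULT_BAND_10
AL_B10 = 0.1        # RADIANCE_ADD_BAND_10
LAMBDA_UM = 10.895  # assumed effective wavelength of Band 10, micrometres
RHO_UM_K = 14388.0  # h*c/k_B, micrometre-kelvin


def dn_to_radiance(dn: float, ml: float = ML_B10, al: float = AL_B10) -> float:
    """Top-of-atmosphere spectral radiance from a quantized Band 10 value."""
    return ml * dn + al


def brightness_temperature(radiance: float, k1: float = K1_B10, k2: float = K2_B10) -> float:
    """At-sensor brightness temperature in kelvin (inverse Planck with K1/K2)."""
    return k2 / math.log(k1 / radiance + 1.0)


def emissivity_corrected_lst(bt_k: float, emissivity: float) -> float:
    """Simple single-channel emissivity correction; returns LST in degrees Celsius."""
    lst_k = bt_k / (1.0 + (LAMBDA_UM * bt_k / RHO_UM_K) * math.log(emissivity))
    return lst_k - 273.15


# Hypothetical pixel: radiance of 10 W/(m^2 sr um), emissivity 0.97
bt = brightness_temperature(10.0)
print(bt, emissivity_corrected_lst(bt, 0.97))
```

Because ln(ε) is negative for ε < 1, the corrected LST is slightly higher than the brightness temperature, which is why surface emissivity matters when comparing vegetation, water, and artificial surfaces.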

To explore the correlation between LST and land use type, we conducted a Pearson correlation analysis between the classification results and the corresponding LST data for 2015 and 2020, respectively; the results are shown in Table 8. The absolute values of the correlation coefficients in Table 8 are greater than the Pearson correlation coefficients reported by Prem et al. for the relationship between NDVI and daytime LST across land use categories in India [45]. These results suggest that the ground feature changes detected in this study are more strongly correlated with LST, supporting the efficacy of the DE_CBST method. Because road and concrete are made of similar materials, the road and concrete categories were combined into CR, and vegetation and farmland were combined into FV. The correlation between bare land and LST could not be estimated because there were too few bare land samples. The statistics show that artificial surfaces such as building and concrete were positively correlated with LST, whereas vegetation, farmland, and water were negatively correlated with LST, and the correlations between feature types and LST were stronger in late autumn (2015), when LST was higher. However, in both 2015 and 2020, the surface features negatively correlated with LST were more strongly correlated with it than the positively correlated artificial surfaces, which seems at odds with other studies [46]. A likely reason is that the LST data for both periods were acquired in autumn and winter, when the cooling effect of vegetation, farmland, and water dominates [47].
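The correlation analysis above pairs, for each 30 m LST cell, the proportion of a land use category with the LST value. A minimal sketch follows. It assumes the fine-resolution classification map is aggregated to the LST grid by counting class pixels in non-overlapping blocks whose size is an exact multiple of the map dimensions; the exact aggregation used for Table 8 is not stated, so this step is an assumption. Function names and example data are hypothetical.

```python
import math
from typing import List, Sequence


def class_proportions(class_map: List[List[int]], cls: int, block: int) -> List[List[float]]:
    """Fraction of each block x block window covered by `cls` (fine pixels -> coarse cells).

    Assumes the map dimensions are multiples of `block`.
    """
    h, w = len(class_map), len(class_map[0])
    out = []
    for by in range(0, h, block):
        row = []
        for bx in range(0, w, block):
            hits = sum(class_map[y][x] == cls
                       for y in range(by, by + block) for x in range(bx, bx + block))
            row.append(hits / (block * block))
        out.append(row)
    return out


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long samples."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical example: building proportion per cell vs. LST per cell
props = class_proportions([[1, 1, 0, 0], [1, 0, 0, 0], [0, 0, 1, 1], [0, 0, 1, 1]], cls=1, block=2)
flat = [v for row in props for v in row]
print(flat, pearson_r(flat, [31.0, 27.5, 28.0, 33.0]))
```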

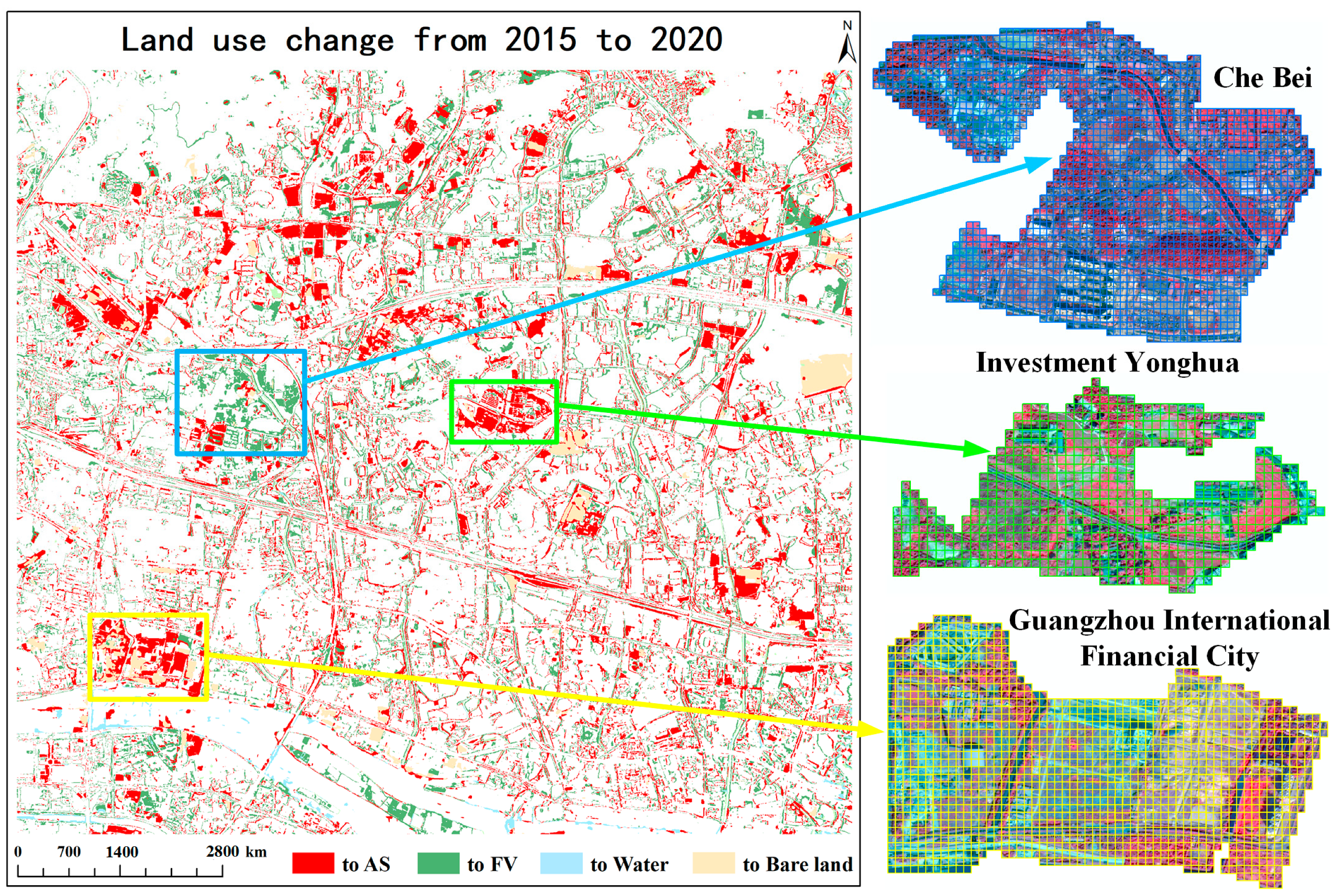

To examine the relationship between LST and ground features at a finer scale, we extracted the changed areas and produced a 2015–2020 land use change map (Figure 12), in which the building, road, and concrete categories are combined into a single artificial surface (AS) category. After jointly considering the land use change and the LST grid data for the two periods, we selected three regions with substantial change within the study area (Figure 12) for GTWR analysis between surface feature categories and LST. The first region, Guangzhou International Financial City (GIFC), covers about 1.2 km² in the southwest corner of the study area and mainly changed to building and bare land by 2020. The second region, Investment Yonghua (IY), covers about 0.6 km² in the center of the study area and mainly changed to buildings by 2020. The third region, Che Bei (CB), covers about 1.6 km² on the west side of the center of the study area and mainly changed to vegetation and a few buildings by 2020.

In the GTWR fitting experiment, we first extracted a total of 1430, 678, and 1937 grid cells in GIFC, IY, and CB, respectively, according to the extent of the three selected regions (Figure 12). Second, the area proportions of the building, CR, and FV categories in each grid cell were calculated separately from the 2015 and 2020 classification results of the study area. Because the area proportion of water in the three regions was close to zero, water was not included in the GTWR. The LST of each grid cell in 2015 and 2020 was then taken as the dependent variable, and the area proportions of the building, CR, and FV categories as the independent variables, while the latitude and longitude coordinates of the grid cells and the time factor were added to the GTWR model for fitting. To keep the land surface temperature data consistent with the land use data, a few grid cells in the IY region with missing land surface temperature values in 2020 were removed from the experiment. The statistics obtained by fitting the building, CR, and FV proportions with the GTWR model are shown in Table 9. The adjusted R² values for all three regions exceed 0.9. For comparison, the GWR fits of land use/land cover (LULC), topographic elevation, and LST in Ilorin, Nigeria, investigated by Njoku et al. for 2003–2020, all yielded coefficients below 0.9 [48]. This suggests that the ground features obtained from change detection in this study fit LST more closely, supporting the effectiveness of the DE_CBST model. In addition, since the areas of the three regions ranked IY < GIFC < CB, the residual sum of squares increased with the number of grid cells, and the fitting quality (adjusted R²) also increased.
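To clarify how GTWR extends GWR, the sketch below computes local coefficients at one observation i. Each observation j is weighted by a Gaussian kernel of its spatiotemporal distance, d² = λ[(u_i − u_j)² + (v_i − v_j)²] + μ(t_i − t_j)², and the coefficients are then obtained by weighted least squares, β_i = (XᵀW_iX)⁻¹XᵀW_iy. The scale factors `lam` and `mu` and the bandwidth `h` are hypothetical here; in practice they are tuned, for example by cross-validation or AICc. This is not the software used for Table 9. One caveat applies: if the proportions of all categories sum to one, including all of them alongside the intercept makes XᵀWX singular.

```python
import math
from typing import List, Sequence


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a small dense system a x = b."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("singular system (collinear predictors?)")
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def gtwr_local_coefficients(X: Sequence[Sequence[float]], y: Sequence[float],
                            u: Sequence[float], v: Sequence[float], t: Sequence[float],
                            i: int, h: float, lam: float = 1.0, mu: float = 1.0) -> List[float]:
    """Local [intercept, b1, b2, ...] at observation i via spatiotemporally weighted LS."""
    n = len(y)
    w = []
    for j in range(n):
        d2 = lam * ((u[j] - u[i]) ** 2 + (v[j] - v[i]) ** 2) + mu * (t[j] - t[i]) ** 2
        w.append(math.exp(-d2 / (h * h)))       # Gaussian spatiotemporal kernel
    rows = [[1.0] + list(X[j]) for j in range(n)]  # prepend the intercept column
    p = len(rows[0])
    xtwx = [[sum(w[j] * rows[j][a] * rows[j][b] for j in range(n)) for b in range(p)]
            for a in range(p)]
    xtwy = [sum(w[j] * rows[j][a] * y[j] for j in range(n)) for a in range(p)]
    return solve_linear(xtwx, xtwy)
```

Repeating the local fit for every grid cell and both years gives a coefficient surface per category and date. The maps in Figure 13 are of this kind, showing for each cell which category has the dominant coefficient.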

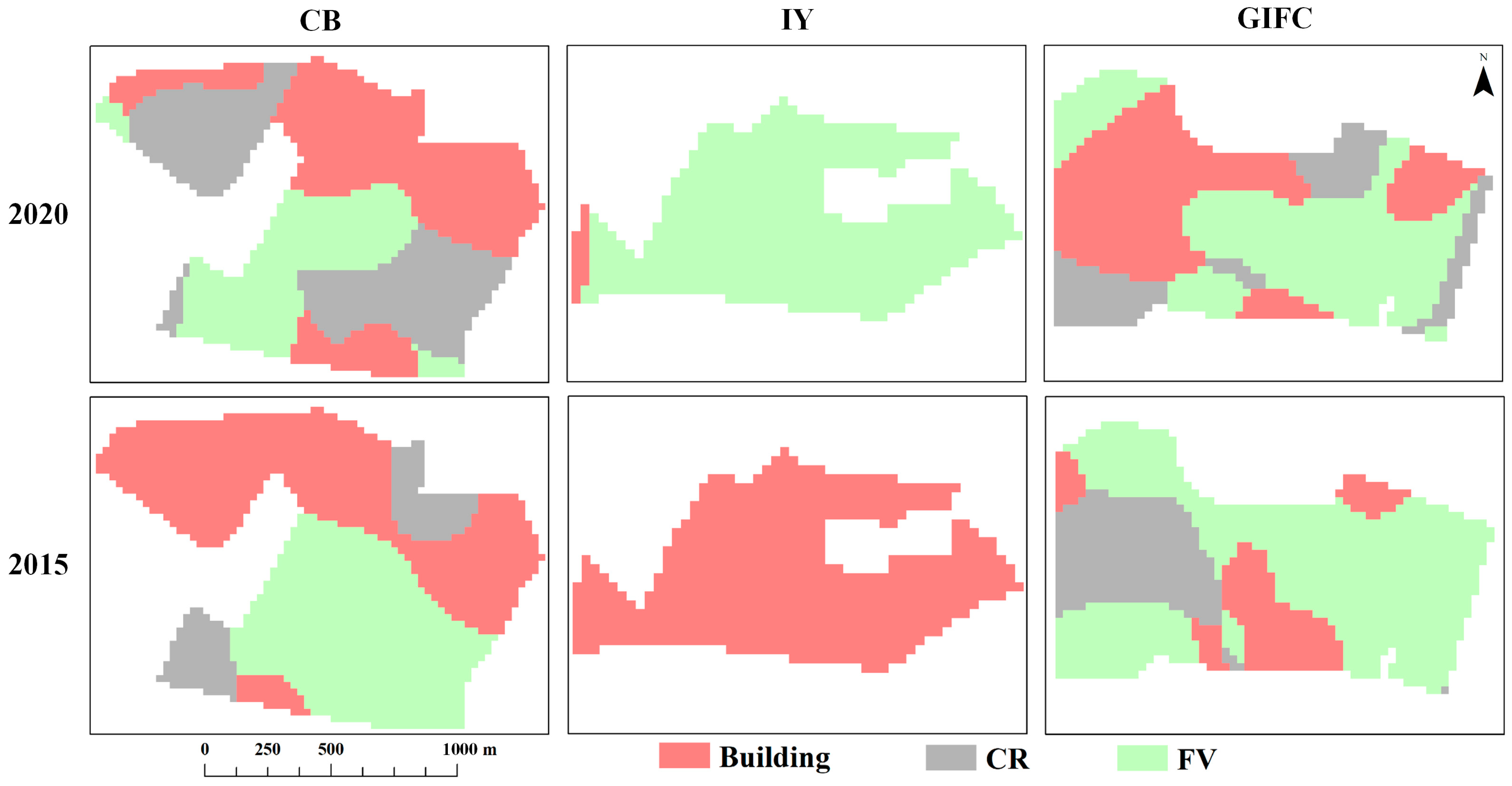

Figure 13 shows the GTWR fitting results for the three regions. The color of each grid cell indicates the feature type with the largest correlation coefficient, that is, the feature type most strongly correlated with LST. For the GIFC region, Figure 13 shows that, even with the low LST of winter 2020, grid cells in which building area was most strongly correlated with LST still occupied more than one-third of the region. This suggests that the construction of the Guangzhou International Financial City buildings promoted LST warming. In addition, the higher CR correlation on the west side of the GIFC region in 2015 arose because part of the plot was then a large area of concrete; after this area changed to buildings, and partly to bare land, in 2020, LST warming was dominated by the building category. In summary, land use change in GIFC substantially increased the building category's contribution to LST warming in 2020 while reducing the cooling effect of FV areas on LST. The spatial distribution of the correlation coefficients between ground features and LST in the IY region (Figure 13) shows that, even though large farmland and vegetation plots in this region changed into multiple residential areas by 2020, the FV category dominated the cooling effect on LST in almost the entire region. Conversely, in 2015, when farmland and vegetation occupied a large area and only a few buildings had been built, the building category dominated LST warming across the entire region. The correlation between LST and ground objects in the IY region thus appears inconsistent with the common view [49] that a large proportion of FV has a strong cooling effect on LST and that a large proportion of buildings has a strong warming effect. There are two reasons for this. First, the LST of the IY region in winter 2020 ranged from 19 °C to 26 °C, so the FV area, with its cooling effect, dominated the LST of the whole region, whereas the LST in 2015 ranged from 28 °C to 39 °C, so the building category, with the strongest warming capacity, dominated. Second, IY is located in the easternmost part of the Tianhe District and is surrounded by a large area of vegetation, so in periods of low LST, residential and other buildings within the region can hardly produce a strong warming effect. The spatial distribution of the correlation coefficients between features and LST in CB (Figure 13) shows that, after the factory buildings in the northwest corner of the plot were demolished and replaced by CR by 2020, the grid cells previously dominated by the building category's warming effect changed to CR. Meanwhile, the completion of residential areas and hospitals on the southeastern side of the plot substantially increased the proportions of building and CR. Despite this, the LST-warming grid cells in this part of the region in 2020 were dominated by the CR category rather than the building category. This may be because the southeastern block is influenced by the Che Bei River, which runs through the whole region from south to north; the water weakens the warming effect on LST, so the CR category appears most strongly correlated with LST.