1. Introduction

Snow is a viable proxy through which one may study climate change, as the associated modifications to precipitation and temperature patterns are believed to be strongest during the cold season in the mid and high latitudes where snowfall is prominent [

1,

2,

3,

4,

5]. The rate at which climate is changing in polar regions is thought to exceed the rate at which natural systems can adapt [

6]. Global climate models indicate that snow cover changes will considerably impact the cryopsheric portion of the water budget [

7,

8,

9]. Snow is a vital environmental and geophysical quantity and is sensitive to climate change since its magnitude and extent depend on both temperature and precipitation [

10,

11,

12].

Snow depth is the measured (or estimated) depth of a snow pack at a location, and takes into account the accumulation, ablation, and evolution of a snow pack. Therefore, snow depth should not be confused with snow cover (presence/absence) or new snowfall. Satellite data over the Northern Hemisphere suggest that snow cover has lessened since the mid-1980s [

6,

13,

14,

15,

16,

17,

18]. Snow depth analyses complement snow cover change studies, providing further information on hydrological resources, surface energy, soil processes, and ecological systems [

12,

19]. Trend estimates in snow depths [

12,

13,

20,

21] and new snowfall [

22,

23,

24,

25] over various portions of the United States and Canada have been previously computed and related to climate variability [

8,

18,

26,

27,

28].

When assessing long-term trends in snow depths, one should consider the temporal homogeneity of the data ([

21,

23,

29,

30]). Snow depth series often have discontinuities induced by changes in measuring location, equipment, or methods. These discontinuities—the so-called changepoints (breakpoints)—are crucial in constructing a realistic trend estimate at any one location ([

21,

24,

30]). We do not attempt to attribute causes to any identified changepoints—they could be due to climate shifts, measuring changes, station moves, etc.; see [

29] for additional discussion.

Perhaps the paramount contribution here lies with methods to handle the changepoint effects. Our methods apply equally well to snowfall and snow cover trend estimation. In this work, an unknown number of changepoints are modeled as mean level shifts at unknown points in time, for each gridded snow depth series.

Ref. [

30] lists four difficulties in estimation of daily snow depth trends:

Snow is seasonal, mostly absent during the summer months at all but Arctic or high alpine locations.

Daily snow depths are highly correlated in time: tomorrow’s snow depth depends on today’s snow depth. Statistical inferences that ignore correlation will yield artificially high levels of confidence.

Snow depths cannot be negative—this “zero modified support set issue" or hard boundary must be addressed.

One should allow for changepoints in trend analyses; as we will see, they are of critical importance.

This is the first study to address all four points above in a regional assessment of snow depth trends.

Previous snow trend studies have used various methods to obtain trends. Some studies use trend estimates obtained by minimizing a sum of squared deviations ([

12,

20]); however, homogenization issues are not considered there. Ref. [

24] quality control their data for inhomogeneities via expert opinion; however, their methods involve a target minus reference series approach, which are difficult to use here since our gridded series may aggregate many series over each grid cell. Other studies ([

8,

18,

28,

29]) use the methods in [

31], where a non-parametric regression analysis is performed on yearly maximal new snowfall amounts using Kendall’s tau statistic ([

32]). While Kendall’s tau statistic can be made to accommodate the serial correlation in snow depth data ([

31]), it is non-trivial to do such; moreover, these methods cannot handle changepoint features. Ref. [

7] address changepoints by merging distinct station records via the standard normal homogeneity test (SNHT) in [

33]. The SNHT is a single changepoint test and may not describe multiple changepoint scenarios well ([

34]).

Ref. [

29] note that the data used here contain many undocumented changepoints. In general, the snow depth data are replete with changepoints; indeed, the Napoleon series studied in [

30] contained 18 documented breakpoints from 1 January 1900 to 31 December 2003.

Estimation of multiple changepoint times via genetic algorithms (GA) has become increasingly popular in climate homogenization pursuits ([

35,

36]). Here, a GA is applied to the snow depth series at each grid to estimate changepoint times. These changepoints are subsequently used in a stochastic storage model. The storage model approach used here was introduced in [

30] and stems from queueing theory. Snow is a natural storage phenomena: the snow depth today is the snow depth yesterday, plus any new snow that has fallen, minus any ablation or compaction. Ref. [

37] also use storage models to describe daily snow depths, but do not allow for trends, melting in the fall and winter, or snow increases during spring ablation. In addition to trend estimates, computation of trend standard errors is also considered.

Results are demonstrated by applying our methods to a snow depth data set previously constructed and analyzed in [

12,

28,

29]. This article does not focus on how to assess the suitability of one data set over another, but rather how to get an accurate trend estimate from given data. The papers perhaps most relevant to this study are perhaps [

38], which develop additional statistical issues of the methods, and [

39], which also studies trends in snow depth time series with periodic aspects considered.

The rest of the study proceeds as follows. A description of the data and study period is presented in

Section 2.

Section 3 describes our changepoint estimation techniques.

Section 4 discusses the stochastic storage model used to compute trend standard errors.

Section 5 presents results and concluding remarks.

2. The Data

The development of the gridded data set used in this study is now summarized. The creation of

daily snow depth grids from station level data from 1900–2000 is discussed by Dyer and Mote (2006), although only data from 1960–2000 were analyzed there. Kluver et al. (2016) update the creation and validation procedures introduced in Dyer and Mote (2006), and extend the time record to 1900–2009. A brief review of the data is now discussed; the interested reader is referred to [

12,

22,

29], for additional detail.

The grids considered in the data examined here extend from

N to

N latitude and from

W to

W longitude. Station data are interpolated to

grids via the Spheremap spatial interpretation program in [

29,

40] report station density and found that the number of stations in a grid cell reporting snow depths varies widely with time (see

Figure 1 of [

29]), with a large increase in the number of reporting stations beginning in 1948 due to the full digitization of Cooperative Observer Program (COOP) station network data. A depiction of station density by grid is given in Figure 3 of [

29].

Ref. [

29] caution against using pre-1948 data in estimating trends, since station density is sparse before this time. As such, our study will commence in the summer of 1947 and finish in summer 2009 to permit work with “winter centered years” defined below. Observations during the latter half of 2009 will be omitted to accommodate the

Section 3 and

Section 4 methods.

Although [

12] study snow trends on the entirety of North America from 1960–2000, a period in which stations are claimed (without numerical quantification) to be more evenly dispersed, we restrict this study to the largest contiguous grid set reporting at least 20 winter seasons of station observations, subject to the following constraint: the northern most grid in the study region, at each longitude, is set to be the northernmost cell containing at least 20 winter seasons with one or more reporting stations during 1948–2009. Concessions are made to make this region contiguous; this essentially excludes only a few isolated Arctic grids with good data. Our study region will be evident in our graphics.

Ref. [

29] admit that the data contain various undocumented inhomogeneities in space and time due to differences in station recording procedures, reaffirming the importance of changepoints. After estimating the changepoint times, they are used in a stochastic storage model to help gauge uncertainty in the estimated trends. The storage model easily accommodates changepoint features.

3. Changepoint Methods

This section discusses our multiple changepoint detection methods. This is tantamount to data homogenization. The methods are illustrated with a snow depth series from a cell centered at latitude 44.5 N, Longitude 115.5 W, located near Warm Lake in the Boise National Forest in Central Idaho.

Genetic algorithms (GAs) have been successfully used in recent climate homogenization studies ([

34,

35]). GAs use principles of genetic selection and mutation to intelligently search for the best possible (as defined below) changepoint configuration. Although trends in daily depths are our focus, changepoints in yearly average snow depth series are first estimated. Homogenization for daily series is significantly more involved because of the long series lengths encountered ([

36]). Any changepoint detected in the yearly series is assigned to the first day of the corresponding gridwinter centered year.

Let denote the snow depth series at a fixed grid for days . Winter centered years (WCYs) are analyzed here. The starting observation for any WCY is 1 July; 30 June of the subsequent calendar year is the last day. WCYs prevent a single winter season from straddling two distinct years. To have 1948 commence our study, will correspond to 1 July 1947; corresponds to 30 June 2009, the study end date. Leap year data are handled by deleting the 30 June observation within the calendar year on which 29 February occurs—this has virtually no impact on results. There are years in our study. For a periodic notation with time, we write time t as where is the WCY and represents the day within the WCY.

At a given grid, define as the average winter snow depth for WCY , where is the number of elements in the set A and denotes the snow season for this grid. The snow season is considered to start on the first day in the Fall/Winter on which snow is present on at least 35 percent of the WCY years in the study; the last day of the WCY is the latest day in the Winter/Spring on which at least 35 percent of the WCY years report snow. The 35 percent proportion is somewhat arbitrary, but is chosen as it is high enough for accurate inferences to be made based on the minimum number of days with snow cover without being overly selective. For the grid centered near Warm Lake, Idaho, the snow season spans 25 October to 21 May inclusive, a length of 218 days.

We now describe our changepoint detection methods. Due to averaging (the central limit theorem),

is approximately normally distributed. Hence, the changepoint techniques for normal data from [

34] apply. Trend components must be included in the changepoint detection methodology to avoid identifying spurious changepoints caused by the presence of non-zero trends ([

41]). Our basic model for the yearly average snow depths

is the time series regression of form

Here,

models the grid’s average daily snow depth (less any trend),

is a linear trend parameter,

is a changepoint effect described further below, and

is a zero-mean first order autoregressive (AR(1)) error process that induces temporal correlation into the yearly averages. The AR(1) errors obey

, where

is a zero-mean white noise process with variance

, and

is the correlation between consecutive years of average snow depths. The changepoint effect

, with

k mean shifts at times

, respectively, is

where

and

by convention. Here,

is interpreted as the changepoint effect of the

ℓth regime, measured relative to the first regime (

is the shift magnitude since the last regime).

This model contains the regression parameters

, the changepoint parameters

k,

and the time series parameters

and

. For a given configuration of

k changepoints occurring at the times

, the regression parameters are estimated using standard linear regression methods; Yule–Walker estimators are fitted to linear regression residuals to estimate the time series parameters

and

. ([

42] provide details).

Estimation of the best changepoint configuration of a time series is a statistical model selection problem. Popular model selection criteria include the Akaike Information Criterion (AIC), the Bayesian Information (BIC), and Minimum Description Lengths (MDLs). To date, MDL methods have produced superior empirical results ([

43,

44]). MDL methods minimize an objective function of form

over all possible changepoint configurations. Here,

is an optimal model likelihood given the number of changepoints

k and their locations

, and

P, which depends on the number of changepoints and their location times, is a penalty term to prevent overfitting. Unlike AIC and BIC penalties, MDL penalties depend on where the changepoints lie. The MDL penalty is more than a multiple of the number of changepoints, penalizing closely spaced changepoint times more than sparsely spaced changepoints.

The Innovations form of the multivariate Gaussian likelihood is used:

Here,

is the one-step-ahead predictor of

and

is its mean squared prediction error. For an AR(1) series,

, where

, and

. Parameter estimates for

and

are calculated from the Yule–Walker equations involving the sample variance and lag one autocorrelation of

. Substituting the estimators of

into (

2) gives

for the changepoint configuration with

k changepoints at locations

.

The penalty term for the changepoint configuration is that in [

35]. When

,

when

, the penalty vanishes from the model (

). The best changepoint model is estimated as the one that minimizes the MDL score. As an exhaustive search for changepoint configuration(s) requires evaluation of

MDL scores, an arduous task even on the world’s fastest computers for even moderately large

n. Here, a GA was devised to perform the minimization. GAs have recently been employed for multiple changepoint detection in climate data ([

34,

35,

44]). See [

45] for more on genetic algorithms.

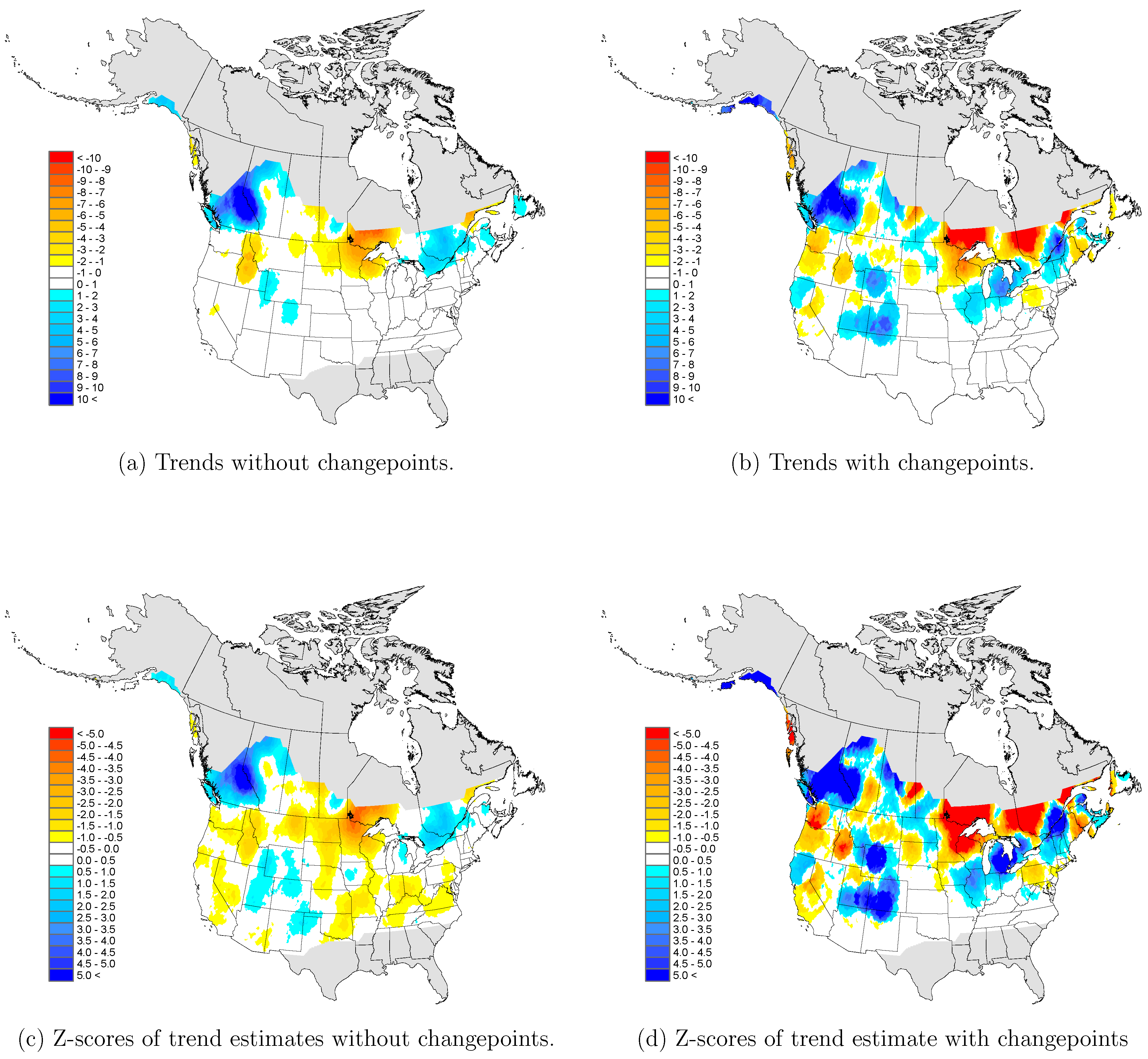

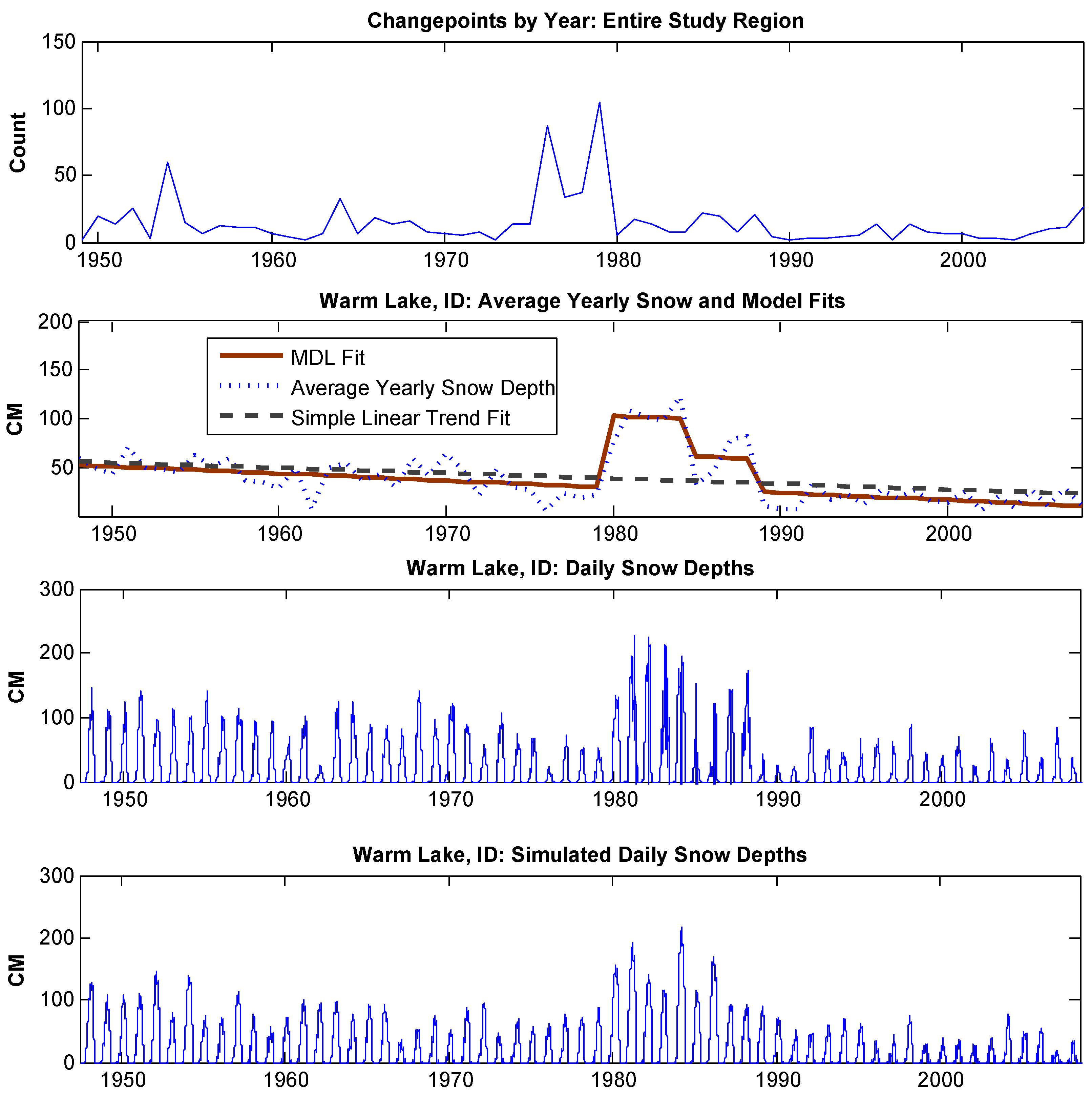

Panel 1 of

Figure 2 depicts the number of changepoints estimated by year for our 1217 grid study region. Three peaks are seen in 1953, 1974, and 1978 respectively. As an example, when these methods are applied to the Warm Lake, Idaho grid, changepoints are declared on 1 July of 1979, 1984, and 1988. Panel 1 of

Figure 2 graphically depicts the MDL and a simple linear regression fit to

. For this grid, the slope of the MDL regression structure is more negative than that for the simple linear trend when changepoints are ignored; more particulars about this example are given later.

4. The Storage Model

Ref. [

30] introduce a storage model to estimate trends in daily snow depths in the presence of changepoints. This model was fitted to a time series from Napoleon, North Dakota.

For each fixed grid, estimates of the number of mean shifts

k and their times

are now in place. Our model for the daily snow depths is based on the storage equation

where

quantifies the random change in the snow pack from day

to day

t. The max term prevents the snow depths from becoming negative. Here, the depth change

is assumed statistically independent of

.

We posit that

has a periodic component, possibly multiple changepoints, and a linear trend. The variance

is assumed periodic with period

. For computational convenience,

is assumed normally distributed:

; other distributions can be easily used if desired. Specifically,

is parameterized at time

as

where the mean shifts are parametrized as in the last section:

The term is a zero/one indicator that is unity only when .

To estimate the storage model parameters (this is not the same task as estimating changepoint numbers and locations), we minimize the weighted sum of squared one-step-ahead prediction errors

Here,

is a vector containing all model parameters and

is the one-step-ahead prediction, calculated in Woody et al. (2009) as

where

and

are the cumulative distribution and density functions, respectively, of a standard normal random variable. Because snow is seasonal, weighted least squares is used. Here, the weight

is set to

. To estimate

, first calculate a point estimate of the mean change from day

to day

via

. The estimate of

is then simply a multiple of the sample standard deviation:

We smooth these daily variances via the Matlab function “cfit” before using them to minimize variability.

Some minor quality control is applied to the daily snow depths before trend inference is conducted. Following [

46], if the day to day change in snow depths during season

is more than four standard deviations from the mean daily change for season

,

, then the data for that day is flagged and considered missing.

While the parameter

controls the trend and is the object of our study, it is not easily interpretable within the storage equation. For example, because of the maximum in the storage equation,

does not imply a depth change of 50 units per time. A quantity that does have interpretable units of depth change per time is the linear trend statistic in the presence of changepoints:

where

denote the set of times the series experienced the

r-th regime,

, and

and

denotes the average snow depth and time, respectively, for day

in regime

r. See [

47] or [

48] for more on this statistic. In this context,

estimates the mean change in the snow depth per time (scaled to cm per century below). To obtain standard errors for

, we simply simulate 1000 independent realizations of the storage model with parameters as estimated from the data for each grid, and then compute the sample mean and standard deviations of the 1000 trend estimates in (

5). Simulation is used because explicit forms for storage equation means do not exist.

At Warm Lake, three changepoints are estimated, and occur on 1 July 1979, 1 July 1984, and 1 July 1988. Parameter estimates for

in Equation (

3) are

,

,

,

,

,

, and

. The negative

implies overall declining snow depths; the positive

entails an abrupt shift to a snowier era from 1 July 1979–30 June 1984. This is followed by a modest drop in snow depths from 1 July 1984–29 June 1988, and finally another decrease in depths from 1 July 1988 onwards. The thousand simulations of the storage model with these parameters report an average trend of

cm/century, with a standard error of

cm/century. This yields a

z-score of

when testing the null hypothesis that

(a one-sided

p-value of

). The daily snow depths from 1 July 1948 to 30 June 2009 are depicted in Panel 3 of

Figure 2. A simulation of the daily snow depths using the parameter estimates from the storage model fit reported above is shown in Panel 4 of

Figure 2 and depicts a reasonable likeness to the raw data in Panel 3, including the estimated mean level shifts (simulation is another use of the storage model).

In contrast, if changepoints are ignored, parameter estimates are , , , and . The thousand simulations of the storage model with these parameters report an average trend statistic of cm/century, with a standard error of cm/century. This yields a z-score of when testing that (a one-sided p-value of ). Overall, snow depths are inferred to be decreasing at Warm Lake, more so when changepoints are taken into account.

5. Results

North American results are described for two cases: (1) when changepoints were ignored and (2) when changepoints were included. The study area contained 6613 grids in total; however, only 1217 grids in the study region experienced a winter season as defined above or have data that met our quality constraints. The average number of changepoints over these 1217 grids was 0.7198 with a standard error of 0.8975. More specifically, 664 (54.56%) of the grids were changepoint free, 272 grids (22.35%) had only one changepoint, 241 grids (19.80%) had two changepoints, 38 grids (03.12%) had three changepoints, and 2 (0.16%) had four changepoints (the maximum number detected).

More grids reported negative depth trends than positive depth trends, regardless of whether or not changepoints were considered. When changepoints were ignored, 469 grids (38.54%) reported positive depth trends and 748 grids (61.47%) reported negative depth trends. When changepoints were taken into account, 537 grids (44.12%) reported positive snow depth trends and 680 grids (55.88%) reported negative snow depth trends.

The magnitude of the estimated trends was a different story: the average grid trend was 0.3212 cm/century without changepoints, with a standard error of 4.6585, and 0.1519 cm/century, with a standard error of 9.1046, when changepoints were included. Overall, the addition of changepoints reduced the average depth trend.

The mean trend of grids with positive trends when changepoints were ignored was 2.7735 cm/century; the mean trend for grids with decreasing trends when changepoints were ignored was −0.8551 cm/century. In contrast, the mean trend for grids reporting increasing trends was 4.9067 cm/century when changepoints were taken into account; the mean trend for decreasing grids was −4.9698 cm/century when changepoints were taken into account. The magnitude of the trends was substantially larger when changepoints were considered.