Multi-Scale Object-Based Probabilistic Forecast Evaluation of WRF-Based CAM Ensemble Configurations

Abstract

1. Introduction

2. OBPROB Methodology

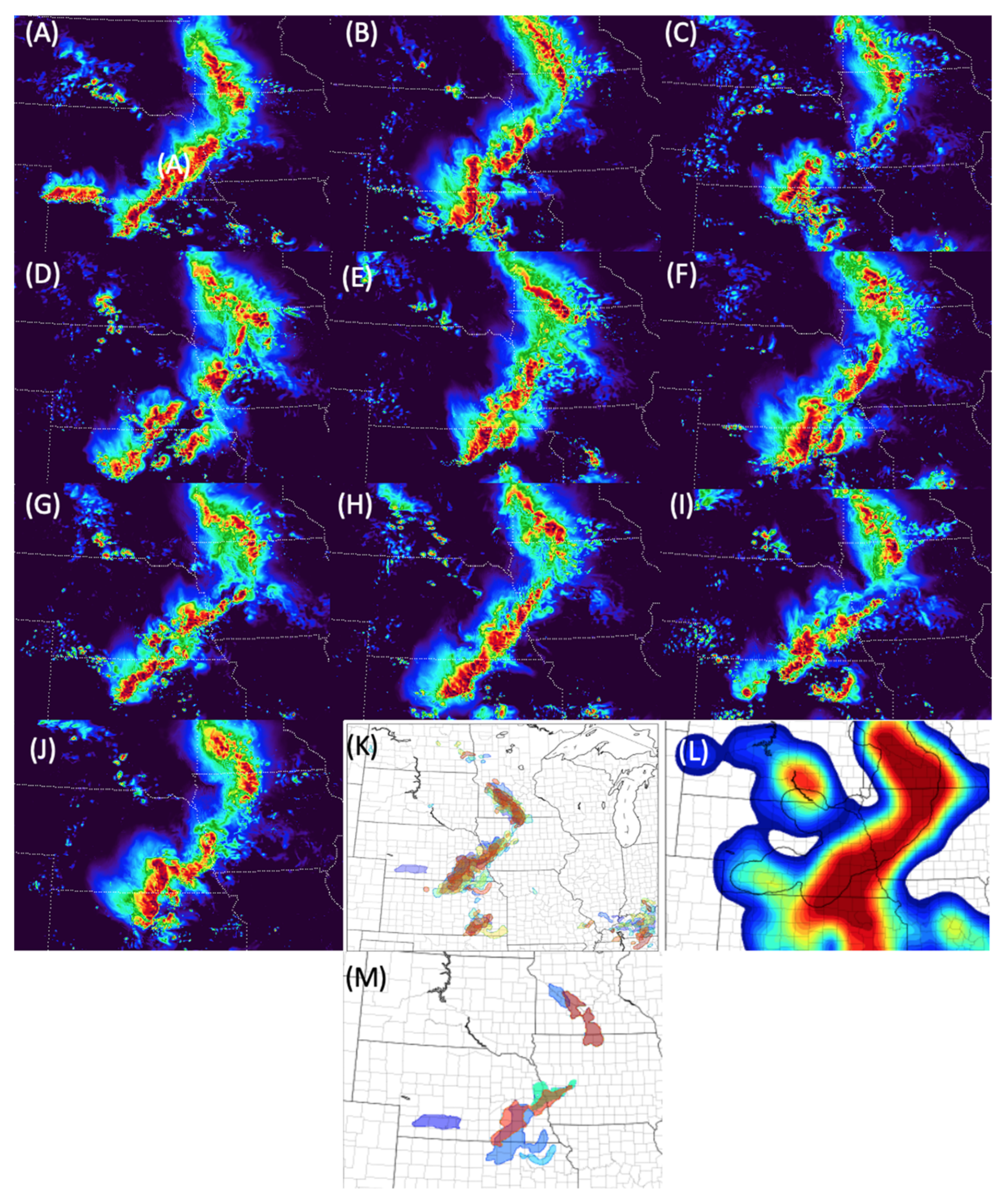

2.1. Object Definition

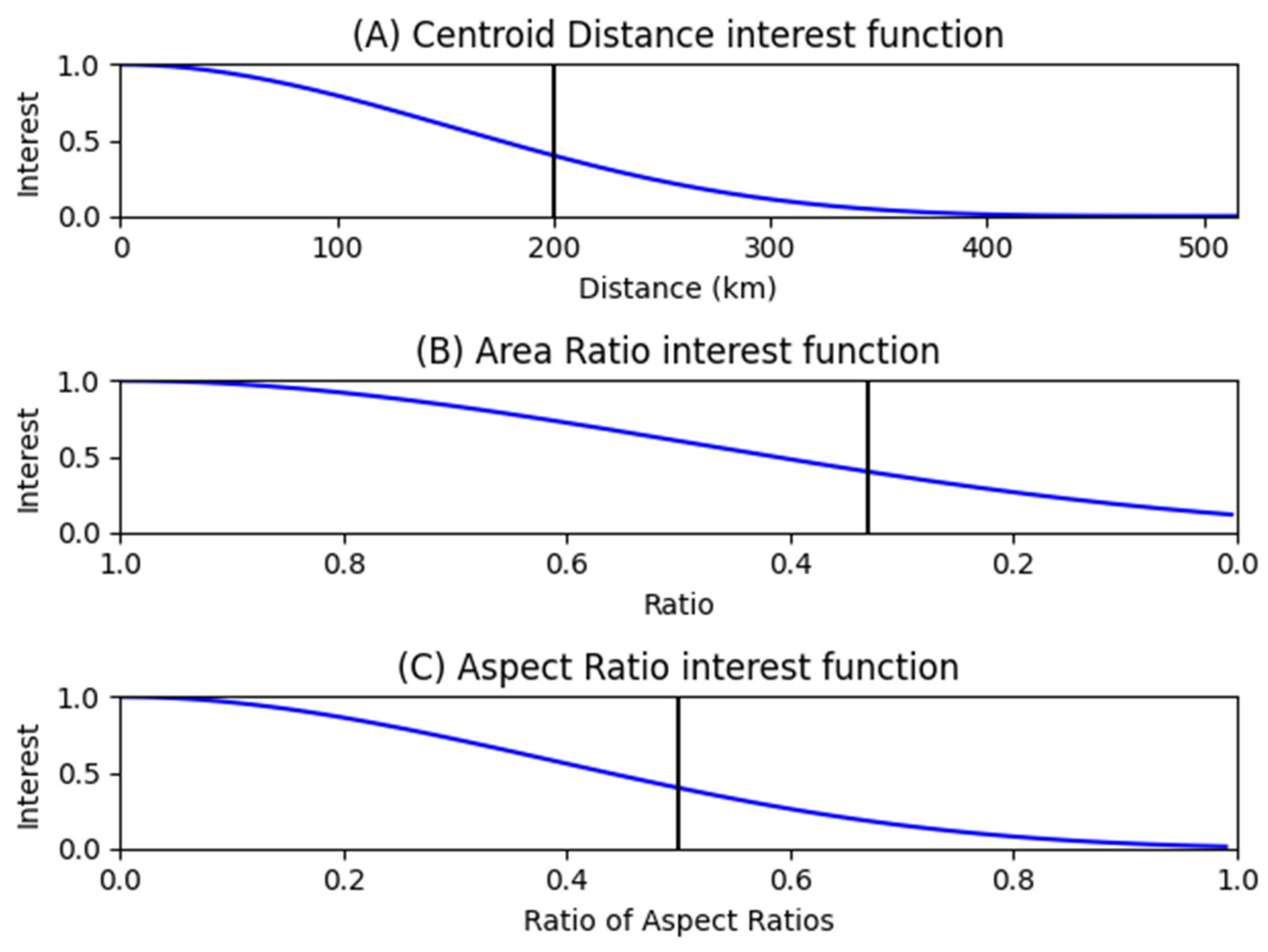

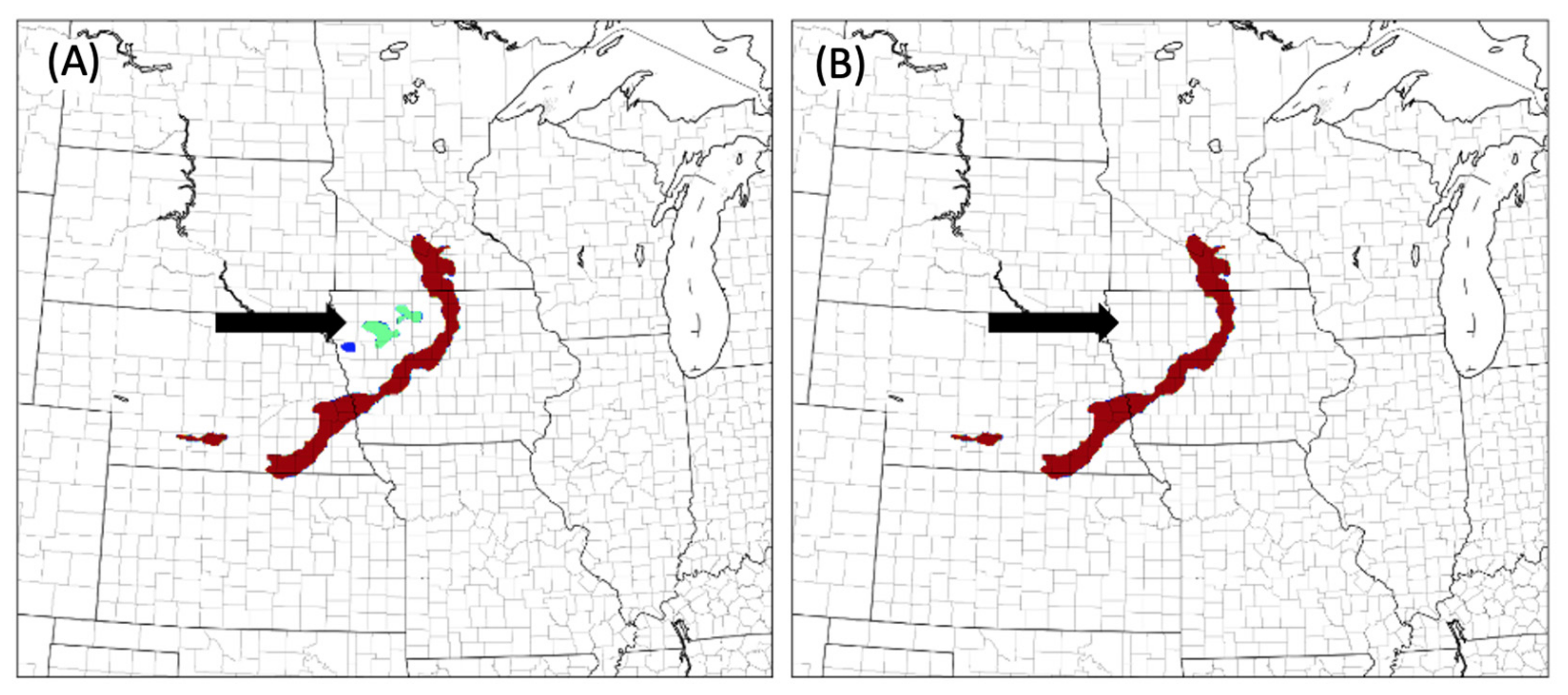

2.2. Object Matching

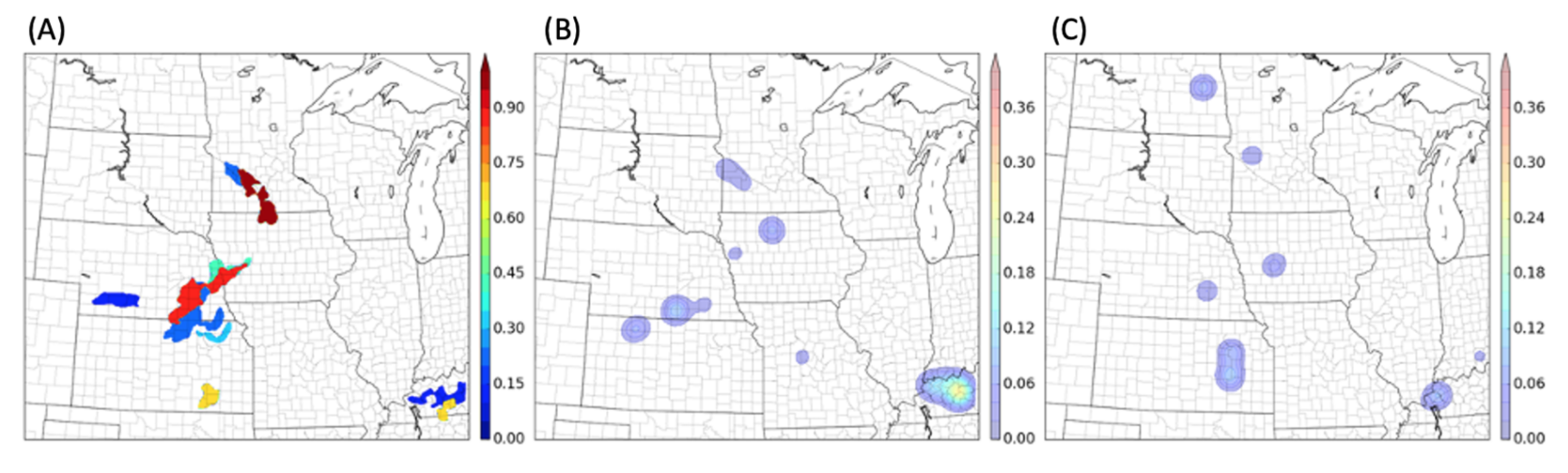

2.3. Object Probabilities

1. Compile all forecast objects from all members into a single array, assigning each object a probability equal to the fraction of members that contain a matching object.

2. Sort the objects by probability in descending order, breaking ties in favor of the object with the highest average total interest with its matching objects in other members.

3. Plot the highest-probability object.

4. Remove the highest-probability object and all of its matching objects from the array, giving a new, shortened array.

5. Repeat steps 2–4 until no objects remain in the array.
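The selection procedure listed above can be sketched in Python as follows. This is only an illustrative sketch, not the authors' implementation: the `ForecastObject` class, its `matches` mapping (matched object ID to total interest), and the function names are hypothetical. It also assumes that an object's own member counts toward its probability, and that probabilities are computed once from the full array rather than recomputed after each removal.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ForecastObject:
    """Hypothetical container for one forecast object from one ensemble member."""
    obj_id: str
    member: int
    # Assumed structure: ID of each matching object in other members -> total interest.
    matches: Dict[str, float] = field(default_factory=dict)


def mean_interest(obj: ForecastObject) -> float:
    """Average total interest with the matching objects (0.0 if there are none)."""
    if not obj.matches:
        return 0.0
    return sum(obj.matches.values()) / len(obj.matches)


def select_probability_objects(
    objects: List[ForecastObject], n_members: int
) -> List[Tuple[str, float]]:
    """Return (object ID, probability) pairs in the order they would be plotted."""
    by_id = {o.obj_id: o for o in objects}

    # Step 1: probability = fraction of members with a matching object.
    # Assumption: the object's own member is included in the count.
    prob = {}
    for o in objects:
        members = {o.member} | {by_id[m].member for m in o.matches if m in by_id}
        prob[o.obj_id] = len(members) / n_members

    remaining = dict(by_id)
    plotted = []
    while remaining:  # Step 5: repeat until no objects remain.
        # Step 2: highest probability first; ties broken by mean total interest.
        best = max(
            remaining.values(),
            key=lambda o: (prob[o.obj_id], mean_interest(o)),
        )
        # Step 3: "plot" the object (here, just record it).
        plotted.append((best.obj_id, prob[best.obj_id]))
        # Step 4: remove the object and all of its matching objects.
        del remaining[best.obj_id]
        for match_id in best.matches:
            remaining.pop(match_id, None)
    return plotted


# Small made-up example: three members share one matched storm; member 1 has an extra object.
example_objects = [
    ForecastObject("a0", 0, {"b1": 0.9, "c2": 0.8}),
    ForecastObject("b1", 1, {"a0": 0.9, "c2": 0.7}),
    ForecastObject("c2", 2, {"a0": 0.8, "b1": 0.7}),
    ForecastObject("d1", 1),
]
example_result = select_probability_objects(example_objects, n_members=3)
```

In this made-up example the three mutually matched objects all have probability 1, so the tie is resolved by mean total interest and only one of them is kept as the representative object; the unmatched object is retained separately with probability 1/3.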

2.4. New Extensions of OBPROB Method

3. Experiment Design

3.1. Experiment Description

3.2. Verification Methods

4. Results

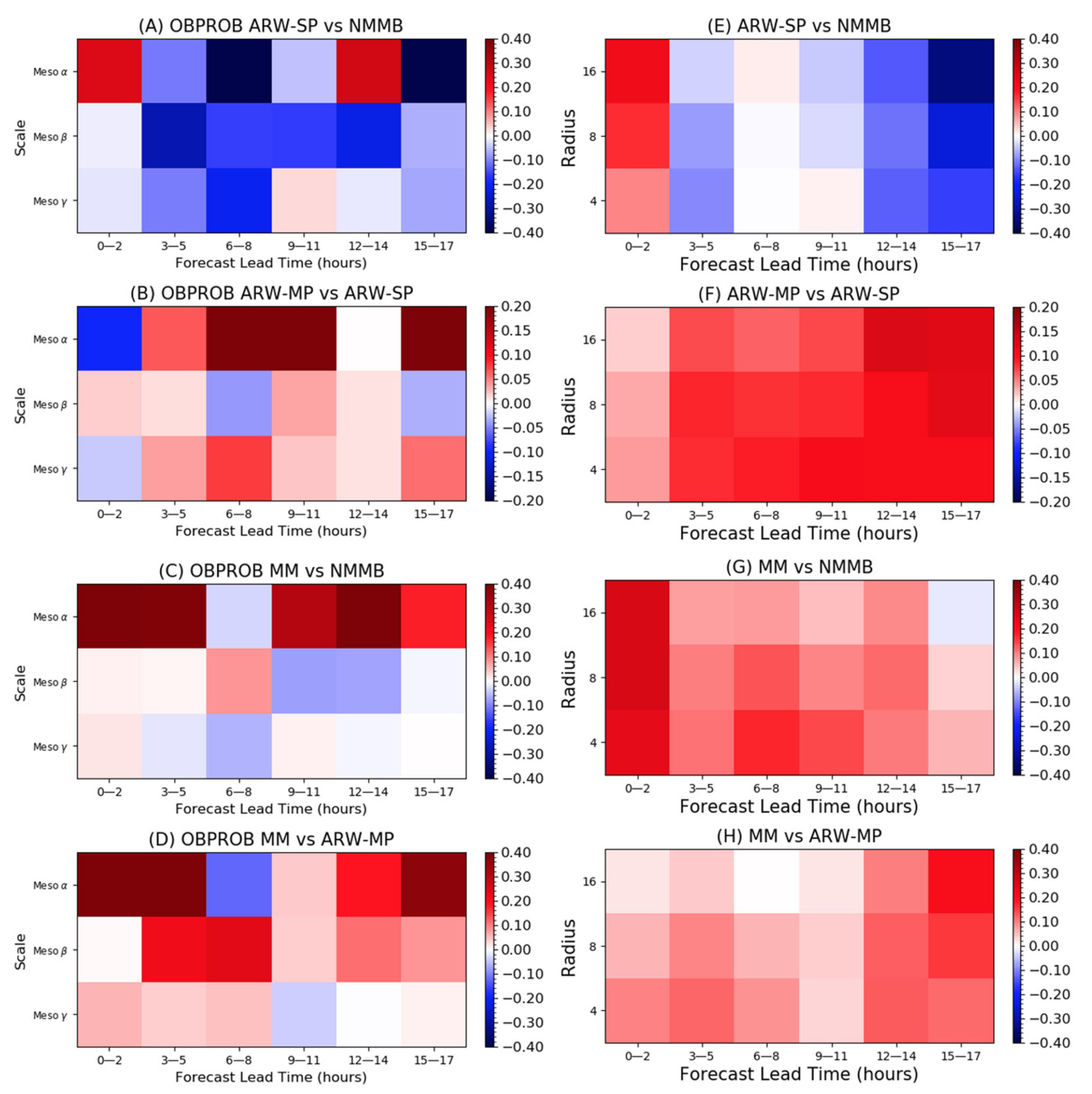

4.1. Objective Verification

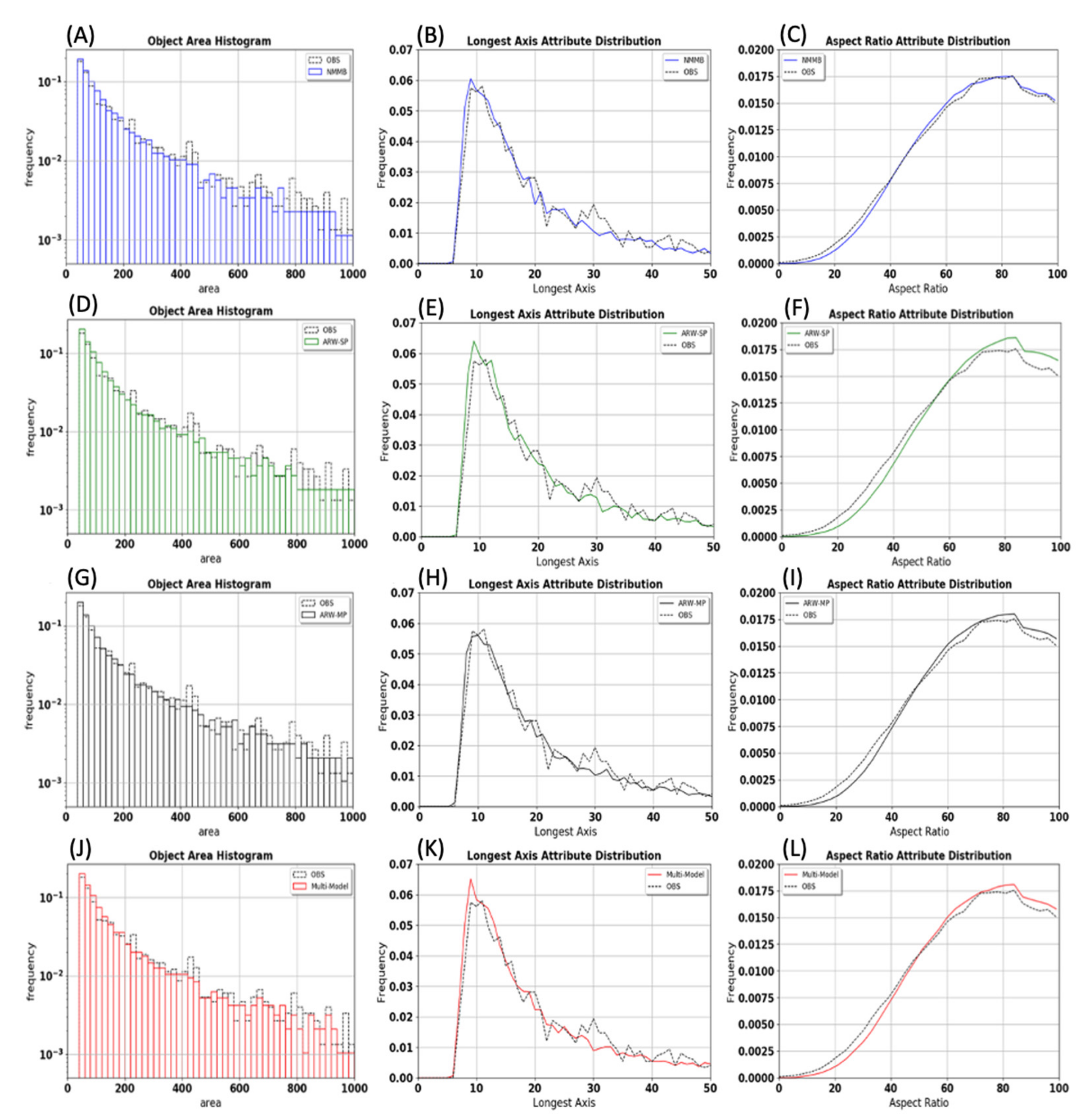

4.1.1. Object Attribute Distributions

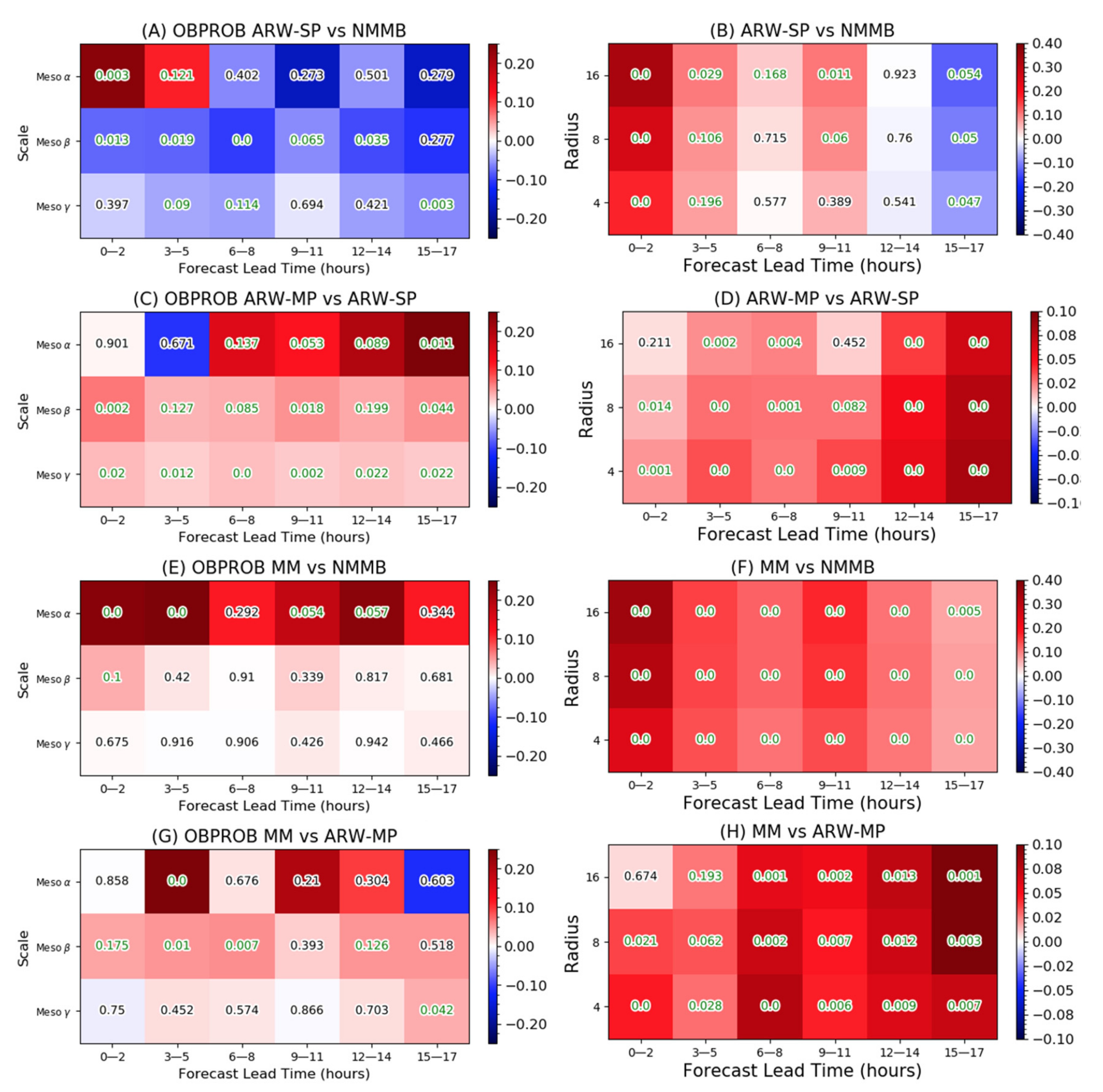

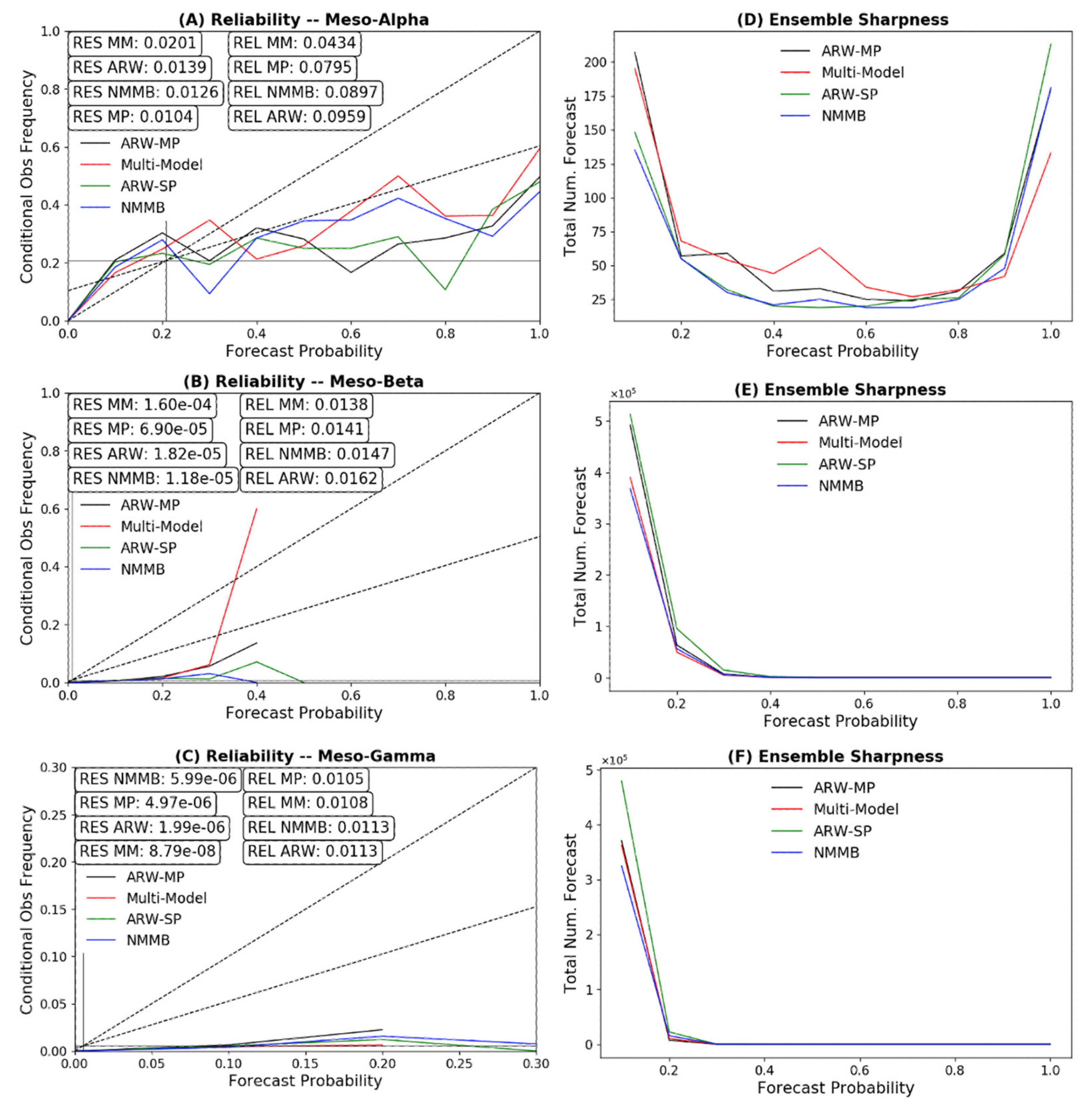

4.1.2. Probabilistic Verification and Forecast Spread

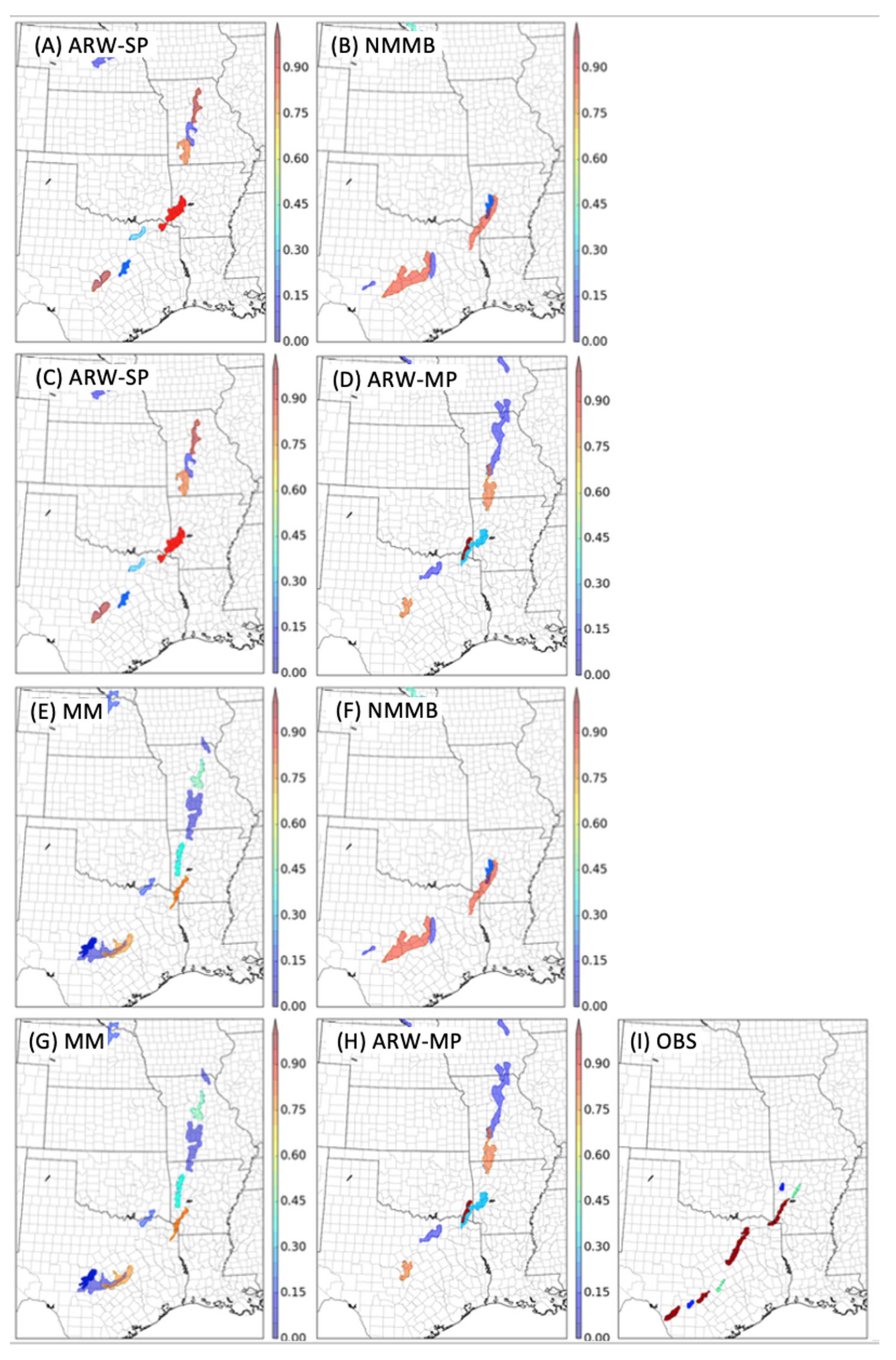

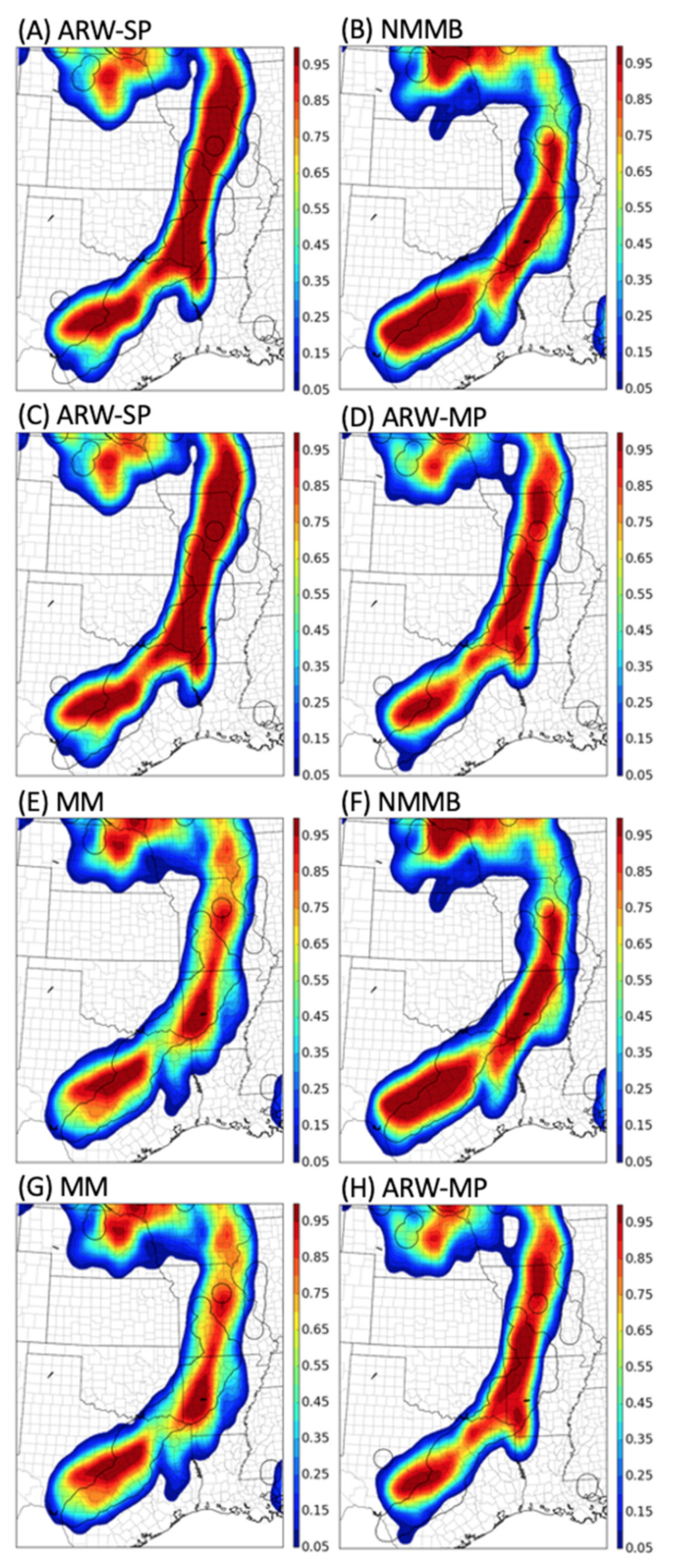

4.2. Subjective Evaluation

4.3. Comparison to NMEP

5. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Observations (%) | Observations (dBZ) | Forecasts (%) | Forecasts (dBZ) |
|---|---|---|---|
| 100 | 60 | 100 | 70 |
| 95 | 50 | 95 | 60 |
| 90 | 45 | 90 | 50 |
| 85 | 40 | 85 | 45 |
| 80 | 35 | 80 | 40 |
| 75 | 30 | 75 | 35 |

| Ensemble | Dynamical Core | Member Number | Microphysics | PBL | LSM |
|---|---|---|---|---|---|
| NMMB | NMMB | 0–9 | Ferrier–Aligo | MYJ | NOAH |
| ARW-SP | ARW | 0–9 | Thompson | MYNN | RUC |
| MM | NMMB | 0–4 | Ferrier–Aligo | MYJ | NOAH |
| MM | ARW | 5–9 | Thompson | MYNN | RUC |
| ARW-MP | ARW | 0 | Thompson | MYNN | RUC |
| ARW-MP | ARW | 1 | Thompson | MYJ | NOAH |
| ARW-MP | ARW | 2 | NSSL | YSU | NOAH |
| ARW-MP | ARW | 3 | NSSL | MYNN | NOAH |
| ARW-MP | ARW | 4 | Morrison | MYJ | NOAH |
| ARW-MP | ARW | 5 | P3 | YSU | NOAH |
| ARW-MP | ARW | 6 | NSSL | MYJ | NOAH |
| ARW-MP | ARW | 7 | Morrison | YSU | NOAH |
| ARW-MP | ARW | 8 | P3 | MYNN | NOAH |
| ARW-MP | ARW | 9 | Thompson | MYNN | NOAH |

| Ensemble Comparison | Description |
|---|---|
| ARW-SP vs. NMMB (SMSP) | Comparing how model and scheme choices impact storm mode and morphology forecasts. |
| ARW-SP vs. ARW-MP (SPMP) | Analyzing the effects of physics scheme diversity on storm mode and morphology forecasts. |
| MM vs. NMMB (MMSM) | Investigating the impacts of model dynamical core diversity on storm mode and morphology forecasts. |
| ARW-MP vs. MM (MPMM) | Examining the relative effects of model core diversity and physics scheme diversity on storm mode and morphology forecasts. |

| Case Date | Initialization Time | Synoptic Forcing | Case Description |
|---|---|---|---|
| 16 May 2015 | 2300 UTC | Strong | Single-cell dryline convection growing upscale into long-lived squall line from TX to MO |
| 25 May 2015 | 1300 UTC | Strong | Multi-cell convection with large upscale growth into bowing squall line in southeast TX |
| 26 June 2015 | 0400 UTC | Weak | Nocturnal, bowing MCS, KS to MO; Nocturnal MCS Ohio Valley; ensuing daytime convective initiation |
| 14 July 2015 | 1900 UTC | Strong | Southward advancing QLCS with associated cold front through decay, MS and OH valley |
| 11 September 2015 | 0100 UTC | Moderate | Supercellular convection growing upscale into squall line with advancing cold front |
| 22 May 2016 | 2300 UTC | Moderate | Isolated convection becoming outflow dominant QLCS, western TX |
| 17 June 2016 | 2000 UTC | Weak | Southward advancing squall line with bowing segment, southeastern US |
| 6 July 2016 | 0100 UTC | Weak | Southward propagating squall line growing in horizontal scale, MN to IL; convective clusters in KS and NE |
| 7 July 2016 | 0000 UTC | Weak | Supercellular convection growing upscale into bowing MCS, SD to MO |
| 10 July 2016 | 0400 UTC | Weak | Single and multi-cellular convection growing upscale into nocturnal MCS, Dakotas to IA |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wilkins, A.; Johnson, A.; Wang, X.; Gasperoni, N.A.; Wang, Y. Multi-Scale Object-Based Probabilistic Forecast Evaluation of WRF-Based CAM Ensemble Configurations. Atmosphere 2021, 12, 1630. https://doi.org/10.3390/atmos12121630
