3.1. Configuration

The experimental design is as follows. First, we compared existing object detection algorithms with the attention-based feature fusion method proposed in this paper, and we compared fusion schemes that apply the attention mechanism to different layers. Next, we applied confidence discrimination to the preliminary detection results of the proposed feature fusion method and assessed the validity of the confidence measure by the accuracy of the resulting detections. Finally, we compared our method with other MA detection methods.

The parameters related to the experiments are listed in Table 3. The learning rate controls the step size of parameter updates. The momentum is the weight given to the previous update when computing the current one; it smooths the update direction and accelerates convergence. Gamma is the factor by which the learning rate policy scales the learning rate. Weight_decay is the coefficient of the weight decay (L2 regularization) term, which is used to prevent overfitting. Batch_size is the number of images read in each iteration; Num_seed_boxes is the initial number of seed boxes generated; and Num_output_boxes is the final number of output boxes.
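To make the roles of the learning rate, momentum, Gamma, and Weight_decay concrete, the following is a minimal sketch of one common formulation: SGD with momentum and L2 weight decay, combined with a step learning rate policy in which Gamma multiplies the learning rate every fixed number of iterations. The numeric values and the names `BASE_LR`, `STEP_SIZE`, `step_lr`, and `sgd_momentum_step` are hypothetical; the values actually used are those listed in Table 3, and the text does not state which learning rate policy was applied.

```python
# Hypothetical hyperparameters for illustration only (see Table 3 for the real ones).
BASE_LR = 0.001
MOMENTUM = 0.9
GAMMA = 0.1
WEIGHT_DECAY = 0.0005
STEP_SIZE = 10000  # hypothetical: iterations between learning rate drops


def step_lr(iteration):
    """Step policy: multiply the base learning rate by GAMMA every STEP_SIZE iterations."""
    return BASE_LR * GAMMA ** (iteration // STEP_SIZE)


def sgd_momentum_step(weights, grads, velocity, lr):
    """One SGD update with momentum and L2 weight decay on a list of scalar parameters."""
    new_weights, new_velocity = [], []
    for w, g, v in zip(weights, grads, velocity):
        g = g + WEIGHT_DECAY * w   # weight decay adds the gradient of an L2 penalty
        v = MOMENTUM * v - lr * g  # momentum reuses a fraction of the previous update
        new_velocity.append(v)
        new_weights.append(w + v)
    return new_weights, new_velocity
```

With a fixed learning rate of 0.1, repeatedly applying `sgd_momentum_step` to the gradient of f(w) = w² drives w toward the minimum at 0.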

To comprehensively evaluate the effectiveness of the proposed method, the following metrics were adopted: precision, recall (sensitivity), average precision, and F-measure. Before these metrics can be defined, several related concepts must be introduced. The number of true positives (TP) is the number of positive samples correctly identified as positive; the number of true negatives (TN) is the number of negative samples correctly identified as negative; the number of false positives (FP) is the number of negative samples misidentified as positive; and the number of false negatives (FN) is the number of positive samples misidentified as negative. The Intersection-over-Union (IoU) measures the overlap between a bounding box predicted by the model and the corresponding ground-truth box, and it is calculated as follows:

$$\mathrm{IoU} = \frac{\operatorname{area}(B_{p} \cap B_{gt})}{\operatorname{area}(B_{p} \cup B_{gt})}$$

where $B_{p}$ is the predicted box and $B_{gt}$ is the ground-truth box.
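The IoU definition can be sketched in a few lines of Python. This minimal version assumes axis-aligned boxes given as hypothetical `(x_min, y_min, x_max, y_max)` tuples in continuous coordinates; the official Pascal VOC development kit instead adds 1 to widths and heights when working with pixel indices, which this sketch does not do.

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the intersection rectangle.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Clamp to zero so that disjoint boxes have no intersection area.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0
```

For example, two 10 × 10 boxes offset by 5 pixels in each direction overlap in a 5 × 5 region, giving an IoU of 25 / 175 ≈ 0.143, well below the common 0.5 threshold.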

Among the specific evaluation metrics, the precision is the proportion of true positives among all detections and is calculated as follows:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

The recall rate (sensitivity) is the proportion of positive samples that are detected among all positive samples and is calculated as follows:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$
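In detection, TP, FP, and FN are obtained by matching predicted boxes to ground-truth boxes at an IoU threshold; precision and recall do not use TN, so the sketch does not count it. The sketch follows the Pascal VOC convention as we understand it: detections are processed in descending score order, each is assigned to the ground-truth box with which it has the highest IoU, and it counts as a TP only if that IoU reaches the threshold and the ground-truth box has not already been matched. The function names and the precomputed `iou_matrix` input are hypothetical.

```python
def count_tp_fp_fn(iou_matrix, num_gt, threshold=0.5):
    """Count TP, FP and FN for one image.

    iou_matrix[i][j] is the IoU of detection i with ground-truth box j,
    with detections already sorted by descending confidence score.
    """
    matched = set()
    tp = fp = 0
    for row in iou_matrix:
        if row:
            # Ground-truth box with the highest overlap for this detection.
            j = max(range(len(row)), key=row.__getitem__)
            best = row[j]
        else:
            j, best = -1, 0.0
        if best >= threshold and j not in matched:
            matched.add(j)
            tp += 1
        else:
            # Low overlap, or a duplicate detection of an already matched box.
            fp += 1
    return tp, fp, num_gt - tp


def precision(tp, fp):
    """Proportion of detections that are true positives."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    """Proportion of ground-truth objects that are detected (sensitivity)."""
    return tp / (tp + fn) if tp + fn else 0.0
```

For an IoU matrix `[[0.7, 0.1], [0.6, 0.0], [0.2, 0.55], [0.1, 0.2]]` with two ground-truth objects, the second detection duplicates the first object and is counted as an FP, giving TP = 2, FP = 2, FN = 0, a precision of 0.5, and a recall of 1.0.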

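The average precision (AP) is the area under the precision–recall curve. In this paper, the 11-point method used in the Pascal VOC2007 challenge is applied to calculate the AP: the interpolated precision is measured at 11 equally spaced recall levels, $r \in \{0, 0.1, \ldots, 1\}$, and averaged as follows:

$$\mathrm{AP} = \frac{1}{11} \sum_{r \in \{0, 0.1, \ldots, 1\}} p_{\mathrm{interp}}(r), \qquad p_{\mathrm{interp}}(r) = \max_{\tilde{r} \geq r} p(\tilde{r})$$

where $p(\tilde{r})$ is the precision at recall $\tilde{r}$.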
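The 11-point interpolation can be sketched as follows, assuming that the detections over the whole test set have already been sorted by descending score and labeled as TP or FP by IoU matching. At each recall level t, the loop takes the maximum precision over all points with recall at least t, or 0 if there are none, as in the VOC2007 evaluation code. The names `pr_curve` and `ap_11_point` are hypothetical.

```python
def pr_curve(tp_flags, num_gt):
    """Cumulative recall and precision along a ranked list of detections.

    tp_flags[k] is True if the k-th highest-scoring detection is a TP.
    """
    recalls, precisions = [], []
    tp = fp = 0
    for is_tp in tp_flags:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    return recalls, precisions


def ap_11_point(recalls, precisions):
    """Pascal VOC2007-style 11-point interpolated average precision."""
    ap = 0.0
    for i in range(11):
        t = i / 10  # recall levels 0.0, 0.1, ..., 1.0
        # Interpolated precision: best precision at any recall >= t.
        p = max((prec for r, prec in zip(recalls, precisions) if r >= t),
                default=0.0)
        ap += p / 11
    return ap
```

For the ranked list TP, FP, TP, FP with two ground-truth objects, the interpolated precision is 1.0 at recall levels 0 to 0.5 and 2/3 at levels 0.6 to 1.0, so AP = (6 × 1 + 5 × 2/3) / 11 = 28/33 ≈ 0.848.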

The F-measure is a comprehensive evaluation index that balances precision and recall; specifically, it is the weighted harmonic mean of the precision and recall. This paper mainly uses the F1 score, in which precision and recall are weighted equally, calculated as follows:

$$F_{1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$
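The F-measure can be computed directly from precision and recall. The sketch below implements the general weighted harmonic mean $F_\beta$, of which the F1 score used in this paper is the case β = 1; the function name `f_beta` is hypothetical.

```python
def f_beta(precision, recall, beta=1.0):
    """Weighted harmonic mean of precision and recall.

    beta > 1 weights recall more heavily, beta < 1 weights precision more;
    beta = 1 gives the F1 score.
    """
    b2 = beta * beta
    denom = b2 * precision + recall
    if denom == 0:
        return 0.0  # both inputs are zero, so the score is defined as 0
    return (1 + b2) * precision * recall / denom
```

Because it is a harmonic mean, F1 penalizes imbalance: a precision of 0.9 with a recall of 0.1 gives F1 ≈ 0.18, whereas their arithmetic mean is 0.5.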

In this paper, three object detection methods, namely, SSD, Faster R-CNN and AttractioNet, are used as baselines for comparison. SSD is a regression-based detector, whereas Faster R-CNN and AttractioNet are region-proposal-based detectors. Comparing SSD with Faster R-CNN and AttractioNet verifies how well each of these two types of detection algorithms adapts to fundus images. Comparing Faster R-CNN with AttractioNet verifies how well two different region proposal algorithms (a Region Proposal Network, RPN, and an Attend & Refine Network, ARN) adapt to fundus images.

The specific experimental comparison design is as follows. First, SSD, Faster R-CNN and AttractioNet were compared with the attention-based feature fusion method (Ours-1) proposed in this paper. Then, the preliminary detection results obtained with Ours-1 were compared with the refined results obtained with the spatial confidence discrimination method (Ours-2) proposed in this paper.

3.2. Experimental Results

All experiments were conducted using the IDRiD_VOC dataset described in Section 2.1. The performances of the different detection algorithms are as follows.

For the experiment with the proposed feature fusion method based on the multilayer attention mechanism, Table 4 compares the detection results of the existing object detection algorithms with those of the proposed attention-based feature fusion method using the AP metric.

Table 5 compares the results of fusion schemes that use different combinations of layers for attention-based feature fusion, using the precision, recall rate and F1 score as the evaluation indicators.

For the experiment testing the confidence discrimination method based on spatial confidence proposed in this paper, Table 6 shows the detection results obtained by applying confidence discrimination to the preliminary detection results of Ours-1, using the detection precision and sensitivity as the evaluation indices.

To illustrate the performance of our methods, the results of various object detection methods are compared.

Table 4 compares the detection results of the existing object detection algorithms with those of the proposed feature fusion method based on the attention mechanism. At each of the IoU thresholds tested, our algorithm achieves a higher AP than its competitors. When the IoU threshold is 0.5, the AP value of our algorithm reaches 0.757.

In Table 4, the Ours-1 method uses the attention mechanism to fuse downsampled features from the C5 layer and preceding layers. Because shallow layers capture rich feature information relevant to small targets, four appropriate layers, including shallow ones, are selected for feature fusion. In terms of the AP metric, Ours-1 achieves better detection performance than the other detection algorithms. SSD also uses multilayer features for prediction. However, most of the feature information that is useful for small targets is contained in the shallow layers. Ours-1 uses shallow layers and can therefore effectively extract features relevant to small targets, whereas SSD relies mainly on deeper layers, which may cause small-target features to be lost. Although SSD also uses one shallower layer, a single layer is a weak basis for extracting small-target features. Faster R-CNN and AttractioNet use region proposal networks and thus achieve better accuracy than SSD in small-target detection. Nevertheless, Ours-1 fuses four layers to extract abundant features relevant to small targets, whereas Faster R-CNN and AttractioNet use only the top-level features of a single layer for prediction and thus ignore shallow-layer features that are crucial for small object detection. Therefore, Ours-1 is superior to SSD, Faster R-CNN, and AttractioNet.

Table 5 compares the results of using different combinations of layers for feature fusion based on the attention mechanism. This comparison shows that including shallow features effectively improves the MA detection performance. For the base fusion scheme with attention, which fuses only two layers, the F1 score is 0.582, whereas the F1 score increases to 0.840 when two shallower layers are added.

Table 5 also compares the results of different fusion schemes with and without the proposed attention mechanism; the results without attention are included for the fusion of the same feature layers. The experimental results show that, for a given fusion scheme, the attention mechanism effectively improves the detection precision and recall rate. For example, when only the two layers of the base scheme are fused, the F1 score increases from 0.549 to 0.582 when the attention mechanism is adopted. Across fusion schemes, including shallower feature layers yields a higher detection recall rate. This finding shows that small objects are better detected when features from shallow layers are included, because these features are more conducive to the detection of small targets. However, the semantic information in shallow feature layers is not sufficiently rich, which leads to a decrease in precision. For instance, the detection precision achieved when three layers are fused is higher than that achieved when four layers, including a shallower one, are fused; however, the F1 score increases in the latter case, indicating that the overall detection performance improves.

Table 6 compares the preliminary detection results obtained with Ours-1 and the further results obtained when confidence discrimination is applied. When the voting function threshold is set to 0.8, the precision of Ours-2 reaches 0.895.

In Table 6, the preliminary detection results of Ours-1 are compared with those of the confidence discrimination method in terms of detection precision and sensitivity at different probability thresholds. The experimental results show that a lower probability threshold yields higher sensitivity but lower precision. As a discriminant factor, spatial confidence reduces the number of false-positive MAs detected on or far from blood vessels. This strategy improves the precision of the model by 2–4 percentage points while maintaining its sensitivity.

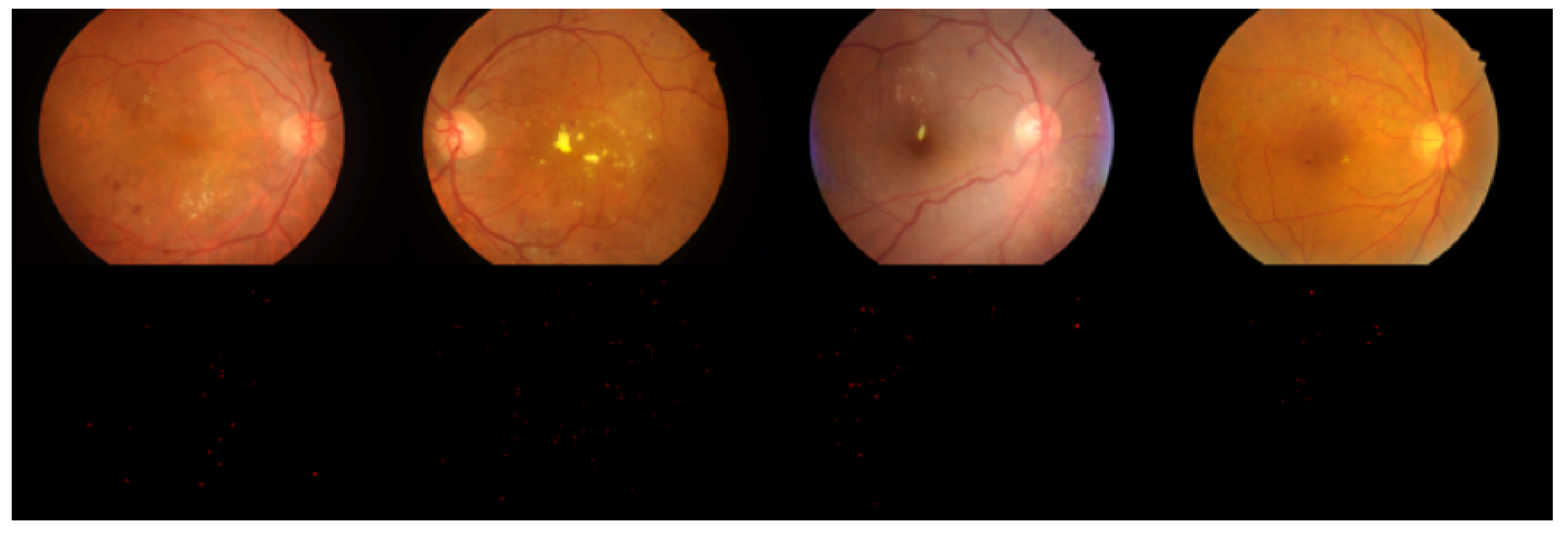

Selected detection results from the experiment are shown in Figure 9 and Figure 10.

Figure 9 compares the detection results of Ours-1 with those of the comparison models. The first row shows the detection results of SSD, the second row those of Faster R-CNN, the third row those of AttractioNet, and the fourth row those of Ours-1.

Figure 10 shows examples of false detections that lie on blood vessels and are identified by confidence discrimination; the white circles mark these false detections. For each such object, the distance to the nearest blood vessel is calculated, and because the object lies on a vessel, its spatial confidence score is 0. Through majority voting, these false objects are effectively screened out, which improves the detection precision.
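The distance-based part of the spatial confidence can be illustrated with a short sketch. This is not the method of this paper but a minimal illustration under stated assumptions: the vessel segmentation is available as a list of vessel pixel coordinates; a candidate's spatial confidence is 0 when it lies on a vessel (distance below a hypothetical `d_min`) or farther than a hypothetical `d_max` from every vessel, and 1 otherwise; and the paper's majority-voting function is replaced by a simple rule that keeps a candidate only if its detection score passes the probability threshold and its spatial confidence is nonzero. All names and thresholds are hypothetical. The brute-force distance is only suitable for small examples; for full fundus images, a Euclidean distance transform of the inverted vessel mask (for example, `scipy.ndimage.distance_transform_edt`) yields all distances at once.

```python
import math


def distance_to_nearest_vessel(center, vessel_pixels):
    """Euclidean distance from a candidate centre (x, y) to the closest vessel pixel."""
    cx, cy = center
    # Brute force over all vessel pixels; infinity if no vessel pixels exist.
    return min((math.hypot(cx - vx, cy - vy) for vx, vy in vessel_pixels),
               default=math.inf)


def spatial_confidence(center, vessel_pixels, d_min=1.0, d_max=50.0):
    """Hypothetical rule: 0 on a vessel or far from all vessels, 1 otherwise."""
    d = distance_to_nearest_vessel(center, vessel_pixels)
    return 0.0 if d < d_min or d > d_max else 1.0


def filter_candidates(candidates, vessel_pixels, prob_threshold=0.5):
    """Keep candidates that pass the score threshold and have nonzero spatial confidence.

    Each candidate is a dict with a "center" (x, y) and a detection "score".
    """
    return [
        c for c in candidates
        if c["score"] >= prob_threshold
        and spatial_confidence(c["center"], vessel_pixels) > 0
    ]
```

With a vertical vessel along x = 10, a high-scoring candidate centred on the vessel and one 80 pixels away are both discarded, while a candidate 5 pixels from the vessel is kept.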

Based on the small object detection experiments reported in this paper, Table 7 compares the proposed method with other MA detection methods, using the detection sensitivity as the evaluation index. Our method achieves the highest sensitivity, 0.868.

As shown in Table 7, MA detection algorithms based on different protocols were selected for comparison. Because sensitivity was chosen as the indicator, our results are compared with those of [30], in which a single network detects multiple lesion types such as exudates, hemorrhages, and MAs; [31], in which blood vessel removal and re-extraction are performed first; and [32,33] and other methods, which detect MAs through pixel-by-pixel classification. This comparison shows that the proposed approach detects MAs in fundus images more successfully than these methods.