Development of a Mushroom Growth Measurement System Applying Deep Learning for Image Recognition

Abstract

1. Introduction

2. Materials and Methods

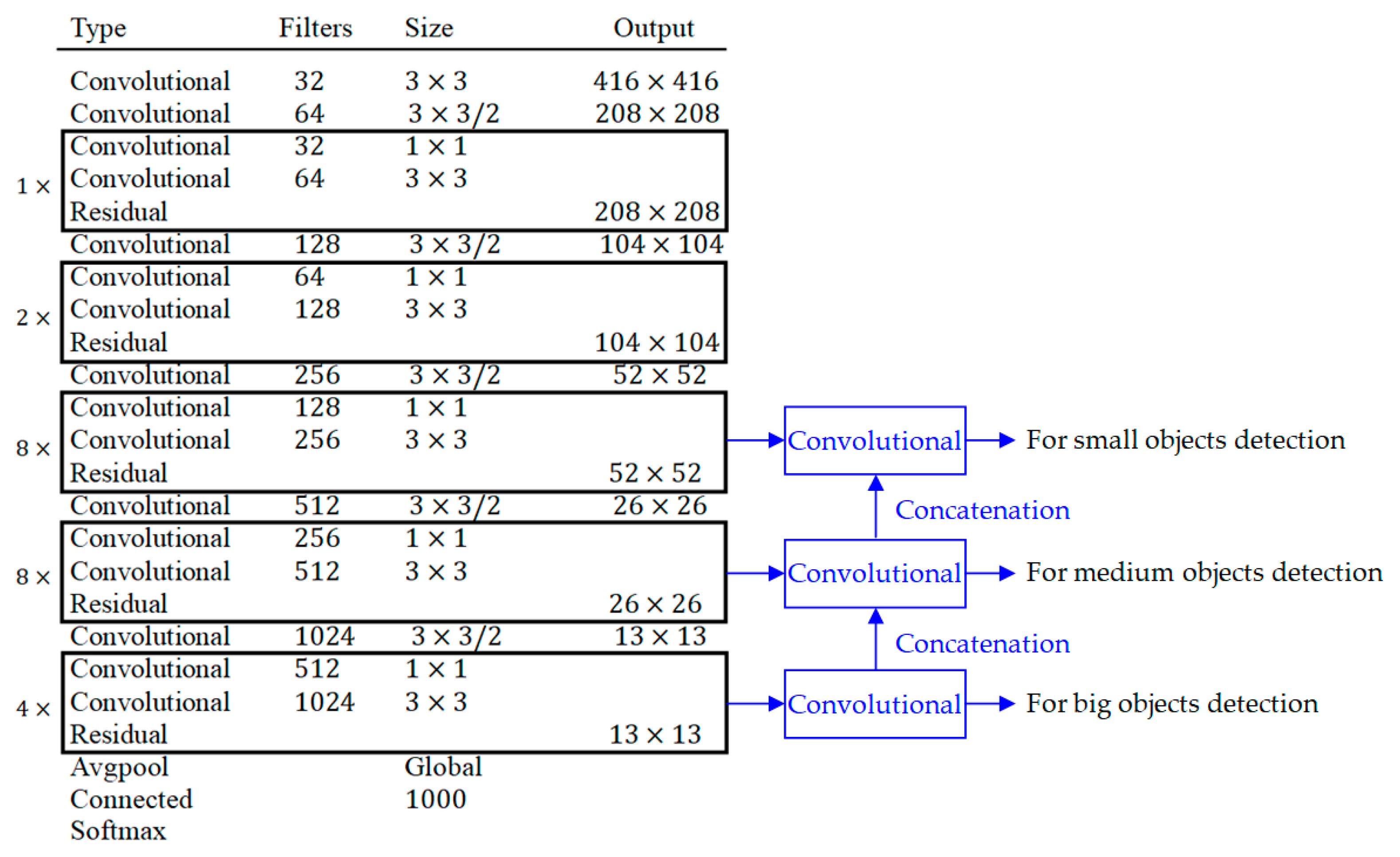

2.1. The CNN Algorithm

2.1.1. Batch Normalization

2.1.2. High Resolution Classifier

2.1.3. Convolutional Neural Network with Anchor Boxes

2.1.4. Dimension Clusters of Anchor Box

2.1.5. Fine-Grained Features

2.1.6. Multi-Scale Training

2.1.7. Training

- , are constants, (, );

- are the center coordinates of the ith anchor box;

- are the center coordinates of the ith known ground truth box;

- are the width and height of the ith anchor box;

- are the width and height of the ith ground truth box;

- is the confidence score of the ith objectness;

- is the objectness of the ith ground truth box;

- is the classification loss of the ith object; and

- is the classification loss of the ith ground truth box.
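As a rough illustration of how the terms listed above might be combined, the sketch below assumes a sum-of-squared-errors loss in the style of the original YOLO formulation. The `Box` container, the function name `yolo_style_loss`, the weights `lambda_coord` and `lambda_noobj`, and their default values are assumptions made for illustration; they are not taken from this paper.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical container for one anchor box or ground truth box."""
    x: float    # center x coordinate
    y: float    # center y coordinate
    w: float    # width
    h: float    # height
    obj: float  # objectness (confidence score for predictions, 0 or 1 for truth)
    cls: float  # class score (a single class, so one value is enough)


def yolo_style_loss(preds, truths, lambda_coord=5.0, lambda_noobj=0.5):
    """Sum-of-squared-errors loss over paired predicted and ground truth boxes.

    Assumption: preds[i] is already matched to truths[i]. The weights
    lambda_coord and lambda_noobj are illustrative constants.
    """
    total = 0.0
    for p, t in zip(preds, truths):
        if t.obj > 0:
            # Localization terms: box center, then width and height.
            center_err = (p.x - t.x) ** 2 + (p.y - t.y) ** 2
            size_err = (p.w - t.w) ** 2 + (p.h - t.h) ** 2
            total += lambda_coord * (center_err + size_err)
            # Objectness and classification terms for boxes containing an object.
            total += (p.obj - t.obj) ** 2
            total += (p.cls - t.cls) ** 2
        else:
            # Boxes without an object contribute only a down-weighted objectness term.
            total += lambda_noobj * (p.obj - t.obj) ** 2
    return total
```

A prediction that matches its ground truth box exactly contributes zero loss, while an empty box with a non-zero confidence score is penalized only through the down-weighted objectness term.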

2.1.8. Detection Purpose Training

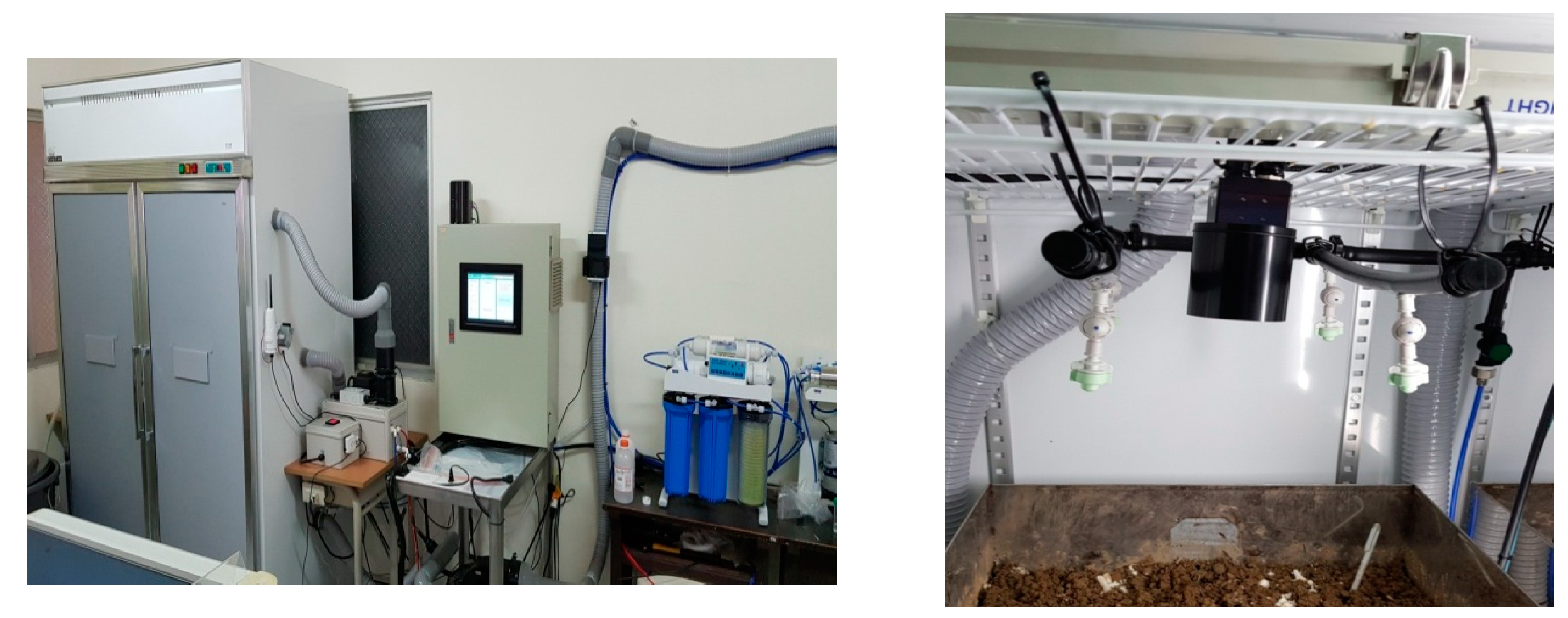

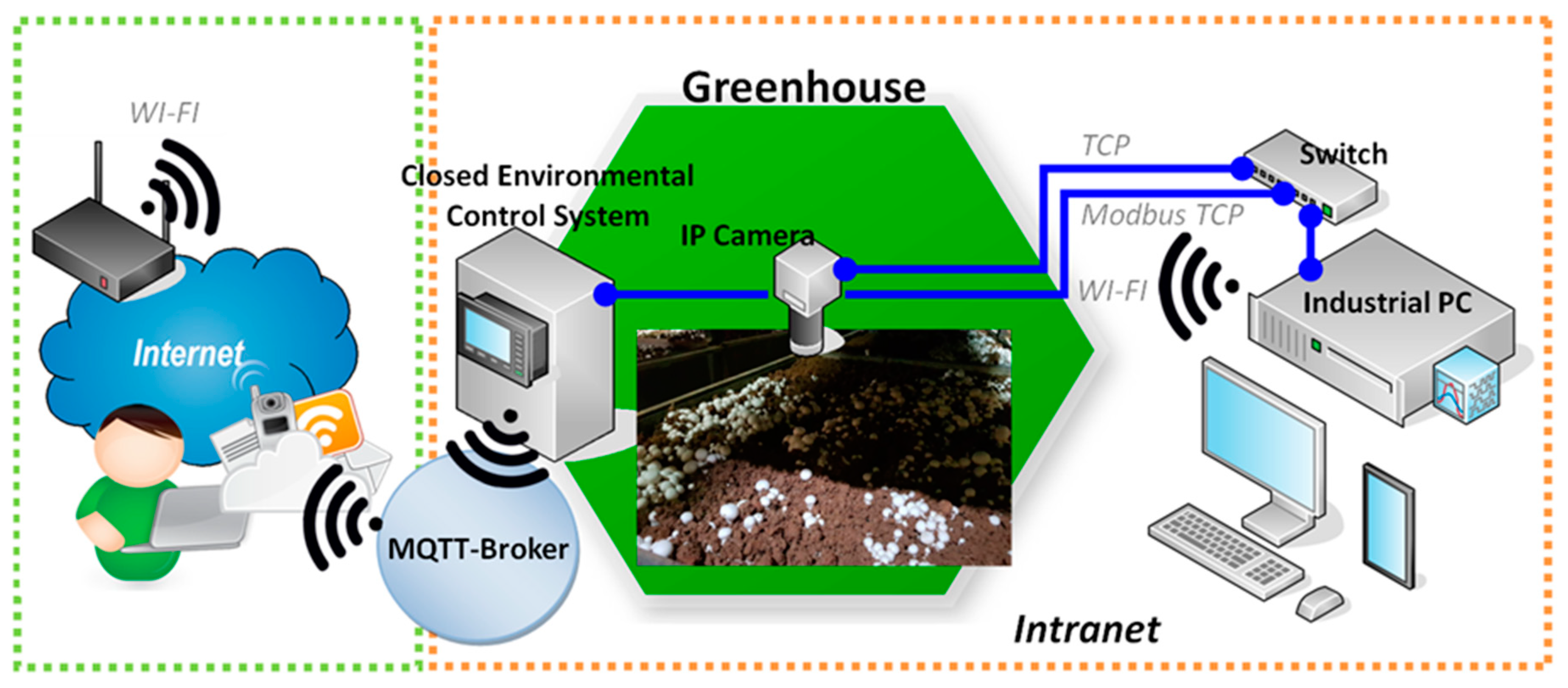

2.2. System Design

2.2.1. System Overview

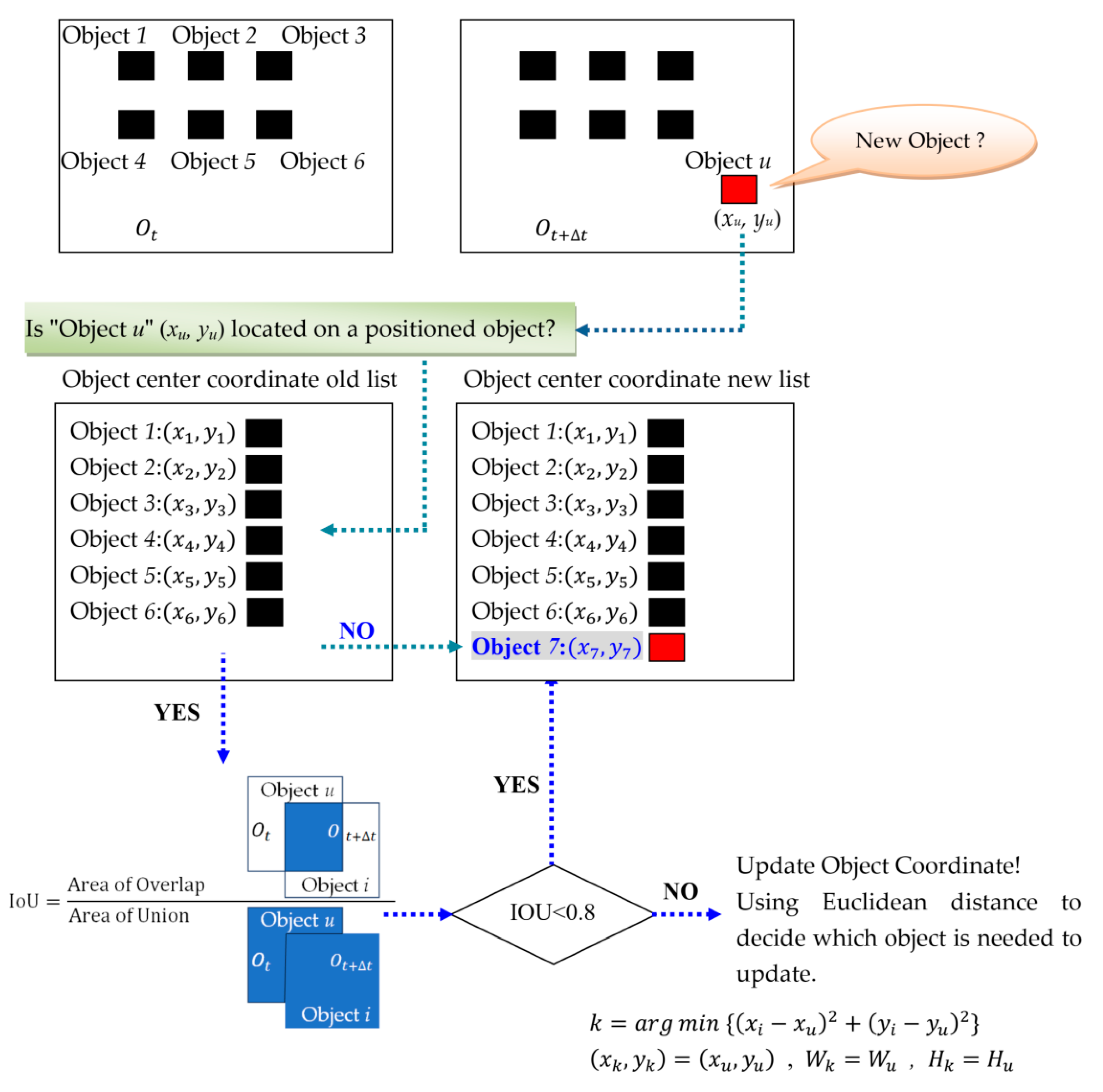

2.2.2. Positioning Correction Method

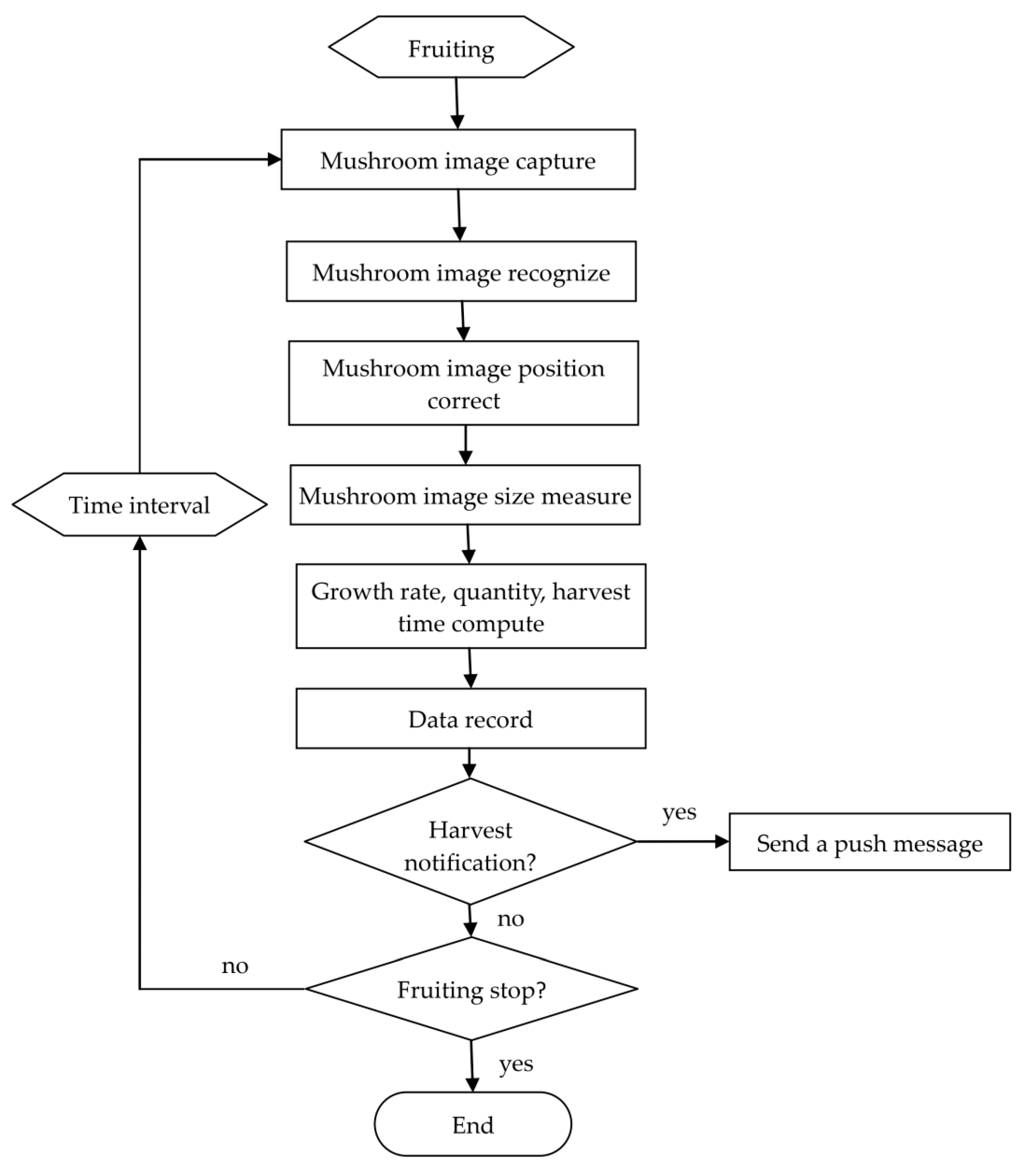

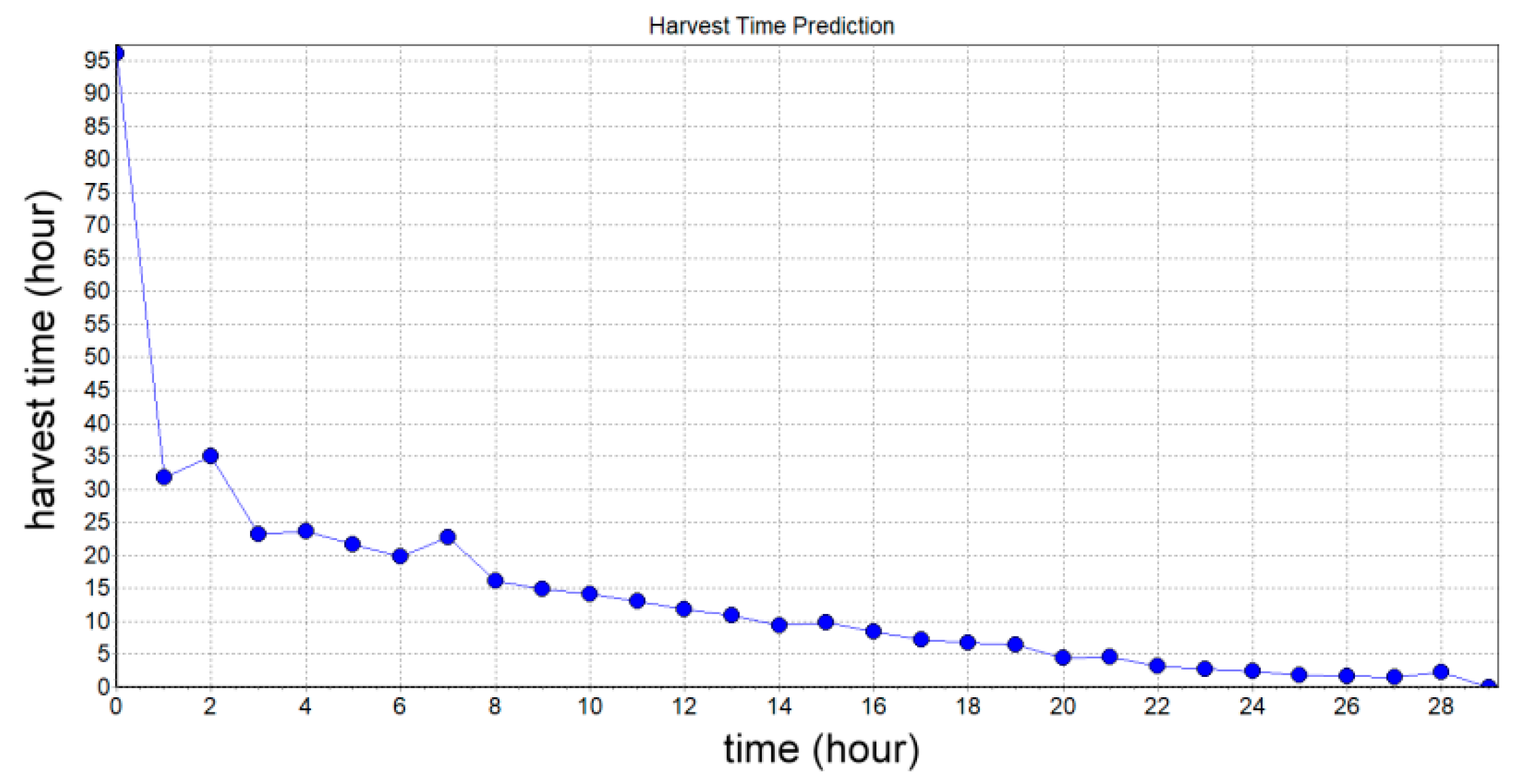

2.2.3. Estimation of Cap Size, Growth Rate, Amount, and Harvest Time

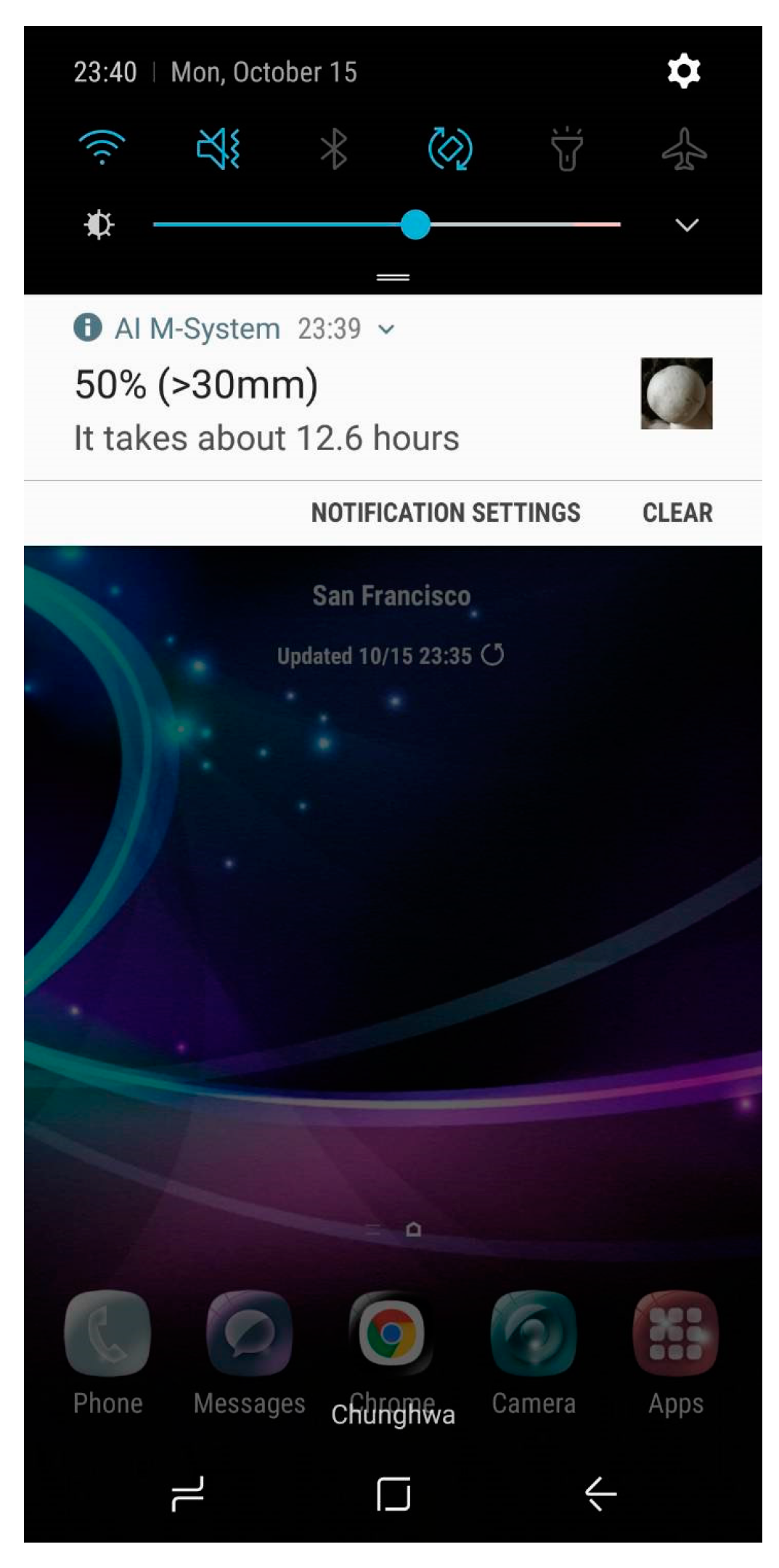

2.2.4. Human Machine Interface

3. Results

3.1. Sample Training

- Number of training images (batch = 64). This parameter sets the number of images processed in each training iteration (64 in this paper). It must be adjusted according to the memory size of the graphics card.

- Number of subdivisions (subdivisions = 16). To fit the GPU memory available to the Nvidia CUDA library, this parameter splits each batch of training images into smaller groups. The groups are processed one by one, and the iteration is complete once all of them have been processed.

- The input image size was set to 416 × 416 pixels with 24-bit color (channels = 3). All original images were resized to match this input size.

- Momentum (momentum = 0.9). This parameter controls how much of the previous weight update is carried over into the current one. It is not easy to find the best weights if this parameter is set larger than 1.

- Weight decay (decay = 0.0005). This parameter is used to reduce the possibility of overfitting.

- Data augmentation parameters (angle = 0, saturation = 1.5, exposure = 1.5, hue = 0.1). These adjust the rotation, saturation, exposure, and hue of the training images in order to diversify the samples and improve recognition in practical use.

- Network learning rate (learning_rate = 0.001). This parameter determines the speed of the weight adjustment. A larger value requires fewer iterations, but divergence may occur. A smaller value slows convergence and makes it easier for training to settle in a local optimum.

- The maximum number of iterations was 10,000 (max_batches = 10,000).

- The available learning rate policies are Fixed, Step, Exp, Inv, Multistep, Poly, and Sigmoid. We used Step with the YOLOv3 algorithm.

- The number of filters in the convolutional layers feeding the detection layers was 18 (filters = 18), which equals (classes + 5) × 3 for a single class.

- The number of object categories was 1 (classes = 1). The mushroom is the only object class in this study.
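The arithmetic behind several of these settings can be checked with a short sketch. The dictionary below restates the values listed above; the helper names, and the step positions and scale factors used to illustrate the Step policy, are assumptions for illustration, since the paper does not state them here.

```python
# Training settings restated from the list above.
cfg = {
    "batch": 64,
    "subdivisions": 16,
    "width": 416,
    "height": 416,
    "channels": 3,
    "momentum": 0.9,
    "decay": 0.0005,
    "learning_rate": 0.001,
    "max_batches": 10000,
    "classes": 1,
}


def mini_batch_size(cfg):
    """Images sent to the GPU at once: the batch split into subdivisions."""
    return cfg["batch"] // cfg["subdivisions"]


def detection_filters(classes, anchors_per_scale=3):
    """Filters needed before a YOLOv3 detection layer.

    Each anchor predicts 4 box coordinates, 1 objectness score,
    and one score per class.
    """
    return (classes + 5) * anchors_per_scale


def step_learning_rate(iteration, base_lr, steps, scales):
    """Step policy: multiply the rate by each scale once its step is reached."""
    lr = base_lr
    for step, scale in zip(steps, scales):
        if iteration >= step:
            lr *= scale
    return lr


# Hypothetical schedule: the step positions and scales are NOT from the paper.
example_steps = (8000, 9000)
example_scales = (0.1, 0.1)

if __name__ == "__main__":
    print("images per GPU pass:", mini_batch_size(cfg))
    print("filters:", detection_filters(cfg["classes"]))
    for it in (0, 8000, 9500):
        lr = step_learning_rate(it, cfg["learning_rate"], example_steps, example_scales)
        print("iteration", it, "learning rate", lr)
```

With batch = 64 and subdivisions = 16, four images are processed per GPU pass, and a single class gives (1 + 5) × 3 = 18 filters, which matches the value listed above.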

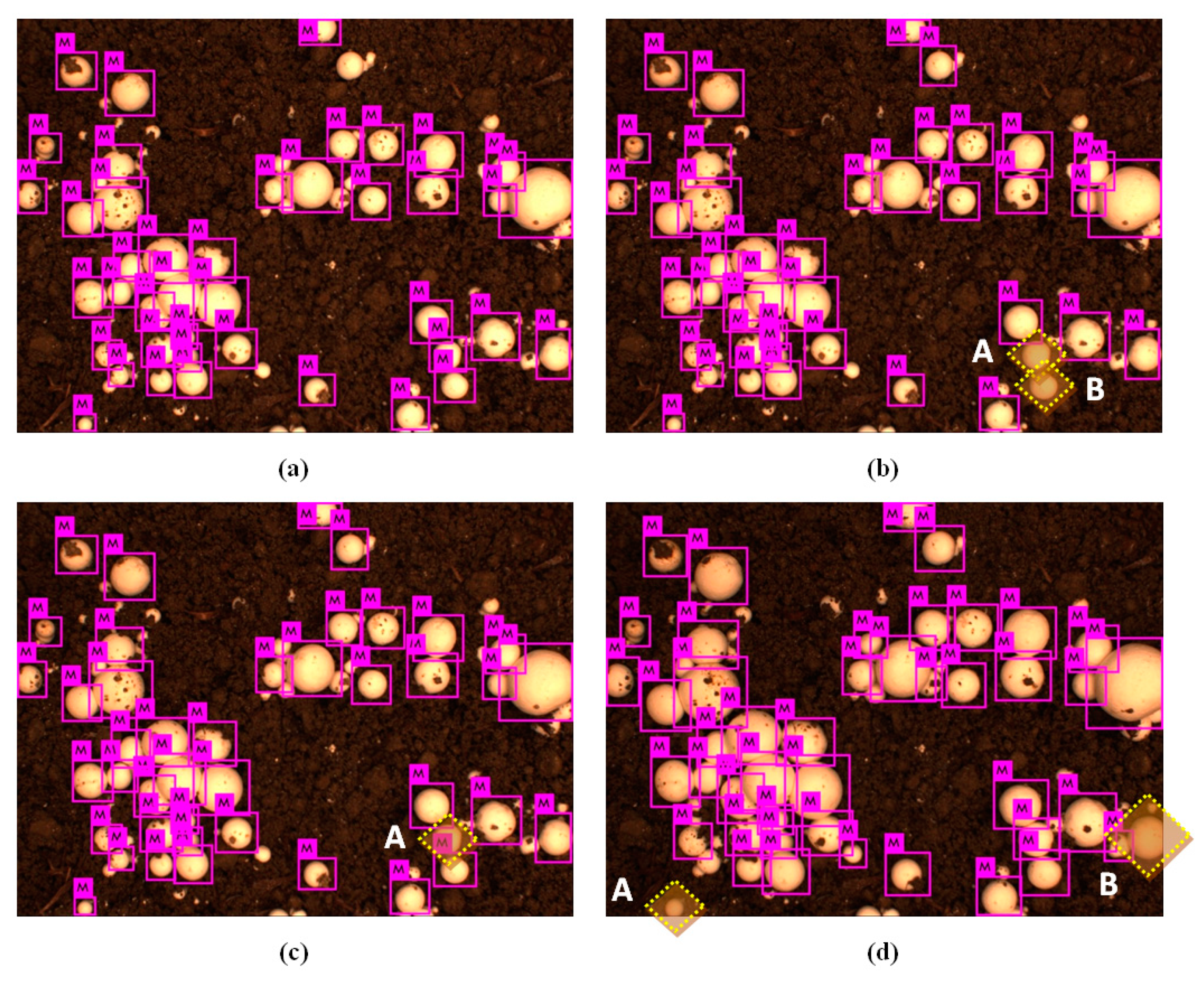

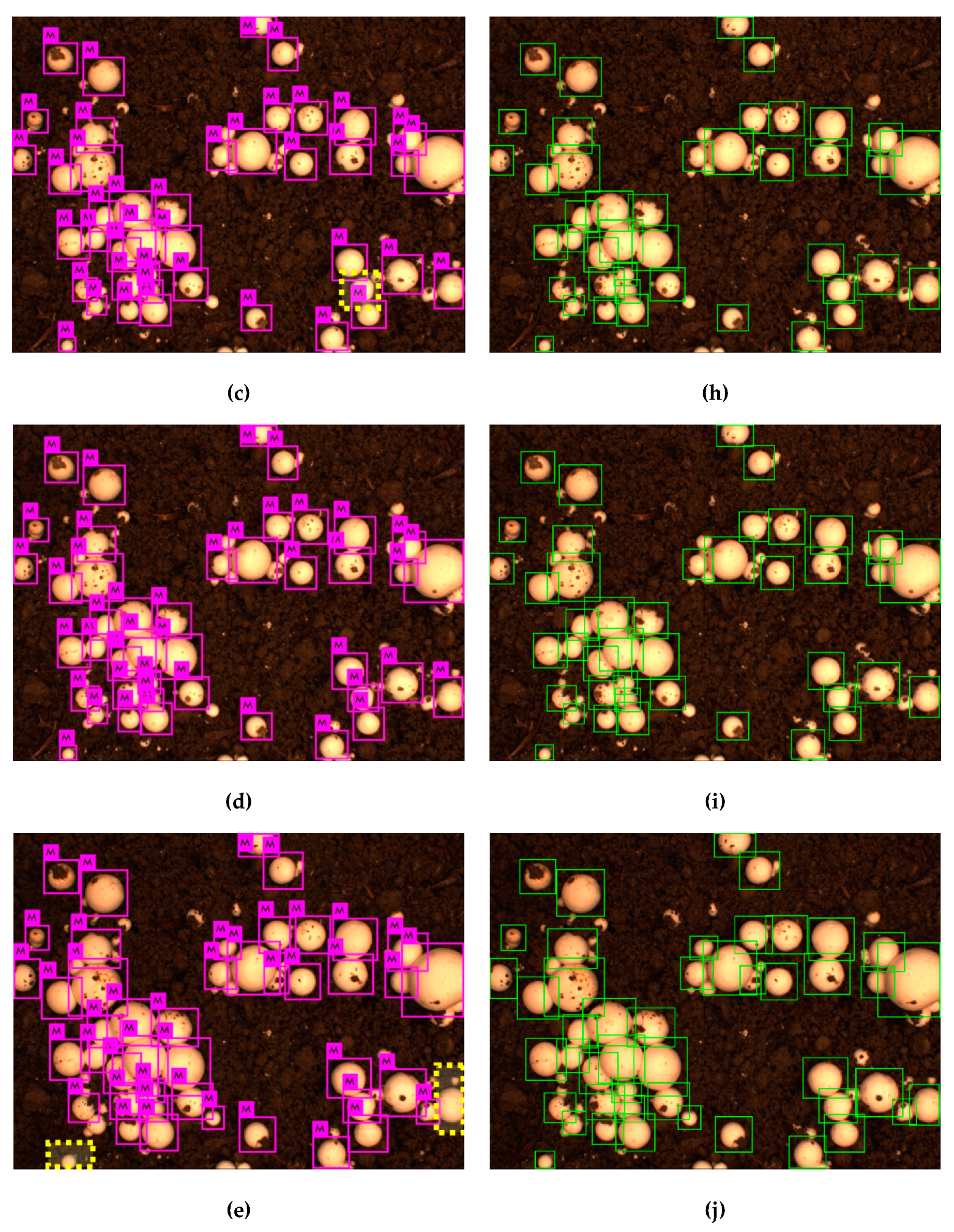

3.2. The Experiment of Mushroom Positioning Correction

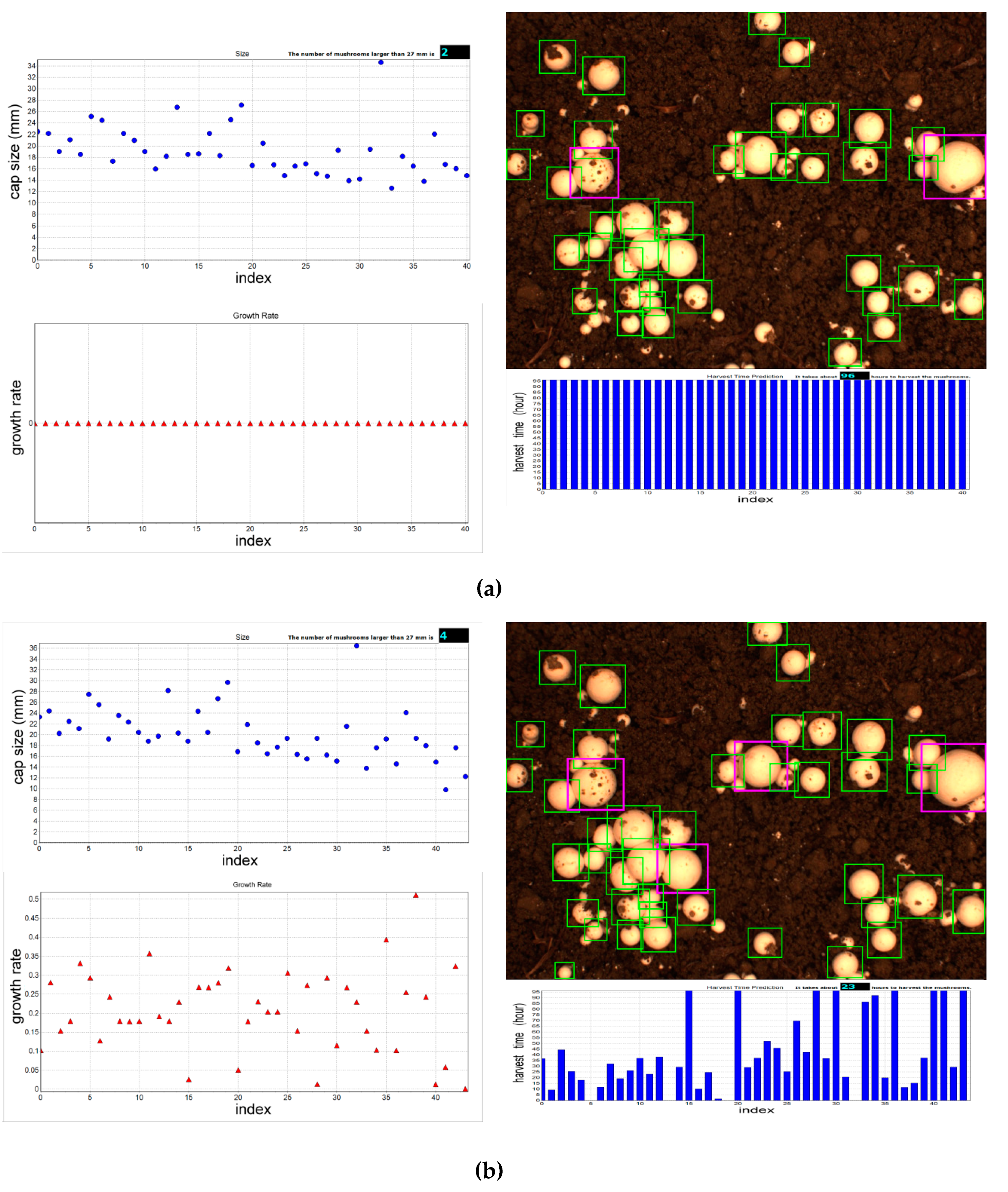

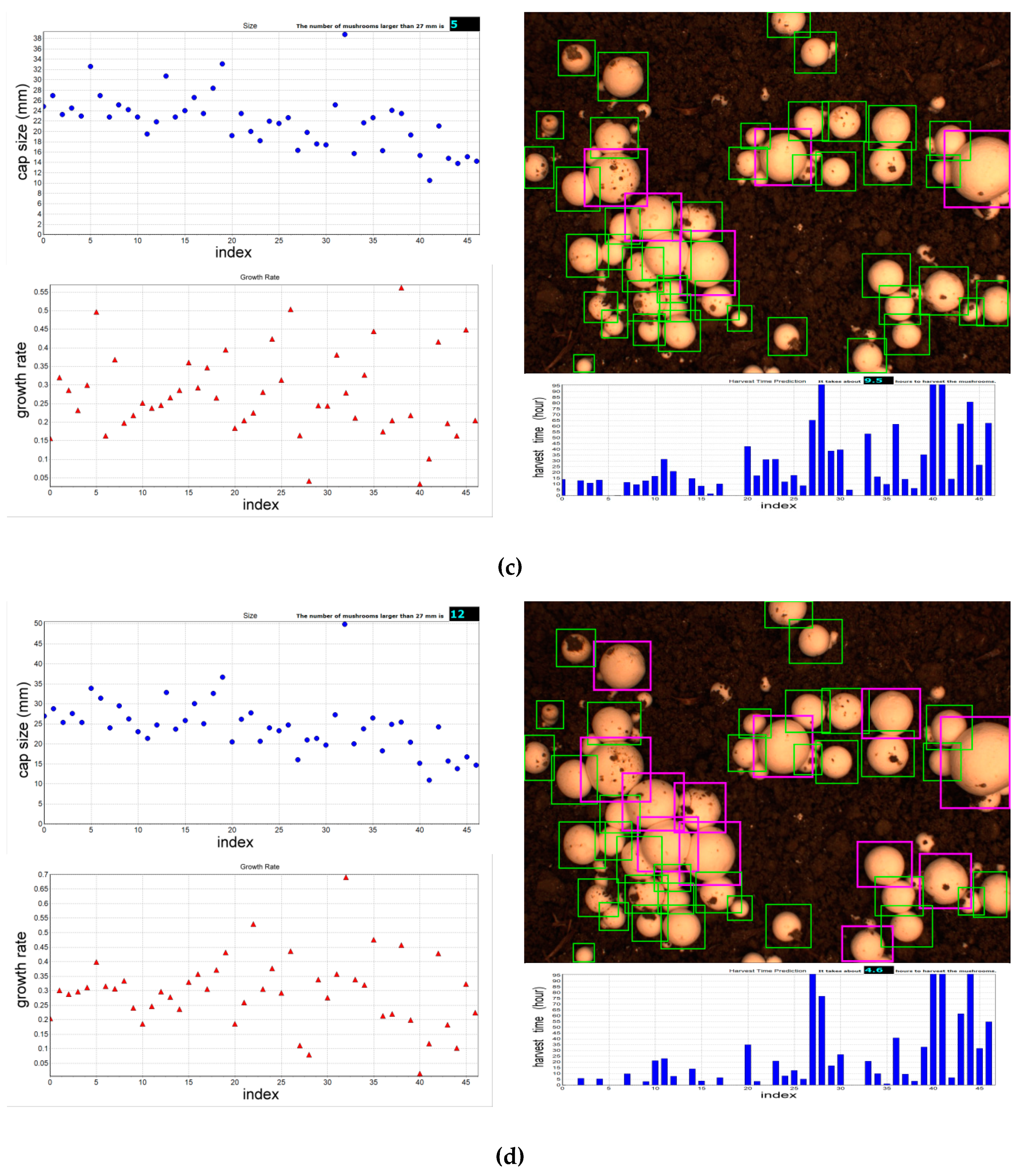

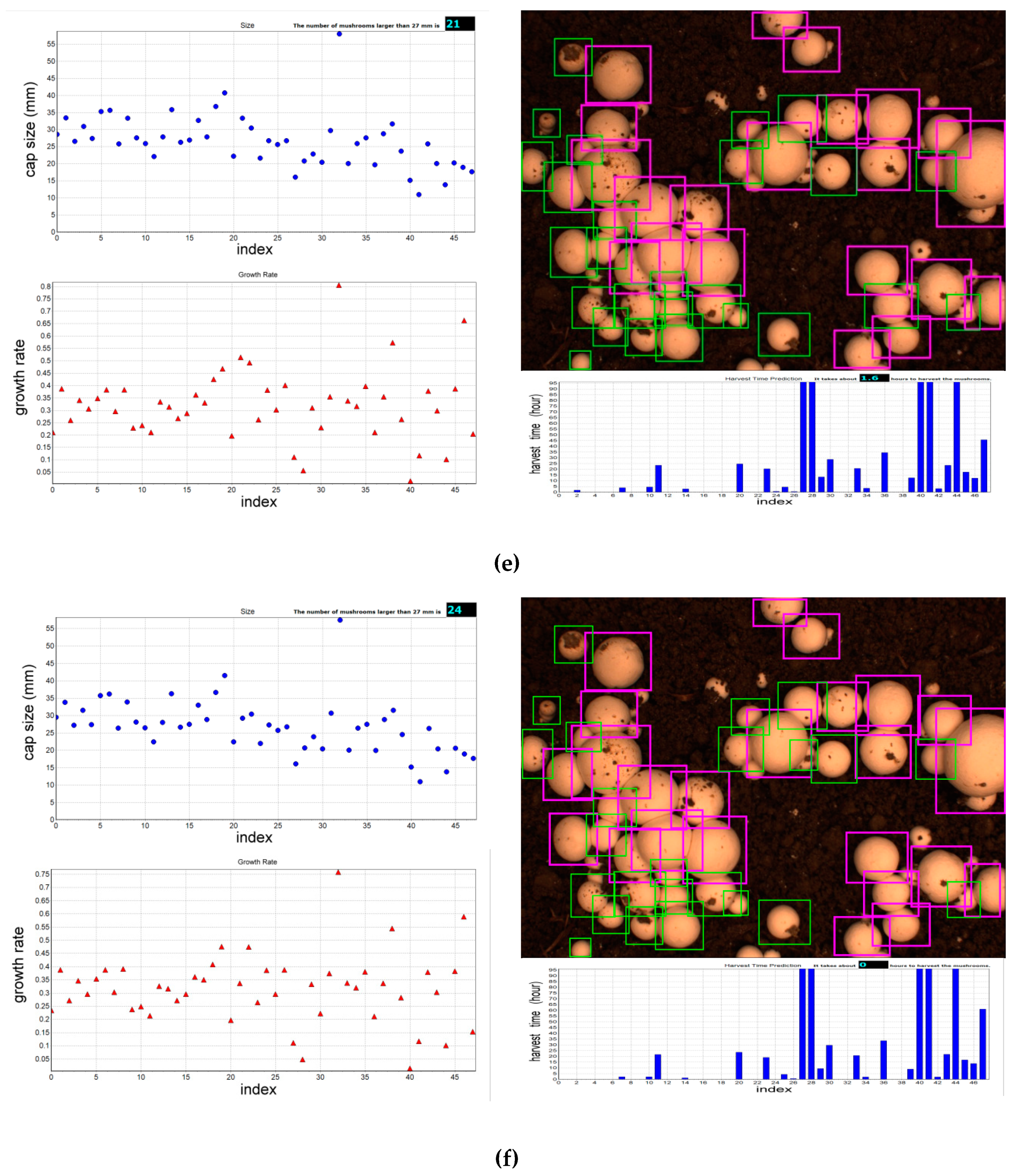

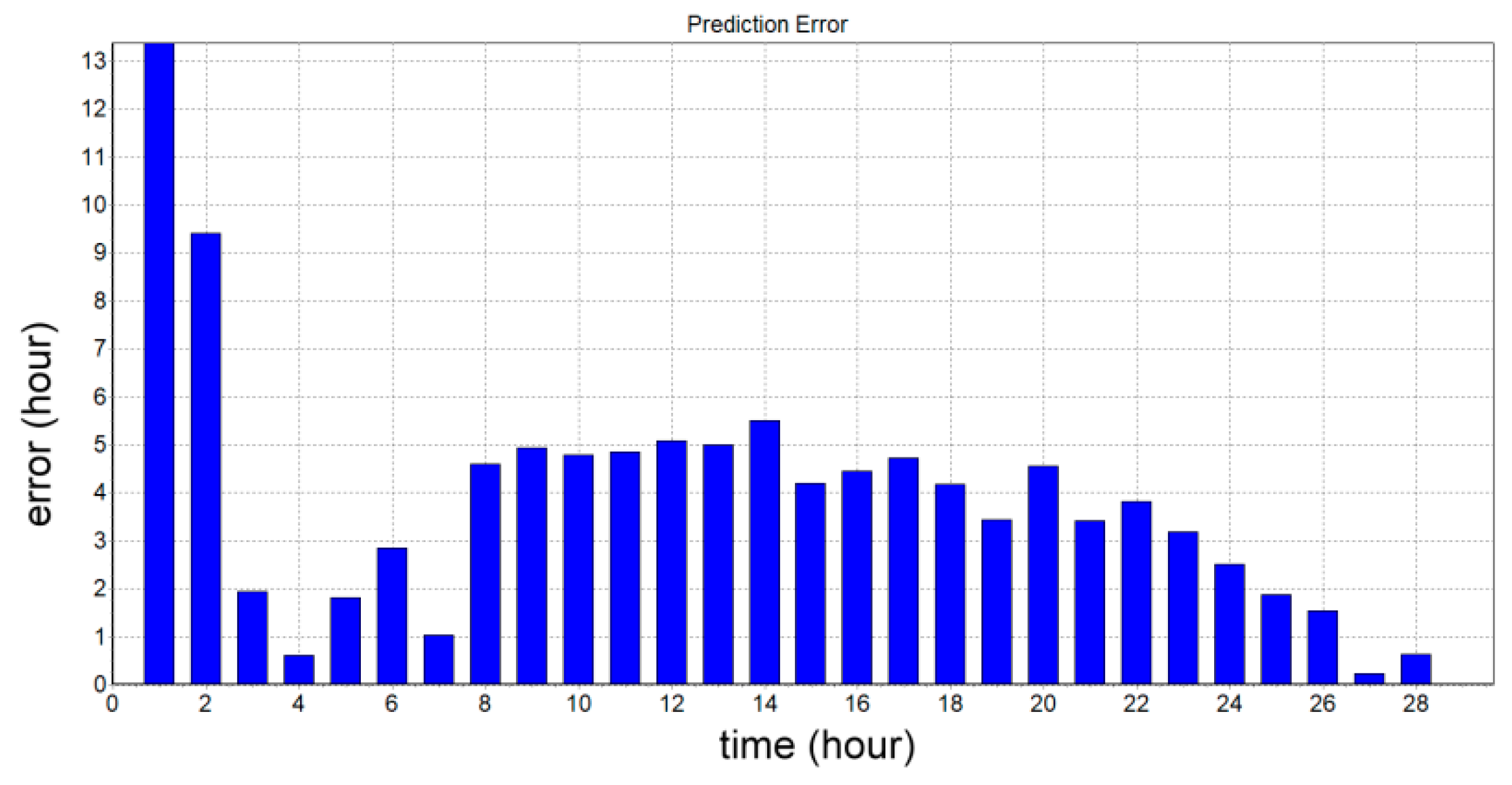

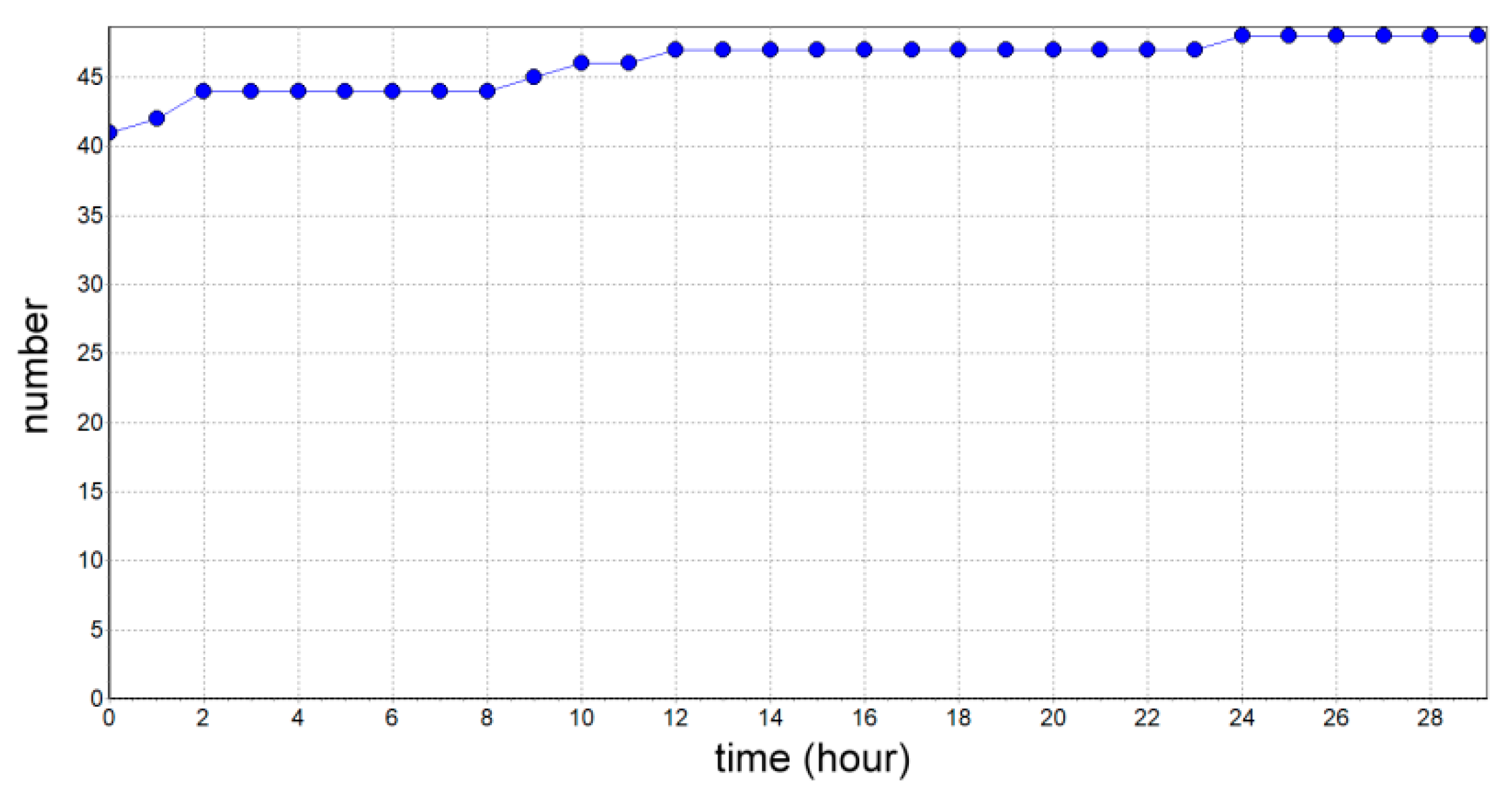

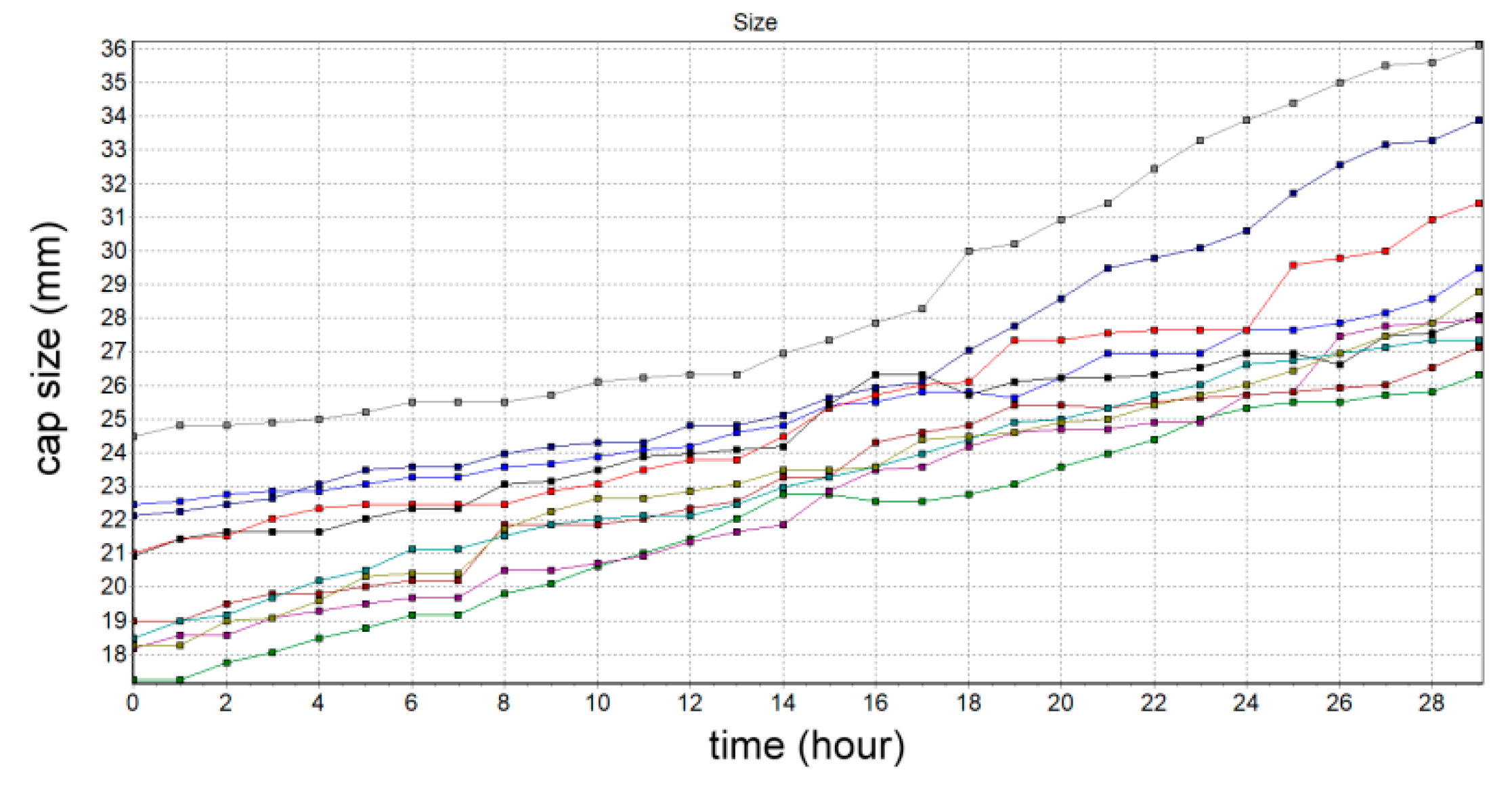

3.3. The Experiments of Cap Size, Growth Rate, Amount, and Harvest Time Estimation

4. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lu, C.-P.; Liaw, J.-J.; Wu, T.-C.; Hung, T.-F. Development of a Mushroom Growth Measurement System Applying Deep Learning for Image Recognition. Agronomy 2019, 9, 32. https://doi.org/10.3390/agronomy9010032
