A Systematic Review of 59 Field Robots for Agricultural Tasks: Applications, Trends, and Future Directions

Abstract

1. Introduction

2. Methodology

2.1. Scopus

2.2. Web of Science

3. Agricultural Field Robots: General Overview

3.1. Definition and History

3.2. Global Market

3.3. Current Limitations

4. Field Robots: Main Tasks and Description

4.1. Multi-Purpose Robots

4.2. Harvesting Robots

4.3. Mechanical Weeding Robots

4.4. Pest Control and Chemical Weeding Robots

4.5. Transplanting Robots

4.6. Tillage-Sowing Robots

4.7. Scouting Monitoring Robots

5. Trends and Discussion

5.1. Country

5.2. Sensors: Navigation and Safety

5.3. Engine

5.4. Presence on the Market

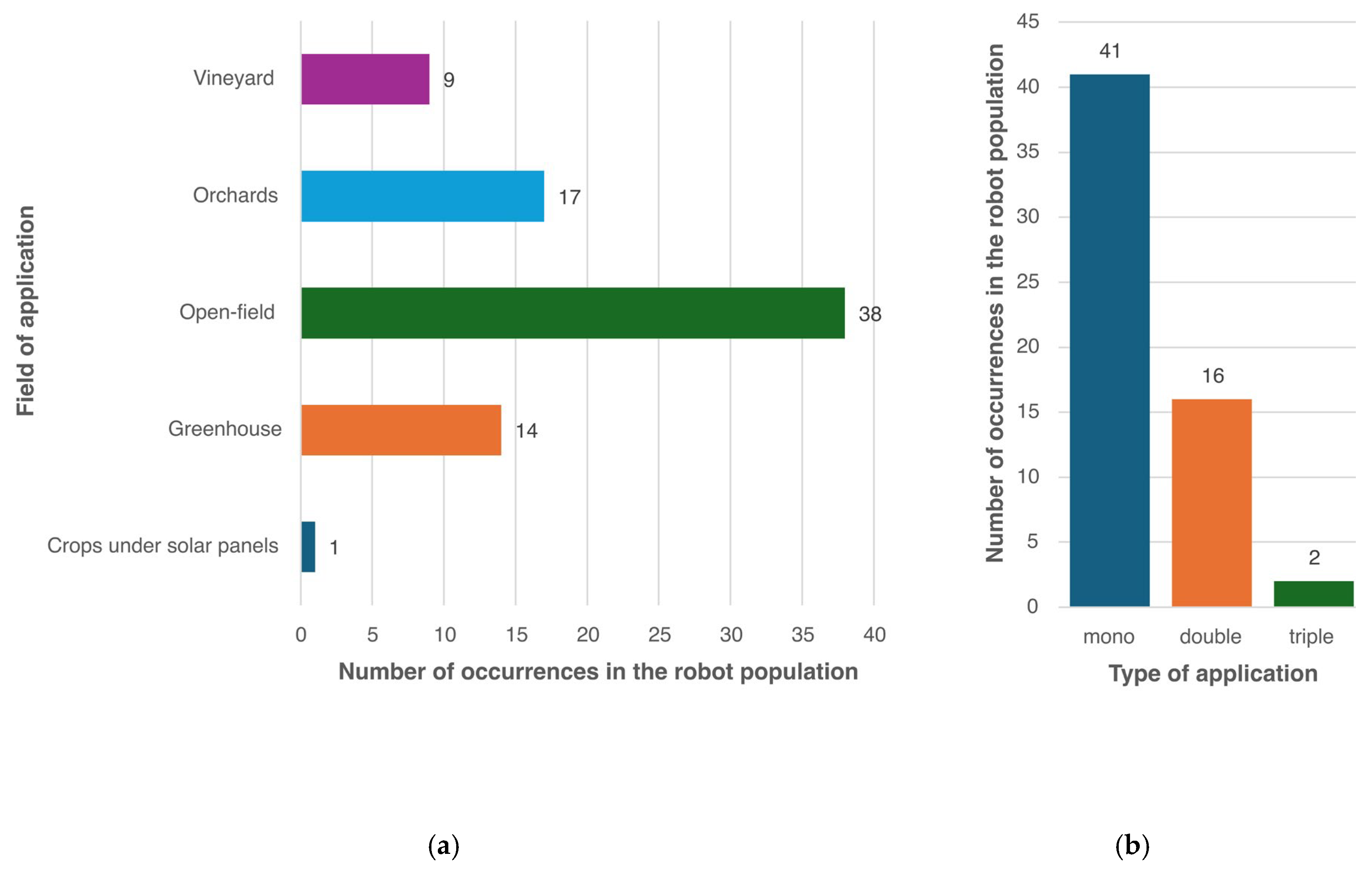

5.5. Application Field

5.6. Main Task

5.7. Traction Systems

5.8. Future Perspectives

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

- Kennedy, J.; Hurtt, G.C.; Liang, X.-Z.; Chini, L.; Ma, L. Changing Cropland in Changing Climates: Quantifying Two Decades of Global Cropland Changes. Environ. Res. Lett. 2023, 18, 064010.

- Crippa, M.; Solazzo, E.; Guizzardi, D.; Monforti-Ferrario, F.; Tubiello, F.N.; Leip, A. Food Systems Are Responsible for a Third of Global Anthropogenic GHG Emissions. Nat. Food 2021, 2, 198–209.

- Raj, R.; Kumar, S.; Lal, S.P.; Singh, H.; Pradhan, J.; Bhardwaj, Y. A Brief Overview of Technologies in Automated Agriculture: Shaping the Farms of Tomorrow. Int. J. Environ. Clim. Change 2024, 14, 181–209.

- Duckett, T.; Pearson, S.; Blackmore, S.; Grieve, B. Agricultural Robotics: The Future of Robotic Agriculture; UKRAS White Papers; EPSRC UK-RAS Network: London, UK, 2018.

- Hamilton, S.F.; Richards, T.J.; Shafran, A.P.; Vasilaky, K.N. Farm Labor Productivity and the Impact of Mechanization. Am. J. Agric. Econ. 2021, 104, 1435–1459.

- Abella, M. The Prosperity Paradox: Fewer and More Vulnerable Farm Workers by Philip Martin, Oxford, Oxford University Press, 2021, xix + 213 pp.; Oxford University Press: Oxford, UK, 2021; Volume 59, pp. 230–233.

- Yerebakan, M.O.; Hu, B. Human–Robot Collaboration in Modern Agriculture: A Review of the Current Research Landscape. Adv. Intell. Syst. 2024, 6, 2300823.

- Tagarakis, A.C.; Benos, L.; Aivazidou, E.; Anagnostis, A.; Kateris, D.; Bochtis, D. Wearable Sensors for Identifying Activity Signatures in Human-Robot Collaborative Agricultural Environments. In Proceedings of the 13th EFITA International Conference, Online, 19 November 2021; MDPI: Basel, Switzerland, 2021; p. 5.

- Adamides, G.; Edan, Y. Human–Robot Collaboration Systems in Agricultural Tasks: A Review and Roadmap. Comput. Electron. Agric. 2023, 204, 107541.

- Castro, H. Autonomous Field Robotics: Optimizing Efficiency and Safety Through Data; ResearchGate: Berlin, Germany, 2023.

- Li, X.; Ma, N.; Han, Y.; Yang, S.; Zheng, S. AHPPEBot: Autonomous Robot for Tomato Harvesting Based on Phenotyping and Pose Estimation. arXiv 2024, arXiv:2405.06959.

- Zhang, Z.; Kayacan, E.; Thompson, B.; Chowdhary, G. High Precision Control and Deep Learning-Based Corn Stand Counting Algorithms for Agricultural Robot. Auton. Robot. 2020, 44, 1289–1302.

- Ahmadi, A.; Halstead, M.; McCool, C. Towards Autonomous Visual Navigation in Arable Fields. arXiv 2022, arXiv:2109.11936.

- Bras, A.; Montanaro, A.; Pierre, C.; Pradel, M.; Laconte, J. Toward a Better Understanding of Robot Energy Consumption in Agroecological Applications. arXiv 2024, arXiv:2410.07697.

- Cole, J. Autonomous Robotics in Action: Performance and Safety Optimization with Data; ResearchGate: Berlin, Germany, 2023.

- Padhiary, M.; Kumar, A.; Sethi, L.N. Emerging Technologies for Smart and Sustainable Precision Agriculture. Discov. Robot. 2025, 1, 6.

- What Is a Robot?|WIRED. Available online: https://www.wired.com/story/what-is-a-robot/ (accessed on 11 March 2025).

- Lowenberg-DeBoer, J.; Huang, I.Y.; Grigoriadis, V.; Blackmore, S. Economics of Robots and Automation in Field Crop Production. Precis. Agric. 2020, 21, 278–299.

- Shaikh, T.A.; Mir, W.A.; Rasool, T.; Sofi, S. Machine Learning for Smart Agriculture and Precision Farming: Towards Making the Fields Talk. Arch. Comput. Methods Eng. 2022, 29, 4557–4597.

- Ayoub Shaikh, T.; Rasool, T.; Rasheed Lone, F. Towards Leveraging the Role of Machine Learning and Artificial Intelligence in Precision Agriculture and Smart Farming. Comput. Electron. Agric. 2022, 198, 107119.

- Saiz-Rubio, V.; Rovira-Más, F. From Smart Farming towards Agriculture 5.0: A Review on Crop Data Management. Agronomy 2020, 10, 207.

- Xie, D.; Chen, L.; Liu, L.; Chen, L.; Wang, H. Actuators and Sensors for Application in Agricultural Robots: A Review. Machines 2022, 10, 913.

- Vougioukas, S.G. Agricultural Robotics. Annu. Rev. Control Robot. Auton. Syst. 2019, 2, 365–392.

- Upadhyay, A.; Zhang, Y.; Koparan, C.; Rai, N.; Howatt, K.; Bajwa, S.; Sun, X. Advances in Ground Robotic Technologies for Site-Specific Weed Management in Precision Agriculture: A Review. Comput. Electron. Agric. 2024, 225, 109363.

- Liu, L.; Yang, F.; Liu, X.; Du, Y.; Li, X.; Li, G.; Chen, D.; Zhu, Z.; Song, Z. A Review of the Current Status and Common Key Technologies for Agricultural Field Robots. Comput. Electron. Agric. 2024, 227, 109630.

- Gonzalez-de-Soto, M.; Emmi, L.; Benavides, C.; Garcia, I.; Gonzalez-de-Santos, P. Reducing Air Pollution with Hybrid-Powered Robotic Tractors for Precision Agriculture. Biosyst. Eng. 2016, 143, 79–94.

- Wilson, J.N. Guidance of Agricultural Vehicles—A Historical Perspective. Comput. Electron. Agric. 2000, 25, 3–9.

- Lochan, K.; Khan, A.; Elsayed, I.; Suthar, B.; Seneviratne, L.; Hussain, I. Advancements in Precision Spraying of Agricultural Robots: A Comprehensive Review. IEEE Access 2024, 12, 129447–129483.

- MarketsandMarkets. Available online: https://www.marketsandmarkets.com/ (accessed on 11 July 2025).

- Grand View Research. Available online: https://www.researchandmarkets.com/ (accessed on 11 July 2025).

- Spherical Insights. Available online: https://www.sphericalinsights.com/ (accessed on 11 July 2025).

- Ritchie, H. Food Production Is Responsible for One-Quarter of the World’s Greenhouse Gas Emissions—Our World in Data. Available online: https://ourworldindata.org/food-ghg-emissions (accessed on 5 December 2024).

- 2022 Census of Agriculture|USDA/NASS. Available online: https://www.nass.usda.gov/Publications/AgCensus/2022/ (accessed on 11 July 2025).

- O’Meara, P. The Ageing Farming Workforce and the Health and Sustainability of Agricultural Communities: A Narrative Review. Aust. J. Rural Health 2019, 27, 281–289.

- Adetunji, C.O.; Hefft, D.I.; Olugbemi, O.T. Agribots: A Gateway to the next Revolution in Agriculture. In AI, Edge and IoT-Based Smart Agriculture; Elsevier: Amsterdam, The Netherlands, 2022; pp. 301–311. ISBN 978-0-12-823694-9.

- Sivasangari, A.; Teja, A.K.; Gokulnath, S.; Ajitha, P.; Gomathi, R.M. Vignesh Revolutionizing Agriculture: Developing Autonomous Robots for Precise Farming. In Proceedings of the 2023 International Conference on Inventive Computation Technologies (ICICT), Lalitpur, Nepal, 26 April 2023; IEEE: New York City, NY, USA, 2023; pp. 1461–1468.

- Shahrooz, M.; Talaeizadeh, A.; Alasty, A. Agricultural Spraying Drones: Advantages and Disadvantages. In Proceedings of the 2020 Virtual Symposium in Plant Omics Sciences (OMICAS), Bogotá, Colombia, 23 November 2020; IEEE: New York City, NY, USA, 2020; pp. 1–5.

- Adekola Adebayo, R.; Constance Obiuto, N.; Clinton Festus-Ikhuoria, I.; Kayode Olajiga, O. Robotics in Manufacturing: A Review of Advances in Automation and Workforce Implications. Int. J. Adv. Multidiscip. Res. Stud. 2024, 4, 632–638.

- Otani, T.; Itoh, A.; Mizukami, H.; Murakami, M.; Yoshida, S.; Terae, K.; Tanaka, T.; Masaya, K.; Aotake, S.; Funabashi, M.; et al. Agricultural Robot under Solar Panels for Sowing, Pruning, and Harvesting in a Synecoculture Environment. Agriculture 2022, 13, 18.

- Vitibot Bakus L. Available online: https://vitibot.fr/ (accessed on 26 February 2025).

- Valero, C. Robótica en viñedo la ciencia ficción se hace realidad. VIDA MAQ 2022, 525, 50–54.

- AgXeed 5.115T2. Available online: https://www.agxeed.com/ (accessed on 21 February 2025).

- Mula. Available online: https://mula.ai/it/ (accessed on 26 February 2025).

- Black Shire RC3075. Available online: https://black-shire.com/ (accessed on 21 February 2025).

- Pellenc RX-20. Available online: https://www.pellenc.com/fr-fr/ (accessed on 26 February 2025).

- Pek SlopeHelper. Available online: https://slopehelper.com/ (accessed on 26 February 2025).

- AutoAgri IC20. Available online: https://autoagri.no/ (accessed on 21 February 2025).

- Naïo Technologies Orio. Available online: www.naio-technologies.com/en/orio-robot/ (accessed on 26 February 2025).

- Naïo Technologies Oz. Available online: www.naio-technologies.com/en/oz-robot/ (accessed on 26 February 2025).

- Naïo Technologies Ted. Available online: www.naio-technologies.com/en/ted-robot/ (accessed on 26 February 2025).

- Naïo Technologies Jo. Available online: www.naio-technologies.com/en/jo-robot/ (accessed on 26 February 2025).

- Free Green Nature Leonardo. Available online: https://www.freegreen-nature.it/ (accessed on 26 February 2025).

- Agrointelli Robotti 150D. Available online: https://agrointelli.com/robotti/ (accessed on 26 February 2025).

- Calleja-Huerta, A.; Lamandé, M.; Green, O.; Munkholm, L.J. Impacts of Load and Repeated Wheeling from a Lightweight Autonomous Field Robot on the Physical Properties of a Loamy Sand Soil. Soil Tillage Res. 2023, 233, 105791.

- Amos A3. Available online: https://www.amospower.com/ (accessed on 21 February 2025).

- EarthSense TerraMax. Available online: https://www.earthsense.co/terramax (accessed on 26 February 2025).

- XAG R150. Available online: https://www.xa.com/en/r150-2022 (accessed on 26 February 2025).

- Goncharov, D.V.; Ivashchuk, O.A.; Kaliuzhnaya, E.V. Development of a Mobile Robotic Complex for Automated Monitoring and Harvesting of Agricultural Crops. In Proceedings of the 2024 International Russian Automation Conference (RusAutoCon), Sochi, Russia, 8 September 2024; IEEE: New York City, NY, USA, 2024; pp. 338–343.

- Liu, L.; Yang, Q.; He, W.; Yang, X.; Zhou, Q.; Addy, M.M. Design and Experiment of Nighttime Greenhouse Tomato Harvesting Robot. J. Eng. Technol. Sci. 2024, 56, 340–352.

- Birrell, S.; Hughes, J.; Cai, J.Y.; Iida, F. A Field-tested Robotic Harvesting System for Iceberg Lettuce. J. Field Robot. 2020, 37, 225–245.

- Tituaña, L.; Gholami, A.; He, Z.; Xu, Y.; Karkee, M.; Ehsani, R. A Small Autonomous Field Robot for Strawberry Harvesting. Smart Agric. Technol. 2024, 8, 100454.

- Agrobot E-Series. Available online: https://www.agrobot.com/e-series (accessed on 11 February 2025).

- Ant Robotics ValeraFlex. Available online: https://www.antrobotics.de/wp-content/uploads/2023/11/Datenblatt_Valera-8_DE.pdf/ (accessed on 11 February 2025).

- AVL Motion S9000. Available online: https://www.avlmotion.com/nl/ (accessed on 11 February 2025).

- Zhong, M.; Han, R.; Liu, Y.; Huang, B.; Chai, X.; Liu, Y. Development, Integration, and Field Evaluation of an Autonomous Agaricus Bisporus Picking Robot. Comput. Electron. Agric. 2024, 220, 108871.

- Miao, Z.; Yu, X.; Li, N.; Zhang, Z.; He, C.; Li, Z.; Deng, C.; Sun, T. Efficient Tomato Harvesting Robot Based on Image Processing and Deep Learning. Precis. Agric. 2023, 24, 254–287.

- Hua, W.; Zhang, W.; Zhang, Z.; Liu, X.; Saha, C.; Hu, C.; Wang, X. Design, Assembly and Test of a Low-Cost Vacuum Based Apple Harvesting Robot. Smart Agric. 2024, 10, 27–48.

- Sportelli, M.; Frasconi, C.; Fontanelli, M.; Pirchio, M.; Raffaelli, M.; Magni, S.; Caturegli, L.; Volterrani, M.; Mainardi, M.; Peruzzi, A. Autonomous Mowing and Complete Floor Cover for Weed Control in Vineyards. Agronomy 2021, 11, 538.

- Zhao, P.; Chen, J.; Li, J.; Ning, J.; Chang, Y.; Yang, S. Design and Testing of an Autonomous Laser Weeding Robot for Strawberry Fields Based on DIN-LW-YOLO. Comput. Electron. Agric. 2025, 229, 109808.

- Zheng, S.; Zhao, X.; Fu, H.; Tan, H.; Zhai, C.; Chen, L. Design and Experimental Evaluation of a Smart Intra-Row Weed Control System for Open-Field Cabbage. Agronomy 2025, 15, 112.

- Gallou, J.; Lippi, M.; Galle, M.; Marino, A.; Gasparri, A. Modeling and Control of the Vitirover Robot for Weed Management in Precision Agriculture. In Proceedings of the 2024 10th International Conference on Control, Decision and Information Technologies (CoDIT), Valletta, Malta, 1 July 2024; IEEE: New York City, NY, USA, 2024; Volume 5, pp. 2670–2675.

- Moondino. Available online: https://www.moondino.it/ (accessed on 20 January 2025).

- Aigro Up. Available online: https://www.aigro.nl/ (accessed on 20 January 2025).

- Aigen Element. Available online: https://www.aigen.io/ (accessed on 20 January 2025).

- Earth Rover Claws. Available online: https://www.earthrover.farm/ (accessed on 20 January 2025).

- Gonzalez-de-Santos, P.; Ribeiro, A.; Fernandez-Quintanilla, C.; Lopez-Granados, F.; Brandstoetter, M.; Tomic, S.; Pedrazzi, S.; Peruzzi, A.; Pajares, G.; Kaplanis, G.; et al. Fleets of Robots for Environmentally-Safe Pest Control in Agriculture. Precis. Agric. 2017, 18, 574–614.

- Cantelli, L.; Bonaccorso, F.; Longo, D.; Melita, C.D.; Schillaci, G.; Muscato, G. A Small Versatile Electrical Robot for Autonomous Spraying in Agriculture. AgriEngineering 2019, 1, 391–402.

- Fan, X.; Chai, X.; Zhou, J.; Sun, T. Deep Learning Based Weed Detection and Target Spraying Robot System at Seedling Stage of Cotton Field. Comput. Electron. Agric. 2023, 214, 108317.

- Padhiary, M.; Tikute, S.V.; Saha, D.; Barbhuiya, J.A.; Sethi, L.N. Development of an IOT-Based Semi-Autonomous Vehicle Sprayer. Agric. Res. 2025, 14, 229–239.

- Loukatos, D.; Templalexis, C.; Lentzou, D.; Xanthopoulos, G.; Arvanitis, K.G. Enhancing a Flexible Robotic Spraying Platform for Distant Plant Inspection via High-Quality Thermal Imagery Data. Comput. Electron. Agric. 2021, 190, 106462.

- Mohanty, T.; Pattanaik, P.; Dash, S.; Tripathy, H.P.; Holderbaum, W. Smart Robotic System Guided with YOLOv5 Based Machine Learning Framework for Efficient Herbicide Usage in Rice (Oryza Sativa L.) under Precision Agriculture. Comput. Electron. Agric. 2025, 231, 110032.

- Liu, H.; Du, Z.; Shen, Y.; Du, W.; Zhang, X. Development and Evaluation of an Intelligent Multivariable Spraying Robot for Orchards and Nurseries. Comput. Electron. Agric. 2024, 222, 109056.

- Kilter AX-1. Available online: https://www.kiltersystems.com/ (accessed on 12 March 2025).

- Gerhards, R.; Andújar Sanchez, D.; Hamouz, P.; Peteinatos, G.G.; Christensen, S.; Fernandez-Quintanilla, C. Advances in Site-specific Weed Management in Agriculture—A Review. Weed Res. 2022, 62, 123–133.

- Yanmar YV01. Available online: https://www.yanmar.com/eu/campaign/2021/10/vineyard/ (accessed on 12 March 2025).

- Agrobot Bug Vacuum. Available online: https://www.agrobot.com/bugvac (accessed on 12 March 2025).

- Ant Robotics Adir Tunnel Sprayer. Available online: https://www.antrobotics.de/wp-content/uploads/2025/08/Datenblatt_Adir-Power_DE-1.pdf (accessed on 12 March 2025).

- AgBot II. Available online: https://research.qut.edu.au/qcr/Projects/agbot-ii-robotic-site-specific-crop-and-weed-management-tool/ (accessed on 12 March 2025).

- Hejazipoor, H.; Massah, J.; Soryani, M.; Asefpour Vakilian, K.; Chegini, G. An Intelligent Spraying Robot Based on Plant Bulk Volume. Comput. Electron. Agric. 2021, 180, 105859.

- Ecorobotix AVO. Available online: https://ecorobotix.com/ (accessed on 12 March 2025).

- Zhang, W.; Miao, Z.; Li, N.; He, C.; Sun, T. Review of Current Robotic Approaches for Precision Weed Management. Curr. Robot. Rep. 2022, 3, 139–151.

- Liu, Z.; Wang, X.; Zheng, W.; Lv, Z.; Zhang, W. Design of a Sweet Potato Transplanter Based on a Robot Arm. Appl. Sci. 2021, 11, 9349.

- Li, M.; Zhu, X.; Ji, J.; Jin, X.; Li, B.; Chen, K.; Zhang, W. Visual Perception Enabled Agriculture Intelligence: A Selective Seedling Picking Transplanting Robot. Comput. Electron. Agric. 2025, 229, 109821.

- FarmDroid FD20. Available online: https://farmdroid.com/ (accessed on 11 May 2025).

- Gerhards, R.; Risser, P.; Spaeth, M.; Saile, M.; Peteinatos, G. A Comparison of Seven Innovative Robotic Weeding Systems and Reference Herbicide Strategies in Sugar Beet (Beta vulgaris subsp. vulgaris L.) and Rapeseed (Brassica napus L.). Weed Res. 2024, 64, 42–53.

- EarthSense Terra Petra. Available online: https://www.earthsense.co/icover (accessed on 11 May 2025).

- Amrita, S.A.; Abirami, E.; Ankita, A.; Praveena, R.; Srimeena, R. Agricultural Robot for Automatic Ploughing and Seeding. In Proceedings of the 2015 IEEE Technological Innovation in ICT for Agriculture and Rural Development (TIAR), Chennai, India, 10–12 July 2015; IEEE: New York City, NY, USA, 2015; pp. 17–23.

- Shanmugasundar, G.; Kumar, G.M.; Gouthem, S.E.; Prakash, V.S. Design and Development of Solar Powered Autonomous Seed Sowing Robot. J. Pharm. Negat. Results 2022, 13, 1013–1016.

- Zhang, Z.; He, W.; Wu, F.; Quesada, L.; Xiang, L. Development of a Bionic Hexapod Robot with Adaptive Gait and Clearance for Enhanced Agricultural Field Scouting. Front. Robot. AI 2024, 11, 1426269.

- Antobot Insight. Available online: https://www.antobot.ai/ (accessed on 21 March 2025).

- EarthSense Terra Sentia+. Available online: https://www.earthsense.co/terrasentia (accessed on 21 March 2025).

- Schmitz, A.; Badgujar, C.; Mansur, H.; Flippo, D.; McCornack, B.; Sharda, A. Design of a Reconfigurable Crop Scouting Vehicle for Row Crop Navigation: A Proof-of-Concept Study. Sensors 2022, 22, 6203.

- China Releases Smart Agriculture Action Plan. Available online: https://www.dcz-china.org/2024/10/31/china-releases-smart-agriculture-action-plan/?utm_source=chatgpt.com (accessed on 15 April 2025).

- Agriculture’s Technology Future: How Connectivity Can Yield New Growth|McKinsey. Available online: https://www.mckinsey.com/industries/agriculture/our-insights/agricultures-connected-future-how-technology-can-yield-new-growth?utm_source=chatgpt.com (accessed on 16 July 2025).

- Climate-Smart Agriculture and Forestry Resources. Available online: https://www.farmers.gov/conservation/climate-smart?utm_source=chatgpt.com (accessed on 16 July 2025).

- Farm Labor|Economic Research Service. Available online: https://www.ers.usda.gov/topics/farm-economy/farm-labor (accessed on 16 July 2025).

- France 2030. Available online: https://www.info.gouv.fr/actualite/la-french-agritech-au-service-de-l-innovation-agricole?utm_source=chatgpt.com (accessed on 17 July 2025).

- Gorjian, S.; Ebadi, H.; Trommsdorff, M.; Sharon, H.; Demant, M.; Schindele, S. The Advent of Modern Solar-Powered Electric Agricultural Machinery: A Solution for Sustainable Farm Operations. J. Clean. Prod. 2021, 292, 126030.

- Liang, Z.; He, J.; Hu, C.; Pu, X.; Khani, H.; Dai, L.; Fan, D.; Manthiram, A.; Wang, Z.-L. Next-Generation Energy Harvesting and Storage Technologies for Robots Across All Scales. Adv. Intell. Syst. 2023, 5, 2200045.

- Ummadi, V.; Gundlapalle, A.; Shaik, A.; B, S.M.R. Autonomous Agriculture Robot for Smart Farming. arXiv 2023, arXiv:2208.01708.

- Quaglia, G.; Visconte, C.; Scimmi, L.S.; Melchiorre, M.; Cavallone, P.; Pastorelli, S. Design of a UGV Powered by Solar Energy for Precision Agriculture. Robotics 2020, 9, 13.

- Chand, A.A.; Prasad, K.A.; Mar, E.; Dakai, S.; Mamun, K.A.; Islam, F.R.; Mehta, U.; Kumar, N.M. Design and Analysis of Photovoltaic Powered Battery-Operated Computer Vision-Based Multi-Purpose Smart Farming Robot. Agronomy 2021, 11, 530.

- Bwambale, E.; Wanyama, J.; Adongo, T.A.; Umukiza, E.; Ntole, R.; Chikavumbwa, S.R.; Sibale, D.; Jeremaih, Z. A Review of Model Predictive Control in Precision Agriculture. Smart Agric. Technol. 2025, 10, 100716.

- Young, S.N. Editorial: Intelligent Robots for Agriculture—Ag-Robot Development, Navigation, and Information Perception. Front. Robot. AI 2025, 12, 1597912.

- Kim, J.; Kim, G.; Yoshitoshi, R.; Tokuda, K. Real-Time Object Detection for Edge Computing-Based Agricultural Automation: A Case Study Comparing the YOLOX and YOLOv12 Architectures and Their Performance in Potato Harvesting Systems. Sensors 2025, 25, 4586.

- Li, X.; Zhu, L.; Chu, X.; Fu, H. Edge Computing-Enabled Wireless Sensor Networks for Multiple Data Collection Tasks in Smart Agriculture. J. Sens. 2020, 2020, 4398061.

- Wang, L. Digital Twins in Agriculture: A Review of Recent Progress and Open Issues. Electronics 2024, 13, 2209.

- Goldenits, G.; Mallinger, K.; Raubitzek, S.; Neubauer, T. Current Applications and Potential Future Directions of Reinforcement Learning-Based Digital Twins in Agriculture. Smart Agric. Technol. 2024, 8, 100512.

- Liu, J.; Shu, L.; Lu, X.; Liu, Y. Survey of Intelligent Agricultural IoT Based on 5G. Electronics 2023, 12, 2336.

- Gutiérrez Cejudo, J.; Enguix Andrés, F.; Lujak, M.; Carrascosa Casamayor, C.; Fernandez, A.; Hernández López, L. Towards Agrirobot Digital Twins: Agri-RO5—A Multi-Agent Architecture for Dynamic Fleet Simulation. Electronics 2023, 13, 80.

- Nguyen, L.V. Swarm Intelligence-Based Multi-Robotics: A Comprehensive Review. AppliedMath 2024, 4, 1192–1210.

| Ref. | Traction System | Engine | Main Task | Application Field | Navigation System | Safety System | Presence on the Market | Country |
|---|---|---|---|---|---|---|---|---|
| [39] | 4WD | Electric | Multi-purpose | Crops under solar panels | 360° camera | - | Research | Japan |
| [40] | 4WD | Electric | Multi-purpose | Vineyards | LiDAR, RTK-GPS | Bumpers, LiDAR | Commercial | France |
| [42] | Tracks | Hybrid (diesel+electric) | Multi-purpose | Open-field crops | RTK-GPS | LiDAR, sonar, radar, bumpers | Commercial | Netherlands |
| [43] | 4WD | Electric | Multi-purpose | Orchards, open-field crops, greenhouse | RTK-GPS, 4G | - | Commercial | Spain |
| [44] | Tracks | Hybrid (diesel+electric) | Multi-purpose | Orchards, open-field crops | RTK-GPS, 4G, Wi-Fi | Bumpers, LiDAR | Commercial | Italy |
| [45] | Tracks | Hybrid (diesel+electric) | Multi-purpose | Vineyards | RTK-GPS, 4G | 360° camera, bumpers | Commercial | France |
| [46] | Tracks | Electric | Multi-purpose | Orchards, vineyards | IMU, radiolocator, AI-powered sensors | Bumpers | Commercial | Slovenia |
| [47] | 4WD | Electric | Multi-purpose | Orchards, open-field crops | RTK-GPS | LiDAR, GPS fence, 2x HD cameras | Commercial | Norway |
| [49] | 4WD | Electric | Multi-purpose | Greenhouse, open-field crops | RTK-GPS | Geo fencing, RGB | Commercial | France |
| [51] | Tracks | Electric | Multi-purpose | Vineyards, orchards | RTK-GPS | RGB, LiDAR, bumpers | Commercial | France |
| [50] | 4WD | Electric | Multi-purpose | Vineyards | RTK-GPS, LiDAR, RGB | LiDAR, RGB, geo fencing, ultrasonic sensors | Commercial | France |
| [48] | 4WD | Electric | Multi-purpose | Open-field crops | RTK-GPS | Bumpers, LiDAR, geo fencing module | Commercial | France |
| [52] | 4WD or 2WD and 2 Tracks | Hybrid (diesel+electric) | Multi-purpose | Vineyards, orchards | IMU, RTK-GPS, LTE 4G | Radar, AI-powered camera, bumpers, geo fencing | Commercial | Italy |
| [53] | 4WD | Endothermic (diesel) | Multi-purpose | Open-field crops | Dual RTK-GPS | LiDAR, bumpers, geo fencing | Commercial | Denmark |
| [55] | Tracks | Electric | Multi-purpose | Orchards, open-field crops | - | - | Commercial | USA |
| [57] | 4WD | Electric | Multi-purpose | Orchards | RTK-GPS | - | Commercial | China |
| [56] | 4WD | Electric | Multi-purpose | Orchards, open-field crops | Computer vision, machine learning | Computer vision, machine learning | Commercial | USA |
| [58] | Tracks | Electric | Harvesting | Orchards | Wireless control, RGB camera | - | Research | Russia |
| [59] | Tracks | Electric | Harvesting | Greenhouse | Stereo camera | - | Research | China |
| [60] | 4WD | Gasoline generator | Harvesting | Open-field crops | Remote control, 2x cameras | - | Research | UK |
| [61] | 4WD | Electric | Harvesting | Open-field crops | RGB-D camera | - | Research | USA |
| [62] | 3 wheels | Electric | Harvesting | Greenhouse, open-field crops | LiDAR | LiDAR, virtual perimeter | Commercial | Spain |
| [63] | 2WD | Solar-powered | Harvesting | Open-field crops | Stereo camera | Stereo camera, nearfield sensors | Commercial | Germany |
| [64] | 2WD | Hybrid (diesel+electric) | Harvesting | Open-field crops | Remote control, RGB camera, LiDAR | - | Commercial | Netherlands |
| [65] | 4WD | Electric | Harvesting | Greenhouse | Stereo camera | - | Research | China |
| [66] | 4WD | Electric | Harvesting | Greenhouse | RGB-D, LiDAR, IMU | - | Research | China |
| [67] | Tracks | Electric | Harvesting | Orchards | RGB-D | - | Research | China |
| [69] | Tracks | Electric | Mechanical weeding | Greenhouse | RGB-D | - | Research | China |
| [70] | 4WD | Solar-powered | Mechanical weeding | Open-field crops | Stereo camera | - | Research | China |
| [71] | 4WD | Solar-powered | Mechanical weeding | Orchards, vineyards | RTK-GPS, RGB, LiDAR | - | Commercial-Research | France |
| [72] | 2WD | Solar-powered | Mechanical weeding | Open-field crops (rice) | RTK-GPS | - | Commercial | Switzerland |
| [73] | 2WD | Electric | Mechanical weeding | Open-field crops, orchards | RTK-GPS | - | Commercial | Netherlands |
| [74] | 4WD | Solar-powered | Mechanical weeding | Open-field crops | RTK-GPS, AI vision | AI vision | Commercial | USA |
| [68] | 4WD | Electric | Mechanical weeding | Vineyards | Wire fence | Ultrasonic sensors, bumpers | Commercial-Research | Italy |
| [75] | 4WD | Solar-powered | Mechanical weeding | Open-field crops | RGB-D, IR, AI vision | - | Commercial | UK |
| [77] | Tracks | Electric | Pest control and chemical weeding | Orchards, greenhouse | RTK-DGPS GNSS, LiDAR, ultrasonic sensor, stereo camera | Ultrasonic sensor, laser scanner | Research | Italy |
| [78] | 4WD | Electric | Pest control and chemical weeding | Open-field crops | RGB-D | - | Research | China |
| [79] | 2WD | Electric | Pest control and chemical weeding | Open-field crops, greenhouse | Remote control | - | Research | India |
| [80] | 2WD | Electric and solar-panel assisted | Pest control and chemical weeding | Open-field crops, greenhouse | RTK-GPS, IMU, thermal/optical camera | - | Research | Greece |
| [81] | 2WD | Electric | Pest control and chemical weeding | Open-field crops, greenhouse | Web camera | - | Research | India |
| [82] | Tracks | Electric | Pest control and chemical weeding | Orchards | LiDAR, RTK-GPS | - | Research | China |
| [83] | 2WD | Electric | Pest control and chemical weeding | Open-field crops | RTK-GPS, camera | - | Commercial | Norway |
| [85] | Tracks | Endothermic (gasoline) | Pest control and chemical weeding | Vineyards | RTK-GPS | - | Commercial | Japan |
| [86] | 2WD | Endothermic (diesel) | Pest control and chemical weeding | Open-field crops | LiDAR | LiDAR, bumpers | Commercial | Spain |
| [87] | 4WD | Electric | Pest control and chemical weeding | Greenhouse | IMU | - | Commercial | Germany |
| [88] | 2WD | Electric | Pest control and chemical weeding | Open-field crops | RTK-GPS, cameras | - | Prototype | Australia |
| [89] | 2WD | Electric | Pest control and chemical weeding | Greenhouse | RGB-D, IR camera | - | Research | Iran |
| [76] | 4WD | Endothermic (diesel) | Pest control and chemical weeding | Open-field crops | GNSS RTK, LiDAR, IMU, RGB, ultrasonic sensors | LiDAR | Commercial-Research | Spain |
| [90] | 4WD | Solar-powered, interchangeable batteries | Pest control and chemical weeding | Open-field crops | RTK-GPS, LiDAR, sonar | LiDAR, sonar | Commercial | Switzerland |
| [92] | Tracks | Hybrid (gasoline+electric) | Transplanting | Open-field crops | 2x cameras | - | Research | China |
| [93] | 2WD | Electric | Transplanting | Open-field crops | CCD camera | - | Research | China |
| [94] | 2WD | Solar-powered, power-banks | Ploughing seeding | Open-field crops | RTK-GPS | - | Commercial | Denmark |
| [96] | 4WD | Electric | Ploughing seeding | Open-field crops | RTK-GPS, thermal camera | - | Start-up | USA |
| [97] | 4WD | Electric | Ploughing seeding | Open-field crops | IR camera, ultrasonic sensor | - | Research | India |
| [98] | 2WD | Solar-powered | Ploughing seeding | Open-field crops | IR camera | - | Research | India |
| [99] | 6 flexible legs | Electric | Scouting monitoring | Open-field crops, greenhouse, orchards | 3x RGB cameras, IMU, LiDAR | - | Research | USA |
| [100] | 4WD | Solar-powered, power-banks | Scouting monitoring | Open-field crops, orchards | RTK-GPS | - | Commercial | UK |
| [101] | 4WD | Electric | Scouting monitoring | Open-field crops | 4 RGB cameras, GPS, LoRa, LiDAR | - | Start-up | USA |
| [102] | Tracks | Electric | Scouting monitoring | Open-field crops | Ultrasonic sensors | Ultrasonic sensors | Research | USA |
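
The categorical columns of this table (engine, main task, country, and so on) correspond to the headings of Section 5, so category shares can be tallied directly from the rows. The following is a minimal Python sketch of such a tally, assuming the rows have been transcribed as tuples. The six rows shown are copied from the table as an illustrative subset, and the names `ROWS` and `share_by` are hypothetical helpers introduced here, not part of the review.

```python
from collections import Counter

# Illustrative subset of the table: (ref, engine, main task, country).
# Only six of the 59 rows are transcribed here, so the resulting
# percentages describe this subset, not the full dataset.
ROWS = [
    ("[39]", "Electric", "Multi-purpose", "Japan"),
    ("[40]", "Electric", "Multi-purpose", "France"),
    ("[42]", "Hybrid (diesel+electric)", "Multi-purpose", "Netherlands"),
    ("[60]", "Gasoline generator", "Harvesting", "UK"),
    ("[63]", "Solar-powered", "Harvesting", "Germany"),
    ("[72]", "Solar-powered", "Mechanical weeding", "Switzerland"),
]


def share_by(rows, column):
    """Return {category: percentage of rows} for the given column index."""
    counts = Counter(row[column] for row in rows)
    total = len(rows)
    return {value: 100.0 * n / total for value, n in counts.items()}


engine_share = share_by(ROWS, 1)  # hypothetical tally by engine type
task_share = share_by(ROWS, 2)    # hypothetical tally by main task

for category, pct in sorted(task_share.items()):
    print(f"{category}: {pct:.1f}%")
```

Extending `ROWS` to all 59 entries would give the per-category shares for the whole table. Cells that list several values (for example "Orchards, open-field crops") would need to be split before counting, a choice this sketch leaves open.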

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fontani, M.; Luglio, S.M.; Gagliardi, L.; Peruzzi, A.; Frasconi, C.; Raffaelli, M.; Fontanelli, M. A Systematic Review of 59 Field Robots for Agricultural Tasks: Applications, Trends, and Future Directions. Agronomy 2025, 15, 2185. https://doi.org/10.3390/agronomy15092185
