Applications, Trends, and Challenges of Precision Weed Control Technologies Based on Deep Learning and Machine Vision

Abstract

1. Introduction

1.1. The Urgent Need for Green and Sustainable Agricultural Development

1.2. Development Status and Challenges of Weed Control Technology

- (1) The visual similarity between weeds and crops in natural settings, interference from factors such as variable lighting and shading, and the high economic and time costs of data collection and annotation;

- (2) The need for real-time processing of large volumes of image data while maintaining model generalization and robustness;

- (3) The need for precise operation and integrated control of innovative weeding systems, as well as issues of cost management and scalability.

1.3. Evolution of Deep Learning-Driven Intelligent Weed Control Technology

1.4. Research Objectives and Content Architecture

- To delineate the technical progression of deep learning models (CNN, Transformer, and hybrid architectures) in weed detection, together with their associated technical accomplishments, and to assess their advantages and limitations;

- To investigate the use of machine vision and multimodal sensor fusion in agricultural contexts, and to categorize and summarize the methodologies and impacts of current technologies;

- To systematically summarize the integration frameworks of intelligent weeding apparatus and evaluate the operational effectiveness of various intelligent actuators;

- To identify existing technical constraints and suggest potential research directions for intelligent agricultural weeding machinery and environmentally sustainable agriculture.

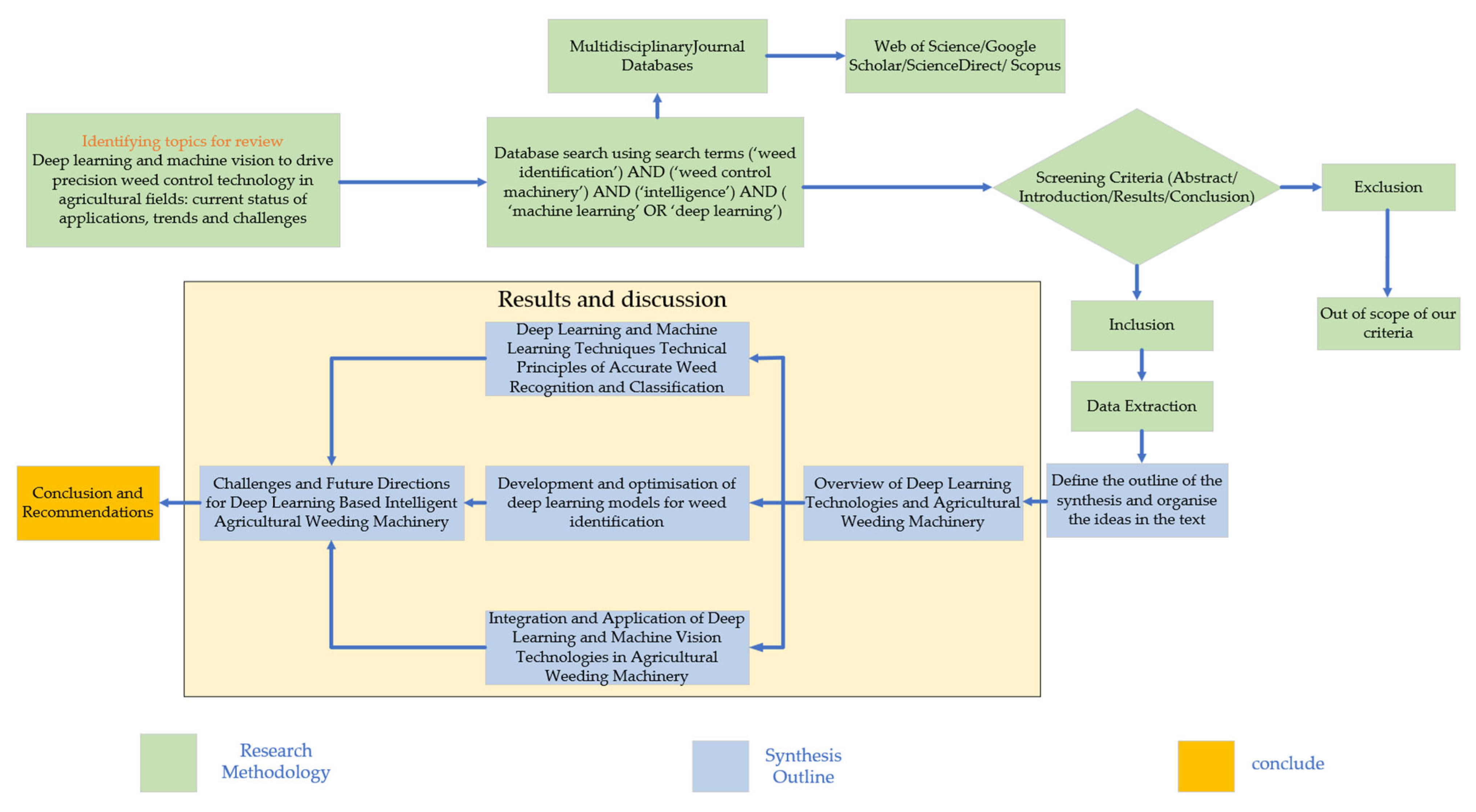

2. Review Methodology

- What methods can be used to accurately identify weed species in agricultural fields?

- What remote sensors and collection systems are appropriate for monitoring weeds in agricultural fields?

- What are the core concepts of deep learning and machine learning in weed control technologies, and what strategies are used to improve their performance?

- What are the fundamental mechanisms employed in weeding operations?

- Do weeding machines incorporate deep learning or machine learning modules?

3. Results and Discussion

3.1. Deep Learning Infrastructure

3.1.1. Convolutional Neural Networks (CNN)

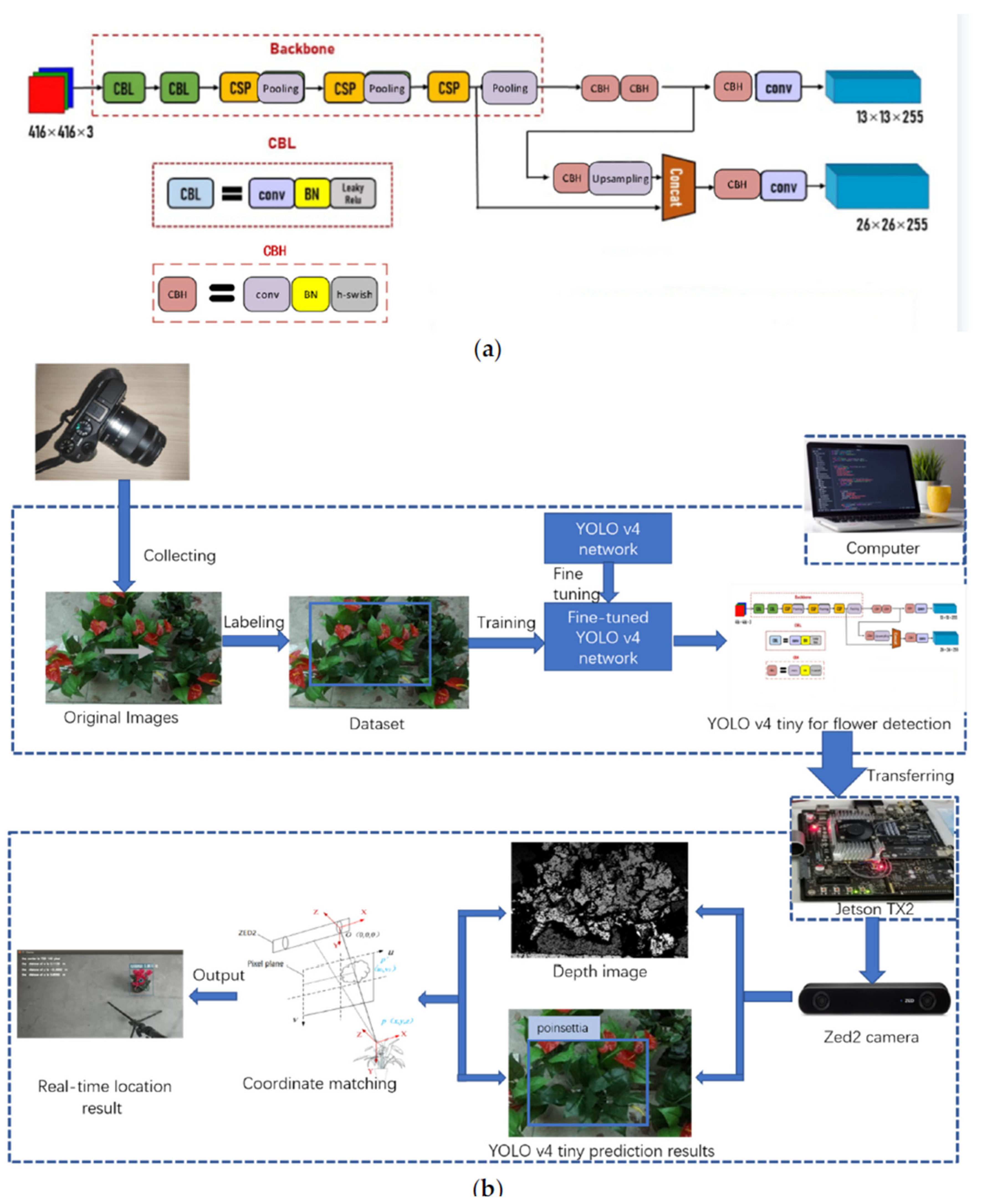

3.1.2. Object Detection Models

3.1.3. Semantic Segmentation Models

3.2. Machine Vision Sensing Technologies

3.2.1. Combination of RGB Imaging and Deep Learning

3.2.2. Multi-Spectral Imaging Technology

3.2.3. Hyperspectral Imaging Technology

3.2.4. Application of Depth and Stereo Vision

3.3. Multi-Technology Convergence Framework

3.3.1. Sensor Fusion Strategy

3.3.2. Cross-Modal Feature Learning

3.4. Model Architecture Innovation

3.4.1. Lightweight Model Design

3.4.2. Application of Transformer Architecture

3.4.3. Hybrid Model Architecture

3.5. Model Optimization Techniques

3.5.1. Data Augmentation and Expansion

3.5.2. Loss Function Optimization

3.5.3. Model Compression and Acceleration

3.5.4. Modelling Algorithm Improvements

3.6. Scene Adaptation Optimization

3.6.1. Multi-Scale Feature Processing

3.6.2. Techniques for Robustness Against Environmental Disturbances

3.6.3. Few-Shot and Unsupervised Learning

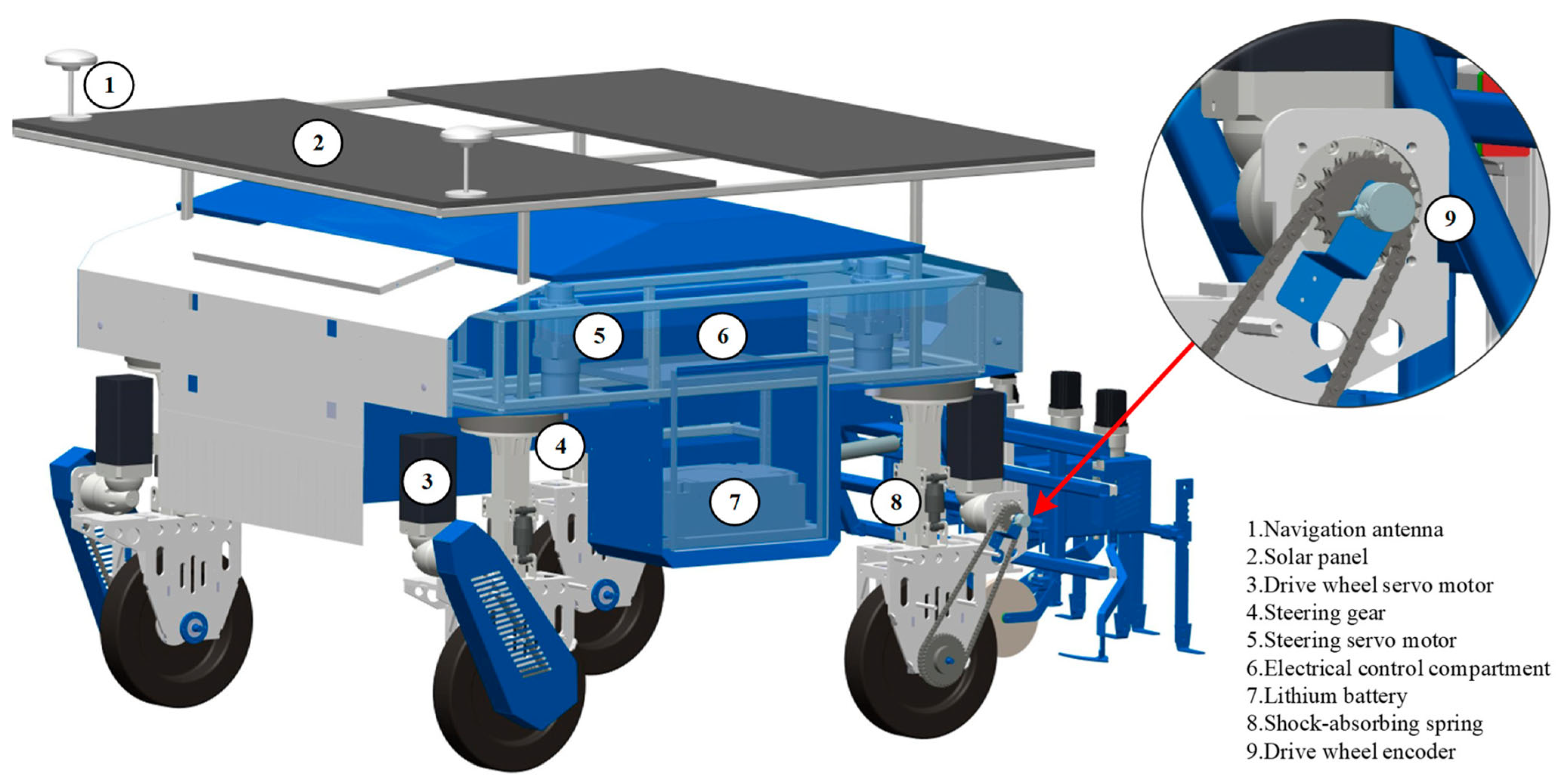

3.7. Hardware System Architecture

3.7.1. Mobile Platform Design

3.7.2. Perception System Integration

3.7.3. Weeding Mechanism Design

3.8. Software System Architecture

3.8.1. Real-Time Operating System

3.8.2. Task Planning Algorithms

3.8.3. Human–Machine Interface

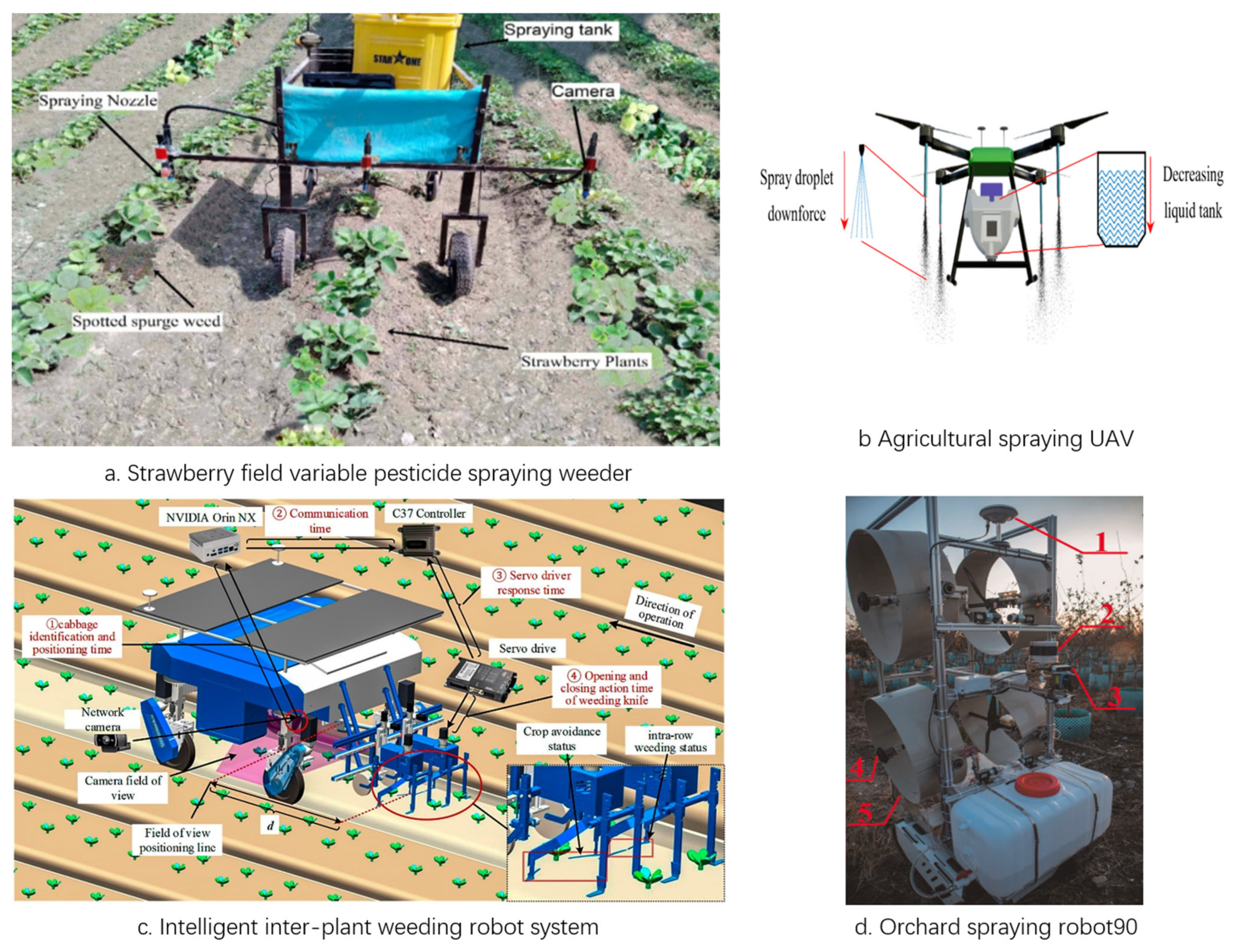

3.9. Typical Application Scenarios

3.9.1. Row Crop Scenario

3.9.2. Vegetable and Orchard Scenarios

3.9.3. Paddy Field Scenario

3.10. Analysis of Environmental Protection and Economic Benefits

3.10.1. Environmental Benefits

3.10.2. Economic Benefits

3.11. Existing Technical Challenges

3.11.1. Insufficient Model Generalization Capability

3.11.2. Trade-Off Between Real-Time Performance and Accuracy

3.11.3. Complexity of Multimodal Data Processing

3.11.4. System Reliability Needs Improvement

3.12. Future Directions

3.12.1. General-Purpose AI Model Development

3.12.2. Edge Computing and Model Lightweighting

3.12.3. Multimodal Fusion and Active Sensing

3.12.4. Digital Twin and Intelligent Decision Making

3.12.5. Construction of a Sustainable Technology System

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Chauhan, B.S. Grand Challenges in Weed Management. Front. Agron. 2020, 1, 3. [Google Scholar] [CrossRef]

- Ofosu, R.; Agyemang, E.D.; Márton, A.; Pásztor, G.; Taller, J.; Kazinczi, G. Herbicide Resistance: Managing Weeds in a Changing World. Agronomy 2023, 13, 1595. [Google Scholar] [CrossRef]

- Sapkota, R.; Stenger, J.; Ostlie, M.; Flores, P. Towards reducing chemical usage for weed control in agriculture using UAS imagery analysis and computer vision techniques. Sci. Rep. 2023, 13, 6548. [Google Scholar] [CrossRef]

- Huang, X.; Wang, W.; Li, Z.; Wang, Q.; Zhu, C.; Chen, L. Design method and experiment of machinery for the combined application of seed, fertiliser, and herbicide. Int. J. Agric. Biol. Eng. 2019, 12, 63–71. [Google Scholar] [CrossRef]

- Adhinata, F.D.; Wahyono; Sumiharto, R. A comprehensive survey on weed and crop classification using machine learning and deep learning. Artif. Intell. Agric. 2024, 13, 45–63. [Google Scholar] [CrossRef]

- Tang, M.; Xiang, S.; Wang, L. A YOLOv3-based framework for weed detection in agricultural fields. In Proceedings of the 2023 8th International Conference on Intelligent Computing and Signal Processing (ICSP), Xi’an, China, 21–23 April 2023; pp. 1980–1986. [Google Scholar]

- Li, H.; Travlos, I.; Qi, L.; Kanatas, P.; Wang, P. Optimization of Herbicide Use: Study on Spreading and Evaporation Characteristics of Glyphosate-Organic Silicone Mixture Droplets on Weed Leaves. Agronomy 2019, 9, 547. [Google Scholar] [CrossRef]

- Wu, P.; Lei, X.; Zeng, J.; Qi, Y.; Yuan, Q.; Huang, W.; Ma, Z.; Shen, Q.; Lyu, X. Research progress in mechanized and intelligentized pollination technologies for fruit and vegetable crops. Int. J. Agric. Biol. Eng. 2024, 17, 11–21. [Google Scholar] [CrossRef]

- Scavo, A.; Mauromicale, G. Integrated Weed Management in Herbaceous Field Crops. Agronomy 2020, 10, 466. [Google Scholar] [CrossRef]

- Shaikh, T.A.; Rasool, T.; Lone, F.R. Towards leveraging the role of machine learning and artificial intelligence in precision agriculture and smart farming. Comput. Electron. Agric. 2022, 198, 107119. [Google Scholar] [CrossRef]

- Li, Y.; Guo, R.; Li, R.; Ji, R.; Wu, M.; Chen, D.; Han, C.; Han, R.; Liu, Y.; Ruan, Y.; et al. An improved U-net and attention mechanism-based model for sugar beet and weed segmentation. Front. Plant Sci. 2025, 15, 1449514. [Google Scholar] [CrossRef]

- Zheng, L.; Long, L.; Zhu, C.; Jia, M.; Chen, P.; Tie, J. A Lightweight Cotton Field Weed Detection Model Enhanced with EfficientNet and Attention Mechanisms. Agronomy 2024, 14, 2649. [Google Scholar] [CrossRef]

- Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-unet: Unet-like pure transformer for medical image segmentation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer Nature: Cham, Switzerland; pp. 205–218. [Google Scholar]

- Hasan, A.S.M.M.; Sohel, F.; Diepeveen, D.; Laga, H.; Jones, M.G. A survey of deep learning techniques for weed detection from images. Comput. Electron. Agric. 2021, 184, 106067. [Google Scholar] [CrossRef]

- Chen, C.; Zhu, W.; Steibel, J.; Siegford, J.; Han, J.; Norton, T. Classification of drinking and drinker-playing in pigs by a video-based deep learning method. Biosyst. Eng. 2020, 196, 1–14. [Google Scholar] [CrossRef]

- Melander, B.; McCollough, M.R.; Poulsen, F. Informing the Operation of Intelligent Automated Intra-Row Weeding Machines in Direct-Sown Sugar Beet (Beta vulgaris L.): Crop Effects of Hoeing and Flaming Across Early Growth Stages, Tool Working Distances, and Intensities. Crop Prot. 2024, 177, 106562. [Google Scholar]

- Kverneland. Onyx: Autonomous Weeding Robot; Kverneland Group: Klepp Stasjon, Norway, 2021. [Google Scholar]

- Wang, Y.P.; Wang, Y.K. Carbon Robotics launches new laser weeding machine capable of autonomously eradicating weeds. Agric. Eng. Technol. 2022, 42, 117–118. [Google Scholar]

- Bakker, T.; van Asselt, K.; Bontsema, J.; Müller, J.; van Straten, G. Autonomous navigation using a robot platform in a sugar beet field. Biosyst. Eng. 2011, 109, 357–368. [Google Scholar] [CrossRef]

- Zhao, C.J.; Fan, B.B.; Li, J.; Feng, Q.C. Progress, Challenges, and Trends in Agricultural Robotics. Smart Agric. 2023, 5, 1–15. [Google Scholar] [CrossRef]

- Liu, J.; Abbas, I.; Noor, R.S. Development of Deep Learning-Based Variable Rate Agrochemical Spraying System for Targeted Weeds Control in Strawberry Crop. Agronomy 2021, 11, 1480. [Google Scholar] [CrossRef]

- Ahmed, S.; Qiu, B.; Ahmad, F.; Kong, C.-W.; Xin, H. A State-of-the-Art Analysis of Obstacle Avoidance Methods from the Perspective of an Agricultural Sprayer UAV’s Operation Scenario. Agronomy 2021, 11, 1069. [Google Scholar] [CrossRef]

- Zheng, S.; Zhao, X.; Fu, H.; Tan, H.; Zhai, C.; Chen, L. Design and Experimental Evaluation of a Smart Intra-Row Weed Control System for Open-Field Cabbage. Agronomy 2025, 15, 112. [Google Scholar] [CrossRef]

- Liu, H.; Zeng, X.; Shen, Y.; Xu, J.; Khan, Z. A Single-Stage Navigation Path Extraction Network for agricultural robots in orchards. Comput. Electron. Agric. 2025, 229, 109687. [Google Scholar] [CrossRef]

- Han, X.; Wang, H.; Yuan, T.; Zou, K.; Liao, Q.; Deng, K.; Zhang, Z.; Zhang, C.; Li, W. A rapid segmentation method for weed based on CDM and ExG index. Crop Prot. 2023, 172, 106321. [Google Scholar] [CrossRef]

- Jiang, H.; Zhang, C.; Qiao, Y.; Zhang, Z.; Zhang, W.; Song, C. CNN feature based graph convolutional network for weed and crop recognition in smart farming. Comput. Electron. Agric. 2020, 174, 105450. [Google Scholar] [CrossRef]

- Arsa, D.M.S.; Ilyas, T.; Park, S.-H.; Won, O.; Kim, H. Eco-friendly weeding through precise detection of growing points via efficient multi-branch convolutional neural networks. Comput. Electron. Agric. 2023, 209, 107830. [Google Scholar] [CrossRef]

- Visentin, F.; Cremasco, S.; Sozzi, M.; Signorini, L.; Signorini, M.; Marinello, F.; Muradore, R. A mixed-autonomous robotic platform for intra-row and inter-row weed removal for precision agriculture. Comput. Electron. Agric. 2023, 214, 108270. [Google Scholar] [CrossRef]

- Arakeri, M.P.; Kumar, B.P.V.; Barsaiya, S.; Sairam, H.V. Computer vision based robotic weed control system for precision agriculture. In Proceedings of the 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Udupi, India, 13–16 September 2017; pp. 1201–1205. [Google Scholar]

- Zhao, X.; Wang, X.; Li, C.; Fu, H.; Yang, S.; Zhai, C. Cabbage and Weed Identification Based on Machine Learning and Target Spraying System Design. Front. Plant Sci. 2022, 13, 924973. [Google Scholar] [CrossRef]

- Jin, X.; Che, J.; Chen, Y. Weed Identification Using Deep Learning and Image Processing in Vegetable Plantation. IEEE Access 2021, 9, 10940–10950. [Google Scholar] [CrossRef]

- Tufail, M.; Iqbal, J.; Tiwana, M.I.; Alam, M.S.; Khan, Z.A.; Khan, M.T. Identification of Tobacco Crop Based on Machine Learning for a Precision Agricultural Sprayer. IEEE Access 2021, 9, 23814–23825. [Google Scholar] [CrossRef]

- Lin, Y.; Xia, S.; Wang, L.; Qiao, B.; Han, H.; Wang, L.; He, X.; Liu, Y. Multi-task deep convolutional neural network for weed detection and navigation path extraction. Comput. Electron. Agric. 2025, 229, 109776. [Google Scholar] [CrossRef]

- Xu, Y.; Bai, Y.; Fu, D.; Cong, X.; Jing, H.; Liu, Z.; Zhou, Y. Multi-species weed detection and variable spraying system for farmland based on W-YOLOv5. Crop Prot. 2024, 182, 106720. [Google Scholar] [CrossRef]

- Karim, J.; Nahiduzzaman; Ahsan, M.; Haider, J. Development of an early detection and automatic targeting system for cotton weeds using an improved lightweight YOLOv8 architecture on an edge device. Knowl. Based Syst. 2024, 300, 112204. [Google Scholar] [CrossRef]

- Herterich, N.; Liu, K.; Stein, A. Accelerating weed detection for smart agricultural sprayers using a Neural Processing Unit. Comput. Electron. Agric. 2025, 237, 110608. [Google Scholar] [CrossRef]

- Zhang, X.; Wang, Q.; Wang, C.; Wang, X.; Xu, Z.; Lu, C. Guidelines for mechanical weeding: Developing weed control lines through point extraction at maize root zones. Biosyst. Eng. 2024, 248, 321–336. [Google Scholar] [CrossRef]

- Xiang, M.; Gao, X.; Wang, G.; Qi, J.; Qu, M.; Ma, Z.; Chen, X.; Zhou, Z.; Song, K. An application oriented all-round intelligent weeding machine with enhanced YOLOv5. Biosyst. Eng. 2024, 248, 269–282. [Google Scholar] [CrossRef]

- Utstumo, T.; Urdal, F.; Brevik, A.; Dørum, J.; Netland, J.; Overskeid, Ø.; Berge, T.W.; Gravdahl, J.T. Robotic in-row weed control in vegetables. Comput. Electron. Agric. 2018, 154, 36–45. [Google Scholar] [CrossRef]

- Azghadi, M.R.; Olsen, A.; Wood, J.; Saleh, A.; Calvert, B.; Granshaw, T.; Fillols, E.; Philippa, B. Precision robotic spot-spraying: Reducing herbicide use and enhancing environmental outcomes in sugarcane. Comput. Electron. Agric. 2025, 235, 110365. [Google Scholar] [CrossRef]

- Sassu, A.; Motta, J.; Deidda, A.; Ghiani, L.; Carlevaro, A.; Garibotto, G.; Gambella, F. Artichoke deep learning detection network for site-specific agrochemicals UAS spraying. Comput. Electron. Agric. 2023, 213, 108185. [Google Scholar] [CrossRef]

- Zhao, P.; Chen, J.; Li, J.; Ning, J.; Chang, Y.; Yang, S. Design and Testing of an autonomous laser weeding robot for strawberry fields based on DIN-LW-YOLO. Comput. Electron. Agric. 2025, 229, 109808. [Google Scholar] [CrossRef]

- Li, J.L.; Su, W.H.; Hu, R.; Niu, L.T.; Wang, Q. Innovative weeding system with multi-sensor fusion for tomato plant detection and targeted micro-spraying of intra-row weeds. Comput. Electron. Agric. 2025, 237, 110598. [Google Scholar] [CrossRef]

- Jin, X.; McCullough, P.E.; Liu, T.; Yang, D.; Zhu, W.; Chen, Y.; Yu, J. An innovative sprayer for weed control in bermudagrass turf based on the herbicide weed control spectrum. Crop Prot. 2023, 170, 106270. [Google Scholar] [CrossRef]

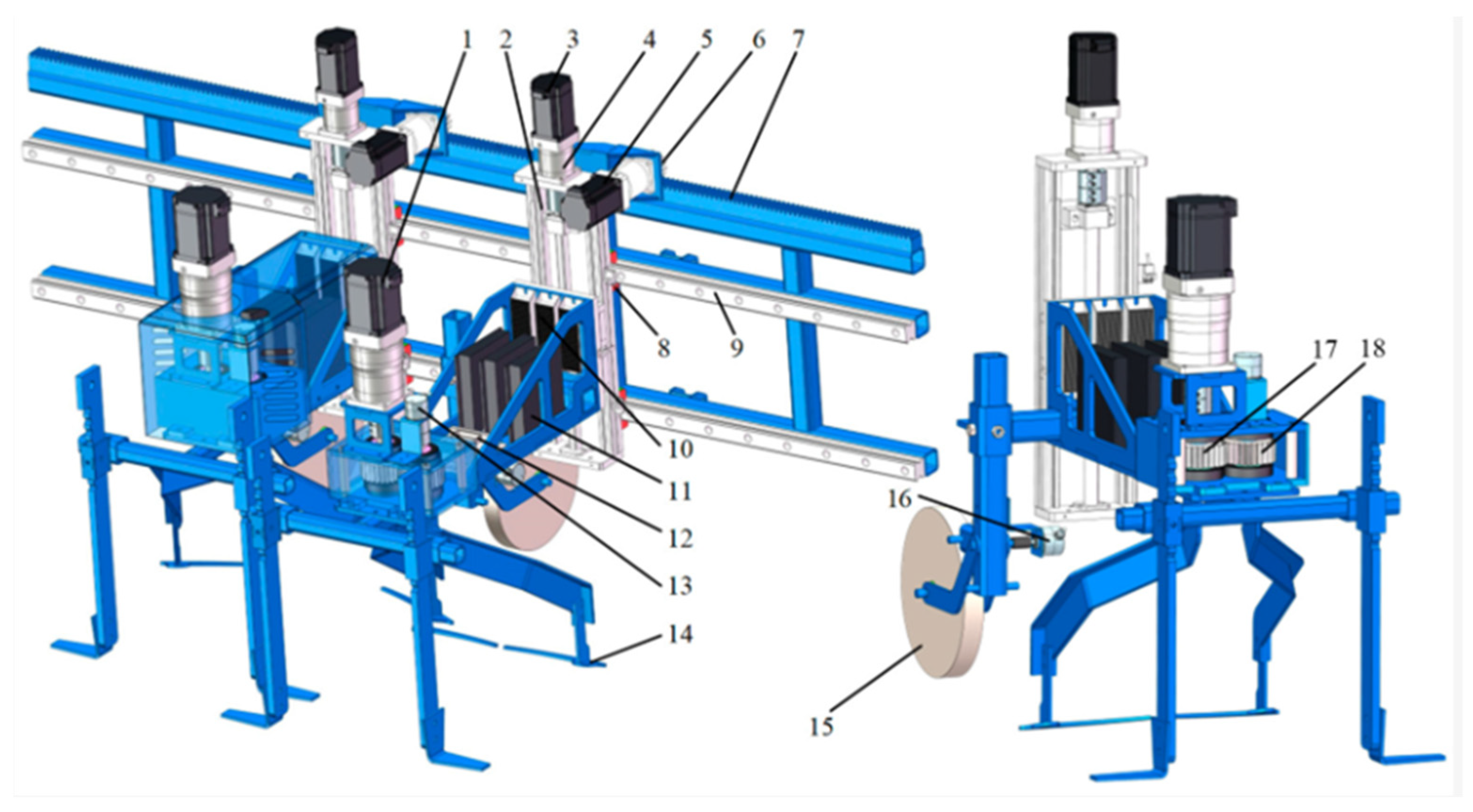

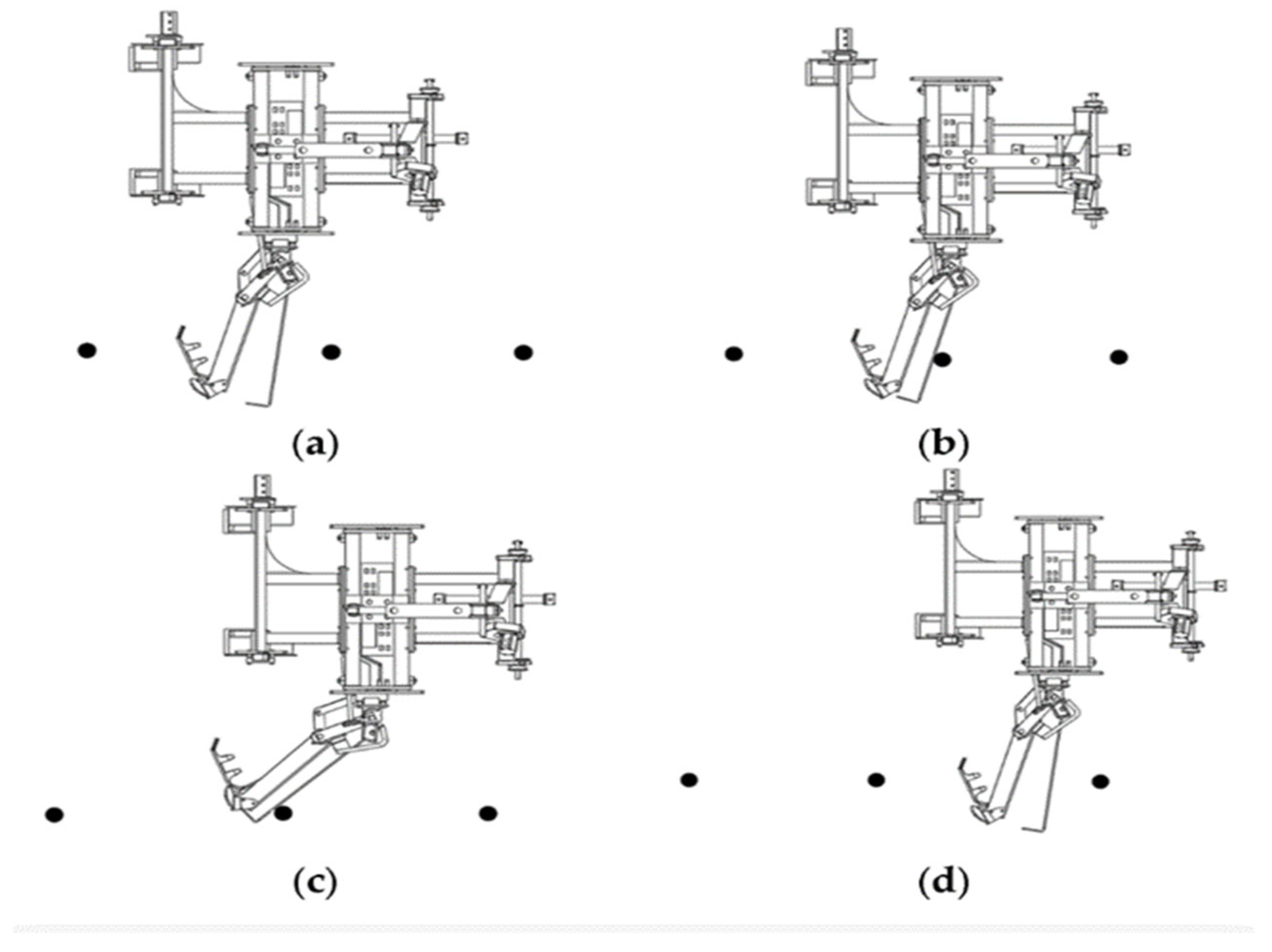

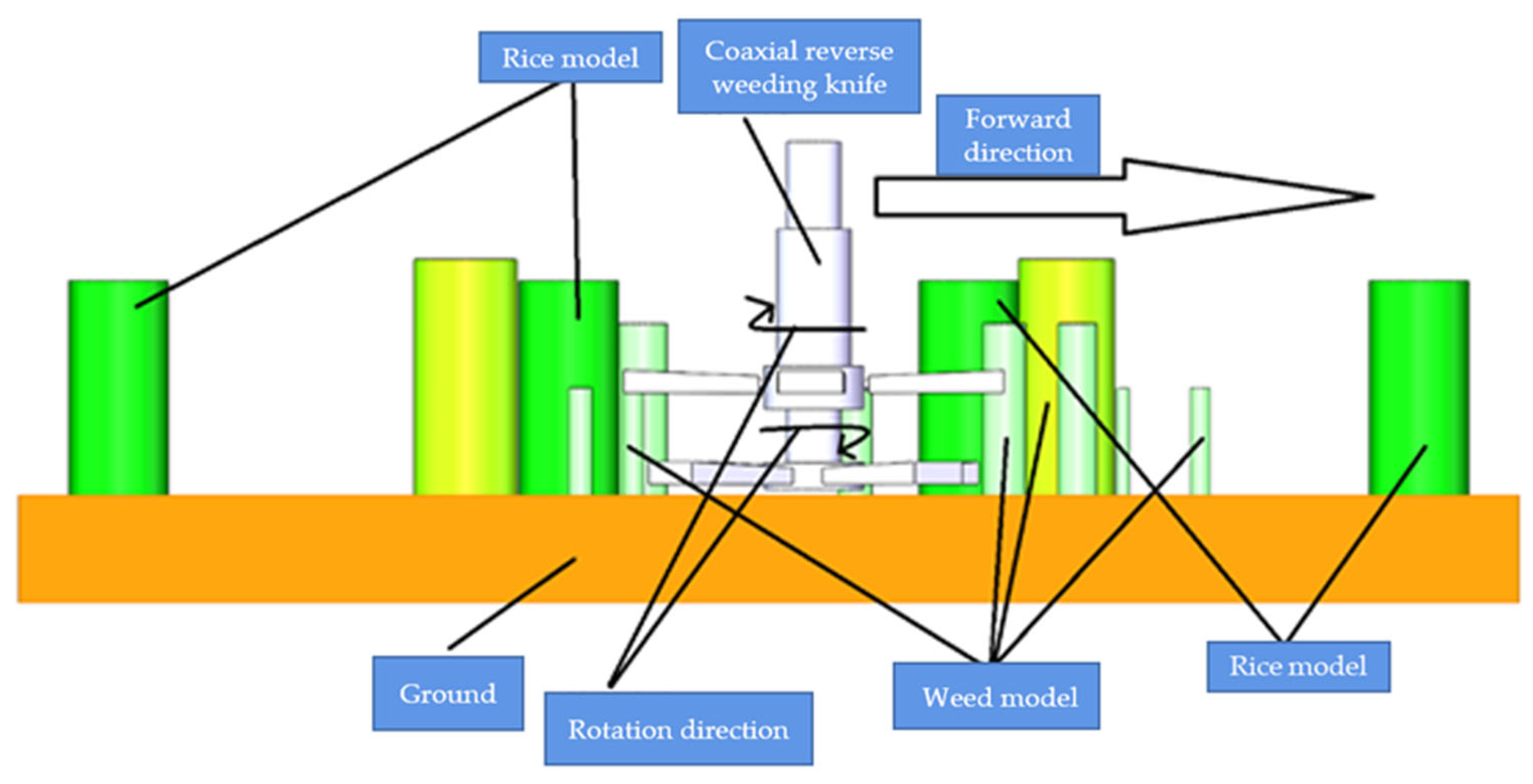

- Quan, L.; Jiang, W.; Li, H.; Li, H.; Wang, Q.; Chen, L. Intelligent intra-row robotic weeding system combining deep learning technology with a targeted weeding mode. Biosyst. Eng. 2022, 216, 13–31. [Google Scholar] [CrossRef]

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269. [Google Scholar] [CrossRef]

- Tiwari, O.; Goyal, V.; Kumar, P.; Vij, S. An experimental setup for utilizing a convolutional neural network in automated weed detection. In Proceedings of the 2019 4th International Conference on Internet of Things: Smart Innovation and Usages (IoT-SIU), Ghaziabad, India, 18–19 April 2019; pp. 1–6. [Google Scholar]

- Garibaldi-Márquez, F.; Flores, G.; Mercado-Ravell, D.A.; Ramírez-Pedraza, A.; Valentín-Coronado, L.M. Weed Classification from Natural Corn Field-Multi-Plant Images Based on Shallow and Deep Learning. Sensors 2022, 22, 3021. [Google Scholar] [CrossRef] [PubMed]

- Asad, M.H.; Bais, A. Weed detection in canola fields using maximum likelihood classification and deep convolutional neural network. Inf. Process. Agric. 2020, 7, 535–545. [Google Scholar] [CrossRef]

- Wang, Y.; Zhang, X.; Ma, G.; Du, X.; Shaheen, N.; Mao, H. Recognition of weeds at asparagus fields using multi-feature fusion and backpropagation neural network. Int. J. Agric. Biol. Eng. 2021, 14, 190–198. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar] [CrossRef]

- Mwitta, C.; Rains, G.C.; Prostko, E.P. Autonomous diode laser weeding mobile robot in cotton field using deep learning, visual servoing and finite state machine. Front. Agron. 2024, 6, 1388452. [Google Scholar] [CrossRef]

- Abd Ghani, M.A.; Juraimi, A.S.; Su, A.S.M.; Ahmad-Hamdani, M.S.; Islam, A.M.; Motmainna, M. Chemical weed control in direct-seeded rice using drone and mist flow spray technology. Crop Prot. 2024, 184, 106853. [Google Scholar] [CrossRef]

- Zhang, H.; Wang, Z.; Guo, Y.; Ma, Y.; Cao, W.; Chen, D.; Yang, S.; Gao, R. Weed Detection in Peanut Fields Based on Machine Vision. Agriculture 2022, 12, 1541. [Google Scholar] [CrossRef]

- Peng, H.; Li, Z.; Zhou, Z.; Shao, Y. Weed detection in paddy field using an improved RetinaNet network. Comput. Electron. Agric. 2022, 199, 107179. [Google Scholar] [CrossRef]

- Zhang, Z.; Lu, Y.; Yang, M.; Wang, G.; Zhao, Y.; Hu, Y. Optimal training strategy for high-performance detection model of multi-cultivar tea shoots based on deep learning methods. Sci. Hortic. 2024, 328, 112949. [Google Scholar] [CrossRef]

- Sharma, A.; Kumar, V.; Longchamps, L. Comparative performance of YOLOv8, YOLOv9, YOLOv10, YOLOv11 and Faster R-CNN models for detection of multiple weed species. Smart Agric. Technol. 2024, 9, 100648. [Google Scholar] [CrossRef]

- Pei, H.; Sun, Y.; Huang, H.; Zhang, W.; Sheng, J.; Zhang, Z. Weed Detection in Maize Fields by UAV Images Based on Crop Row Preprocessing and Improved YOLOv4. Agriculture 2022, 12, 975. [Google Scholar] [CrossRef]

- Xu, M.; Sun, J.; Cheng, J.; Yao, K.; Wu, X.; Zhou, X. Non-destructive prediction of total soluble solids and titratable acidity in Kyoho grape using hyperspectral imaging and deep learning algorithm. Int. J. Food Sci. Technol. 2023, 58, 9–21. [Google Scholar] [CrossRef]

- Wang, J.; Gao, Z.; Zhang, Y.; Zhou, J.; Wu, J.; Li, P. Real-Time Detection and Location of Potted Flowers Based on a ZED Camera and a YOLO V4-Tiny Deep Learning Algorithm. Horticulturae 2022, 8, 21. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Computer Science, Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015 (MICCAI 2015), Munich, Germany, 5–9 October 2015; Navab, N., Hornegger, J., Wells, W., Frangi, A., Eds.; Springer: Cham, Switzerland, 2015; Volume 9351. [Google Scholar]

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848. [Google Scholar] [CrossRef]

- Sun, J.; Yang, K.; He, X.; Luo, Y.; Wu, X.; Shen, J. Beet seedling and weed recognition based on a convolutional neural network and multi-modality images. Multimedia Tools Appl. 2022, 81, 5239–5258. [Google Scholar] [CrossRef]

- Yu, H.; Che, M.; Yu, H.; Zhang, J. Development of Weed Detection Method in Soybean Fields Utilising Improved DeepLabv3+ Platform. Agronomy 2022, 12, 2889. [Google Scholar] [CrossRef]

- Chen, G.; Fu, L.; Sun, L.; Zhao, B.; He, X. GT-DeepLabv3+: A Deep Learning-Based Algorithm for Field Crop Segmentation. In Proceedings of the 2024 International Symposium on Electrical, Electronics and Information Engineering (ISEEIE), Leicester, UK, 28–30 August 2024; pp. 401–405. [Google Scholar]

- Memon, M.S.; Chen, S.; Shen, B.; Liang, R.; Tang, Z.; Wang, S.; Zhou, W.; Memon, N. Automatic visual recognition, detection and classification of weeds in cotton fields based on machine vision. Crop Prot. 2024, 187, 106966. [Google Scholar] [CrossRef]

- Chen, S.; Memon, M.S.; Shen, B.; Guo, J.; Du, Z.; Tang, Z.; Guo, X.; Memon, H. Identification of weeds in cotton fields at various growth stages using color feature techniques. Ital. J. Agron. 2024, 19, 100021. [Google Scholar] [CrossRef]

- Islam, N.; Rashid, M.; Wibowo, S.; Xu, C.-Y.; Morshed, A.; Wasimi, S.A.; Moore, S.; Rahman, S.M. Early Weed Detection Using Image Processing and Machine Learning Techniques in an Australian Chilli Farm. Agriculture 2021, 11, 387. [Google Scholar] [CrossRef]

- Dang, F.; Chen, D.; Lu, Y.; Li, Z. YOLOWeeds: A novel benchmark of YOLO object detectors for multi-class weed detection in cotton production systems. Comput. Electron. Agric. 2023, 205, 107655. [Google Scholar] [CrossRef]

- Jin, X.; Bagavathiannan, M.; McCullough, P.E.; Chen, Y.; Yu, J. A deep learning-based method for classification, detection, and localisation of weeds in turfgrass. Pest Manag. Sci. 2022, 78, 4809–4821. [Google Scholar] [CrossRef]

- Marx, C.; Barcikowski, S.; Hustedt, M.; Haferkamp, H.; Rath, T. Design and application of a weed damage model for laser-based weed control. Biosyst. Eng. 2012, 113, 148–157. [Google Scholar] [CrossRef]

- Louargant, M.; Jones, G.; Faroux, R.; Paoli, J.-N.; Maillot, T.; Gée, C.; Villette, S. Unsupervised Classification Algorithm for Early Weed Detection in Row-Crops by Combining Spatial and Spectral Information. Remote Sens. 2018, 10, 761. [Google Scholar] [CrossRef]

- Celikkan, E.; Kunzmann, T.; Yeskaliyev, Y.; Itzerott, S.; Klein, N.; Herold, M. WeedsGalore: A Multispectral and Multitemporal UAV-Based Dataset for Crop and Weed Segmentation in Agricultural Maize Fields. In Proceedings of the 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Tucson, AZ, USA, 8 April 2025; pp. 4767–4777. [Google Scholar]

- Wang, A.; Li, W.; Men, X.; Gao, B.; Xu, Y.; Wei, X. Vegetation detection based on spectral information and development of a low-cost vegetation sensor for selective spraying. Pest Manag. Sci. 2022, 78, 2467–2476. [Google Scholar] [CrossRef]

- Xu, S.; Xu, X.; Zhu, Q.; Meng, Y.; Yang, G.; Feng, H.; Yang, M.; Zhu, Q.; Xue, H.; Wang, B. Monitoring leaf nitrogen content in rice based on information fusion of multi-sensor imagery from UAV. Precis. Agric. 2023, 24, 2327–2349. [Google Scholar] [CrossRef]

- Farooq, A.; Hu, J.; Jia, X. Analysis of Spectral Bands and Spatial Resolutions for Weed Classification Via Deep Convolutional Neural Network. IEEE Geosci. Remote Sens. Lett. 2019, 16, 183–187. [Google Scholar] [CrossRef]

- Li, Y.; Al-Sarayreh, M.; Irie, K.; Hackell, D.; Bourdot, G.; Reis, M.M.; Ghamkhar, K. Identification of Weeds Based on Hyperspectral Imaging and Machine Learning. Front. Plant Sci. 2021, 11, 611622. [Google Scholar] [CrossRef]

- Yue, J.; Yang, H.; Feng, H.; Han, S.; Zhou, C.; Fu, Y.; Guo, W.; Ma, X.; Qiao, H.; Yang, G. Hyperspectral-to-image transform and CNN transfer learning enhancing soybean LCC estimation. Comput. Electron. Agric. 2023, 211, 108011. [Google Scholar] [CrossRef]

- Hidalgo, D.R.; Cortés, B.B.; Bravo, E.C. Dimensionality reduction of hyperspectral images of vegetation and crops based on self-organized maps. Inf. Process. Agric. 2021, 8, 310–327. [Google Scholar] [CrossRef]

- Dadashzadeh, M.; Abbaspour-Gilandeh, Y.; Mesri-Gundoshmian, T.; Sabzi, S.; Arribas, J.I. A stereoscopic video computer vision system for weed discrimination in rice field under both natural and controlled light conditions by machine learning. Measurement 2024, 237, 115072. [Google Scholar] [CrossRef]

- Tian, Y.; Sun, J.; Zhou, X.; Yao, K.; Tang, N. Detection of soluble solid content in apples based on hyperspectral technology combined with a deep learning algorithm. J. Food Process. Preserv. 2022, 46, e16414. [Google Scholar] [CrossRef]

- Huang, F.H.; Liu, Y.H.; Sun, X.; Yang, H. Quality inspection of nectarine based on hyperspectral imaging technology. Syst. Sci. Control Eng. 2021, 9, 350–357. [Google Scholar] [CrossRef]

- Zhu, J.; Jiang, X.; Rong, Y.; Wei, W.; Wu, S.; Jiao, T.; Chen, Q. Label-free detection of trace level zearalenone in corn oil by surface-enhanced Raman spectroscopy (SERS) coupled with deep learning models. Food Chem. 2023, 414, 135705. [Google Scholar] [CrossRef]

- Le, V.N.T.; Apopei, B.; Alameh, K. Effective plant discrimination based on the combination of local binary pattern operators and multiclass support vector machine methods. Inf. Process. Agric. 2019, 6, 116–131. [Google Scholar] [CrossRef]

- Xu, Y.; Gao, Z.; Khot, L.; Meng, X.; Zhang, Q. A Real-Time Weed Mapping and Precision Herbicide Spraying System for Row Crops. Sensors 2018, 18, 4245. [Google Scholar] [CrossRef]

- Festo. 3D Vision-Guided Weeding Manipulator; Festo Didactic: Eatontown, NJ, USA, 2022. [Google Scholar]

- Zou, K.; Chen, X.; Wang, Y.; Zhang, C.; Zhang, F. A modified U-Net with a specific data argumentation method for semantic segmentation of weed images in the field. Comput. Electron. Agric. 2021, 187, 106242. [Google Scholar] [CrossRef]

- Farooq, A.; Jia, X.; Hu, J.; Zhou, J. Multi-Resolution Weed Classification via Convolutional Neural Network and Superpixel Based Local Binary Pattern Using Remote Sensing Images. Remote Sens. 2019, 11, 1692. [Google Scholar] [CrossRef]

- Chen, Y.; Wu, Z.; Zhao, B.; Fan, C.; Shi, S. Weed and Corn Seedling Detection in Field Based on Multi Feature Fusion and Support Vector Machine. Sensors 2020, 21, 212. [Google Scholar] [CrossRef] [PubMed]

- Li, L.; Xie, S.; Ning, J.; Chen, Q.; Zhang, Z. Evaluating green tea quality based on multisensor data fusion combining hyperspectral imaging and olfactory visualization systems. J. Sci. Food Agric. 2019, 99, 1787–1794. [Google Scholar] [CrossRef]

- Yu, S.; Huang, X.; Wang, L.; Chang, X.; Ren, Y.; Zhang, X.; Wang, Y. Qualitative and quantitative assessment of flavor quality of Chinese soybean paste using multiple sensor technologies combined with chemometrics and a data fusion strategy. Food Chem. 2023, 405, 134859. [Google Scholar] [CrossRef]

- Espejo-Garcia, B.; Mylonas, N.; Athanasakos, L.; Fountas, S.; Vasilakoglou, I. Towards weeds identification assistance through transfer learning. Comput. Electron. Agric. 2020, 171, 105306. [Google Scholar] [CrossRef]

- Yang, X.; Xiong, B.; Huang, Y.; Xu, C. Cross-modal federated human activity recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 5345–5361. [Google Scholar] [CrossRef] [PubMed]

- Liu, C.; Yu, H.; Liu, Y.; Zhang, L.; Li, D.; Zhang, J.; Li, X.; Sui, Y. Prediction of Anthocyanin Content in Purple-Leaf Lettuce Based on Spectral Features and Optimized Extreme Learning Machine Algorithm. Agronomy 2024, 14, 2915. [Google Scholar] [CrossRef]

- Zhou, X.; Sun, J.; Tian, Y.; Lu, B.; Hang, Y.; Chen, Q. Hyperspectral technique combined with deep learning algorithm for detection of compound heavy metals in lettuce. Food Chem. 2020, 321, 126503. [Google Scholar] [CrossRef]

- Razfar, N.; True, J.; Bassiouny, R.; Venkatesh, V.; Kashef, R. Weed detection in soybean crops using custom lightweight deep learning models. J. Agric. Food Res. 2022, 8, 100308. [Google Scholar] [CrossRef]

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Adam, H. Searching for mobilenetv3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324. [Google Scholar]

- Fan, X.; Sun, T.; Chai, X.; Zhou, J. YOLO-WDNet: A lightweight and accurate model for weeds detection in cotton field. Comput. Electron. Agric. 2024, 225, 109317. [Google Scholar] [CrossRef]

- Kerpauskas, P.; Sirvydas, A.P.; Lazauskas, P.; Vasinauskiene, R.; Tamosiunas, A. Possibilities of weed control by water steam. Agron. Res. 2006, 4, 221–225. [Google Scholar]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; Uszkoreit, J.; Houlsby, N. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

- García-Navarrete, O.L.; Camacho-Tamayo, J.H.; Bregon, A.B.; Martín-García, J.; Navas-Gracia, L.M. Performance Analysis of Real-Time Detection Transformer and You Only Look Once Models for Weed Detection in Maize Cultivation. Agronomy 2025, 15, 796. [Google Scholar] [CrossRef]

- Ji, W.; Wang, J.; Xu, B.; Zhang, T. Apple Grading Based on Multi-Dimensional View Processing and Deep Learning. Foods 2023, 12, 2117. [Google Scholar] [CrossRef]

- Dai, Z.; Liu, H.; Le, Q.V.; Tan, M. CoAtNet: Marrying Convolution and Attention for All Visual Tasks. arXiv 2022, arXiv:2106.04803. [Google Scholar]

- Wei, Y.; Feng, Y.; Zu, D.; Zhang, X. A hybrid CNN-transformer network: Accurate and efficient semantic segmentation of crops and weeds on resource-constrained embedded devices. Crop Prot. 2025, 188, 107018. [Google Scholar] [CrossRef]

- Xue, Y.; Jiang, H. Monitoring of Chlorpyrifos Residues in Corn Oil Based on Raman Spectral Deep-Learning Model. Foods 2023, 12, 2402. [Google Scholar] [CrossRef]

- Maram, B.; Das, S.; Daniya, T.; Cristin, R. A Framework for Weed Detection in Agricultural Fields Using Image Processing and Machine Learning Algorithms. In Proceedings of the 2022 International Conference on Intelligent Controller and Computing for Smart Power (ICICCSP), Hyderabad, India, 21–23 July 2022; pp. 1–6. [Google Scholar]

- Su, D.; Kong, H.; Qiao, Y.; Sukkarieh, S. Data augmentation for deep learning based semantic segmentation and crop-weed classification in agricultural robotics. Comput. Electron. Agric. 2021, 190, 106418. [Google Scholar] [CrossRef]

- Chen, D.; Qi, X.; Zheng, Y.; Lu, Y.; Huang, Y.; Li, Z. Synthetic data augmentation by diffusion probabilistic models to enhance weed recognition. Comput. Electron. Agric. 2024, 216, 108517. [Google Scholar] [CrossRef]

- Zamani, S.A.; Baleghi, Y. A novel plant arrangement-based image augmentation method for crop, weed, and background segmentation in agricultural field images. Comput. Electron. Agric. 2025, 233, 110151. [Google Scholar] [CrossRef]

- Deng, L.; Miao, Z.; Zhao, X.; Yang, S.; Gao, Y.; Zhai, C.; Zhao, C. HAD-YOLO: An Accurate and Effective Weed Detection Model Based on Improved YOLOV5 Network. Agronomy 2025, 15, 57. [Google Scholar] [CrossRef]

- Van Der Jeught, S.; Muyshondt, P.G.; Lobato, I. Optimized loss function in deep learning profilometry for improved prediction performance. J. Phys. Photonics 2021, 3, 024014. [Google Scholar] [CrossRef]

- Lee, J.; Park, S.; Mo, S.; Ahn, S.; Shin, J. Layer-adaptive sparsity for the magnitude-based pruning. arXiv 2020, arXiv:2010.07611. [Google Scholar]

- NVIDIA. TensorRT Optimisation for Weed Detection Models. In NVIDIA Technical Report; NVIDIA: Santa Clara, CA, USA, 2022. [Google Scholar]

- Zhou, P.; Zhu, Y.; Jin, C.; Gu, Y.; Kong, Y.; Ou, Y.; Yin, X.; Hao, S. A new training strategy: Coordinating distillation techniques for training lightweight weed detection model. Crop Prot. 2025, 190, 107124. [Google Scholar] [CrossRef]

- Bah, M.D.; Hafiane, A.; Canals, R. Deep learning with unsupervised data labelling for weed detection in line crops in UAV images. Remote Sens. 2018, 10, 1690. [Google Scholar] [CrossRef]

- Khan, S.; Tufail, M.; Khan, M.T.; Khan, Z.A.; Anwar, S. Deep learning-based identification system of weeds and crops in strawberry and pea fields for a precision agriculture sprayer. Precis. Agric. 2021, 22, 1711–1727. [Google Scholar] [CrossRef]

- Xu, K.; Zhu, Y.; Cao, W.; Jiang, X.; Jiang, Z.; Li, S.; Ni, J. Multi-Modal Deep Learning for Weeds Detection in Wheat Field Based on RGB-D Images. Front. Plant Sci. 2021, 12, 732968. [Google Scholar] [CrossRef]

- Ahmad, A.; Saraswat, D.; Aggarwal, V.; Etienne, A.; Hancock, B. Performance of deep learning models for classifying and detecting common weeds in corn and soybean production systems. Comput. Electron. Agric. 2021, 184, 106081. [Google Scholar] [CrossRef]

- Urmashev, B.; Buribayev, Z.; Amirgaliyeva, Z.; Ataniyazova, A.; Zhassuzak, M.; Turegali, A. Development of a weed detection system using machine learning and neural network algorithms. East.-Eur. J. Enterp. Technol. 2021, 6, 114. [Google Scholar] [CrossRef]

- Niu, W.; Lei, X.; Li, H.; Wu, H.; Hu, F.; Wen, X.; Song, H. YOLOv8-ECFS: A lightweight model for weed species detection in soybean fields. Crop Prot. 2024, 184, 106847. [Google Scholar] [CrossRef]

- Khan, Z.; Liu, H.; Shen, Y.; Yang, Z.; Zhang, L.; Yang, F. Optimising precision agriculture: A real-time detection approach for grape vineyard unhealthy leaves using deep learning improved YOLOv7 with feature extraction capabilities. Comput. Electron. Agric. 2025, 231, 109969. [Google Scholar] [CrossRef]

- Liu, G.; Di, J.; Wang, Q.; Zhao, Y.; Yang, Y. An Enhanced and Lightweight YOLOv8-Based Model for Accurate Rice Pest Detection. IEEE Access 2025, 13, 91046–91064. [Google Scholar] [CrossRef]

- Van Quyen, T.; Kim, M.Y. Feature pyramid network with multi-scale prediction fusion for real-time semantic segmentation. Neurocomputing 2023, 519, 104–113. [Google Scholar] [CrossRef]

- Hnewa, M.; Radha, H. Multiscale domain adaptive yolo for cross-domain object detection. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 3323–3327. [Google Scholar]

- Zhou, X.; Sun, J.; Mao, H.; Wu, X.; Zhang, X.; Yang, N. Visualization research of moisture content in leaf lettuce leaves based on WT-PLSR and hyperspectral imaging technology. J. Food Process. Eng. 2018, 41, e12647. [Google Scholar] [CrossRef]

- Sun, Y.; Zhang, N.; Han, C.; Chen, Z.; Zhai, X.; Li, Z.; Zheng, K.; Zhu, J.; Wang, X.; Zou, X.; et al. Competitive immunosensor for sensitive and optical anti-interference detection of imidacloprid by surface-enhanced Raman scattering. Food Chem. 2021, 358, 129898. [Google Scholar] [CrossRef] [PubMed]

- Kong, X.; Liu, T.; Chen, X.; Lian, P.; Zhai, D.; Li, A.; Yu, J. Exploring the semi-supervised learning for weed detection in wheat. Crop Prot. 2024, 184, 106823. [Google Scholar] [CrossRef]

- Caron, M.; Touvron, H.; Misra, I.; Jégou, H.; Mairal, J.; Bojanowski, P.; Joulin, A. Emerging Properties in Self-Supervised Vision Transformers. arXiv 2021, arXiv:2104.14294. [Google Scholar] [CrossRef]

- Yu, X.; Sun, Y.J.; Zhao, W.C.; Gao, J.; Qi, T.; Jin, R.; Liu, W. Structural design of an agricultural omnidirectional mobile platform based on unstructured environments. Intern. Combust. Engine Parts 2017, 3, 1–3. [Google Scholar] [CrossRef]

- Zhang, N.F.; Yang, J.F.; Xue, Y.J.; Li, Z.; Huang, X.L. Agricultural Machinery Operation Posture Rapid Detection Method Based on Intelligent Sensor. Appl. Mech. Mater. 2013, 373–375, 936–939. [Google Scholar] [CrossRef]

- Yang, L.; Kamata, S.; Hoshino, Y.; Liu, Y.; Tomioka, C. Development of EV Crawler-Type Weeding Robot for Organic Onion. Agriculture 2025, 15, 2. [Google Scholar] [CrossRef]

- DJI. T60 Agricultural Drone Technical Specifications; DJI Innovations: Shenzhen, China, 2024. [Google Scholar]

- Gebresenbet, G.; Bosona, T.; Patterson, D.; Persson, H.; Fischer, B.; Mandaluniz, N.; Chirici, G.; Zacepins, A.; Komasilovs, V.; Pitulac, T.; et al. A concept for application of integrated digital technologies to enhance future smart agricultural systems. Smart Agric. Technol. 2023, 5, 100255. [Google Scholar] [CrossRef]

- Chen, S.; Li, X.; Li, S.; Zhou, Y.; Yang, X. iKalibr: Unified Targetless Spatiotemporal Calibration for Resilient Integrated Inertial Systems. IEEE Trans. Robot. 2025, 41, 1618–1638. [Google Scholar] [CrossRef]

- Aygün, S.; Güneş, E.O.; Subaşı, M.A.; Alkan, S. Sensor Fusion for IoT-Based Intelligent Agriculture Systems. In Proceedings of the 2019 8th International Conference on Agro-Geoinformatics (Agro-Geoinformatics), Istanbul, Turkey, 16–19 July 2019; pp. 1–5. [Google Scholar]

- Zhang, X.; Chen, N.; Li, J.; Chen, Z.; Niyogi, D. Multi-sensor integrated framework and index for agricultural drought monitoring. Remote Sens. Environ. 2017, 188, 141–163. [Google Scholar] [CrossRef]

- Chang, C.-L.; Xie, B.-X.; Chung, S.-C. Mechanical Control with a Deep Learning Method for Precise Weeding on a Farm. Agriculture 2021, 11, 1049. [Google Scholar] [CrossRef]

- Parulski, M.L. Design of an Automated Arm for a Robotic Weeding Platform. Master’s Thesis, Case Western Reserve University, Cleveland, OH, USA, 2020. [Google Scholar]

- Jia, W.; Tai, K.; Wang, X.; Dong, X.; Ou, M. Design and Simulation of Intra-Row Obstacle Avoidance Shovel-Type Weeding Machine in Orchard. Agriculture 2024, 14, 1124. [Google Scholar] [CrossRef]

- Allmendinger, A.; Spaeth, M.; Saile, M.; Peteinatos, G.G.; Gerhards, R. Precision Chemical Weed Management Strategies: A Review and a Design of a New CNN-Based Modular Spot Sprayer. Agronomy 2022, 12, 1620. [Google Scholar] [CrossRef]

- Yu, Z.; He, X.; Qi, P.; Wang, Z.; Liu, L.; Han, L.; Huang, Z.; Wang, C. A Static Laser Weeding Device and System Based on Fiber Laser: Development, Experimentation, and Evaluation. Agronomy 2024, 14, 1426. [Google Scholar] [CrossRef]

- Xiong, Y.; Ge, Y.; Liang, Y.; Blackmore, S. Development of a prototype robot and fast path-planning algorithm for static laser weeding. Comput. Electron. Agric. 2017, 142, 494–503. [Google Scholar] [CrossRef]

- Zhu, H.; Zhang, Y.; Mu, D.; Bai, L.; Zhuang, H.; Li, H. YOLOX-based blue laser weeding robot in corn field. Front. Plant Sci. 2022, 13, 1017803. [Google Scholar] [CrossRef] [PubMed]

- Hu, R.; Niu, L.T.; Su, W.H. A novel mechanical-laser collaborative intra-row weeding prototype: Structural design and optimisation, weeding knife simulation, and laser weeding experiment. Front. Plant Sci. 2024, 15, 1469098. [Google Scholar] [CrossRef] [PubMed]

- Upadhyay, A.; Singh, K.P.; Jhala, K.; Kumar, M.; Salem, A. Non-chemical weed management: Harnessing flame weeding for effective weed control. Heliyon 2024, 10, e32776. [Google Scholar] [CrossRef]

- Dress, A.; Balah, M. Flame is used for weed control in certain crops. J. Soil Sci. Agric. Eng. 2016, 7, 751–756. [Google Scholar]

- Qin, W.-C.; Qiu, B.-J.; Xue, X.-Y.; Chen, C.; Xu, Z.-F.; Zhou, Q.-Q. Droplet deposition and control effect of insecticides sprayed with an unmanned aerial vehicle against plant hoppers. Crop Prot. 2016, 85, 79–88. [Google Scholar] [CrossRef]

- Sebastian, S.; Kalita, K. Development and field performance assessment of roller rake weeder. Crop Prot. 2025, 189, 107051. [Google Scholar] [CrossRef]

- Xiang, M.; Wei, S.; Zhang, M.; Li, M.Z. Real-time monitoring system for agricultural machinery operation information, utilizing ARM11 and GNSS technologies. IFAC-PapersOnLine 2016, 49, 121–126. [Google Scholar]

- Khadatkar, A.; Sujit, P.; Agrawal, R.; Vishwanath, K.; Sawant, C.; Magar, A.; Chaudhary, V. WeeRo: Design, development and application of a remotely controlled robotic weeder for mechanical weeding in row crops for sustainable crop production. Results Eng. 2025, 26, 105202. [Google Scholar] [CrossRef]

- Rachmawati, D.; Gustin, L. Analysis of Dijkstra’s Algorithm and A* Algorithm in Shortest Path Problem. J. Phys. Conf. Ser. 2020, 1566, 012061. [Google Scholar] [CrossRef]

- Adiyatov, O.; Varol, H.A. A novel RRT*-based algorithm for motion planning in dynamic environments. In Proceedings of the 2017 IEEE International Conference on Mechatronics and Automation (ICMA), Takamatsu, Japan, 6–9 August 2017; pp. 1416–1421. [Google Scholar]

- Levine, S.; Finn, C.; Darrell, T.; Abbeel, P. End-to-end training of deep visuomotor policies. J. Mach. Learn. Res. 2016, 17, 1–40. [Google Scholar]

- Shafaei, S.; Loghavi, M.; Kamgar, S. Development and implementation of a human machine interface-assisted digital instrumentation system for high precision measurement of tractor performance parameters. Eng. Agric. Environ. Food 2019, 12, 11–23. [Google Scholar] [CrossRef]

- Khramov, V.V. Development of a Human-Machine Interface Based on Hybrid Intelligence. Int. Sci. J. Mod. Inf. Technol. IT Educ. 2020, 16, 893–900. [Google Scholar] [CrossRef]

- Li, X.; Lloyd, R.; Ward, S.; Cox, J.; Coutts, S.; Fox, C. Robotic crop row tracking around weeds using cereal-specific features. Comput. Electron. Agric. 2022, 197, 106941. [Google Scholar] [CrossRef]

- Fan, X.; Chai, X.; Zhou, J.; Sun, T. Deep learning based weed detection and target spraying robot system at seedling stage of cotton field. Comput. Electron. Agric. 2023, 214, 108317. [Google Scholar] [CrossRef]

- Upadhyay, A.; Sunil, G.C.; Zhang, Y.; Koparan, C.; Sun, X. Development and evaluation of a machine vision and deep learning-based smart sprayer system for site-specific weed management in row crops: An edge computing approach. J. Agric. Food Res. 2024, 18, 101331. [Google Scholar] [CrossRef]

- Jia, W.; Tai, K.; Dong, X.; Ou, M.; Wang, X. Design of and Experimentation on an Intelligent Intra-Row Obstacle Avoidance and Weeding Machine for Orchards. Agriculture 2025, 15, 947. [Google Scholar] [CrossRef]

- Hu, R.; Su, W.-H.; Li, J.-L.; Peng, Y. Real-time lettuce-weed localization and weed severity classification based on lightweight YOLO convolutional neural networks for intelligent intra-row weed control. Comput. Electron. Agric. 2024, 226, 109404. [Google Scholar] [CrossRef]

- El Hafyani, M.; Saddik, A.; Hssaisoune, M.; Labbaci, A.; Tairi, A.; Abdelfadel, F.; Bouchaou, L. Weeds detection in a Citrus orchard using multispectral UAV data and machine learning algorithms: A case study from Souss-Massa basin, Morocco. Remote Sens. Appl. Soc. Environ. 2025, 38, 101553. [Google Scholar] [CrossRef]

- Yu, J.; Long, Q.; Hao, G.; Chuang, L.; Ming, T.; Xiaolu, H.; Qinling, C. Design and experiment of pneumatic paddy intra-row weeding device. J. South China Agric. Univ. 2020, 41, 37–49. [Google Scholar]

- Wang, S.; Yu, S.; Zhang, W.; Wang, X. The identification of straight-curved rice seedling rows for automatic row avoidance and weeding system. Biosyst. Eng. 2023, 233, 47–62. [Google Scholar] [CrossRef]

- Ju, J.; Chen, G.; Lv, Z.; Zhao, M.; Sun, L.; Wang, Z.; Wang, J. Design and experiment of an adaptive cruise weeding robot for paddy fields based on improved YOLOv5. Comput. Electron. Agric. 2024, 219, 108824. [Google Scholar] [CrossRef]

- Monteiro, A.; Santos, S. Sustainable Approach to Weed Management: The Role of Precision Weed Management. Agronomy 2022, 12, 118. [Google Scholar] [CrossRef]

- Mohanty, T.; Pattanaik, P.; Dash, S.; Tripathy, H.P.; Holderbaum, W. Innovative robotic system guided with YOLOv5-based machine learning framework for efficient herbicide usage in rice (Oryza sativa L.) under precision agriculture. Comput. Electron. Agric. 2025, 231, 110032. [Google Scholar] [CrossRef]

- Jiang, W.; Quan, L.; Wei, G.; Chang, C.; Geng, T. A conceptual evaluation of a weed control method with post-damage application of herbicides: A composite intelligent intra-row weeding robot. Soil Tillage Res. 2023, 234, 105837. [Google Scholar] [CrossRef]

- Lottes, P.; Khanna, R.; Pfeifer, J.; Siegwart, R.; Stachniss, C. UAV-based crop and weed classification for smart farming. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3024–3031. [Google Scholar]

- Sinha, J.P. Aerial robot for smart farming and enhancing farmers’ net benefit. Indian J. Agric. Sci. 2020, 90, 258–267. [Google Scholar] [CrossRef]

- Partel, V.; Kakarla, S.C.; Ampatzidis, Y. Development and evaluation of a low-cost and smart technology for precision weed management utilizing artificial intelligence. Comput. Electron. Agric. 2019, 157, 339–350. [Google Scholar] [CrossRef]

- Balafoutis, A.T.; Evert, F.K.V.; Fountas, S. Innovative farming technology trends: Economic and environmental effects, labour impact, and adoption readiness. Agronomy 2020, 10, 743. [Google Scholar] [CrossRef]

- Li, Y.; Guo, Z.; Shuang, F.; Zhang, M.; Li, X. Key technologies of machine vision for weeding robots: A review and benchmark. Comput. Electron. Agric. 2022, 196, 106880. [Google Scholar] [CrossRef]

- Shorewala, S.; Ashfaque, A.; Sidharth, R.; Verma, U. Weed Density and Distribution Estimation for Precision Agriculture Using Semi-Supervised Learning. IEEE Access 2021, 9, 27971–27986. [Google Scholar] [CrossRef]

- Yang, J.; Guo, X.; Li, Y.; Marinello, F.; Ercisli, S.; Zhang, Z. A survey of few-shot learning in smart agriculture: Developments, applications, and challenges. Plant Methods 2022, 18, 28. [Google Scholar] [CrossRef]

- Zhang, J.; Yu, F.; Zhang, Q.; Wang, M.; Yu, J.; Tan, Y. Advancements of UAV and Deep Learning Technologies for Weed Management in Farmland. Agronomy 2024, 14, 494. [Google Scholar] [CrossRef]

- Zhao, F.; Zhang, C.; Geng, B. Deep multimodal data fusion. ACM Comput. Surv. 2024, 56, 1–36. [Google Scholar] [CrossRef]

- Xu, K.; Shu, L.; Xie, Q.; Song, M.; Zhu, Y.; Cao, W.; Ni, J. Precision weed detection in wheat fields for agriculture 4.0: A survey of enabling technologies, methods, and research challenges. Comput. Electron. Agric. 2023, 212, 108106. [Google Scholar] [CrossRef]

- Trong, V.H.; Gwang-Hyun, Y.; Vu, D.T.; Jin-Young, K. Late fusion of multimodal deep neural networks for weeds classification. Comput. Electron. Agric. 2020, 175, 105506. [Google Scholar] [CrossRef]

- Chang, C.-L.; Lin, K.-M. Smart Agricultural Machine with a Computer Vision-Based Weeding and Variable-Rate Irrigation Scheme. Robotics 2018, 7, 38. [Google Scholar] [CrossRef]

- Zhao, C.-T.; Wang, R.-F.; Tu, Y.-H.; Pang, X.-X.; Su, W.-H. Automatic lettuce weed detection and classification based on optimised convolutional neural networks for robotic weed control. Agronomy 2024, 14, 2838. [Google Scholar]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Micikevicius, P.; Narang, S.; Alben, J.; Diamos, G.; Elsen, E.; Garcia, D.; Wu, H. Mixed precision training. arXiv 2017, arXiv:1710.03740. [Google Scholar] [CrossRef]

- Xie, D.; Chen, L.; Liu, L.; Chen, L.; Wang, H. Actuators and sensors for application in agricultural robots: A review. Machines 2022, 10, 913. [Google Scholar] [CrossRef]

- Pantazi, X.-E.; Moshou, D.; Bravo, C. Active learning system for weed species recognition based on hyperspectral sensing. Biosyst. Eng. 2016, 146, 193–202. [Google Scholar] [CrossRef]

- Agrawal, A.; Fischer, M.; Singh, V. Digital Twin: From Concept to Practice. J. Manag. Eng. 2022, 38, 06022001. [Google Scholar] [CrossRef]

- Peladarinos, N.; Piromalis, D.; Cheimaras, V.; Tserepas, E.; Munteanu, R.A.; Papageorgas, P. Enhancing smart agriculture by implementing digital twins: A comprehensive review. Sensors 2023, 23, 7128. [Google Scholar] [CrossRef]

- San, C.T.; Kakani, V. Smart Precision Weeding in Agriculture Using 5IR Technologies. Electronics 2025, 14, 2517. [Google Scholar] [CrossRef]

- Schut, A.G.; Giller, K.E. Sustainable intensification of agriculture in Africa. Front. Agric. Sci. Eng. 2020, 7, 371–375. [Google Scholar] [CrossRef]

- Miranda, J.; Ponce, P.; Molina, A.; Wright, P. Sensing, smart and sustainable technologies for Agri-Food 4.0. Comput. Ind. 2019, 108, 21–36. [Google Scholar] [CrossRef]

- Karunathilake, E.M.B.M.; Le, A.T.; Heo, S.; Chung, Y.S.; Mansoor, S. The Path to Smart Farming: Innovations and Opportunities in Precision Agriculture. Agriculture 2023, 13, 1593. [Google Scholar] [CrossRef]

- Anastasiou, E.; Fountas, S.; Voulgaraki, M.; Psiroukis, V.; Koutsiaras, M.; Kriezi, O.; Lazarou, E.; Vatsanidou, A.; Fu, L.; Di Bartolo, F.; et al. Precision farming technologies for crop protection: A meta-analysis. Smart Agric. Technol. 2023, 5, 100323. [Google Scholar] [CrossRef]

| Author, Reference | Machine Learning or Deep Learning | Crop and Weed Species | Recognition Accuracy | Weed Control Methods | Weed Control Effectiveness |

|---|---|---|---|---|---|

| Sapkota, R. [3] | Self-developed crop row identification (CRI) computer vision algorithm | Crop: Maize; Weeds: all green vegetation outside the maize rows | Maize row detection accuracy: 99.5%; Weed mapping: 35% of grid cells weed-free | Grid-based weed identification and precision herbicide spraying | Application accuracy: 78.4% (actual actuation accuracy in non-sprayed areas) |

| Zheng, S. [23] | Improved YOLOv5s model | Crop: Kale; Weeds: plants other than the crop | Recognition accuracy for kale under motion blur: 96.1% | Electric pendulum-type mechanical weeding | Field optimum: 96.00% weed control, 1.57% seedling injury |

| Han, X. [25] | Improved YOLOv4-Tiny model | Crop: Maize; Weeds: six species, including oxalis and thistle | Average precision: 94.83% | Precision spraying system | Embedded inference on Jetson NX: FP32 mode, accuracy 94.8%, 73 ms; INT8 mode, accuracy 84.2%, 13.6 ms |

| Jiang, H. [26] | CNN feature extraction + GCN-ResNet-101 model | Crops: Corn, lettuce, radish; Weeds: Cirsium, bluegrass, sedge | GCN-ResNet-101 performance: corn dataset 97.80%; lettuce dataset 99.37%; radish dataset 98.93% | Targeted herbicide spraying instead of uniform spraying | Spraying only the weeds reduces herbicide use |

| Arsa, D.M.S. [27] | Improved encoder–decoder CNN model | Crop: Beans; Weeds: detection focused on weed growing points | Weed detection rate: 0.8505; precision: 0.8641 | Laser weeding: spot irradiation of the growing point with a CO2 laser | 1. Environmentally friendly: damages only the growing point, without injuring leaves or roots 2. Positioning error ≤ 15 pixels |

| Visentin, F. [28] | Pre-trained ResNet18 model | Crop: Lactuca sativa; Weeds: Satureja, Taraxacum, etc. | Recognition accuracy: crop 98%; weed 97.8% | The robot pulls weeds and deposits them in a collection bin | Weed removal success rate: 92%; working efficiency: 10 plants per minute |

| Arakeri, M.P. [29] | Weed identification using artificial neural networks (ANN) | Crop: Onion; Weeds: Asphodelus fistulosus | Accuracy: 98.64%; sensitivity: 96.83%; specificity: 99.57% | Automatically controlled herbicide sprayer | Sprayer applies herbicide according to the detected weed density |

| Zhao, X. [30] | Support vector machine (SVM) with fused features: skeleton point-to-line ratio and maximum inscribed circle radius | Crop: Cabbage; Weeds: Portulaca oleracea, Galinsoga parviflora | Average field recognition accuracy: cabbage 95.0%; weeds 93.5% | Targeted spraying system with an active light source | Effective spray rate: 92.9% |

| Jin, X. [31] | Weed identification based on CenterNet, optimized with a genetic algorithm | Crop: Cabbage; Weeds: green objects outside the detection boxes | CenterNet detection accuracy: 95.6% | Herbicide spraying scaled to the size of the identified weed area | Reduces the sprayed area; weed segmentation accuracy of 92.7% under natural conditions |

| Tufail, M. [32] | 1. SVM 2. Customized ResNet18 + SVM 3. Single Shot Detector (SSD) with MobileNet v2 | Crop: Tobacco; Weeds: common broadleaf and narrowleaf weeds in the field | SVM: 96% accuracy; ResNet18 + SVM: 100% accuracy; SSD-MobileNet v2: 81% accuracy | Solenoid valves switched for directional spraying based on SVM classification results | Reduces pesticide use by 30–40%; effective spraying rate: 92.9% |

| Lin, Y. [33] | Improved multitask YOLO algorithm | Crop: Pineapple; Weeds: all weed types in pineapple fields | Detection precision (P): 84.37%; segmentation mIoU: 77.80%, accuracy: 86.35% | Precision herbicide spraying | Indoor experiment: 98% of weeds correctly sprayed; 10.1% survived |

| Xu, Y. [34] | W-YOLOv5 | Crops: Wheat, radish; Weeds: multiple weed species in crop fields | Crop detection mAP@0.5: 87.6%; weed detection: 98% | Precision herbicide spraying based on weed identification results | Field test: spraying accuracy 90.32% at 4 km/h; flow-rate control error ≤ 2% |

| Karim, M.J. [35] | Improved YOLOv8n | Crop: Cotton; Weeds: waterhemp, Calystegia hederacea (bindweed), etc. | Overall mAP@0.5: 97.6%; precision: 94.5% | Weeds identified and located with lasers, then treated with precision herbicide application | Laser positioning accuracy: 92.3% |

| Herterich, N. [36] | Lightweight weed detection model optimized for an NPU | Crops: Cotton, sugar beet; Weeds: field weeds, etc. | Overall mAP@0.5: 97.6%; precision: 94.5% | NPU-based real-time weed localization and precision herbicide spraying | Pesticide coverage efficiency on diseased leaves improved by 65.7%; pesticide waste reduced by 10–55% |

| Zhang, X. [37] | Improved YOLOv8-Pose model | Crop: Corn | Average precision (AP): 0.804; mAP@0.5: 0.957 | Finger weeder controlled by the detection results to remove weeds along the row | Effective weed control rate (EWR): 95.6% |

| Xiang, M. [38] | Enhanced YOLOv5 model | Crop: Lettuce; Weeds: amaranth, caper | Accuracy: 99.1% | S-shaped flexible weed cutter for intra-row and inter-row weeding | Average weed control rate: 96.87% |

| Utstumo, T. [39] | Drop-on-demand (DoD) precision spraying system | Crop: Carrots; Weeds: quinoa, fescue, etc. | The vision system detects weeds and sprays them precisely, achieving 100% weed control | Machine-vision-guided precision spraying plus inter-row mechanical weeding | Field: 5.3 µg glyphosate per drop gave 100% weed control; herbicide use reduced by more than 90% compared with conventional methods |

| Azghadi, M.R. [40] | MobileNetV2-based image classification model | Crops: Sugarcane, green beans; Weeds: balsam, grass weeds | MobileNetV2 model: average weed control rate of 95% | Computer-vision-based precision spraying | Herbicide use reduced by 35%; weed control efficiency: 97% |

| Sassu, A. [41] | Single-stage object detection model based on a feature pyramid network (FPN) | Crop: Cynara cardunculus L. | FPN model accuracy: 93.2%; YOLOv5n model accuracy: 98.7% | Precision spraying with unmanned aerial systems (UAS) | Pesticide use reduced by 35–65%; foliar coverage efficiency (SR): 91.5–95.7% |

| Zhao, P. [42] | DIN-LW-YOLO, an improved model based on YOLOv8-pose | Crop: Strawberries; Weeds: field weeds | mAP: 88.5%; weed growing-point mAP: 85.0% | Weed coordinates determined from the detection results; weeds removed with laser equipment | Field trial: 92.6% weed control, 1.2% seedling injury; 100% weed mortality 3 days after laser treatment |

| Li, J. [43] | Crop–weed classification algorithm based on multi-sensor fusion | Crop: Tomatoes; Weeds: snakeberry, salvia, etc. | Classification accuracy: 95.43%; spraying efficiency: 99.96% | Real-time herbicide spraying triggered by sensor signals | Weed control rate: 99.96%; average number of sprays: 5.81 |

| Jin, X. [44] | Grid-cell classification using EfficientNet-v2, ResNet, and VGGNet | Crop: Bermudagrass turf; Weeds: annual bluegrass, dandelion | Weed detection F1: EfficientNet-v2 99.6%; ResNet 99.6%; VGGNet 98.5% | Precision herbicide spraying based on HWCS neural network detection results | Weed control with precision spraying was equivalent to broadcast spraying |

| Quan, L. [45] | YOLOv3-based object detection model | Crop: Maize; Weeds: broadleaf weeds, grass weeds | Maize detection accuracy: 98.5%; average weed detection accuracy: 90.9% | Vertical rotary weed cutter for precise weed removal based on the detection results | Single-crop cultivation: weed control effectiveness 85.91%; crop damage 1.17% |
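
The row for [25] shows the FP32/INT8 trade-off typical of embedded deployment. INT8 inference ran about five times faster (13.6 ms vs 73 ms) but lost roughly ten percentage points of accuracy. The sketch below shows where such a loss originates using the simplest scheme, symmetric per-tensor INT8 quantization of one weight tensor in NumPy. The tensor and the scheme are illustrative assumptions, not the procedure used in [25]. Deployment toolchains such as TensorRT also calibrate activation ranges, which this sketch omits.

```python
import numpy as np


def quantize_int8(x: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric per-tensor INT8 quantization: map [-max|x|, max|x|] onto [-127, 127]."""
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize_int8(q: np.ndarray, scale: float) -> np.ndarray:
    """Map INT8 codes back to an FP32 approximation of the original values."""
    return q.astype(np.float32) * np.float32(scale)


# Hypothetical FP32 conv weights (64 filters, 3 input channels, 3 x 3 kernels).
rng = np.random.default_rng(0)
weights = rng.normal(0.0, 0.05, size=(64, 3, 3, 3)).astype(np.float32)

q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_err = float(np.max(np.abs(weights - restored)))

# Rounding error is at most half a quantization step; storage shrinks 4x.
print(f"scale={scale:.6f}  max_abs_error={max_err:.6f}  bytes: {weights.nbytes} -> {q.nbytes}")
```

Each value is off by at most half a quantization step. In a full network these per-value errors accumulate across layers and activations, which is one source of the accuracy drop seen in INT8 mode.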

| Model Architecture | Application Scenarios | Recognition Accuracy | Data Sources |

|---|---|---|---|

| VGG-16 | Strawberry field variable spraying | 93% | [21] |

| Inception V2 | Drone weed detection | 98% | [47] |

| Xception | Early classification of cornfields | 97.83% | [48] |

| ResNet-50 + SegNet | Semantic segmentation of rapeseed fields | mIoU 0.8288 | [49] |

| Model Architecture | Application Scenarios | mAP@0.5 | Inference Time | Data Sources |

|---|---|---|---|---|

| YOLOv4-Tiny | Weed detection in peanut fields | 94.54% | 73 ms (FP32) | [55] |

| RetinaNet | Weed detection in a rice field | 94.10% | 41.1 ms | [56] |

| Faster R-CNN | Weed detection in farmland | 78.20% | 218 ms | [58] |

| YOLOv8 | Weed detection in farmland | 85.60% | 22.3 ms | |

| YOLOv9 | Weed detection in farmland | 93.50% | 18.7 ms | |

| YOLOv11 | Weed detection in farmland | 89.10% | 13.5 ms | |

| Improved YOLOv4 | Weed detection in corn fields | 86.89% | | [59] |

| YOLOv7 | Multi-species tea bud detection | 87.10% | | [60] |
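
Most detection results above are reported as mAP@0.5. Under this metric, a prediction counts as a true positive only if its intersection over union (IoU) with a not-yet-matched ground-truth box is at least 0.5. The mAP is then the mean of the per-class average precision (AP).

The minimal sketch below computes IoU and single-class AP using all-point interpolation of the precision–recall curve (the Pascal VOC-style convention). Toolkits such as COCO use a different interpolation rule, so this is illustrative rather than the exact evaluation behind each entry. The boxes and scores are hypothetical.

```python
import numpy as np


def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds, gts, iou_thr=0.5):
    """Single-class AP. preds: list of (score, box); gts: list of ground-truth boxes."""
    preds = sorted(preds, key=lambda p: p[0], reverse=True)  # highest confidence first
    matched = [False] * len(gts)
    tp = np.zeros(len(preds))
    for i, (_, box) in enumerate(preds):
        ious = [box_iou(box, g) for g in gts]
        if ious:
            j = int(np.argmax(ious))
            # True positive only if IoU clears the threshold and the GT box is still free.
            if ious[j] >= iou_thr and not matched[j]:
                matched[j] = True
                tp[i] = 1.0
    cum_tp = np.cumsum(tp)
    recall = cum_tp / max(len(gts), 1)
    precision = cum_tp / np.arange(1, len(preds) + 1)
    # All-point interpolation: take the precision envelope, then sum the area under it.
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]
    steps = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[steps + 1] - mrec[steps]) * mpre[steps + 1]))


# Hypothetical example: two weeds, three detections (one false positive).
gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (21, 21, 30, 30))]
ap50 = average_precision(preds, gts)
print(f"AP@0.5 = {ap50:.4f}")  # 0.5 * 1.0 + 0.5 * (2/3) ≈ 0.8333
```

Averaging this AP over all weed and crop classes gives mAP@0.5. Repeating it at IoU thresholds from 0.5 to 0.95 and averaging gives the stricter mAP@0.5:0.95.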

| Model | Training Weights | Pr | mAP50 | mAP0.5–0.9 | F1 | TIME (h) | Size (MB) |

|---|---|---|---|---|---|---|---|

| YOLOv5 | Pretrained weights | 81% | 86% | 64% | 83% | 81% | 14.10 |

| | Pretrained weights after training | 86% | 90% | 61% | 83% | 86% | 13.70 |

| YOLOv8 | Pretrained weights | 76% | 88% | 66% | 72% | 76% | 6.23 |

| | Pretrained weights after training | 78% | 95% | 76% | 76% | 78% | 5.98 |

| YOLOv10 | Pretrained weights | 82% | 87% | 65% | 59% | 82% | 31.40 |

| | Pretrained weights after training | 87% | 92% | 73% | 75% | 87% | 15.80 |

| YOLOv11 | Pretrained weights | 81% | 88% | 66% | 84% | 81% | 5.35 |

| | Pretrained weights after training | 82% | 88% | 66% | 84% | 82% | 5.22 |

| YOLOv12 | Pretrained weights | 82% | 87% | 67% | 86% | 82% | 5.25 |

| | Pretrained weights after training | 87% | 88% | 67% | 84% | 87% | 5.23 |

| Model Architecture | Application Scenarios | mIoU | Key Technologies | Data Sources |

|---|---|---|---|---|

| U-Net | Beet field | 88.59% | Skip-Connection Feature Fusion | [11] |

| Improved R-FCN | Beet field | 89% | Cross-Scale Feature Fusion | [64] |

| Swin-DeepLab | Soybean field | 91.53% | Swin Transformer + CBAM Attention | [65] |

| GT-DeepLabv3+ | Rice paddy | 64.91% | MobileNet v2 + GS-ASPP | [66] |

| Sub-Area Machine Vision | Cotton field | 89.4% | Positional characteristics + Morphological analysis | [67] |

| Color Feature Segmentation | Cotton field | 92.9% | B-R standard deviation threshold + Otsu thresholding | [68] |
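
The segmentation models above are compared by mean intersection over union (mIoU). For each class, IoU = TP / (TP + FP + FN), and mIoU averages this over classes. The short NumPy sketch below computes mIoU from a confusion matrix. It assumes integer label maps with hypothetical classes 0 = soil, 1 = crop, 2 = weed. Some of the listed methods may instead report pixel accuracy, which this function does not compute.

```python
import numpy as np


def mean_iou(pred: np.ndarray, target: np.ndarray, num_classes: int) -> float:
    """mIoU between two integer label maps of the same shape."""
    pred = pred.astype(np.int64).ravel()
    target = target.astype(np.int64).ravel()
    # Confusion matrix: rows = ground-truth class, columns = predicted class.
    cm = np.bincount(target * num_classes + pred, minlength=num_classes**2)
    cm = cm.reshape(num_classes, num_classes)
    tp = np.diag(cm)
    union = cm.sum(axis=0) + cm.sum(axis=1) - tp  # TP + FP + FN per class
    present = union > 0  # skip classes absent from both maps
    return float(np.mean(tp[present] / union[present]))


# Hypothetical 2 x 3 label maps: 0 = soil, 1 = crop, 2 = weed.
target = np.array([[0, 0, 1], [2, 2, 1]])
pred = np.array([[0, 1, 1], [2, 0, 1]])
print(mean_iou(pred, target, num_classes=3))  # per-class IoU 1/3, 2/3, 1/2 -> about 0.5
```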

| Technical Program | Application Scenarios | Key Indicator | Data Sources |

|---|---|---|---|

| DoD Robotics | Carrot field | Reduction in herbicide use by 90% | [39] |

| RF + RGB | Chili field | Detection accuracy 96% | [69] |

| SVM + RGB | Chili field | Detection accuracy 94% | [69] |

| YOLOv5 + RGB | Cotton field | mAP 0.82 | [70] |

| RGB-D + 3-Channel Network | Wheat field | Grass weeds (Gramineae) mAP 36.1%; broadleaf weeds mAP 42.9% | [72] |
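
The RGB-D entries combine two modalities. In decision-level (late) fusion, one of the two strategies listed for the Xu, K. [118] network, each branch classifies independently and only their outputs are merged.

The NumPy sketch below averages the two branches' softmax probabilities with a weight `w_rgb`. The logits, class set, and equal weighting are hypothetical. Real systems may learn the fusion weights or use other merging rules.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax, shifted by the row max for numerical stability."""
    e = np.exp(logits - logits.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


def late_fusion(rgb_logits, depth_logits, w_rgb=0.5):
    """Decision-level fusion: weighted average of the two branches' class probabilities."""
    probs = w_rgb * softmax(rgb_logits) + (1.0 - w_rgb) * softmax(depth_logits)
    return probs, probs.argmax(axis=-1)


# Hypothetical logits for one plant patch; classes: 0 = crop, 1 = grass weed, 2 = broadleaf weed.
rgb_logits = np.array([[2.0, 1.9, 0.1]])    # RGB branch: crop vs grass weed is ambiguous
depth_logits = np.array([[0.2, 2.5, 0.1]])  # depth branch (hypothetical) favours grass weed
probs, labels = late_fusion(rgb_logits, depth_logits)
print(probs.round(3), labels)  # fused decision: class 1 (grass weed)
```

Feature-level fusion, the other strategy named for [118], would instead concatenate intermediate feature maps from both branches before a shared classifier.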

| Technical Program | Application Scenarios | Key Indicator | Data Sources |

|---|---|---|---|

| Spectral–Spatial Fusion SVM Classification | Corn field | Detection accuracy 89% | [73] |

| DeepLabv3 + Probabilistic Modelling | Corn field | Multi-spectral mIoU 82.90% | [74] |

| Blue LED Fluorescence Sensor | Generic Scenarios | Vegetation detection accuracy 100% | [75] |

| RGB–Multispectral Fusion + GPR Monitoring | Rice paddy | 20% improvement in leaf nitrogen concentration (LNC) estimation accuracy | [76] |

| Technical Program | Application Scenarios | Key Indicator | Data Sources |

|---|---|---|---|

| 61-band hyperspectral + CNN | Weed classification | Accuracy better than RGB data | [77] |

| Superpixel Spectral Features + MLP | Classification of weeds in rangelands | Accuracy 89.1% | [78] |

| HIT + CNN Transfer Learning | Soybean chlorophyll estimation | R² = 0.78 | [79] |

| SOM + RBF Classifiers | Crop vegetation classification | Accuracy 88.5% | [80] |

| HSI + SWAE + GWO-SVR | Apple soluble solids content (SSC) prediction | Rp² = 0.9436, RMSEP = 0.1328 | [82] |

| Visible–near-infrared (Vis-NIR) hyperspectral imaging | Detection of external defects in nectarines | Accuracy: PLS 89.73%; LS-SVM 94.45%; ELM 88.62% | [83] |

| SERS + CNN | Toxin detection in corn oil | Label-free detection of trace ZEN (zearalenone) | [84] |
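
Several hyperspectral rows report regression quality as R² or Rp² and as RMSEP. Here Rp² is the coefficient of determination on the prediction set, and RMSEP is the root-mean-square error of prediction. A minimal NumPy sketch of both, using hypothetical measured and predicted values, follows. Some papers report the squared Pearson correlation instead, which can differ from the coefficient of determination computed here.

```python
import numpy as np


def r2_rmse(y_true, y_pred):
    """Coefficient of determination (R^2) and root-mean-square error on a test set."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    resid = y_true - y_pred
    ss_res = np.sum(resid**2)                     # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean())**2)  # total sum of squares
    return float(1.0 - ss_res / ss_tot), float(np.sqrt(np.mean(resid**2)))


# Hypothetical SSC values (°Brix): measured vs predicted on a prediction set.
r2_p, rmsep = r2_rmse([10, 12, 14, 16], [10.5, 11.5, 14.5, 15.5])
print(f"Rp2 = {r2_p:.4f}, RMSEP = {rmsep:.4f}")  # Rp2 = 0.9500, RMSEP = 0.5000
```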

| Model Architecture | Core Technology Features | Application Scenarios | mAP@0.5 | Parameters | Inference Speed | Data Sources |

|---|---|---|---|---|---|---|

| YOLOv8n + CBAM | Attention Module + Lightweight Components | Cotton field | 97.6% | | 13.84 FPS | [35] |

| YOLOv8s + MobileNetV3 | Depthwise Separable Convolution + Attention Mechanism | Cotton field | 82% | 38% of the original model | | [52] |

| EM-YOLOv4-Tiny | Mixed-precision quantization + multi-scale detection | Peanut field | 90% | 40% reduction | 73 ms (FP32) | [55] |

| 5-Layer CNN | Customized Convolutional Architecture + Model Quantization | Generic Scenarios | 95.12% | 0.012 GB | 16.754 ms | [97] |

| YOLO-WDNet | Feature Fusion Optimization + Loss Function Design | Cotton field | 97.8% | | | [99] |

| YOLOv8-ECFS | EfficientNet + Focal_SIoU + CA | Soybean field | 95.0% | GFLOPs ↓ 11.1 G | | [100] |
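
Several lightweight models above shrink parameter counts by borrowing MobileNet-style depthwise separable convolutions. In the YOLOv8s + MobileNetV3 row, the model retains 38% of the original parameters. A depthwise separable layer replaces one dense k × k convolution with a per-channel k × k convolution followed by a 1 × 1 pointwise convolution.

The arithmetic below uses a hypothetical 128 → 256 channel layer with bias terms ignored. The per-layer saving it shows is larger than the whole-model figure in [52], because necks, heads, and other layers are not all replaced.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a k x k convolution mapping c_in to c_out channels (bias ignored)."""
    return c_in * c_out * k * k


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Depthwise k x k conv (one filter per input channel) followed by a 1 x 1 pointwise conv."""
    depthwise = c_in * k * k
    pointwise = c_in * c_out
    return depthwise + pointwise


# Hypothetical layer: 128 -> 256 channels, 3 x 3 kernel.
std = standard_conv_params(128, 256, 3)        # 294,912
sep = depthwise_separable_params(128, 256, 3)  # 1,152 + 32,768 = 33,920
print(std, sep, round(sep / std, 4))           # ratio = 1/c_out + 1/k^2 ≈ 0.115
```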

| Model Architecture | Application Scenarios | Core Technology | mAP@0.5 | Inference Speed | Parameters | Data Sources |

|---|---|---|---|---|---|---|

| MobileNetV2 + Transformer | Sugar cane field | Tile-Based Classification + Hardware Acceleration | | 18.6 FPS | 35% reduction | [40] |

| Swin Transformer + UNet | Soybean field | Shifted-Window Attention + CBAM | 91.53% | | 40% reduction | [65] |

| ViT-Base | Weed classification | Image Patch Sequence + Self-Attention | 92.1% | 28.7 FPS | 65 M | [101] |

| RT-DETR-l | Corn field | Hybrid Query Strategy + NMS-free | 91.2% | 42.3 FPS | 32.5 M | [102] |
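
The RT-DETR-l row is marked NMS-free. YOLO-style detectors emit many overlapping candidate boxes and rely on non-maximum suppression (NMS) as a post-processing step. The cost of that step and its IoU threshold both affect latency and recall. DETR-family detectors such as RT-DETR are trained with one-to-one matching, so each object yields a single prediction and the step is dropped.

For reference, a minimal greedy NMS in NumPy follows. It is a generic version, not the implementation of any model in the table, and the boxes and scores are hypothetical.

```python
import numpy as np


def nms(boxes: np.ndarray, scores: np.ndarray, iou_thr: float = 0.5) -> list[int]:
    """Greedy NMS. boxes: (N, 4) array of (x1, y1, x2, y2); returns indices kept."""
    x1, y1, x2, y2 = boxes.T
    areas = (x2 - x1) * (y2 - y1)
    order = np.argsort(scores)[::-1]  # highest score first
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        # Overlap of the kept box with every remaining candidate.
        w = np.clip(np.minimum(x2[i], x2[rest]) - np.maximum(x1[i], x1[rest]), 0, None)
        h = np.clip(np.minimum(y2[i], y2[rest]) - np.maximum(y1[i], y1[rest]), 0, None)
        inter = w * h
        iou = inter / (areas[i] + areas[rest] - inter)
        order = rest[iou < iou_thr]  # drop duplicates of the kept box
    return keep


# Hypothetical detections: two overlapping boxes on one weed, one box on another weed.
boxes = np.array([[0, 0, 10, 10], [1, 1, 11, 11], [50, 50, 60, 60]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
print(nms(boxes, scores))  # [0, 2]: box 1 (IoU ≈ 0.68 with box 0) is suppressed
```

The threshold is a tuning trade-off. With `iou_thr=0.7`, the same input keeps all three boxes, so two detections are reported for one weed.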

| Authors, Reference | Model | Modelling Improvements | Performance |

|---|---|---|---|

| Bah, M.D. [116] | Improved ResNet18 convolutional neural network | 1. Core method: improved ResNet18 CNN combined with transfer learning. 2. Unsupervised data annotation: training data generated automatically through crop row detection and superpixel segmentation. | 1. Spinach field: unsupervised labelling AUC 94.34%, supervised labelling AUC 95.70%. 2. Bean field: unsupervised labelling AUC 88.73%, supervised labelling AUC 94.84%. |

| Khan, S. [117] | Improved Faster R-CNN | Replaced the VGG16 backbone with ResNet-101 and increased the number of anchors from 9 to 16. | Average weed identification accuracy: 95.3%; overall average accuracy: 94.73% |

| Xu, K. [118] | Three-channel deep learning network based on RGB-D images | 1. Recode single-channel depth images into three-channel PHA images. 2. Integrate multimodal information through feature-level and decision-level fusion. | Grass weeds: mAP 36.1%; broadleaf weeds: mAP 42.9%; overall detection accuracy: 89.3% |

| Ahmad, A. [119] | VGG16, ResNet50, InceptionV3, and YOLOv3 models | 1. Image classification: transfer learning in the Keras and PyTorch frameworks, replacing the output layer with a 4-node softmax layer. 2. Object detection: Darknet-53 feature extractor, with images resized to 416 × 416 pixels during training. | Image classification accuracy: VGG16 and ResNet50 97.80%; InceptionV3 96.70%. Object detection mean average precision: 54.3% |

| Urmashev, B. [120] | Improved YOLOv5 | ECA-Net attention module introduced into YOLOv5 to strengthen inter-channel feature interaction and improve small-target detection. | Per-category accuracy: 82–92%; mAP@0.5: 78.1% |

| Niu, W. [121] | YOLOv8-ECFS | 1. Replace the backbone with EfficientNet-B0, introducing the MBConv module and the SENet attention mechanism. 2. Use the Focal_SIoU loss function. 3. Add a coordinate attention (CA) module after the C2f module in the neck. | 1. Overall accuracy: 92.2%. 2. Typical weeds: clover and alfalfa (CHW) mAP improved by 5.2%; soybean seedlings mAP improved by 0.8% |

| Khan, Z. [122] | Improved YOLOv7 algorithm | 1. Integrate lightweight convolutional layers into the backbone to enhance feature extraction. 2. Introduce squeeze-and-excitation (SE) blocks and batch normalization to combine spatial and channel information. 3. Combine an adaptive gradient optimizer with Lasso regularization to improve generalization. 4. Replace activation functions with ELU and GELU to improve convergence and nonlinear expressiveness. | Compared with the original YOLOv7: precision +3.2%; recall +6.2%; mAP@0.5 +1.6%; mAP@0.5:0.95 +7.1%; F1-score +5% |
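
Several models above add channel attention. In a squeeze-and-excitation (SE) block, as listed for the improved YOLOv7 [122], the block works in three steps:

1. Each channel is summarized by global average pooling.
2. The summary passes through two fully connected layers with reduction ratio r.
3. The resulting sigmoid weights rescale the channels.

ECA-Net [120] replaces the two fully connected layers with a lightweight 1D convolution across channels. The forward-pass sketch below uses NumPy with random, untrained weights; it illustrates the mechanism only and is not code from either paper.

```python
import numpy as np


def se_block(x, w1, b1, w2, b2):
    """Squeeze-and-excitation on a (C, H, W) feature map.

    w1: (C // r, C) and w2: (C, C // r) are the two fully connected layers,
    where r is the channel-reduction ratio.
    """
    z = x.mean(axis=(1, 2))                   # squeeze: global average pooling -> (C,)
    s = np.maximum(w1 @ z + b1, 0.0)          # excitation: FC + ReLU -> (C // r,)
    a = 1.0 / (1.0 + np.exp(-(w2 @ s + b2)))  # FC + sigmoid -> channel weights in (0, 1)
    return x * a[:, None, None], a            # rescale each channel of x


# Hypothetical feature map and randomly initialised (untrained) weights.
rng = np.random.default_rng(0)
C, r = 16, 4
x = rng.normal(size=(C, 8, 8))
w1, b1 = rng.normal(scale=0.1, size=(C // r, C)), np.zeros(C // r)
w2, b2 = rng.normal(scale=0.1, size=(C, C // r)), np.zeros(C)
y, a = se_block(x, w1, b1, w2, b2)
print(y.shape, a.shape)  # (16, 8, 8) (16,)
```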

| Authors, Reference | Weed Control Mechanism | Weeding Method | Crops and Weeds | Weed Control Effect |
|---|---|---|---|---|
| Melander, B. [16] | Mechanical weeding simulator: side blade length 3 cm, pneumatic drive. Flame weeding simulator: single nozzle, height 7.5 cm, angle 50°, propane flow rate 1.0 L/min | Mechanical weeding with side blades; flame weeding with a propane flame sprayer | Crop: direct-seeded sugar beet; weeds: inter-row weeds | Mechanical hoe: weeds removed to within 1 cm of the crop center. Flame weeding, propane usage: ≤ 0.74 kg/km at the two-leaf stage, ≤ 1.49 kg/km at the four-leaf stage, ≤ 5.95 kg/km at the six-leaf stage |
| Mwitta, C. [53] | Mobile platform: Ackermann-steered four-wheeled robot; robotic arm: 2D Cartesian arm; laser module: pan-and-tilt mechanism driven by servo motors | Control: visual servoing to locate weed stems, PID control of robot position. Strategy: jittering the laser beam (10° swing) to enlarge the contact area; 2 s per shot (10 J) | Crop: cotton; weed: Palmer amaranth (Amaranthus palmeri) | Non-tracking mode: weed kill rate 47%, cycle time 9.5 s per plant; weeds were killed effectively when the laser was tilted 10° downward |
| Abd Ghani [54] | UAV: multi-rotor agricultural drone with GPS positioning; atomizing sprayer: backpack-mounted electric sprayer | Drone spraying: flight speed 18 km/h, spray width 2 m, mist sprayer. Spraying: flow rate 0.05–2.64 L/min | Crop: direct-seeded rice; weeds: grasses (barnyardgrass, miller’s weed) | Best weed control efficiency: 96.26% with Novlect; rice yield in Novlect-treated plots 38% higher than in untreated plots |
| Marx, C. [72] | Hardware: CO₂ laser system with a coaxial HeNe laser for positioning; key parameter: energy density up to 5.00 J/mm² | Targeted irradiation with the CO₂ laser, with dynamic adjustment of laser energy and spot diameter | Weeds: monocotyledonous (barnyard grass); dicotyledonous (Amaranthus antiquus) | Lethal dose (95% success rate): lethality above 90% at laser energy ≥ 54 J |
| Kerpauskas, P. [100] | Experimental tractor unit: fourth-generation mobile steam (water vapor) weeder | Steam application: temperature-controlled steam contacts the weeds in short 1–2 s bursts | Crops: onion, barley, maize; weeds: other annual weeds | Destruction of weed shoots: up to 98%; weed dry-weight reduction: 40–57% |
| Jia, W. [140] | Mechanical structure: parallelogram linkage with hydraulic drive cylinder; sensing system: obstacle-detection rod and displacement sensor | Shovel-type mechanical weeding (spade-type weeding shovel, 3 cm working depth) with a hydraulic automatic obstacle-avoidance system | Crop: grapevines; weeds: weeds between vines in the row | Weed cover reduced by 86.8% after optimization |
| Sebastian, S. [149] | Main structure: handle, rollers with V-shaped spikes, fixed rake, and float; total mass 5.4 kg; operating speed 1.9–2.1 km/h | Manual push–pull mechanical weeding, with roller spikes and a fixed rake working together | Crop: rice; weeds: row weeds | Weeding efficiency: 88–95%; actual field capacity: 0.038–0.04 ha/h; power requirement: 0.032–0.036 hp |
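
The laser entries above report dose in different forms: a shot energy and duration in [53], and an energy threshold and an energy-density limit in [72]. These quantities are related through E = P·t and fluence = E / spot area. The minimal sketch below assumes a constant-power beam with a uniform circular spot. This is a simplification, because real beams are usually closer to Gaussian. The function names and the 2 mm spot diameter are hypothetical and do not come from [53] or [72]. Because absorption depends on wavelength, a threshold measured with one laser (e.g., the CO₂ laser in [72]) should not be assumed to transfer to another.

```python
import math


def shot_energy_j(power_w: float, duration_s: float) -> float:
    """Energy of a constant-power exposure: E = P * t."""
    return power_w * duration_s


def average_power_w(energy_j: float, duration_s: float) -> float:
    """Mean optical power implied by a dose delivered over a given time."""
    return energy_j / duration_s


def fluence_j_per_mm2(energy_j: float, spot_diameter_mm: float) -> float:
    """Mean energy density over a circular spot (uniform beam assumed)."""
    area_mm2 = math.pi * (spot_diameter_mm / 2.0) ** 2
    return energy_j / area_mm2


def plants_per_hour(cycle_time_s: float) -> float:
    """Plants treated per hour at a fixed per-plant cycle time."""
    return 3600.0 / cycle_time_s


if __name__ == "__main__":
    # Values reported for Mwitta, C. [53]: 10 J per 2 s shot, 9.5 s cycle per plant.
    print(average_power_w(10.0, 2.0))              # 5.0 (W)
    print(round(plants_per_hour(9.5), 1))          # 378.9 (plants/h)
    # Hypothetical 2 mm spot diameter, not reported in the table:
    print(round(fluence_j_per_mm2(10.0, 2.0), 2))  # 3.18 (J/mm²)
```

For example, the 10 J, 2 s shot in [53] implies a mean power of about 5 W. Its 9.5 s cycle time caps throughput at roughly 379 plants per hour.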

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gao, X.; Gao, J.; Qureshi, W.A. Applications, Trends, and Challenges of Precision Weed Control Technologies Based on Deep Learning and Machine Vision. Agronomy 2025, 15, 1954. https://doi.org/10.3390/agronomy15081954
