Image and Point Cloud-Based Neural Network Models and Applications in Agricultural Nursery Plant Protection Tasks

Abstract

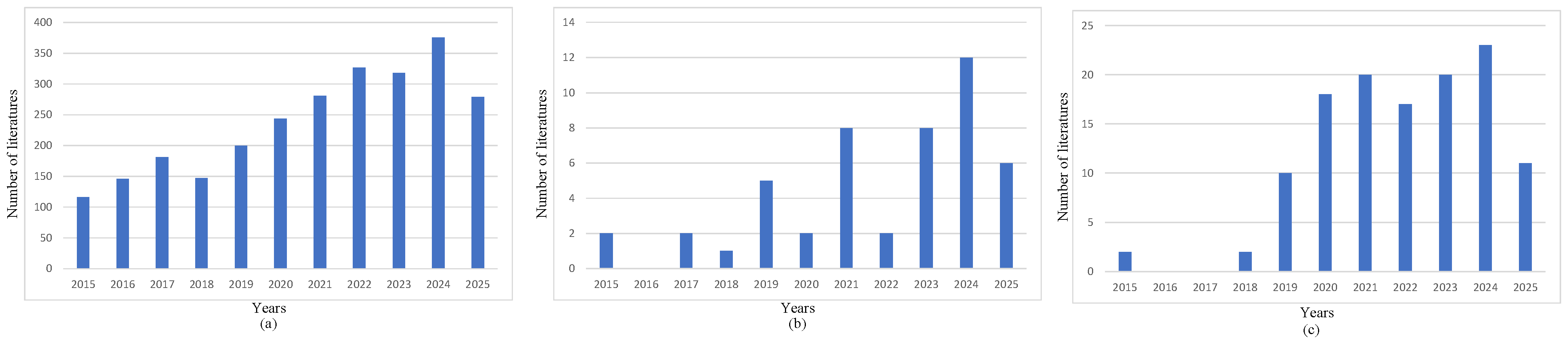

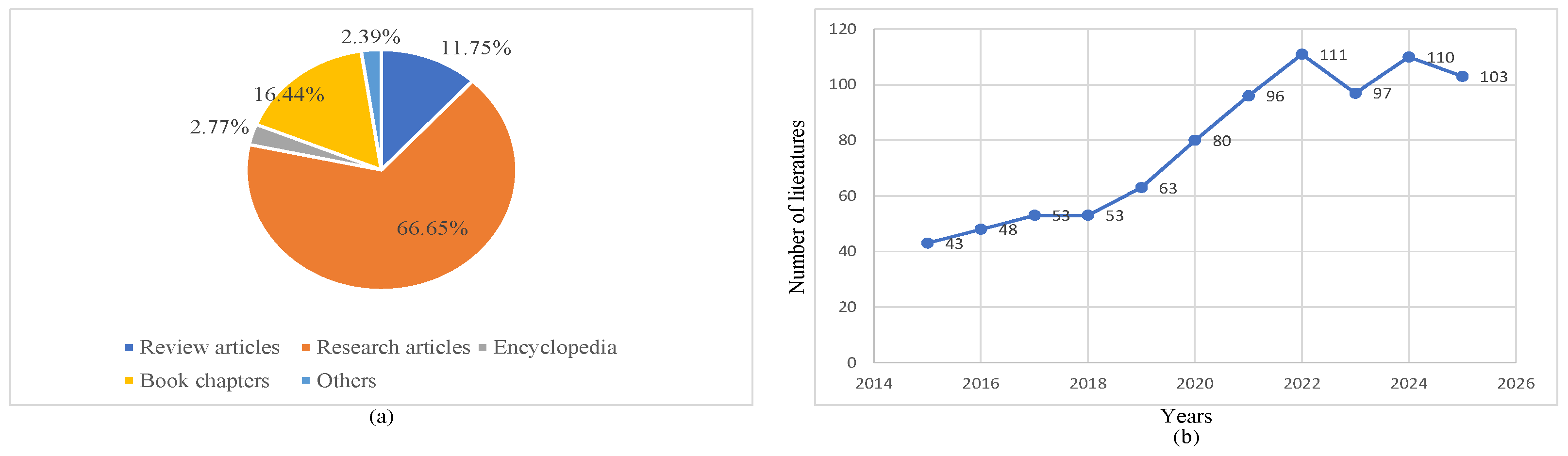

1. Introduction

2. Image-Based Neural Network Models

2.1. Classification Models

2.1.1. Visual Geometry Group Network (VGG)

2.1.2. GoogLeNet

2.1.3. ResNet

2.1.4. MobileNet Series

2.1.5. EfficientNet Series

2.2. Segmentation Models

2.2.1. U-Net Series

2.2.2. DeepLab Series

2.2.3. SegFormer

2.2.4. SegNet

2.3. Object Detection Models

2.3.1. R-CNN Series

2.3.2. YOLO Series

2.3.3. Single Shot MultiBox Detector (SSD)

3. Point Cloud-Based Neural Network Models

3.1. Multi-View-Based Neural Network Models

3.2. Voxel and Mesh-Based Neural Network Models

3.3. Original Point Cloud-Based Neural Network Models

4. Image and Point Cloud-Based Neural Network Models for Plant Protection

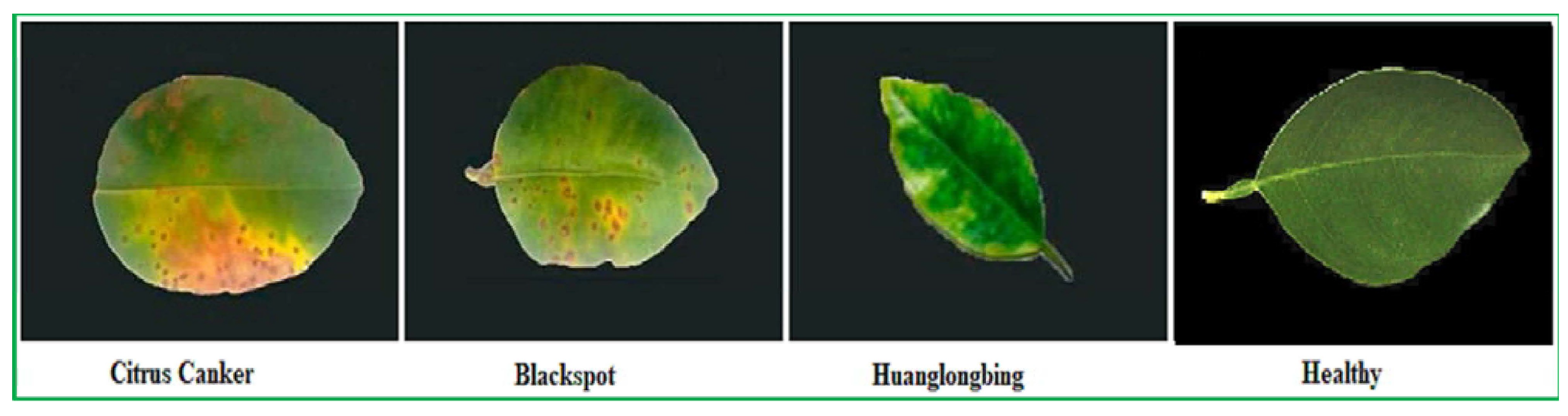

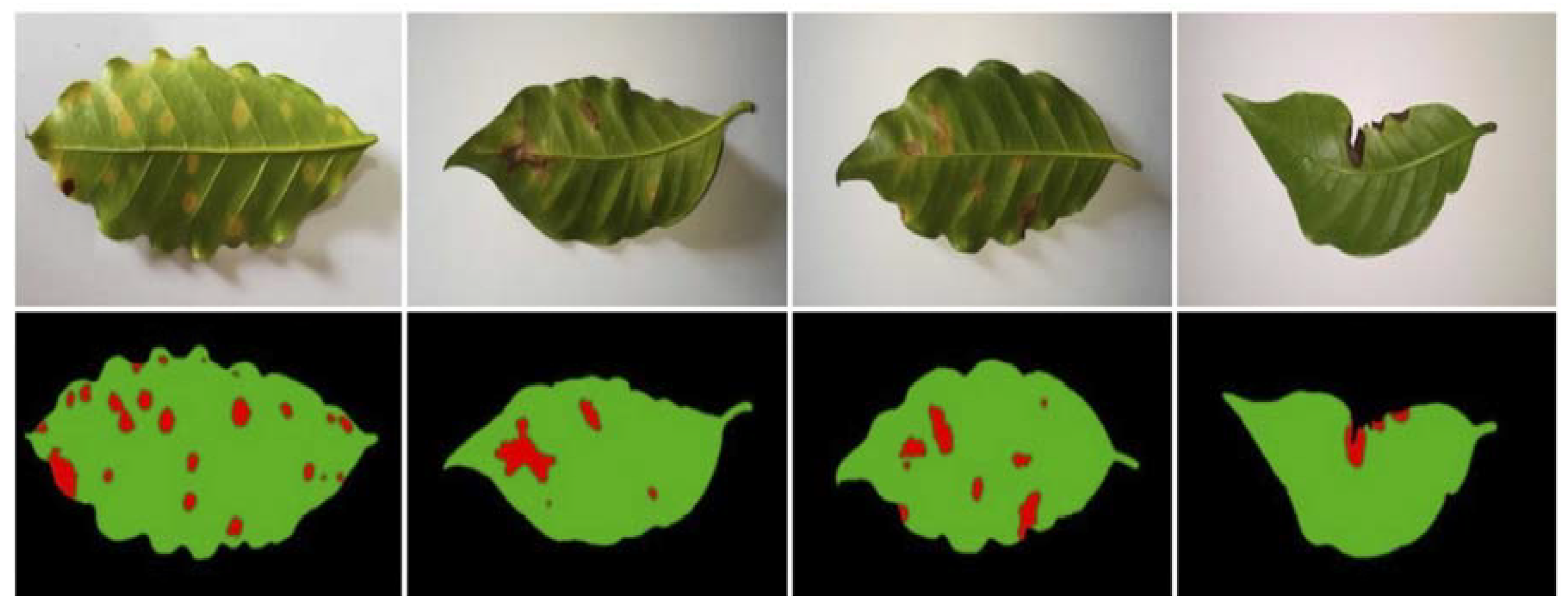

4.1. Leaf Disease Detection

4.2. Pest Identification

4.3. Weed Recognition

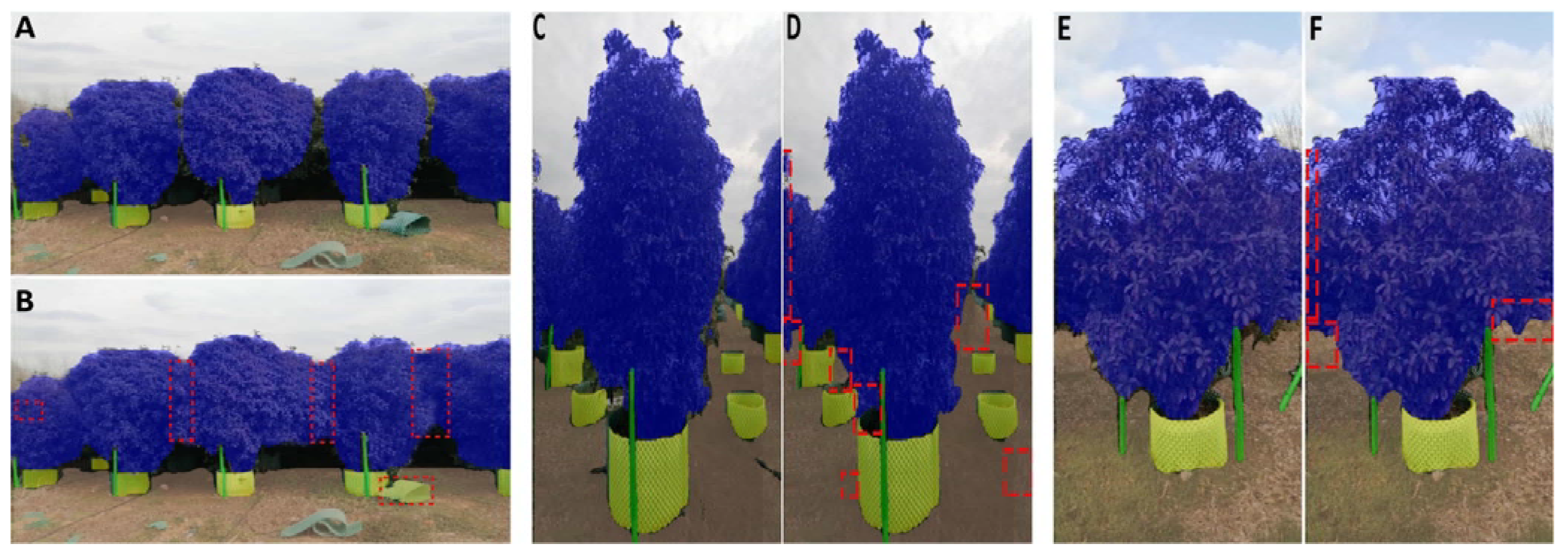

4.4. Target and Non-Target Object Detection

4.5. Seedling Information Monitoring

4.6. Spray Drift Assessment

5. Future Directions and Research Challenges

5.1. Multi-Source Data Fusion and Utilization

5.2. Improvement of Perception Model Performances

5.3. Design of Lightweight Models

5.4. Enhancement of Generalization Abilities of Models

6. Conclusions

- Image-based neural network models are more widely applied than point cloud-based ones. Image-based models can make full use of the color information of objects, which lets them identify features such as diseased leaf regions from color differences. Point cloud-based models instead rely mainly on the spatial information of objects, so their use is limited in tasks that depend more on color features, such as leaf disease detection and pest identification. However, because they capture spatial information, point cloud-based models can recover the spatial positions of targets, which makes them suitable for tasks such as target detection. Moreover, since the position information in point clouds is not affected by lighting conditions, point cloud-based models are better suited to application scenarios with large lighting variations.

- In real-time application scenarios, such as rapidly distinguishing targets from non-targets and spraying promptly once target information is obtained, ease of deployment on hardware and fast inference are important. The MobileNet series uses depthwise separable convolutions, SegNet removes the fully connected layers, the YOLO series adopts a single-stage object detection architecture, and neural network models based on raw point clouds require no additional data conversion steps. These architectural features allow the models to meet the fast-inference requirements of such scenarios. Conversely, where high-precision perception takes precedence over real-time performance, as in pest recognition and leaf disease diagnosis, more complex models such as SegFormer and the R-CNN series can be used. Their stronger feature extraction and analysis capabilities can provide more accurate detection results.

- Acquiring more informative features helps improve model performance. GoogLeNet, the DeepLab series, and SegFormer are examples. GoogLeNet incorporates the Inception module and the DeepLab series employs the ASPP module, both of which extract multi-scale features, while SegFormer uses the Transformer architecture to capture global features. When detection targets are partially occluded, these multi-scale or global features can significantly improve recognition accuracy.
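Two of the architectural points above can be made concrete with simple arithmetic. The sketch below is illustrative only: the function names, the layer sizes (128 input channels, 256 output channels, 3×3 kernels), and the atrous rates (6, 12, 18) are assumptions chosen for the example, not values reported in this review. It compares the weight count of a standard convolution with that of a depthwise separable convolution, and computes the effective kernel extent of an atrous (dilated) convolution, which is how parallel ASPP branches cover several spatial scales.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a depthwise k x k convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


def effective_kernel_size(k: int, rate: int) -> int:
    """Spatial extent covered by a k x k kernel with atrous (dilation) rate `rate`."""
    return k + (k - 1) * (rate - 1)


if __name__ == "__main__":
    # Hypothetical layer sizes, chosen only for illustration.
    c_in, c_out, k = 128, 256, 3
    std = standard_conv_params(c_in, c_out, k)
    sep = depthwise_separable_params(c_in, c_out, k)
    print(f"standard: {std}, depthwise separable: {sep}, ratio: {std / sep:.2f}")

    # Hypothetical atrous rates for parallel 3 x 3 branches.
    for rate in (6, 12, 18):
        print(f"rate {rate}: effective kernel extent {effective_kernel_size(k, rate)}")
```

Under these assumed sizes the separable layer needs roughly one ninth of the weights of the standard layer, which is consistent with the suitability of the MobileNet series for fast inference. The growing effective extents show how branches with different rates observe different scales without adding weights.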

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Full-Length Name |
| --- | --- |
| SVM | Support Vector Machine |
| PCA | Principal Component Analysis |
| VGG | Visual Geometry Group |
| CNN | Convolutional Neural Network |
| CRF | Conditional Random Field |
| ASPP | Atrous Spatial Pyramid Pooling |
| YOLO | You Only Look Once |
| RPN | Region Proposal Network |
| FPN | Feature Pyramid Network |
| SPP | Spatial Pyramid Pooling |
| MVCNN | Multi-View Convolutional Neural Network |
| AMTCL | Adaptive Margin based Triplet-Center Loss |
| CAL | Capsule Attention Layer |
| VFE | Voxel Feature Encoding |
| SE | Squeeze-and-Excitation Attention Module |
| CA | Coordinate Attention Module |
| ECA | Efficient Channel Attention |
| CBAM | Convolutional Block Attention Module |
| AE-HHO | Adaptive Energy-based Harris Hawks Optimization |
| mAP | Mean Average Precision |
| MSFF | Multi-scale Feature Fusion |
| MSDA | Multi-scale Dilated Attention |
| RICAP | Random Image Cropping and Patching |
| UIB | Universal Inverted Bottleneck |
| PRCN | Parallel RepConv Network |
| PRC | Parallel RepConv |
| DFSNet | Dynamic Fusion Segmentation Network |

References

- Essegbemon, A.; Tjeerd, J.S.; Dansou, K.K.; Alphonse, O.O.; Paul, C.S. Effects of nursery management practices on morphological quality attributes of tree seedlings at planting: The case of oil palm (Elaeis guineensis Jacq.). For. Ecol. Manag. 2014, 324, 28–36. [Google Scholar] [CrossRef]

- Amit, K.J.; Ellen, R.G.; Yigal, E.; Omer, F. Biochar as a management tool for soilborne diseases affecting early stage nursery seedling production. For. Ecol. Manag. 2019, 120, 34–42. [Google Scholar]

- Victor, M.G.; Cinthia, N.; Nazim, S.G.; Angelo, S.; Jesús, G.; Roberto, R.; Jesús, O.; Catalina, E.; Juan, A.F. An in-depth analysis of sustainable practices in vegetable seedlings nurseries: A review. Sci. Hortic. 2024, 334, 113342. [Google Scholar] [CrossRef]

- Li, J.; Wu, Z.; Li, M.; Shang, Z. Dynamic Measurement Method for Steering Wheel Angle of Autonomous Agricultural Vehicles. Agriculture 2024, 14, 1602. [Google Scholar] [CrossRef]

- Ahmed, S.; Qiu, B.; Ahmad, F.; Kong, C.-W.; Xin, H. A State-of-the-Art Analysis of Obstacle Avoidance Methods from the Perspective of an Agricultural Sprayer UAV’s Operation Scenario. Agronomy 2021, 11, 1069. [Google Scholar] [CrossRef]

- Sun, J.; Wang, Z.; Ding, S.; Xia, J.; Xing, G. Adaptive disturbance observer-based fixed time nonsingular terminal sliding mode control for path-tracking of unmanned agricultural tractors. Biosyst. Eng. 2024, 246, 96–109. [Google Scholar]

- Lu, E.; Xue, J.; Chen, T.; Jiang, S. Robust Trajectory Tracking Control of an Autonomous Tractor-Trailer Considering Model Parameter Uncertainties and Disturbances. Agriculture 2023, 13, 869. [Google Scholar] [CrossRef]

- Liu, H.; Yan, S.; Shen, Y.; Li, C.; Zhang, Y.; Hussain, F. Model predictive control system based on direct yaw moment control for 4WID self-steering agriculture vehicle. Int. J. Agric. Biol. Eng. 2021, 14, 175–181. [Google Scholar] [CrossRef]

- Zhu, Y.; Cui, B.; Yu, Z.; Gao, Y.; Wei, X. Tillage Depth Detection and Control Based on Attitude Estimation and Online Calibration of Model Parameters. Agriculture 2024, 14, 2130. [Google Scholar] [CrossRef]

- Dai, D.; Chen, D.; Wang, S.; Li, S.; Mao, X.; Zhang, B.; Wang, Z.; Ma, Z. Compilation and Extrapolation of Load Spectrum of Tractor Ground Vibration Load Based on CEEMDAN-POT Model. Agriculture 2023, 13, 125. [Google Scholar] [CrossRef]

- Liao, J.; Luo, X.; Wang, P.; Zhou, Z.; O’Donnell, C.C.; Zang, Y.; Hewitt, A.J. Analysis of the Influence of Different Parameters on Droplet Characteristics and Droplet Size Classification Categories for Air Induction Nozzle. Agronomy 2020, 10, 256. [Google Scholar] [CrossRef]

- Li, Y.; Li, Y.; Nie, J.; Li, Z.; Li, J.; Gao, J.; Fang, Z. Navigation of the spraying robot in jujube orchard. Alex. Eng. J. 2025, 126, 320–340. [Google Scholar] [CrossRef]

- Prashanta, P.; Ajay, S.; Daniel, F.; Karla, L. Design and systematic evaluation of an under-canopy robotic spray system for row crops. Smart Agric. Technol. 2024, 8, 100510. [Google Scholar] [CrossRef]

- Hamed, R.; Hassan, Z.; Hassan, M.; Gholamreza, A. A new DSWTS algorithm for real-time pedestrian detection in autonomous agricultural tractors as a computer vision system. Measurement 2016, 93, 126–134. [Google Scholar] [CrossRef]

- Hearst, M.A.; Dumais, S.T.; Osuna, E.; Platt, J.; Scholkopf, B. Support vector machines. IEEE Intell. Syst. Their Appl. 1998, 13, 18–28. [Google Scholar] [CrossRef]

- Kotsiantis, S.B. Decision trees: A recent overview. Artif. Intell. Rev. 2013, 39, 261–283. [Google Scholar] [CrossRef]

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Sun, J.; Yang, F.; Cheng, J.; Wang, S.; Fu, L. Nondestructive identification of soybean protein in minced chicken meat based on hyperspectral imaging and VGG16-SVM. J. Food Compos. Anal. 2024, 125, 105713. [Google Scholar] [CrossRef]

- Ding, Y.; Yan, Y.; Li, J.; Chen, X.; Jiang, H. Classification of Tea Quality Levels Using Near-Infrared Spectroscopy Based on CLPSO-SVM. Foods 2022, 11, 1658. [Google Scholar] [CrossRef]

- Dai, C.; Sun, J.; Huang, X.; Zhang, X.; Tian, X.; Wang, W.; Sun, J.; Luan, Y. Application of Hyperspectral Imaging as a Nondestructive Technology for Identifying Tomato Maturity and Quantitatively Predicting Lycopene Content. Foods 2023, 12, 2957. [Google Scholar] [CrossRef]

- Cheng, J.; Sun, J.; Yao, K.; Xu, M.; Zhou, X. Nondestructive detection and visualization of protein oxidation degree of frozen-thawed pork using fluorescence hyperspectral imaging. Meat Sci. 2022, 194, 108975. [Google Scholar] [CrossRef]

- Li, H.; Wu, P.; Dai, J.; Pan, T.; Holmes, M.; Chen, T.; Zou, X. Discriminating compounds identification based on the innovative sparse representation chemometrics to assess the quality of Maofeng tea. J. Food Compos. Anal. 2023, 123, 105590. [Google Scholar] [CrossRef]

- Yao, K.; Sun, J.; Zhang, L.; Zhou, X.; Tian, Y.; Tang, N.; Wu, X. Nondestructive detection for egg freshness based on hyperspectral imaging technology combined with harris hawks optimization support vector regression. J. Food Saf. 2021, 41, e12888. [Google Scholar] [CrossRef]

- Sun, J.; Liu, Y.; Wu, G.; Zhang, Y.; Zhang, R.; Li, X.J. A Fusion Parameter Method for Classifying Freshness of Fish Based on Electrochemical Impedance Spectroscopy. J. Food Qual. 2021, 2021, 6664291. [Google Scholar] [CrossRef]

- Yao, K.; Sun, J.; Zhou, X.; Nirere, A.; Tian, Y.; Wu, X. Nondestructive detection for egg freshness grade based on hyperspectral imaging technology. J. Food Process Eng. 2020, 43, e13422. [Google Scholar] [CrossRef]

- Li, Y.; Pan, T.; Li, H.; Chen, S. Non-invasive quality analysis of thawed tuna using near infrared spectroscopy with baseline correction. J. Food Process Eng. 2020, 43, e13445. [Google Scholar] [CrossRef]

- Wu, X.; Zhou, H.; Wu, B.; Fu, H. Determination of apple varieties by near infrared reflectance spectroscopy coupled with improved possibilistic Gath–Geva clustering algorithm. J. Food Process Eng. 2020, 44, e14561. [Google Scholar] [CrossRef]

- Nirere, A.; Sun, J.; Atindana, V.A.; Hussain, A.; Zhou, X.; Yao, K. A comparative analysis of hybrid SVM and LS-SVM classification algorithms to identify dried wolfberry fruits quality based on hyperspectral imaging technology. J. Food Process. Preserv. 2022, 46, e16320. [Google Scholar] [CrossRef]

- Nirere, A.; Sun, J.; Kama, R.; Atindana, V.A.; Nikubwimana, F.D.; Dusabe, K.D.; Zhong, Y. Nondestructive detection of adulterated wolfberry (Lycium Chinense) fruits based on hyperspectral imaging technology. J. Food Process Eng. 2023, 46, e14293. [Google Scholar] [CrossRef]

- Wang, S.; Sun, J.; Fu, L.; Xu, M.; Tang, N.; Cao, Y.; Yao, K.; Jing, J. Identification of red jujube varieties based on hyperspectral imaging technology combined with CARS-IRIV and SSA-SVM. J. Food Process Eng. 2022, 45, e14137. [Google Scholar] [CrossRef]

- Ahmad, H.; Sun, J.; Nirere, A.; Shaheen, N.; Zhou, X.; Yao, K. Classification of tea varieties based on fluorescence hyperspectral image technology and ABC-SVM algorithm. J. Food Process Eng. 2021, 45, e15241. [Google Scholar] [CrossRef]

- Yao, K.; Sun, J.; Tang, N.; Xu, M.; Cao, Y.; Fu, L.; Zhou, X.; Wu, X. Nondestructive detection for Panax notoginseng powder grades based on hyperspectral imaging technology combined with CARS-PCA and MPA-LSSVM. J. Food Process Eng. 2021, 44, e13718. [Google Scholar] [CrossRef]

- Fu, L.; Sun, J.; Wang, S.; Xu, M.; Yao, K.; Cao, Y.; Tang, N. Identification of maize seed varieties based on stacked sparse autoencoder and near-infrared hyperspectral imaging technology. J. Food Process Eng. 2022, 45, e14120. [Google Scholar] [CrossRef]

- Tang, N.; Sun, J.; Yao, K.; Zhou, X.; Tian, Y.; Cao, Y.; Nirere, A. Identification of varieties based on hyperspectral imaging technique and competitive adaptive reweighted sampling-whale optimization algorithm-support vector machine. J. Food Process Eng. 2021, 44, e13603. [Google Scholar] [CrossRef]

- Jan, S.; Jaroslav, Č.; Eva, N.; Olusegun, O.A.; Jiří, C.; Daniel, P.; Markku, K.; Petya, C.; Jana, A.; Milan, L.; et al. Making the Genotypic Variation Visible: Hyperspectral Phenotyping in Scots Pine Seedlings. Plant Phenomics 2023, 5, 0111. [Google Scholar] [CrossRef] [PubMed]

- Finn, A.; Kumar, P.; Peters, S.; O’Hehir, J. Unsupervised spectral-spatial processing of drone imagery for identification of pine seedlings. ISPRS J. Photogramm. Remote Sens. 2022, 183, 363–388. [Google Scholar] [CrossRef]

- Raypah, M.E.; Nasru, M.I.M.; Nazim, M.H.H.; Omar, A.F.; Zahir, S.A.D.M.; Jamlos, M.F.; Muncan, J. Spectral response to early detection of stressed oil palm seedlings using near-infrared reflectance spectra at region 900–1000 nm. Infrared Phys. Technol. 2023, 135, 104984. [Google Scholar] [CrossRef]

- Zuo, X.; Chu, J.; Shen, J.; Sun, J. Multi-Granularity Feature Aggregation with Self-Attention and Spatial Reasoning for Fine-Grained Crop Disease Classification. Agriculture 2022, 12, 1499. [Google Scholar] [CrossRef]

- Bing, L.; Sun, J.; Yang, N.; Wu, X.; Zhou, X. Identification of tea white star disease and anthrax based on hyperspectral image information. J. Food Process Eng. 2020, 44, e13584. [Google Scholar]

- Wang, Y.; Li, T.; Chen, T.; Zhang, X.; Taha, M.F.; Yang, N.; Mao, H.; Shi, Q. Cucumber Downy Mildew Disease Prediction Using a CNN-LSTM Approach. Agriculture 2024, 14, 1155. [Google Scholar] [CrossRef]

- Deng, J.; Ni, L.; Bai, X.; Jiang, H.; Xu, L. Simultaneous analysis of mildew degree and aflatoxin B1 of wheat by a multi-task deep learning strategy based on microwave detection technology. LWT 2023, 184, 115047. [Google Scholar] [CrossRef]

- Wang, B.; Deng, J.; Jiang, H. Markov Transition Field Combined with Convolutional Neural Network Improved the Predictive Performance of Near-Infrared Spectroscopy Models for Determination of Aflatoxin B1 in Maize. Foods 2022, 11, 2210. [Google Scholar] [CrossRef]

- Cheng, J.; Sun, J.; Shi, L.; Dai, C. An effective method fusing electronic nose and fluorescence hyperspectral imaging for the detection of pork freshness. Food Biosci. 2024, 59, 103880. [Google Scholar] [CrossRef]

- Sun, J.; Cheng, J.; Xu, M.; Yao, K. A method for freshness detection of pork using two-dimensional correlation spectroscopy images combined with dual-branch deep learning. J. Food Compos. Anal. 2024, 129, 106144. [Google Scholar] [CrossRef]

- Xu, B.; Cui, X.; Ji, W.; Yuan, H.; Wang, J. Apple Grading Method Design and Implementation for Automatic Grader Based on Improved YOLOv5. Agriculture 2023, 13, 124. [Google Scholar] [CrossRef]

- Cheng, J.; Sun, J.; Yao, K.; Xu, M.; Dai, C. Multi-task convolutional neural network for simultaneous monitoring of lipid and protein oxidative damage in frozen-thawed pork using hyperspectral imaging. Meat Sci. 2023, 201, 109196. [Google Scholar] [CrossRef] [PubMed]

- Cheng, J.; Sun, J.; Yao, K.; Dai, C. Generalized and hetero two-dimensional correlation analysis of hyperspectral imaging combined with three-dimensional convolutional neural network for evaluating lipid oxidation in pork. Food Control 2023, 153, 109940. [Google Scholar] [CrossRef]

- Yang, F.; Sun, J.; Cheng, J.; Fu, L.; Wang, S.; Xu, M. Detection of starch in minced chicken meat based on hyperspectral imaging technique and transfer learning. J. Food Process Eng. 2023, 46, e14304. [Google Scholar] [CrossRef]

- Geoffrey, C.; Eric, R. Intelligent Imaging in Nuclear Medicine: The Principles of Artificial Intelligence, Machine Learning and Deep Learning. Semin. Nucl. Med. 2021, 51, 102–111. [Google Scholar] [CrossRef]

- Usman, K.; Muhammad, K.K.; Muhammad, A.L.; Muhammad, N.; Muhammad, M.A.; Salman, A.K.; Mazliham, M.S. A Systematic Literature Review of Machine Learning and Deep Learning Approaches for Spectral Image Classification in Agricultural Applications Using Aerial Photography. Comput. Mater. Contin. 2024, 78, 2967–3000. [Google Scholar] [CrossRef]

- Jayme, G.; Arnal, B. A review on the combination of deep learning techniques with proximal hyperspectral images in agriculture. Comput. Electron. Agric. 2023, 210, 107920. [Google Scholar] [CrossRef]

- Atiya, K.; Amol, D.V.; Shankar, M.; Patil, C.H. A systematic review on hyperspectral imaging technology with a machine and deep learning methodology for agricultural applications. Ecol. Inform. 2022, 69, 101678. [Google Scholar]

- Larissa, F.R.M.; Rodrigo, M.; Bruno, A.N.T.; André, R.B. Deep learning based image classification for embedded devices: A systematic review. Neurocomputing 2025, 623, 129402. [Google Scholar] [CrossRef]

- Simonyan, K.; Andrew, Z. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 2015 International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015. [Google Scholar]

- Christian, S.; Liu, W.; Jia, Y.; Pierre, S.; Scott, R.; Dragomir, A.; Dumitru, E.; Vincent, V.; Andrew, R. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar]

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. In Proceedings of the AAAI Conference on Artificial Intelligence 2017, San Francisco, CA, USA, 4–9 February 2017. [Google Scholar]

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Andrew, G.H.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Tobias, W.; Marco, A.; Hartwig, A. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Mark, S.; Andrew, H.; Zhu, M.; Zhmoginov, A.; Chen, L. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar]

- Howard, A.; Sandler, M.; Chen, B.; Wang, W.; Chen, L.; Tan, M.; Chu, G.; Vasudevan, V.; Zhu, Y.; Pang, R.; et al. Searching for MobileNetV3. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324. [Google Scholar]

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141. [Google Scholar]

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114. [Google Scholar]

- Adnan, H.; Muhammad, A.; Jin, S.H.; Haseeb, S.; Nadeem, U.; Kang, R.P. Multi-scale and multi-receptive field-based feature fusion for robust segmentation of plant disease and fruit using agricultural images. Appl. Soft Comput. 2024, 167, 112300. [Google Scholar]

- Cheng, J.; Song, Z.; Wu, Y.; Xu, J. ALDNet: A two-stage method with deep aggregation and multi-scale fusion for apple leaf disease spot segmentation. Measurement 2025, 253, 117706. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241. [Google Scholar]

- Chen, L.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Semantic Image Segmentation with Deep Convolutional Nets and Fully Connected CRFs. In Proceedings of the 2014 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014. [Google Scholar]

- Chen, L.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848. [Google Scholar] [CrossRef]

- Chen, L.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking Atrous Convolution for Semantic Image Segmentation. In Proceedings of the 2017 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017. [Google Scholar]

- Chen, L.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the 2018 European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 833–851. [Google Scholar]

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Jose, M.A.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. In Proceedings of the 2021 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021. [Google Scholar]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495. [Google Scholar] [CrossRef]

- Wang, S.; Zeng, Q.; Ni, W.; Cheng, C.; Wang, Y. ODP-Transformer: Interpretation of pest classification results using image caption generation techniques. Comput. Electron. Agric. 2023, 209, 107863. [Google Scholar] [CrossRef]

- Prasath, B.; Akila, M. IoT-based pest detection and classification using deep features with enhanced deep learning strategies. Eng. Appl. Artif. Intell. 2023, 121, 105985. [Google Scholar]

- Farooq, A.; Huma, Q.; Kashif, S.; Iftikhar, A.; Muhammad, J.I. YOLOCSP-PEST for Crops Pest Localization and Classification. Comput. Mater. Contin. 2023, 82, 2373–2388. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. StructToken: Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef] [PubMed]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar]

- Bochkovskiy, A.; Wang, C.; Liao, M.H. YOLOv4: Optimal Speed and Accuracy of Object Detection. In Proceedings of the 2020 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020. [Google Scholar]

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. In Proceedings of the 2024 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024. [Google Scholar]

- Rahima, K.; Muhammad, H. YOLOv11: An Overview of the Key Architectural Enhancements. In Proceedings of the2024 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024. [Google Scholar]

- Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-Centric Real-Time Object Detectors. In Proceedings of the 2025 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 11–15 June 2025. [Google Scholar]

- Chetan, M.B.; Alwin, P.; Hao, G. Agricultural object detection with You Only Look Once (YOLO) Algorithm: A bibliometric and systematic literature review. Comput. Electron. Agric. 2024, 223, 109090. [Google Scholar] [CrossRef]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.; Alexander, C.B. SSD: Single Shot MultiBox Detector. In Proceedings of the 2016 European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37. [Google Scholar]

- Hang, S.; Subhransu, M.; Evangelos, K.; Erik, L. Multi-view Convolutional Neural Networks for 3D Shape Recognition. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 945–953. [Google Scholar]

- He, X.; Bai, S.; Chu, J.; Bai, X. An Improved Multi-View Convolutional Neural Network for 3D Object Retrieval. IEEE Trans. Image Process. 2020, 29, 7917–7930. [Google Scholar] [CrossRef]

- Sun, K.; Zhang, J.; Xu, S.; Zhao, Z.; Zhang, C.; Liu, J.; Hu, J. CACNN: Capsule Attention Convolutional Neural Networks for 3D Object Recognition. IEEE Trans. Neural Netw. Learn. Syst. 2025, 36, 4091–4102. [Google Scholar] [CrossRef]

- Gao, Z.; Zhang, Y.; Zhang, H.; Guan, W.; Feng, D.; Chen, S. Multi-Level View Associative Convolution Network for View-Based 3D Model Retrieval. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 2264–2278. [Google Scholar]

- Wei, X.; Yu, R.; Sun, J. View-GCN: View-Based Graph Convolutional Network for 3D Shape Analysis. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 1847–1856. [Google Scholar]

- Maturana, D.; Scherer, S. VoxNet: A 3D Convolutional Neural Network for real-time object recognition. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 922–928. [Google Scholar]

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499. [Google Scholar]

- Chen, Y.; Liu, J.; Zhang, X.; Qi, X.; Jia, J. VoxelNeXt: Fully Sparse VoxelNet for 3D Object Detection and Tracking. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 21674–21683. [Google Scholar]

- Li, B. 3D fully convolutional network for vehicle detection in point cloud. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1513–1518. [Google Scholar]

- Wu, Z.; Song, S.; Khosla, A.; Tang, X.; Xiao, J. 3D ShapeNets for 2.5D Object Recognition and Next-Best-View Prediction. In Proceedings of the 2015 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 8–10 June 2015. [Google Scholar]

- Riegler, G.; Ulusoy, A.O.; Geiger, A. OctNet: Learning Deep 3D Representations at High Resolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6620–6629. [Google Scholar]

- Wang, P.; Liu, Y.; Guo, Y.; Sun, C.; Tong, X. O-CNN: Octree-based convolutional neural networks for 3D shape analysis. Assoc. Comput. Mach. 2017, 36, 11. [Google Scholar]

- Klokov, R.; Lempitsky, V. Escape from Cells: Deep Kd-Networks for the Recognition of 3D Point Cloud Models. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 863–872. [Google Scholar]

- Tchapmi, L.; Choy, C.; Armeni, I.; Gwak, J.; Savarese, S. SEGCloud: Semantic Segmentation of 3D Point Clouds. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; pp. 537–547. [Google Scholar]

- Riansyah, M.I.; Putra, O.V.; Priyadi, A.; Sardjono, T.A.; Yuniarno, E.M.; Purnomo, M.H. Modified CNN VoxNet Based Depthwise Separable Convolution for Voxel-Driven Body Orientation Classification. In Proceedings of the 2024 IEEE International Conference on Imaging Systems and Techniques (IST), Tokyo, Japan, 14–16 October 2024; pp. 1–6. [Google Scholar]

- Hanocka, R.; Hertz, A.; Fish, N.; Giryes, R.; Fleishman, S.; Cohen-Or, D. MeshCNN: A network with an edge. Assoc. Comput. Mach. 2019, 38, 12. [Google Scholar]

- Charles, R.Q.; Su, H.; Kaichun, M.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 77–85. [Google Scholar]

- Charles, R.Q.; Charles, R.Q.; Li, Y.; Hao, S.; Leonidas, J.G. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5105–5114. [Google Scholar]

- Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic Graph CNN for Learning on Point Clouds. Assoc. Comput. Mach. 2019, 38, 12. [Google Scholar]

- Zhao, H.; Jiang, L.; Fu, C.; Jia, J. PointWeb: Enhancing Local Neighborhood Features for Point Cloud Processing. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 5560–5568.

- Jiang, M.; Wu, Y.; Zhao, Z.; Lu, C. PointSIFT: A SIFT-like Network Module for 3D Point Cloud Semantic Segmentation. arXiv 2018, arXiv:1807.00652.

- Qian, G.; Hammoud, H.A.A.K.; Li, G.; Thabet, A.; Ghanem, B. ASSANet: An anisotropic separable set abstraction for efficient point cloud representation learning. In Proceedings of the 35th International Conference on Neural Information Processing Systems, Online, 6–14 December 2021; pp. 28119–28130.

- Qian, G.; Li, Y.; Peng, H.; Mai, J.; Hammoud, H.; Elhoseiny, M.; Ghanem, B. PointNeXt: Revisiting PointNet++ with Improved Training and Scaling Strategies. Adv. Neural Inf. Process. Syst. 2022, 35, 23192–23204.

- Hu, Q.; Yang, B.; Xie, L.; Rosa, S.; Guo, Y.; Wang, Z.; Trigoni, N.; Markham, A. RandLA-Net: Efficient Semantic Segmentation of Large-Scale Point Clouds. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11105–11114.

- Zhao, H.; Jiang, L.; Jia, J.; Torr, P.; Koltun, V. Point Transformer. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 16239–16248.

- Wu, X.; Lao, Y.; Jiang, L.; Liu, X.; Zhao, H. Point Transformer V2: Grouped Vector Attention and Partition-based Pooling. Adv. Neural Inf. Process. Syst. 2022, 35, 33330–33342.

- Wu, X.; Jiang, L.; Wang, P.; Liu, Z.; Liu, X.; Qiao, Y.; Ouyang, W.; He, T.; Zhao, H. Point Transformer V3: Simpler, Faster, Stronger. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 4840–4851.

- Ananda, S.P.; Vandana, B.M. Transfer Learning for Multi-Crop Leaf Disease Image Classification using Convolutional Neural Network VGG. Artif. Intell. Agric. 2022, 6, 23–33.

- Hong, P.; Luo, X.; Bao, L. Crop disease diagnosis and prediction using two-stream hybrid convolutional neural networks. Crop Prot. 2024, 184, 106867.

- Pudumalar, S.; Muthuramalingam, S. Hydra: An ensemble deep learning recognition model for plant diseases. J. Eng. Res. 2024, 12, 781–792.

- Roopali, D.; Shalli, R.; Aman, S.; Marwan, A.A.; Alina, E.B.; Ahmed, A. Deep learning model for detection of brown spot rice leaf disease with smart agriculture. Comput. Electr. Eng. 2023, 109, 108659.

- Suri, B.N.; Midhun, P.M.; Abubeker, K.M.; Shafeena, K.A. SwinGNet: A Hybrid Swin Transform-GoogleNet Framework for Real-Time Grape Leaf Disease Classification. Procedia Comput. Sci. 2025, 258, 1629–1639.

- Biniyam, M.A.; Abdela, A.M. Coffee disease classification using Convolutional Neural Network based on feature concatenation. Inform. Med. Unlocked 2023, 39, 101245.

- Liu, Y.; Wang, Z.; Wang, R.; Chen, J.; Gao, H. Flooding-based MobileNet to identify cucumber diseases from leaf images in natural scenes. Comput. Electron. Agric. 2023, 213, 108166.

- Chen, J.; Zhang, D.; Suzauddola, M.; Zeb, A. Identifying crop diseases using attention embedded MobileNet-V2 model. Appl. Soft Comput. 2021, 113, 107901.

- Rukuna, L.A.; Zambuk, F.U.; Gital, A.Y.; Bello, M.U. Citrus diseases detection and classification based on efficientnet-B5. Syst. Soft Comput. 2025, 7, 200199.

- Ding, Y.; Yang, W.; Zhang, J. An improved DeepLabV3+ based approach for disease spot segmentation on apple leaves. Comput. Electron. Agric. 2025, 231, 110041.

- Zhang, Y.; Lv, C. TinySegformer: A lightweight visual segmentation model for real-time agricultural pest detection. Comput. Electron. Agric. 2024, 218, 108740.

- Zhang, C.; Zhang, Y.; Xu, X. Dilated inception U-Net with attention for crop pest image segmentation in real-field environment. Smart Agric. Technol. 2025, 11, 100917.

- Peteinatos, G.G.; Reichel, P.; Karouta, J.; Andújar, D.; Gerhards, R. Weed Identification in Maize, Sunflower, and Potatoes with the Aid of Convolutional Neural Networks. Remote Sens. 2020, 12, 4185.

- Alex, O.; Dmitry, A.K.; Bronson, P.; Peter, R.; Jake, C.W.; Jamie, J.; Wesley, B.; Benjamin, G.; Owen, K.; James, W.; et al. DeepWeeds: A Multiclass Weed Species Image Dataset for Deep Learning. Sci. Rep. 2019, 9, 2058.

- Liu, J.; Abbas, I.; Noor, R.S. Development of Deep Learning-Based Variable Rate Agrochemical Spraying System for Targeted Weeds Control in Strawberry Crop. Agronomy 2021, 11, 1480.

- Akhilesh, S.; Vipan, K.; Louis, L. Comparative performance of YOLOv8, YOLOv9, YOLOv10, YOLOv11 and Faster R-CNN models for detection of multiple weed species. Smart Agric. Technol. 2024, 9, 100648.

- García-Navarrete, O.L.; Camacho-Tamayo, J.H.; Bregon, A.B.; Martín-García, J.; Navas-Gracia, L.M. Performance Analysis of Real-Time Detection Transformer and You Only Look Once Models for Weed Detection in Maize Cultivation. Agronomy 2025, 15, 796.

- Deng, L.; Miao, Z.; Zhao, X.; Yang, S.; Gao, Y.; Zhai, C.; Zhao, C. HAD-YOLO: An Accurate and Effective Weed Detection Model Based on Improved YOLOV5 Network. Agronomy 2025, 15, 57.

- Fadwa, A.; Mashael, M.A.; Rana, A.; Radwa, M.; Anwer, M.H.; Ahmed, A.; Deepak, G. Hybrid leader based optimization with deep learning driven weed detection on internet of things enabled smart agriculture environment. Comput. Electr. Eng. 2022, 104, 108411.

- Nitin, R.; Yu, Z.; Maria, V.; Kirk, H.; Michael, O.; Xin, S. Agricultural weed identification in images and videos by integrating optimized deep learning architecture on an edge computing technology. Comput. Electron. Agric. 2024, 216, 108442.

- Ma, C.; Chi, G.; Ju, X.; Zhang, J.; Yan, C. YOLO-CWD: A novel model for crop and weed detection based on improved YOLOv8. Crop Prot. 2025, 192, 107169.

- Steininger, D.; Trondl, A.; Croonen, G.; Simon, J.; Widhalm, V. The CropAndWeed Dataset: A Multi-Modal Learning Approach for Efficient Crop and Weed Manipulation. In Proceedings of the 2023 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 2–7 January 2023; pp. 3718–3727.

- Qi, Z.; Wang, J. PMDNet: An Improved Object Detection Model for Wheat Field Weed. Agronomy 2025, 15, 55.

- Jin, T.; Liang, K.; Lu, M.; Zhao, Y.; Xu, Y. WeedsSORT: A weed tracking-by-detection framework for laser weeding applications within precision agriculture. Smart Agric. Technol. 2025, 11, 100883.

- Nils, H.; Kai, L.; Anthony, S. Accelerating weed detection for smart agricultural sprayers using a Neural Processing Unit. Comput. Electron. Agric. 2025, 237, 110608.

- Sanjay, K.G.; Shivam, K.Y.; Sanjay, K.S.; Udai, S.; Pradeep, K.S. Multiclass weed identification using semantic segmentation: An automated approach for precision agriculture. Ecol. Inform. 2023, 78, 102366.

- Su, D.; Kong, H.; Qiao, Y.; Sukkarieh, S. Data augmentation for deep learning based semantic segmentation and crop-weed classification in agricultural robotics. Comput. Electron. Agric. 2021, 190, 106418.

- Mohammed, H.; Salma, S.; Adil, T.; Youssef, O. New segmentation approach for effective weed management in agriculture. Smart Agric. Technol. 2024, 8, 100505.

- Liu, G.; Jin, C.; Ni, Y.; Yang, T.; Liu, Z. UCIW-YOLO: Multi-category and high-precision obstacle detection model for agricultural machinery in unstructured farmland environments. Expert Syst. Appl. 2025, 294, 128686.

- Cui, X.; Zhu, L.; Zhao, B.; Wang, R.; Han, Z.; Zhang, W.; Dong, L. Parallel RepConv network: Efficient vineyard obstacle detection with adaptability to multi-illumination conditions. Comput. Electron. Agric. 2025, 230, 109901.

- Li, Y.; Li, M.; Qi, J.; Zhou, D.; Zou, Z.; Liu, K. Detection of typical obstacles in orchards based on deep convolutional neural network. Comput. Electron. Agric. 2021, 181, 105932.

- Liu, H.; Du, Z.; Yang, F.; Zhang, Y.; Shen, Y. Real-time recognizing spray target in forest and fruit orchard using lightweight PointNet. Trans. Chin. Soc. Agric. Eng. 2024, 40, 144–151.

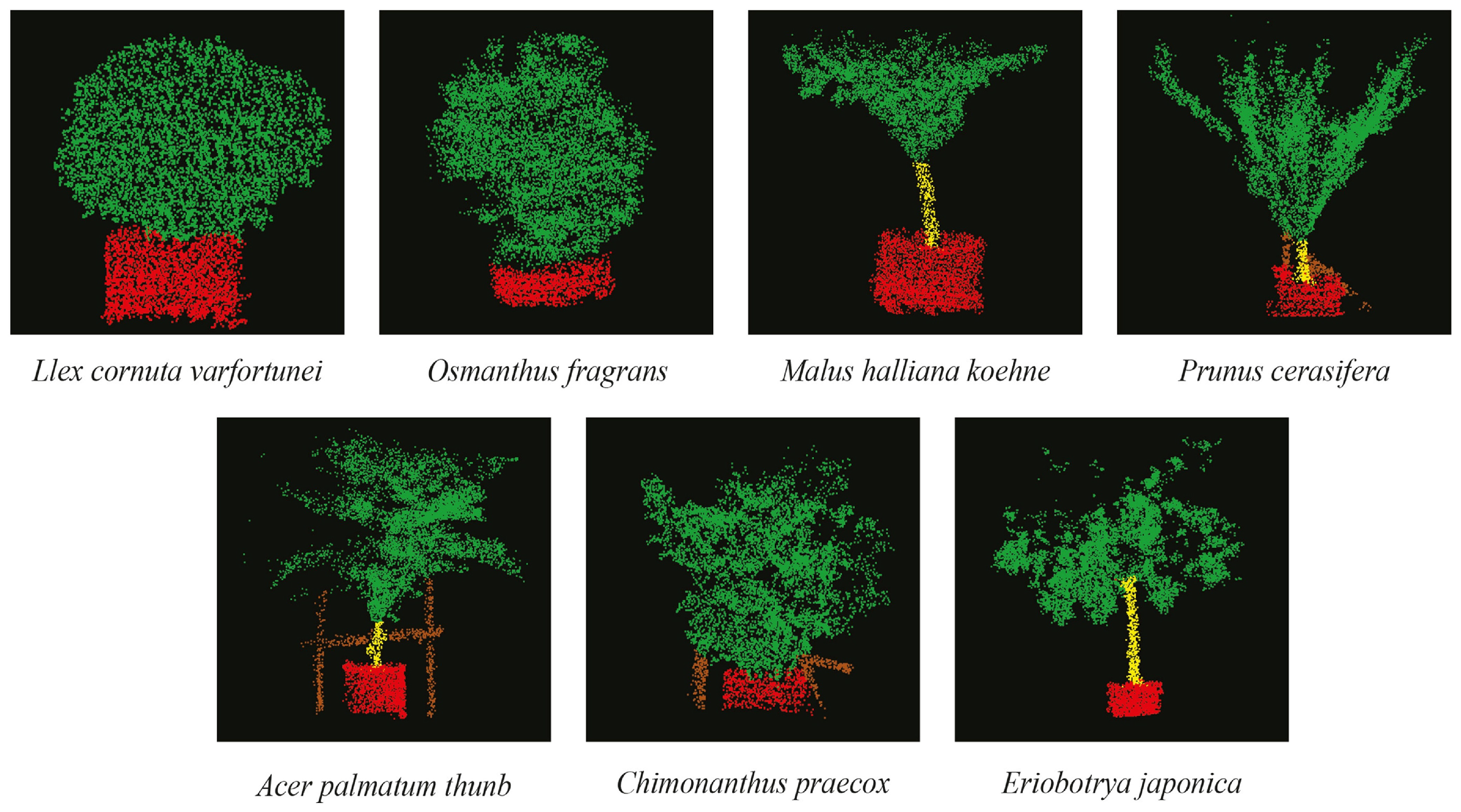

- Liu, H.; Wang, X.; Shen, Y.; Xu, J. Multi-objective classification method of nursery scene based on 3D laser point cloud. J. Zhejiang Univ. (Eng. Sci.) 2023, 57, 2430–2438.

- Qin, J.; Sun, R.; Zhou, K.; Xu, Y.; Lin, B.; Yang, L.; Chen, Z.; Wen, L.; Wu, C. Lidar-Based 3D Obstacle Detection Using Focal Voxel R-CNN for Farmland Environment. Agronomy 2023, 13, 650.

- Can, T.N.; Phan, K.D.; Dang, H.N.; Nguyen, K.D.; Nguyen, T.H.D.; Thanh-Noi, P.; Vo, Q.M.; Nguyen, H.Q. Leveraging convolutional neural networks and textural features for tropical fruit tree species classification. Remote Sens. Appl. Soc. Environ. 2025, 39, 101633.

- Mulugeta, A.K.; Durga, P.S.; Mesfin, A.H. Deep learning for Ethiopian indigenous medicinal plant species identification and classification. J. Ayurveda Integr. Med. 2024, 15, 100987.

- Xu, L.; Lu, C.; Zhou, T.; Wu, J.; Feng, H. A 3D-2DCNN-CA approach for enhanced classification of hickory tree species using UAV-based hyperspectral imaging. Microchem. J. 2024, 199, 109981.

- Muhammad, A.; Ahmar, R.; Khurram, K.; Abid, I.; Faheem, K.; Muhammad, A.A.; Hammad, M.C. Real-time precision spraying application for tobacco plants. Smart Agric. Technol. 2024, 8, 100497.

- Khan, Z.; Liu, H.; Shen, Y.; Zeng, X. Deep learning improved YOLOv8 algorithm: Real-time precise instance segmentation of crown region orchard canopies in natural environment. Comput. Electron. Agric. 2024, 224, 109168.

- Wei, P.; Yan, X.; Yan, W.; Sun, L.; Xu, J.; Yuan, H. Precise extraction of targeted apple tree canopy with YOLO-Fi model for advanced UAV spraying plans. Comput. Electron. Agric. 2024, 226, 109425.

- Zhang, J.; Lu, J.; Zhang, Q.; Qi, Q.; Zheng, G.; Chen, F.; Chen, S.; Zhang, F.; Fang, W.; Guan, Z. Estimation of Garden Chrysanthemum Crown Diameter Using Unmanned Aerial Vehicle (UAV)-Based RGB Imagery. Agronomy 2024, 14, 337.

- He, J.; Duan, J.; Yang, Z.; Ou, J.; Ou, X.; Yu, S.; Xie, M.; Luo, Y.; Wang, H.; Jiang, Q. Method for Segmentation of Banana Crown Based on Improved DeepLabv3+. Agronomy 2023, 13, 1838.

- Huo, Y.; Leng, L.; Wang, M.; Ji, X.; Wang, M. CSA-PointNet: A tree species classification model for coniferous and broad-leaved mixed forests. World Geol. 2024, 43, 551–556.

- Xu, J.; Liu, H.; Shen, Y.; Zeng, X.; Zheng, X. Individual nursery trees classification and segmentation using a point cloud-based neural network with dense connection pattern. Sci. Hortic. 2024, 328, 112945.

- Bu, X.; Liu, C.; Liu, H.; Yang, G.; Shen, Y.; Xu, J. DFSNet: A 3D Point Cloud Segmentation Network toward Trees Detection in an Orchard Scene. Sensors 2024, 24, 2244.

- Seol, J.; Kim, J.; Son, H.I. Spray Drift Segmentation for Intelligent Spraying System Using 3D Point Cloud Deep Learning Framework. IEEE Access 2022, 10, 77263–77271.

- Liu, H.; Xu, J.; Chen, W.; Shen, Y.; Kai, J. Efficient Semantic Segmentation for Large-Scale Agricultural Nursery Managements via Point Cloud-Based Neural Network. Remote Sens. 2024, 16, 4011.

- Yang, J.; Gan, R.; Luo, B.; Wang, A.; Shi, S.; Du, L. An Improved Method for Individual Tree Segmentation in Complex Urban Scenes Based on Using Multispectral LiDAR by Deep Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 6561–6576.

- Chang, L.; Fan, H.; Zhu, N.; Dong, Z. A Two-Stage Approach for Individual Tree Segmentation From TLS Point Clouds. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 8682–8693.

- Xi, Z.; Degenhardt, D. A new unified framework for supervised 3D crown segmentation (TreeisoNet) using deep neural networks across airborne, UAV-borne, and terrestrial laser scans. ISPRS Open J. Photogramm. Remote Sens. 2025, 15, 100083.

- Jiang, T.; Wang, Y.; Liu, S.; Zhang, Q.; Zhao, L.; Sun, J. Instance recognition of street trees from urban point clouds using a three-stage neural network. ISPRS J. Photogramm. Remote Sens. 2023, 199, 305–334.

- Liu, Y.; Zhang, A.; Gao, P. From Crown Detection to Boundary Segmentation: Advancing Forest Analytics with Enhanced YOLO Model and Airborne LiDAR Point Clouds. Forests 2025, 16, 248.

- Chen, S.; Liu, J.; Xu, X.; Guo, J.; Hu, S.; Zhou, Z.; Lan, Y. Detection and tracking of agricultural spray droplets using GSConv-enhanced YOLOv5s and DeepSORT. Comput. Electron. Agric. 2025, 235, 110353.

- Praneel, A.; Travis, B.; Kim-Doang, N. AI-enabled droplet detection and tracking for agricultural spraying systems. Comput. Electron. Agric. 2022, 202, 107325.

- Kumar, M.S.; Hogan, J.C.; Fredericks, S.A.; Hong, J. Visualization and characterization of agricultural sprays using machine learning based digital inline holography. Comput. Electron. Agric. 2024, 216, 108486.

- Seol, J.; Kim, C.; Ju, E.; Son, H.I. STPAS: Spatial-Temporal Filtering-Based Perception and Analysis System for Precision Aerial Spraying. IEEE Access 2024, 12, 145997–146008.

| Method | Input Type | Tasks | Advantages | Disadvantages | References |
|---|---|---|---|---|---|
| VGG series | Images | Leaf Disease Detection; Pest Identification; Weed Recognition; Seedling Information Monitoring. | Simple structures; Composed of common modules; Easy to understand and implement. | High computational complexity. | [75,117,118,119,120,129,142,152] |
| GoogLeNet series | Images | Leaf Disease Detection; Weed Recognition. | Obtains multi-scale features; High accuracy. | Complex structures; High computational complexity. | [121,122,131] |
| ResNet series | Images | Leaf Disease Detection; Pest Identification; Weed Recognition. | Alleviates the problems of vanishing and exploding gradients. | Contains much redundant information. | [75,122,129,130,142] |
| MobileNet series | Images | Leaf Disease Detection; Weed Recognition; Target and Non-target Object Detection; Seedling Information Monitoring. | Computationally efficient; Low memory usage; Fast inference. | Limited feature extraction capability; Poor performance in complex scenarios. | [123,124,126,142,147,158] |
| EfficientNet series | Images | Leaf Disease Detection. | Adjusts scaling parameters to achieve high performance and efficiency. | Simultaneously adjusting multiple parameters increases complexity. | [125] |
| U-Net series | Images | Leaf Disease Detection; Pest Identification; Weed Recognition; Spray Drift Assessment. | Preserves multi-level feature information; Good performance in small-sample conditions. | Complex structures; High memory consumption; Long training times for large-scale data. | [65,128,142,144,171] |
| DeepLab series | Images | Leaf Disease Detection; Seedling Information Monitoring. | Larger receptive field; Obtains multi-scale features. | High memory consumption; High computational complexity; Lacks robustness in complex scenarios. | [65,126,158] |
| SegFormer | Images | Pest Identification. | Captures global features; High accuracy. | Complex structures; High memory consumption; High computational complexity. | [127] |
| SegNet | Images | Leaf Disease Detection. | Obtains precise boundary information; Low memory requirements; Fewer model parameters. | Low segmentation accuracy for small objects. | [65] |
| R-CNN series | Images | Pest Identification; Target and Non-target Object Detection; Seedling Information Monitoring; Spray Drift Assessment. | Relatively high detection accuracy; Good performance on small objects. | Involves multi-step processes; High computational complexity. | [74,147,150,157,170] |
| YOLO series | Images | Pest Identification; Target and Non-target Object Detection; Seedling Information Monitoring; Spray Drift Assessment. | Adopts one-stage object detection architectures; Simple network structures. | Low accuracy in detecting small objects; Poor localization precision. | [75,76,134,135,136,137,140,144,145,147,154,155,156,165,168,169] |
| SSD series | Images | Target and Non-target Object Detection. | Obtains multi-scale features; High accuracy. | High memory consumption during training and inference; High computational complexity. | [147] |
| Multi-view-based Neural Network Models | Point clouds | Seedling Information Monitoring. | Utilizes high-performance 2D neural network models; High accuracy. | Must model the relationships between different view images; Loss of original 3D spatial features. | [165] |
| Voxel and Mesh-based Neural Network Models | Point clouds | Target and Non-target Object Detection. | Utilizes high-performance 3D neural network models; High accuracy. | Requires more memory and time for voxelization; Loss of original 3D spatial features. | [150] |
| Original point cloud-based Neural Network Models | Point clouds | Target and Non-target Object Detection; Seedling Information Monitoring; Spray Drift Assessment. | Fully utilizes the original spatial features of point clouds. | Must handle issues such as the inherent disorder (unordered nature) of point clouds. | [148,149,159,160,161,162,163,164,165,172] |
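The last row of the table notes that models operating on original point clouds must cope with the fact that the points have no inherent order. One common remedy, used by PointNet-style networks, is to apply the same function to every point and then aggregate with a symmetric operation such as a channel-wise maximum. The following is a minimal sketch of that idea in plain Python; the function names, the toy point cloud, and the random weights are hypothetical and are not taken from any of the reviewed works.

```python
import random

def pointwise_feature(point, weights):
    """Shared per-point map: ReLU of a linear projection of one (x, y, z) point."""
    return [max(0.0, sum(w * c for w, c in zip(row, point))) for row in weights]

def global_feature(points, weights):
    """Channel-wise max over all points, which is a symmetric (order-independent) pooling."""
    features = [pointwise_feature(p, weights) for p in points]
    return [max(channel) for channel in zip(*features)]

# Hypothetical toy data: 5 points and a fixed 4x3 weight matrix (illustrative only).
rng = random.Random(0)
cloud = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(5)]
weights = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(4)]

# Reorder the same points and compare the pooled descriptors.
shuffled = cloud[:]
rng.shuffle(shuffled)
same = global_feature(cloud, weights) == global_feature(shuffled, weights)
print(same)
```

Because the maximum over a set does not depend on the order in which its elements are visited, the pooled descriptor is identical for any permutation of the input points, whereas a model that simply concatenated the points would not have this property.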

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, J.; Liu, H.; Shen, Y. Image and Point Cloud-Based Neural Network Models and Applications in Agricultural Nursery Plant Protection Tasks. Agronomy 2025, 15, 2147. https://doi.org/10.3390/agronomy15092147
