1. Introduction

As an important economic crop, cotton has an annual global production of more than 25 million tons and generates at least 600 billion U.S. dollars per year [1]. Its economic impact is significant, as its value chain covers textiles, clothing, oil processing, and other fields. Cotton occupies a pivotal position in the global textile industry and agricultural economy [2,3]. However, cotton leaves are susceptible to various pests and diseases, including leaf spot, aphids, armyworms, bacterial blight, fusarium wilt, and leaf curl disease. These pests and diseases seriously affect the yield and quality of cotton and pose a threat to the economic returns of cotton farmers and the ecological environment [4]. Leaf blight, for example, can lead to the yellowing and shedding of cotton plant leaves and result in yield losses of more than 50%. According to Food and Agriculture Organization (FAO) statistics, the global annual loss of cotton due to pests and diseases exceeds 12 billion U.S. dollars, with 65% of this loss occurring in developing countries [5]. Traditional chemical pesticide spraying can temporarily control pests and diseases, but it depends on manual scouting: cotton farmers usually need to inspect the field 2–3 times a day, relying on visual observation and experience to determine the type of pest or disease. Whole-field inspection takes up to 3–5 h, and its accuracy is limited by the inspector’s expertise; grassroots cotton farmers misjudge the disease more than 40% of the time. These problems of efficiency, time cost, labor cost, and accuracy are becoming more obvious, prompting the need for smarter, more sustainable pest control strategies [6].

In recent years, the rapid development of artificial intelligence technology has made the application of deep learning in precision agriculture highly promising [7,8]. The agricultural sector has seen new methods for detecting pests and diseases [9]. Due to their high efficiency, high accuracy, and strong robustness, target detection algorithms based on deep learning have become a hotspot in pest and disease detection research [10,11]. These algorithms can automatically learn image features to rapidly localize and classify pests and diseases, providing a powerful tool for the early detection and precise prevention and control of cotton pests and diseases [12]. Rui et al. [13] proposed a multi-source data fusion (MDF) decision-making method based on ShuffleNet V2 for detecting grape leaf pests and diseases. The method combines RGB images, multispectral images, and thermal infrared images, improving detection accuracy and robustness through multi-source data fusion, especially in complex environments. To further enhance the robustness of the model, a label smoothing technique is introduced to reduce model overconfidence. Yang et al. [14] proposed a new maize pest detection method, Maize-YOLO, which is based on YOLOv7 and introduces the CSPResNeXt-50 module and the VoVGSCSP module. The CSPResNeXt-50 module improves the detection accuracy by optimizing the network structure, while the VoVGSCSP module further improves the detection speed. The method achieves 76.3% mAP and 77.3% recall. However, neither method establishes an effective local–global feature interaction chain, and both lack an adaptive recalibration mechanism for lesion channels, making it difficult to detect features against complex backgrounds.

Li et al. [15] proposed a new deep learning network, YOLO-JD, for detecting jute pests and diseases. The network introduces an hourglass feature extraction module (SCFEM), a deep hourglass feature extraction module (DSCFEM), and a spatial pyramid pooling module (SPPM) to improve the feature extraction efficiency. Furthermore, a comprehensive loss function, including IoU loss and CIoU loss, is proposed to enhance the detection accuracy for pests and diseases in jute production. Wang et al. [16] proposed an improved YOLO model, YOLO-PWD, for detecting pine wood nematode disease (PWD). The model is based on YOLOv5s and introduces a squeeze and excitation (SE) network, a CBAM, and a bi-directional feature pyramid network (BiFPN) to strengthen the feature extraction capability. Additionally, a Dynamic Convolutional Kernel (Dynamic Conv) was introduced to improve the model’s deployability on edge devices (e.g., Autonomous Aerial Vehicle (AAV)) and the accuracy of detecting PWD in complex environments, providing technical support for the conservation of pine forest resources and sustainable environmental development. Yuan et al. [17] proposed a new highly robust detection method for pine wood nematode disease, YOLOv8-RD, which is based on the YOLOv8 model. This approach combines the advantages of residual learning and a fuzzy deep neural network (FDNN), and designs a residual fuzzy module (ResFuzzy) to efficiently filter image noise and enhance the smoothness of background features. Meanwhile, the study introduces a dynamic upsampling operator (DySample) to dynamically adjust the upsampling step size so as to effectively recover the detail information in the feature map. None of the above methods addresses the variability of target scale in agricultural scenarios, so the models cannot adaptively focus on key areas and perform poorly under complex background interference.

Liu et al. [18] proposed the tomato leaf disease detection model YOLO-BSMamba, which enhances global context modeling by fusing convolution with the state space model (HCMamba module) and introduces the SimAM attention mechanism to suppress background noise, achieving an 86.7% mAP@0.5 in complex backgrounds. However, its feature extraction process still relies on static convolution kernels with fixed sampling positions, resulting in insufficient geometric adaptability to lesion deformation (such as irregular edge expansion). Similarly, Fang et al. [19] proposed RRDD-Yolov11n for rice diseases, adopted the SCSABlock attention module to optimize multi-semantic fusion, and utilized CARAFE upsampling to restore detailed features, increasing the mAP to 88.3%. However, CARAFE only reorganizes the kernel weights through content prediction and does not achieve dynamic offset learning of the sampling position, which limits the perception accuracy of the model for the morphological changes of the lesion.

Detecting cotton leaf pests and diseases is difficult [20,21]. Significant differences exist in the leaf morphology of different cotton varieties [22]. Due to differences in cuticle thickness and chlorophyll levels, the same disease can present different symptoms. For example, wilt is characterized by yellowing and curling in young leaves and brown necrotic spots in mature leaves. Target size also varies widely: aphids are only 0.5–2 mm long, leaf spot lesions are 2–15 mm in diameter, and wilt covers the entire leaf blade. These differences make it hard for pest and disease detection models to identify targets accurately. Meanwhile, the complex agricultural background, combined with varying weather conditions and light intensity, changes the appearance of the scene from image to image [23]. Together, these factors give cotton leaf pests and diseases multi-scale characteristics and raise the requirements on detection accuracy.

Developing intelligent detection algorithms for cotton leaf pests and diseases is therefore of great importance for the sustainability of modern agriculture [24]. Such technology allows scientists to manage and safeguard cotton resources intelligently, promote biodiversity, and identify the early onset of pests or plant diseases more effectively. It can also reduce economic losses and help maintain a healthy and stable ecological environment [25,26].

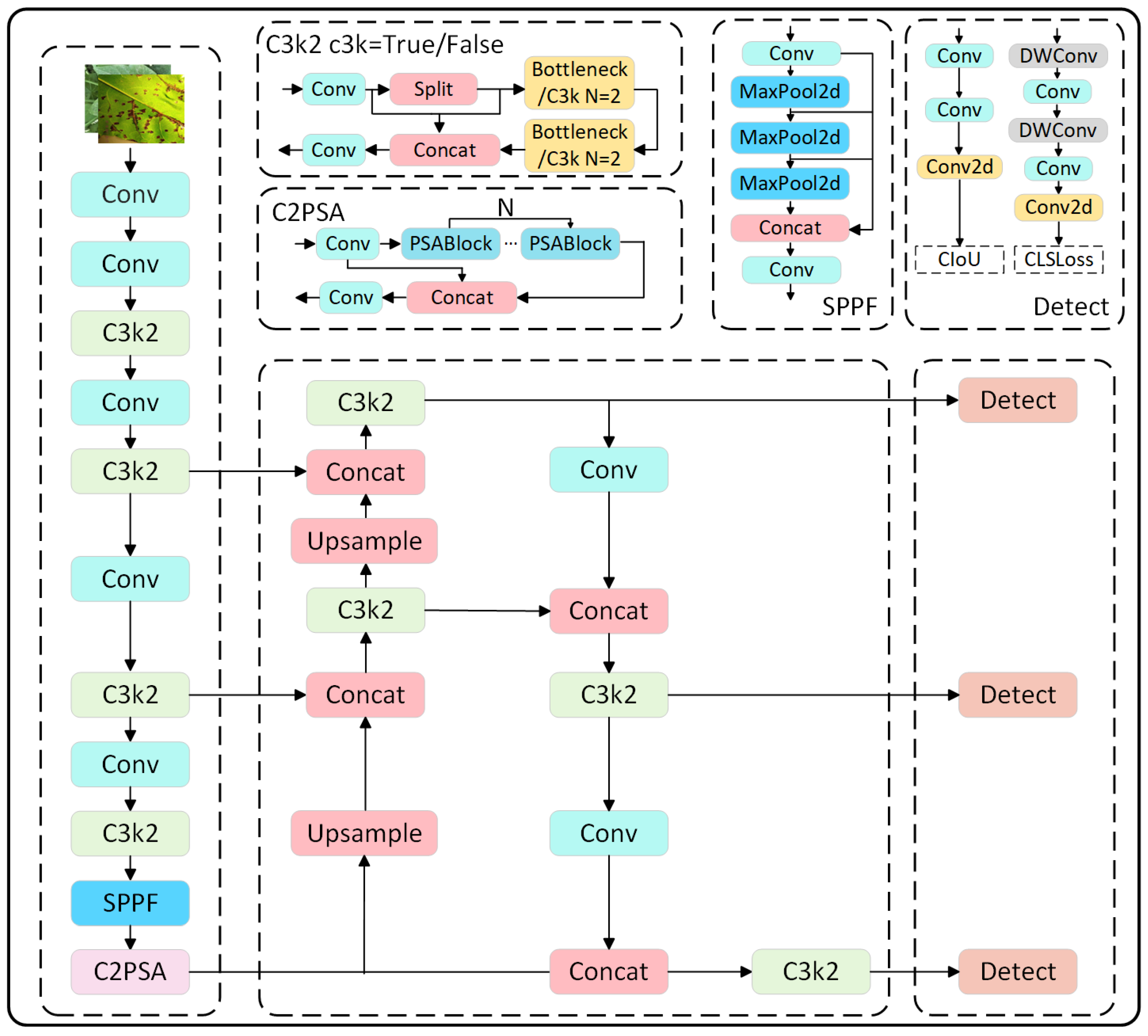

To address the aforementioned challenges of complex backgrounds, diverse lesion morphologies, and information loss from fixed sampling, this study proposes a novel model, RDL-YOLO, which integrates RepViT-A for enhancing local–global feature interaction, DDC for achieving a dynamic multi-scale receptive field, and LDConv for dynamic sampling. Based on the YOLOv11 framework, RDL-YOLO effectively improves its capability for multi-scale detection of cotton leaf pests and diseases. The main contributions of this work are as follows:

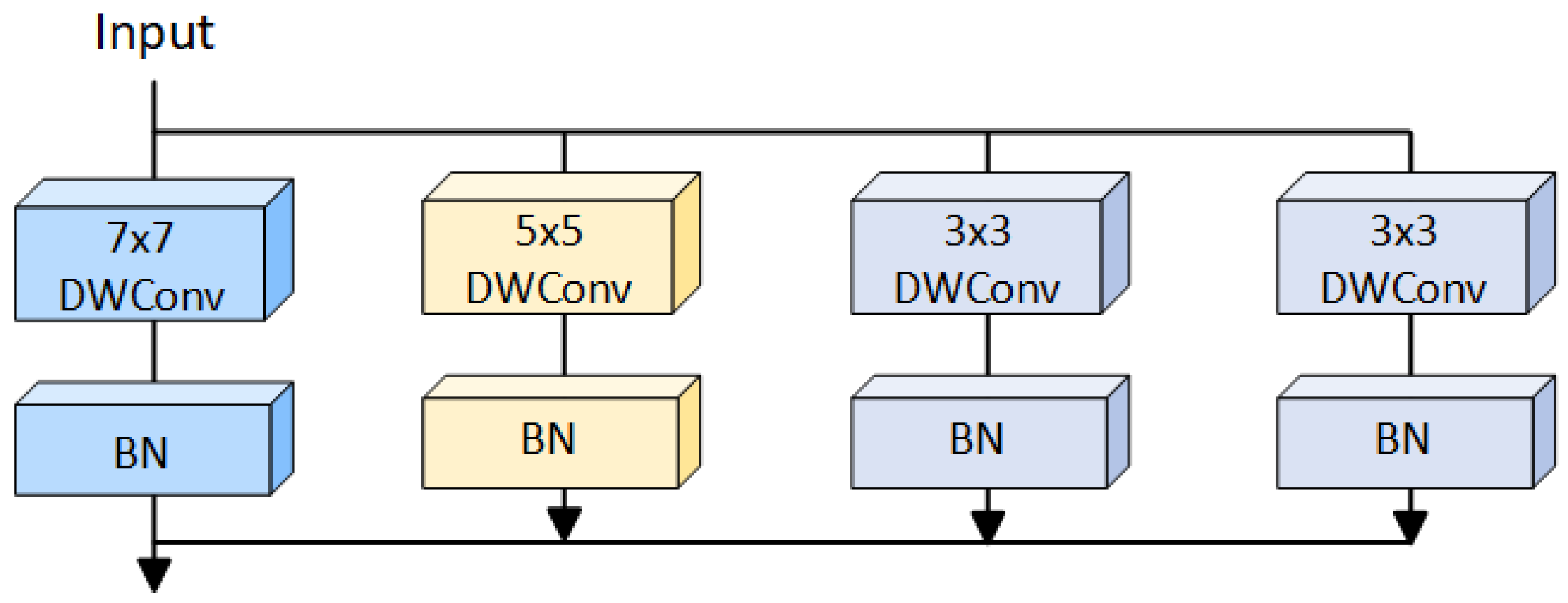

(a) To enhance the model’s ability to extract features of cotton leaf pests and diseases, RepViT-Atrous Convolution (RepViT-A) was adopted as the backbone network. The original RepViTBlock and RepViTSEBlock were replaced with the improved AADBlock and AADSEBlock modules, which enhance local–global interaction. The AADSEBlock extends the AADBlock with a squeeze-and-excitation mechanism that recalibrates channel features, significantly improving the response strength and extraction accuracy of key lesion features under complex background interference.

(b) To address the inflexible receptive field of traditional convolution and its excessive sensitivity to noise such as cotton leaf fuzz and uneven illumination, a DDC convolution module is proposed. Dilated convolutions extract multi-scale features and adjust their relative weights, yielding a dynamic multi-scale receptive field that further enhances the adaptability of the model to defects of different shapes and sizes. To counter the loss of detail under illumination changes, the channel–spatial joint filtering of CBAM effectively suppresses non-lesion background interference in cotton field images.

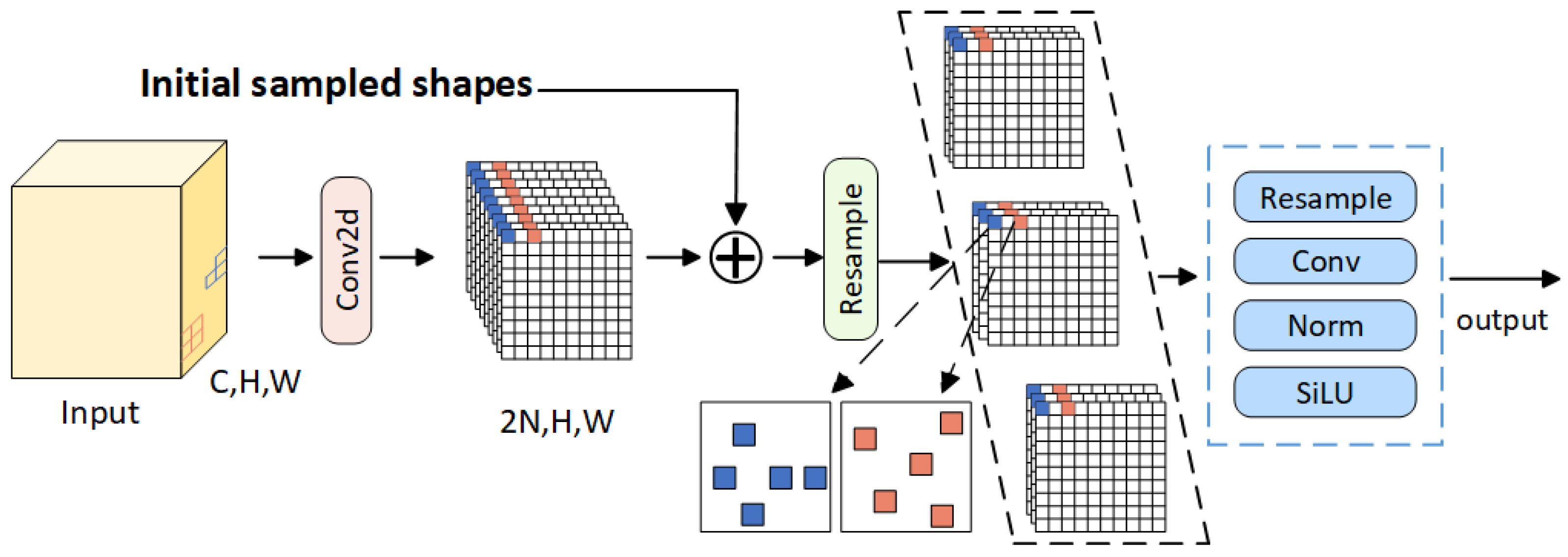

(c) To capture cotton leaf features more rapidly, LDConv is introduced to resolve the loss or inaccuracy of feature information caused by the fixed sampling positions of traditional convolutions. LDConv downsampling is combined with a dynamic sampling mechanism that resamples the feature map according to learned offsets, further optimizing feature fusion.
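Contribution (a) relies on squeeze-and-excitation to recalibrate channel features. The following is only a minimal sketch of the generic squeeze-and-excitation idea in plain Python, not the AADSEBlock itself: the function name `squeeze_excite`, the toy feature map, and the weight matrices `w1` and `w2` are all hypothetical values chosen for illustration.

```python
import math


def squeeze_excite(feature_map, w1, w2):
    """Recalibrate the channels of a C x H x W feature map given as nested lists.

    w1: (C/r) x C weights of the reduction layer (hypothetical values)
    w2: C x (C/r) weights of the expansion layer (hypothetical values)
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]
    # Excitation, step 1: reduce dimensionality, then apply ReLU.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    # Excitation, step 2: expand back to C channels, then a sigmoid gate in (0, 1).
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w2
    ]
    # Scale: multiply every value in a channel by that channel's gate.
    scaled = [
        [[value * gate for value in row] for row in channel]
        for channel, gate in zip(feature_map, gates)
    ]
    return scaled, gates


# Toy input: channel 0 responds strongly (a "lesion" channel), channel 1 weakly.
features = [
    [[4.0, 4.0], [4.0, 4.0]],
    [[1.0, 1.0], [1.0, 1.0]],
]
w1 = [[1.0, -1.0]]    # 2 channels -> 1 hidden unit (illustrative weights)
w2 = [[1.0], [-1.0]]  # 1 hidden unit -> 2 channel gates (illustrative weights)
recalibrated, gates = squeeze_excite(features, w1, w2)
print(gates)
```

With these illustrative weights the gate for the strongly responding channel is close to 1 and the gate for the weak channel is close to 0, which is the sense in which channel responses are "recalibrated". In a trained network the weights would be learned rather than hand-picked.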

3. Results

This section evaluates the RDL-YOLO model on the task of detecting cotton leaf pests and diseases. Its performance indicators were verified through ablation and comparative experiments, which also validate the proposed modules and the overall model.

3.1. Ablation Experiments

The performance of the cotton leaf pest and disease detection model was analyzed through three evaluation indices: P, R, and mAP.
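The three indices are not restated in this section. Assuming the standard object detection definitions (an assumption, since the exact formulation is not given here), with TP, FP, and FN the numbers of true positives, false positives, and false negatives, and N the number of classes, they can be written as:

$$
P = \frac{TP}{TP + FP}, \qquad
R = \frac{TP}{TP + FN}, \qquad
AP = \int_{0}^{1} P(R)\,\mathrm{d}R, \qquad
mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i
$$

Under these definitions, a gain in recall means fewer missed lesions, while a gain in precision means fewer false alarms; mAP summarizes the precision–recall trade-off over all classes.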

Table 3 shows the results of ablation experiments with different module combinations. The results indicate that adopting the RepViT-A structure enhanced the model’s detection capabilities: recall increased from 69.2% to 71.8%, and mAP improved from 73.4% to 76.4%. This suggests that RepViT-A enhances the model’s perception of cotton leaf pest regions at multiple scales. Furthermore, introducing the LDConv module on top of RepViT-A improved spatial adaptability in feature extraction, increasing recall to 73.4% and mAP to 76.7%.

Additionally, the DDC module on top of RepViT-A shows strong detection ability, with mAP reaching 77.0% and precision reaching 85.0%. The structure is more sensitive in differentiating between diseased areas and complicated backgrounds, thus reducing false alarms. After the fusion of the three modules, RepViT-A, DDC, and LDConv, the model achieved its best overall result in detecting cotton leaf pests and diseases, with a recall of 73.6% and a mAP of 77.1%. This result can be attributed to the combined advantages of the modules in feature extraction, fusion, and spatial perception. These modular ablation experiments show how each component enhances the model’s ability to detect cotton leaf pests and diseases. Ultimately, the final integrated model demonstrates superior performance across all types of pests and diseases, particularly in the detection of small targets, occluded objects, and regions with unclear boundaries.

3.2. Comparative Experiments

Recent studies have shown that, on standard object detection datasets, an improvement of more than 2% in mAP relative to the baseline is generally considered a meaningful performance improvement [33,34]. Therefore, this work used a 2% mAP difference as the practical threshold for judging performance differences between models. In this study, the performance of the proposed RDL-YOLO model was evaluated on a cotton leaf pest and disease detection task. A variety of target detection models, including YOLOv10n, YOLOv11n, YOLOv12n, Real-Time Detection Transformer (RT-DETR50), DETRs as Fine-grained Distribution Refinement (D-FINE) [35], and Spatial Transformer Network-YOLO (STN-YOLO) [36], were compared with the proposed model on the Cotton Leaf dataset. Additionally, the generalization ability of the model was verified on the publicly available Tomato Leaf Diseases Detection Computer Vision dataset. The results of the comparison experiments are shown in Table 4. On the Cotton Leaf dataset, RDL-YOLO achieves a precision (P) of 81.7%, a recall (R) of 73.6%, and a mAP of 77.1%, outperforming all comparison models in overall detection performance. Compared with YOLOv11n (80.3% P, 69.2% R, 73.4% mAP), the precision of RDL-YOLO increased by 1.4%, the recall increased by 4.4%, and the mAP increased by 3.7%. Compared with RT-DETR50 (83.9% P, 69.0% R, 73.2% mAP), the precision of RDL-YOLO decreased by 2.2%, the recall increased by 4.6%, and the mAP increased by 3.9%. Although the precision is slightly lower, the comprehensive performance of RDL-YOLO is better than that of RT-DETR50. Compared with D-FINE (74.9% P, 65.1% R, 74.9% mAP), the precision of RDL-YOLO increased by 6.8%, the recall increased by 8.5%, and the mAP increased by 2.2%, further demonstrating its comprehensive advantages in target coverage, target recognition, and positioning accuracy. Compared with STN-YOLO (79.1% P, 70.4% R, 73.9% mAP), the precision of RDL-YOLO increased by 2.6%, the recall increased by 3.2%, and the mAP increased by 3.2%, demonstrating its stronger detection ability for targets in complex backgrounds. On the Tomato Leaf Diseases Detection dataset, RDL-YOLO also demonstrated excellent generalization ability, achieving a mAP of 74.5% and outperforming all comparison models. In contrast, the mAP values for YOLOv10n, YOLOv11n, YOLOv12n, RT-DETR50, D-FINE, and STN-YOLO were 66.5%, 68.6%, 67.2%, 61.5%, 67.9%, and 67.7%, respectively. The mAP of RDL-YOLO thus exceeded that of every comparison model by more than the 2% threshold, which further supports the stability and adaptability of RDL-YOLO in cross-crop scenarios. The number of parameters (Params), GFLOPs, and inference speed (FPS) are presented in Table 4 to comprehensively evaluate the computational cost. These metrics facilitate a trade-off analysis between detection accuracy and inference efficiency. RDL-YOLO has a large number of parameters and a relatively low inference speed; addressing these limitations will be an important focus of future research.
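The differences quoted above are simple subtractions of the reported percentages, i.e., percentage-point gains. As a quick cross-check, the sketch below recomputes them from the Cotton Leaf figures quoted in the text and applies the 2% mAP threshold. The dictionary layout and the helper name `gains_over` are illustrative choices; YOLOv10n and YOLOv12n are omitted because their Cotton Leaf values are not quoted in the text.

```python
# (precision, recall, mAP) in percent on the Cotton Leaf dataset, as quoted in the text.
reported = {
    "RDL-YOLO": (81.7, 73.6, 77.1),
    "YOLOv11n": (80.3, 69.2, 73.4),
    "RT-DETR50": (83.9, 69.0, 73.2),
    "D-FINE": (74.9, 65.1, 74.9),
    "STN-YOLO": (79.1, 70.4, 73.9),
}
MAP_THRESHOLD = 2.0  # percentage points, the practical threshold adopted in this work


def gains_over(model, reference="RDL-YOLO"):
    """Percentage-point gain of `reference` over `model` for P, R, and mAP."""
    ref, other = reported[reference], reported[model]
    return tuple(round(r - o, 1) for r, o in zip(ref, other))


for name in reported:
    if name == "RDL-YOLO":
        continue
    d_p, d_r, d_map = gains_over(name)
    verdict = "meaningful" if d_map > MAP_THRESHOLD else "within threshold"
    print(f"{name:10s} dP={d_p:+.1f} dR={d_r:+.1f} dmAP={d_map:+.1f} ({verdict})")
```

Every recomputed gain matches the figure stated in the paragraph, and all four mAP gains exceed the 2-point threshold, with D-FINE the closest at 2.2 points.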

Specifically, the advantages of RDL-YOLO are mainly reflected in the following two aspects:

(1) In the Cotton and Tomato Leaf datasets, the recall of RDL-YOLO was 73.6% and 77.1%, respectively. In the Cotton dataset, the recall of RDL-YOLO exceeded that of all the comparison models by at least 3%. In the Tomato Leaf dataset, the recall of YOLOv11n was slightly higher (79.3%), but RDL-YOLO still maintained a significant lead over all the other models. In particular, compared with YOLOv10n and RT-DETR50, the recall increased by 18.2% and 12.2%, respectively. This indicates that RDL-YOLO was more sensitive and comprehensive in capturing target features, thereby effectively reducing the rate of missed detections.

(2) In the Cotton Leaf dataset, RDL-YOLO achieved a mAP of 77.1%, representing improvements of 3.7% over YOLOv11n and 3.2% over STN-YOLO. In the Tomato Leaf dataset, RDL-YOLO achieved a mAP of 74.5%, exceeding the performance of all compared models by more than 2%. This indicates that RDL-YOLO exhibits stable performance in diverse detection scenarios.

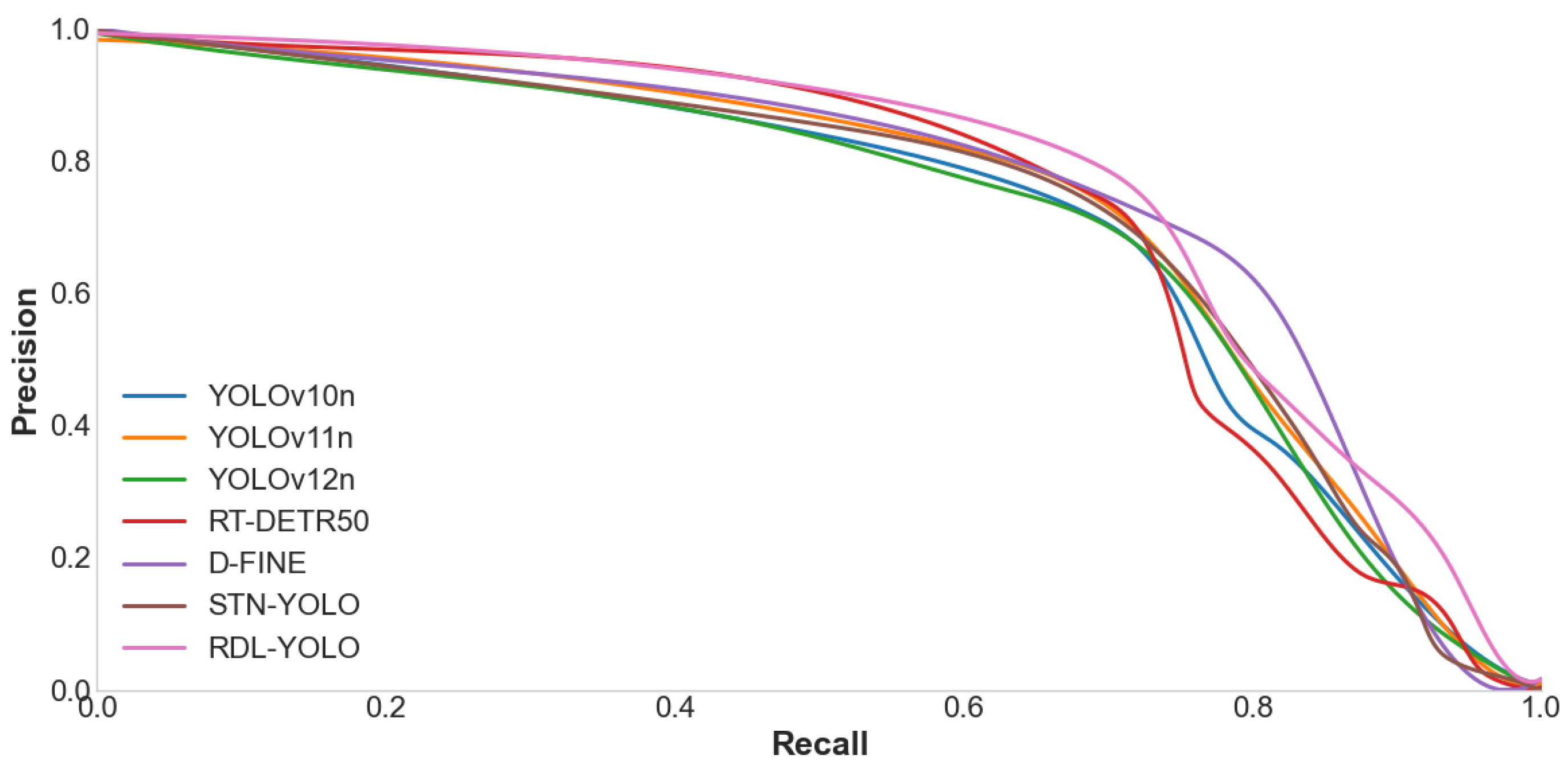

Combined with the P-R curves shown in Figure 9, the results indicate that RDL-YOLO significantly enhances overall precision and recall while concurrently preserving computational efficiency. These findings suggest that RDL-YOLO achieves an optimal balance between precision and recall in the context of cotton pest and disease detection. This work will provide methodological support and practical strategies for pest and disease monitoring within precision agriculture frameworks.

3.3. Visual Analysis

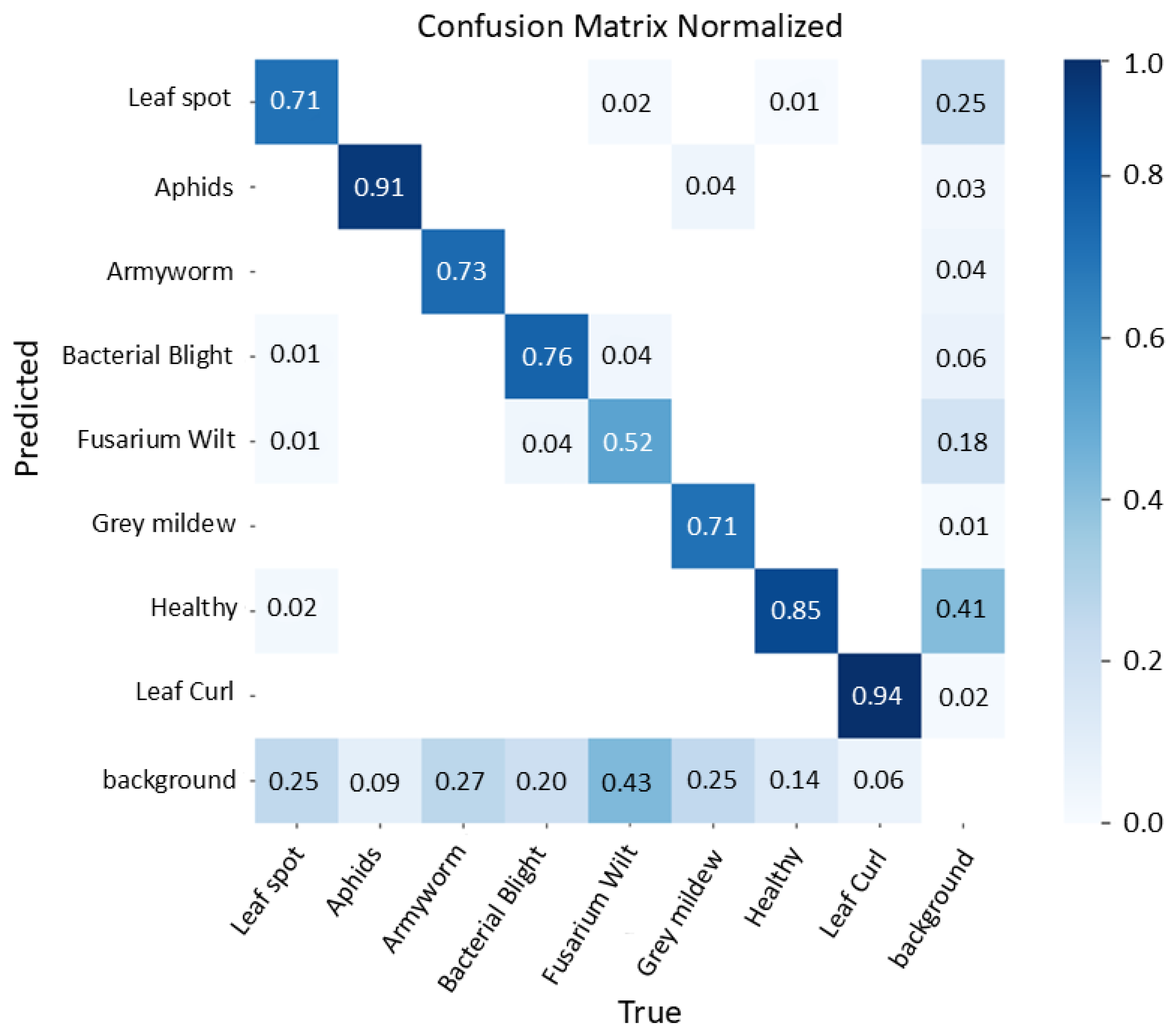

As shown in Figure 10, the normalized confusion matrix of RDL-YOLO on the cotton leaf pests and diseases detection task reflects the improvement effect and existing shortcomings of each of the three modules.

First, the RepViT-A module significantly improves the response strength and extraction accuracy of high-contrast spots (e.g., leaf curl and aphids) by enhancing local–global interactions and enabling channel feature recalibration—94% accuracy for leaf curl and 91% for aphids—with only a very small amount of misclassification as background. Second, the parallel adaptive multi-scale convolutional kernel weights of the DDC convolutional module effectively extend the model’s receptive field to defects of different shapes and sizes, resulting in a steady improvement in the detection accuracy of armyworm (73%), leaf spot disease (71%), and bacterial blight disease (76%), but there are still about 20–25% of samples missed due to background interference.

Finally, LDConv’s dynamic sampling mechanism optimized feature fusion by learning offsets to resample key locations, which helped the recognition accuracy of some fine textures (e.g., gray mildew) reach 71%. However, the accuracy for Fusarium wilt was still only 52%; 43% were misidentified as background, and 18% were confused with bacterial mottle disease. Healthy leaves were correctly recognized in 85% of cases. Overall, the three improvements play a positive role in most of the mainstream pest and disease detection tasks, but for very low-contrast spots or spots that closely resemble the background, the model still needs richer data augmentation and stronger attention mechanisms to improve its robustness and fine-grained recognition ability.

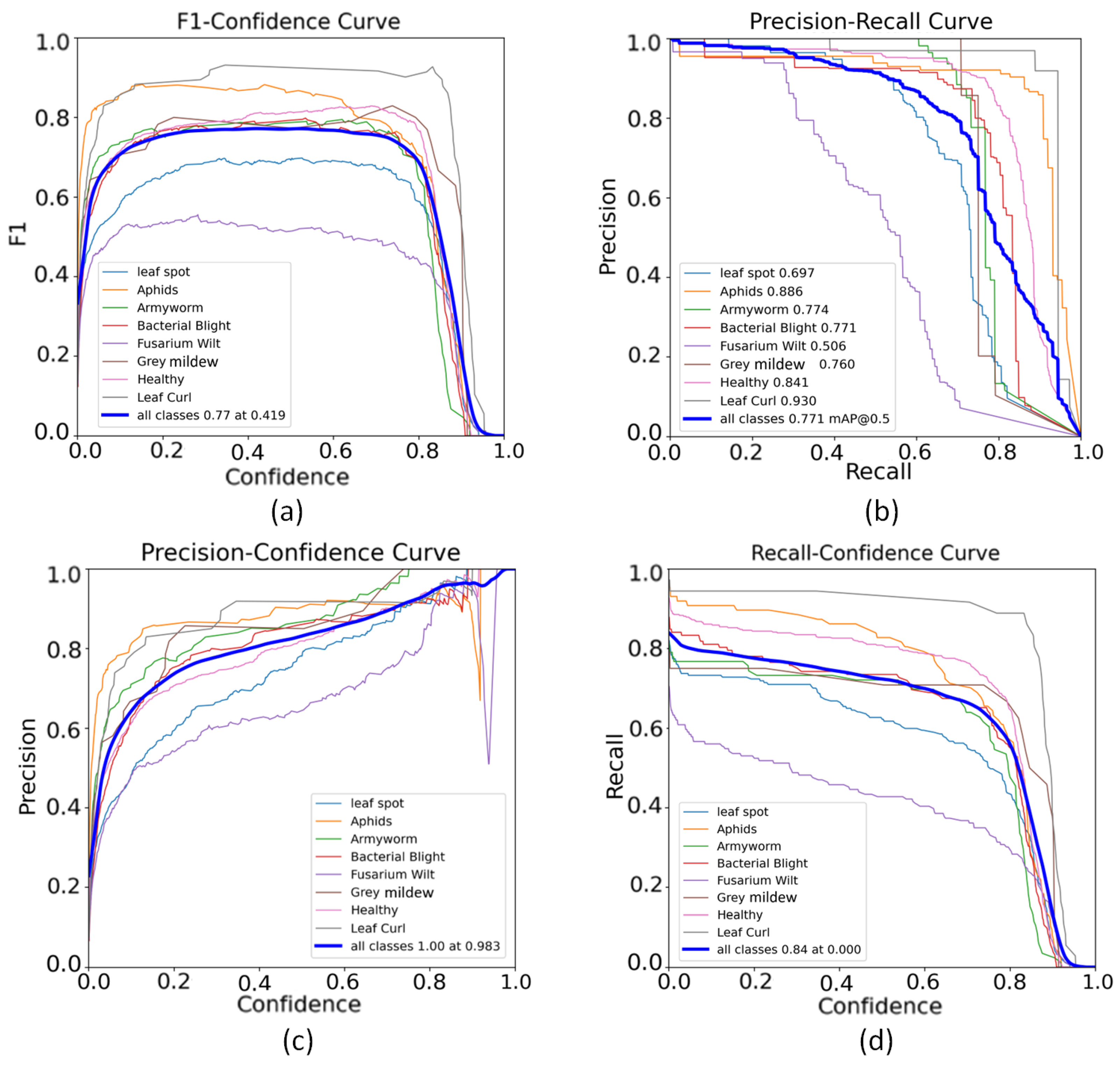

Figure 11 demonstrates the superior performance of the RDL-YOLO model through a multi-dimensional evaluation curve. The F1-confidence curve shows that RDL-YOLO has a peak F1 value of 0.77, which is significantly better than the benchmark model.

This robustness stems from its RepViT-A module, which significantly improves the response strength and extraction accuracy of key spot features under complex background interference by enhancing local–global interactions and recalibrating channel features. The precision–recall curve further validates RDL-YOLO’s strong category discrimination capabilities, with excellent mAP@0.5 values for key categories such as leaf curl (0.930) and healthy (0.841). This is attributed to its DDC convolutional module, which realizes a dynamic multi-scale receptive field by learning the weights of convolutional kernels at different scales in parallel. The dynamic multi-scale design effectively captures irregular disease patterns and is thus able to adaptively perceive disease spots of different sizes and shapes. The precision–confidence curve reaches near-perfect precision at a very high confidence threshold (1.00 at 0.983 confidence), demonstrating a very low false detection rate for highly confident predictions. This behavior is driven by LDConv’s dynamic sampling mechanism, which avoids losing key features to fixed sampling locations by guiding resampling with learned offsets. Meanwhile, the recall–confidence curve (recall remains 0.84 at a confidence level of 0.000) further confirms the strong generalization ability of RDL-YOLO in low-confidence scenarios, and its optimized feature fusion ensures comprehensive coverage of diseased areas. In summary, the synergy of the feature recalibration of RepViT-A, the adaptive scale sensing of DDC, and the deformable sampling mechanism of LDConv enables RDL-YOLO to balance precision and recall (mAP@0.5: 0.771; all-class peak F1: 0.77) and establishes a robust framework for crop disease detection in complex environments.
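The F1 score referred to above is the harmonic mean of precision and recall. As a rough consistency check (a sketch only), the snippet below evaluates it at the precision and recall reported for RDL-YOLO on the Cotton Leaf dataset. It assumes those two values were measured at the same confidence threshold, which the text does not state, so the result is indicative rather than a reproduction of the peak of the F1-confidence curve.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall, both given as fractions in [0, 1]."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall reported for RDL-YOLO on the Cotton Leaf dataset.
f1 = f1_score(0.817, 0.736)
print(round(f1, 3))
```

The value comes out at about 0.774, in line with the peak F1 of 0.77 read from Figure 11.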

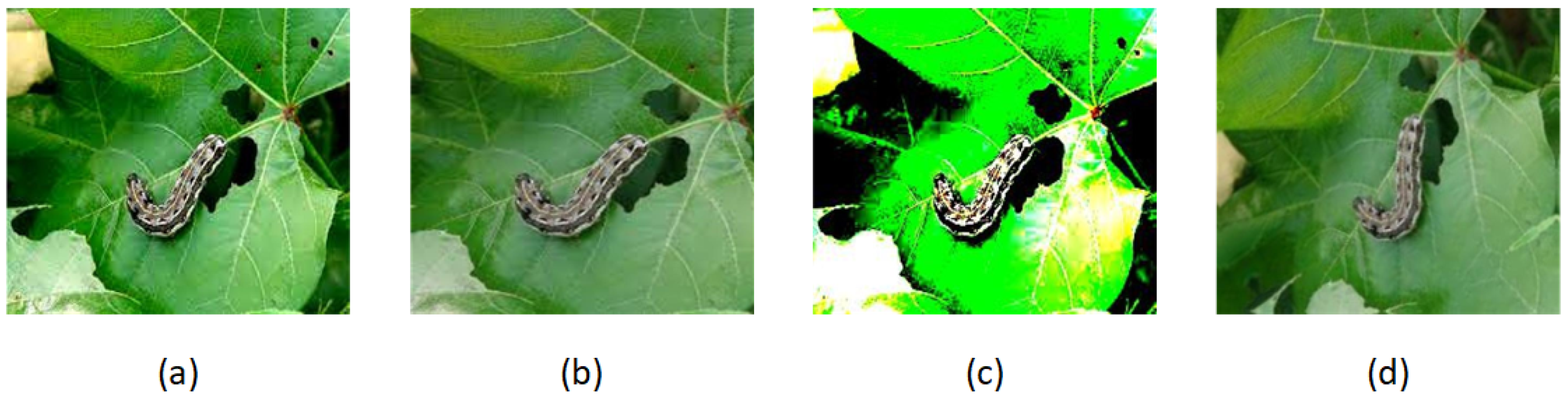

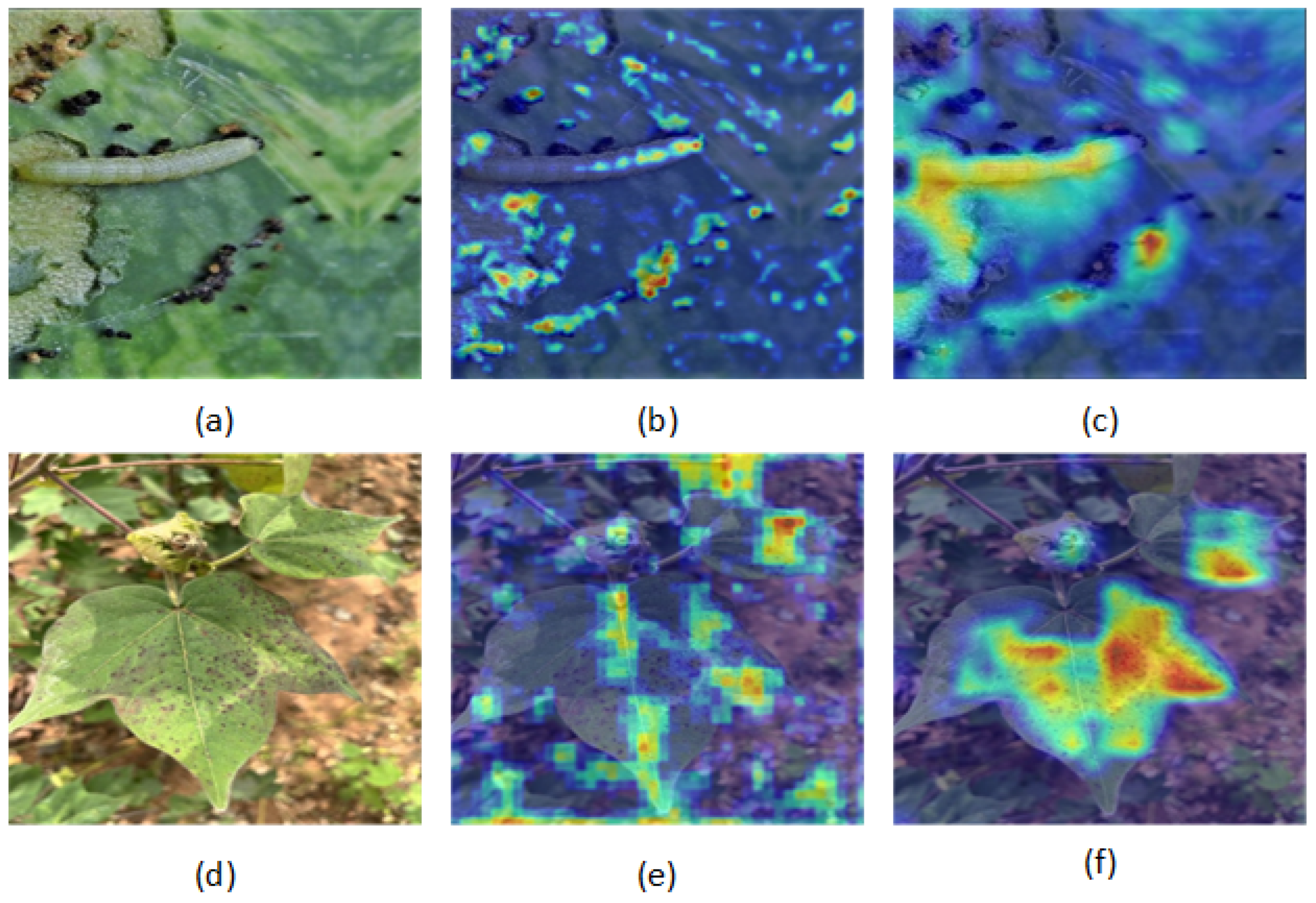

To further validate the model’s local–global interaction ability, Figure 12 illustrates the attention distribution of a representative sample visualized using Grad-CAM. It can be seen from (b) and (e) that the heatmaps generated by YOLOv11n suffer from attention drift and inaccurate localization, which are particularly prominent in images with blurred lesion edges or densely distributed lesions. This indicates that YOLOv11n has certain deficiencies in capturing local fine-grained features and global context information.

As shown in (c) and (f), RDL-YOLO effectively enhances the local–global interaction ability by introducing the RepViT-A backbone, DDC module, and LDConv structure, and realizes the feature adaptive calibration of the channel dimension. The synergy of these three structures enables the model to enhance its response to key lesion areas while suppressing background noise interference, significantly improving the discriminative ability of lesion detection.

It can be observed from the heatmap that the attention of RDL-YOLO in the lesion area is more concentrated, compact, and highly consistent with the shape of the lesion. It can accurately focus on the center of the lesion and reasonably extend to the edge area. This indicates that the model has a stronger global semantic understanding ability and local fine positioning ability. Especially in sample image (f), RDL-YOLO demonstrates collaborative attention toward both the edge and central regions of the lesion, while effectively recalibrating channel features. The results indicate that RDL-YOLO has a better spatial attention collaborative mechanism and the ability of fine feature regulation across channels.

Figure 13a–c shows a case in which large-area disease spots and multiple small-scale disease spots appear in the same image. The detection capability of YOLOv11n is relatively limited, and YOLOv12n exhibits a low level of confidence in its predictions. However, RDL-YOLO can accurately detect multiple lesion areas of different scales, and the confidence level is improved, indicating that it has a better multi-scale receptive field ability. This is attributed to the dynamic multi-scale receptive field mechanism of DDC.
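To make the idea of a dynamic multi-scale receptive field more concrete, the following one-dimensional sketch runs the same 3-tap kernel at several dilation rates and fuses the branches with softmax-normalized weights. It is an illustration of the general technique, not the DDC module: the dilation rates (1, 2, 3), the softmax normalization, the averaging kernel, and all function names are assumptions, and the CBAM filtering stage is left out.

```python
import math


def effective_kernel(kernel_size, dilation):
    """Span covered by a dilated kernel: k + (k - 1) * (d - 1)."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def dilated_conv1d(signal, kernel, dilation):
    """Same-size 1D dilated convolution (cross-correlation) with zero padding."""
    half = (len(kernel) // 2) * dilation
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i - half + k * dilation  # tap position for this kernel weight
            if 0 <= j < len(signal):
                acc += w * signal[j]
        out.append(acc)
    return out


def softmax(logits):
    """Turn raw branch scores into positive weights that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def multi_scale_fuse(signal, kernel, dilations, branch_logits):
    """Weighted sum of dilated branches; the logits would be learned in practice."""
    weights = softmax(branch_logits)
    branches = [dilated_conv1d(signal, kernel, d) for d in dilations]
    fused = [
        sum(w * b[i] for w, b in zip(weights, branches))
        for i in range(len(signal))
    ]
    return fused, weights


dilations = [1, 2, 3]                                # assumed rates, for illustration
spans = [effective_kernel(3, d) for d in dilations]  # receptive span of each branch
signal = [0, 0, 1, 1, 1, 1, 0, 0, 0]                 # a toy "lesion" of width 4
kernel = [1 / 3, 1 / 3, 1 / 3]                       # simple averaging kernel
fused, weights = multi_scale_fuse(signal, kernel, dilations, [0.0, 0.0, 0.0])
print(spans, weights)
```

With a 3-tap kernel, the three branches cover spans of 3, 5, and 7 samples. Raising the logit of one branch shifts the fused response toward that scale, which is the sense in which the receptive field adapts to lesion size.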

Figure 13d–f compares the detection of armyworm, a case that highlights dynamic sampling, in which the feature map is resampled under the guidance of learned offsets. The detection box generated by YOLOv11n does not fit the target object, while YOLOv12n performs better. The detection box generated by RDL-YOLO is the most accurate, precisely locating the actual area of the target. This indicates that fixed sampling has perceptual limitations for target detection, whereas RDL-YOLO can learn appropriate offsets through the LDConv dynamic sampling mechanism, achieving more effective detection of non-central and asymmetric targets.
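Offset-guided resampling can be sketched as follows: each tap of a 3x3 kernel is shifted by its own (dy, dx) offset, and the feature map is read at the resulting fractional position by bilinear interpolation. This is a generic illustration of sampling with offsets, not the LDConv implementation. The function names, the toy feature map, and the hand-picked offsets are hypothetical; in a trained network the offsets would be learned.

```python
import math


def bilinear_sample(feature, y, x):
    """Sample a 2D grid (list of rows) at fractional (y, x); outside the grid counts as 0."""
    y0, x0 = math.floor(y), math.floor(x)
    dy, dx = y - y0, x - x0

    def at(r, c):
        if 0 <= r < len(feature) and 0 <= c < len(feature[0]):
            return feature[r][c]
        return 0.0

    return (
        at(y0, x0) * (1 - dy) * (1 - dx)
        + at(y0, x0 + 1) * (1 - dy) * dx
        + at(y0 + 1, x0) * dy * (1 - dx)
        + at(y0 + 1, x0 + 1) * dy * dx
    )


def offset_conv_at(feature, center, weights, offsets):
    """3x3 convolution response at `center`, each tap shifted by its own (dy, dx) offset."""
    cy, cx = center
    base = [(r, c) for r in (-1, 0, 1) for c in (-1, 0, 1)]  # regular 3x3 grid
    total = 0.0
    for (by, bx), w, (oy, ox) in zip(base, weights, offsets):
        total += w * bilinear_sample(feature, cy + by + oy, cx + bx + ox)
    return total


# Toy feature map: the "target" sits off-center relative to the corner position (0, 0).
feature = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 2.0, 0.0],
    [0.0, 3.0, 4.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
weights = [1.0 / 9] * 9
fixed = offset_conv_at(feature, (0, 0), weights, [(0.0, 0.0)] * 9)    # fixed grid
shifted = offset_conv_at(feature, (0, 0), weights, [(0.5, 0.5)] * 9)  # taps moved toward target
print(fixed, shifted)
```

With zero offsets the result equals an ordinary 3x3 convolution at that position. Shifting the taps half a pixel toward the target raises the response, which mirrors the claim that offset sampling helps with non-central and asymmetric targets.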

The comprehensive analysis of Figure 10, Figure 11, Figure 12, and Figure 13 confirms that RDL-YOLO shows stronger robustness and practicability in numerical performance, perceptual visualization, and fine-grained target modeling.

4. Discussion

The RDL-YOLO model achieves significant performance gains in cotton leaf pest and disease detection tasks. Its core innovations are threefold. First, the RepViT-A backbone network enhances local–global feature interactions and introduces channel feature recalibration. Second, because large-scale lesion spread requires context modeling with a large receptive field, DDC convolution realizes a dynamic multi-scale receptive field that suppresses irrelevant background and enhances the intensity of the spot response. Third, the LDConv sampling mechanism optimizes feature fusion, and its dynamic sampling better matches the irregular morphology of the spots and reconstructs morphological details more accurately. Together, these components effectively address the key problems of weak response of lesion features, poor morphological adaptability, and loss of spatial information in complex farmland backgrounds.

Experiments show that the RDL-YOLO model achieves a significant performance improvement in the task of detecting diseases and pests of cotton leaves, with its mAP reaching 77.1%. It outperforms YOLOv11n (73.4%), STN-YOLO (73.9%), and the Transformer-based RT-DETR (73.2%), indicating that the proposed model has an advantage over both traditional convolutional structures and newer Transformer-based detectors. Although the mAP of D-FINE on the cotton leaf dataset reached 74.9%, RDL-YOLO, with its multi-scale dynamic receptive field and offset sampling, achieved a higher mAP and also improved recall to 73.6% (65.1% for D-FINE) and precision to 81.7% (74.9% for D-FINE). In the generalization evaluation on the Tomato Leaf Diseases dataset, RDL-YOLO reached a mAP of 74.5% and a recall of 77.1%, and its mAP was also superior to that of YOLOv11n (68.6%), YOLOv12n (67.2%), STN-YOLO (67.7%), and D-FINE (67.9%). The results further verify the model’s detection ability across different crops and disease types.

Furthermore, RDL-YOLO was also compared with other methods. Zambre et al. [36] proposed the STN-YOLO model, which introduces a spatial transformer module to enhance robustness against rotation and translation. On the Plant Growth and Phenotyping (PGP) dataset, it achieved a precision of 95.3% (1.0% higher than the baseline), a recall of 89.5% (0.3% higher), and an mAP of 72.6% (0.8% higher). This model is suitable for image scenarios with significant affine transformations but exhibits limited adaptability to object structures and lacks strong multi-scale feature extraction capabilities. Li et al. [37] proposed the CFNet-VoV-GCSP-LSKNet-YOLOv8s model, which achieved a precision of 89.9% (2.0% higher than the baseline), a recall of 90.7% (1.0% higher), and an mAP of 93.7% (1.2% higher) on a private cotton pest and disease dataset. The model improves small-object detection and accelerates convergence by enhancing multi-scale feature fusion, but its ability to model local–global feature interactions remains limited. Pan et al. [38] proposed the real-time cotton disease detection model CDDLite-YOLO for detecting cotton diseases under natural field conditions. It achieved a precision of 89.0% (1.9% higher than the baseline), a recall of 86.1% (0.9% higher), and an mAP of 90.6% (2.0% higher) on a private cotton dataset. While the model shows strong localization ability for small features, its performance declines when dealing with large variations in lesion morphology. In this work, the proposed RDL-YOLO model achieved a precision of 81.7% (1.4% higher than the baseline), a recall of 73.6% (4.4% higher), and an mAP of 77.1% (3.7% higher) on the Cotton Leaf dataset. Compared with the above studies, each of which was evaluated on its own dataset, RDL-YOLO achieves the largest improvement in recall and mAP over its baseline model, which suggests that the proposed modifications are effective. The model is well-suited for real-world field scenarios characterized by complex lesion morphology and strong background interference, significantly enhancing the detection of cotton leaf diseases. However, this study relies on a single curated cotton leaf dataset, which may contain inherent biases in lighting conditions, cotton varieties, and geographical locations. Future work will evaluate the model on more diverse datasets from different agricultural environments to validate its generalization capability in real-world scenarios more broadly.

Despite the excellent performance of RDL-YOLO, challenges remain. One is the real-time constraint: combining RepViT-A, DDC, and LDConv improves accuracy but increases inference latency on edge devices. To address this issue in future work, we plan to optimize the model for deployment on mobile and UAV platforms using lightweight techniques such as network pruning, quantization, or knowledge distillation.