1. Introduction

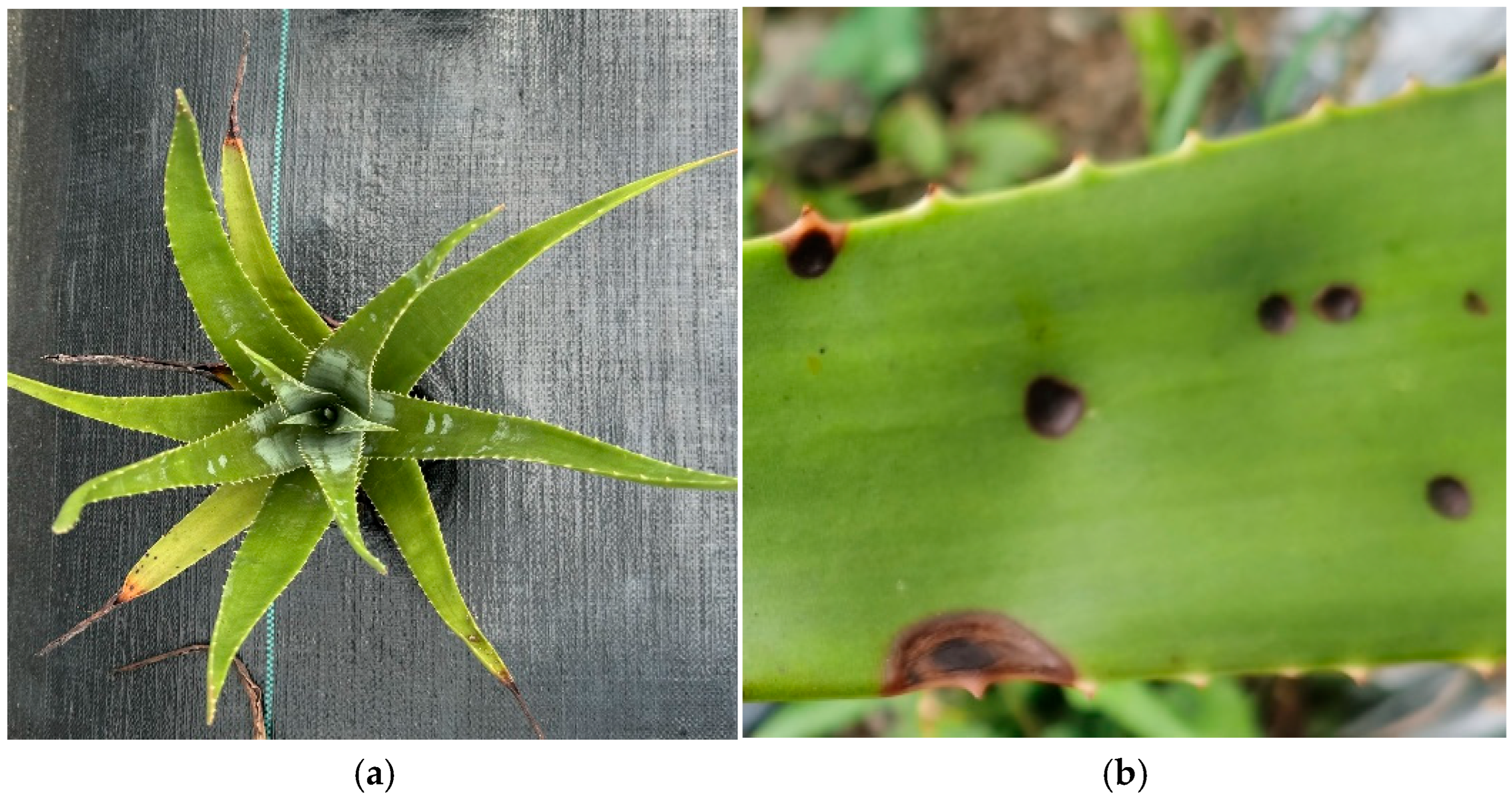

Aloe is a widely recognized perennial herbaceous plant, valued for its diverse applications in food, medicine, cosmetics, and health. Historically, aloe has been used in traditional medicine for its therapeutic properties, including wound healing, anti-inflammatory effects, immune regulation, and anti-diabetic benefits, making it an effective treatment for various diseases [1,2]. Aloe also has significant uses in animal husbandry: owing to its anti-inflammatory, antioxidant, and antibacterial properties, incorporating aloe into poultry feed has been shown to enhance production performance, improve digestive health, and reduce the incidence of disease [3]. However, aloe cultivation is highly sensitive to environmental factors such as soil composition, temperature, light, and moisture [4]. Inappropriate environmental conditions and the presence of invasive organisms can lead to pest infestations and disease outbreaks, which significantly reduce crop yield and cause substantial economic losses. The primary disease affecting aloe is anthracnose, followed by less frequent leaf blight and leaf spot diseases; these cause sunken lesions and necrotic spots on the leaf surface, thereby reducing yield [5].

Traditional methods for diagnosing aloe diseases rely heavily on manual observation and subjective judgment. These methods are time-consuming, labor-intensive, error-prone, and lack the precision necessary for accurate disease identification [6]. With the advancement of machine learning and deep learning technologies, artificial intelligence (AI) and robotic systems are increasingly being integrated into agricultural automation [7]. Using AI and robotic systems for tasks such as field identification, detection, and path planning offers significant advantages over manual operation: these systems are more efficient, reduce costs substantially, and lower the likelihood of operational errors. As an advanced image processing and data analysis tool, deep learning can stand in for human visual inspection by learning from and analyzing collected visual information. It can not only recognize and quantify targets but also trigger corresponding preventive measures in special situations, showing great potential in the agricultural sector [8]. By leveraging computer vision, AI-based deep learning systems can automatically identify crop pests and diseases, which improves detection efficiency and accuracy, significantly reduces labor costs, and contributes to the sustainable development of agriculture [9].

While object detection methods have shown substantial success across various fields, they still face limitations in tasks such as lesion identification and morphological analysis [10]. These tasks require not only precise localization of the target but also an in-depth analysis of the target’s morphological characteristics, requirements that traditional object detection techniques struggle to meet [11]. In contrast, semantic segmentation models offer pixel-level accuracy and can precisely delineate target boundaries. Because they can segment the shape of a target and calculate its pixel area, they perform well in medicine [12] and disease severity classification [13], and have become a research hotspot in recent years. These models are widely applied in image analysis tasks but still face challenges, including high practical complexity and limited fault tolerance. As a result, there is an ongoing need for further refinement to enhance precision and recall, reduce false positives and false negatives, and achieve a lightweight design.

Many existing semantic segmentation models aim to address the challenge of irregular small target segmentation, particularly in plant disease segmentation. For example, Hao Zhou et al. [14] proposed an improved Deeplabv3+ model with a gated pyramid feature fusion structure and a lightweight MobileNetv2 backbone, which improved the model’s mIoU and accuracy while reducing parameters and can efficiently and accurately identify diseases on oil tea leaves. Wang Jianlong et al. [15] developed an improved RAAWC-UNet network, which incorporated a modulation factor and convolutional block attention module (CBAM) to enhance detection capabilities for small lesions. By replacing the downsampling layer with atrous spatial pyramid pooling (ASPP), the model achieved higher segmentation accuracy in complex environments. While this model improved accuracy, it also increased the number of parameters, highlighting the need for lightweight solutions. Some studies have sought to combine multiple network models to leverage their respective advantages. For example, Esgario et al. [16] integrated UNet and PSPNet models for coffee leaf segmentation and employed CNN models such as ResNet for disease classification, with a practical Android client that lets users upload images and obtain disease information. While this system is practical, it still requires an internet connection and has not yet addressed the issue of lightweight local deployment. Mac et al. [17] introduced a greenhouse tomato disease detection system combining Deep Convolutional Generative Adversarial Networks (DCGANs) and ResNet-152 models, which augmented the image data and improved classification and segmentation accuracy, achieving 99.69% accuracy on an augmented dataset. Similarly, Lu Bibo et al. [18] proposed a MixSeg model combining CNN, Transformer, and multi-layer perceptron architectures, which achieved high IoUs for apple scab and grey spot segmentation. However, its segmentation capability for other diseases and crops requires further optimization. In contrast, Polly et al. [19] introduced a multi-stage deep learning system combining YOLOv8, Deeplabv3+, and UNet models. This system accurately localizes diseases in drone images and performs pixel-level semantic segmentation, achieving over 92% accuracy for seven diseases and an IoU exceeding 85%, and reports the damaged area quantitatively.

Since its introduction, the YOLOv11 model has become the model of choice for many researchers owing to its lightweight design and versatility [20]. Sapkota et al. [21] addressed labor shortages in US plantations by improving the YOLOv11 series segmentation model. Through data augmentation and the CBAM attention mechanism, they enhanced the model’s segmentation accuracy for tree trunks and branches, providing valuable support for orchard robots in pruning and picking operations. Tao Jinxiao et al. [22] proposed the CEFW-YOLO model, which enhanced disease detection by compressing convolutional modules and introducing ECC and FML attention modules. By optimizing the loss function, they improved boundary regression accuracy, increasing mAP@50 and inference speed and enabling efficient deployment on edge devices. However, unlike segmentation models, object detection solutions are limited in their ability to assess disease severity. The aforementioned research highlights the significant progress made with deep learning technologies in crop disease recognition and segmentation. However, further optimization is required, particularly in improving accuracy, reducing errors, and achieving lightweight deployment.

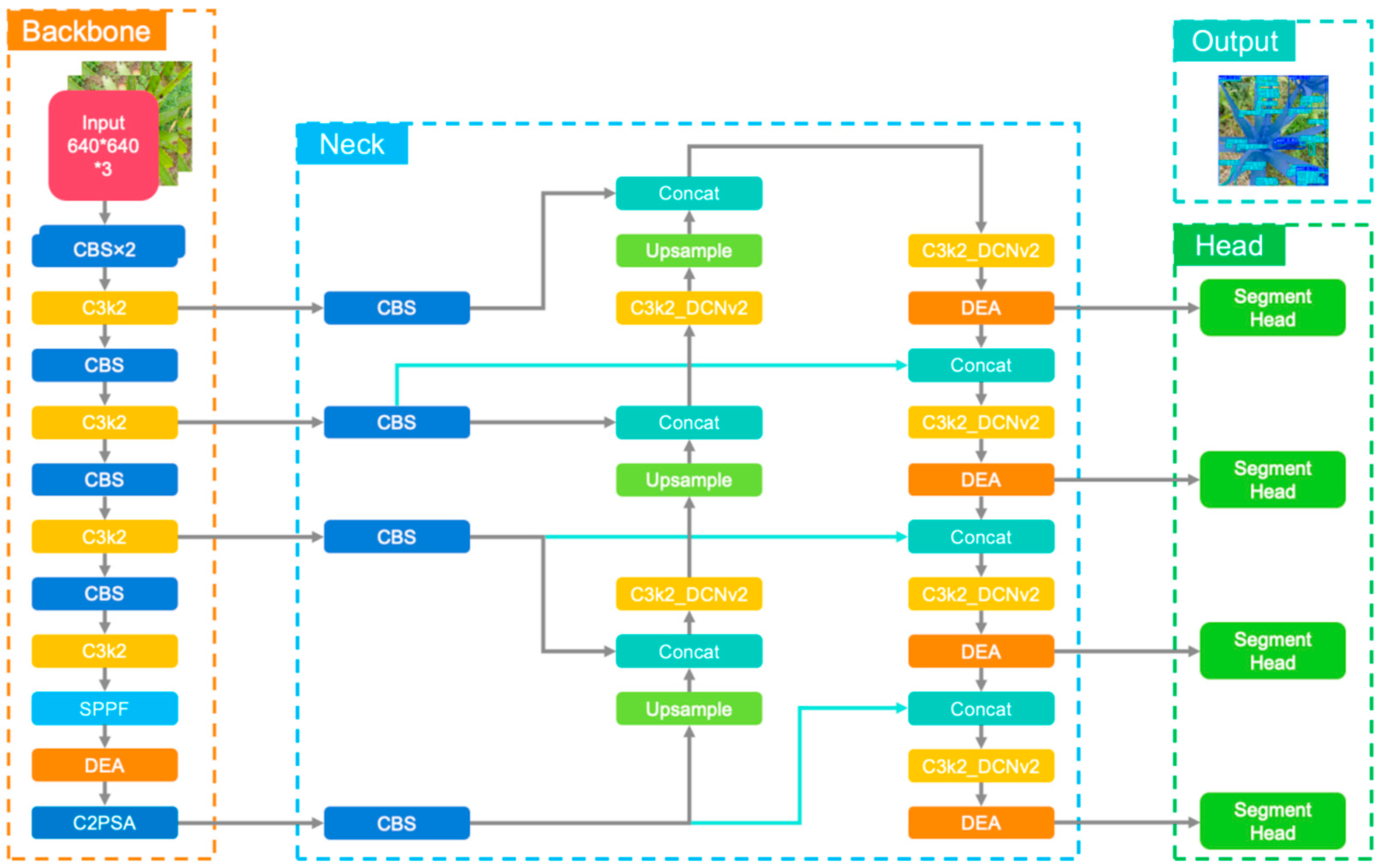

To address the challenges of accurately identifying aloe anthracnose lesions in complex environments and assessing disease severity, this paper proposes a high-performance disease segmentation model, YOLOv11-seg-DEDB, based on an enhanced YOLOv11-seg. The model not only segments aloe disease lesions precisely but also supports disease grading, allowing effective assessment of disease severity in place of traditional manual identification. The key improvements introduced in this work are as follows: (1) A DE attention mechanism, specifically designed for small lesion segmentation, is embedded at the end of the backbone network and before each detection head module, enhancing the network’s segmentation capability at lesion boundaries; (2) DCNv2 is incorporated into the network neck to improve feature extraction and fusion in complex environments; (3) The BiFPN structure replaces the original PANet network, reducing redundant calculations, and a p2 detection head is introduced to further improve the fusion of lesion features and preserve small target details; (4) A lightweight detection head is designed by merging repeated modules across branches and eliminating unnecessary complex functions, resulting in a significant reduction in the model’s parameter count. YOLOv11-seg-DEDB not only runs faster than other mainstream segmentation models, such as UNet and Deeplabv3+, but also achieves a 5.3% increase in lesion segmentation accuracy and a 27.9% reduction in parameter count compared to the original YOLOv11-seg.

These improvements enable the model to more accurately identify lesions and assess disease severity in complex environments, offering an effective technical solution for disease prevention and control. This model provides an efficient, precise approach to safeguarding aloe yields and ensuring the sustainable use of resources.

3. Results

3.1. Training Results of YOLOv11-seg-DEDB

The YOLOv11n-seg model served as the pre-trained model for this study. Optimal performance was achieved after 300 training epochs. To evaluate the proposed enhancements, ablation studies were conducted, comparing the modified model against the baseline and other semantic segmentation models. The training outcomes for the YOLOv11-seg-DEDB model are presented in Figure 8.

Figure 8a illustrates the precision, recall, and mAP@50 curves for the YOLOv11-seg-DEDB model, evaluated on both the training and validation sets for aloe anthracnose. The results indicate that the precision, recall, and mAP@50 metrics stabilized after approximately 240 epochs.

Figure 8b presents the Precision–Recall (PR) curve for the YOLOv11-seg-DEDB model. The model performs very well in segmenting aloe leaves and achieves high precision in segmenting anthracnose lesions. However, precision declines slightly as recall increases, which is a common challenge for segmentation models when detecting small targets.
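The precision–recall trade-off noted for Figure 8b can be illustrated with a minimal Python sketch of how PR points are typically obtained: predictions are sorted by confidence, and precision and recall are recomputed as each additional prediction is accepted. The function name `pr_points`, the input format, and the toy data are assumptions made for illustration; they are not taken from the training pipeline used in this study.

```python
from typing import List, Tuple


def pr_points(preds: List[Tuple[float, bool]], num_gt: int) -> List[Tuple[float, float]]:
    """Return (recall, precision) pairs obtained by sweeping the confidence threshold.

    preds:  hypothetical list of (confidence, is_true_positive), one per predicted
            mask; a prediction is a true positive if it matches a ground-truth
            mask at IoU >= 0.5 (the convention behind mAP@50).
    num_gt: number of ground-truth instances of the class.
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    points = []
    tp = fp = 0
    # Accept predictions from most to least confident.
    for _, is_tp in sorted(preds, key=lambda p: p[0], reverse=True):
        if is_tp:
            tp += 1
        else:
            fp += 1
        points.append((tp / num_gt, tp / (tp + fp)))
    return points


# Toy example (made-up values): five predictions, four ground-truth lesions.
toy = [(0.95, True), (0.90, True), (0.70, False), (0.60, True), (0.30, False)]
curve = pr_points(toy, num_gt=4)
```

In this toy example, precision falls as lower-confidence predictions are accepted to raise recall, which is the same qualitative pattern as the decline described above.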

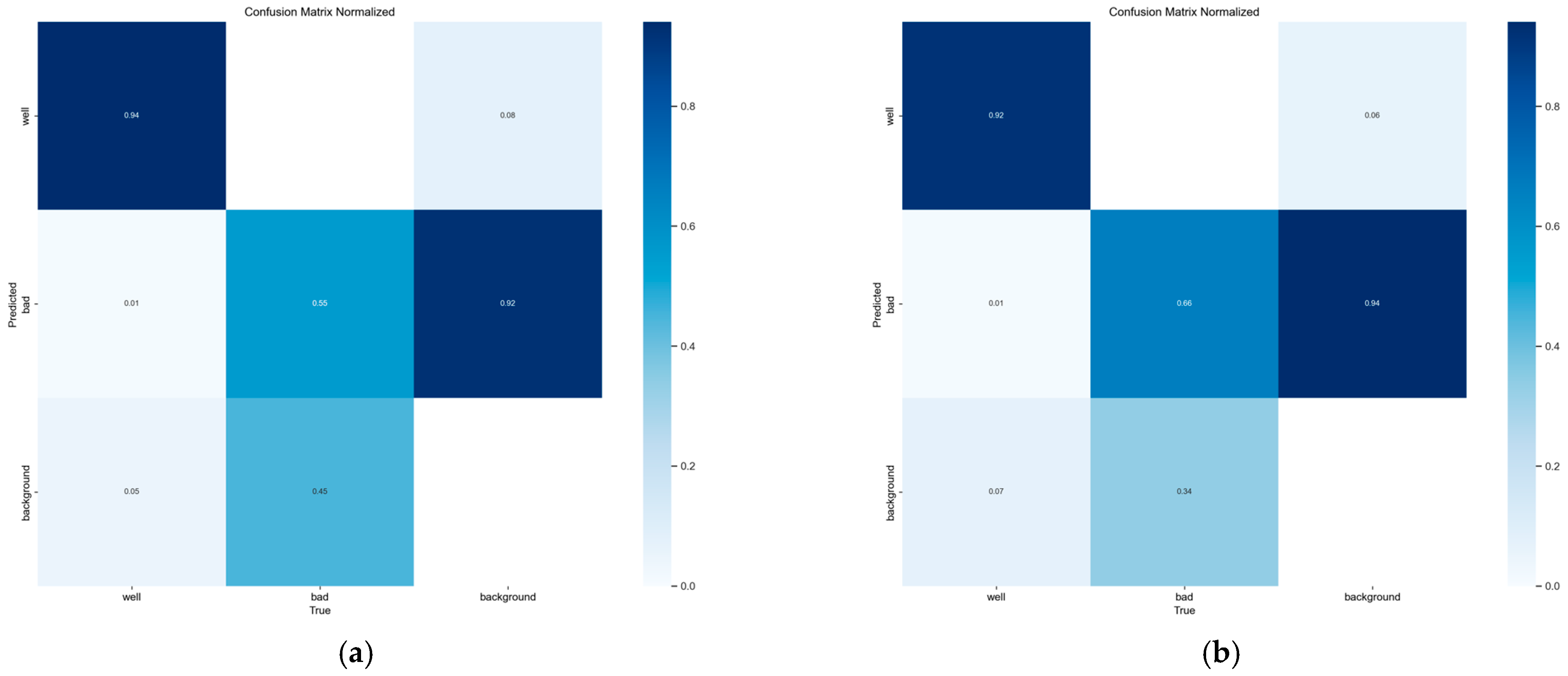

Figure 9 displays the confusion matrices comparing the original YOLOv11-seg model with the enhanced YOLOv11-seg-DEDB model for segmenting aloe leaves and anthracnose lesions. Given that the primary focus of this study was to improve the segmentation of small and challenging lesion targets, the proposed modifications inevitably impacted the segmentation capability for healthy leaves. The confusion matrix results indicate that the enhanced YOLOv11-seg-DEDB model significantly improved segmentation precision for anthracnose lesions while maintaining high overall precision and effectively reducing the false negative rate. Although some false positive occurrences remained, experimental data and visual segmentation results suggest that these were mostly confined to a few pixels at the lesion boundaries, exerting minimal impact on the disease area calculation.

When segmenting individual aloe leaves, both models successfully identified lesions and leaf tissue. However, the original YOLOv11-seg model exhibited inferior performance at lesion boundaries, often misclassifying them as non-leaf or non-lesion regions, leading to incomplete segmentation (highlighted by red boxes in Figure 10b,e). In contrast, the enhanced YOLOv11-seg-DEDB model effectively mitigated this issue, providing more accurate segmentation of the boundary between healthy leaf tissue and lesions. For whole-plant aloe detection, which involves multiple targets and complex backgrounds, both models exhibited some degree of missed detection. Moreover, the original YOLOv11-seg model showed reduced precision in segmenting healthy leaves (Figure 10h,k), with red and orange boxes indicating incomplete leaf segmentation and missed lesions, respectively. Conversely, the YOLOv11-seg-DEDB model demonstrated superior performance in segmenting lesions near the plant center and provided more precise boundary delineation. Additionally, the DEDB model consistently exhibited higher confidence levels in lesion segmentation compared to the original model.

It is worth noting that the models used in these experiments were the “n” variants, representing the smallest parameter size within the YOLOv11-seg series. With sufficient computational resources, larger models (e.g., “x”) could further enhance segmentation precision and recall. In summary, the YOLOv11-seg-DEDB model demonstrates improved precision in segmenting aloe anthracnose lesions and superior performance in delineating the boundaries between healthy leaf tissue and lesions.

3.2. Ablation Study

This study introduces four key enhancements to the YOLOv11n-seg model. Since the modification to the detection head primarily impacts feature output, this ablation study focuses on evaluating the remaining three core improvements. As presented in Table 2, each experimental group utilized a unique combination of these modules, which were compared against the baseline YOLOv11n-seg model. A total of nine experimental groups were established. All experiments employed the same aloe anthracnose lesion dataset, with consistent training epochs and hyper-parameter settings across all groups. Model performance was assessed using several metrics, including lesion segmentation precision (p (bad)), overall segmentation precision (p (all)), F1-score (F1 (all)), mean Average Precision for segmentation (mAP@50 (all)), number of parameters (Params), and model size.
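For reference, the precision and F1 metrics listed above follow the standard definitions based on true positives (TP), false positives (FP), and false negatives (FN). The sketch below is a minimal Python illustration with made-up counts; the helper name `prf1` is hypothetical, and how the study's toolchain counts TP, FP, and FN at the mask level is not specified here.

```python
from typing import Tuple


def prf1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) from raw counts using the standard definitions."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Made-up counts: 80 correctly segmented lesions, 20 false alarms, 30 misses.
p, r, f1 = prf1(tp=80, fp=20, fn=30)
```

Because F1 is the harmonic mean of precision and recall, it drops whenever either of the two falls, even if the other improves.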

Table 2 compares the model’s metrics under different module combinations. First, integrating either the DE attention mechanism or DCNv2 into the YOLOv11n-seg model improved segmentation precision. However, compared to the baseline model, introducing the DE attention mechanism alone resulted in a slight decrease in the F1-score. This is attributed to the mechanism potentially making the model more aggressive in lesion segmentation, thereby elevating the false positive rate. Combining the DE attention mechanism with DCNv2 effectively mitigated this risk by enhancing the model’s focus on genuine lesion features. Furthermore, adopting the BiFPN structure significantly reduced model size and parameter count, by 15% and 19.8%, respectively. The introduction of the p2 detection head markedly improved the model’s capability to segment small lesion targets, although it slightly diminished the overall segmentation precision (p (all)) for all targets by 0.3%, primarily affecting healthy leaf segmentation. To achieve optimal overall model performance, the synergistic combination of the DE attention mechanism and DCNv2 proved essential.

When all three core improvements (DE attention, DCNv2, BiFPN(p2)) were applied simultaneously, all evaluation metrics improved. Compared to the baseline model, the overall segmentation precision (p (all)) and mean Average Precision (mAP@50 (all)) increased by 3.0% and 3.9%, respectively, while lesion segmentation precision (p (bad)) saw a substantial gain of 5.8%. Concurrently, model size and parameter count were significantly reduced. Notably, even compared to the larger YOLOv11s-seg model, this enhanced configuration exhibited superior precision and mean Average Precision. Finally, the YOLOv11n-seg-DEDB model incorporated the lightweight detection head. Although this modification slightly reduced segmentation capability compared to the three-module combination, it resulted in a significant further reduction in model size and parameters, achieving reductions of 23.3% (4.6 MB) and 27.9% (2.04 M), respectively.
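As a quick arithmetic check on the reported reductions, the following Python sketch computes the baseline values implied by a percentage reduction and a post-reduction figure. It assumes that the parenthesized values (4.6 MB and 2.04 M) are the size and parameter count after reduction, which the text does not state explicitly; the helper names are hypothetical.

```python
def implied_baseline(reduced_value: float, reduction_percent: float) -> float:
    """Baseline value implied by a post-reduction value and a percentage reduction."""
    if not 0 <= reduction_percent < 100:
        raise ValueError("reduction_percent must be in [0, 100)")
    return reduced_value / (1 - reduction_percent / 100)


def percent_reduction(baseline: float, reduced_value: float) -> float:
    """Percentage reduction of reduced_value relative to baseline."""
    return (baseline - reduced_value) / baseline * 100


# Figures quoted in the text; treating them as post-reduction values is an assumption.
baseline_size_mb = implied_baseline(4.6, 23.3)    # about 6.0 MB
baseline_params_m = implied_baseline(2.04, 27.9)  # about 2.83 M
```

Under this reading, the implied baseline is roughly 6.0 MB and 2.83 M parameters. If the parenthesized values were instead the absolute amounts removed, the implied baselines would be several times larger, so the reading should be checked against Table 2.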

In summary, for deployment on devices with ample computational power, utilizing the combination of DE attention, DCNv2, and BiFPN(p2) maximizes model performance. However, considering the requirements for deployment on edge or mobile devices, where balancing performance and lightweight design is crucial, the YOLOv11n-seg-DEDB model presents a more suitable solution.

3.3. Comparison Experiments of Modules and Models

This section validates the effectiveness of the proposed YOLOv11-seg-DEDB network model through comparative experiments. These include a comparison between the proposed DE attention mechanism and mainstream attention modules, and a comparison between the YOLOv11n-seg-DEDB model and other leading segmentation models. Model performance was assessed using several metrics, including lesion segmentation precision (p (bad)), overall segmentation precision (p (all)), F1-score (F1 (all)), mean Average Precision for segmentation (mAP@50 (all)), number of parameters (Params), and model size.

Table 3 presents a comparison between the proposed DE attention mechanism and several mainstream attention mechanisms (CBAM, EMA, SE), all based on the YOLOv11n-seg model. The results indicate that incorporating any attention mechanism increases model size and parameter count, as these mechanisms primarily enhance feature extraction capabilities without fundamentally altering the network architecture. With the exception of CBAM, all attention mechanisms improved segmentation precision. The proposed DE attention achieved the best performance in terms of target segmentation precision (p (bad), p (all)) and mean segmentation accuracy (mAP@50 (all)). Although the F1-score of DE attention was slightly lower than those of EMA and SE, the DCNv2 network’s effectiveness in feature fusion mitigated false positive issues. As a result, the high precision of DE attention makes it particularly suitable for the aloe anthracnose lesion segmentation task.

Table 4 compares the YOLOv11-seg-DEDB model with other mainstream YOLO segmentation models. The results demonstrate that the YOLOv11n-seg-DEDB model outperforms all other segmentation models in terms of precision for aloe anthracnose lesions (p (bad)), achieving 82.5%. Regarding p (all), the improved model was only 0.1% lower than the significantly larger YOLOv11s-seg model. The YOLOv9t-seg model matched the improved model’s overall precision (p (all) of 85.4%). However, its use of five detection heads increased its sensitivity to targets, consequently elevating the false positive rate, as reflected in its lower F1-score and mAP@50 compared to the improved model. In contrast, the YOLOv11n-seg-DEDB model not only achieved a 0.5% higher mAP@50 than YOLOv11s-seg (76.4% vs. 75.9%) but also exhibited a smaller model size and fewer parameters than YOLOv5n-seg (the smallest baseline model).

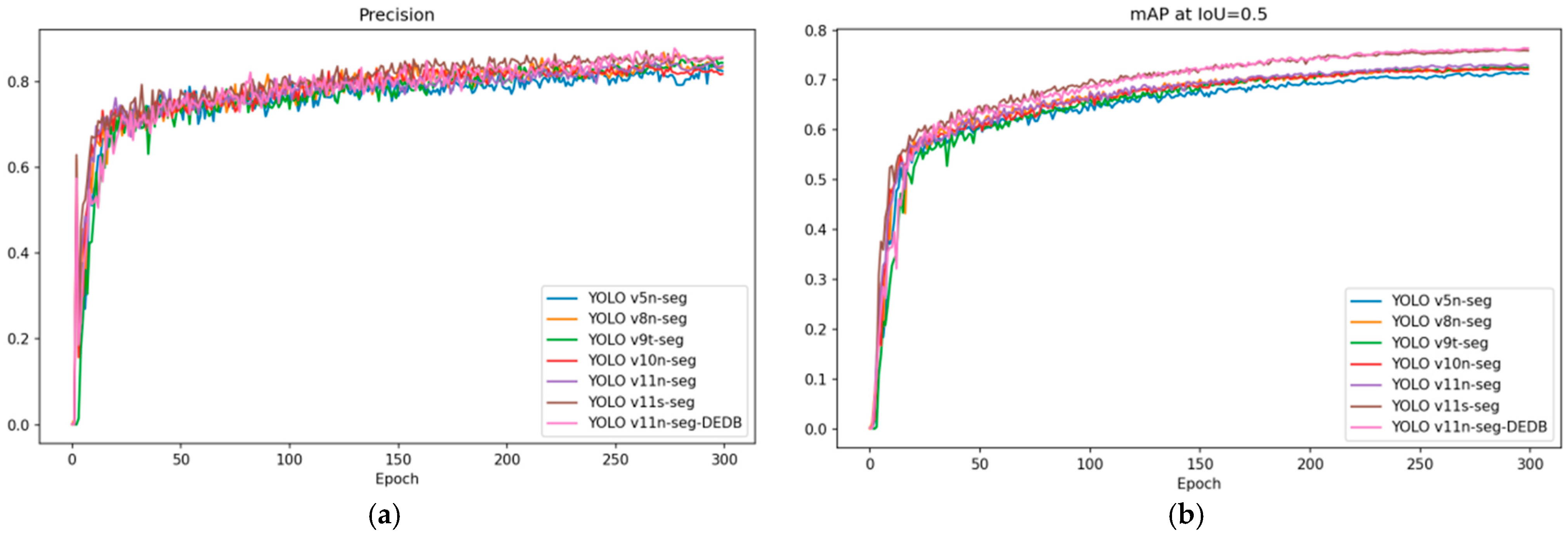

Figure 11 illustrates the changes in precision and mAP@50 for different models as training epochs increase. All models demonstrated a rapid increase in both precision and mAP@50, reaching approximately 70% and 60%, respectively, within the first 30 epochs. Following this, although some fluctuations occurred, both metrics generally showed a slow upward trend across all models, stabilizing around epoch 260 and converging by epoch 300. Notably, YOLOv9t-seg, YOLOv11s-seg, and YOLOv11n-seg-DEDB maintained higher precision levels than the other models. Furthermore, YOLOv11s-seg and YOLOv11n-seg-DEDB demonstrated significantly superior mAP@50 performance. Considering the substantially larger size of YOLOv11s-seg compared to the other models, the YOLOv11n-seg-DEDB model offers the best overall performance balance among the evaluated segmentation models.

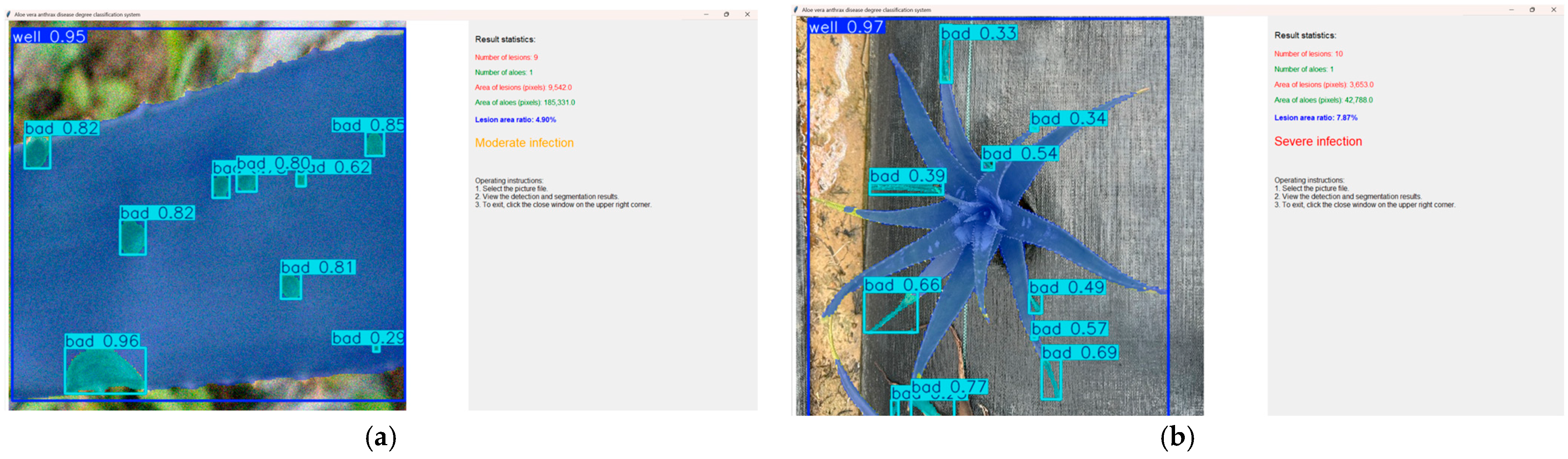

3.4. Visual Output of Disease Severity Classification

Section 2.3 detailed the formula for calculating the severity percentage of aloe anthracnose disease and the corresponding grading standards. To provide a more intuitive presentation of the results, a dedicated visualization program was developed. The interface of this program is depicted in Figure 12.

Figure 12 illustrates the interface of the Aloe Anthracnose Disease Severity Classification System. Upon launching the program, users are prompted to input the image requiring detection. After loading the input image, the system automatically performs image detection and segmentation, presenting the results visually. The left panel of the interface displays the segmentation results, distinguishing lesion areas (bad) from healthy leaf areas (well). The top-left corner of each segmented region is annotated with its corresponding label type and confidence score. The right panel presents statistical results, including the number of lesions, the number of healthy leaf segments, the pixel counts for lesions and healthy leaves, and the calculated lesion area ratio relative to the total leaf area. Based on this area ratio, the program outputs the disease severity level according to the predefined standards.

Figure 12a demonstrates the segmentation performance on a single leaf under simple environmental conditions, while Figure 12b showcases the segmentation results for an entire aloe plant within a complex background.

This visualization program effectively communicates the detection and segmentation outcomes for aloe anthracnose. It not only precisely locates lesions but also quantitatively assesses the severity of the infection. The system enables users to clearly understand the impact of the disease on the aloe plant, facilitating timely implementation of appropriate control measures to mitigate economic losses.
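The area-ratio computation behind the statistical panel can be sketched in Python as follows. This is an illustration only: it assumes that the total leaf area is the sum of lesion (bad) and healthy (well) pixels, and the thresholds in `HYPOTHETICAL_GRADES` are placeholders rather than the grading standard defined in Section 2.3. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

# Placeholder thresholds: (upper bound of lesion ratio in %, label).
# These are NOT the grading standard from Section 2.3.
HYPOTHETICAL_GRADES: List[Tuple[float, str]] = [
    (0.0, "healthy"),
    (10.0, "mild"),
    (30.0, "moderate"),
    (100.0, "severe"),
]


def count_pixels(mask: Sequence[Sequence[int]]) -> int:
    """Count non-zero pixels in a binary mask given as nested sequences."""
    return sum(1 for row in mask for value in row if value)


def lesion_ratio(bad_pixels: int, well_pixels: int) -> float:
    """Lesion area as a percentage of total leaf area (assumed to be bad + well)."""
    total = bad_pixels + well_pixels
    if total == 0:
        raise ValueError("no leaf pixels were segmented")
    return 100.0 * bad_pixels / total


def grade(ratio_pct: float, grades: List[Tuple[float, str]] = HYPOTHETICAL_GRADES) -> str:
    """Map a lesion ratio to the first grade whose upper bound is not exceeded."""
    if not 0.0 <= ratio_pct <= 100.0:
        raise ValueError("ratio_pct must be between 0 and 100")
    for upper, label in grades:
        if ratio_pct <= upper:
            return label
    return grades[-1][1]


# Toy 4x4 masks standing in for the model's segmentation output.
bad_mask = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
well_mask = [[1, 1, 1, 1], [1, 0, 0, 1], [1, 1, 1, 1], [0, 0, 0, 0]]
ratio = lesion_ratio(count_pixels(bad_mask), count_pixels(well_mask))
level = grade(ratio)
```

Keeping the thresholds in a single table makes it straightforward to substitute the actual grading standard without touching the ratio computation.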

4. Discussion

This study utilized RGB cameras to capture images of anthracnose lesions on field-grown aloe vera leaves under diverse weather and lighting conditions. The raw images, with an initial resolution of 4284 × 4284 pixels, were subsequently downscaled to 640 × 640 pixels to reduce storage requirements and enhance training efficiency. A series of data augmentation techniques was applied to increase data diversity, thereby improving the robustness of model training.

By improving a mainstream segmentation model, this study proposes a novel YOLOv11-seg-DEDB model tailored for effective segmentation of aloe anthracnose lesions and grading of disease severity. A DE attention mechanism, integrated with DCNv2, is designed to significantly improve segmentation precision and recall. Furthermore, the BiFPN architecture is adopted with an additional p2 detection head, enabling more small-target features to be incorporated into the feature fusion process. To optimize computational efficiency, a lightweight segmentation-detection head is developed, effectively reducing model parameters and size.
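To illustrate the kind of feature fusion that BiFPN performs, the sketch below implements the fast normalized fusion rule from the original BiFPN design, in which each input feature map receives a learnable non-negative weight and the weights are normalized by their sum. It is written in plain Python on flattened toy features; the function name, the example weights, and the inputs are assumptions, and the snippet does not reproduce the network configuration used in this study.

```python
from typing import List, Sequence


def fast_normalized_fusion(features: Sequence[Sequence[float]],
                           weights: Sequence[float],
                           eps: float = 1e-4) -> List[float]:
    """Fuse same-shaped feature maps as sum_i(w_i * F_i) / (eps + sum_j w_j).

    Weights are passed through ReLU first so that each one stays non-negative.
    """
    if len(features) != len(weights):
        raise ValueError("one weight is required per feature map")
    if len({len(f) for f in features}) != 1:
        raise ValueError("feature maps must have the same number of elements")
    relu_w = [max(0.0, w) for w in weights]
    norm = eps + sum(relu_w)
    return [sum(w * f[i] for w, f in zip(relu_w, features)) / norm
            for i in range(len(features[0]))]


# Toy example: a high-resolution (P2-like) map and an upsampled coarser map, flattened.
p2_like = [1.0, 2.0, 3.0, 4.0]
upsampled = [3.0, 2.0, 1.0, 0.0]
fused = fast_normalized_fusion([p2_like, upsampled], weights=[3.0, 1.0])
```

With weights of 3 and 1, the fused output is close to a 75/25 blend of the two inputs, so a larger learned weight on the high-resolution branch would let more small-target detail pass through the fusion step.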

Table 4 shows that the YOLOv11-seg-DEDB model outperforms other mainstream segmentation models in both segmentation accuracy and model compactness. Compared to the original YOLOv11-seg model, it improves segmentation accuracy and mAP@50 for infected lesions by 5.3% and 3.4%, respectively, while reducing model parameters by 27.9%. These results validate its superior performance in aloe anthracnose segmentation tasks. Comparative experiments with various network architectures confirm that the YOLOv11-seg-DEDB model not only enhances the segmentation precision of aloe anthracnose lesions, particularly under complex environmental conditions, but also remains lightweight. This enables simultaneous lesion segmentation and disease severity grading, effectively meeting practical application requirements.

With the rapid advancement of deep learning, there is an escalating demand for more sophisticated approaches in agricultural disease research. Timely detection of diseases, accurate assessment of disease severity, and the subsequent implementation of differentiated control measures and economic impact evaluations are critical for sustainable agricultural management [43]. Wang Jianlong et al. [15] developed an improved RAAWC-UNet network, which incorporated a modulation factor and CBAM to enhance detection capabilities for small lesions. By replacing the downsampling layer with ASPP, the model achieved higher segmentation accuracy in complex environments. While this model improved accuracy, it also increased the number of parameters, highlighting the need for lightweight solutions. Esgario et al. [16] integrated UNet and PSPNet models for coffee leaf segmentation and employed CNN models such as ResNet for disease classification, with a practical Android client that lets users upload images and obtain disease information. While this system is practical, it still requires an internet connection and has not yet addressed the issue of lightweight local deployment. These previous studies provided valuable references for the present research, and the improvements in this work therefore aim to balance accuracy and lightweight design. Notably, the YOLOv11-seg-DEDB model integrates both detection and segmentation capabilities, enabling not only counting of aloe anthracnose lesions but also grading of disease severity through calculation of lesion area. Additionally, its lightweight design facilitates deployment on mobile and edge devices, enhancing user accessibility. These strengths are anticipated to contribute to more in-depth analyses and advancements in this research field.

5. Conclusions

The primary contribution of this work lies in establishing a high-precision, low-complexity intelligent diagnostic framework for aloe anthracnose. The synergistic integration of the DE attention mechanism and DCNv2 modules significantly enhances the model’s ability to detect irregular, small-scale lesions within complex field scenes. Furthermore, the combination of BiFPN and the lightweight detection head optimizes computational efficiency, providing a technical foundation for deployment on mobile or edge devices. The disease grading system, based on pixel-level segmentation results, enables objective quantification of anthracnose severity, offering an effective tool for early disease warning and informed control decisions in precision agriculture.

This study has several limitations. First, the model’s adaptability to scenes with dense leaf occlusion requires further enhancement, indicating a need to improve robustness under extreme environmental complexity. Second, the existing grading standard relies solely on lesion area percentage and does not account for the influence of lesion spatial distribution (e.g., proximity to growth points) on disease progression. Third, although the model has been substantially compressed, the repeated invocation of the DE attention and DCNv2 modules may introduce inference latency; optimization techniques, such as operator refinement or hardware acceleration, could further enhance real-time performance. Lastly, the model currently focuses exclusively on anthracnose. Future research should extend it to multi-disease recognition, addressing other common aloe afflictions such as leaf spot and leaf blight, and improvements to other modules will be considered to ensure the model’s generality across disease segmentation tasks. Future work will also explore model pruning and quantization techniques to optimize inference speed and develop a unified multi-disease segmentation framework incorporating dynamic severity assessment algorithms for comprehensive disease diagnosis. In addition, mobile apps or monitoring platforms will be developed to meet the needs of different users (individual farmers or large-scale planting enterprises) for crop management and disease detection. This work provides a valuable technical reference for deep learning-driven plant phenotyping analysis and holds promising implications for advancing smart agriculture.