1. Introduction

Strawberry (Fragaria × ananassa Duch.) is a globally significant economic crop, highly valued for its nutritional content and substantial financial benefits [1]. However, various diseases persistently challenge the strawberry industry, leading to significant yield losses, degraded fruit quality, and increased production costs [2]. Consequently, developing methods for rapidly and accurately identifying these phytopathological threats is imperative for realizing precision agriculture and sustainable development [3,4]. Such methods can facilitate timely interventions to mitigate economic losses and reduce the reliance on broad-spectrum pesticides [5]. Traditional methods, such as manual field scouting and diagnosis, are often labor-intensive, time-consuming, subjective, and heavily reliant on expert experience, making them inadequate for the fine-grained management demands of modern, large-scale agriculture [6].

In recent years, the advancement of artificial intelligence (AI), particularly deep learning (DL) and computer vision techniques, has ushered in a new era for automated agricultural monitoring [7,8]. Single-stage object detection models, exemplified by the You Only Look Once (YOLO) series, have demonstrated immense potential for rapidly localizing and identifying various objects in images, including plant diseases, owing to their excellent balance between speed and accuracy [9,10,11].

Nevertheless, deploying existing detection models in complex, real-world agricultural environments—especially for fine-grained tasks like strawberry disease recognition—still confronts several fundamental and persistent challenges. These unresolved issues form the core motivation for this research:

The first issue (Gap 1) is the difficulty of detecting small, dense, and occluded targets. Early-stage signs, such as aphids, thrips, or initial powdery mildew spots, manifest as small, densely distributed, or heavily occluded targets. Standard detection heads often struggle to focus on these critical yet faint visual cues, leading to a high missed-detection rate [12]. The second issue (Gap 2) is the insufficient capture of multi-scale contextual information: the visual characteristics of these diseases can vary dramatically in size, from minuscule spots to large lesions [13]. Due to their inherent local receptive fields, conventional convolutional networks have difficulty integrating this multi-scale information, which is crucial for distinguishing pathological features from background noise. The third issue (Gap 3) is scale-dependent localization instability, where minor pixel shifts in bounding boxes for small targets can cause drastic fluctuations in Intersection over Union (IoU) values. This IoU sensitivity disrupts the positive-negative sample assignment during training, leading to model instability and ultimately compromising localization accuracy [14]. Finally, the fourth issue (Gap 4) is limited feature representation capability: standard convolution operations often produce redundant or insufficiently diverse features. This limitation hinders the model’s ability to discriminate between visually similar yet pathologically distinct diseases or pests, particularly amidst complex background textures [15].
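The IoU sensitivity described above can be illustrated with a short calculation. The following is a minimal sketch, assuming axis-aligned square boxes and a hypothetical 2-pixel diagonal shift between the ground-truth and predicted box; the box sizes (8 and 128 pixels) are illustrative choices, not values from the dataset.

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def shifted_iou(side, shift):
    """IoU between a square box and a copy shifted diagonally by `shift` pixels."""
    gt = (0.0, 0.0, float(side), float(side))
    pred = (float(shift), float(shift), float(side + shift), float(side + shift))
    return iou(gt, pred)


# Same 2-pixel localization error, very different IoU (illustrative sizes).
small_target_iou = shifted_iou(side=8, shift=2)
large_target_iou = shifted_iou(side=128, shift=2)
print(f"8 px target:   IoU = {small_target_iou:.3f}")
print(f"128 px target: IoU = {large_target_iou:.3f}")
```

Under a typical IoU threshold of 0.5 for positive sample assignment, the shifted small box would no longer count as a match, while the large box would be almost unaffected.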

To address the challenges above, this paper proposes a novel detection framework named PPA-MC-YOLO, optimized for strawberry disease recognition. The framework is built upon the high-performance YOLOv12 baseline and integrates four complementary components, each targeting one of the gaps identified above. The primary contributions of this work are summarized as follows:

We introduce a novel Parallel Pyramid Attention (PPA) module specifically designed for the detection head. While inspired by established pyramid attention concepts, our module employs a unique multi-branch parallel architecture to simultaneously capture global context, multi-scale local details, and hierarchical information. This design is tailored to enhance the model’s focus on the small, dense, and occluded targets characteristic of agricultural scenes, directly addressing Gap 1.

To improve multi-scale fusion, we integrate Monte Carlo Attention (MCAttn). It leverages stochastic sampling pooling to generate scale-invariant attention, improving contextual awareness for targets of varying sizes, thereby resolving Gap 2.

We introduce the Scale-Decoupled (SD) Loss function to stabilize small object training. By dynamically re-weighting the loss terms, it mitigates the training instability caused by IoU sensitivity, thus overcoming Gap 3.

To enrich feature representations, we adopt Frequency Dynamic Convolution (FDConv). Without increasing the computational burden, this method generates diverse convolutional kernels, substantially enhancing the model’s feature discriminability for similar diseases, aiming to fill Gap 4.

Extensive experiments on a public strawberry disease dataset demonstrate that our proposed PPA-MC-YOLO model significantly outperforms a range of state-of-the-art detectors, including NanoDet-Plus, MobileNetV2-SSD, RT-DETR-R18, YOLOv8-n, YOLOv10-n, YOLOv11-n, and our baseline model, YOLOv12-n. These results substantiate the effectiveness of our approach and highlight its substantial potential as a reliable tool for intelligent agricultural management and precision crop protection.

2. Related Work

This chapter aims to systematically review the relevant research in plant disease detection, focusing on three primary areas: traditional machine learning-based detection methods, deep learning-based object detection methods, and advanced techniques targeting small objects and multi-scale challenges. By outlining the progress and limitations of existing studies, this chapter will clarify the proposed method’s research motivation and innovative value.

2.1. Traditional Methods for Plant Disease Detection

Before the widespread adoption of deep learning, the automated detection of plant diseases predominantly relied on traditional computer vision and machine learning techniques. These methods typically followed a fixed pipeline: image preprocessing, lesion segmentation, feature extraction, and classifier training. Researchers utilized visual cues such as color, texture, and shape to distinguish between healthy and diseased tissues. For instance, Jamjoom et al. [16] employed K-means clustering for lesion segmentation and used a Support Vector Machine (SVM) to classify plant leaf diseases based on color and texture features. Similarly, Yogeshwari and Thailambal [17] achieved plant disease identification by extracting color histograms and Gray-Level Co-occurrence Matrix (GLCM) texture features.
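To make the hand-crafted feature step concrete, the following is a minimal sketch of a GLCM and its contrast statistic, written in plain Python. It is not the pipeline of the cited works: the 3 × 3 patches, the four gray levels, and the horizontal offset are illustrative assumptions.

```python
def glcm(image, levels, dx=1, dy=0):
    """Normalized gray-level co-occurrence matrix for pixel offset (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
    total = sum(sum(row) for row in counts)
    return [[value / total for value in row] for row in counts]


def glcm_contrast(p):
    """Contrast: co-occurrence probabilities weighted by squared level difference."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


# Hypothetical quantized patches: smooth tissue vs. a speckled lesion-like texture.
smooth_patch = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
speckled_patch = [[0, 3, 0], [3, 0, 3], [0, 3, 0]]

smooth_contrast = glcm_contrast(glcm(smooth_patch, levels=4))
speckled_contrast = glcm_contrast(glcm(speckled_patch, levels=4))
print(smooth_contrast, speckled_contrast)
```

A classifier such as an SVM would then be trained on vectors of such statistics, which is why the quality of these features bounds the performance of the whole pipeline.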

However, these traditional methods suffer from significant limitations. Firstly, they heavily depend on hand-crafted features, which exhibit poor generalization capabilities and struggle to adapt to the complex and variable conditions of field environments, such as fluctuations in lighting, background interference, and the morphological diversity of diseases. Secondly, the accuracy of lesion segmentation directly impacts the final detection performance, yet achieving robust segmentation against complex backgrounds is a challenging problem. Lastly, these methods can typically only determine whether a disease is present in an image; they struggle to accurately localize its position or identify multiple instances, and therefore fail to meet the requirements of precision spraying.

2.2. Deep Learning-Based Object Detection Methods

With the rise of deep learning, Convolutional Neural Networks (CNNs) have achieved breakthrough progress in plant disease detection due to their powerful automatic feature extraction capabilities. Deep learning-based object detection models can identify disease categories and precisely localize their positions within an image, providing robust technical support for precision agriculture. These models are primarily categorized into two types:

Two-Stage Detectors: This category is represented by the R-CNN series, including Fast R-CNN [18] and Faster R-CNN [19]. They generate a series of region proposals and then perform classification and bounding box regression on these regions. For example, Fuentes et al. [20] successfully detected multiple diseases in tomatoes using Faster R-CNN, validating its effectiveness in complex backgrounds. Although two-stage detectors generally achieve high detection accuracy, their high computational complexity and slow inference speeds make them challenging to deploy for real-time detection applications.

Single-Stage Detectors: This category of models directly predicts bounding boxes and classes on the image, bypassing the region proposal generation step, which makes them significantly faster. Representative models include the Single Shot MultiBox Detector (SSD) [21] and the You Only Look Once (YOLO) series [9,22]. YOLO, in particular, has become a mainstream choice in agricultural applications due to its excellent balance between speed and accuracy. Numerous researchers have proposed improvements based on YOLO. For instance, Guo et al. [23] developed YOLO-T, an enhanced YOLOv7 variant that improved tea leaf disease detection through architectural optimizations. Similarly, Tao et al. [24] achieved higher Precision in bell pepper disease detection by adapting YOLOv5’s feature extraction and training methodology.

Despite the remarkable success of YOLO-based models, they still face challenges when dealing with targets characterized by small size, dense distribution, and occlusion, as is common in strawberry disease detection. Standard YOLO models have room for improvement in multi-scale feature fusion and attention to minuscule targets, which is a key motivation for the present work.

2.3. Advanced Techniques for Small Object and Multi-Scale Detection

Researchers have proposed various enhancement strategies to address the difficulties of small object detection and poor multi-scale adaptability, with attention mechanisms and advanced feature fusion structures being the primary approaches.

Attention Mechanisms: Mimicking the human visual system, attention mechanisms enable models to adaptively focus on critical information in an image while suppressing irrelevant background noise. Squeeze-and-Excitation (SE) [25] recalibrates channel-wise features by learning inter-channel correlations. The Convolutional Block Attention Module (CBAM) [26] further enhances feature discriminability by combining channel and spatial attention. These attention modules have been widely applied in agricultural object detection to augment the response to key regions such as disease lesions. However, most existing attention mechanisms are general-purpose designs and may not fully account for the specific patterns of pathological features in agricultural scenes.
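As an illustration of channel recalibration, the following is a minimal plain-Python sketch of the squeeze-and-excitation idea: global average pooling per channel, a small bottleneck with ReLU, and sigmoid gates that rescale each channel. The feature values and the weights `w_reduce` and `w_expand` are hypothetical; in a trained network they would be learned, and biases are omitted for brevity.

```python
import math


def se_recalibrate(feature_maps, w_reduce, w_expand):
    """Rescale each channel by a gate computed from global channel statistics.

    feature_maps: list of C channels, each an H x W list of lists.
    w_reduce:     hidden x C weights (bottleneck), hypothetical values.
    w_expand:     C x hidden weights, hypothetical values.
    """
    # Squeeze: one scalar per channel via global average pooling.
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
                for ch in feature_maps]
    # Excitation: fully connected -> ReLU -> fully connected -> sigmoid.
    hidden = [max(0.0, sum(w * s for w, s in zip(weights, squeezed)))
              for weights in w_reduce]
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(weights, hidden))))
             for weights in w_expand]
    # Scale: multiply every value in a channel by that channel's gate.
    recalibrated = [[[value * gate for value in row] for row in ch]
                    for ch, gate in zip(feature_maps, gates)]
    return recalibrated, gates


features = [
    [[1.0, 2.0], [3.0, 4.0]],   # channel 0
    [[0.0, 0.5], [0.5, 1.0]],   # channel 1
]
w_reduce = [[0.5, -0.25]]       # 2 channels -> 1 hidden unit
w_expand = [[1.0], [-1.0]]      # 1 hidden unit -> 2 channel gates
recalibrated, gates = se_recalibrate(features, w_reduce, w_expand)
print(gates)
```

With these example weights the first channel is emphasized and the second suppressed, which is the sense in which such modules "recalibrate" channel responses.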

Multi-Scale Feature Learning: Feature Pyramid Network (FPN) [27] and its variants, such as PANet [28] and AugFPN [29], enhance the model’s ability to detect multi-scale targets by fusing feature maps from different levels. These structures effectively combine high-level semantic information with low-level detailed information. Furthermore, some studies have explored more flexible feature aggregation methods. For example, Stochastic Pooling [30] was introduced to prevent overfitting by incorporating randomness and has been shown to help models learn more robust feature representations. The MCAttn module proposed in this paper draws inspiration from this idea, constructing scale-invariant attention maps through stochastic sampling.
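The stochastic pooling idea can be sketched as follows: within each pooling window, one activation is sampled with probability proportional to its magnitude, rather than always taking the maximum or the mean. This is a minimal sketch of generic training-time stochastic pooling over non-negative activations, not the MCAttn module itself; the 4 × 4 feature map and the 2 × 2 window are illustrative assumptions.

```python
import random


def stochastic_pool_region(activations, rng):
    """Sample one activation with probability proportional to its value.

    Assumes non-negative activations (e.g. after ReLU).
    """
    if sum(activations) == 0:
        return 0.0
    return rng.choices(activations, weights=activations, k=1)[0]


def stochastic_pool_2x2(feature_map, rng):
    """Apply stochastic pooling over non-overlapping 2 x 2 windows."""
    pooled = []
    for r in range(0, len(feature_map) - 1, 2):
        pooled_row = []
        for c in range(0, len(feature_map[0]) - 1, 2):
            region = [feature_map[r][c], feature_map[r][c + 1],
                      feature_map[r + 1][c], feature_map[r + 1][c + 1]]
            pooled_row.append(stochastic_pool_region(region, rng))
        pooled.append(pooled_row)
    return pooled


feature_map = [
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [2.0, 3.0, 0.0, 0.0],
    [1.0, 4.0, 0.0, 5.0],
]
rng = random.Random(0)  # fixed seed so the sketch is repeatable
pooled = stochastic_pool_2x2(feature_map, rng)
print(pooled)
```

Because strong activations are selected often but not always, weaker responses occasionally propagate, which is the source of the regularizing effect noted above.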

While the aforementioned feature pyramid networks improve multi-scale representation, Pyramid Attention mechanisms have also been explored to explicitly capture multi-scale context within attention modules themselves. These methods are effective but often rely on a serial or hierarchical structure to fuse pyramid features. Our work diverges from this trend by proposing a parallel architecture for pyramid attention within the detection head. This parallel design is motivated by the specific need in agriculture to concurrently process microscopic disease spots and large lesions, which we argue is more effective than sequential fusion for preserving fine-grained details of small targets.

2.4. Current Limitations of Strawberry Disease Detection Methods

While significant progress has been made in deep learning-based object detection, current mainstream detectors still exhibit limitations when applied to the precise detection of strawberry diseases in complex agricultural environments. Specifically, these methods often struggle with the inherent challenges of small symptom sizes, mutual occlusion among plant parts and symptoms, and the high intra-class variance of disease manifestations. Existing approaches, while effective in controlled settings, frequently show reduced performance and robustness when confronted with these real-world complexities. These identified limitations underscore the pressing need for a more robust and adaptive detection framework capable of overcoming these hurdles, which serves as the primary motivation for the present study.

In conclusion, the work presented in this paper provides a high-performance technical solution for strawberry disease detection, but, more importantly, its modular design philosophy offers new insights and empirical support for resolving common technical challenges prevalent in agricultural vision tasks.

4. Results

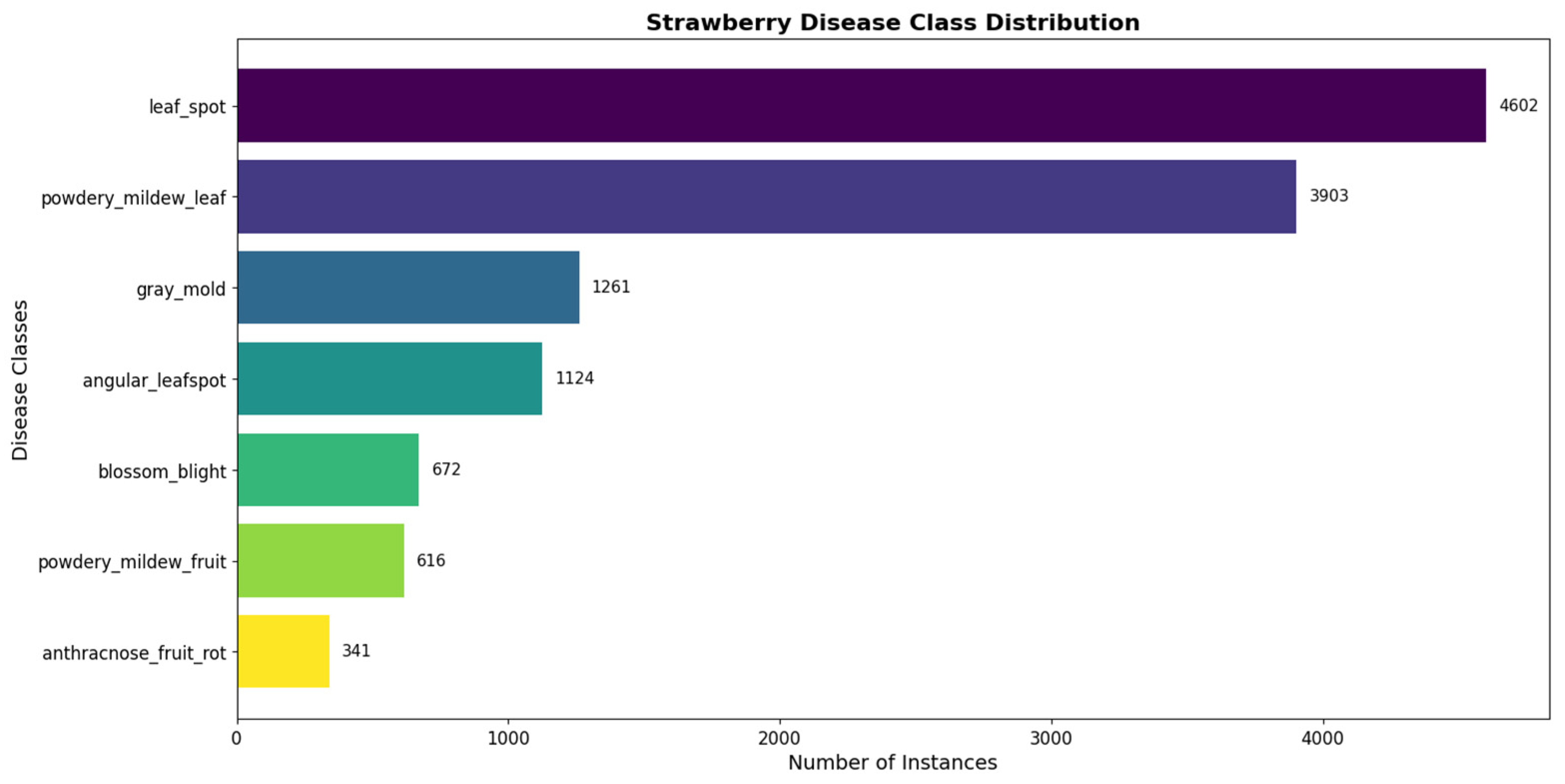

This chapter details the experimental results for the proposed enhanced YOLOv12 framework and other comparative models on the strawberry disease dataset. We begin by introducing the main detection performance comparison, followed by ablation studies to validate the effectiveness of each innovative module, and conclude with a visualization analysis of several challenging detection cases.

4.1. Main Detection Performance Comparison

To comprehensively evaluate the performance of our proposed PPA-MC-YOLO framework, we conducted a fair comparison against a series of representative, state-of-the-art (SOTA) detectors spanning various architectures. These include lightweight models (NanoDet-Plus, MobileNetV2-SSD), a Transformer-based model (RT-DETR-R18), and advanced models from the YOLO series (YOLOv8-n, YOLOv10-n, YOLOv11-n). We selected YOLOv12, which has the closest model complexity to our own, as the direct baseline for comparison. All experiments were conducted on the same hardware platform, and the detailed performance comparison is presented in Table 1.

As demonstrated by the comprehensive data in Table 1, our proposed PPA-MC-YOLO model effectively balances accuracy and efficiency.

Regarding detection accuracy, our model attains the best performance across all major evaluation metrics. It achieves an mAP@0.5 of 81.1% and an mAP@0.5:0.95 of 63.2%, representing improvements of 2.1 and 1.9 percentage points, respectively, over the baseline YOLOv12. This accuracy enhancement substantiates the collective efficacy of our introduced improvements. Specifically, the adoption of SD Loss stabilized the training process, while the PPA module enhanced the focus on hard-to-detect targets; together, these improvements account for the overall gain in accuracy.

Regarding model efficiency, PPA-MC-YOLO exhibits superior lightweight characteristics. With only 2.44 M parameters and 6.3 GFLOPs, it is the most lightweight among all high-accuracy models (mAP@0.5 > 79%). This is primarily attributed to the application of FDConv, which effectively enhances feature representation capabilities without significantly increasing computational costs.

While a 2.1 percentage point improvement in overall mAP@0.5 may appear modest, its significance is underscored by several critical factors. Firstly, this gain is achieved over the highly optimized and strong YOLOv12 baseline, where further improvements are notoriously difficult to obtain. Secondly, and more importantly, the overall average improvement conceals substantial, targeted gains on the most challenging disease classes. As evidenced by the per-class analysis in Table 2, our model achieves a remarkable 11.0 percentage point AP increase for ‘powdery_mildew_fruit’, a class characterized by minute, hard-to-recognize symptoms. This demonstrates that our framework’s improvements are not random but systematically solve key pain points in strawberry disease detection. Finally, this superior accuracy is delivered by a more efficient model, with 17.3% fewer parameters and 4.5% fewer GFLOPs than the baseline. This enhanced performance-efficiency trade-off provides strong evidence for the architectural superiority of our proposed PPA-MC-YOLO.

Our model demonstrates outstanding overall performance in the trade-off between accuracy and efficiency. Compared to the baseline YOLOv12, PPA-MC-YOLO achieves an mAP improvement of over two percentage points while simultaneously reducing parameters and GFLOPs by 17.3% and 4.5%, respectively. Although there is a slight decrease in inference speed (from 480 to 465 FPS), it still maintains a high capacity for real-time inference. This proves that our framework design successfully achieves effective model lightweighting while boosting detection accuracy.

To further investigate the specific sources of performance improvement in the PPA-MC-YOLO model, we conducted a detailed analysis of its Average Precision (AP), Precision (P), and Recall (R) for each disease class in comparison to the baseline YOLOv12 model. The detailed results are presented in Table 2.

The per-class analysis results in Table 2 provide compelling evidence for the success of our model at a micro-level. PPA-MC-YOLO achieves a 2.1 percentage point increase in overall mAP and demonstrates targeted improvements on several challenging classes.

Significantly Enhanced Detection of Small and Hard-to-Recognize Targets: The most noteworthy improvement is observed in the ‘powdery_mildew_fruit’ class. This category is extremely difficult to detect due to its minute early-stage symptoms and low contrast against the fruit background. The baseline model achieved a Recall of only 46.5%, indicating many missed detections. In contrast, our PPA-MC-YOLO model, benefiting from the effective capture of fine-grained features by the PPA module and the aggregation of multi-scale information by MCAttn, increased the Recall to 52.0% (a 5.5 percentage point increase). Its AP rose from 63.5% to 74.5% (an 11.0 percentage point increase). This substantiates the effectiveness of our improvements in addressing the challenges of detecting small and difficult-to-recognize targets.

Accuracy Gains from Improved Feature Discriminability: Our model also performs well on diseases with visually similar and easily confusable features, such as ‘leaf_spot’ and ‘angular_leafspot’. For instance, the AP for ‘angular_leafspot’ increased by 2.5 percentage points, and the AP for ‘leaf_spot’ rose by 2.4 percentage points. This is primarily attributed to the synergistic effects of modules like FDConv. The model can more accurately distinguish the subtle differences between these similar diseases by constructing richer feature representations, thereby improving detection accuracy.

Achieved a Better Precision-Recall Balance: On average, our model increased the overall Recall from 73.2% to 76.2% (a 3.0 percentage point increase) while raising the overall Precision from 81.2% to 81.6%. This indicates that the performance improvement is comprehensive and balanced. Our model effectively controls false detections through stronger feature representations and a more stable training process while simultaneously reducing missed detections, thus achieving superior overall detection performance.
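One way to summarize the Precision-Recall balance in a single number is the F1 score, the harmonic mean of Precision and Recall. The sketch below applies it to the overall Precision and Recall values quoted above; note that F1 is a derived illustration here and is not a metric reported in the paper's tables.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both as fractions in [0, 1])."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Overall Precision / Recall values quoted in the text above.
baseline_f1 = f1_score(precision=0.812, recall=0.732)
proposed_f1 = f1_score(precision=0.816, recall=0.762)
print(f"baseline F1: {baseline_f1:.3f}, PPA-MC-YOLO F1: {proposed_f1:.3f}")
```

The derived values are roughly 0.770 for the baseline and 0.788 for PPA-MC-YOLO, consistent with the observation that the gain comes mainly from higher Recall at essentially unchanged Precision.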

In summary, the results of the per-class analysis further confirm the effectiveness of our proposed PPA-MC-YOLO framework in handling the detection of complex pathological features. It demonstrates significant improvements, particularly in recognizing small targets and similar diseases, highlighting the model’s potential for practical application in agriculture.

4.2. Ablation Study

To systematically quantify the individual contributions of our four proposed modules (PPA, MCAttn, SD Loss, and FDConv) and to investigate their potential synergistic effects, we conducted a series of exhaustive ablation experiments using YOLOv12 as the baseline under identical experimental settings. The mAP@0.5 was uniformly adopted as the evaluation metric, and the detailed results are presented in Table 3.

The results in Table 3 deconstruct the sources of our model’s performance improvement, from which several key insights can be drawn.

Independent Efficacy of Each Module: The forward-addition experiments (#2 to #5) confirm that our proposed modules independently contribute to performance gains on top of the baseline model. Notably, the MCAttn module exhibits the most significant individual gain, boosting the mAP@0.5 by 1.0 percentage point (experiment #3). This finding provides a crucial insight for our task: enhancing the model’s ability to generate scale-invariant attention for multi-scale contextual awareness is the most effective single intervention for improving detection performance. The PPA module also contributes a significant 0.7 percentage point increase (experiment #2), demonstrating the value of its attention-driven detection head in focusing on fine-grained target features. While the SD Loss and FDConv modules show smaller individual gains, they provide essential training stability and feature enrichment for the model.

Synergistic Effects Between Modules: The combination experiments reveal positive synergy between the modules. For example, when PPA and MCAttn are integrated (experiment #6), the mAP@0.5 increases by 1.5 percentage points. This combined effect indicates that the local-focusing capability of PPA and the global, scale-invariant contextual ability of MCAttn are complementary, working together to achieve a more robust feature representation. Ultimately, the final model integrating all four components (experiment #8) reaches a peak performance of 81.1%, with a total improvement of 2.1 percentage points, proving the effectiveness of our overall framework design.

Indispensability of Core Components: The backward-removal experiments (#9 and #10) further validate the necessity of each key module. Removing any single component from the final PPA-MC-YOLO model results in a performance drop. In particular, removing the MCAttn module causes the largest performance degradation (−1.0 percentage point, experiment #10), reaffirming its status as the most critical contributor to our framework’s success. Similarly, removing the PPA module also leads to a notable performance decline of 0.7 percentage points.

To further validate the effectiveness of our proposed Scale-Decoupled (SD) Loss, we conducted a comparative ablation study against several state-of-the-art loss functions. As shown in Table 4, the results demonstrate the superiority of our approach.

When compared to the default CIoU loss, SD Loss achieves a notable improvement across all key metrics, particularly boosting the AP for small objects (AP_small) by 2.0 percentage points. This directly confirms its efficacy in stabilizing the training for minuscule targets, which was its primary design motivation.

While Focal Loss slightly improves Precision, it comes at the cost of Recall and overall mAP, indicating that solely addressing class imbalance is insufficient for this task. The advanced Wise-IoU Loss shows competitive performance, but our SD Loss still outperforms it, especially in the crucial AP_small metric.

This quantitative comparison provides strong evidence that by adaptively re-weighting localization and classification losses based on target scale, SD Loss offers a more effective optimization strategy for detecting objects with significant scale variations, a common challenge in agricultural scenes.
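The general idea of scale-dependent re-weighting can be sketched as below. This is a hypothetical illustration only, not the SD Loss formulation: the scale measure, the linear weighting rule, the direction of the shift, and the constant `delta` are all assumptions chosen to show how a target's relative size could modulate the balance between a localization term and a classification term.

```python
import math


def relative_scale(box_w, box_h, img_w, img_h):
    """Square root of the box-to-image area ratio, clipped to [0, 1]."""
    ratio = (box_w * box_h) / float(img_w * img_h)
    return min(1.0, math.sqrt(max(0.0, ratio)))


def reweighted_loss(loc_loss, cls_loss, scale, delta=0.5):
    """Hypothetical scale-dependent combination of two loss terms.

    Assumption: for small targets (scale near 0), weight is shifted away
    from the IoU-sensitive localization term; for large targets (scale
    near 1), both terms keep unit weight.
    """
    w_loc = 1.0 - delta * (1.0 - scale)
    w_cls = 1.0 + delta * (1.0 - scale)
    return w_loc * loc_loss + w_cls * cls_loss, (w_loc, w_cls)


# Illustrative targets in a 640 x 640 image (sizes are assumptions).
small_scale = relative_scale(8, 8, 640, 640)
large_scale = relative_scale(640, 640, 640, 640)
small_total, small_weights = reweighted_loss(1.0, 1.0, small_scale)
large_total, large_weights = reweighted_loss(1.0, 1.0, large_scale)
print(small_weights, large_weights)
```

The design point this illustrates is that the weights vary smoothly with target size, so a noisy localization signal from tiny boxes need not dominate the gradient.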

In conclusion, the ablation study validates the effectiveness of all four of our innovations and highlights the core driving role played by the MCAttn module in enhancing multi-scale awareness. This core function, supported by the focused attention of PPA, the enriched features from FDConv, and the stabilized training from SD Loss, collectively forms a powerful and synergistically efficient detection framework capable of effectively addressing complex agricultural scenes.

4.3. Parameter Sensitivity Analysis

We conducted a series of parameter sensitivity analyses to validate the design rationale of our key modules and determine their optimal hyperparameters. These analyses provide a solid empirical basis for the final configuration of the PPA-MC-YOLO framework.

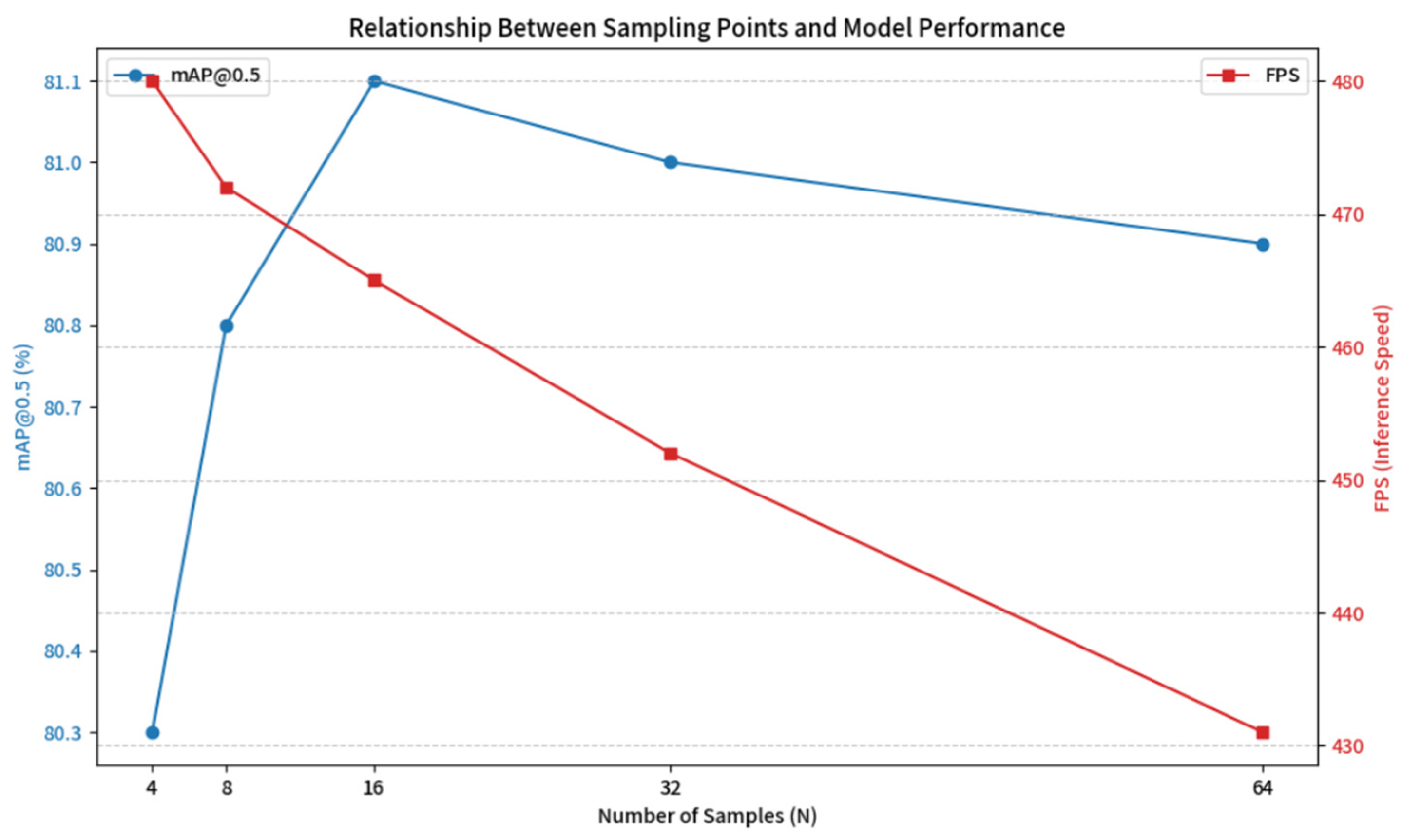

4.3.1. Impact of the Number of Sampling Points N in MCAttn

The number of sampling points, N, in the MCAttn module is a critical hyperparameter that directly influences the model’s accuracy and efficiency. We tested the impact on the mAP@0.5 and FPS of the full PPA-MC-YOLO model as N was varied within the set {4, 8, 16, 32, 64}. The results are illustrated in Figure 5.

Analysis: As illustrated in Figure 5, the model’s performance is sensitive to the value of N. As N increases from 4 to 16, the mAP@0.5 steadily rises from 79.9% to a peak of 81.1%. This indicates that moderate stochastic sampling can effectively form a robust feature representation. However, as N continues to increase to 64, the mAP slightly decreases to 80.8%, possibly because oversampling diminishes the advantages brought by randomness. Concurrently, the FPS monotonically decreases (from 475 to 425) as N increases. Considering both aspects, N = 16 strikes the optimal balance between accuracy and efficiency and was therefore selected for the final configuration.

4.3.2. Impact of Branch Combinations in the PPA Module

The PPA module is composed of three branches: Global (G), Local Parallel (L), and Serial (S). To verify the necessity of each branch, we conducted an internal ablation study on their combinations. The results are presented in Table 5.

Analysis: The results in Table 5 validate the effectiveness of our multi-branch design. The individual Local (L) and Serial (S) branches significantly improve performance. When combined (L+S), the mAP increases to 80.7%, demonstrating that these two paradigms (one for capturing multi-scale local details and the other for hierarchical information) are highly complementary.

Crucially, introducing the Global (G) branch pushes the performance to its peak of 81.1%. This confirms that the global context is vital for the model to distinguish foreground from complex backgrounds. Therefore, the complete architecture incorporating all three branches (G+L+S) is confirmed as the optimal design for the PPA module.
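Section 5.1 describes the local branch as using dilated convolutions with varying rates. The following is a minimal 1-D sketch of that idea, assuming a 3-tap kernel and dilation rates of 1, 2, and 4 (both are illustrative assumptions, not the module's actual configuration): the same kernel applied in parallel at different dilations covers different receptive fields of the same input.

```python
def receptive_field(kernel_size, dilation):
    """Number of input positions spanned by one dilated kernel application."""
    return (kernel_size - 1) * dilation + 1


def dilated_conv1d(signal, kernel, dilation):
    """'Valid' 1-D correlation with a dilated kernel (no padding)."""
    span = (len(kernel) - 1) * dilation
    return [
        sum(k * signal[start + i * dilation] for i, k in enumerate(kernel))
        for start in range(len(signal) - span)
    ]


def parallel_branches(signal, kernel, dilations):
    """Run the same kernel at several dilation rates on the same input."""
    return {d: dilated_conv1d(signal, kernel, d) for d in dilations}


signal = [float(v) for v in range(10)]
kernel = [1.0, 1.0, 1.0]
branches = parallel_branches(signal, kernel, dilations=(1, 2, 4))
for dilation, output in branches.items():
    print(dilation, receptive_field(len(kernel), dilation), output)
```

In a parallel design each branch sees the unmodified input, so the fine-scale branch is not affected by the coarser ones; a fusion step (not shown) would then combine the branch outputs.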

The parameter sensitivity analyses above provided sufficient experimental support for PPA-MC-YOLO’s key design and hyperparameter selections.

4.4. Visualization Results and Analysis

To intuitively evaluate the PPA-MC-YOLO model’s performance and investigate its internal working mechanisms, this section presents qualitative analysis results under typical challenging scenarios.

Figure 6 compares the detection results between our proposed PPA-MC-YOLO model and the baseline YOLOv12 on four representative types of challenging samples. These results reveal the practical performance gains from the modules we introduced.

Small and Dense Targets (Figure 6a,b): The first row displays a leaf with fine leaf-spot symptoms. The baseline model (a) uses a single, imprecise, and overly large box to cover all lesions, failing to distinguish individual spots effectively. In contrast, our model (b) generates multiple smaller, more precise bounding boxes, individually localizing each lesion area. This provides compelling evidence for the superiority of the PPA module in focusing on and separating dense, minuscule targets.

Detection of Concealed Targets in Complex Backgrounds (Figure 6c,d): The second row presents a complex scene containing a withered flower (gray_mold). This target is small, has an irregular shape, and is easily mistaken for harmless background clutter. The baseline model (c) failed to identify this concealed target, resulting in a missed detection. Our model (d), however, successfully detected it. This highlights the comprehensive advantages of our framework: the PPA module enables it to focus on small targets, while MCAttn and FDConv work in synergy to enhance the model’s ability to discriminate true targets from complex backgrounds.

Co-existence of Multi-scale Targets (Figure 6e,f): In the sample from the third row, the main leaf exhibits clear powdery_mildew_leaf, while another leaf in the background has a much smaller lesion. The baseline model (e) only detected the large target in the foreground, completely ignoring the small one. Our model (f) accurately detected the large target and successfully captured the distant, tiny lesion, demonstrating its excellent multi-scale detection capability, which is attributable to the synergistic effect of all our innovative modules.

Discrimination of Confusing Classes (Figure 6g,h): The fourth row provides an excellent case for distinguishing between two visually similar diseases. The baseline model (g) misclassified the sample, incorrectly identifying it as angular_leafspot. However, our model (h) made the correct classification, identifying it as leaf_spot. This improvement is directly attributed to the FDConv module, which enables the model to capture the subtle visual cues that differentiate these similar diseases by extracting richer and more discriminative frequency-domain features.

In conclusion, the qualitative comparison results in Figure 6 intuitively validate the superiority of the PPA-MC-YOLO framework in addressing various complex detection challenges. Whether dealing with small, dense targets or identifying concealed, easily confused diseases in complex backgrounds, our model consistently demonstrates greater robustness and accuracy than the baseline model. This fully substantiates the practical value of our proposed multi-module synergistic design in real-world applications.

4.5. Efficiency Analysis

While pursuing higher detection accuracy, this study has also paid close attention to model efficiency. As shown in Table 1, PPA-MC-YOLO demonstrates a significant advantage in terms of model complexity. Compared to the baseline YOLOv12, our model’s parameter count is reduced from 2.95 M to 2.44 M (a 17.3% decrease), and its theoretical computational load (GFLOPs) is also reduced from 6.6 to 6.3 (a 4.5% decrease). This proves that our proposed framework, particularly modules like FDConv, can effectively enhance feature representation capabilities while decreasing, rather than increasing, model complexity.
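The relative reductions quoted above follow directly from the reported before/after values. The short check below reproduces them and applies the same formula to the inference speed figures (480 and 465 FPS) given elsewhere in this section; the helper name `relative_reduction` is introduced here for illustration.

```python
def relative_reduction(before, after):
    """Relative decrease from `before` to `after`, in percent."""
    return (before - after) / before * 100.0


params_reduction = relative_reduction(2.95, 2.44)   # parameters, in millions
gflops_reduction = relative_reduction(6.6, 6.3)     # theoretical GFLOPs
fps_reduction = relative_reduction(480, 465)        # measured FPS

print(f"parameters: {params_reduction:.1f}% fewer")
print(f"GFLOPs:     {gflops_reduction:.1f}% fewer")
print(f"FPS:        {fps_reduction:.1f}% lower")
```

These are relative changes, in contrast to the mAP gains, which are absolute differences in percentage points.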

Notably, despite the reduction in theoretical computational load, the model’s actual inference speed (FPS) slightly decreased from 480 to 465. This discrepancy between theoretical efficiency and practical speed may stem from the operations introduced in the PPA and MCAttn modules, such as multi-branch processing and attention calculations. Although these operations may not have a high total number of floating-point operations, they might increase the Memory Access Cost (MAC) or data dependencies, thereby affecting parallel computing efficiency on the GPU.

Nevertheless, PPA-MC-YOLO achieves an mAP improvement of over two percentage points while simultaneously reducing model complexity. Its inference speed meets the real-time or near-real-time requirements of agricultural scenarios. This indicates that our model has struck an excellent balance among accuracy, complexity, and speed, making it a highly efficient and powerful solution.

5. Discussion

The PPA-MC-YOLO framework proposed in this study is an enhanced object detector that has demonstrated superior performance on a challenging strawberry disease dataset, surpassing the baseline YOLOv12 and other state-of-the-art (SOTA) models. This section interprets the mechanisms driving these performance gains, critically evaluates the model’s strengths and inherent limitations, situates our contributions within the context of related work, and proposes promising avenues for future research.

5.1. Interpretation of Performance Enhancement Mechanisms

The empirical results, particularly the comprehensive ablation studies in Table 3, unequivocally demonstrate that our proposed modules positively contribute to the model’s final efficacy. This performance uplift is not coincidental but stems from a synergistic design in which each component targets specific challenges prevalent in agricultural computer vision.

Efficacy of the PPA Module in Detecting Small and Ambiguous Targets. The success of the Parallel Pyramid Attention (PPA) module can be attributed to its multi-branch parallel feature extraction strategy. This design overcomes the limitations of conventional detection heads, which typically employ a single convolutional path with a fixed receptive field. The PPA’s architecture facilitates a more comprehensive feature analysis: (1) a global branch provides contextual priors, enabling the model to distinguish foreground from complex backgrounds; (2) a parallel local branch, utilizing dilated convolutions with varying rates, functions like a multi-focal “microscope,” capturing fine-grained details across a spectrum of scales, from minuscule spots to medium-sized lesions; and (3) a serial branch preserves hierarchical feature extraction capabilities. Fusing these branches generates an adaptive attention map that allocates feature weights according to target size. The effectiveness of this mechanism is supported by the model’s performance on notoriously difficult classes. For instance, in the powdery_mildew_fruit class, characterized by minute, low-contrast symptoms, the AP rose by 11.0 percentage points, with a corresponding 5.5-point increase in Recall (Table 2). This demonstrates the critical role of PPA in enhancing focus on early-stage, subtle pathological features.
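To make the “multi-focal” behavior of the parallel local branch more concrete, the following minimal sketch computes the span covered by a dilated kernel, k_eff = k + (k − 1)(d − 1), and applies a 1D dilated filter in plain Python. The dilation rates (1, 2, 3), the 3-tap averaging kernel, and all function names are illustrative assumptions; they are not the configuration used in the PPA module.

```python
from typing import List, Sequence

def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Number of input samples spanned by one dilated kernel."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)

def dilated_conv1d(signal: Sequence[float], kernel: Sequence[float],
                   dilation: int) -> List[float]:
    """'Valid' 1D cross-correlation with `dilation` samples between taps."""
    span = effective_kernel_size(len(kernel), dilation)
    out = []
    for start in range(len(signal) - span + 1):
        out.append(sum(w * signal[start + i * dilation]
                       for i, w in enumerate(kernel)))
    return out

# Hypothetical dilation rates for three parallel local branches.
rates = (1, 2, 3)
spans = {d: effective_kernel_size(3, d) for d in rates}

# A single bright "spot" at index 4 in an otherwise flat signal.
signal = [0.0] * 4 + [1.0] + [0.0] * 4
averaging_kernel = [1 / 3, 1 / 3, 1 / 3]
responses = {d: dilated_conv1d(signal, averaging_kernel, d) for d in rates}
```

With the same three weights, the branches span 3, 5, and 7 input samples, which is how varying the dilation rate widens the receptive field without adding parameters.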

Role of MCAttn in Achieving Scale-Invariant Recognition. The core innovation of the Monte Carlo Attention (MCAttn) module lies in its stochastic sampling-based pooling mechanism. This approach diverges from traditional deterministic pooling methods (e.g., average or max pooling), which are inherently sensitive to target scale and background interference. By employing random sampling, MCAttn shifts the attention dependency from an aggregate of all pixels within a region to a representative subset. This confers two distinct advantages: (1) Scale Invariance: The stochastic nature of the sampling ensures that stable and effective attention responses can be generated regardless of target size, as long as its core features are sampled, thereby mitigating overfitting to specific scales. (2) Robustness: Random sampling reduces the model’s sensitivity to spurious, high-activation pixels, which may be noise, allowing it to extract true disease features from complex background textures more robustly. The pivotal contribution of MCAttn is substantiated by the ablation study (Table 3), where its standalone integration yielded the largest single performance gain (+1.0% mAP@0.5), and its removal caused the most significant performance degradation (−1.0% mAP@0.5).
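The contrast between deterministic pooling and sampling-based pooling can be illustrated with a toy example. The sketch below is not the MCAttn implementation; it assumes a flattened region of activations, a hypothetical sample size of 3, and sampling without replacement, purely to show how a pooled value can depend on a random subset rather than on every pixel.

```python
import random
from statistics import mean
from typing import List

def average_pool(region: List[float]) -> float:
    """Deterministic pooling: aggregate every activation in the region."""
    return mean(region)

def monte_carlo_pool(region: List[float], num_samples: int,
                     rng: random.Random) -> float:
    """Stochastic pooling: average a random subset drawn without replacement."""
    k = min(num_samples, len(region))
    return mean(rng.sample(region, k))

rng = random.Random(0)  # fixed seed so the sketch is reproducible
# Toy activations; 9.0 mimics a spurious high-activation pixel.
region = [0.2, 0.3, 0.25, 0.2, 9.0, 0.3, 0.25, 0.2]

full = average_pool(region)
sampled = [monte_carlo_pool(region, 3, rng) for _ in range(5)]
# Sampling the whole region recovers the deterministic average.
exact = monte_carlo_pool(region, len(region), rng)
```

Every draw that misses the spurious pixel is unaffected by it, whereas the deterministic average is always pulled upward; draws that do include it are affected more strongly, so any robustness benefit arises across many sampling steps during training rather than from a single draw.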

Synergistic Contributions of FDConv and SD Loss. While the PPA and MCAttn modules provide the primary performance boosts, the contributions of FDConv and SD Loss are equally crucial for constructing a comprehensive, high-performance model. FDConv enriches feature representation without incurring additional parametric costs by constructing frequency-diverse convolution kernels. Low-frequency kernels capture smooth, global structures (e.g., leaf contours), while high-frequency kernels focus on fine textural details (e.g., lesion edges). This enhanced feature diversity is likely the reason for the model’s improved ability to distinguish between visually similar diseases, as evidenced by the AP gains of +2.5% and +2.4% for angular_leafspot and leaf_spot, respectively (Table 2). Concurrently, SD Loss provides critical optimization stability. By dynamically re-weighting classification and localization losses during training, SD Loss addresses the inherent IoU sensitivity problem in small object detection, leading to more precise bounding box regression. This is reflected in the model’s overall performance, where a substantial 3.0-point increase in Recall was achieved with a concurrent improvement in Precision (Table 2), indicating a better precision-recall balance.
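The IoU sensitivity problem mentioned above can be seen in a small worked example: the same localization error costs a small box far more IoU than a large one. The sketch below does not implement SD Loss; the box sizes (8 × 8 and 64 × 64 pixels) and the 2-pixel shift are hypothetical values chosen only to illustrate the effect.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0

def shifted(box: Box, dx: float) -> Box:
    """Translate a box horizontally by dx pixels."""
    return (box[0] + dx, box[1], box[2] + dx, box[3])

small = (0.0, 0.0, 8.0, 8.0)     # e.g., an early-stage spot
large = (0.0, 0.0, 64.0, 64.0)   # e.g., a large lesion

iou_small = iou(small, shifted(small, 2.0))  # 48 / 80 = 0.6
iou_large = iou(large, shifted(large, 2.0))  # 3968 / 4224, about 0.94
```

A 2-pixel offset leaves the large box with an IoU of about 0.94 but drops the small box to 0.6, which is why a loss that treats all scales identically tends to be unstable for small targets.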

5.2. Strengths and Limitations

Superior Accuracy on Challenging Targets: The most significant advantage of PPA-MC-YOLO is its marked improvement in detection accuracy, particularly when handling typical challenges in agricultural scenes such as small, dense, and partially occluded targets. As detailed in Table 2, the model achieved substantial AP gains on key difficult classes, highlighting its practical utility.

Targeted and Synergistic Design: Unlike general-purpose object detectors, the components of PPA-MC-YOLO were specifically engineered to address known pain points in agricultural vision. The ablation study (Table 3) confirms that the synergy between these targeted modules yields a larger performance uplift than any single modification.

Excellent Accuracy-Efficiency Trade-off: A core strength is that PPA-MC-YOLO achieves a high degree of model lightweighting while simultaneously improving accuracy. Compared to the baseline YOLOv12, our model not only reduces the number of parameters by 17.3% but also decreases the theoretical computational load (GFLOPs) from 6.6 to 6.3 (a 4.5% reduction) (Table 1). This result demonstrates that our proposed modules, particularly FDConv, possess high computational efficiency while enhancing feature representation. Achieving higher detection accuracy at a lower computational and storage cost is paramount for future deployment on resource-constrained edge devices.

Trade-off between Theoretical Computation and Practical Speed: Although PPA-MC-YOLO is superior to the baseline model in terms of theoretical computational load (GFLOPs), its actual inference speed (FPS) slightly decreased (from 480 to 465). This reveals a common trade-off in model design: certain operations, despite having fewer floating-point operations, may introduce higher Memory Access Cost (MAC) or more complex control flows, thereby impacting parallel execution efficiency on specific hardware like GPUs. The multi-branch and attention computations in the PPA and MCAttn modules likely fall into this category. Therefore, a comprehensive consideration of theoretical efficiency and practical deployment performance is necessary when evaluating the model.

Sensitivity to Extreme Environmental Conditions: While the model was trained on a diverse dataset, its performance may degrade under conditions not well-represented in the training data, such as extreme over- or under-exposure, or severe motion blur caused by rapid movement.

Unverified Generalizability to Other Crops: The current model was trained and optimized exclusively on a strawberry dataset. Its efficacy on other crops (e.g., tomato, cucumber, rice) remains unproven. The pathological features and environmental contexts can vary significantly across different plant species, which may necessitate domain-specific fine-tuning or adaptation.

5.3. Comparison with Related Work and Future Directions

The PPA-MC-YOLO framework proposed in this study surpasses various mainstream detectors in performance, with its advantages rooted in a deep understanding of and targeted design for the specific pain points of agricultural scenes.

Firstly, our approach is more systematic and comprehensive compared to other studies. For instance, Guo et al. [23] adapted the YOLOv7 model, proposing YOLO-T to enhance tea leaf disease detection performance. Similarly, Tao et al. [24] effectively applied YOLOv5 to the task of disease identification in bell pepper plants. These works demonstrate the effectiveness of tailoring the YOLO architecture for specialized application domains. However, our PPA-MC-YOLO framework introduces synergistic innovations at four distinct levels: the detection head (PPA), feature fusion (MCAttn), convolutional kernel design (FDConv), and loss function (SD Loss). The ablation study (Table 3) shows that this multi-pronged strategy yields a more significant performance gain than improvements to a single module, forming a more complete solution.

Secondly, our method exhibits a distinct design philosophy in addressing the challenges of small objects and multi-scale issues. Many advanced detectors, such as EfficientDet [39] with its BiFPN, improve performance through complex cross-scale connections and weighted feature fusion. While effective, these serial or iterative fusion methods may lose the fine-grained features of minuscule targets during repeated downsampling and upsampling. Our results indicate that the parallel branch design of the PPA module leads to a very significant improvement in small object detection (e.g., the AP increase for ‘powdery_mildew_fruit’ in Table 2). This is likely because its parallel structure can better preserve original features, avoiding information attenuation during transmission through deep networks. This forms an interesting contrast and complement to the literature [27,29] that emphasizes the importance of top-down pathways.

Furthermore, our modules are more targeted than general-purpose attention mechanisms like SE [25] or CBAM [26]. SE and CBAM primarily re-weight features along the channel and spatial dimensions, whereas our MCAttn module addresses the fundamental problem of scale invariance. By introducing stochastic sampling, it resolves the issue of scale sensitivity caused by deterministic pooling, which is particularly effective when dealing with disease targets of dramatically varying sizes.

Based on the findings and identified limitations of this research, we propose the following promising avenues for future investigation:

Model Lightweighting and Inference Speed Optimization: Although PPA-MC-YOLO has been made lightweight regarding parameters and GFLOPs, there is still room for optimization in its practical inference speed (see Table 1). Future work should focus on optimizing the implementation of modules like PPA and MCAttn, for instance, through operator fusion or designing structures better suited for parallel computation. This would reduce memory access costs and fully translate theoretical computational advantages into practical speed improvements, better adapting the model for real-time deployment on mobile or edge devices.

Multi-Modal Data Fusion: The current research is based solely on RGB images. However, multispectral or hyperspectral imagery can provide plant physiological information invisible to the naked eye, which is crucial for the early, pre-symptomatic detection of diseases. Future research could explore how to effectively fuse this multi-modal data with our model by designing dual-stream network architectures, potentially enabling earlier warnings at the initial onset of a disease.

Cross-Crop Generalization and Domain Adaptation: The current model has been optimized and validated on a strawberry dataset. When applied to other crops (e.g., tomato, cucumber, rice), it may face performance degradation due to differences in pathological features and growing environments. Therefore, investigating Domain Adaptation techniques and exploring how to efficiently transfer the knowledge learned from strawberries to new crops using unsupervised or few-shot learning is a research direction of significant practical value.

Disease Progression Prediction Combined with Time-Series Analysis: The current model processes static images. Future research could incorporate time-series analysis. By continuously monitoring images of the same plant at different time points, it would be possible to detect diseases and predict their development trends and spread rates, providing data support for more precise intervention measures.

6. Conclusions

The rapid and accurate detection of diseases is a critical step in ensuring stable yields and enhancing the quality of strawberries. However, existing models often face challenges in complex field environments, such as insufficient capability in detecting small and ambiguous targets, poor multi-scale adaptability, and limited feature discriminability. To address these challenges, this paper proposes an enhanced deep learning model optimized for strawberry disease detection—PPA-MC-YOLO. This framework achieves a comprehensive performance improvement by systematically integrating four synergistic innovative modules:

The Parallel Pyramid Attention (PPA) Module, which introduces a multi-branch parallel structure in the detection head to effectively enhance the model’s ability to focus on the features of small, dense, and occluded targets; Monte Carlo Attention (MCAttn), which achieves scale-invariant contextual awareness through a novel stochastic sampling mechanism, significantly improving recognition robustness for targets of varying sizes; Frequency Dynamic Convolution (FDConv), which, without adding any parameters or computational costs, greatly enriches feature representations by constructing frequency-diverse convolutional kernels, enhancing the model’s ability to discriminate between similar diseases; and Scale-Decoupled Loss (SD Loss), which dynamically adjusts loss weights to optimize the training process, effectively mitigating the IoU sensitivity issue in small object detection and improving the model’s precision-recall balance.

Extensive experimental results on a custom strawberry disease dataset demonstrate that the PPA-MC-YOLO framework surpasses the baseline YOLOv12 and other SOTA models across the key accuracy metrics. It achieves an mAP@0.5 of 81.1% and an mAP@0.5:0.95 of 63.2%, representing improvements of 2.1 and 1.9 percentage points over the baseline model, respectively. Crucially, this performance gain is achieved with a more lightweight model, making it well suited to resource-constrained environments. Compared to the baseline, PPA-MC-YOLO’s parameters and GFLOPs are reduced by 17.3% and 4.5%, respectively, which is a key advantage for deployment on embedded systems, although inference speed decreases slightly from 480 to 465 FPS. The model performs particularly well on visually ambiguous and hard-to-recognize classes, such as ‘powdery_mildew_fruit’, with an 11.0 percentage point increase in AP. This supports its effectiveness in solving real-world agricultural problems, including automated crop health management and precision spraying. Such high accuracy on challenging classes underscores its practical value in mitigating economic losses. Comprehensive ablation studies and visualization analyses confirm each module’s independent efficacy and their synergistic effects.

In conclusion, this study successfully developed and validated PPA-MC-YOLO, an efficient and accurate framework for detecting strawberry diseases. By employing a multi-module approach, it significantly enhances detection performance and model efficiency, offering a robust solution for intelligent crop health management. This research provides a promising blueprint for designing next-generation vision systems for fine-grained agricultural tasks. Building on these advancements, future work will focus on both technical optimization and a comprehensive cost–benefit analysis. Specifically, we plan to migrate the framework to a wider range of crop species and optimize the model for edge computing devices through techniques like model quantization to enable deployment on agricultural robots, drones, and portable diagnostic tools. Simultaneously, we will collaborate with agricultural experts and economists to conduct a cost–benefit analysis. This will quantify the model’s economic value by measuring savings in pesticide and labor costs, as well as reduced yield losses due to early disease control, providing a data-driven basis for its commercialization and widespread application.