Automatic Scribble Annotations Based Semantic Segmentation Model for Seedling-Stage Maize Images

Abstract

1. Introduction

2. Materials and Methods

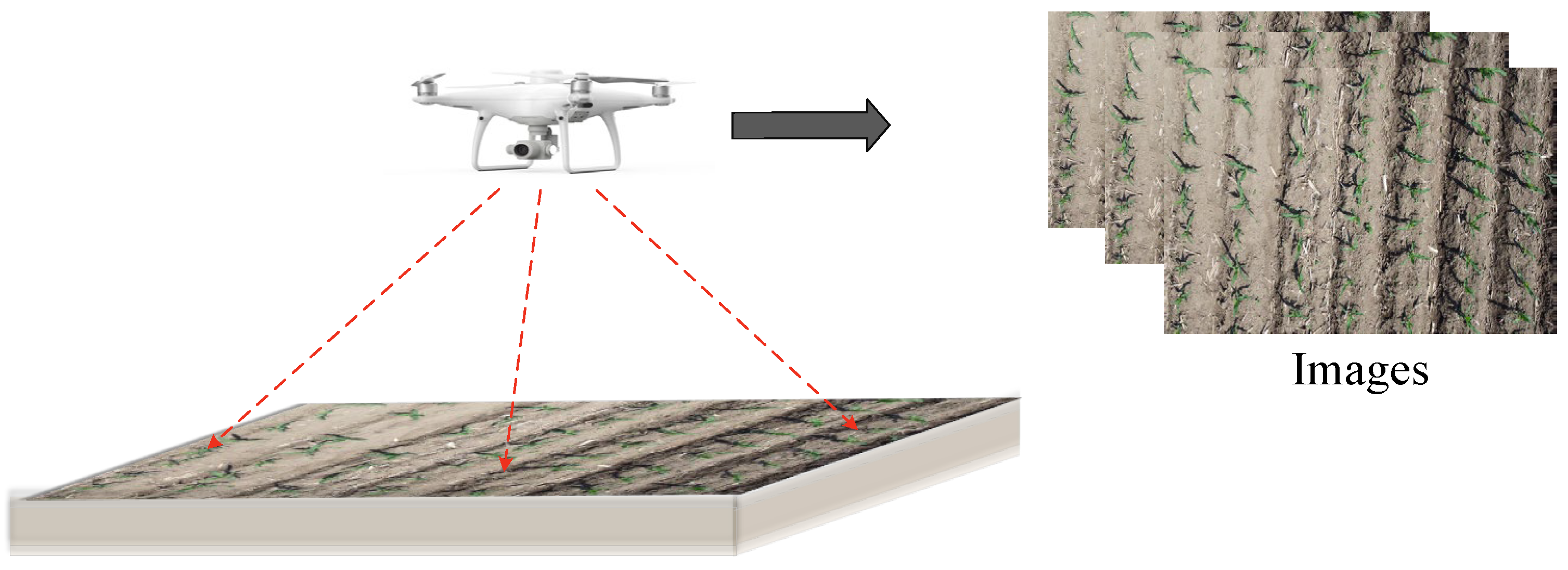

2.1. Data Acquisition

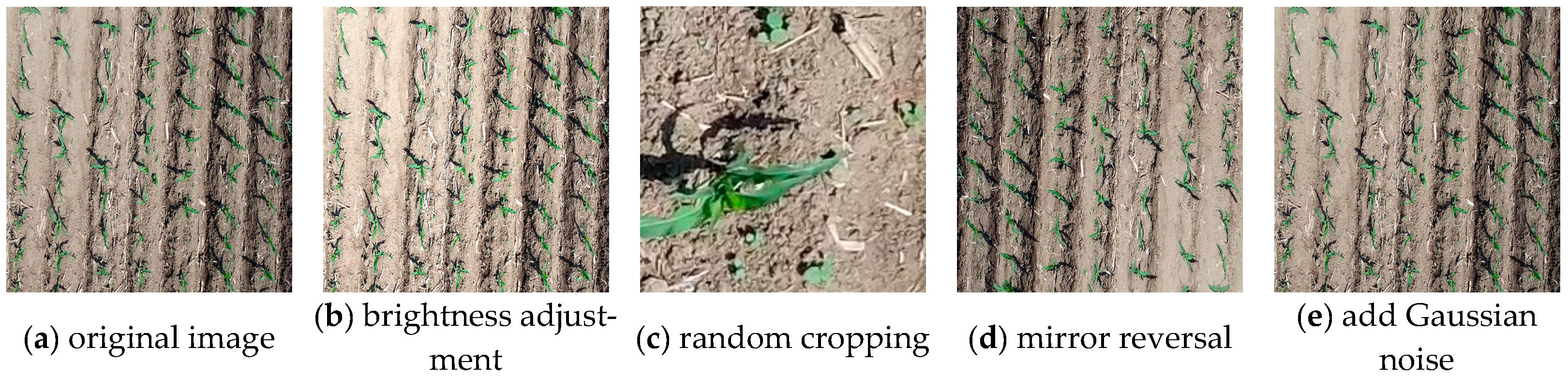

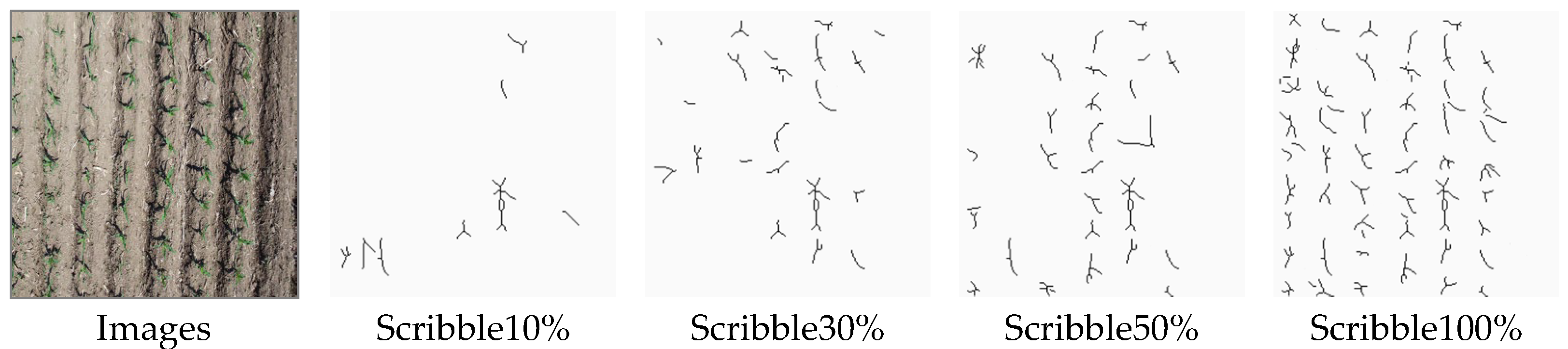

2.2. Dataset Construction

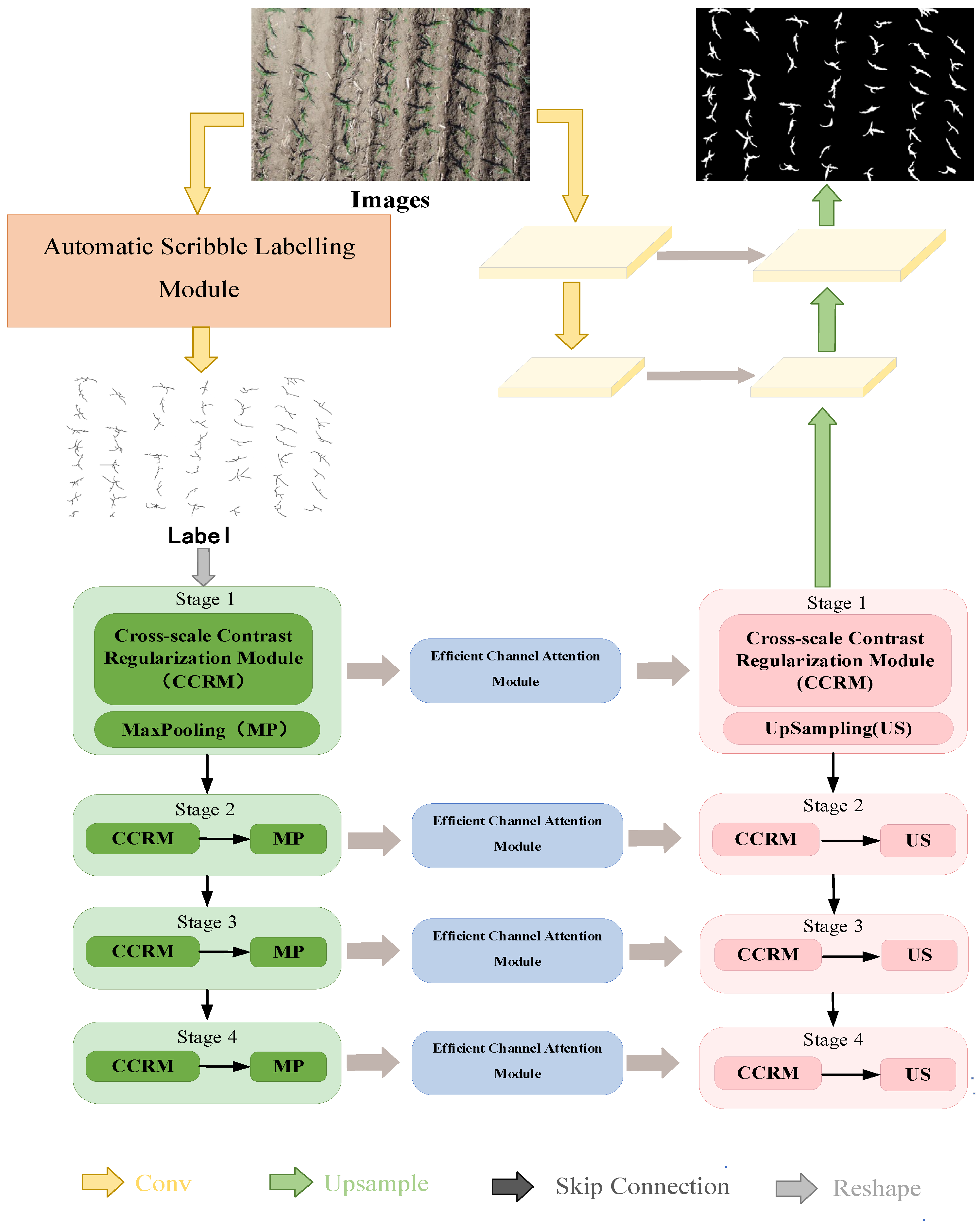

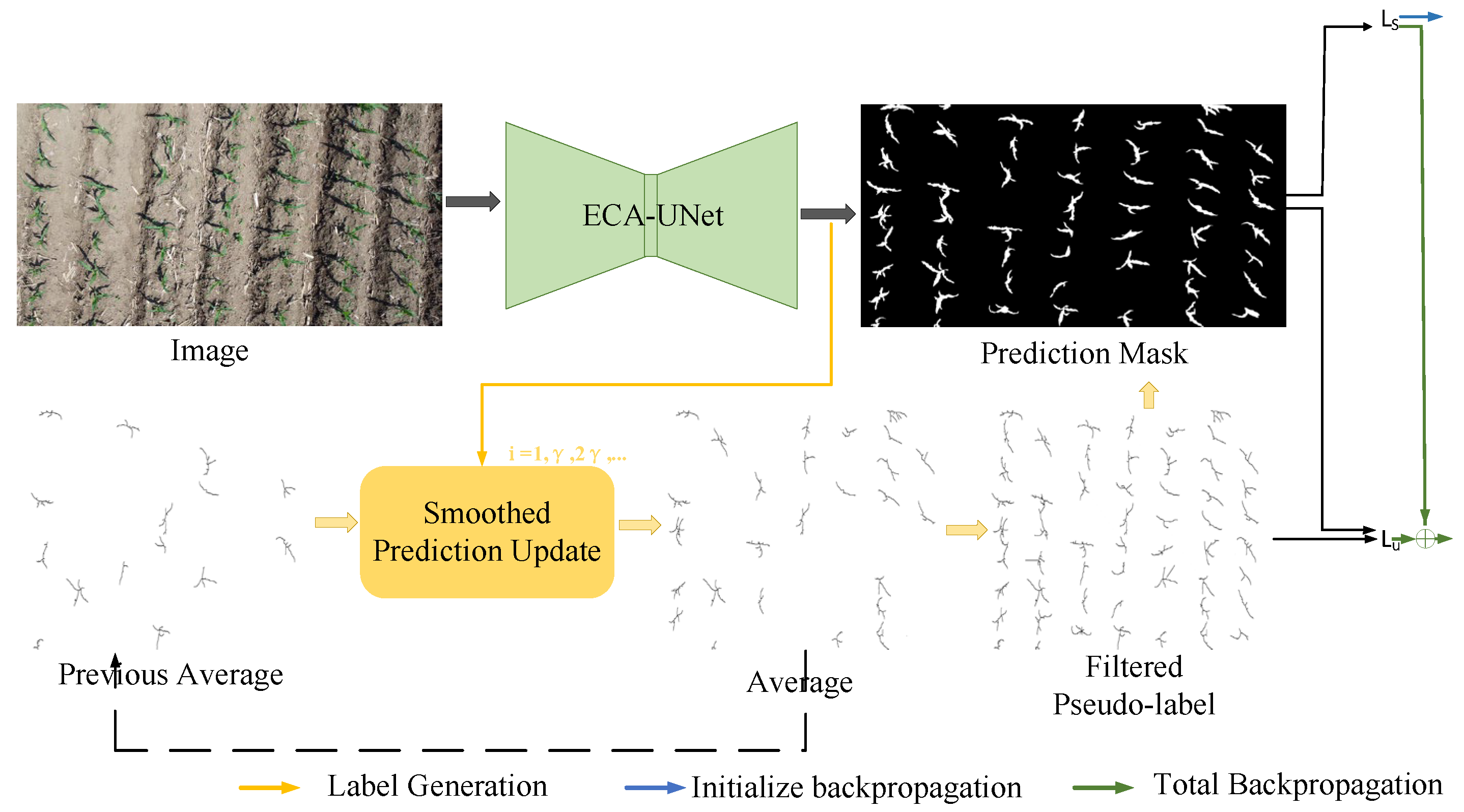

2.3. Semantic Segmentation Model Based on Self-Generated Labels

2.3.1. Research Objectives and Model Framework

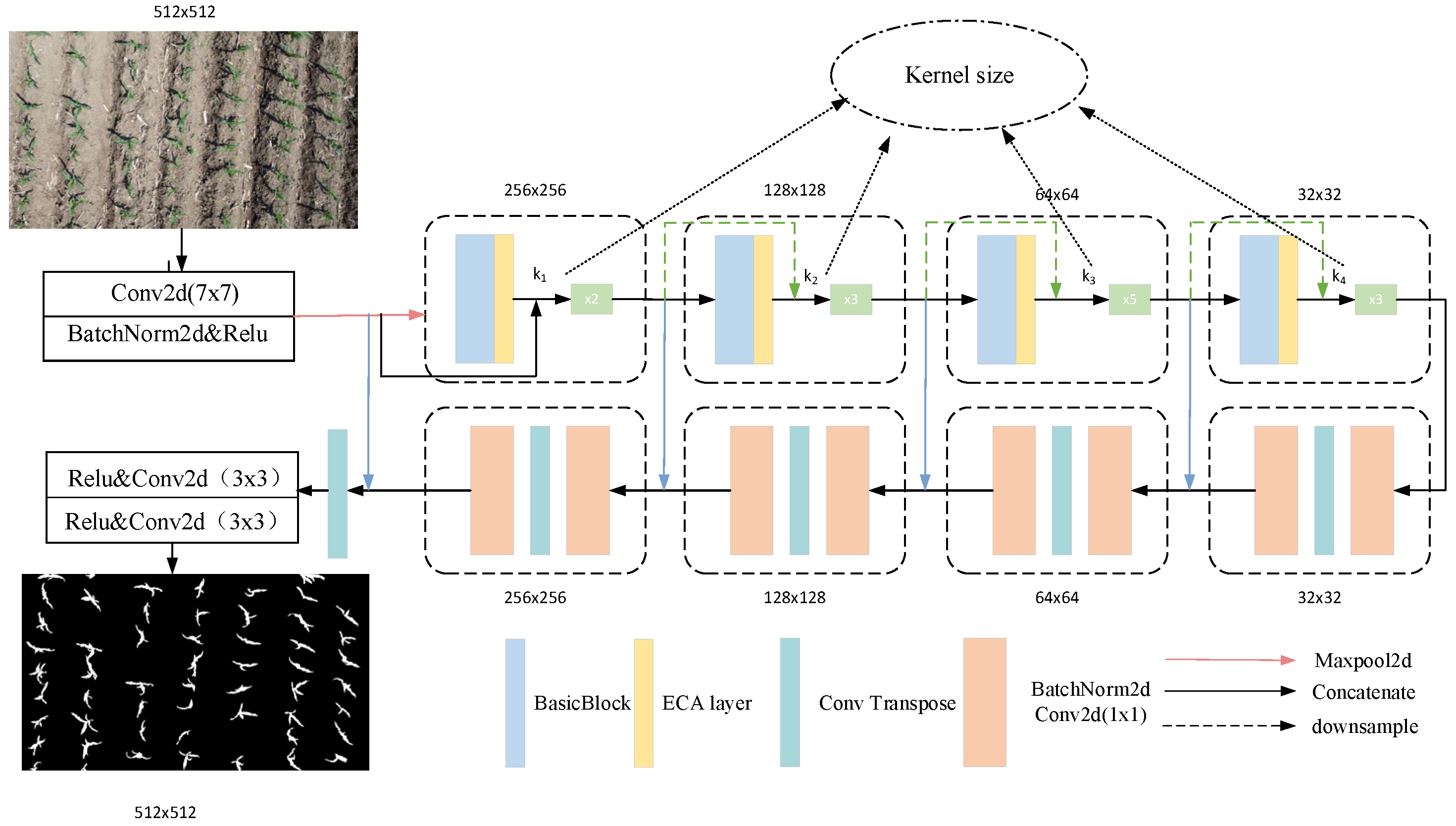

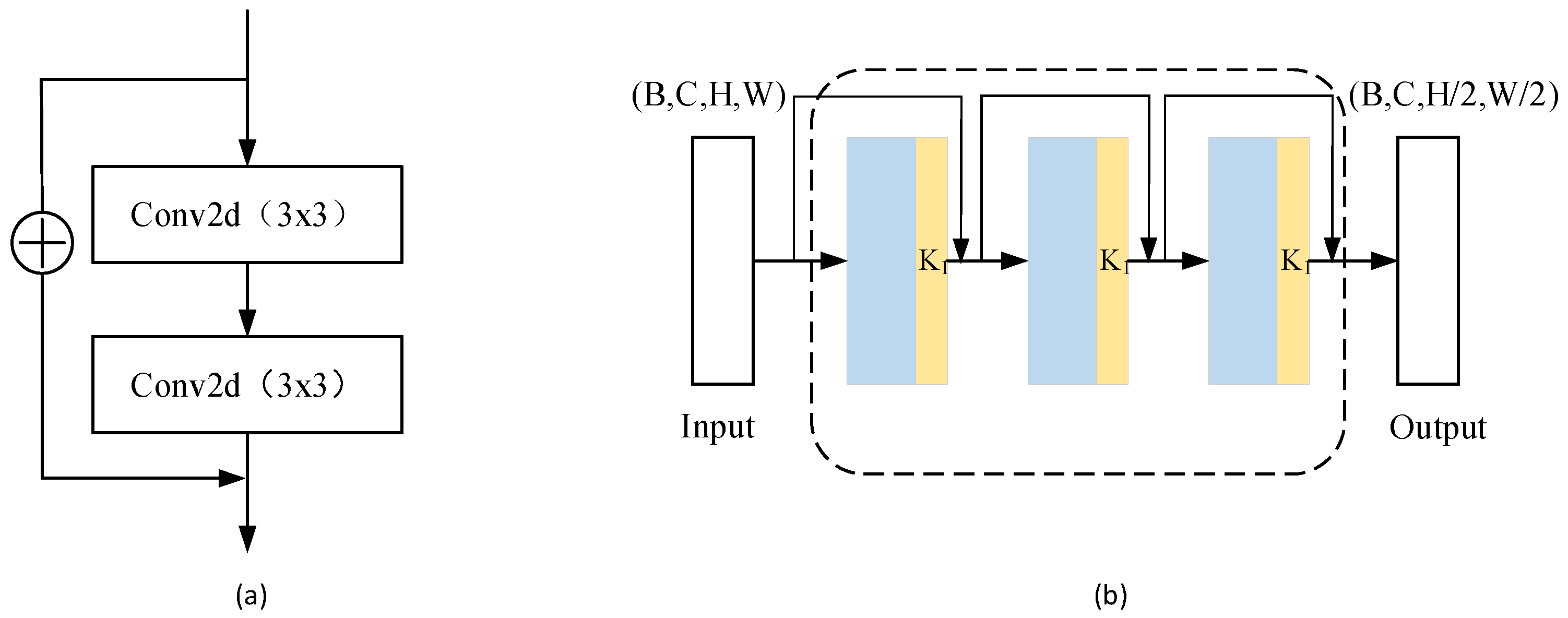

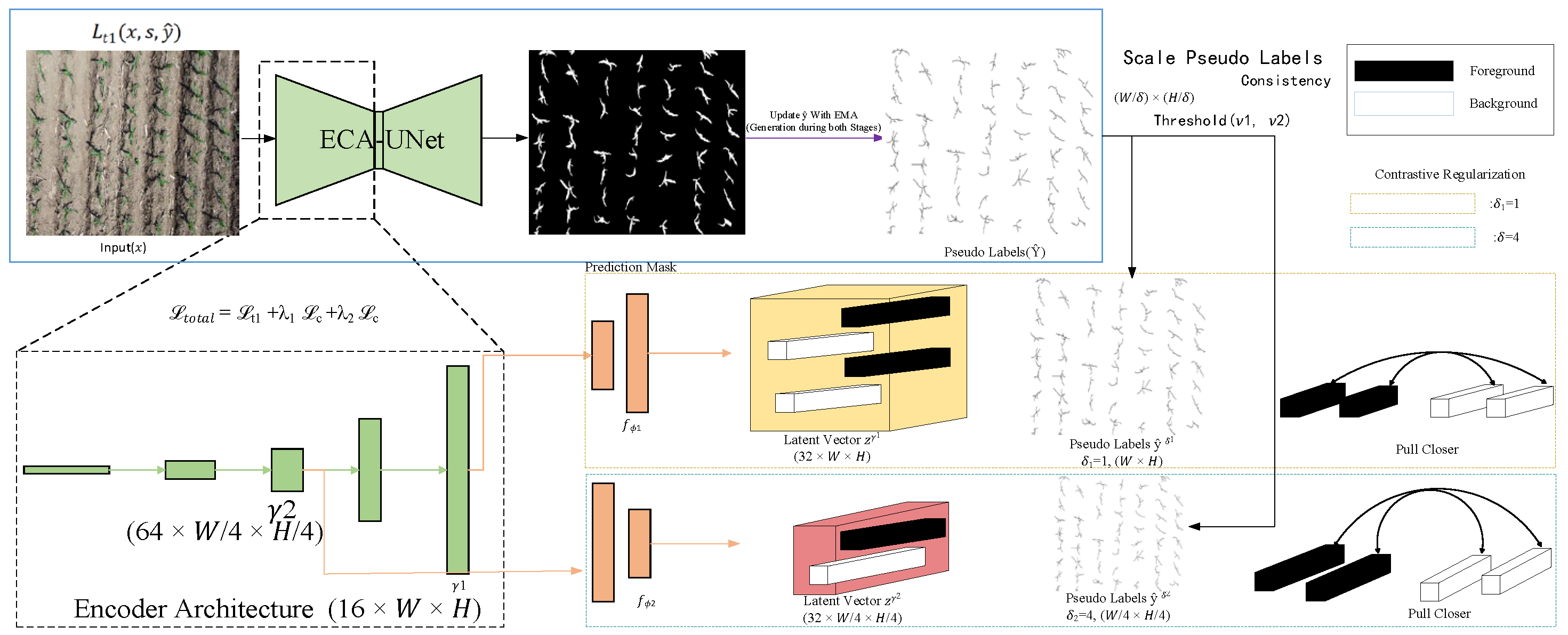

2.3.2. Semantic Segmentation Network

2.3.3. Automatic Scribble Labeling Module

Scribble Label Generation
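One common way to derive a scribble-like annotation automatically from a dense mask is to thin each region to a one-pixel-wide skeleton. This is offered as an illustration only and is not necessarily the generation rule used for this model. The sketch below implements the classic Zhang-Suen thinning algorithm in plain Python. The function name `zhang_suen_skeleton` and the list-of-lists 0/1 mask format are assumptions for illustration.

```python
from typing import List, Set, Tuple

Mask = List[List[int]]  # assumed format: 0 = background, 1 = foreground

# Neighbour offsets P2..P9, clockwise starting from the pixel directly above.
OFFSETS = [(-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1), (-1, -1)]


def _neighbours(img: Mask, r: int, c: int) -> List[int]:
    """Values of P2..P9; pixels outside the image count as background."""
    h, w = len(img), len(img[0])
    return [
        img[r + dr][c + dc] if 0 <= r + dr < h and 0 <= c + dc < w else 0
        for dr, dc in OFFSETS
    ]


def zhang_suen_skeleton(mask: Mask) -> Set[Tuple[int, int]]:
    """Hypothetical scribble generator (illustrative, not the paper's method):
    thin a binary mask with Zhang-Suen and return skeleton (row, col) pixels."""
    img = [row[:] for row in mask]  # work on a copy
    h, w = len(img), len(img[0])
    changed = True
    while changed:
        changed = False
        for step in (0, 1):  # the two Zhang-Suen sub-iterations
            to_delete = []
            for r in range(h):
                for c in range(w):
                    if img[r][c] != 1:
                        continue
                    p = _neighbours(img, r, c)
                    p2, _, p4, _, p6, _, p8, _ = p
                    b = sum(p)  # number of foreground neighbours
                    # a = number of 0 -> 1 transitions in the sequence P2..P9, P2
                    a = sum(1 for i in range(8) if p[i] == 0 and p[(i + 1) % 8] == 1)
                    if not (2 <= b <= 6 and a == 1):
                        continue
                    if step == 0:
                        removable = p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0
                    else:
                        removable = p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0
                    if removable:
                        to_delete.append((r, c))
            # Deletions are applied only after the whole sub-iteration is scanned.
            for r, c in to_delete:
                img[r][c] = 0
            changed = changed or bool(to_delete)
    return {(r, c) for r in range(h) for c in range(w) if img[r][c] == 1}


if __name__ == "__main__":
    # A 3-pixel-thick horizontal bar inside a 5 x 9 image.
    bar = [[1 if 1 <= r <= 3 and 1 <= c <= 7 else 0 for c in range(9)] for r in range(5)]
    print(sorted(zhang_suen_skeleton(bar)))
```

For the 3-pixel-thick bar in the usage example, the sketch keeps a short segment of the bar's centre row. This is the kind of thin stroke a human annotator would draw inside the region.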

Pseudo-Label Guided Training
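The following is a minimal sketch of pseudo-label generation in the style of Scribble2Label (Lee and Jeong, in the reference list). It assumes a binary maize/background task. Per-pixel foreground probabilities are averaged over training with an exponential moving average (EMA). Scribbled pixels keep their annotated class, and an unscribbled pixel receives a pseudo-label only when its averaged prediction is confident. The names `ema_update` and `pseudo_labels`, the flattened pixel lists, and the defaults `alpha=0.2` and `threshold=0.8` are illustrative assumptions, not values reported for this model.

```python
from typing import List, Optional

Label = Optional[int]  # assumed: 1 = maize, 0 = background, None = unlabeled / ignored


def ema_update(ensemble: List[float], pred: List[float], alpha: float = 0.2) -> List[float]:
    """Prediction integration: blend the latest foreground probabilities into a
    running per-pixel exponential moving average."""
    return [alpha * p + (1.0 - alpha) * e for e, p in zip(ensemble, pred)]


def pseudo_labels(ensemble: List[float], scribble: List[Label],
                  threshold: float = 0.8) -> List[Label]:
    """Scribbled pixels keep their annotation; other pixels get a pseudo-label
    only if the averaged prediction is confident, otherwise None (ignored)."""
    labels: List[Label] = []
    for prob, s in zip(ensemble, scribble):
        if s is not None:
            labels.append(s)
        elif prob >= threshold:
            labels.append(1)
        elif prob <= 1.0 - threshold:
            labels.append(0)
        else:
            labels.append(None)
    return labels


if __name__ == "__main__":
    avg = ema_update([0.5, 0.5, 0.5, 0.5], [1.0, 0.0, 0.5, 1.0])
    print(pseudo_labels(avg, [None, None, None, 0]))
```

Averaging over time, rather than using a single prediction, makes each pseudo-label depend on a history of outputs. This damps the effect of one noisy training step.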

Double-Loss Joint Optimization

1. Initialization phase: sparse annotation supervision and prediction integration
2. Pseudo-label-driven reinforcement learning phase
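The two phases listed above can be sketched as a masked binary cross-entropy evaluated on two label sources. During initialization, only scribbled pixels contribute. In the pseudo-label phase, a second term over confidently pseudo-labeled pixels is added with weight λ (`lam`). This is an assumption-based sketch: the choice of binary cross-entropy, the weight `lam=0.5`, and the helper names are hypothetical and not taken from the paper.

```python
import math
from typing import List, Optional

Label = Optional[int]  # None marks pixels excluded from a loss term


def masked_bce(probs: List[float], labels: List[Label], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy over pixels whose label is not None."""
    terms = []
    for p, y in zip(probs, labels):
        if y is None:
            continue
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        terms.append(-(y * math.log(p) + (1 - y) * math.log(1.0 - p)))
    return sum(terms) / len(terms) if terms else 0.0


def double_loss(probs: List[float], scribble: List[Label], pseudo: List[Label],
                lam: float = 0.5, use_pseudo: bool = True) -> float:
    """Hypothetical joint objective.
    Phase 1 (use_pseudo=False): scribble supervision only.
    Phase 2 (use_pseudo=True): scribble loss + lam * pseudo-label loss."""
    loss = masked_bce(probs, scribble)
    if use_pseudo:
        loss += lam * masked_bce(probs, pseudo)
    return loss
```

Keeping the scribble term active in both phases anchors training to the reliable sparse annotations. The pseudo-label term extends supervision to unscribbled pixels.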

2.3.4. Cross-Scale Contrast Regularization Module
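The following is a hedged illustration of contrastive regularization across scales, related in spirit to the multiscale contrastive regularization of ScribbleCont (Oh et al., in the reference list). The sketch computes a supervised contrastive loss. Pixel embeddings sampled at a fine scale act as anchors, and embeddings sampled at a coarse scale act as candidates. Same-class candidates count as positives. The function name, the (embedding, label) input format, and the temperature `tau=0.1` are assumptions, and the module's actual formulation may differ.

```python
import math
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], int]  # assumed: (pixel embedding, class label)


def _normalize(v: Sequence[float]) -> List[float]:
    n = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / n for x in v]


def cross_scale_supcon(fine: List[Sample], coarse: List[Sample], tau: float = 0.1) -> float:
    """Supervised contrastive loss with fine-scale anchors and coarse-scale
    candidates. Same-class candidates are positives; every candidate appears
    in the softmax denominator. Anchors without positives are skipped."""
    cands = [(_normalize(v), y) for v, y in coarse]
    total, count = 0.0, 0
    for v, y in fine:
        z = _normalize(v)
        # Cosine similarity scaled by the temperature.
        sims = [sum(a * b for a, b in zip(z, c)) / tau for c, _ in cands]
        pos = [s for s, (_, yc) in zip(sims, cands) if yc == y]
        if not pos:
            continue
        m = max(sims)  # log-sum-exp with max subtraction for numerical stability
        log_denom = m + math.log(sum(math.exp(s - m) for s in sims))
        total += -sum(s - log_denom for s in pos) / len(pos)
        count += 1
    return total / count if count else 0.0
```

The loss is small when each fine-scale embedding lies close to same-class coarse-scale embeddings and far from other classes. This pressure encourages features that agree across scales.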

2.3.5. Efficient Channel Attention Module
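ECA-Net (Wang et al., in the reference list) computes channel attention in three steps: global average pooling, a 1-D convolution across neighboring channels, and a sigmoid gate. The kernel size k is chosen adaptively from the channel count C with γ = 2 and b = 1. A plain-Python sketch of that published mechanism follows. The kernel weights are passed in rather than learned, the function names are illustrative, and no particular placement of the module inside this network is assumed.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # C x H x W


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive kernel size: t = int(|(log2 C + b) / gamma|), bumped to the next
    odd number if even (as in the ECA-Net reference code)."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca(feature: FeatureMap, weights: List[float]) -> FeatureMap:
    """Apply efficient channel attention with a given length-k kernel (no bias)."""
    c, k = len(feature), len(weights)
    pad = k // 2
    # 1. Global average pooling: one descriptor per channel.
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature]
    gates = []
    for i in range(c):
        # 2. 1-D convolution over neighboring channels; zero padding keeps length C.
        s = sum(weights[j] * pooled[i + j - pad]
                for j in range(k) if 0 <= i + j - pad < c)
        gates.append(1.0 / (1.0 + math.exp(-s)))  # 3. sigmoid gate
    # 4. Rescale every value in each channel by that channel's gate.
    return [[[x * gates[i] for x in row] for row in feature[i]] for i in range(c)]


if __name__ == "__main__":
    for ch in (256, 512, 1024, 2048):
        print(ch, eca_kernel_size(ch))
```

Unlike a squeeze-and-excitation bottleneck, ECA uses no dimensionality reduction. It adds only k weights per module, which keeps the attention lightweight.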

2.4. Environment Setup

2.4.1. Experimental Environment and Parameters
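The hyperparameters listed in the parameter table can be gathered into one configuration object, which keeps them in a single place for a training script. Only the table's values are encoded. The class and field names are hypothetical, and the optimizer and learning-rate schedule are omitted because they are not stated.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical container for the reported training hyperparameters."""
    image_size: Tuple[int, int] = (256, 256)  # input size in pixels
    batch_size: int = 8
    learning_rate: float = 1e-5
    max_epochs: int = 1000
    weight_decay: float = 5e-5  # 0.00005 in the parameter table


if __name__ == "__main__":
    print(TrainConfig())
```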

2.4.2. Evaluation Metrics
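The sketch below assumes the standard definitions. Per-class IoU is TP/(TP + FP + FN), per-class F1 is 2TP/(2TP + FP + FN), and mIoU averages IoU over classes. Both metrics are computed from a confusion matrix over flattened label maps. Averaging F1 over classes, rather than reporting it for the maize class only, is an assumption, as are the function names.

```python
from typing import List, Sequence, Tuple


def confusion_matrix(pred: Sequence[int], target: Sequence[int], n_classes: int) -> List[List[int]]:
    """cm[t][p] counts pixels with ground truth t predicted as p."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for p, t in zip(pred, target):
        cm[t][p] += 1
    return cm


def miou_and_mean_f1(pred: Sequence[int], target: Sequence[int], n_classes: int) -> Tuple[float, float]:
    cm = confusion_matrix(pred, target, n_classes)
    ious, f1s = [], []
    for k in range(n_classes):
        tp = cm[k][k]
        fp = sum(cm[t][k] for t in range(n_classes)) - tp  # predicted k, truly other
        fn = sum(cm[k]) - tp                               # truly k, predicted other
        if tp + fp + fn == 0:
            continue  # class absent from both prediction and ground truth
        ious.append(tp / (tp + fp + fn))
        f1s.append(2 * tp / (2 * tp + fp + fn))
    if not ious:
        return 0.0, 0.0
    return sum(ious) / len(ious), sum(f1s) / len(f1s)
```

For predictions [0, 1, 1, 0] against ground truth [0, 1, 0, 0] with two classes, the sketch gives mIoU = 7/12 ≈ 0.583 and mean F1 = 11/15 ≈ 0.733.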

3. Results

3.1. Ablation Experiment

3.2. Self-Generated Label Quality Assessment

3.3. Comparison Among Different Segmentation Models

3.3.1. Quantitative Comparison

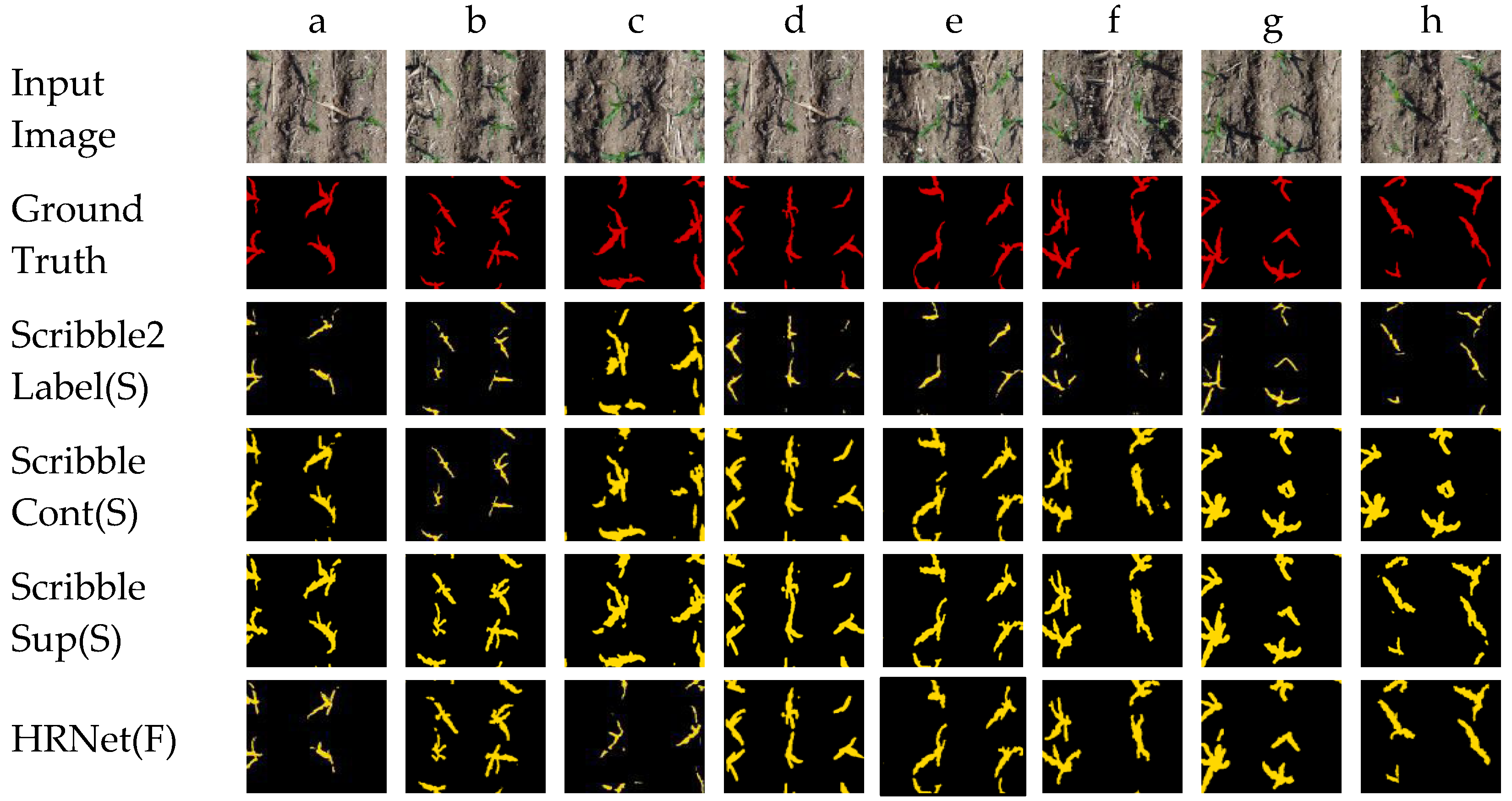

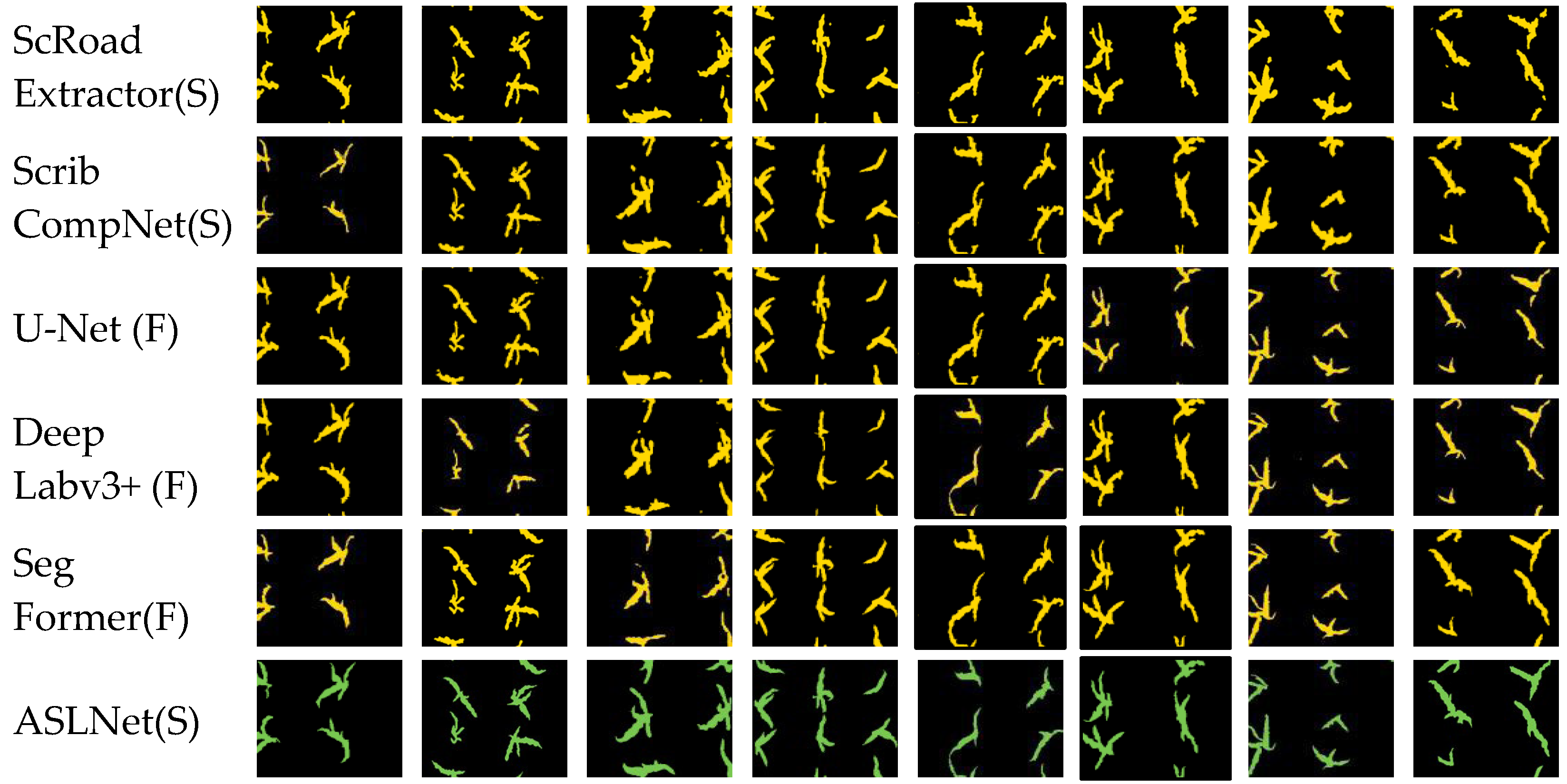

3.3.2. Qualitative Comparison

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ninomiya, S. High-throughput field crop phenotyping: Current status and challenges. Breed. Sci. 2022, 72, 3–18.

- Jia, F.; Tao, Z.; Wang, F. Wooden pallet image segmentation based on Otsu and marker watershed. Proc. J. Phys. Conf. Ser. 2021, 1976, 012005.

- Zheng, X.; Lei, Q.; Yao, R.; Gong, Y.; Yin, Q. Image segmentation based on adaptive K-means algorithm. J. Image Video Proc. 2018, 68.

- Tongbram, S.; Shimray, B.A.; Singh, L.S. Segmentation of image based on k-means and modified subtractive clustering. Indones. J. Electr. Eng. Comput. Sci. 2021, 22, 1396–1403.

- Zhang, Z.; Zhu, Q.; Xie, Y. A novel image matting approach based on naive Bayes classifier. In Intelligent Computing Technology, Proceedings of the 8th International Conference, ICIC 2012, Huangshan, China, 25–29 July 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 433–441.

- Yu, H.; Song, J.; Chen, C.; Heidari, A.A.; Liu, J.; Chen, H.; Mafarja, M. Image segmentation of leaf spot diseases on maize using multi-stage Cauchy-enabled grey wolf algorithm. Eng. Appl. Artif. Intell. 2022, 109, 104653.

- Hariharan, B.; Arbeláez, P.; Girshick, R.; Malik, J. Simultaneous detection and segmentation. In Computer Vision–ECCV 2014, Proceedings of the 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Springer International Publishing: Berlin/Heidelberg, Germany, 2014; Part VII, pp. 297–312.

- Zhang, J.; Gong, J.; Zhang, Y.; Mostafa, K.; Yuan, G. Weed identification in maize fields based on improved Swin-Unet. Agronomy 2023, 13, 1846.

- Hong, S.; Jinbo, Q.; Song, L. Recognition of the maize canopy at the jointing stage based on deep learning. Trans. Chin. Soc. Agric. Eng. (Trans. CSAE) 2021, 37, 53–61.

- Zenkl, R.; Timofte, R.; Kirchgessner, N.; Roth, L.; Hund, A.; Van Gool, L.; Aasen, H. Outdoor plant segmentation with deep learning for high-throughput field phenotyping on a diverse wheat dataset. Front. Plant Sci. 2022, 12, 774068.

- Turgut, K.; Dutagaci, H.; Rousseau, D. RoseSegNet: An attention-based deep learning architecture for organ segmentation of plants. Biosyst. Eng. 2022, 221, 138–153.

- Fan, X.; Zhou, R.; Tjahjadi, T.; Das Choudhury, S.; Ye, Q. A segmentation-guided deep learning framework for leaf counting. Front. Plant Sci. 2022, 13, 844522.

- Narisetti, N.; Henke, M.; Neumann, K.; Stolzenburg, F.; Altmann, T.; Gladilin, E. Deep learning based greenhouse image segmentation and shoot phenotyping (DeepShoot). Front. Plant Sci. 2022, 13, 906410.

- Ahn, J.; Kwak, S. Learning pixel-level semantic affinity with image-level supervision for weakly supervised semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4981–4990.

- Ahn, J.; Cho, S.; Kwak, S. Weakly supervised learning of instance segmentation with inter-pixel relations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2209–2218.

- Lee, J.; Kim, E.; Lee, S.; Lee, J.; Yoon, S. FickleNet: Weakly and semi-supervised semantic image segmentation using stochastic inference. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5267–5276.

- Dai, J.; He, K.; Sun, J. BoxSup: Exploiting bounding boxes to supervise convolutional networks for semantic segmentation. In Proceedings of the IEEE International Conference on Computer Vision 2015, Santiago, Chile, 7–13 December 2015; pp. 1635–1643.

- Song, C.; Huang, Y.; Ouyang, W.; Wang, L. Box-driven class-wise region masking and filling rate guided loss for weakly supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3136–3145.

- Lan, S.; Yu, Z.; Choy, C.; Radhakrishnan, S.; Liu, G.; Zhu, Y.; Anandkumar, A. DiscoBox: Weakly supervised instance segmentation and semantic correspondence from box supervision. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 3406–3416.

- Zhang, K.; Zhuang, X. CycleMix: A holistic strategy for medical image segmentation from scribble supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11656–11665.

- Pan, Z.; Sun, H.; Jiang, P.; Li, G.; Tu, C.; Ling, H. CC4S: Encouraging certainty and consistency in scribble-supervised semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 8918–8935.

- Li, Z.; Zheng, Y.; Shan, D.; Yang, S.; Li, Q.; Wang, B.; Shen, D. ScribFormer: Transformer makes CNN work better for scribble-based medical image segmentation. IEEE Trans. Med. Imaging 2024, 43, 2254–2265.

- Zhao, L.; Zhao, Y.; Liu, T.; Deng, H. A weakly supervised semantic segmentation model of maize seedlings and weed images based on scrawl labels. Sensors 2023, 23, 9846.

- Xia, X.; Kulis, B. W-Net: A deep model for fully unsupervised image segmentation. arXiv 2017, arXiv:1711.08506.

- Chaturvedi, K.; Braytee, A.; Li, J.; Prasad, M. SS-CPGAN: Self-supervised cut-and-pasting generative adversarial network for object segmentation. Sensors 2023, 23, 3649.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; Part III, Volume 9351, pp. 234–241.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Zhang, Z.; Liu, Q.; Wang, Y. Road extraction by deep residual U-Net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 11534–11542.

- Boettcher, W.; Hoyer, L.; Unal, O.; Lenssen, J.E.; Schiele, B. Scribbles for All: Benchmarking Scribble Supervised Segmentation Across Datasets. arXiv 2024, arXiv:2408.12489.

- Lin, D.; Dai, J.; Jia, J.; He, K.; Sun, J. ScribbleSup: Scribble-supervised convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3159–3167.

- Lee, H.; Jeong, W.K. Scribble2Label: Scribble-supervised cell segmentation via self-generating pseudo-labels with consistency. In Proceedings of the 23rd International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Lima, Peru, 4–8 October 2020; Part I, Volume 12261, pp. 14–23.

- Wei, Y.; Ji, S. Scribble-based weakly supervised deep learning for road surface extraction from remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–12.

- Oh, H.J.; Lee, K.; Jeong, W.K. Scribble-supervised cell segmentation using multiscale contrastive regularization. In Proceedings of the 2022 IEEE 19th International Symposium on Biomedical Imaging (ISBI), Kolkata, India, 28–31 March 2022; pp. 1–5.

- Zhang, C.; Li, K.; Yin, Z.; Qin, R. Weakly-supervised structural component segmentation via scribble annotations. Comput.-Aided Civ. Inf. 2025, 40, 561–578.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Sun, K.; Xiao, B.; Liu, D.; Wang, J.; Wang, X. Deep High-Resolution Representation Learning for Human Pose Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 5693–5703.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

- Chen, Z.; Sun, Q. Weakly-supervised Semantic Segmentation with Image-level Labels: From Traditional Models to Foundation Models. ACM Comput. Surv. 2025, 57, 111.

- Schnell, J.; Wang, J.; Qi, L.; Hu, V.T.; Tang, M. ScribbleGen: Generative Data Augmentation Improves Scribble-Supervised Semantic Segmentation. arXiv 2023, arXiv:2311.17121.

- Wang, Y.; Yang, L.; Liu, X.; Yan, P. An Improved Semantic Segmentation Algorithm for High-Resolution Remote Sensing Images Based on DeepLabv3+. Sci. Rep. 2024, 14, 9716.

- Zhang, L.; Lu, J.; Zheng, S.; Zhao, X.; Zhu, X.; Fu, Y.; Wang, L.; Huang, G.; Torr, P.H.S. Vision Transformers: From Semantic Segmentation to Dense Prediction. Int. J. Comput. Vis. 2024, 132, 6142–6162.

| Parameter Name | Parameter Value |
|---|---|
| Image size (pixels) | 256 × 256 |
| Batch size | 8 |
| Learning rate | 1 × 10⁻⁵ |
| Max training epochs | 1000 |
| Weight decay | 0.00005 |

| Index | Components (ResNet50 / ResNet101 / CCR / ECA) | mIoU (%) | F1-Score (%) |
|---|---|---|---|
| (a) | √ | 59.58 | 76.36 |
| (b) | √ | 46.72 | 67.51 |
| (c) | √ √ | 63.14 | 77.34 |
| (d) | √ √ | 72.30 | 83.47 |
| (e) | √ √ | 40.34 | 60.67 |
| (f) | √ √ | 46.72 | 67.51 |
| (g) | √ √ √ | 74.86 | 85.76 |

| Method | mIoU (%) | F1-Score (%) |
|---|---|---|
| Scribble4All | 71.71 | 80.69 |
| 1 | 74.86 | 85.76 |
| 0.5 | 68.36 | 77.59 |
| 0.3 | 66.80 | 76.03 |
| 0.1 | 63.54 | 68.81 |

| Type | Method | Backbone | mIoU (%) | F1-Score (%) |
|---|---|---|---|---|
| F | U-Net | ResNet34 | 72.39 | 83.90 |
| F | U-Net | ResNet50 | 74.32 | 85.21 |
| F | DeepLabv3+ | MobileNetV2 | 73.75 | 82.79 |
| F | SegFormer | SegFormer-B0 | 71.35 | 80.57 |
| F | SegFormer | SegFormer-B1 | 74.89 | 83.72 |
| F | SegFormer | SegFormer-B2 | 74.81 | 83.62 |
| F | SegFormer | SegFormer-B3 | 72.56 | 81.93 |
| F | SegFormer | SegFormer-B4 | 72.71 | 81.77 |
| F | HRNet | HRNetV2-W18 | 68.26 | 77.55 |
| S | ScribbleSup | Xception | 67.80 | 81.74 |
| S | Scribble2Label | ResNet50 | 58 | 76.36 |
| S | ScribbleCont | ResNet50 | 67.50 | 81.58 |
| S | ScRoadExtractor | ResNet34 | 69.32 | 97 |
| S | ScribCompNet | HRNetV2-W18 | 70.45 | 84.95 |
| S | ASLNet | ResNet50 | 74.86 | 85.76 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Z.; Liu, X.; Deng, H.; Zhou, Y.; Miao, T. Automatic Scribble Annotations Based Semantic Segmentation Model for Seedling-Stage Maize Images. Agronomy 2025, 15, 1972. https://doi.org/10.3390/agronomy15081972

Li Z, Liu X, Deng H, Zhou Y, Miao T. Automatic Scribble Annotations Based Semantic Segmentation Model for Seedling-Stage Maize Images. Agronomy. 2025; 15(8):1972. https://doi.org/10.3390/agronomy15081972

Chicago/Turabian Style: Li, Zhaoyang, Xin Liu, Hanbing Deng, Yuncheng Zhou, and Teng Miao. 2025. "Automatic Scribble Annotations Based Semantic Segmentation Model for Seedling-Stage Maize Images" Agronomy 15, no. 8: 1972. https://doi.org/10.3390/agronomy15081972

APA Style: Li, Z., Liu, X., Deng, H., Zhou, Y., & Miao, T. (2025). Automatic Scribble Annotations Based Semantic Segmentation Model for Seedling-Stage Maize Images. Agronomy, 15(8), 1972. https://doi.org/10.3390/agronomy15081972