1. Introduction

Tobacco holds significant economic value. Although its harmful effects on human health are widely recognized, it remains a major cash crop globally, especially in southwestern China, where it is a vital source of income for many farmers. Ensuring the healthy development of tobacco cultivation is thus essential for improving farmers’ livelihoods and promoting regional agricultural economies. However, tobacco plants are frequently affected by various diseases, which severely impact yield and quality. Traditional disease diagnosis methods rely heavily on human expertise, which is inefficient and often leads to overly generalized treatment strategies. For instance, farmers tend to spray pesticides over large areas, resulting in excessive pesticide use and serious ecological pollution. In contrast, computer vision techniques enable faster and more accurate identification of tobacco leaf diseases, providing scientific support for disease prevention and control decisions [1].

In recent years, image classification techniques have been widely applied to tobacco leaf disease recognition with notable progress. For example, Lin et al. [2] proposed a Meta-Baseline-based few-shot learning (FSL) method, which enhances feature representation through cascaded multi-scale fusion and channel attention mechanisms, alleviating data scarcity issues. The method achieved 61.24% and 77.43% accuracy on single-plant 1-shot and 5-shot tasks, and 82.52% and 92.83% on multi-plant tasks. Subsequently, Lin et al. [3] introduced instance embedding and task adaptation techniques, achieving 66.04% 5-way 1-shot accuracy on the PlantVillage dataset and 45.5% and 56.5% accuracy under two TLA dataset settings. Additionally, frequency-domain features have been integrated into FSL frameworks to improve generalization and feature expression under limited data conditions [4].

Deep learning models have also shown excellent performance in crop disease diagnosis. Mohanty et al. [5] trained CNNs on over 54,000 leaf images captured by smartphones, achieving 99.35% accuracy and demonstrating strong scalability and device independence. Ferentinos et al. [6] trained CNNs on 87,848 images covering 58 plant disease classes, reaching 99.53% accuracy. Too et al. evaluated various CNN architectures and found DenseNet to perform best with 99.75% accuracy due to its parameter efficiency and resistance to overfitting. Sun et al. [7] used ResNet-101 to grade aphid damage on tobacco leaves, significantly improving accuracy with data augmentation and a three-tier classification strategy. Sladojevic et al. [8] proposed a deep CNN achieving 96.3% average accuracy in single-class plant disease classification. Bharali et al. [9] demonstrated that a lightweight model trained on only 1400 images can still achieve 96.6% accuracy using Keras and TensorFlow. In UAV-based recognition, Thimmegowda et al. [10] combined HOF, MBH, and optimized HOG features with PCA-based selection, achieving 95% accuracy and 92% sensitivity.

For object detection, Lin et al. [4] proposed an improved YOLOX-Tiny network with Hierarchical Mixed-scale Units (HMUs) in the neck module, enhancing cross-channel interaction and achieving 80.56% accuracy for tobacco brown spot under natural conditions. Zhang et al. [11] used deep residual networks and k-means-optimized anchor boxes to boost tomato disease detection accuracy by 2.71%. Sadi Uysal et al. [12] applied ResNet-18 and Class Activation Maps (CAMs) for lesion localization, performing well in small-object detection. Iwano et al. [13] built a Hierarchical Object Detection and Recognition Framework (HODRF), effectively reducing false positives and improving small-lesion recognition. Other works include a YOLOv5 variant with BiFPN and Shuffle Attention for pine wilt detection in UAV images [14], and a Mask R-CNN variant with COT for accurate segmentation of overlapping tobacco leaves [15].

While image classification determines disease presence, it cannot localize lesions. Object detection offers bounding boxes but fails to delineate lesion contours accurately, especially for multi-scale small objects due to downsampling errors. Despite the impressive performance of classification and detection models in plant disease diagnosis, their applicability to small lesion segmentation tasks remains limited. Classification models, by design, provide only global predictions without spatial localization, making them unsuitable for scenarios where precise lesion boundaries are required, such as disease monitoring or progression analysis. Object detection methods, while more spatially aware, often suffer from significant performance degradation when applied to small targets due to several factors. First, the multi-stage downsampling in convolutional backbones reduces the spatial resolution of feature maps, leading to the loss of fine-grained lesion details. Second, the use of fixed-size anchor boxes and IoU-based assignment strategies makes it difficult to generate high-quality proposals for tiny, irregularly shaped lesions. Third, when lesions are densely distributed or partially occluded, which is common in tobacco leaves, detection models may misidentify overlapping lesions as a single entity or miss them completely.

Furthermore, many detection frameworks are optimized for instance-level recognition rather than pixel-level delineation, limiting their capacity for accurately segmenting small, scattered disease regions. In contrast, semantic segmentation assigns labels at the pixel level, achieving precise lesion localization, which is particularly suitable when multiple small diseased areas appear on a single leaf. These limitations motivate the adoption of semantic segmentation models with refined attention mechanisms that can preserve high-resolution information and focus selectively on subtle lesion features. Our proposed AFMA and DA modules aim to address precisely these challenges by enhancing feature representation at multiple scales and guiding the model’s attention to meaningful spatial and channel-wise cues.

Semantic segmentation provides fine-grained, spatially accurate information by assigning semantic labels to each pixel, making it ideal for identifying both disease type and lesion distribution. For instance, Chen et al. [16] proposed MD-UNet, which integrates multi-scale convolution and dense residual dilated convolution modules, achieving effective segmentation of tobacco and other plant diseases. Zhang et al. [17] improved Mask R-CNN with feature fusion and hybrid attention to handle occlusions and blurred edges, boosting mIoU by 11.10%. Ou et al. [18] designed a segmentation network combining CBAM and skip connections, achieving 64.99% mIoU on tobacco disease segmentation. Chen et al. [19] further incorporated attention into MD-UNet, achieving 84.93% IoU, though the model distinguishes only between healthy and unhealthy regions.

Semantic segmentation, a fundamental task in computer vision, aims to assign semantic categories to each pixel. Despite advancements in deep learning, small-object segmentation remains challenging, especially for tobacco leaf disease recognition in agriculture. Lesions are often irregularly shaped, have fuzzy edges, and occupy a small portion of the image, complicating detection and segmentation.

Recent architectures, such as FCN [20], SegFormer [21], Mask2Former [22], and PointRend [23], have advanced semantic segmentation. Meanwhile, baseline models like UNet [24], DeepLab [25,26,27], and HRNet [28] remain widely used due to their unique strengths. UNet, with its simple structure, detail retention, and strong small-object performance, is especially suited for plant disease segmentation.

To address the limitations of traditional architectures in small-object processing, this study explores a hybrid attention-enhanced UNet framework by integrating cross-feature attention (AFMA) and dual-branch attention (DA) modules. This design improves fine-grained feature extraction while maintaining low computational overhead, significantly enhancing small-object segmentation performance.

This study focuses on the identification of small target diseases. Small target diseases refer to lesion areas occupying less than 32 × 32 pixels of the image, with blurred contours that are easily confused with the background. They often appear as spots or small irregular patches, typically representing the early stage of disease and therefore carrying important warning significance. If not identified promptly, these lesions can spread into large-scale infections, causing severe damage. Semantic segmentation can accurately delineate disease boundaries at the pixel level, providing finer spatial information than rough localization, thereby supporting subsequent disease assessment and precise control measures. Therefore, the precise segmentation of small target diseases holds both scientific significance and practical application value.

Considering the small-object nature of tobacco leaf diseases, we propose a novel method combining AFMA and DA attention mechanisms to improve segmentation accuracy for minor lesions.

The main contributions of this work are summarized as follows:

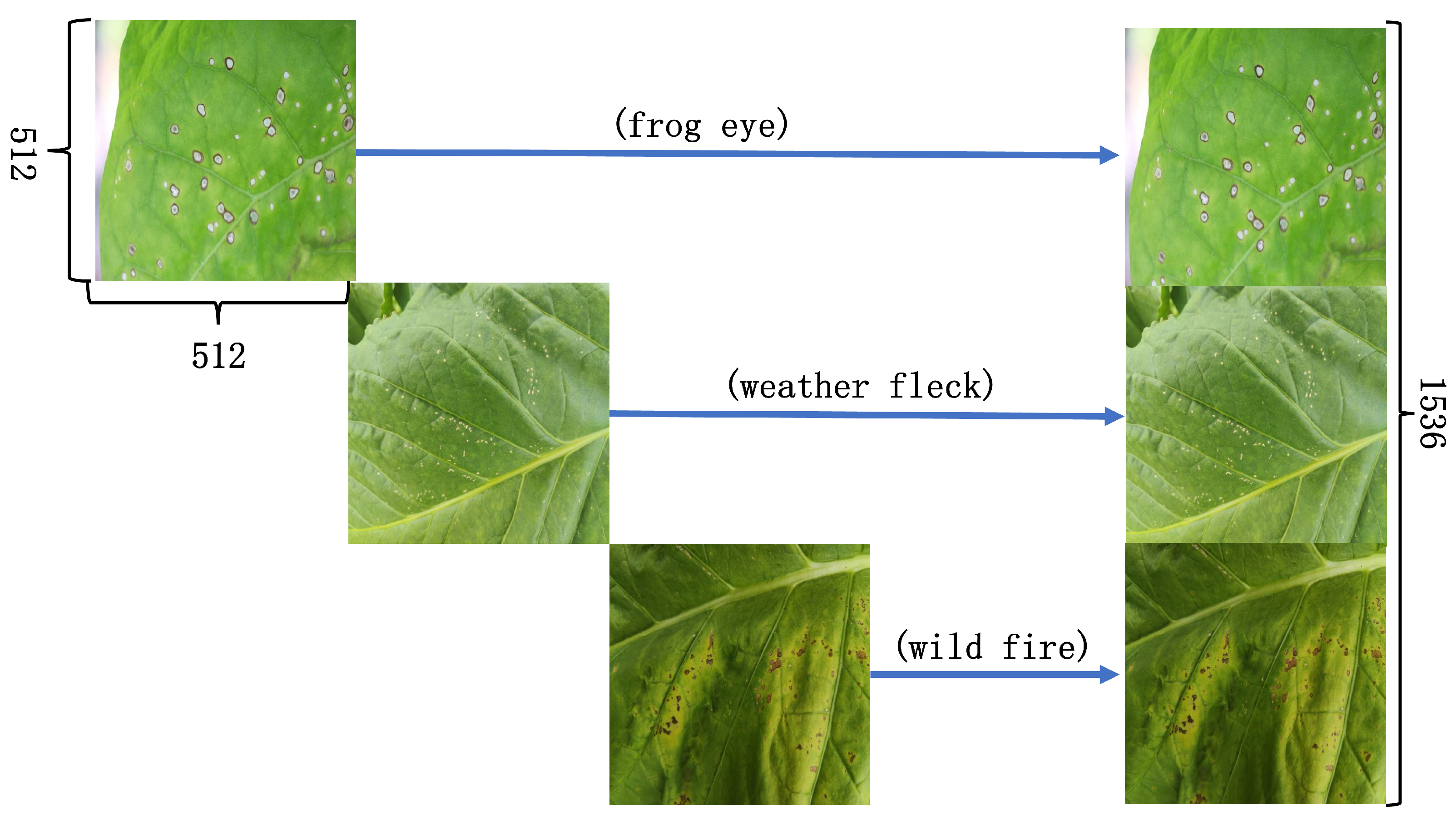

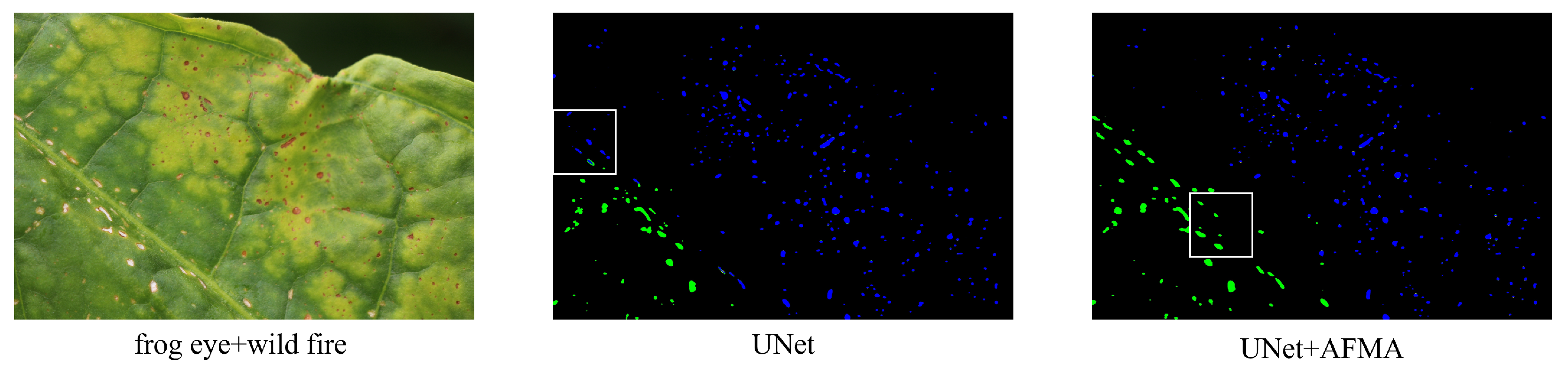

Image stitching training strategy: We introduce a data augmentation method based on image stitching by randomly combining tobacco leaf images with different diseases. This alleviates pixel-level class imbalance, simulates co-occurrence scenarios, and enhances dataset diversity and representativeness.

Dual attention mechanism design: A hierarchical feature enhancement framework is constructed by integrating two complementary attention modules. The DA module captures fine-grained local features with minimal parameters, while the AFMA module enhances cross-scale feature representation. Their synergy significantly improves minor lesion segmentation.

Lightweight attention integration: The proposed attention modules are lightweight and plug-and-play, making them easy to embed into mainstream segmentation networks such as UNet, DeepLab, and HRNet. They deliver performance gains with minimal computational cost.

2. Materials and Methods

This section describes the data sources, preprocessing steps, baseline network, attention modules, and overall network architecture, enabling the reproducibility of our work.

2.1. Dataset and Preprocessing

The dataset used in this study was collected between 2021 and 2024 from various regions in Yunnan Province, including Dali, Qujing, and Honghe. It consists of images of tobacco leaves infected by various diseases under natural field conditions. To ensure diversity and representativeness, the samples cover different growth stages and environmental conditions.

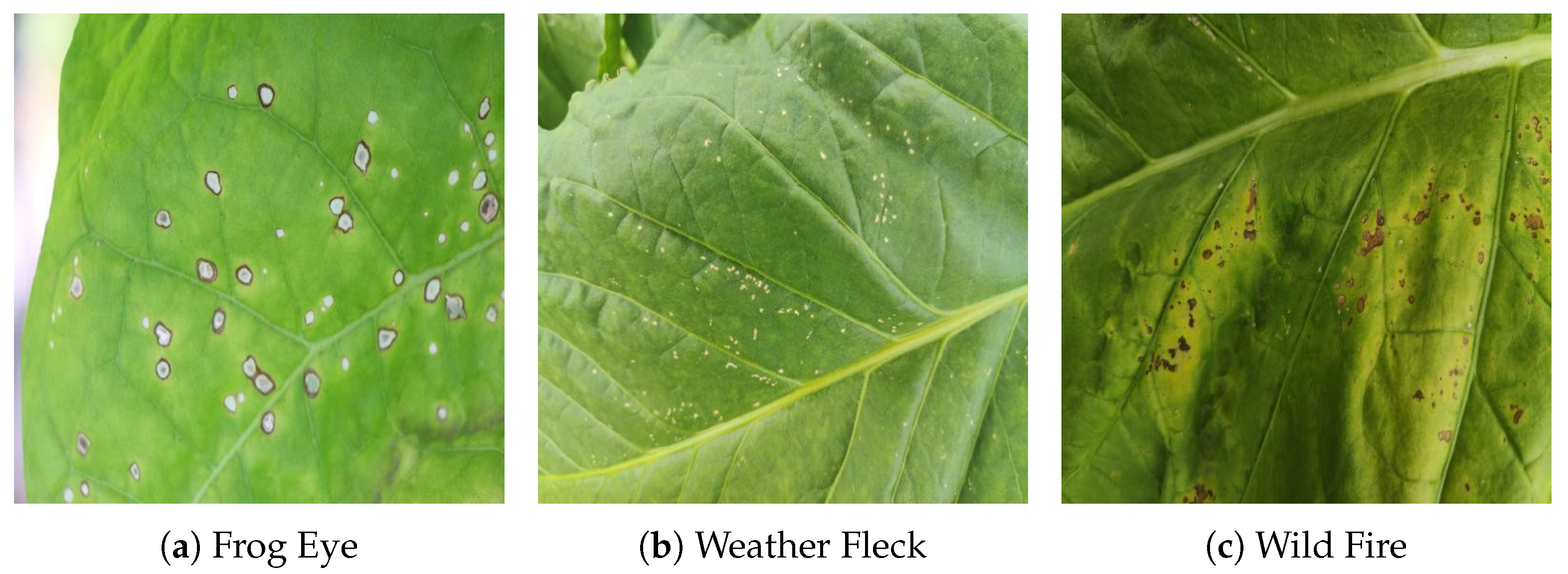

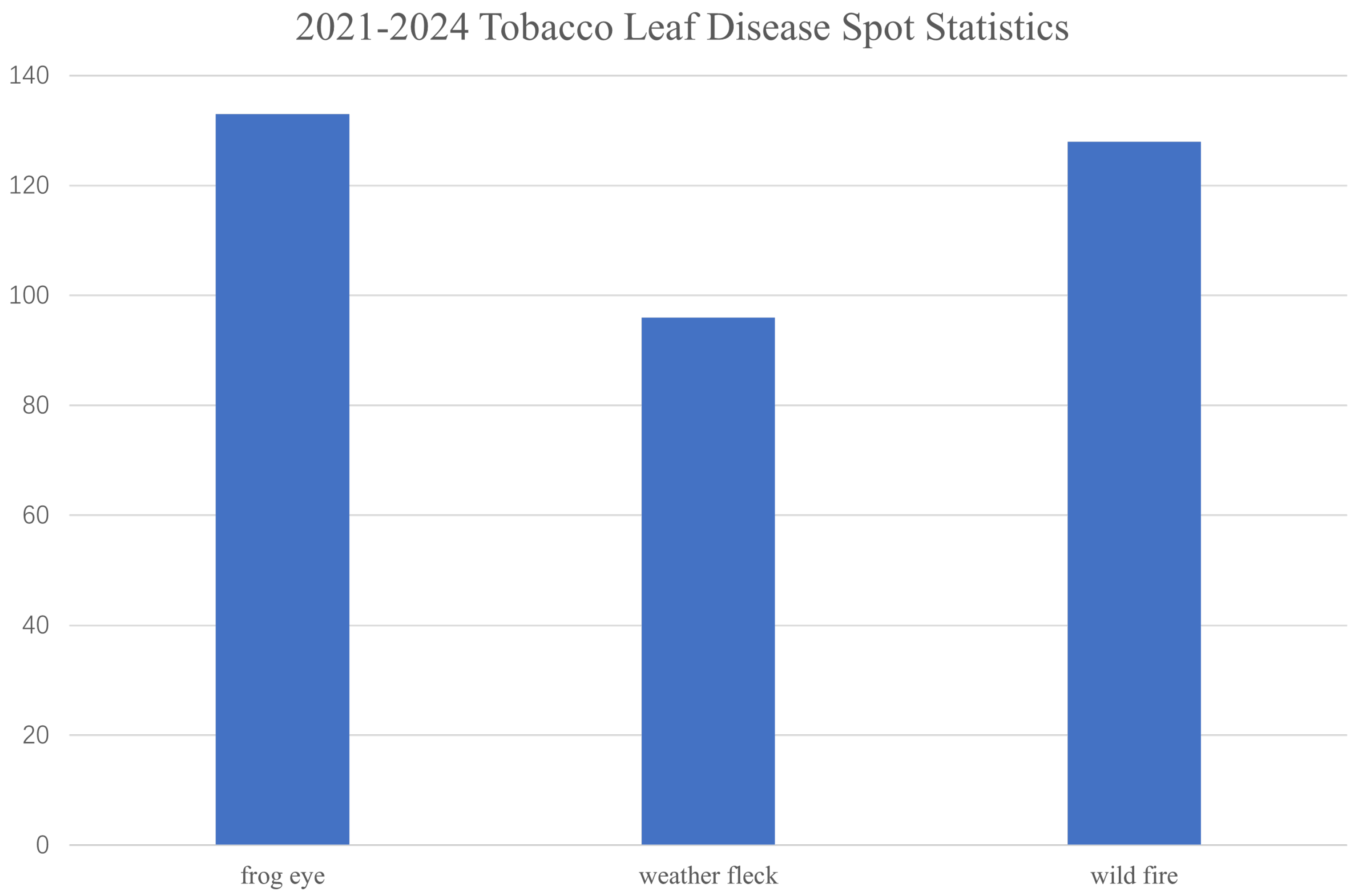

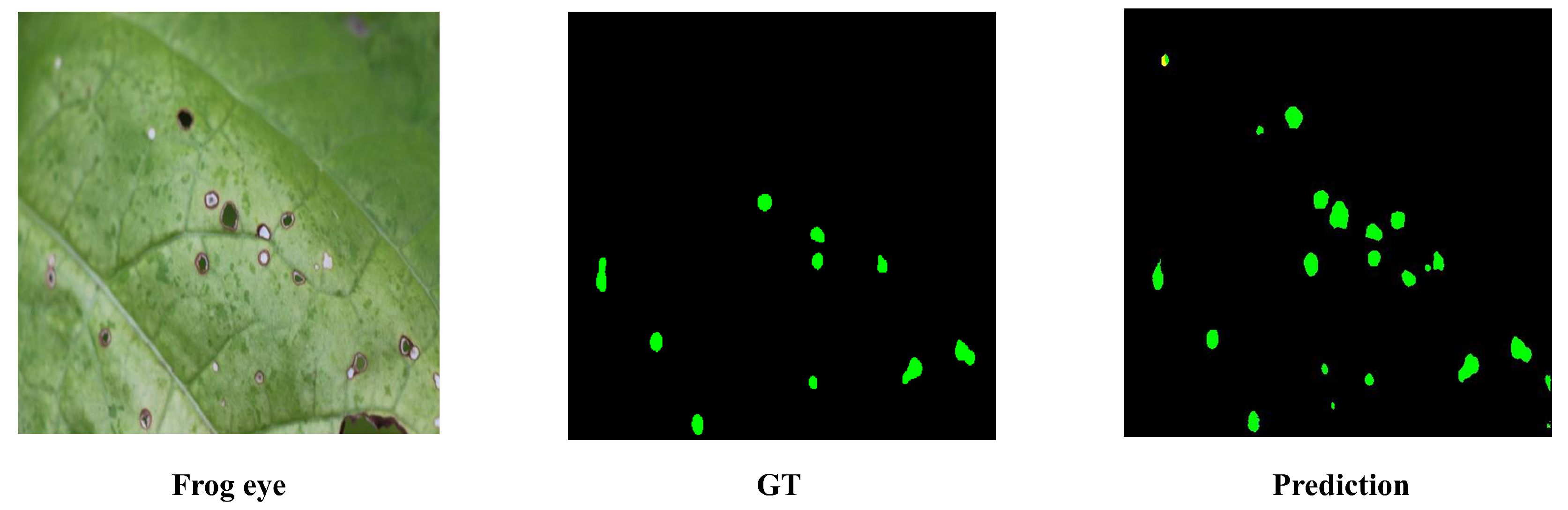

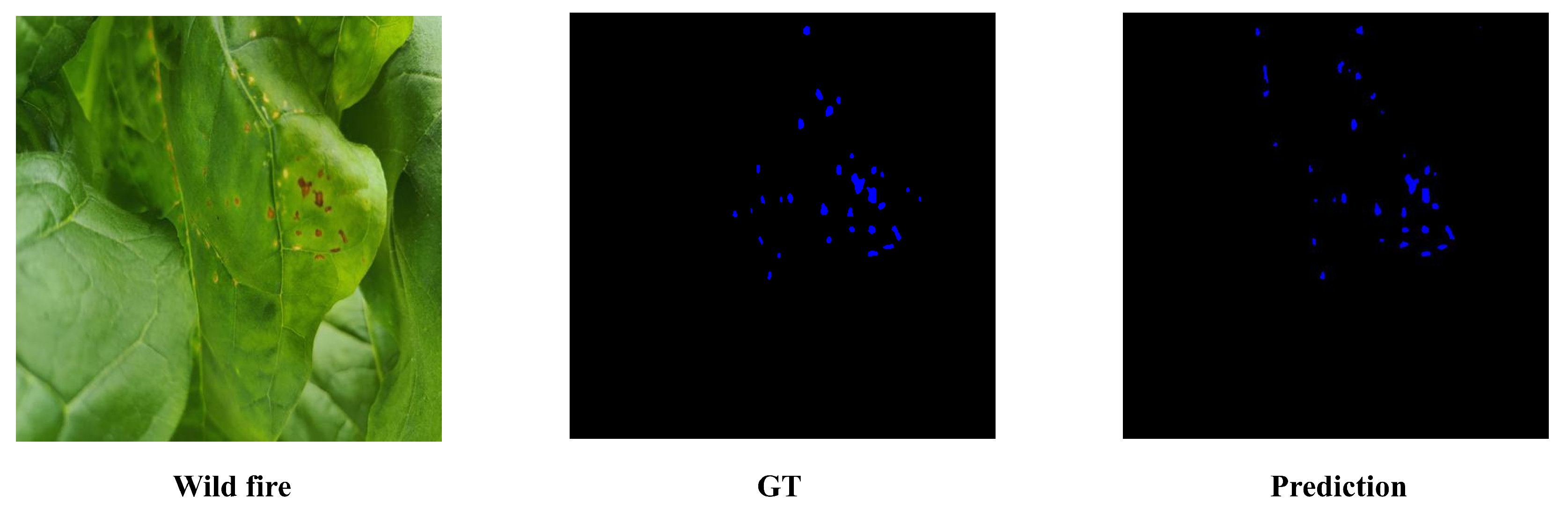

The main disease types include frog-eye leaf spot, climate spot, and wildfire disease (see Figure 1). As depicted in Figure 2, a total of 133 images of frog-eye spot disease, 96 images of climate spot disease, and 128 images of wildfire disease were collected from 2021 to 2024.

Images were captured using a Canon EOS 700D digital camera (Canon Inc., Tokyo, Japan) under natural daylight. A reflective umbrella was used to minimize the effects of strong sunlight and shadows. The camera was consistently positioned at approximately a 90-degree angle to the leaf surface. Image collection mainly took place on sunny or slightly overcast days. Images that were out of focus, had strong reflections, or contained occlusions were excluded.

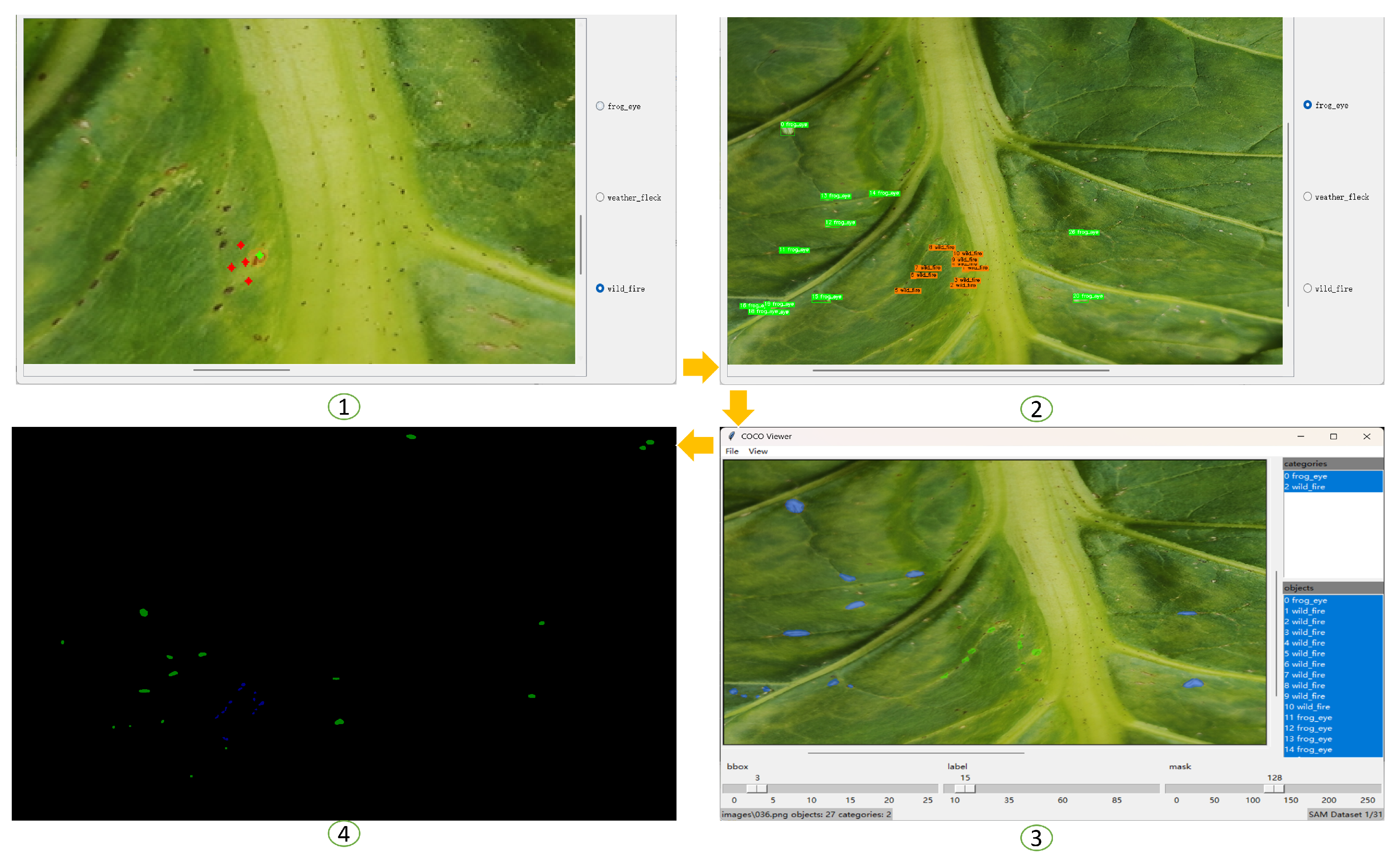

Before training, all images were resized to a standardized resolution suitable for network input (e.g., 512 × 512 pixels). Semantic annotations were assisted by the Segment Anything tool (see Figure 3) to reduce labeling subjectivity. In addition, image stitching was performed, as illustrated in Figure 4. A fixed random seed was set for all procedures to ensure reproducibility of the experiments.

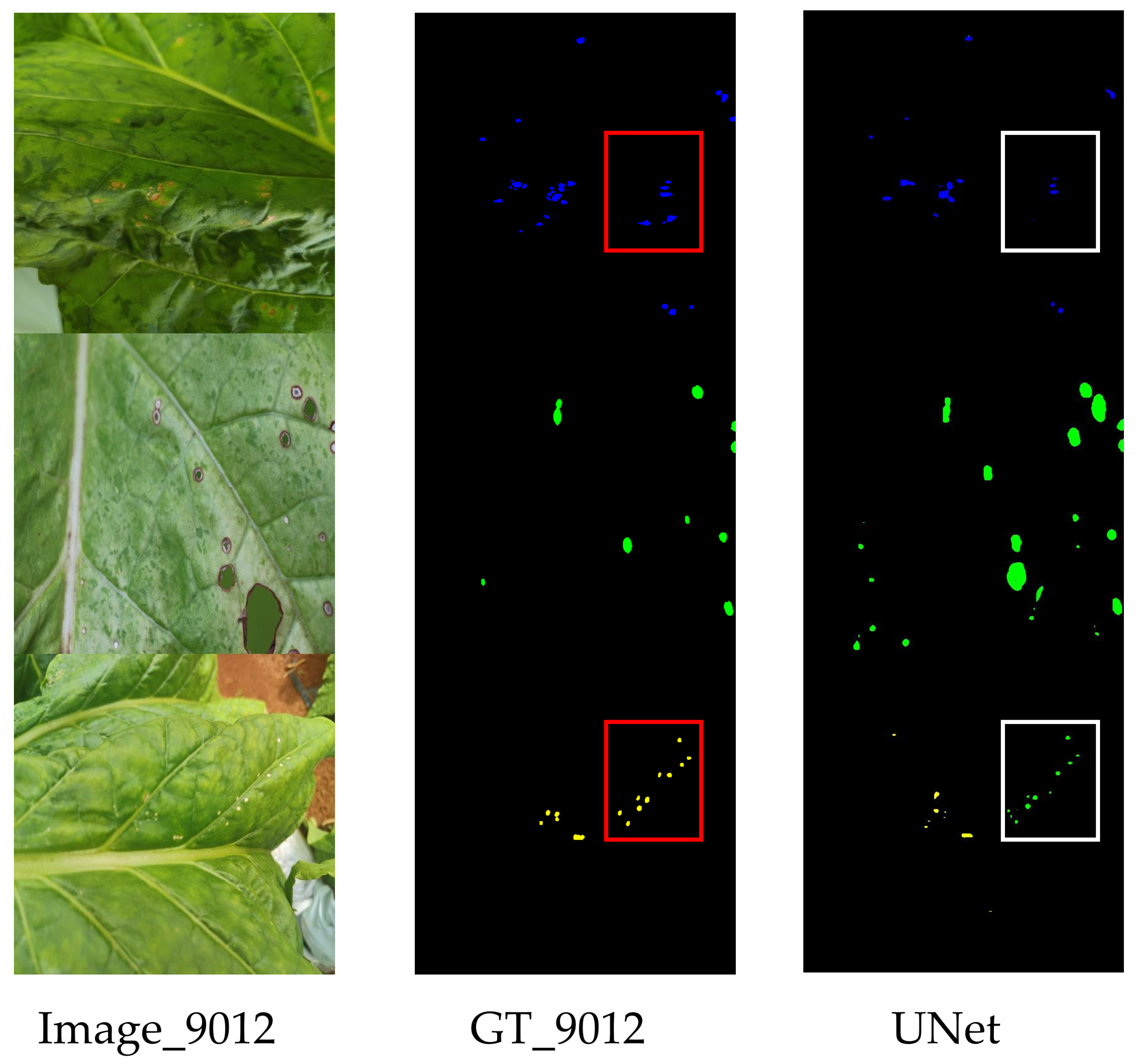

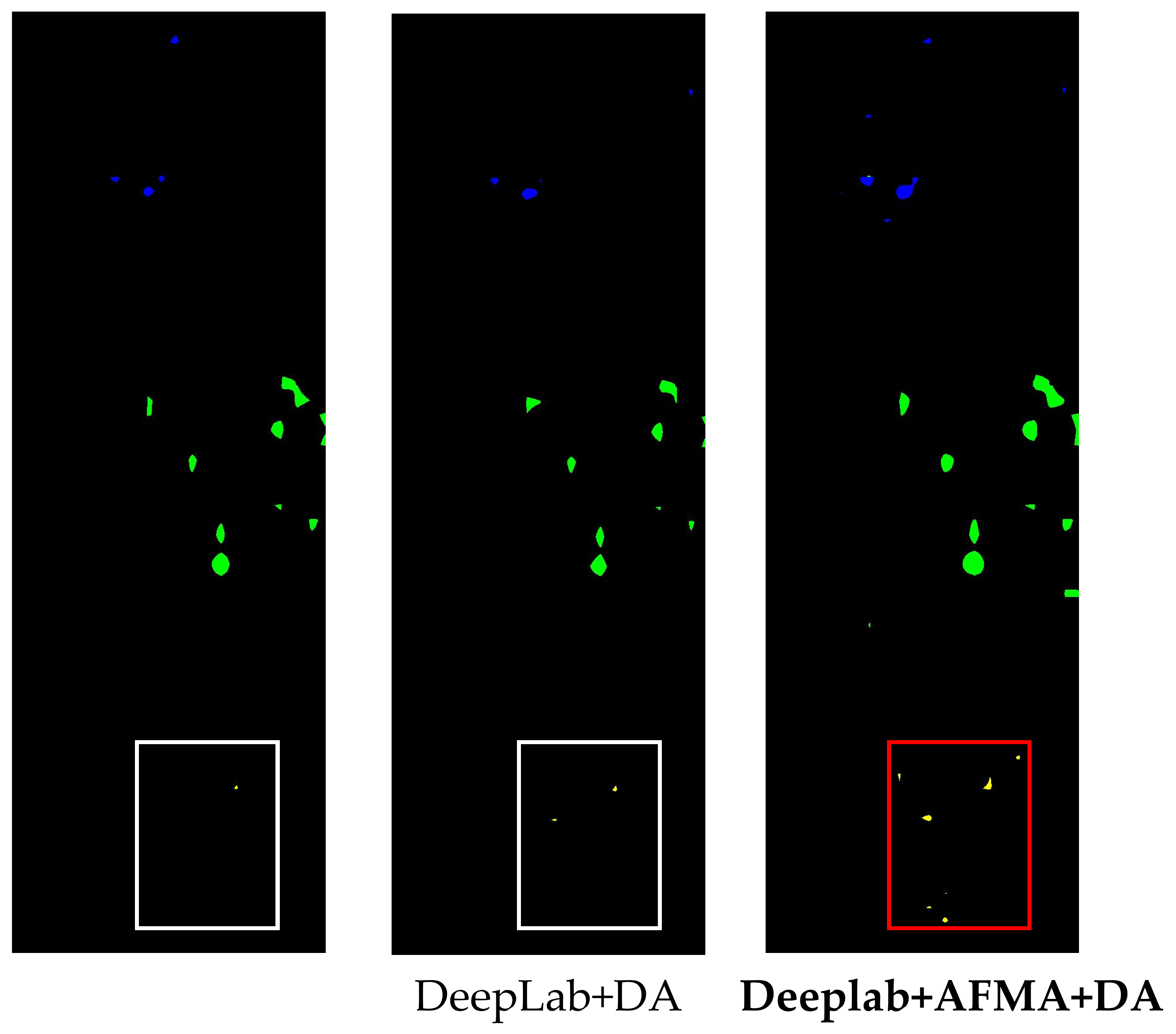

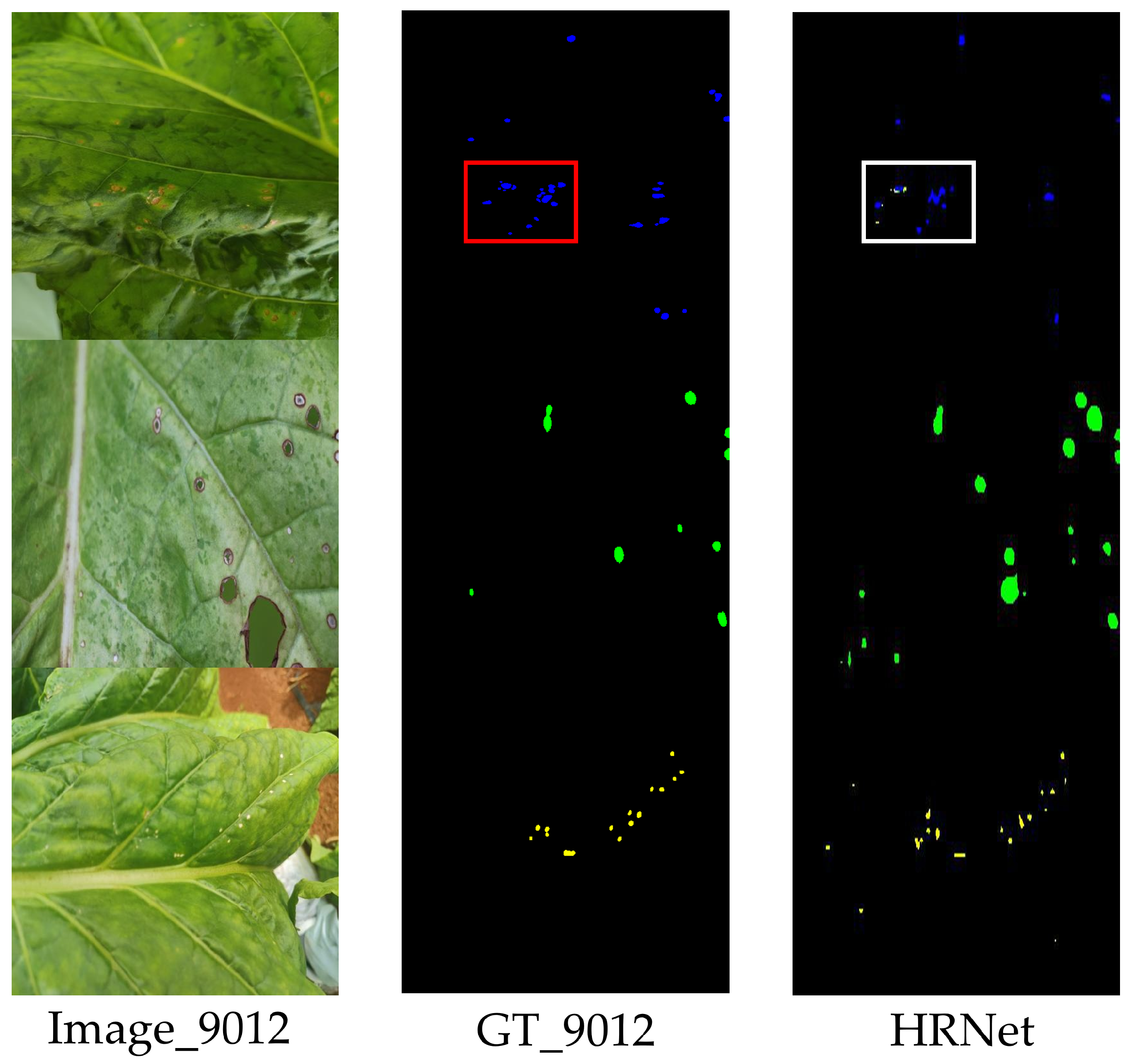

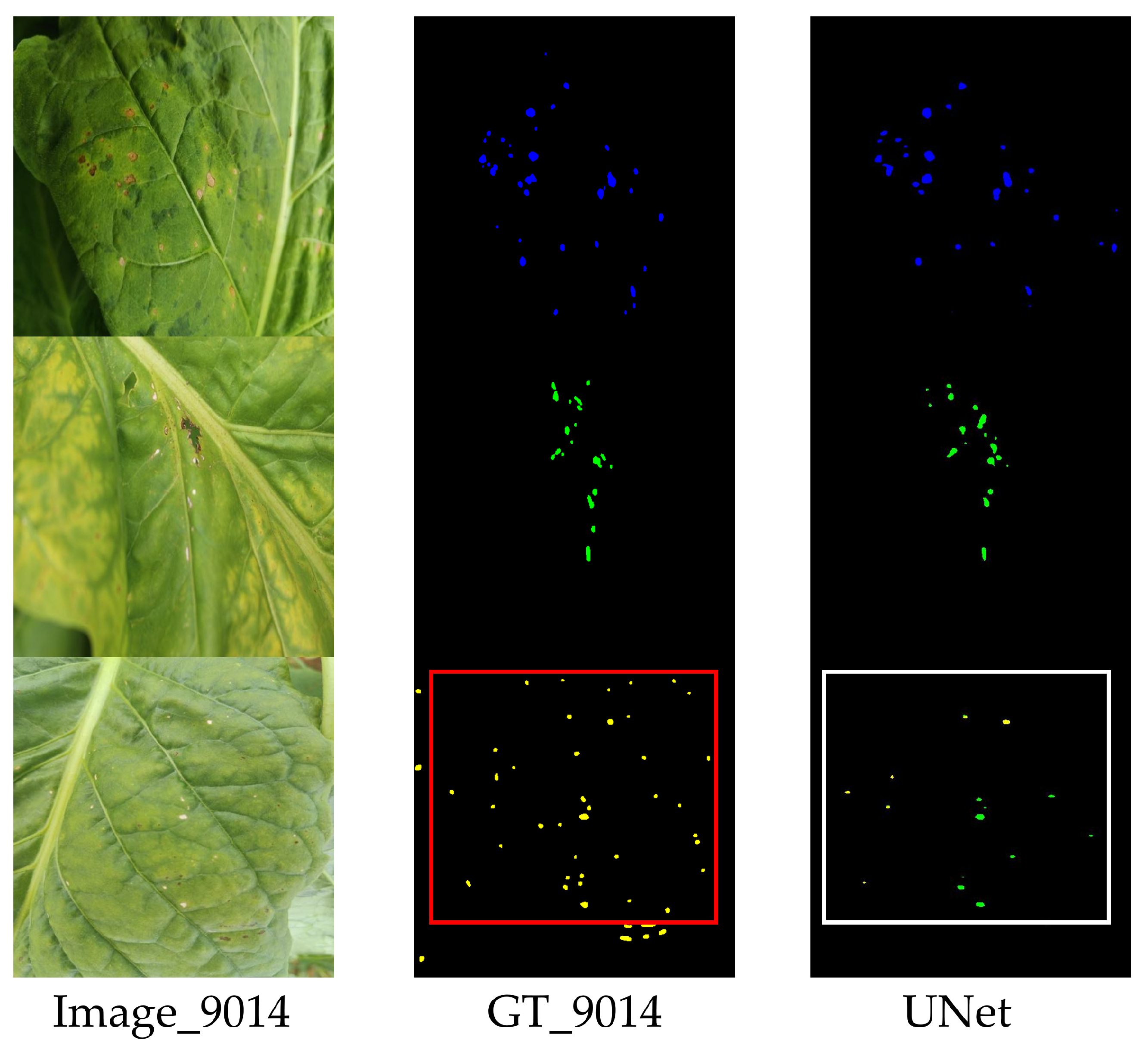

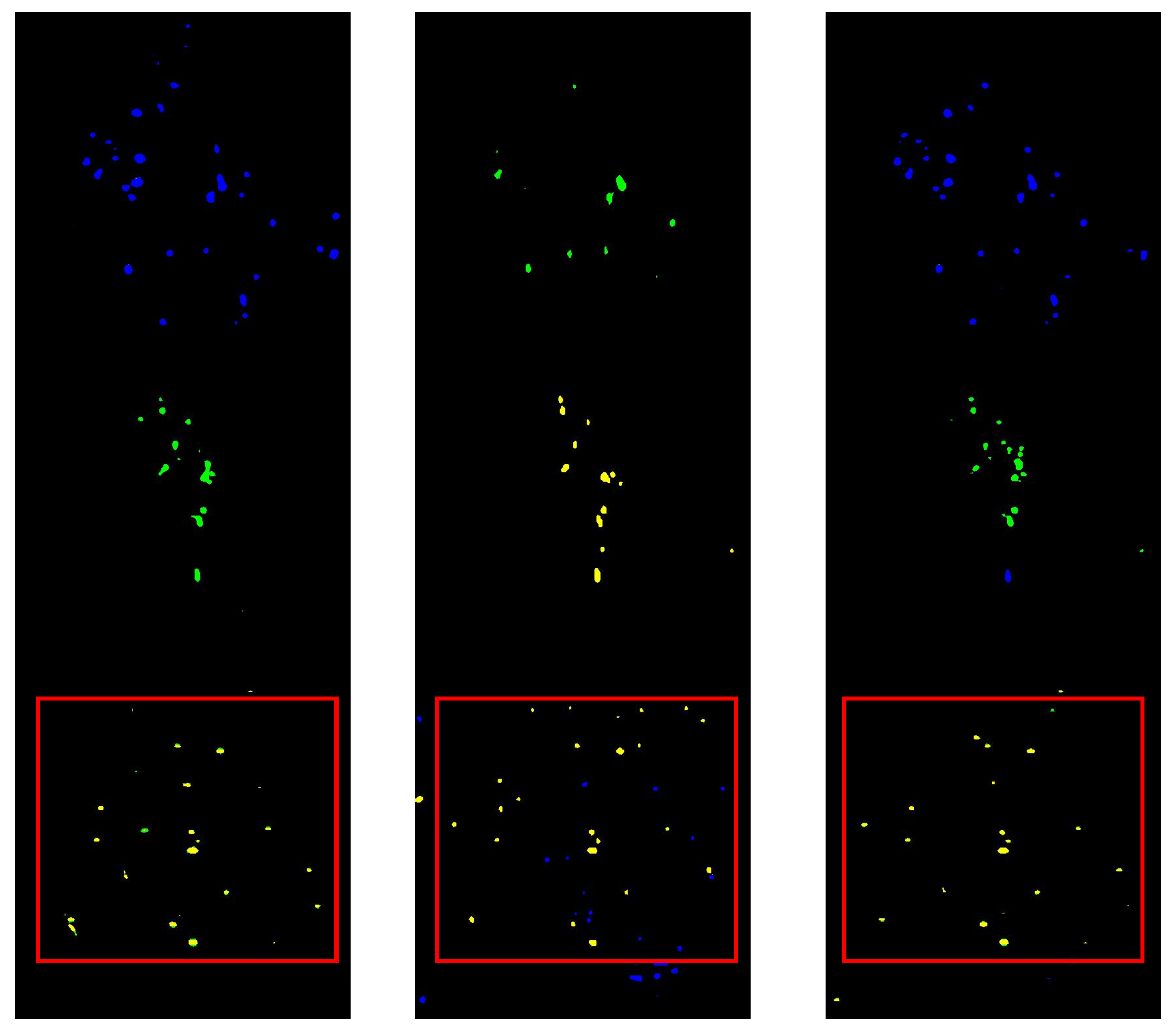

Data splicing aims to create a composite sample of size 512 × 1536 by combining three randomly selected images of different diseases (such as frog-eye spot, climate spot, and wildfire), thereby increasing data diversity, reducing the risk of overfitting, and simulating the coexistence of multiple diseases. The specific steps include the following: resizing each image to 512 × 512 pixels, optionally applying random horizontal flipping to increase variation, and then vertically stacking these three images from top to bottom. The final spliced image has dimensions of 512 pixels (width) × 1536 pixels (height). Finally, the processed dataset was split into training, validation, and test sets in a ratio of 8:1:1. This means there are 8000 samples in the training set, 1000 samples in the validation set, and 1000 samples in the test set.
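The stitching and splitting steps above can be sketched as follows. This is a minimal NumPy illustration rather than the authors' pipeline: the helper names (`resize_nearest`, `stitch_three`, `split_811`) are hypothetical, nearest-neighbour resizing stands in for whatever interpolation was actually used, and the label masks are assumed to receive the same resize and flip as the images.

```python
import numpy as np

def resize_nearest(arr, size=512):
    """Nearest-neighbour resize of an (H, W) or (H, W, C) array to size x size."""
    h, w = arr.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return arr[rows][:, cols]

def stitch_three(images, masks, rng, size=512, flip_prob=0.5):
    """Stack three image/mask pairs top to bottom (assumed helper).

    Returns an image of shape (3*size, size, C) and a mask of shape
    (3*size, size), i.e. 512 wide and 1536 high for size=512.
    """
    assert len(images) == 3 and len(masks) == 3
    img_tiles, mask_tiles = [], []
    for img, mask in zip(images, masks):
        img_t = resize_nearest(img, size)
        mask_t = resize_nearest(mask, size)
        if rng.random() < flip_prob:
            # Horizontal flip, applied identically to image and mask.
            img_t, mask_t = img_t[:, ::-1], mask_t[:, ::-1]
        img_tiles.append(img_t)
        mask_tiles.append(mask_t)
    return np.concatenate(img_tiles, axis=0), np.concatenate(mask_tiles, axis=0)

def split_811(n_samples, rng):
    """Shuffle sample indices and split them 8:1:1 into train/val/test."""
    idx = rng.permutation(n_samples)
    n_train = n_samples * 8 // 10
    n_val = n_samples // 10
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]
```

Passing a seeded generator such as `np.random.default_rng(0)` keeps both the flips and the split reproducible, in line with the fixed random seed mentioned earlier. Choosing the three source images from different disease classes is left to the caller.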

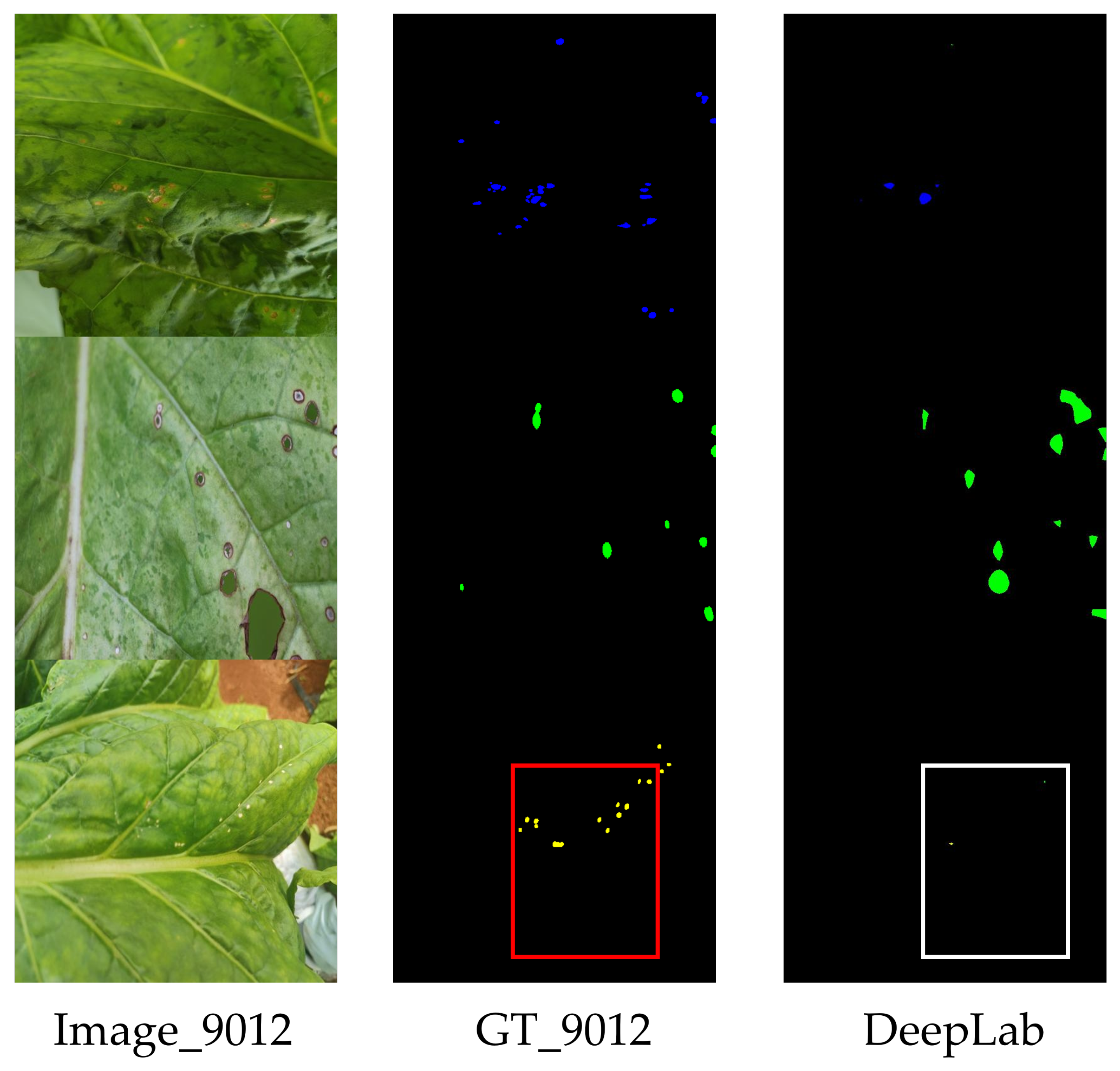

2.2. Selection of Baseline Networks

To evaluate and compare the performance of state-of-the-art semantic segmentation networks on the tobacco leaf disease dataset, we selected three widely used models: UNet, DeepLab, and HRNet. UNet employs a symmetric encoder-decoder architecture with skip connections that effectively fuse shallow spatial details with deep semantic features. It is particularly well-suited for segmenting small lesion areas, as it can better preserve boundary and texture information. In contrast, DeepLab utilizes atrous convolutions and conditional random fields (CRF) to capture multi-scale contextual information and refine boundaries. However, its relatively large downsampling rate may cause loss of details for small or blurry targets. HRNet maintains high-resolution feature representations and is suitable for large-scale scenes, but its high computational complexity makes it less effective for recognizing sparsely distributed small lesions.

In addition, Table 1, which compares the throughput and inference latency (ms) of the three baseline networks, further demonstrates the advantages of the UNet baseline network.

After a comprehensive comparison, we selected UNet as the baseline model due to its stable performance, simple architecture, and superior results in small-object segmentation tasks.

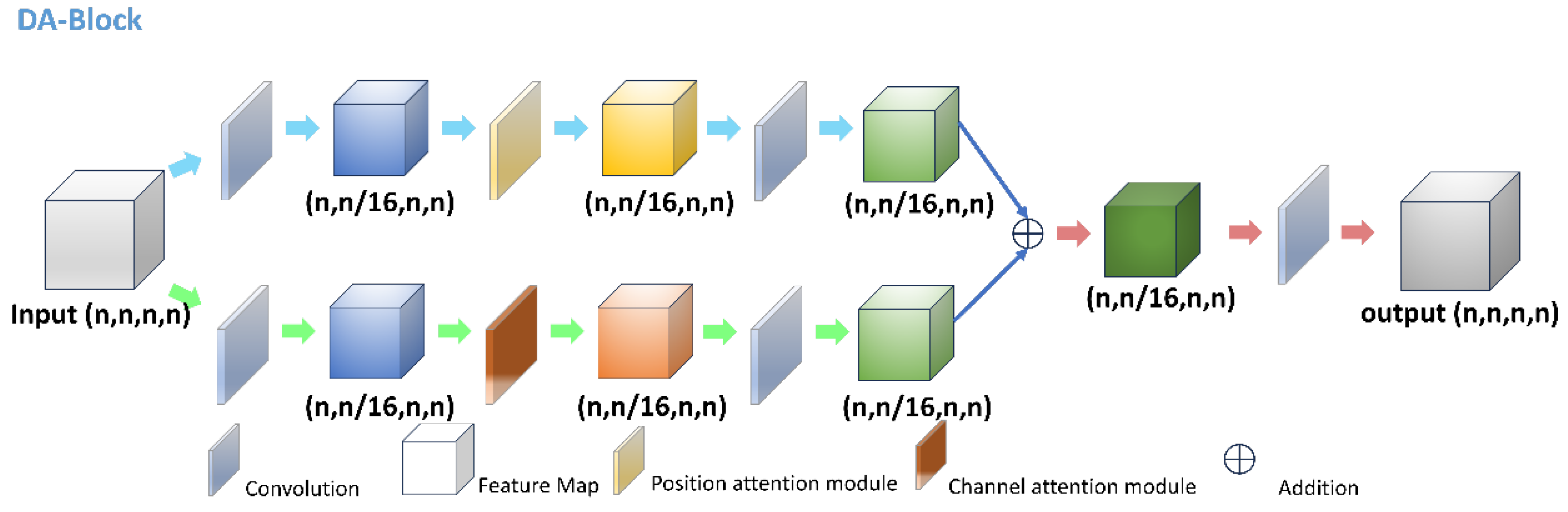

2.3. Dual-Attention Module (DA)

The Dual-Attention (DA) module [

29] integrates both spatial (positional) and channel attention mechanisms to enhance feature representation. By simultaneously emphasizing critical spatial locations and channel-wise features, the DA module (as shown in

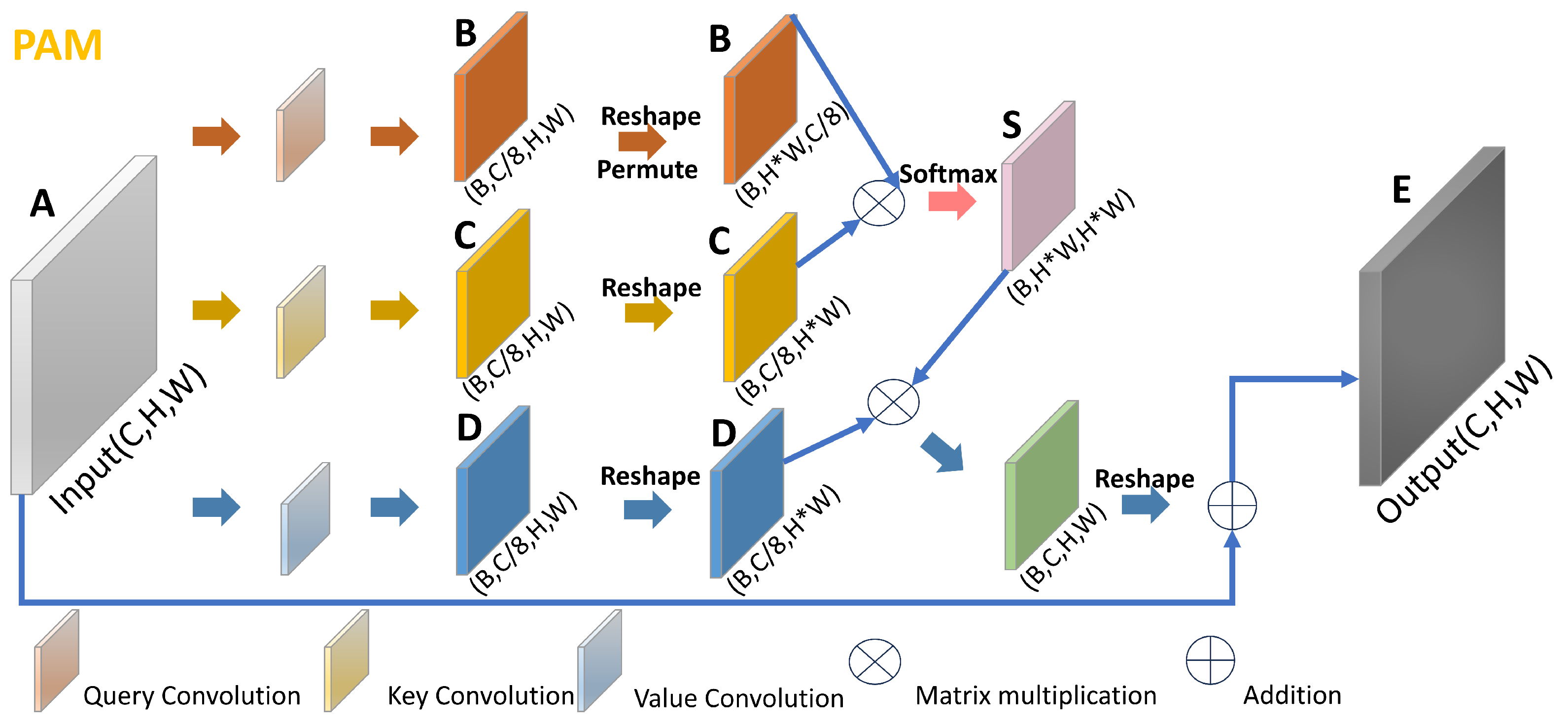

Figure 5) improves the network’s ability to localize and distinguish small and complex target regions. This module consists of the Position Attention Module (PAM, see

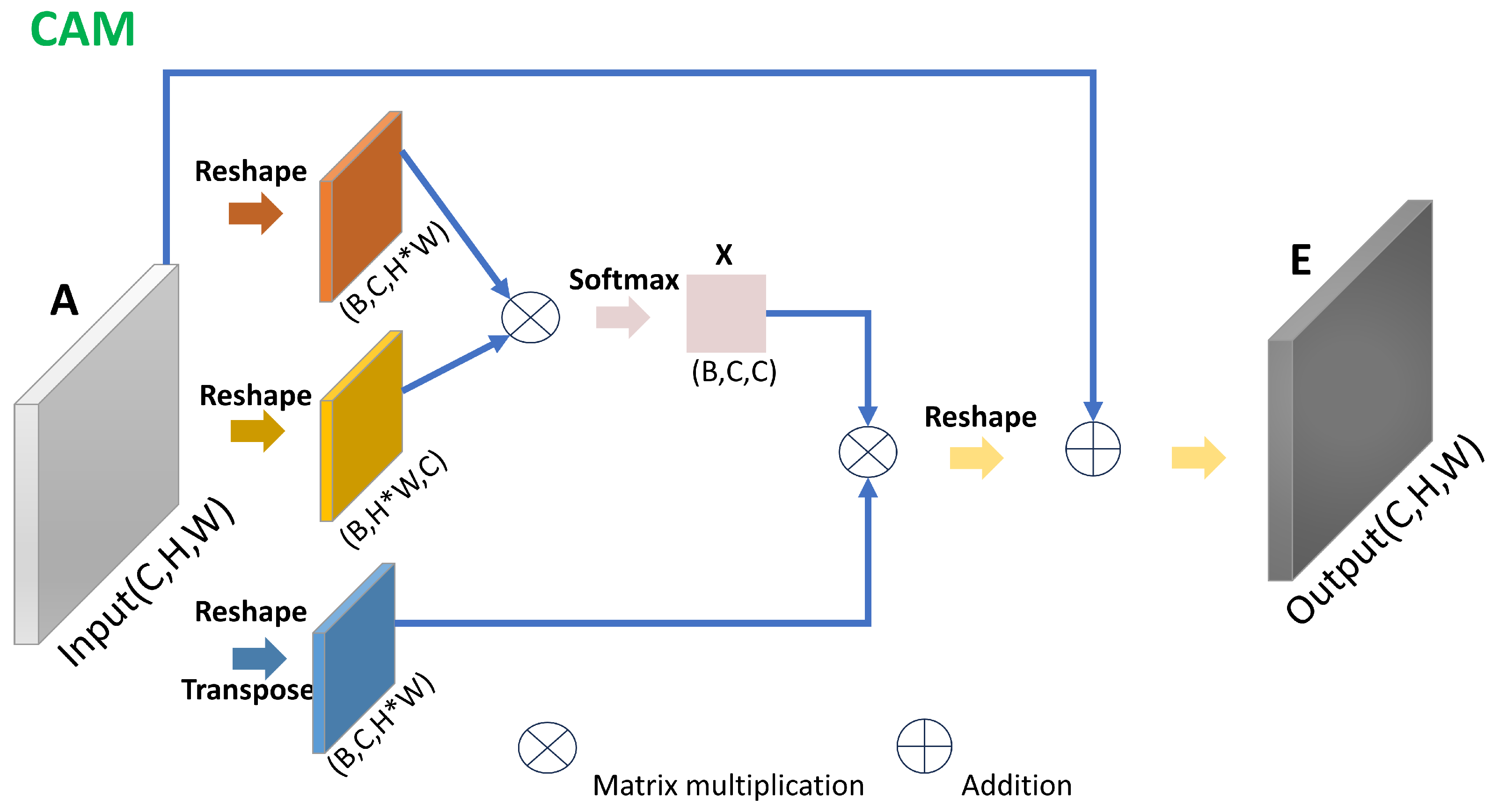

Figure 6) and the Channel Attention Module (CAM, see

Figure 7).

UNet: In this study, we integrate Dual Attention (DA) modules into a UNet-based image segmentation framework to enhance multi-scale feature representation capabilities. We adopt ResNet50 as the encoder backbone network and embed DANet modules at multiple feature levels (i.e., after the second to fifth ResNet modules). The feature maps output from these levels (denoted as feat2 to feat5) are first optimized by the corresponding DANet modules (DANet2 to DANet5) before being passed to the upsampling path. This design enables the model to capture long-range contextual dependencies and focus on information-rich regions in both spatial and channel dimensions.

DeepLab: We add Dual Attention (DA) modules to improve feature extraction at different levels. The ResNet50 backbone provides two feature maps: one with detailed spatial information and another with strong semantic information. We apply DA modules to both maps—DANet3 for the detailed map and DANet5 for the semantic map. These modules use spatial and channel attention to highlight useful parts and reduce noise.

HRNet: We add Dual Attention (DA) modules to the HRNet’s high-resolution multi-branch structure to improve semantic features at specific resolutions. We use HRNetV2, which keeps multiple feature streams at different resolutions. From the final stage, four feature maps are created. We enhance the 3rd and 4th branches (medium and low resolution) using DA modules (DA2 and DA3).

This module is introduced specifically to address the challenge of inadequate attention on small target areas, improving segmentation accuracy in such cases.
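The two attention branches of the DA module can be illustrated with a small NumPy sketch. It is a simplification under stated assumptions: the learned 1 × 1 convolution projections of DANet are replaced by the identity, the learnable residual scales are passed in as plain arguments (`alpha`, `beta`), and the two branch outputs are fused by element-wise summation. The function names are illustrative only.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def position_attention(feat, alpha=1.0):
    """Position attention on a (C, H, W) map; projections omitted (assumption)."""
    c, h, w = feat.shape
    flat = feat.reshape(c, h * w)             # (C, N) with N = H * W
    attn = softmax(flat.T @ flat, axis=-1)    # (N, N): row i weights all positions j
    out = flat @ attn.T                       # (C, N): weighted sum over positions
    return alpha * out.reshape(c, h, w) + feat

def channel_attention(feat, beta=1.0):
    """Channel attention on a (C, H, W) map."""
    c, h, w = feat.shape
    flat = feat.reshape(c, h * w)             # (C, N)
    attn = softmax(flat @ flat.T, axis=-1)    # (C, C): row i weights all channels j
    out = attn @ flat                         # (C, N): weighted sum over channels
    return beta * out.reshape(c, h, w) + feat

def dual_attention(feat, alpha=1.0, beta=1.0):
    """Fuse both branches by element-wise summation."""
    return position_attention(feat, alpha) + channel_attention(feat, beta)
```

With `alpha = beta = 0` each branch reduces to its residual path, so the module starts as a scaled identity and the attention contribution grows only as the scales are learned.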

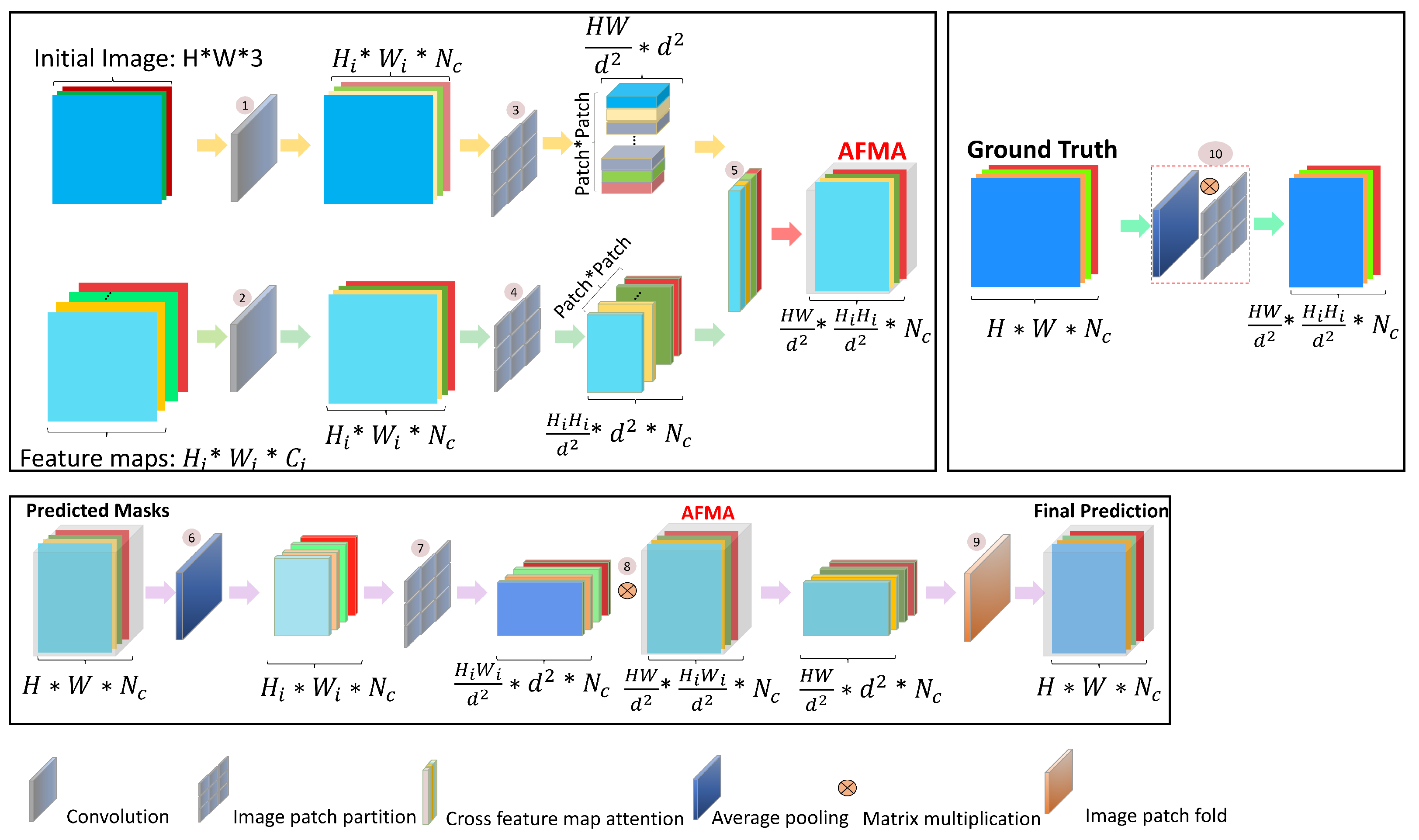

2.4. Cross Feature-Map Attention (AFMA)

The Cross Feature-Map Attention (AFMA) module [30] establishes cross-scale context modeling by aligning and modulating multi-scale feature attention maps. During the decoding phase, AFMA adaptively recalibrates features from different scales to better capture spatial dependencies and enhance semantic consistency.

Figure 8 illustrates the modulation process of the AFMA module within the decoder.

2.5. Small Object Segmentation Strategies

Small object segmentation poses unique challenges due to limited pixel representation and weak contrast against complex backgrounds. Definitions vary, with relative scale [31] describing small objects as having a median area ratio between 0.08% and 0.58% of the image, and absolute scale defining them as less than 32 × 32 pixels in area (as per MS COCO standards).
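As a quick illustration of the two definitions, the sketch below checks a lesion against the MS COCO absolute threshold (an area below 32 × 32 = 1024 pixels) and computes its area as a percentage of the image. The function names are hypothetical, and the relative-scale range quoted above describes median ratios over a dataset, so the per-object ratio here is only indicative.

```python
def is_small_absolute(area_px, threshold=32 * 32):
    """True if the object area is below the MS COCO small-object threshold."""
    return area_px < threshold

def area_ratio_percent(area_px, image_h, image_w):
    """Object area as a percentage of the full image area."""
    return 100.0 * area_px / (image_h * image_w)

# Example: a 20 x 20 pixel lesion inside a 512 x 512 image.
lesion_area = 20 * 20
small = is_small_absolute(lesion_area)                 # True, since 400 < 1024
ratio = area_ratio_percent(lesion_area, 512, 512)      # about 0.153 percent
```

In this example the lesion is small under both views: 400 pixels is below 1024, and roughly 0.15% of the image falls inside the 0.08% to 0.58% range.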

To tackle this, multi-branch architectures have shown promising results. For example, Fact-Seg [32] introduces foreground activation and semantic refinement branches, GSCNN [33] utilizes a shape stream with gated convolution, and MLDA-Net [34] incorporates self-supervised depth learning and attention modules.

Inspired by these methods, we design a multi-branch attention module to enhance the segmentation accuracy of small lesions in tobacco leaf images.

2.6. Attention Mechanism Fusion in Semantic Segmentation

Attention mechanisms play a crucial role in emphasizing informative features and suppressing irrelevant ones. The channel attention mechanism, exemplified by SE-Net [35], enhances feature discrimination across channels. However, its application to high-resolution inputs can lead to increased computational cost and overfitting.

The position attention mechanism (PAM), as used in DANet [36], models long-range spatial dependencies and enhances global context aggregation. Despite its effectiveness, PAM incurs a quadratic complexity with respect to image size, which may limit its real-time applicability.
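The quadratic cost can be made concrete with a short calculation. Position attention compares every spatial location with every other one, so a feature map with N = H × W positions needs an N × N attention matrix. The sketch below assumes a single attention map stored in float32 (4 bytes per entry); the function names and the chosen feature-map sizes are illustrative.

```python
def pam_attention_entries(h, w):
    """Number of entries in the (H*W) x (H*W) position-attention matrix."""
    n = h * w
    return n * n

def pam_attention_mib(h, w, bytes_per_entry=4):
    """Memory of one attention matrix in MiB, assuming float32 entries."""
    return pam_attention_entries(h, w) * bytes_per_entry / (1024 ** 2)

for side in (32, 64, 128):
    entries = pam_attention_entries(side, side)
    print(f"{side}x{side} feature map: {entries:,} entries, "
          f"{pam_attention_mib(side, side):.0f} MiB")
```

Doubling the side length of the feature map multiplies the attention matrix by 16, which is why position attention is usually applied to downsampled feature maps rather than at full image resolution.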

To balance detail retention and efficiency, this study integrates cross-feature map attention and dual-branch attention mechanisms, drawing inspiration from DANet [37] and HMANet [38]. This combination enhances both local sensitivity and global awareness, enabling better segmentation of small and irregular lesions with minimal additional overhead.

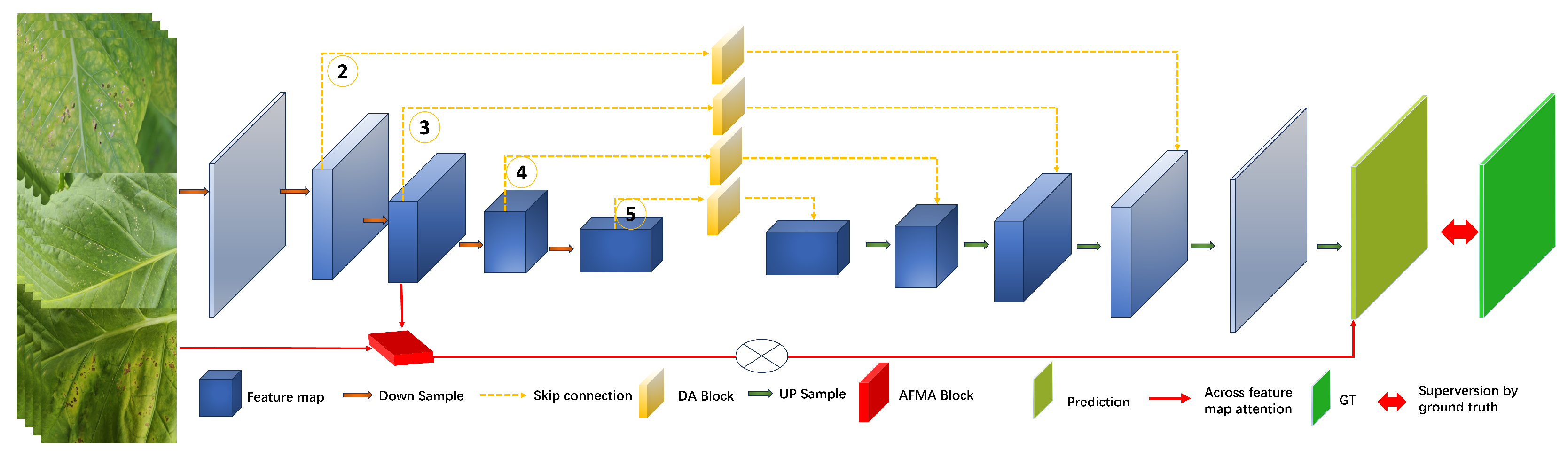

2.7. Network Architecture (UNet+AFMA+DA)

The overall network architecture integrates the baseline UNet with the AFMA and DA modules to leverage their complementary strengths. The DA module is embedded within the encoder to boost attention on spatial and channel dimensions, while the AFMA module refines multi-scale feature fusion in the decoder.

Figure 9 presents the full network diagram, highlighting the interaction and placement of the modules.

The combined model contains approximately X million parameters, balancing model complexity and performance gains.

2.8. Loss Function

The overall training loss consists of three terms: (1) Cross Entropy Loss (ce), which measures the difference between the model’s predictions and the true labels to ensure pixel-level classification accuracy; (2) AFMA loss, which minimizes the discrepancy between the AFMA features learned by the model and the ideal AFMA features, thereby improving the accuracy and consistency of multi-scale feature modeling; (3) Focal Loss, which addresses class imbalance by focusing training on hard, misclassified examples.

By incorporating AFMA loss, the network is encouraged to achieve better AFMA attention scores during training, aiding in the segmentation of small target objects. The total loss is given by Equation (1):

$$L_{total} = L_{ce} + L_{AFMA} + L_{focal} \tag{1}$$

The cross-entropy loss function introduces category weights $W$ to address imbalance in the number of samples between categories. The cross-entropy loss is computed as follows (Equation (2)):

$$L_{ce} = -\frac{1}{N}\sum_{i=1}^{N} w_{y_i} \log\frac{\exp(x_{i,y_i})}{\sum_{j=1}^{C}\exp(x_{i,j})} \tag{2}$$

where $N$ is the number of samples, $w_{y_i}$ is the weight for the true class $y_i$ to balance class imbalance, $C$ is the number of classes, $x_{i,y_i}$ is the predicted score for the true class of sample $i$, and $x_{i,j}$ is the predicted score for class $j$ of sample $i$.

AFMA loss is calculated using the mean square error (MSE) between the predicted attention map and the ground truth attention map. Its formula is given by Equation (3):

$$L_{AFMA} = \frac{1}{C\,H_f\,W_f}\sum_{k=1}^{C}\sum_{u=1}^{H_f}\sum_{v=1}^{W_f}\left(\hat{A}^{(i)}_{k}(u,v) - A^{(i)}_{k}(u,v)\right)^{2} \tag{3}$$

where $C$ is the number of categories, $H_f$ and $W_f$ are the height and width of the feature map, $\hat{A}^{(i)}_{k}(u,v)$ is the predicted attention value at location $(u,v)$ in the $k$-th channel of the $i$-th layer feature map, and $A^{(i)}_{k}(u,v)$ is the corresponding ground truth attention value.

Focal Loss is designed to mitigate class imbalance by reducing the loss contribution from easy examples, thus focusing training on harder, misclassified examples. The formula is shown in Equation (4):

$$L_{focal} = -\alpha_t \,(1 - p_t)^{\gamma} \log(p_t) \tag{4}$$

where $y_i$ denotes the ground truth label for class $i$, and $p_i$ is the predicted probability for class $i$. Define $p_t = p_i$ when $y_i = 1$, and $p_t = 1 - p_i$ when $y_i = 0$. The weighting factor $\alpha_t$ balances class importance: $\alpha_t = \alpha$ if $y_i = 1$, and $\alpha_t = 1 - \alpha$ if $y_i = 0$ (typically $\alpha = 0.25$). The focusing parameter $\gamma$ controls the rate at which easy examples are down-weighted, commonly set as $\gamma = 2$.
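The three loss terms can be sketched in NumPy as follows. This is an illustrative implementation under stated assumptions, not the authors' training code: the total loss is taken as an unweighted sum of the three terms, the focal loss uses the per-class binary form with the common defaults α = 0.25 and γ = 2, and all function names are hypothetical.

```python
import numpy as np

def log_softmax(logits):
    """Row-wise, numerically stable log-softmax for an (N, C) array."""
    z = logits - logits.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))

def weighted_cross_entropy(logits, targets, class_weights):
    """Class-weighted cross entropy averaged over N samples.

    logits: (N, C) scores, targets: (N,) integer labels, class_weights: (C,).
    """
    logp = log_softmax(logits)
    picked = logp[np.arange(len(targets)), targets]
    return float(-(class_weights[targets] * picked).mean())

def focal_loss(probs, labels, alpha=0.25, gamma=2.0, eps=1e-12):
    """Mean binary focal loss; probs and labels (0 or 1) share one shape."""
    p_t = np.where(labels == 1, probs, 1.0 - probs)
    alpha_t = np.where(labels == 1, alpha, 1.0 - alpha)
    return float((-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t + eps)).mean())

def afma_mse(pred_attn, true_attn):
    """Mean squared error between predicted and reference attention maps."""
    return float(((pred_attn - true_attn) ** 2).mean())

def total_loss(logits, targets, class_weights, probs, labels, pred_attn, true_attn):
    """Unweighted sum of the three terms (an assumption of this sketch)."""
    return (weighted_cross_entropy(logits, targets, class_weights)
            + afma_mse(pred_attn, true_attn)
            + focal_loss(probs, labels))
```

For example, with uniform scores over three classes and unit weights, the cross-entropy term equals ln 3, and a positive pixel predicted with probability 0.5 contributes 0.25 × 0.5² × ln 2 to the focal term, which shows how the factor (1 − p_t)^γ shrinks the contribution of examples that are already well classified.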

4. Discussion

To enrich data diversity and simulate the co-occurrence of multiple disease types, we employed an image splicing strategy to construct composite samples. While effective in exposing the model to complex patterns, this method may introduce artifacts such as lighting inconsistencies, sharp edges, and unnatural transitions, potentially affecting model generalization. To mitigate these issues, we normalized brightness and contrast before stitching and applied augmentations (e.g., rotation, flipping, Gaussian blur) to reduce overfitting. Additionally, the attention mechanisms (AFMA and DA) help the model focus on relevant lesion features while suppressing noise. Experimental results show stable performance on both stitched and original images, indicating minimal impact from potential artifacts.

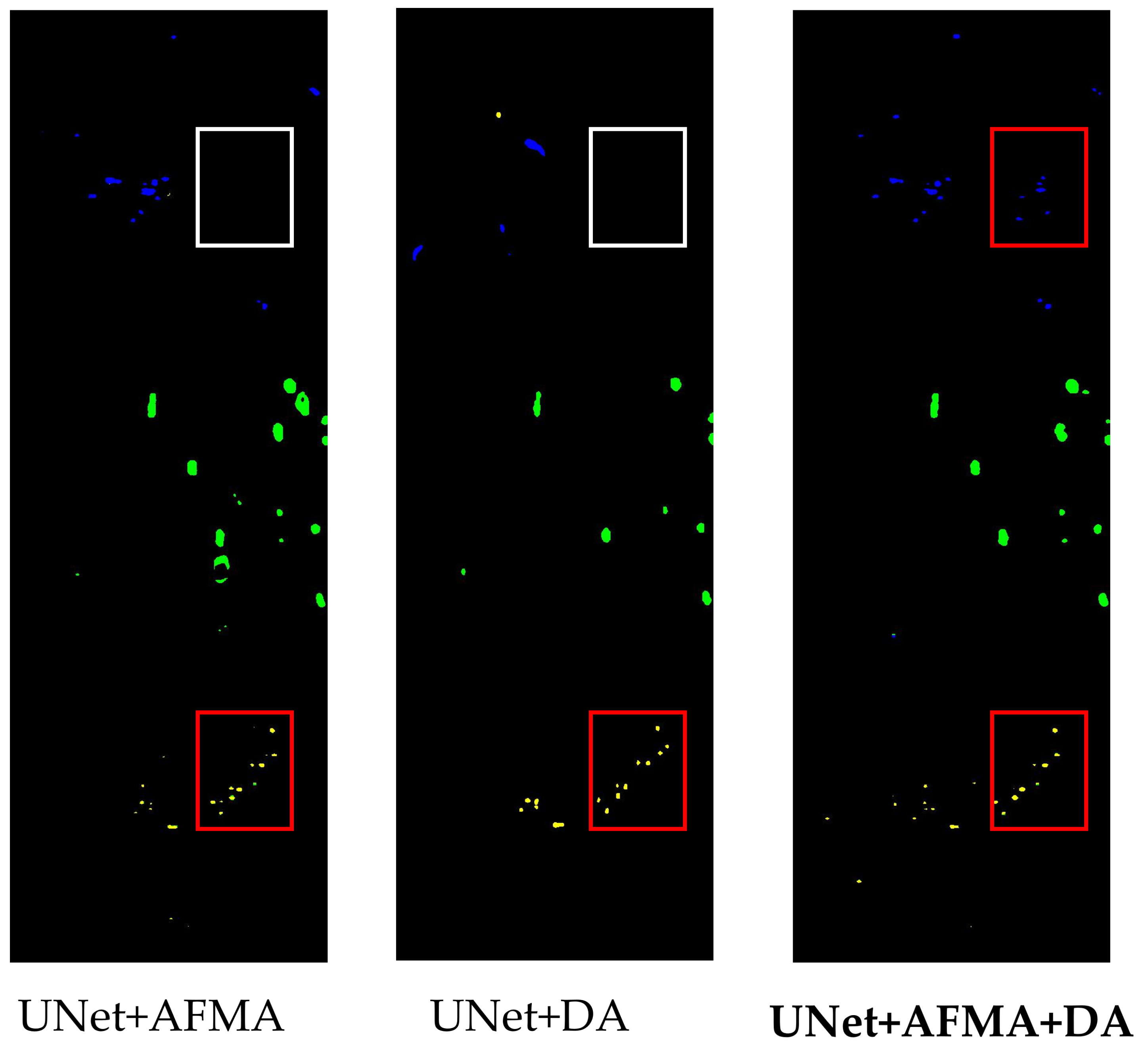

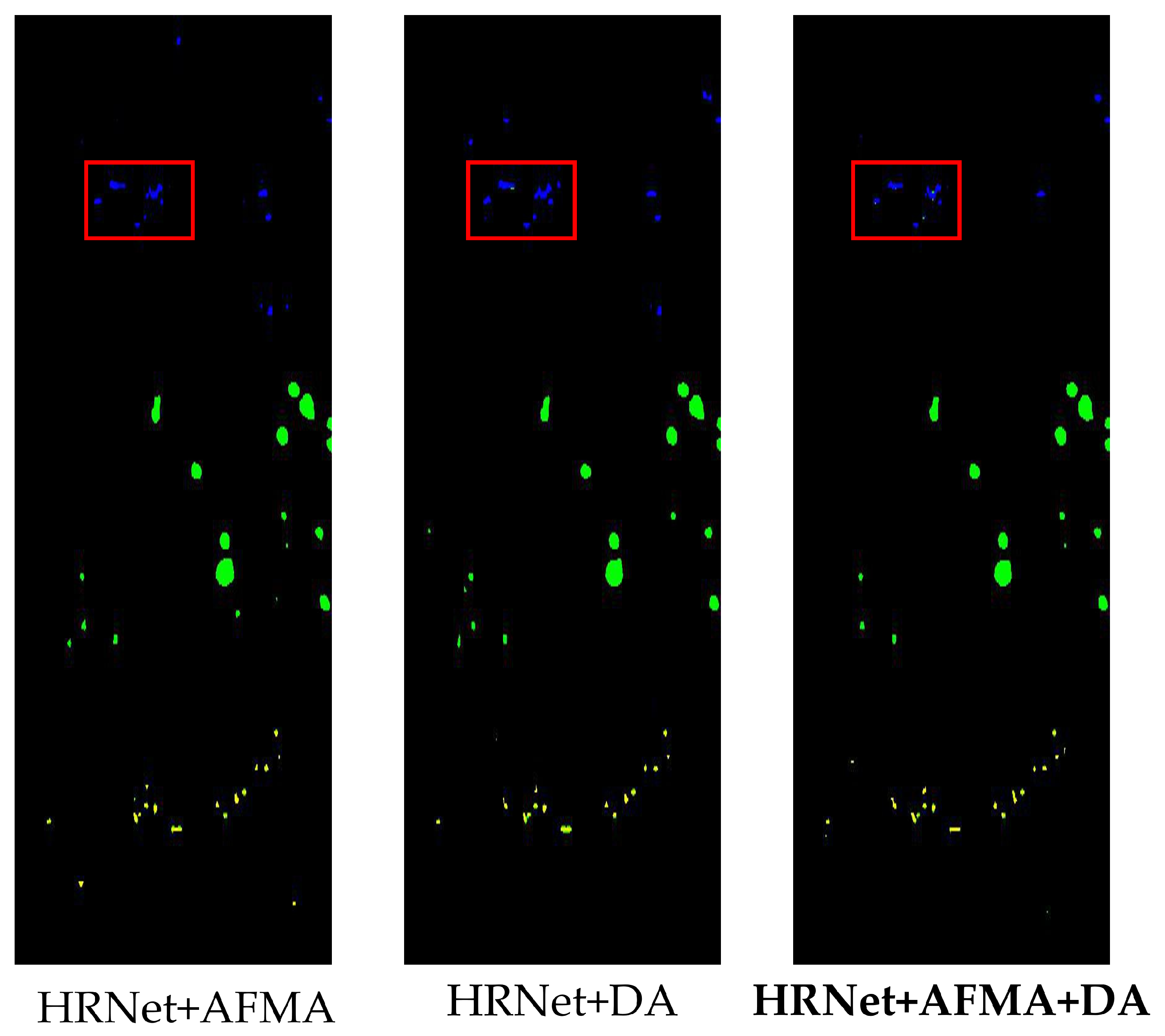

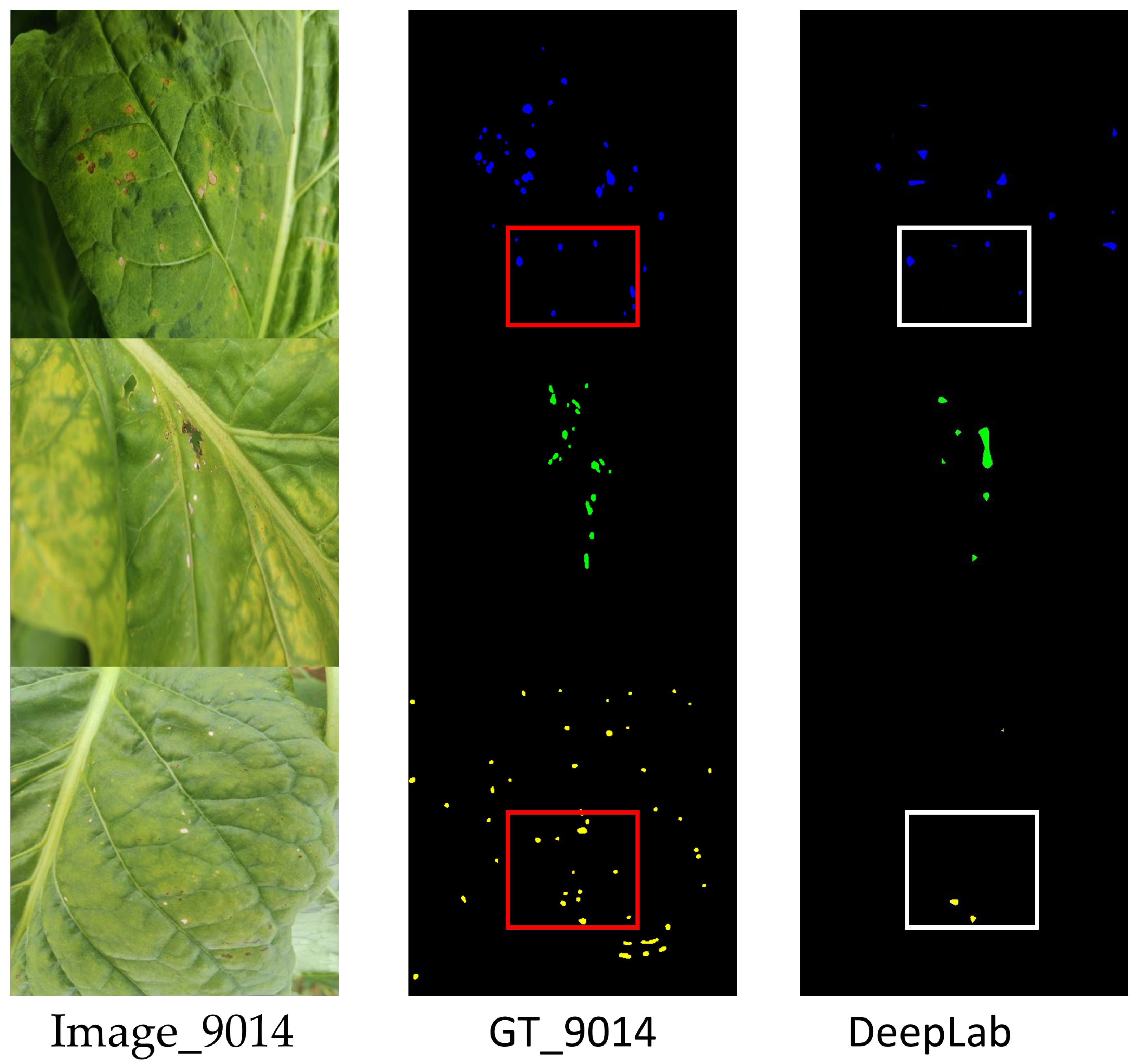

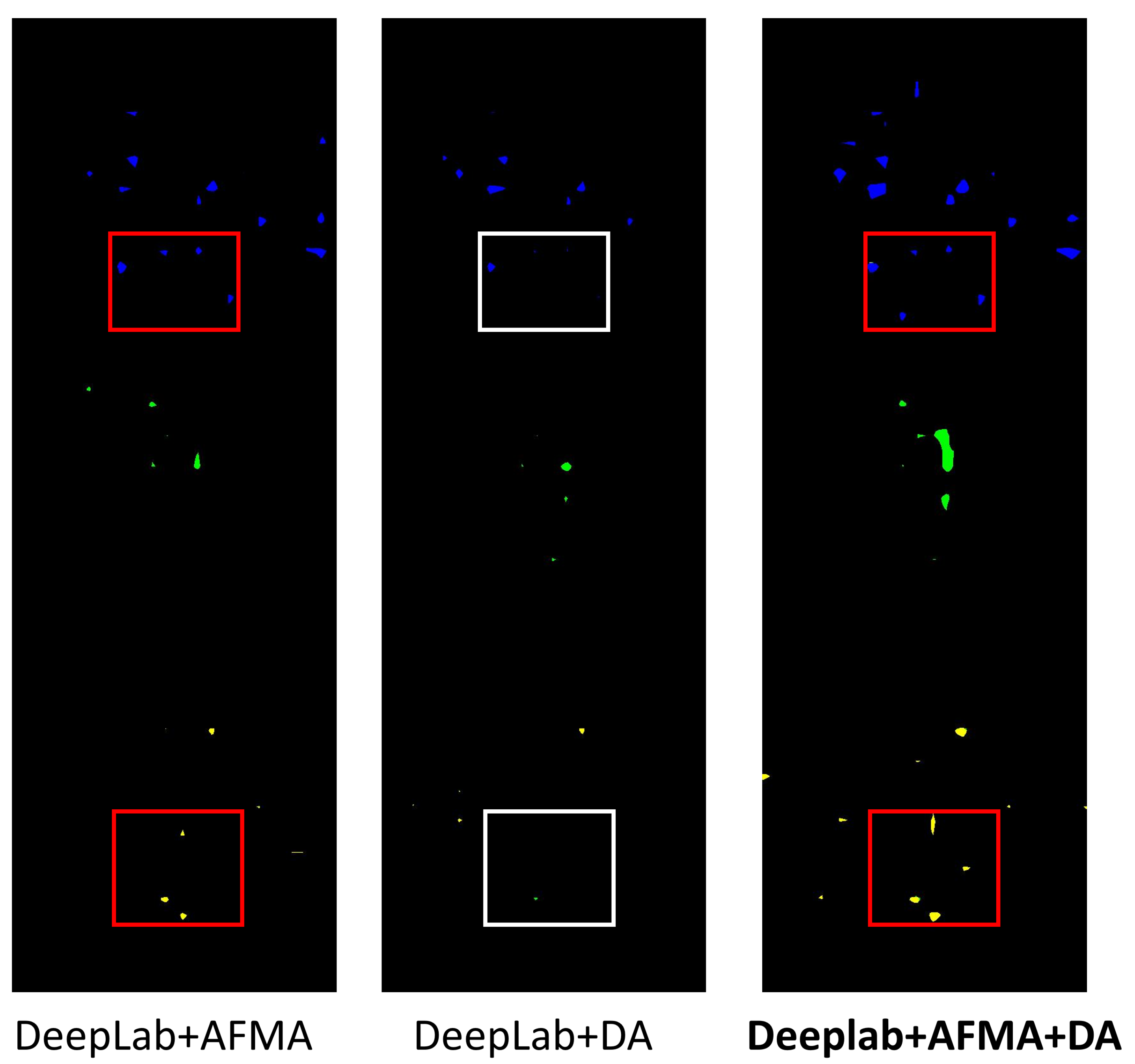

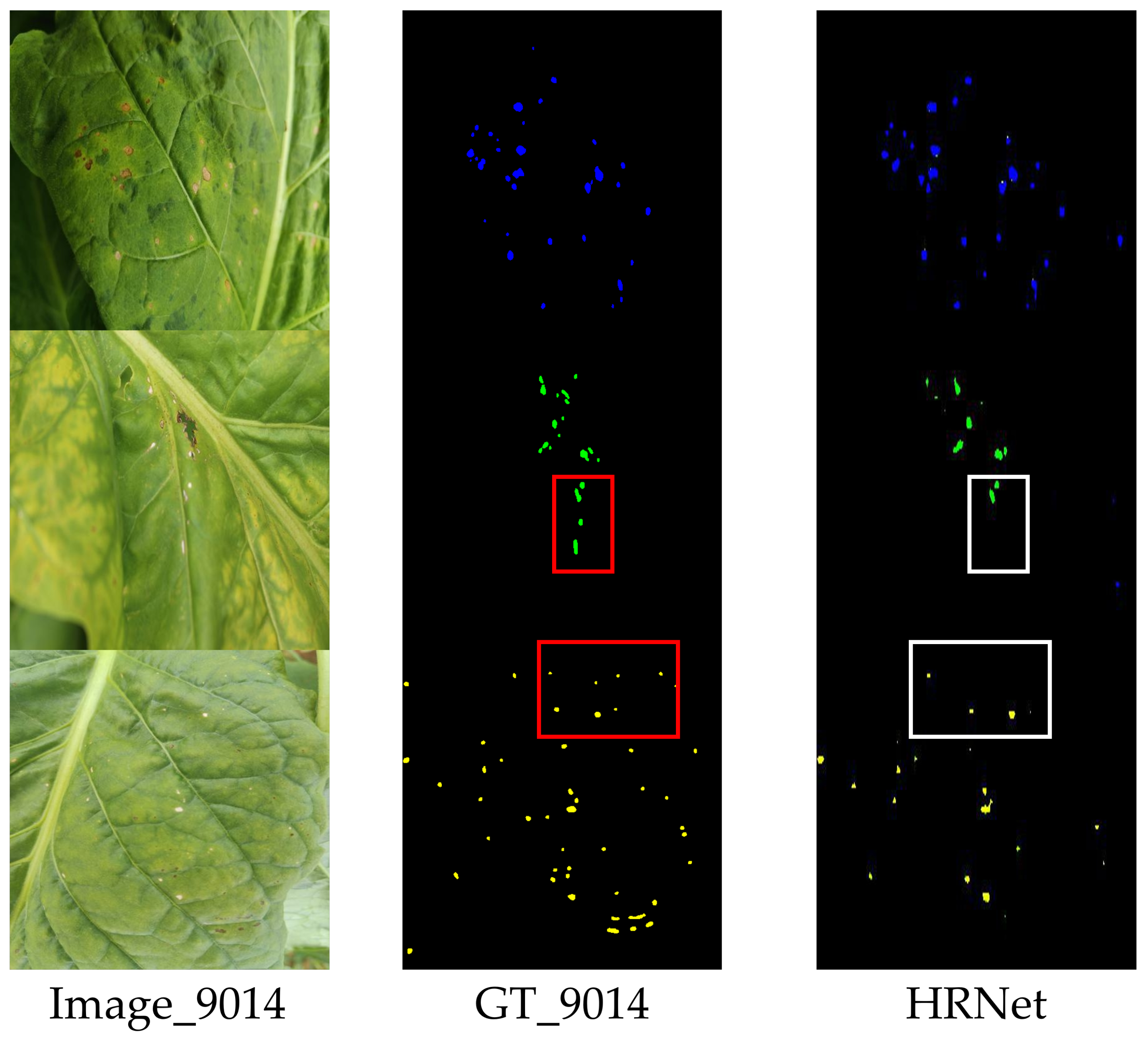

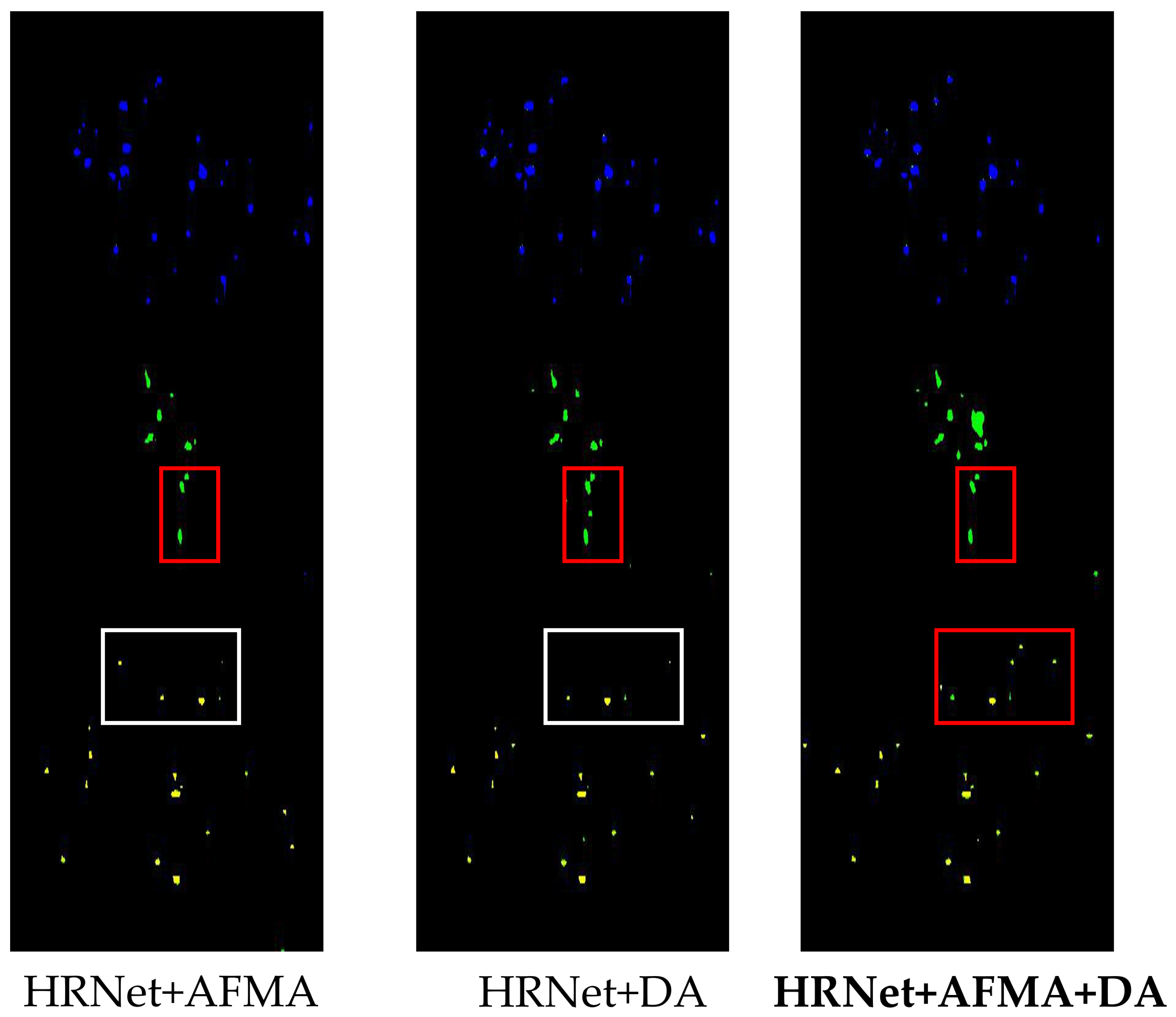

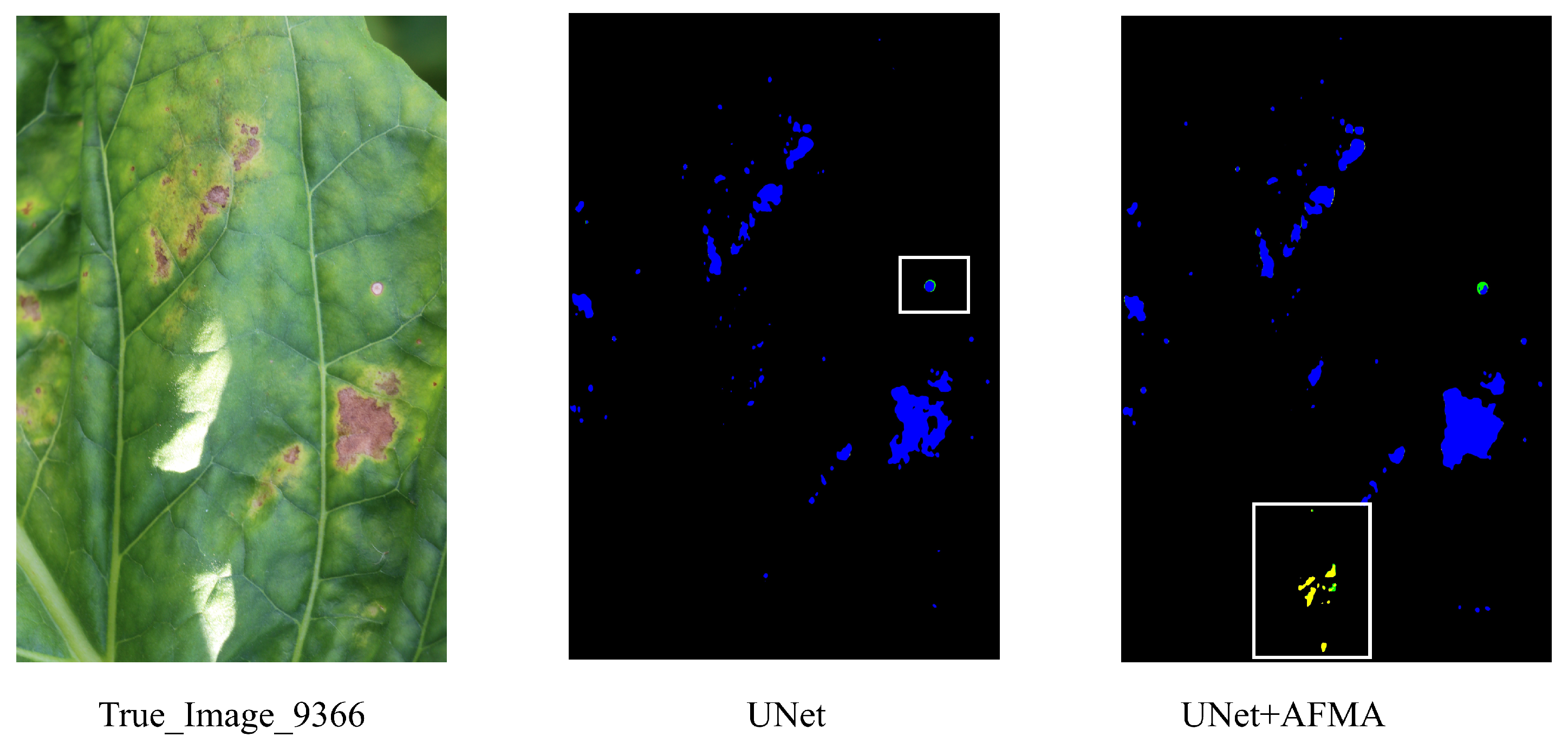

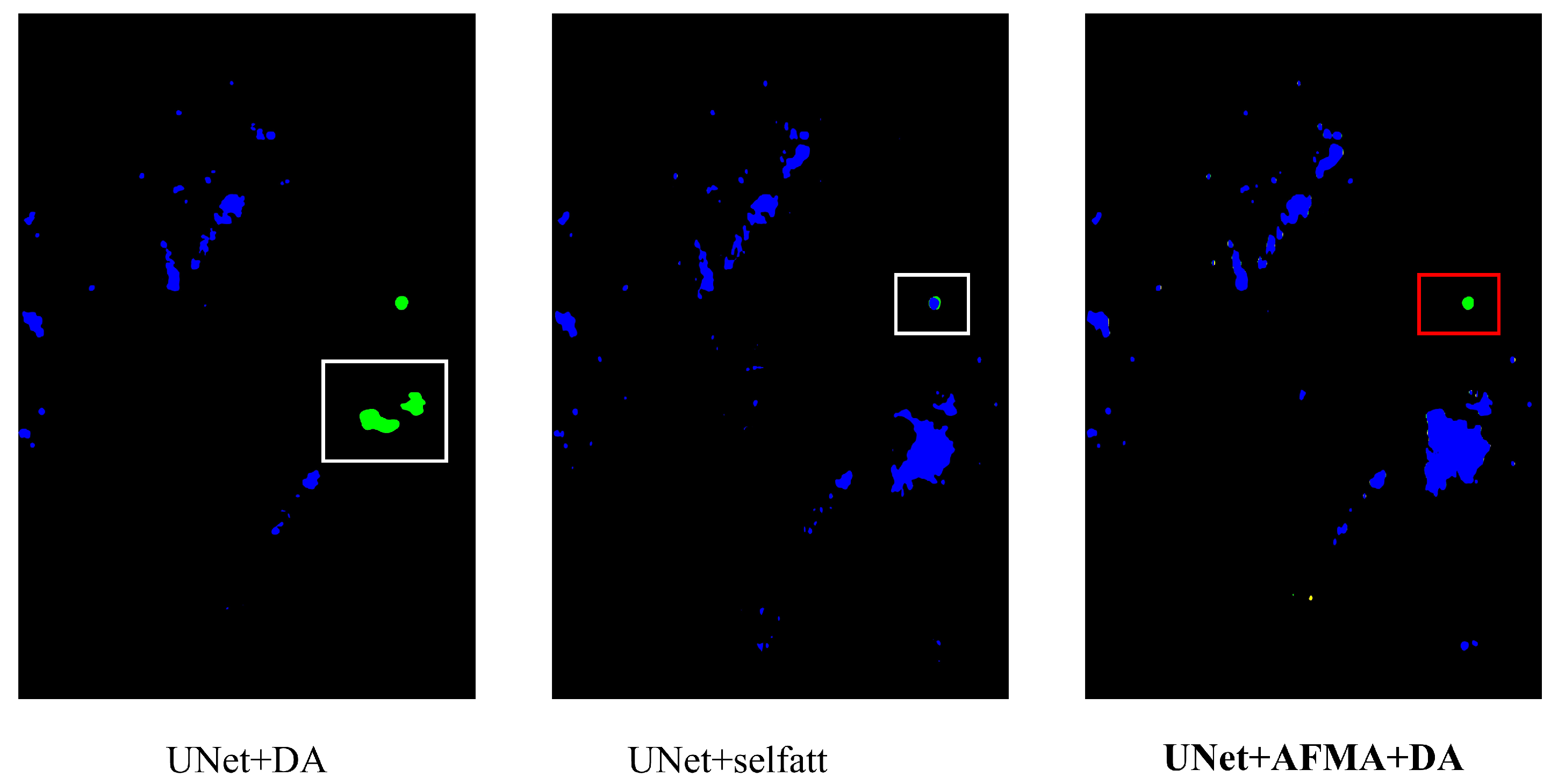

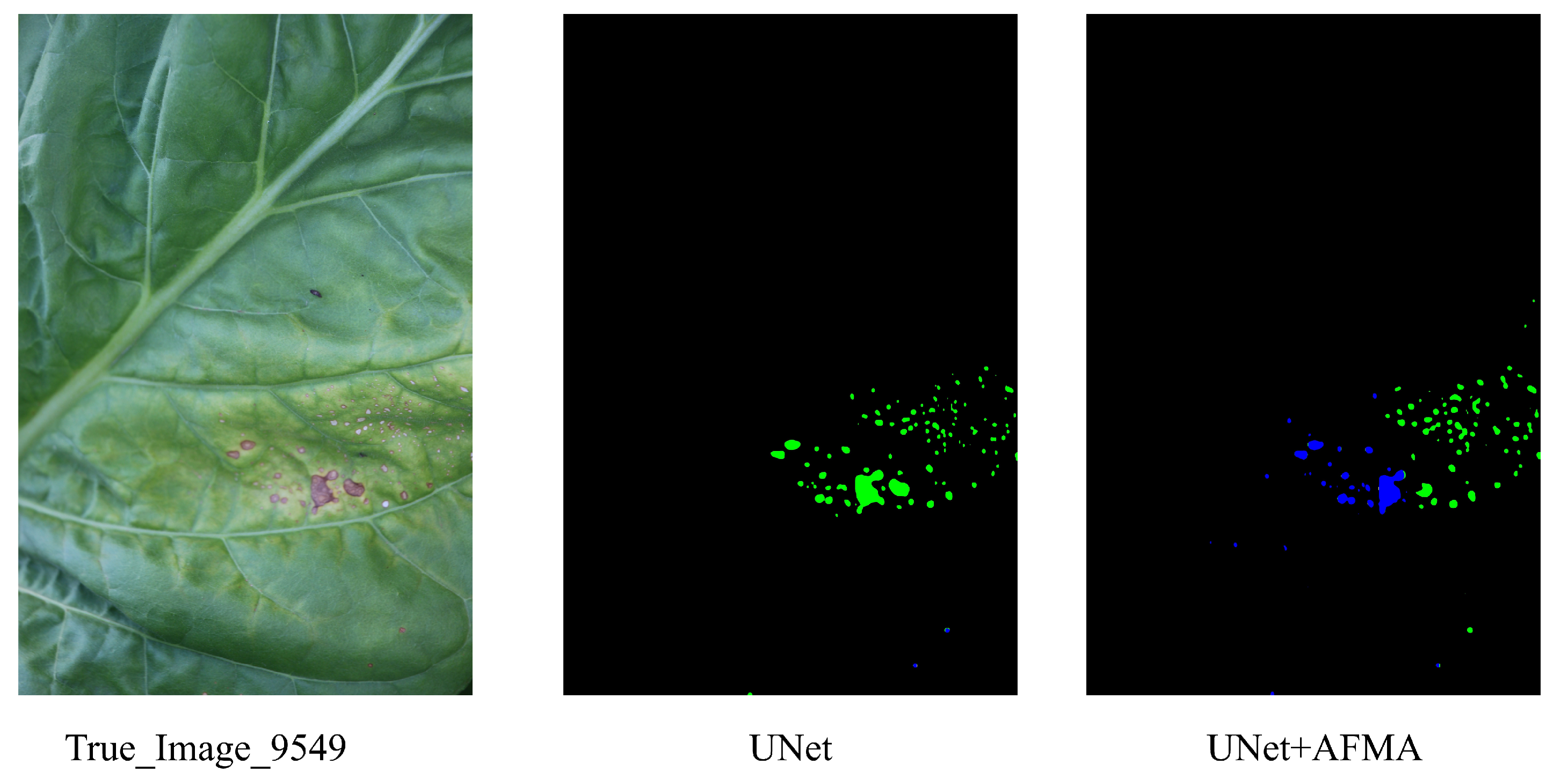

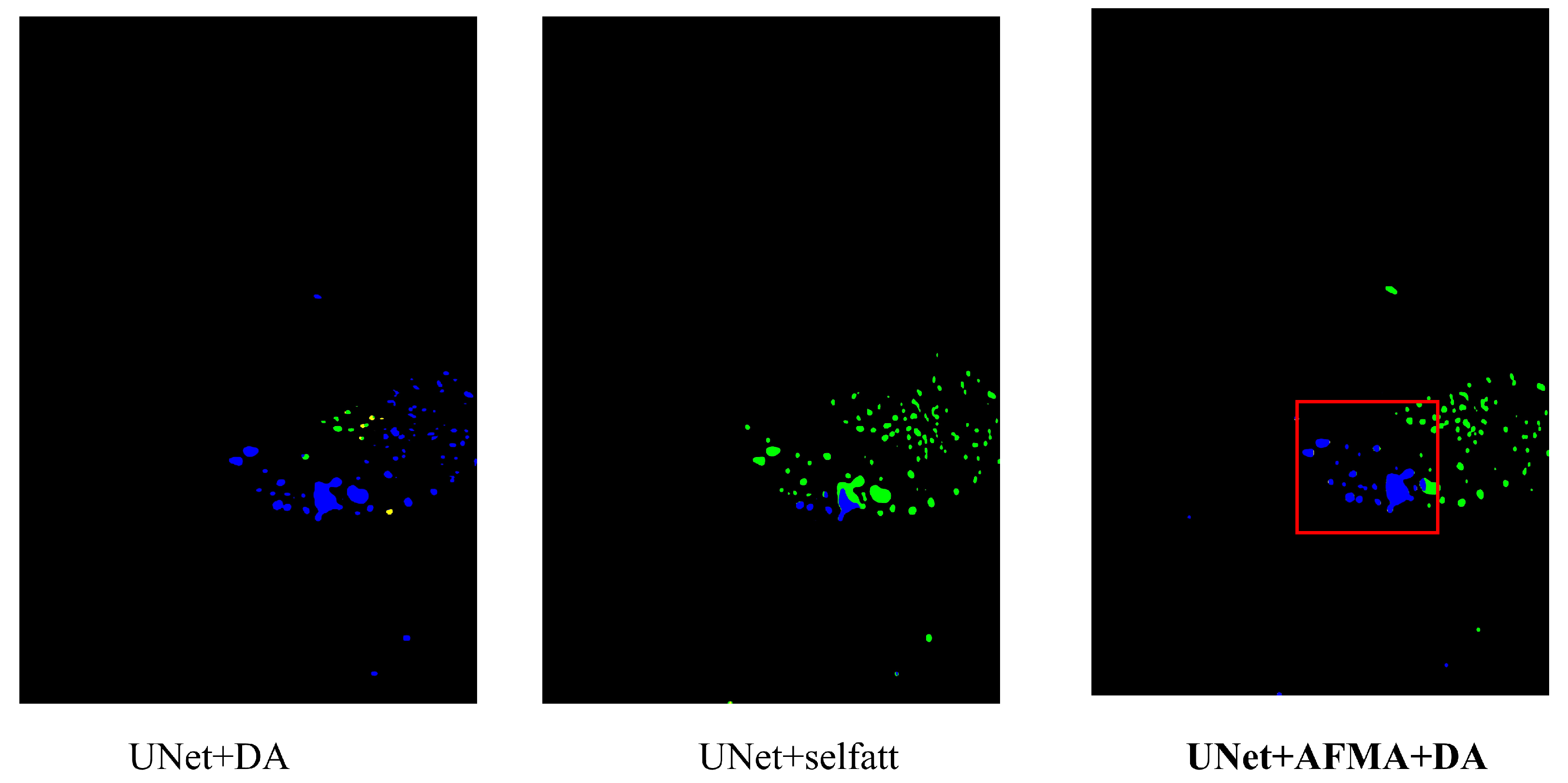

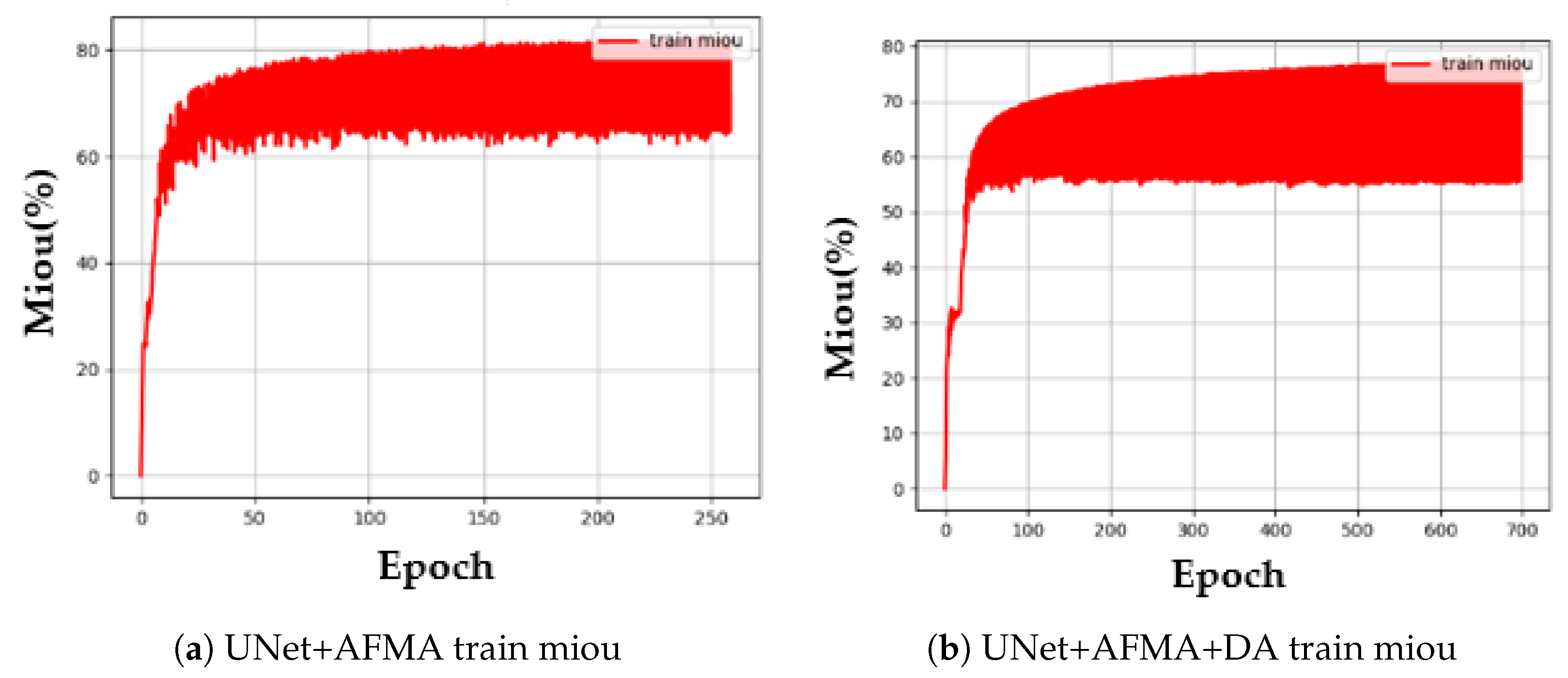

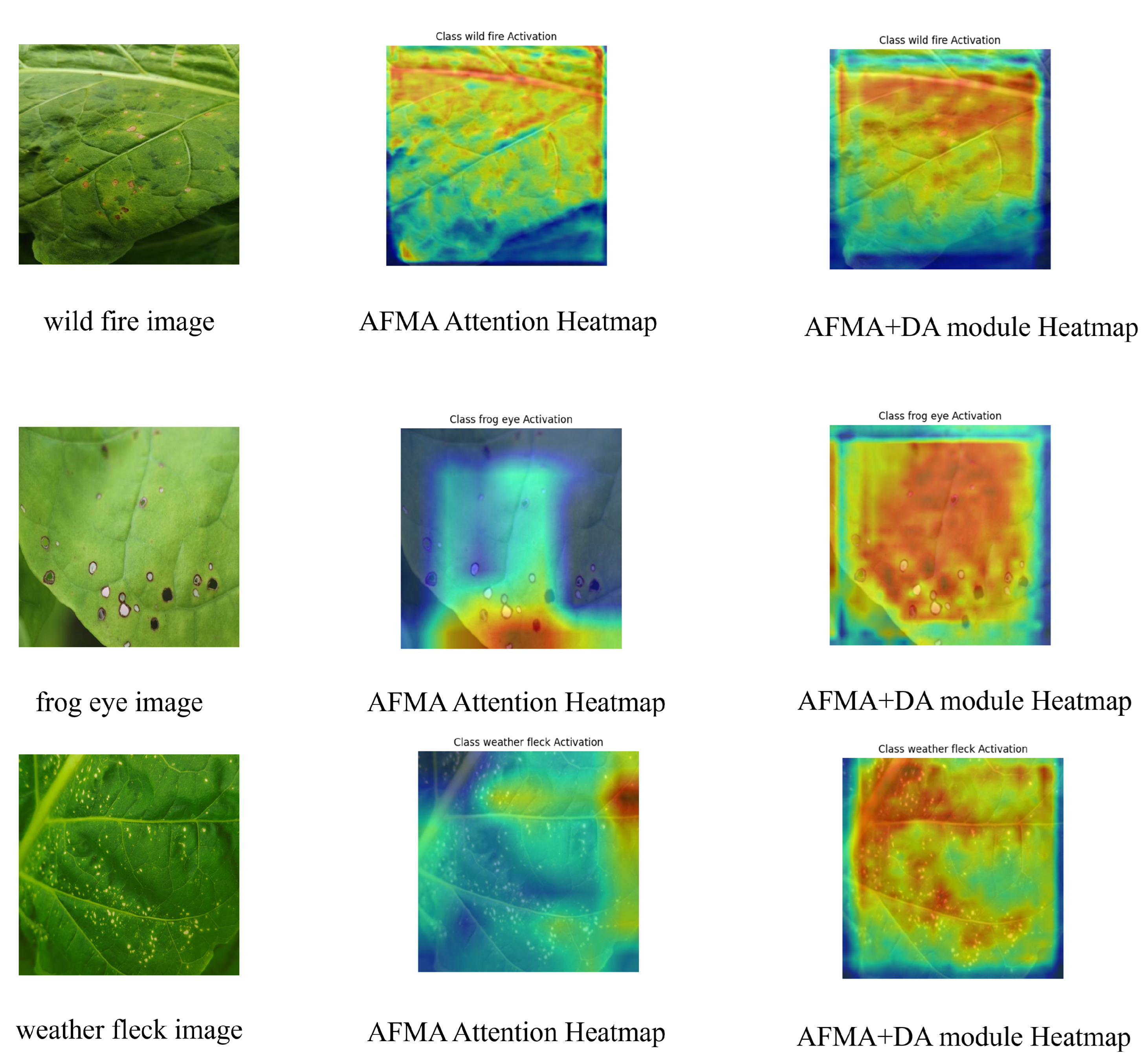

Experimental results indicate that using AFMA alone led to a decline in wildfire disease identification performance (IoU decreased from 43.77% to 37.88%). This is attributed to its difficulty in handling lesions with variable morphology or ambiguous developmental stages, often resulting in the neglect of edges or misdirected attention towards healthy regions. Conversely, using DA alone also significantly reduced performance (IoU dropped by 30.11%), as its inherent focus on small lesions caused it to overlook larger ones, potentially suppressing the response to large targets and leading to misclassification, blurred boundaries, and intensified competition among smaller categories. However, the combination of AFMA and DA achieved complementary advantages: AFMA’s mapping of lesion size relationships effectively alleviated DA’s suppression of large targets, significantly improving overall segmentation performance. The UNet+AFMA+DA model achieved 46.58% IoU on wildfire lesions and 54.79% overall mIoU, outperforming models using either module individually.
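For reference, the per-class IoU and mIoU figures quoted above follow the standard definitions, which can be sketched from a confusion matrix as below. This is a generic illustration with hypothetical function names; whether the background class is included in the reported mIoU is not stated here, so the sketch simply averages over every class that appears.

```python
import numpy as np

def confusion_matrix(pred, target, num_classes):
    """Pixel-level confusion matrix with rows = ground truth, cols = prediction."""
    pred = np.asarray(pred, dtype=np.int64).ravel()
    target = np.asarray(target, dtype=np.int64).ravel()
    idx = target * num_classes + pred
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)

def per_class_iou(cm):
    """IoU = TP / (TP + FP + FN) per class; NaN for classes that never occur."""
    tp = np.diag(cm).astype(np.float64)
    union = cm.sum(axis=0) + cm.sum(axis=1) - tp
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(union > 0, tp / union, np.nan)

def mean_iou(cm):
    """Mean IoU over the classes that occur in prediction or ground truth."""
    return float(np.nanmean(per_class_iou(cm)))

# Tiny 2 x 2 example with two classes.
target = np.array([[0, 0], [1, 1]])
pred = np.array([[0, 1], [1, 1]])
cm = confusion_matrix(pred, target, num_classes=2)
ious = per_class_iou(cm)   # class 0: 1/2, class 1: 2/3
```

In the toy example one class-0 pixel is predicted as class 1, so class 0 scores 1/2 and class 1 scores 2/3. Small classes are penalized heavily by a handful of wrong pixels, which is why per-class IoU on small lesions is a demanding metric.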

Regarding the issue of dataset size, data scarcity has long been a major constraint on the widespread application of deep learning and related methods in tobacco disease identification. In addition to further data collection and accumulation, techniques such as image splicing, image fusion, and image generation can help mitigate data-related limitations, particularly in cases involving multiple coexisting diseases. In summary, while AFMA and DA individually have limitations, their combination delivers the best performance for recognizing various tobacco leaf disease classes.