GTDR-YOLOv12: Optimizing YOLO for Efficient and Accurate Weed Detection in Agriculture

Abstract

1. Introduction

2. Data Resources and Optimization Strategies for Weed Detection Training

2.1. Dataset

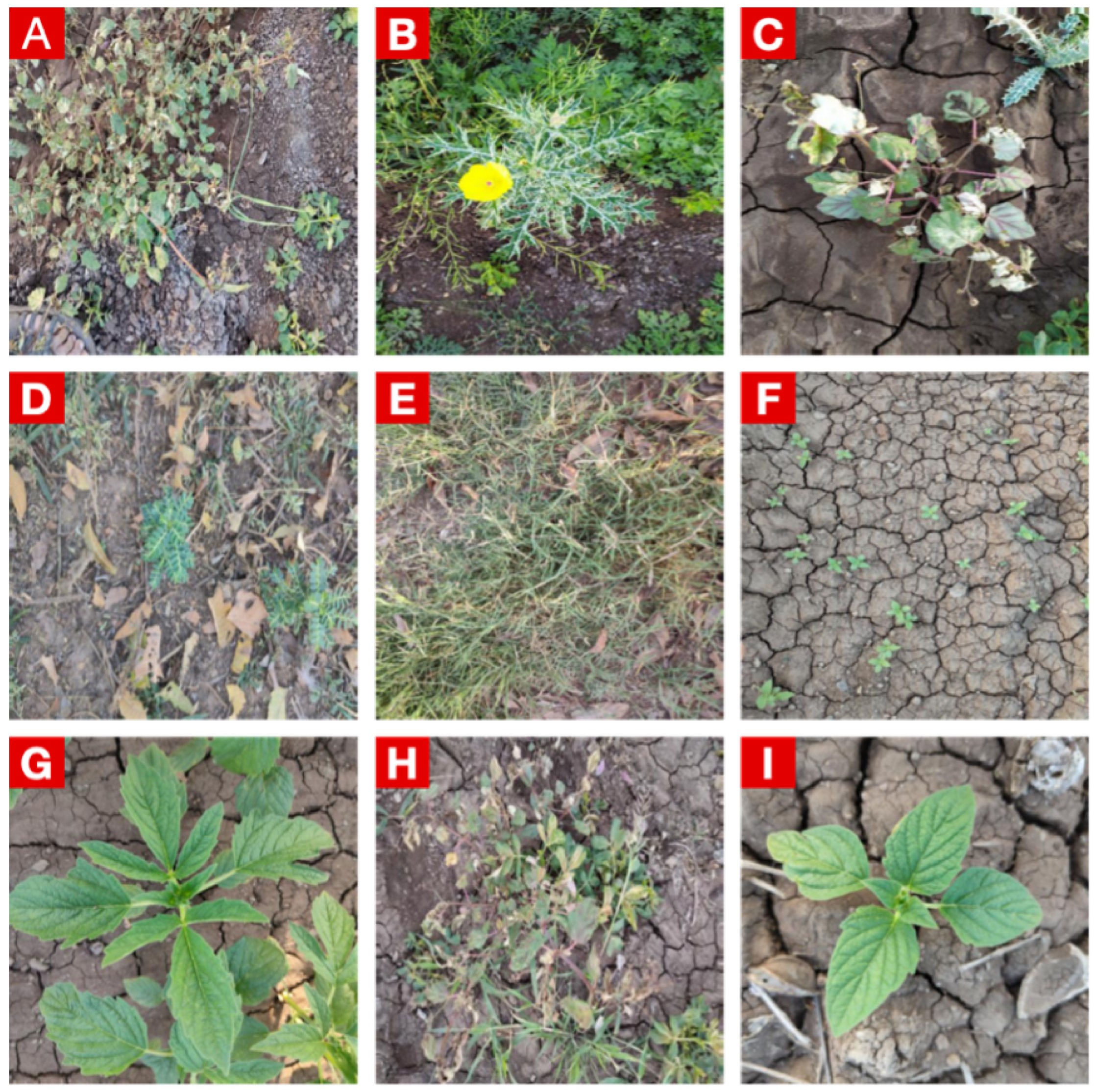

2.2. Composition of Weed Species in Weeds Detection Dataset

2.3. Dataset Training and Preparation Strategy

3. Methodology

3.1. Baseline Architecture: YOLOv12

3.2. Improved YOLOv12: GTDR-YOLOv12

3.2.1. GDR-Conv

3.2.2. GTDR-C3

3.2.3. Lookahead Optimizer

3.3. Performance Metrics

4. Experimental Validation

4.1. Convergence Analysis of GTDR-YOLOv12

4.2. Impact of Lookahead Optimizer on Detection Performance

4.3. Ablation Analysis of GTDR-YOLOv12

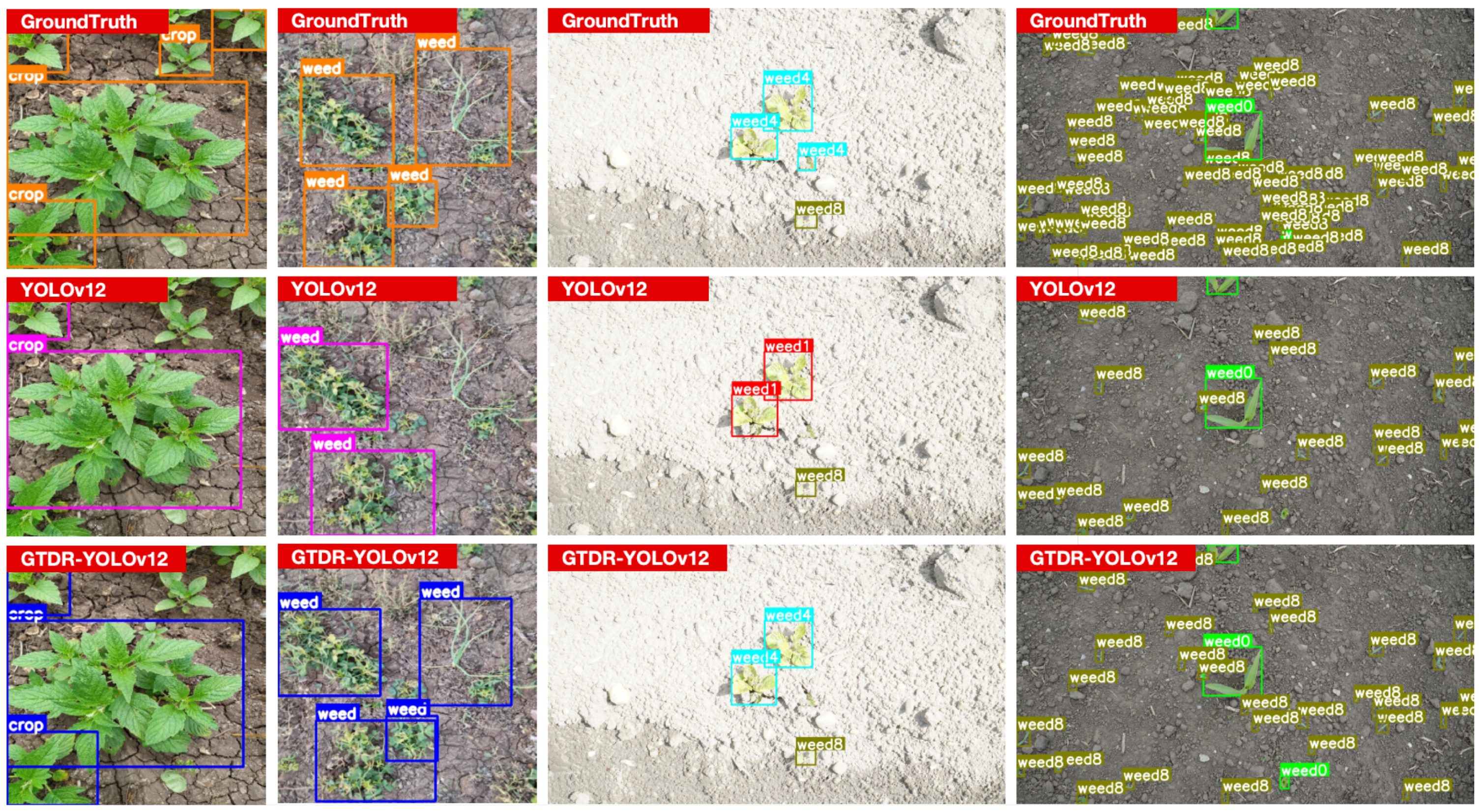

4.4. Experimental Evaluation of YOLOv12 and GTDR-YOLOv12

4.5. Comparative Analysis of YOLO Variants

4.6. Evaluating the Benefits of Transfer Learning and Domain Adaptation

4.7. Embedded Platform Testing

5. Discussion and Limitations

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Murad, N.Y.; Mahmood, T.; Forkan, A.R.M.; Morshed, A.; Jayaraman, P.P.; Siddiqui, M.S. Weed detection using deep learning: A systematic literature review. Sensors 2023, 23, 3670. [Google Scholar] [CrossRef]

- Nath, C.P.; Singh, R.G.; Choudhary, V.K.; Datta, D.; Nandan, R.; Singh, S.S. Challenges and alternatives of herbicide-based weed management. Agronomy 2024, 14, 126. [Google Scholar] [CrossRef]

- Akhtari, H.; Navid, H.; Karimi, H.; Dammer, K.H. Deep learning-based object detection model for location and recognition of weeds in cereal fields using colour imagery. Crop Pasture Sci. 2025, 76, 4. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 779–788. [Google Scholar]

- Ji, W.; Pan, Y.; Xu, B.; Wang, J. A real-time apple targets detection method for picking robot based on ShufflenetV2-YOLOX. Agriculture 2022, 12, 856. [Google Scholar] [CrossRef]

- Wang, J.; Gao, Z.; Zhang, Y.; Zhou, J.; Wu, J.; Li, P. Real-time detection and location of potted flowers based on a ZED camera and a YOLO V4-tiny deep learning algorithm. Horticulturae 2021, 8, 21. [Google Scholar] [CrossRef]

- Zuo, Z.; Gao, S.; Peng, H.; Xue, Y.; Han, L.; Ma, G.; Mao, H. Lightweight Detection of Broccoli Heads in Complex Field Environments Based on LBDC-YOLO. Agronomy 2024, 14, 2359. [Google Scholar] [CrossRef]

- Guan, X.; Shi, L.; Yang, W.; Ge, H.; Wei, X.; Ding, Y. Multi-Feature Fusion Recognition and Localization Method for Unmanned Harvesting of Aquatic Vegetables. Agriculture 2024, 14, 971. [Google Scholar] [CrossRef]

- Zhao, Y.; Zhang, X.; Sun, J.; Yu, T.; Cai, Z.; Zhang, Z.; Mao, H. Low-cost lettuce height measurement based on depth vision and lightweight instance segmentation model. Agriculture 2024, 14, 1596. [Google Scholar] [CrossRef]

- Zhang, T.; Zhou, J.; Liu, W.; Yue, R.; Shi, J.; Zhou, C.; Hu, J. SN-CNN: A Lightweight and Accurate Line Extraction Algorithm for Seedling Navigation in Ridge-Planted Vegetables. Agriculture 2024, 14, 1596. [Google Scholar] [CrossRef]

- Zhang, T.; Zhou, J.; Liu, W.; Yue, R.; Yao, M.; Shi, J.; Hu, J. Seedling-YOLO: High-Efficiency Target Detection Algorithm for Field Broccoli Seedling Transplanting Quality Based on YOLOv7-Tiny. Agronomy 2024, 14, 931. [Google Scholar] [CrossRef]

- Zhang, Q.; Chen, Q.; Xu, W.; Xu, L.; Lu, E. Prediction of Feed Quantity for Wheat Combine Harvester Based on Improved YOLOv5s and Weight of Single Wheat Plant without Stubble. Agriculture 2024, 14, 1251. [Google Scholar] [CrossRef]

- Shen, Y.; Yang, Z.; Khan, Z.; Liu, H.; Chen, W.; Duan, S. Optimization of Improved YOLOv8 for Precision Tomato Leaf Disease Detection in Sustainable Agriculture. Sensors 2025, 25, 1398. [Google Scholar] [CrossRef]

- Khan, Z.; Liu, H.; Shen, Y.; Yang, Z.; Zhang, L.; Yang, F. Optimizing Precision Agriculture: A Real-Time Detection Approach for Grape Vineyard Unhealthy Leaves Using Deep Learning Improved YOLOv7. Comput. Electron. Agric. 2025, 231, 109969. [Google Scholar] [CrossRef]

- Khan, Z.; Liu, H.; Shen, Y.; Zeng, X. Deep Learning Improved YOLOv8 Algorithm: Real-Time Precise Instance Segmentation of Crown Region Orchard Canopies in Natural Environment. Comput. Electron. Agric. 2024, 224, 109168. [Google Scholar] [CrossRef]

- Pei, H.; Sun, Y.; Huang, H.; Zhang, W.; Sheng, J.; Zhang, Z. Weed detection in maize fields by UAV images based on crop row preprocessing and improved YOLOv4. Agriculture 2022, 12, 975. [Google Scholar] [CrossRef]

- Tao, T.; Wei, X. STBNA-YOLOv5: An Improved YOLOv5 Network for Weed Detection in Rapeseed Field. Agriculture 2024, 15, 22. [Google Scholar] [CrossRef]

- Deng, L.; Miao, Z.; Zhao, X.; Yang, S.; Gao, Y.; Zhai, C.; Zhao, C. HAD-YOLO: An Accurate and Effective Weed Detection Model Based on Improved YOLOV5 Network. Agronomy 2025, 15, 57. [Google Scholar] [CrossRef]

- Liu, J.; Abbas, I.; Noor, R.S. Development of deep learning-based variable rate agrochemical spraying system for targeted weeds control in strawberry crop. Agronomy 2021, 11, 1480. [Google Scholar] [CrossRef]

- Sa, I.; Popović, M.; Khanna, R.; Chen, Z.; Lottes, P.; Liebisch, F.; Nieto, J.; Stachniss, C.; Walter, A.; Siegwart, R. WeedMap: A Large-Scale Semantic Weed Mapping Framework Using Aerial Multispectral Imaging and Deep Neural Network for Precision Farming. Remote Sens. 2018, 10, 1423. [Google Scholar] [CrossRef]

- Zhang, Z.; Zhao, P.; Zheng, Z.; Luo, W.; Cheng, B.; Wang, S.; Zheng, Z. Rt-Mwdt: A Lightweight Real-Time Transformer with Edge-Driven Multiscale Fusion for Precisely Detecting Weeds in Complex Cornfield Environments. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5260823 (accessed on 19 July 2025).

- Chen, S.; Memon, M.S.; Shen, B.; Guo, J.; Du, Z.; Tang, Z.; Memon, H. Identification of weeds in cotton fields at various growth stages using color feature techniques. Ital. J. Agron. 2024, 19, 100021. [Google Scholar] [CrossRef]

- Wang, Y.; Zhang, X.; Ma, G.; Du, X.; Shaheen, N.; Mao, H. Recognition of weeds at asparagus fields using multi-feature fusion and backpropagation neural network. Int. J. Agric. Biol. Eng. 2021, 14, 190–198. [Google Scholar] [CrossRef]

- Memon, M.S.; Chen, S.; Shen, B.; Liang, R.; Tang, Z.; Wang, S.; Memon, N. Automatic visual recognition, detection and classification of weeds in cotton fields based on machine vision. Crop Prot. 2025, 187, 106966. [Google Scholar] [CrossRef]

- Zhang, Z.; Lu, Y.; Zhao, Y.; Pan, Q.; Jin, K.; Xu, G.; Hu, Y. Ts-yolo: An All-Day and Lightweight Tea Canopy Shoots Detection Model. Agronomy 2023, 13, 1411. [Google Scholar] [CrossRef]

- Feng, G.; Wang, C.; Wang, A.; Gao, Y.; Zhou, Y.; Huang, S.; Luo, B. Segmentation of Wheat Lodging Areas from UAV Imagery Using an Ultra-Lightweight Network. Agriculture 2024, 14, 244. [Google Scholar] [CrossRef]

- Rahman, M.G.; Rahman, M.A.; Parvez, M.Z.; Patwary, M.A.K.; Ahamed, T.; Fleming-Muñoz, D.A.; Moni, M.A. ADeepWeeD: An Adaptive Deep Learning Framework for Weed Species Classification. Artif. Intell. Agric. 2025, 15, 590–609. [Google Scholar] [CrossRef]

- Li, S.; Chen, Z.; Xie, J.; Zhang, H.; Guo, J. PD-YOLO: A Novel Weed Detection Method Based on Multi-Scale Feature Fusion. Front. Plant Sci. 2025, 16, 1506524. [Google Scholar] [CrossRef]

- Farooq, U.; Rehman, A.; Khanam, T.; Amtullah, A.; Bou-Rabee, M.A.; Tariq, M. Lightweight Deep Learning Model for Weed Detection for IoT Devices. In Proceedings of the 2022 2nd International Conference on Emerging Frontiers in Electrical and Electronic Technologies (ICEFEET), Patna, India, 24–25 June 2022; IEEE: New York, NY, USA, 2022; pp. 1–5. [Google Scholar]

- Islam, M.D.; Liu, W.; Izere, P.; Singh, P.; Yu, C.; Riggan, B.; Shi, Y. Towards Real-Time Weed Detection and Segmentation with Lightweight CNN Models on Edge Devices. Comput. Electron. Agric. 2025, 237, 110600. [Google Scholar] [CrossRef]

- Nakabayashi, T.; Yamagishi, K.; Suzuki, T. Automated Weeding Systems for Weed Detection and Removal in Garlic/Ginger Fields. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 4. [Google Scholar] [CrossRef]

- swish9. Weeds Detection [Data set]. Kaggle. 2022. Available online: https://www.kaggle.com/datasets/swish9/weeds-detection (accessed on 24 July 2025).

- Chen, Y.; Dai, X.; Liu, M.; Chen, D.; Yuan, L.; Liu, Z. Dynamic ReLU. In Computer Vision—ECCV 2020; Springer: Cham, Switzerland, 2020; pp. 351–367. [Google Scholar]

- Steininger, D.; Trondl, A.; Croonen, G.; Simon, J.; Widhalm, V. The CropAndWeed Dataset: A Multi-Modal Learning Approach for Efficient Crop and Weed Manipulation. In Proceedings of the 2023 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 2–7 January 2023; pp. 3729–3738. [Google Scholar]

- Shuai, Y.; Shi, J.; Li, Y.; Zhou, S.; Zhang, L.; Mu, J. YOLO-SW: A Real-Time Weed Detection Model for Soybean Fields Using Swin Transformer and RT-DETR. Agronomy 2025, 15, 1712. [Google Scholar] [CrossRef]

- Sun, Y.; Guo, H.; Chen, X.; Li, M.; Fang, B.; Cao, Y. YOLOv8n-SSDW: A Lightweight and Accurate Model for Barnyard Grass Detection in Fields. Agriculture 2025, 15, 1510. [Google Scholar] [CrossRef]

- Huang, J.; Xia, X.; Diao, Z.; Li, X.; Zhao, S.; Zhang, J.; Li, G. A Lightweight Model for Weed Detection Based on the Improved YOLOv8s Network in Maize Fields. Agronomy 2024, 14, 3062. [Google Scholar] [CrossRef]

- Lu, Z.; Chengao, Z.; Lu, L.; Yan, Y.; Jun, W.; Wei, X.; Jun, T. Star-YOLO: A Lightweight and Efficient Model for Weed Detection in Cotton Fields Using Advanced YOLOv8 Improvements. Comput. Electron. Agric. 2025, 235, 110306. [Google Scholar] [CrossRef]

| Category | Configuration |

|---|---|

| CPU | Intel(R) Xeon(R) Gold 6226R CPU 2.90 GHz |

| GPU | Nvidia 3090 |

| Framework | PyTorch 2.0.1 |

| Programming | Python 3.8.20 |

| Attribute | Details |

|---|---|

| Total images | 3982 |

| Training set | 3735 |

| Validation set | 494 |

| Test set | 247 |

| Epochs | 100 |

| Batch size | 16 |

| Image size | 640 × 640 |

| Learning rate schedule | Initial: 0.01, Final: 0.01 (cosine decay disabled) |

| Early stopping | Enabled (patience = 20 epochs) |

| Regularization | Weight decay: 0.0005 |

| Augmentation | Weather (fog/rain, 10%), brightness shift (±25%) or gamma (0.7–1.5) |

| Preprocessing | Auto-orientation for alignment |

| Outputs per image | 2 object classes |

| Model | Precision (%) | Recall (%) | mAP:0.5 (%) | mAP:0.5:0.95 (%) | Inf (ms) | GFLOPs | Parameters (M) | F1-Score (%) |

|---|---|---|---|---|---|---|---|---|

| v12 + AdamW | 85.0 | 79.7 | 87.0 | 58.0 | 3.6 | 5.8 | 2.5 | 82.3 |

| v12 + SGD | 64.0 | 71.0 | 72.7 | 39.7 | 4.1 | 5.8 | 2.5 | 67.2 |

| v12 + Lookahead | 86.9 | 80.2 | 86.7 | 56.9 | 2.6 | 5.6 | 2.3 | 83.4 |

| Model | Precision (%) | Recall (%) | mAP:0.50 (%) | mAP:0.50:0.95 (%) | Inference (ms) | GFLOPs | Params (M) | F1-Score (%) | Avg. IoU (%) |

|---|---|---|---|---|---|---|---|---|---|

| v12 | 81.5 ± 1.6 | 79.7 ± 0.2 | 87.0 ± 1.2 | 58.0 ± 1.0 | 3.07 ± 0.46 | 5.8 | 2.51 | 82.3 ± 1.1 | 81.2 |

| v12 + Lookahead | 86.9 ± 0.0 | 80.2 ± 0.0 | 86.7 ± 0.0 | 56.9 ± 0.0 | 3.73 ± 0.57 | 5.8 | 2.51 | 83.4 ± 0.0 | 81.2 |

| v12 + GDR-Conv | 84.3 ± 0.6 | 81.9 ± 1.0 | 88.1 ± 0.9 | 60.4 ± 0.8 | 3.80 ± 0.42 | 5.7 | 2.36 | 83.3 ± 0.6 | 82.6 |

| v12 + GTDR-C3 | 84.5 ± 2.7 | 85.0 ± 1.6 | 89.8 ± 1.0 | 64.0 ± 1.3 | 4.37 ± 0.71 | 5.2 | 2.28 | 84.4 ± 0.0 | 83.3 |

| v12 + Lookahead + GDR-Conv + GTDR-C3 (Ours) | 87.3 ± 0.82 | 83.4 ± 1.52 | 90.9 ± 0.70 | 65.5 ± 0.87 | 4.23 ± 1.60 | 4.8 | 2.23 | 85.8 ± 0.4 | 83.4 |

| Model | Precision (%) | Recall (%) | mAP:0.5 (%) | mAP:0.5:0.95 (%) | Inf (ms) | GFLOPs | Parameter (M) | F1-Score (%) |

|---|---|---|---|---|---|---|---|---|

| YOLOv7 | 65.8 | 75.4 | 71.5 | 38.3 | 5.5 | 103.2 | 36.5 | 70.2 |

| YOLOv9 | 86.1 | 83.5 | 89.1 | 62.5 | 6.1 | 26.7 | 7.2 | 84.8 |

| YOLOv10 | 86.8 | 74.2 | 85.2 | 57.6 | 4.3 | 8.2 | 2.7 | 79.9 |

| YOLOv11 | 87.0 | 80.8 | 87.7 | 59.3 | 2.5 | 6.3 | 2.6 | 83.8 |

| YOLOv12 | 85.0 | 79.7 | 87.0 | 58.0 | 3.6 | 5.8 | 2.5 | 82.3 |

| ATSS | 87.8 | 90.0 | 95.0 | 75.5 | – | 279 | 51.1 | 89.0 |

| Double-Head | 84.7 | 93.1 | 93.1 | 93.1 | – | 408 | 46.9 | 88.7 |

| RTMDet | 69.6 | 86.6 | 83.5 | 53.0 | – | 8.0 | 4.9 | 77.1 |

| GTDR-YOLOv12 (Ours) | 88.0 | 83.9 | 90.0 | 63.8 | 5.7 | 4.8 | 2.2 | 85.9 |

| Model | Precision (%) | Recall (%) | mAP:0.5 (%) | mAP:0.5:0.95 (%) | Inference (ms) | GFLOPs | Parameters (M) | F1-Score (%) |

|---|---|---|---|---|---|---|---|---|

| YOLOv12 (COCO Pretrain) | 85.2 | 88.7 | 90.8 | 64.7 | 5.8 | 5.8 | 2.51 | 86.9 |

| YOLOv12-GTDR | 88.0 | 83.9 | 90.0 | 63.8 | 3.0 | 4.8 | 2.23 | 85.9 |

| YOLOv12-GTDR (YOLOv12-GTDR Pretrain) | 87.1 | 87.0 | 91.5 | 67.4 | 4.2 | 4.8 | 2.23 | 87.0 |

| Model | GFLOPs | FPS |

|---|---|---|

| YOLOv12 | 5.8 | 48 |

| YOLOv12 + Lookahead | 5.8 | 48 |

| YOLOv12 + GDR-Conv | 5.7 | 49 |

| YOLOv12 + GTDR-C3 | 5.2 | 54 |

| GTDR-YOLOv12 (Ours) | 4.8 | 58 |

| Model | Dataset | Precision (%) | Recall (%) | mAP:0.5 (%) | FPS | GFLOPs | Embedded Platform | Reference |

|---|---|---|---|---|---|---|---|---|

| YOLO-SW | Weed25 | — | — | 92.3 | 59 | 19.9 | NVIDIA Jetson AGX Orin | [35] |

| YOLOv8n-SSDW | Barnyard Grass | 86.7 | 75.5 | 85.1 | — | 7.4 | NVIDIA Jetson Nano B01 | [36] |

| YOLOv8s-Improve | Maize Field Weed | 95.8 | 93.2 | 94.5 | — | 12.7 | — | [37] |

| Star-YOLO | CottonWeedDet12 | 95.3 | 93.9 | 95.4 | 118 | 5.0 | — | [38] |

| GTDR-YOLOv12 (Ours) | Weeds Detection | 88.0 | 83.9 | 90.0 | 58 | 4.8 | NVIDIA Jetson AGX Xavier | — |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, Z.; Khan, Z.; Shen, Y.; Liu, H. GTDR-YOLOv12: Optimizing YOLO for Efficient and Accurate Weed Detection in Agriculture. Agronomy 2025, 15, 1824. https://doi.org/10.3390/agronomy15081824

Yang Z, Khan Z, Shen Y, Liu H. GTDR-YOLOv12: Optimizing YOLO for Efficient and Accurate Weed Detection in Agriculture. Agronomy. 2025; 15(8):1824. https://doi.org/10.3390/agronomy15081824

Chicago/Turabian StyleYang, Zhaofeng, Zohaib Khan, Yue Shen, and Hui Liu. 2025. "GTDR-YOLOv12: Optimizing YOLO for Efficient and Accurate Weed Detection in Agriculture" Agronomy 15, no. 8: 1824. https://doi.org/10.3390/agronomy15081824

APA StyleYang, Z., Khan, Z., Shen, Y., & Liu, H. (2025). GTDR-YOLOv12: Optimizing YOLO for Efficient and Accurate Weed Detection in Agriculture. Agronomy, 15(8), 1824. https://doi.org/10.3390/agronomy15081824