Autonomous Navigation and Obstacle Avoidance for Orchard Spraying Robots: A Sensor-Fusion Approach with ArduPilot, ROS, and EKF

Abstract

1. Introduction

2. Materials and Methods

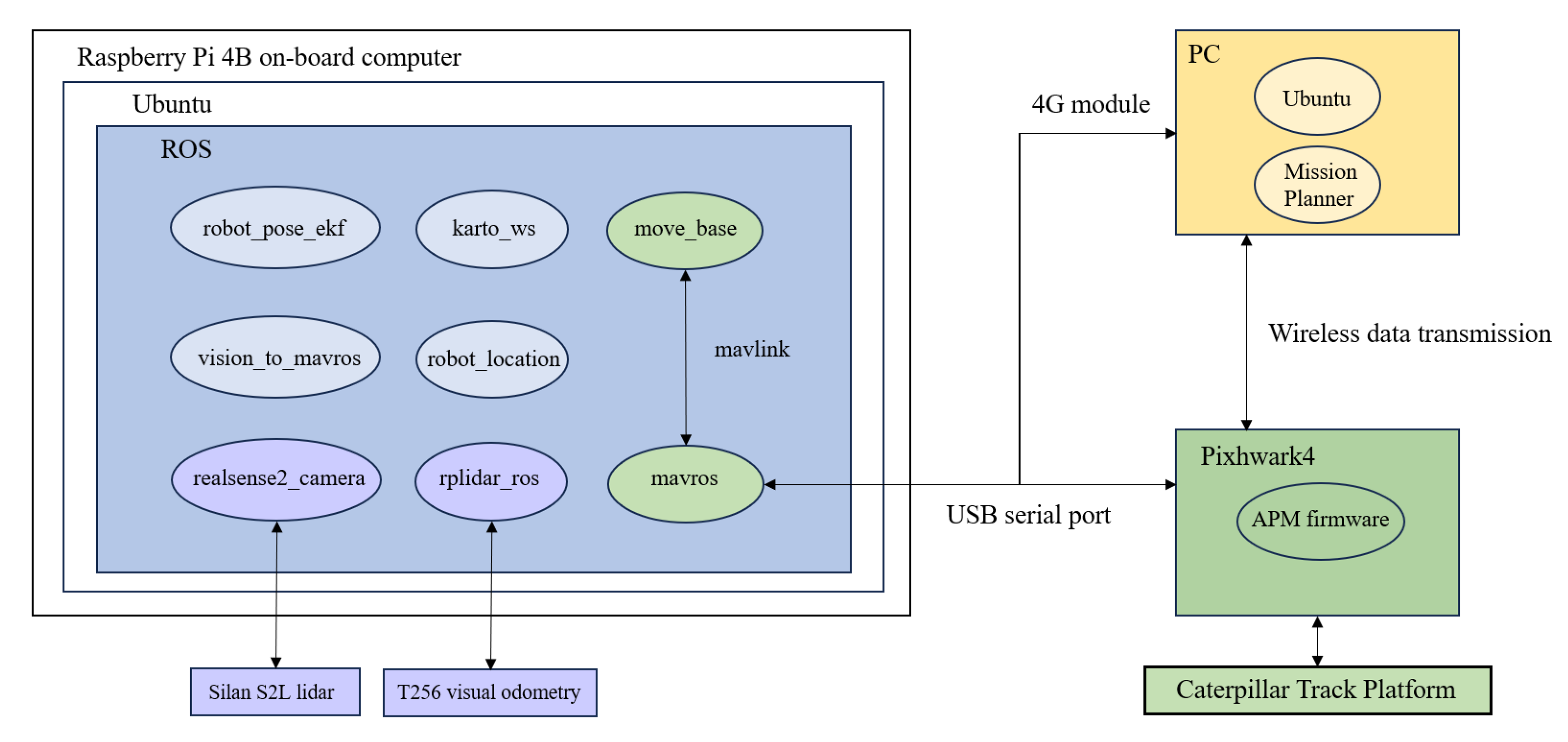

2.1. Design of Autonomous Cruising and Obstacle Avoidance System

2.1.1. System Hardware Design

2.1.2. System Software Design

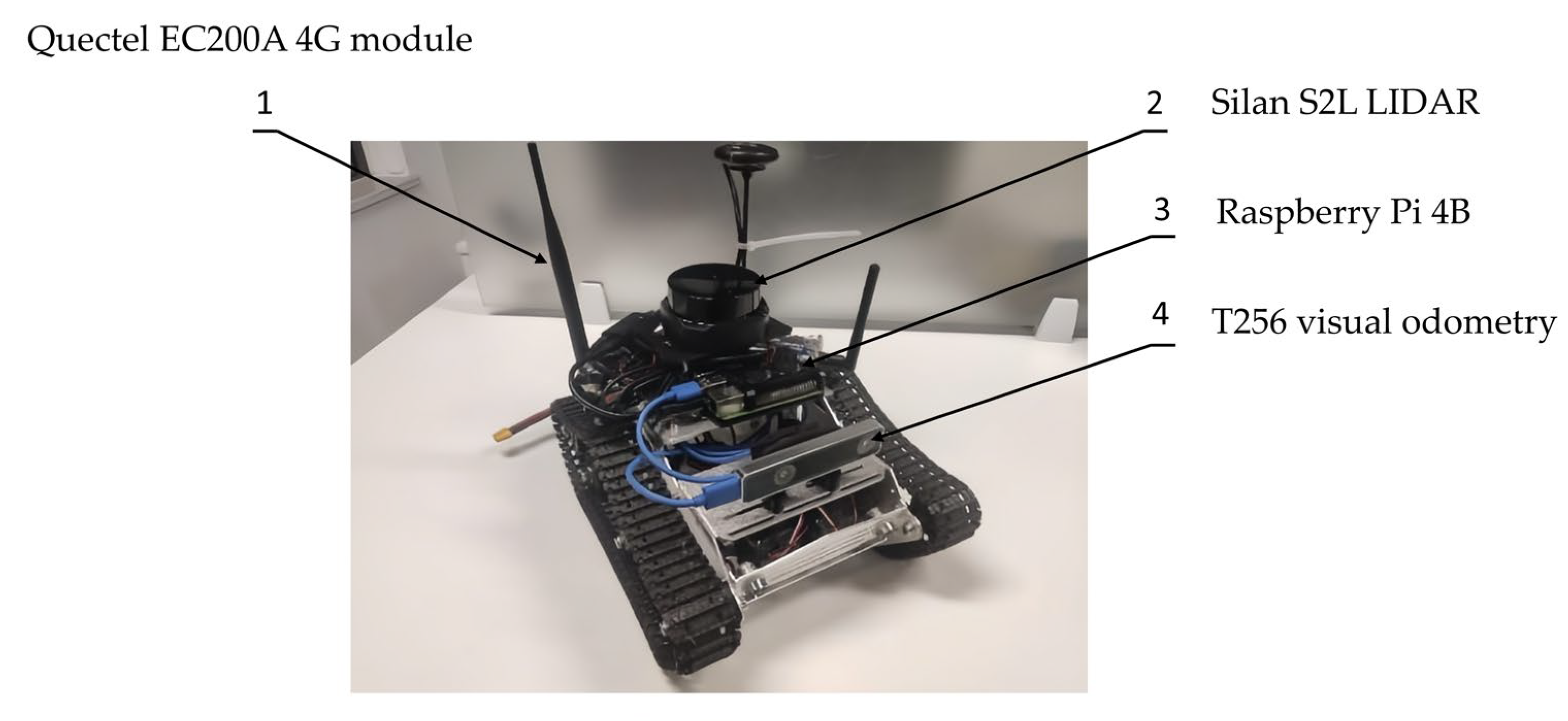

2.2. Experimental Design and Model Vehicle Construction

2.3. Sensor Data Fusion

2.3.1. Extended Kalman Filter

- (1) Definition of the state vector

- (2) State equation

- (3) Measurement equation

- (4) State prediction

- (5) Calculation of the state transition Jacobian matrix

- (6) Covariance prediction

- (7) Calculation of the measurement Jacobian matrix

- (8) Calculation of the Kalman gain

- (9) State update

- (10) Covariance update
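Steps (4) to (10) follow the standard EKF predict-update cycle. The Python (NumPy) sketch below shows that cycle in its textbook form, with additive process noise Q and measurement noise R; it is an illustration, not the authors' implementation. Because the paper's state vector and models are not reproduced here, the example wires the filter to a hypothetical planar unicycle model with state [x, y, θ], control [v, ω], and a position-only measurement.

```python
import numpy as np


class ExtendedKalmanFilter:
    """Textbook EKF: nonlinear models f and h, linearized via Jacobians."""

    def __init__(self, x0, P0, Q, R):
        self.x = np.asarray(x0, dtype=float)  # state estimate
        self.P = np.asarray(P0, dtype=float)  # state covariance
        self.Q = np.asarray(Q, dtype=float)   # process noise covariance
        self.R = np.asarray(R, dtype=float)   # measurement noise covariance

    def predict(self, f, F_jac, u, dt):
        F = F_jac(self.x, u, dt)             # step (5): Jacobian at the prior estimate
        self.x = f(self.x, u, dt)            # step (4): state prediction
        self.P = F @ self.P @ F.T + self.Q   # step (6): covariance prediction

    def update(self, z, h, H_jac):
        H = H_jac(self.x)                    # step (7): measurement Jacobian
        S = H @ self.P @ H.T + self.R        # innovation covariance
        K = self.P @ H.T @ np.linalg.inv(S)  # step (8): Kalman gain
        y = np.asarray(z, dtype=float) - h(self.x)  # innovation z - h(x)
        self.x = self.x + K @ y              # step (9): state update
        I = np.eye(self.x.size)
        self.P = (I - K @ H) @ self.P        # step (10): covariance update


# Hypothetical planar unicycle model (not the paper's): state [x, y, theta],
# control u = [v, omega], measurement z = [x, y].
def f(x, u, dt):
    v, w = u
    return np.array([x[0] + v * np.cos(x[2]) * dt,
                     x[1] + v * np.sin(x[2]) * dt,
                     x[2] + w * dt])


def F_jac(x, u, dt):
    v, _ = u
    return np.array([[1.0, 0.0, -v * np.sin(x[2]) * dt],
                     [0.0, 1.0, v * np.cos(x[2]) * dt],
                     [0.0, 0.0, 1.0]])


def h(x):
    return x[:2]


def H_jac(x):
    return np.array([[1.0, 0.0, 0.0],
                     [0.0, 1.0, 0.0]])


ekf = ExtendedKalmanFilter(x0=[0.0, 0.0, 0.0], P0=np.eye(3) * 0.1,
                           Q=np.eye(3) * 0.01, R=np.eye(2) * 0.05)
ekf.predict(f, F_jac, u=(1.0, 0.0), dt=0.1)
ekf.update(z=[0.12, 0.01], h=h, H_jac=H_jac)
```

The Jacobian F is evaluated before f is applied so that it is taken at the prior estimate. The simple (I − KH)P covariance update is used for brevity; the Joseph form is numerically more robust when P must stay symmetric and positive definite over long runs.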

2.3.2. Fusion of Visual Odometry and IMU Data

2.3.3. Fusion of GPS and Visual Odometry Data

- (1) State vector definition

- (2) State equation

- (3) Measurement equation

- (4) Key EKF steps

- (5) Kalman gain calculation

- (6) State update
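The GPS and visual odometry fusion steps can be illustrated with a deliberately simplified, loosely coupled sketch: a hypothetical 2-D position state in which each visual odometry displacement drives the prediction and each GPS fix, assumed to be already expressed in the same local metric frame, drives the correction. With these linear models the Jacobians are identity matrices, so the EKF steps reduce to the ordinary Kalman filter form. The function name, noise parameters, and choice of state are assumptions for illustration; the paper's own state vector and equations may differ.

```python
import numpy as np


def fuse_vo_gps(p0, P0, vo_deltas, gps_fixes, q_vo, r_gps):
    """Hypothetical loosely coupled 2-D fusion.

    vo_deltas: per-step VO displacements [dx, dy] in the local frame (m).
    gps_fixes: per-step GPS positions [x, y] in the same frame, or None
               when no fix is available at that step.
    q_vo, r_gps: assumed per-axis variances (m^2) for VO drift and GPS.
    """
    p = np.asarray(p0, dtype=float)
    P = np.asarray(P0, dtype=float)
    Q = np.eye(2) * q_vo
    R = np.eye(2) * r_gps
    H = np.eye(2)  # GPS observes position directly
    track = []
    for d, z in zip(vo_deltas, gps_fixes):
        # Prediction: dead-reckon with the VO displacement (F = I)
        p = p + np.asarray(d, dtype=float)
        P = P + Q
        if z is not None:
            # Kalman gain
            S = H @ P @ H.T + R
            K = P @ H.T @ np.linalg.inv(S)
            # State and covariance update with the GPS innovation
            p = p + K @ (np.asarray(z, dtype=float) - H @ p)
            P = (np.eye(2) - K @ H) @ P
        track.append(p.copy())
    return np.array(track), P
```

Between GPS fixes the estimate drifts with the VO increments and its covariance grows by Q each step; each fix pulls the estimate toward the GPS position by an amount set by the ratio of accumulated VO uncertainty to GPS noise.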

2.4. Coordinate System Conversion
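Fusing GPS with visual odometry requires both to be expressed in one metric frame. As one common option, shown here as an illustrative sketch rather than the conversion used in this work, WGS-84 latitude, longitude, and ellipsoidal height can be converted through Earth-centred Earth-fixed (ECEF) coordinates into a local East-North-Up (ENU) frame anchored at a chosen reference point; ENU is the world-frame convention recommended by ROS REP 103. The function names and the reference origin are hypothetical.

```python
import math

# WGS-84 ellipsoid constants
A = 6378137.0            # semi-major axis (m)
F = 1 / 298.257223563    # flattening
E2 = F * (2 - F)         # first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, h):
    """WGS-84 geodetic coordinates (deg, deg, m) to ECEF (m)."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    n = A / math.sqrt(1 - E2 * math.sin(lat) ** 2)  # prime vertical radius
    x = (n + h) * math.cos(lat) * math.cos(lon)
    y = (n + h) * math.cos(lat) * math.sin(lon)
    z = (n * (1 - E2) + h) * math.sin(lat)
    return x, y, z


def geodetic_to_enu(lat_deg, lon_deg, h, lat0_deg, lon0_deg, h0):
    """East-North-Up offset (m) of a fix relative to a reference origin."""
    x, y, z = geodetic_to_ecef(lat_deg, lon_deg, h)
    x0, y0, z0 = geodetic_to_ecef(lat0_deg, lon0_deg, h0)
    dx, dy, dz = x - x0, y - y0, z - z0
    lat0, lon0 = math.radians(lat0_deg), math.radians(lon0_deg)
    sl, cl = math.sin(lat0), math.cos(lat0)
    so, co = math.sin(lon0), math.cos(lon0)
    # Rotate the ECEF difference vector into the local tangent plane
    e = -so * dx + co * dy
    n = -sl * co * dx - sl * so * dy + cl * dz
    u = cl * co * dx + cl * so * dy + sl * dz
    return e, n, u
```

Over the few hundred metres of an orchard block, a flat-earth approximation would give nearly the same result; the ECEF route is shown because it stays exact regardless of distance from the origin.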

2.5. Model Vehicle Test

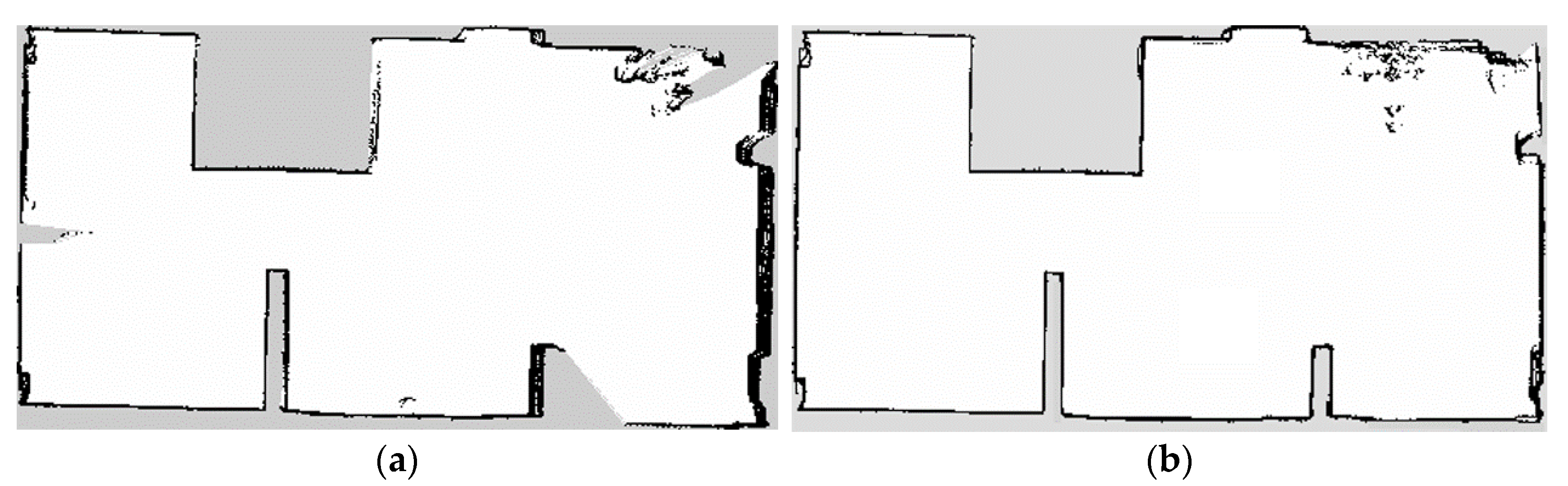

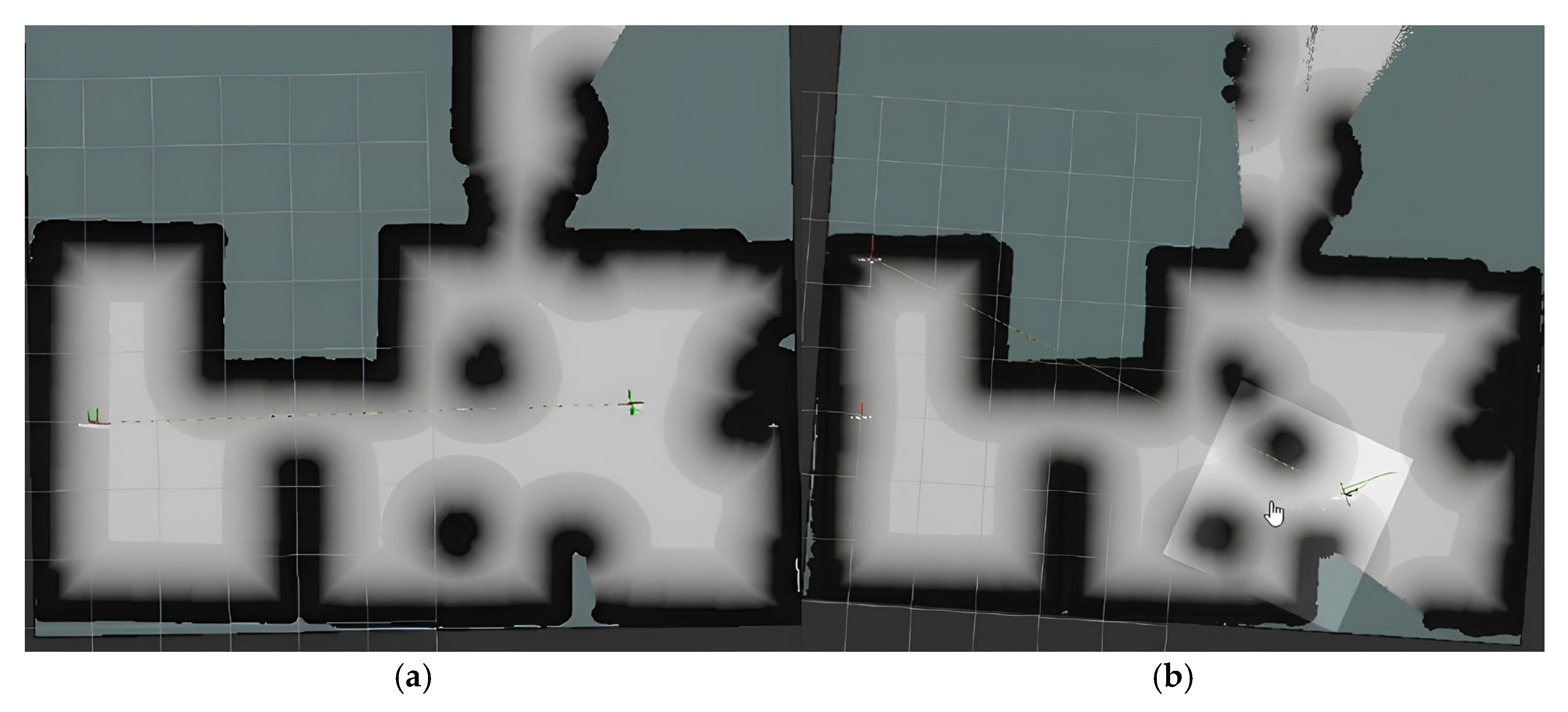

2.5.1. Indoor Mapping and Path Planning Test

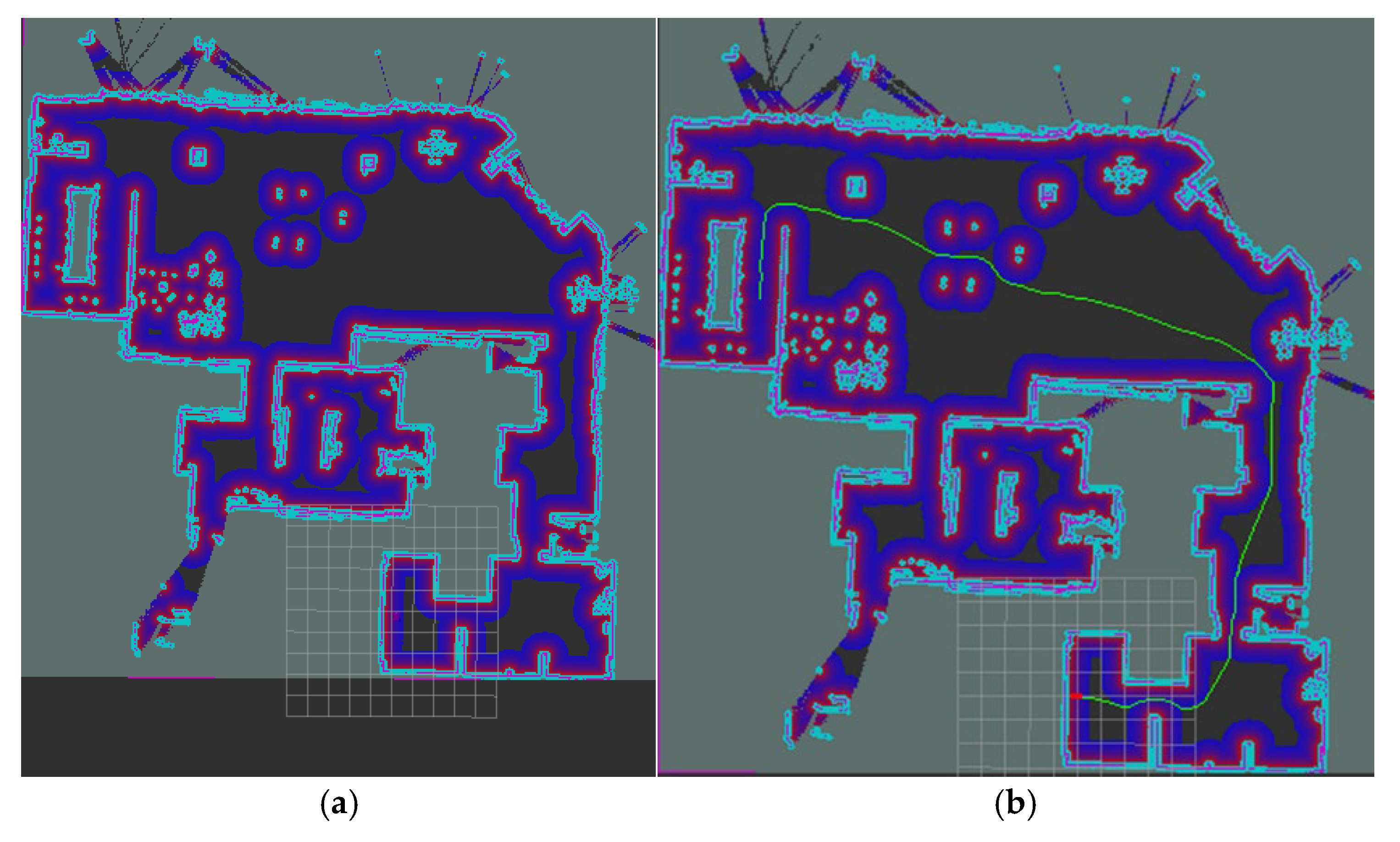

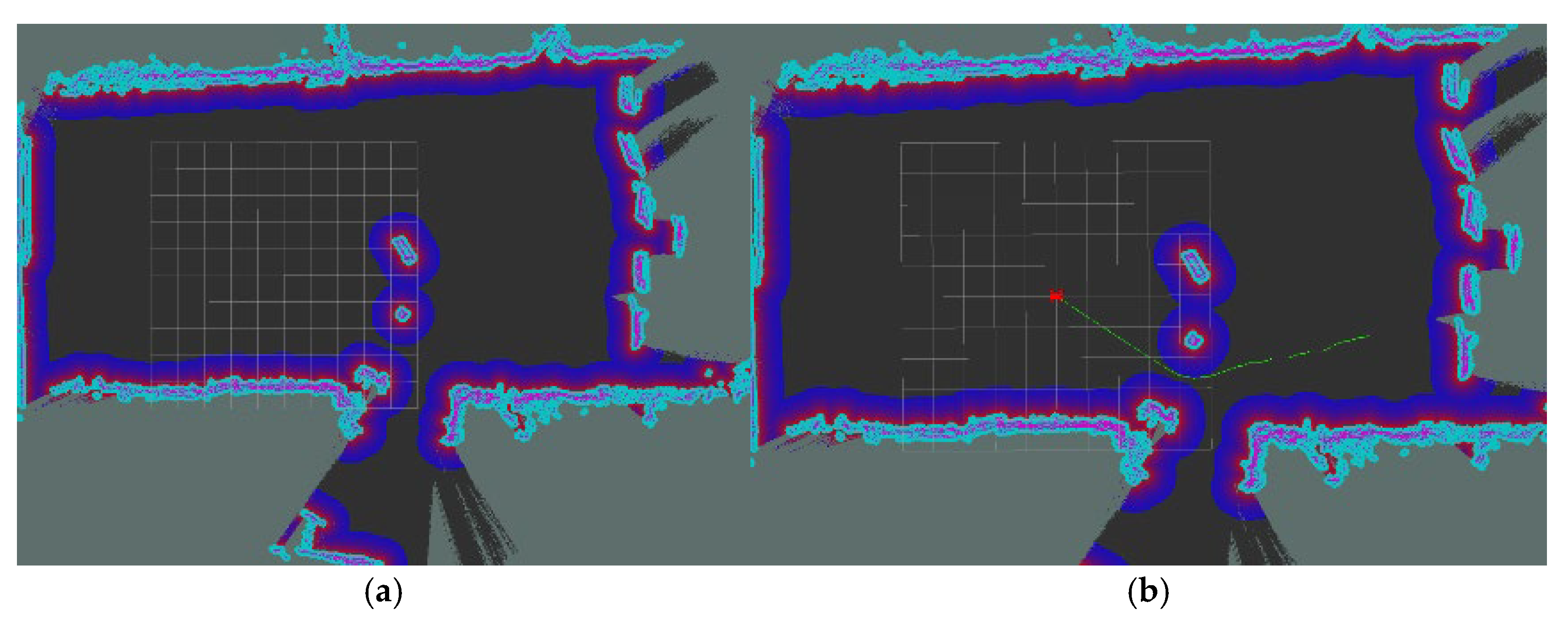

2.5.2. Indoor Obstacle Avoidance Test

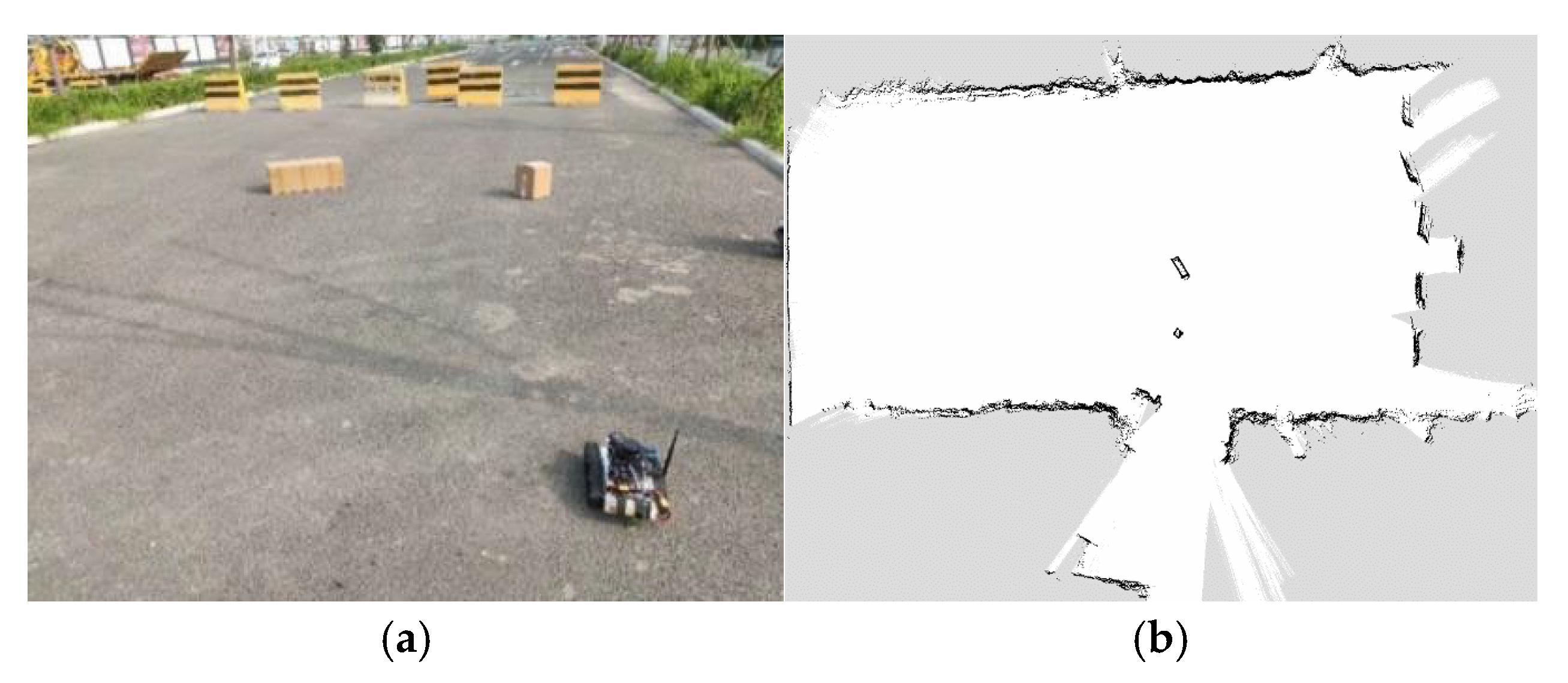

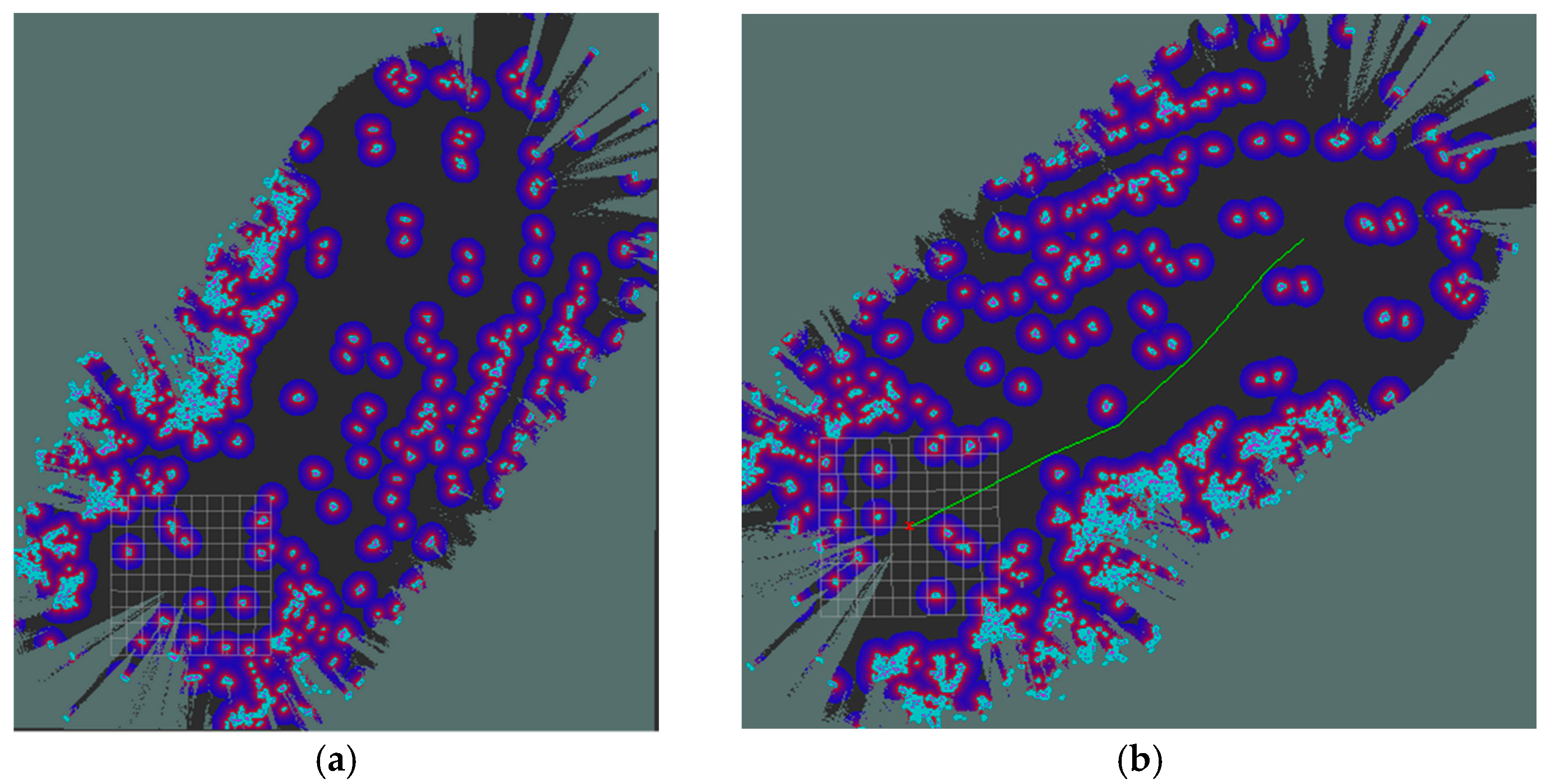

2.5.3. Outdoor Mapping and Path Planning Test

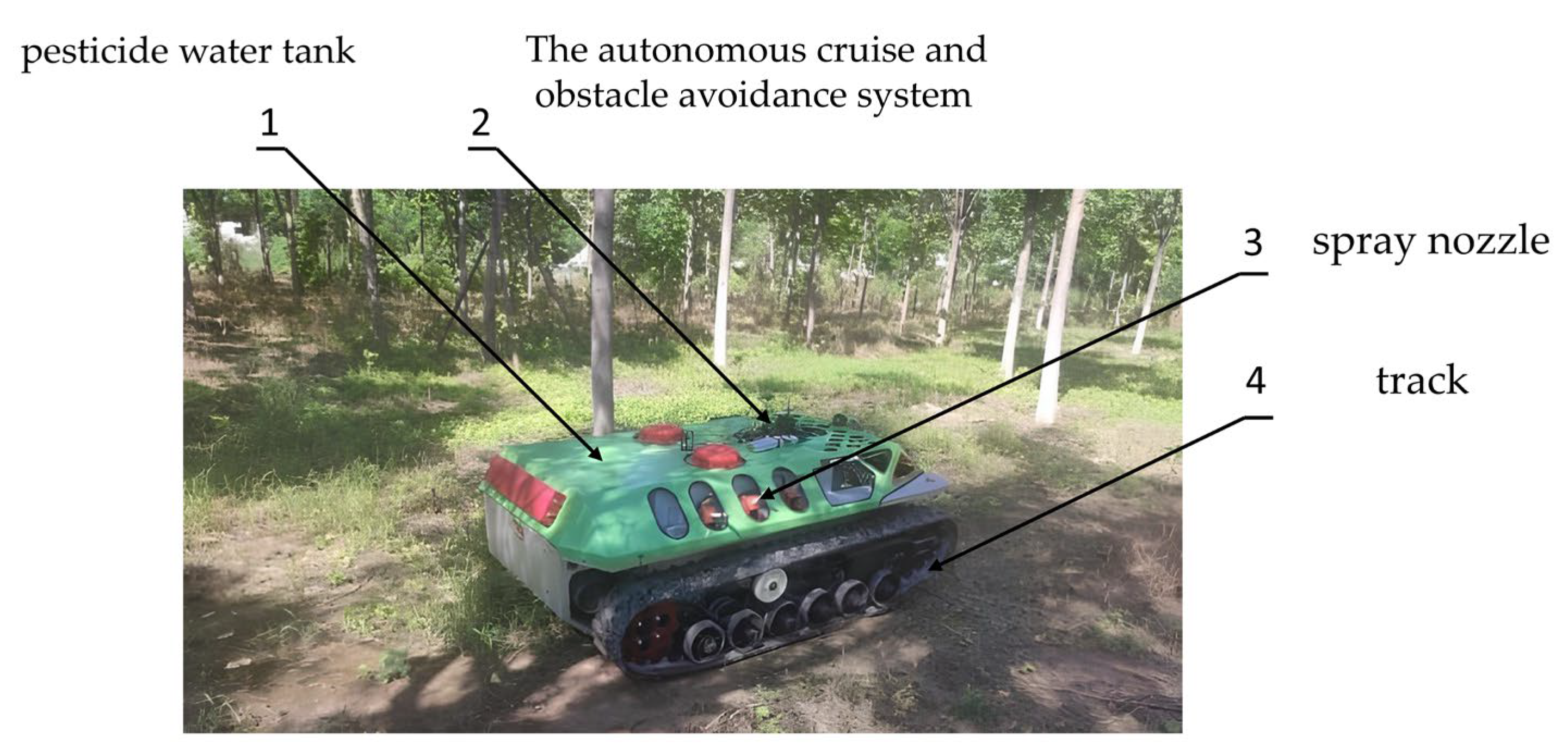

2.6. Modification of Unmanned Spraying Vehicles

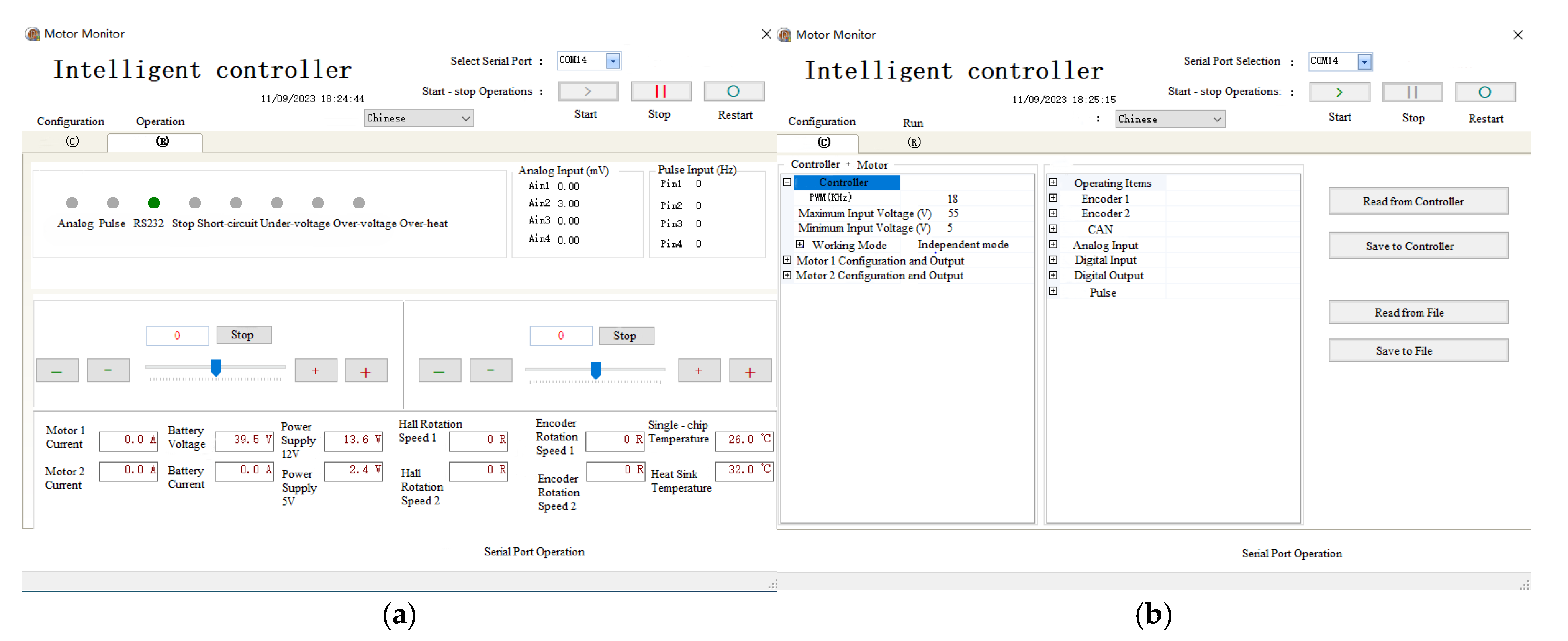

2.6.1. Changing the Servo Control Mode and ArduPilot Output Mode

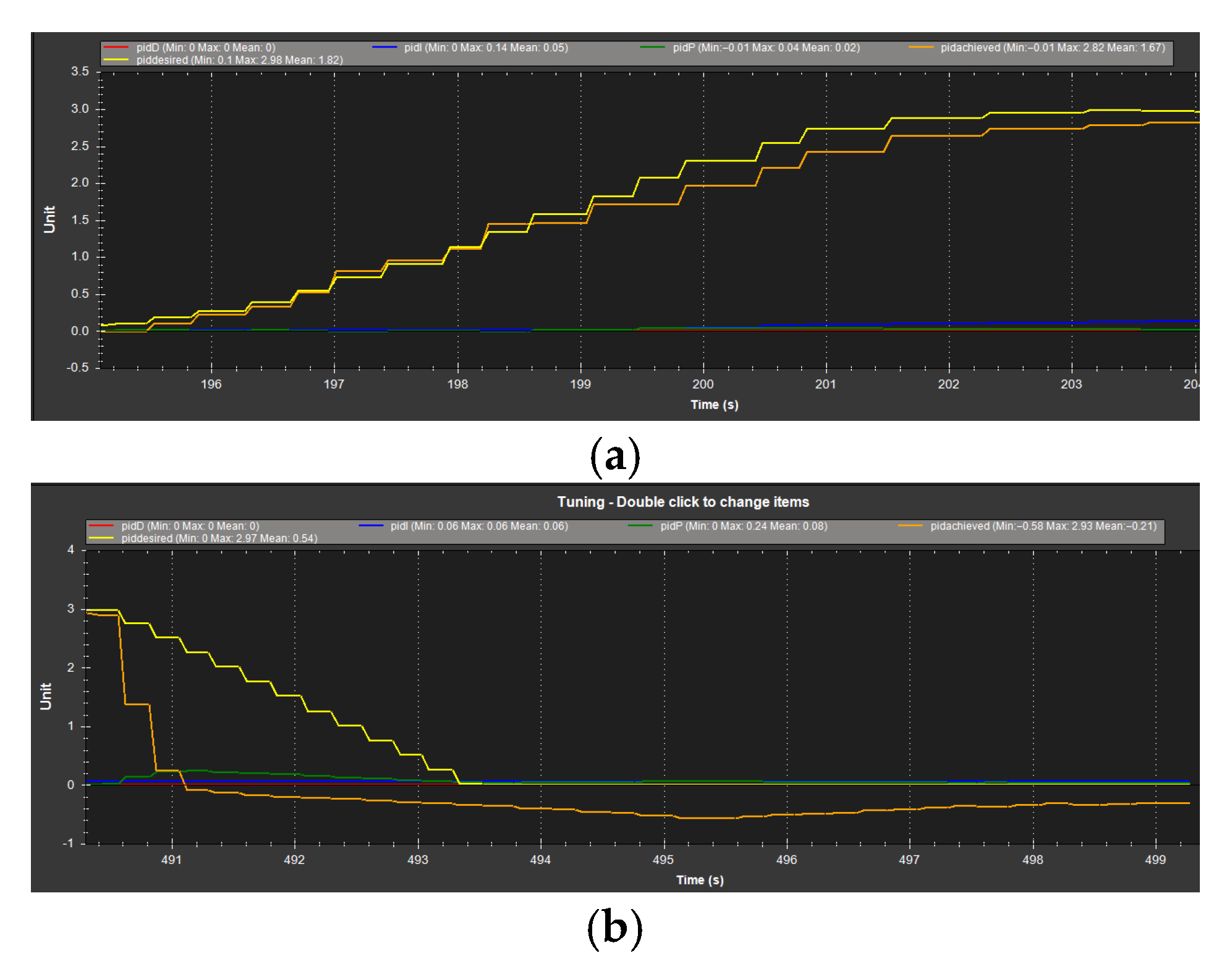

2.6.2. PID Control Parameter Adjustment
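PID tuning adjusts the proportional, integral, and derivative gains of a feedback loop. The sketch below is a generic textbook discrete PID with output clamping and conditional-integration anti-windup, intended only to illustrate what each gain does; it is not ArduPilot's internal controller, and the gains and the first-order speed plant in the usage lines are hypothetical. On an ArduPilot Rover, the corresponding gains are set through parameters such as ATC_STR_RAT_P/I/D (steering rate) and ATC_SPEED_P/I/D (speed).

```python
class PID:
    """Generic discrete PID with output clamping and anti-windup."""

    def __init__(self, kp, ki, kd, out_min, out_max):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0     # accumulated error * time
        self.prev_error = None  # None until the first call

    def step(self, setpoint, measurement, dt):
        error = setpoint - measurement
        # Skip the derivative on the first call to avoid a startup spike
        deriv = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        candidate = self.integral + error * dt
        out = self.kp * error + self.ki * candidate + self.kd * deriv
        # Conditional integration: keep the new integral only when unsaturated
        if self.out_min <= out <= self.out_max:
            self.integral = candidate
        return min(max(out, self.out_min), self.out_max)


# Hypothetical usage: PI speed loop on a first-order plant dv/dt = u - v
pid = PID(kp=2.0, ki=1.0, kd=0.0, out_min=-5.0, out_max=5.0)
v, dt = 0.0, 0.02
for _ in range(1000):
    u = pid.step(setpoint=1.0, measurement=v, dt=dt)
    v += dt * (u - v)  # forward-Euler plant update
```

Raising kp speeds up the response, ki removes steady-state error, and kd damps overshoot; freezing the integral while the output is saturated keeps it from winding up during large steering or throttle demands.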

2.7. Orchard Test of Unmanned Spraying Vehicle

3. Results and Discussion

3.1. GPS Fusion Positioning

3.2. Test Site Mapping and Path Planning Test

4. Discussion

- (1) The proposed approach combines LIDAR, visual odometry, IMU, and GPS sensing with EKF-based data fusion. The SLAM and path planning functions provided by the Silan S2L LIDAR and the Intel RealSense T265 visual odometry camera enable the vehicle to navigate precisely in the orchard. This precision helps ensure that pesticide is applied only where it is needed, reducing waste and improving pesticide utilization. Autonomous operation also reduces workers' exposure to pesticides, protecting their health. Moreover, because the system can carry out the entire spraying process continuously without human intervention, it represents a significant step toward higher levels of automation.

- (2) The system may also face several challenges in practical deployment. Orchard terrain is complex: slopes and soft soil can reduce the vehicle's stability and accelerate mechanical wear. Strong light, dust, and similar factors can degrade sensor performance and, in turn, navigation and obstacle avoidance. In addition, extended operation consumes substantial energy, and because power supply in orchards is limited, power consumption must be optimized or sustainable energy sources adopted. 4G communication is susceptible to interference, which may compromise real-time control, especially during critical operations such as obstacle avoidance. The system therefore needs comprehensive improvements in terrain adaptability, environmental robustness, energy management, and communication stability to operate reliably.

- (3) Future development of the orchard spraying robot system has two main directions: integration with smart agriculture systems and optimization of the sensor-fusion algorithms. Connecting to smart agriculture networks could allow spraying strategies to be adjusted dynamically from real-time weather, soil nutrient, and pest data, improving plant protection efficiency; however, interoperability between heterogeneous platforms, data standardization, and reliable field communication (e.g., signal attenuation in dense canopies) must first be addressed. The second direction is to explore machine learning-based sensor fusion to improve positioning accuracy and adaptability to complex environments; the key hurdles here are building lightweight models that run on low-power hardware and ensuring that the models generalize across diverse orchard scenarios.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Sensor | Model | Parameters |
|---|---|---|
| LIDAR | Silan S2L | Scanning range: 0.1–12 m; angular resolution: 0.1125°; scan frequency: 10 Hz |
| Visual odometry | Intel RealSense T265 | Resolution: 848 × 800 pixels; field of view: 87° H × 58° V; frame rate: 30 Hz |
| IMU | Pixhawk 4 (ICM-20689) | Accelerometer range: ±4 g; gyroscope range: ±500°/s; frequency: 50 Hz |
| GPS | M9N | Accuracy: 1.5 m (CEP); update rate: 25 Hz |
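The GPS accuracy above is quoted as a circular error probable (CEP), whereas an EKF update needs a measurement-noise variance. Assuming zero-mean, uncorrelated east and north errors with equal variance, the radial error is Rayleigh distributed and CEP = σ√(2 ln 2) ≈ 1.1774σ, so 1.5 m CEP corresponds to σ ≈ 1.27 m per axis (σ² ≈ 1.62 m²). The helper names below are hypothetical, and datasheet CEP figures are typically specified under open-sky conditions, so a larger R may be warranted under the orchard canopy.

```python
import math


def cep_to_sigma(cep_m):
    """Per-axis standard deviation (m) from a 50% circular error probable.

    Assumes zero-mean, uncorrelated, equal-variance horizontal errors,
    so the radial error is Rayleigh distributed and CEP = sigma*sqrt(2 ln 2).
    """
    return cep_m / math.sqrt(2.0 * math.log(2.0))


def gps_measurement_cov(cep_m):
    """Diagonal 2x2 measurement-noise covariance R (m^2) for an EKF update."""
    var = cep_to_sigma(cep_m) ** 2
    return [[var, 0.0], [0.0, var]]


R_gps = gps_measurement_cov(1.5)  # 1.5 m CEP from the M9N row of the table
```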

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, X.; Zhao, X.; Liu, J.; Feng, W.; Fan, X. Autonomous Navigation and Obstacle Avoidance for Orchard Spraying Robots: A Sensor-Fusion Approach with ArduPilot, ROS, and EKF. Agronomy 2025, 15, 1373. https://doi.org/10.3390/agronomy15061373
