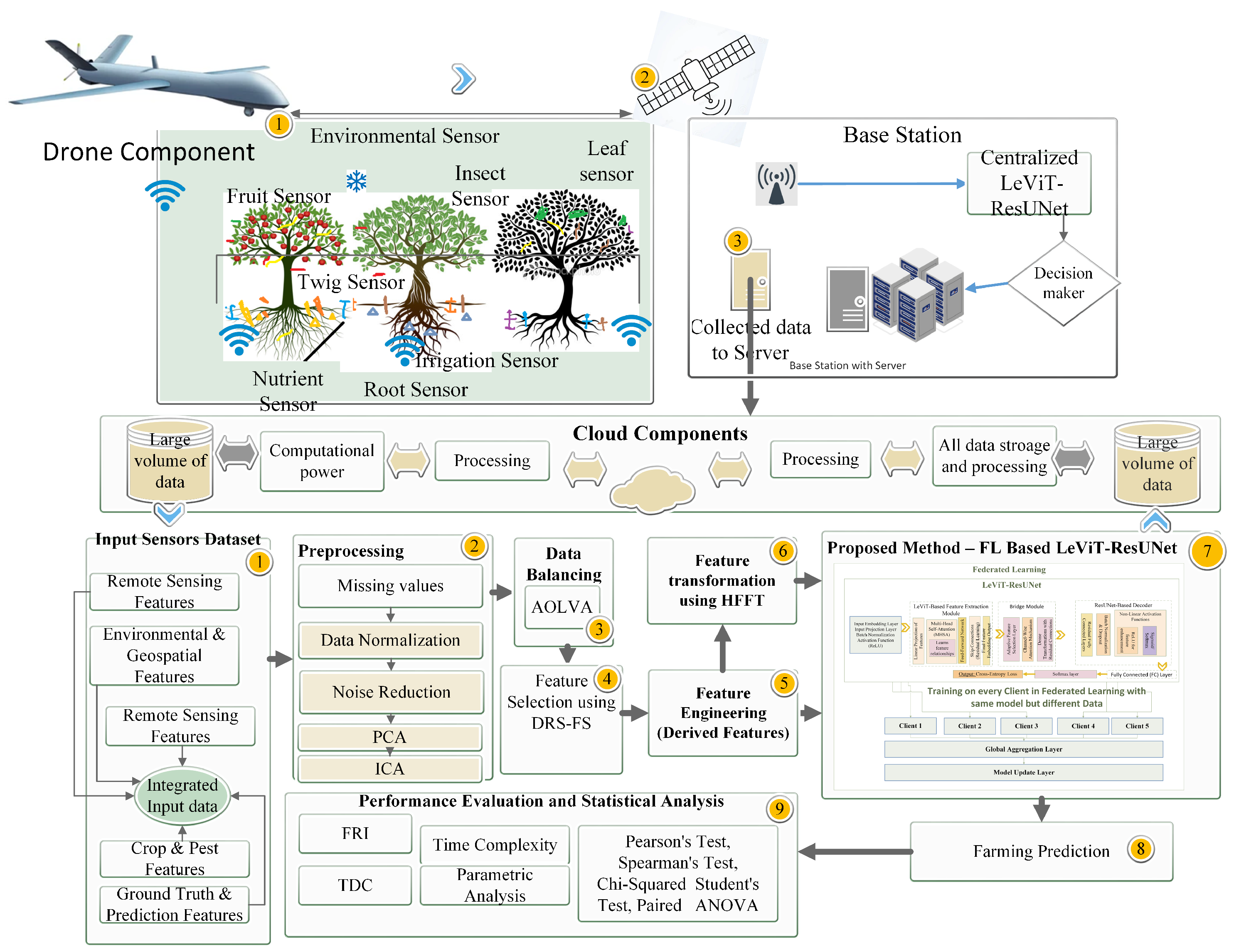

This section details the experimental setup and performance assessment of the FL-LeViT-ResUNet system, which is intended for precision agricultural monitoring. The model is evaluated within a federated learning framework on essential tasks such as crop health classification, insect detection, and yield-related prediction, using high-dimensional, multimodal agricultural data.

4.2. Discussion

The effectiveness of the proposed model was thoroughly assessed using a blend of standard evaluation metrics—including accuracy, F1-score, AUC, and log-loss—as well as two newly introduced metrics: the feature relevance index (FRI) and temporal deviation consistency (TDC). Additionally, the model’s performance was benchmarked against several leading deep learning architectures to ensure a comprehensive comparison.

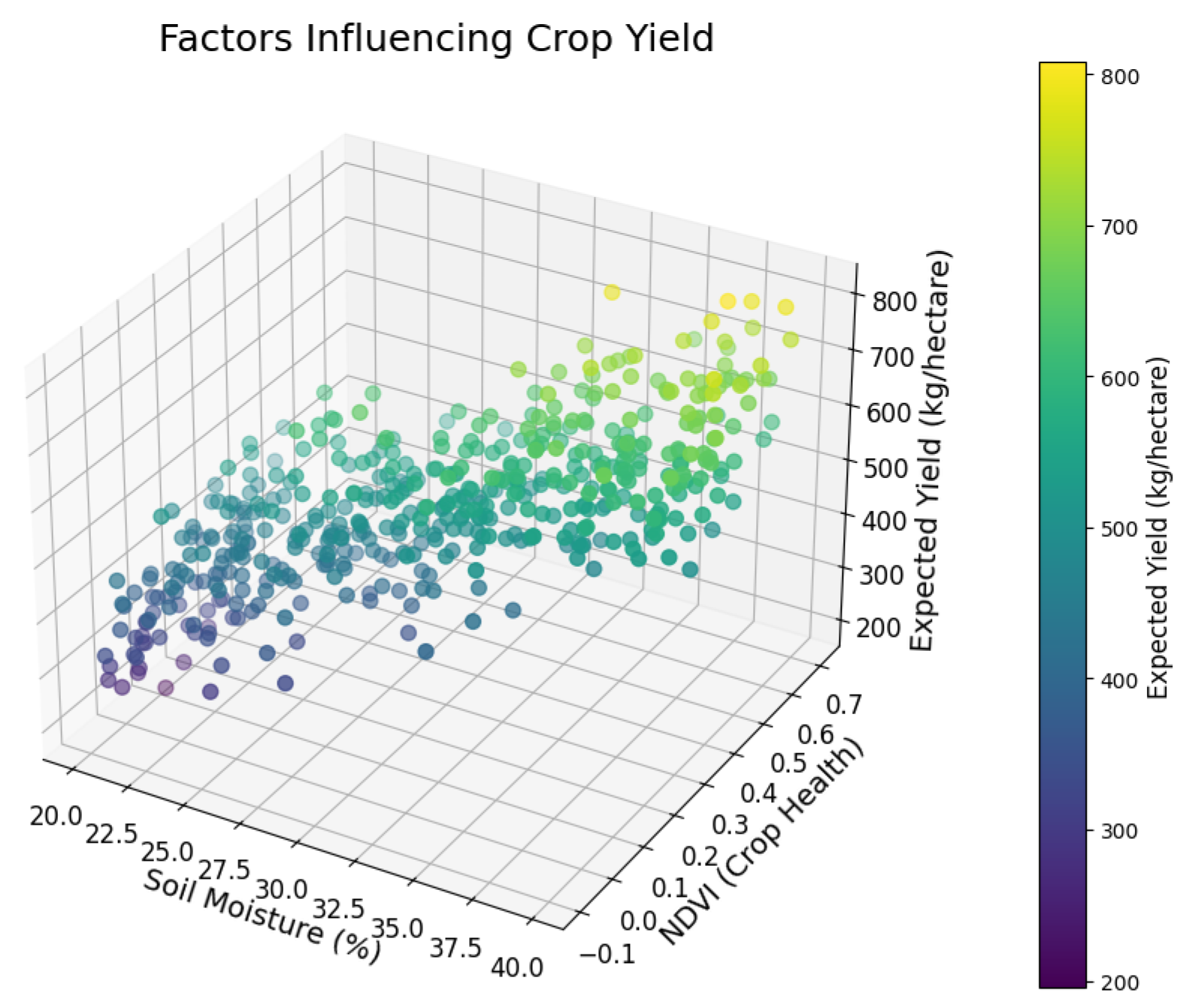

Figure 3 shows a 3D scatter plot of how soil moisture (%), the NDVI (crop health), and temperature (°C) relate to crop yield (kg/hectare). The color gradient encodes yield, with darker tones representing greater values. The NDVI and soil moisture both correlate positively with yield: adequate moisture and healthy crops boost output. The figure also shows that temperature excursions from 25 °C reduce yield, which underlines the need for climatic stability. By relating environmental conditions to yield, the visualization supports agricultural decision making. These correlations help to optimize irrigation, fertilization, and crop selection, and to design adaptive techniques that limit yield losses from temperature fluctuation.

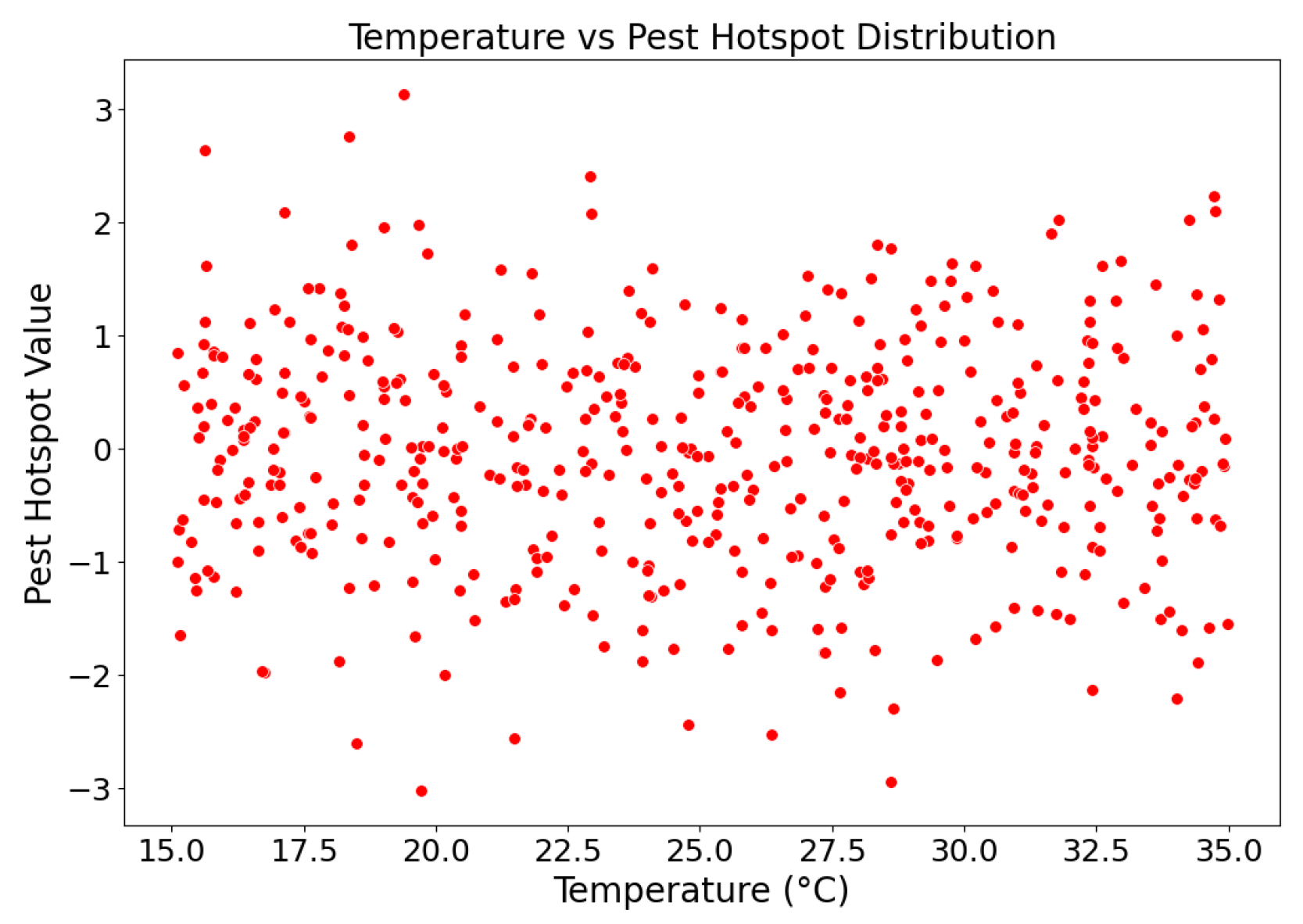

Figure 4 shows a scatter plot of the relationship between temperature (°C) and pest hotspot distribution. Red data points mark higher pest activity. The plot shows a modest association between rising temperatures and pest infestations, with pest activity concentrated in certain temperature ranges. This helps to explain how temperature changes affect insect behavior. By pinpointing critical times for pesticide application or biological controls, such insights allow for focused pest management. The analysis also emphasizes the need for suitable environmental conditions to limit insect outbreaks. Preventing production losses from insect activity and encouraging sustainable pest control are crucial for precision agriculture, and the visualization can be used to anticipate temperature-induced increases in insect populations.

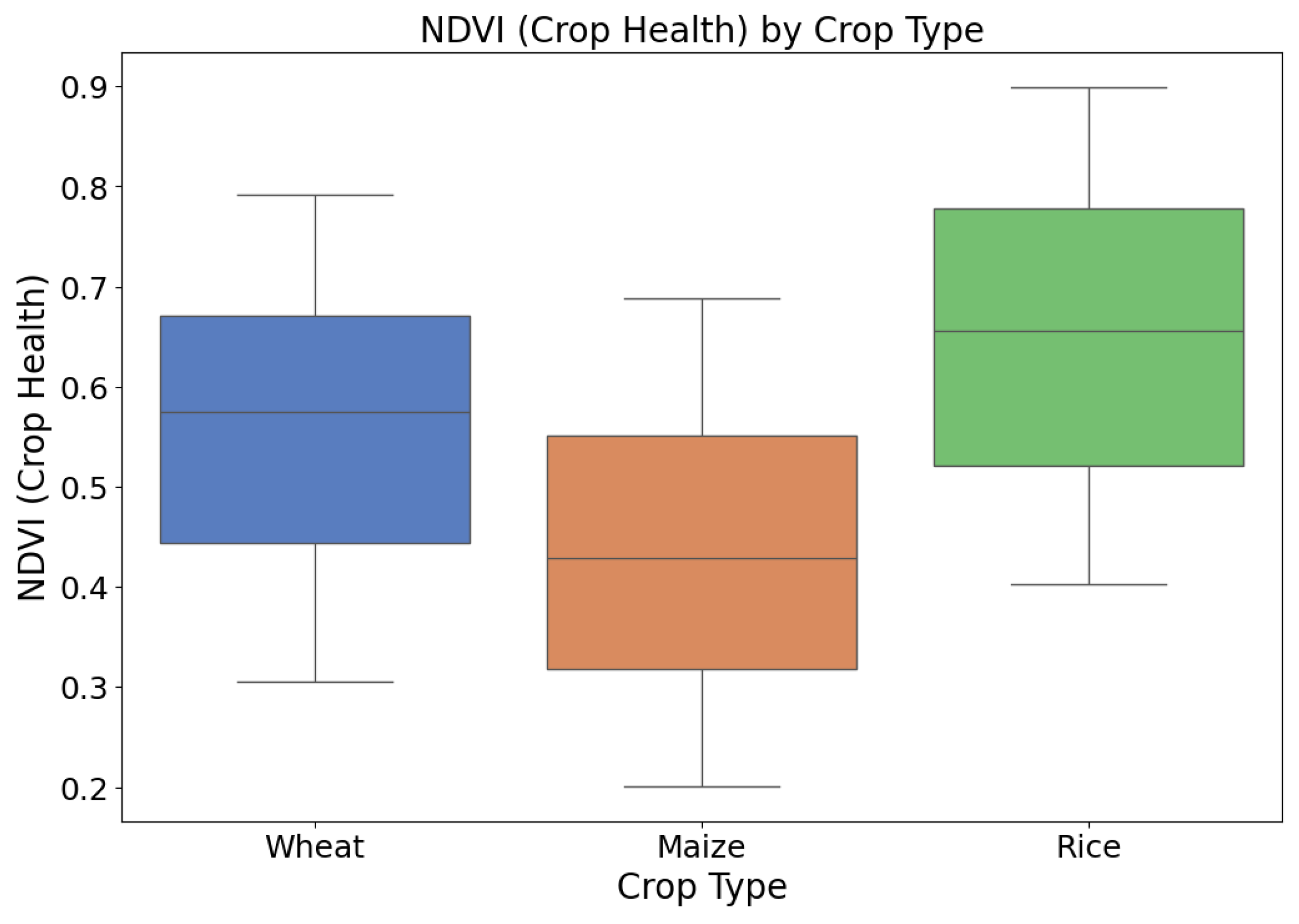

The box plot in Figure 5 displays NDVI (crop health) data for wheat, maize, and rice crops. Rice has the highest NDVI readings, indicating the best crop health, followed by wheat and maize. The result shows that crop health varies among crop types under comparable environmental conditions. This comparison is useful for crop evaluation and for judging ecological suitability. Within each crop, NDVI values vary with nutrient availability, irrigation efficiency, and insect resistance. The figure helps agricultural stakeholders to allocate resources by identifying high-performing crops for specific conditions. It also provides a baseline for assessing crop health after fertilization, irrigation, and insect management, allowing targeted measures to boost agricultural yield.

Figure 6 shows a correlation matrix heatmap for 25 agricultural parameters, such as the NDVI, soil moisture, temperature, and crop production. The heatmap shows strong positive correlations between the NDVI, soil moisture, and vegetation density, and moderate negative correlations between temperature fluctuation and crop performance. This helps to select features for predictive modeling by showing which factors substantially affect crop health and production. It also helps researchers to identify redundant or unnecessary features, improving computational efficiency and model accuracy. Identifying feature interdependencies in this way supports the construction of machine learning models for precision agriculture. The figure also helps to plan interventions by highlighting key variables, such as soil moisture and temperature, that must be monitored for sustainable farming and yield prediction.
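As a rough illustration of how a correlation matrix can flag redundant features, the following sketch computes pairwise Pearson correlations and lists pairs above a cutoff. The data are synthetic, and the function name `redundant_pairs`, the three feature names, and the 0.9 threshold are assumptions made for this example; they are not taken from the study's dataset or pipeline.

```python
import numpy as np

def redundant_pairs(X, names, threshold=0.9):
    """Return feature pairs whose absolute Pearson correlation exceeds threshold."""
    corr = np.corrcoef(X, rowvar=False)  # columns of X are features
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if abs(corr[i, j]) > threshold:
                pairs.append((names[i], names[j], float(corr[i, j])))
    return pairs

# Synthetic stand-in data (hypothetical, for illustration only).
rng = np.random.default_rng(0)
soil_moisture = rng.normal(30.0, 5.0, size=200)
ndvi = 0.02 * soil_moisture + rng.normal(0.0, 0.01, size=200)  # built to track moisture
temperature = rng.normal(25.0, 3.0, size=200)                  # generated independently

X = np.column_stack([soil_moisture, ndvi, temperature])
pairs = redundant_pairs(X, ["soil_moisture", "ndvi", "temperature"])
print(pairs)
```

In this toy setting only the soil moisture and NDVI columns are flagged, because the NDVI column was constructed from soil moisture; with real data the threshold would need to be chosen with care.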

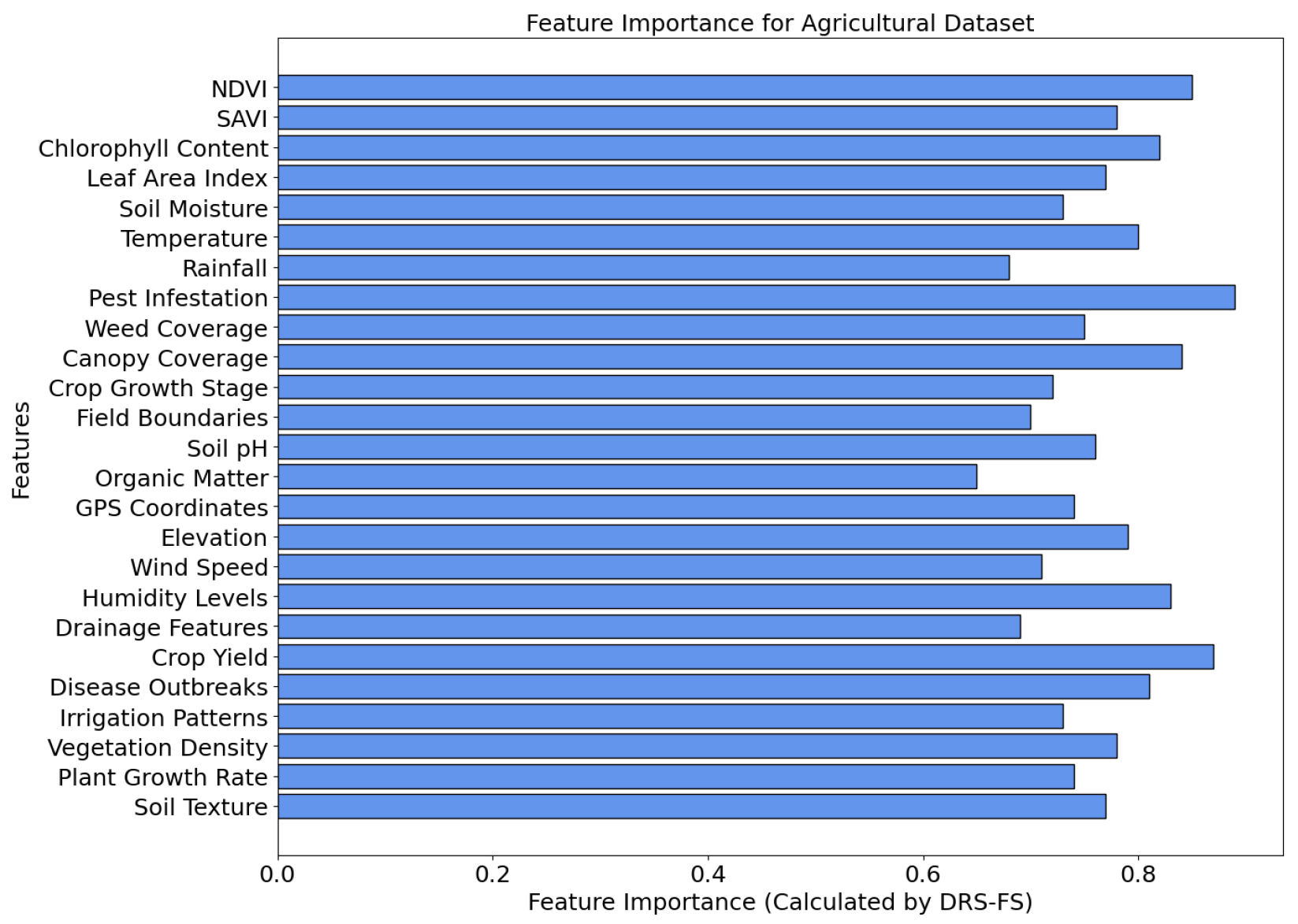

Figure 7 shows the feature significance scores calculated by DRS-FS. This bar chart ranks 25 crop management parameters, including the NDVI, pest infestation, soil moisture, and crop growth stage. Pest infestation and the NDVI emerge as essential to agricultural performance. The result illustrates how advanced feature selection techniques like DRS-FS identify important variables while lowering dimensionality. For model optimization, the ranking prioritizes essential predictors and reduces computing costs. In practice, the results help stakeholders to prioritize the variables that matter most for prediction accuracy and efficiency. This targeted analysis supports sustainable farming through resource-efficient practices, better production estimates, and improved management of environmental constraints.

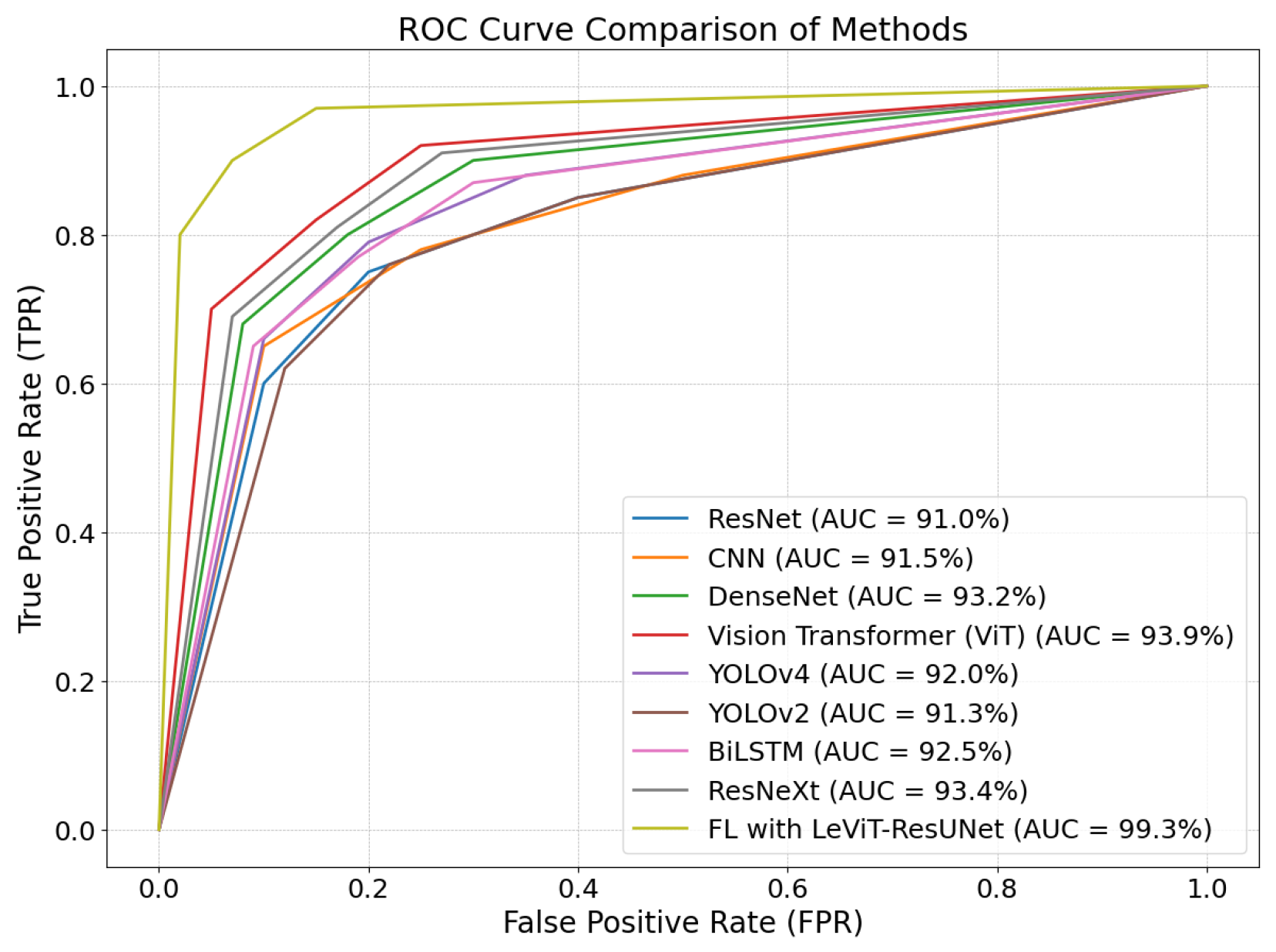

Figure 8 displays ROC curves for ResNet, the CNN, DenseNet, ViT, YOLOv4, and the proposed FL with LeViT-ResUNet. Each curve shows the trade-off between the true positive rate and the false positive rate across thresholds. The proposed technique obtains the highest AUC of 99.3%, surpassing ResNet (91.0%) and ViT (93.9%). This result indicates that FL with LeViT-ResUNet classifies agricultural data more accurately and robustly than the baselines. The ROC analysis suggests that the model can handle unbalanced datasets, reduce misclassification, and provide accurate pest identification, crop health assessment, and yield predictions. The figure supports the model's suitability for real-world agricultural conditions as a high-performance solution to precision agriculture problems.
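For readers less familiar with the metric, the AUC can be read as the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. The sketch below computes it that way on a tiny hand-made example; the labels and scores are hypothetical and unrelated to the experiments reported here.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

# Hypothetical binary labels and classifier scores.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.2]

auc = roc_auc(labels, scores)
print(f"AUC = {auc:.3f}")  # 8 of the 9 positive/negative pairs are ordered correctly
```

The pairwise form is quadratic in the number of samples, so it is meant only to make the definition concrete, not to replace a library implementation on large datasets.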

Table 3 evaluates machine learning and deep learning strategies for agricultural surveillance. The table compares LeViT-ResUNet with ResNet, the CNN, DenseNet, ViT, YOLO, BiLSTM, ResNeXt, and federated learning. It also includes recent hybrid models such as aKNCN + ELM + mBOA, EBWO-HDLID, Xception + DenseNet-121 fusion, BMA ensemble, MA-CNN-LSTM + AMTBO, and ARIMA-Kalman + SVR-NAR. The comparison shows how well each approach analyzes the agricultural data gathered by UAVs. FL with LeViT-ResUNet outperforms the conventional models in classification accuracy, recall, precision, and the other metrics. In feature responsiveness and temporal consistency, two crucial aspects of precision agriculture, the proposed solution also outperforms ResNet and DenseNet. The results highlight the adaptability, consistency, and flexibility of FL with LeViT-ResUNet. Its ability to improve crop health monitoring and output prediction makes it well suited to widespread agricultural deployment.

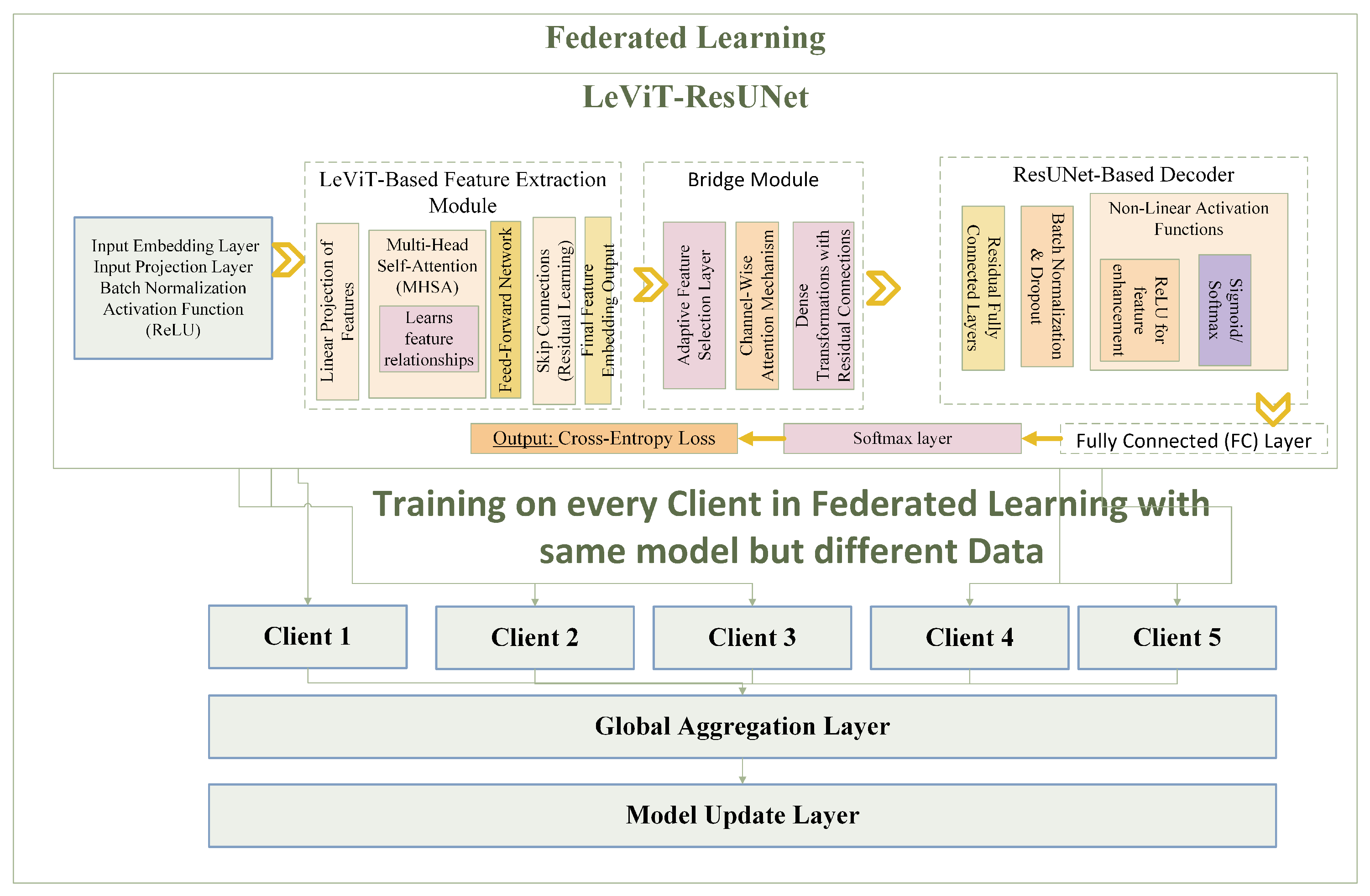

To demonstrate the efficacy and benefits of our suggested FL-LeViT-ResUNet compared to traditional federated CNN designs, Table 4 presents a comparative overview of important FL performance metrics.

Table 5 compares federated-learning-based classification results for various clients using ResNet, the CNN, DenseNet, ViT, YOLOv4, and the proposed FL with LeViT-ResUNet. The table reports client-specific accuracy, precision, recall, F1-score, and iteration loss. The results for six clients (CL = 1 to CL = 6) show how performance develops from the 1st to the 50th iteration, illustrating the federated learning framework’s flexibility and its performance improvement across client datasets. With more iterations and clients, the vision transformer (ViT) and DenseNet also generalize across distributed data. Compared to the other techniques, the FL with LeViT-ResUNet model achieves the best accuracy (99.1%) and lowest loss (0.052) by the 50th iteration for the sixth client. This shows its ability to maximize model performance across diverse datasets via federated learning. As the table shows, client collaboration improves global model performance as the system aggregates information from dispersed datasets. Because federated learning protects data privacy, the technique is scalable and well suited to agricultural applications. The results indicate that the proposed technique makes accurate predictions even in remote and resource-constrained settings. For a fair comparison, all models in this table were assessed in a federated learning setup with consistent client participation and aggregation techniques.

Table 6 shows each client’s contribution to the global model in the federated learning framework. It lists each client’s data size (in samples), local accuracy during training, gradient contribution to the global model, and update frequency (in rounds). The data size column shows how samples are distributed among the six clients, which lets the federated learning system draw on varied datasets. Client accuracies range from 96.8% to 97.5%, demonstrating that local training delivers high accuracy over dispersed datasets. Gradient contribution estimates each client’s effect on the global model update and reflects both data quantity and training quality. Clients with larger datasets, such as Client 5 with 5500 samples, have a somewhat larger gradient contribution (22.5%) than others. The update frequency column gives the number of communication rounds between each client and the global server, which reflects the collaborative nature of federated learning. Clients with higher update frequencies (e.g., Client 2 with 12 rounds) refine the global model more actively, improving the generalization of the aggregated model. Overall, the table shows that every client contributes substantially to model optimization. Federated learning incorporates local updates into a strong global model while protecting data privacy, making it well suited to remote and heterogeneous applications like agricultural monitoring systems.
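The link between data size and contribution can be sketched with sample-proportional weighting in the style of federated averaging. This is an assumption made for illustration: the text does not state which aggregation rule produced the gradient contributions in Table 6. In the sketch below, only Client 5's 5500 samples come from the text; all other sample counts, the client names, and the toy parameter vectors are hypothetical placeholders.

```python
def aggregation_weights(sample_counts):
    """Weight each client by its share of the total number of samples."""
    total = sum(sample_counts.values())
    return {client: n / total for client, n in sample_counts.items()}

def federated_average(client_params, weights):
    """Weighted average of per-client parameter vectors (lists of floats)."""
    dim = len(next(iter(client_params.values())))
    return [
        sum(weights[c] * client_params[c][k] for c in client_params)
        for k in range(dim)
    ]

# Placeholder sample counts; only client_5's value (5500) appears in the text.
sample_counts = {
    "client_1": 4000, "client_2": 3500, "client_3": 4000,
    "client_4": 3500, "client_5": 5500, "client_6": 4000,
}
weights = aggregation_weights(sample_counts)

# Toy two-parameter "models", one per client (hypothetical values).
client_params = {c: [float(i), 1.0] for i, c in enumerate(sample_counts, start=1)}
global_params = federated_average(client_params, weights)

for client, w in weights.items():
    print(f"{client}: {w:.1%}")
```

Under this rule the client with the most samples always receives the largest weight, which matches the qualitative pattern described for Client 5, but the exact percentages depend on the real sample counts and on whatever quality weighting the framework applies.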

Client-specific optimization results across local training rounds in the federated learning framework are shown in Table 7. The table details key performance data, including each client’s local accuracy, training loss, and training time (in s). As the “Local Training Round” column shows, local training is standardized to five rounds for all clients. The “Local Accuracy” column shows the efficacy of local training, with values from 96.5% to 97.2%. These high accuracy levels show that each client’s local model captures key data patterns, which in turn improves the global model. The “Loss” column shows local training errors. Low loss values, between 0.075 and 0.082, indicate that each client is reducing its training error and that the local optimization process is stable and efficient. The “Time Taken” column shows the computational overhead of local training, with each client requiring 11.5 to 13 s to complete five rounds. This time depends on each client’s computing resources and data distribution. The table highlights the balanced and efficient contributions of all clients to federated learning. By reaching high accuracy and low loss in an acceptable time, the clients keep the federated learning framework scalable and efficient. These findings demonstrate the federated approach’s resilience in distributed settings where clients work independently yet jointly optimize the global model.

Table 8 evaluates feature responsiveness and temporal consistency for each client in the federated learning framework. Feature responsiveness index (FRI) values of 90.8% to 92.0% indicate that the model prioritizes relevant features for effective decision making. Temporal dependency consistency (TDC) scores of 94.7% to 95.5% demonstrate the model’s ability to capture the time-dependent patterns needed for sequential data tasks. The global model’s accuracy (96.8% to 98.9%) shows strong prediction performance for all clients. Clients with higher FRI and TDC scores have higher accuracy, which underlines the importance of feature prioritization and temporal alignment in federated learning. The table shows that the model performs consistently across distributed clients while responding to feature dependencies and temporal dynamics.

Table 9 analyzes communication overhead in the federated learning framework over the training rounds. The table reports the data sent to and received from clients, the overall communication volume, and the latency per round. As training progresses, communication becomes more efficient and the volume of data sent and received decreases. Communication overhead decreases from 90 MB at round 10 to 81 MB at round 50. This decrease shows the framework’s ability to balance model updates against communication burden. Latency in later rounds is 130 ms, down from 140 ms in earlier rounds. The efficient communication protocols and aggregation algorithms of the federated learning setup support real-time performance in distributed settings.
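To put the reported figures in relative terms, the short calculation below converts the quoted drops in communication volume and latency into percentages. The helper name `relative_reduction` is introduced here for illustration, and the sketch assumes the 140 ms latency refers to the earlier rounds.

```python
def relative_reduction(before, after):
    """Fractional reduction from `before` to `after`."""
    return (before - after) / before

# Figures quoted in the text: 90 MB -> 81 MB, 140 ms -> 130 ms.
volume_drop = relative_reduction(90.0, 81.0)
latency_drop = relative_reduction(140.0, 130.0)

print(f"Communication volume: {volume_drop:.1%} lower")
print(f"Latency per round:    {latency_drop:.1%} lower")
```

The volume falls by about 10% and the latency by about 7%, so the communication savings are steady but moderate across the training rounds.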

Table 10 compares the computational efficiency of different models across 30 training rounds. The table shows that the federated learning (FL) model, coupled with LeViT-ResUNet, has the lowest execution time both per round (8.5 s) and overall (255 s). This efficiency stems from the lightweight LeViT-ResUNet design, which minimizes computational and communication overhead in federated learning environments. Traditional models like DenseNet, the vision transformer (ViT), and ResNet take 375–420 s to execute, reflecting the higher computational complexity and resource requirements they incur when processing massive agricultural datasets. The proposed FL-based architecture is also more efficient than YOLOv4 and YOLOv2, which themselves have reasonable execution times. The findings demonstrate the benefits of combining lightweight deep learning models with federated learning. By dividing the training process over numerous clients and using an efficient architecture, the proposed solution minimizes computing costs while retaining accuracy. This efficiency makes the FL with LeViT-ResUNet model well suited to real-time agricultural monitoring applications that need fast processing and low latency for decision making. For a fair comparison, all models in this table were evaluated in a federated learning setup with consistent client participation and aggregation strategy.
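The timing figures can be cross-checked with a few lines of arithmetic. The sketch below uses only the numbers quoted above; the variable names are illustrative, and the speedup is a simple ratio of total execution times rather than a value reported in the table.

```python
ROUNDS = 30                      # training rounds covered by Table 10
FL_SECONDS_PER_ROUND = 8.5       # FL with LeViT-ResUNet, per round

fl_total = FL_SECONDS_PER_ROUND * ROUNDS   # should match the reported 255 s

# Reported range for DenseNet, ViT, and ResNet (total seconds).
baseline_low, baseline_high = 375.0, 420.0

speedup_low = baseline_low / fl_total
speedup_high = baseline_high / fl_total

print(f"FL total time: {fl_total:.0f} s")
print(f"Speedup over the slower baselines: {speedup_low:.2f}x to {speedup_high:.2f}x")
```

The per-round and overall times are mutually consistent, and the slower baselines take roughly 1.5 to 1.6 times as long as the proposed model.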

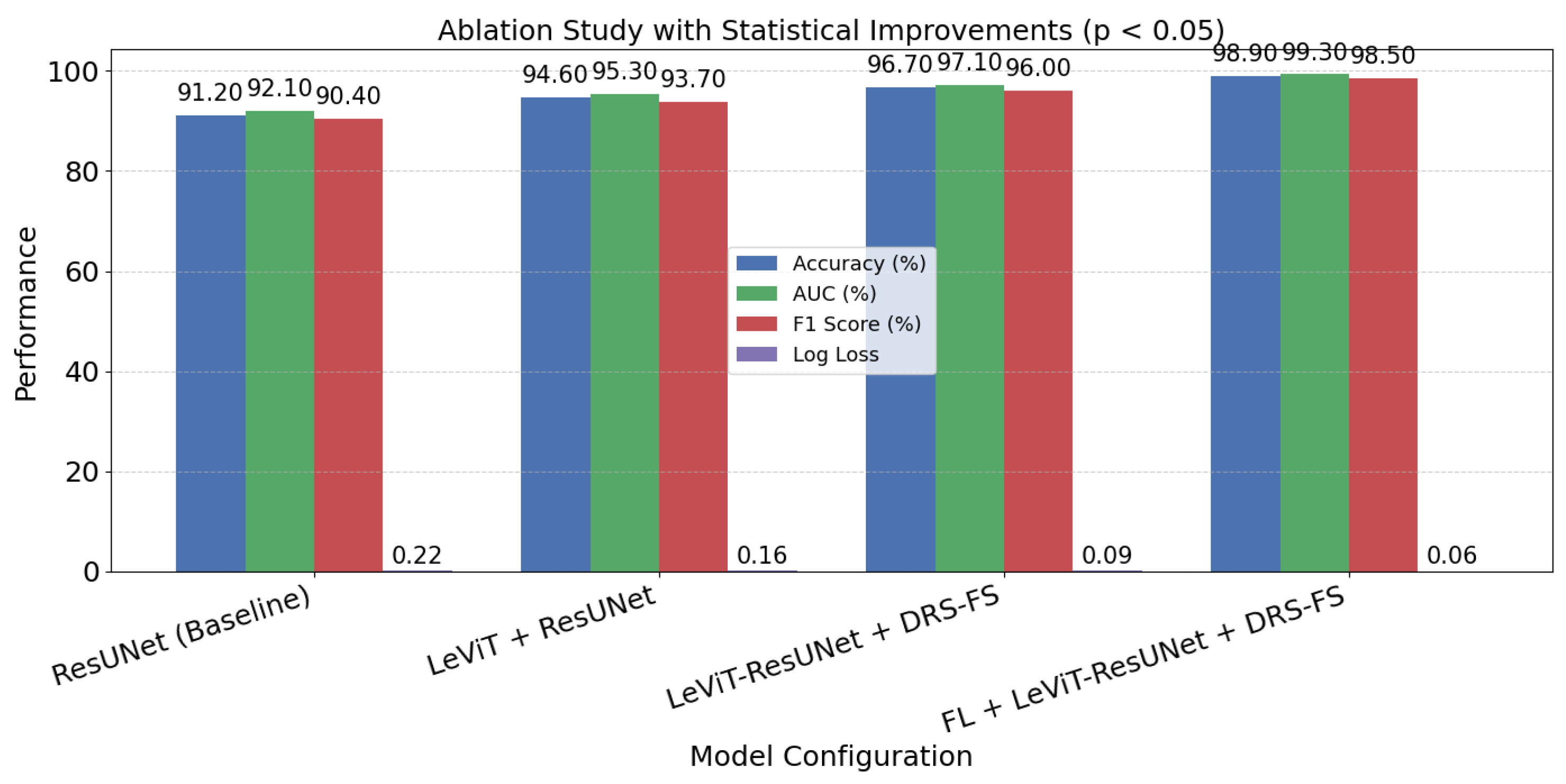

The results in Table 11 and Figure 9 demonstrate the individual and collective value of each integrated component within the proposed architecture. The improvements across configurations are statistically significant (p < 0.05), as validated using paired Student’s t-tests, confirming the effectiveness of each component. Starting from the baseline ResUNet, which provides foundational pixel-level segmentation, the addition of the LeViT significantly improves performance by introducing attention-driven, spatially aware feature extraction, resulting in a gain of 3.4 percentage points in accuracy and a 0.061 reduction in log-loss. When the dynamic relevance and sparsity-based feature selector (DRS-FS) is added, the model further benefits from targeted feature prioritization, improving generalization and interpretability. This is evident in the rise in accuracy from 94.6% to 96.7% and the drop in log-loss from 0.157 to 0.094. Finally, integrating federated learning (FL) enables decentralized model training across geographically distributed datasets, preserving data privacy and improving adaptability to regional variability. This yields the highest observed performance, with an accuracy of 98.9% and the lowest log-loss of 0.058. Overall, the full model achieves a substantial increase of 7.7 percentage points in classification accuracy and a 0.16 reduction in log-loss compared to the baseline, confirming the necessity and synergistic impact of each component in delivering a robust, privacy-preserving, and high-performing precision agriculture solution.
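The ablation figures can be checked for internal consistency with a short calculation. In the sketch below, the baseline ResUNet accuracy and log-loss are not quoted directly in the text; they are derived here from the stated LeViT gains, which is an assumption of this example, as are the stage labels.

```python
# (configuration, accuracy in %, log-loss) as quoted in the text.
stages = [
    ("ResUNet + LeViT", 94.6, 0.157),
    ("+ DRS-FS", 96.7, 0.094),
    ("+ FL (full model)", 98.9, 0.058),
]

# Derived, not quoted: undo the stated LeViT gain (3.4 points, 0.061 log-loss).
baseline_acc = stages[0][1] - 3.4
baseline_loss = stages[0][2] + 0.061

prev_acc, prev_loss = baseline_acc, baseline_loss
for name, acc, loss in stages:
    print(f"{name:<18} accuracy +{acc - prev_acc:.1f} pts, log-loss -{prev_loss - loss:.3f}")
    prev_acc, prev_loss = acc, loss

total_acc_gain = stages[-1][1] - baseline_acc
total_loss_drop = baseline_loss - stages[-1][2]
print(f"Total: +{total_acc_gain:.1f} pts accuracy, -{total_loss_drop:.2f} log-loss")
```

The stage-wise gains sum to the overall improvement of 7.7 percentage points and the 0.16 log-loss reduction, so the reported numbers agree with one another when the accuracy gains are read as absolute differences.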

Table 12 shows that federated learning (FL) using the LeViT-ResUNet model performs well across several statistical tests, including Spearman’s, Pearson’s, Wilcoxon, Kendall’s, and ANOVA. The model’s low statistical error values demonstrate its ability to generalize across varied datasets, with stable performance and low variability. The model’s distinctive architecture, which combines LeViT’s lightweight transformer-based feature extraction with ResUNet’s precise pixel-level segmentation, explains its effectiveness. Federated learning uses decentralized, heterogeneous client data to reduce overfitting and improve consistency, which adds flexibility. FL with LeViT-ResUNet outperforms ResNet, the CNN, and DenseNet in statistical reliability. Its stability in handling complex agricultural data and its low error levels across all tests make it well suited to precision agriculture applications.

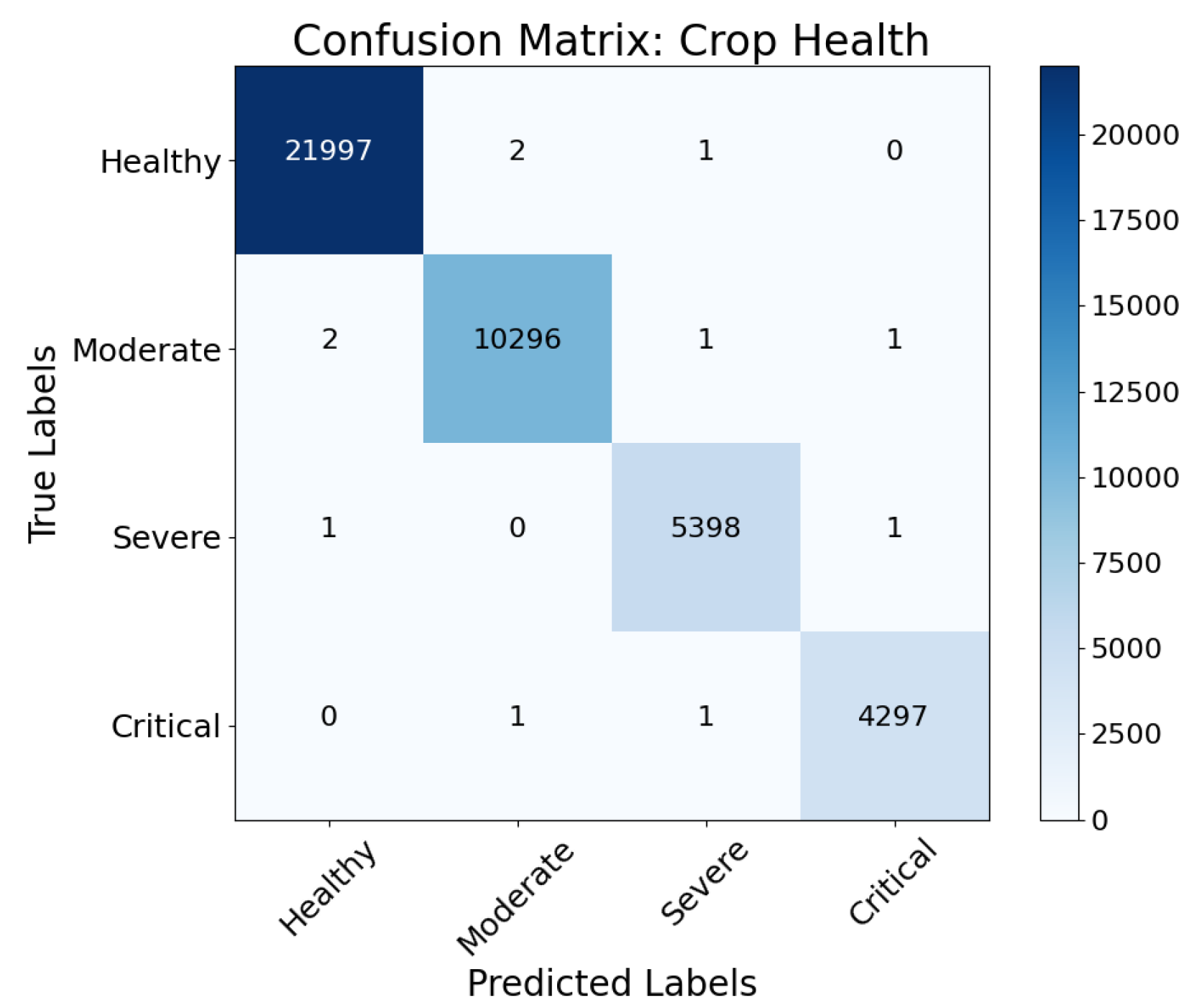

The confusion matrix for crop health classification (Figure 10) assesses predictions for four categories: healthy, moderate, severe, and critical. High diagonal dominance reflects the model’s accurate classification, with few false positives and false negatives. Accuracy is near-perfect in the “Healthy” and “Critical” categories, so the model reliably separates healthy crops from those with serious health issues. This result indicates that the proposed FL with LeViT-ResUNet can properly assess crop health for resource allocation and early response. Accurate classification enables early diagnosis of crop stress, risk minimization, and production optimization. Real-world agriculture requires precise crop health evaluation for sustainable and practical farming, and the model’s results support its deployment.
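Diagonal dominance can be made concrete by deriving per-class precision and recall from a confusion matrix. The matrix below is a hypothetical four-class example with rows as true classes and columns as predicted classes; its values are invented for illustration and are not the counts shown in Figure 10.

```python
CLASSES = ["healthy", "moderate", "severe", "critical"]

# Hypothetical counts: rows = true class, columns = predicted class.
cm = [
    [98, 2, 0, 0],
    [3, 94, 3, 0],
    [0, 4, 95, 1],
    [0, 0, 1, 99],
]

def per_class_metrics(cm):
    """Return {class index: (precision, recall)} from a square confusion matrix."""
    n = len(cm)
    metrics = {}
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # true class k, predicted otherwise
        fp = sum(cm[r][k] for r in range(n)) - tp  # predicted k, true class differs
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        metrics[k] = (precision, recall)
    return metrics

metrics = per_class_metrics(cm)
accuracy = sum(cm[k][k] for k in range(len(cm))) / sum(sum(row) for row in cm)

for k, (precision, recall) in metrics.items():
    print(f"{CLASSES[k]:<9} precision={precision:.3f} recall={recall:.3f}")
print(f"overall accuracy={accuracy:.3f}")
```

Off-diagonal counts that cluster next to the diagonal, as in this toy matrix, mean that errors fall mostly between neighboring severity levels, which is usually less costly than confusing the healthy and critical classes.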

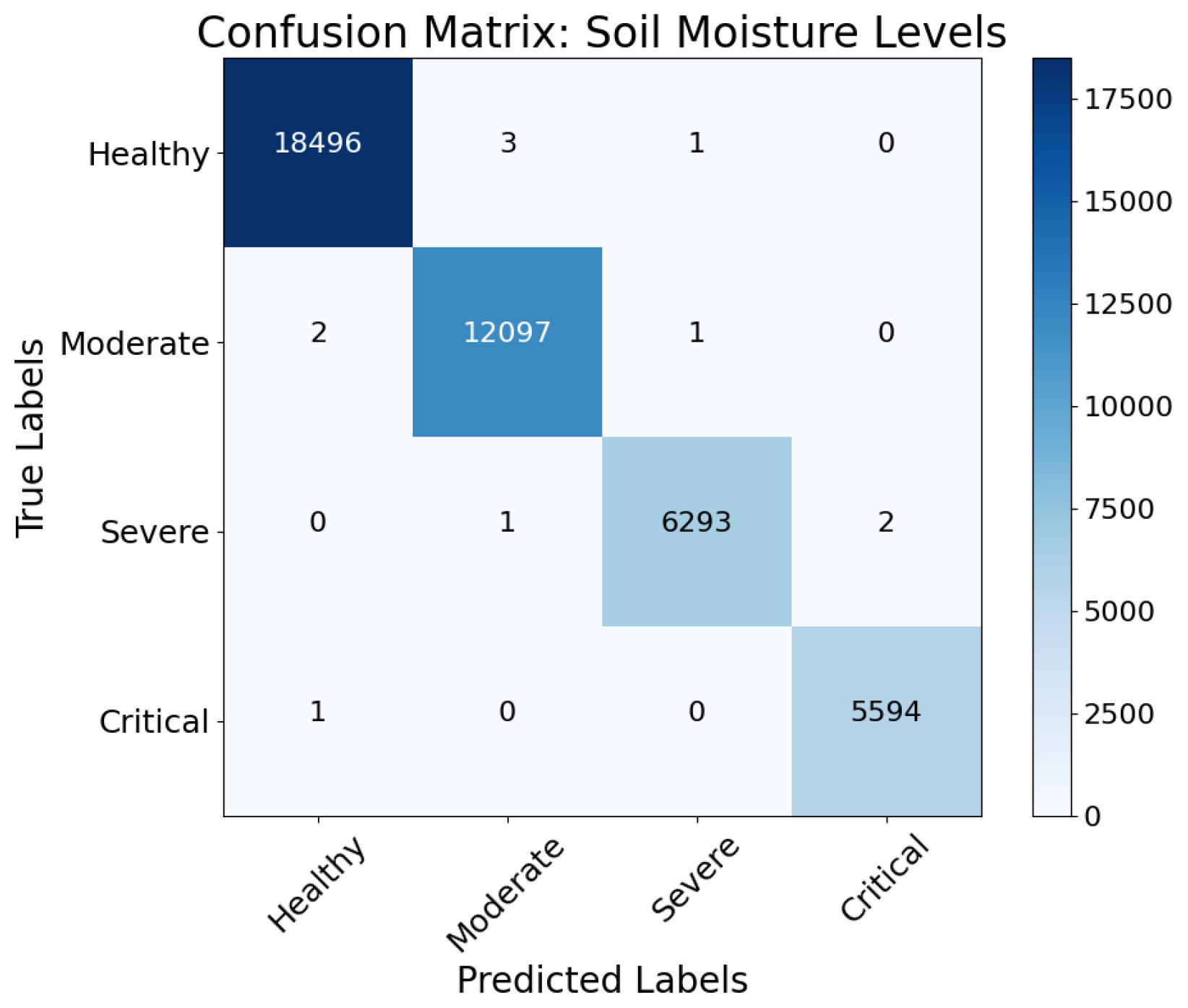

Figure 11 shows the confusion matrix for soil moisture classification, with the categories healthy, moderate, severe, and critical. The matrix’s diagonal dominance indicates high accuracy and few misclassifications. This result confirms that the model classifies soil moisture accurately, which supports irrigation optimization. Correct soil moisture classification saves water, reduces resource waste, and prevents crop damage from over- or under-irrigation. This indicates that FL with LeViT-ResUNet can address water management problems in precision agriculture. By delivering soil moisture insights, the approach helps farmers to save water, preserve crop health, and practice sustainable agriculture.

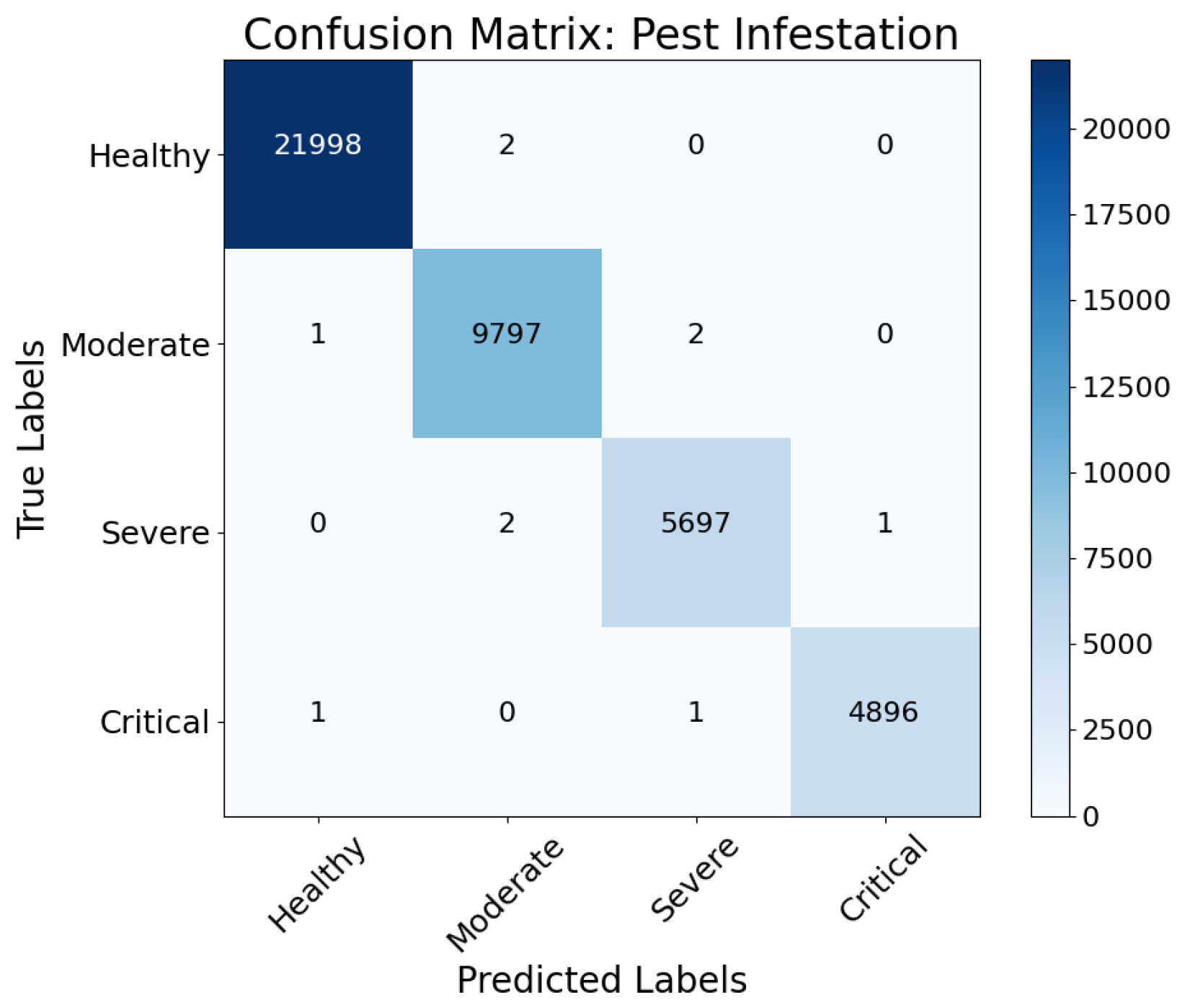

Figure 12 shows the confusion matrix for pest infestation classification, with the categories healthy, moderate, severe, and critical. The model accurately identifies pest-affected areas with few false positives and false negatives. This result shows that FL with LeViT-ResUNet can accurately identify pest hotspots for timely interventions and targeted pest management. Accurate pest infestation classification improves crop quality and ecological balance by reducing pesticide use and its environmental consequences. As the figure illustrates, accurate pest monitoring is a requirement for sustainability and productivity in precision agriculture.

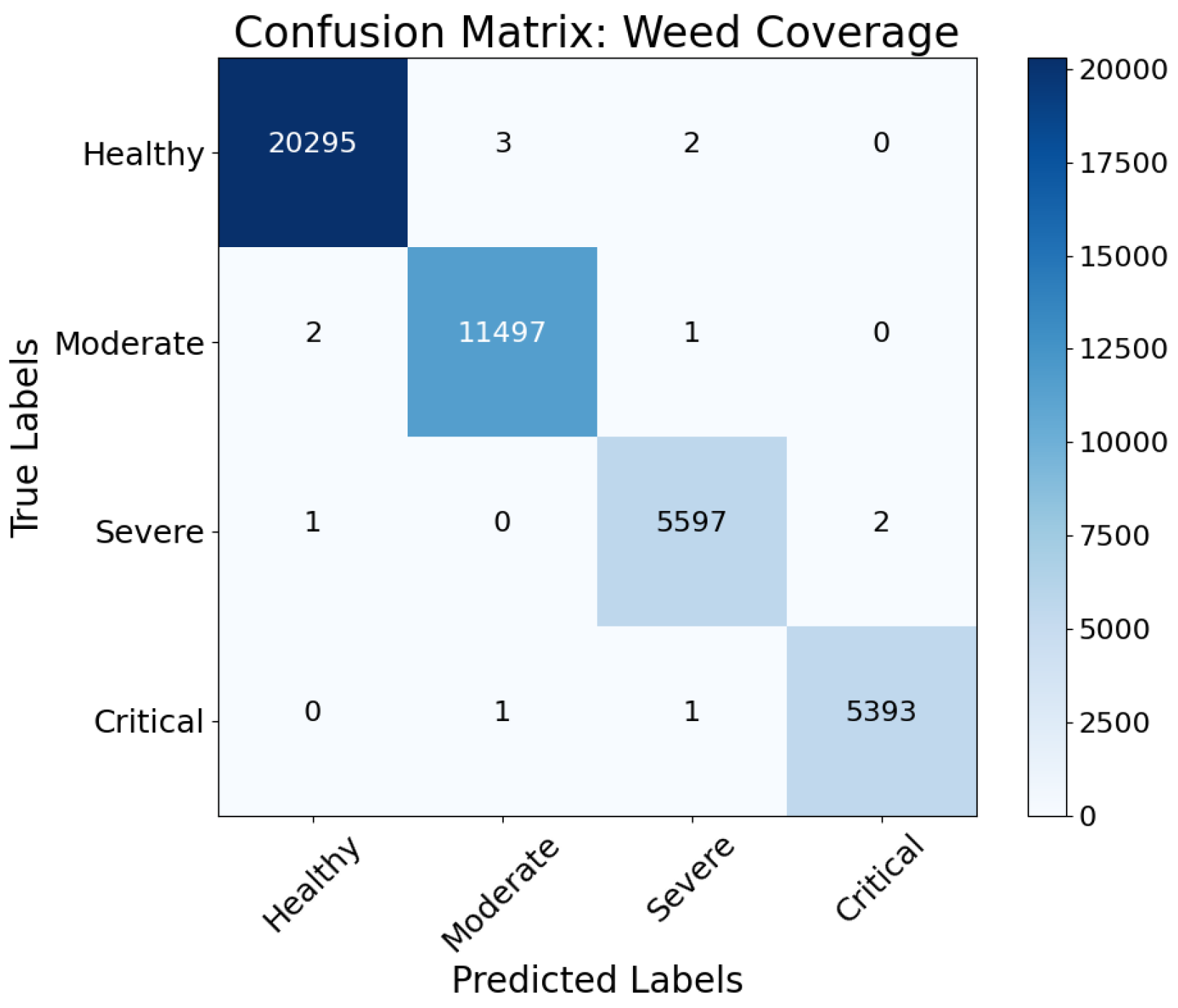

Figure 13 illustrates the confusion matrix for weed coverage classification, divided into four categories: healthy, moderate, severe, and critical. Strong diagonal dominance and low misclassification rates demonstrate the accuracy of the FL with LeViT-ResUNet model. This result shows that the model can accurately identify weed-affected regions for herbicide treatment. Correct weed classification supports crop development, reduces resource waste, and boosts production. The findings support the model’s use in real-world farming, where timely and accurate weed removal is crucial for crop health and production.

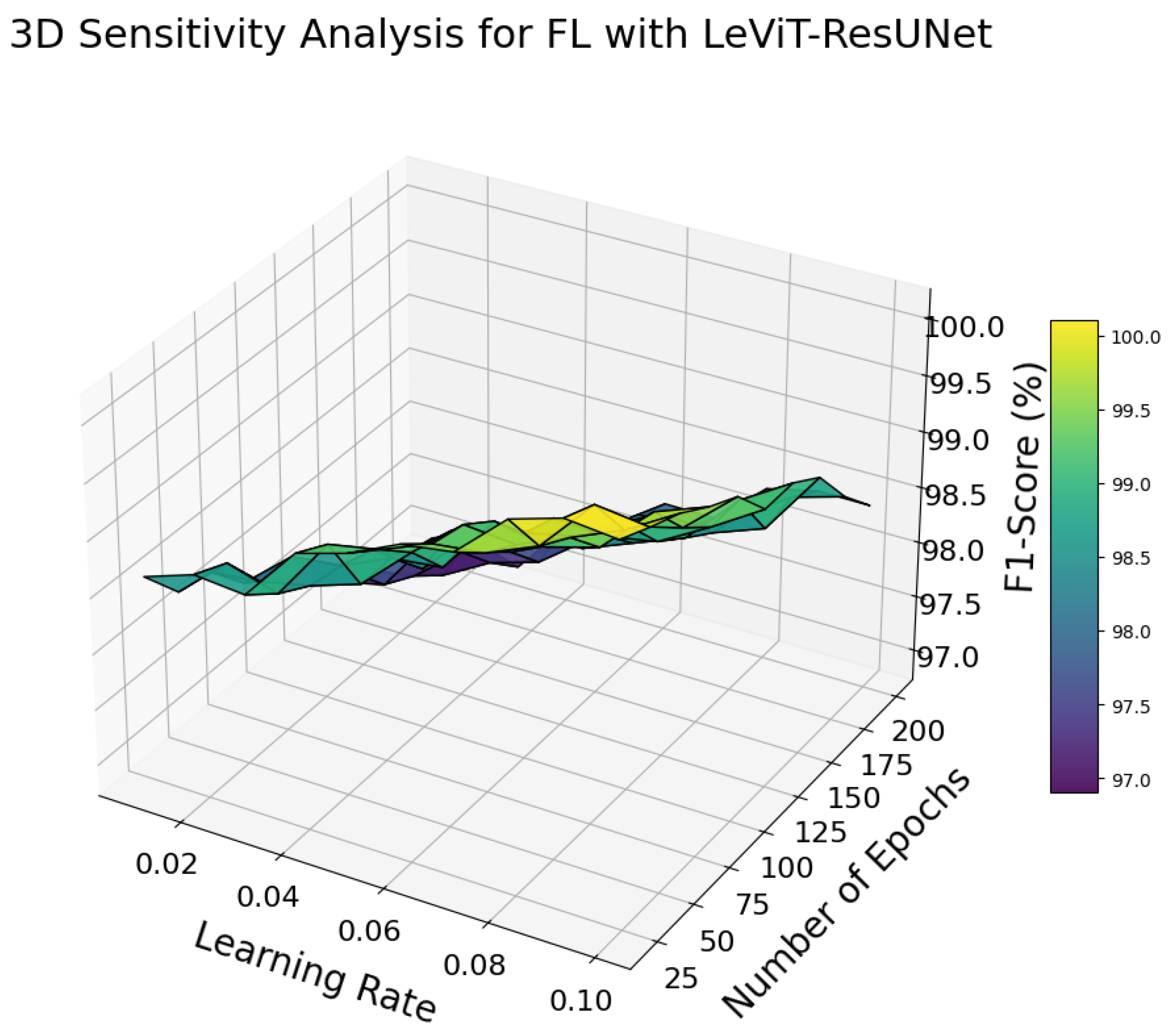

The 3D sensitivity study in Figure 14 illustrates the relationship between learning rate, epochs, and F1-score for the FL with LeViT-ResUNet model. The figure shows that F1-scores are highest at the best learning rate (∼ ) and with sufficient epochs (∼100). This analysis guides hyperparameter tuning to optimize model performance and reduce computing costs. Such insights are essential because federated learning systems must balance efficiency and accuracy under the constraints of a distributed environment. The findings confirm the model’s flexibility across parameter configurations, supporting its robustness for precision agriculture.

This study found that the FL with LeViT-ResUNet system works well in most agricultural settings but degrades under challenging conditions. The model performs poorly under extensive leaf overlap, when multiple plant layers conceal insect or weed patterns and reduce pixel-level segmentation accuracy. Even after preprocessing, cloud cover and uneven illumination in drone footage may distort spectral images. In severe weather, such as high wind speeds or partial occlusion from rain or dust, the model’s ability to detect crop health indicators like the NDVI and insect hotspots decreases. Motion blur, image artifacts, and ambient noise degrade the spatial coherence of features. Because few distinguishing features are visible from the air, some crop types with similar spectral profiles (e.g., maize vs. sorghum in early development stages) are more easily misclassified. Our preprocessing workflow uses spatial filtering, temporal alignment, and the DRS-FS feature selector to remove redundant or noisy data. Even so, under high noise or partial occlusion the model may show a small performance loss (a 2–4% accuracy reduction in affected areas). Future research will explore adaptive attention mechanisms and multi-view fusion procedures to improve resilience under occlusion, illumination change, and visually ambiguous crop settings.