A Canopy Height Model Derived from Unmanned Aerial System Imagery Provides Late-Season Weed Detection and Explains Variation in Crop Yield

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Background

2.2. UAS Parameters

2.3. Visual Assessments of Weediness

2.4. Aerial Image Collection and Analysis

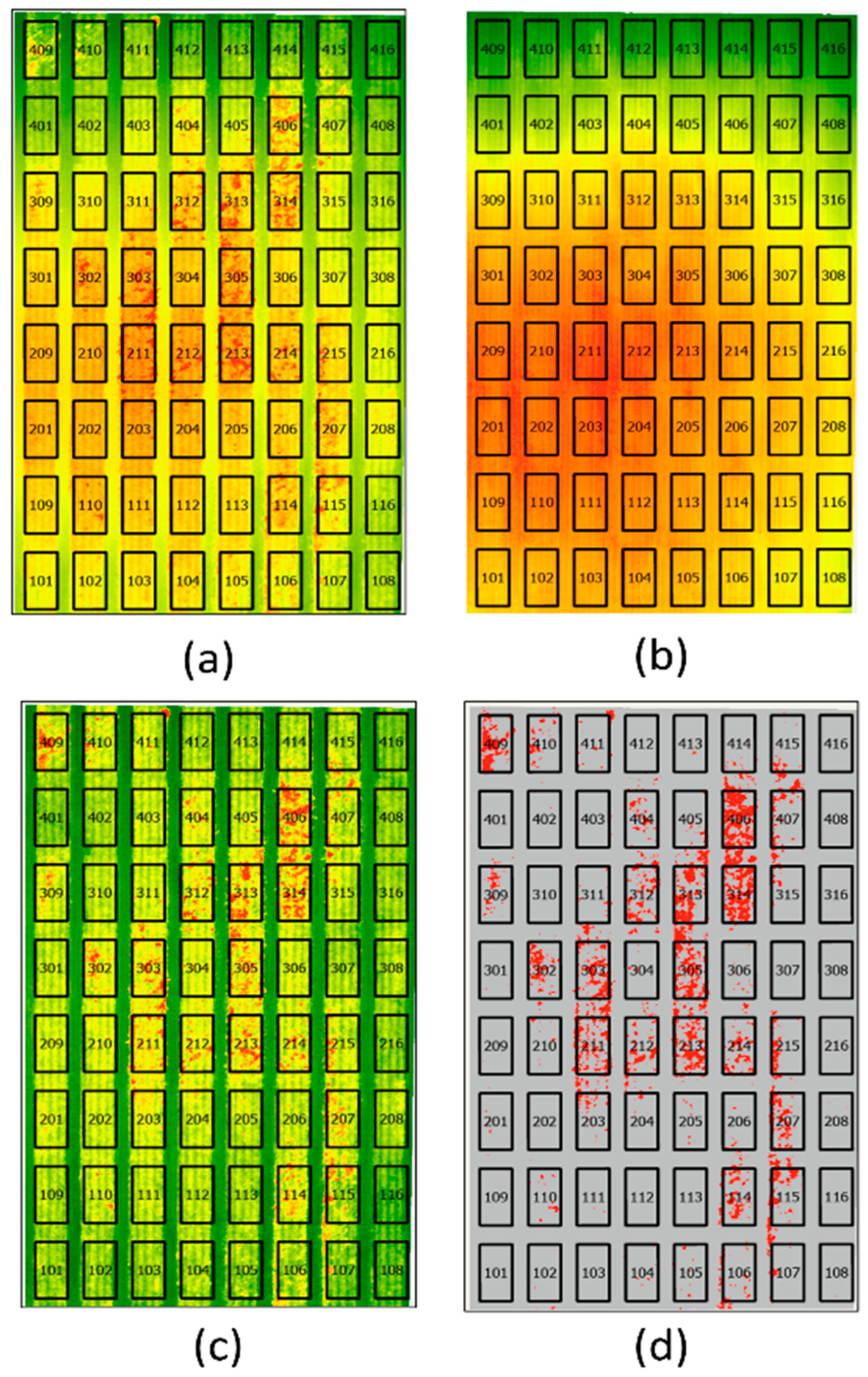

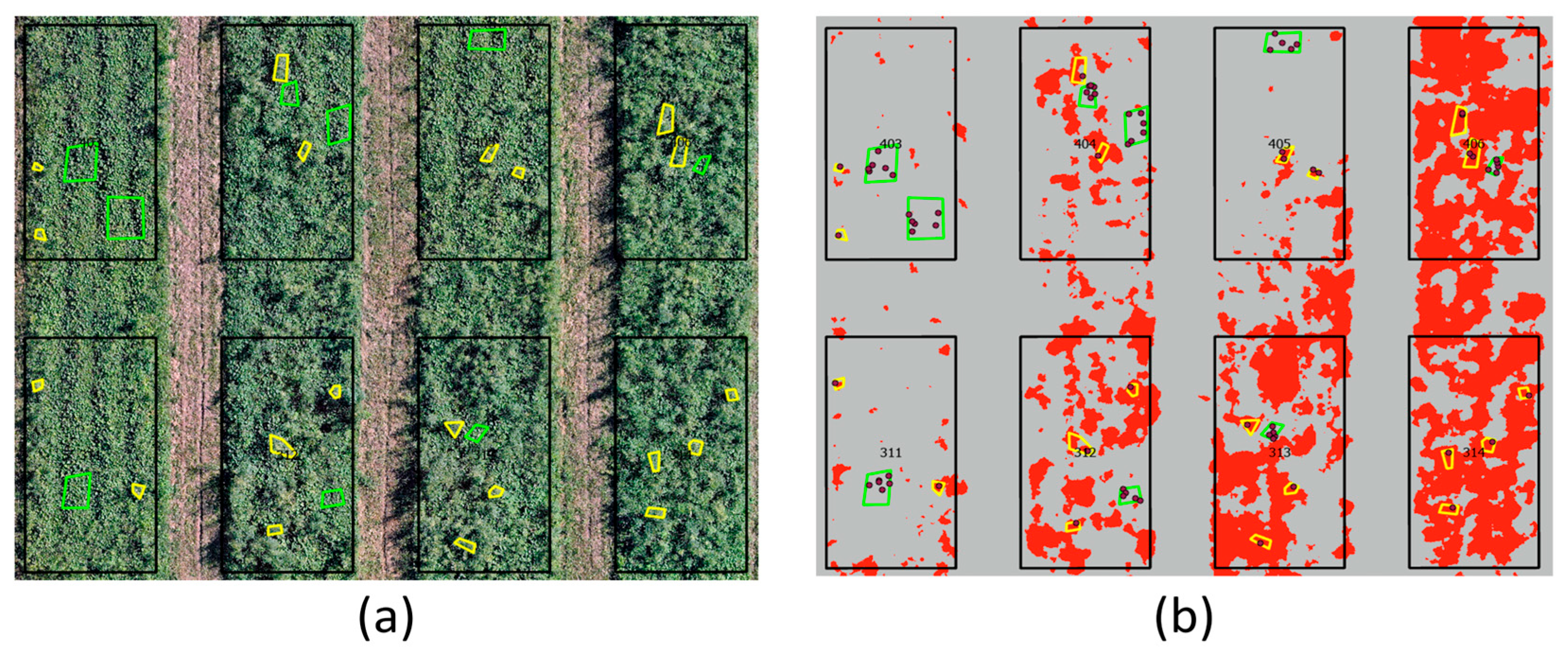

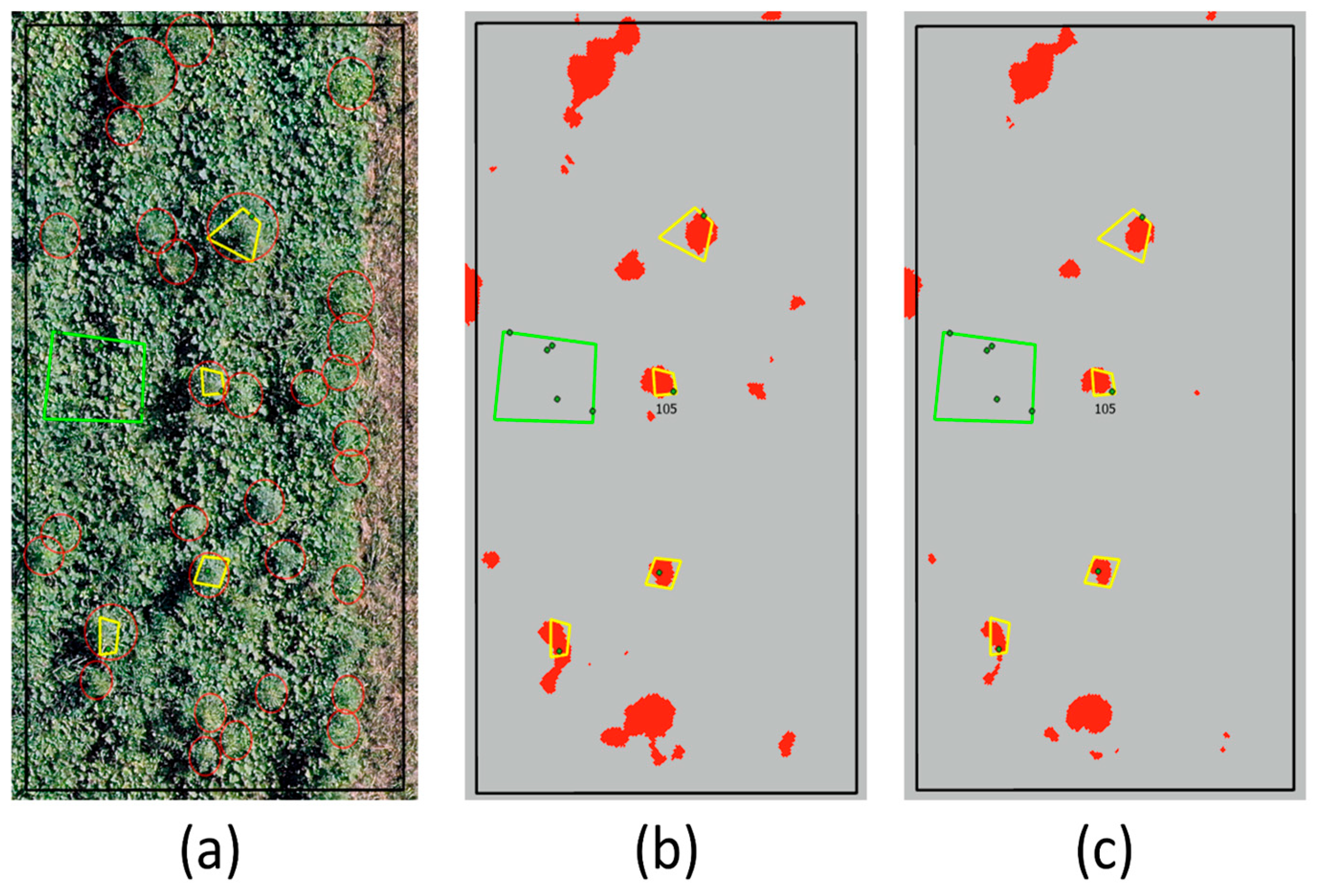

2.4.1. CHM Mask Approach

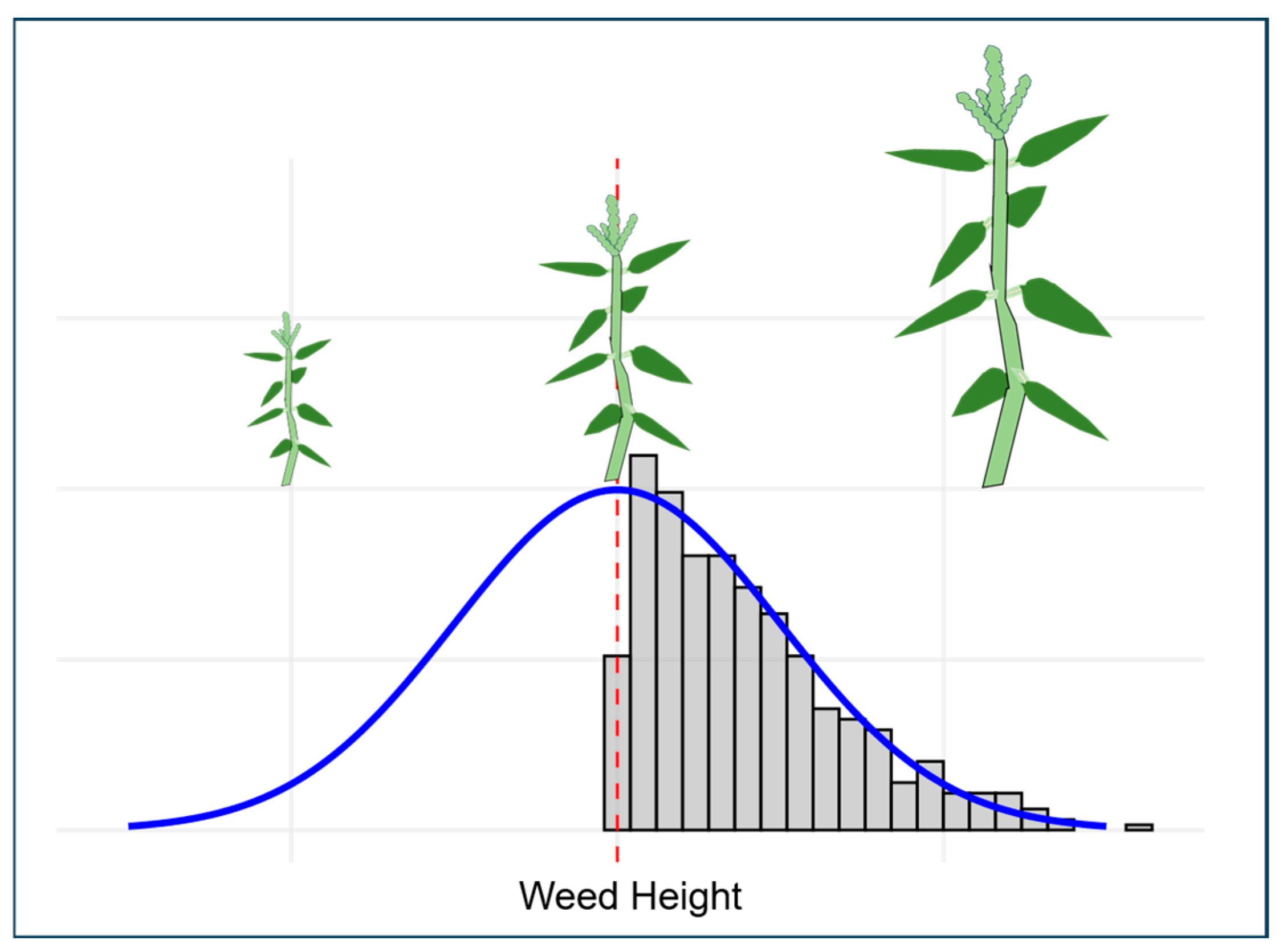
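A canopy height model is conventionally obtained by subtracting the bare-ground terrain elevation (DTM) from the surface elevation (DSM). A height mask then flags pixels whose canopy stands above a chosen height. The sketch below illustrates that idea in plain Python. It is a minimal illustration, not the authors' processing chain. It assumes co-registered grids in metres and a single lower height threshold; the study's mask may instead use a height range. The elevations are hypothetical. The 0.55 m threshold corresponds to 5.5 dm, one of the mask heights evaluated in this article.

```python
from typing import List

Grid = List[List[float]]


def canopy_height_model(dsm: Grid, dtm: Grid) -> Grid:
    """Per-pixel canopy height: surface elevation (DSM) minus bare-ground elevation (DTM)."""
    if len(dsm) != len(dtm) or any(len(a) != len(b) for a, b in zip(dsm, dtm)):
        raise ValueError("DSM and DTM must be co-registered grids of the same shape")
    return [[s - t for s, t in zip(row_s, row_t)] for row_s, row_t in zip(dsm, dtm)]


def height_mask(chm: Grid, min_height: float) -> List[List[bool]]:
    """Flag pixels whose canopy height is at or above min_height as candidate weed canopy."""
    return [[h >= min_height for h in row] for row in chm]


# Hypothetical 2 x 2 elevations in metres (not data from this study).
dsm = [[100.30, 100.80], [100.95, 100.20]]
dtm = [[100.00, 100.00], [100.00, 100.00]]
chm = canopy_height_model(dsm, dtm)
weed_mask = height_mask(chm, min_height=0.55)  # 0.55 m = 5.5 dm
print(weed_mask)  # [[False, True], [True, False]]
```

The threshold is what separates tall weed canopy from the lower crop canopy. Raising it trades weed commission errors for omission errors, which is why several mask heights were compared.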

2.4.2. Qualitative Determination of Mask Height Range

2.4.3. Quantitative Evaluation of Mask Heights

2.5. Statistical Analysis

2.5.1. Visual Rating of Weed Pressure as a Response Variable

2.5.2. Modeling CHMM Weed Area as a Response Variable

3. Results and Discussion

3.1. Initial Classification Attempts

3.2. Choosing the Optimum CHM Mask Height

3.3. Cash Crop Yield

3.4. Weediness Metrics

3.4.1. Comparison of the Visual Rating and CHMM

3.4.2. Weediness Metrics Used as Response Variables

3.4.3. Weediness Metrics as Predictors for Crop Yield

| Visual Rating of Weediness | SP Root Yield (Mg·ha⁻¹), via Weed Rating ¹ | CHMM Weed Area (dm²) ² | SP Root Yield (Mg·ha⁻¹), via CHMM ³ |
|---|---|---|---|
| 1 | 43.3 abc | 82.9 c | 48.1 (±1.62) ⁴ |
| 2 | 47.5 ab | 265 bc | 47.5 (±1.49) |
| 3 | 50.7 a | 572 ab | 46.3 (±1.34) |
| 4 | 38.6 bc | 1380 a | 43.3 (±1.54) |
| 5 | 33.0 c | 2000 a | 41.0 (±2.11) |
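CHMM weed area is reported in dm². If the mask is a pixel grid, that area is the number of flagged pixels multiplied by the ground area of one pixel. The sketch below shows this arithmetic under two assumptions: the pixels are square, and the ground sample distance (GSD) is known. The 1 cm GSD and the all-weed mask are hypothetical values for illustration. They are not the flight parameters or data from this study, and the authors may have computed area in GIS software instead.

```python
from typing import List


def masked_area_dm2(mask: List[List[bool]], gsd_cm: float) -> float:
    """Area in dm^2 covered by True pixels, assuming square pixels gsd_cm on a side."""
    pixel_side_dm = gsd_cm / 10.0  # 10 cm = 1 dm
    n_masked = sum(1 for row in mask for flagged in row if flagged)
    return n_masked * pixel_side_dm ** 2


# Hypothetical plot mask: 100 x 100 pixels, all flagged, at an assumed 1 cm GSD.
plot_mask = [[True] * 100 for _ in range(100)]
print(round(masked_area_dm2(plot_mask, gsd_cm=1.0), 2))  # 100.0
```

At a 1 cm GSD, each pixel covers 0.01 dm², so 10,000 flagged pixels correspond to 100 dm² of weed canopy.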

3.5. Comparison with Other UAS Weed Detection Approaches

3.6. Areas for Improving the CHMM and Future Work

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAS | Unmanned Aerial System |
| RGB | Red–Green–Blue |
| MS | Multispectral |
| GIS | Geographic Information System |
| SP | Sweetpotato |
| PA | Palmer amaranth |
| DSM | Digital Surface Model |
| DTM | Digital Terrain Model |
| CHM | Canopy Height Model |
| CHMM | Canopy Height Model Mask |


| Mask Height (dm) | User Accuracy, Non-Weed (%) | User Accuracy, Weed (%) | Producer Accuracy, Non-Weed (%) | Producer Accuracy, Weed (%) | Overall Accuracy (%) | Kappa Statistic (Kc) |
|---|---|---|---|---|---|---|
| 4.8 | 82 | 78 | 91 | 61 | 81 | 0.55 |
| 5.1 | 91 | 72 | 90 | 75 | 86 | 0.64 |
| 5.5 | 97 | 62 | 87 | 88 | 88 | 0.66 |
| 6.1 | 99 | 51 | 85 | 94 | 87 | 0.59 |
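The accuracy columns in the table above follow the usual error-matrix terms. User's accuracy is the share of pixels mapped to a class that truly belong to that class. Producer's accuracy is the share of reference pixels of a class that were mapped correctly. Kappa adjusts overall accuracy for chance agreement. The sketch below computes these metrics from a confusion matrix. It assumes Cohen's kappa and a layout with map classes as rows and reference classes as columns. The counts are hypothetical and are not the study's validation data.

```python
from typing import Dict, List


def accuracy_report(cm: List[List[int]], labels: List[str]) -> Dict[str, float]:
    """User's, producer's, overall accuracy and Cohen's kappa for a square confusion matrix.

    Rows are map (classified) classes; columns are reference (ground-truth) classes.
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    row_tot = [sum(cm[i]) for i in range(k)]
    col_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    report: Dict[str, float] = {}
    for i, name in enumerate(labels):
        report[f"user_{name}"] = cm[i][i] / row_tot[i]      # 1 - commission error
        report[f"producer_{name}"] = cm[i][i] / col_tot[i]  # 1 - omission error
    p_o = sum(cm[i][i] for i in range(k)) / n                  # observed agreement
    p_e = sum(row_tot[i] * col_tot[i] for i in range(k)) / n ** 2  # chance agreement
    report["overall"] = p_o
    report["kappa"] = (p_o - p_e) / (1 - p_e)
    return report


# Hypothetical counts: rows/columns ordered [non-weed, weed].
report = accuracy_report([[90, 10], [5, 45]], ["non_weed", "weed"])
print({name: round(value, 3) for name, value in report.items()})
```

Because kappa discounts the agreement expected by chance, it can rank mask heights differently from overall accuracy. In the table above, for example, 5.5 dm and 6.1 dm have similar overall accuracy but different kappa values.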

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Teasley, F.; Woodley, A.L.; Austin, R. A Canopy Height Model Derived from Unmanned Aerial System Imagery Provides Late-Season Weed Detection and Explains Variation in Crop Yield. Agronomy 2025, 15, 2885. https://doi.org/10.3390/agronomy15122885