A New and Improved YOLO Model for Individual Litchi Crown Detection with High-Resolution Satellite RGB Images

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Dataset Collection and Production

2.2.1. High-Resolution Satellite Imagery

2.2.2. Visually Interpreted Data

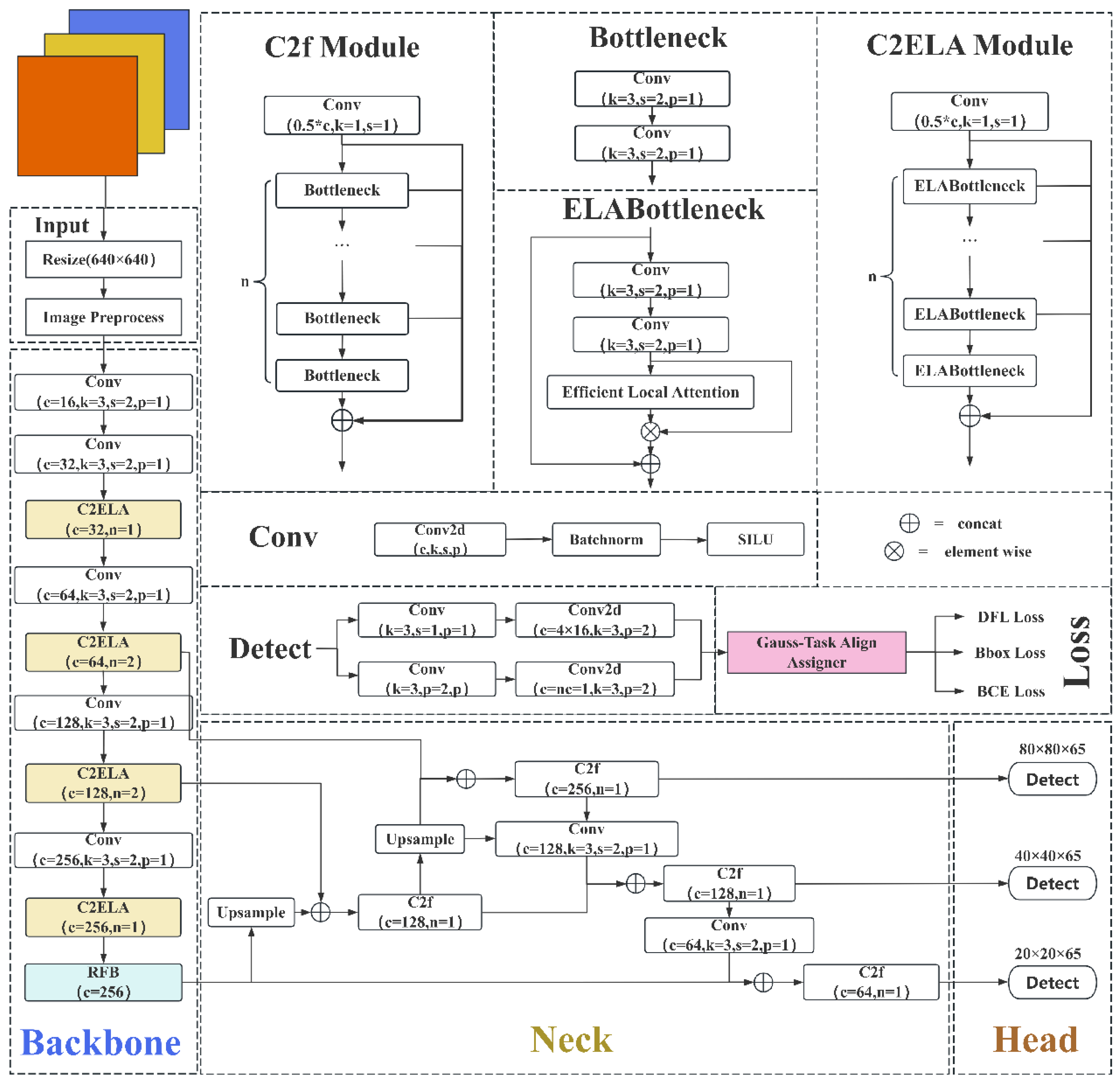

2.3. New Model for Individual Crown Detection

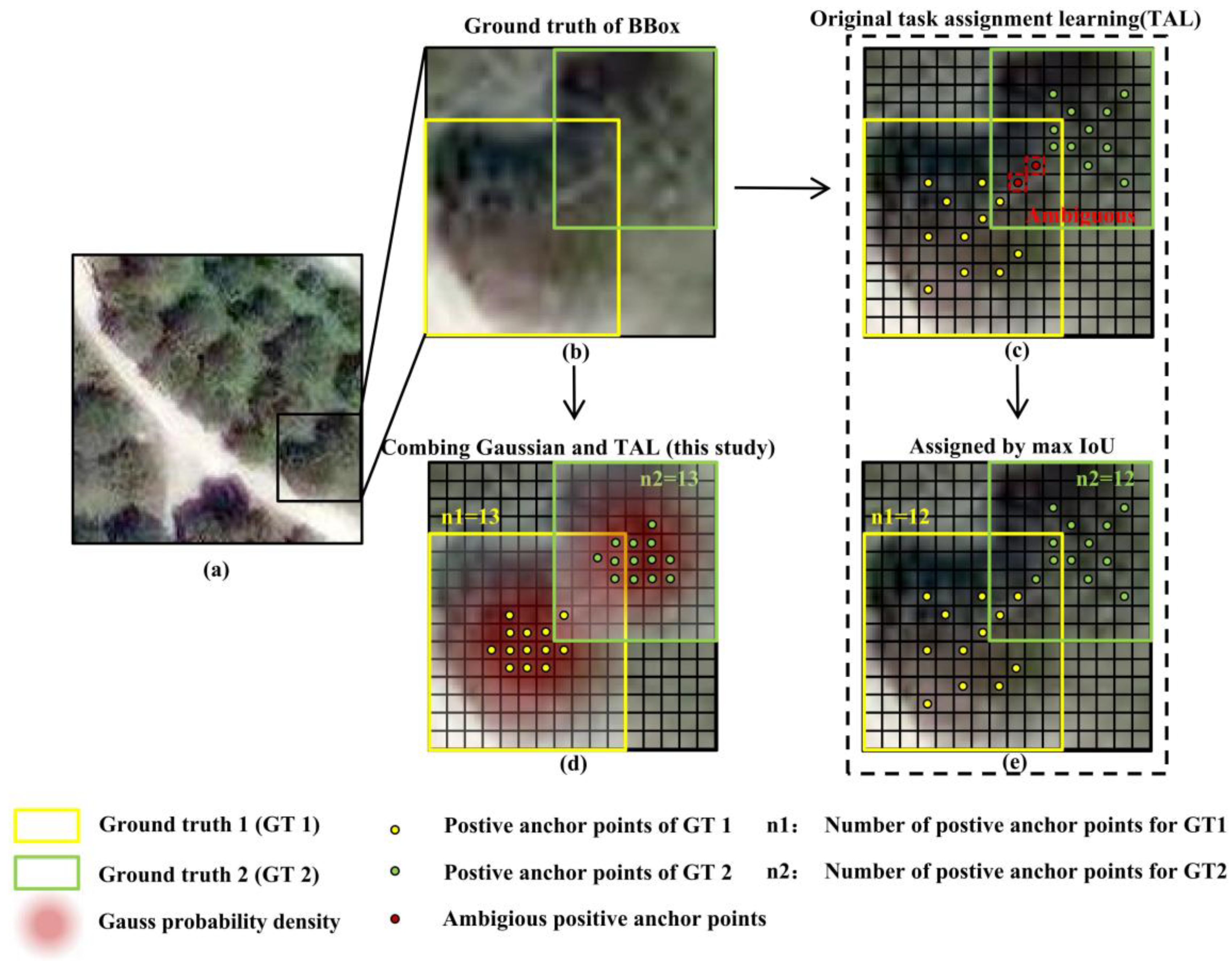

2.3.1. Fusing a Priori Knowledge with the TAL to Decrease Sample Ambiguity

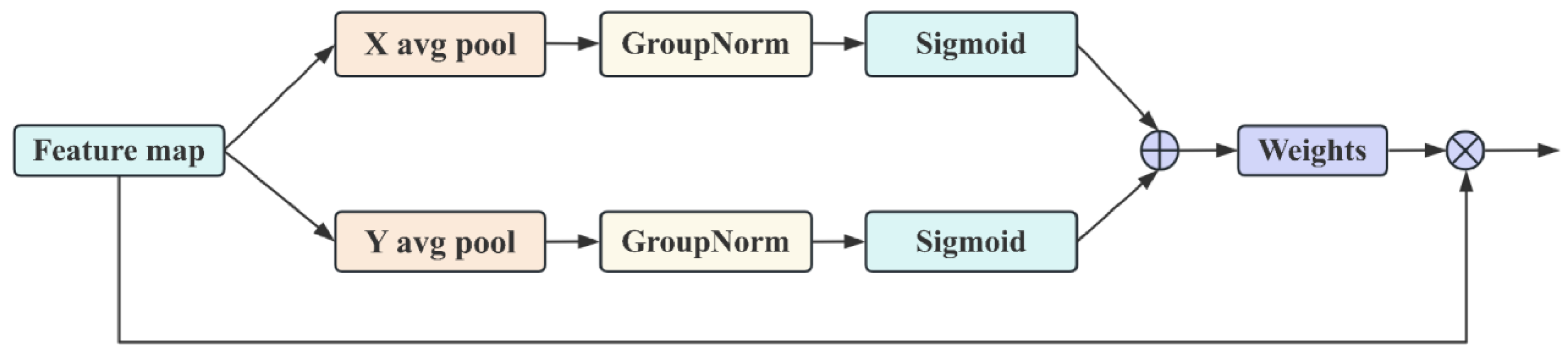

2.3.2. Introducing the ELA Module to Improve the Center Detection Accuracy

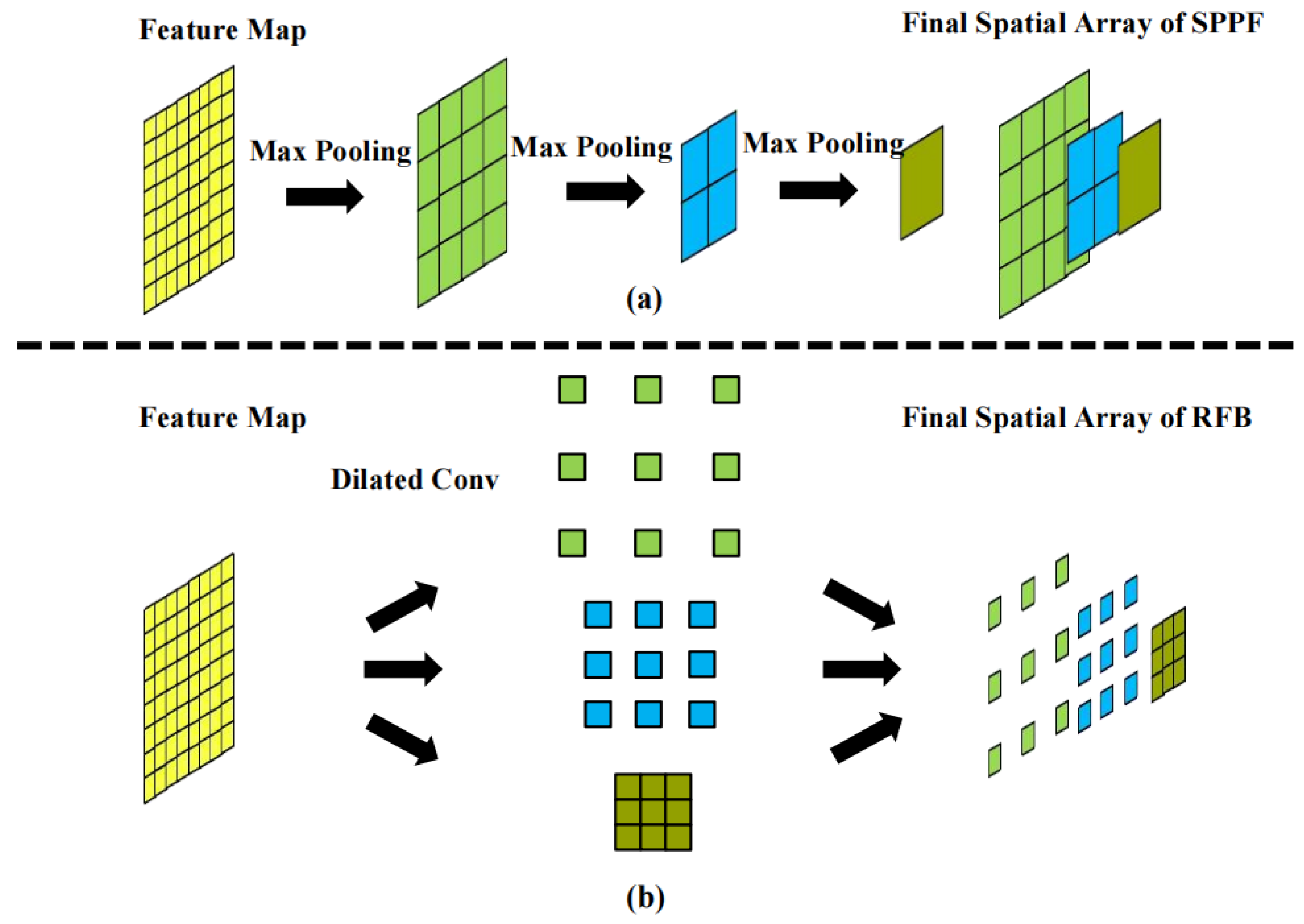

2.3.3. Using the RFB Module to Increase the Detection Accuracy of Tree Crowns

2.4. Data Analysis Methods

2.4.1. Ablation Experiment

2.4.2. Comparison Experiment

2.4.3. Model Evaluation

3. Results

3.1. Statistical Analysis Results for Individual Litchi Crowns from Different Orchards

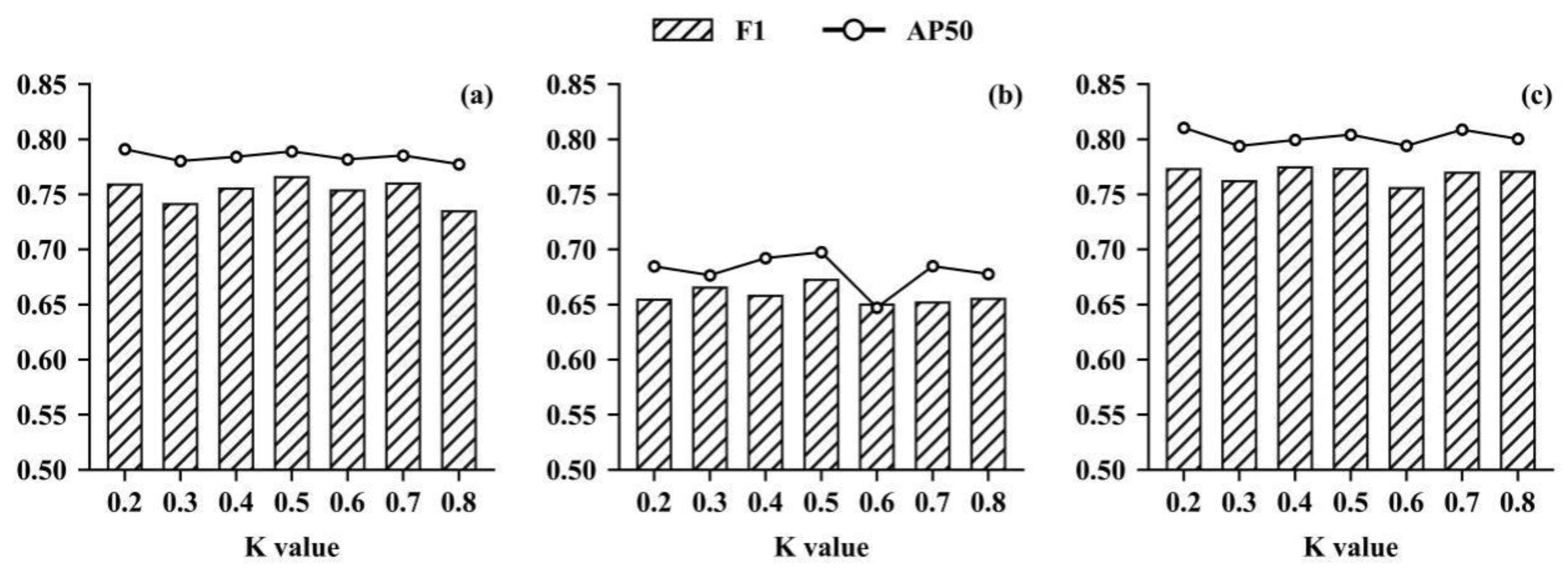

3.2. Results of the Ablation Experiment

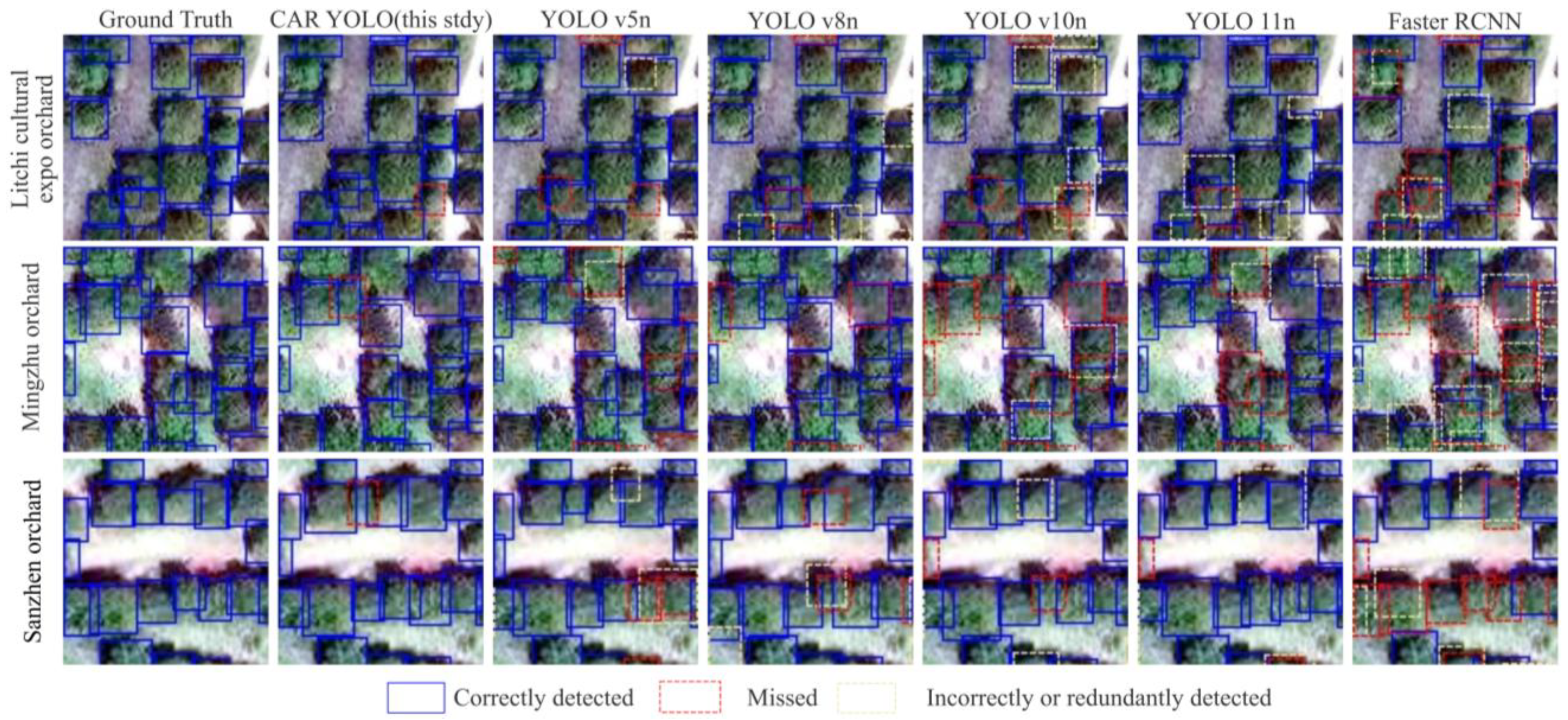

3.3. Results of the Comparison Experiment

4. Discussion

4.1. Improvement of YOLOv8n

4.2. Optimal Individual Litchi Tree Crown Detection Model

4.3. Potential Applications and Limitations

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

| Orchard | Count | Individual crown max. (m²) | Individual crown min. (m²) | Individual crown mean (m²) | Individual crown std. (m²) | Coverage (%) | Density (trees/100 m²) |
|---|---|---|---|---|---|---|---|
| Litchi cultural expo orchard | 1764 | 74.65 | 4.34 | 27.21 | 11.52 | 44.91 | 1.65 |
| Sanzhen orchard | 3131 | 131.18 | 5.28 | 25.32 | 11.79 | 47.97 | 1.89 |
| Mingzhu orchard | 1850 | 211.66 | 3.73 | 31.14 | 15.91 | 53.43 | 1.72 |
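The coverage and density columns in the orchard statistics table can be cross-checked against the mean crown size. The sketch below assumes (this is not stated in the source) that coverage is total crown area divided by orchard area and that the m² crown columns are crown areas, so that coverage (%) is approximately mean crown area multiplied by density in trees per 100 m². The function name `estimated_coverage` is hypothetical.

```python
# Values copied from the orchard statistics table:
# (mean crown size in m², density in trees per 100 m², reported coverage in %)
orchards = {
    "Litchi cultural expo orchard": (27.21, 1.65, 44.91),
    "Sanzhen orchard": (25.32, 1.89, 47.97),
    "Mingzhu orchard": (31.14, 1.72, 53.43),
}


def estimated_coverage(mean_crown_m2: float, trees_per_100_m2: float) -> float:
    """Estimate coverage (%) as crown area per 100 m² of ground.

    Assumption: coverage = total crown area / orchard area, with no
    correction for overlapping crowns.
    """
    return mean_crown_m2 * trees_per_100_m2


# Absolute gap between the estimate and the reported coverage, per orchard.
coverage_gaps = {}
for name, (mean_m2, density, reported) in orchards.items():
    estimate = estimated_coverage(mean_m2, density)
    coverage_gaps[name] = abs(estimate - reported)
    print(f"{name}: estimated {estimate:.2f}%, reported {reported:.2f}%")
```

Under this assumption the estimates stay within 0.2 percentage points of the reported coverage for all three orchards, which would be consistent with the density values having been rounded to two decimals.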

| Dataset | Improvement strategy (G-TAL, C2ELA, RFB) | Precision | Recall | AP50 | F1 |
|---|---|---|---|---|---|
| Litchi cultural expo orchard | Original model | 0.7753 | 0.7434 | 0.7783 | 0.7590 |
| Litchi cultural expo orchard | √ | 0.7878 | 0.7447 | 0.7890 | 0.7657 |
| Litchi cultural expo orchard | √ | 0.7955 | 0.7335 | 0.7969 | 0.7632 |
| Litchi cultural expo orchard | √ | 0.7920 | 0.7285 | 0.7969 | 0.7589 |
| Litchi cultural expo orchard | √ √ | 0.7499 | 0.7422 | 0.7846 | 0.7460 |
| Litchi cultural expo orchard | √ √ | 0.7978 | 0.7397 | 0.8077 | 0.7677 |
| Litchi cultural expo orchard | √ √ | 0.7905 | 0.7518 | 0.8087 | 0.7706 |
| Litchi cultural expo orchard | √ √ √ | 0.8003 | 0.7237 | 0.8080 | 0.7601 |
| Mingzhu orchard | Original model | 0.6682 | 0.6683 | 0.6792 | 0.6683 |
| Mingzhu orchard | √ | 0.7150 | 0.6345 | 0.6975 | 0.6724 |
| Mingzhu orchard | √ | 0.7110 | 0.6411 | 0.7015 | 0.6743 |
| Mingzhu orchard | √ | 0.7120 | 0.6478 | 0.6968 | 0.6784 |
| Mingzhu orchard | √ √ | 0.6969 | 0.6502 | 0.6842 | 0.6728 |
| Mingzhu orchard | √ √ | 0.7024 | 0.6514 | 0.6926 | 0.6759 |
| Mingzhu orchard | √ √ | 0.7080 | 0.6562 | 0.6939 | 0.6811 |
| Mingzhu orchard | √ √ √ | 0.7067 | 0.6755 | 0.7069 | 0.6908 |
| Sanzhen orchard | Original model | 0.7844 | 0.7423 | 0.7895 | 0.7628 |
| Sanzhen orchard | √ | 0.7800 | 0.7665 | 0.8042 | 0.7732 |
| Sanzhen orchard | √ | 0.8071 | 0.7599 | 0.8111 | 0.7828 |
| Sanzhen orchard | √ | 0.7891 | 0.7778 | 0.8179 | 0.7834 |
| Sanzhen orchard | √ √ | 0.7748 | 0.7540 | 0.8127 | 0.7643 |
| Sanzhen orchard | √ √ | 0.7841 | 0.7540 | 0.8094 | 0.7688 |
| Sanzhen orchard | √ √ | 0.7764 | 0.7577 | 0.8102 | 0.7669 |
| Sanzhen orchard | √ √ √ | 0.7850 | 0.7673 | 0.8121 | 0.7761 |
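The F1 column of the ablation table can be recomputed from the precision and recall columns. The sketch below uses the standard definition of F1 as the harmonic mean of precision and recall; the source does not spell out its formula here, so this is an assumption, and the helper name `f1_score` is hypothetical. Only the three "Original model" rows are used.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) for the "Original model" rows
# of the ablation table.
baseline_rows = {
    "Litchi cultural expo orchard": (0.7753, 0.7434, 0.7590),
    "Mingzhu orchard": (0.6682, 0.6683, 0.6683),
    "Sanzhen orchard": (0.7844, 0.7423, 0.7628),
}

# Absolute gap between the recomputed and the reported F1, per orchard.
f1_gaps = {}
for orchard, (p, r, reported) in baseline_rows.items():
    computed = f1_score(p, r)
    f1_gaps[orchard] = abs(computed - reported)
    print(f"{orchard}: computed F1 = {computed:.4f}, reported F1 = {reported:.4f}")
```

Because the tabulated precision and recall are themselves rounded to four decimals, a recomputed F1 can differ from the reported value in the last digit.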

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xia, T.; Chen, P.; Liu, X. A New and Improved YOLO Model for Individual Litchi Crown Detection with High-Resolution Satellite RGB Images. Agronomy 2025, 15, 2439. https://doi.org/10.3390/agronomy15102439