1. Introduction

With the steady growth of domestic and international market demand, China’s kiwifruit production and planting area have expanded continuously. As the world’s leading producer of kiwifruit, China has an annual output exceeding 3 million tons [1], ranking first globally in both cultivation area and production volume [2]. Kiwifruit has become a vital cash crop for the country. In this context, harvesting is critically important because it is the most labor-intensive and time-consuming stage of the production process [3]. Currently, kiwifruit is primarily harvested manually, which involves high labor intensity. As China’s population ages, the available workforce is shrinking, leading to rising agricultural production costs [4]. In addition, kiwifruit must be harvested within a narrow time window to preserve quality; delayed picking causes softening, which compromises subsequent transport and storage [5]. The development of intelligent harvesting robots therefore holds considerable potential. Achieving precise fruit recognition despite complex environmental interference is a critical technical challenge in developing intelligent kiwifruit harvesting systems. Traditional visual detection methods typically follow the technical workflow of “image preprocessing-feature engineering-classification recognition” [6], using techniques such as color space transformation (e.g., HSV, Lab) [7], morphological operations [8], and texture feature segmentation [9] for target detection. While these algorithms are reasonably effective under ideal lighting and in unobstructed scenes, their recognition accuracy and robustness decline significantly under the complex conditions commonly encountered in natural settings, such as leaf obstruction, fruit overlap, and sudden changes in lighting (e.g., strong light reflection or shadow coverage). This exposes inherent limitations such as poor environmental adaptability, large fluctuations in misclassification rates, and an inability to meet real-time operational requirements [10]. These constraints have motivated the accelerated adoption and advancement of deep learning-based detection methodologies in this domain.
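To make the traditional workflow more concrete, the following is a minimal sketch of color-space thresholding followed by a morphological erosion, written in plain Python. The HSV thresholds, the toy 5×5 image, the pixel colors, and the function names `hsv_mask` and `erode3x3` are assumptions chosen purely for illustration; they are not taken from the cited methods.

```python
import colorsys

def hsv_mask(pixels, h_range=(0.05, 0.15), s_min=0.3, v_min=0.2):
    """Mark pixels whose HSV values fall inside a fixed (hypothetical) brown range.

    pixels: 2D list of (r, g, b) tuples with channels in 0..255.
    Returns a 2D list of 0/1 flags.
    """
    mask = []
    for row in pixels:
        flags = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            inside = h_range[0] <= h <= h_range[1] and s >= s_min and v >= v_min
            flags.append(1 if inside else 0)
        mask.append(flags)
    return mask

def erode3x3(mask):
    """Binary erosion with a 3x3 structuring element; border pixels become 0."""
    height, width = len(mask), len(mask[0])
    out = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            if all(mask[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)):
                out[y][x] = 1
    return out

BROWN = (150, 100, 50)   # hypothetical fruit-skin color
LEAF = (40, 160, 40)     # hypothetical leaf color

# Unobstructed toy patch: every pixel is fruit-colored.
clean = [[BROWN] * 5 for _ in range(5)]

# Same patch with one leaf-colored pixel in the center.
occluded = [row[:] for row in clean]
occluded[2][2] = LEAF

clean_area = sum(map(sum, erode3x3(hsv_mask(clean))))
occluded_area = sum(map(sum, erode3x3(hsv_mask(occluded))))
print(clean_area, occluded_area)  # 9 0
```

In this toy case a single leaf-colored pixel removes the entire eroded region, which hints at why fixed thresholds and structuring elements tend to degrade under occlusion and lighting changes.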

In agricultural intelligent application scenarios, deep learning methods have demonstrated breakthrough progress in visual tasks such as fruit phenotyping and pest and disease identification through multi-layer nonlinear feature extraction mechanisms [

11,

12]. Compared to algorithms that rely on manually designed features, models based on convolutional neural networks [

13] or Transformer architectures [

14] leverage their robust feature self-learning capabilities and end-to-end optimization properties. This not only significantly enhances the robustness of object detection in complex field environments (e.g., continuous tracking of occluded fruits) but also achieves dual breakthroughs in detection accuracy (AP values improved by 15–30%) and processing efficiency (FPS reaching 40+) under the guidance of advanced frameworks such as YOLO [

15] and Mask R-CNN [

16] and other advanced frameworks, achieving dual breakthroughs in detection accuracy (AP values improved by 15–30%) and processing efficiency (FPS reaching 40+), providing innovative solutions for the visual perception systems of agricultural equipment. Dai et al. [

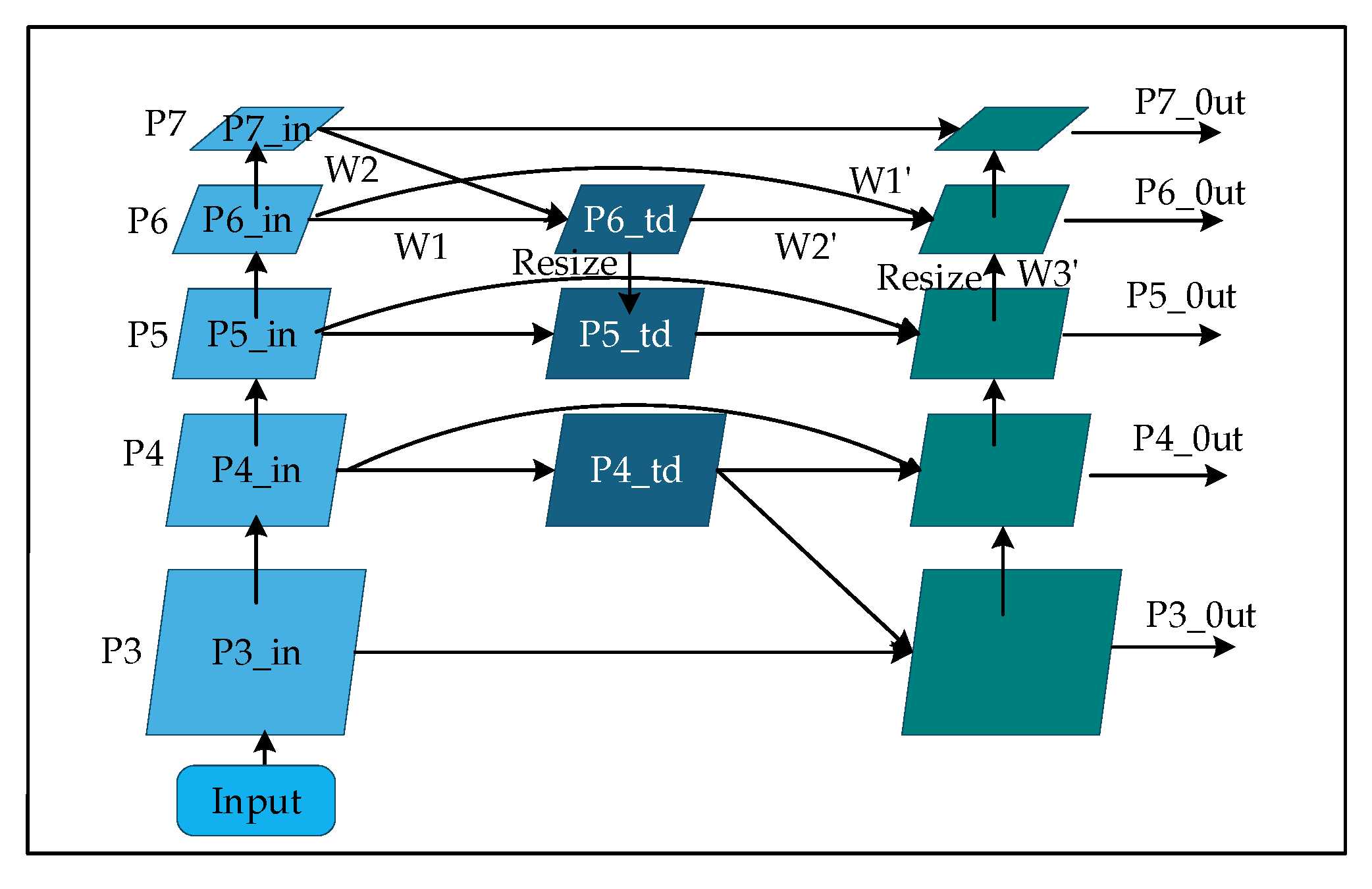

17] built an improved model for precise kiwifruit detection based on the YOLOv5s model, combining stereo vision technology with the Coordinate Attention mechanism and the Bidirectional Feature Pyramid Network (BiFPN). Liu et al. [

18] achieved accurate detection of green apples by introducing the ResNeXt network into DETR and adding a variable attention mechanism. Jia et al. [

19] significantly improved apple detection accuracy by modifying the Mask R-CNN model to combine residual networks (ResNet) with dense connection convolutional networks (DenseNet). Xie et al. [

20] presented YOLOv5-Litchi, a YOLOv5-based detection framework for lychee targets in agricultural settings. By introducing the CBAM attention mechanism and using non-maximum suppression (NMS) as the prediction box fusion method, they achieved significant results in lychee detection. Li et al. [

21] proposed an improved YOLOv7-tiny model, which employs lightweight Ghost convolutions, coordinate attention (CA) modules, and WIoU loss functions to achieve accurate identification of strawberries at different growth stages. Sun et al. [

22] optimized the YOLOv7 model by adding the MobileOne module, SPPFCSPC pyramid pooling, and Focal-EIoU loss function, thereby improving grape detection accuracy and reducing model parameters. Li et al. [

23] proposed a YOLOv7-CS model, which introduced SPD-Conv detection heads, a global attention mechanism (GAM), and a Wise-IoU loss function, achieving good results in bayberry detection. Lv et al. [

24] used YOLOv7 as the base model, constructing PC-ELAN and GS-ELAN modules and embedding a BiFormer attention mechanism to achieve precise identification of citrus fruits.

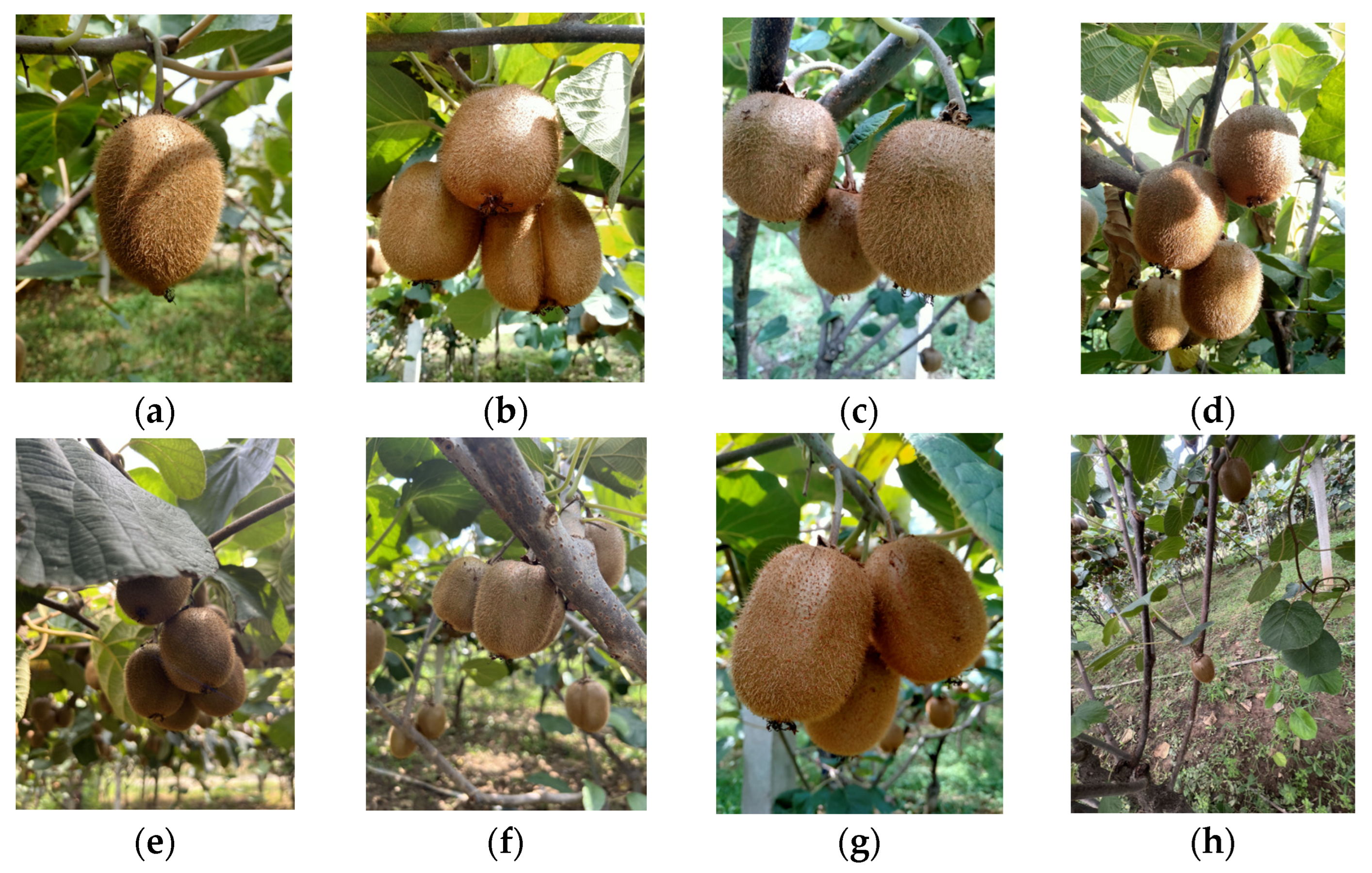

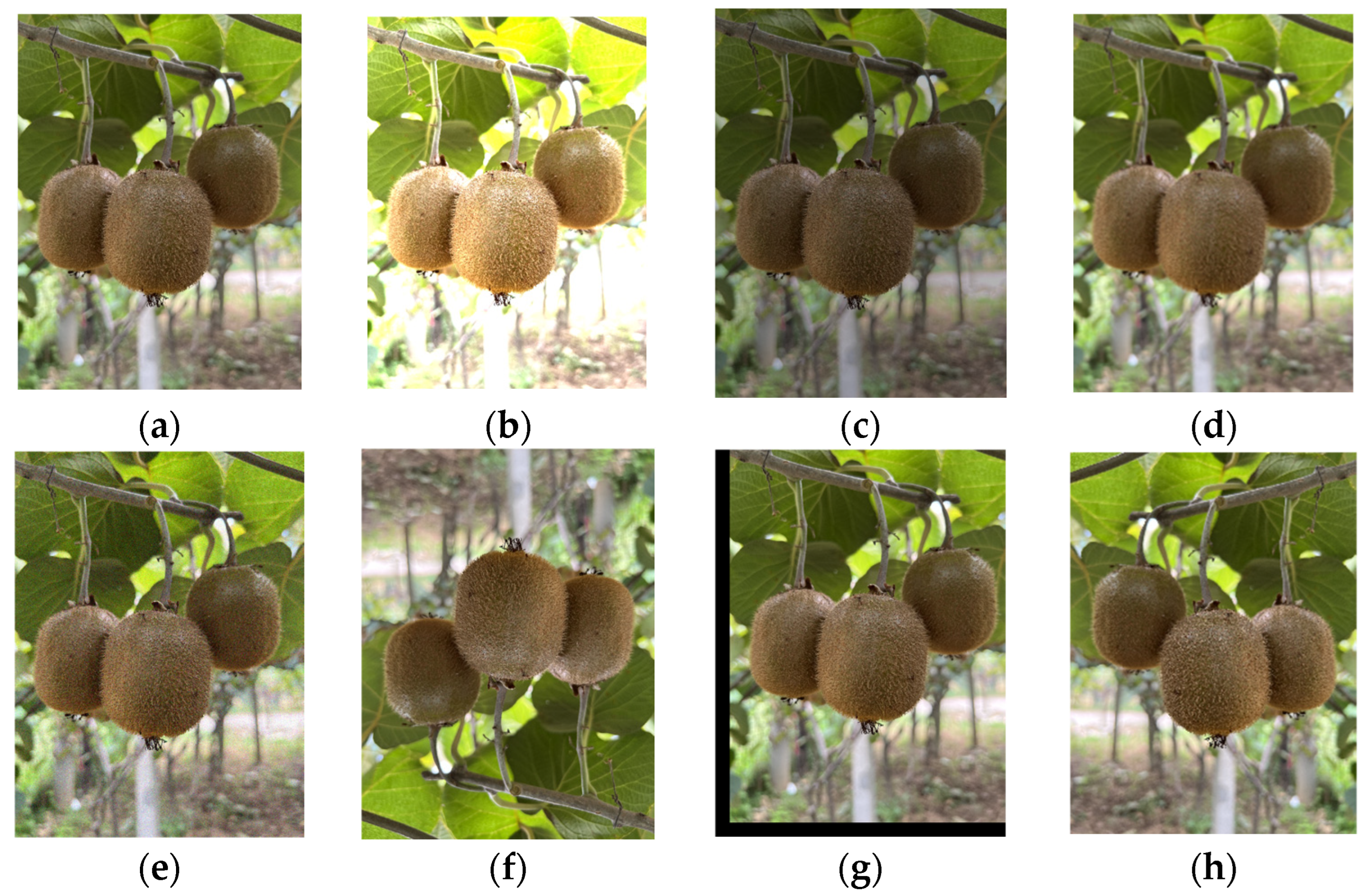

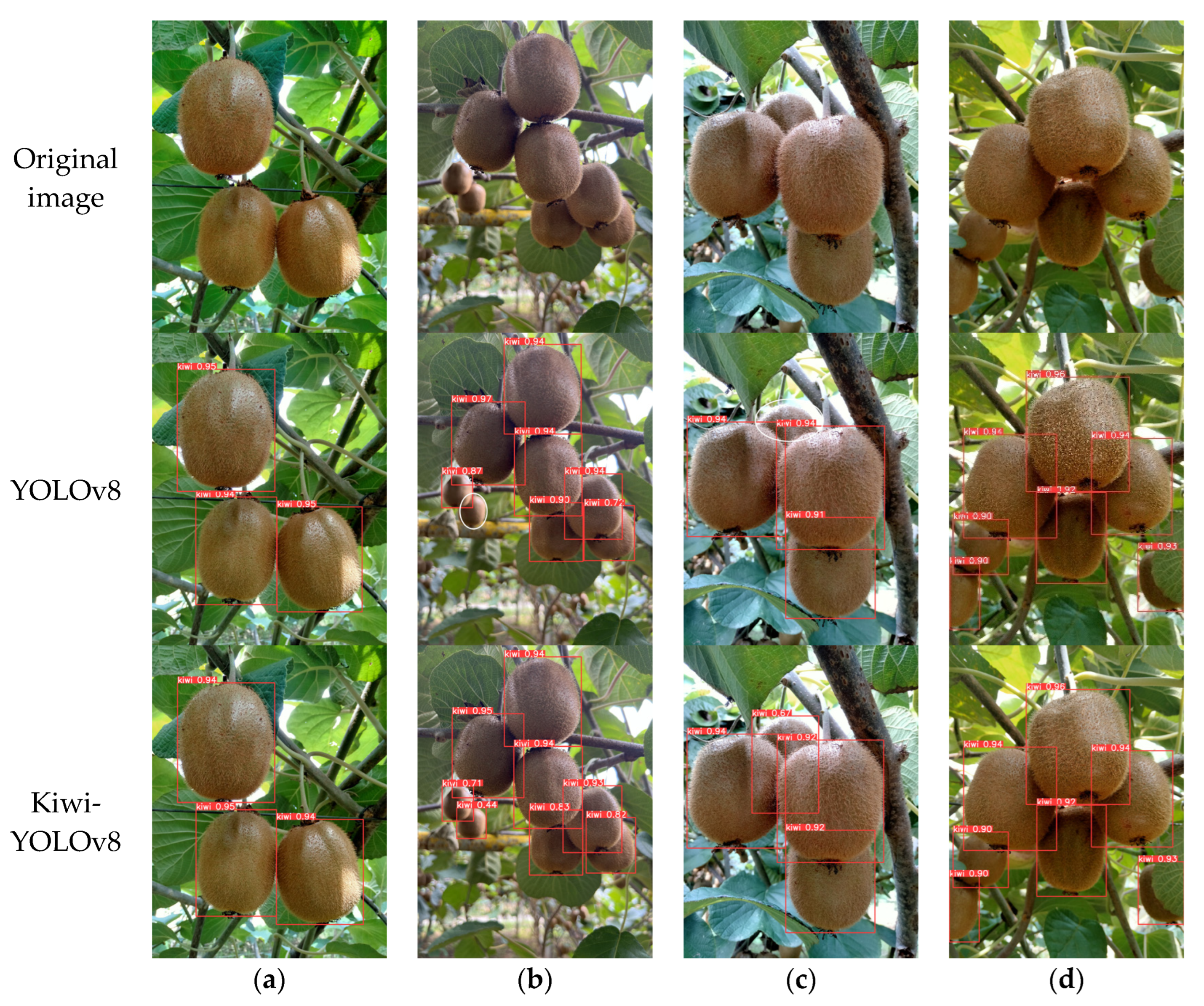

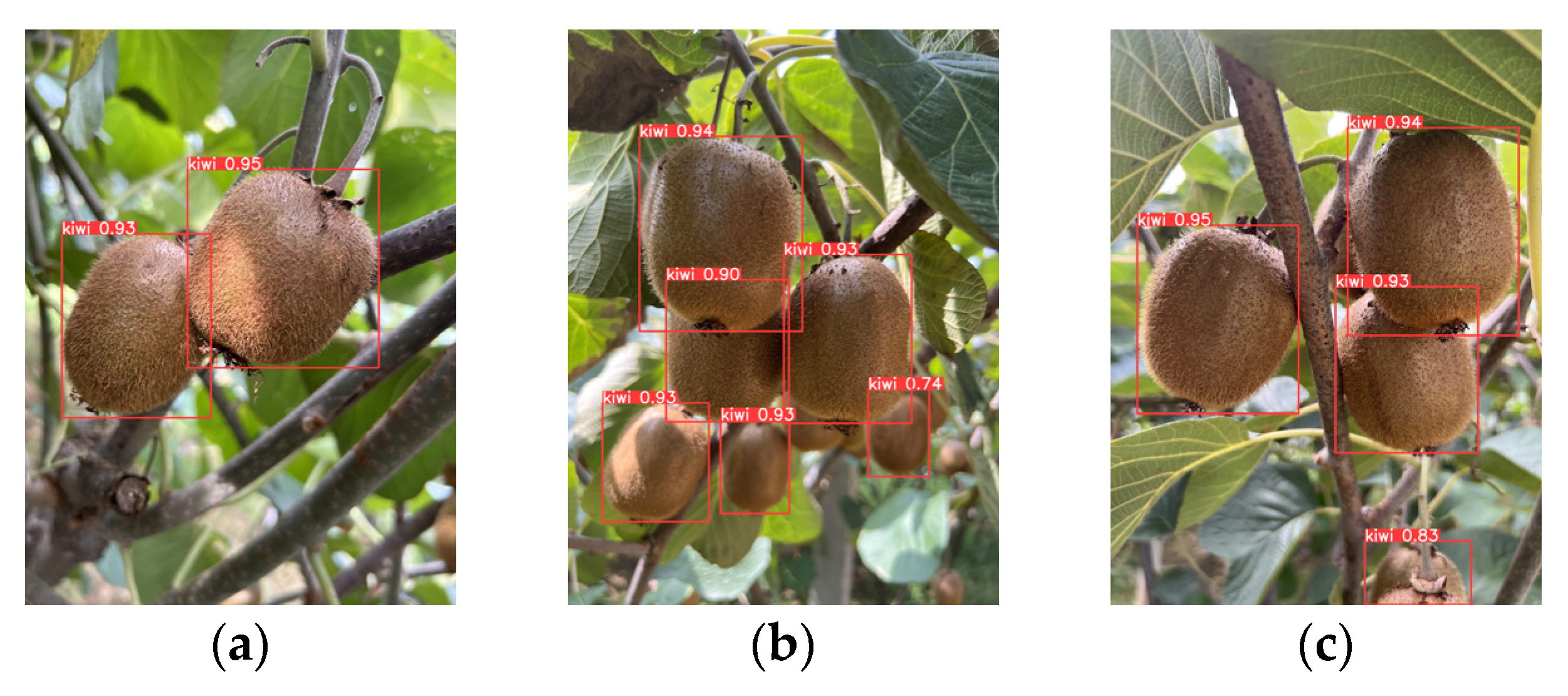

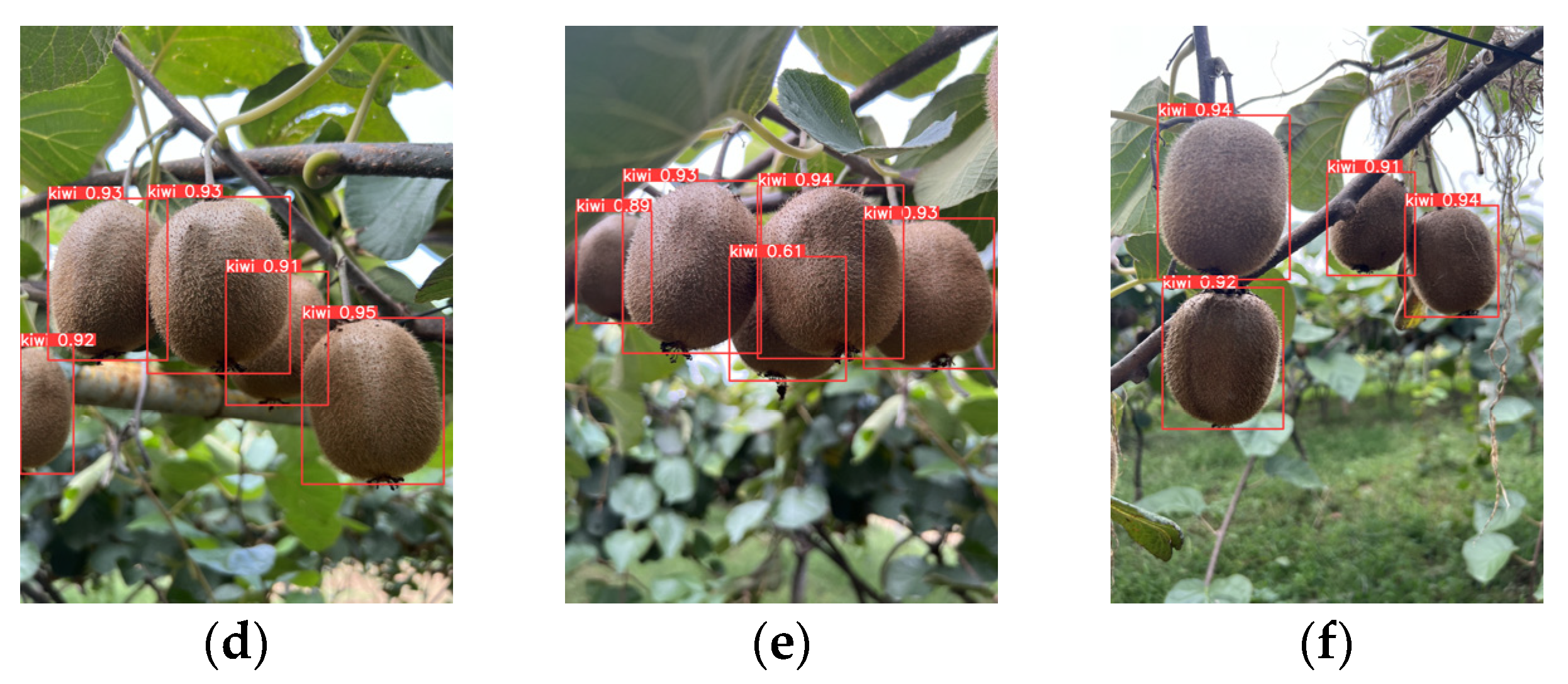

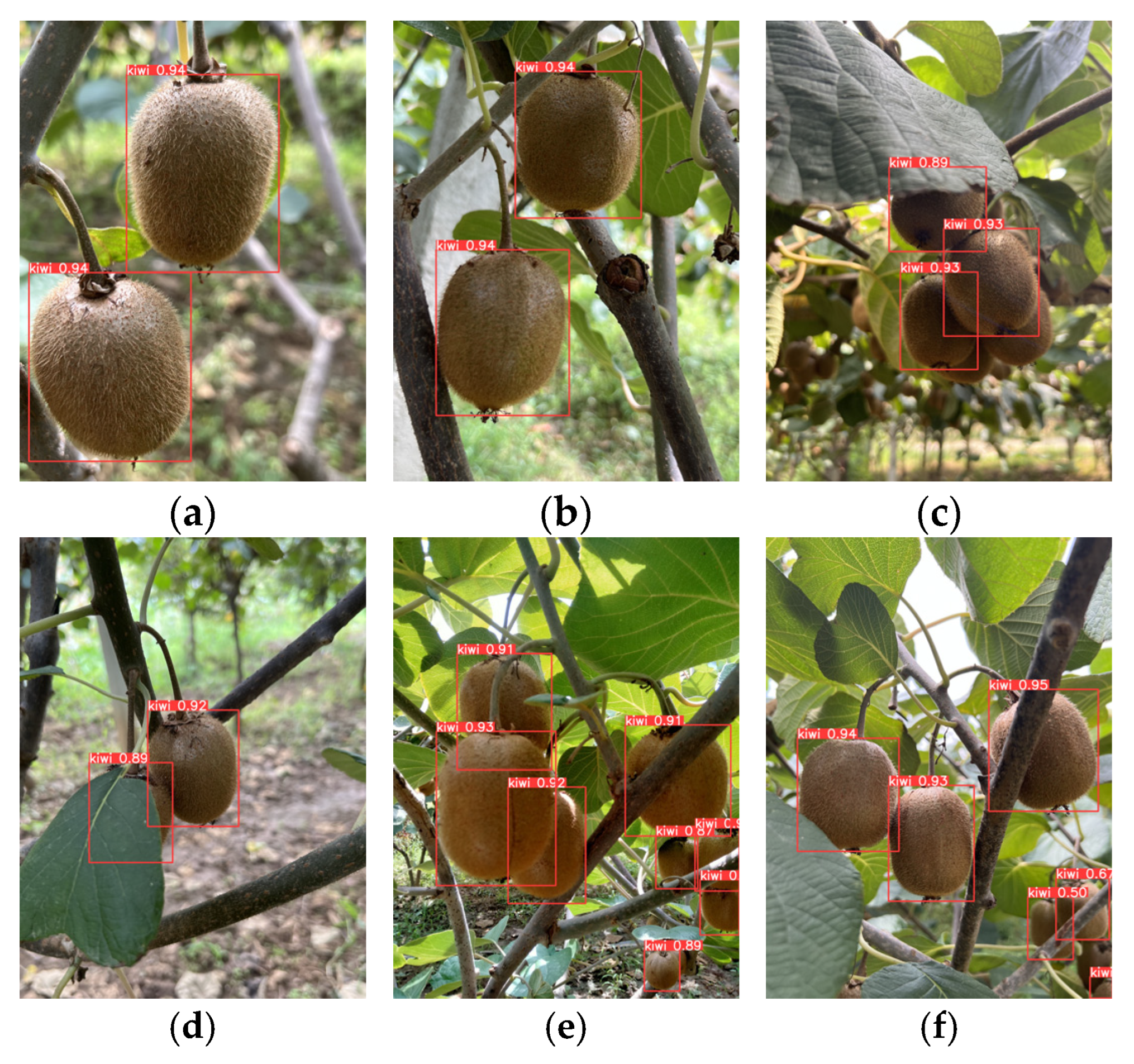

While prior research has achieved substantial improvements in fruit recognition algorithms, most experiments are still based on idealized environmental settings. In kiwifruit orchards, complex environmental conditions pose multiple challenges to detection technology. First, the high-density planting system formed by the main-trunk, wide-row dense planting structure results in high canopy closure; fruits are distributed in clusters, and occlusion by multiple branches and leaves and overlap between fruits are widespread. Second, the strong canopy heterogeneity caused by the cultivation mode results in uneven light intensity distribution, with significant differences in visible light reflectance among fruits on different sides of individual trees. The interaction between these morphological features and optical properties hinders the precise detection of kiwifruit. To overcome these limitations, this study introduces Kiwi-YOLO, a novel deep learning model designed specifically for robust kiwifruit detection in complex orchard environments.

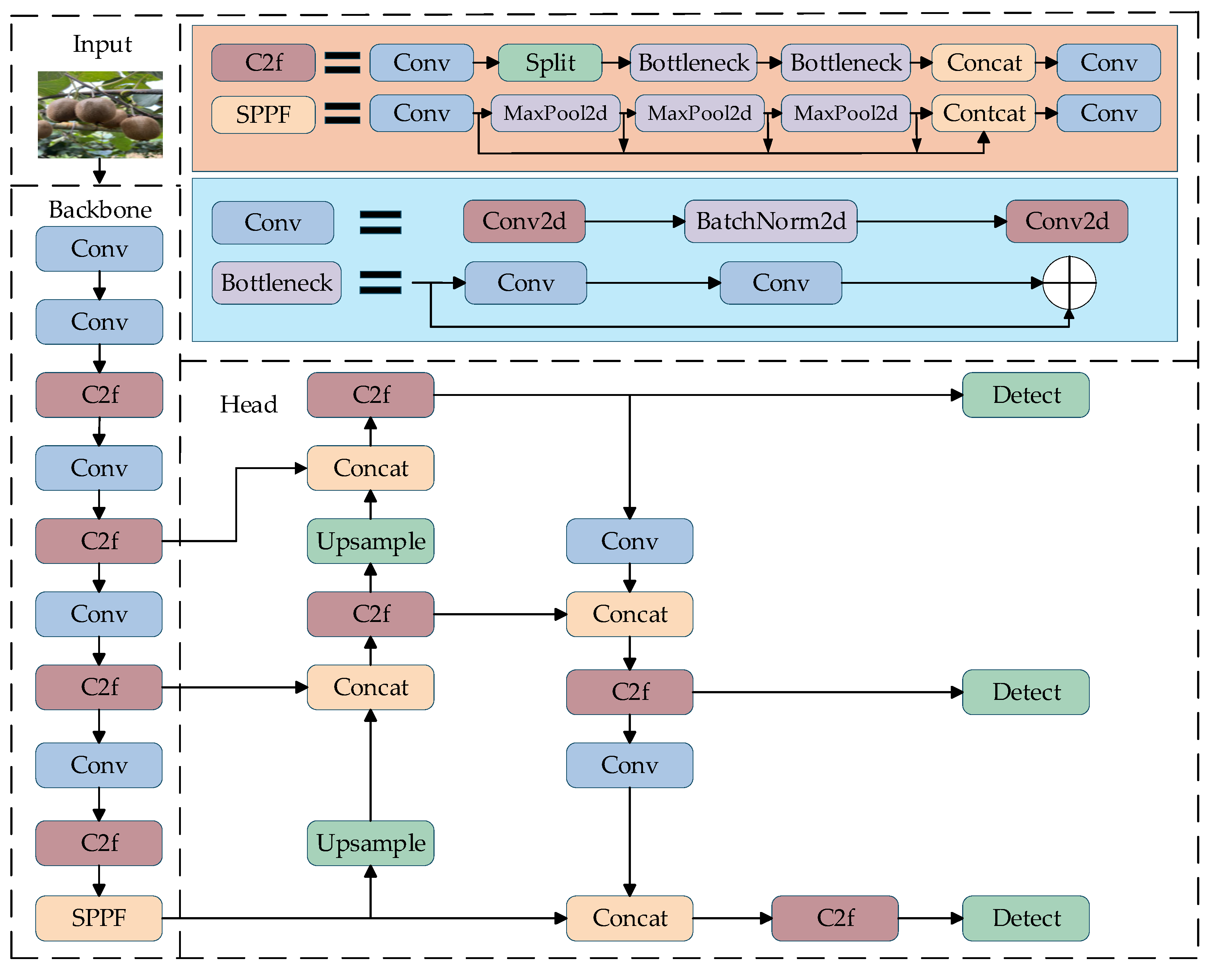

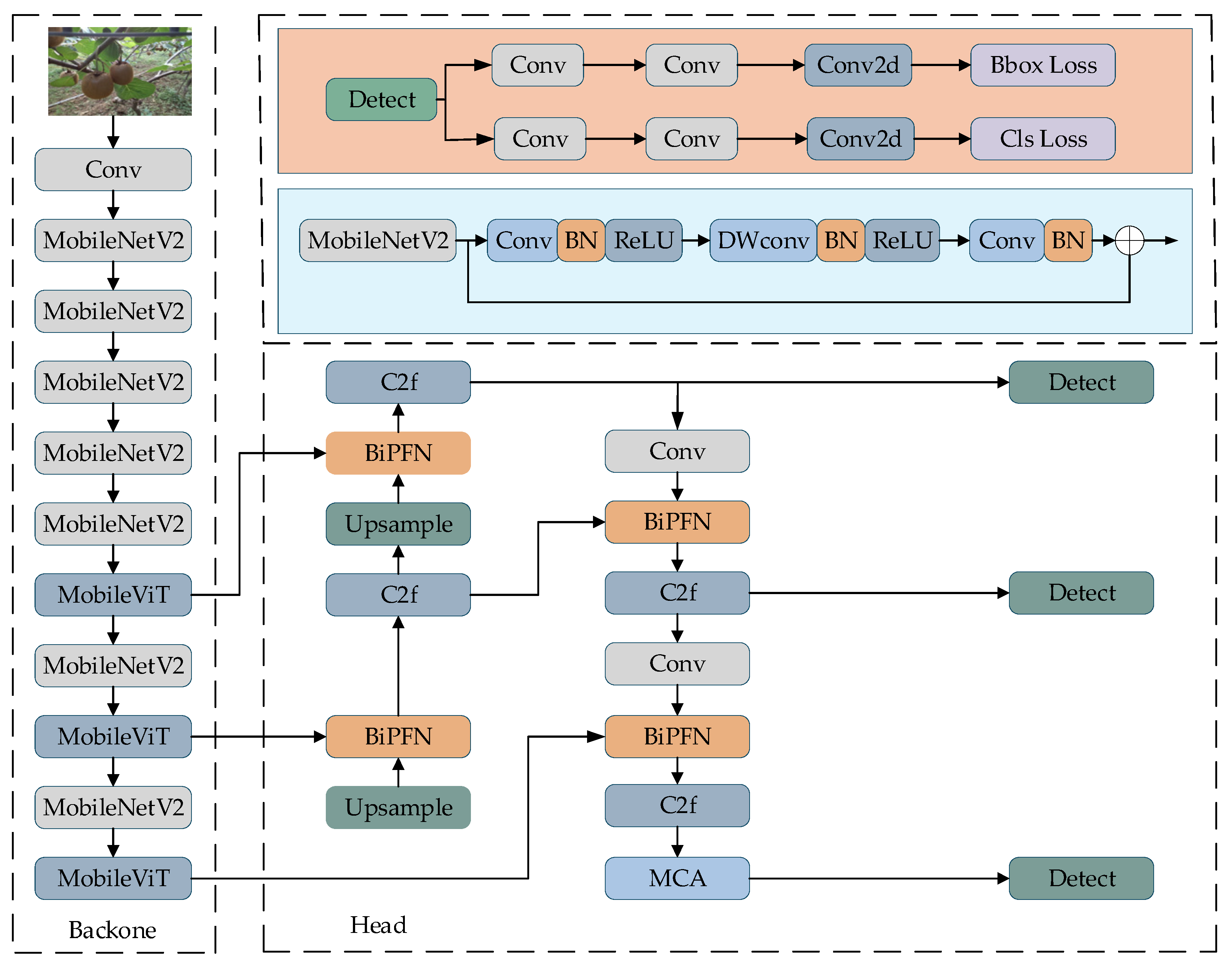

This study made the following improvements to the proposed Kiwi-YOLO kiwifruit detection network:

- (1)

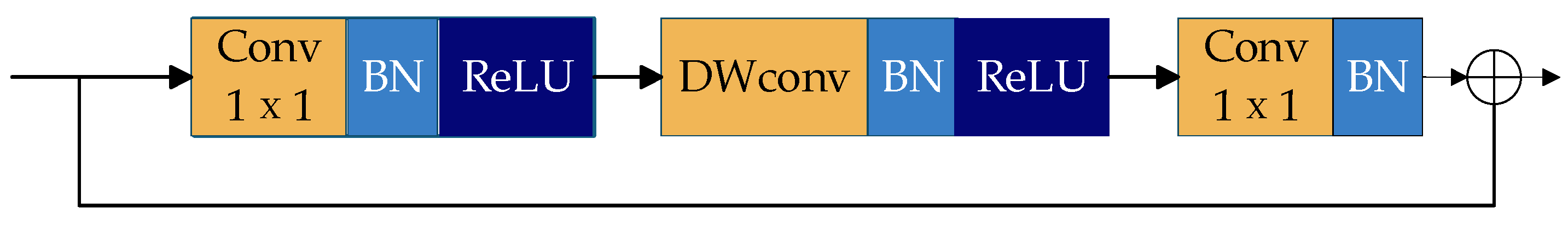

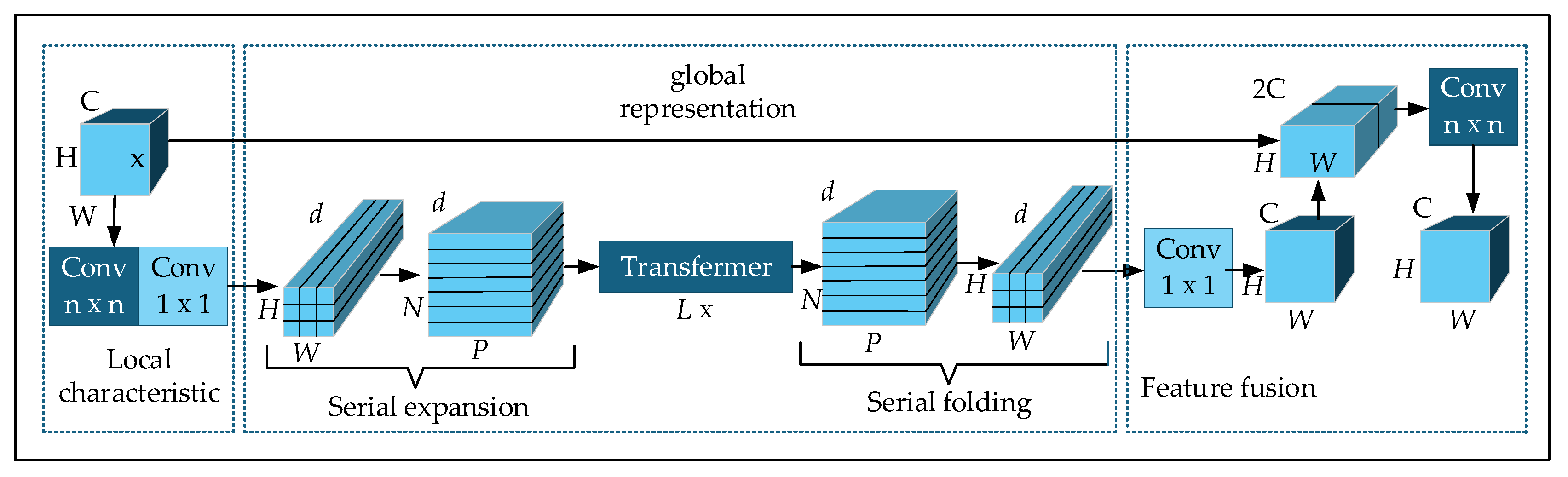

In the main network, the Conv and C2f modules are replaced with MobileViTv1 modules to reduce computational load and parameter count, thereby improving inference efficiency on mobile devices.

- (2)

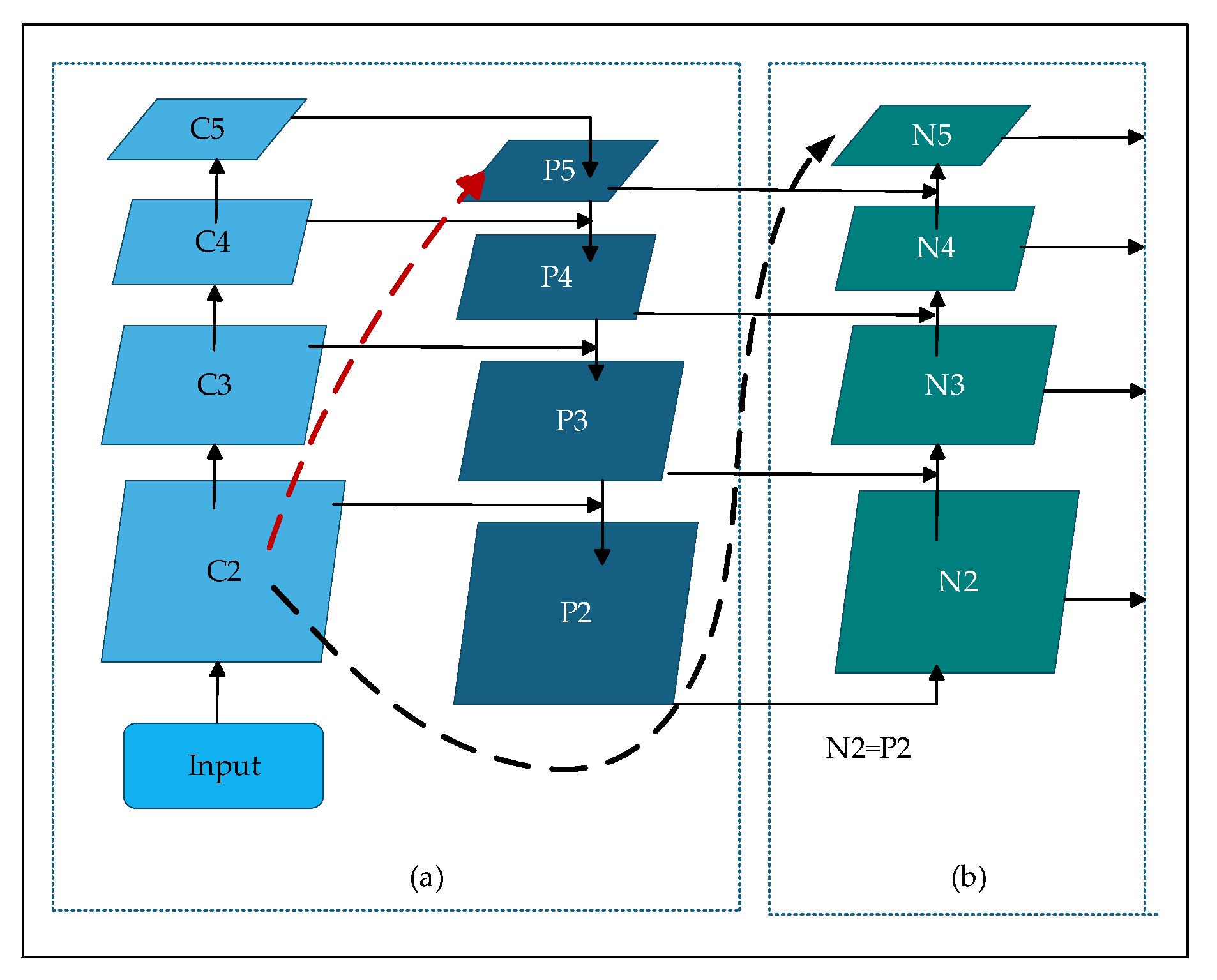

The bidirectional feature pyramid network (BiFPN) is introduced into the feature fusion layer of the base model to replace the original PANet structure, enhancing the model’s ability to distinguish between obstacles and backgrounds through the efficient fusion of cross-scale features.

- (3)

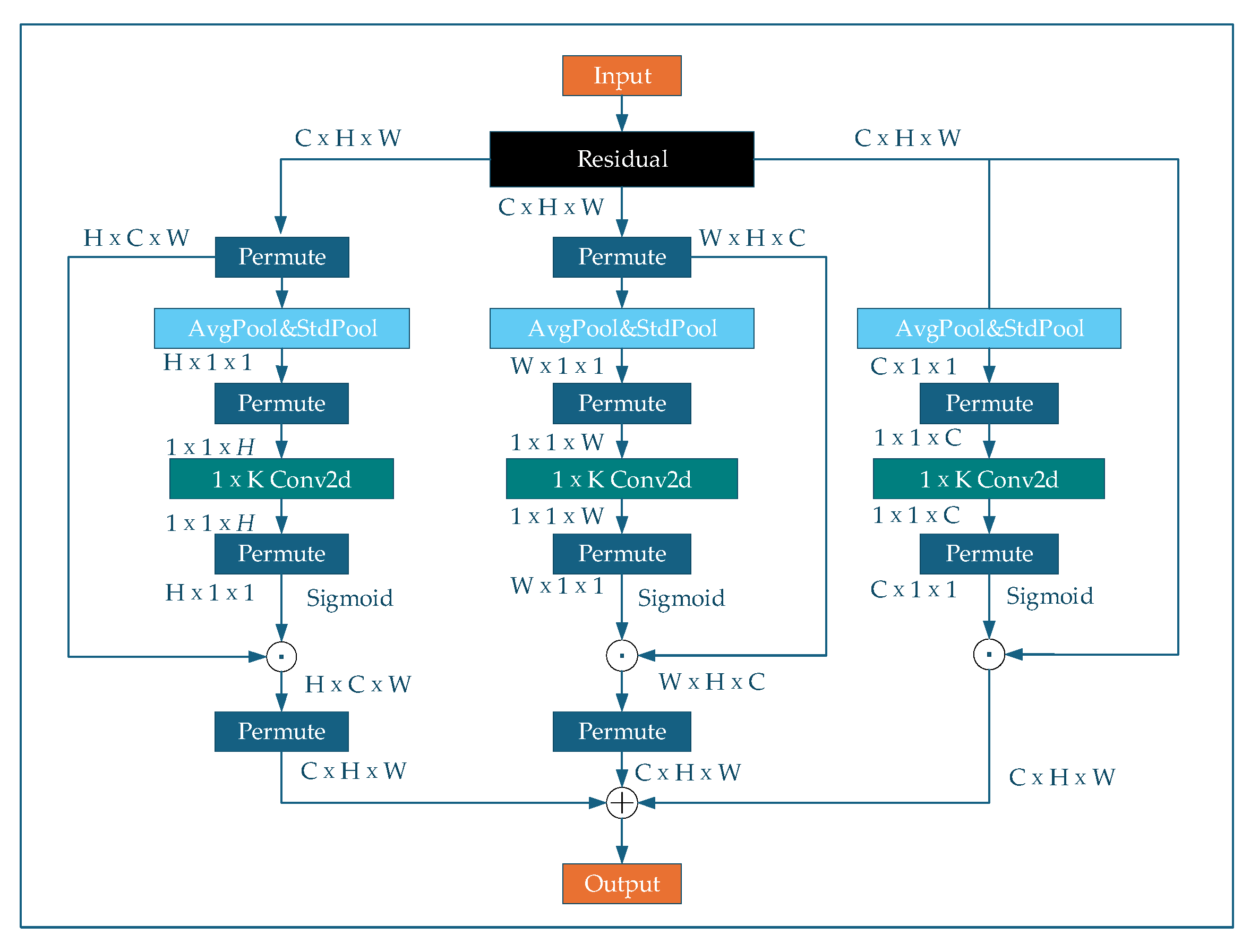

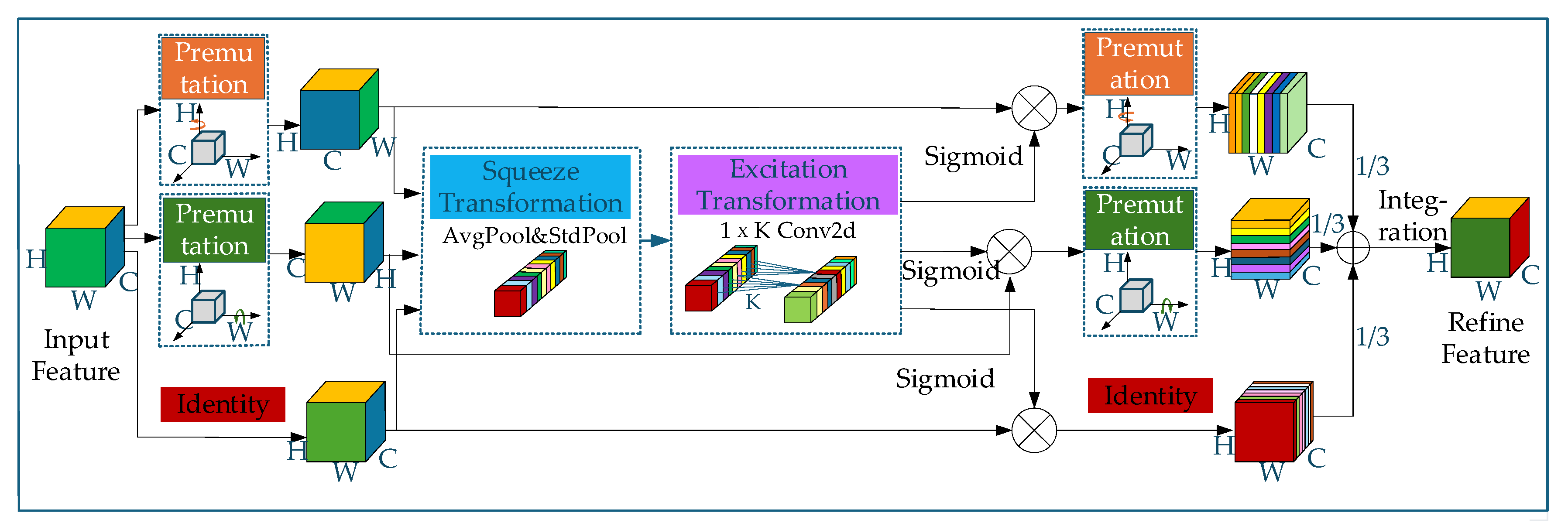

To improve the model’s interference resistance and transfer performance, a multi-dimensional collaborative attention (MCA) mechanism is introduced to strengthen the robustness of feature expression.

- (4)

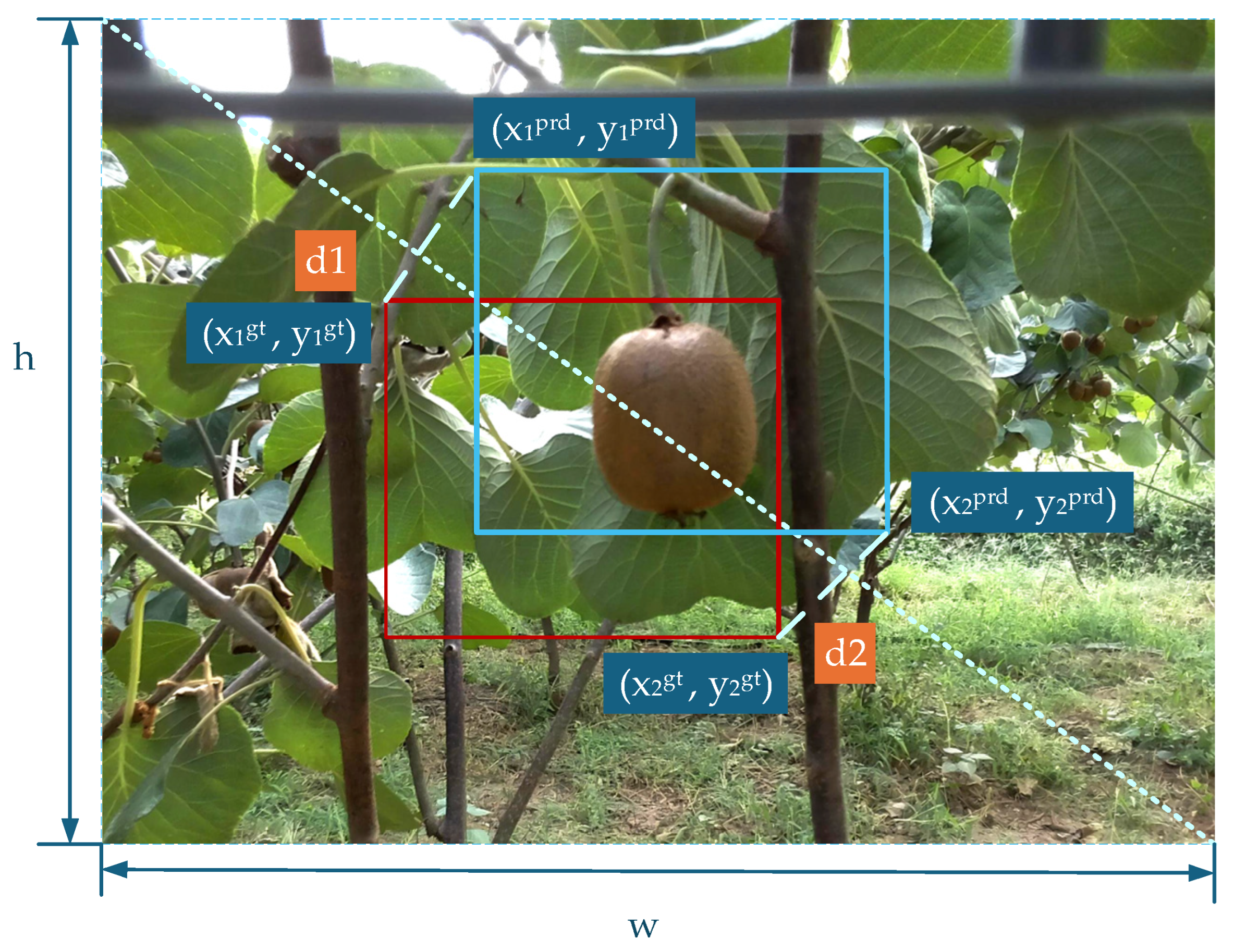

By using the MPDIoU loss function and minimizing the distance between the corners of the bounding box, we can effectively mitigate geometric distortion of the detection box caused by sample differences, accelerate model convergence, and improve target localization accuracy.
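As a rough illustration of item (4), the sketch below computes an MPDIoU-style loss for two axis-aligned boxes. It assumes the commonly cited formulation, in which the IoU is penalized by the squared distance between the two top-left corners and the squared distance between the two bottom-right corners, each normalized by the squared diagonal of the input image. The function name `mpdiou_loss`, the `(x1, y1, x2, y2)` box layout, and the `eps` term are illustrative choices rather than details taken from this study’s implementation.

```python
def mpdiou_loss(pred, target, img_w, img_h, eps=1e-9):
    """MPDIoU-style loss for two boxes given as (x1, y1, x2, y2).

    Assumed formulation:
        MPDIoU = IoU - d1^2 / (w^2 + h^2) - d2^2 / (w^2 + h^2)
        loss   = 1 - MPDIoU
    where d1 and d2 are the distances between the top-left and the
    bottom-right corners of the two boxes, and w, h are the image size.
    """
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target

    # Intersection area (zero when the boxes do not overlap).
    inter_w = max(0.0, min(px2, tx2) - max(px1, tx1))
    inter_h = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = inter_w * inter_h

    # Union area and IoU; eps guards against division by zero.
    area_p = (px2 - px1) * (py2 - py1)
    area_t = (tx2 - tx1) * (ty2 - ty1)
    iou = inter / (area_p + area_t - inter + eps)

    # Squared corner distances, normalized by the squared image diagonal.
    d1_sq = (px1 - tx1) ** 2 + (py1 - ty1) ** 2
    d2_sq = (px2 - tx2) ** 2 + (py2 - ty2) ** 2
    norm = img_w ** 2 + img_h ** 2

    return 1.0 - (iou - d1_sq / norm - d2_sq / norm)

# Hypothetical boxes on a 100 x 100 image.
same = mpdiou_loss((10, 10, 30, 30), (10, 10, 30, 30), 100, 100)
shifted = mpdiou_loss((12, 12, 32, 32), (10, 10, 30, 30), 100, 100)
disjoint = mpdiou_loss((0, 0, 10, 10), (20, 20, 30, 30), 100, 100)
print(same, shifted, disjoint)
```

Unlike a plain IoU loss, the corner-distance terms keep the loss informative for non-overlapping boxes: in the disjoint case the IoU is zero, yet the loss still grows with how far apart the corners are.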

5. Conclusions

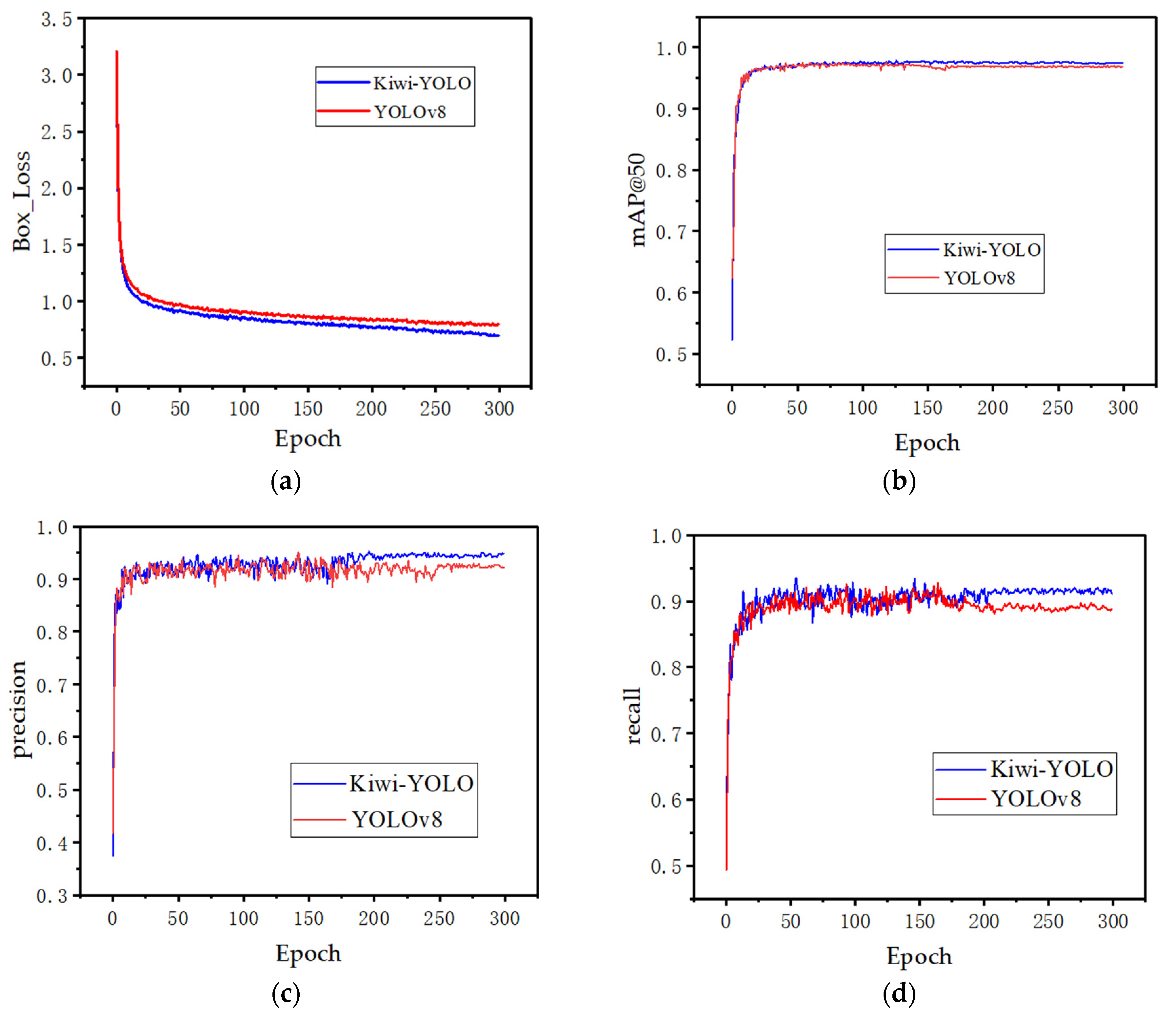

To overcome the challenges of limited adaptability and high computational demands in kiwifruit detection models operating in natural environments, this research enhances the YOLOv8 framework and introduces the Kiwi-YOLO model, attaining an optimal balance between inference efficiency and recognition precision. First, the MobileViTv1 model is used to replace the backbone network, effectively reducing its parameter count and computational load. Second, the BiFPN is integrated into the neck of the base model, replacing the original PANet, thereby enhancing the model’s ability to distinguish between backgrounds and obstacles. Additionally, the MPDIoU loss function is utilized to minimize inter-vertex distances of bounding boxes, mitigating detection distortion induced by sample heterogeneity, which accelerates convergence and enhances localization precision. Furthermore, the MCA module is introduced to augment the model’s robustness and generalization capabilities. Kiwi-YOLO demonstrates outstanding performance in complex environments for kiwifruit recognition, achieving precision (P), recall (R), and mean average precision (mAP) of 94.4%, 98.9%, and 97.7%, respectively. On the kiwifruit dataset in an orchard environment, Kiwi-YOLO demonstrates the best overall performance compared to mainstream models and significantly reduces the false negative rate. Compared to the baseline model YOLOv8, its parameter count and floating-point operations (FLOPs) are reduced by 19.71 M and 2.8 G, respectively, while the inference speed (FPS) is improved by 2.73 f·s−1, enhancing its compatibility with resource-constrained mobile platforms. Additionally, in experiments involving complex scene instance recognition with varying lighting conditions and occlusions, Kiwi-YOLO attains superior detection accuracy with enhanced environmental robustness, enabling reliable visual perception for automated kiwifruit harvesting in occlusion-intensive orchards.
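For readers less familiar with the reported metrics, precision and recall are conventionally derived from counts of true positives, false positives, and false negatives, and the false negative rate is the complement of recall. The following minimal sketch uses hypothetical counts chosen only for illustration; they are not this study’s data, and the function name `precision_recall` is likewise an assumption.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from detection counts.

    precision = TP / (TP + FP): share of predicted fruits that are real.
    recall    = TP / (TP + FN): share of real fruits that were found.
    Empty denominators yield 0.0 instead of raising an error.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall

# Hypothetical counts for one evaluation run (not the study's results).
p, r = precision_recall(tp=90, fp=10, fn=5)
miss_rate = 1.0 - r  # false negative rate

print(f"P={p:.3f} R={r:.3f} FNR={miss_rate:.3f}")
```

A high recall therefore corresponds directly to a low false negative rate, which matters for harvesting because every missed fruit stays on the vine.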

Despite its strong performance in detecting kiwifruit under complex conditions, the Kiwi-YOLO model’s generalization capability still requires further enhancement. First, the current training dataset primarily includes images of kiwifruit at the mature stage (with yellowish-brown or brown skin) and lacks samples of unripe kiwifruit (dark green). This lack of sample diversity may limit the model’s performance in identifying kiwifruit of different colors and maturity levels. Second, the dataset in this study is primarily based on red-fleshed kiwifruit and was collected from dwarf-cultivated orchards. This variety accounts for a significant proportion of local cultivation areas and is the most representative type in commercial production, so prioritizing its detection directly addresses industrial needs. In the early stages of this study, the focus was on general environmental disturbances such as “lighting and obstruction,” so the variables related to variety and cultivation mode were temporarily simplified. Additionally, owing to constraints on research progress and the lack of relevant technology, this study did not address tasks such as target tracking. Finally, this study focuses on the unique challenges of kiwifruit harvesting, with all experimental designs and parameter optimizations tailored to kiwifruit characteristics. Therefore, the recognition algorithms developed in this study cannot currently be applied to other fruits. Future work will prioritize collecting and incorporating kiwifruit images spanning different growth stages, including the unripe stage, to build a more diverse dataset. It will also expand the data to other kiwifruit varieties and different cultivation modes (such as trellis systems) and test and optimize cross-variety and cross-mode generalization. Additionally, when technical conditions mature, the study will explore extended functions such as target tracking to enhance the model’s generalization performance and practical adaptability in detecting kiwifruit across different growth stages.