An Enhanced Faster R-CNN for High-Throughput Winter Wheat Spike Monitoring to Improved Yield Prediction and Water Use Efficiency

Abstract

1. Introduction

2. Materials and Methods

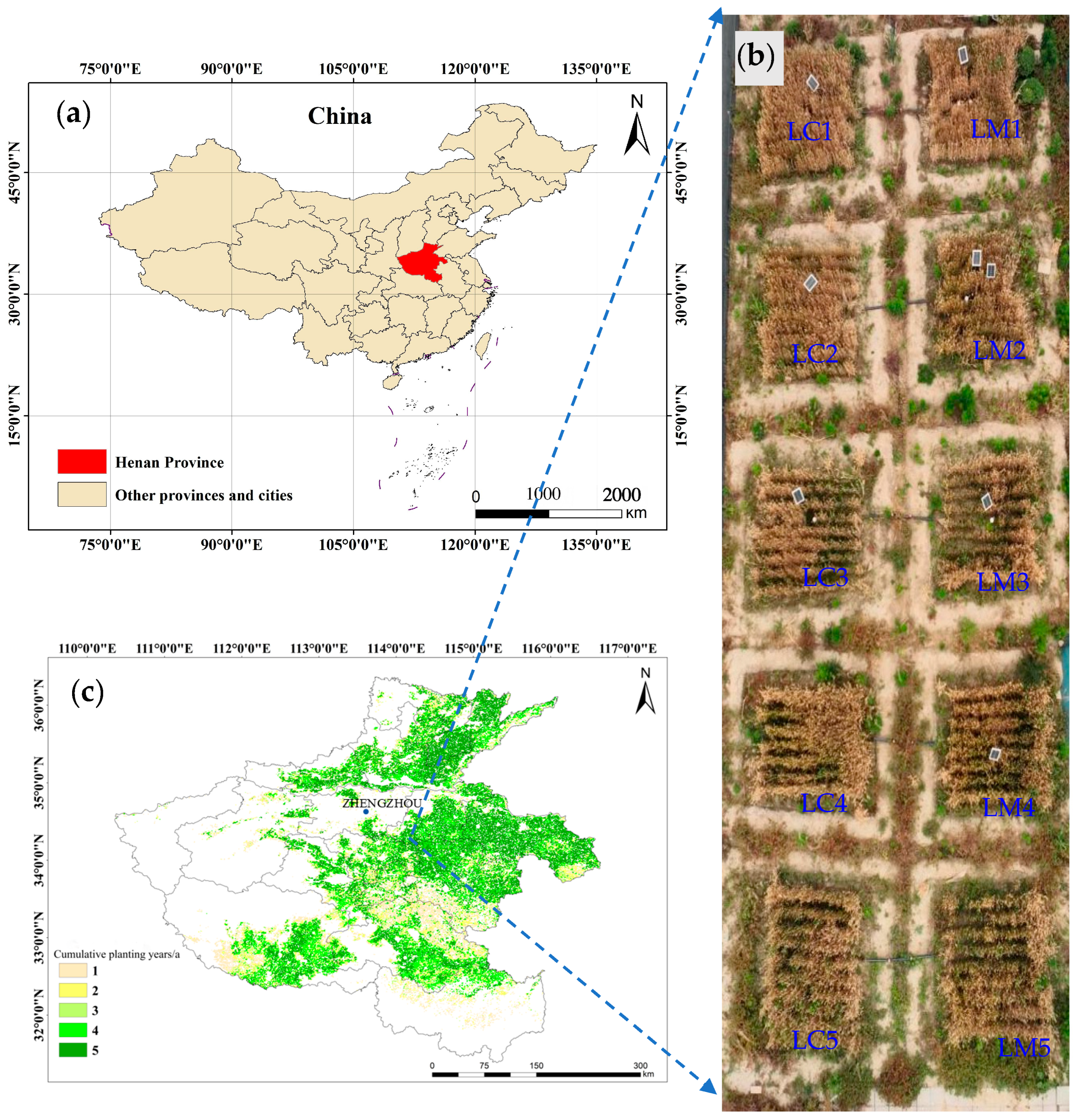

2.1. Site Description and Experimental Treatments

2.2. Multi-Source Data Integration and Image Processing Framework

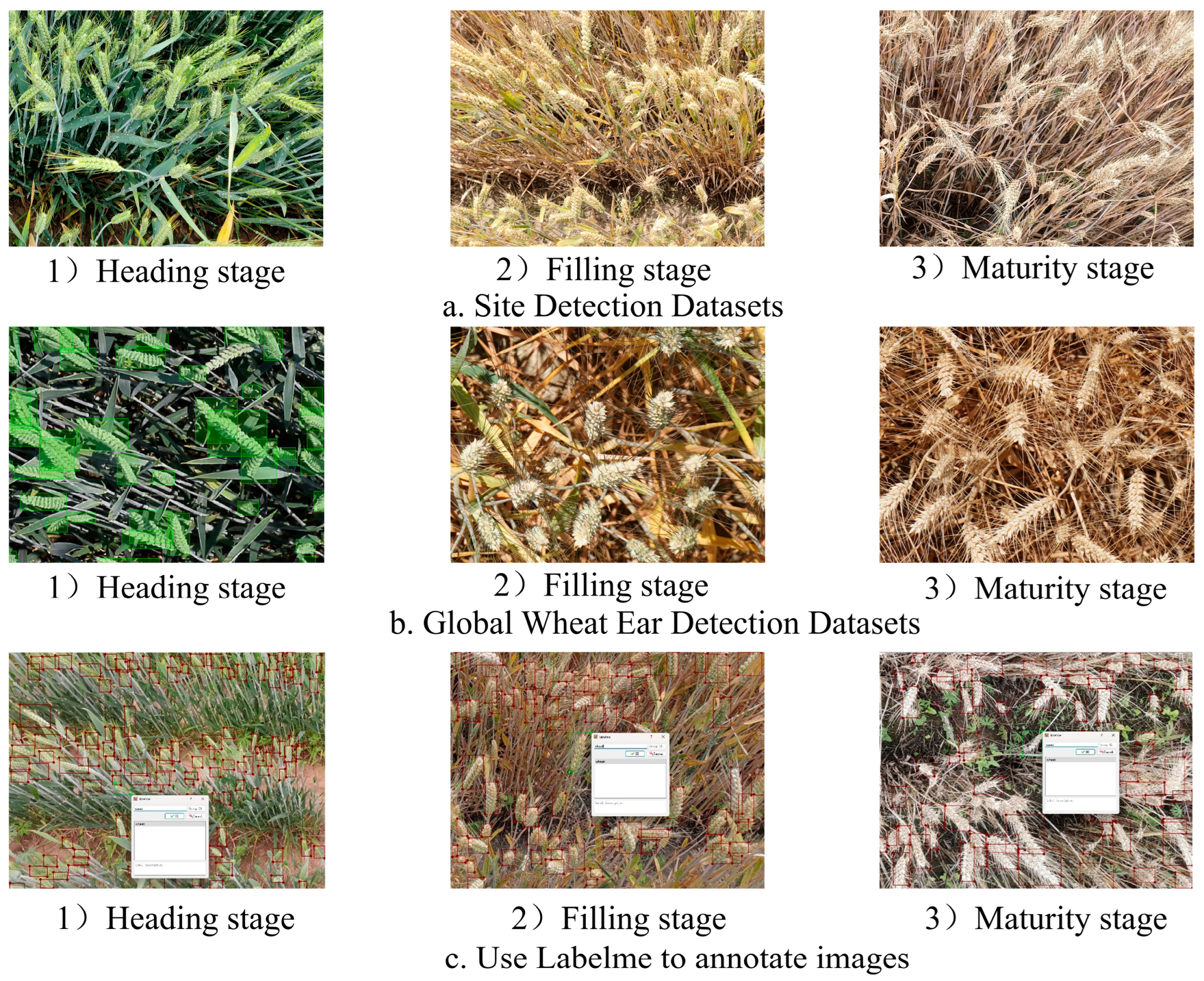

2.2.1. Multi-Source Data Integration Strategy for Cross-Environment Wheat Spike Detection

2.2.2. Image Screening and Normalization for Model Training Requirements

2.3. Model Architecture and Yield Prediction Framework

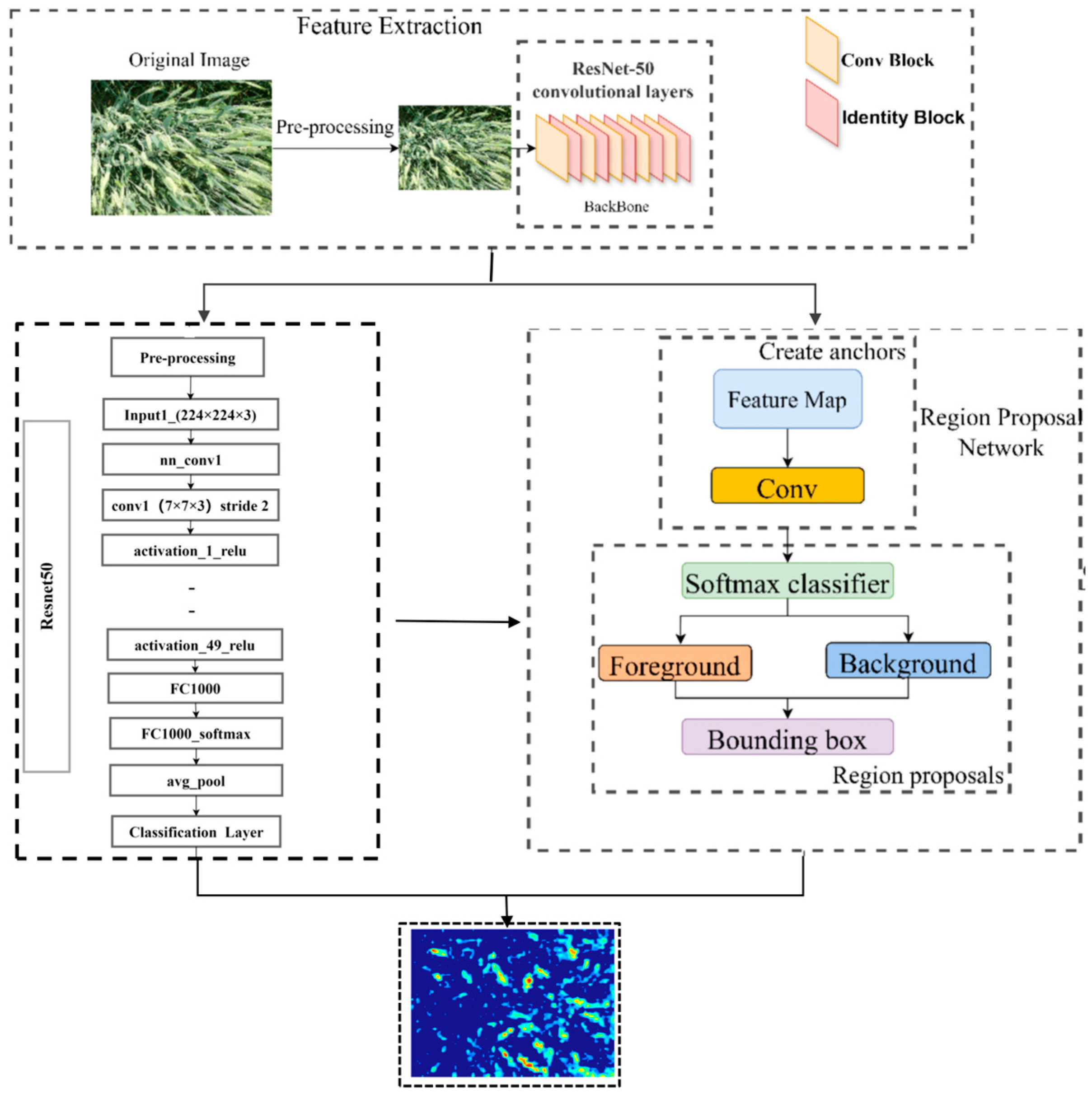

2.3.1. Faster R-CNN Network

2.3.2. ResNet-50 Module Details

2.3.3. Production Forecasting Model

2.4. Evaluation Metrics

3. Results

3.1. Training Dataset Curation and Quality Assurance Framework

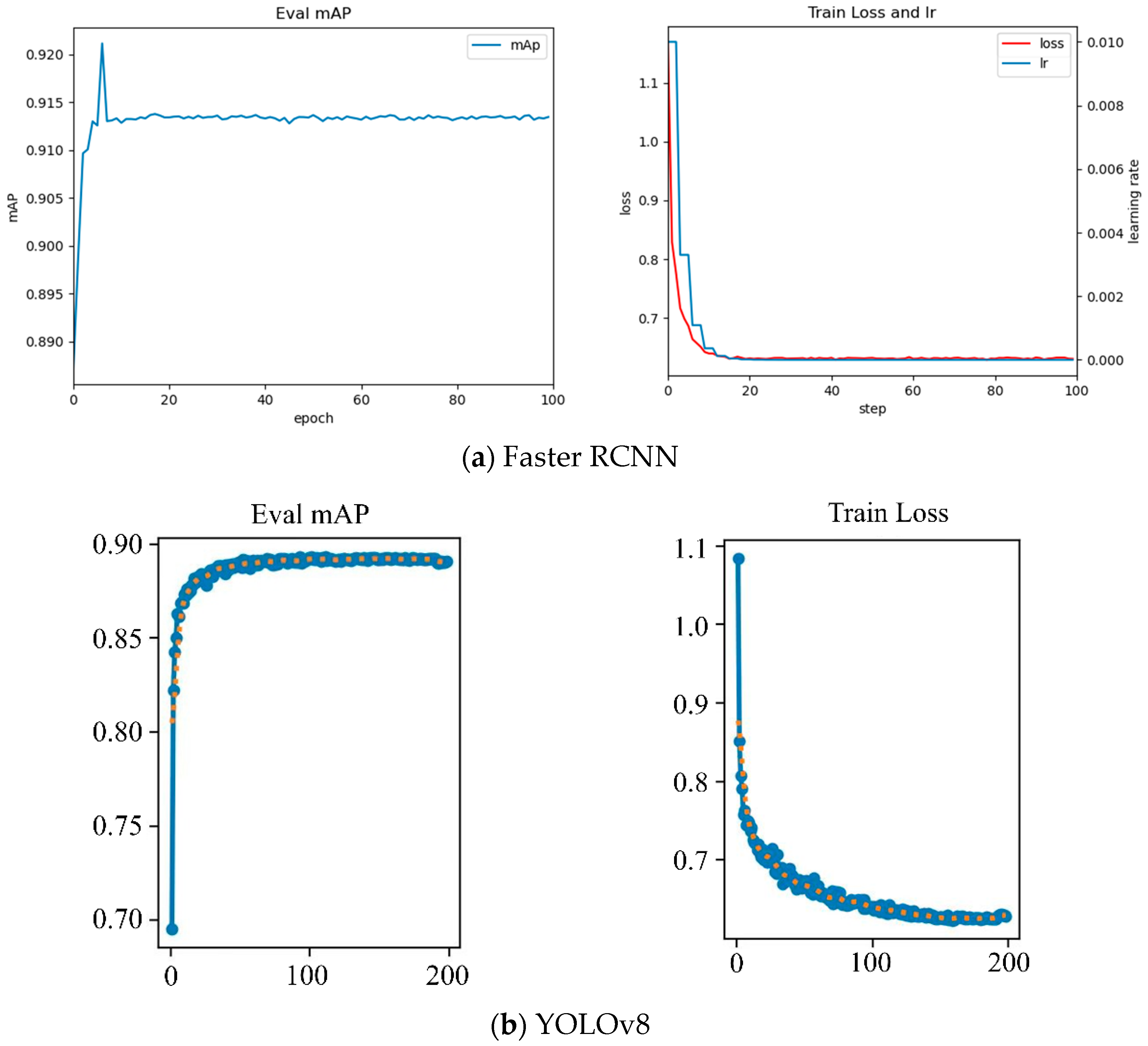

3.2. Performance Comparison of the Improved Faster R-CNN and YOLOv8 Models

3.3. Recognition Results Based on Faster R-CNN

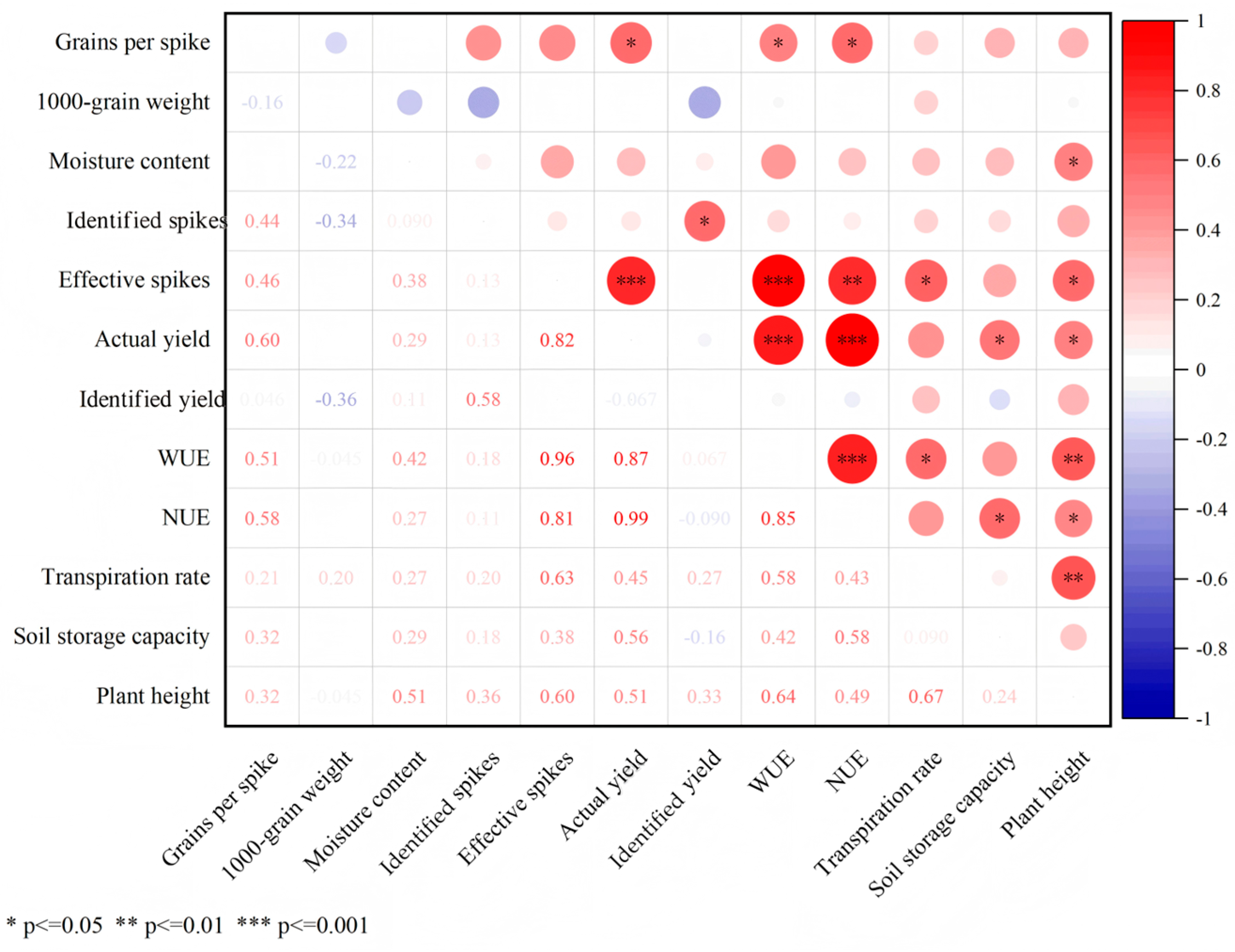

3.4. Wheat Yield Calculation and Correlation Analysis

3.5. Potential Yield of Winter Wheat Under Rainfed Conditions in Future Climate Scenarios

3.6. Water Productivity and Water Use Efficiency

4. Discussion

4.1. Faster R-CNN Model Performance and Innovations

4.2. Yield Prediction and Water–Nitrogen Synergy

4.3. Advantages, Limitations, and Future Directions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Parameter | Faster R-CNN | YOLOv8 | Unit |
|---|---|---|---|
| Optimizer | SGD | SGD | categorical |
| Momentum | 0.8–0.95 | 0.8–0.95 | dimensionless |
| Label smoothing | 0.0001–0.2 | 0.0001–0.2 | dimensionless |
| Batch size | 8–16 | 4–8 | images per batch |
| Epochs | 100–200 | 150–300 | training cycles |
| Learning rate | 0.0001–0.01 | 0.0001–0.001 | dimensionless |
| Workers | 4–8 | 4–8 | data-loading processes |
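Both detectors are configured with SGD plus momentum and a label-smoothing coefficient. The pure-Python sketch below illustrates what those two hyperparameters do, assuming the PyTorch-style momentum update (velocity accumulates gradients, weights step against the velocity) and uniform smoothing over K classes. The function names and the toy quadratic objective are hypothetical; the authors' training code is not shown in the article.

```python
# Minimal pure-Python sketch (assumption): PyTorch-style SGD with momentum and
# uniform label smoothing. Function names and the toy objective are hypothetical.


def sgd_momentum(grad_fn, w0, lr=0.01, momentum=0.9, steps=500):
    """Minimise a 1-D objective with heavy-ball momentum.

    Update rule (PyTorch convention): v <- momentum * v + g, then w <- w - lr * v.
    """
    w, v = w0, 0.0
    for _ in range(steps):
        g = grad_fn(w)          # gradient at the current weight
        v = momentum * v + g    # accumulate the velocity (momentum buffer)
        w = w - lr * v          # step against the velocity
    return w


def smooth_labels(one_hot, epsilon):
    """Uniform label smoothing over K classes: (1 - eps) * y + eps / K."""
    k = len(one_hot)
    return [(1.0 - epsilon) * y + epsilon / k for y in one_hot]


if __name__ == "__main__":
    # Toy objective f(w) = (w - 3)^2 with gradient 2 * (w - 3); minimum at w = 3.
    w_final = sgd_momentum(lambda w: 2.0 * (w - 3.0), w0=0.0, lr=0.01, momentum=0.9)
    print(f"w after SGD with momentum: {w_final:.4f}")
    # Hypothetical two-class target (background, spike) smoothed with eps = 0.1.
    print(smooth_labels([0.0, 1.0], epsilon=0.1))
```

A larger momentum (toward 0.95) carries more of the previous update forward, while a larger smoothing coefficient moves more probability mass away from the annotated class.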

| Model | Training Dataset | mAP@0.5 (%) | Batch Size | Inference GFLOPs | Training Time (min) | Epochs | Training GFLOPs | Loss | Recall |
|---|---|---|---|---|---|---|---|---|---|
| Faster R-CNN | Wheat Head Detection | 91.2 ± 0.012 a | 4 | 1.7 | 714 | 100 | 6.5 | 0.6291 | 0.8872 |
| YOLOv8 | Wheat Head Detection | 89.1 ± 0.015 ab | 4 | 0.7 | 53 | 100 | 0.9 | 0.6307 | 0.8825 |
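The "@0.5" in mAP@0.5 means a predicted box counts as a correct spike detection only when its intersection-over-union (IoU) with an annotated spike is at least 0.5. The sketch below shows IoU and a greedy, confidence-ordered matching step that yields precision and recall at that single threshold. The box format `(x_min, y_min, x_max, y_max)` and the greedy matching rule are assumptions; full mAP additionally averages precision over the recall curve, which this sketch omits.

```python
# Hypothetical helpers (not the authors' code): IoU and greedy matching at IoU >= 0.5.


def iou(a, b):
    """Intersection-over-union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds, gts, thr=0.5):
    """Greedily match score-sorted predictions to unused ground-truth boxes.

    preds: list of (score, box); gts: list of boxes.
    A prediction is a true positive if its best unused match has IoU >= thr.
    """
    matched = set()
    tp = 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = -1, thr
        for j, gt in enumerate(gts):
            if j in matched:
                continue  # each annotated spike can be matched only once
            overlap = iou(box, gt)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
    precision = tp / len(preds) if preds else 0.0
    recall = tp / len(gts) if gts else 0.0
    return precision, recall


if __name__ == "__main__":
    gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
    preds = [(0.9, (1, 1, 10, 10)), (0.8, (50, 50, 60, 60))]
    print(precision_recall(preds, gts))
```

In the usage example, the first prediction overlaps the first annotation with IoU 0.81 and is a true positive, while the second prediction overlaps nothing, so precision and recall are both 0.5.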

| Treatment | Grains per Spike | Spikes (m−2) | Effective Spikes (m−2) | Thousand-Grain Weight (g) | Moisture Content (%) | Theoretical Yield (kg ha−1) | Actual Yield (kg ha−1) |
|---|---|---|---|---|---|---|---|
| LM1 | 29 ± 1 b | 601 ± 13 abc | 566 ± 11 d | 42.53 ± 0.22 d | 8.291 ± 0.15 d | 5992.07 ± 239.08 bc | 4178.76 ± 125.3 c |
| LM2 | 28 ± 2 bc | 747 ± 24 a | 724 ± 14 ab | 44.00 ± 0.35 b | 9.124 ± 0.18 c | 7589.85 ± 564.68 ab | 5073.38 ± 152.2 ab |
| LM3 | 31 ± 2 ab | 800 ± 16 a | 760 ± 15 a | 42.07 ± 0.21 d | 10.218 ± 0.20 b | 8434.16 ± 570.15 a | 4905.64 ± 147.2 abc |
| LM4 | 28 ± 1 bc | 773 ± 16 a | 739 ± 14 ab | 42.20 ± 0.25 d | 11.067 ± 0.22 a | 7474.10 ± 304.94 ab | 5242.76 ± 150.7 ab |
| LM5 | 21 ± 2 d | 459 ± 9 d | 407 ± 8 f | 41.13 ± 0.21 e | 10.273 ± 0.21 b | 2947.91 ± 286.83 e | 2446.62 ± 61.2 d |
| LC1 | 35 ± 2 a | 612 ± 12 bc | 580 ± 11 d | 42.33 ± 0.21 d | 5.603 ± 0.11 g | 7240.35 ± 437.32 ab | 5583.37 ± 119.6 a |
| LC2 | 26 ± 1 bcd | 561 ± 11 cd | 547 ± 10 de | 43.10 ± 0.22 c | 6.738 ± 0.13 f | 5163.54 ± 221.52 cd | 4092.78 ± 122.8 c |
| LC3 | 26 ± 1 bcd | 702 ± 14 ab | 660 ± 13 ab | 45.37 ± 0.23 a | 7.079 ± 0.14 ef | 6718.98 ± 292.28 abc | 4681.69 ± 140.5 bc |
| LC4 | 31 ± 1 ab | 697 ± 13 ab | 650 ± 16 bd | 41.13 ± 0.21 e | 7.594 ± 0.15 e | 7083.07 ± 289.70 abc | 4528.81 ± 135.9 bc |
| LC5 | 20 ± 2 cd | 409 ± 8 d | 378 ± 7 ef | 42.23 ± 0.21 d | 6.155 ± 0.12 f | 2666.37 ± 271.44 de | 2383.62 ± 71.5 d |
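Theoretical yield is commonly computed from the three yield components: effective spikes per square metre × grains per spike × thousand-grain weight, often multiplied by an empirical reduction factor of 0.85. Across the rows above, the tabulated theoretical yields are roughly 0.83–0.87 times the unreduced product, which is consistent with (but does not confirm) such a factor; the exact formula is not shown in this section. The sketch below is therefore an assumption, including the hypothetical `factor` parameter.

```python
# Sketch of a common yield-component formula (assumed, not confirmed by the source).


def theoretical_yield(spikes_per_m2, grains_per_spike, tgw_g, factor=0.85):
    """Return theoretical grain yield in kg/ha.

    spikes_per_m2 * grains_per_spike * (tgw_g / 1000) gives grams per m^2;
    multiplying by 10 converts g/m^2 to kg/ha. `factor` is an empirical
    reduction coefficient (0.85 here is an assumption).
    """
    grams_per_m2 = spikes_per_m2 * grains_per_spike * tgw_g / 1000.0
    return grams_per_m2 * 10.0 * factor


if __name__ == "__main__":
    # LM2 row: 724 effective spikes m^-2, 28 grains per spike, TGW 44.00 g.
    print(round(theoretical_yield(724, 28, 44.00), 1))
```

For LM2 this gives about 7582 kg ha−1 against the tabulated 7589.85 kg ha−1; exact agreement is not expected because grains per spike are reported as rounded means.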

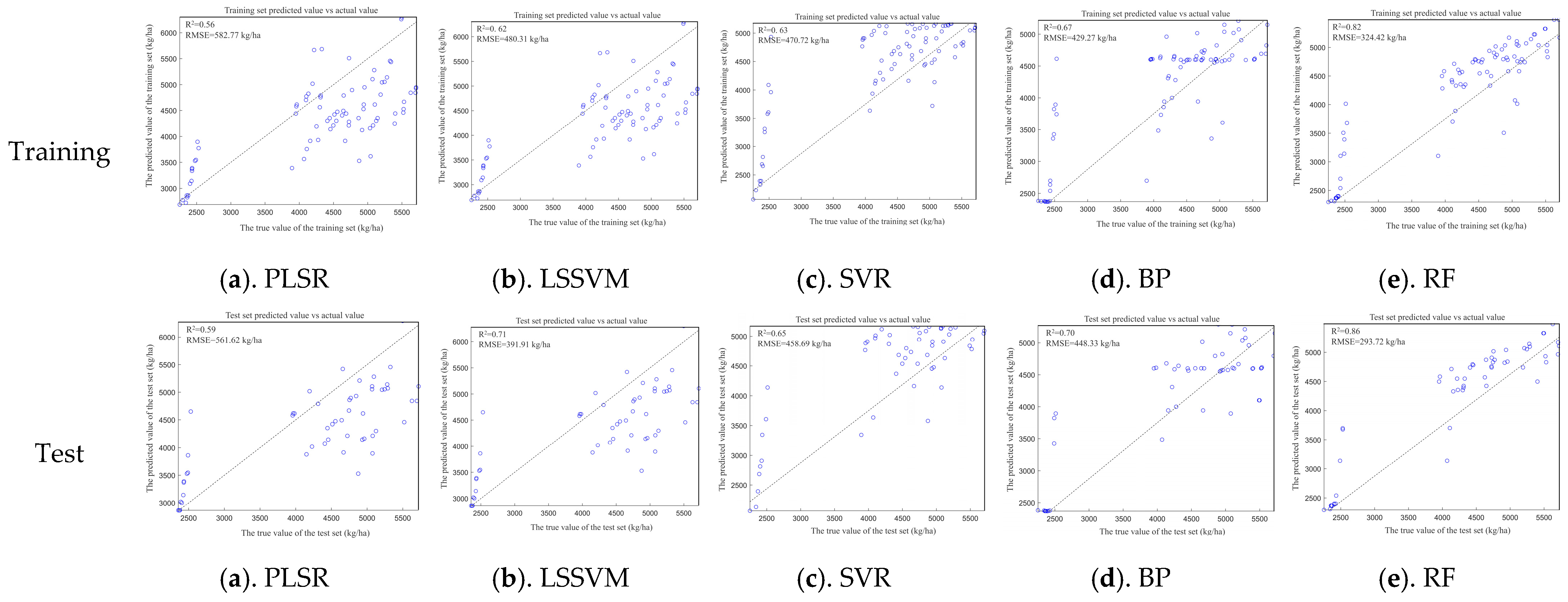

| Dataset | PLSR R² | PLSR RMSE (kg ha−1) | LSSVM R² | LSSVM RMSE (kg ha−1) | SVR R² | SVR RMSE (kg ha−1) | BP R² | BP RMSE (kg ha−1) | RF R² | RF RMSE (kg ha−1) |
|---|---|---|---|---|---|---|---|---|---|---|
| Train | 0.56 | 582.77 | 0.62 | 480.31 | 0.63 | 460.72 | 0.67 | 429.27 | 0.82 | 324.42 |
| Test | 0.59 | 561.62 | 0.71 | 391.91 | 0.65 | 458.69 | 0.70 | 448.33 | 0.86 | 293.72 |
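The five regressors are compared by R² and RMSE. The sketch below uses the standard definitions, R² = 1 − SS_res/SS_tot and RMSE = sqrt(mean squared residual). Some studies instead report R² as the squared Pearson correlation, and the article's exact computation is not shown, so treat this as an assumed convention; the sample values are hypothetical.

```python
# Standard R^2 and RMSE definitions (assumed convention; sample data hypothetical).
import math


def r2_score(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


def rmse(observed, predicted):
    """Root-mean-square error, in the units of the inputs (here kg ha^-1)."""
    n = len(observed)
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / n)


if __name__ == "__main__":
    obs = [4100.0, 5000.0, 4900.0, 2400.0]   # hypothetical measured yields
    pred = [4300.0, 4800.0, 5100.0, 2700.0]  # hypothetical model predictions
    print(f"R2 = {r2_score(obs, pred):.3f}, RMSE = {rmse(obs, pred):.1f} kg/ha")
```

Because RMSE is expressed in kg ha−1, it can be read directly against plot yields, whereas R² is unitless and depends on how much the observed yields vary.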

| Treatment | Actual Yield (kg ha−1) | Yield Value (CNY ha−1) | Irrigation Amount (m³ ha−1) | WP (kg m−3) | Irrigation (mm) | Precipitation (mm) | ET (mm) | Soil Water Stored (m) | WUE (kg ha−1 mm−1) |
|---|---|---|---|---|---|---|---|---|---|
| LM1 | 4178.76 ± 125.3 c | 10,237.96 ± 306.98 e | 1350 | 9.29 ± 0.2784 c | 100.00 | 110.33 | 466.93 | 0.531 | 13.19 |
| LM2 | 5073.38 ± 152.2 ab | 12,429.78 ± 372.89 bc | 1350 | 11.27 ± 0.3382 ab | 100.00 | 110.33 | 434.70 | 0.404 | 17.65 |
| LM3 | 4905.64 ± 147.2 abc | 12,018.82 ± 360.50 c | 1350 | 10.90 ± 0.3256 b | 100.00 | 110.33 | 432.53 | 0.466 | 19.52 |
| LM4 | 5242.76 ± 150.7 ab | 12,844.76 ± 369.21 b | 1350 | 11.65 ± 0.3349 a | 100.00 | 110.33 | 436.93 | 0.483 | 20.16 |
| LM5 | 2446.62 ± 61.2 d | 5994.22 ± 149.94 f | 1350 | 5.44 ± 0.1360 f | 100.00 | 110.33 | 458.16 | 0.515 | 7.02 |
| LC1 | 5583.37 ± 119.6 a | 13,679.26 ± 293.02 a | 2250 | 7.43 ± 0.1595 d | 60.00 | 110.33 | 382.54 | 0.590 | 13.64 |
| LC2 | 4092.78 ± 122.8 c | 10,027.31 ± 300.81 e | 2250 | 5.46 ± 0.1637 f | 60.00 | 110.33 | 417.55 | 0.597 | 10.83 |
| LC3 | 4681.69 ± 140.5 bc | 11,470.14 ± 344.23 d | 2250 | 6.24 ± 0.1873 e | 60.00 | 110.33 | 428.36 | 0.656 | 14.07 |
| LC4 | 4528.81 ± 135.9 bc | 11,095.58 ± 333.00 d | 2250 | 6.04 ± 0.1812 e | 60.00 | 110.33 | 396.26 | 0.557 | 14.67 |
| LC5 | 2383.62 ± 71.5 d | 5839.87 ± 175.18 f | 2250 | 3.18 ± 0.0953 g | 60.00 | 110.33 | 366.94 | 0.476 | 5.22 |
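In every row of the water-productivity table, the yield value in CNY ha−1 equals the actual yield in kg ha−1 multiplied by 2.45 (to rounding), so a grain price of 2.45 CNY kg−1 appears to have been assumed. The source does not state the price explicitly; the sketch below treats it as an inferred assumption, and the constant and function names are hypothetical.

```python
# Yield value from grain yield and price. The 2.45 CNY/kg price is inferred
# from the table (value / yield in every row), not stated by the source.

PRICE_CNY_PER_KG = 2.45  # assumption: implied by CNY ha^-1 / kg ha^-1


def yield_value(yield_kg_ha, price_cny_per_kg=PRICE_CNY_PER_KG):
    """Gross grain value in CNY per hectare."""
    return yield_kg_ha * price_cny_per_kg


def implied_price(value_cny_ha, yield_kg_ha):
    """Back out the price used to convert yield (kg/ha) to value (CNY/ha)."""
    return value_cny_ha / yield_kg_ha


if __name__ == "__main__":
    # LM1 row: 4178.76 kg/ha and 10,237.96 CNY/ha.
    print(f"{yield_value(4178.76):,.2f} CNY/ha")
    print(f"implied price: {implied_price(10237.96, 4178.76):.4f} CNY/kg")
```

The same factor also reproduces the reported standard deviations (for example, 125.3 × 2.45 ≈ 306.98), which supports reading the value column as a simple price conversion.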

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, D.; Shi, L.; Li, Y.; Zhang, B.; Yang, G.; Viriri, S. An Enhanced Faster R-CNN for High-Throughput Winter Wheat Spike Monitoring to Improved Yield Prediction and Water Use Efficiency. Agronomy 2025, 15, 2388. https://doi.org/10.3390/agronomy15102388
