Abstract

As the global population continues to increase, projected to reach an estimated 9.7 billion people by 2050, there will be a growing demand for food production and agricultural resources. Transition toward Agriculture 4.0 is expected to enhance agricultural productivity through the integration of advanced technologies, increase resource efficiency, ensure long-term food security by applying more sustainable farming practices, and enhance resilience and climate change adaptation. By integrating technologies such as ground IoT sensing and remote sensing, via both satellite and Unmanned Aerial Vehicles (UAVs), and exploiting data fusion and data analytics, farming can make the transition to a more efficient, productive, and sustainable paradigm. The present work performs a systematic literature review (SLR), identifying the challenges associated with UAV, Satellite, and Ground Sensing in their application in agriculture, comparing them and discussing their complementary use to facilitate Precision Agriculture (PA) and transition to Agriculture 4.0.

1. Introduction

Worldwide food production is a more sensitive issue than ever before. The global population is expected to reach 10 billion by 2050, adding an extra 2.4 billion to the global urban population, and increasing overall demand for food production by 70 percent [1]. At the same time, reduced rural population, degraded farmlands [2], climate change decreasing agricultural productivity [3], and food waste [4] make this requirement quite difficult to meet unless the agricultural production model changes dramatically. To this end, Agriculture 4.0, or the fourth Agricultural Revolution, promises a technological revolution for enhanced agricultural productivity and increased eco-efficiency [5]. Driven by the wider Industry 4.0 paradigm shift, Agriculture 4.0 brings to the agricultural sector a number of mainstream technologies, including sensing infrastructures, big data analytics, Artificial Intelligence, Blockchain, and robotics [6]. It thus engages in a unifying model pertinent to different domains of human activity ([7,8]), recognizing cyberphysical systems and their components as a key element in this transition [9] and targeting an increase in the overall quality of life leading to the Society 5.0 paradigm [10].

Sensing plays an important role in the Agriculture 4.0 paradigm shift. The necessary information from the field may be obtained through different technological options, namely ground-based sensors or remote sensing techniques ([11,12]). The former are strongly associated with technologies such as the Internet of Things (IoT) ([13,14]) and the Industrial Internet of Things (IIoT) [15], as well as Wireless Sensor Networks (WSNs) [16]. The latter may be divided into airborne remote sensing utilizing Unmanned Aerial Vehicles (UAVs) and spaceborne remote sensing exploiting satellite data.

The present work performs a systematic review related to the three aforementioned main technologies with reference to their application in agriculture. The main research questions (RQs) that the authors wish to answer in this work are the following:

- RQ1 What are the challenges of the three technologies that this work deals with?

- RQ2 How can these three technologies be used in a complementary way so as to facilitate the transition to Agriculture 4.0?

The rest of the paper is structured as follows. An overview of the three aforementioned categories of sensing technologies is presented in Section 2. The methodology followed for the systematic review is showcased in Section 3. The outcome of the literature review performed is elaborated in Section 4 detailing the challenges and synergies found for the three technologies under investigation. Section 5 presents a discussion providing the paper’s view on the research questions formulated. Finally, Section 6 presents conclusions and identifies potential future research directions.

2. Overview of Sensing Technologies

2.1. Ground-Based Sensing

Ground or proximal sensors play a key role in Precision Agriculture (PA). Proximal sensing is defined as the use of field sensors to obtain signals from the analyzed feature (e.g., climate, soil, or plant) when the sensor is in contact with or close to it (within a few meters) ([17,18]). Nowadays, there are many types of proximal sensors capable of monitoring multiple parameters related mainly to the plant water, nutritional, and health status [19]. Another classification includes the division into static proximal sensors, which remain stationary in the field, or mobile proximal sensors, mounted on vehicles or robots [20].

It is well known that climate is one of the main aspects determining plant growth and outputs [21]. This occurs because each plant is sensitive to certain growing conditions such as air temperature, relative humidity, wind, soil temperature, and light. Thus, it is crucial for farmers to understand the climatic conditions on their farms [22]. Hence, meteorological information may help the farmer make the most efficient use of natural resources to improve agricultural production. Among the most common proximal sensors are those that can assess climatic parameters. Usually, such sensors are mounted in small weather stations in the field, and a basic weather station usually consists of a temperature and humidity sensor, a wind speed sensor, a sensor that measures precipitation height, and one that can assess solar radiation information.

Another category of ground-based sensors is soil-based sensors. New-generation proximal soil-based sensors are able to monitor real-time physical and chemical soil parameters, such as moisture, temperature, pH, soil nutrients, and pollutants, providing key information to optimize crop cycle management, combat biotic and abiotic stresses, and improve crop yields.

One of the most common uses of such sensing is irrigation management. The crop reference evapotranspiration (ETo) is estimated with the FAO-56 Penman–Monteith equation recommended by the United Nations (UN) Food and Agriculture Organization (FAO) [23], and the full or partial amount of water lost by evapotranspiration (depending on the farm irrigation strategy) is then returned to the plant. Weather observations and forecasts, coupled with physical observations, can help predict the development of the main pests and can be used to schedule control actions to prevent pest development. Thanks to such information, it is possible to change the plant microclimate and influence its habitability for pests, for example, through pruning operations that reduce internal canopy humidity and the probability of infection by plant pathogens. Integrated pest management has been a response to reduce the environmental impact of chemical pesticides [24].
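The irrigation use case above can be illustrated with a minimal sketch of the daily FAO-56 Penman–Monteith calculation. The function names, the input values, and the 70% replacement fraction are assumptions made for illustration and are not taken from the reviewed works; the sketch also treats the reference value as the crop water loss, omitting any crop coefficient.

```python
import math


def saturation_vapour_pressure(temp_c):
    """Saturation vapour pressure (kPa) at air temperature temp_c (deg C)."""
    return 0.6108 * math.exp(17.27 * temp_c / (temp_c + 237.3))


def reference_et0(t_mean_c, rn, g, u2, es, ea, elevation_m):
    """Daily reference evapotranspiration (mm/day), FAO-56 Penman-Monteith form.

    rn, g : net radiation and soil heat flux (MJ m-2 day-1)
    u2    : wind speed at 2 m height (m/s)
    es, ea: saturation and actual vapour pressure (kPa)
    """
    # Slope of the saturation vapour pressure curve (kPa per deg C)
    delta = 4098.0 * saturation_vapour_pressure(t_mean_c) / (t_mean_c + 237.3) ** 2
    # Atmospheric pressure (kPa) from elevation, then psychrometric constant
    pressure = 101.3 * ((293.0 - 0.0065 * elevation_m) / 293.0) ** 5.26
    gamma = 0.000665 * pressure
    numerator = (0.408 * delta * (rn - g)
                 + gamma * 900.0 / (t_mean_c + 273.0) * u2 * (es - ea))
    denominator = delta + gamma * (1.0 + 0.34 * u2)
    return numerator / denominator


# Illustrative inputs for a mild summer day (assumed values, not review data)
et0 = reference_et0(t_mean_c=16.9, rn=13.28, g=0.0, u2=2.078,
                    es=1.997, ea=1.409, elevation_m=100.0)

full_irrigation_mm = et0            # return all water lost
deficit_irrigation_mm = 0.7 * et0   # hypothetical 70% partial replacement
```

In practice the weather-station sensors described earlier (temperature, humidity, wind speed, solar radiation) would supply the inputs, and the chosen irrigation strategy would set the replacement fraction.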

For irrigation scheduling nowadays, there is a tendency to focus on plant-based sensors. Common and reliable sensors capable of continuously estimating plant water status include leaf turgor sensors, devices capable of assessing leaf turgor pressure, a parameter directly related to plant water status; sapflow sensors, capable of providing indications of the plant transpiration flows; trunk dendrometers, capable of monitoring trunk fluctuations over time, dependent on the plant hydration status; and Linear Variable Differential Transformer (LVDT) fruit gauges [25]. The latter are low-cost devices that can continuously and very accurately monitor fruit development over the day, providing information about plant water and nutritional status during the fruit growth stage ([26,27]).

Although plant-based sensors for monitoring plant water status are among the most common, there are other devices that are very useful for crop management. Foliar wetness sensors are devices installed inside the canopy to assess its moisture status, helping to prevent the onset of pathogens and diseases [28]. Optical sensors, working in the visible/near-infrared band, can be useful for estimating the nutritional and health status of the plant ([29,30]). In addition, Light Detection and Ranging (LiDAR) sensors can be used to assess and measure canopy shape and volume [31].

Last-generation sensors allow continuous data acquisition, greatly increasing the amount of available information without increasing the farm workload. Moreover, these sensors can be used to create IoT networks for various applications [32]. IoT focuses primarily on providing many small, interconnected devices, mainly using WSN technology, that can work together with a common purpose [33]. The main aim of a WSN is to offer sensing and monitoring capabilities, using wireless technologies to connect the sensing devices. In WSNs, data collection and transfer occur in four stages: collecting the data, processing the data, packaging the data, and transferring the data [34]. With the help of WSN technologies, farmers can analyze weather conditions, water use, energy use, soil conditions, and plant morpho-physiological parameters collected from their farm, feeding decision-support systems (DSSs) [35]. A WSN has to fulfill requirements such as long range, low energy consumption, and adequate data rate. Several technologies, including Long-Range Wide Area Network (LoRaWAN) [36], Narrow Band IoT (NB-IoT) [37], SigFox [38], and Long-Term Evolution for Machines (LTE-M) [39], are emerging as candidates in the upcoming transition to 5G communications [40].
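The four WSN stages named above (collecting, processing, packaging, and transferring) can be sketched as a small pipeline. This is only an illustration: the function names, the node identifier, the payload fields, and the in-memory list standing in for a radio uplink are all hypothetical and do not correspond to any particular WSN stack.

```python
import json
import statistics


def collect(raw_samples):
    """Stage 1: gather raw readings from a (simulated) soil-moisture probe."""
    return list(raw_samples)


def process(samples):
    """Stage 2: reduce the samples on the node, here to their rounded mean."""
    return round(statistics.mean(samples), 2)


def package(node_id, value):
    """Stage 3: wrap the value in a compact payload to keep airtime low."""
    message = {"node": node_id, "soil_moisture_pct": value}
    return json.dumps(message, separators=(",", ":")).encode("utf-8")


def transfer(payload, uplink):
    """Stage 4: hand the payload to the link; a list stands in for the radio."""
    uplink.append(payload)
    return len(payload)


uplink = []  # hypothetical stand-in for a LoRaWAN/NB-IoT uplink queue
sent_bytes = transfer(package("node-1", process(collect([31.2, 30.8, 31.0]))), uplink)
```

Aggregating on the node before packaging is one way to respect the low-energy and limited data-rate requirements mentioned above, since fewer bytes are transmitted per reporting interval.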

In the context of this paper, and from this point on, we use the term IoT to collectively describe the different technologies associated with ground-based proximal sensing.

2.2. Remote Sensing Techniques

UAVs were first used in agriculture in 1997. The technology was first adopted in Japan and South Korea, where mountainous terrain and relatively small family-owned farms required lower-cost and higher-precision spraying. Historically, the aerial application of pesticides was prohibited by the European Union, inhibiting the growth of UAV application in agriculture. Later use of UAVs was centered mainly around aerial imagery.

Nowadays, UAVs are applied in PA to collect images of high quality, mounting adequate sensors to this end. Sensor choice is carried out carefully according to a number of parameters such as resolution, optical quality, weight, captured images, and price. UAVs may carry multiple types of sensors: RGB (red–green–blue), NIR (near-infrared), IR (infrared), multispectral (MS), and hyperspectral (HS) cameras. RGB and NIR bands are useful for collecting information about vegetation stress and chlorophyll content [41]. Furthermore, LiDAR sensors can also be used in environmental sciences for terrestrial scanning, obtaining information on crop height or canopy size [42].

Each type of sensor can be utilized for monitoring diverse parameters in vegetation. RGB sensors are low in cost and useful for UAV applications of precision farming, such as the creation of orthomosaics, as they can capture images with high resolution. In addition, they are useful in different conditions (sunny and cloudy weather). However, they cannot be used to compute many vegetation indices due to their limited spectral range. MS and HS sensors, compared to RGB, collect data in different spectral channels and acquire images with high quality that are useful for studying a multitude of physical and biological characteristics of plantations [43]. Therefore, MS and HS sensors are the most popular in PA, although they are expensive. Moreover, thermal sensors are used to collect temperature information. Their use proves to be optimal in irrigation-management applications [43]. The targeted application dictates the selection of sensors. For instance, MS sensors are suitable for the detection of diseases, as they offer many bands that are sensitive to disease symptoms. On the other hand, one RGB camera should be enough for data collection related to agricultural mapping.

The most well-known applications of UAVs for PA, as found in the literature, include weed mapping and management [44], irrigation management [45], crop spraying [46], vegetation health monitoring and disease detection [47], and vegetation growth monitoring and yield estimation [43]. UAVs acquire information that can be useful for the measurement of different parameters such as the crop height and the Leaf Area Index (LAI), allowing crop growth control in crops such as cotton, wheat, or sorghum. UAVs can be used to calculate the most common vegetation index used to identify diseased tissue, i.e., the normalized difference vegetation index (NDVI), which is useful for monitoring crop health and detecting diseases at an early stage, as well as mapping the extent of the damage. In addition, a very important field of UAV application is water management, as precision irrigation techniques improve the efficiency of water resource use [43]. UAVs have rapidly evolved into a common tool to increase agricultural output and overall efficiency, decreasing expensive inputs of water, fertilizers, and pesticides. They can also reveal crop damage that cannot be easily detected from the ground and can be applied over large areas [48].
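As a brief illustration of the index mentioned above, NDVI is conventionally computed per pixel as (NIR − Red) / (NIR + Red). The sketch below assumes reflectance values between 0 and 1; the sample pixel values are hypothetical, and returning `None` for an undefined ratio is an illustrative design choice rather than a convention from the reviewed literature.

```python
from typing import Optional


def ndvi(nir: float, red: float) -> Optional[float]:
    """Normalized difference vegetation index for one pixel.

    Returns None when both reflectances are zero, where the ratio is undefined.
    """
    total = nir + red
    if total == 0:
        return None
    return (nir - red) / total


# Hypothetical (NIR, red) reflectance pairs for three pixels
pixels = [(0.50, 0.10), (0.30, 0.20), (0.0, 0.0)]
ndvi_values = [ndvi(nir, red) for nir, red in pixels]
```

Because the index needs a near-infrared band, it cannot be derived from a standard RGB camera alone, which is consistent with the sensor limitations discussed above.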

Finally, spaceborne remote sensing satellites equipped with digital RGB cameras and MS or HS sensors are nowadays among the most widely used technologies in monitoring field variability, which usually includes landscape monitoring, yield, field, soil and crop variability, or variability due to anomalous factors [49]. By measuring the reflectance of the light incident on soil and crops, they are used to assess their characteristics and behavior by acquiring information at different spatial, spectral, radiometric, and temporal resolutions. Similarly to UAV-based remote sensing, information acquired from satellites is usually expressed by indices, among which the NDVI index is one of the most widely used. Each index is calculated from values at visible and non-visible wavelengths: red, green, blue, near-infrared, red edge, and infrared bands are the most frequently used ([12,50]).

Every satellite and sensor is characterized by different spatial and temporal resolutions. Temporal resolution is associated with the satellite itself and can be considered as the time the satellite takes to complete an orbit and revisit the same observation area. Sensors, instead, either have high spatial resolution and tend to have small footprints, or have low spatial resolution and tend to have larger footprints. Although Vanguard 2 and TIROS 1 were launched in 1959 and 1960, respectively, and were used for gathering meteorological information, the history of satellites for agricultural use starts in 1972 with Landsat 1 (1972–1978), a satellite capturing multispectral data for earth-surface image acquisition. After that, a series of Landsat satellites (from 2 to 9) were launched. Used in many parts of the world, these satellites (Landsat-7, -8, and -9 are still active) provide high-quality images in order to classify land uses, monitor crop conditions, and estimate irrigation water requirements, resulting in more affordable imagery than the aerial photography once used to classify land use across large regions. Later, from the end of the 1990s to the 2010s, other satellites were launched, such as IKONOS (1999–2015), which provides 4 m spatial resolution images; Worldview-2 (2009–present) and GeoEye-1 (2008–present), with a ≤2 m resolution; Sentinel-2A (2014–present) and -2B (2015–present), with a 10 to 60 m resolution; and other satellite constellations such as Pleiades-1A (2011–present) and -1B (2012–present), SkySat-1 (2013–present) and -2 (2014–present), or Superview-1 (2016–present), namely small satellites with compact, cheaper, and more replaceable sensors [51]. The primary benefits of this type of technology use are widely documented in the literature and include reduced environmental impact, increased crop yield, enhanced product quality, and input savings ([52,53,54]).
Despite the number and variety of satellite data being made available at various costs and for different purposes, there are certain challenges to using satellite imaging closely related to the purpose of the study.

3. Methodology

The present review was conducted following the guidelines set by the Preferred Reporting Items for the Systematic Reviews and Meta-Analyses (PRISMA 2020) methodology [55]. The PRISMA statement consists of a checklist of 27 items recommended for reporting in systematic literature reviews. This checklist was designed to help reviewers report the purpose of the review, the methodology that was followed, and the outcomes of their research. This section provides answers to the PRISMA 2020 statement item checklist, giving a comprehensive illustration of the methodology followed in the present work.

As the technology of sensors rapidly advances, a review of current literature in PA is needed to put into scope the available technologies, their competition, the cases that are most favorable for their application, and how to best utilize them in unison to maximize agricultural sustainability and profit for farmers. This review was conducted with the mindset of bringing to the fore the challenges of the three technologies that currently play a central role in the future of PA and sustainable development. To the knowledge of the authors, a systematic review with the scope of attempting to synthesize the literature for these three technologies has not been attempted before, testifying to the novelty of the current work. This study answers the two RQs that were mentioned in the introduction. According to the RQs, the following search query was formulated:

TITLE-ABS-KEY (“Precision Agriculture” AND (((UAV OR Satellite) AND “Remote Sensing”) OR IoT))

All results obtained from this query also had to fulfil the following criteria:

- Should be written in English,

- Should be published after 2017,

- Should be published in Q1 and Q2 journals.

All studies that met the criteria proceeded to the first screening phase. During this phase, all six authors split the remaining papers between themselves and skimmed through the abstract and the main text for phrases mentioning challenges or synergies for the three technologies. At this phase, only phrases were sought. All works that survived this stage passed through a second screening phase, during which remaining works were split between two reviewers and carefully read through. The reviewers had to ascertain to what degree each study analysed the challenge or the synergy it mentioned. If the analysis of the challenge or synergy was profound enough, the work was finally admitted for inclusion. The aforementioned query and exclusion criteria were applied in the Scopus database on 6 October 2022.

The explanation for the choice of this query was that the application of these three technologies in PA was sought for sensing purposes; therefore, “remote sensing” was added with an AND for UAVs and satellites to exclude other uses, e.g., spraying. Furthermore, the term “IoT” was regarded as more appropriate when paired with “Precision Agriculture” to provide a representative set of relevant works, and was preferred over terms such as “Wireless Sensor Networks” or “Ground Sensors”.

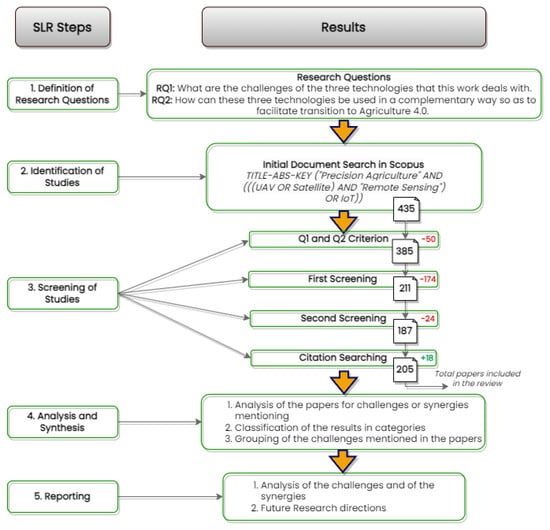

The aforementioned query produced a result of 435 articles. Out of these, 383 were articles published in Q1 and Q2 journals. These 383 articles were divided equally among the six authors for the first screening phase. Articles that had no mention of challenges, or synergy of technologies, were eliminated. The first screening phase eliminated a further 172 works. Therefore, 211 works made it to the second screening phase, during which 24 works were excluded, leading to a total of 187 articles. Looking through these 187 records, it was evident that synergies were underrepresented, as only 6 works mentioned a substantial synergy. Citation searching had to be performed to ensure that the contemporary literature on synergies was adequately represented. Citation searching added a further 18 records, for a total of 205 records to be admitted for inclusion in the final review. Figure 1 presents a flowchart giving an overview of the screening phases and the steps followed for the systematic literature review.

Figure 1.

Systematic Literature Review Flowchart.
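The record counts reported for the screening phases can be cross-checked with simple arithmetic. The sketch below uses only the numbers stated in the text; the variable names are illustrative, and the count of records removed by the journal-quartile criterion is derived here rather than reported.

```python
# Counts as reported in the methodology text
identified = 435              # records returned by the Scopus query
in_q1_q2_journals = 383       # records published in Q1 and Q2 journals
removed_first_screening = 172
removed_second_screening = 24
added_by_citation_search = 18

# Derived values
removed_by_quartile = identified - in_q1_q2_journals                      # not stated in the text
entering_second_screening = in_q1_q2_journals - removed_first_screening   # reported as 211
after_second_screening = entering_second_screening - removed_second_screening  # reported as 187
included_in_review = after_second_screening + added_by_citation_search    # reported as 205

print(removed_by_quartile, entering_second_screening,
      after_second_screening, included_in_review)
```

The derived totals agree with the figures given for each phase, so the flow from 435 identified records to 205 included records is internally consistent.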

During the second screening phase, all challenges found by the authors were added to a spreadsheet. Subsequently, these challenges were grouped together. These groups can be found in Table 1 and Table 2 for UAVs, Table 3 and Table 4 for satellites, and Table 5 and Table 6 for IoT. Papers that mentioned synergies were sparse among the body of results. These works are described separately in Section 4.4.

Table 1.

Unmanned Aerial Vehicle (UAV) Technological Challenges for Applications in Agriculture.

Table 2.

UAV Peripheral Challenges for Applications in Agriculture.

Table 3.

Satellite Technological Challenges for Applications in Agriculture.

Table 4.

Satellite Peripheral Challenges for Applications in Agriculture.

Table 5.

Internet of Things (IoT) Technological Challenges for Applications in Agriculture.

Table 6.

IoT Peripheral Challenges for Applications in Agriculture.

To define what a challenge is and what a synergy is, we refer to the following. A challenge is any type of impediment or problem that hinders the use of a particular technology in PA. For example, for UAVs, a challenge could be their short flight times, for satellites their lower resolution, and for ground sensors their inflexibility. A synergy is an idea that fuses together traits from two or more technologies to produce an effect that improves the use of each and augments each technology’s value overall. The high-resolution images acquired by UAVs, combined with the wide coverage and high spectral resolution of satellite images, constitute a good example of a synergy. Both challenges and synergies were sought in all examined papers, with the prerequisite that they were sufficiently analyzed in the review text. Therefore, it was much more probable to encounter UAV challenges in a UAV-centric paper than in an IoT-centric or a satellite-centric paper.

To reduce bias, the two first authors double-checked the findings of each of the six authors during the second phase of deciding paper eligibility. Any paper that mentioned a well-analysed and evidence-supported phrase that met the challenge or synergy criteria was eligible for synthesis. During the second screening, a further 24 papers were excluded. After this step, the number of records was reduced to 187. Out of those papers, six of them discussed a synergy of the technologies. Citation searching was performed to identify more records mentioning synergies, which added another 18 records, for a total of 205.

Synthesis of the results required that the phrases extracted from each paper described, in their core, the same basic challenge in order to be grouped with similar phrases from other papers. For example, papers mentioning UAV instability when carrying heavy equipment and UAV reduction in flight time due to heavy payloads were all grouped under the payload-limitation challenge because they describe the wider challenge that UAVs face when there is need to equip them with heavy payloads. As each challenge is further elaborated upon in the main text, the particular details of each phrase are not lost. The grouping helps to provide a summary of the challenge in a form that is quick and easy on the eye and captures how many works have mentioned it in the past.
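The grouping step described above can be sketched as a small aggregation. The paper identifiers, the third phrase, and the group labels below are hypothetical placeholders; in the review the assignment of phrases to groups was made by the reviewers in a spreadsheet, so the sketch only shows how such assignments could be tallied.

```python
from collections import defaultdict

# (paper id, extracted phrase, challenge group assigned by a reviewer)
# All entries are hypothetical examples, not data from the review.
records = [
    ("paper_A", "UAV instability when carrying heavy equipment", "payload limitation"),
    ("paper_B", "reduced flight time due to heavy payloads", "payload limitation"),
    ("paper_B", "very large image volumes to process", "data volume"),
    ("paper_C", "reduced flight time due to heavy payloads", "payload limitation"),
]

papers_by_group = defaultdict(set)    # which works mention each challenge
phrases_by_group = defaultdict(list)  # original wording is kept for the main text

for paper, phrase, group in records:
    papers_by_group[group].add(paper)
    phrases_by_group[group].append(phrase)

# Number of distinct works mentioning each grouped challenge
summary = {group: len(papers) for group, papers in papers_by_group.items()}
```

Using a set per group means a paper that raises the same challenge in several phrases is counted once, while the retained phrases preserve the details of each mention.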

4. Results

This section presents the results for the synthesis of the challenges for each technology in three subsections and the results for synergies in the fourth subsection.

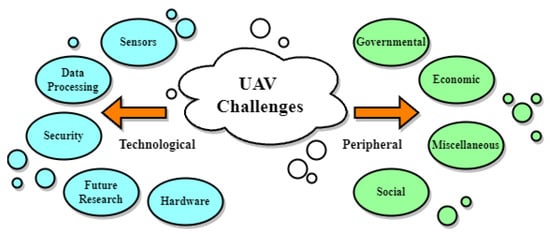

4.1. UAV Challenges

This subsection provides the results of the categorization of challenges for UAV technology. Table 2 presents the types of challenges for implementing UAV technology in agriculture that are peripherally related to the use of UAVs. The categories that were observed in this case were economic, social, and governmental challenges, as well as one miscellaneous challenge. Table 1 presents the types of challenges for implementing UAV technology in agriculture that are native to the UAV technology and hence arise from the UAV system itself. The categories that were observed in this case were challenges concerning the sensors, the system security, the data processing, and the system hardware. Moreover, future research challenges for implementing UAV technology in agriculture are also investigated and included. Figure 2 presents these groups of challenges found in the literature that was examined.

Figure 2.

UAV Challenges Mentioned in the Literature.

Most commercially available UAVs come out of the box with cheap RGB sensors that lack the precision and spectral range for PA applications (for example, for calculating NDVI) [58]. It is necessary to equip UAVs with MS sensors, increasing the overall cost, and even then, cheaper MS sensors face saturation issues [59], especially since there are no standardized methods for their calibration [72]. The calibration of UAV sensors presents a challenge because camera position on a UAV might be slightly different for each flight [59], and differences in the orientation of the sensor result in different calibrations each time. This is especially true with HS sensors, as the slightest tilt has a negative impact on the sensor calibration [80]. With reference to HS sensors, although they have become more lightweight and cheaper, they are still a relatively expensive investment, and the processing of their output data remains extremely complex. Therefore, UAVs present a less cost-effective option for high spectral resolution when compared to satellites [129]. According to [71], another challenge for UAVs is their dependence on payload. Equipping the UAV with multiple sensors is not recommended, as it reduces flight time and affects its balance mid-flight ([81,86]). Therefore, careful choice must be made about which sensors to use and equip the platform with during each flight, resulting in the need for multiple flights when measurements from multiple sensors are required.

All data generated by UAV flights need to be processed to be useful, and several issues arise during this stage. As sensors become better and spatial resolution increases, the volume of acquired data increases, and UAV use in PA is faced with extreme data bloat ([81,110,111]). Moreover, as mentioned earlier, data acquisition is not standardized, adding to the already considerable computing complexity of UAV images ([57,86]). On top of that, the UAV itself can do little to share the burden of data processing as its computing capabilities are very limited ([81,111]). When these ultra-high-resolution data are used for machine learning (ML) training, the training can take a long time to complete ([72,106]), even though the final results are more trustworthy and useful than those obtained by training the ML algorithms with satellite-acquired images. Higher resolution is also not a panacea when it is employed for disease classification. The currently developed plant disease classification methods have maximum resolution thresholds, which, when surpassed, result in their algorithms underperforming. Nevertheless, the highest resolutions can be capitalized on in object recognition algorithms that perform disease analysis at the individual plant level [117]. Another issue is that if these data are to be given to some company or organization for processing, issues of data governance arise, as well as of the security of sensitive data ([64,72,82]).

Furthermore, as the usage of UAVs in agriculture is still in an early stage [123], more research is needed to identify the costs and benefits of the implementation of this technology. For example, [125] recommended conducting more studies to validate the results of low-cost sensors in different conditions. Moreover, according to [71,82], there is a need for the development of fully autonomous UAVs. Ref. [79] also discussed the implementation of multiple coordinated UAVs in an individual field. Finally, the path optimization of UAVs, in order to monitor a whole field with adequate accuracy, is mentioned in [71,75].

UAVs’ application challenges extend beyond the purely technical aspects. Using a UAV for PA requires a large upfront investment from farmers ([118,127]), and even then, continuous use can be expensive as well [86]. This high initial cost is a considerable risk, as remote sensing is not a standardized procedure for every type of crop and in every environment, and its performance can vary widely depending on these parameters [86]. The need for skills and a proper way of handling UAVs ([58,66,132]) is a further impeding factor. Knowledge of repair and maintenance is also a requirement [64]. Moreover, most governments require special licences for UAV use, and they limit certain aspects of flying, such as flying height ([71,93]), and flying over inhabited areas and beyond visual line of sight [88].

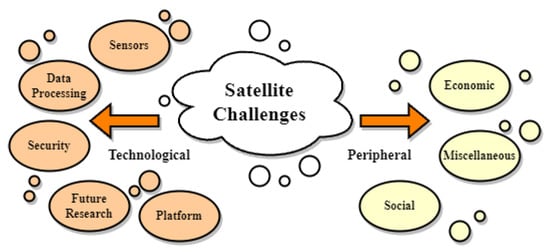

4.2. Satellite Challenges

The present subsection provides the results of the categorization of challenges for satellites. Table 4 presents the type of challenges for using satellites in agriculture, which are not oriented to the technology itself. The categories that were observed in this case were economic and social. Table 3 presents the challenges native to satellite technology. Of this type of challenges, the main categories that were identified were related to sensors, security, data processing, and the platform. In addition, some future research challenges are also mentioned and analyzed. Figure 3 presents these groups of challenges found in the literature that was examined.

Figure 3.

Satellite Challenges Mentioned in the Literature.

An important drawback of satellite imagery is its lower spatial resolution compared to airborne images ([101,135]). Satellite images of medium or low spatial resolution are freely available but do not provide adequate data for a handful of different PA uses, such as disease monitoring, soil spectral measurement, wild weed identification, or applications that require individual plant identification through ML ([136,137,138]). Very-high-resolution (VHR) images from satellites are also available but incur a significant cost. Even then, VHR satellites have trouble in applications that require object identification in agricultural environments, as they provide less information than their airborne counterparts. Due to this lower spatial resolution, satellites are not optimal for heterogeneous environments, for example, for the size and distribution of most of the European olive grove systems or vineyards ([142,159]).
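The effect of spatial resolution can be made concrete by counting how many pixels cover a given plot. The sketch below assumes a hypothetical 1 ha (10,000 m²) plot and square pixels, and uses the nominal pixel sizes quoted earlier for the satellites discussed (2 m, 4 m, 10 m, and 60 m); the function name is illustrative.

```python
def pixels_per_plot(plot_area_m2, pixel_size_m):
    """Approximate number of square pixels needed to cover a plot."""
    return plot_area_m2 / (pixel_size_m ** 2)


plot_area_m2 = 10_000  # hypothetical 1 ha plot

# Nominal pixel sizes (m) quoted in the overview of satellite sensors
pixel_counts = {size: pixels_per_plot(plot_area_m2, size) for size in (2, 4, 10, 60)}

for size, count in pixel_counts.items():
    print(f"{size:>2} m pixels: {count:8.1f} pixels per hectare")
```

At 10 m the whole plot is described by about a hundred values, and at 60 m by fewer than three, which illustrates why applications that need individual plant identification are difficult with freely available medium- or low-resolution imagery.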

Similarly to UAVs, satellites generate large volumes of image data. Their processing is a time-consuming and computationally intensive endeavour [144]. Processing these data under time constraints may affect the quality of the final result [148]. Storing and managing the sheer volumes of imagery is a considerable challenge on its own. Furthermore, satellite data, because of their low spatial and temporal resolution, are not well suited to AI calibration purposes or the training of ML algorithms [149]. As with all big data applications, satellite imagery can also be leaked or be used by third parties for unknown purposes, so care must be taken ([72,142]).

Another drawback of satellites is their susceptibility to cloudy weather. Depending on cloud coverage, a satellite may not be able to acquire images at all [151]. The consensus in the literature is that cloud coverage reduces satellite temporal resolution ([83,149]). Disease monitoring in particular is highly dependent on high temporal resolution; thus, satellite use for this particular application is inappropriate [158]. Satellites as a platform have inherently low temporal resolution, as they cannot be used for imaging at will but only at their particular revisit time; if the cloud coverage is dense at that moment, the chance for image acquisition may be lost. While satellites bearing active sensors are a solution to cloud coverage, their spatial resolution is even lower, and hence they do not solve the disease-monitoring conundrum ([57,64]). The low temporal resolution of satellites is a problem not only for disease monitoring but for monitoring in general. Satellites are inflexible platforms without the ability to interchange sensors at will, and waiting for a satellite with a suitable sensor for the required measurement is another cause of low temporal resolution. When the highest spatial resolution is needed from VHR satellites, they may not be there to provide it ([64,83]), and only a very small number of satellites are equipped with both MS and HS sensors [129].

With reference to future research challenges, there is a need to sufficiently integrate and connect satellites with different technologies and devices, as well as to enhance their level of cooperation with one another [142]. According to [121], more research is also needed on transforming satellite image classification maps into application maps. Additionally, as the complexity of using a satellite platform is a factor that hinders its adoption, the overall technology needs to become more approachable for farmers and usable as a DSS [155].

Concerning the peripheral challenges of satellite technology for applications in agriculture, the cost of acquiring useful information from satellite imagery incorporates the costs of taking the images and processing them. Specialized companies gather the imagery from specific satellites and process it themselves before delivering the final product to the customer. Satellite data have been used for PA for many years, longer than UAVs, and the whole process is more streamlined. Still, the cost of the man-hours involved is high, particularly when data processing must be undertaken quickly [57]. All in all, access for everyone is still hindered by the scientific and technological knowledge required to deploy an effective method that brings true and valuable results to farmers [131].

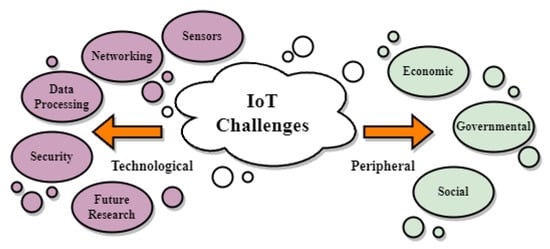

4.3. IoT Challenges

The present subsection provides the outcomes of the categorization of challenges for IoT technology. Table 6 presents the type of challenges for implementing IoT in agriculture that are not native to the technology itself. The categories that were identified from this case were mainly economic, social, and governmental. Table 5 presents the IoT-technology-oriented challenges. From this group of challenges, the main categories that were observed were related to sensors, security, networking, data processing, and future research challenges. Figure 4 presents these groups of challenges found in the literature that was examined.

Figure 4. IoT Challenges Mentioned in the Literature.

Each sensor in an IoT context comprises four parts: a sensing part, a computing part, a transmitting part, and a battery. The batteries are usually not rechargeable or replaceable. Therefore, there is a pressing need for saving energy ([166,167,169,170]). Most of a sensor’s energy is spent transmitting sensed data back to the main station. Energy saving can be achieved by operating with a low duty cycle, minimizing delays, or optimizing routing. Another challenge for IoT is the lack of reliable measurements from low-cost ground sensors [175]. For example, the measurement of soil moisture with ground sensors is challenging because the capacitive soil moisture sensors operate at low frequencies and are sensitive to the soil texture and salinity level [193]. The placement of sensors is another important challenge of incorporating IoT. Sensors have to be placed strategically to ensure maximum coverage and well-established communication between them ([91,175,192]). The difficulty of this task is proportional to the size of the field. As size increases, the placement of sensors becomes more difficult, and the cost of implementing a critical mass of sensors increases as well. This is known as the scalability problem ([168,172]). Harsh environmental conditions are another factor impeding ground sensors ([166,184]). Meteorological variables such as snow, fog, and solar radiance have an impact on both the sensor network and the planting [185]. The electrodes of low-cost sensors deteriorate quite quickly, and it is important to protect them from adverse conditions [175]. Underground sensors constitute a solution to this problem, as meteorological phenomena do not affect them, and they do not impact the above-ground activities of machinery or animals, but at the cost of limited range due to interference by soil elements [191]. Another challenge for IoT is the need for sensor specialization for each crop or disease in need of monitoring. Sensors should be tailored to the needs of wildly different sectors in agriculture such as fruits, arable crops, or trees ([188,189,190]). Furthermore, all these sensors create electronic waste when they finally expire, and some of them contain harmful chemicals that are toxic to soils, crops, and stored grains [167].
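The effect of a low duty cycle on a non-rechargeable sensor node can be made concrete with a simple estimate. The following is a minimal sketch under stated assumptions: the node draws a constant current while active (sensing and transmitting) and a much smaller constant current while asleep, and battery self-discharge and temperature effects are ignored. All numerical values (battery capacity and current draws) are hypothetical placeholders rather than figures from the reviewed literature.

```python
def average_current_ma(duty_cycle: float, active_ma: float, sleep_ma: float) -> float:
    """Time-weighted average current for a node that is active a fraction
    `duty_cycle` of the time and asleep otherwise."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be in [0, 1]")
    return duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma


def battery_life_days(capacity_mah: float, duty_cycle: float,
                      active_ma: float, sleep_ma: float) -> float:
    """Idealised battery lifetime in days (no self-discharge, no ageing)."""
    hours = capacity_mah / average_current_ma(duty_cycle, active_ma, sleep_ma)
    return hours / 24.0


if __name__ == "__main__":
    # Hypothetical node: 2400 mAh battery, 40 mA when active, 0.01 mA asleep.
    for duty in (0.10, 0.01, 0.001):
        print(duty, round(battery_life_days(2400, duty, 40.0, 0.01), 1))
```

Because the active current dominates the average, lifetime in this idealised model grows roughly in inverse proportion to the duty cycle until the sleep current becomes the limiting term.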

Moving on, the architecture of the network is an important aspect of designing an IoT solution. Integrating data sources and distributed sensors is complicated [204]. IoT system design in PA is based on heterogeneous network topologies that lead to complex communications. IoT devices have to communicate and interact seamlessly across different platforms and infrastructures [168]. This is worsened by non-standardized data-communication protocols [166] and differing IoT node synchronization [177], as communication protocols differ from crop to crop [175]. Another important aspect is the network layer itself. WiFi communications are not ideal for agricultural applications, which usually face extreme weather conditions and have insufficient power infrastructure, particularly when devices are spread far across a field and run on non-rechargeable batteries [167]. On the other hand, cellular communication suffers in agriculture, as the operational area is far from the antennae of cellular networks, and thus 3G/4G connectivity is very poor. Moreover, 4G cannot support ultra-low latency with high connectivity because it provides IP-based packet-switched connectivity [171]. LoRaWAN has arisen as a replacement for WiFi and cellular networks in agricultural IoT. While it has low bandwidth, it boasts very low energy consumption and high connectivity across vast distances [175]. One drawback is that its radio signals are affected by vegetation foliage ([175,185,186]). Another is its high latency [166], although this is a problem in agricultural applications only when a real-time approach is taken to field monitoring.
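The trade-off between LoRaWAN's long range and its low bandwidth and high latency can be illustrated by estimating how long a single packet occupies the radio channel. The sketch below follows the time-on-air expression given in Semtech's LoRa modem documentation, as best understood here; it is not taken from the reviewed works, and the default parameter values (125 kHz bandwidth, coding rate 4/5, 8 preamble symbols) and the 10-byte payload are illustrative assumptions.

```python
import math


def lora_time_on_air_ms(payload_bytes: int, sf: int = 7, bw_hz: int = 125_000,
                        coding_rate: int = 1, preamble_symbols: int = 8,
                        explicit_header: bool = True, crc: bool = True) -> float:
    """Approximate LoRa packet time on air in milliseconds.

    coding_rate: 1..4, meaning 4/5 .. 4/8.
    Low-data-rate optimisation is assumed to be enabled when the symbol
    time exceeds 16 ms (e.g. SF11 and SF12 at 125 kHz).
    """
    t_sym_ms = (2 ** sf) / bw_hz * 1000.0
    de = 1 if t_sym_ms > 16.0 else 0          # low-data-rate optimisation flag
    ih = 0 if explicit_header else 1          # implicit-header flag
    numerator = 8 * payload_bytes - 4 * sf + 28 + 16 * int(crc) - 20 * ih
    denominator = 4 * (sf - 2 * de)
    payload_symbols = 8 + max(math.ceil(numerator / denominator) * (coding_rate + 4), 0)
    t_preamble_ms = (preamble_symbols + 4.25) * t_sym_ms
    return t_preamble_ms + payload_symbols * t_sym_ms


if __name__ == "__main__":
    # Hypothetical 10-byte sensor reading at each spreading factor.
    for sf in range(7, 13):
        print(sf, round(lora_time_on_air_ms(10, sf=sf), 1))
```

Higher spreading factors extend range but lengthen each transmission considerably, which is consistent with the observation that LoRaWAN suits periodic field measurements better than real-time monitoring.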

From the point of view of the data processing challenge, reference is made to data bloat in [189]. Existing cloud-based storage does not provide useful solutions to the problem of manipulating the different data patterns produced by the IoT [168]. The analysis of these data is another challenge. According to [188], it has to be simplified so that it can be easily understood by most farmers. Moreover, computational efficiency needs to increase in the agricultural domain, and it remains a challenge to come up with efficient alternatives that provide sufficient resources to IoT in rural environments [206]. IoT networks that use WiFi or cellular connectivity for their network infrastructure are more vulnerable to hacking attacks ([192,197]). A malicious party can infiltrate the network and cause a wide range of issues. Attacks can target various aspects such as the network itself, its nodes, the data it transmits, and the code by which nodes operate. The purpose of these attacks could be brute attempts to disrupt the network and halt its operation, or more subtle malicious attacks, such as data theft and faulty operation of sensors that lead to miscalculations and false measurements ([199,200,203]). Authentication, encryption, and securely generated passwords are required to prevent security compromise [168]. LoRaWAN, as it does not use the Internet and already has authentication and encryption systems built in, has a higher degree of security. Furthermore, the data governance of IoT is another issue. Whoever processes the data extracted by the IoT has full access to sensitive information, and care must be taken about whom farmers entrust these data to [200].
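As a small illustration of what message authentication with securely generated keys can look like on a sensor link, the sketch below signs each reading with an HMAC so that the base station can detect tampering or readings injected by a party that does not hold the key. It uses only Python's standard library; the function names, the message layout, and the example reading are hypothetical. It is a sketch rather than a complete security design: it provides integrity and authenticity only, with no encryption, key distribution, or replay protection.

```python
import hashlib
import hmac
import json
import secrets


def generate_device_key(n_bytes: int = 32) -> bytes:
    """Create a random per-device secret using a cryptographically secure source."""
    return secrets.token_bytes(n_bytes)


def sign_reading(key: bytes, reading: dict) -> dict:
    """Serialise a reading deterministically and attach an HMAC-SHA256 tag."""
    payload = json.dumps(reading, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, payload.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify_reading(key: bytes, message: dict) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = hmac.new(key, message["payload"].encode("utf-8"),
                        hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


if __name__ == "__main__":
    key = generate_device_key()
    # Hypothetical soil-moisture reading from a hypothetical node.
    msg = sign_reading(key, {"node": "n17", "soil_moisture": 0.23})
    print(verify_reading(key, msg))
```

A falsified measurement of the kind described above would fail verification unless the attacker had also obtained the device key, which is why key generation and storage matter as much as the algorithm itself.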

Future research on agricultural IoT systems may focus on many different aspects. An important one is power management. Sensor batteries that recharge themselves through solar power or other means would greatly enhance the capabilities of agricultural IoT ([166,190,201]). Real-time crop monitoring is another important capability of sensor networks that is constrained by limited power resources and merits further research [204]. Ground robots bearing moving sensors need improved guidance systems to achieve smooth navigation through a farming environment [212]. The technology’s approachability for farmers is another issue in need of improvement. The ergonomic design of sensors, so that they can be better integrated into the farming environment, and the facilitation of IoT device implementation in a “plug-and-play” manner are desirable improvements. Finally, there are numerous voices claiming that the 5G network could revolutionize the agricultural sector, but concerns have been raised regarding the absolute validity of those claims. To start with, there will be high infrastructure costs, because additional stations are needed due to 5G’s shorter range. The end users’ device cost would be higher, as would service tariffs [171]. Moreover, there are doubts that 5G is an environmentally friendly technology, as widespread deployment would translate to higher use of computing devices and an increase in the energy demand to power them, which would exacerbate climate change. It is also worth noting that certain reports have raised concerns about 5G’s high radiation [167].

When it comes to IoT peripheral challenges, the one most commonly mentioned among the papers analysed was the high cost of implementation. According to [64], the measurement of ground-based parameters can be costly and time-consuming, as well as requiring large amounts of human resources. Furthermore, Ref. [166] described the business issue of implementing an IoT-based system in three categories: the setup cost, the running cost, and the upgrading cost. All of these categories can incur high costs for farmers, making the adoption of IoT in agriculture a dilemma. Besides economic challenges, there is also a lack of theoretical and practical knowledge about such systems [166], as well as farmers’ lack of awareness of new technologies that could be implemented on their farms [192], or even their hesitance to use them [165]. Finally, as these technologies are still at an early stage of development, there is also a lack of policies and regulations [218], as well as ownership and processing issues related to the generated data [166].

4.4. Synergies Mentioned in the Literature

Works that present substantial synergies between technologies are sparse. This subsection summarises the review findings. While data fusion between different sensors for agriculture has been explored in previous works [225,226], the particularities of fusing or combining sensory output originating from two or more sources as diverse as IoT, UAVs, and satellites have not been adequately addressed for agricultural purposes. Alvarez-Vanhard et al. [225] give a thorough review of the literature from 2021 and before on the synergistic use of satellites and UAVs for agriculture, but they do not mention combinations of IoT with either of the two aforementioned technologies. The current work attempts to take the reasoning of [225] one step further by focusing on the particularities of each paper reviewed and dissecting the exact reasons that make the use of a synergy desirable. These reasons can be traced back to the challenges mentioned in Sections 4.1–4.3, as the complementary use of two or more technologies attempts to mitigate the limitations and challenges each technology faces on its own. Table 7 and Table 8 present the logic permeating each type of synergy encountered during the literature review by contrasting its complementary technological traits. Table 9, Table 10 and Table 11 describe the use cases of synergies performed in relevant works and characterize them according to the particular synergy types as defined in Table 7 and Table 8.

Table 7. UAV and Satellite Synergy Types.

Table 8. UAV/Satellite and IoT Synergy Types.

Table 9. Synergies Part 1.

Table 10. Synergies Part 2.

Table 11. Synergies Part 3.

Among UAV-Satellite papers, Type 3 and Type 5 synergies are the most common. Interestingly, only two papers have utilized the flexibility of the UAV, and only two papers have utilized its enhanced capacity to create 3D point clouds, while no work combining UAV and satellite requires the historical records of past satellite overpasses.

Among IoT-UAV/Satellite works, Type 8 was the most common, utilizing the proximity of IoT measurements to clear up uncertainties in UAV/satellite remote sensing. Type 10 and Type 12 are the next most commonly mentioned synergies. Most Type 10 synergies utilise meteorological data in ML models to complement remote sensing predictions, while Type 12’s use of historical satellite data was quite common in contrast with UAV–Satellite papers. Type 9 and Type 11 are only encountered once, since real-time ground sensor monitoring is still in its infancy as a practice, and attempts to perform orthorectification through ground measurements are also scarce. Synergy Type 7 constitutes an exception, as it is commonly encountered across a very large number of scientific papers, which were not reviewed, as they were considered outside the scope of the current work.

While synergistic use can mitigate certain liabilities and provide solutions, some challenges are shared by two of the technologies examined or even by all three. Such challenges therefore continue to exist when a synergy is attempted. Data privacy is a prominent concern for all three technologies and cannot be mitigated by combining them. Another shared challenge is the large data volumes, which become more cumbersome when data from different sources are fused. This is coupled with an increase in processing time and costs. In fact, the fusion of data of different types (sensor data and images of different resolutions) creates further obstacles to integrating them efficiently. A further challenge of the complementary use of the aforementioned technologies is that farmers need to become acquainted with not just one but all the technologies that are being combined. Moreover, it should be mentioned that the lack of current research regarding actual synergies between the three technologies is both an important impediment to a holistic paradigm involving all three and a challenge for future research and pilot projects. In fact, in our literature review, out of the 205 reviewed works, only 24 papers provided synergy use cases. Finally, the lack of standardization poses further challenges with reference to their complementary use. None of the use cases found by the authors follows any particular standardization procedure, which could be beneficial for the future of synergistic use between technologies.

5. Discussion

The value of satellite imagery in PA greatly depends on the application it is required for, as well as the particularities of the crops and the field. Even VHR satellites such as WorldView-3 are not suitable for individual plant analysis. While they provide a 30 cm per pixel spatial resolution on RGB imagery, on MS imagery, their spatial resolution is about 1.24 m per pixel. Thus, while their RGB imagery resolution is good enough for distinguishing canopies, the MS spatial resolution of the same satellite is not enough to provide adequate data for further analysis of these canopies. Evidently, satellite use is limited in heterogeneous farms, meaning farms that provide imagery that combines many different textures, such as soil, plants, wild weeds, or farming structures. In contrast, satellites are more useful for crops that cover an area homogeneously, such as cereal crops. But even in these types of plantations, applications such as wild-weed identification and soil spectral analysis benefit the most from the highest possible spatial resolution. Therefore, UAVs have an edge in applications of this type or in heterogeneous farms. Very-high-definition images from UAVs, on the other hand, may introduce data redundancy, which lowers the performance of the algorithms used to process the images [117]. Upscaling to a resolution scale that ensures optimal performance of the algorithm used may be necessary, which is another impediment to the standardization of UAV procedures for PA. It is therefore necessary to know the optimal resolution scale for each use case, as different combinations of algorithms and crops require different optimal resolutions. An added benefit is that lower resolution requirements allow the UAVs to fly at a higher altitude, thus also reducing battery expenditure and the time needed for the image-gathering excursion [116].
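The resolution figures quoted above, and the link between UAV flight altitude and image resolution, can be made concrete with the standard ground sampling distance (GSD) relation for a nadir-pointing camera. The sketch below is illustrative only: the 3 m canopy diameter and the camera parameters (13.2 mm sensor width, 8.8 mm focal length, 5472-pixel image width) are hypothetical values chosen for the example, not specifications taken from the reviewed works.

```python
def ground_sampling_distance_cm(altitude_m: float, sensor_width_mm: float,
                                focal_length_mm: float, image_width_px: int) -> float:
    """GSD in cm per pixel for a nadir image: (sensor width * altitude) /
    (focal length * image width), converted from metres to centimetres."""
    return (sensor_width_mm * altitude_m * 100.0) / (focal_length_mm * image_width_px)


def pixels_across(object_size_m: float, gsd_m: float) -> float:
    """Number of pixels spanning an object of the given size."""
    return object_size_m / gsd_m


if __name__ == "__main__":
    canopy_m = 3.0  # hypothetical tree-canopy diameter
    # Satellite resolutions quoted in the text: 0.30 m (RGB) and 1.24 m (MS).
    print(pixels_across(canopy_m, 0.30), pixels_across(canopy_m, 1.24))
    # Hypothetical UAV camera flown at two altitudes.
    for altitude in (50.0, 100.0):
        gsd_cm = ground_sampling_distance_cm(altitude, 13.2, 8.8, 5472)
        print(altitude, round(gsd_cm, 2), round(pixels_across(canopy_m, gsd_cm / 100.0)))
```

Since GSD scales linearly with altitude, doubling the flight height halves the number of pixels across each canopy while covering roughly four times the ground area per image, which is the mechanism behind the battery and flight-time savings mentioned above.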

Usable imagery is less likely to be obtained during rainy weather with a satellite than with a UAV system ([57,74]), as cloud coverage greatly affects satellite image quality. It might even render satellite images unusable if the coverage is too dense. Diseases can evolve rapidly, requiring precise and strict monitoring to ensure results, and skipping a measurement because of cloud coverage might throw off the project completely. By contrast, UAVs, being able to fly at will and being unaffected by cloud coverage, have a much higher capability for frequent imaging. A satellite’s lower resolution does come with certain benefits. Wide coverage is a satellite’s inherent advantage, and, as there is no piloting involved, the homogeneity of data is guaranteed. In contrast, UAVs have to be piloted carefully, they have to be stable enough to follow their required trajectory, and they have to maintain the same height over crops. Covering the same area as a satellite requires more images, more time, an expert pilot, and more processing of the images to create the final mosaics. It is therefore evident that UAVs are optimal for small to medium farms, where they are not required to cover vast areas for image acquisition. Large farms are better served by satellite imagery, supposing that they are not very heterogeneous.

UAVs, being highly modular platforms that come in all shapes and sizes while also being able to carry many different sensors, have abundant flexibility but at the cost of standardization. Sensor calibration is a process that is dependent on the equipment; therefore, each combination of UAV model and sensor requires its own calibration procedure. In contrast, satellites do not face such issues, as their calibration is a standardized procedure that has been taken care of by the platform operators. Therefore, the risk of obtaining unusable data due to calibration faults is higher in UAVs. Moreover, due to this lack of standardization, UAV images have a slightly higher processing complexity than satellite imagery. Still, by having a much higher temporal resolution and by being devices that can become part of a wider IoT network, UAVs can provide their data faster for processing and can even utilize edge computing if there is available on-field infrastructure.

Nevertheless, UAVs require a large upfront investment, which includes the cost of buying the vehicle as well as the cost of buying its sensory equipment. Following this considerable investment, the cost of using and maintaining the platform includes recharging costs, the cost of man-hours for processing the images, the cost of man-hours for piloting the platform, and any costs for repairs and spare parts. Satellites, on the other hand, do not require a large upfront investment, but high spatial resolution satellite images can only be acquired by paying a hefty price. A further cost is added due to the man-hours and skills required to process the satellite data. Adding to the challenge of buying and setting up a UAV platform for remote sensing are the bureaucratic difficulties of acquiring a licence to fly it. In contrast, satellite data involve almost no bureaucratic hurdles for their acquisition.

The third PA technology analysed in this review is IoT. This may incorporate any type of sensor, from soil sensors that measure soil parameters, such as moisture, temperature, and the concentration of elements such as carbon and nitrogen, to sensors that measure water levels in crops for irrigation purposes, to MS sensors that measure vegetation indices. An IoT device can have any combination of sensors and can be adapted to the particularities of the farm under examination. IoT tends to be more expensive than UAVs or satellite data, but provides the most noise-free data out of the three technologies. Moreover, its capacity to capitalize on edge computing puts it at the forefront of scientific research, as this allows real-time monitoring, which is an important future prospect for PA. Out of the three technologies analyzed, IoT is the only one that has the capacity for continuous monitoring. Measurements from ground sensors are used as training and evaluation sets for ML algorithms for application in remote sensing, because their data are the most trustworthy source of measurement regarding soil moisture, concentrations of various elements in the soil, plant water status, and similar quantities that can be measured through physical contact or proximal sensing. The calculation of vegetation indices through ground remote sensors, on the other hand, is a more challenging endeavor. Ground remote sensing requires many sensors to be placed strategically across the field for maximum coverage, and even then, only homogeneous crop types can be measured safely. Image recognition of canopies or rows of vines in heterogeneous environments is very difficult or even impossible to achieve when using ground remote sensors because of the angle the images are taken from.

IoT systems are susceptible to attacks by malicious third parties, as they always require a network infrastructure to operate. On the other hand, UAVs usually operate without becoming a part of a network. Thus, they are less susceptible to hacking. Satellites, as they are not operated by the farmer, cannot become victims of hacking attacks. Nevertheless, satellite data from a farmer’s field can still be used for nefarious purposes, similar to data extracted from IoT and UAVs.

Satellites and UAVs are reusable assets, in contrast to cheap ground sensors. The environmental waste from an IoT system can be an important cause for concern. In particular, as the dimensions of the field under measurement become larger, so does the number of sensors required to cover it. It can be safely assumed that, cost-wise and environmentally, IoT for large fields is not an optimal choice.

Complementary use relies on capitalizing on the strengths of one technology while complementing its weaknesses with another technology. UAVs can be used to enhance the spatial resolution of satellite MS data on certain parts of a field in order to examine that part more carefully. UAVs are also sources of excellent training data for ML algorithms that will then be applied to satellite imagery. Similarly, IoT systems are great producers of training data, especially for the purposes of irrigation and the measurement of soil parameters. LiDAR and thermal sensors on the ground can be used to enhance remotely sensed imagery. UAVs and IoT can therefore provide precision when satellite information is not precise enough for the needed application.
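One practical step when UAV data are used to train or validate models that are then applied to satellite imagery is bringing the fine UAV raster onto the coarser satellite pixel grid. The sketch below shows the simplest form of this aggregation, a block mean over non-overlapping windows, in plain Python. It assumes the two grids are already co-registered and that the satellite pixel size is an integer multiple of the UAV pixel size; both are simplifying assumptions, and the function name and sample values are hypothetical.

```python
from typing import List


def block_mean(grid: List[List[float]], factor: int) -> List[List[float]]:
    """Aggregate a fine raster to a coarser one by averaging each
    non-overlapping factor x factor block of cells."""
    rows, cols = len(grid), len(grid[0])
    if rows % factor or cols % factor:
        raise ValueError("grid dimensions must be multiples of factor")
    coarse = []
    for r in range(0, rows, factor):
        coarse_row = []
        for c in range(0, cols, factor):
            block = [grid[i][j]
                     for i in range(r, r + factor)
                     for j in range(c, c + factor)]
            coarse_row.append(sum(block) / len(block))
        coarse.append(coarse_row)
    return coarse


if __name__ == "__main__":
    # Hypothetical 4 x 4 UAV vegetation-index tile aggregated to 2 x 2.
    uav_tile = [
        [0.2, 0.4, 0.8, 0.8],
        [0.2, 0.4, 0.8, 0.8],
        [0.1, 0.1, 0.6, 0.5],
        [0.1, 0.1, 0.5, 0.6],
    ]
    print(block_mean(uav_tile, 2))
```

Real workflows would typically rely on a geospatial library that handles reprojection and partial pixel overlap; the block mean is shown here only to make the aggregation idea explicit.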

IoT and UAVs can act in synergy in a multitude of ways. The UAV can provide its remotely sensed data to complement data gathered by the ground nodes. A UAV can itself become another node in an IoT system so that its information can be processed in tandem with the information of the other nodes. UAVs can even help mitigate power expenditure in an IoT system, which is an important limitation, by serving as a carrier of data from sensors to the main station.

6. Conclusions

This systematic review summarized the limitations of ground-based, space-borne, and airborne technologies for PA, as they are presented in recent literature. It also pinpointed and summarized works that provide useful synergies between these technologies. These studies, when paralleled with the limitations of each technology, enable certain conclusions to be drawn, which can be summed up in the following points:

- Satellite is the broadest of the three technologies, providing ample farm coverage and good image detail. Cost-wise, it is the least risky source of PA data out of the three technologies, but it is also the slowest to provide usable results.

- UAVs have great flexibility and the capability to provide very specific information, but they are costly when applied to large farms, while also requiring a risky upfront investment from farmers. Their ability to analyze crops in very fine detail allows them to deal with specialized problems such as diseases or wild weeds. Still, using them is far from a standardized procedure, and more research is needed to establish the UAVs as a definitive solution.

- IoT provides the most specific information, as it can be tailored to the particular crop and farm. Moreover, it takes the smallest amount of time for data processing and has the unique potential of constant field monitoring. Its high specialization comes at the cost of reduced flexibility and area coverage.

- The review process included reviewing 24 use cases of synergies in the domain of agriculture between the technologies in question. Open challenges setting the ground for future research include data privacy issues, large data volumes, data fusion, farmers’ acquaintance with the technology, and lack of standardization. Taking steps toward addressing such challenges could facilitate further synergies and help the agricultural domain advance toward the Agriculture 4.0 paradigm.

As things stand, complementary use of the three technologies requires further research. The number of works that make an effort to combine them is far too small when compared with the number of works that have come out in PA recently. The future of agriculture relies on optimally using all the technological tools currently at our disposal.

Author Contributions

A.K., S.P., A.C., S.B.A., K.K. and A.A. contributed to the conception of the study; A.K., A.A. and K.K. contributed to the design of the study and the choice of the search query; all authors read an equal subdivision of the results of the query; A.A. and K.K. wrote the first draft of the manuscript; A.K., S.P., A.C., S.B.A. and R.O. wrote parts of the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

The present review paper has been developed as part of the SUSTAINABLE project, funded by the European Union’s Horizon 2020 research and innovation program under the Marie Sklodowska-Curie-RISE Grant Agreement No. 101007702 (https://www.projectsustainable.eu, accessed on 25 May 2021).

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| UAV | Unmanned Aerial Vehicle |
| IoT | Internet of Things |
| WSN | Wireless Sensor Network |
| PA | Precision Agriculture |
| IIoT | Industrial Internet of Things |
| ETO | Evapotranspiration |
| UN | United Nations |
| FAO | Food and Agriculture Organization |
| LVDT | Linear Variable Differential Transformer |
| LiDAR | Light Detection and Ranging |
| DSS | Decision Support System |
| LoRaWAN | Long Range Wide Area Network |
| NB-IoT | Narrow Band IoT |
| RGB | Red-Green-Blue |
| NIR | Near Infrared |
| IR | Infrared |
| MS | Multispectral |
| HS | Hyperspectral |
| LAI | Leaf Area Index |
| NDVI | Normalized Difference Vegetation Index |
| ML | Machine Learning |
| VHR | Very High Resolution |
| SfM | Structure from Motion |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |

References

- De Clercq, M.; Vats, A.; Biel, A. Agriculture 4.0: The future of farming technology. In Proceedings of the World Government Summit, Dubai, United Arab Emirates, 17 January 2018; pp. 11–13. [Google Scholar]

- Leakey, R.R. Addressing the causes of land degradation, food/nutritional insecurity and poverty: A new approach to agricultural intensification in the tropics and sub-tropics. In Trade and Environment Review 2013: Wake Up Before it is too Late: Make Agriculture Truly Sustainable Now for Food Security in a Changing Climate; UN Publication: Geneva, Switzerland, 2013. [Google Scholar]

- Ray, D.K.; West, P.C.; Clark, M.; Gerber, J.S.; Prishchepov, A.V.; Chatterjee, S. Climate change has likely already affected global food production. PLoS ONE 2019, 14, e0217148. [Google Scholar] [CrossRef] [PubMed]

- Caldeira, C.; De Laurentiis, V.; Corrado, S.; van Holsteijn, F.; Sala, S. Quantification of food waste per product group along the food supply chain in the European Union: A mass flow analysis. Resour. Conserv. Recycl. 2019, 149, 479–488. [Google Scholar] [CrossRef]

- Rose, D.C.; Chilvers, J. Agriculture 4.0: Broadening responsible innovation in an era of smart farming. Front. Sustain. Food Syst. 2018, 2, 87. [Google Scholar] [CrossRef]

- Liu, Y.; Ma, X.; Shu, L.; Hancke, G.P.; Abu-Mahfouz, A.M. From Industry 4.0 to Agriculture 4.0: Current status, enabling technologies, and research challenges. IEEE Trans. Ind. Inform. 2020, 17, 4322–4334. [Google Scholar] [CrossRef]

- Mylonas, G.; Kalogeras, A.; Kalogeras, G.; Anagnostopoulos, C.; Alexakos, C.; Muñoz, L. Digital twins from smart manufacturing to smart cities: A survey. IEEE Access 2021, 9, 143222–143249. [Google Scholar] [CrossRef]

- Kalogeras, A.P.; Rivano, H.; Ferrarini, L.; Alexakos, C.; Iova, O.; Rastegarpour, S.; Mbacké, A.A. Cyber physical systems and Internet of Things: Emerging paradigms on smart cities. In Proceedings of the 2019 First International Conference on Societal Automation (SA), Krakow, Poland, 4–6 September 2019; pp. 1–13. [Google Scholar]

- Koulamas, C.; Kalogeras, A. Cyber-physical systems and digital twins in the industrial internet of things [cyber-physical systems]. Computer 2018, 51, 95–98. [Google Scholar] [CrossRef]

- Kalogeras, G.; Anagnostopoulos, C.; Alexakos, C.; Kalogeras, A.; Mylonas, G. Cyber Physical Systems for Smarter Society: A use case in the manufacturing sector. In Proceedings of the 2021 IEEE International Conference on Smart Internet of Things (SmartIoT), Jeju, Republic of Korea, 13–15 August 2021; pp. 371–376. [Google Scholar]

- Fountas, S.; Aggelopoulou, K.; Gemtos, T.A. Precision agriculture: Crop management for improved productivity and reduced environmental impact or improved sustainability. In Supply Chain Management for Sustainable Food Networks; John Wiley & Sons: Hoboken, NJ, USA, 2015; pp. 41–65. [Google Scholar]

- Roma, E.; Catania, P. Precision Oliviculture: Research Topics, Challenges, and Opportunities—A Review. Remote Sens. 2022, 14, 1668. [Google Scholar] [CrossRef]

- Alexakos, C.; Kalogeras, A.P. Internet of Things integration to a multi agent system based manufacturing environment. In Proceedings of the 2015 IEEE 20th Conference on Emerging Technologies & Factory Automation (ETFA), Luxembourg, 8–11 September 2015; pp. 1–8. [Google Scholar]

- Alexakos, C.; Anagnostopoulos, C.; Kalogeras, A.P. Integrating IoT to manufacturing processes utilizing semantics. In Proceedings of the 2016 IEEE 14th International Conference on Industrial Informatics (INDIN), Poitiers, France, 19–21 July 2016; pp. 154–159. [Google Scholar]

- Lalos, A.S.; Kalogeras, A.P.; Koulamas, C.; Tselios, C.; Alexakos, C.; Serpanos, D. Secure and safe iiot systems via machine and deep learning approaches. In Security and Quality in Cyber-Physical Systems Engineering; Springer: Cham, Switerland, 2019; pp. 443–470. [Google Scholar]

- Kandris, D.; Nakas, C.; Vomvas, D.; Koulouras, G. Applications of wireless sensor networks: An up-to-date survey. Appl. Syst. Innov. 2020, 3, 14. [Google Scholar] [CrossRef]

- Rossel, R.V.; Behrens, T. Using data mining to model and interpret soil diffuse reflectance spectra. Geoderma 2010, 158, 46–54. [Google Scholar] [CrossRef]

- Pallottino, F.; Antonucci, F.; Costa, C.; Bisaglia, C.; Figorilli, S.; Menesatti, P. Optoelectronic proximal sensing vehicle-mounted technologies in precision agriculture: A review. Comput. Electron. Agric. 2019, 162, 859–873. [Google Scholar] [CrossRef]

- Shafi, U.; Mumtaz, R.; García-Nieto, J.; Hassan, S.A.; Zaidi, S.A.R.; Iqbal, N. Precision agriculture techniques and practices: From considerations to applications. Sensors 2019, 19, 3796. [Google Scholar] [CrossRef]

- Babaeian, E.; Sadeghi, M.; Jones, S.B.; Montzka, C.; Vereecken, H.; Tuller, M. Ground, proximal, and satellite remote sensing of soil moisture. Rev. Geophys. 2019, 57, 530–616. [Google Scholar] [CrossRef]

- Adams, R.M.; Hurd, B.H.; Lenhart, S.; Leary, N. Effects of global climate change on agriculture: An interpretative review. Clim. Res. 1998, 11, 19–30. [Google Scholar] [CrossRef]

- Decoteau, D. Plant Physiology: Environmental Factors and Photosynthesis; Department of Horticulture, Pennsylvania State University: State College, PA, USA, 1998. [Google Scholar]

- Allen, R.G.; Pereira, L.S.; Raes, D.; Smith, M. Crop Evapotranspiration-Guidelines for Computing Crop Water Requirements-FAO Irrigation and Drainage Paper 56; FAO: Rome, Italy, 1998; Volume 300, p. D05109. [Google Scholar]

- Strand, J.F. Some agrometeorological aspects of pest and disease management for the 21st century. Agric. For. Meteorol. 2000, 103, 73–82. [Google Scholar] [CrossRef]

- Scalisi, A.; Bresilla, K.; Simões Grilo, F. Continuous determination of fruit tree water-status by plant-based sensors. Italus Hortus 2017, 24, 39–50. [Google Scholar] [CrossRef]

- Morandi, B.; Manfrini, L.; Zibordi, M.; Noferini, M.; Fiori, G.; Grappadelli, L.C. A low-cost device for accurate and continuous measurements of fruit diameter. HortScience 2007, 42, 1380–1382. [Google Scholar] [CrossRef]

- Carella, A.; Gianguzzi, G.; Scalisi, A.; Farina, V.; Inglese, P.; Bianco, R.L. Fruit Growth Stage Transitions in Two Mango Cultivars Grown in a Mediterranean Environment. Plants 2021, 10, 1332. [Google Scholar] [CrossRef] [PubMed]

- Hornero, G.; Gaitán-Pitre, J.E.; Serrano-Finetti, E.; Casas, O.; Pallas-Areny, R. A novel low-cost smart leaf wetness sensor. Comput. Electron. Agric. 2017, 143, 286–292. [Google Scholar] [CrossRef]

- Karpyshev, P.; Ilin, V.; Kalinov, I.; Petrovsky, A.; Tsetserukou, D. Autonomous mobile robot for apple plant disease detection based on cnn and multi-spectral vision system. In Proceedings of the 2021 IEEE/SICE international symposium on system integration (SII), Virtual, 11–14 January 2021; pp. 157–162. [Google Scholar]

- Cardim Ferreira Lima, M.; Krus, A.; Valero, C.; Barrientos, A.; Del Cerro, J.; Roldán-Gómez, J.J. Monitoring plant status and fertilization strategy through multispectral images. Sensors 2020, 20, 435. [Google Scholar] [CrossRef]

- Vidoni, R.; Gallo, R.; Ristorto, G.; Carabin, G.; Mazzetto, F.; Scalera, L.; Gasparetto, A. ByeLab: An agricultural mobile robot prototype for proximal sensing and precision farming. In Proceedings of the ASME International Mechanical Engineering Congress and Exposition, Tampa, FL, USA, 3–9 November 2017; American Society of Mechanical Engineers: New York, NY, USA, 2017; Volume 58370, p. V04AT05A057. [Google Scholar]

- Anderson, V.; Leung, A.C.; Mehdipoor, H.; Jänicke, B.; Milošević, D.; Oliveira, A.; Manavvi, S.; Kabano, P.; Dzyuban, Y.; Aguilar, R.; et al. Technological opportunities for sensing of the health effects of weather and climate change: A state-of-the-art-review. Int. J. Biometeorol. 2021, 65, 779–803. [Google Scholar] [CrossRef]

- Ji, W.; Li, L.; Zhou, W. Design and implementation of a RFID reader/router in RFID-WSN hybrid system. Future Internet 2018, 10, 106. [Google Scholar] [CrossRef]

- Kocakulak, M.; Butun, I. An overview of Wireless Sensor Networks towards internet of things. In Proceedings of the 2017 IEEE 7th annual computing and communication workshop and conference (CCWC), Las Vegas, NV, USA, 9–11 January 2017; pp. 1–6. [Google Scholar]

- Mekonnen, Y.; Namuduri, S.; Burton, L.; Sarwat, A.; Bhansali, S. Machine learning techniques in wireless sensor network based precision agriculture. J. Electrochem. Soc. 2019, 167, 037522. [Google Scholar] [CrossRef]

- Haxhibeqiri, J.; De Poorter, E.; Moerman, I.; Hoebeke, J. A survey of LoRaWAN for IoT: From technology to application. Sensors 2018, 18, 3995. [Google Scholar] [CrossRef] [PubMed]

- Martinez, B.; Adelantado, F.; Bartoli, A.; Vilajosana, X. Exploring the performance boundaries of NB-IoT. IEEE Internet Things J. 2019, 6, 5702–5712. [Google Scholar] [CrossRef]

- Lavric, A.; Petrariu, A.I.; Popa, V. SigFox communication protocol: The new era of IoT? In Proceedings of the 2019 international conference on sensing and instrumentation in IoT Era (ISSI), Lisbon, Portugal, 29–30 August 2019; pp. 1–4. [Google Scholar]

- Borkar, S.R. Long-term evolution for machines (LTE-M). In LPWAN Technologies for IoT and M2M Applications; Elsevier: Amsterdam, The Netherlands, 2020; pp. 145–166. [Google Scholar]

- Aldahdouh, K.A.; Darabkh, K.A.; Al-Sit, W. A survey of 5G emerging wireless technologies featuring LoRaWAN, Sigfox, NB-IoT and LTE-M. In Proceedings of the 2019 International Conference on Wireless Communications Signal Processing and Networking (WiSPNET), Chennai, India, 21–23 March 2019; pp. 561–566. [Google Scholar]

- Milics, G. Application of uavs in precision agriculture. In International Climate Protection; Springer: Berlin/Heidelberg, Germany, 2019; pp. 93–97. [Google Scholar]

- Maltamo, M.; Næsset, E.; Vauhkonen, J. Forestry applications of airborne laser scanning. Concepts Case Stud. Manag. Ecosys 2014, 27, 460. [Google Scholar]

- Tsouros, D.C.; Bibi, S.; Sarigiannidis, P.G. A review on UAV-based applications for precision agriculture. Information 2019, 10, 349. [Google Scholar] [CrossRef]

- dos Santos Ferreira, A.; Freitas, D.M.; da Silva, G.G.; Pistori, H.; Folhes, M.T. Weed detection in soybean crops using ConvNets. Comput. Electron. Agric. 2017, 143, 314–324. [Google Scholar] [CrossRef]

- Quebrajo, L.; Perez-Ruiz, M.; Pérez-Urrestarazu, L.; Martínez, G.; Egea, G. Linking thermal imaging and soil remote sensing to enhance irrigation management of sugar beet. Biosyst. Eng. 2018, 165, 77–87. [Google Scholar] [CrossRef]

- Garre, P.; Harish, A. Autonomous agricultural pesticide spraying uav. In Proceedings of the IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2018; Volume 455, p. 012030. [Google Scholar]

- Kerkech, M.; Hafiane, A.; Canals, R. Deep leaning approach with colorimetric spaces and vegetation indices for vine diseases detection in UAV images. Comput. Electron. Agric. 2018, 155, 237–243. [Google Scholar] [CrossRef]

- del Cerro, J.; Cruz Ulloa, C.; Barrientos, A.; de León Rivas, J. Unmanned aerial vehicles in agriculture: A survey. Agronomy 2021, 11, 203. [Google Scholar] [CrossRef]

- Lal, R. 16 Challenges and Opportunities in Precision Agriculture. Soil-Specif. Farming Precis. Agric. 2015, 22, 391. [Google Scholar]

- Teke, M.; Deveci, H.S.; Haliloğlu, O.; Gürbüz, S.Z.; Sakarya, U. A short survey of hyperspectral remote sensing applications in agriculture. In Proceedings of the 2013 6th international conference on recent advances in space technologies (RAST), Istanbul, Turkey, 12–14 June 2013; pp. 171–176. [Google Scholar]

- Sishodia, R.P.; Ray, R.L.; Singh, S.K. Applications of remote sensing in precision agriculture: A review. Remote Sens. 2020, 12, 3136. [Google Scholar] [CrossRef]

- López-Granados, F.; Jurado-Expósito, M.; Alamo, S.; García-Torres, L. Leaf nutrient spatial variability and site-specific fertilization maps within olive (Olea europaea L.) orchards. Eur. J. Agron. 2004, 21, 209–222. [Google Scholar] [CrossRef]

- Noori, O.; Panda, S.S. Site-specific management of common olive: Remote sensing, geospatial, and advanced image processing applications. Comput. Electron. Agric. 2016, 127, 680–689. [Google Scholar] [CrossRef]

- Van Evert, F.K.; Gaitán-Cremaschi, D.; Fountas, S.; Kempenaar, C. Can precision agriculture increase the profitability and sustainability of the production of potatoes and olives? Sustainability 2017, 9, 1863. [Google Scholar] [CrossRef]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. Int. J. Surg. 2021, 88, 105906. [Google Scholar] [CrossRef]

- Wang, T.; Liu, Y.; Wang, M.; Fan, Q.; Tian, H.; Qiao, X.; Li, Y. Applications of UAS in crop biomass monitoring: A review. Front. Plant Sci. 2021, 12, 616689. [Google Scholar] [CrossRef]

- Manfreda, S.; McCabe, M.F.; Miller, P.E.; Lucas, R.; Pajuelo Madrigal, V.; Mallinis, G.; Ben Dor, E.; Helman, D.; Estes, L.; Ciraolo, G.; et al. On the use of unmanned aerial systems for environmental monitoring. Remote Sens. 2018, 10, 641. [Google Scholar] [CrossRef]

- Ezenne, G.; Jupp, L.; Mantel, S.; Tanner, J. Current and potential capabilities of UAS for crop water productivity in precision agriculture. Agric. Water Manag. 2019, 218, 158–164. [Google Scholar] [CrossRef]

- Niu, H.; Hollenbeck, D.; Zhao, T.; Wang, D.; Chen, Y. Evapotranspiration estimation with small UAVs in precision agriculture. Sensors 2020, 20, 6427. [Google Scholar] [CrossRef]

- Pereira, F.d.S.; de Lima, J.; Freitas, R.; Dos Reis, A.A.; do Amaral, L.R.; Figueiredo, G.K.D.A.; Lamparelli, R.A.; Magalhães, P.S.G. Nitrogen variability assessment of pasture fields under an integrated crop-livestock system using UAV, PlanetScope, and Sentinel-2 data. Comput. Electron. Agric. 2022, 193, 106645. [Google Scholar] [CrossRef]

- Veysi, S.; Naseri, A.A.; Hamzeh, S. Relationship between field measurement of soil moisture in the effective depth of sugarcane root zone and extracted indices from spectral reflectance of optical/thermal bands of multispectral satellite images. J. Indian Soc. Remote Sens. 2020, 48, 1035–1044. [Google Scholar] [CrossRef]

- Sun, C.; Zhou, J.; Ma, Y.; Xu, Y.; Pan, B.; Zhang, Z. A review of remote sensing for potato traits characterization in precision agriculture. Front. Plant Sci. 2022, 13, 871859. [Google Scholar] [CrossRef] [PubMed]

- Günder, M.; Ispizua Yamati, F.R.; Kierdorf, J.; Roscher, R.; Mahlein, A.K.; Bauckhage, C. Agricultural plant cataloging and establishment of a data framework from UAV-based crop images by computer vision. GigaScience 2022, 11, giac054. [Google Scholar] [CrossRef]

- Panday, U.S.; Pratihast, A.K.; Aryal, J.; Kayastha, R.B. A review on drone-based data solutions for cereal crops. Drones 2020, 4, 41. [Google Scholar] [CrossRef]

- Vlachopoulos, O.; Leblon, B.; Wang, J.; Haddadi, A.; LaRocque, A.; Patterson, G. Evaluation of Crop Health Status With UAS Multispectral Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 15, 297–308. [Google Scholar] [CrossRef]

- Bahuguna, S.; Anchal, S.; Guleria, D.; Devi, M.; Meenakshi; Kumar, D.; Kumar, R.; Murthy, P.; Kumar, A. Unmanned aerial vehicle-based multispectral remote sensing for commercially important aromatic crops in India for its efficient monitoring and management. J. Indian Soc. Remote Sens. 2022, 50, 397–407. [Google Scholar] [CrossRef]

- Thilakarathna, M.S.; Raizada, M.N. Challenges in using precision agriculture to optimize symbiotic nitrogen fixation in legumes: Progress, limitations, and future improvements needed in diagnostic testing. Agronomy 2018, 8, 78. [Google Scholar] [CrossRef]

- Luo, S.; Jiang, X.; Yang, K.; Li, Y.; Fang, S. Multispectral remote sensing for accurate acquisition of rice phenotypes: Impacts of radiometric calibration and unmanned aerial vehicle flying altitudes. Front. Plant Sci. 2022, 13, 958106. [Google Scholar] [CrossRef]

- Hunt, E.R.; Horneck, D.A.; Spinelli, C.B.; Turner, R.W.; Bruce, A.E.; Gadler, D.J.; Brungardt, J.J.; Hamm, P.B. Monitoring nitrogen status of potatoes using small unmanned aerial vehicles. Precis. Agric. 2018, 19, 314–333. [Google Scholar] [CrossRef]

- Di Gennaro, S.F.; Toscano, P.; Gatti, M.; Poni, S.; Berton, A.; Matese, A. Spectral Comparison of UAV-Based Hyper and Multispectral Cameras for Precision Viticulture. Remote Sens. 2022, 14, 449. [Google Scholar] [CrossRef]

- Barbosa Júnior, M.R.; Moreira, B.R.d.A.; Brito Filho, A.L.d.; Tedesco, D.; Shiratsuchi, L.S.; Silva, R.P.d. UAVs to Monitor and Manage Sugarcane: Integrative Review. Agronomy 2022, 12, 661. [Google Scholar] [CrossRef]

- Martos, V.; Ahmad, A.; Cartujo, P.; Ordoñez, J. Ensuring agricultural sustainability through remote sensing in the era of agriculture 5.0. Appl. Sci. 2021, 11, 5911. [Google Scholar] [CrossRef]

- Velusamy, P.; Rajendran, S.; Mahendran, R.K.; Naseer, S.; Shafiq, M.; Choi, J.G. Unmanned Aerial Vehicles (UAV) in precision agriculture: Applications and challenges. Energies 2021, 15, 217. [Google Scholar] [CrossRef]

- Rasmussen, J.; Azim, S.; Boldsen, S.K.; Nitschke, T.; Jensen, S.M.; Nielsen, J.; Christensen, S. The challenge of reproducing remote sensing data from satellites and unmanned aerial vehicles (UAVs) in the context of management zones and precision agriculture. Precis. Agric. 2021, 22, 834–851. [Google Scholar] [CrossRef]

- Aliane, N.; Muñoz, C.Q.G.; Sánchez-Soriano, J. Web and MATLAB-Based Platform for UAV Flight Management and Multispectral Image Processing. Sensors 2022, 22, 4243. [Google Scholar] [CrossRef]