Branch Interference Sensing and Handling by Tactile Enabled Robotic Apple Harvesting

Abstract

1. Introduction

2. Literature Review

3. Methods and Materials

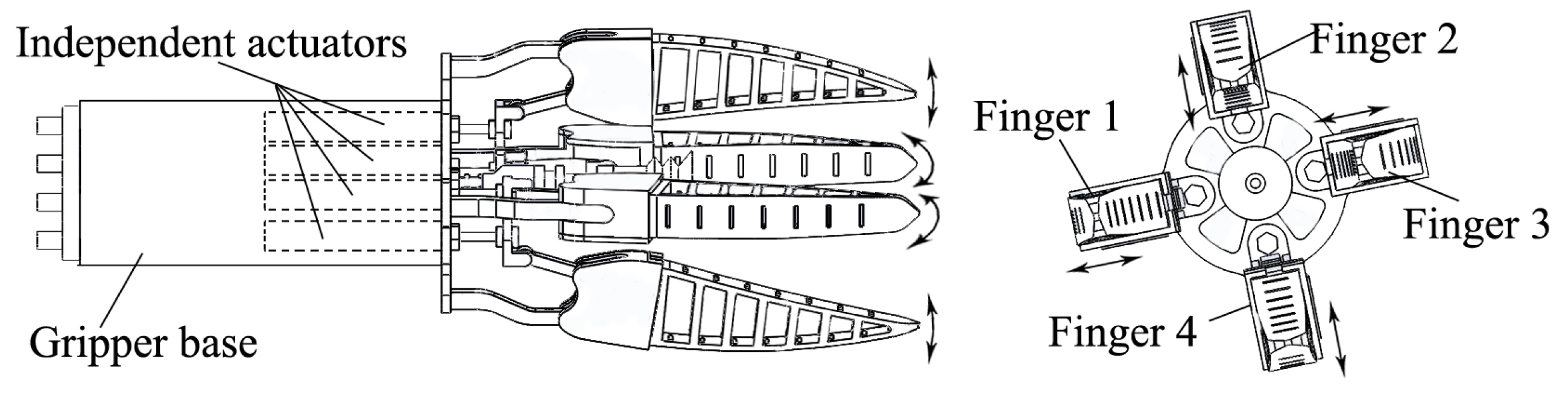

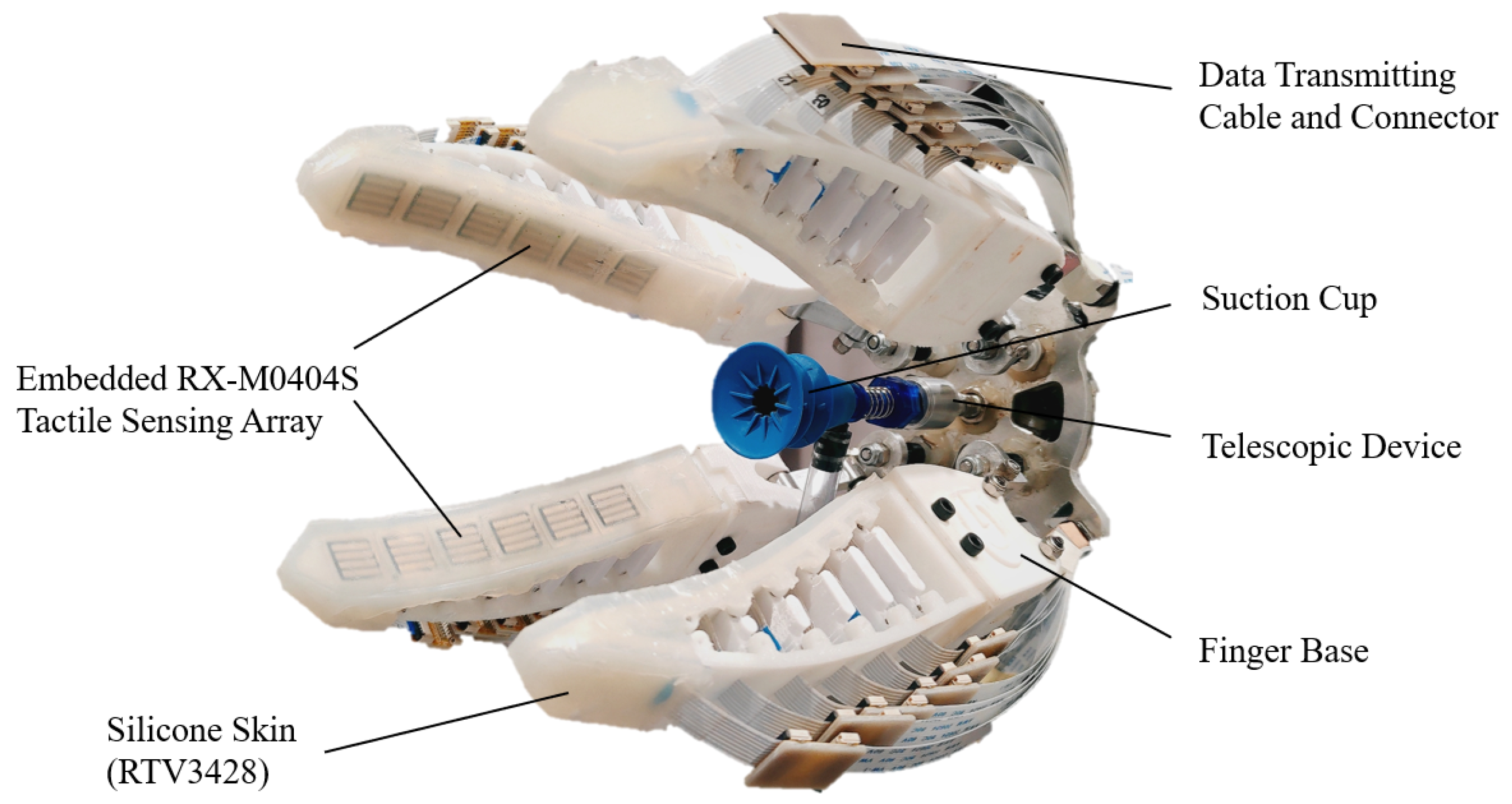

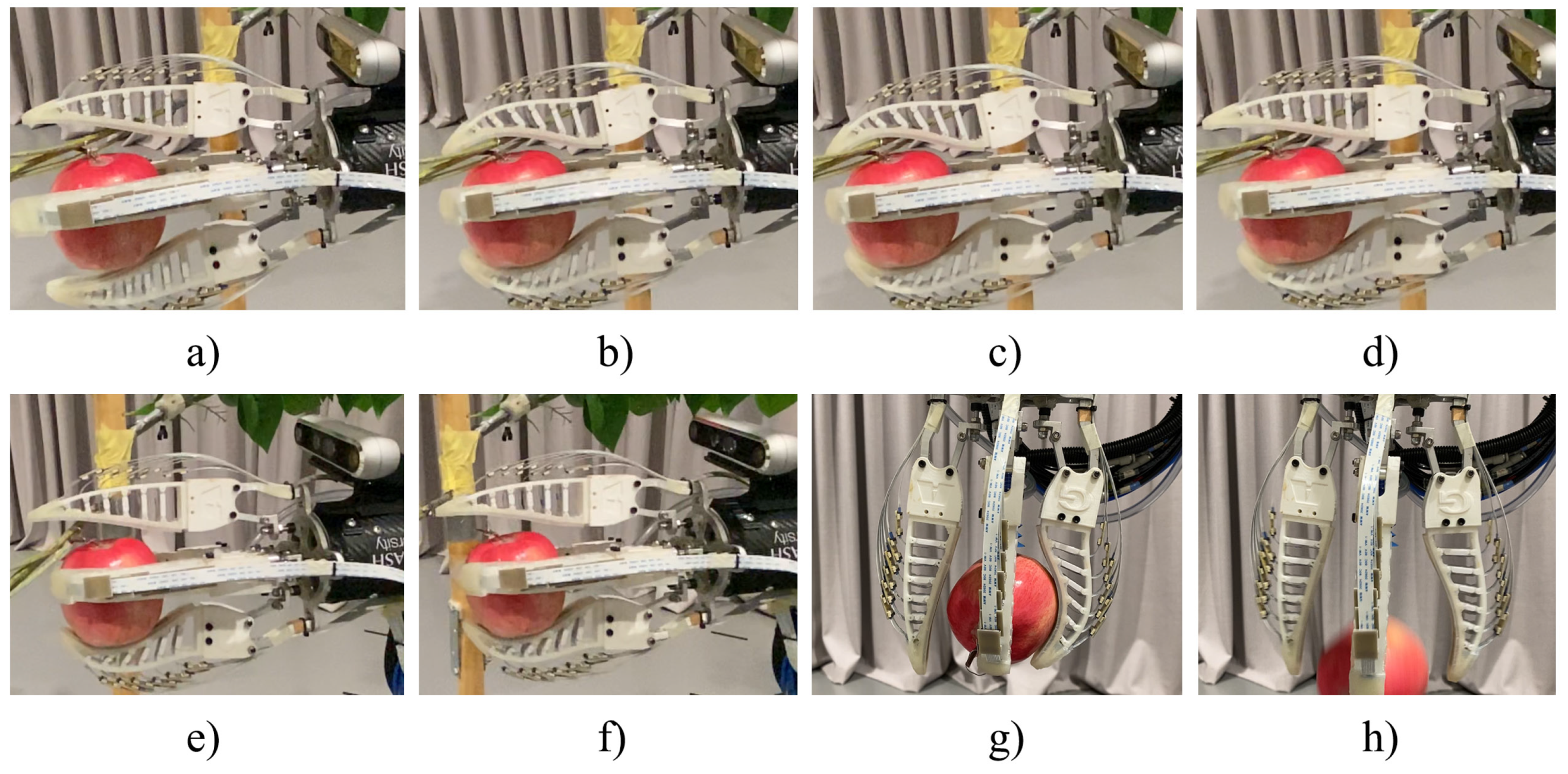

3.1. End-Effector Design

Tactile Design

3.2. Tactile Perception

3.2.1. Grasp Status Definition

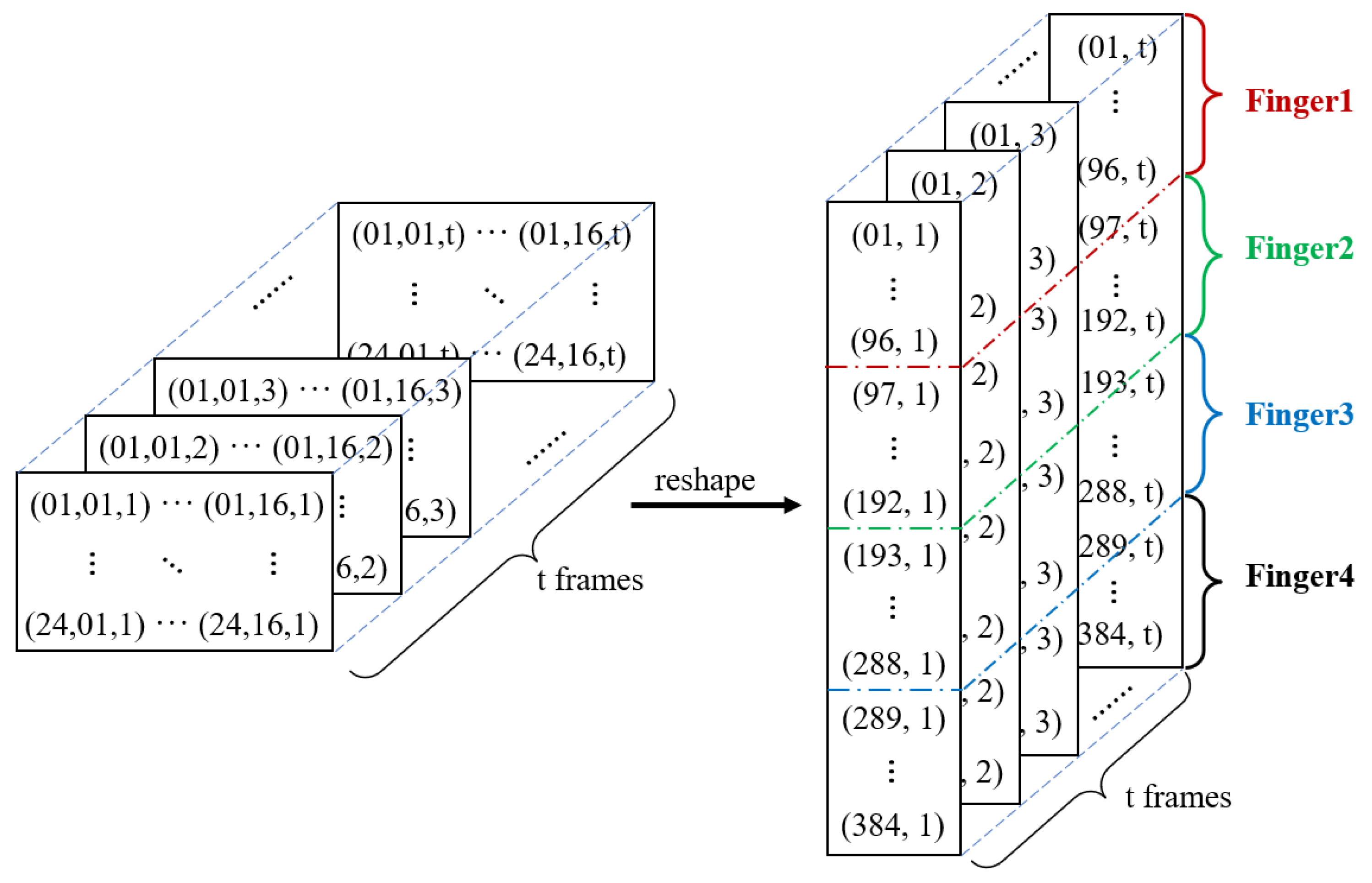

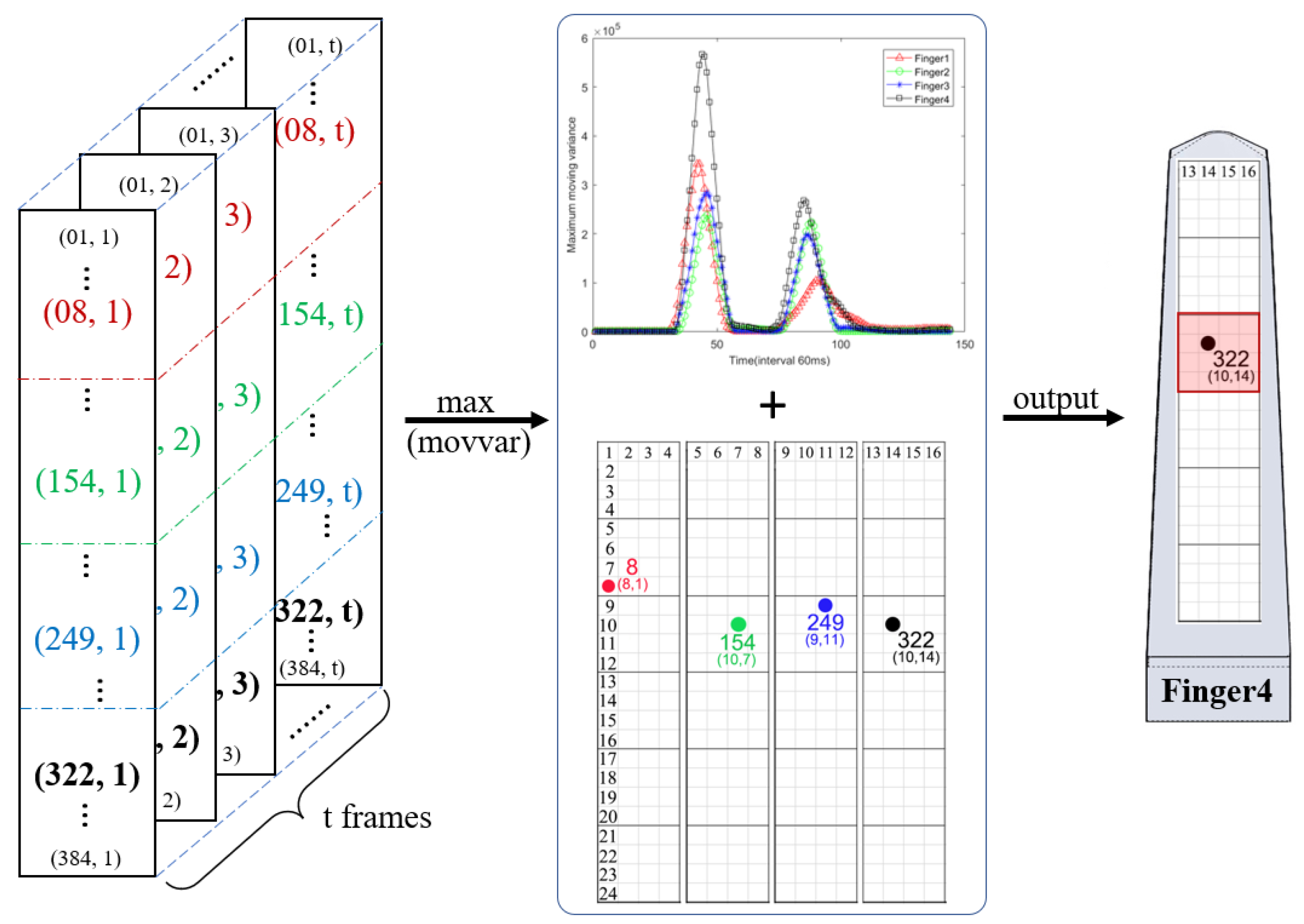

3.2.2. Data Processing

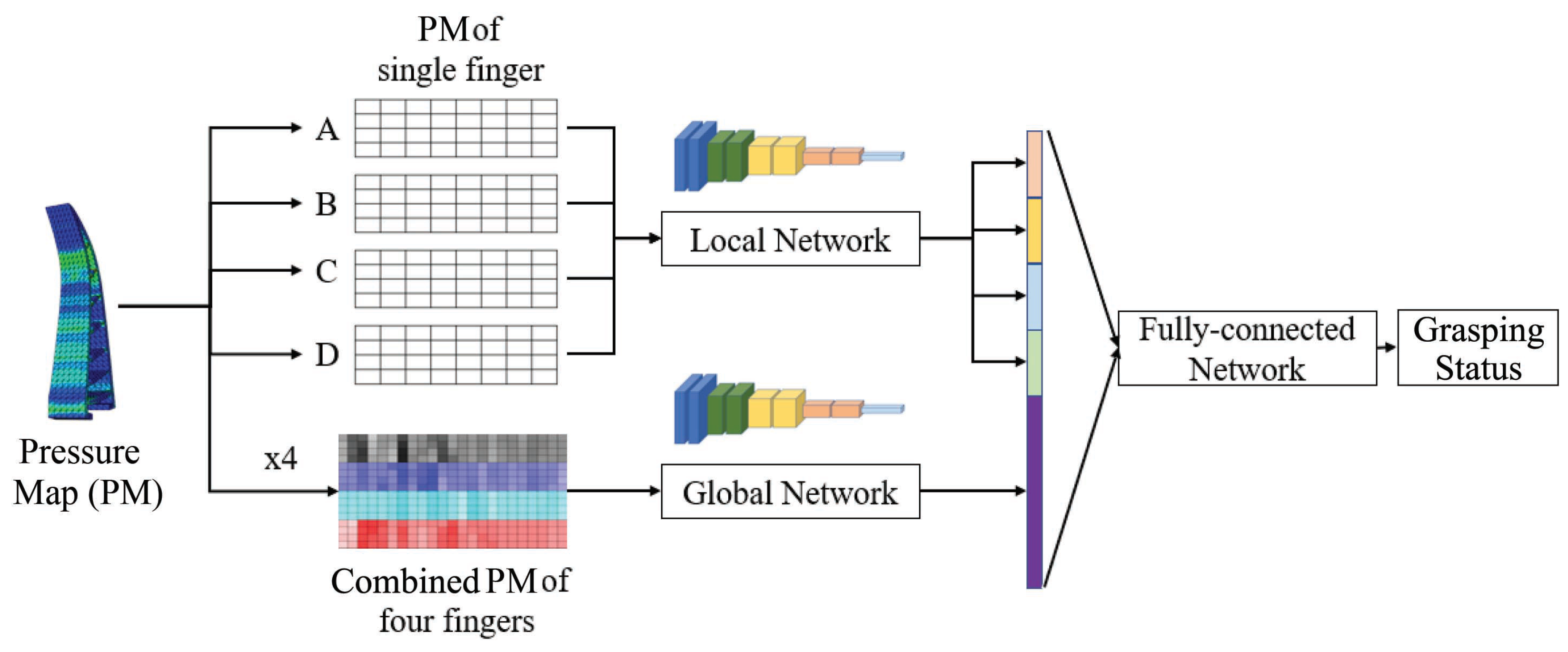

3.2.3. Grasping Status Classification

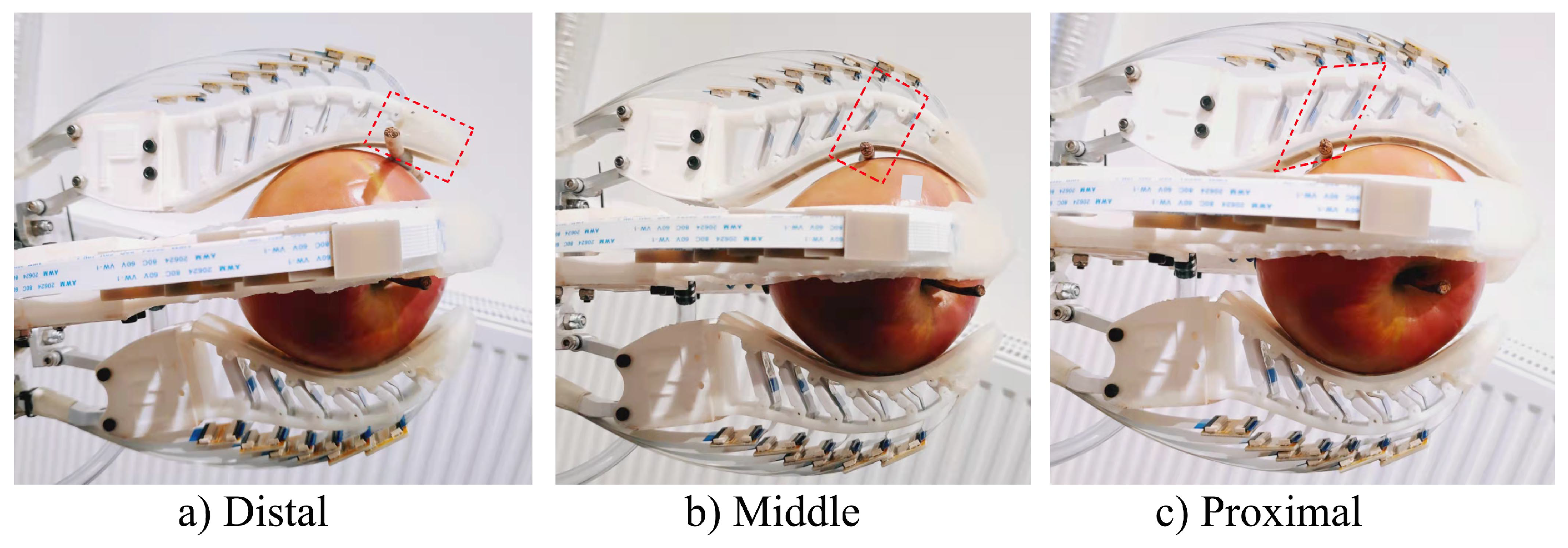

3.2.4. Interference Localization

4. Experiments and Results

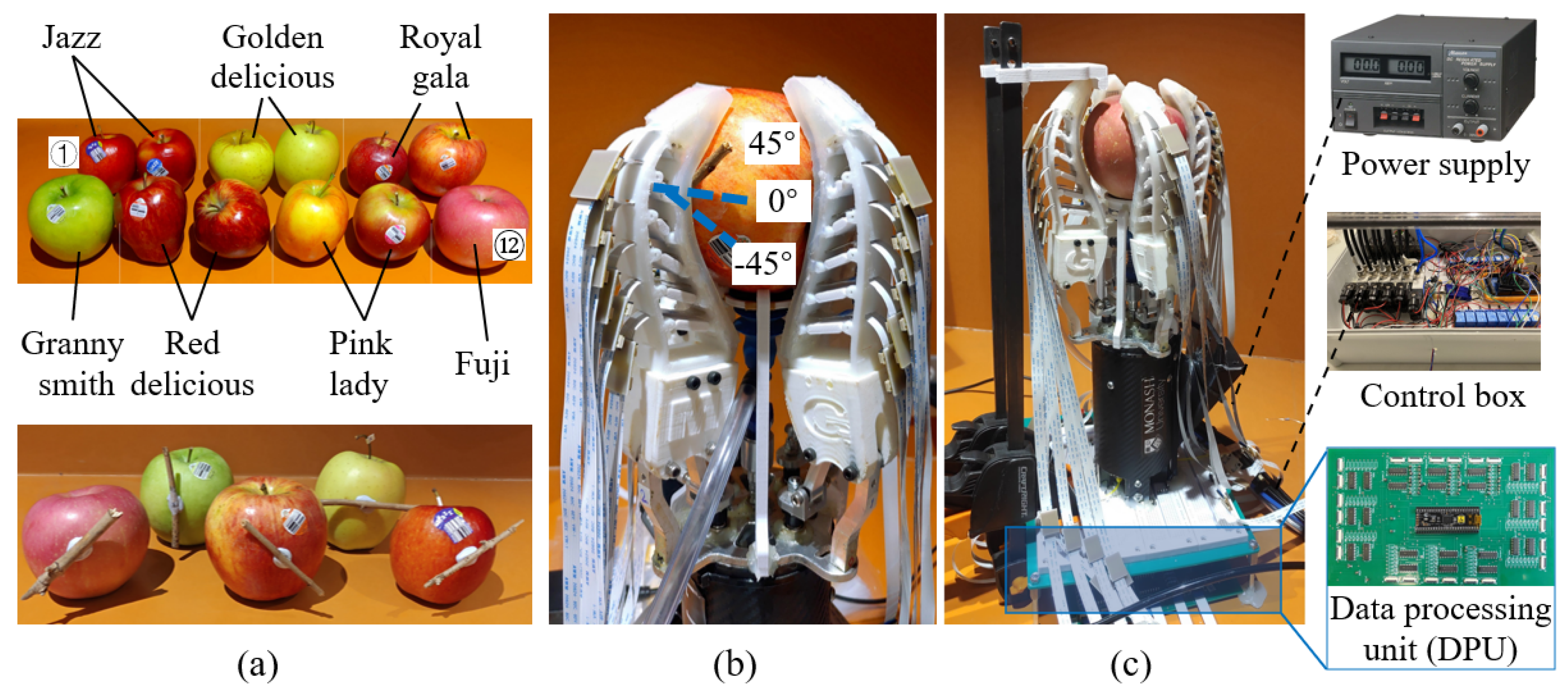

4.1. Experiment Setup

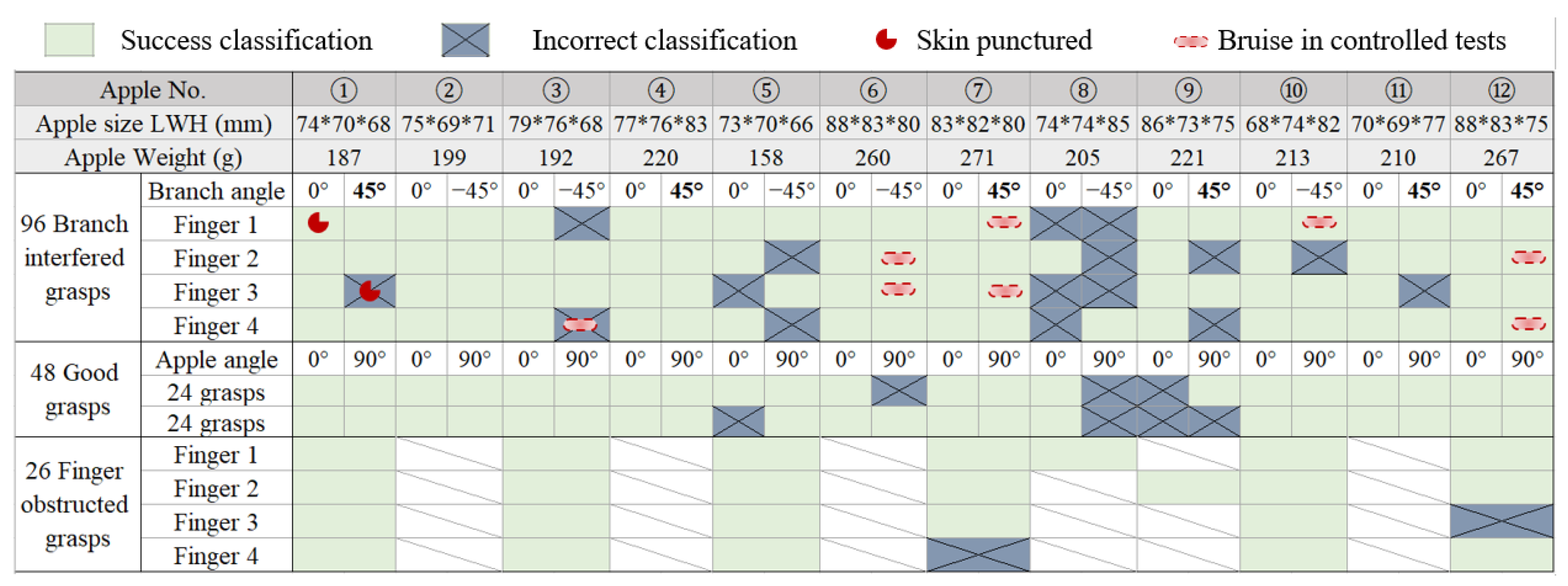

4.2. Experiment on Status Detection

4.3. Experiment on Interference Localization

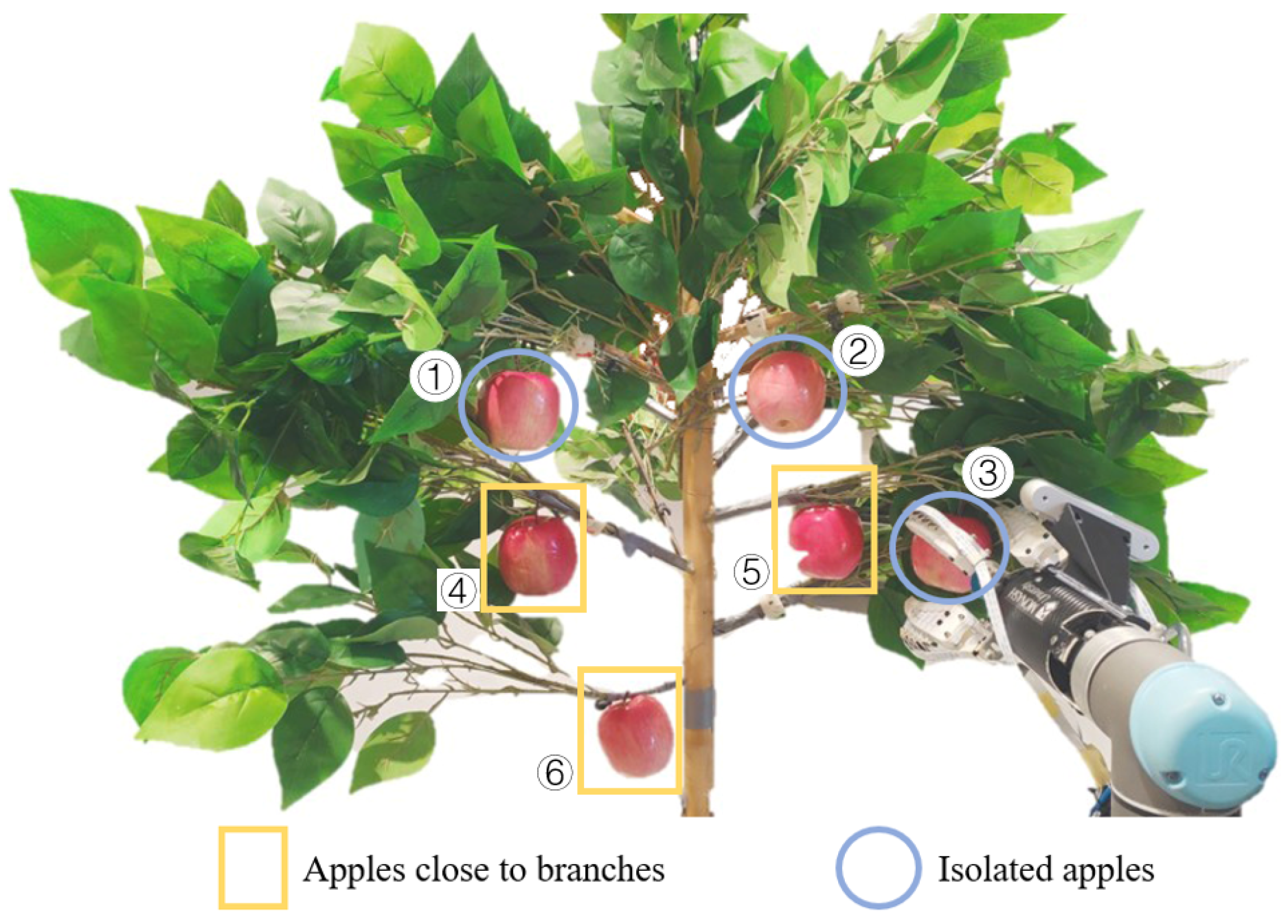

4.4. Experiment on the Robotic Harvesting System

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DoF | Degree of freedom |
| TPU | Thermoplastic polyurethane |
| PM | Pressure map |
| CNN | Convolutional neural network |
| ResNet | Residual neural network |
| FFC | Flat flexible cable |


| Condition | Null Grasp | Finger Obstructed Grasp | Good Grasp | Branch Interfered Grasp | Overall |
|---|---|---|---|---|---|
| Accuracy (correct/total) | 29/30 | 24/26 | 41/48 | 80/96 | 174/200 |
| Accuracy (%) | 96.7 | 92.3 | 85.4 | 83.3 | 87.0 |
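Each percentage in the status-detection table is a correct/total ratio. As a quick check, the minimal Python sketch below recomputes them from the counts; the dictionary layout and names are illustrative, not taken from the paper. It assumes accuracy means correctly classified grasps divided by total grasps for each status. Rounding 29/30 to one decimal place gives 96.7%, and the overall figure pools all 200 grasps rather than averaging the four per-class rates.

```python
# Counts from the status-detection table: (correctly classified, total grasps).
COUNTS = {
    "Null Grasp": (29, 30),
    "Finger Obstructed Grasp": (24, 26),
    "Good Grasp": (41, 48),
    "Branch Interfered Grasp": (80, 96),
}


def accuracy_pct(correct: int, total: int) -> float:
    """Return accuracy as a percentage rounded to one decimal place."""
    return round(100.0 * correct / total, 1)


per_class = {name: accuracy_pct(c, n) for name, (c, n) in COUNTS.items()}

# Overall accuracy pools every grasp (micro-average).
total_correct = sum(c for c, _ in COUNTS.values())
total_grasps = sum(n for _, n in COUNTS.values())
overall = accuracy_pct(total_correct, total_grasps)

# Unweighted mean of the per-class rates (macro-average), for comparison only.
macro = round(sum(100.0 * c / n for c, n in COUNTS.values()) / len(COUNTS), 1)

if __name__ == "__main__":
    for name, pct in per_class.items():
        print(f"{name}: {pct}%")
    print(f"Overall: {total_correct}/{total_grasps} = {overall}%")
    print(f"Macro-average: {macro}%")
```

Pooling weights each status by its number of grasps. As a result, the large branch-interfered group pulls the overall figure (87.0%) below the unweighted macro-average of about 89.4%.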

| Interference Localisation Tests | Finger | Distal | Middle | Proximal | Overall Interference Localisation Success |
|---|---|---|---|---|---|
| 96 grasps | Finger 1 | 7/8 | 8/8 | 7/8 | 83.3% (80/96) |
| | Finger 2 | 7/8 | 8/8 | 6/8 | |
| | Finger 3 | 5/8 | 8/8 | 5/8 | |
| | Finger 4 | 5/8 | 8/8 | 6/8 | |
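The localisation table reports successes out of 8 trials for each finger segment. The short Python sketch below aggregates these counts per finger, per segment and overall. It assumes each cell is an independent count out of 8, as the "/8" entries indicate, and the variable names are hypothetical. The sketch reproduces the 83.3% overall figure (80 of 96 grasps). It also makes the pattern visible: middle segments were localised in all 32 trials, whereas distal and proximal segments each succeeded in 24 of 32.

```python
# Successes out of TRIALS_PER_CELL for each finger segment, from the table.
TRIALS_PER_CELL = 8
SEGMENTS = ("Distal", "Middle", "Proximal")
RESULTS = {
    "Finger1": {"Distal": 7, "Middle": 8, "Proximal": 7},
    "Finger2": {"Distal": 7, "Middle": 8, "Proximal": 6},
    "Finger3": {"Distal": 5, "Middle": 8, "Proximal": 5},
    "Finger4": {"Distal": 5, "Middle": 8, "Proximal": 6},
}

# Success rate per finger (3 segments x 8 trials = 24 trials each).
per_finger = {
    finger: sum(seg.values()) / (TRIALS_PER_CELL * len(seg))
    for finger, seg in RESULTS.items()
}

# Success rate per segment, pooled over the four fingers (4 x 8 = 32 trials each).
per_segment = {
    s: sum(r[s] for r in RESULTS.values()) / (TRIALS_PER_CELL * len(RESULTS))
    for s in SEGMENTS
}

successes = sum(sum(seg.values()) for seg in RESULTS.values())
trials = TRIALS_PER_CELL * len(RESULTS) * len(SEGMENTS)
overall = successes / trials

if __name__ == "__main__":
    for finger, rate in per_finger.items():
        print(f"{finger}: {rate:.1%}")
    for s, rate in per_segment.items():
        print(f"{s}: {rate:.1%}")
    print(f"Overall: {successes}/{trials} = {overall:.1%}")
```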

| Apple No. | | ① | ② | ③ | ④ | ⑤ | ⑥ |
|---|---|---|---|---|---|---|---|
| Distance to the trunk (mm) | | 161 | 84 | 238 | 151 | 116 | 63 |
| Distance to the nearest branch (mm) | | 51 | 52 | 57 | 12 | 0 | 0 |
| Test round 1 | Label | Null | Good | Good | Obstructed | Branch | Branch |
| | Detection | Null | Good | Good | Branch | Branch | Branch |
| Distance to the nearest branch (mm) | | 50 | 55 | 53 | 18 | 9 | 0 |
| Test round 2 | Label | Good | Good | Null | Obstructed | Obstructed | Branch |
| | Detection | Good | Good | Null | Obstructed | Branch | Branch |
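The orchard test table can be read as twelve paired (label, detection) observations. The minimal Python sketch below tallies agreement between the ground-truth labels and the detector output across both rounds. It uses a hypothetical `evaluate` helper and a data layout chosen for illustration, not the authors' code.

```python
from collections import Counter

# Ground-truth labels and detector outputs for apples 1-6, from the orchard table.
ROUNDS = {
    1: {
        "label": ["Null", "Good", "Good", "Obstructed", "Branch", "Branch"],
        "detection": ["Null", "Good", "Good", "Branch", "Branch", "Branch"],
    },
    2: {
        "label": ["Good", "Good", "Null", "Obstructed", "Obstructed", "Branch"],
        "detection": ["Good", "Good", "Null", "Obstructed", "Branch", "Branch"],
    },
}


def evaluate(rounds):
    """Return (correct, total, mismatches); each mismatch is (round, apple, label, detection)."""
    correct = 0
    total = 0
    mismatches = []
    for rnd, data in rounds.items():
        pairs = zip(data["label"], data["detection"])
        for apple, (truth, pred) in enumerate(pairs, start=1):
            total += 1
            if truth == pred:
                correct += 1
            else:
                mismatches.append((rnd, apple, truth, pred))
    return correct, total, mismatches


correct, total, mismatches = evaluate(ROUNDS)
# Count how often each (label, detection) confusion occurs.
confusions = Counter((truth, pred) for _, _, truth, pred in mismatches)

if __name__ == "__main__":
    print(f"Agreement: {correct}/{total}")
    for rnd, apple, truth, pred in mismatches:
        print(f"Round {rnd}, apple {apple}: labelled {truth}, detected {pred}")
    print(dict(confusions))
```

On the table's values, the sketch finds that 10 of 12 grasps were classified correctly. Both mismatches are obstructed grasps reported as branch-interfered: apple ④ in round 1 and apple ⑤ in round 2.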

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, H.; Kang, H.; Wang, X.; Au, W.; Wang, M.Y.; Chen, C. Branch Interference Sensing and Handling by Tactile Enabled Robotic Apple Harvesting. Agronomy 2023, 13, 503. https://doi.org/10.3390/agronomy13020503

Zhou H, Kang H, Wang X, Au W, Wang MY, Chen C. Branch Interference Sensing and Handling by Tactile Enabled Robotic Apple Harvesting. Agronomy. 2023; 13(2):503. https://doi.org/10.3390/agronomy13020503

Chicago/Turabian Style: Zhou, Hongyu, Hanwen Kang, Xing Wang, Wesley Au, Michael Yu Wang, and Chao Chen. 2023. "Branch Interference Sensing and Handling by Tactile Enabled Robotic Apple Harvesting" Agronomy 13, no. 2: 503. https://doi.org/10.3390/agronomy13020503

APA Style: Zhou, H., Kang, H., Wang, X., Au, W., Wang, M. Y., & Chen, C. (2023). Branch Interference Sensing and Handling by Tactile Enabled Robotic Apple Harvesting. Agronomy, 13(2), 503. https://doi.org/10.3390/agronomy13020503