Research on Instance Segmentation Algorithm of Greenhouse Sweet Pepper Detection Based on Improved Mask RCNN

Abstract

1. Introduction

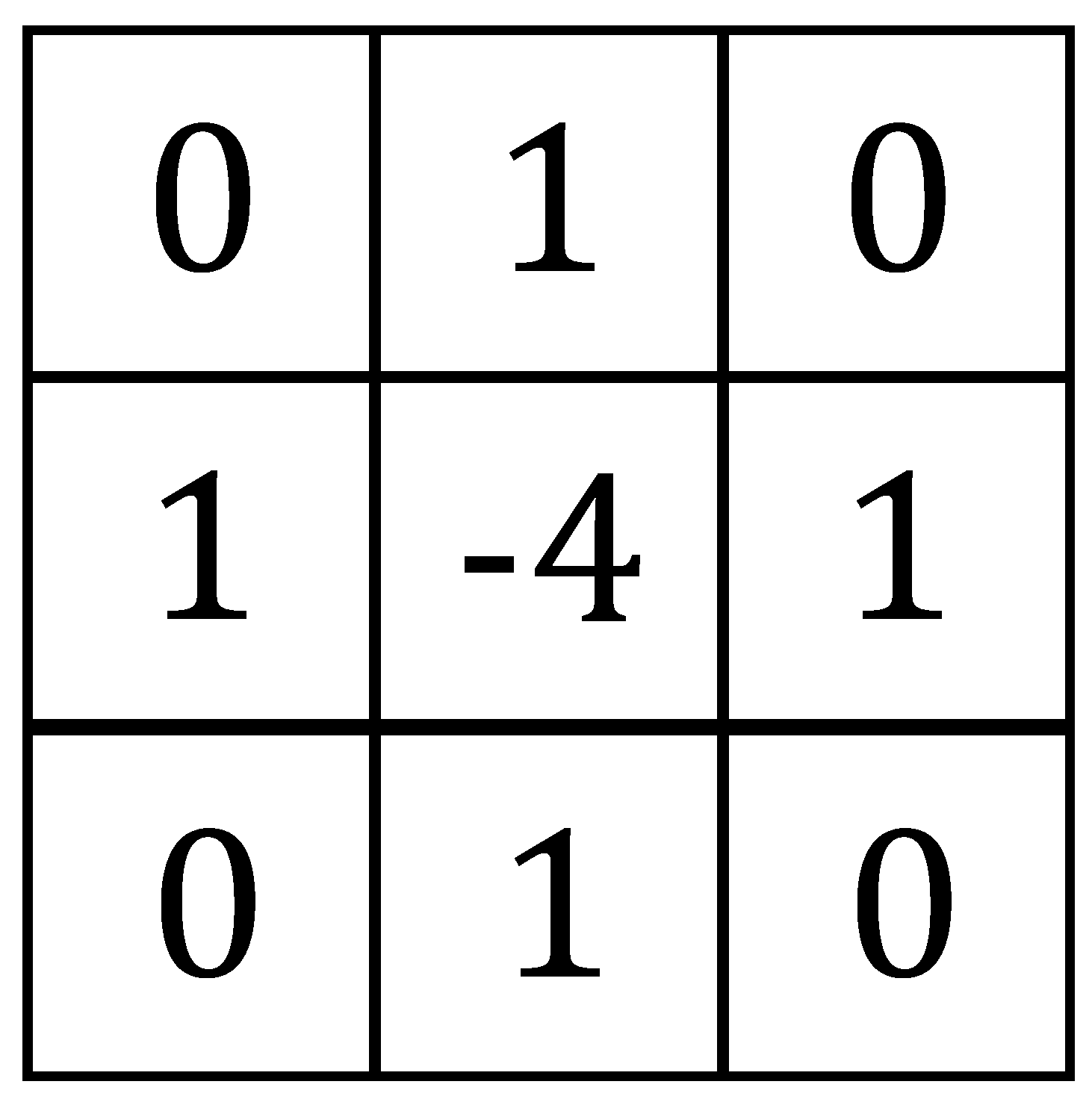

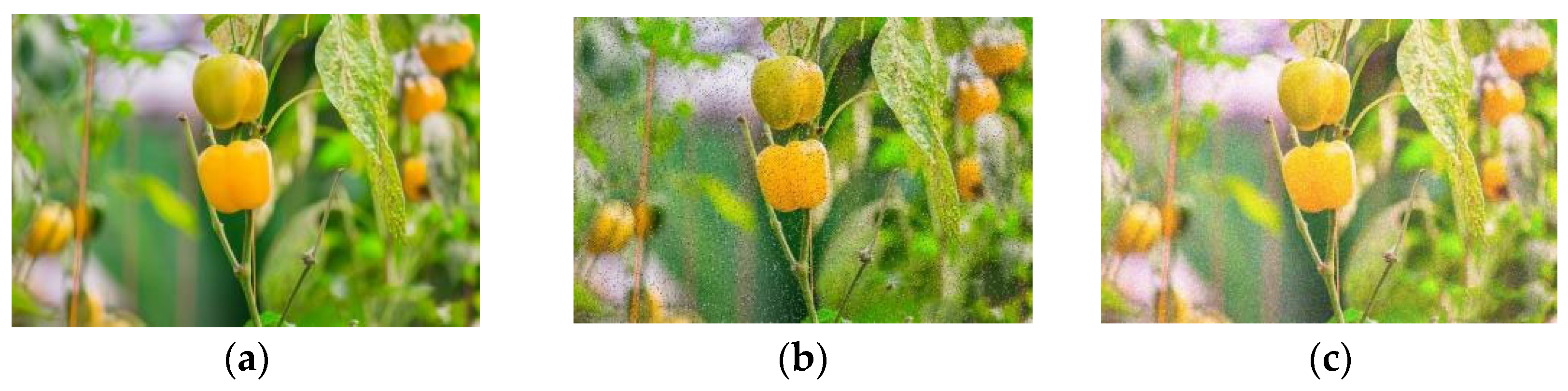

- Image preprocessing methods, namely Laplacian sharpening, salt-and-pepper noise, and Gaussian noise, are used to augment the limited set of image samples and thereby increase the diversity of the dataset.

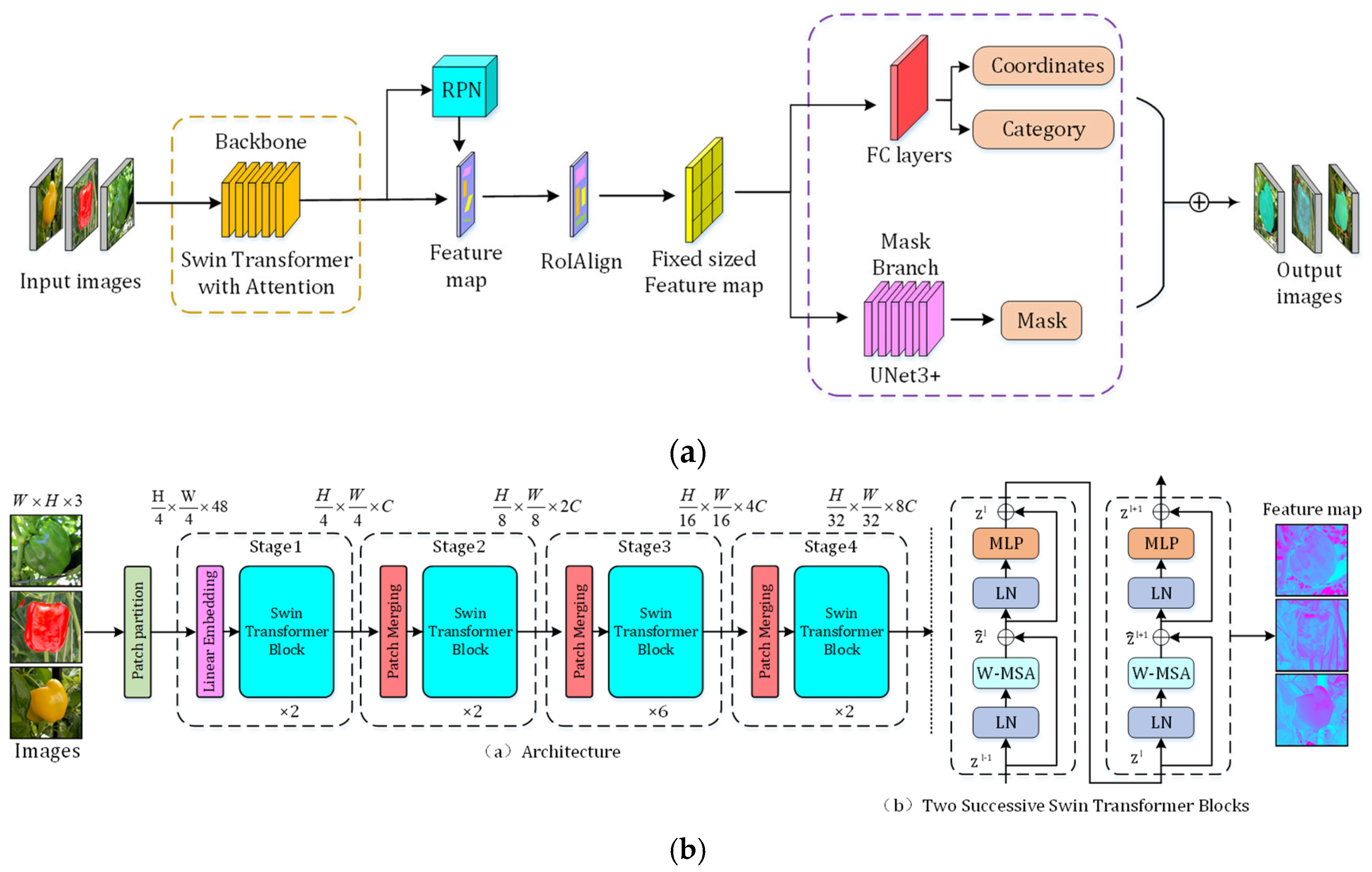
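The three augmentation operations named above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: the 4-neighbour Laplacian kernel, the noise amount of 0.02 and the standard deviation of 10 are hypothetical defaults, because the exact parameters are not given here.

```python
import numpy as np


def laplacian_sharpen(img: np.ndarray, strength: float = 1.0) -> np.ndarray:
    """Sharpen an HxWxC uint8 image by subtracting its 4-neighbour Laplacian."""
    f = img.astype(np.float64)
    # Edge-replicate padding keeps the output the same size as the input.
    p = np.pad(f, ((1, 1), (1, 1), (0, 0)), mode="edge")
    lap = (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]) - 4.0 * f
    # Equivalent to convolving with [[0,-1,0],[-1,5,-1],[0,-1,0]] when strength=1.
    return np.clip(f - strength * lap, 0, 255).astype(np.uint8)


def salt_and_pepper(img: np.ndarray, amount: float = 0.02, rng=None) -> np.ndarray:
    """Set a fraction `amount` of pixels to black (pepper) or white (salt)."""
    rng = np.random.default_rng() if rng is None else rng
    out = img.copy()
    u = rng.random(img.shape[:2])        # one draw per pixel, shared by all channels
    out[u < amount / 2] = 0              # pepper
    out[u > 1 - amount / 2] = 255        # salt
    return out


def gaussian_noise(img: np.ndarray, sigma: float = 10.0, rng=None) -> np.ndarray:
    """Add zero-mean Gaussian noise with standard deviation `sigma` (pixel units)."""
    rng = np.random.default_rng() if rng is None else rng
    noisy = img.astype(np.float64) + rng.normal(0.0, sigma, img.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.integers(0, 256, size=(500, 500, 3), dtype=np.uint8)
    augmented = [laplacian_sharpen(image),
                 salt_and_pepper(image, rng=rng),
                 gaussian_noise(image, rng=rng)]
    print([a.shape for a in augmented])
```

Each function returns a new image of the same shape and dtype, so every original sample can yield three extra training samples.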

- The Swin Transformer attention mechanism is introduced into the backbone network of Mask RCNN to enhance its feature extraction ability. The Swin Transformer reduces computational complexity by computing self-attention only within local windows and by shifting the window partition between successive layers, so that information can still flow across neighbouring windows.

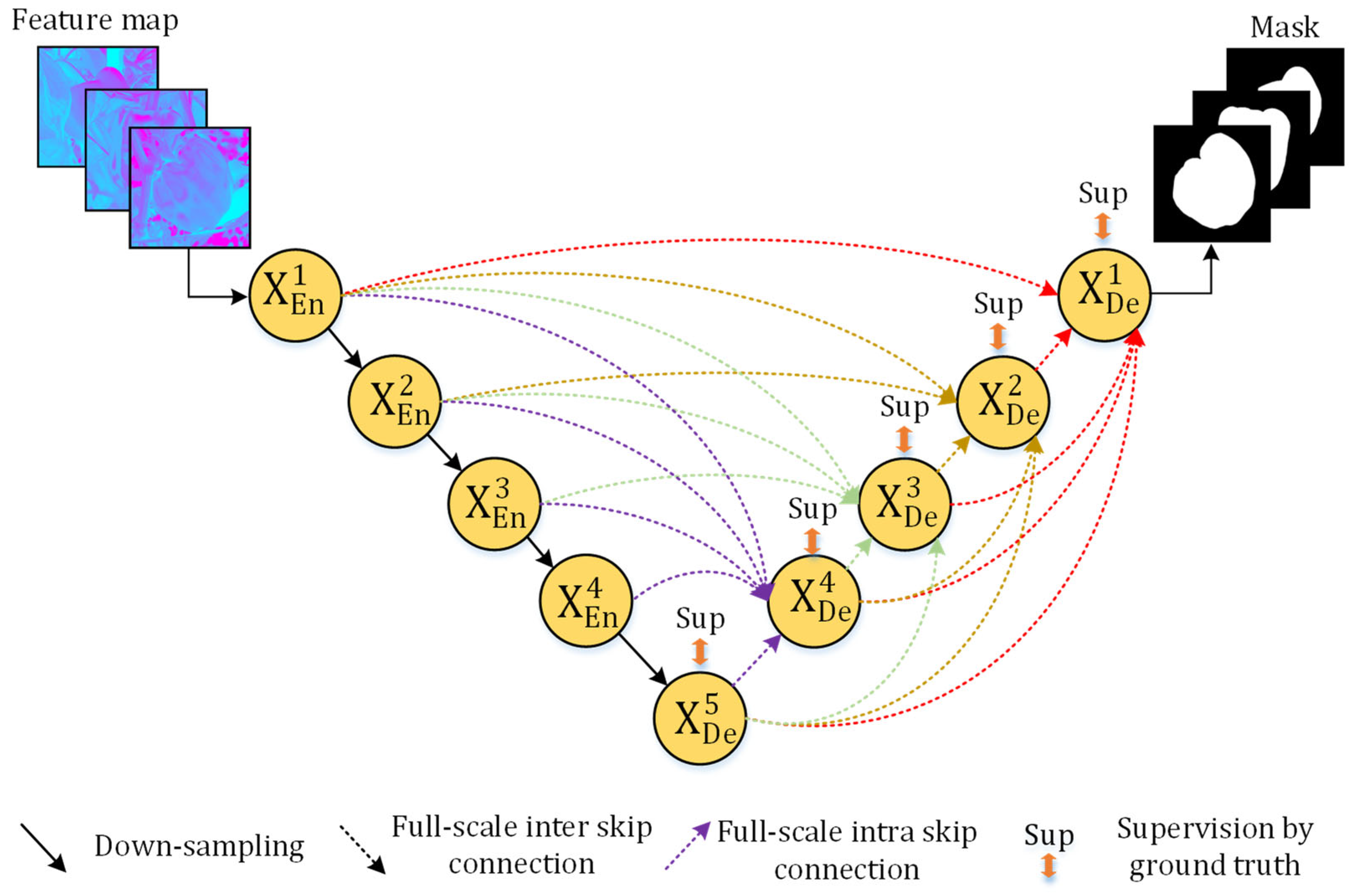
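The complexity saving and the shifted-window step described above can be illustrated with a short NumPy sketch. The two cost formulas are those given in the Swin Transformer paper (global attention grows with (hw)², window attention with M²·hw for window size M); the feature size 56 × 56 × 96 and window size 7 are illustrative Swin-T values, not necessarily the configuration used by the authors. The attention mask that Swin applies to shifted windows is omitted.

```python
import numpy as np


def msa_flops(h: int, w: int, c: int) -> int:
    """Global multi-head self-attention cost: 4hwC^2 + 2(hw)^2 C."""
    return 4 * h * w * c**2 + 2 * (h * w) ** 2 * c


def wmsa_flops(h: int, w: int, c: int, m: int = 7) -> int:
    """Window-based self-attention cost: 4hwC^2 + 2 M^2 hw C."""
    return 4 * h * w * c**2 + 2 * m**2 * h * w * c


def window_partition(x: np.ndarray, m: int) -> np.ndarray:
    """Split an (H, W, C) map into non-overlapping m x m windows -> (nW, m*m, C)."""
    h, w, c = x.shape
    x = x.reshape(h // m, m, w // m, m, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, m * m, c)


def shifted_windows(x: np.ndarray, m: int) -> np.ndarray:
    """Cyclically shift the map by m//2 pixels, then partition into windows."""
    shifted = np.roll(x, shift=(-(m // 2), -(m // 2)), axis=(0, 1))
    return window_partition(shifted, m)


if __name__ == "__main__":
    h = w = 56
    c = 96
    print("cost ratio MSA / W-MSA:", round(msa_flops(h, w, c) / wmsa_flops(h, w, c), 1))
    feat = np.zeros((h, w, c))
    print(window_partition(feat, 7).shape)   # 64 windows of 49 tokens each
```

For these sizes the global attention cost is about 13.8 times the windowed cost, and the gap widens as the feature map grows because the MSA term is quadratic in hw.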

- UNet3+ replaces the fully convolutional network (FCN) used as the mask branch in the original algorithm, further improving the segmentation quality of the mask. With its full-scale skip connections, the UNet3+ network can combine shallow (fine-grained) and deep (semantic) feature information.

- Ablation experiments were designed to examine how each of the above improvements affects the performance of Mask RCNN in sweet pepper instance segmentation. In addition, the proposed algorithm was compared with other instance segmentation algorithms to evaluate its performance.
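The full-scale skip connection of UNet3+ mentioned in the contributions can be sketched as follows: at one decoder resolution, feature maps from every scale are resized to that resolution (shallower, larger maps by max-pooling; deeper, smaller maps by upsampling), projected to a common channel count and concatenated. This is a simplified NumPy illustration, not the authors' mask head: the per-branch 3 × 3 convolution is replaced by a random 1 × 1 projection, bilinear upsampling by nearest-neighbour, and the five-level layout with 64 channels per branch follows the UNet3+ paper rather than any setting stated here.

```python
import numpy as np


def max_pool(x: np.ndarray, k: int) -> np.ndarray:
    """Non-overlapping k x k max-pooling of an (H, W, C) map."""
    h, w, c = x.shape
    return x.reshape(h // k, k, w // k, k, c).max(axis=(1, 3))


def upsample(x: np.ndarray, k: int) -> np.ndarray:
    """Nearest-neighbour upsampling by factor k (UNet3+ itself uses bilinear)."""
    return x.repeat(k, axis=0).repeat(k, axis=1)


def full_scale_node(features, target: int, out_ch: int = 64, rng=None) -> np.ndarray:
    """Fuse square feature maps from all scales at a target x target decoder node.

    features: list of (H_i, H_i, C_i) maps, i.e. shallower encoder maps,
    the same-level encoder map and deeper decoder maps.
    """
    rng = np.random.default_rng(0) if rng is None else rng
    parts = []
    for f in features:
        size = f.shape[0]
        if size > target:
            f = max_pool(f, size // target)     # shallower, larger map: pool down
        elif size < target:
            f = upsample(f, target // size)     # deeper, smaller map: scale up
        # Stand-in for the per-branch 3x3 conv: random 1x1 projection + ReLU.
        proj = rng.standard_normal((f.shape[2], out_ch)) / np.sqrt(f.shape[2])
        parts.append(np.maximum(f @ proj, 0.0))
    return np.concatenate(parts, axis=2)         # (target, target, out_ch * len(features))


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    sizes, chans = [64, 32, 16, 8, 4], [16, 32, 64, 128, 256]
    feats = [rng.random((s, s, c)) for s, c in zip(sizes, chans)]
    print(full_scale_node(feats, target=16).shape)   # 5 branches x 64 channels
```

Because every decoder node sees all five scales at once, fine boundary detail from shallow layers and semantic context from deep layers reach the mask prediction together, which is the property the contribution relies on.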

2. Materials and Methods

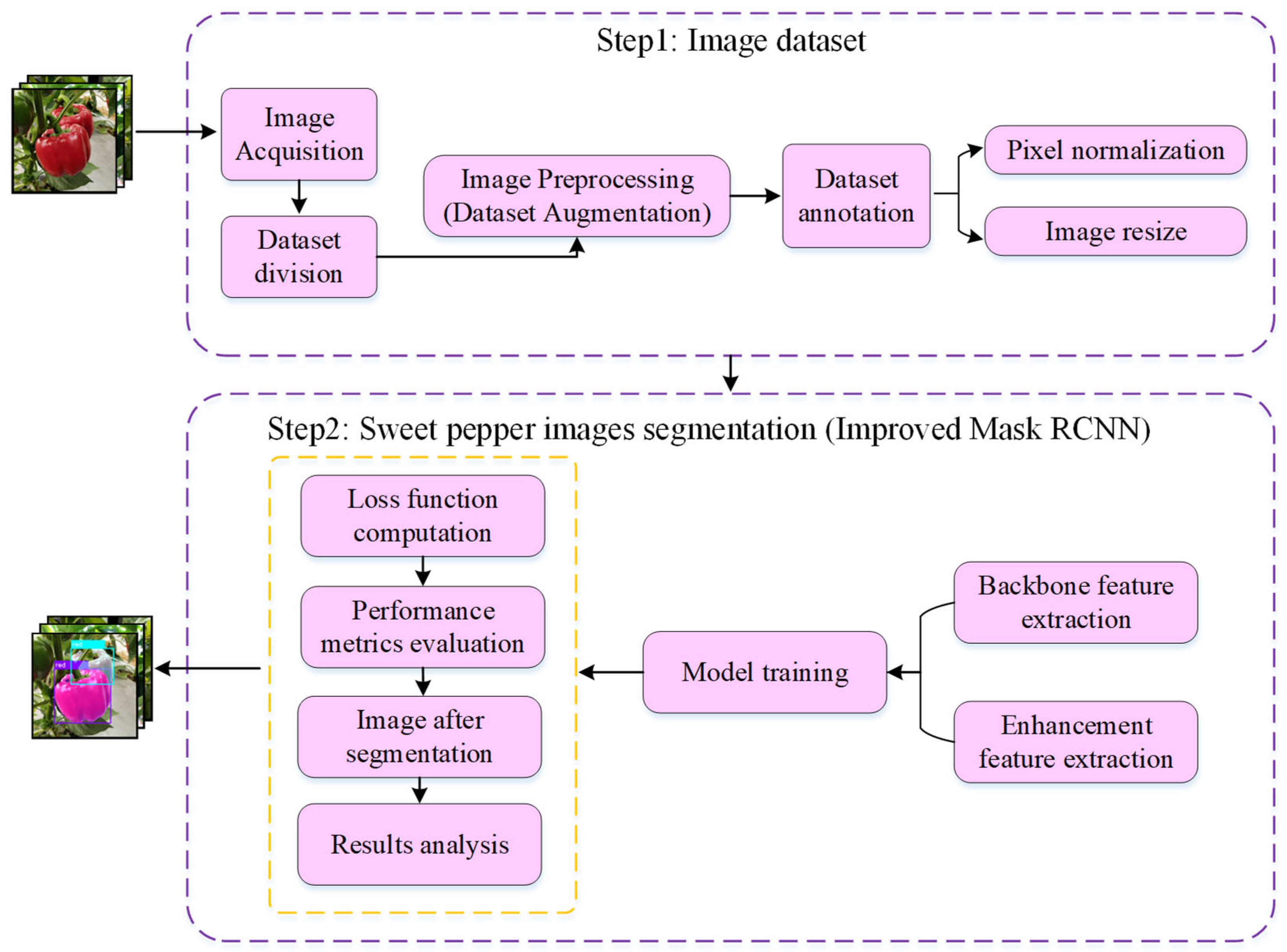

2.1. The Overall Sweet Pepper Segmentation Framework

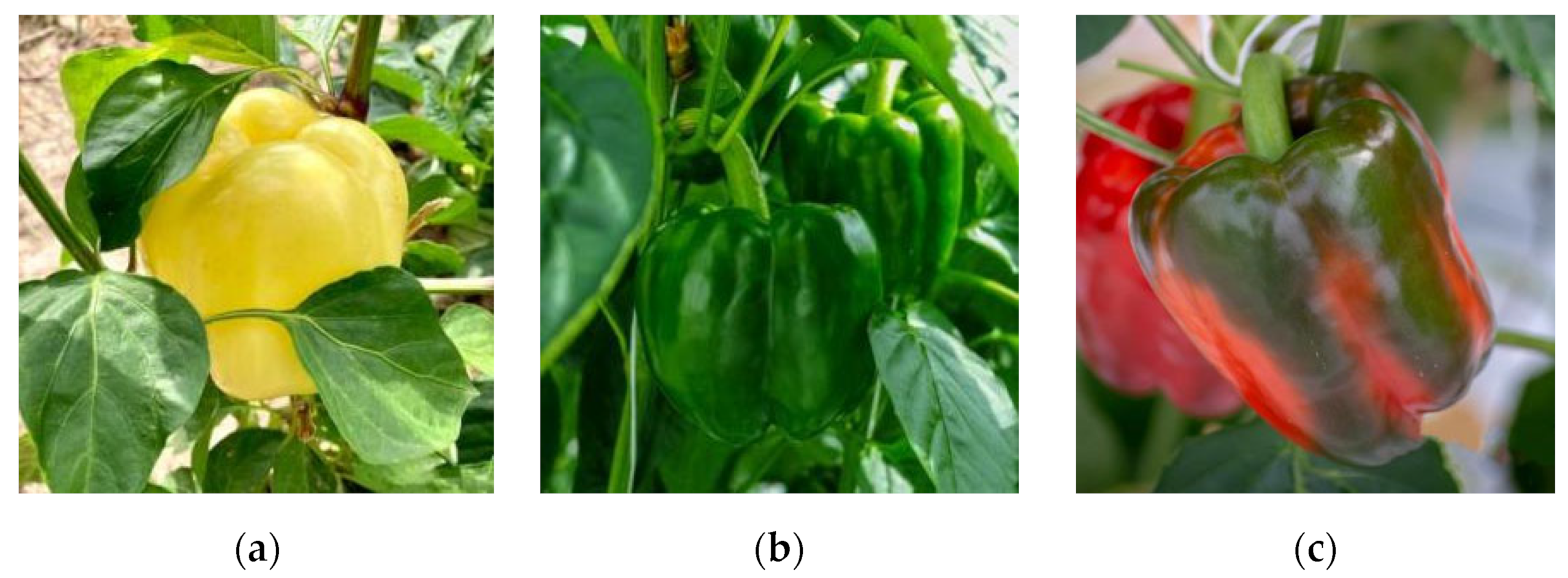

2.2. Image Acquisition

2.3. Image Preprocessing

2.4. Dataset Annotation

2.5. Improved Mask RCNN Instance Segmentation Algorithm

2.5.1. Feature Extraction

2.5.2. Generation of Region of Interest (RoI) and RoIAlign
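RoIAlign, introduced with Mask RCNN, pools each region of interest into a fixed-size grid without rounding the box coordinates: every output bin averages feature values obtained by bilinear interpolation at regularly spaced sample points. A minimal single-channel NumPy sketch follows; the function names, the 2 × 2 sampling ratio and the pixel-coordinate convention (no half-pixel offset) are illustrative assumptions rather than the authors' settings.

```python
import numpy as np


def bilinear(feat: np.ndarray, y: float, x: float) -> float:
    """Bilinearly interpolate a 2-D feature map at real-valued (y, x)."""
    h, w = feat.shape
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    return ((1 - dy) * (1 - dx) * feat[y0, x0] + (1 - dy) * dx * feat[y0, x1]
            + dy * (1 - dx) * feat[y1, x0] + dy * dx * feat[y1, x1])


def roi_align(feat: np.ndarray, box, out_size: int = 7,
              sampling_ratio: int = 2, spatial_scale: float = 1.0) -> np.ndarray:
    """Pool one RoI box = (x1, y1, x2, y2) into an out_size x out_size grid."""
    x1, y1, x2, y2 = (v * spatial_scale for v in box)   # no quantisation, unlike RoIPool
    bin_h = (y2 - y1) / out_size
    bin_w = (x2 - x1) / out_size
    out = np.zeros((out_size, out_size))
    for i in range(out_size):
        for j in range(out_size):
            vals = []
            for sy in range(sampling_ratio):
                for sx in range(sampling_ratio):
                    # Regularly spaced sample point inside bin (i, j).
                    y = y1 + (i + (sy + 0.5) / sampling_ratio) * bin_h
                    x = x1 + (j + (sx + 0.5) / sampling_ratio) * bin_w
                    vals.append(bilinear(feat, y, x))
            out[i, j] = np.mean(vals)
    return out


if __name__ == "__main__":
    ramp = np.tile(np.arange(16.0), (16, 1))    # feature value equals its x coordinate
    print(roi_align(ramp, (2, 2, 10, 10), out_size=4))
```

On a linear ramp the pooled values equal the bin-centre coordinates exactly, which shows that no information is lost to coordinate rounding.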

2.5.3. Mask Head

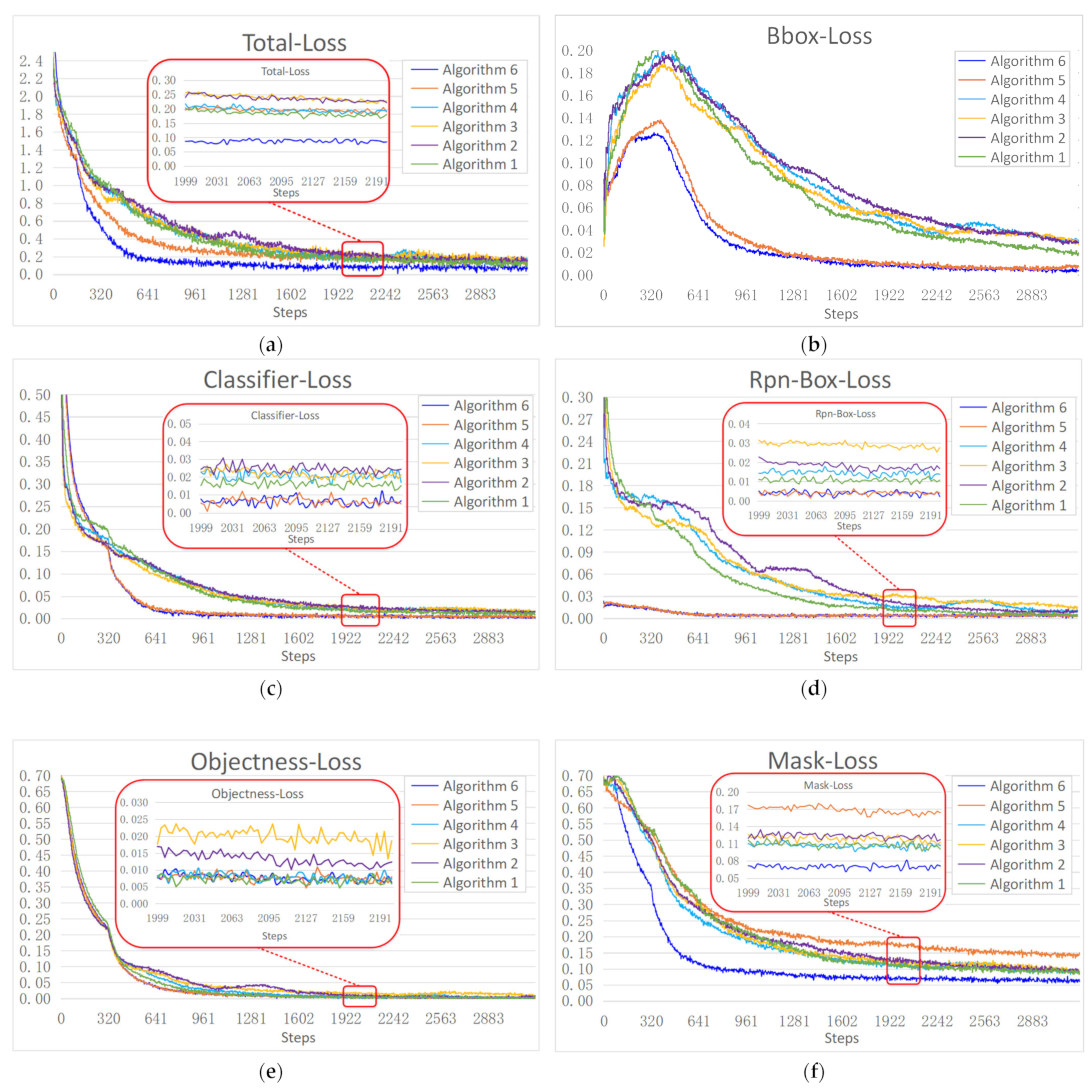

2.5.4. Loss Function
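Mask RCNN, as defined by He et al., is trained with the multi-task loss L = L_cls + L_box + L_mask, where L_mask is the average per-pixel binary cross-entropy evaluated only on the mask channel of the ground-truth class. The NumPy sketch below computes this standard formulation for a single positive RoI; it is not necessarily the authors' exact loss, and the box-loss normalisation used by real implementations is omitted.

```python
import numpy as np


def cls_loss(logits: np.ndarray, label: int) -> float:
    """Softmax cross-entropy for one RoI."""
    z = logits - logits.max()                  # shift for numerical stability
    return float(np.log(np.exp(z).sum()) - z[label])


def smooth_l1(pred: np.ndarray, target: np.ndarray, beta: float = 1.0) -> float:
    """Smooth L1 box-regression loss summed over the 4 box deltas."""
    d = np.abs(pred - target)
    return float(np.where(d < beta, 0.5 * d**2 / beta, d - 0.5 * beta).sum())


def mask_loss(mask_logits: np.ndarray, gt_mask: np.ndarray, label: int) -> float:
    """Mean per-pixel BCE on the mask predicted for the ground-truth class only.

    mask_logits: (num_classes, m, m), indexed like the classification logits.
    gt_mask: (m, m) binary target.
    """
    x = mask_logits[label]
    # Stable BCE-with-logits: max(x, 0) - x*y + log(1 + exp(-|x|)).
    bce = np.maximum(x, 0) - x * gt_mask + np.log1p(np.exp(-np.abs(x)))
    return float(bce.mean())


def mask_rcnn_loss(logits, box_pred, box_target, mask_logits, gt_mask, label) -> float:
    """L = L_cls + L_box + L_mask for one positive RoI."""
    return (cls_loss(logits, label) + smooth_l1(box_pred, box_target)
            + mask_loss(mask_logits, gt_mask, label))
```

Using a per-class sigmoid mask instead of a softmax over classes decouples mask prediction from classification, which is the design choice He et al. motivate for L_mask.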

2.6. Network Training

2.7. Evaluation Metrics for Network Model Performance

3. Results

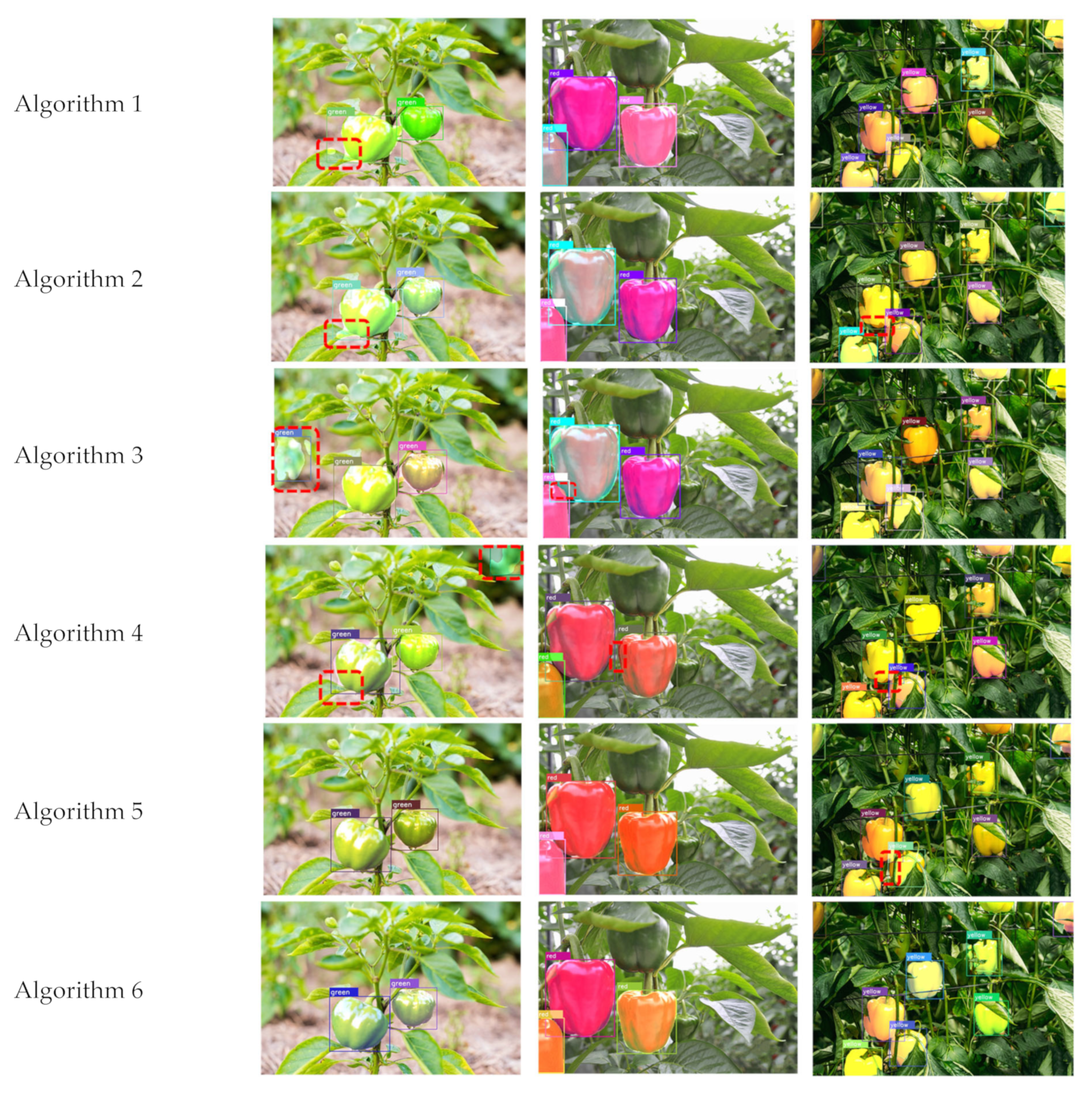

3.1. Qualitative Analysis of Improved Mask RCNN Model Performance

3.2. Ablation Experiment

3.3. Analysis of Segmentation Results of Different Types of Sweet Pepper

3.4. Effect of Data Augmentation on Segmentation Results

3.5. Comparison of Different Instance Segmentation Algorithms

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Category | Training Set | Validation Set |
|---|---|---|
| Green | 632 | 126 |
| Red | 638 | 128 |
| Yellow | 635 | 127 |

| Parameter | Value |
|---|---|
| CPU | Intel Core i9-10900K |
| Memory | 32 GB |
| GPU | NVIDIA GeForce RTX 2080 Ti |
| Operating system | Windows 10 |
| Development tool | PyCharm |
| Network framework | PyTorch 1.10.0 |
| Batch size | 2 |
| Weight decay | 0.0005 |
| Base learning rate | 0.004 |
| Epochs | 10 |
| Momentum | 0.9 |
| Optimizer | SGD |
| Input image size | 500 × 500 × 3 (RGB) |
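The optimizer settings in the table above (SGD, learning rate 0.004, momentum 0.9, weight decay 0.0005) correspond to the update rule documented for PyTorch's `torch.optim.SGD` with its default `dampening=0` and `nesterov=False`. The plain NumPy re-implementation below makes that rule explicit; the class name is hypothetical, and in practice the authors would simply have passed these values to the PyTorch optimizer.

```python
import numpy as np

# Hyperparameters from the training-configuration table.
LR, MOMENTUM, WEIGHT_DECAY = 0.004, 0.9, 0.0005


class SGDMomentum:
    """SGD with L2 weight decay and heavy-ball momentum (dampening 0, no Nesterov)."""

    def __init__(self, lr=LR, momentum=MOMENTUM, weight_decay=WEIGHT_DECAY):
        self.lr, self.momentum, self.wd = lr, momentum, weight_decay
        self.buf = None                      # momentum buffer, created on first step

    def step(self, param: np.ndarray, grad: np.ndarray) -> np.ndarray:
        g = grad + self.wd * param           # weight decay folded into the gradient
        if self.buf is None:
            self.buf = g.copy()              # first step: buffer = gradient
        else:
            self.buf = self.momentum * self.buf + g
        return param - self.lr * self.buf


if __name__ == "__main__":
    # Minimise f(theta) = theta^2 / 2, whose gradient is theta itself.
    opt, theta = SGDMomentum(), np.array([1.0])
    for _ in range(500):
        theta = opt.step(theta, theta.copy())
    print(theta)
```

With momentum 0.9 the effective step size is roughly ten times the base learning rate once the buffer has built up, which is one reason a small base rate such as 0.004 is workable.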

| Number | Algorithm | Backbone | Mask Head | (Bbox) AP (%) | (Bbox) AR (%) | (Seg) AP (%) | F1 (%) | Speed (s) |
|---|---|---|---|---|---|---|---|---|
| Algorithm 1 | Mask RCNN | ResNet-50 | FCN | 63.6 | 67.6 | 61.8 | 65.5 | 0.24 |
| Algorithm 2 | Mask RCNN | ResNet-101 | FCN | 71.4 | 75.3 | 65.3 | 73.3 | 0.23 |
| Algorithm 3 | Mask RCNN | ResNeXt-50 | FCN | 70.0 | 72.7 | 64.6 | 71.3 | 0.20 |
| Algorithm 4 | Mask RCNN | ResNeXt-101 | FCN | 73.7 | 76.6 | 67.2 | 75.1 | 0.26 |
| Algorithm 5 | Mask RCNN | Swin Transformer | FCN | 94.4 | 97.2 | 61.7 | 95.8 | 0.19 |
| Algorithm 6 | Mask RCNN | Swin Transformer | UNet3+ | 95.7 | 98.3 | 84.5 | 96.9 | 0.20 |
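The F1 column in the ablation table appears to be the harmonic mean of the (Bbox) AP and (Bbox) AR columns, treated as precision and recall. The short check below recomputes it for Algorithms 1 to 5 and reproduces the reported values after rounding to one decimal place; this reading of the column is an inference from the numbers, not a definition stated in this section.

```python
def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, both given in percent."""
    return 2 * precision * recall / (precision + recall)


# (Bbox) AP and (Bbox) AR for Algorithms 1-5, copied from the ablation table.
rows = {
    "Algorithm 1": (63.6, 67.6),
    "Algorithm 2": (71.4, 75.3),
    "Algorithm 3": (70.0, 72.7),
    "Algorithm 4": (73.7, 76.6),
    "Algorithm 5": (94.4, 97.2),
}

for name, (ap, ar) in rows.items():
    print(name, round(f1(ap, ar), 1))
```

Because the harmonic mean is dominated by the smaller of the two inputs, a high F1 requires AP and AR to be high together, which is why the Swin Transformer rows stand out so clearly.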

| Category | (Bbox) AP (%) | (Bbox) AR (%) | (Seg) AP (%) | F1 (%) |
|---|---|---|---|---|
| Green | 94.1 | 99.8 | 74.3 | 96.8 |
| Red | 96.5 | 97.5 | 89.3 | 97.0 |
| Yellow | 96.5 | 97.7 | 89.8 | 97.1 |

| Method | (Bbox) AP (%) | (Bbox) AR (%) | (Seg) AP (%) | F1 (%) |
|---|---|---|---|---|
| No data augmentation | 95.7 | 98.3 | 84.5 | 97.0 |
| Laplacian sharpening | 97.1 | 98.9 | 93.1 | 98.0 |
| Salt-and-pepper noise | 97.3 | 98.9 | 93.2 | 98.2 |
| Gaussian noise | 96.2 | 98.5 | 92.7 | 97.3 |
| All three methods combined | 98.1 | 99.4 | 94.8 | 98.8 |

| Algorithm | (Bbox) AP (%) | (Bbox) AR (%) | (Seg) AP (%) | F1 (%) | Speed (s) |
|---|---|---|---|---|---|
| BlendMask | 47.5 | 56.9 | 48.1 | 51.8 | 0.36 |
| BoxInst | 49.6 | 65.7 | 41.8 | 56.5 | 0.30 |
| CondInst | 40.1 | 61.3 | 48.2 | 48.5 | 0.31 |
| Mask RCNN | 73.7 | 76.6 | 67.2 | 75.1 | 0.26 |
| Improved Mask RCNN | 95.7 | 98.3 | 84.5 | 96.9 | 0.20 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cong, P.; Li, S.; Zhou, J.; Lv, K.; Feng, H. Research on Instance Segmentation Algorithm of Greenhouse Sweet Pepper Detection Based on Improved Mask RCNN. Agronomy 2023, 13, 196. https://doi.org/10.3390/agronomy13010196
