Using Image Analysis and Regression Modeling to Develop a Diagnostic Tool for Peanut Foliar Symptoms

Abstract

1. Introduction

2. Materials and Methods

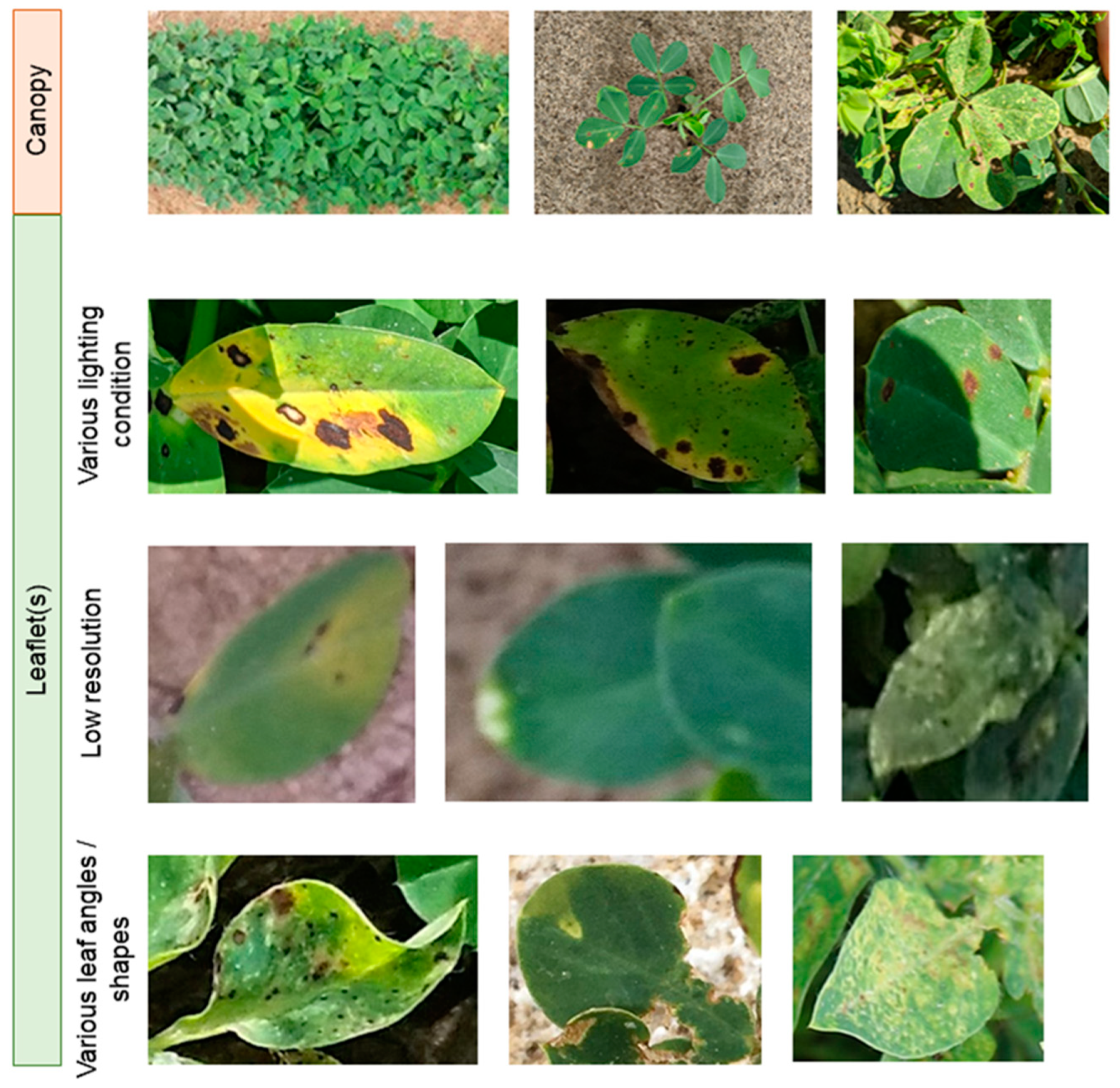

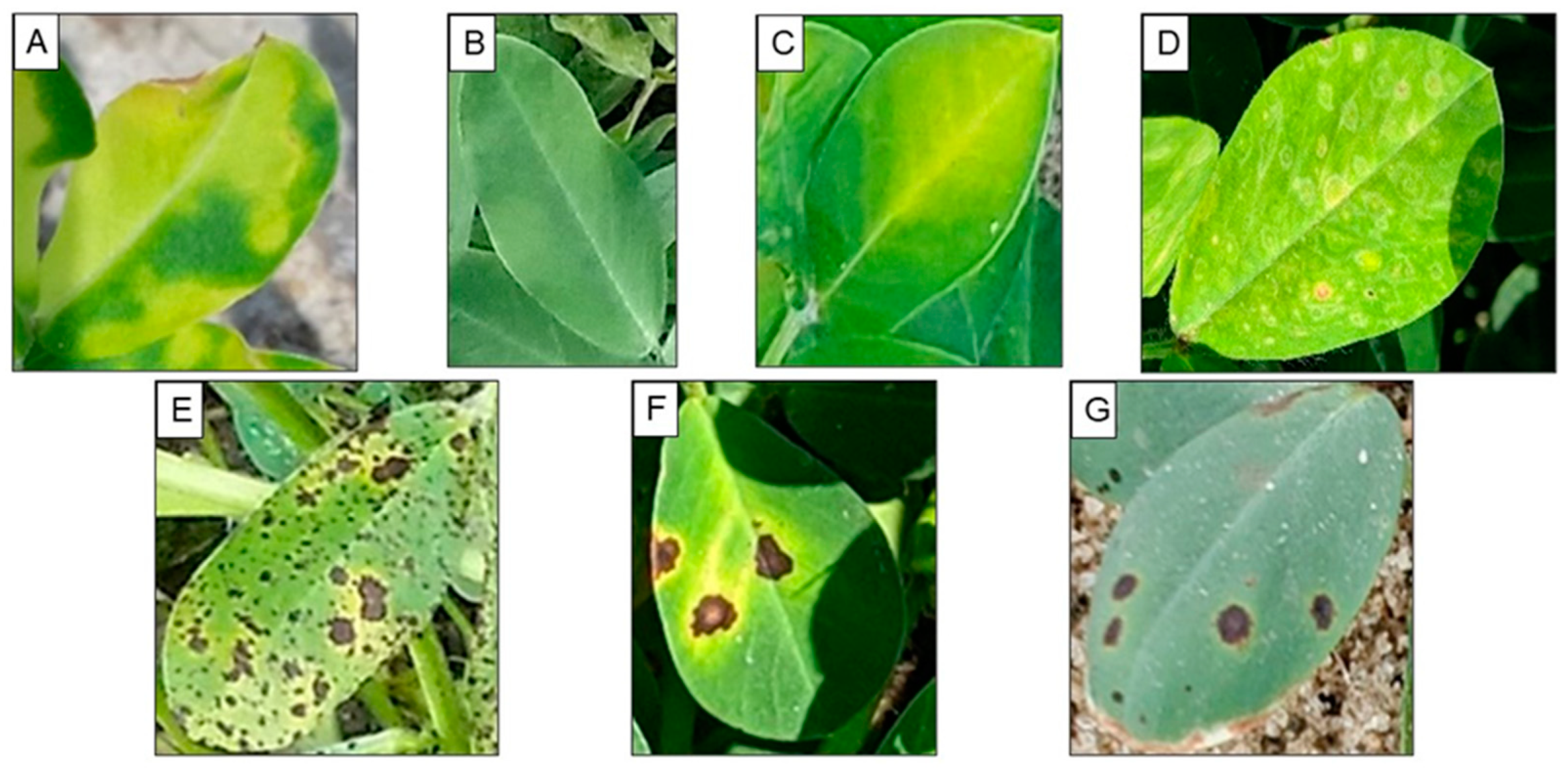

2.1. Image Acquisition

2.2. Image Processing

2.3. Data Analysis

3. Results

3.1. Model Performance

3.2. Impact of Image Quality on Model Accuracy

4. Discussion

5. Conclusions



| HCI Color | Red | Green | Blue |
|---|---|---|---|
| 0 | 185 | 152 | 49 |
| 1 | 81 | 116 | 60 |
| 2 | 50 | 37 | 41 |
| 3 | 159 | 201 | 81 |
| 4 | 193 | 204 | 72 |
| 5 | 129 | 417 | 36 |
| 6 | 150 | 168 | 137 |
| 7 | 209 | 195 | 114 |
| 8 | 96 | 73 | 75 |
| 9 | 237 | 227 | 49 |
| 10 | 231 | 238 | 218 |
| 11 | 202 | 213 | 155 |
| 12 | 152 | 111 | 104 |
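
The table above lists reference colors by index with their RGB values. One plausible way to use such a palette is to assign every pixel to its nearest reference color and summarize an image by the fraction of pixels in each color. The sketch below illustrates that idea only; the Euclidean RGB distance, the function names, and the use of just the first five palette entries are assumptions for illustration, not the procedure confirmed by the source.

```python
from collections import Counter

# First five palette entries copied from the table (index -> (R, G, B)).
# Restricting to five entries is an illustrative choice, not the full palette.
PALETTE = {
    0: (185, 152, 49),
    1: (81, 116, 60),
    2: (50, 37, 41),
    3: (159, 201, 81),
    4: (193, 204, 72),
}


def nearest_color_index(pixel, palette=PALETTE):
    """Return the palette index closest to `pixel` (assumed: squared Euclidean RGB distance)."""
    r, g, b = pixel

    def squared_distance(index):
        pr, pg, pb = palette[index]
        return (r - pr) ** 2 + (g - pg) ** 2 + (b - pb) ** 2

    return min(palette, key=squared_distance)


def color_fractions(pixels, palette=PALETTE):
    """Return the fraction of pixels assigned to each palette index."""
    counts = Counter(nearest_color_index(p, palette) for p in pixels)
    total = len(pixels)
    return {index: counts.get(index, 0) / total for index in palette}


if __name__ == "__main__":
    # Hypothetical pixels, not taken from any image in the study.
    sample = [(185, 152, 49), (81, 116, 60), (80, 115, 60), (50, 37, 41)]
    print(color_fractions(sample))
```

Per-color fractions of this kind could then serve as predictors in a regression model, although the table alone does not say which features the authors actually used.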

| Model | Accuracy (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|
| Paraquat injury | 98.7 | 86.6 | 99.3 |
| Healthy | 91.6 | 76.3 | 95.8 |
| Hopperburn | 96.3 | 70.0 | 98.6 |
| Late leaf spot | 89.4 | 71.7 | 94.9 |
| Provost injury | 97.8 | 93.1 | 98.7 |
| Surfactant injury | 94.3 | 91.3 | 96.1 |
| Tomato spotted wilt | 95.2 | 73.5 | 98.1 |
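
The table above reports accuracy, sensitivity, and specificity for each symptom model. Assuming the standard binary-classification definitions (the table's own footnotes are not available here), all three follow from the four cells of a confusion matrix. The sketch below shows that arithmetic; the class name, function name, and counts are hypothetical and are not the study's data.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Counts for one binary model (symptom present vs. absent)."""
    true_pos: int   # symptom present, predicted present
    false_neg: int  # symptom present, predicted absent
    true_neg: int   # symptom absent, predicted absent
    false_pos: int  # symptom absent, predicted present


def classification_metrics(c: ConfusionCounts) -> dict:
    """Return accuracy, sensitivity, and specificity as percentages."""
    total = c.true_pos + c.false_neg + c.true_neg + c.false_pos
    return {
        "accuracy": 100 * (c.true_pos + c.true_neg) / total,
        "sensitivity": 100 * c.true_pos / (c.true_pos + c.false_neg),
        "specificity": 100 * c.true_neg / (c.true_neg + c.false_pos),
    }


if __name__ == "__main__":
    # Hypothetical counts chosen for easy arithmetic.
    counts = ConfusionCounts(true_pos=30, false_neg=10, true_neg=150, false_pos=10)
    print(classification_metrics(counts))
    # {'accuracy': 90.0, 'sensitivity': 75.0, 'specificity': 93.75}
```

When event images are rare relative to non-event images, accuracy is dominated by specificity, which is one reason a model can pair high accuracy with noticeably lower sensitivity.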

| Model | Size Class | Total Images | Event Images | Accuracy (%) |
|---|---|---|---|---|
| Paraquat injury | Class 1 | 377 | 4 | 94.2 |
| Paraquat injury | Class 2 | 164 | 11 | 95.7 |
| Paraquat injury | Class 3 | 99 | 11 | 85.9 |
| Paraquat injury | Class 4 | 201 | 20 | 96.0 |
| Healthy | Class 1 | 377 | 169 | 86.7 |
| Healthy | Class 2 | 164 | 14 | 82.3 |
| Healthy | Class 3 | 99 | 6 | 72.7 |
| Healthy | Class 4 | 201 | 8 | 82.1 |
| Hopperburn | Class 1 | 377 | 26 | 95.2 |
| Hopperburn | Class 2 | 164 | 6 | 84.1 |
| Hopperburn | Class 3 | 99 | 9 | 72.7 |
| Hopperburn | Class 4 | 201 | 23 | 83.1 |
| Late leaf spot | Class 1 | 377 | 115 | 82.0 |
| Late leaf spot | Class 2 | 164 | 42 | 75.6 |
| Late leaf spot | Class 3 | 99 | 12 | 58.6 |
| Late leaf spot | Class 4 | 201 | 31 | 76.6 |
| Provost injury | Class 1 | 377 | 36 | 95.2 |
| Provost injury | Class 2 | 164 | 28 | 82.9 |
| Provost injury | Class 3 | 99 | 19 | 65.7 |
| Provost injury | Class 4 | 201 | 49 | 92.5 |
| Surfactant injury | Class 1 | 377 | 44 | 89.7 |
| Surfactant injury | Class 2 | 164 | 77 | 78.7 |
| Surfactant injury | Class 3 | 99 | 60 | 61.6 |
| Surfactant injury | Class 4 | 201 | 124 | 78.1 |
| Tomato spotted wilt | Class 1 | 377 | 19 | 90.5 |
| Tomato spotted wilt | Class 2 | 164 | 24 | 81.7 |
| Tomato spotted wilt | Class 3 | 99 | 12 | 71.7 |
| Tomato spotted wilt | Class 4 | 201 | 27 | 93.0 |

| Model | Brightness Class | Total Images | Event Images | Accuracy (%) |
|---|---|---|---|---|
| Paraquat injury | Class 1 | 125 | 1 | 84.0 |
| Paraquat injury | Class 2 | 259 | 4 | 92.7 |
| Paraquat injury | Class 3 | 352 | 31 | 96.6 |
| Paraquat injury | Class 4 | 105 | 10 | 83.8 |
| Healthy | Class 1 | 125 | 16 | 72.8 |
| Healthy | Class 2 | 259 | 66 | 83.8 |
| Healthy | Class 3 | 352 | 87 | 87.5 |
| Healthy | Class 4 | 105 | 28 | 66.7 |
| Hopperburn | Class 1 | 125 | 9 | 74.4 |
| Hopperburn | Class 2 | 259 | 25 | 83.8 |
| Hopperburn | Class 3 | 352 | 27 | 90.3 |
| Hopperburn | Class 4 | 105 | 3 | 76.2 |
| Late leaf spot | Class 1 | 125 | 34 | 61.6 |
| Late leaf spot | Class 2 | 259 | 51 | 81.5 |
| Late leaf spot | Class 3 | 352 | 80 | 83.0 |
| Late leaf spot | Class 4 | 105 | 35 | 54.3 |
| Provost injury | Class 1 | 125 | 42 | 72.8 |
| Provost injury | Class 2 | 259 | 35 | 93.1 |
| Provost injury | Class 3 | 352 | 47 | 92.6 |
| Provost injury | Class 4 | 105 | 8 | 87.6 |
| Surfactant injury | Class 1 | 125 | 62 | 67.2 |
| Surfactant injury | Class 2 | 259 | 93 | 85.3 |
| Surfactant injury | Class 3 | 352 | 123 | 91.8 |
| Surfactant injury | Class 4 | 105 | 27 | 72.4 |
| Tomato spotted wilt | Class 1 | 125 | 5 | 86.4 |
| Tomato spotted wilt | Class 2 | 259 | 26 | 92.3 |
| Tomato spotted wilt | Class 3 | 352 | 40 | 88.1 |
| Tomato spotted wilt | Class 4 | 105 | 11 | 76.2 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Renfroe-Becton, H.; Kirk, K.R.; Anco, D.J. Using Image Analysis and Regression Modeling to Develop a Diagnostic Tool for Peanut Foliar Symptoms. Agronomy 2022, 12, 2712. https://doi.org/10.3390/agronomy12112712