Tree Trunk and Obstacle Detection in Apple Orchard Based on Improved YOLOv5s Model

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Acquisition and Dataset Preparation

2.2. Improvement of YOLOv5s Network Architecture

2.3. Pruning of the YOLOv5s Model

2.4. Determination of the Route Direction of the Carrier Platform

2.5. Network Visualization Based on Grad-CAM
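
Grad-CAM, as formulated by Selvaraju et al. [23], works in three steps. It weights each feature map A^k of a chosen convolutional layer by the spatial average of the gradient of the class score y^c with respect to that map. It sums the weighted maps. It then applies a ReLU so that only regions with a positive influence on the class remain. The NumPy sketch below shows only this combination step. It assumes that the activations and gradients of one layer have already been extracted, for example with framework hooks. The text does not specify which YOLOv5s layer or output score was used for the visualizations in this study, and the function name `grad_cam` is hypothetical.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Combine one layer's activations and gradients into a Grad-CAM heat map.

    Assumption: both arrays have shape (K, H, W) and were already extracted
    from the network (e.g. via framework hooks); `gradients[k]` holds
    d y^c / d A^k for the target score y^c.
    """
    if activations.shape != gradients.shape or activations.ndim != 3:
        raise ValueError("expected two arrays of identical shape (K, H, W)")
    # alpha_k^c: average the gradient of each feature map over its H x W positions.
    weights = gradients.mean(axis=(1, 2))               # shape (K,)
    # Weighted sum of the K feature maps.
    cam = np.tensordot(weights, activations, axes=1)    # shape (H, W)
    # ReLU keeps only regions that raise the target score.
    cam = np.maximum(cam, 0.0)
    # Scale to [0, 1] for display; an all-zero map is returned unchanged.
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy example: map 0 supports the target score, map 1 suppresses it.
A = np.array([[[1.0, 0.0], [0.0, 0.0]],
              [[0.0, 0.0], [0.0, 2.0]]])
G = np.array([[[1.0, 1.0], [1.0, 1.0]],
              [[-1.0, -1.0], [-1.0, -1.0]]])
print(grad_cam(A, G))  # only the top-left cell is highlighted
```

In practice, the resulting H × W map would be upsampled to the input image size and overlaid on the image. That step is omitted here.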

3. Results

3.1. Model Pruning Operation

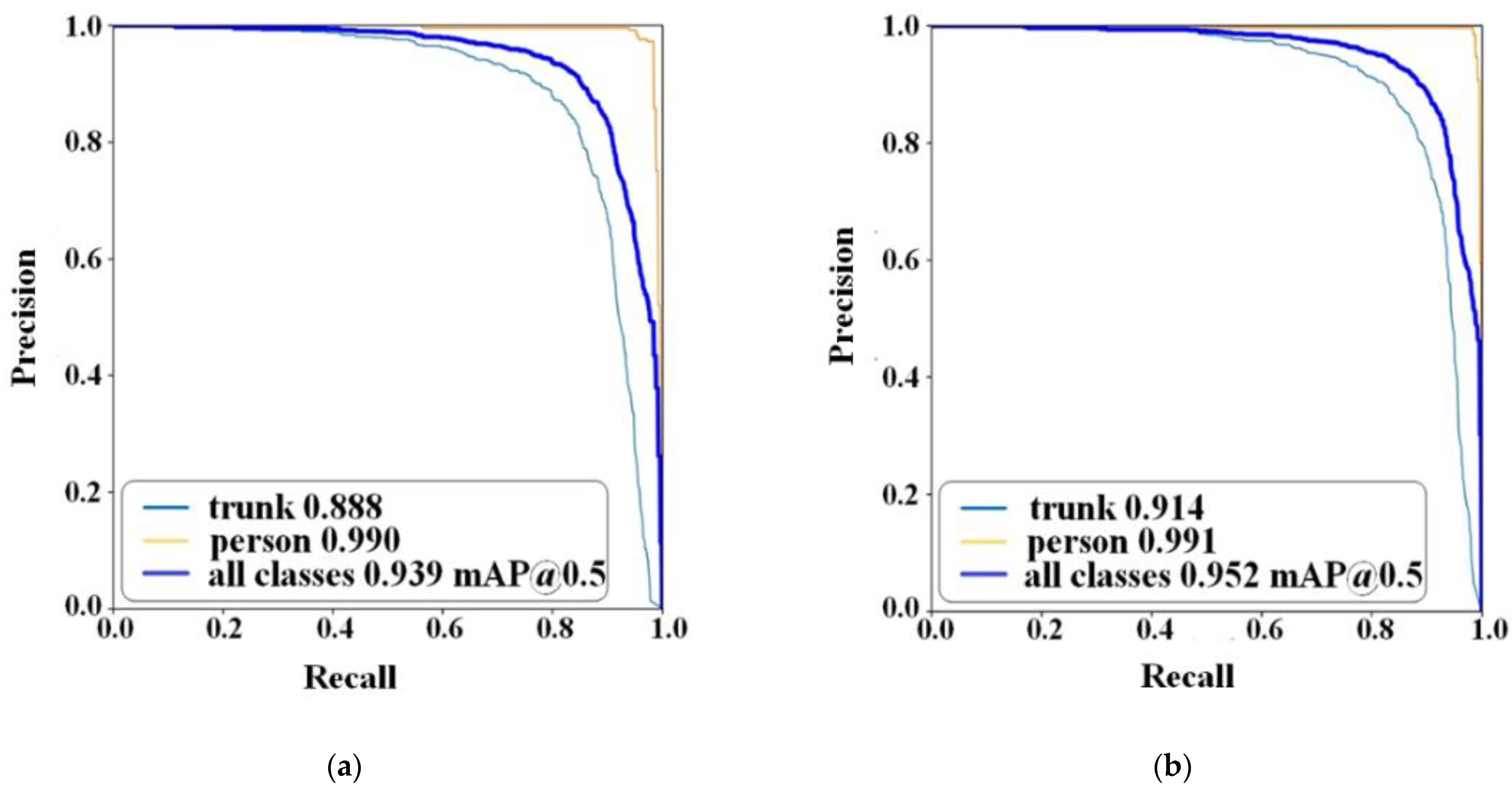

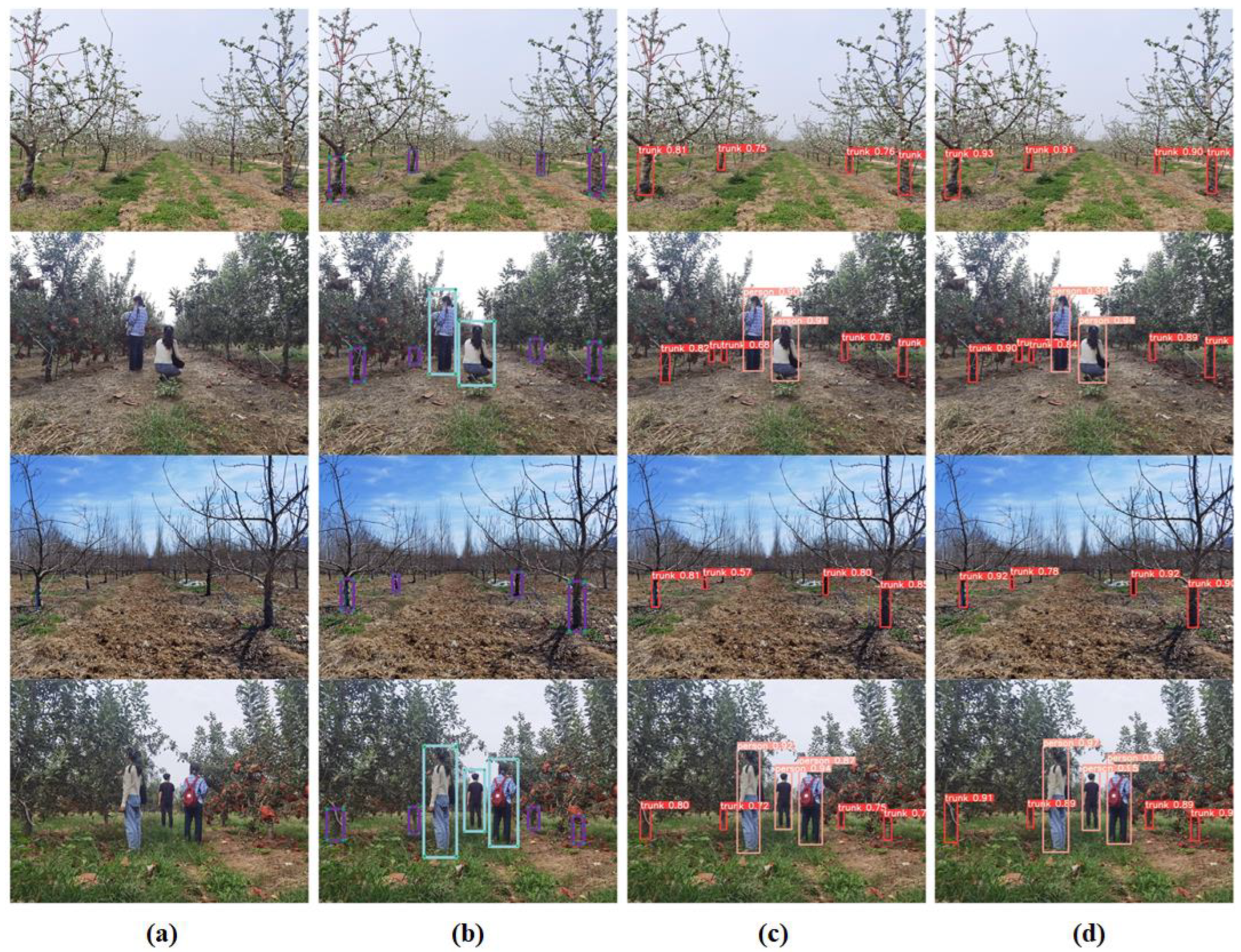

3.2. Model Performance Comparison before and after Improvement

3.3. Model Detection Performance Comparison among Four Seasons

3.4. Comparison under Different Lighting Conditions

3.5. In-Field Detection on a Carrier Platform

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Clark, B.; Jones, G.D.; Kendall, H.; Taylor, J.; Cao, Y.; Li, W.; Zhao, C.; Chen, J.; Yang, G.; Chen, L.; et al. A proposed framework for accelerating technology trajectories in agriculture: A case study in China. Front. Agric. Sci. Eng. 2018, 5, 485–498.

2. Li, Y.; Li, M.; Qi, J.; Zhou, D.; Liu, K. Detection of typical obstacles in orchards based on deep convolutional neural network. Comput. Electron. Agric. 2021, 181, 105932.

3. Harshe, K.D.; Gode, N.P.; Mangtani, P.P.; Patel, N.R. A review on orchard vehicles for obstacle detection. Int. J. Electr. Electron. Data Commun. 2013, 1, 69.

4. Malavazi, F.B.P.; Guyonneau, R.; Fasquel, J.B.; Lagrange, S.; Mercier, F. LiDAR-only based navigation algorithm for an autonomous agricultural robot. Comput. Electron. Agric. 2018, 154, 71–79.

5. Gu, C.; Zhai, C.; Wang, X.; Wang, S. CMPC: An Innovative Lidar-Based Method to Estimate Tree Canopy Meshing-Profile Volumes for Orchard Target-Oriented Spray. Sensors 2021, 21, 4252.

6. Kolb, A.; Meaclem, C.; Chen, X.Q.; Parker, R.; Milne, B. Tree trunk detection system using LiDAR for a semi-autonomous tree felling robot. In Proceedings of the 2015 IEEE 10th Conference on Industrial Electronics and Applications (ICIEA), Auckland, New Zealand, 15–17 June 2015; pp. 84–89.

7. Chen, X.; Wang, S.; Zhang, B.; Liang, L. Multi-feature fusion tree trunk detection and orchard mobile robot localization using camera/ultrasonic sensors. Comput. Electron. Agric. 2018, 147, 91–108.

8. Vodacek, A.; Hoffman, M.J.; Chen, B.; Uzkent, B. Feature Matching With an Adaptive Optical Sensor in a Ground Target Tracking System. IEEE Sens. J. 2015, 15, 510–519.

9. Zhang, X.; Karkee, M.; Zhang, Q.; Whiting, M.D. Computer vision-based tree trunk and branch identification and shaking points detection in Dense-Foliage canopy for automated harvesting of apples. J. Field Robot. 2021, 58, 476–493.

10. Wang, L.; Lan, Y.; Zhang, Y.; Zhang, H.; Tahir, M.N.; Ou, S.; Liu, X.; Chen, P. Applications and Prospects of Agricultural Unmanned Aerial Vehicle Obstacle Avoidance Technology in China. Sensors 2019, 19, 642.

11. Shalal, N.; Low, T.; McCarthy, C.; Hancock, N. A preliminary evaluation of vision and laser sensing for tree trunk detection and orchard mapping. In Proceedings of the Australasian Conference on Robotics and Automation (ACRA 2013), University of New South Wales, Sydney, Australia, 2–4 December 2013; pp. 80–89.

12. Freitas, G.; Hamner, B.; Bergerman, M.; Singh, S. A practical obstacle detection system for autonomous orchard vehicles. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Algarve, Portugal, 7–12 October 2012; pp. 3391–3398.

13. Bietresato, M.; Carabin, G.; Vidoni, R.; Gasparetto, A.; Mazzetto, F. Evaluation of a LiDAR-based 3D-stereoscopic vision system for crop-monitoring applications. Comput. Electron. Agric. 2016, 124, 1–13.

14. Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90.

15. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

16. Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

17. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

18. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

19. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

20. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

21. Yang, T.; Zhou, S.; Xu, A. Rapid Image Detection of Tree Trunks Using a Convolutional Neural Network and Transfer Learning. IAENG Int. J. Comput. Sci. 2021, 48, 257–265.

22. Zhang, Q.; Karkee, M.; Tabb, A. The use of agricultural robots in orchard management. arXiv 2019, arXiv:1907.13114.

23. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

24. Zhang, C.; Yong, L.; Chen, Y.; Zhang, S.; Ge, L.; Wang, S.; Li, W. A Rubber-Tapping Robot Forest Navigation and Information Collection System Based on 2D LiDAR and a Gyroscope. Sensors 2019, 19, 2136.

25. Zhang, X.; Li, X.; Zhang, B.; Zhou, J.; Tian, G.; Xiong, Y.; Gu, B. Automated robust crop-row detection in maize fields based on position clustering algorithm and shortest path method. Comput. Electron. Agric. 2018, 154, 165–175.

26. Chandra, A.L.; Desai, S.V.; Guo, W.; Balasubramanian, V.N. Computer Vision with Deep Learning for Plant Phenotyping in Agriculture: A Survey. arXiv 2020, arXiv:2006.11391.

27. Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

28. Wu, D.; Lv, S.; Jiang, M.; Song, H. Using channel pruning-based YOLO v4 deep learning algorithm for the real-time and accurate detection of apple flowers in natural environments. Comput. Electron. Agric. 2020, 178, 105742.

29. Li, Y.; Iida, M.; Suyama, T.; Suguri, M.; Masuda, R. Implementation of deep-learning algorithm for obstacle detection and collision avoidance for robotic harvester. Comput. Electron. Agric. 2020, 174, 105499.

30. Moghadam, P.; Starzyk, J.A.; Wijesoma, W.S. Fast Vanishing-Point Detection in Unstructured Environments. IEEE Trans. Image Process. 2012, 21, 425–430.

31. Zhou, Y.; Yang, Y.; Zhang, B.; Wen, X.; Yue, X.; Chen, L. Autonomous detection of crop rows based on adaptive multi-ROI in maize fields. Int. J. Agric. Biol. Eng. 2021, 14, 217–225.

32. Aguiar, A.S.; Monteiro, N.N.; Santos, F.N.D.; Pires, E.J.S.; Silva, D.; Sousa, A.J.; Boaventura-Cunha, J. Bringing semantics to the vineyard: An approach on deep learning-based vine trunk detection. Agriculture 2021, 11, 131.

33. Badeka, E.; Kalampokas, T.; Vrochidou, E.; Tziridis, K.; Papakostas, G.; Pachidis, T.; Kaburlasos, V. Real-time vineyard trunk detection for a grapes harvesting robot via deep learning. In Proceedings of the Thirteenth International Conference on Machine Vision, Rome, Italy, 2–6 November 2020; Osten, W., Nikolaev, D.P., Zhou, J., Eds.; International Society for Optics and Photonics (SPIE): Bellingham, WA, USA, 2021; Volume 11605, pp. 394–400.

34. Zhao, W.; Wang, X.; Qi, B.; Runge, T. Ground-Level Mapping and Navigating for Agriculture Based on IoT and Computer Vision. IEEE Access 2020, 8, 221975–221985.

| Configuration | Parameter |
|---|---|
| CPU | Intel(R) Core(TM) i5-9300H CPU @ 2.40 GHz |
| GPU | NVIDIA GeForce GTX 1650 |
| Development environment | Python 3.7, PyTorch 1.6, Anaconda3 |
| Operating system | Windows 10 (64-bit) |

| Model | Model Size/MB | Precision/% | Recall/% | mAP/% | Detection Time/ms |
|---|---|---|---|---|---|
| YOLOv5s | 27.50 | 86.20 | 99.00 | 93.90 | 35.00 |
| Improved YOLOv5s | 13.90 | 91.80 | 99.00 | 95.20 | 33.00 |
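
The differences in the comparison table are easier to read in two forms: model size and detection time as relative reductions, and precision, recall and mAP as absolute percentage-point differences. The short sketch below recomputes these figures from the tabulated values only. The helper name `relative_reduction` is illustrative and does not come from the study.

```python
# Values copied from the YOLOv5s vs. improved YOLOv5s comparison table.
baseline = {"size_mb": 27.50, "precision": 86.20, "recall": 99.00, "map": 93.90, "time_ms": 35.00}
improved = {"size_mb": 13.90, "precision": 91.80, "recall": 99.00, "map": 95.20, "time_ms": 33.00}


def relative_reduction(before, after):
    """Hypothetical helper: percentage reduction going from `before` to `after`."""
    return (before - after) / before * 100.0


size_cut = relative_reduction(baseline["size_mb"], improved["size_mb"])
time_cut = relative_reduction(baseline["time_ms"], improved["time_ms"])

# Precision, recall and mAP are already percentages, so compare them in points.
point_gain = {k: improved[k] - baseline[k] for k in ("precision", "recall", "map")}

print(f"model size: -{size_cut:.2f}%")
print(f"detection time: -{time_cut:.2f}%")
print({k: round(v, 2) for k, v in point_gain.items()})
```

On the table values, the model size shrinks by about 49.45%, roughly half. Detection time is 2 ms shorter, about 5.71%. Precision rises by 5.60 points and mAP by 1.30 points, while recall is unchanged at 99.00%.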

| Season | Spring | Summer | Autumn | Winter |
|---|---|---|---|---|
| mAP/% | 95.61 | 98.37 | 96.53 | 89.61 |
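
The seasonal results can be summarized by their mean and their spread between the best and worst season. The sketch below recomputes both from the four mAP values in the table and assumes nothing else.

```python
# mAP (%) per season, copied from the seasonal comparison table.
seasonal_map = {"Spring": 95.61, "Summer": 98.37, "Autumn": 96.53, "Winter": 89.61}

mean_map = sum(seasonal_map.values()) / len(seasonal_map)
best = max(seasonal_map, key=seasonal_map.get)
worst = min(seasonal_map, key=seasonal_map.get)
# Gap between the best and worst season, in percentage points.
spread = seasonal_map[best] - seasonal_map[worst]

print(f"mean mAP = {mean_map:.2f}%")
print(f"best: {best} ({seasonal_map[best]:.2f}%), worst: {worst} ({seasonal_map[worst]:.2f}%)")
print(f"spread = {spread:.2f} points")
```

The mean mAP across the four seasons is 95.03%. Summer is highest (98.37%) and winter is lowest (89.61%), a spread of 8.76 percentage points.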

| Class | P/% (Front-Light) | P/% (Back-Light) | R/% (Front-Light) | R/% (Back-Light) | mAP/% (Front-Light) | mAP/% (Back-Light) |
|---|---|---|---|---|---|---|
| All | 90.50 | 90.40 | 90.50 | 83.30 | 94.20 | 91.20 |
| Trunk | 88.00 | 86.20 | 82.20 | 72.30 | 89.50 | 85.80 |
| Obstacle | 93.00 | 94.70 | 98.70 | 94.30 | 99.00 | 96.60 |
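
The table does not say how the "All" row is computed. The check below uses only the tabulated values. It shows that the "All" row matches the unweighted mean of the Trunk and Obstacle rows to within 0.05 percentage points, which is consistent with a per-class average. It also computes how much each metric drops from front light to back light. The helper name `backlight_drop` is illustrative.

```python
# Rows copied from the front-light / back-light table.
# Column order: P front, P back, R front, R back, mAP front, mAP back (all in %).
rows = {
    "All":      [90.50, 90.40, 90.50, 83.30, 94.20, 91.20],
    "Trunk":    [88.00, 86.20, 82.20, 72.30, 89.50, 85.80],
    "Obstacle": [93.00, 94.70, 98.70, 94.30, 99.00, 96.60],
}

# Unweighted mean of the two classes for each column.
class_mean = [(t + o) / 2 for t, o in zip(rows["Trunk"], rows["Obstacle"])]
# Largest gap between that mean and the reported "All" row.
max_gap = max(abs(m - a) for m, a in zip(class_mean, rows["All"]))


def backlight_drop(values):
    """Hypothetical helper: front-light minus back-light value for P, R and mAP (points)."""
    return {name: values[i] - values[i + 1] for name, i in (("P", 0), ("R", 2), ("mAP", 4))}


drops = {cls: backlight_drop(vals) for cls, vals in rows.items()}

print(f"max |class mean - All| = {max_gap:.2f}")
for cls, d in drops.items():
    print(cls, {k: round(v, 2) for k, v in d.items()})
```

Recall is the metric most affected by back light. It falls by 7.20 points overall, by 9.90 points for trunks and by 4.40 points for obstacles. Overall precision changes by only 0.10 points.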

| Configuration | Parameter |
|---|---|
| Power | 2 × 48 V |
| Power inverter | HS-08 |
| Camera | OPENMV4 H7 PLUS |
| Control module | STM32F103ZET6 |
| Relay | MY4N-J |
| AC contactor | NXC-40 |

| Source | Method | Detected Objects | Seasons Considered | Result |
|---|---|---|---|---|
| Li et al. [2] | YOLOv3 | humans, cement columns and utility poles | No | mAP: 88.64% |
| Yang et al. [21] | YOLOv3 | trunks, telephone poles and streetlights | No | Recall: >93.00% |
| Chen et al. [7] | ultrasonic sensors | trunks | No | Recall: 92.14% |
| Proposed method | YOLOv5s | trunks and obstacles, plus route direction determination | Yes | mAP: 95.20% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Su, F.; Zhao, Y.; Shi, Y.; Zhao, D.; Wang, G.; Yan, Y.; Zu, L.; Chang, S. Tree Trunk and Obstacle Detection in Apple Orchard Based on Improved YOLOv5s Model. Agronomy 2022, 12, 2427. https://doi.org/10.3390/agronomy12102427

Su F, Zhao Y, Shi Y, Zhao D, Wang G, Yan Y, Zu L, Chang S. Tree Trunk and Obstacle Detection in Apple Orchard Based on Improved YOLOv5s Model. Agronomy. 2022; 12(10):2427. https://doi.org/10.3390/agronomy12102427

Chicago/Turabian Style: Su, Fei, Yanping Zhao, Yanxia Shi, Dong Zhao, Guanghui Wang, Yinfa Yan, Linlu Zu, and Siyuan Chang. 2022. "Tree Trunk and Obstacle Detection in Apple Orchard Based on Improved YOLOv5s Model" Agronomy 12, no. 10: 2427. https://doi.org/10.3390/agronomy12102427

APA Style: Su, F., Zhao, Y., Shi, Y., Zhao, D., Wang, G., Yan, Y., Zu, L., & Chang, S. (2022). Tree Trunk and Obstacle Detection in Apple Orchard Based on Improved YOLOv5s Model. Agronomy, 12(10), 2427. https://doi.org/10.3390/agronomy12102427