Abstract

Livestock welfare and management could be greatly enhanced by the replacement of branding or ear tagging with less invasive visual biometric identification methods. Biometric identification of cattle from muzzle patterns has previously indicated promising results. Significant barriers exist in the translation of these initial findings into a practical precision livestock monitoring system, which can be deployed at scale for large herds. The objective of this study was to investigate and address key limitations to the autonomous biometric identification of cattle. The contributions of this work are fourfold: (1) provision of a large publicly-available dataset of cattle face images (300 individual cattle) to facilitate further research in this field, (2) development of a two-stage YOLOv3-ResNet50 algorithm that first detects and extracts the cattle muzzle region in images and then applies deep transfer learning for biometric identification, (3) evaluation of model performance across a range of cattle breeds, and (4) utilizing few-shot learning (five images per individual) to greatly reduce both the data collection requirements and duration of model training. Results indicated excellent model performance. Muzzle detection accuracy was 99.13% (1024 × 1024 image resolution) and biometric identification achieved 99.11% testing accuracy. Overall, the two-stage YOLOv3-ResNet50 algorithm proposed has substantial potential to form the foundation of a highly accurate automated cattle biometric identification system, which is applicable in livestock farming systems. The obtained results indicate that utilizing livestock biometric monitoring in an advanced manner for resource management at multiple scales of production is possible for future agriculture decision support systems, including providing useful information to forecast acceptable stocking rates of pastures.

1. Introduction

In grazing systems, livestock are primary consumers of biomass, whilst in intensive production systems such as feedlots, livestock require vast amounts of grains and roughage. At the same time, to meet increasing global demand for livestock products, there is considerable pressure to raise greater numbers of livestock within shorter periods of time. Consumer preference and legislative requirements also demand adherence to high standards of animal welfare. Tracking and effectively monitoring the welfare of large numbers of individual animals is an increasingly difficult task, but it provides useful information to inform grain feed demand forecasts and acceptable stocking rates of pastures. Traditional approaches to herd management are labor intensive and invasive, with the potential to cause pain and morbidity to stock. A wide range of approaches, including ear tagging, ear tattooing, hot ironing, freeze branding, ID collars, microchipping and visual markers, such as paint, have been used to track and identify individual animals within herds [1]. Metallic and plastic ear tags can cause damage [2] and considerable pain to stock [3]. Ear tattooing requires extensive handling and restraint of stock and is visible only at short distances [4]. Hot iron and freeze branding have been demonstrated to cause distress in cattle [5], whilst collar straps still require manual handling of stock and pose the risk of collar loss or entanglement [6]. Careful design and placement were required to achieve minimal injury when using VHF/GPS collars for horse monitoring [7], and similar issues would likely arise with cattle. Electronic identification tags and collars, such as National Livestock Identification Tags, require diligent record keeping and management, with a certain proportion of tag failures incurred [8].
Other electronic approaches, such as microchipping, require subcutaneous injection [9] or injection of other electronic transponders or sensors into body parts such as the scutulum [10]. Livestock markers and paint, although fast and economical, still require manual application and have a limited duration of effectiveness. Therefore, currently available cattle identification approaches have significant limitations; however, recent innovations in human biometrics research, such as face recognition systems, offer promising alternative options. Biometric identification offers less invasive monitoring with corresponding benefits to livestock welfare. In addition, biometric identification allows for a wide range of future applications, including animal tracking, ownership verification, bio-security control, livestock registration, behavior monitoring and animal research [1,11]. A major challenge to biometric algorithms is achieving the extremely high standards of accuracy required, particularly for large and diverse herds of cattle breeds.

The literature reports extensive prior research on animal biometrics, both for wildlife and livestock. Unique patterns and discriminatory markings have been used for computer-assisted individual identification, including spot patterns in manta rays [12], penguins [13] and whale sharks [14,15] and stripe patterns in tigers [16]. Facial recognition technology has also been adapted and applied to several animal species, including chimpanzees [17] and lemurs [18]. Research on the biometric identification of livestock spans cattle [19,20,21], sheep [22,23], horses [24,25] and pigs [26,27]. Biometric recognition of cattle is of particular interest given the major role of the beef and dairy industries. Cattle biometric recognition approaches include coat pattern analysis [21,28,29], facial recognition [30,31], gait analysis [32] and muzzle print pattern analysis [33,34,35]. Each approach has advantages and disadvantages. Coat pattern analysis is useful for cattle breeds with distinctive patterns but is of limited use for many other breeds, particularly beef cattle. Facial recognition is promising but requires far more extensive research to ensure high-quality performance across a range of herd sizes and under occlusion, multi-scale, variable lighting and image resolution issues. Moreover, accurate cattle face recognition requires that the face first be precisely detected [36]. Gait analysis via video has excellent potential but is constrained by requiring special camera configurations, generating large amounts of data and being computationally intensive. Muzzle patterns, in contrast, have been observed to have minutiae details similar to fingerprints in humans, with the potential for high accuracy identification [19,37], but require close range imaging of stock at frontal angles.
Our work focuses on muzzle print pattern identification because the envisaged applications (stock theft investigations, veterinary e-records and sales tracking) require the highest standards of identification, and handling and containment of cattle in crushes or similar is permissible in these settings.

Considerable prior research has investigated computer-assisted muzzle print identification of cattle. These models have utilized different approaches, including deep learning [35], support vector machine [38,39,40], group sparse representation [41], fuzzy-k-nearest neighbor [42], Fisher locality projections [38], decision trees [43], AdaBoost classifier [44], SIFT matching [45], bag-of-visual words [46] and classifier fusion [33] to identify individual cattle. Excellent identification accuracy has been achieved (for instance, [38] achieved 96.87% accuracy), but there are major barriers to the practical implementation of this technology. The algorithms presented in the literature have been assessed on single cattle breeds or at small scales (<66 individuals per study). Furthermore, current algorithms require both a pre-cropped muzzle print image and a prohibitive number of images per individual for model training. The algorithm of [38], for instance, utilizes at least 10 images per individual. Obtaining such large numbers of images per individual is both inconvenient and impractical in most livestock production settings. In this paper, we introduce a novel deep learning model that combines a muzzle detector with a biometric classifier and seeks to address these limitations. This model is evaluated on a herd (300 individuals) of mixed breed cattle using a strict limit of five images per individual for model training and exhibits excellent (99.11%) accuracy.

2. Materials and Methods

2.1. Data

2.1.1. Data Collection

Biometric images consisting of the cattle muzzle and face were collected at the University of New England’s Tullimba Research Feedlot, Kingstown, NSW, Australia. The images were collected during Induction Day in February 2019 when animals were vaccinated and tagged. In total, 300 cattle were inducted, which involved each animal being restrained in a crush restraint with its head placed in a “head scoop” for application of an eye treatment and micro-chipping. Once an animal was contained in the crush, a photographer standing approximately 1–2 m in front photographed it in frontal pose with a camera set at 1 m above the ground. The camera was a Canon D800 (Tokyo, Japan) equipped with an 18–55 mm lens (Canon EF-S 18–55 mm f/4–5.6 IS STM) [47]. Images of the cattle’s face were taken while the focus of the camera was on the muzzle. The image resolution was 4000 (width) × 6000 (height) pixels in RGB mode and JPEG format without compression and auto lighting balance. Because the induction process was rapid and multiple images of each individual animal were needed, the camera was set to “burst” mode to capture 10 images in rapid succession (6 shots per second). Data collection was conducted between 8:00 and 16:00 on a sunny day and under natural lighting conditions. The captured images were stored on the camera’s local SD card.

2.1.2. Dataset

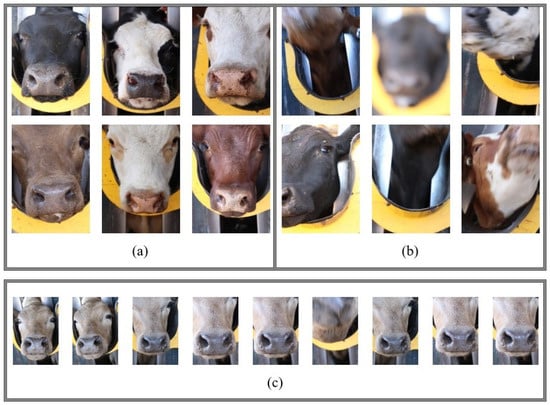

A total of 2900 images were captured from 300 animals consisting of Bos taurus beef cattle of mixed breeds including Angus, Hereford, Charolais and Simmental. A proportion (268 images or 9.24%) of the images did not capture the muzzle or were extremely blurry due to significant movement of the animals’ heads (Figure 1). We have released this dataset as supplementary material and, to the best of our knowledge, it is currently the largest and most comprehensive publicly available livestock biometrics dataset.

Figure 1.

Samples of cattle face images: (a) acceptable images, (b) unacceptable images for biometric identification and (c) sample photos of an individual cattle demonstrating cattle head movement.

2.2. Muzzle Detection and Extraction

2.2.1. YOLOv3 Muzzle Detector

A key step in the biometric identification processing chain is the detection and extraction of the cattle muzzle. Head movements and slight differences in alignment of the photo resulted in the muzzle location differing between images. The YOLOv3 object detector [48] was utilized for muzzle detection, with transfer learning implemented to customize the YOLOv3 network weights for this task. In the YOLOv3 framework, the darknet-53 [49] convolutional neural network, which has 53 layers, is utilized to predict both object categories and bounding boxes. Convolutional layers with stride 2 (down-sampling by 2) without feature pooling ensure that the output of each feature layer is smaller than the previous one, thereby reducing computational complexity and processing times.

In the context of muzzle detection, the YOLOv3 framework both detects the presence of a muzzle and provides the rectangular image coordinates of its location within the image. The YOLOv3 framework achieves this task by dividing images into an S × S grid, where S represents the size of the grid, and uses each grid cell to predict the object centered in that cell. In each grid cell, B bounding boxes are predicted and a confidence score is calculated for each. The confidence score indicates how likely it is that an object exists in that bounding box. A cell in which no object is detected returns a confidence score of zero. The confidence score is defined and calculated by the formula:

$$\text{Confidence} = \Pr(\text{Object}) \times \text{IOU}_{\text{pred}}^{\text{truth}}$$

When an object is present, the confidence score is expected to equal the intersection over union (IOU) between the predicted box and the ground truth box [48]. Bounding boxes, the confidence score and the class probabilities are encoded as an S × S × (B × 5 + C) tensor where, as previously mentioned, S represents the size of the grid and B indicates the number of predicted bounding boxes for each grid cell. There is a set of five predicted values for each bounding box, namely x, y, w, h, and the confidence score. The center of the bounding box is represented by the pair of (x, y) coordinates. The w and h parameters represent the width and height of the predicted bounding box, respectively. The parameter C represents the number of conditional class probabilities, which are conditioned on the grid cell containing an object [50].
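The quantities described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the darknet implementation: the function names are hypothetical, boxes are assumed to be given in the center format (x, y, w, h) described in the text, and the example grid values S = 7 and B = 2 come from the original YOLO formulation rather than from the configuration used in this study. A single class (C = 1, the muzzle) is assumed.

```python
def to_corners(box):
    """Convert a centre-format box (x, y, w, h) to corners (x1, y1, x2, y2)."""
    x, y, w, h = box
    return (x - w / 2, y - h / 2, x + w / 2, y + h / 2)


def iou(box_a, box_b):
    """Intersection over union of two centre-format (x, y, w, h) boxes."""
    ax1, ay1, ax2, ay2 = to_corners(box_a)
    bx1, by1, bx2, by2 = to_corners(box_b)
    # Width and height of the overlap; clamped at zero for disjoint boxes.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - inter
    return inter / union if union > 0 else 0.0


def confidence(p_object, predicted, truth):
    """Confidence = Pr(Object) * IOU(predicted, truth)."""
    return p_object * iou(predicted, truth)


def output_shape(s, b, c):
    """Shape of the S x S x (B * 5 + C) prediction tensor."""
    return (s, s, b * 5 + c)


# Illustrative values only (assumed, not taken from the study).
print(iou((2, 2, 2, 2), (3, 2, 2, 2)))   # two 2 x 2 boxes offset by 1 in x
print(output_shape(7, 2, 1))             # single "muzzle" class
```

A cell with no object has Pr(Object) = 0, so its confidence is zero regardless of how the predicted box overlaps any ground truth box.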

YOLOv3's detection requires only one pass through the network and, as a result, is comparatively fast. It also achieves an excellent balance between computational processing times and detector accuracy [50]. Reduced versions of the YOLOv3 network architecture also exist, thereby facilitating future incorporation of the muzzle detector on mobile computing platforms, such as smartphones.

2.2.2. YOLOv3 Muzzle Detector Training

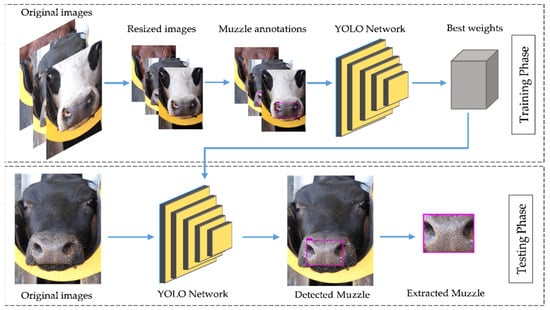

Figure 2 illustrates the muzzle detection and extraction procedure based on YOLOv3. Transfer learning was utilized via ImageNet pre-trained darknet-53 weights [51]. To train the YOLOv3 model for muzzle detection, 80% (2320) of the images were randomly selected for training and validation and the remaining 20% (580) were used for model testing. The large size of the original images (4000 × 6000 × 3 pixels) proved computationally prohibitive for model training. Therefore, images were re-scaled to 608 × 832 × 3 pixels using the bicubic interpolation of the Pillow library [52]. The visual object tagging tool (VoTT) [53] was used to annotate the muzzle region of each image as required by the YOLOv3 model training process. Model training was performed using a Lambda Quad RTX 6000 computer (hardware specified in Table 1) equipped with the CUDA toolkit (NVIDIA, Santa Clara, CA, USA, 2007) to perform rapid computations using the graphics processing unit (GPU).
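The random 80/20 partition described above can be reproduced in outline as follows. This is a minimal sketch under stated assumptions: the function name, the placeholder image identifiers and the random seed are all hypothetical, and the study does not state which tool or seed was used for the split.

```python
import random


def split_train_test(items, train_fraction=0.8, seed=0):
    """Randomly partition items into (train + validation, test) subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)   # seeded for reproducibility
    n_train = round(len(shuffled) * train_fraction)
    return shuffled[:n_train], shuffled[n_train:]


# Placeholder identifiers standing in for the 2900 captured images.
image_ids = [f"img_{i:04d}" for i in range(2900)]
train_val, test = split_train_test(image_ids)
print(len(train_val), len(test))
```

With 2900 images and a training fraction of 0.8, the two subsets contain 2320 and 580 images, matching the counts reported in the text.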

Figure 2.

Muzzle detection and extraction procedure based on YOLOv3.

Table 1.

Lambda Quad RTX 6000 hardware details.

2.2.3. Muzzle Detector Model Hyper-Parameter Configuration

The YOLOv3 framework with the darknet-53 CNN requires specification of model hyper-parameters prior to training. There are several hyper-parameters including: batch size, learning rate, channel size and network resolution. These hyper-parameters were set to batch size = 64, channel size = 3, learning rate = 0.001 and network resolution = 608 × 832. The full set of YOLOv3 muzzle detection hyper-parameters is provided as a configuration file in the supplementary material.

The batch size refers to the number of image samples passed to the network at each step of model training. The batch size is adjusted according to hardware performance (CPU and GPU) and memory availability. Generally, large batch sizes are preferred to allow the network to access more samples and features in each iteration. In the YOLOv3 muzzle detector, the batch size hyper-parameter was found by on-line monitoring of hardware performance and network convergence through several trial model training runs across a set of batch sizes (4, 8, 16, 32, 64 and 128). The channel size refers to the number of color channels in the image. The muzzle images are RGB (3 channels) and, to utilize all available color information, the channel size parameter was set to 3. As stated in Section 2.2.2, the input image resolution was down-sampled to 608 × 832 × 3 pixels because the original image resolution (4000 × 6000 × 3 pixels) was too high for model training and exhausted available GPU memory. Progressive down-sampling (to dimensions that are multiples of 32, as required by the darknet-53 network) was implemented with model training monitored. A network resolution of 608 × 832 × 3 pixels was found to permit model training within available computational resources whilst also providing a sufficiently large resolution for the muzzle detection task. Muzzle detector model training was then performed utilizing this set of hyper-parameters and model configurations. The number of model training iterations was set to a maximum of 10,000. On-line monitoring of average loss and mean average precision (mAP) per iteration indicated that network convergence had been achieved by 6000 iterations, with the lowest model training error rate and highest validation set accuracy. This lower number of iterations was preferred to help safeguard against model over-fitting.
Therefore, the YOLOv3 darknet-53 weights at 6000 iterations were accessed via saved checkpoints and utilized as the optimal muzzle detector model.
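The constraint that network dimensions be multiples of 32 can be checked, and candidate resolutions generated, with a short helper. This is a sketch only: the function names and the down-sampling factors are hypothetical, and it does not reproduce the exact search the authors followed to arrive at 608 × 832.

```python
STRIDE = 32  # darknet-53 requires width and height to be multiples of 32


def is_valid_resolution(width, height, stride=STRIDE):
    """True when both dimensions are multiples of the network stride."""
    return width % stride == 0 and height % stride == 0


def snap_down(value, stride=STRIDE):
    """Largest multiple of stride not exceeding value (at least one stride)."""
    return max(stride, (value // stride) * stride)


def candidate_resolutions(width, height, factors):
    """Down-sample by each factor, then snap to a valid network resolution."""
    return [(snap_down(int(width / f)), snap_down(int(height / f)))
            for f in factors]


print(is_valid_resolution(608, 832))        # resolution used for training
print(is_valid_resolution(1024, 1024))      # resolution used in the test phase
print(candidate_resolutions(4000, 6000, [2, 4]))
```

Each candidate would then be tried in turn, keeping the largest one for which training fits in GPU memory.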

2.3. Cattle Identification Model

2.3.1. Data Pre-Processing

To identify individual cattle efficiently, features that are unique to each animal must be selected from the available data. Based on this requirement, the muzzle was selected. In most of the methods proposed so far, the muzzle was extracted manually [35,54,55], which is both time-consuming and unsuitable for practical applications. Therefore, an effective automatic model for detecting and extracting the muzzle utilizing YOLOv3 darknet-53 was deployed in this study. Extraction was performed automatically in software using the bounding box coordinates output by the muzzle detector. The muzzle detector was found to be highly effective in both the detection and extraction of the cattle muzzle region. After muzzle detection and extraction, each individual animal had at least seven images (e.g., see Figure 1c) suitable for further analysis (those discarded by the muzzle detector were either too blurry or partially out-of-frame). A training data set for the biometric identification model was produced by randomly sampling five images per individual, while almost half of the remaining images were used for validation and the others were used for testing. In total, the biometric model evaluation dataset included 300 cattle, with 1500 images for training, 569 images for validation and 563 images for testing. Data augmentation, in the form of 15-degree rotation, was utilized to increase the model training set size and enhance model robustness and performance.
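The per-individual sampling scheme described above (five training images, with the remainder divided between validation and testing) might be sketched as follows. The function name, seed and file names are hypothetical, and the rule that validation receives the extra image when the remainder is odd is an assumption made for illustration; the text states only that almost half of the remaining images were used for validation.

```python
import random


def split_individual(images, n_train=5, seed=0):
    """Split one animal's images into (train, validation, test) lists."""
    if len(images) <= n_train:
        raise ValueError("need more images than the training quota")
    shuffled = list(images)
    random.Random(seed).shuffle(shuffled)
    train, rest = shuffled[:n_train], shuffled[n_train:]
    # Assumed rule: validation gets the extra image when the remainder is odd.
    n_val = (len(rest) + 1) // 2
    return train, rest[:n_val], rest[n_val:]


# An animal with the minimum of seven usable muzzle images.
train, val, test = split_individual([f"cow001_{i}.jpg" for i in range(7)])
print(len(train), len(val), len(test))
```

Applying a fixed quota of five images to each of the 300 animals yields the 1500 training images reported in the text.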

2.3.2. Biometric Identification Using ResNet-50 CNN

Overview:

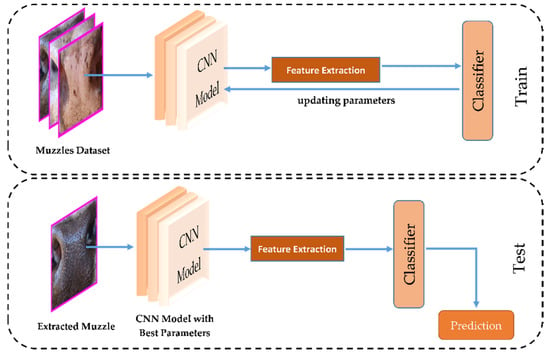

The cattle biometric identification process is displayed in Figure 3. This process follows the structure of the ACE-V (analysis, comparison, evaluation and verification) procedure [21]. A muzzle dataset is formed using muzzle images from known individual animals. Automatic feature learning and extraction is applied using a ResNet-50 CNN. The ResNet-50 CNN was pre-initialized with ImageNet weights and then modified for biometric muzzle print recognition by implementing transfer learning through CNN re-training and modification of the final network layer (modified from 1000 object classes to the 300 individual animal identifier classes). Then, using the SoftMax classifier, parameters were updated based on the loss so that the network learned to distinguish individual cattle. The classifier therefore assigns a predicted probability to each class. Muzzle print identification of an unknown individual was then performed by first extracting the muzzle pattern (using the YOLOv3 darknet-53 muzzle detector) and then extracting features using the ResNet-50 CNN with the best parameters. Finally, the SoftMax classifier was used to predict the class with the highest probability. In this research, the “unknown” individual muzzle patterns are in fact sourced from the model test data set, which makes it possible to report model accuracies.
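The final prediction step, in which the class with the highest SoftMax probability is selected, can be illustrated with a small pure-Python sketch. The function names, logits and animal identifiers below are hypothetical placeholders; in the study the scores come from the final layer of the ResNet-50 network and span 300 classes.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of raw class scores."""
    peak = max(logits)                       # subtract the max to avoid overflow
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits, class_ids):
    """Return the most probable class identifier and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_ids[best], probs[best]


# Three hypothetical animals instead of the study's 300 classes.
animal, prob = predict([0.1, 2.0, -1.0], ["cow_a", "cow_b", "cow_c"])
print(animal, round(prob, 3))
```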

Figure 3.

Overview of cattle biometric identification process.

2.3.3. Biometric Model Training

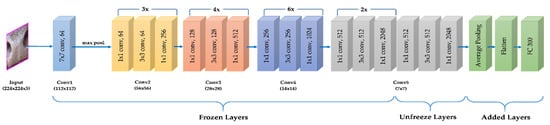

The ResNet-50 CNN was used to automatically extract features from each muzzle image [56]. Selection of ResNet-50 was based on the facts that (i) it is a top performer on object classification tasks [57,58,59], (ii) its depth still permits mobile computing [60] and (iii) its depth does not require large numbers of samples per class to prevent over-fitting [61]. A ResNet-50 pre-trained on ImageNet data (1.28 million training images belonging to 1000 object classes), implemented in Keras with the TensorFlow backend [56], was used to greatly reduce the sample size (muzzle image) requirements. A strategy of transfer learning with fine-tuning was implemented to modify the ResNet-50 ImageNet CNN into a ResNet-50 muzzle pattern identifier. Transfer learning with fine-tuning involves removing the final pooling and fully connected layers of the original ResNet-50 ImageNet model. Next, an average pooling layer and a flatten layer, followed by a dense layer, are added to the end of the network. Figure 4 shows the network after the three new layers have been added; this network architecture formed the basis of the ResNet-50 muzzle pattern identifier.

Figure 4.

The proposed ResNet-50 muzzle pattern identifier model architecture.

In Figure 4, each box represents a layer of the model’s architecture, which consists of “Frozen layers”, “Unfrozen layers” and “Added layers”. The frozen convolutional layers (1–46) are the initial layers of the network and encode more general image features (lines, squares, circles) that were learned during the ImageNet pretraining. The unfrozen layers (47–49) are the trainable parts of the network specific to muzzle pattern features. The added layers consist of the classifier components, which are trained after flattening the output from the unfrozen convolutional layers. The number of outputs in the final fully connected layer was set at 300 classes, corresponding to the number of individual animals in the study.

In total, four different model training strategies were evaluated. These were: (a) training from scratch using the ResNet-50 architecture without pre-initialization, (b) transfer learning using ResNet-50 pre-initialized with ImageNet weights with all layers frozen but a SoftMax classifier added to the final layer to train the model to identify the individual animals, (c) fine-tuning the last convolutional layer whereby transfer learning was used as previously but the last convolutional layer was unfrozen and thereby its weights could be modified and (d) fine-tuning the last 3 convolutional layers, similar to transfer learning but the last 3 convolutional layers were unfrozen and therefore these weights could be modified.
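The four strategies differ only in whether pre-trained weights are loaded and in which convolutional layers remain trainable. The sketch below captures that distinction in a framework-agnostic way; it is not the Keras code used in the study, the strategy names are hypothetical labels, and the layer numbering (1 to 49) follows the description of Figure 4.

```python
N_CONV_LAYERS = 49  # convolutional layers numbered 1..49, as in Figure 4

# Hypothetical labels for strategies (a) to (d) in the text.
STRATEGIES = {
    "scratch":         {"pretrained": False, "unfrozen": N_CONV_LAYERS},
    "transfer":        {"pretrained": True,  "unfrozen": 0},
    "finetune_last_1": {"pretrained": True,  "unfrozen": 1},
    "finetune_last_3": {"pretrained": True,  "unfrozen": 3},
}


def trainable_conv_layers(strategy):
    """Indices of the convolutional layers whose weights may be updated."""
    n = STRATEGIES[strategy]["unfrozen"]
    return list(range(N_CONV_LAYERS - n + 1, N_CONV_LAYERS + 1))


for name in STRATEGIES:
    layers = trainable_conv_layers(name)
    print(name, STRATEGIES[name]["pretrained"], len(layers))
```

In every strategy the added classifier layers are trained; only the treatment of the convolutional backbone changes.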

The ResNet-50 muzzle pattern identifier was trained with 1500 muzzle pattern images and their corresponding individual identity labels using fine-tuning transfer learning from a ResNet-50 ImageNet classifier. All model training was performed on a Lambda Quad RTX 6000 (Table 1). The data generator package and Keras library [62] were used in conjunction with the GPU version of TensorFlow (1.13.1) [63]. All muzzle images were resized to 224 × 224 × 3 pixels in order to meet the requirements of the ResNet-50 architecture. Note that the ResNet-50 architecture still permitted the use of RGB-format color images. The ResNet-50 model hyper-parameters were set to a learning rate of 0.0001 (0.01 when training from scratch) and a batch size of 10, resulting in 150 iterations per epoch. These hyper-parameters were set based on well-established magnitudes used by practitioners, followed by careful tuning and modification based on monitoring model performance and convergence. After hyper-parameter tuning, the full ResNet-50 transfer learning process was implemented. The ADAM optimizer [64] was utilized in conjunction with the cross-entropy loss function. Model training was continued for 100 epochs (totaling 15,000 iterations) and performance was evaluated on the held-back validation set of 569 muzzle images. The ResNet-50 muzzle pattern identifier was then assessed for accuracy on the test data set consisting of 563 muzzle images from known individual animals.
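The training schedule arithmetic and the cross-entropy loss mentioned above can be checked with a short sketch. The function names are hypothetical, and the uniform probability vector is only an illustration of the loss an untrained 300-class classifier would incur, not a result from the study.

```python
import math


def iterations_per_epoch(n_train, batch_size):
    """Number of weight updates needed to see every training image once."""
    return math.ceil(n_train / batch_size)


def cross_entropy(probs, true_index):
    """Cross-entropy loss for one sample given predicted class probabilities."""
    return -math.log(probs[true_index])


steps = iterations_per_epoch(1500, 10)   # 1500 images, batch size 10
total = steps * 100                      # 100 epochs
print(steps, total)

# Loss when all 300 classes are predicted as equally likely: ln(300).
uniform = [1 / 300] * 300
print(round(cross_entropy(uniform, 0), 3))
```

The loss falls toward zero as the probability assigned to the correct animal approaches one.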

3. Results

3.1. Muzzle Detection

The network weights resulting from training were used to evaluate the trained model on the test dataset. To obtain the optimal input resolution for the network in the test phase, we tried different resolutions permitted by the YOLO specification. The authors of [49] advise using the highest network resolution possible, as this increases the precision of detection and is useful in detecting small objects. Based on the results of the trials, a resolution of 1024 × 1024 provided the best precision and was adopted in the test phase. Table 2 compares the accuracy, together with the true and false positive and negative rates, across the network resolutions tested.

Table 2.

Multiple resolution comparison of the muzzle detection accuracy and the true and false positive rates and negative rates during the YOLOv3 darknet-53 network test phase.

According to the results, the trained model was able to detect the muzzle region in the test set with an accuracy of 99.13%, providing very strong support for its reliability in the detection task. However, as the model is based on YOLO, it shares one of YOLO's shortcomings, namely sensitivity to the background. The proposed model requires very little computation time, but the accuracy of detection is highly dependent on the training data. In other words, if the cattle images have not been captured at almost the same angle and distance as the training images, the model could fail to detect the muzzle region. In smart camera monitoring situations, this detector property is an advantage, as it ensures that only those frames suitable for muzzle biometric recognition are captured for further processing.

3.2. Biometric Recognition

The speed of execution of deep learning models depends on the hardware used. In this study, all models have been implemented and tested on a Lambda Quad RTX 6000. Details of hardware information in this experiment are provided in Table 1.

The batch size was set as 10, resulting in 150 iterations in each epoch. The proposed model was trained in 100 epochs (totaling 15,000 iterations). An initial learning rate of 0.0001 was used, which is smaller than the learning rate for training a model from scratch, normally 0.01. Through using a very small learning rate, the newly added layers were able to learn patterns from the previously learned convolutional layers. If a higher learning rate was used instead, the risk of losing previous knowledge would increase.

We experimented with different configurations for our model to verify the performance of the model in different scenarios. The results of the training procedure under different settings are summarized in Table 3.

Table 3.

Comparison of training results of the ResNet-50 muzzle pattern identifier model under varying number of trainable layers.

In the first stage, the model was trained from scratch without using pre-trained weights. Over-fitting occurred, as expected. In the second stage, ImageNet pre-trained weights were used. All layers of the CNN were frozen, while a SoftMax classifier was added to train the model on the classes in the dataset, which enabled the model to overcome over-fitting and achieve reasonable accuracy. Comparing the number of trainable parameters and the average validation accuracy of the different settings in Table 3 reveals that the proposed model reached its highest average validation accuracy when the last three convolutional layers were trained.

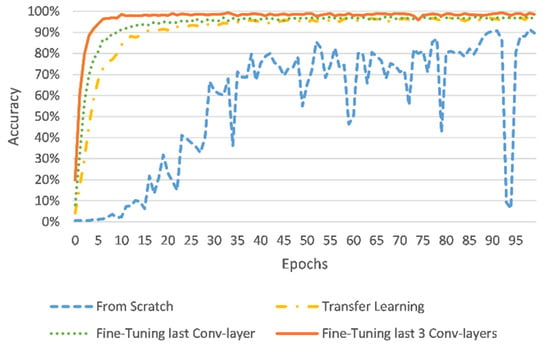

Although transfer learning was clearly helpful for training the model, Figure 5 shows that the model with all layers frozen required more epochs to achieve an acceptable accuracy. As a result, in the following stage, the last convolutional layer of the proposed model was unfrozen to make it trainable, which enabled the model to learn to differentiate the classes faster than before. After testing different numbers of unfrozen layers, the model was found to achieve the best performance when the last three convolutional layers were unfrozen. Figure 5 and Figure 6 compare the validation accuracies and the validation loss of the proposed model under the four settings described earlier.

Figure 5.

Validation accuracies of the proposed model in different settings.

Figure 6.

Validation loss of the proposed model in different settings.

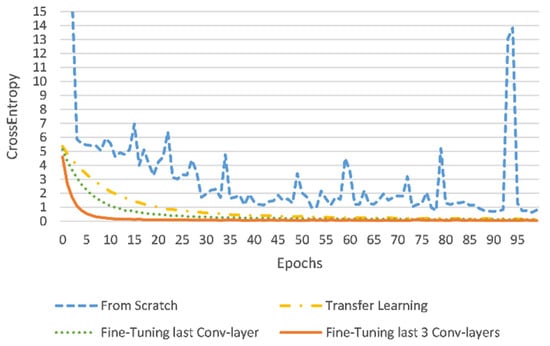

Based on the results shown in Figure 5 and Figure 6, the best performance was achieved when the last three convolutional layers were trained along with the added layers. Furthermore, as one can see in Figure 7a,b, the training and validation accuracies rapidly increased while the training loss and validation loss rapidly decreased. In addition, the model managed to achieve the highest training and validation accuracies after just 10 epochs, highlighting the reliability of the model. The network managed to converge relatively quickly but after 10 epochs, further training would not likely improve the accuracy of the model significantly. Accordingly, to minimize computation, the training process can be terminated after around 10 epochs.
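One common way to automate the decision to stop training once validation performance has plateaued is an early-stopping rule. The sketch below is an illustration of that general technique and is not part of the reported method: the function name, the patience value and the loss curve are all hypothetical.

```python
def stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the 1-based epoch at which training would stop.

    Training stops once the validation loss has failed to improve by more
    than min_delta for `patience` consecutive epochs.
    """
    best = float("inf")
    stale = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best = loss      # new best validation loss
            stale = 0
        else:
            stale += 1       # no improvement this epoch
            if stale >= patience:
                return epoch
    return len(val_losses)   # never triggered: ran to completion


# Hypothetical validation-loss curve that flattens after a few epochs.
curve = [1.0, 0.5, 0.3, 0.3, 0.31, 0.3, 0.29]
print(stopping_epoch(curve))
```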

Figure 7.

Performance related to the best overall configuration: (a) training and validation accuracy and (b) training and validation loss.

Testing the Model

Although most deep learning models employ the SoftMax activation function for classification tasks [65], three other well-known classifiers (K-Nearest Neighbors (KNN) [66], Support Vector Machine (SVM) [65] and Multilayer Perceptron (MLP) [67]) were also utilized, in addition to the SoftMax classifier, to investigate the precision of the proposed model. To apply these classifiers, the last fully connected layer of the model was removed and the features and labels of all images were extracted and stored. The best hyper-parameters for each classifier were then found using grid search. For evaluation, a test set containing 563 images belonging to 300 individual cattle was used. It is worth noting that the test set was obtained through random sampling. Although all the classifiers were able to achieve acceptable accuracy, SoftMax correctly identified the classes of 558 out of the 563 images and achieved the highest accuracy (99.11%), as expected. The obtained accuracies and inference time per image are listed in Table 4.
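The reported accuracy follows directly from the counts above, and the idea of classifying stored feature vectors with an alternative classifier can be illustrated with a toy nearest-neighbor example. This is a sketch only: the function names, the two-dimensional feature vectors and the labels are hypothetical, whereas the study used features extracted by ResNet-50 and classifiers tuned by grid search.

```python
import math
from collections import Counter


def accuracy(n_correct, n_total):
    """Classification accuracy as a percentage."""
    return 100.0 * n_correct / n_total


def knn_predict(query, features, labels, k=1):
    """Label of the majority among the k stored features nearest to query."""
    order = sorted(range(len(features)),
                   key=lambda i: math.dist(query, features[i]))
    votes = Counter(labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]


print(round(accuracy(558, 563), 2))   # SoftMax result reported in the text

# Toy 2-D "features" standing in for the extracted ResNet-50 features.
feats = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0)]
ids = ["cow_a", "cow_a", "cow_b"]
print(knn_predict((4.5, 5.0), feats, ids, k=1))
```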

Table 4.

List of utilized classifiers.

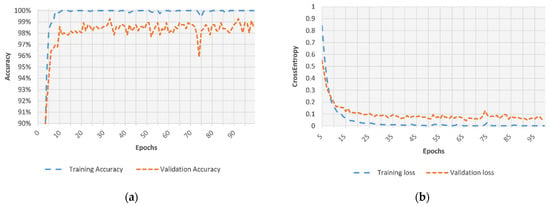

Figure 8 illustrates a few examples of the true and false identification images of several classes.

Figure 8.

True and false identification examples from the ResNet-50 muzzle pattern identifier model: (a) true identification examples and (b) false identification examples.

Table 5 presents the results of several recent studies in the same field. Most of the models need large numbers of training images for each individual animal. In practical settings, acquisition of a large number of images is not always possible. Moreover, collecting a large number of images is both time-consuming and costly, and training a model on a large dataset requires more computation.

Table 5.

Recent studies and their implemented models showing data size and cattle identification accuracy.

4. Discussion

A fully automated detection system should be capable of not only detecting and classifying objects, but it should also segment the object accurately from the background. The YOLO-ResNet model pipeline described in this paper offers this capability, which is a significant practical advancement over previous cattle identification using muzzle patterns. The YOLO-ResNet model proposed in this paper utilizes a YOLO muzzle detector to first detect the region-of-interest followed by a fine-tuned ResNet-50 model to extract muzzle features and provide individual identification. To our knowledge, all previously reported muzzle print cattle identification methods have required the extraction of the muzzle region of interest by manual procedures [35,54,55,70]. Incorporation of the muzzle detector and biometric recognition algorithms facilitates the further development of this technology within precision livestock settings.

Few-shot learning was also utilized within the ResNet-50 biometric recognition model. There was a strict limit of five training images per individual animal. This differs from the other cattle muzzle biometric studies listed in Table 5. The proposed YOLO-ResNet-50 model uses the lowest number of training images per individual of any relevant study in the literature. The proposed model has higher accuracy than competing methods, but the results are not directly comparable due to the lower number of training images per individual and the overall greater number of individual animals than most studies. From a machine learning perspective, increasing the number of training images per individual animal might actually further improve performance of the YOLO-ResNet50 model. However, limiting the number of training images per individual has significant practical benefits; in many operational precision livestock management settings, it might not be feasible to obtain and label an extensive training data set. Furthermore, greater numbers of training images per individual increase the computational complexity of model updates. Deployment of cattle muzzle biometric algorithms into commercial settings will require such model updating to produce a model suitable for identification of the particular animals in a herd.
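The five-image limit can be made concrete with a small sketch of a few-shot split. It assumes each sample is a `(image_path, animal_id)` pair and that the five training images are chosen at random per animal; the text does not state how they were selected, so the random choice, the function name `few_shot_split`, and the file names are all hypothetical.

```python
import random
from collections import Counter, defaultdict


def few_shot_split(samples, shots=5, seed=0):
    """Keep at most `shots` images per animal for training; hold out the rest."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    by_id = defaultdict(list)
    for path, animal_id in samples:
        by_id[animal_id].append(path)

    train, held_out = [], []
    for animal_id, paths in sorted(by_id.items()):
        paths = sorted(paths)
        rng.shuffle(paths)
        train += [(p, animal_id) for p in paths[:shots]]
        held_out += [(p, animal_id) for p in paths[shots:]]
    return train, held_out


# Toy example: three animals with seven images each.
samples = [
    (f"cow{a}_img{i}.jpg", f"cow{a}") for a in range(3) for i in range(7)
]
train, held_out = few_shot_split(samples)
train_counts = Counter(animal_id for _, animal_id in train)
```

Because the cap is applied per animal, adding a new animal to the herd adds at most five training images, which keeps the cost of a model update bounded.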

Envisaged applications of the YOLO-ResNet50 cattle biometric recognition model include livestock monitoring via ‘smart cameras’ coupled with edge computing network infrastructure through to smartphone-based biometric recognition apps. Both the YOLOv3 and ResNet50 network architectures are well-established and tested throughout a range of visual recognition applications, including livestock monitoring. The deeper ResNet-50 network architecture is well suited for processors utilized in many edge and fog computing applications, but it might not be the most suitable model for smartphone applications. Future research should investigate the benefits of smaller-sized models, which would have additional benefits for deployment within smartphone applications.

Biometric recognition of livestock has advanced considerably over the past decade, particularly for models using convolutional neural networks. Despite the considerable advances in the computer science and engineering aspects, there remain several unknowns regarding the utilization of livestock biometric monitoring within practical settings. This study is one of the largest cattle muzzle print biometric recognition studies to date; however, even 300 individuals is a small-scale herd in many settings. Extensive research needs to be conducted to determine whether this approach is suitable for large national or international scale systems incorporating millions of animals or, alternatively, whether the models are more suitable for within-herd applications on a farm (e.g., automated feed intake monitoring). The relevance of the YOLO-ResNet-50 (and similar) models for the biometric recognition of sheep, pigs, goats, horses, dairy cattle and other livestock is also of practical interest. Although many studies have recently been conducted on animal biometric characteristics, the possibility of successfully using the proposed models in practical environments is still unknown. Developing a better understanding of convolutional neural network-based livestock biometric recognition, in particular its benefits and limitations, will lead to greater confidence in these systems and facilitate industry adoption.

5. Conclusions

In this paper, we have proposed the YOLO-ResNet-50 muzzle biometric identification system as a novel deep learning modelling approach for the identification of individual cattle. The YOLO-ResNet-50 model addressed a major limitation of previous cattle identification systems by automating both the muzzle detection and individual identification steps within a single workflow. The YOLO-ResNet50 implementation detects the muzzle region automatically in frontal-view images of cattle. By extracting the muzzle region-of-interest, a cattle muzzle database was created, which is another main contribution of this study. Next, unknown images of cattle were compared and matched to return their ID utilizing a fine-tuned ResNet-50 model. Experimental results demonstrate that by using transfer learning with fine-tuning (rather than developing a new network architecture), it was possible to develop a leading biometric recognition model with 99.11% accuracy. Furthermore, by utilizing transfer learning, the amount of time and effort required for data collection and training can be reduced.

The proposed YOLO-ResNet50 model can classify an individual animal using the muzzle pattern with just five training images, whereas similar approaches require larger sets of images for training. In addition, distinct from other studies, the YOLO-ResNet50 model evaluations were performed on mixed breeds of cattle, which indicates that the biometric recognition model is not confined to one particular cattle breed. The model system architecture and workflow provide a useful template for similar livestock monitoring applications, such as the automated detection and identification of livestock from surveillance video or drone footage. The high classification accuracy achieved indicates that further development of an automated livestock identification system is feasible and fast becoming a reality.

Supplementary Materials

All supplementary material, including the dataset, can be accessed through https://cloud.une.edu.au/index.php/s/eMwaHAPK08dCDru (accessed on 21 November 2021).

Author Contributions

Conceptualization, A.S., G.F. and P.K.; Data curation, A.S., G.F., F.C.C. and N.H.; Methodology, A.S., G.F. and N.H.; Supervision, G.F., P.K., F.C.C. and D.P.; Writing—original draft, A.S.; Writing—review and editing, A.S., G.F., P.K., N.H., F.C.C. and D.P. All authors have read and agreed to the published version of the manuscript.

Funding

The authors acknowledge the support provided through the International Postgraduate Award (IPRA) scholarship for Ali Shojaeipour.

Institutional Review Board Statement

The study was approved by the Animal Ethics Committee of the University of New England (UNE), Australia (Ethical authority number: AEC18-135, date of approval: 21 December 2018).

Acknowledgments

Ali Shojaeipour is supported by the International Postgraduate Award (IPRA) scholarship granted by the University of New England (UNE). We would like to thank the University of New England’s Tullimba Research Feedlot, Kingstown, NSW, Australia for allowing us to collect the required data.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Petrovska-Delacrétaz, D.; Edwards, A.; Chiasson, J.; Chollet, G.; Pilliod, D.S. A reference system for animal biometrics: Application to the northern leopard frog. In Proceedings of the 2014 1st International Conference on Advanced Technologies for Signal and Image Processing (ATSIP), Sousse, Tunisia, 17–19 March 2014. [Google Scholar]

- Johnston, A.M.; Edwards, D.S. Welfare implications of identification of cattle by ear tags. Veter. Rec. 1996, 138, 612–614. [Google Scholar] [CrossRef]

- Chen, J.; Wen, Q.; Qu, W. Panda facial region detection based on topology modelling. In Proceedings of the 2012 5th International Congress on Image and Signal Processing, Chongqing, China, 16–18 October 2012. [Google Scholar]

- Jones, S.M. Tattooing of Cattle and Goats; FSA (University of Arkansas (System). Cooperative Extension Service): Little Rock, AR, USA, 2014; p. 4015. [Google Scholar]

- Lay, D., Jr.; Friend, T.; Randel, R.; Bowers, C.; Grissom, K.; Jenkins, O. Behavioral and physiological effects of freeze or hot-iron branding on crossbred cattle. J. Anim. Sci. 1992, 70, 330–336. [Google Scholar] [CrossRef]

- Schwartzkopf-Genswein, K.S.; Stookey, J.M.; Welford, R. Behavior of cattle during hot-iron and freeze branding and the effects on subsequent handling ease. J. Anim. Sci. 1997, 75, 2064–2072. [Google Scholar] [CrossRef]

- Collins, G.H.; Petersen, S.L.; Carr, C.A.; Pielstick, L. Testing VHF/GPS collar design and safety in the study of free-roaming horses. PLoS ONE 2014, 9, e103189. [Google Scholar] [CrossRef] [PubMed]

- Dairy-Australia. Precision Dairy Technology Electronic Cow Identification; Dairy Australia Limited: Southbank, Australia, 2016. [Google Scholar]

- Løken, T.; Vatn, G.; Kummen, E. Subcutaneous electronic identification in cattle: A field study. Veter. Rec. 2011, 169, 250. [Google Scholar] [CrossRef] [PubMed]

- Klindtworth, M.; Wendl, G.; Klindtworth, K.; Pirkelmann, H. Electronic identification of cattle with injectable transponders. Comput. Electron. Agric. 1999, 24, 65–79. [Google Scholar] [CrossRef]

- Jovani, R.; Pérez-Rodríguez, L.; Mougeot, F. Fractal geometry for animal biometrics: A response to Kühl and Burghardt. Trends Ecol. Evol. 2013, 28, 499–500. [Google Scholar] [CrossRef]

- Town, C.; Marshall, A.; Sethasathien, N. Manta Matcher: Automated photographic identification of manta rays using keypoint features. Ecol. Evol. 2013, 3, 1902–1914. [Google Scholar] [CrossRef]

- Sherley, R.B.; Burghardt, T.; Barham, P.J.; Campbell, N.; Cuthill, I.C. Spotting the difference: Towards fully-automated population monitoring of African penguins Spheniscus demersus. Endanger. Species Res. 2010, 11, 101–111. [Google Scholar] [CrossRef]

- Arzoumanian, Z.; Holmberg, J.; Norman, B. An astronomical pattern-matching algorithm for computer-aided identification of whale sharks Rhincodon typus. J. Appl. Ecol. 2005, 42, 999–1011. [Google Scholar] [CrossRef]

- Bonnell, T.R.; Henzi, S.P.; Barrett, L. Sparse movement data can reveal social influences on individual travel decisions. arXiv 2015, arXiv:1511.01536. [Google Scholar]

- Hiby, L.; Lovell, P.; Patil, N.; Kumar, N.S.; Gopalaswamy, A.M.; Karanth, K.U. A tiger cannot change its stripes: Using a three-dimensional model to match images of living tigers and tiger skins. Biol. Lett. 2009, 5, 383–386. [Google Scholar] [CrossRef]

- Loos, A.; Ernst, A. An automated chimpanzee identification system using face detection and recognition. EURASIP J. Image Video Process. 2013, 2013, 49. [Google Scholar] [CrossRef]

- Crouse, D.; Jacobs, R.L.; Richardson, Z.; Klum, S.; Jain, A.; Baden, A.L.; Tecot, S.R. LemurFaceID: A face recognition system to facilitate individual identification of lemurs. BMC Zool. 2017, 2, 562. [Google Scholar] [CrossRef]

- Barry, B.; Gonzales-Barron, U.A.; McDonnell, K.; Butler, F.; Ward, S. Using Muzzle Pattern Recognition as a Biometric Approach for Cattle Identification. Trans. ASABE 2007, 50, 1073–1080. [Google Scholar] [CrossRef]

- Awad, A.I.; Zawbaa, H.M.; Mahmoud, H.A.; Nabi, E.H.H.A.; Fayed, R.H.; Hassanien, A.E. A robust cattle identification scheme using muzzle print images. In Proceedings of the 2013 Federated Conference on Computer Science and Information Systems (FedCSIS), Krakow, Poland, 8–11 September 2013. [Google Scholar]

- Zhao, K.; Jin, X.; Ji, J.; Wang, J.; Ma, H.; Zhu, Z. Individual identification of Holstein dairy cows based on detecting and matching feature points in body images. Biosyst. Eng. 2019, 181, 128–139. [Google Scholar] [CrossRef]

- Corkery, G.; Gonzales-Barron, U.A.; Butler, F.; McDonnell, K.; Ward, S. A Preliminary Investigation on Face Recognition as a Biometric Identifier of Sheep. Trans. ASABE 2007, 50, 313–320. [Google Scholar] [CrossRef]

- Barron, U.G.; Corkery, G.; Barry, B.; Butler, F.; McDonnell, K.; Ward, S. Assessment of retinal recognition technology as a biometric method for sheep identification. Comput. Electron. Agric. 2008, 60, 156–166. [Google Scholar] [CrossRef]

- Jarraya, I.; Ouarda, W.; Alimi, A.M. A preliminary investigation on horses recognition using facial texture features. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Hong Kong, China, 9–12 October 2015. [Google Scholar]

- Jarraya, I.; Ouarda, W.; Alimi, A.M. Deep neural network features for horses identity recognition using multiview horses’ face pattern. In Proceedings of the Ninth International Conference on Machine Vision (ICMV 2016), Nice, France, 18–20 November 2016; International Society for Optics and Photonics: Bellingham, WA, USA, 2017. [Google Scholar]

- Hansen, M.F.; Smith, M.L.; Smith, L.N.; Salter, M.G.; Baxter, E.M.; Farish, M.; Grieve, B. Towards on-farm pig face recognition using convolutional neural networks. Comput. Ind. 2018, 98, 145–152. [Google Scholar] [CrossRef]

- Wang, J.; Liu, A.; Xiao, J. Video-Based Pig Recognition with Feature-Integrated Transfer Learning. In Proceedings of the Chinese Conference on Biometric Recognition, Urumchi, China, 11–12 August 2018; Springer: Berlin/Heidelberg, Germany, 2018. [Google Scholar]

- Kim, H.T.; Choi, H.L.; Lee, D.W.; Yoon, Y.C. Recognition of Individual Holstein Cattle by Imaging Body Patterns. Asian-Australas. J. Anim. Sci. 2005, 18, 1194–1198. [Google Scholar] [CrossRef]

- Andrew, W.; Hannuna, S.; Campbell, N.; Burghardt, T. Automatic individual holstein friesian cattle identification via selective local coat pattern matching in RGB-D imagery. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016. [Google Scholar]

- Cai, C.; Li, J. Cattle face recognition using local binary pattern descriptor. In Proceedings of the Signal and Information Processing Association Annual Summit and Conference (APSIPA), 2013 Asia-Pacific, Kaohsiung, Taiwan, 29 October–1 November 2013. [Google Scholar]

- Kumar, S.; Tiwari, S.; Singh, S.K. Face Recognition of Cattle: Can it be Done? Proc. Natl. Acad. Sci. India Sect. A Phys. Sci. 2016, 86, 137–148. [Google Scholar] [CrossRef]

- Qiao, Y.; Su, D.; Kong, H.; Sukkarieh, S.; Lomax, S.; Clark, C. Individual Cattle Identification Using a Deep Learning Based Framework. IFAC-PapersOnLine 2019, 52, 318–323. [Google Scholar] [CrossRef]

- Tharwat, A.; Gaber, T.; Hassanien, A.E. Two biometric approaches for cattle identification based on features and classifiers fusion. Int. J. Image Min. 2015, 1, 342–365. [Google Scholar] [CrossRef]

- Kumar, S.; Singh, S.K.; Singh, A.K. Muzzle point pattern based techniques for individual cattle identification. IET Image Process. 2017, 11, 805–814. [Google Scholar] [CrossRef]

- Kumar, S.; Pandey, A.; Sai, K.; Kumar, S.; Singh, S.K.; Singh, A.K.; Mohan, A. Deep learning framework for recognition of cattle using muzzle point image pattern. Measurement 2018, 116, 1–17. [Google Scholar] [CrossRef]

- Xu, B.; Wang, W.; Guo, L.; Chen, G.; Wang, Y.; Zhang, W.; Li, Y. Evaluation of Deep Learning for Automatic Multi-View Face Detection in Cattle. Agriculture 2021, 11, 1062. [Google Scholar] [CrossRef]

- Baranov, A.S.; Graml, R.; Pirchner, F.; Schmid, D.O. Breed differences and intra-breed genetic variability of dermatoglyphic pattern of cattle. J. Anim. Breed. Genet. 1993, 110, 385–392. [Google Scholar] [CrossRef]

- Kumar, S.; Singh, S.K.; Singh, R.S.; Singh, A.K.; Tiwari, S. Real-time recognition of cattle using animal biometrics. J. Real-Time Image Process. 2016, 13, 505–526. [Google Scholar] [CrossRef]

- Mahmoud, H.A.; Hadad, H.M.R.E. Automatic cattle muzzle print classification system using multiclass support vector machine. Int. J. Image Min. 2015, 1, 126–140. [Google Scholar] [CrossRef]

- Tharwat, A.; Gaber, T.; Hassanien, A.E. Cattle Identification Based on Muzzle Images Using Gabor Features and SVM Classifier; Springer International Publishing: Cham, Switzerland, 2014. [Google Scholar]

- Kumar, S.; Singh, S.K.; Abidi, A.I.; Datta, D.; Sangaiah, A.K. Group Sparse Representation Approach for Recognition of Cattle on Muzzle Point Images. Int. J. Parallel Program. 2017, 46, 812–837. [Google Scholar] [CrossRef]

- Kumar, S.; Singh, S.K. Automatic identification of cattle using muzzle point pattern: A hybrid feature extraction and classification paradigm. Multimedia Tools Appl. 2017, 76, 26551–26580. [Google Scholar] [CrossRef]

- El-Henawy, I.; El Bakry, H.M.; El Hadad, H.M. A new muzzle classification model using decision tree classifier. Int. J. Electron. Inf. Eng. 2017, 6, 12–24. [Google Scholar]

- Gaber, T.; Tharwat, A.; Hassanien, A.E.; Vaclav, S. Biometric cattle identification approach based on Weber’s Local Descriptor and AdaBoost classifier. Comput. Electron. Agric. 2016, 122, 55–66. [Google Scholar] [CrossRef]

- Noviyanto, A.; Arymurthy, A.M. Beef cattle identification based on muzzle pattern using a matching refinement technique in the SIFT method. Comput. Electron. Agric. 2013, 99, 77–84. [Google Scholar] [CrossRef]

- Awad, A.I.; Hassaballah, M. Bag-of-Visual-Words for Cattle Identification from Muzzle Print Images. Appl. Sci. 2019, 9, 4914. [Google Scholar] [CrossRef]

- Tokyo. Canon Lense. Japan-Lense. Available online: https://www.canon.com.au/camera-lenses/ef-s-18-55mm-f-4-5-6-is-stm (accessed on 1 February 2019).

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Du, J. Understanding of Object Detection Based on CNN Family and YOLO. J. Phys. Conf. Ser. 2018, 1004, 012029. [Google Scholar] [CrossRef]

- Deng, J.; Dong, W.; Socher, R.; Li, L.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2009, Miami, FL, USA, 20–25 June 2009. [Google Scholar]

- Clark, A. Pillow (PIL Fork) Documentation; 2015. Available online: https://buildmedia.readthedocs.org/media/pdf/pillow/latest/pillow.pdf (accessed on 12 June 2019).

- VoTT. 2019. Available online: https://github.com/microsoft/VoTT (accessed on 10 May 2019).

- Tharwat, A.; Gaber, T.; Hassanien, A.E.; Hassanien, H.A.; Tolba, M.F. Cattle Identification Using Muzzle Print Images Based on Texture Features Approach; Springer International Publishing: Cham, Switzerland, 2014. [Google Scholar]

- Ahmed, S.; Gaber, T.; Tharwat, A.; Hassanien, A.E.; Snael, V. Muzzle-based cattle identification using speed up robust feature approach. In Proceedings of the 2015 International Conference on Intelligent Networking and Collaborative Systems (INCOS), Taipei, Taiwan, 2–4 September 2015. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Theckedath, D.; Sedamkar, R.R. Detecting Affect States Using VGG16, ResNet50 and SE-ResNet50 Networks. SN Comput. Sci. 2020, 1, 79. [Google Scholar] [CrossRef]

- Al-Haija, Q.A.; Adebanjo, A. Breast Cancer Diagnosis in Histopathological Images Using ResNet-50 Convolutional Neural Network. In Proceedings of the 2020 IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS), Vancouver, BC, Canada, 9–12 September 2020. [Google Scholar]

- Islam, M.; Tasnim, N.; Baek, J.-H. Human Gender Classification Using Transfer Learning via Pareto Frontier CNN Networks. Inventions 2020, 5, 16. [Google Scholar] [CrossRef]

- Niu, W.; Ma, X.; Wang, Y.; Ren, B. 26ms inference time for resnet-50: Towards real-time execution of all dnns on smartphone. arXiv 2019, arXiv:1905.00571. [Google Scholar]

- Shahinfar, S.; Meek, P.; Falzon, G. “How many images do I need?” Understanding how sample size per class affects deep learning model performance metrics for balanced designs in autonomous wildlife monitoring. Ecol. Inform. 2020, 57, 101085. [Google Scholar] [CrossRef]

- Chollet, F. Keras: The Python Deep Learning Library; Astrophysics Source Code Library: New York, NY, USA, 2018. [Google Scholar]

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M. Tensorflow: A system for large-scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), Savannah, GA, USA, 2–4 November 2016. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980. [Google Scholar]

- Tang, Y. Deep learning using linear support vector machines. arXiv 2013, arXiv:1306.0239. [Google Scholar]

- Cunningham, P.; Delany, S.J. k-Nearest neighbour classifiers: (with Python examples). arXiv 2020, arXiv:2004.04523. [Google Scholar]

- Desai, M.; Shah, M. An anatomization on breast cancer detection and diagnosis employing multi-layer perceptron neural network (MLP) and Convolutional neural network (CNN). Clin. eHealth 2020, 4, 1–11. [Google Scholar] [CrossRef]

- Andrew, W.; Greatwood, C.; Burghardt, T. Visual localisation and individual identification of Holstein Friesian cattle via deep learning. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017. [Google Scholar]

- Andrew, W.; Gao, J.; Mullan, S.; Campbell, N.; Dowsey, A.W.; Burghardt, T. Visual identification of individual Holstein-Friesian cattle via deep metric learning. Comput. Electron. Agric. 2021, 185, 106133. [Google Scholar] [CrossRef]

- El-Henawy, I.; El-Bakry, H.; El-Hadad, H. Muzzle Classification Using Neural Networks. Int. Arab. J. Inf. Technol. (IAJIT) 2017, 14, 464–472. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).