MSDSI-FND: Multi-Stage Detection Model of Influential Users’ Fake News in Online Social Networks

Abstract

1. Introduction

- A novel English-language dataset collected from X, comprising influential users’ COVID-19-related tweets and their followers’ engagements, with each tweet manually labeled as fake or real news.

- An exploratory data analysis of the created dataset, using in-depth statistical analysis to understand and visualize its properties.

- MSDSI-FND, a multi-stage detection system for online fake news disseminated by influential users, which was developed and tested and showed promising detection performance.

2. Related Work

2.1. Types of Fake News

2.2. Fake News in OSNs

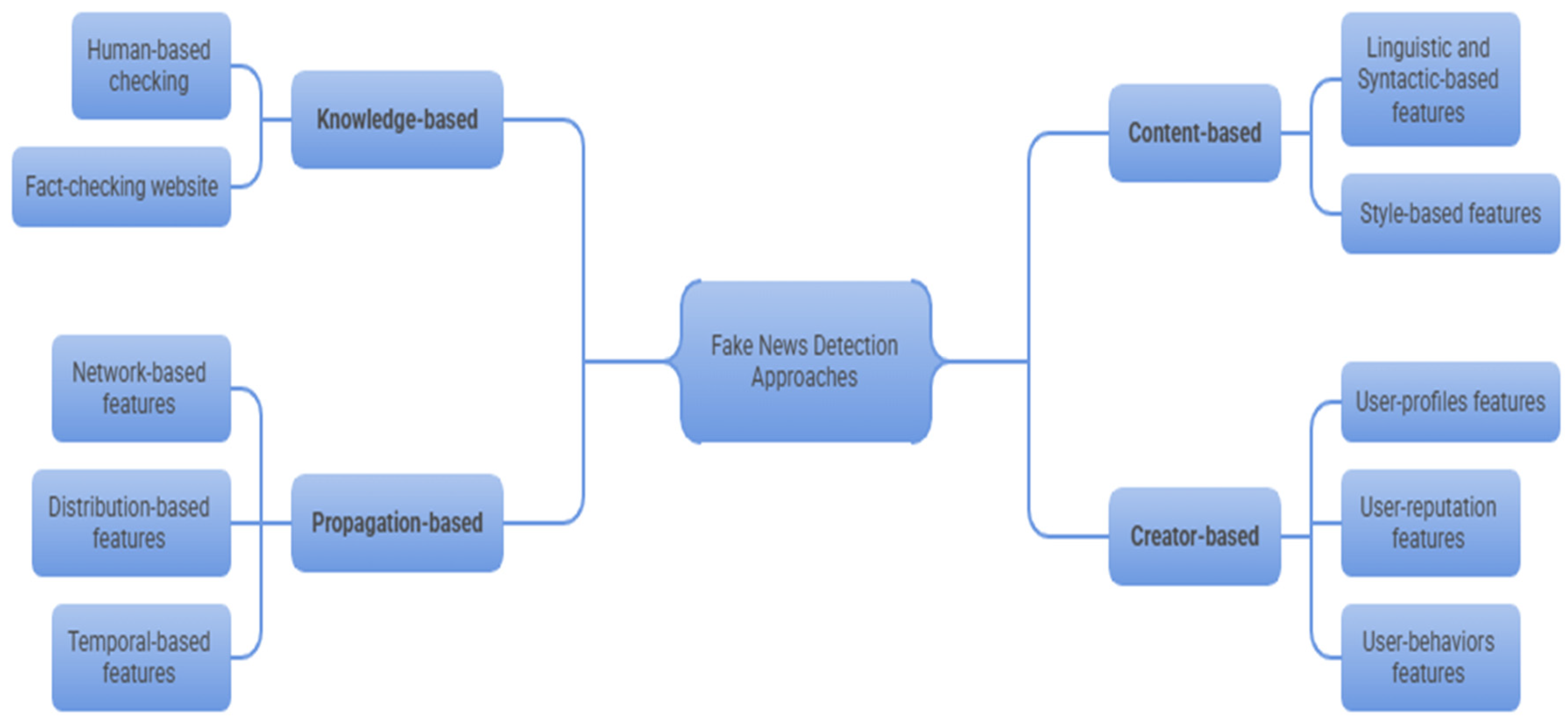

2.3. Fake News Detection Approaches and Techniques

2.3.1. Knowledge-Based Fake News Detection

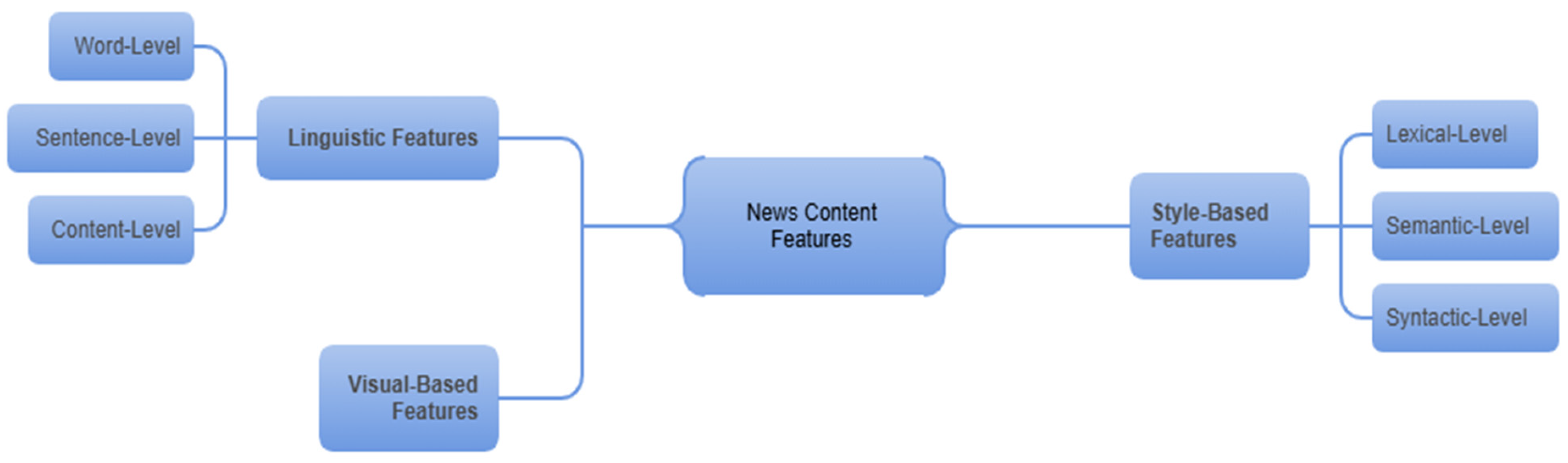

2.3.2. Content-Based Fake News Detection

2.3.3. Creator/Source-Based Fake News Detection

2.3.4. Propagation-Based Fake News Detection

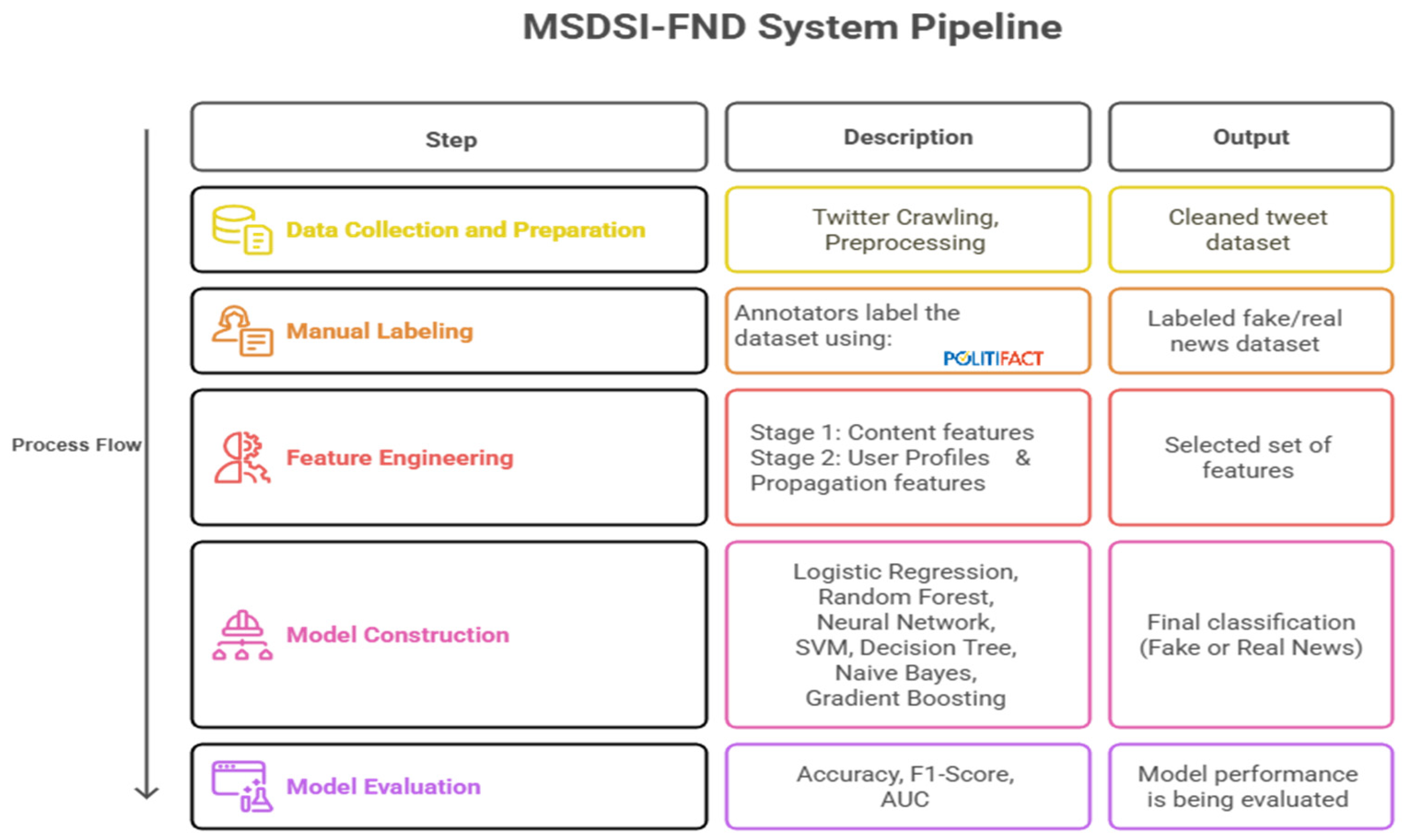

3. Methodology

- Construct a labeled dataset from X specific to this study, as described in detail later.

- Analyze and model the content-based features of fake news tweets shared by influential users by examining their tweets and followers’ replies.

- Analyze and model influential user profiles and social context features related to fake news by exploring followers’ sharing behaviors along with news tweet cascades.

- Develop and evaluate a detection system that leverages extracted features to identify fake news shared on OSNs by influential users.

| Feature Type | Feature Description |
|---|---|
| Content-Based | Used to extract the textual features of influential users’ fake/real news posts. |
| User Profile | Explicit user profile features include the basic user information from the X API (e.g., account name, registration time, verified status, and number of followers). Implicit user profile features can only be obtained with psycholinguistic pattern tools, such as LIWC. |
| Hierarchical Propagation | The distribution pattern of online news and the interaction between online users, as in the features presented in [14] and [48]. |

3.1. Data Collection and Preparation

3.1.1. Data Collection

- Verified status on X, ensuring that the accounts belonged to authentic public figures rather than bots or anonymous users.

- Public-figure identity, such as politicians, journalists, scientists, or media personalities, whose posts naturally receive wide visibility and impact public discourse.

- Follower count exceeding 10,000, ensuring that each selected account had substantial audience reach and engagement potential.
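The three selection criteria above can be read as a simple conjunctive filter. The following is a minimal sketch only: the `Account` record, its field names, and the sample handles are hypothetical, and the `is_public_figure` flag is assumed to come from manual review rather than from the X API.

```python
from dataclasses import dataclass

MIN_FOLLOWERS = 10_000  # threshold stated in the selection criteria


@dataclass
class Account:
    """Hypothetical account record; field names are illustrative only."""
    handle: str
    verified: bool
    is_public_figure: bool  # assumed to be assigned by manual review
    followers: int


def is_influential(account: Account) -> bool:
    """Return True only if all three criteria hold."""
    return (
        account.verified
        and account.is_public_figure
        and account.followers > MIN_FOLLOWERS  # "exceeding", so strictly greater
    )


# Made-up sample accounts for illustration.
candidates = [
    Account("scientist_a", verified=True, is_public_figure=True, followers=250_000),
    Account("anon_b", verified=False, is_public_figure=False, followers=90_000),
    Account("journalist_c", verified=True, is_public_figure=True, followers=10_000),
]

selected = [a.handle for a in candidates if is_influential(a)]
print(selected)
```

Note that an account with exactly 10,000 followers is excluded under this reading, because the criterion requires the count to exceed the threshold.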

3.1.2. Data Preprocessing

- Text Cleaning: Removing URLs, mentions, hashtags, special characters, and punctuation from the tweet text.

- Tokenization: Splitting the text into individual words or tokens.

- Stop Word Removal: Eliminating common words (e.g., “the,” “a,” “is”) that do not contribute significantly to the meaning.

- Stemming/Lemmatization: Reducing words to their root form to normalize the vocabulary.

- Lowercasing: Converting all text to lowercase to ensure consistency.
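The preprocessing steps listed above could be chained roughly as follows. This is a sketch under stated assumptions, not the authors' pipeline: the regular expressions, the tiny `STOP_WORDS` set, and the choice to drop whole hashtag tokens are illustrative. Lowercasing is applied first here so that the later patterns stay simple, and stemming/lemmatization is omitted because it would normally rely on an external library such as NLTK.

```python
import re

# Illustrative subset only; a real run would use a full stop-word list.
STOP_WORDS = {"the", "a", "an", "is", "are", "of", "to", "and", "in"}


def preprocess(tweet: str) -> list[str]:
    """Clean a tweet and return its remaining tokens."""
    text = tweet.lower()                                # lowercasing
    text = re.sub(r"https?://\S+|www\.\S+", " ", text)  # remove URLs
    text = re.sub(r"[@#]\w+", " ", text)                # remove mentions and hashtags
    text = re.sub(r"[^a-z0-9\s]", " ", text)            # remove punctuation and special characters
    tokens = text.split()                               # whitespace tokenization
    return [t for t in tokens if t not in STOP_WORDS]   # stop-word removal


example = "The vaccine is SAFE! Read https://example.com @who #COVID19"
print(preprocess(example))
```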

3.2. Manual Labeling

- First, the annotators checked whether the tweet had been flagged or labeled as misleading by X itself. If so, it was categorized as fake news, as shown in Figure 5.

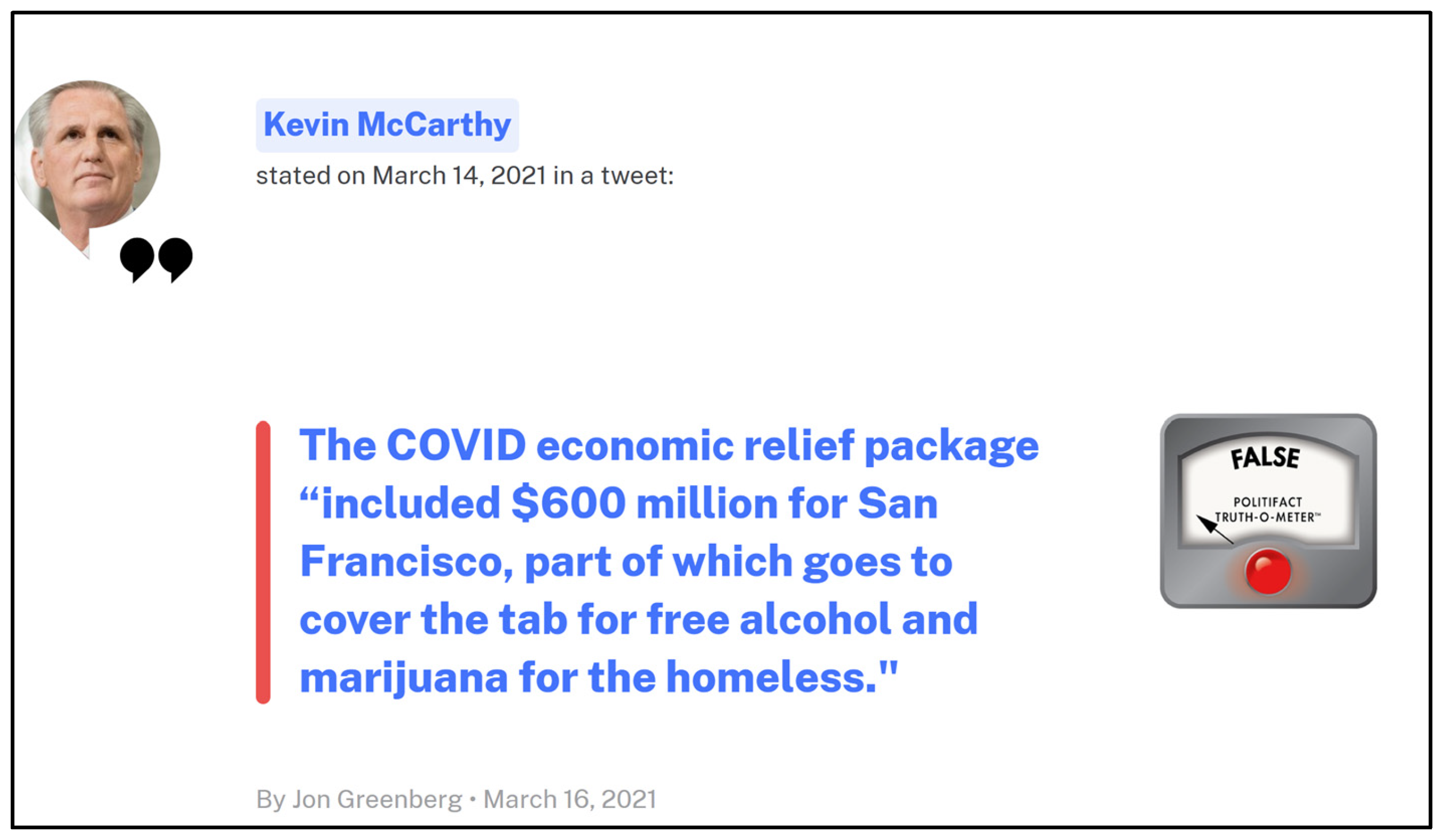

- If no platform-based flag was found, the annotators cross-verified the content using PolitiFact.com, a well-established fact-checking organization that evaluates claims based on evidence from multiple reputable sources, as shown in Figure 6.

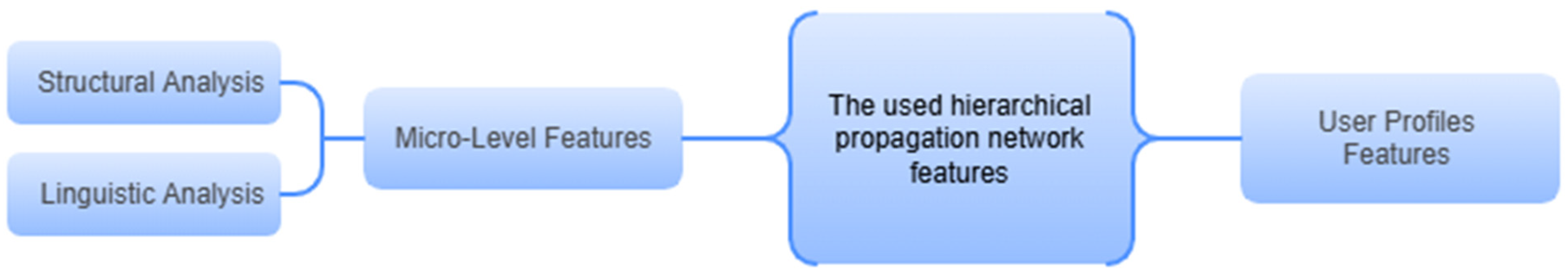

3.3. Feature Engineering

3.3.1. Stage 1: Content Features Extraction and Selection

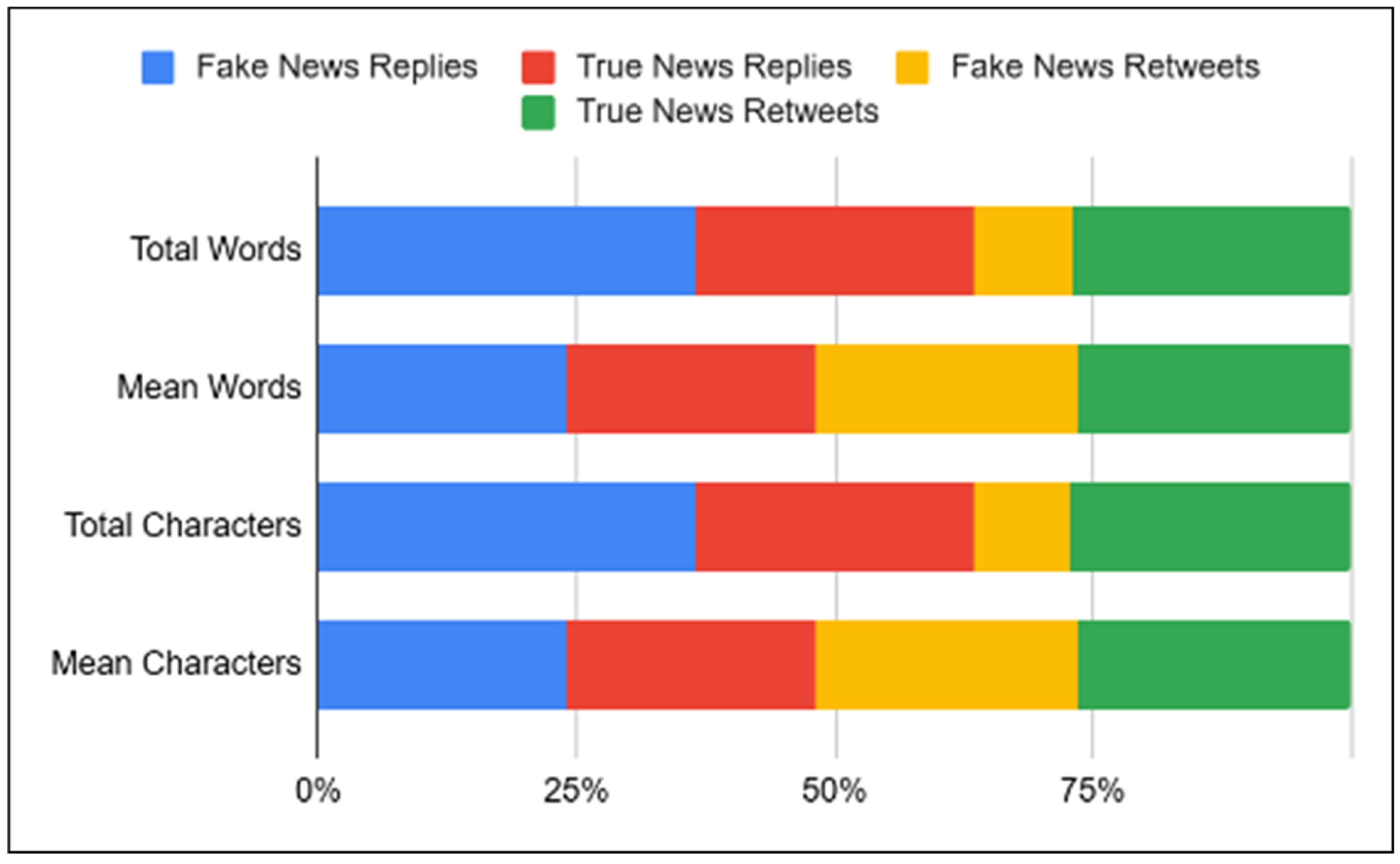

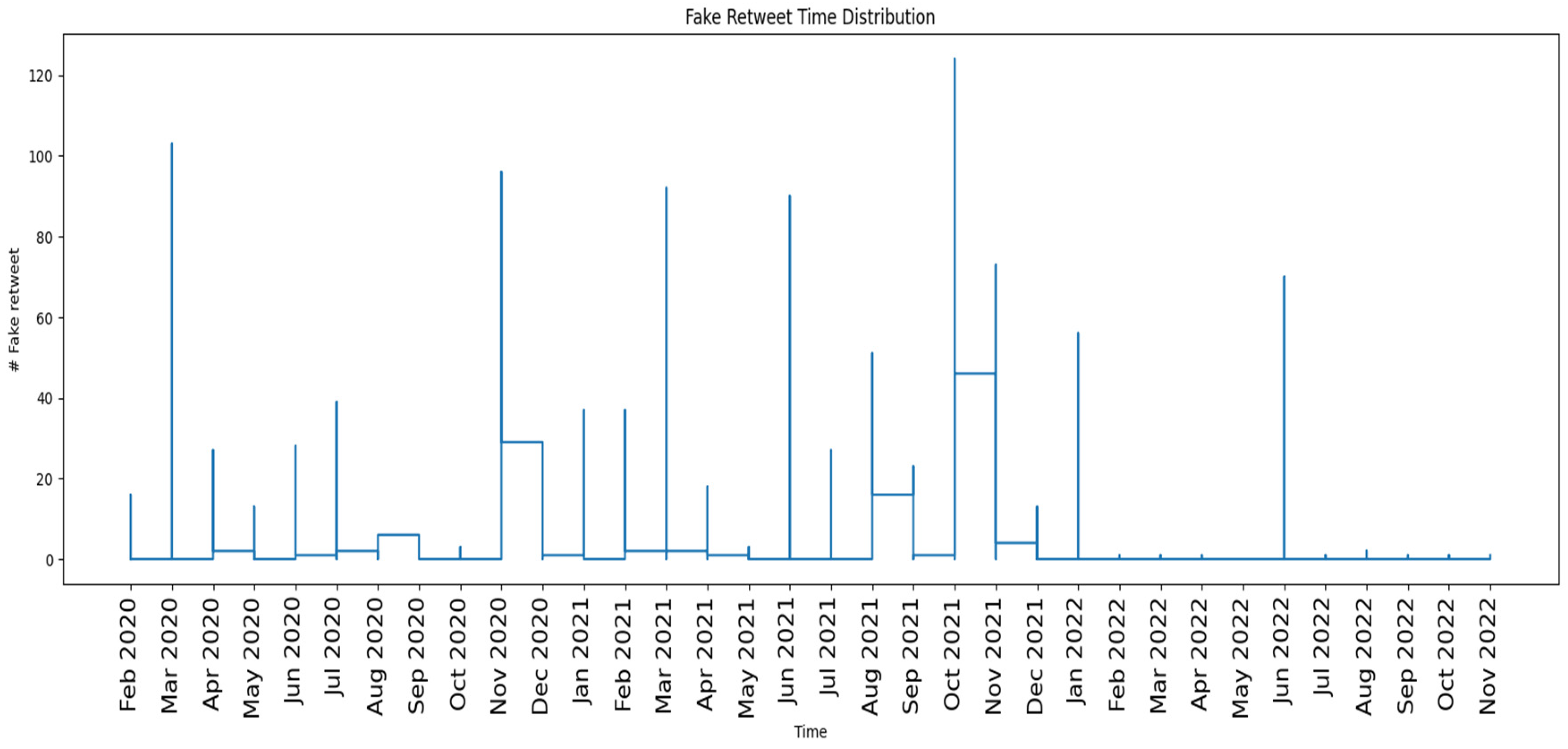

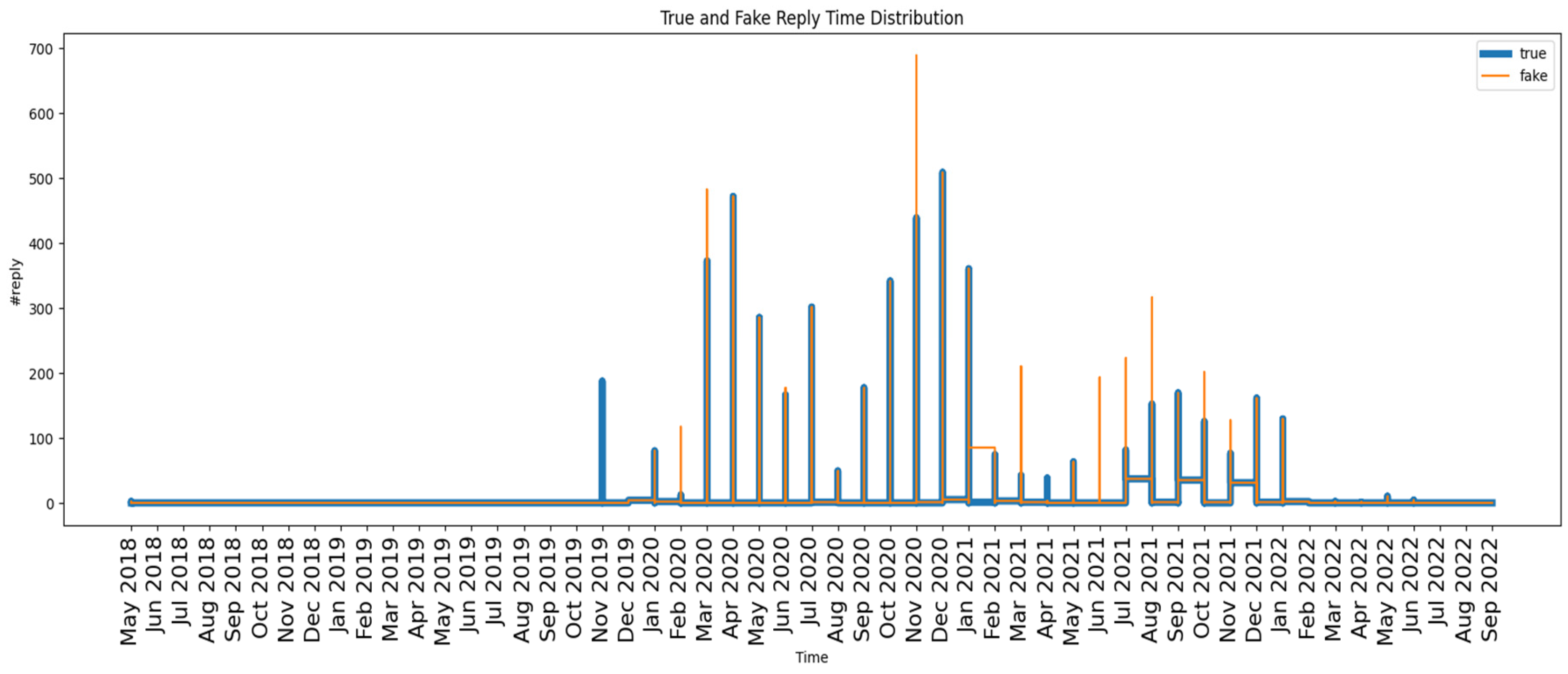

Exploratory Data Analysis

Linguistic Inquiry and Word Count: LIWC

3.3.2. Stage 2: User Profiles and Propagation Features Extraction and Selection

3.4. Model Construction

3.5. Model Evaluation

4. Results and Discussion

Ablation Study on Multi-Stage Feature Contributions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Wu, L.; Morstatter, F.; Carley, K.M.; Liu, H. Misinformation in social media: Definition, Manipulation, and Detection. SIGKDD Explor. Newsl. 2019, 21, 80–90. [Google Scholar] [CrossRef]

- Shu, K.; Sliva, A.; Wang, S.; Tang, J.; Liu, H. Fake News Detection on Social Media: A Data Mining Perspective. ACM SIGKDD Explor. Newsl. 2017, 19, 15. [Google Scholar] [CrossRef]

- Vosoughi, S.; Roy, D.; Aral, S. The Spread of True and False News Online. Science 2018, 359, 1146–1151. [Google Scholar] [CrossRef] [PubMed]

- Twitter Permanent Suspension of @realDonaldTrump. Available online: https://blog.x.com/en_us/topics/company/2020/suspension (accessed on 27 May 2021).

- Gupta, A.; Kumaraguru, P.; Castillo, C.; Meier, P. TweetCred: Real-Time Credibility Assessment of Content on Twitter. In Social Informatics; Aiello, L.M., McFarland, D., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2014; Volume 8851, pp. 228–243. ISBN 978-3-319-13733-9. [Google Scholar]

- Here’s How We’re Using AI to Help Detect Misinformation. 2020. Available online: https://ai.meta.com/blog/heres-how-were-using-ai-to-help-detect-misinformation/ (accessed on 22 May 2021).

- Zhou, X.; Zafarani, R. A Survey of Fake News: Fundamental Theories, Detection Methods, and Opportunities. ACM Comput. Surv. 2020, 53, 109. [Google Scholar] [CrossRef]

- Bondielli, A.; Marcelloni, F. A Survey on Fake News and Rumour Detection Techniques. Inf. Sci. 2019, 497, 38–55. [Google Scholar] [CrossRef]

- Rubin, V.L.; Chen, Y.; Conroy, N.K. Deception Detection for News: Three Types of Fakes. Proc. Assoc. Inf. Sci. Technol. 2015, 52, 1–4. [Google Scholar] [CrossRef]

- Zubiaga, A.; Aker, A.; Bontcheva, K.; Liakata, M.; Procter, R. Detection and Resolution of Rumours in Social Media: A Survey. ACM Comput. Surv. 2018, 51, 1–36. [Google Scholar] [CrossRef]

- Kshetri, N.; Voas, J. The Economics of ‘Fake News’. IT Prof. 2017, 19, 8–12. [Google Scholar] [CrossRef]

- Shu, K.; Wang, S.; Lee, D.; Liu, H. Disinformation, Misinformation, and Fake News in Social Media: Emerging Research Challenges and Opportunities; Shu, K., Wang, S., Lee, D., Liu, H., Eds.; Lecture Notes in Social Networks; Springer International Publishing: Cham, Switzerland, 2020; ISBN 978-3-030-42698-9. [Google Scholar]

- Google Trends. “Fake News”. Available online: https://trends.google.com/trends/explore?date=2013-12-06%202021-03-06&geo=US&q=fake%20news (accessed on 10 October 2025).

- Shu, K.; Mahudeswaran, D.; Wang, S.; Liu, H. Hierarchical Propagation Networks for Fake News Detection: Investigation and Exploitation. Proc. Int. AAAI Conf. Web Soc. Media 2020, 14, 626–637. [Google Scholar] [CrossRef]

- Sharma, K.; Qian, F.; Jiang, H.; Ruchansky, N.; Zhang, M.; Liu, Y. Combating Fake News: A Survey on Identification and Mitigation Techniques. ACM Trans. Intell. Syst. Technol. 2019, 10, 1–42. [Google Scholar] [CrossRef]

- Delirrad, M.; Mohammadi, A.B. New Methanol Poisoning Outbreaks in Iran Following COVID-19 Pandemic. Alcohol Alcohol. 2020, 55, 347–348. [Google Scholar] [CrossRef]

- Ferrara, E.; Varol, O.; Davis, C.; Menczer, F.; Flammini, A. The Rise of Social Bots. Commun. ACM 2016, 59, 96–104. [Google Scholar] [CrossRef]

- Shao, C.; Ciampaglia, G.L.; Varol, O.; Yang, K.; Flammini, A.; Menczer, F. The Spread of Low-Credibility Content by Social Bots. Nat. Commun. 2018, 9, 4787. [Google Scholar] [CrossRef]

- Jamieson, K.H.; Cappella, J.N. Echo Chamber: Rush Limbaugh and the Conservative Media Establishment; Oxford University Press: Oxford, UK, 2008. [Google Scholar]

- Pariser, E. The Filter Bubble: How the New Personalized Web Is Changing What We Read and How We Think; Penguin: London, UK, 2011. [Google Scholar]

- Ciampaglia, G.L.; Shiralkar, P.; Rocha, L.M.; Bollen, J.; Menczer, F.; Flammini, A. Computational Fact Checking from Knowledge Networks. PLoS ONE 2015, 10, e0128193. [Google Scholar] [CrossRef]

- Luhn, H.P. A Statistical Approach to Mechanized Encoding and Searching of Literary Information. IBM J. Res. Dev. 1957, 1, 309–317. [Google Scholar] [CrossRef]

- Jones, K.S. A Statistical Interpretation of Term Specificity and Its Application in Retrieval. J. Doc. 1972, 28, 11–21. [Google Scholar] [CrossRef]

- Castelo, S.; Almeida, T.; Elghafari, A.; Santos, A.; Pham, K.; Nakamura, E.; Freire, J. A Topic-Agnostic Approach for Identifying Fake News Pages. In Companion Proceedings of the 2019 World Wide Web Conference; ACM Digital Library: New York, NY, USA, 2019; pp. 975–980. [Google Scholar] [CrossRef]

- Pérez-Rosas, V.; Kleinberg, B.; Lefevre, A.; Mihalcea, R. Automatic Detection of Fake News. arXiv 2017, arXiv:1708.07104. [Google Scholar] [CrossRef]

- Potthast, M.; Kiesel, J.; Reinartz, K.; Bevendorff, J.; Stein, B. A Stylometric Inquiry into Hyperpartisan and Fake News. arXiv 2017, arXiv:1702.05638. [Google Scholar] [CrossRef]

- Castillo, C.; Mendoza, M.; Poblete, B. Information Credibility on Twitter. In Proceedings of the 20th International Conference on World Wide Web—WWW ’11, Hyderabad, India, 28 March–1 April 2011; ACM Press: Hyderabad, India, 2011; p. 675. [Google Scholar]

- Abbasi, M.-A.; Liu, H. Measuring User Credibility in Social Media. In Social Computing, Behavioral-Cultural Modeling and Prediction; Greenberg, A.M., Kennedy, W.G., Bos, N.D., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2013; Volume 7812, pp. 441–448. ISBN 978-3-642-37209-4. [Google Scholar]

- Zhang, X.; Ghorbani, A.A. An Overview of Online Fake News: Characterization, Detection, and Discussion. Inf. Process. Manag. 2020, 57, 102025. [Google Scholar] [CrossRef]

- Viviani, M.; Pasi, G. Credibility in Social Media: Opinions, News, and Health Information-a Survey: Credibility in Social Media. WIREs Data Min. Knowl. Discov. 2017, 7, e1209. [Google Scholar] [CrossRef]

- Liu, Y.; Xu, S. Detecting Rumors Through Modeling Information Propagation Networks in a Social Media Environment. IEEE Trans. Comput. Soc. Syst. 2016, 3, 46–62. [Google Scholar] [CrossRef]

- Islam, M.R.; Liu, S.; Wang, X.; Xu, G. Deep Learning for Misinformation Detection on Online Social Networks: A Survey and New Perspectives. Soc. Netw. Anal. Min. 2020, 10, 82. [Google Scholar] [CrossRef] [PubMed]

- Ma, J.; Gao, W.; Mitra, P.; Kwon, S.; Jansen, B.J.; Wong, K.F.; Cha, M. Detecting Rumors from Microblogs with Recurrent Neural Networks. In Proceedings of the 25th International Joint Conference on Artificial Intelligence (IJCAI 2016), New York, NY, USA, 9–15 July 2016; AAAI Press: New York, NY, USA, 2016; p. 9. [Google Scholar]

- Al-Sarem, M.; Boulila, W.; Al-Harby, M.; Qadir, J.; Alsaeedi, A. Deep Learning-Based Rumor Detection on Microblogging Platforms: A Systematic Review. IEEE Access 2019, 7, 152788–152812. [Google Scholar] [CrossRef]

- Young, T.; Hazarika, D.; Poria, S.; Cambria, E. Recent Trends in Deep Learning Based Natural Language Processing. arXiv 2018, arXiv:1708.02709. [Google Scholar] [CrossRef]

- Hassan, N.; Gomaa, W.; Khoriba, G.; Haggag, M. Credibility Detection in Twitter Using Word N-Gram Analysis and Supervised Machine Learning Techniques. Int. J. Intell. Eng. Syst. 2020, 13, 291–300. [Google Scholar] [CrossRef]

- Gravanis, G.; Vakali, A.; Diamantaras, K.; Karadais, P. Behind the Cues: A Benchmarking Study for Fake News Detection. Expert Syst. Appl. 2019, 128, 201–213. [Google Scholar] [CrossRef]

- Alghamdi, J.; Lin, Y.; Luo, S. Towards COVID-19 Fake News Detection Using Transformer-Based Models. Knowl.-Based Syst. 2023, 274, 110642. [Google Scholar] [CrossRef]

- Padalko, H.; Chomko, V.; Chumachenko, D. A Novel Approach to Fake News Classification Using LSTM-Based Deep Learning Models. Front. Big Data 2024, 6, 1320800. [Google Scholar] [CrossRef]

- Hamed, S.K.; Ab Aziz, M.J.; Yaakub, M.R. Fake News Detection Model on Social Media by Leveraging Sentiment Analysis of News Content and Emotion Analysis of Users’ Comments. Sensors 2023, 23, 1748. [Google Scholar] [CrossRef]

- Hashmi, E.; Yayilgan, S.Y.; Yamin, M.M.; Ali, S.; Abomhara, M. Advancing Fake News Detection: Hybrid Deep Learning With FastText and Explainable AI. IEEE Access 2024, 12, 44462–44480. [Google Scholar] [CrossRef]

- Choudhry, A.; Khatri, I.; Jain, M.; Vishwakarma, D.K. An Emotion-Aware Multitask Approach to Fake News and Rumor Detection Using Transfer Learning. IEEE Trans. Comput. Soc. Syst. 2024, 11, 588–599. [Google Scholar] [CrossRef]

- Alghamdi, J.; Lin, Y.; Luo, S. Does Context Matter? Effective Deep Learning Approaches to Curb Fake News Dissemination on Social Media. Appl. Sci. 2023, 13, 3345. [Google Scholar] [CrossRef]

- Raza, S.; Ding, C. Fake News Detection Based on News Content and Social Contexts: A Transformer-Based Approach. Int. J. Data Sci. Anal. 2022, 13, 335–362. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Zhang, Y.; Li, X.; Yu, X. COVID-19 Fake News Detection Using Bidirectional Encoder Representations from Transformers Based Models. arXiv 2021, arXiv:2109.14816. [Google Scholar] [CrossRef]

- Azizah, S.F.N.; Cahyono, H.D.; Sihwi, S.W.; Widiarto, W. Performance Analysis of Transformer Based Models (BERT, ALBERT, and RoBERTa) in Fake News Detection. In Proceedings of the 2023 6th International Conference on Information and Communications Technology (ICOIACT), Yogyakarta, Indonesia, 10 November 2023; IEEE: New York, NY, USA, 2024; pp. 425–430. [Google Scholar]

- Raza, S.; Paulen-Patterson, D.; Ding, C. Fake News Detection: Comparative Evaluation of BERT-like Models and Large Language Models with Generative AI-Annotated Data. Knowl. Inf. Syst. 2025, 67, 3267–3292. [Google Scholar] [CrossRef]

- Shu, K.; Zhou, X.; Wang, S.; Zafarani, R.; Liu, H. The Role of User Profiles for Fake News Detection. In Proceedings of the 2019 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining, Vancouver, BC, Canada, 27–30 August 2019; ACM: Vancouver, BC, Canada, 2019; pp. 436–439. [Google Scholar]

- Sahoo, K.; Samal, A.K.; Pramanik, J.; Pani, S.K. Exploratory Data Analysis Using Python. Int. J. Innov. Technol. Explor. Eng. 2019, 8, 4727–4735. [Google Scholar] [CrossRef]

- Tausczik, Y.R.; Pennebaker, J.W. The Psychological Meaning of Words: LIWC and Computerized Text Analysis Methods. J. Lang. Soc. Psychol. 2009, 29, 24–54. [Google Scholar] [CrossRef]

| Website | Theme | Analyzed Content | Classification Labels |
|---|---|---|---|
| FactCheck.org | American politics | News, interviews, debates, TV shows, and speeches | True, No Evidence, False |
| PolitiFact | American politics | Statements | True, Mostly True, Half True, Mostly False, False, Pants on Fire |
| Snopes | Politics and other general topics | News articles and videos | True, Mostly True, Mixture, Mostly False, False, Unproven, Outdated, Miscaptioned, Correct Attribution, Misattributed, Scam, Legend |
| The Washington Post Fact Checker | American politics | Statements and claims | One Pinocchio, Two Pinocchios, Three Pinocchios, Four Pinocchios |
| Misbar | Claims on social media (specifically in the Middle East, North Africa, and the US) | Statements and claims | Fake, Misleading, True, Myth, Selective, Suspicious, Commotion, Satire |

| FND Approach | Goal | Context | Feature Set |
|---|---|---|---|
| Knowledge-Based | Assesses the authenticity of the news | News content | Textual and visual features |
| Content-Based | Analyzes news content based on an evaluation of its intention | News content | Textual and visual features |
| Creator/Source-Based | Captures the specific characteristics of suspicious user accounts or bots | User profile | Users’ profile data: implicit and explicit |
| Propagation-Based | Exploits social context information | User social engagements | User posts, retweets, likes, and temporal features |

| Ref. | Technique | Features | Dataset(s) | Accuracy |
|---|---|---|---|---|
| [36] | LSVM + TF-IDF | Unigrams, bigrams | Twitter posts | 84.9% |
| [37] | ML benchmarking pipeline | Linguistic features | UNBiased (UNB) | 95% |
| [40] | Bi-LSTM | Sentiment and emotion from news + comments | Fakeddit | 96.77% |
| [41] | CNN + LSTM + FastText | Textual + semantic | WELFake, FakeNewsNet, FakeNewsPrediction | 0.99, 0.97, 0.99, respectively |
| [43] | BERTbase–CNN–BiGRU–ATT | Content + user behavior | FakeNewsNet | 92% |
| [43] | BERTbase–BiGRU–CNN–ATT | Content + user behavior | FakeNewsNet | 87% |
| [43] | BERTbase–CNN–BiLSTM | Content + user behavior | FakeNewsNet | 91% |
| [44] | Transformer encoder–decoder | Content, social context, detection | NELA-GT-19 and Fakeddit | 74% |
| [45] | Fine-tuned BERT + CNN + Bi-LSTM | COVID-19 tweets | COVID-19 dataset | 80% |
| [46] | BERT, ALBERT, RoBERTa | Text features | Indonesian dataset | 87.6% |

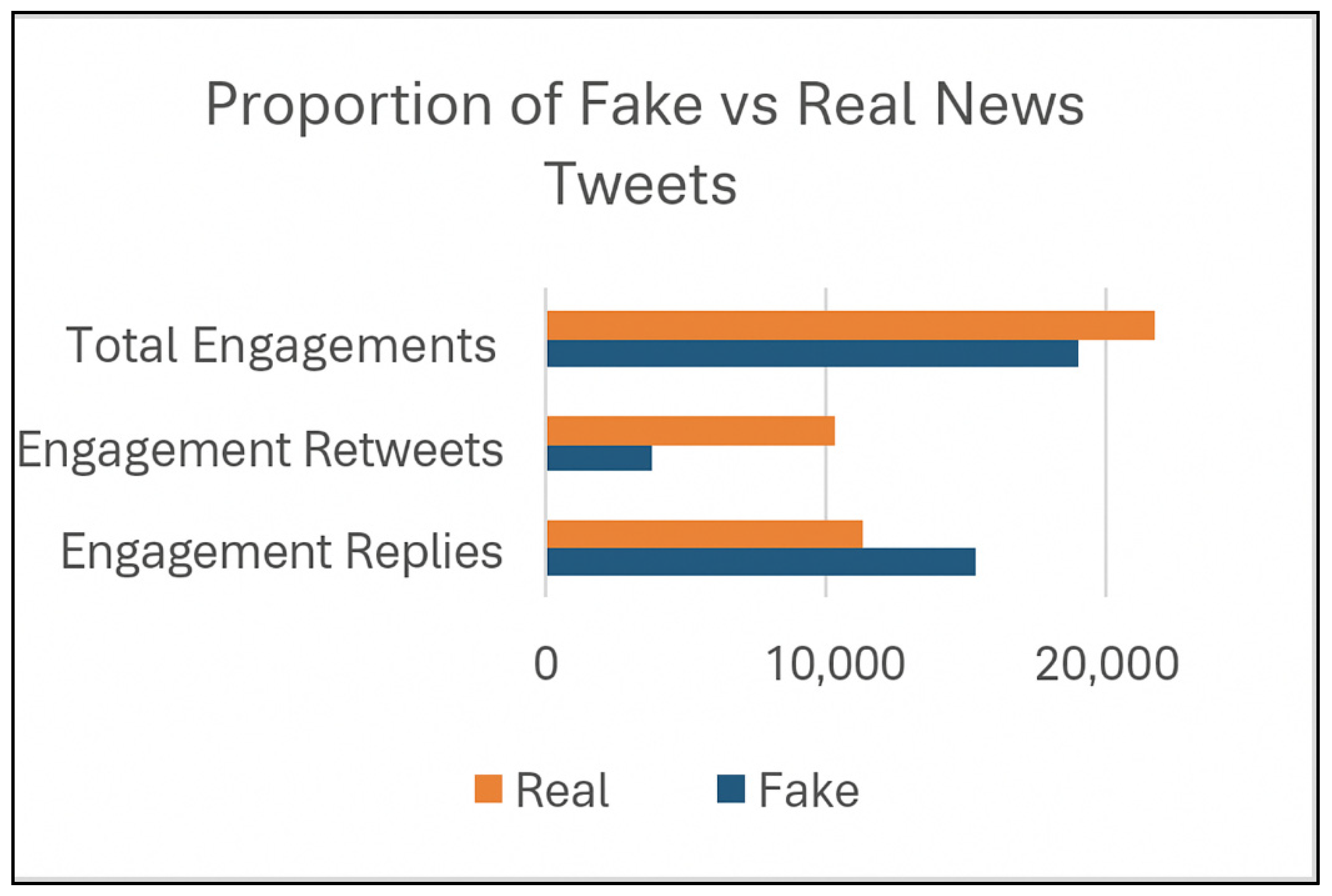

| Category | Fake | Real | Total |
|---|---|---|---|
| Root tweets | 42 | 26 | 68 |
| Engagement replies | 15,362 | 11,340 | 26,702 |
| Engagement retweets | 3,733 | 10,327 | 14,060 |
| Total engagements | 19,095 | 21,667 | 40,762 |

| Feature Type | Feature |
|---|---|
| Structural | S10: Tree depth |
| Structural | S11: Number of nodes |
| Structural | S12: Maximum out-degree |
| Structural | S13: Number of cascades with replies |
| Structural | S14: Fraction of cascades with replies |
| User Profile | U1: Nodes’ average friends |
| User Profile | U2: Source friends |
| User Profile | U3: Nodes’ average followers |
| User Profile | U4: Source followers |
| User Profile | U5: Nodes’ average favorites |
| User Profile | U6: Source favorites |
| User Profile | U7: Nodes’ average listed |
| User Profile | U8: Source listed |
| User Profile | U9: Nodes’ average statuses |
| User Profile | U10: Source statuses |
| Linguistic | L1: Sentiment ratio of all replies |
| Linguistic | L2: Average sentiment of all replies |
| Linguistic | L3: Average sentiment of first-level replies |
| Linguistic | L4: Average sentiment of replies in deepest cascade |
| Linguistic | L5: Average sentiment of first-level replies in deepest cascade |
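To make the structural features concrete, the sketch below computes S10 (tree depth), S11 (number of nodes), and S12 (maximum out-degree) for a single cascade. It is an illustration only: the edge-list representation, the node names, and the convention that depth counts edges on the longest root-to-leaf path are assumptions, not details taken from the study.

```python
from collections import defaultdict


def structural_features(edges, root):
    """Compute S10-S12 for a cascade given as (parent, child) edges."""
    children = defaultdict(list)
    for parent, child in edges:
        children[parent].append(child)

    depth = 0        # S10: longest root-to-leaf path, counted in edges (assumed convention)
    n_nodes = 0      # S11: number of nodes reachable from the root
    max_out = 0      # S12: largest number of direct children of any node
    stack = [(root, 0)]
    while stack:     # iterative depth-first traversal
        node, level = stack.pop()
        kids = children.get(node, [])
        n_nodes += 1
        depth = max(depth, level)
        max_out = max(max_out, len(kids))
        stack.extend((kid, level + 1) for kid in kids)

    return {"S10_tree_depth": depth, "S11_num_nodes": n_nodes, "S12_max_out_degree": max_out}


# Made-up cascade: a root tweet t0 with three replies, one of which starts a chain.
edges = [("t0", "r1"), ("t0", "r2"), ("t0", "r3"), ("r1", "r4"), ("r4", "r5")]
features = structural_features(edges, "t0")
print(features)
```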

| Classifier | Key Parameters | Justification |
|---|---|---|
| Logistic Regression | solver = "liblinear" | Suitable for binary classification. |
| Naive Bayes | default parameters | Used as a simple probabilistic baseline model. |
| Random Forest | max_depth = 2, random_state = 0 | Depth limited to prevent overfitting; random state ensures reproducibility. |
| Decision Tree | random_state = 0 | Default settings used; random state for consistent results. |
| Gradient Boosting | n_estimators = 10, learning_rate = 1.0, max_depth = 1, random_state = 0 | Parameters tuned on validation data for a balance between bias and variance. |
| Neural Network (MLP) | solver = "lbfgs", alpha = 1 × 10⁻⁵, hidden_layer_sizes = (20, 2), random_state = 0 | Small hidden layers and regularization are chosen for stable convergence on limited data. |
| SVM | kernel = "linear", probability = True, random_state = 0 | Linear kernel preferred for interpretability; probability enabled for AUC computation. |
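The parameter names in the table match scikit-learn's estimator arguments, so the configuration could plausibly be expressed as below. This is a sketch that assumes scikit-learn was the library used; the mapping of "Naive Bayes" to `GaussianNB` in particular is a guess, since the table does not name the variant. The `CLASSIFIER_PARAMS` dictionary and `build_classifiers` helper are hypothetical names.

```python
from importlib import import_module

# (module, class name, keyword arguments) per classifier, following the table.
CLASSIFIER_PARAMS = {
    "Logistic Regression": ("sklearn.linear_model", "LogisticRegression",
                            {"solver": "liblinear"}),
    # Assumption: the Naive Bayes variant is not stated; GaussianNB is a guess.
    "Naive Bayes": ("sklearn.naive_bayes", "GaussianNB", {}),
    "Random Forest": ("sklearn.ensemble", "RandomForestClassifier",
                      {"max_depth": 2, "random_state": 0}),
    "Decision Tree": ("sklearn.tree", "DecisionTreeClassifier",
                      {"random_state": 0}),
    "Gradient Boosting": ("sklearn.ensemble", "GradientBoostingClassifier",
                          {"n_estimators": 10, "learning_rate": 1.0,
                           "max_depth": 1, "random_state": 0}),
    "Neural Network (MLP)": ("sklearn.neural_network", "MLPClassifier",
                             {"solver": "lbfgs", "alpha": 1e-5,
                              "hidden_layer_sizes": (20, 2), "random_state": 0}),
    "SVM": ("sklearn.svm", "SVC",
            {"kernel": "linear", "probability": True, "random_state": 0}),
}


def build_classifiers():
    """Instantiate every estimator; scikit-learn is imported only when this is called."""
    return {
        name: getattr(import_module(module), cls)(**kwargs)
        for name, (module, cls, kwargs) in CLASSIFIER_PARAMS.items()
    }
```

Calling `build_classifiers()` returns a dictionary of unfitted estimators that can each be trained with `fit(X, y)` on the same feature matrix, which keeps the comparison across the seven models uniform.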

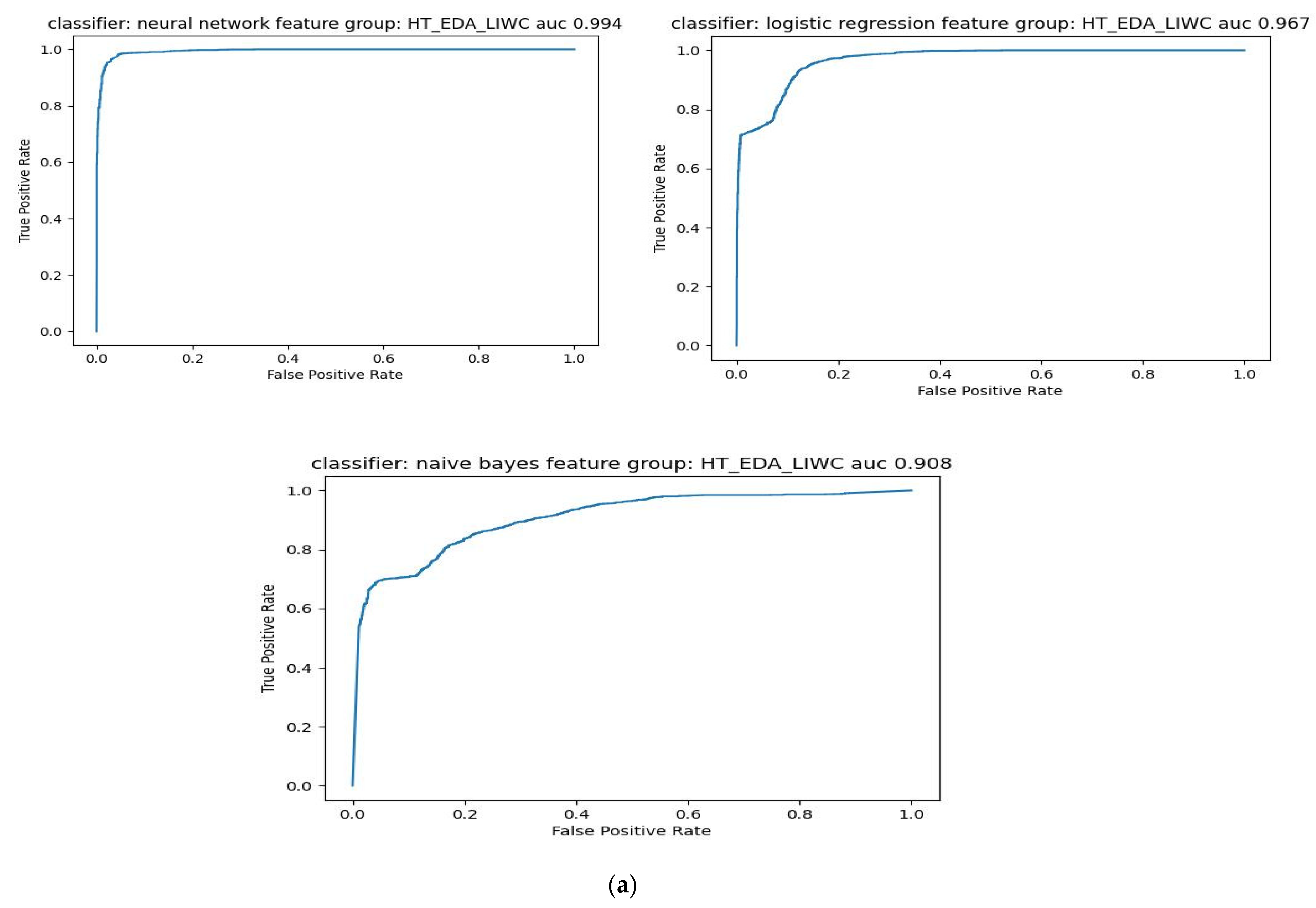

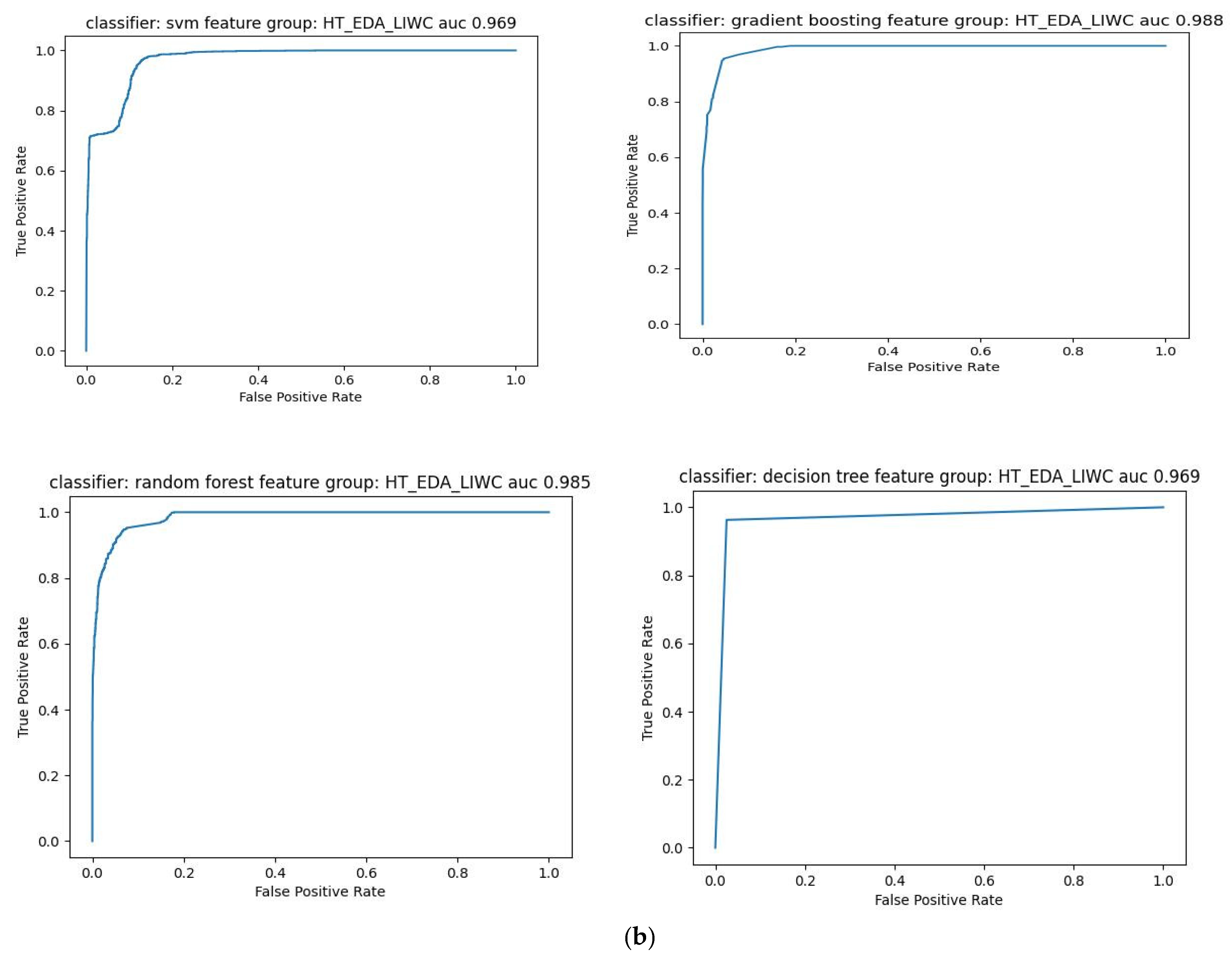

| No. | Algorithm | Features | F1 Score (%) | Accuracy (%) | AUC (%) |
|---|---|---|---|---|---|
| 1 | LR | HT_EDA_LIWC | 87.3 | 87.7 | 97.6 |
| 2 | SVM | HT_EDA_LIWC | 87.7 | 88 | 96.9 |
| 3 | DT | HT_EDA_LIWC | 62 | 50.9 | 96.9 |
| 4 | RF | HT_EDA_LIWC | 93.1 | 93.2 | 98.5 |
| 5 | NB | HT_EDA_LIWC | 55.4 | 58.3 | 90.8 |
| 6 | GB | HT_EDA_LIWC | 95.2 | 95.3 | 98.8 |
| 7 | NN | HT_EDA_LIWC | 96.6 | 96.7 | 99.4 |
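For readers less familiar with the three metrics reported in the table above, the following sketch computes accuracy, F1 score, and AUC from scratch on a toy example. It assumes binary labels with 1 denoting the fake class and a positive-class F1; the averaging scheme actually used in the study is not stated, and the labels and scores below are made up.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def auc(y_true, scores, positive=1):
    """Probability that a random positive is scored above a random negative (ties count half)."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy data: 1 = fake, 0 = real.
y_true = [1, 0, 1, 1, 0]
y_pred = [1, 0, 0, 1, 1]
scores = [0.9, 0.2, 0.4, 0.8, 0.6]  # predicted probability of the fake class

acc = accuracy(y_true, y_pred)
f1 = f1_score(y_true, y_pred)
roc_auc = auc(y_true, scores)
print(acc, f1, roc_auc)
```

AUC is computed from the ranking of the scores rather than from thresholded predictions, which is why a model can show a high AUC alongside a much lower accuracy.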

| No. | Algorithm | Features | F1 Score (%) | Accuracy (%) | AUC (%) |
|---|---|---|---|---|---|
| 1 | LR | EDA features | 40.7 | 57.9 | 58.4 |
| 1 | LR | LIWC | 77.7 | 78.4 | 91.4 |
| 2 | SVM | EDA features | 36.5 | 57.5 | 52.1 |
| 2 | SVM | LIWC | 78.9 | 79.4 | 91.7 |
| 3 | DT | EDA features | 24.9 | 28.5 | 77.4 |
| 3 | DT | LIWC | 90.3 | 90.6 | 90.2 |
| 4 | RF | EDA features | 36.5 | 57.5 | 57.4 |
| 4 | RF | LIWC | 86 | 86.7 | 95.9 |
| 5 | NB | EDA features | 35.7 | 45.1 | 57.6 |
| 5 | NB | LIWC | 77 | 79.2 | 82.8 |
| 6 | GB | EDA features | 48.1 | 56.8 | 57.4 |
| 6 | GB | LIWC | 88 | 88.3 | 96.3 |
| 7 | NN | EDA features | 38.1 | 57.7 | 58.1 |
| 7 | NN | LIWC | 78.9 | 79.4 | 91.7 |

| No. | Algorithm | Features | F1 Score (%) | Accuracy (%) | AUC (%) |
|---|---|---|---|---|---|
| 1 | LR | Original DS + HT | 62 | 69.8 | 81.4 |
| 2 | SVM | Original DS + HT | 62 | 69.8 | 81.4 |
| 3 | DT | Original DS + HT | 62 | 69.8 | 81.4 |
| 4 | RF | Original DS + HT | 68.3 | 69.1 | 81.4 |
| 5 | NB | Original DS + HT | 57.3 | 59.8 | 80.3 |
| 6 | GB | Original DS + HT | 62.0 | 69.8 | 81.4 |
| 7 | NN | Original DS + HT | 62.0 | 69.8 | 81.4 |

| No. | Algorithm | Features | F1 Score (%) | Accuracy (%) | AUC (%) |
|---|---|---|---|---|---|
| 1 | LR | HT_EDA | 62.9 | 69.9 | 81 |
| 1 | LR | HT_EDA_LIWC | 87.3 | 87.7 | 96.7 |
| 2 | SVM | HT_EDA | 61.9 | 69.7 | 80.9 |
| 2 | SVM | HT_EDA_LIWC | 87.7 | 88 | 96.9 |
| 3 | DT | HT_EDA | 48.3 | 50.9 | 54.6 |
| 3 | DT | HT_EDA_LIWC | 62 | 50.9 | 96.9 |
| 4 | RF | HT_EDA | 68.4 | 69.2 | 81.5 |
| 4 | RF | HT_EDA_LIWC | 93.1 | 93.2 | 98.5 |
| 5 | NB | HT_EDA | 43.4 | 49.7 | 79.5 |
| 5 | NB | HT_EDA_LIWC | 55.4 | 58.3 | 90.8 |
| 6 | GB | HT_EDA | 62 | 69.8 | 81.4 |
| 6 | GB | HT_EDA_LIWC | 95.2 | 95.3 | 98.8 |
| 7 | NN | HT_EDA | 64.9 | 67.7 | 79.3 |
| 7 | NN | HT_EDA_LIWC | 96.6 | 96.7 | 99.4 |

| Feature Set | Feature Type | Avg. Accuracy | Avg. AUC | Key Contribution |
|---|---|---|---|---|
| I | LIWC_EDA (content only) | ~86% | ~95% | Captures linguistic deception and psychological tone |
| II | Social context (HT) | ~69% | ~81% | Models user influence, engagement, and propagation dynamics |
| III | HT_EDA_LIWC (combined) | >90% | >96% | Learns integrated linguistic and behavioral patterns of misinformation |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Al-Mutair, H.; Berri, J. MSDSI-FND: Multi-Stage Detection Model of Influential Users’ Fake News in Online Social Networks. Computers 2025, 14, 517. https://doi.org/10.3390/computers14120517
