Abstract

Benchmarking has been central to performance evaluation for more than four decades. Reinhold P. Weicker’s 1990 survey in IEEE Computer offered an early, rigorous critique of standard benchmarks, warning about pitfalls that continue to surface in contemporary practice. This review synthesizes the evolution from classical synthetic benchmarks (Whetstone, Dhrystone) and application kernels (LINPACK) to modern suites (SPEC CPU2017), domain-specific metrics (TPC), data-intensive and graph workloads (Graph500), and Artificial Intelligence/Machine Learning (AI/ML) benchmarks (MLPerf, TPCx-AI). We emphasize energy and sustainability (Green500, SPECpower, MLPerf Power), reproducibility (artifacts, environments, rules), and domain-specific representativeness, especially in biomedical and bioinformatics contexts. Building upon Weicker’s methodological cautions, we formulate a concise checklist for fair, multidimensional, reproducible benchmarking and identify open challenges and future directions.

Keywords:

benchmarking; performance evaluation; HPC; AI; reproducibility; bioinformatics; energy efficiency

1. Introduction

Performance comparisons guide system design and procurement. Early single-number metrics (e.g., million instructions per second (MIPS) and million floating-point operations per second (MFLOPS)) were convenient but were soon recognized as misleading across instruction sets and compilers. Weicker’s article [1] systematized “what benchmarks measure” and, crucially, “what they do not,” articulating issues of representativeness, compiler influence, and non-central processing unit (non-CPU) effects. Since then, hardware (multicores, accelerators), workloads (data analytics, ML), and deployment models (cloud/edge) have diversified, increasing the need for multidimensional, context-aware evaluation.

Throughout this review, several key technical terms require precise definitions to ensure conceptual clarity and consistency. (i) Representativeness [2] refers to the extent to which a benchmark’s instruction mix, data access patterns, and control flow reflect those of the target application domain. This property is typically validated through statistical correlation analyses of runtime profiles, which check that benchmarked workloads align with real-world computational behavior. (ii) Domain shift [3] describes a phenomenon particularly relevant to artificial intelligence and machine learning benchmarks, in which models trained on benchmark datasets perform worse on real-world data because of underlying distributional differences. This issue is of particular concern for the clinical translation of biomedical AI models, where generalization across data sources is critical for reliable deployment [4,5]. (iii) Reproducibility [6] follows Peng’s established hierarchy, which distinguishes computational reproducibility, defined as the ability to obtain identical results from the same code, data, and computational environment, from empirical reproducibility, the consistency of findings across independent implementations. The reproducibility framework proposed in this study (Section 3.6) specifically targets the computational dimension of this hierarchy. (iv) Energy proportionality [7] denotes the ideal in which a system’s power consumption scales in proportion to its utilization, so that an idle system draws almost no power. Modern computing systems often deviate from this ideal by drawing substantial power even when idle, which motivates per-task energy metrics (in joules) rather than instantaneous power measures (in watts) to better capture workload efficiency.
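A small worked example makes the energy-proportionality argument concrete. The sketch below assumes a hypothetical server whose power rises linearly from an idle floor to 400 W at full load and whose throughput scales linearly with utilization; the helper names `power_at()` and `joules_per_task()` and all numbers are illustrative assumptions, not measurements of any system.

```python
def power_at(utilization: float, idle_w: float, peak_w: float) -> float:
    """Hypothetical linear power model: idle floor plus a load-proportional term."""
    return idle_w + (peak_w - idle_w) * utilization


def joules_per_task(utilization: float, idle_w: float, peak_w: float,
                    peak_tasks_per_s: float) -> float:
    """Energy per task, assuming throughput scales linearly with utilization."""
    if utilization <= 0:
        raise ValueError("utilization must be positive")
    tasks_per_s = peak_tasks_per_s * utilization
    return power_at(utilization, idle_w, peak_w) / tasks_per_s


for u in (0.1, 0.5, 1.0):
    ideal = joules_per_task(u, idle_w=0.0, peak_w=400.0, peak_tasks_per_s=100.0)
    floor = joules_per_task(u, idle_w=200.0, peak_w=400.0, peak_tasks_per_s=100.0)
    print(f"utilization {u:.0%}: ideal {ideal:.1f} J/task, 200 W idle floor {floor:.1f} J/task")
```

With a zero idle floor (ideal proportionality) every task costs 4 J regardless of load; with a 200 W floor, a task costs 22 J at 10% utilization and 6 J at 50%, a difference that a single watt reading would not reveal.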

The evaluation of computing performance has always been central to computer science and engineering. Since the earliest decades of digital computing, system designers and users have sought reliable ways to compare different architectures, programming paradigms, and execution environments. In the 1960s and 1970s, the first attempts at performance comparison often relied on raw technical specifications such as processor clock speed, memory size, or basic instruction throughput. Measures such as MIPS gained popularity as seemingly simple, universal performance indicators. However, as systems diversified and compiler technologies advanced, it became increasingly evident that such metrics were misleading and vulnerable to manipulation [8].
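The arithmetic behind this vulnerability fits in a few lines. In the sketch below, the instruction counts and run times are hypothetical: machine Y needs more, simpler instructions to complete the same program, so it reports more than twice the MIPS of machine X while taking longer to finish.

```python
def mips(instructions: float, seconds: float) -> float:
    """Native MIPS: instructions executed per second, in millions."""
    return instructions / (seconds * 1e6)


# Hypothetical machines running the same program, compiled for each ISA.
machines = {
    "X (complex ISA)": {"instructions": 1.0e9, "seconds": 2.0},
    "Y (simple ISA)": {"instructions": 3.0e9, "seconds": 2.5},
}

for name, m in machines.items():
    rate = mips(m["instructions"], m["seconds"])
    print(f"{name}: {rate:.0f} MIPS, finishes in {m['seconds']} s")
```

Because the metric counts instructions rather than work done, it rewards whichever instruction set or compiler emits more instructions; execution time for a fixed task is the quantity users actually care about.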

It was in this context that the notion of benchmarking gained relevance. A benchmark was not simply a measurement of hardware capacity but a standardized test program, or suite of programs, designed to represent a meaningful workload. Between the mid-1970s and the mid-1980s, a variety of benchmarks emerged. Some, such as Whetstone (1976), attempted to capture floating-point performance through a carefully constructed synthetic program [9]. Others, such as Dhrystone (1984), measured integer performance and quickly became widely used because of its simplicity [10]. Still others, such as LINPACK (1979), sought to represent actual scientific workloads, in this case the solution of systems of linear equations using floating-point arithmetic [11].

By the end of the 1980s, the benchmarking landscape had become crowded, and with it came confusion and controversy. Different benchmarks often produced contradictory assessments of system performance, and vendors sometimes optimized specifically for benchmark scores rather than for real user workloads. It was in this context that Reinhold P. Weicker published “An Overview of Common Benchmarks” in 1990 in IEEE Computer [1]. His article did not simply catalog the available benchmarks but offered a systematic classification and a critical perspective on their limitations.

This review differs from prior work in three important ways. First, Weicker [1] examined only the benchmarks available at the time, such as Whetstone, Dhrystone, and LINPACK, whereas we trace the full history of benchmarking, from those classical programs to modern AI/ML benchmarks such as MLPerf and TPCx-AI and to domain-specific suites, especially in biomedicine. Second, unlike general performance-evaluation surveys, we explicitly connect the methodological problems Weicker identified to current challenges in reproducibility, energy measurement, and multi-objective optimization. Third, rather than treating high-performance computing (HPC), AI, databases, and bioinformatics in isolation, we offer a cohesive, actionable reporting framework that synthesizes insights from 35 years of benchmarking experience across these fields. The synthesis is designed so that researchers and practitioners who are building new benchmarks or interpreting existing results can apply it directly.

2. Scope and Approach

2.1. Review Methodology

This review follows a systematic approach adapted from PRISMA guidelines [12] for literature synthesis in computer systems research. Our methodology comprised four stages:

- Selection criteria:
    - a. Temporal Scope: Benchmarks published during 1970–2025, with emphasis on post-1990 developments building on Weicker’s survey [1].
    - b. Inclusion Criteria: (i) Benchmarks with formal specifications or standardized implementations; (ii) adoption by ≥2 independent research groups or industry consortia; and (iii) availability of published performance results in peer-reviewed venues or official repositories.
    - c. Domain Focus: General-purpose computing (SPEC CPU), HPC (TOP500/HPCG/Graph500), AI/ML (MLPerf, TPCx-AI), and biomedical sciences (genomics, imaging).
    - d. Exclusion Criteria: Proprietary benchmarks without public documentation; microbenchmarks targeting single hardware features; and benchmarks cited in <5 publications.
- Information sources:
    - a. Primary Sources: Official benchmark specifications from SPEC, TPC, MLCommons, and the Graph500 Steering Committee.
    - b. Academic Databases: IEEE Xplore, ACM Digital Library, PubMed (search terms: “benchmark* AND (performance OR evaluation OR reproducibility)”).
    - c. Gray Literature: Technical reports from national laboratories (LLNL, ANL) and manufacturer white papers with traceable data.
- Data extraction: For each benchmark, we systematically documented:
    - a. Design methodology (synthetic vs. application-based);
    - b. Measured metrics (speed, energy, accuracy);
    - c. Reproducibility provisions (run rules, artifact availability);
    - d. Domain representativeness claims and validation evidence.
- Synthesis approach: No meta-analytic statistics were computed due to heterogeneity in reporting formats. Instead, we provide a qualitative synthesis organized by historical period (pre-1990, 1990–2010, 2010–2025) and a thematic analysis of recurring methodological challenges identified by Weicker [1].

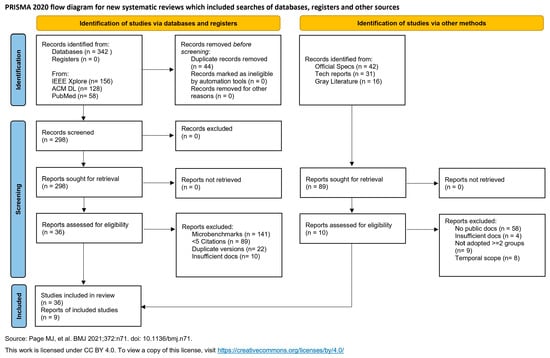

A complete PRISMA 2020 checklist documenting compliance with systematic review guidelines is provided in the Supplementary Materials (Table S1). Of 27 checklist items, 19 are fully reported, 4 are partially reported, and 6 are marked not applicable, as they pertain to clinical interventions rather than technical specifications. A detailed flow is described in Figure 1.

Figure 1.

PRISMA 2020 flow diagram for systematic review of computing benchmarks. The diagram illustrates our four-stage selection process: identification (database searches and other sources), screening (title/abstract review), eligibility (full-text assessment), and inclusion (final synthesis). Adapted from Page et al., BMJ 2021 [12].

2.2. Limitations

This review does not include: (i) exhaustive coverage of domain-specific benchmarks outside the stated focus areas; (ii) unpublished or proprietary benchmark modifications; and (iii) formal quality assessment scoring (e.g., AMSTAR-2), because the reviewed items are technical benchmark specifications rather than clinical studies. The selection reflects expert judgment informed by community adoption metrics (citation counts, conference proceedings) rather than algorithmic search protocols.

3. Weicker: Contributions and Enduring Pitfalls

During the 1970s and 1980s, benchmarks followed two main strategies: synthetic programs designed to approximate workloads, and application-based programs directly derived from real scientific or business tasks [13].

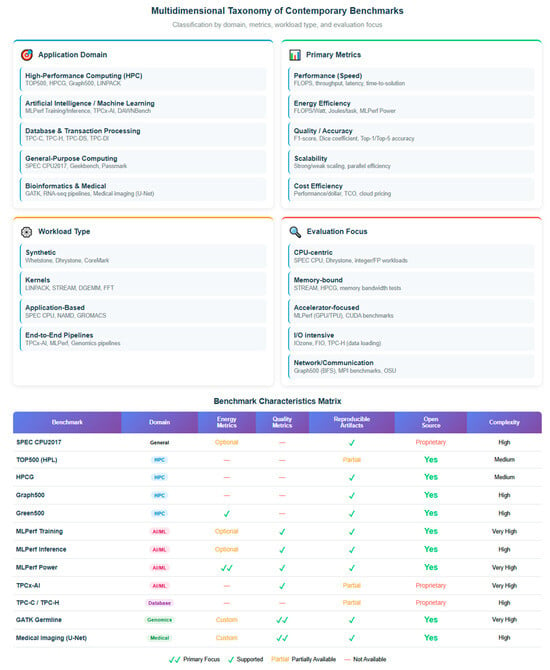

Before examining individual benchmarks, we provide visual context for the multidimensional taxonomy of contemporary evaluation practices. Figure 2 organizes benchmarks along four axes: application domain (HPC, AI/ML, database, general-purpose, biomedical), primary metrics (performance, energy, quality, scalability, cost), workload type (synthetic, kernels, application-based, end-to-end), and evaluation focus (CPU-centric, memory-bound, accelerator-focused, input/output (I/O)-intensive, network/communication). This taxonomy shows that classical benchmarks (Whetstone, Dhrystone) occupy the “Synthetic × CPU-centric” quadrant with optional energy metrics, whereas modern AI benchmarks (MLPerf training/inference/power) span “End-to-End × Accelerator-focused” with mandatory quality criteria. The matrix below the taxonomy classifies representative benchmarks, showing that only recent suites (Green500, MLPerf Power, GATK Germline) integrate energy as a primary rather than an optional metric. This shift reflects the evolution from Weicker’s era, when “performance” implicitly meant speed, to today’s multidimensional objectives [1,14,15].

Figure 2.

Multidimensional taxonomy classifying contemporary benchmarks by application domain, primary metrics, workload type, and evaluation focus. The matrix compares 12 representative benchmarks across energy metrics support, quality metrics integration, reproducible artifacts availability, open-source licensing, and implementation complexity.

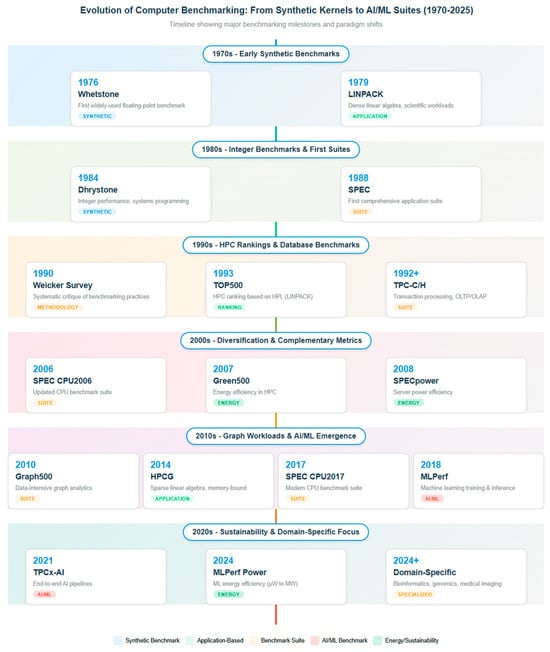

Figure 3 traces the historical trajectory from synthetic kernels (1970s) through application suites (1980s–1990s), HPC rankings (1990s–2000s), graph/complementary workloads (2000s–2010s), and the emergence of AI/ML benchmarks (2010s) to sustainability-focused benchmarks (2020s). Key observations include the following: (i) the 30-year gap between SPEC’s founding (1988) and MLPerf’s introduction (2018) reflects domain-specific needs unmet by general-purpose suites; (ii) energy benchmarks (Green500, SPECpower, MLPerf Power) emerged 14–31 years after their performance-only predecessors, indicating that efficiency became a first-class concern only recently; and (iii) domain-specific biomedical benchmarks (2024+) represent the latest frontier, driven by clinical reproducibility requirements absent in traditional HPC [4,5,16,17,18,19].

Figure 3.

Five-decade evolution of computer benchmarking (1970–2025) showing paradigm shifts from synthetic kernels (Whetstone, Dhrystone) through application-based suites (SPEC, LINPACK) to AI/ML benchmarks (MLPerf) and sustainability-focused metrics (Green500, MLPerf Power). Color coding distinguishes synthetic benchmarks (gray), application-based (green), suites (yellow), AI/ML (pink), and energy/sustainability (teal).

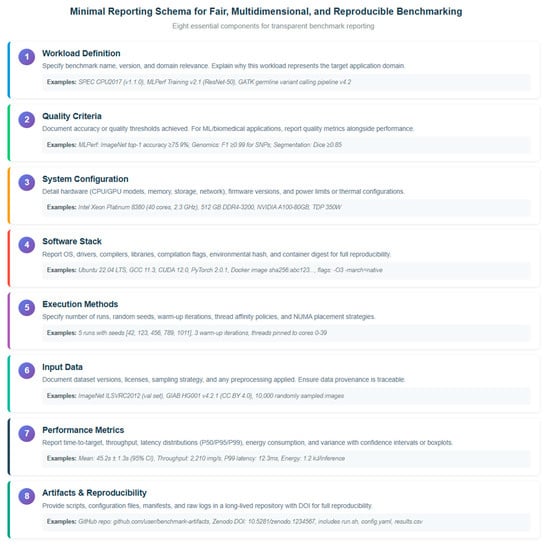

Figure 4 operationalizes our minimal reporting schema (Section 3.6) through concrete examples of each component. Note that “Quality Criteria” (Component 2) differentiates modern benchmarks from classical ones: MLPerf mandates ImageNet top-1 accuracy ≥75.9% [20], whereas Whetstone had no correctness thresholds beyond “results printed.” Similarly, “Artifacts and Reproducibility” (Component 8) now expects GitHub repositories with Digital Object Identifiers (DOIs) and data provenance, practices that did not exist when Weicker surveyed benchmarks [1]. Figure 4 thus serves as a practical guide for benchmark designers and performance engineers implementing our recommendations.

Figure 4.

Eight-component minimal reporting schema for transparent, reproducible benchmarking: workload definition, quality criteria, system configuration, software stack, execution methods, input data, performance metrics, and artifacts/reproducibility. The examples demonstrate application to SPEC CPU2017, MLPerf, GATK, and medical imaging benchmarks.

Whetstone, introduced in 1976, was the first synthetic floating-point benchmark. It consisted of a collection of mathematical and procedural operations designed to approximate the instruction mix of scientific workloads [10]. In 1984, Weicker [1] himself developed Dhrystone, a synthetic benchmark for integer operations that rapidly became the most widely used CPU benchmark worldwide [9]. Its portability and simplicity made it appealing, but its results, expressed as DMIPS (Dhrystone Million Instructions Per Second), often led to misleading comparisons across architectures.
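DMIPS is not a count of executed instructions. By convention, a Dhrystone score in iterations per second is divided by 1757, the score of the VAX 11/780, which was nominally a 1-MIPS machine. The sketch below shows this normalization and the DMIPS/MHz variant often quoted for embedded cores; the function names and the example score are hypothetical.

```python
# Dhrystone iterations per second of the VAX 11/780, conventionally defined as 1 DMIPS.
VAX_11_780_DHRYSTONES_PER_S = 1757


def dmips(dhrystones_per_second: float) -> float:
    """Convert a Dhrystone score (iterations per second) to DMIPS."""
    return dhrystones_per_second / VAX_11_780_DHRYSTONES_PER_S


def dmips_per_mhz(dhrystones_per_second: float, clock_mhz: float) -> float:
    """Clock-normalized score."""
    return dmips(dhrystones_per_second) / clock_mhz


# Hypothetical measurement: 351,400 iterations/s on a 100 MHz core.
score = 351_400
print(f"{dmips(score):.1f} DMIPS, {dmips_per_mhz(score, 100):.2f} DMIPS/MHz")
```

The numerator depends on whatever code the compiler and runtime library produced for the Dhrystone source, so the same hardware can report very different DMIPS values, which is the compiler sensitivity Weicker warned about [1].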

LINPACK, created in 1979, represented a different tradition, as it was rooted in actual application code for solving dense systems of linear equations [10]. This benchmark provided more meaningful performance data for scientific computing. Finally, SPEC, founded in 1988, represented the shift toward benchmark suites composed of multiple real-world applications in C and Fortran [21]. Table 1 summarizes this evolution.

Table 1.

Historical evolution of classical benchmarks (1970–1990).

In terms of scope and taxonomy, Weicker [1] catalogued the most widely used benchmarks and emphasized that small synthetic programs rarely exercise the memory hierarchy or I/O path in realistic ways; he also highlighted the emergence of suites such as SPEC that aggregate multiple real programs and report geometric means to temper outliers. Several pitfalls remain salient: (i) non-CPU influences (language, compiler, runtime libraries, caches/memory); (ii) over-optimization or “teaching to the test”; (iii) misleading single-number metrics; and (iv) representativeness gaps between benchmarks and target workloads. These issues motivate contemporary practices around running rules, transparent disclosures, and per-benchmark reporting; see Figure 2.

3.1. From Synthetic Kernels to Suites and Rankings

Early synthetic benchmarks such as Whetstone and Dhrystone offered portability and simplicity, but their extreme compiler sensitivity and poor representativeness of complex applications limited interpretability. The LINPACK kernel, operationalized in HPL, underpins the TOP500 list and remains invaluable for tracking peak floating-point throughput, yet correlates only loosely with sparse or irregular workloads [22].

Technically, HPL solves a dense system of linear equations ($Ax = b$) using LU decomposition with partial pivoting, a computation dominated by matrix–matrix multiplication (DGEMM). The benchmark’s relative insensitivity to memory bandwidth stems from its $O(n^3)$ floating-point operations over only $O(n^2)$ data, an arithmetic intensity of $O(n)$ that saturates compute units long before memory channels on most architectures [23]. The problem size $n$ is configurable; TOP500 submissions typically choose $n$ as large as memory allows (a common HPL tuning guideline is to fill roughly 80% of memory), which on modern exascale machines means $n > 10^6$: at 8 bytes per double-precision element, an $n = 10^6$ matrix already occupies 8 TB, and an ~80 TB matrix corresponds to $n \approx 3.2 \times 10^6$. This reveals HPL’s limitation: real applications rarely exhibit such high arithmetic intensity or such regular data access, hence the complementary HPCG benchmark, which targets sparse operations with $O(1)$ arithmetic intensity [24].
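The scaling argument can be checked numerically. The sketch below uses the leading-order LU cost of (2/3)n³ floating-point operations and 8 bytes per double-precision matrix element, and idealizes data movement as touching each element once; `hpl_problem_size()` and its 80% memory fraction are illustrative assumptions for sizing, not part of the HPL distribution.

```python
import math


def hpl_flops(n: int) -> float:
    """Leading-order floating-point operation count of dense LU factorization."""
    return (2.0 / 3.0) * n**3


def matrix_bytes(n: int) -> int:
    """Storage for an n x n double-precision matrix (8 bytes per element)."""
    return 8 * n * n


def arithmetic_intensity(n: int) -> float:
    """Flops per byte of matrix data, assuming each element is moved once."""
    return hpl_flops(n) / matrix_bytes(n)


def hpl_problem_size(total_memory_bytes: float, fraction: float = 0.8) -> int:
    """Largest n whose matrix fits in the given fraction of memory (hypothetical helper)."""
    return math.isqrt(int(fraction * total_memory_bytes / 8))


for n in (1_000, 100_000, 1_000_000):
    print(f"n={n:>9,}: {matrix_bytes(n) / 1e12:10.6f} TB, "
          f"{arithmetic_intensity(n):,.0f} flop/byte")

print(hpl_problem_size(100e12))  # n filling ~80% of a 100 TB system
```

Intensity grows linearly with $n$ (about $n/12$ flop/byte in this idealization), so large HPL runs are compute-bound, whereas a sparse kernel such as HPCG’s stays at a small, roughly constant intensity regardless of problem size.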

SPEC CPU2017 aggregates substantial real-world C/C++/Fortran codes, provides both speed and rate metrics under stringent run rules, and supports optional power measurement, making it the de facto baseline for CPU-intensive single-node characterization [25,26]. SPEC’s use of the geometric mean (GM) to aggregate individual benchmark scores deserves technical justification. SPEC scores are ratios of a reference machine’s time to the measured time, and the GM of such ratios ranks two systems identically whichever reference machine is chosen. Unlike the arithmetic mean, the GM also reflects uniform speedups exactly: if machine B is 2× faster than machine A on all benchmarks, the GM shows exactly that factor, whereas an arithmetic mean of execution times would spuriously give more weight to longer-running benchmarks [27]. Mathematically, $\mathrm{GM}(x_1,\dots,x_n) = \left(\prod_{i=1}^{n} x_i\right)^{1/n}$, so doubling performance (halving time) on one benchmark exactly offsets halving performance (doubling time) on another, a property called “proportionality” that is essential for fair cross-system comparisons [28]. However, the GM obscures performance variability: a system with a GM of 100 could have individual scores of [100, 100, 100, …] or [10, 1000, 100, …]. Therefore, SPEC mandates reporting per-benchmark results alongside the GM, enabling users to weight benchmarks according to their own workload profiles [25].
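A two-benchmark example shows why the choice of mean matters once scores are normalized to a reference machine. With the hypothetical execution times below, the arithmetic mean of ratios declares whichever machine is *not* the reference to be about 5× faster, so the ranking flips when the reference changes; the geometric mean reports a tie either way. The dictionary `TIMES` and the helpers `ratios()` and `summarize()` are illustrative names.

```python
from statistics import fmean, geometric_mean

# Hypothetical execution times (seconds) of two benchmarks on machines A and B.
TIMES = {"A": [1.0, 1000.0], "B": [10.0, 100.0]}


def ratios(reference: str, system: str) -> list[float]:
    """SPEC-style per-benchmark ratio: reference time / measured time (higher is better)."""
    return [r / t for r, t in zip(TIMES[reference], TIMES[system])]


def summarize(reference: str, system: str) -> tuple[float, float]:
    """Arithmetic and geometric means of the normalized ratios."""
    rs = ratios(reference, system)
    return fmean(rs), geometric_mean(rs)


for ref in ("A", "B"):
    for sys_name in ("A", "B"):
        am, gm = summarize(ref, sys_name)
        print(f"reference={ref} system={sys_name}: AM={am:.2f} GM={gm:.2f}")
```

The same module confirms the variability caveat: `geometric_mean([10, 1000, 100])` equals 100 up to floating-point rounding, identical to a uniform score of 100.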

To better capture data-intensive and graph behavior, Graph500 complements HPL with communication-heavy BFS/SSSP (Breadth-First Search/Single-Source Shortest Path) kernels, while HPCG (High-Performance Conjugate Gradients) targets sparse linear algebra and memory-bound execution, typically correlating more closely with many production simulations than HPL alone [24]; see Figure 3.
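Graph500 reports BFS performance in traversed edges per second (TEPS). The sketch below computes TEPS for a single search over an adjacency-list graph, counting the undirected edges inside the component the search reaches. The function `bfs_teps()` and the tiny example graph are hypothetical; the official benchmark additionally prescribes Kronecker graph generation, many search roots, result validation, and statistical aggregation, none of which are reproduced here.

```python
import time
from collections import deque


def bfs_teps(adjacency: dict[int, list[int]], root: int) -> tuple[dict[int, int], int, float]:
    """Single-root BFS returning the parent map, edges in the traversed component, and TEPS."""
    start = time.perf_counter()
    parent = {root: root}
    frontier = deque([root])
    while frontier:
        u = frontier.popleft()
        for v in adjacency[u]:
            if v not in parent:
                parent[v] = u
                frontier.append(v)
    elapsed = time.perf_counter() - start
    # Each undirected edge appears in two adjacency lists, hence the division by 2.
    edges = sum(len(adjacency[u]) for u in parent) // 2
    teps = edges / elapsed if elapsed > 0 else float("inf")
    return parent, edges, teps


# Undirected example: a 4-cycle (0-1-2-3-0) plus a separate edge 4-5.
graph = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0], 4: [5], 5: [4]}
parents, edges, teps = bfs_teps(graph, root=0)
print(sorted(parents), edges)
```

The irregular, data-dependent accesses in the inner loop are what make BFS memory- and communication-bound at scale, in contrast to HPL’s dense, regular kernel.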

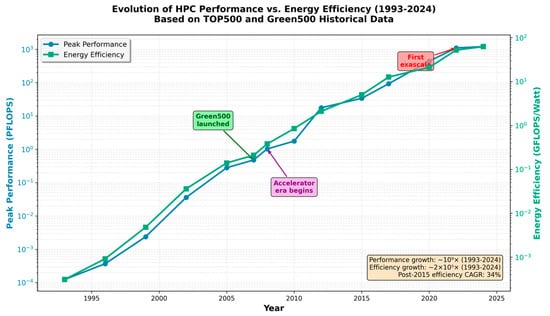

To illustrate the evolution of performance priorities, we conducted a quantitative analysis of TOP500 data spanning 1993–2024. Figure 5 presents a dual-axis visualization comparing peak performance growth (FLOPS) against energy-efficiency improvements (FLOPS/W). While peak performance increased by approximately $10^{7}\times$ over this period, energy efficiency improved by only about $2 \times 10^{5}\times$, so total power draw grew and a sustainability gap widened. Notably, efficiency gains accelerated after 2015 (compound annual growth rate (CAGR) of 32.4% vs. ~15% in prior decades), coinciding with the adoption of accelerator-based architectures and the Green500 ranking [14]. This quantitative evidence underscores why contemporary benchmarking must integrate multidimensional metrics rather than speed alone.

Figure 5.

Evolution of HPC performance vs. energy efficiency (1993–2024). Dual-axis logarithmic plot showing TOP500 #1 system peak performance (blue line, left axis) and estimated energy efficiency (green line, right axis) based on Green500 data (post-2007) and conservative estimates for earlier systems. Annotated milestones: Green500 launch (2007), beginning of the accelerator era (2008), and first exascale system (2022). The data reveal that performance grew ~$10^{7}\times$ while efficiency improved ~$2 \times 10^{5}\times$, with post-2015 acceleration in efficiency gains (CAGR 32.4%) coinciding with widespread graphics processing unit (GPU)/accelerator adoption. Source: TOP500 historical archives and Green500 records [29].
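Growth figures such as these reduce to two small formulas: CAGR = (end/start)^(1/years) − 1, and, because power equals performance divided by efficiency, growth in power draw equals performance growth divided by efficiency growth. The sketch below applies both to hypothetical inputs; it does not reproduce the TOP500/Green500 series behind Figure 5, and the helper names are illustrative.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def implied_power_growth(performance_growth: float, efficiency_growth: float) -> float:
    """Power = performance / efficiency, so power grows by the ratio of the two growths."""
    return performance_growth / efficiency_growth


# Hypothetical inputs: efficiency rises 16-fold over 8 years;
# performance grows 1000x while efficiency grows 100x.
print(f"CAGR: {cagr(1.0, 16.0, 8):.1%}")
print(f"power growth: {implied_power_growth(1e3, 1e2):.0f}x")
```

Any ratio above 1 from `implied_power_growth()` means that efficiency gains did not keep pace with performance gains, which is the sustainability gap discussed above.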

3.2. AI/ML Benchmarks and End-to-End Pipelines

MLPerf, both training and inference, defines standardized models, quality targets, and prescriptive run rules that enable fair comparisons across accelerators and systems; TPCx-AI offers an end-to-end, standardized AI-pipeline benchmark, covering ingestion, preprocessing, training, serving, and post-processing, complementing MLPerf’s task-centric focus with system-level evaluation [30,31]. Notwithstanding these advances, challenges persist, as dataset shift, model churn, and vendor tuning can compromise comparability; hence, reports should document model versions, data provenance, numerical precision, and reproducibility artifacts.

Despite efforts to standardize AI/ML benchmarks, MLPerf and TPCx-AI retain limitations that users should keep in mind when interpreting results [32]. We group them into the following categories:

- Hardware Bias: MLPerf’s reference implementations and quality targets frequently favor architectures with high memory bandwidth and dedicated tensor-processing hardware, such as tensor processing units (TPUs), which are specialized AI accelerators. Systems designed for conventional HPC workloads may perform relatively poorly on the benchmark despite executing production ML workloads effectively. This architectural bias may skew procurement decisions if not explicitly recognized.

- Representativeness and Dataset Variability: MLPerf uses stable datasets (ImageNet for vision, SQuAD for natural language processing (NLP)); however, production deployments encounter shifting data distributions, domain shift, and adversarial inputs that the benchmarks do not capture. In medical imaging and scientific computing, model performance on benchmark datasets may not translate to real-world conditions.

- Reproducibility Issues: Despite run rules, several factors reduce reproducibility: TensorFlow and PyTorch versions evolve quickly; GPU/TPU execution can be non-deterministic; compiler and library optimizations differ across platforms; and hyperparameter search procedures are often not disclosed. Exact replication often requires vendor-specific customization even when setups are published.

- Metric and Model Churn: Benchmark tasks and quality criteria become obsolete as ML research develops. MLPerf sometimes retires tasks and introduces new ones, complicating longitudinal comparisons. Standard measurements like top-1 accuracy may overlook fairness, robustness, calibration, and other production-critical attributes.

- Vendor Over-Optimization: Echoing Weicker’s [1] warning about ‘teaching to the test,’ vendors may over-optimize for benchmark-specific patterns, yielding performance gains that do not transfer to diverse workloads. Examples include fused operations tailored to benchmark models, caching schemes that exploit fixed input sizes, and numerical-precision choices that preserve benchmark accuracy but degrade results on non-benchmark data.
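One common source of the non-determinism listed under reproducibility issues is that floating-point addition is not associative: parallel reductions on GPUs or TPUs may combine partial sums in a different order from run to run. The sketch below reproduces the effect with three numbers in plain Python and shows `math.fsum`, which returns the correctly rounded sum, as one order-independent alternative; it illustrates the arithmetic only and is not how any particular framework implements its reductions.

```python
import math
from itertools import permutations

values = [1e16, 1.0, -1e16]

# Naive left-to-right summation depends on operand order; fsum does not.
for order in permutations(values):
    print(order, "sum:", sum(order), "fsum:", math.fsum(order))
```

Adding 1.0 to 1e16 is lost to rounding, so orders that do that first end at 0.0 while the others end at 1.0. Deterministic-algorithm settings in ML frameworks trade some speed for a fixed reduction order, which is one reason such settings belong in the run configuration that a report discloses.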

TPCx-AI’s end-to-end design partially addresses some limitations by incorporating data ingestion and preprocessing, but it inherits similar dataset representativeness and vendor optimization concerns. Users should complement benchmark scores with application-specific validation, sensitivity analyses across data distributions, and transparency about tuning procedures.

3.3. Energy, Sustainability, and Multi-Objective Metrics

Energy is a first-class objective alongside time-to-result and cost. Rankings and benchmarks such as Green500 (HPL-based) [14] and SPECpower_ssj2008 [33], together with extensions like MLPerf Power, standardize instrumentation and reporting [15,20,31]. We recommend reporting joules per task, performance per watt, and, where possible, CO2e (carbon dioxide equivalent) emissions, with comprehensive methodological transparency [34]. Specifically, energy measurement protocols must document:

- Instrumentation: Sensor type (e.g., RAPL (Running Average Power Limit) counters for CPUs, NVML (NVIDIA Management Library) for GPUs, external power analyzers), calibration certificates, and measurement boundaries (chip-level, board-level, or system-level, including cooling).

- Sampling: Polling frequency (e.g., RAPL’s ~1 ms update interval vs. external meters sampling at 1–10 kHz), synchronization with workload phases, and treatment of idle/baseline power.

- Uncertainty quantification: Sensor accuracy specifications (typically ±1–5% for RAPL [35]), error propagation through integration ($E = \int P(t)\,dt$, in joules), and statistical variance across runs (MLPerf requires ≥3 replicates).

- Reporting: MLPerf Power mandates separate “performance run” (latency/throughput) and “power run” (energy under load) to prevent Heisenberg-like measurement interference where instrumentation overhead distorts results [15].

For example, a valid report might state: “Energy was measured with an external Yokogawa WT310E power analyzer (Yokogawa Electric Corp., Sugar Land, TX, USA; ±0.5% accuracy) sampling at 10 Hz; mean energy was 125.3 ± 2.1 kJ per inference run across five runs (95% CI), after subtracting a 15 W idle baseline.” Such detail enables replication and cross-study meta-analyses, addressing longstanding concerns about vendor-specific optimizations noted by Weicker [1].
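The arithmetic behind such a report can be sketched as follows: integrate sampled power over time (approximating $E = \int P(t)\,dt$ with the trapezoidal rule), subtract the idle baseline over the run’s duration, and summarize repeated runs with a Student-t 95% confidence interval. The sample rate, idle value, and power traces below are hypothetical, and `energy_joules()` and `mean_ci95()` are illustrative names, not part of any benchmark’s tooling.

```python
from statistics import fmean, stdev

# Two-sided 95% Student-t critical values, keyed by degrees of freedom (runs - 1).
T_975 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
         6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262, 10: 2.228}


def energy_joules(power_w: list[float], interval_s: float, idle_w: float = 0.0) -> float:
    """Trapezoidal integral of uniformly sampled power, minus an idle baseline."""
    if len(power_w) < 2:
        raise ValueError("need at least two samples")
    total = sum((a + b) / 2.0 * interval_s for a, b in zip(power_w, power_w[1:]))
    duration = interval_s * (len(power_w) - 1)
    return total - idle_w * duration


def mean_ci95(samples: list[float]) -> tuple[float, float]:
    """Mean and half-width of a t-based 95% confidence interval."""
    n = len(samples)
    if n - 1 not in T_975:
        raise ValueError("table covers 2 to 11 runs only")
    return fmean(samples), T_975[n - 1] * stdev(samples) / n**0.5


# Hypothetical traces: five runs, 10 Hz samples (0.1 s apart), roughly 300 W each.
runs = [[300.0 + k + (i % 3) for i in range(101)] for k in range(5)]
energies = [energy_joules(r, interval_s=0.1, idle_w=15.0) for r in runs]
mean, half = mean_ci95(energies)
print(f"{mean:.1f} ± {half:.1f} J per run (95% CI, n={len(energies)})")
```

Dividing each run’s energy by the number of tasks it completed gives joules per task; whether the idle baseline should be subtracted depends on the measurement boundary, which is why the baseline itself must be reported.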

3.4. Reproducibility and Artifact Evaluation

Reproducible benchmarking requires:

- Immutable environments (containers with digests; pinned compilers/libraries; exact kernel/driver versions);

- Hardware disclosures (CPU/GPU models, microcode, memory topology, interconnect);

- Run rules and configs (threads, affinities, input sizes, batch sizes, warm-ups, seeds);

- Open artifacts (configs, scripts, manifests) deposited in a long-lived repository.
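Part of this disclosure can be captured automatically at run time. The sketch below records interpreter, operating system, CPU, and installed-package versions, SHA-256 digests of configuration files, and the random seed as JSON; the function names are hypothetical, and it does not cover container image digests, firmware, microcode, or driver versions, which must be taken from the container runtime and system tools.

```python
import hashlib
import json
import os
import platform
import sys
from importlib import metadata
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hex SHA-256 digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def environment_manifest(config_files: list[Path], seed: int) -> dict:
    """Collect software, hardware, and configuration facts for a benchmark run."""
    return {
        "python": sys.version,
        "platform": platform.platform(),
        "machine": platform.machine(),
        "processor": platform.processor(),
        "logical_cpus": os.cpu_count(),
        "packages": sorted(
            f"{d.metadata.get('Name', 'unknown')}=={d.version}"
            for d in metadata.distributions()
        ),
        "config_sha256": {str(p): sha256_of(p) for p in config_files},
        "seed": seed,
    }


if __name__ == "__main__":
    configs = [Path(p) for p in sys.argv[1:]]
    print(json.dumps(environment_manifest(configs, seed=42), indent=2))
```

Committing the resulting JSON next to the raw logs, and depositing both in a long-lived repository, turns the checklist above into an artifact that others can diff against their own runs.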

3.5. Domain-Specific Benchmarks: Biomedical and Bioinformatics

Bioinformatics and biomedicine have motivated domain-specific suites and practices emphasizing accuracy, reproducibility, and data traceability. Beyond runtimes, reports should include quality metrics (e.g., F1, sensitivity/specificity, Dice) and data and model provenance. A few examples are detailed below:

- Genomics (Germline Variant Calling): Evaluate on Genome in a Bottle (GIAB) truth sets with stratification by genomic context. Metrics: F1 for SNPs (single-nucleotide polymorphisms) and indels (insertions and deletions), precision–recall curves by region, confidence regions, and error analyses by category. Report the pipeline (mapping → duplicate marking → recalibration → calling → filtering), software versions (GATK (Genome Analysis Toolkit), BWA (Burrows–Wheeler Aligner), or others), random seeds, and accuracy–performance tradeoffs (runtime, energy in joules, and cost per sample) [16,17].

- RNA-seq (Alignment and Quantification): Benchmark on public datasets comparing aligners (e.g., STAR) and quantifiers. Metrics: reads mapped, splice-aware concordance, processing rate (reads/hour), memory usage, and energy per million reads [17,19].

- Medical Imaging (Segmentation): U-Net/3D-U-Net architectures for organ/lesion segmentation. Quality metrics: Dice, Hausdorff distance, calibration; system metrics: P50/P99 latency, throughput (images/s), energy per inference, and reproducibility (versioned weights and inference scripts). Account for domain shift across sites and scanners [4].

- Domain-specific Good Practices. (i) Datasets with appropriate licensing and consent; (ii) anonymization and ethical review; (iii) model cards and data sheets; (iv) joint reporting of accuracy–cost–energy; and (v) stress tests (batch size, image resolution, noise) and hyperparameter sensitivity [5].
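The quality metrics named above have short closed forms. The sketch below computes precision, recall (sensitivity), and F1 from true-positive, false-positive, and false-negative counts, as produced when variant calls are compared against a GIAB truth set, and the Dice coefficient between predicted and reference segmentation masks represented as sets of voxel indices. The counts and voxel sets are hypothetical, and the comparison step itself (variant normalization, stratified regions) is out of scope.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Precision, recall (sensitivity), and F1 from confusion counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def dice(predicted: set, reference: set) -> float:
    """Dice coefficient 2|A ∩ B| / (|A| + |B|); two empty masks count as a perfect match."""
    if not predicted and not reference:
        return 1.0
    return 2 * len(predicted & reference) / (len(predicted) + len(reference))


# Hypothetical variant-calling counts and 2D voxel masks.
print(precision_recall_f1(tp=950, fp=30, fn=50))
print(dice({(0, 0), (0, 1), (1, 1)}, {(0, 1), (1, 1), (1, 0)}))
```

Reporting all three of precision, recall, and F1, rather than F1 alone, shows whether a faster or cheaper configuration trades false positives for false negatives.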

Quantitative comparisons reveal significant performance variability. The STAR aligner processes 30 M paired-end reads in ~15–25 min on 16-core systems (1.2–2.0 M reads/min) using ~12 GB of RAM, whereas Bowtie2 requires 45–60 min (0.5–0.7 M reads/min) using ~4 GB of RAM [36]. Energy measurements remain scarce, but preliminary data suggest ~50–80 Wh per sample for STAR on an Intel Xeon 8280 (Intel Corp., Santa Clara, CA, USA; TDP 205 W) versus ~30–45 Wh for Bowtie2, illustrating the speed-vs.-efficiency tradeoff typical of bioinformatics tools [37]. Standardized resources such as the Genome in a Bottle HG002 dataset (300 GB FASTQ, 30× coverage) enable reproducible comparisons, but there is still no consensus on reporting energy alongside accuracy metrics.

3.6. A Minimal Reporting Schema

Key quotations from Weicker [1] highlight enduring benchmarking caveats: “The role of the runtime library system is often overlooked when benchmark results are compared”; “No attempt has been made to thwart optimizing compilers”; and “It is important to look for the built-in performance boost when the cache size reaches the relevant benchmark size”. We propose the following schema for any benchmark claim (see also Figure 4):

- Workload definition: name, version, and domain relevance.

- Quality criteria: accuracy/quality thresholds met (if applicable).

- System: hardware (CPU/GPU, memory, storage, network), firmware, and power limits.

- Software: operating system (OS), drivers, compilers, libraries, and flags; environment hash; and container digest.

- Methods: runs, seeds, warmups, affinity policies, and NUMA (Non-Uniform Memory Access) placement.

- Inputs: dataset versions and licenses; sampling strategy.

- Metrics: time-to-target, throughput, latency distributions, energy, and variance (CI/boxplots).

- Artifacts: scripts/configs and raw logs; repository link and DOI.
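For tooling or checklists, the schema maps naturally onto a record type. The sketch below is a hypothetical Python representation with a completeness check of the kind used for the coverage assessment in Section 3.7; the class name, field names, and example values are illustrative, and a production schema would use structured fields rather than free text.

```python
from dataclasses import dataclass, fields


@dataclass
class BenchmarkReport:
    """Eight components of the minimal reporting schema; empty strings mark omissions."""
    workload: str = ""          # 1. name, version, domain relevance
    quality_criteria: str = ""  # 2. accuracy/quality thresholds met
    system: str = ""            # 3. hardware, firmware, power limits
    software: str = ""          # 4. OS, drivers, compilers, flags, container digest
    methods: str = ""           # 5. runs, seeds, warm-ups, affinity, NUMA placement
    inputs: str = ""            # 6. dataset versions, licenses, sampling
    metrics: str = ""           # 7. time-to-target, latency, energy, variance
    artifacts: str = ""         # 8. scripts, raw logs, repository link, DOI

    def missing(self) -> list[str]:
        """Names of components left empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

    def coverage(self) -> str:
        """Summary string such as '5/8 components reported'."""
        total = len(fields(self))
        return f"{total - len(self.missing())}/{total} components reported"


# Hypothetical report with three components omitted.
report = BenchmarkReport(
    workload="Germline pipeline v1.0 (hypothetical)",
    quality_criteria="SNP F1 threshold stated and met",
    system="2-socket server, 512 GB RAM",
    software="container sha256:<digest>",
    inputs="HG002 reads, dataset version recorded",
)
print(report.coverage(), report.missing())
```

Keeping the eight components as explicit fields makes omissions visible mechanically, which is the point of a minimal schema.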

Our eight-component schema operationalizes principles from established reproducibility frameworks:

- ACM Artifact Review Badges [38]: Components 4 (Software Stack) and 8 (Artifacts) directly support “Artifacts Available” and “Results Reproduced” badges through documented environments and persistent repositories.

- FAIR Principles [39]: Component 6 (Input Data) requires dataset versioning and licensing, supporting reusability (FAIR principle R1.1 calls for a clear data usage license); Component 8 mandates DOI assignment, a globally unique, persistent identifier that supports findability (principle F1).

- IEEE Standard 2675-2021 [40]: Our schema extends DevOps (development and operations)-oriented practices to performance benchmarking by mandating container digests (Component 4) and statistical variance reporting (Component 7).

- Reproducibility Hierarchy [6]: The schema targets Level 3 (computational reproducibility) by requiring immutable execution environments but enables Level 4 (empirical reproducibility) when quality criteria (Component 2) include uncertainty quantification.

This mapping demonstrates that our schema is not a competing standard but rather a domain-specific instantiation of cross-disciplinary best practices, tailored to performance evaluation needs identified by Weicker [1] and contemporary challenges in computational benchmarking.

3.7. Case Study: Applying the Reporting Schema to Genomic Variant Calling

To demonstrate the practical utility of our proposed reporting schema (Section 3.6), we apply it retrospectively to published GATK HaplotypeCaller benchmarks [16,17]. Table 2 maps the eight schema components to metrics actually reported in recent genomics literature. The completeness assessment showed that most components essential for reproducibility were well documented, although some were only partially covered because of notable omissions. Across genomics workflows, quality metrics (Component 2) were generally well documented, reflecting clinical validation standards that are often stricter than those of general-purpose computing benchmarks.

Table 2.

Application of minimal reporting schema to germline variant calling benchmarks.

On the other hand, energy reporting (Component 7) was mostly missing, even though sustainability is becoming increasingly important in computational biology [37]. The estimated energy consumption for the analyzed workload ranged from about 1.0 to 1.2 kWh per genome, assuming a dual-socket Xeon 8360Y (Intel Corp., Santa Clara, CA, USA) system with a 250 W TDP (thermal design power) per socket, 60% average utilization, and a runtime of 4.2 h.
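The arithmetic behind such an estimate can be made explicit. Assuming, as a simplification, that CPU package power equals TDP times average utilization, and ignoring memory, storage, and cooling, the stated configuration gives 2 × 250 W × 0.60 × 4.2 h ≈ 1.26 kWh. The result is sensitive to how utilization is mapped to power (real packages rarely draw exactly TDP × utilization), which is one reason Section 3.3 emphasizes instrumented measurement over TDP-derived estimates. The helper `tdp_energy_kwh()` is hypothetical.

```python
def tdp_energy_kwh(sockets: int, tdp_w: float, utilization: float, hours: float) -> float:
    """Naive CPU-package estimate: power assumed proportional to utilization, up to TDP."""
    return sockets * tdp_w * utilization * hours / 1000.0


print(f"{tdp_energy_kwh(sockets=2, tdp_w=250, utilization=0.60, hours=4.2):.2f} kWh")
```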

The adoption of workflow management systems such as Cromwell and Nextflow has gradually improved reproducibility artifacts (Component 8). Nevertheless, archiving all raw outputs and execution environments remains uncommon because of storage costs: intermediate BAM files alone can occupy about 500 GB per genome. Random seeds (Component 5) were often left undocumented, despite their direct influence on the reproducibility of stochastic steps such as downsampling in variant calling [16,17]. Overall, even the strongest bioinformatics papers fully covered only five of the eight components assessed. Gaps in energy reporting and artifact preservation persisted, indicating clear room for improvement in benchmarking transparency and reproducibility.
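Why seed documentation matters can be illustrated with a toy downsampling step. The function below is a hypothetical stand-in, not GATK's implementation; real tools expose their own seed options, and whatever value is used should be reported alongside the results.

```python
import random

def downsample_reads(read_ids, fraction, seed):
    """Keep each read with probability `fraction` (hypothetical stand-in for a pipeline step)."""
    rng = random.Random(seed)  # private generator, independent of the global random state
    return [r for r in read_ids if rng.random() < fraction]

reads = [f"read_{i}" for i in range(10_000)]
run1 = downsample_reads(reads, fraction=0.1, seed=42)
run2 = downsample_reads(reads, fraction=0.1, seed=42)
run3 = downsample_reads(reads, fraction=0.1, seed=7)

print(run1 == run2)  # same seed: identical subset, so downstream calls can be reproduced
print(run1 == run3)  # different seed: almost certainly a different subset
```

Recording the seed (and the library version, since generators can change between releases) turns a stochastic step into a computationally reproducible one in the sense of Peng's hierarchy [6].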

This analysis was based on a synthesis of best practices drawn from GATK documentation and published benchmarking studies [16,17,18,19], rather than on a single source. The authors performed the coverage assessment following the criteria set out in Section 3.6. SHA-256 digests were truncated in Table 2 to save space; in practice, full digests should be reported. The energy estimates assume the dual-socket Xeon 8360Y configuration described above (4.2 h runtime at 60% average utilization).
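Computing full digests for archived artifacts (configuration files, logs, exported environments) takes only a few lines with Python's standard `hashlib`. This is a minimal sketch: the file name and contents are hypothetical, and reading in chunks keeps memory bounded for large files such as BAMs.

```python
import hashlib
import tempfile
from pathlib import Path

def sha256_file(path, chunk_size=1 << 20):
    """Return the full hex SHA-256 digest of a file, read in 1 MiB chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

with tempfile.TemporaryDirectory() as d:
    artifact = Path(d) / "run_config.yaml"  # hypothetical artifact
    artifact.write_bytes(b"abc")
    full = sha256_file(artifact)

print(full)       # full 64-character digest: the value to report
print(full[:12])  # truncated form: acceptable only as a display label in tables
```

Reporting the full digest matters because a truncated prefix no longer offers the collision resistance that makes the hash a trustworthy identifier.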

This assessment reveals that even high-quality bioinformatics publications often omit energy measurements (Component 7) and complete reproducibility artifacts (Component 8), highlighting persistent gaps in reporting practices. Applying this schema systematically could improve cross-study comparisons and facilitate meta-analyses of computational efficiency in genomics pipelines [41].

4. Results

Weicker’s main contributions were methodological [1]. He classified benchmarks as synthetic or application-based, highlighted the risks of relying on simplified metrics such as MIPS and MFLOPS, and emphasized the importance of representativeness. He also drew attention to the phenomenon of “teaching to the test,” in which vendors tune their systems specifically for benchmark scores rather than for genuine workload performance. These critiques anticipate debates that continue to this day.
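The MIPS pitfall follows directly from the identity runtime = instruction count / (MIPS × 10^6): a machine can post a higher MIPS rating yet run the same program more slowly if its instruction set needs more instructions. The instruction counts and ratings below are hypothetical, chosen only to make the arithmetic clear.

```python
def runtime_s(instructions: float, mips: float) -> float:
    """Execution time = instruction count / (MIPS x 10^6 instructions per second)."""
    return instructions / (mips * 1e6)

# Hypothetical machines running the same source program compiled for different ISAs.
x_time = runtime_s(instructions=2.0e9, mips=1000)  # simpler ISA: more instructions, higher MIPS
y_time = runtime_s(instructions=1.2e9, mips=800)   # richer ISA: fewer instructions, lower MIPS

print(x_time, y_time)  # 2.0 1.5 -> the higher-MIPS machine is slower
```

Only execution time for a fixed workload is comparable across instruction sets, which is why rate metrics like MIPS mislead when instruction counts differ.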

After Weicker’s article [1], benchmarking continued to evolve. In high-performance computing, the TOP500 ranking, launched in 1993, institutionalized LINPACK as the most visible performance metric. Criticism followed: LINPACK does not capture the heterogeneity of real workloads, which motivated complementary benchmarks such as HPCG [24] and Graph500 [14].

Artificial intelligence brought new challenges in the 2010s. With the rise of deep learning, MLPerf emerged in 2018 as an industry-standard benchmark suite covering tasks from image recognition to natural language processing [20]. Yet MLPerf, too, has been criticized for favoring specific hardware architectures and for not representing the full diversity of AI applications.

In cloud computing and databases, the Transaction Processing Performance Council (TPC) became central, measuring throughput and latency in online transaction processing [30]. These benchmarks continue to evolve to reflect distributed and cloud-native architectures. Biomedical sciences also adopted benchmarking practices, with dedicated suites for genomics, proteomics, and medical image analysis; these suites are crucial for ensuring reproducibility and clinical validity [42].

Despite these advances, many of Weicker’s warnings remain relevant [1]. Synthetic benchmarks still dominate headlines yet fail to capture workload diversity. Single numbers such as FLOPS remain appealing but misleading, and vendor-driven optimization continues to distort results. The growing complexity of modern computing only magnifies the need for multidimensional benchmarking, as summarized in Table 3.

Table 3.

Contemporary benchmarking trends (2000–2025).

Illustrative Example: Benchmark-Dependent Rankings

To concretely illustrate representativeness issues, consider a hypothetical comparison of three systems evaluated across different benchmark domains:

- System A: High-frequency CPU with large L3 cache, optimized for single-thread performance.

- System B: Multi-core processor with moderate clock speed, optimized for parallel throughput.

- System C: Accelerator-equipped system with GPU and specialized AI cores.

A typical ranking pattern across benchmarks is described in Table 4.

Table 4.

Benchmark-dependent rankings.

This example in Table 4 demonstrates that no single benchmark captures overall system capability. System A excels at compute-intensive, cache-friendly workloads but underperforms on parallel or memory-bound tasks. System C dominates AI-specific operations but offers little advantage for traditional HPC or database workloads. The key insight, echoing Weicker’s original caution, is that benchmark selection implicitly prioritizes certain architectural features over others. Procurement and design decisions therefore require careful matching between benchmark characteristics and actual target workloads. Reporting results from multiple complementary benchmarks, as advocated in Section 3.6, provides a more honest characterization of system capabilities and limitations.
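The effect can be made concrete with a few lines of code. The normalized scores below (higher is better, reference machine = 1.0) are hypothetical values chosen for illustration; they are not the entries of Table 4. The sketch reports the per-benchmark winner and then a geometric-mean aggregate, the summary Fleming and Wallace [27] recommend for normalized results.

```python
from math import prod

# Hypothetical normalized scores; illustrative only, not measured data.
scores = {
    "A": {"single_thread": 2.0, "parallel_throughput": 1.0, "ai_inference": 1.0},
    "B": {"single_thread": 1.2, "parallel_throughput": 2.5, "ai_inference": 1.2},
    "C": {"single_thread": 1.0, "parallel_throughput": 1.0, "ai_inference": 4.0},
}
benchmarks = ["single_thread", "parallel_throughput", "ai_inference"]

def winner(bench):
    """System with the highest normalized score on one benchmark."""
    return max(scores, key=lambda s: scores[s][bench])

def geometric_mean(values):
    """Geometric mean of normalized ratios."""
    values = list(values)
    return prod(values) ** (1.0 / len(values))

per_benchmark = {b: winner(b) for b in benchmarks}
gm = {s: geometric_mean(scores[s].values()) for s in scores}
overall = sorted(gm, key=gm.get, reverse=True)

print(per_benchmark)  # a different winner on each benchmark
print(overall)        # aggregate ranking from the geometric mean
```

With these numbers each benchmark has a different winner, while the geometric mean ranks System C first on the strength of one outsized AI score, even though it trails the best system on the other two workloads. Publishing the per-benchmark table alongside any aggregate keeps that trade-off visible.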

5. Discussion

As highlighted in our analysis, modern benchmarking has evolved into a multidimensional practice, extending beyond traditional metrics like speed and throughput to encompass energy efficiency, cost-effectiveness, and workload representativeness. This aligns with recent studies, such as those by Tröpgen et al. [43], who advocate for integrating sustainability metrics into performance evaluations to address environmental concerns in computing. However, unlike Tröpgen et al., who focus primarily on energy consumption, our work emphasizes a broader integration of cost and representativeness, with the aim of ensuring that benchmarks reflect real-world scenarios.

In contrast, Che et al. [44] argue that domain-specific benchmarks are critical for specialized applications, a perspective we support but extend by advocating community-driven designs to minimize vendor bias, which their work does not address. Moreover, emerging work on Kolmogorov–Arnold Networks (KANs) for predictive modeling in non-computing domains (e.g., flexible electrohydrodynamic pumps [45]) suggests that benchmark design principles, particularly the balance between model complexity and interpretability, extend beyond traditional computing boundaries and can inform performance evaluation in cyber–physical systems.

Unlike MLPerf’s emphasis on machine learning workloads, we propose a more inclusive approach that spans multiple domains while prioritizing transparency and community collaboration. By addressing these gaps and building on prior work, future benchmarking efforts can deliver more robust, equitable, and impactful evaluations for the scientific and technological communities. Even so, benchmarking remains inherently limited, as no single suite can capture the full spectrum of workloads. Mytkowicz et al. [46] make a related point, showing that measurement bias from seemingly innocuous setup choices (such as the size of the UNIX environment or the link order) can lead to incorrect conclusions, and cautioning against overgeneralizing benchmark results. Our findings reinforce this, calling for cautious interpretation to avoid misleading conclusions, particularly when comparing across diverse hardware platforms. The trend toward domain-specific benchmarks, as seen in recent MLPerf developments (Reddi et al. [31,47]), is consistent with the growing emphasis on reproducibility and sustainability identified in this review.

6. Conclusions

The evolution of benchmarking, as discussed, highlights its transformation into a multidimensional practice that integrates performance metrics such as speed and throughput with critical considerations like energy efficiency, cost-effectiveness, and workload representativeness. Our analysis builds on Weicker’s [1] foundational insights, which underscored the persistent tension between simplicity and representativeness in benchmark design, a challenge that remains unresolved 35 years later. As noted in our discussion, recent studies emphasize the need for sustainable and domain-specific benchmarks; however, our approach extends these by advocating for community-driven designs to mitigate vendor bias and enhance transparency.

The limitations of benchmarking necessitate cautious interpretation to avoid overgeneralization, particularly across diverse hardware platforms. Furthermore, the trend toward reproducibility and sustainability aligns with our vision for more inclusive and context-aware evaluations. By synthesizing these perspectives, we propose that future benchmarking efforts prioritize multidimensional, ethically grounded frameworks that balance technical rigor with societal and environmental priorities. This approach honors Weicker’s early contributions and paves the way for more robust, equitable, and impactful performance evaluations that serve the broader scientific and technological communities.

6.1. Practical Implications of the Proposed Framework

The minimal reporting schema and checklist presented in Section 3.6 have specific, actionable implications for different communities:

- For researchers and benchmark designers:
  - Before publication: Ensure full disclosure of system configuration (hardware, firmware, software stack with version hashes), execution methodology (runs, seeds, affinity), and raw data artifacts (logs, configs) deposited in permanent repositories with DOIs.
  - For reproducibility: Use immutable environments (containers with cryptographic digests), document all non-deterministic operations, and provide tools to automate re-execution.
  - For fairness: Adopt standardized run rules (as in SPEC, MLPerf) that prohibit benchmark-specific tuning not applicable to general workloads. Disclose any deviations and their rationale.

- For system evaluators and practitioners:
  - In procurement: Demand multi-benchmark evaluation across workloads representative of actual use cases. Single-number metrics (MIPS, FLOPS, even aggregated scores like SPECmark) are insufficient for informed decisions.
  - In reporting: Publish per-benchmark results with variance measures (confidence intervals, boxplots) rather than only aggregated means. This exposes performance variability and helps identify outliers.
  - In interpretation: Recognize that benchmark rankings are workload-dependent (as illustrated by the benchmark-dependent rankings in Table 4). Match benchmark characteristics to actual deployment scenarios rather than seeking universal ‘best’ systems.

- For domain-specific applications (e.g., biomedicine):
  - Beyond runtime: Report quality metrics (F1, Dice, calibration) alongside execution time and energy. Fast but inaccurate models provide no clinical value.
  - Data provenance: Document dataset versions, licensing, patient consent, and institutional review approval. Ensure reproducibility extends to data preparation pipelines, not just model training.
  - Sensitivity analysis: Test performance across batch sizes, input resolutions, noise levels, and data distributions to assess robustness and generalization.

- For journal editors and conference organizers:
  - Artifact review policies: Mandate the availability of code, configurations, and documentation as a condition for publishing performance claims, following models from systems conferences (e.g., ACM artifact review and badging [38]).
  - Energy disclosure: Require energy measurements for computationally intensive workloads (training large models, long-running simulations) using standardized instrumentation (e.g., following the MLPerf Power [15] and SPECpower [25] methodologies).
  - Transparency in vendor collaborations: Require disclosure of vendor-supplied tuning, hardware access, or co-authorship to identify potential conflicts of interest.
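The recommendation to report variance rather than only means can be followed with the standard library alone. The sketch below uses a percentile bootstrap for the confidence interval of the mean, one of several valid choices (a t-interval is another); the runtimes are hypothetical and the helper name is illustrative.

```python
import random
import statistics

def bootstrap_ci(samples, stat=statistics.fmean, n_resamples=10_000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for `stat` over repeated runs."""
    rng = random.Random(seed)  # fixed seed so the reported interval is itself reproducible
    n = len(samples)
    estimates = sorted(stat(rng.choices(samples, k=n)) for _ in range(n_resamples))
    lo_idx = int(round(alpha / 2 * n_resamples))
    hi_idx = int(round((1 - alpha / 2) * n_resamples)) - 1
    return estimates[lo_idx], estimates[hi_idx]

# Hypothetical wall-clock times (seconds) from 10 repeated runs of one benchmark.
runtimes = [12.1, 12.4, 11.9, 12.0, 13.8, 12.2, 12.3, 12.0, 12.5, 12.1]
mean = statistics.mean(runtimes)
low, high = bootstrap_ci(runtimes)
print(f"mean={mean:.2f}s  95% CI=[{low:.2f}, {high:.2f}]  median={statistics.median(runtimes):.2f}s")
```

Here a single slow run (13.8 s) pulls the mean above the median; reporting the interval and the median together, rather than the mean alone, makes that kind of variability visible to readers.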

6.2. Open Challenges and Future Directions

Despite these advancements, several challenges remain:

- Balancing standardization and innovation: Rigid benchmark specifications promote comparability but may stifle architectural innovation that does not fit prescribed patterns. Future benchmarks should allow flexibility for novel approaches while maintaining measurement rigor.

- Addressing emerging workloads: Benchmarks lag behind rapidly evolving applications (large language models, quantum–classical hybrid computing, edge AI). Community processes must accelerate benchmark development and retirement cycles.

- Multi-objective optimization: As energy, cost, and quality become co-equal with performance, we need principled methods for Pareto-optimal reporting rather than reducing to scalar metrics. Visualization and interactive exploration tools can help stakeholders navigate trade-off spaces.

- Cross-domain integration: Current benchmarks remain siloed by domain. Real-world systems increasingly serve mixed workloads (AI inference alongside database queries, HPC simulations with in situ analytics). Benchmarks capturing co-scheduled, heterogeneous workloads are an open research area.
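Pareto-optimal reporting, mentioned in the list above, can be sketched for two objectives where lower is better for both (runtime and energy). The configuration names and measurements are hypothetical; the quadratic scan is adequate for the small result sets typical of a benchmark report.

```python
from typing import NamedTuple

class Config(NamedTuple):
    name: str
    runtime_s: float  # lower is better
    energy_j: float   # lower is better

def dominates(a: Config, b: Config) -> bool:
    """a dominates b if it is no worse on both objectives and strictly better on one."""
    no_worse = a.runtime_s <= b.runtime_s and a.energy_j <= b.energy_j
    better = a.runtime_s < b.runtime_s or a.energy_j < b.energy_j
    return no_worse and better

def pareto_front(configs):
    """Configurations not dominated by any other configuration (O(n^2) scan)."""
    return [c for c in configs if not any(dominates(o, c) for o in configs if o is not c)]

# Hypothetical measurements for four configurations of the same workload.
results = [
    Config("max_clock", runtime_s=100.0, energy_j=9000.0),
    Config("balanced", runtime_s=120.0, energy_j=7000.0),
    Config("power_capped", runtime_s=160.0, energy_j=6500.0),
    Config("misconfigured", runtime_s=150.0, energy_j=9500.0),
]
print([c.name for c in pareto_front(results)])
```

Three configurations survive because each is best under some weighting of time against energy; only the configuration that is both slower and hungrier than another is discarded. Reporting the whole front leaves the trade-off decision to the stakeholder instead of fixing it through a scalar metric.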


Weicker’s insights remain pertinent. The contemporary landscape adds AI pipelines, energy constraints, and cloud/edge variability. Community standards (SPEC, TPC, MLCommons) and artifact-evaluation practices mitigate longstanding issues, yet challenges persist in representativeness, power measurement, and transparent reporting. We recommend that journals and conferences mandate artifact availability and, where feasible, a minimum level of energy reporting.
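A minimal form of per-task energy reporting converts a sampled power trace (watts) into energy (joules) for the task's duration, in line with the preference for per-task joules over instantaneous watts. The sketch below integrates with the trapezoidal rule; the 1 Hz samples are hypothetical, and the sampling source and rate are assumptions that should themselves be reported. Sources that expose cumulative energy counters, such as RAPL [35], can instead be differenced directly.

```python
def energy_joules(timestamps_s, power_w):
    """Integrate a sampled power trace (W) over time (s) with the trapezoidal rule, in joules."""
    if len(timestamps_s) != len(power_w) or len(timestamps_s) < 2:
        raise ValueError("need two or more paired samples")
    total = 0.0
    for i in range(1, len(timestamps_s)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        total += 0.5 * (power_w[i] + power_w[i - 1]) * dt  # area of one trapezoid
    return total

# Hypothetical 1 Hz wall-power samples covering one task.
t = [0, 1, 2, 3, 4]
p = [200.0, 300.0, 300.0, 300.0, 200.0]
e = energy_joules(t, p)
print(f"{e:.0f} J ({e / 3600:.3f} Wh)")
```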

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/computers14120516/s1, Table S1: PRISMA 2020 Checklist documenting systematic review methodology compliance.

Author Contributions

Conceptualization, I.Z.; methodology, I.Z.; investigation, I.Z. and A.G.-L.; writing—original draft preparation, L.S.-D.; writing—review and editing, I.Z. and A.G.-L.; supervision, L.S.-D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

No new data was created.

Acknowledgments

The authors acknowledge the Institute for Research in Medical Sciences and Right to Health (ICIMEDES) and the Faculty of Medical Sciences (FCM) for their support in this research.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| BFS | Breadth-First Search |
| BWA | Burrows–Wheeler Aligner |
| CAGR | Compound Annual Growth Rate |
| CI | Confidence Interval |
| CO2-eq | Carbon Dioxide Equivalent |
| DevOps | Development and Operations |
| DMIPS | Dhrystone Million Instructions Per Second |
| DOI | Digital Object Identifier |
| GATK | Genome Analysis Toolkit |
| GB | Gigabyte (unit of data size) |
| GIAB | Genome in a Bottle (consortium providing high-confidence reference genomes) |
| GM | Geometric Mean |
| GPU | Graphics Processing Unit |
| HPC | High-Performance Computing |
| HPCG | High-Performance Conjugate Gradient |
| HPL | High-Performance LINPACK |
| indels | Insertions and deletions |
| I/O | Input/Output |
| KAN | Kolmogorov–Arnold Network |
| M | Million(s) |
| min | Minute (unit of time) |
| MFLOPS | Million Floating-Point Operations Per Second |
| MIPS | Million Instructions Per Second |
| ML | Machine Learning |
| NLP | Natural Language Processing |
| NUMA | Non-Uniform Memory Access |
| NVML | NVIDIA Management Library |
| OS | Operating System |
| RAPL | Running Average Power Limit |
| RNA-seq | Ribonucleic Acid (RNA) Sequencing |
| SNP | Single Nucleotide Polymorphism |
| SPEC | Standard Performance Evaluation Corporation |
| SSSP | Single-Source Shortest Path |
| TDP | Thermal Design Power |
| TPC | Transaction Processing Performance Council |
| TPU | Tensor Processing Unit |
| W | Watt (unit of power) |
| Wh | Watt-hour (unit of energy) |

References

1. Weicker, R.P. An Overview of Common Benchmarks. Computer 1990, 23, 65–75.

2. Eeckhout, L. Computer Architecture Performance Evaluation Methods, Synthesis Lectures on Computer Architecture; Springer: Cham, Switzerland, 2010.

3. Quinonero-Candela, J.; Sugiyama, M.; Schwaighofer, A.; Lawrence, N.D. Dataset Shift in Machine Learning; MIT Press: Cambridge, MA, USA, 2009.

4. Dobin, A.; Davis, C.A.; Schlesinger, F.; Drenkow, J.; Zaleski, C.; Jha, S.; Batut, P.; Chaisson, M.; Gingeras, T.R. STAR: Ultrafast Universal RNA-seq Aligner. Bioinformatics 2013, 29, 15–21.

5. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In MICCAI 2015; LNCS 9351; Springer: Cham, Switzerland, 2015; pp. 234–241.

6. Peng, R.D. Reproducible Research in Computational Science. Science 2011, 334, 1226–1227.

7. Barroso, L.A.; Hölzle, U. The Case for Energy-Proportional Computing. Computer 2007, 40, 33–37.

8. Dixit, K.M. The SPEC Benchmarks. Parallel Comput. 1991, 17, 1195–1209.

9. Weicker, R.P. Dhrystone: A Synthetic Systems Programming Benchmark. Commun. ACM 1984, 27, 1013–1030.

10. Curnow, H.J.; Wichmann, B.A. A Synthetic Benchmark. Comput. J. 1976, 19, 43–49.

11. Dongarra, J.; Luszczek, P.; Petitet, A. The LINPACK Benchmark: Past, Present, and Future. Concurr. Comput. Pract. Exp. 2003, 15, 803–820.

12. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

13. Dongarra, J.J. Performance of Various Computers Using Standard Linear Equations Software. ACM SIGARCH Comput. Archit. News 1992, 20, 22–44.

14. Graph500 Steering Committee. Graph500 Benchmark Specification. Available online: https://graph500.org/ (accessed on 27 June 2025).

15. MLCommons Power Working Group. MLPerf Power: Benchmarking the Energy Efficiency of Machine Learning Systems from µW to MW. arXiv 2024, arXiv:2410.12032.

16. McKenna, A.; Hanna, M.; Banks, E.; Sivachenko, A.; Cibulskis, K.; Kernytsky, A.; Garimella, K.; Altshuler, D.; Gabriel, S.; Daly, M.; et al. The Genome Analysis Toolkit: A MapReduce Framework for Analyzing Next-Generation DNA Sequencing Data. Genome Res. 2010, 20, 1297–1303.

17. DePristo, M.A.; Banks, E.; Poplin, R.; Garimella, K.V.; Maguire, J.R.; Hartl, C.; Philippakis, A.A.; Angel, G.; Rivas, M.A.; Hanna, M.; et al. A Framework for Variation Discovery and Genotyping Using Next-Generation DNA Sequencing Data. Nat. Genet. 2011, 43, 491–498.

18. Zook, J.M.; Catoe, D.; McDaniel, J.; Vang, L.; Spies, N.; Sidow, A.; Weng, Z.; Liu, Y.; Mason, C.E.; Alexander, N.; et al. An Open Resource for Accurately Benchmarking Small Variant and Reference Calls. Nat. Biotechnol. 2019, 37, 561–566.

19. Krusche, P.; Trigg, L.; Boutros, P.C.; Mason, C.E.; De La Vega, F.M.; Moore, B.L.; Gonzalez-Porta, M.; Eberle, M.A.; Tezak, Z.; Lababidi, S.; et al. Best Practices for Benchmarking Germline Small-Variant Calls in Human Genomes. Nat. Biotechnol. 2019, 37, 555–560.

20. Mattson, P.; Reddi, V.J.; Cheng, C.; Coleman, C.; Diamos, G.; Kanter, D.; Micikevicius, P.; Patterson, D.; Schmuelling, G.; Tang, H.; et al. MLPerf: An Industry Standard Benchmark Suite for Machine Learning Performance. IEEE Micro 2020, 40, 8–16.

21. Bucek, J.; Lange, K.-D.; von Kistowski, J. SPEC CPU2017: Next-Generation Compute Benchmark. In ICPE Companion; ACM: New York, NY, USA, 2018; pp. 41–42.

22. TOP500. TOP500 Supercomputer Sites. Available online: https://www.top500.org/ (accessed on 27 June 2025).

23. Williams, S.; Waterman, A.; Patterson, D. Roofline: An Insightful Visual Performance Model for Multicore Architectures. Commun. ACM 2009, 52, 65–76.

24. Dongarra, J.; Heroux, M.; Luszczek, P. High-Performance Conjugate-Gradient (HPCG) Benchmark: A New Metric for Ranking High-Performance Computing Systems. Int. J. High Perform. Comput. Appl. 2016, 30, 3–10.

25. SPEC. SPECpower_ssj2008. Available online: https://www.spec.org/power_ssj2008/ (accessed on 27 June 2025).

26. Navarro-Torres, A.; Alastruey-Benedé, J.; Ibáñez-Marín, P.; Viñals-Yúfera, V. Memory Hierarchy Characterization of SPEC CPU2006 and SPEC CPU2017 on Intel Xeon Skylake-SP. PLoS ONE 2019, 14, e0220135.

27. Fleming, P.J.; Wallace, J.J. How not to lie with statistics: The correct way to summarize benchmark results. Commun. ACM 1986, 29, 218–221.

28. Smith, J.E. Characterizing Computer Performance with a Single Number. Commun. ACM 1988, 31, 1202–1206.

29. Dongarra, J.; Strohmaier, E. TOP500 Supercomputer Sites: Historical Data Archive. Available online: https://www.top500.org/statistics/perfdevel/ (accessed on 15 October 2025).

30. Poess, M.; Nambiar, R.O. TPC Benchmarking for Database Systems. SIGMOD Rec. 2008, 36, 101–106.

31. Reddi, V.J.; Cheng, C.; Kanter, D.; Mattson, P.; Schmuelling, G.; Wu, C.J.; Anderson, B.; Breughe, M.; Charlebois, M.; Chou, W.; et al. MLPerf Inference Benchmark. In Proceedings of the 2020 ACM/IEEE 47th Annual International Symposium on Computer Architecture (ISCA), Valencia, Spain, 30 May–3 June 2020; pp. 446–459.

32. Brücke, C.; Härtling, P.; Palacios, R.D.E.; Patel, H.; Rabl, T. TPCx-AI—An Industry Standard Benchmark for Artificial Intelligence. Proc. VLDB Endow. 2023, 16, 3649–3662.

33. Standard Performance Evaluation Corporation (SPEC). SPEC CPU2017. Available online: https://www.spec.org/cpu2017/ (accessed on 27 June 2025).

34. Gschwandtner, P.; Fahringer, T.; Prodan, R. Performance Analysis and Benchmarking of HPC Systems. In High Performance Computing; Springer: Berlin/Heidelberg, Germany, 2013; pp. 145–162.

35. Hähnel, M.; Döbel, B.; Völp, M.; Härtig, H. Measuring Energy Consumption for Short Code Paths Using RAPL. SIGMETRICS Perform. Eval. Rev. 2012, 40, 13–14.

36. Baruzzo, G.; Hayer, K.E.; Kim, E.J.; Di Camillo, B.; FitzGerald, G.A.; Grant, G.R. Simulation-based comprehensive benchmarking of RNA-seq aligners. Nat. Methods 2017, 14, 135–139.

37. Houtgast, E.J.; Sima, V.M.; Bertels, K.; Al-Ars, Z. Hardware acceleration of BWA-MEM genomic short read mapping for longer read lengths. Comput. Biol. Chem. 2018, 75, 54–64.

38. Association for Computing Machinery. Artifact Review and Badging Version 1.1. Available online: https://www.acm.org/publications/policies/artifact-review-and-badging-current (accessed on 20 October 2025).

39. Wilkinson, M.D.; Dumontier, M.; Aalbersberg, I.J.; Appleton, G.; Axton, M.; Baak, A.; Blomberg, N.; Boiten, J.W.; da Silva Santos, L.B.; Bourne, P.E.; et al. The FAIR Guiding Principles for scientific data management and stewardship. Sci. Data 2016, 3, 160018.

40. IEEE 2675-2021; IEEE Standard for DevOps: Building Reliable and Secure Systems Including Application Build, Package, and Deployment. IEEE Standards Association: Piscataway, NJ, USA, 2021. Available online: https://standards.ieee.org/ieee/2675/6830/ (accessed on 1 November 2025).

41. Patro, R.; Duggal, G.; Love, M.I.; Irizarry, R.A.; Kingsford, C. Salmon provides fast and bias-aware quantification of transcript expression. Nat. Methods 2017, 14, 417–419.

42. Menon, V.; Farhat, N.; Maciejewski, A.A. Benchmarking Bioinformatics Applications on High-Performance Computing Architectures. J. Bioinform. Comput. Biol. 2012, 10, 1250023.

43. Tröpgen, H.; Schöne, R.; Ilsche, T.; Hackenberg, D. 16 Years of SPECpower: An Analysis of x86 Energy Efficiency Trends. In Proceedings of the 2024 IEEE International Conference on Cluster Computing Workshops (CLUSTER Workshops), Kobe, Japan, 24–27 September 2024.

44. Che, S.; Boyer, M.; Meng, J.; Tarjan, D.; Sheaffer, J.W.; Lee, S.-H.; Skadron, K. Rodinia: A Benchmark Suite for Heterogeneous Computing. In Proceedings of the 2009 IEEE International Symposium on Workload Characterization (IISWC), Austin, TX, USA, 4–6 October 2009; pp. 44–54.

45. Peng, Y.; Wang, Y.; Hu, F.; He, M.; Mao, Z.; Huang, X.; Ding, J. Predictive Modelling of Flexible EHD Pumps Using Kolmogorov–Arnold Networks. Biomimetics Intell. Robot. 2024, 4, 100184.

46. Mytkowicz, T.; Diwan, A.; Hauswirth, M.; Sweeney, P.F. Producing Wrong Data Without Doing Anything Obviously Wrong! In Proceedings of the 14th International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS’09), Washington, DC, USA, 7–11 March 2009; pp. 265–276.

47. Reddi, V.J.; Kanter, D.; Mattson, P. MLPerf: Advancing Machine Learning Benchmarking. IEEE Micro 2024, 44, 56–64.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).